Abstract

This study evaluates the effectiveness of math placement policies for entering community college students in terms of these students’ academic success in math. We estimate the impact of placement decisions using a discrete-time survival model within a regression discontinuity framework. The primary conclusion is that initial placement in a lower-level course increases, by about a year on average, the time until a student at the margin completes the higher-level course to which they were not assigned. In most cases, however, the penalty after this period was small and not statistically significant. We found minor differences in degree-applicable and degree-transferable credit accumulation between students initially placed in the lower-level course and those placed in the higher-level course.



A large proportion of the high school graduates who enroll in postsecondary education take at least one developmental, remedial, or basic skills course in either mathematics or English (Footnote 1). Recent evidence suggests that nationwide approximately 60% of all incoming freshmen enroll in at least one developmental education course (NCPPHE and SREB 2010). The rationale for placing students in developmental courses rests on the premise that these courses can successfully prepare students for higher-level coursework (Boylan et al. 1994, 1997; Lazarick 1997). However, developmental education carries significant costs. Current estimates put the annual cost of remedial education between $2 billion (Strong American Schools 2008) and almost $4 billion (Schneider and Yin 2011). These estimates do not take into account the opportunity costs, in time and money, that students incur while enrolled in developmental education (Melguizo et al. 2008). Students in developmental courses usually pay tuition and fees but often do not receive degree-applicable credit, and they are sometimes unable to take college-level courses while in remediation (Bettinger et al. 2013). Because these costs often hinge on the amount of time that students spend in developmental education, it is important to understand the effectiveness of initial placement decisions in developmental courses and how these decisions affect the duration of remediation for community college students.

A number of rigorous studies have investigated the effectiveness of developmental education. These studies have capitalized on large-scale assessment, placement, and outcome data in several states (e.g., Ohio, Florida, New York, North Carolina, Tennessee, and Texas) and have focused on large community college systems to understand the effect of placing students in developmental courses relative to college-level courses at the time of assessment (Bettinger and Long 2009; Calcagno and Long 2008; Clotfelter et al. 2014; Martorell and McFarlin 2011; Scott-Clayton and Rodriguez 2015). One study provided further nuance by examining differential impacts by level of academic preparedness in Tennessee (Boatman and Long 2010). Using regression discontinuity designs (RDDs) and other estimation strategies, this set of studies provides evidence on the impact of developmental education on important outcomes such as early college persistence (e.g., enrollment after one semester or year), college attainment (e.g., credits and credentials completed; transfers), and even labor market returns. Although the findings of these studies (discussed in further detail in the next section) have been mixed, they have improved our understanding of how developmental education shapes community college student experiences. Most of the evidence points towards a “diversion” function of remediation, whereby students in remediation simply take remedial courses instead of college-level courses (Scott-Clayton and Rodriguez 2015).

We see our study as making two important contributions to this literature. First, most of these studies evaluated the impact of taking a developmental course versus a college-level course (i.e., they did not differentiate the impact of different courses within the developmental math sequence). Like Boatman and Long (2010), we examine whether there are heterogeneous impacts across different levels of developmental math, but we also investigate how the estimated impacts of placement decisions to these courses change over time and how these placement decisions affect the time that elapses before an outcome is achieved.

Second, most of these studies focused on longer-term outcomes that are not always relevant to students enrolled in these courses, because these outcomes may not align with students’ objectives and may occur too far in the future to be observable (e.g., total numbers of credits completed, degrees attained, and transfers to 4-year colleges within a 3- to 6-year window). Although some of the aforementioned studies have examined shorter-term outcomes such as completion of subsequent college-level courses (Boatman and Long 2010; Calcagno and Long 2008) or grades in the remediated subject (Scott-Clayton and Rodriguez 2015), our paper focuses on an underexplored but essential outcome: the eventual completion of the next course in the sequence (i.e., the course into which students placed in a lower-level remedial course would have been placed had they scored higher on the initial placement test). Given that developmental sequences can be as many as four courses long in some community college systems, and that increasing persistence through sequences (i.e., attempt rates) appears to matter more for improving overall outcomes than increasing completion rates (i.e., pass rates) (Bailey et al. 2010; Ngo and Kwon 2015), it is important to examine student success in the next course. This is arguably the main objective of offering a prerequisite remedial course. In addition to these shorter-term course completion outcomes, we also examine whether students are able to complete 30 degree-applicable and 30 transferable credits, important indicators of student persistence and attainment (Melguizo et al. 2011). Importantly, the identification strategy we use, a discrete-time hazard model embedded within an RDD, enables us to understand how placement decisions affect the amount of time spent in remediation in different college contexts before a student achieves these goals.

The findings presented in this paper examine mathematics placement decisions and student outcomes in a Large Urban Community College District (LUCCD) in California, which is the largest community college district in California and one of the largest in the U.S., serving a very diverse population of students. The data enable us to track students for at least 4 years following their initial placement decisions, allowing us to examine the impact of these decisions over a long period of time. LUCCD students were initially placed into one of four distinct math levels: arithmetic, pre-algebra, elementary algebra, or intermediate algebra (Footnote 2). For several colleges, we present the effects of being placed below the cut point between each pair of adjacent levels. That is, we present how student outcomes are affected by being placed in arithmetic instead of pre-algebra, in pre-algebra instead of elementary algebra, or in elementary algebra instead of intermediate algebra. These impacts are a good focal point for researchers and policymakers aiming to improve students’ community college experiences and outcomes because (a) the initial placement decisions are the primary determinant of a student’s community college trajectory, and (b) the data and research methods produce valid impact estimates right at the cut points between different college placement levels. Because they are measured at the cut points, these impact estimates evaluate the quality of the placement decisions themselves.

Our study estimates the impact of placement decisions in the preparatory math sequence over time by using a discrete-time hazard model (Singer and Willett 1993, 2003) within an RDD framework (Caliendo et al. 2013; Ham and LaLonde 1996; Lesik 2007). The relevant policy question (or at least the one that can be answered rigorously) is whether placement decisions are effective. If they are, students at the margin do similarly well regardless of which course they are placed into. If they are not, this is either because students are placed below or above their level of expertise or because the courses they are placed into are poorly taught or otherwise ineffective for them. It is impossible to conclusively disentangle these possibilities. Thus, our analyses estimate the impact of placements, and these estimates do not necessarily generalize to the coursework and services associated with those placements.

We used RDD because it enables researchers to make rigorous causal inferences when randomization is not feasible. The general idea is that, controlling for their placement test score, students at the margin are essentially “assigned” at random to one of two placements (the one above and the one below the cut point), provided that they do not know their score relative to the cut point while taking the test and that their score is not manipulated afterwards. We estimated our RDD models as discrete-time survival models to allow the impacts to vary over time and to minimize bias due to censoring of observations. Descriptive evidence indicates that community college students often delay enrollment in developmental math courses (Bailey 2009; Fong et al. 2015) and that students spend a substantial amount of time (5 years on average) before attaining a degree or certificate or transferring to a 4-year college (Melguizo et al. 2008). This in turn reflects the fact that many community college students attend college part-time. This methodological strategy enabled us to obtain precise estimates of the effects of different placements on educational outcomes over time, including the additional time that certain placements may add to a student’s achievement of those outcomes.
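The “no manipulation” condition has a testable implication: if students could sort themselves to one side of the cut point, scores would pile up just above (or below) it. The minimal sketch below compares counts in narrow bins on either side of a cut. It is only a crude illustration of the idea behind formal density tests (such as McCrary’s test), not the diagnostic used in this study; the function name `bunching_ratio` and the bin `width` are illustrative assumptions.

```python
def bunching_ratio(scores, cut, width=1):
    """Crude sorting check around a cut point (illustrative, not a formal test).

    Scores in [cut - width, cut) count as "below"; scores in [cut, cut + width)
    count as "above". With a smooth score distribution and narrow bins the ratio
    should be close to 1; a ratio far from 1 hints at sorting around the cut.
    """
    below = sum(1 for s in scores if cut - width <= s < cut)
    above = sum(1 for s in scores if cut <= s < cut + width)
    if below == 0:
        # No observations just below the cut: ratio is undefined or unbounded.
        return float("inf") if above else float("nan")
    return above / below


# Usage: a uniform set of scores versus one with extra mass right at the cut.
smooth = list(range(100))
print(bunching_ratio(smooth, cut=50, width=5))
print(bunching_ratio(smooth + [50] * 20, cut=50, width=5))
```

In practice a formal test would also account for sampling noise and the slope of the density; the ratio here is only meant to make the assumption concrete.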

Results of our study suggest that the effectiveness of placement decisions varies both by math level (i.e., students may benefit from being placed into arithmetic first rather than moving straight into pre-algebra, but they may not benefit from being placed into elementary algebra before moving into intermediate algebra) and by college (i.e., students may benefit from being placed into arithmetic in college A but not in college C). In terms of short-term outcomes (e.g., passing the next course in the sequence), we found that in some colleges the initial “penalty” of being placed in a lower-level math course (i.e., a lower initial likelihood of passing the higher-level course in the sequence) disappeared over time, typically after about 1 year. This was not the case in other colleges, where such a “penalty” remained even after 5 years. These findings suggest that studies that focus on a limited set of outcomes and do not follow students long enough may draw erroneous conclusions regarding the effectiveness of developmental education.

We interpret the results as information with which to direct cutoff adjustments to better serve marginal students. Under a well-designed placement policy, one would expect the RDD models to yield placement coefficients that are close to zero or modestly positive, to account for the extra time involved in first completing a lower-level course (Robinson 2011). When the placement coefficients are negative, students at the margin are not benefiting from being placed in a remedial class, which suggests that the cut scores should be lowered so that these students are placed directly in a higher-level course. Conversely, when the placement coefficients are large and positive, students at the margin benefit substantially from the developmental preparation, which suggests that the cut scores may need to be raised so that students who score just above the margin are also placed in the developmental math course.
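The decision rule in the preceding paragraph can be written as a small function. This is a hedged sketch, not the authors’ procedure: it assumes the placement coefficient is expressed as the effect of lower-level placement at the cut point (positive means marginal students benefit), uses a normal-approximation 95% confidence interval to decide whether a coefficient is reliably negative or positive, and treats the threshold for a “large” benefit (`large`) as a hypothetical, analyst-chosen value.

```python
def cutoff_recommendation(coef, se, large=0.10, z=1.96):
    """Map an estimated placement coefficient to a cut-score suggestion.

    coef:  estimated effect, at the cut point, of placement in the LOWER-level
           course (positive = marginal students benefit from the lower placement).
    se:    its standard error; a normal-approximation interval is assumed.
    large: hypothetical threshold for a "large" benefit, in outcome units.
    """
    lo, hi = coef - z * se, coef + z * se
    if hi < 0:
        # Marginal students are reliably worse off after lower placement.
        return "lower the cut score"
    if lo > 0 and coef >= large:
        # Marginal students gain a lot, so slightly higher scorers might too.
        return "consider raising the cut score"
    # Near zero or modestly positive: consistent with a well-calibrated cut.
    return "keep the cut score"


print(cutoff_recommendation(-0.10, 0.02))
print(cutoff_recommendation(0.03, 0.02))
```

Whether to require statistical significance before acting, and how large a benefit counts as “large”, are policy judgments that the sketch leaves as parameters.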

The structure of this paper is as follows: in the next section we present a brief review of the literature, followed by a description of the current math assessment and placement policies at the LUCCD. The subsequent methods section describes the data, the sample, and the models estimated. This is followed by a results section, which in turn is followed by a discussion that highlights the conclusions and policy implications of the study.

Literature Review

In recent years a number of studies have used rigorous quasi-experimental methods to evaluate the impact of developmental math on various educational outcomes. Capitalizing on placement test and student outcome data from different states and community college systems, researchers have used econometric methods such as RDD that can account for selection bias to examine the consequences of placing students in developmental courses relative to college-level courses. RDD exploits variation around the minimum required score on placement tests to estimate causal effects of placement in developmental courses.

The findings of these studies have largely been mixed. For example, using data from Florida, Calcagno and Long (2008) found that developmental education coursework had a positive effect on short-term persistence but little effect on eventual 4-year degree completion. Bettinger and Long (2009), using an instrumental variables (IV) estimation strategy, found that community college students in developmental courses in Ohio were more likely to persist and graduate than similar students who did not receive remediation. In contrast, other studies have found that placement in developmental math negatively affected short-term outcomes. A study by Clotfelter et al. (2014), also using IV estimation, concluded that, for a sample of community college students in North Carolina, remediation reduced the probability of passing a college math course by about 22%. In the long run, being placed into developmental education did not appear to improve students’ likelihood of completing a degree (Calcagno and Long 2008; Martorell and McFarlin 2011; Scott-Clayton and Rodriguez 2015). Similarly, using data from six community colleges in a large district, Scott-Clayton and Rodriguez (2015) found that 3 years after enrollment there was essentially no impact of being placed in developmental courses on enrollment, persistence, or degree attainment, with the exception of a negative impact for students who failed the reading test. They concluded that developmental courses largely divert students from enrolling directly in college-level courses, rather than discouraging students or developing their skills. These findings underscore the “diversion hypothesis”: that developmental education policies funnel students into tracks where they complete courses, but not courses that count towards college degrees or lead to transfer (Scott-Clayton and Rodriguez 2015).

We note that most of these studies focused only on the cutoff between the final course of the developmental sequence and the college-level course, yet a number of students are placed into courses that are two or more levels below the college-level course (Bailey et al. 2010; Melguizo et al. 2014; Ngo and Kwon 2015). One study did investigate whether there were heterogeneous effects of developmental education by focusing on different courses of a developmental sequence. Using data from Tennessee, Boatman and Long (2010) found differential impacts of developmental and remedial education based on the level of math and the academic preparation of the students. The effects of remediation were less negative, and sometimes even positive, for students with the lowest levels of academic preparedness. Similarly, using data from Texas, Martorell and McFarlin (2011) examined whether the effects of remediation on college persistence and completion differed by student ability by comparing effects after the placement cutoff was raised by about a third of a standard deviation. They found that these effects became slightly more negative after cutoffs were raised, suggesting that lower-ability students may have benefited more from placement in remedial courses.

Existing studies also tended to examine longer-term outcomes that, while important, arguably are not the primary goals of developmental education policy (e.g., total numbers of credits completed, degrees attained, and transfers to a 4-year college) (Bettinger and Long 2009; Calcagno and Long 2008; Martorell and McFarlin 2011). These outcomes may not align with students’ objectives, and they may occur too far in the future to be observable, causing a censoring problem. While some of the studies mentioned above examined short-term outcomes such as semester-to-semester persistence and completion of gatekeeper courses, few examined whether developmental courses adequately prepared students for the next course in the sequence, which is a primary goal of placing students into lower levels of remedial math sequences. A recent study by Moss et al. (2014) used both RDD and a randomized control trial (RCT) design to test whether students assigned to a developmental math course benefited in terms of earning better grades in a college-level course, an outcome more closely aligned with the goals of developmental education policy. They found that students placed in the developmental course had an increase of about one-quarter to one-third in their grade in a college-level math course. In contrast, Scott-Clayton and Rodriguez (2015) found that placement in remediation did not improve grades in subsequent college-level courses.

Our study contributes to the literature not only by exploring the impact of developmental math at all levels of the sequence and focusing on relevant short-term outcomes associated with the policy (i.e., passing the next course in the sequence), but also by accounting for variation of the effect over time as a way to better understand the impact of placement decisions on student outcomes. All of the studies referenced above examined whether outcomes were achieved within a fixed period (e.g., 3 and 6 years in Boatman and Long (2010); 6 years in Calcagno and Long (2008); 3 or more years in Scott-Clayton and Rodriguez (2015)). We take an alternative approach by examining the impact of placement decisions on when the outcomes are achieved. Thus, the outcome of interest is not whether students had achieved a given outcome by a certain time point following remedial assignment, but rather how remedial assignment affects the duration of remediation. The approach we use, a discrete-time hazard model embedded within an RDD, has been applied previously to understand the impact of receiving unemployment benefits on the duration of unemployment (Caliendo et al. 2013) and the impact of college remediation on time to dropout (Lesik 2007). We further develop the intuition for this approach in the Methods section. Finally, consistent with Robinson (2011), we argue that this application of RDD could also be used to test and calibrate whether colleges are indeed setting placement cutoffs correctly.

Math Assessment and Placement Policies in a Large Urban Community College District in California

We investigate the impact of placement decisions on student outcomes over time in the LUCCD. Because California community college districts operate under a decentralized governance structure, districts have considerable discretion over how they place students in course sequences and when students are placed in developmental math or English. Unlike other districts in California, the LUCCD further decentralizes decision-making about developmental education placement to each individual college (Melguizo et al. 2013; Fong et al. 2015). As a result, LUCCD colleges have autonomy in three key areas in terms of who is assigned to developmental education: selecting a placement instrument, setting cut scores, and selecting so-called “multiple measures”, which represent a legally mandated alternative to placing students based on the score of a single testing instrument.

Placement Instrument

Colleges can use a wide variety of placement instruments to assign students to developmental education. These instruments include placement tests, student interviews, academic transcripts, and attitude surveys (CCCCO 2011). Colleges using placement tests have the choice of selecting a state-approved instrument or developing their own, which, however, requires the college to prove its validity. State-approved instruments in California include: College Board’s ACCUPLACER, ACT’s COMPASS, UC/CSU’s MDTP, and the Comprehensive Adult Student Assessment Systems (CASAS). All nine LUCCD colleges use placement tests to determine developmental education placements. This decision reflects faculty perceptions that a test is the most efficient and effective instrument to capture a student’s math knowledge and ability (Fong et al. 2015).

Cut Scores

After selecting an assessment, college faculty and administrators have the freedom to select cut scores to sort students into different courses, including developmental courses. In their research on the math placement process in LUCCD, Melguizo et al. (2014) found that this score-setting process usually involved a deliberate trial-and-error calibration effort by math faculty, who tried to avoid placing students in classes for which they were not academically ready. Because student characteristics and course offerings varied by college, the cut points between placement levels varied considerably across the colleges as well. Online Appendix Table A.1 summarizes the cut score decisions of the colleges in our sample during the study period.

The colleges reported using several procedures to calibrate their cut scores (Melguizo et al. 2014). Most common was for several math faculty members to take the tests themselves and then come to an agreement about what scores would indicate a student’s readiness for each course in the sequence. The test vendors’ recommendations would also have been considered when a new instrument was first adopted. In earlier years, the state required periodic re-evaluation of placement procedures, but during our study period those re-evaluations were done less frequently, usually only if prompted by faculty dissatisfaction with the preparation level of the students enrolled in their particular courses. Some of the colleges in our study compared the academic success of students placed into a given level via a placement test with that of those who progressed to that level from preceding courses and used the resulting information to calibrate placement scores.

Notably, the calibration processes we encountered were largely informed by faculty perceptions of, and satisfaction with, the level of student preparation for each course and by student performance in that particular course. Neither state regulations for the validation of cut points nor the procedures we observed included information linking initial placements with subsequent success in the course sequence. Although state accountability measures have more recently focused on student movement from the initial level to success in at least one subsequent level, that information has not been systematically used to calibrate or improve placement procedures.

Multiple Measures

Title 5 of the California Code of Regulations requires community colleges to consider information other than a student’s assessment score to make placement decisions. Known as “multiple measures”, these measures are intended to give emphasis to other cognitive and non-cognitive factors that play a role in determining student performance. According to the CCCCO, a number of factors can be treated as multiple measures, including, for example, scores from additional standardized placement tests, writing samples, performance-based assessments, surveys and questionnaires, and past educational experience. Online Appendix Table A.2 summarizes the multiple measure points given to students by each of the colleges in our sample during the study period.

Each of the colleges in the study collected additional information via an educational background questionnaire. However, different LUCCD colleges established different rules for which measures counted, how many placement test score points they contributed, and under what circumstances multiple measures were applied. While many students earned additional points based on these multiple measures, Fong et al. (2013) and Ngo et al. (2013) showed that only in a small percentage of cases (about 5% across the district) were students placed in a higher-level course than their placement test score alone would have supported. As discussed below, our analytical approach took into account the use of multiple measures by the individual colleges, and we found that they did not compromise the integrity of our analyses.

Methods

Regression Discontinuity Design

The research presented here is based on an analytical framework known as an RDD, which exploits the fact that students’ assignment to specific entering levels of math instruction is based on their score on an initial placement test and on selected background variables collected before the placement decisions were made or test scores were communicated. As described above, the LUCCD colleges use specific pre-determined cutoffs in the distribution of placement test scores to place students in specific courses, and they have pre-established rules for augmenting these scores with information from “multiple measures”. The assignment variable is therefore a combination of the raw placement test score and any additional multiple-measure points, where applicable. After statistically controlling for this composite placement test score, any differences in students’ subsequent outcomes at the cut point can be reliably attributed to the placement decision itself, i.e., to where in the adjusted test score distribution the cut point was set. As discussed in the introduction, the RDD method has been widely used in studies evaluating remedial education programs (Boatman and Long 2010; Calcagno and Long 2008; Martorell and McFarlin 2011; Moss and Yeaton 2006; Scott-Clayton and Rodriguez 2015). As a viable alternative to RCTs, RDD studies have been used in program evaluation and policy research across a wide range of policy areas, from labor research to financial analysis to medical research (Angrist and Lavy 1999; Gamse et al. 2008; Robinson 2011; Urquiola and Verhoogen 2009; Zuckerman et al. 2006). The internal validity of their causal inferences is considered on par with that of fully experimental RCTs, provided that certain assumptions are met (Imbens and Lemieux 2008). These assumptions are discussed in detail below.
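The construction of the assignment variable and the resulting placement can be sketched as follows. The cut points and the rule that a score exactly at a cut point goes to the higher level are illustrative assumptions; the actual LUCCD cut scores varied by college (Online Appendix Table A.1). The helper `rdd_variables` is a hypothetical name for the two quantities an RDD model needs: the running variable centered at the cut and an indicator for placement below it.

```python
from bisect import bisect_right

# Hypothetical four-level sequence and cut points on the adjusted score scale.
# These values are illustrative only, not any LUCCD college's actual cut scores.
LEVELS = ["arithmetic", "pre-algebra", "elementary algebra", "intermediate algebra"]
CUTS = [30, 50, 70]


def adjusted_score(raw_score, multiple_measure_points=0):
    """Assignment variable: raw placement test score plus multiple-measure points."""
    return raw_score + multiple_measure_points


def placement(raw_score, multiple_measure_points=0, cuts=CUTS, levels=LEVELS):
    """Return the placement level; a score at or above a cut goes to the higher level."""
    score = adjusted_score(raw_score, multiple_measure_points)
    # bisect_right counts how many cut points are <= score.
    return levels[bisect_right(cuts, score)]


def rdd_variables(raw_score, multiple_measure_points, cut):
    """Centered running variable and lower-placement indicator for one cut point."""
    score = adjusted_score(raw_score, multiple_measure_points)
    return score - cut, int(score < cut)


print(placement(48, 2))          # 48 raw points + 2 multiple-measure points
print(rdd_variables(45, 3, 50))  # centered score and below-cut indicator
```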

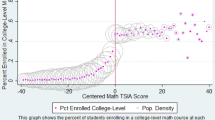

Figure 1 illustrates how RDD works in the context of this study. It depicts the relationship between an educational outcome on the Y-axis and the adjusted placement test score on the X-axis. (The outcome could be attainment of a certain number of math credits or the probability of successfully completing a given course.) As illustrated in the figure, the hypothesized relationship between the initial placement score and attainment of the subsequent outcome is positive: students scoring higher on the placement test are predicted to be more successful college students. As indicated by the vertical line, there is a cut point at point X in the distribution of the adjusted placement test score. Students who score above this cut point are placed in a higher-level class (e.g., elementary algebra), and students who score below it are placed in a lower-level class (e.g., pre-algebra). A visible shift at the cut point in the relationship between the placement test score and the outcome indicates an effect of the placement decision. As discussed before, the absence of such a pronounced shift indicates a well-calibrated placement scheme. A pronounced positive shift on the left-hand side of the cut point means that too few students are placed in the lower-level course; conversely, a positive shift on the right-hand side means that too many students are placed in the lower-level course (as shown in the figure). By statistically controlling for the placement test score, we can precisely estimate the magnitude and statistical significance of this shift.
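A minimal way to estimate the shift depicted in Figure 1 is local linear regression: fit a separate straight line on each side of the cut point within a bandwidth, then compare the two fitted values at the cut. The sketch below assumes a rectangular (uniform) kernel and ordinary least squares on a single outcome; the study itself embeds this logic in a discrete-time hazard model, so this is an illustration of the idea rather than its specification. The function names are hypothetical.

```python
def _ols_line(xs, ys):
    """Intercept and slope of a simple least-squares line (needs 2+ distinct xs)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def rdd_jump(scores, outcomes, cut, bandwidth):
    """Local linear estimate of the outcome jump at the cut point.

    Scores are centered at the cut, so each side's intercept is its fitted
    value at the cut. Returns (fitted value just above) - (fitted value just below).
    """
    left = [(s - cut, y) for s, y in zip(scores, outcomes) if cut - bandwidth <= s < cut]
    right = [(s - cut, y) for s, y in zip(scores, outcomes) if cut <= s <= cut + bandwidth]
    a_left, _ = _ols_line(*zip(*left))
    a_right, _ = _ols_line(*zip(*right))
    return a_right - a_left


# Usage: synthetic outcomes rising with the score, with a 0.1 jump at score 50.
scores = list(range(101))
outcomes = [0.2 + 0.005 * s + (0.1 if s >= 50 else 0.0) for s in scores]
print(rdd_jump(scores, outcomes, cut=50, bandwidth=10))
```

Because `rdd_jump` returns the outcome just above the cut minus the outcome just below it, the effect of placement in the lower-level course for marginal students is the negative of this value. A positive jump corresponds to the situation shown in Figure 1, where too many students are placed in the lower-level course.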

As mentioned in the introduction, our study differs from other research on the effects of community college placement policies in that (a) it estimated placement effects at multiple points in the distribution of placement test scores, and (b) it used discrete-time hazard analysis (Singer and Willett 2003) to describe how placement effects develop and change over time. These refinements are important because placement effects cannot be assumed to be constant throughout the distribution of placement test scores, and because placement effects are unlikely to be constant over time. That is, a student who is placed in pre-algebra instead of elementary algebra may take longer to complete elementary algebra but may ultimately have a higher likelihood of doing so. Thus, a placement effect estimated after 6 months may be negative, while the same effect estimated 12 months later may be positive. Similarly, the effect of placement in a lower-level course may be positive at the cut point between arithmetic and pre-algebra but negative at the next higher cut point, between pre-algebra and elementary algebra.

The precision of RDD estimates depends on the size of the analysis sample and the degree to which the sample is closely clustered around the cut point of interest (Murnane and Willett 2011; Schochet 2006). More sophisticated RDD estimation procedures take these properties into account by establishing an “optimal bandwidth” for each estimate, which balances the tightness of the distribution around the cut point against the size of the available sample. We used the Fan and Gijbels (1996) procedure to establish optimal bandwidths for all estimates. We also tested the sensitivity of the estimates to bandwidth size to ensure that this procedure did not materially affect the estimated placement effects, and we found no such influence. Results of these sensitivity analyses (varying the RDD bandwidths to five points below and above the optimal bandwidth, and to one point above it) are available in the Online Appendix.
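The bandwidth check described above amounts to re-estimating the effect at bandwidths around the selected one and comparing the results. The sketch below takes any estimator as a callable and, by default, uses the offsets mentioned in the text (five points below and above, and one point above the selected bandwidth). It does not implement the Fan and Gijbels (1996) bandwidth selector itself, and the tolerance for a “material” difference is left to the analyst; `bandwidth_sensitivity` is a hypothetical name.

```python
def bandwidth_sensitivity(estimate, h_opt, offsets=(-5, 1, 5)):
    """Re-estimate an RDD effect at bandwidths near the selected one.

    estimate: any callable mapping a bandwidth to a point estimate.
    h_opt:    the bandwidth chosen by the primary selection procedure.
    offsets:  bandwidth changes to try; non-positive bandwidths are skipped.
    Returns ({bandwidth: estimate}, largest absolute deviation from estimate(h_opt)).
    """
    base = estimate(h_opt)
    results = {h_opt: base}
    for d in offsets:
        h = h_opt + d
        if h > 0:
            results[h] = estimate(h)
    max_dev = max(abs(v - base) for v in results.values())
    return results, max_dev


# Usage with a toy estimator whose value drifts slightly with the bandwidth.
results, max_dev = bandwidth_sensitivity(lambda h: 0.1 + 0.001 * h, h_opt=10)
print(sorted(results), round(max_dev, 6))
```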

Discrete Time Hazard Analysis

Typically, estimates of the effects of community college placement decisions focus on outcomes at a given point in time. For example, researchers may estimate whether a student graduates with a college degree or successfully transfers to a 4-year college within a 2-year follow-up period. In this study we moved beyond these point-in-time outcomes and estimated how outcomes (and placement effects) changed over time, which also helps us identify the duration of time before an outcome event is observed. To conduct such analyses we used a framework developed by Singer and Willett (1993) in which student-level outcomes are transformed into student-time-based units (in this case, student-quarters), thus creating multiple observations for each individual sample member. Each of these observations is associated with a single time period (a quarter in this case) and describes whether an outcome event (successfully completing a course or earning 30 credits) has occurred by the end of that period. The parameter of interest is the conditional probability of the outcome occurring in a particular quarter, given that it has not occurred in any of the preceding quarters. In a discrete-time hazard model, these conditional probabilities are allowed to vary non-linearly from time period to time period and from student to student. Thus, within the RDD framework, the probability of successfully completing a course within a given quarter is allowed to vary by (a) the placement decision itself, (b) the adjusted score on the placement test, and (c) the quarter examined (time). This framework thus provides flexibility to estimate how time, academic ability at placement, and placement decisions interact to affect student outcomes. Moreover, this analytical method explicitly addresses the problem of censoring in longitudinal outcome data like those used here.
Sample members who never attain a particular outcome contribute fully to all the period-specific parameter estimates for which the outcome could have been observed. It is not necessary to impute data for unobserved quarters, curtail the sample to enforce a uniform observation period, or use more complex statistical methods (e.g., Cox regression) to handle censoring.
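The conversion from one record per student to student-quarter observations can be sketched as follows. This is a minimal Python illustration with hypothetical field names (`student_id`, `quarters_observed`, `event_quarter` are not LUCCD variable names): a student whose outcome occurs contributes rows up to and including that quarter, while a censored student contributes a row for every quarter actually observed, which is how students who never attain the outcome still inform the period-specific estimates.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Student:
    student_id: int
    quarters_observed: int        # quarters of follow-up available (hypothetical field)
    event_quarter: Optional[int]  # quarter in which the outcome occurred, None if censored


def to_person_period(students: List[Student]) -> List[dict]:
    """Expand one record per student into one record per student-quarter."""
    rows = []
    for s in students:
        # Observation ends at the event or, if censored, at the end of follow-up.
        last = s.event_quarter if s.event_quarter is not None else s.quarters_observed
        for quarter in range(1, last + 1):
            rows.append({
                "student_id": s.student_id,
                "quarter": quarter,
                "event": int(quarter == s.event_quarter),  # 1 only in the event quarter
            })
    return rows


sample = [Student(1, 16, 3), Student(2, 6, None)]
rows = to_person_period(sample)
print(len(rows), sum(r["event"] for r in rows))  # 9 1
```

Student 1 contributes three rows (the last one coded as the event), and the censored student 2 contributes six rows, all coded zero.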

We used discrete time hazard analysis to investigate the effect of course placement on three outcomes: successful completion of the next course in each college’s remedial math sequence, completion of 30 degree-applicable credits, and completion of 30 transferable credits. We estimated separate placement effects for each quarter of follow-up, using the student-quarter observations available for that quarter, so that the effect of the placement decision could differ at each time point. By converting the resulting regression coefficients into predicted outcomes (e.g., quarterly educational attainment) we were then able to present cumulative incidence profiles for outcomes such as credit attainment and successful completion of milestone math courses. As detailed by Singer and Willett (2003), this method maximizes the use of available data by estimating each time-specific effect on the entire sample available for that time point. As a result, effects for time periods close to the initial placement test are more precisely estimated than they would have been had the entire sample been restricted to students with a minimum amount of follow-up (e.g., 36 or 48 months). This is especially important in research involving community college students, many of whom attain their educational objectives across multiple episodes with frequent and sometimes lengthy interruptions (Bailey et al. 2010).
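Why early quarters are estimated more precisely can be seen from a simple life table. The Python sketch below uses hypothetical records of (last observed quarter, whether the outcome occurred) and counts, for each quarter, the students still at risk and the resulting empirical hazard. Every student still under observation enters the estimate for a quarter, so students with short follow-up (for example, later cohorts) stop contributing once they are censored, and the risk set shrinks as follow-up lengthens. This is a descriptive illustration only, not the regression-adjusted estimates reported in this paper.

```python
def life_table(records):
    """Quarter-by-quarter risk sets and empirical hazards.

    records: one (last_quarter, had_event) pair per student, where last_quarter
    is the quarter of the outcome if had_event is True and the final quarter of
    follow-up otherwise (censoring).
    Returns {quarter: (n_at_risk, n_events, hazard)}.
    """
    max_quarter = max(last for last, _ in records)
    table = {}
    for q in range(1, max_quarter + 1):
        # Still observed and no event in an earlier quarter.
        at_risk = sum(1 for last, _ in records if last >= q)
        events = sum(1 for last, ev in records if ev and last == q)
        table[q] = (at_risk, events, events / at_risk)
    return table


# Hypothetical records: (last observed quarter, outcome occurred?)
records = [(2, True), (4, False), (4, True), (1, True), (3, False)]
for q, (n, d, h) in life_table(records).items():
    print(f"quarter {q}: at risk {n}, events {d}, hazard {h:.2f}")
```

The student censored after quarter 3 still contributes to the hazard estimates for quarters 1 through 3, which is the sense in which censored cases are used rather than discarded.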

A concern with this approach is that the composition of the sample changes over time: earlier time points include more sample members than later ones, and later time points rely more heavily on early cohorts of students. If these compositional changes were correlated with the placement decision, they could introduce bias into the impact estimates. However, for this to happen, either the cut points (and associated placement decisions) would have to change within the study sample over time, or the conditional likelihood of students scoring above or below the cut point (after controlling for the adjusted placement test score) would have to change over time.³ Also, as Singer and Willett (1993) acknowledge, the shifting samples underlying discrete time hazard models can pose interpretation problems if the estimates do not represent the same underlying population over time. This is not the case here since there were no major shifts in LUCCD admission policies, economic conditions, or population characteristics during the study period.⁴

Another concern with discrete time hazard analyses is that person-time observations are serially correlated. Without appropriate statistical adjustments, such serial correlation can considerably bias the standard errors of regression estimates. To address this concern we used the survey logistic regression procedure (PROC SURVEYLOGISTIC) in SAS version 9.3, treating each student as a cluster. This method accounts for the correlation between observations of the same student across time periods, so that the standard errors and their degrees of freedom are based on the number of students in the sample rather than the number of person-time observations (Allison 2005).
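The logic of computing standard errors at the student level can be illustrated with the simplest possible case, a pooled event rate across repeated rows per student. The Python sketch below is a deliberate simplification, not the SAS procedure used here: it compares a naive standard error, which treats every row as independent, with a cluster-robust (CR0) standard error that first sums residuals within each student. The two coincide when every student contributes a single row; with several rows per student they generally differ, sometimes substantially. The toy data are hypothetical.

```python
import math
from collections import defaultdict


def pooled_rate_ses(rows):
    """rows: (student_id, event) pairs, possibly several per student.

    Returns (rate, naive_se, cluster_se).
    """
    rows = list(rows)
    n = len(rows)
    rate = sum(event for _, event in rows) / n
    # Naive SE: every row treated as an independent observation.
    naive_se = math.sqrt(rate * (1.0 - rate) / n)
    # Cluster-robust (CR0) SE: residuals are summed within each student first.
    resid_sum = defaultdict(float)
    for student_id, event in rows:
        resid_sum[student_id] += event - rate
    cluster_se = math.sqrt(sum(r * r for r in resid_sum.values())) / n
    return rate, naive_se, cluster_se


# Hypothetical repeated observations: four students with two rows each.
toy = [(1, 1), (1, 1), (2, 1), (2, 1), (3, 0), (3, 0), (4, 0), (4, 0)]
print(pooled_rate_ses(toy))
```

Here the rows within each student are identical, so the effective sample is four students rather than eight rows and the clustered standard error is correspondingly larger.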

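Specifically, we estimated the following model:

$$\operatorname{logit} h(t_{ij}) = \left[\alpha_1 D_{1ij} + \alpha_2 D_{2ij} + \cdots + \alpha_{16} D_{16ij}\right] + \beta\,\mathrm{score}_i + \left[\gamma_1 D_{1ij}\,\mathrm{trt}_i + \gamma_2 D_{2ij}\,\mathrm{trt}_i + \cdots + \gamma_{16} D_{16ij}\,\mathrm{trt}_i\right]$$

where $\mathrm{score}_i$ denotes student i’s adjusted placement test score.
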
This model predicts the logit of the conditional probability (hazard) that student i will successfully achieve outcome t in quarter j, given his or her adjusted placement score and his or her placement into the higher or lower level math course. It allows both the baseline conditional probability of achieving the outcome and the effect of placement into the lower level of the remedial math sequence (trt) to vary over the 16 observed quarters (each indicated by a dummy variable $D_{\cdot ij}$) by estimating a separate baseline coefficient and a separate treatment coefficient for each time period j.
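Once the student-quarter data are coded with period dummies, the placement score, and period-by-placement interactions, the model can be fitted with any logistic regression routine. As a self-contained illustration, the Python sketch below simulates three quarters of follow-up (rather than 16) from assumed parameter values, builds that design, and fits the logit by Newton-Raphson. The simulation settings, the centring of the score at the cut point, and the helper names are illustrative assumptions; the sketch also omits the student-level clustering of standard errors used in this study.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a x = b (Gauss-Jordan with partial pivoting)."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_logit(X, y, max_iter=25, tol=1e-10):
    """Maximum-likelihood logistic regression by Newton-Raphson."""
    k = len(X[0])
    beta = [0.0] * k
    for _ in range(max_iter):
        grad = [0.0] * k
        hess = [[0.0] * k for _ in range(k)]
        for xi, yi in zip(X, y):
            p = 1.0 / (1.0 + math.exp(-sum(b * v for b, v in zip(beta, xi))))
            w = p * (1.0 - p)
            for a in range(k):
                grad[a] += (yi - p) * xi[a]
                for c in range(k):
                    hess[a][c] += w * xi[a] * xi[c]
        step = solve(hess, grad)  # Newton step: (X'WX)^-1 X'(y - p)
        beta = [b + s for b, s in zip(beta, step)]
        if max(abs(s) for s in step) < tol:
            break
    return beta


def design_row(quarter, score, trt, n_periods):
    """[D_1..D_J, score, D_1*trt..D_J*trt] for one student-quarter."""
    dummies = [1.0 if quarter == j else 0.0 for j in range(1, n_periods + 1)]
    return dummies + [score] + [d * trt for d in dummies]


# Simulated data (hypothetical parameter values, 3 quarters instead of 16).
rng = random.Random(0)
true_alpha = [-1.5, -1.0, -1.0]  # baseline logit hazard per quarter
true_beta = 0.05                 # slope on the adjusted score
true_gamma = [-1.0, -0.5, 0.0]   # effect of lower placement per quarter
X, y = [], []
for _ in range(3000):
    score = rng.uniform(-10.0, 10.0)  # adjusted score centred at the cut point
    trt = 1.0 if score < 0 else 0.0   # 1 = placed in the lower-level course
    for q in range(1, 4):
        eta = true_alpha[q - 1] + true_beta * score + true_gamma[q - 1] * trt
        event = rng.random() < 1.0 / (1.0 + math.exp(-eta))
        X.append(design_row(q, score, trt, 3))
        y.append(1.0 if event else 0.0)
        if event:
            break  # the student leaves the risk set once the outcome occurs

est = fit_logit(X, y)
print("alpha:", [round(v, 2) for v in est[:3]])
print("beta:", round(est[3], 3))
print("gamma:", [round(v, 2) for v in est[4:]])
```

The per-quarter `gamma` coefficients play the role of the time-varying placement effects; a negative value means lower placement reduced the hazard of completing the outcome in that quarter.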

In order to illustrate the impact of course placement on outcomes for students who scored immediately above or below the threshold for placement into the higher level of the remedial math course sequence, we estimated the model’s survivor function based on predicted hazards for both groups of students. The survivor function estimate for each time period is the predicted proportion of students who have not successfully completed an outcome by quarter j. We then subtracted the survivor function estimates from one, and plotted this rate for students who were placed into the higher and lower level remedial math courses. These rates can be interpreted as the cumulative incidence of completing an outcome by quarter j, or in other words, the predicted proportion of students who successfully achieved a given outcome. We generated confidence intervals for these estimated rates using Greenwood’s approximation (Greenwood 1926).
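The step from predicted hazards to a cumulative incidence curve with Greenwood-type confidence bounds can be sketched in a few lines of Python. This is an illustration under stated assumptions: the hazards and risk-set sizes below are hypothetical inputs, the Greenwood term is written in terms of the hazard (using d = h·n events among n students at risk), and the bounds use a simple normal approximation clipped to [0, 1]. The hazards must be strictly less than 1 for the Greenwood term to be defined.

```python
import math


def cumulative_incidence(hazards, at_risk, z=1.96):
    """Cumulative incidence 1 - S(j) with Greenwood-based normal bounds.

    hazards[j]: predicted hazard for quarter j+1 (must be < 1).
    at_risk[j]: number of students at risk at the start of quarter j+1.
    Returns one (estimate, lower, upper) tuple per quarter.
    """
    surv = 1.0
    greenwood_sum = 0.0
    results = []
    for h, n in zip(hazards, at_risk):
        surv *= 1.0 - h  # survivor function: running product of (1 - hazard)
        # Greenwood term d / (n (n - d)) with d = h * n equals h / (n (1 - h)).
        greenwood_sum += h / (n * (1.0 - h))
        se = surv * math.sqrt(greenwood_sum)  # SE of S(j), hence also of 1 - S(j)
        incidence = 1.0 - surv
        results.append((incidence,
                        max(0.0, incidence - z * se),
                        min(1.0, incidence + z * se)))
    return results


# Hypothetical predicted hazards and risk-set sizes for four quarters.
for row in cumulative_incidence([0.2, 0.25, 0.0, 0.5], [5, 4, 3, 2]):
    print([round(v, 3) for v in row])
```

Plotting the first element of each tuple against the quarter for the two placement groups gives curves of the kind described above.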

Three Potential Sources of Bias

RDD estimates are potentially subject to three distinct types of bias. The first stems from the fact that students may be able to enroll in classes to which they were not assigned based on their placement test result. This may occur when students circumvent the official placement decisions or when their placement decision is based on factors other than their placement test result. As discussed in the introduction, California community colleges are mandated to use “multiple measures” to inform placement decisions because exclusive use of standardized placement tests is considered disadvantageous to certain groups of students (Fong et al. 2013, 2015; Ngo et al. 2013). Five of the six colleges in the sample used multiple measures to place students. For these colleges, test scores were adjusted to take these measures into account, and the adjusted test scores were then used as the forcing variable in our RDD analyses. For this to be appropriate, the use of multiple measures for individual students and the number of “multiple measure points” each student was awarded must be independent of what the placement decision would have been if it were based exclusively on the standardized placement test. We confirmed this in our qualitative research (Melguizo et al. 2013, 2014) and by examining the distribution of adjusted test scores around the placement cut point using a McCrary test (detailed below). In addition, we explicitly examined whether there was a discontinuity in multiple measure points awarded around the placement cut point, which would be the most direct evidence of “tampering” with multiple measures to influence placement decisions. As detailed below, these checks were passed in most cases; where they were not, we excluded that particular contrast from our RDD analyses.

In addition to the “multiple measure” cases, we know that some students were able to enroll in a class other than the one they were formally assigned to, an issue of non-compliance. Because of administrative controls, this number is very small: fewer than 5 % of the sample first enrolled in a class other than the one they were formally assigned to. To avoid biasing the results, these students are included in our analysis as if they had enrolled in the class in which they were placed. (In random assignment-based research, the estimates resulting from this inherently conservative analytical approach are known as “Intent to Treat” or “ITT” estimates.) We also ran all models with and without these non-compliers and obtained similar results.

A more serious type of bias is one in which the placement test results themselves are manipulated to achieve a specific placement outcome. This can happen, for example, when instructors help students as they take the test, correct items, manipulate scores, or allow students to retake the test if they score close to a cut point. In general, the ACCUPLACER and COMPASS placement tests used by the LUCCD colleges we studied do not allow for such manipulation, and we have no indication that any such manipulation occurred during the study period for our sample. However, because the integrity of the testing and placement process is so crucial for the validity of RDD studies like this one, we also used the McCrary test (McCrary 2008),⁵ which was specifically developed to detect these types of manipulation. This test looks for anomalies in the distribution of students’ test scores around the cut points. (A spike of scores just above a cut point would indicate possible manipulation of test results to place more students in a higher-level course.) Across the seventeen comparisons we examined, we found only one case where we could not statistically rule out such manipulation. We excluded this case from the findings presented in this paper and focus on the sixteen remaining comparisons.
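The density comparison behind the McCrary test can be sketched as follows. This is a simplified Python illustration, not McCrary’s full procedure: it bins the scores so that the cut point is a bin edge (empty bins included), fits a local linear regression with triangular weights to the normalized bin heights on each side, and reports the log difference between the two estimated densities at the cut point. It omits the standard error and the automatic choice of bin width and bandwidth, which are assumed to be given, and it assumes both density estimates come out positive. A clearly positive value signals a spike of scores just above the cut point.

```python
import math
from collections import Counter


def wls_intercept(xs, ys, ws):
    """Intercept of the weighted least squares line y = a + b*x."""
    sw = sum(ws)
    mx = sum(w * x for w, x in zip(ws, xs)) / sw
    my = sum(w * y for w, y in zip(ws, ys)) / sw
    sxx = sum(w * (x - mx) ** 2 for w, x in zip(ws, xs))
    sxy = sum(w * (x - mx) * (y - my) for w, x, y in zip(ws, xs, ys))
    return my - (sxy / sxx) * mx


def density_log_jump(scores, cut, bin_width, h):
    """log f(cut+) - log f(cut-) from binned, locally linear density estimates."""
    n = len(scores)
    counts = Counter(math.floor((s - cut) / bin_width) for s in scores)
    above, below = ([], [], []), ([], [], [])
    kmax = math.ceil(h / bin_width)
    for k in range(-kmax, kmax):  # bin k covers [cut + k*b, cut + (k+1)*b)
        mid = (k + 0.5) * bin_width  # bin midpoint relative to the cut point
        weight = 1.0 - abs(mid) / h  # triangular kernel
        if weight <= 0:
            continue
        xs, ys, ws = above if k >= 0 else below
        xs.append(mid)
        ys.append(counts.get(k, 0) / (n * bin_width))  # normalized bin height
        ws.append(weight)
    return math.log(wls_intercept(*above)) - math.log(wls_intercept(*below))


# Hypothetical integer scores 0-99, cut point at 50.
flat = [s for s in range(100) for _ in range(5)]
heaped = flat + [50] * 20 + [51] * 20  # extra scores piled up just above the cut
print(round(density_log_jump(flat, 50, 1, 10), 3),
      round(density_log_jump(heaped, 50, 1, 10), 3))
```

With a flat distribution the log difference is essentially zero, whereas piling extra scores just above the cut point produces a positive jump.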

A final major assumption underlying RDD estimates is that the regression model must properly represent the relationship between the forcing variable (in this case the continuous adjusted placement score) and the outcome variable (in this case the various measures of student course milestones). Misspecification of this relationship can bias RDD estimates. Although it is not possible to rule out all misspecification bias, our estimates were found not to be sensitive to changes in model specification or changes in the optimal bandwidth used to estimate the RDD effects. Specifically, we ran models in which we included polynomial terms and covariates, and found no major differences in our subsequent estimates from these changes in our model specifications.

Interpretation of the Results

A major limitation of RDD research in general is that estimated effects apply only “at the cut point”. That is, they represent what would have happened to a student scoring just below the cut point had that same student scored just above the cut point, and they cannot be extrapolated to students farther from the cut point without threatening the validity of the estimates. This places important limitations on the interpretation of placement effects. If a marginal student who is placed in pre-algebra would have done better had he or she been placed in elementary algebra, this does not mean that all students in pre-algebra would have done better in the higher-level course or that pre-algebra is ineffective or a waste of time. Thus, consistent with Robinson (2011), we argue that the best way to interpret such a negative marginal effect is that the cut point is too high: it should be lowered until a marginal student neither benefits nor suffers from being placed on either side of the cut point. (As discussed above, because of the cost associated with initially placing a student in a lower-level course, it may be acceptable or even desirable if the effect of placement below the cut point is modestly positive.)

Data

The present study describes the math placement trajectories and subsequent educational outcomes for a sample of 16,553⁶ first-time community college students in six community colleges in the LUCCD. These students took a standardized placement test (either the ACCUPLACER or the COMPASS) between the summer of 2005 and the spring of 2008, and subsequently enrolled in college. At the time of their enrollment they completed an education planning form, which for most students included the following demographic background variables: age, gender, race and ethnicity, whether English was their primary language, and residence status (i.e., U.S. citizen, permanent resident, and other visa). The outcome data included quarterly enrollment data (for up to four quarters per year: three in the school year and one in the summer), quarterly course completion and credit attainment data, and variables capturing a number of educational outcomes (i.e., passed the following course in the math trajectory, and total degree-applicable and transfer-level credits attained). All student data used in our analyses came from the LUCCD Office of Institutional Research’s computerized system.

We transformed these data such that each student in our sample had a series of quarterly status variables beginning with the quarter of their enrollment (usually immediately following the placement test) and ending with the final quarter of the 2011–2012 school year. We then transformed those data into a series of student-quarter observations for use in the discrete-time hazard analyses described above. We did not pool these data across the six colleges due to variation in placement policies across the district, but instead estimated separate effects for each cut point in each college for which the distribution of the placement test scores met the criteria of the McCrary test.⁷

Table 1 shows the various relevant sample sizes by college and cut point. The second column shows the number of students included in each analysis (after applying the optimal bandwidth criteria described above) and the third column shows the number of person-time observations generated from each sample of students. These are the sample sizes underlying the results presented later in this paper.

Results

Table 2 presents selected background characteristics and placement outcomes for each of the key groups in the study (up to four placement levels in six colleges). The table shows that the student samples were quite different both between levels within colleges and within levels across colleges, in terms of both their background characteristics and their educational outcomes. For example, compared to students placed into arithmetic at College A, nearly three times as large a proportion of students at College F were under 20. Additionally, the percentage of students in the sample who indicated that English is not their primary language varied widely by college. For students placed into elementary algebra, it ranged from 19 % at College E to 42.9 % at College A. Similar variation across colleges appears to exist in racial and ethnic background as well as student gender. Moreover, the distribution of placement decisions varied considerably across colleges. For example, in College C, which serves a more academically disadvantaged student population than the other colleges, as many as 38 % of the students were placed in arithmetic (the lowest level), compared to as few as 12.6 % in College F, which tends to serve a more academically advantaged student population.

When examining educational outcomes, a similar pattern of variation within and across levels and colleges is apparent. The first key outcome presented in Table 3 is the rate of enrollment in any math class. As expected, not all students who took a math placement test subsequently enrolled in a math course and these enrollment rates varied across levels and colleges. In general, students who had higher placement test scores (and higher-level placements) were more likely to enroll in a math class, although those patterns were not entirely consistent across the different colleges. Subsequent outcomes shown in Table 3 are completion of the class in which a student was first placed, completion of the next higher class (where applicable), attainment of 30 degree-applicable credits within 4 years after initial placement, and attainment of 30 transferable credits during that timeframe.

For the most part, these outcomes show the expected patterns. Students who were placed at lower levels had less academic success across the board. As will become apparent later, there was some long-term catch-up among these students when outcome data were analyzed over longer timeframes, but overall the inescapable conclusion is that few students placed at the lowest levels reached meaningful milestones in math within the first 4 years of their college career. We next focus on how placement decisions contribute to these observed differences.

Figures 2, 3 and 4 show the first set of results of the RDD analyses. Each figure shows several lines, one per college, describing the difference over time in the predicted rate of completion of the higher-level course between students placed at that level and students placed at the level below it. These estimates are regression-adjusted so that, under the RDD assumptions discussed above, the difference shown can be attributed to the placement decision. A positive difference indicates that the outcome of interest was more positive for students placed in the higher-level course, and a negative difference indicates that it was more positive for students placed in the lower-level course. Figure 2 shows the impact of placement in pre-algebra (as opposed to arithmetic) on completion of pre-algebra for each college, Fig. 3 shows the impact of placement in elementary algebra (as opposed to pre-algebra) on completion of elementary algebra, and Fig. 4 shows the impact of placement in intermediate algebra (as opposed to elementary algebra) on completion of intermediate algebra.

Fig. 2. Impact of being placed in pre-algebra as opposed to arithmetic on the cumulative rate of completion of pre-algebra. Results for College A, College B, and College C are estimated imprecisely in some time periods because all students in either one of the research groups experienced the same outcome during that time period.

Fig. 3. Impact of being placed in elementary algebra as opposed to pre-algebra on the cumulative rate of completion of elementary algebra. Results for Colleges B, C, D, and F are estimated imprecisely in some time periods because all students in either one of the research groups experienced the same outcome during that time period.

Fig. 4. Impact of being placed in intermediate algebra as opposed to elementary algebra on the cumulative rate of completion of intermediate algebra. Results for Colleges B, C, and F are estimated imprecisely in some time periods because all students in either one of the research groups experienced the same outcome during that time period.

Tables 4, 5 and 6 include the numbers underlying these figures as well as the results of statistical tests of the significance of the differences between the lines shown. As expected, almost all of the lines in all three figures start off above zero, showing considerable and statistically significant initial payoffs to higher placements (at least in terms of these outcomes). However, over time most of the lines moved back toward the x-axis as students placed in the lower-level course began to catch up. After approximately 4–6 quarters, few of the initial differences were still statistically significant. Across the first two figures (showing impacts on completion of pre-algebra and elementary algebra, respectively), the primary conclusion that emerges is that initial placement in a lower-level course increased the time until a student completed the higher-level course they were not assigned to by 3–4 quarters on average, but in most cases, after this time period, the penalty for initially being placed into a lower-level course was no longer substantial or statistically significant. The third figure (showing the impact of immediate placement in intermediate algebra on completion of intermediate algebra) shows more persistent impacts over time: with the sole exception of College F, students were much more likely to eventually complete intermediate algebra if they were placed there right away.

Persistent negative effects of placement in a lower-level course (higher-level course completion rates more than 15 percentage points lower after 4 years) were found for three colleges at the arithmetic/pre-algebra cut point, for two colleges at the pre-algebra/elementary algebra cut point, and for four colleges at the elementary/intermediate algebra cut point. Among the six colleges in the sample, College D was especially problematic in this regard, with significant and consistent negative effects of lower placement throughout the math sequence. That the most persistent negative effects were found around the elementary/intermediate algebra cut point may reflect the fact that elementary algebra was a degree-applicable, credit-bearing course during the time of our study and that students were able to graduate or earn a certificate without completing intermediate algebra. Therefore, for many students placed below this cut point, catching up by completing intermediate algebra may not have been aligned with their educational objectives.

Looking at a somewhat more distal set of outcomes, Figs. 5, 6 and 7 repeat this presentation for attainment of 30 degree-applicable credits. Here the picture is more mixed: in many cases students who were placed in the lower-level class attained 30 degree-applicable credits more quickly than their higher-placed counterparts, and overall there appears to have been little short- or long-term cost to a lower placement as far as credit attainment is concerned. This is largely as expected, considering that students can earn college credits even for lower-level math courses that are not transferable to a 4-year college, and that students can earn college credits outside of their math sequence while they are still taking developmental math courses. Thus, lack of progress in math does not necessarily translate into lack of progress in college more generally.

Fig. 5. Impact of being placed in pre-algebra as opposed to arithmetic on the cumulative rate of earning 30 degree-applicable credits. Results for College B are estimated imprecisely in some time periods because all students in either one of the research groups experienced the same outcome during that time period.

Fig. 6. Impact of being placed in elementary algebra as opposed to pre-algebra on the cumulative rate of earning 30 degree-applicable credits. Results for College C, College D, and College F are estimated imprecisely in some time periods because all students in either one of the research groups experienced the same outcome during that time period.

Fig. 7. Impact of being placed in intermediate algebra as opposed to elementary algebra on the cumulative rate of earning 30 degree-applicable credits. Results for College C and College F are estimated imprecisely in some time periods because all students in either one of the research groups experienced the same outcome during that time period.

Sensitivity Checks and Limitations of the Analysis

We estimated the hazard models using four different bandwidths to test the sensitivity of the results: the optimal bandwidth, the optimal bandwidth plus five points, the optimal bandwidth minus five points, and the optimal bandwidth plus one point. The figures showing these results are provided in the Online Appendix. For the most part, the results of these sensitivity analyses were very similar to those obtained with the optimal bandwidth. We also ran the model with additional covariates: demographic characteristics (age, gender, and ethnicity) and a squared assessment score term. In a likelihood ratio test comparing this model to the main model presented above, the coefficients on these additional variables were never jointly significantly different from zero. Finally, we compared our estimates to those from a traditional RDD approach with outcomes observed at fixed points in time (4, 8, 12, and 16 quarters). In cases where the treatment coefficient in the main model was significant, the traditional RDD estimate had the same sign as shown in the figures presented above in all but a few cases.
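The likelihood ratio comparison described above reduces to a short calculation once the maximized log-likelihoods of the two models are available. The Python sketch below takes those log-likelihoods as inputs (the values shown are hypothetical) and computes the chi-square p-value from the standard power series for the regularized incomplete gamma function, so it needs only the standard library; in practice a statistics package (e.g., `scipy.stats.chi2.sf`) would normally be used instead. The degrees of freedom equal the number of added coefficients.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for X ~ chi-square(df).

    Uses the power series for the regularized lower incomplete gamma
    function P(a, z) with a = df/2 and z = x/2; adequate for moderate x.
    """
    a, z = df / 2.0, x / 2.0
    if z <= 0.0:
        return 1.0
    term = 1.0 / a
    total = term
    n = 0
    while term > 1e-15 * total:
        n += 1
        term *= z / (a + n)
        total += term
    lower = total * math.exp(-z + a * math.log(z) - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def lr_test(loglik_main, loglik_extended, n_added):
    """LR statistic and p-value for H0: the n_added extra coefficients are all zero."""
    stat = 2.0 * (loglik_extended - loglik_main)
    return stat, chi2_sf(stat, n_added)


# Hypothetical log-likelihoods: main model vs. a model with 4 added coefficients.
stat, p = lr_test(-1000.0, -998.0, 4)
print(f"LR = {stat:.2f}, p = {p:.3f}")
```

A large p-value, as in this hypothetical case, means the added covariates do not jointly improve the fit, which is the pattern reported above.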

Discussion

The analyses presented in this paper confirm that students who are placed in lower-level math courses have subsequent educational outcomes that are worse than those of students who are placed in higher-level courses. In some cases, and consistently in some colleges, initially placing students in higher-level courses caused them to be more successful in college, both in terms of successfully completing higher-level courses and in terms of earning degree-applicable college credit. However, in many other cases, the positive effect of being placed in a higher-level course became statistically insignificant over time or even reversed. Some colleges consistently saw little positive or negative impact of their placement decisions, which likely reflects well-calibrated placement policies in these colleges. Others, because of high cutoffs, consistently placed too many students in courses that were too easy for them, thereby increasing their time to academic milestones and reducing their likelihood of eventual success.

Our results are consistent with previous research: we find evidence that students who were placed in lower-level math courses had subsequently worse educational outcomes than students who were placed directly in higher-level courses (Calcagno and Long 2008; Clotfelter et al. 2014; Martorell and McFarlin 2011; Moss and Yeaton 2006; Moss et al. 2014; Scott-Clayton and Rodriguez 2015). But as described above, and consistent with Boatman and Long (2010), this study presents a more nuanced picture and illustrates differences in the impact of placement decisions by level and by college. The evidence also demonstrates the impact of placement decisions on the duration of remediation before certain educational outcomes are achieved. The use of discrete-time hazard models showed that the initial “penalty” in terms of lower outcomes decreased in most cases and disappeared in other cases over a sufficiently long period of time.

Considering that there are both instructional expenses and opportunity costs associated with students enrolling in developmental math courses, college administrators and math faculty should consider using analyses like those presented in this paper to fine-tune their placement policies. We suggest that understanding whether and when students placed in lower levels “catch up” with their higher-placed peers can guide faculty, practitioners, and policy makers in adjusting their placement policies. On average, students at the cut point who are placed in lower-level courses should do better than their peers who are not. If they do not, the cut points should be lowered gradually until the costs and benefits of developmental education for these students are in balance. In colleges where students never catch up, colleges could experiment with lowering placement cutoffs. In colleges where students do eventually catch up over a longer period of time, colleges can consider ways to decrease the amount of time it takes for students to achieve academic milestones. This can help to reduce the opportunity costs of remediation.

We realize that careful calibration of college placement policies would only partially address the challenge of improving the college experience for students whose initial academic skills are insufficient for successful college-level work. Placing these students in appropriate courses does not mean that their success rates in these courses are sufficient to make their college experience worthwhile. Increasing those success rates is a larger educational and policy challenge that goes beyond the practice of creating and adjusting placement policies and includes issues like course quality, support services, and the interaction between higher education and the K-12 and adult education systems.

Notes

1. The terms basic skills, developmental, remedial and preparatory math education are frequently used interchangeably though they have different conceptual origins, imply differing educational strategies and carry some political baggage. Our preferred terms are either developmental or preparatory math.

2. The extent to which these math courses are “remedial” depends on students’ subsequent course of study and degree objectives. Arithmetic is never credit bearing and does not count toward any degree or certificate. Pre-algebra is credit bearing but does not meet the math requirement of most certificates or AA degrees and is not transferable to a four-year institution. Elementary algebra is also credit bearing and was sufficient to meet the math requirement for an AA and for most certificates. Its credits are not transferable to a four-year institution. Intermediate algebra credits are transferable and intermediate algebra was required for some technical certificates. Recently, the minimum math requirement for an AA degree was raised to intermediate algebra. However, this change happened after the analysis period for this paper.

3. Note that compositional changes in the sample over time only affect the validity of the RDD estimates if they change the placement decision through mechanisms other than changes in underlying ability as measured with the placement test score (since the latter is explicitly controlled in the RDD analysis).

4. As a sensitivity check we also produced traditional cross-sectional estimates (at specific time intervals such as 12, 24, 36, and 48 months after placement) of the impacts presented in this paper. These estimates, which did not differ substantially from those presented here, are summarized in the Online Appendix; the full results are available from the authors.

5. For a detailed presentation of the use of this test see the Online Appendix.

6. It is worth noting that specific analyses presented in this paper used subsamples of this overall sample because not all of the students fit within the optimal bandwidths used in the RDD analyses.

7. Pooling data across colleges was not possible due to the fact that course sequences, placement score cut points, multiple measures, and other major analytical and policy variables varied across the colleges.

References

Allison, P. D. (2005). Fixed effects regression methods for longitudinal data using SAS. Cary, NC: SAS Institute.

Angrist, J. D., & Lavy, V. (1999). Using Maimonides’ rule to estimate the effect of class size on scholastic achievement. Quarterly Journal of Economics, 114(2), 533–575.

Bailey, T. (2009). Challenge and opportunity: Rethinking developmental education in community college. New Directions for Community Colleges, 145, 11–30.

Bailey, T., Jeong, D. W., & Cho, S. W. (2010). Referral, enrollment, and completion in developmental education sequences in community colleges. Economics of Education Review, 29, 255–270.

Bettinger, E. P., Boatman, A., & Long, B. T. (2013). Student supports: Developmental education and other academic programs. The Future of Children, 23(1), 93–115.

Bettinger, E., & Long, B. T. (2009). Addressing the needs of under-prepared students in higher education: Does college remediation work? Journal of Human Resources, 44, 736–771.

Boatman, A., & Long, B. T. (2010). Does remediation work for all students? How the effects of postsecondary remedial and developmental courses vary by level of academic preparation. National Center for Postsecondary Research Working Paper.

Boylan, H., Bliss, L., & Bonham, D. (1994). Characteristic components of developmental programs. Review of Research in Developmental Education, 11(1), 1–4.

Boylan, H., Bliss, L., & Bonham, D. (1997). Program components and their relationship to students’ performance. Journal of Developmental Education, 20(3), 1–8.

Calcagno, J. C., & Long, B. T. (2008). The impact of remediation using a regression discontinuity approach: Addressing endogenous sorting and noncompliance. Working Paper 14194. Cambridge, MA: National Bureau of Economic Research.

Caliendo, M., Tatsiramos, K., & Uhlendorff, A. (2013). Benefit duration, unemployment duration, and job match quality: A regression-discontinuity approach. Journal of Applied Econometrics, 28, 604–627.

California Community College Chancellor’s Office. (2011). Matriculation Handbook. Retrieved from http://www.cccco.edu/Portals/4/SS/Matric/Matriculation%20Handbook%20(REV.%2009-2011).pdf.

Clotfelter, C. T., Ladd, H. F., Muschkin, C. G., & Vigdor, J. L. (2014). Developmental education in North Carolina community colleges. Educational Evaluation and Policy Analysis. doi:10.3102/0162373714547267.

Fan, J., & Gijbels, I. (1996). Local polynomial modeling and its applications. London: Chapman & Hall.

Fong, K., Melguizo, T., Bos, H., & Prather, G. (2013). A different view on how we understand progression through the developmental math trajectory. Policy Brief 3. California Community College Collaborative. Rossier School of Education, University of Southern California. http://www.uscrossier.org/pullias/research/projects/sc-community-college/.

Fong, K., Melguizo, T., & Prather, G. (2015). Increasing success rates in developmental math: The complementary role of individual and institutional characteristics. Research in Higher Education. doi:10.1007/s11162-015-9368-9.

Gamse, B. C., Jacob, R. T., Horst, M., Boulay, B., & Unlu, F. (2008). Reading First impact study: Final report. NCEE 2009-4038. Washington, DC: National Center for Educational Evaluation and Regional Assistance, Institute of Education Sciences, U.S. Department of Education.

Greenwood, M. (1926). The natural duration of cancer. Reports on Public Health and Medical Subjects (Vol. 33, pp. 1–26). London: Her Majesty’s Stationery Office.

Ham, J., & LaLonde, R. (1996). The effect of sample selection and initial conditions in duration models: Evidence from experimental data on training. Econometrica, 64(1), 175–205.

Imbens, G., & Lemieux, T. (2008). Regression discontinuity designs: A guide to practice. Journal of Econometrics, 142(2), 615–635.

Lazarick, L. (1997). Back to the basics: Remedial education. Community College Journal, 68, 11–15.

Lesik, S. A. (2007). Do developmental mathematics programs have a causal impact on student retention? An application of discrete-time survival and regression-discontinuity analysis. Research in Higher Education, 48(5), 583–608.

Martorell, P., & McFarlin, I., Jr. (2011). Help or hindrance? The effects of college remediation on academic and labor market outcomes. The Review of Economics and Statistics, 93(2), 436–454.

McCrary, J. (2008). Manipulation of the running variable in the regression discontinuity design: a density test. Journal of Econometrics, 142(2), 698–714.

Melguizo, T., Bos, H., & Prather, G. (2011). Is developmental education helping community college students persist? A critical review of the literature. American Behavioral Scientist, 55(2), 173–184.

Melguizo, T., Bos, H., & Prather, G. (2013). Are community colleges making good placement decisions in their math trajectories? Policy Brief 1. California Community College Collaborative. Rossier School of Education, University of Southern California. http://www.uscrossier.org/pullias/research/projects/sc-community-college/.

Melguizo, T., Hagedorn, L. S., & Cypers, S. (2008). The need for remedial/developmental education and the cost of community college transfer: Calculations from a sample of California community college transfers. The Review of Higher Education, 31(4), 401–431.

Melguizo, T., Kosiewicz, H., Prather, G., & Bos, J. (2014). How are community college students assessed and placed in developmental math? Grounding our understanding in reality. Journal of Higher Education, 85(5), 691–722.

Moss, B., & Yeaton, W. (2006). Shaping policies related to developmental education: An evaluation using the regression-discontinuity design. Educational Evaluation and Policy Analysis, 28(3), 215–229.

Moss, B., Yeaton, W., & Lloyd, J. E. (2014). Evaluating the effectiveness of developmental math by embedding a randomized experiment within a regression discontinuity design. Educational Evaluation and Policy Analysis, 36(2), 170–185.

Murnane, R. J., & Willett, J. B. (2011). Methods matter: Improving causal inference in education and social science research. Oxford: Oxford University Press.

National Center for Public Policy and Higher Education & Southern Regional Education Board. (2010). Beyond the Rhetoric: Improving College Readiness through Coherent State Policy. Washington, DC.

Ngo, F., Kwon, W., Melguizo, T., Bos, H., & Prather, G. (2013). Course placement in developmental mathematics: Do multiple measures work? Policy Brief 4. California Community College Collaborative. Rossier School of Education, University of Southern California. http://www.uscrossier.org/pullias/research/projects/sc-community-college/.

Ngo, F., & Kwon, W. (2015). Using multiple measures to make math placement decisions: Implications for access and success in community colleges. Research in Higher Education, 56(5), 442–470.

Robinson, J. P. (2011). Evaluating criteria for English learner reclassification: A causal-effects approach using a binding-score regression discontinuity design with instrumental variables. Educational Evaluation and Policy Analysis, 33(3), 267–292.

Schneider, M., & Yin, L. (2011). The hidden costs of community colleges. Washington, DC: American Institutes for Research. Retrieved from http://www.air.org/files/AIR_Hidden_Costs_of_Community_Colleges_Oct2011.pdf.

Schochet, P. A. (2006). Regression discontinuity design case study: National evaluation of Early Reading First. Princeton, NJ: Mathematica Policy Research.

Scott-Clayton, J., & Rodriguez, O. (2015). Development, discouragement, or diversion? New evidence on the effects of college remediation. Education Finance and Policy, 10(1), 4–45.

Singer, J., & Willett, J. (1993). It’s about time: Using discrete-time survival analysis to study duration and the timing of events. Journal of Educational Statistics, 18(2), 155–195.

Singer, J., & Willett, J. (2003). Applied longitudinal data analysis: Modeling change and event occurrence. New York: Oxford University Press.

Strong American Schools. (2008). Diploma to nowhere. Retrieved from http://www.edin08.com.

Urquiola, M., & Verhoogen, E. (2009). Class-size caps, sorting, and the regression-discontinuity design. American Economic Review, 99(1), 179–215.

Zuckerman, I. H., Lee, E., Wutoh, A. K., Xue, Z., & Stuart, B. (2006). Application of regression-discontinuity analysis in pharmaceutical health services research. Health Services Research, 41, 550–563.

Acknowledgments

The research reported here was supported by the Institute of Education Sciences, U.S. Department of Education, through Grant R305A100381 to the University of Southern California. Additional support was received from an internal grant from the Advancing Scholarship in the Humanities and Social Sciences (ASHSS) Initiative of the University of Southern California, Office of the Provost. We would first like to thank Bo Kim for exceptional research assistance. Special thanks to Will Kwon and Kristen Fong for providing support in replicating the results for other colleges, and to Holly Kosiewicz for insightful feedback. The manuscript benefited substantially from the comments of the following members of the advisory committee to this project: Paco Martorell, Sarah Reber, Lucrecia Santibanez, Juan Esteban Saavedra, and Gary Painter. Lastly, we want to thank the Los Angeles Community College District, its research department, its math faculty, and its students, for their active participation in this research project.

Disclaimer

The views contained herein are not necessarily those of the Institute of Education Sciences.

Additional information

George Prather is retired from the Los Angeles Community College District.

Cite this article

Melguizo, T., Bos, J.M., Ngo, F. et al. Using a Regression Discontinuity Design to Estimate the Impact of Placement Decisions in Developmental Math. Res High Educ 57, 123–151 (2016). https://doi.org/10.1007/s11162-015-9382-y

DOI: https://doi.org/10.1007/s11162-015-9382-y