Abstract

We consider goodness–of–fit tests for the distribution of the composed error in Stochastic Frontier Models. The proposed test statistic utilizes the characteristic function of the composed error term, and is formulated as a weighted integral of properly standardized data. The new test statistic is shown to be consistent and computationally convenient. Simulation results are presented whereby resampling versions of the new tests are compared to classical goodness–of–fit methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The stochastic frontier production model (SFM) was first introduced by Aigner, Lovell, and Schmidt (ALS) Aigner et al. (1977) and Meeusen and van den Broeck (1977), in the form of a Cobb-Douglas production function,

where Y is the maximum log–output obtainable from a vector of log–inputs \(X=({x}_{1},...\,,{x}_{d})\in {{\mathbb{R}}}^{d}\), \(\beta \in {{\mathbb{R}}}^{d},\,(d\ge 1)\), is an unknown vector of parameters, and ε = v − u, denotes the composed error term. The random component v is intended to capture the effects of purely random statistical noise (disturbances beyond the firm’s control), while u ≥ 0 is intended to capture the effects of technical inefficiency which are specific to each firm.

We now review earlier work on goodness–of–fit tests for certain aspects of the SFM. Schmidt and Lin (1984) and Coelli (1995) suggested tests of normality for the composed error term ε by means of the empirical third moment of the OLS residuals. A moment-based method employing skewness and excess kurtosis has also been proposed quite recently by Papadopoulos and Parmeter (2021). Lee (1983) proposed Lagrange Multiplier tests for the normal/half-normal and the normal/truncated-normal SFM within the Pearson family of truncated distributions. Kopp and Mullahy (1990) introduced GMM–based tests for the distribution of the inefficiency component u by simply assuming the noise component v to be symmetric, but not necessarily normally distributed. They also suggest a GMM–based test for the symmetry assumption utilizing odd order moments of residuals. Bera and Mallick (2002) also suggest tests that enjoy moment interpretations but they test their moment restrictions by means of the information matrix. Most of the aforementioned tests however are not omnibus, i.e. they may have negligible power against certain alternatives. While Wang et al. (2011) also suggest certain non-omnibus procedures, they are probably the first to apply specification procedures, such as the Kolmogorov–Smirnov test, that are omnibus, i.e. procedures which, being based on consistent tests, enjoy non-negligible power for arbitrary deviations from the null model, and not just for directive alternatives. These authors are also innovative by suggesting the use of the bootstrap in order to compute critical points and actually carry out the test in practice. A further innovation is brought forward by Chen and Wang (2012) who in effect propose to use the characteristic function (CF) for testing distributional specifications in SFMs.

One important aspect of the SFM specification methods is the distribution of the technical inefficiency term u. In this paper we proceed in the lines set forward by Wang et al. (2011) and Chen and Wang (2012) and suggest bootstrap–based omnibus specification tests for the composed error with special emphasis on the law of the inefficiency component u in SFMs that utilize the CF. Our tests make use of the fact that CFs are often easier to compute than densities or distribution functions, and also utilize the property that conditionally on the independence of u and v, the CF of the composed error term ε may easily be obtained from the product of the CFs of u and v. The rest of the paper unfolds as follows. In Section 2 we introduce the tests, discuss some aspects of the test statistics, prove consistency, and also consider estimation of parameters. In Section 3 we present a Monte Carlo study of a bootstrap–based version of the new tests in the case of a normal/exponential and a normal/gamma SFM. Conclusions and outlook are presented in Section 4. A few technical arguments are deferred to Appendices A and B . There is also an accompanying Supplement containing Monte Carlo results for some extra simulation settings.

2 Goodness–of–fit tests

In this section we consider tests for SFMs with exponentially distributed inefficiency and also tests for SFMs with gamma distributed inefficiency. In the first case the parameters are fully unspecified while in the latter case they are partially specified.

2.1 Tests for the composed error with exponential inefficiency

Let Z denote an arbitrary random variable, and recall that the CF of Z is defined by \({\varphi }_{Z}(t)={\mathbb{E}}({e}^{itZ})={\mathbb{E}}[\cos (tZ)+i\sin (tZ)]\equiv {C}_{Z}(t)+i{S}_{Z}(t), t \in {\mathbb{R}}\), with \(i=\sqrt{-1}\), where CZ(t) and SZ(t) denote the real and imaginary parts, respectively, of φZ(t). A few basic properties of CFs that will be used here are the following: (a) For the CF of Z it holds \({\varphi }_{Z}(-t)={\varphi }_{-Z}(t)=\overline{{\varphi }_{Z}(t)}\), where \(\overline{z}\) denotes the conjugate of the complex number z, (b) if Z has a symmetric around zero distribution, then its CF is real-valued, i.e. it holds SZ(t) ≡ 0, and hence φZ(t) ≡ CZ(t), and (c) if Z1 and Z2 are independent then \({\varphi }_{{Z}_{1}+{Z}_{2}}(t)={\varphi }_{{Z}_{1}}(t){\varphi }_{{Z}_{2}}(t)\).

Consider now the SFM in Eq. (1), and suppose that on the basis of data (Xj, Yj), j = 1, . . . , n, we wish to test the null hypothesis

where \({{{\rm{Exp}}}}(\theta )\) denotes the exponential distribution with density θ−1e−x/θ. At this stage the law of the pure statistical error v will be left unspecified.

In this connection, and since in the context of SFMs, u and v are assumed independent and v is typically assumed to have a distribution that is symmetric around zero, it readily follows that the CF of the composed error term may be computed as

and hence if in addition we assume that \({C}_{v}(t)\,\ne\, 0,\,t\,\in\, {\mathbb{R}}\), then

if and only if

However, Henze and Meintanis (2002) have shown that Eq. (5) is a characterization of the unit exponential distribution. Consequently (4) holds, if and only if (5) holds for all \(t\in {\mathbb{R}}\), which in turn only holds under the null hypothesis \({{{{\mathcal{H}}}}}_{0}\) in (2) with θ = 1. This fact justifies taking the left hand side of Eq. (4) as the point of departure of our test, but in order to reduce the test of the null hypothesis \({{{{\mathcal{H}}}}}_{0}\) to unit exponentiality, we will consider instead of ε the standardize error defined by \(\widetilde{\varepsilon }=\varepsilon /\theta\).

To this end recall that the SFM in (1) depends on the regression parameter β, that under the null hypothesis (2) this model also involves the exponential parameter θ and that the pure statistical error v may also involve an unknown parameter. Let \({\widehat{\varepsilon }}_{j}={Y}_{j}-{\widehat{\beta }}^{\top }{X}_{j}\), be the residuals of the SFM (1) under the null hypothesis \({{{{\mathcal{H}}}}}_{0}\). Clearly these residuals, besides being dependent on the regression parameter \(\widehat{\beta }\), they are also computed conditionally on suitable estimates of the aforementioned distributional parameters; see Section 3 for parameter estimation. We will write \({\widehat{\widetilde{\varepsilon }}}_{j}={\widehat{\varepsilon }}_{j}/\widehat{\theta },\,j=1,\ldots ,n\), for the respective standardized residuals. Then the left hand side of Eq. (4) may be estimated by

where

with Cn (resp. Sn) being an estimator of \({C}_{\tilde{\varepsilon }}\) (resp. \({S}_{\tilde{\varepsilon }}\)). In view of (4), Dn(t) should be close to zero under the null hypothesis \({{{{\mathcal{H}}}}}_{0}\) identically in \(t\in {\mathbb{R}}\), at least for large sample size n. Thus it is reasonable to reject \({{{{\mathcal{H}}}}}_{0}\) for large values of the test statistic

where w(t) > 0 is an integrable weight function–Footnote 1. The test figuring in (7) is an integrated and weighted test, and in this sense it is analogous to a Cramér-von Mises test, the only difference being that instead of the estimated distribution function used in the latter test, within Tn,w, and specifically in \({D}_{n}^{2}(t)\), we employ the estimated CF of the underlying law. In view of the uniqueness property of CFs and the positivity of the weight function w(t), formulation (7) leads to a test statistic that is (globally) consistent, and thus to an omnibus test; see Proposition 1. However the uniqueness property of CFs only holds if, as it is done in (7), this CF is considered over all possible arguments \(t\in {\mathbb{R}}\), and therefore the chi-squared tests suggested in Chen and Wang (2012) (see also Wang et al. (2011)) which are based on computing the empirical CF over a finite grid of points, are not omnibus. On the other hand chi-squared tests have the advantage of a simple limit null distribution with well known and tabulated critical points, and thus the practitioner does not necessarily need to resort to bootstrap resampling. Despite this advantage however, convergence to the limit chi-squared distribution may prove quite slow and thus often one has to revert back to bootstrap for actual test implementation; see for instance Wang et al. (2011).

While the consistency of the test based on Tn,w may be proved for a general class of weight functions, the choice w(t) = e−λ∣t∣, λ > 0, is particularly appealing from the computational point of view. To see this write Tn,λ for the test statistic in (8) with weight function e−λ∣t∣. Then after some straightforward algebra (refer to Appendix A for details) we obtain

where we write ∑j,k for the double sum ∑j∑k, and where \({\widehat{\widetilde{\varepsilon }}}_{jk}^{+}={\widehat{\widetilde{\varepsilon }}}_{k}+{\widehat{\widetilde{\varepsilon }}}_{j}\), and \({\widehat{\widetilde{\varepsilon }}}_{jk}^{-}={\widehat{\widetilde{\varepsilon }}}_{k}-{\widehat{\widetilde{\varepsilon }}}_{j}\), j, k = 1, …, n.

We now illustrate the role that the weight function e−λ∣t∣ plays in the test statistic Tn,λ. To this end we use expansions of the trigonometric functions \(\sin (\cdot )\) and \(\cos (\cdot )\), and after after some algebra (refer to Appendix B for details) we obtain from (8)

The “limit statistic” in the right–hand side of (9) measures normalized distance from unity of the sample mean of the standardized residuals \({\widehat{\widetilde{\varepsilon }}}_{j}\). Recall in this connection that under the null hypothesis \({{{{\mathcal{H}}}}}_{0}\), \({\mathbb{E}}(\widetilde{\varepsilon })=-1\), and thus this distance should vanish under \({{{{\mathcal{H}}}}}_{0}\), as n → ∞. However this same distance will also vanish under an alternative for which the standardized error term happens to have expectation equal to one. In conclusion taking a value of the weight parameter λ that is “too large” forces the test to depend on lower order moments of the residuals and should be avoided if the test is to have good power against alternatives with arbitrary moment structure. On the other hand, values of λ too close to the origin result in a test that is prone to numerical error due to periodicity of trigonometric functions.

Remark 1

It is clear from Eqs. (3)–(4) which are instrumental in defining our test statistic that (5) is robust to the law of the pure statistical error v, as long as this law satisfies \({C}_{v}(t)\ne 0,\,t\,\in\, {\mathbb{R}}\). Of course the test statistic is conditioned on a preliminary estimation step, and thus rejecting on the basis of the test in (8) implies rejection of the “entire” normal/exponential law for the composed error in that this entire law is present in the test statistic both at the estimation as well as at the test construction step following the estimation step. In this sense our test has power not only against non-exponential specifications for u, but also against any non-normal specification for v, such as the Student-t (see Wheat et al. (2019)) and the stable (see Tsionas (2012)) specification. We refer the interested reader to the accompanying Supplement for corresponding Monte Carlo results. In this connection we note that a large class of distributions with φZ ≠ 0 is the class of infinitely divisible laws (see Sasvári (2013), §3.11). At the same time, and while tailored specifically to the null hypothesis \({{{{\mathcal{H}}}}}_{0}\) of exponentiality in (2), our test may also be applied with any other law of v with a non-vanishing CF. To do so one has to apply (8) as test statistic but the residuals have to be computed via, say maximum likelihood, that takes into account the specific non-normal law postulated for v.

Continuing on the power properties and due to the uniqueness of CFs (see Sasvári (2013) Theorem 1.3.3), we maintain that the test statistic Tn,w defined by (8) has asymptotic power one as n → ∞ for arbitrary deviations from the null hypothesis \({{{{\mathcal{H}}}}}_{0}\). This result is formally stated and proved below. As it is already implicit X⊤ denotes vector transposition, and we also write \(\parallel X\parallel ={(\mathop{\sum }\nolimits_{k = 1}^{d}{x}_{k}^{2})}^{1/2}\) for the Euclidean length of X.

Proposition 1

Consider the SFM in (1) and suppose the following conditions hold: (C1) The CF of v satisfies φv ≠ 0, the regressor \(X\in {{\mathbb{R}}}^{d},\,d\ge 1\), has finite mean and (X, v, u) are mutually independent, (C2) the distributions of u and X are such that u + a⊤ X is not exponentially distributed for any d–vector a ≠ 0 (C3) the estimator \(\widehat{\beta }\) satisfies \(\widehat{\beta }\to b\) almost surely (a.s.) as n → ∞ for some \(b\in {{\mathbb{R}}}^{d}\), with b = β0 (the true value) under \({{{{\mathcal{H}}}}}_{0}\) and (C4) the weight function w > 0 is such that \({\int}_{{\mathbb{R}}}{t}^{2}w(t)dt \,<\, \infty\). Then for the test statistic in (8) it holds

a.s. as n → ∞, with D(t) = Se(t) + tCe(t), where Ce (resp. Se) denotes the real (resp. imaginary) part of the CF of ej(b) ≔ Yj − b⊤Xj, j = 1, . . . , n.

Proof.

For simplicity we assume the distributional parameters to be fixed under the null hypothesis and that specifically θ = 1. Then the following Taylor expansion of the cosine function around β = b

where

and b* is such that \(\parallel {b}^{* }-b\parallel \le \parallel \widehat{\beta }-b\parallel\), leads to

a.s. as n → ∞, so that Cn(t) → Ce(t) and likewise Sn(t) → Se(t). Thus \({D}_{n}^{2}(t)\to {D}^{2}(t)\), and since \({D}_{n}^{2}(t)\le {(1+| t| )}^{2}\), with \({\int}_{{\mathbb{R}}}{(1+| t| )}^{2}w(t)dt \,<\, \infty\) by (C4), we may invoke Lebesgue’s theorem of dominated convergence (see Jiang (2010), §A.2.3) and the proof of (10) is finished. Clearly Δw > 0 unless D(t) = 0 identically in t. Now write e = Y − b⊤X = ε − a⊤X = v − (u + a⊤X), where a = b − β0, so that by independence \({\varphi }_{e}(t)={\varphi }_{v}(t){\varphi }_{u+{a}^{\top }X}(-t)={\varphi }_{v}(t)({C}_{u+{a}^{\top }X}(t)-i{S}_{u+{a}^{\top }X}(t))\) and therefore since by (C1) φv ≠ 0, D ≡ 0 holds if and only if \({S}_{u+{a}^{\top }X}(t)=t{C}_{u+{a}^{\top }X}(t),\) identically in t, which is an established characterization of the exponential distribution; see Henze and Meintanis (2002) for tests based on this characterization, and Jammalamadaka and Taufer (2003) and Henze and Meintanis (2005) for reviews on testing for exponentiality. However condition (C2) rules out this possibility unless u follows an exponential distribution, in which case b = β0 (or a = 0), i.e. Δw = 0 only under the null hypothesis \({{{{\mathcal{H}}}}}_{0}\) figuring in (2). Thus Tn,w → ∞ a.s. as n → ∞ under alternatives and consequently the test which rejects \({{{{\mathcal{H}}}}}_{0}\) for large values of Tn,w is consistent. ■

Remark 2

Formally speaking, for fixed distribution of the regressor X with CF φX ≠ 0, condition (C2) is violated if \({\varphi }_{u}(t)={\left((1-it){\varphi }_{{a}^{\top }X}(t)\right)}^{-1}\). For the circumstances under which this violation is possible to become more transparent assume that X ≡ 1, i.e. assume that the simple location SFM, Y = β + ε, holds. Then this condition reads as φu(t) = (1−it)−1e−iAt, \(A=\mathop{\sum }\nolimits_{k = 1}^{d}{a}_{k}\) (the sum of the elements of the vector a), meaning that u = Z − A, with Z exponentially distributed. If this happens however, then we are not in line with the classical assumption that the support of the distribution of u is the non–negative real line.

2.2 Estimation for the normal/exponential case

As already mentioned the parameters of any given SFM are considered unknown and thus they should be estimated from the data (Xj, Yj), j = 1, . . . , n. Here we will illustrate the estimation procedure on the assumption that \(v \sim {{{\mathcal{N}}}}(0,{\sigma }_{v}^{2})\), i.e. that v follows a zero–mean normal distribution with variance \({\sigma }_{v}^{2}\). In this connection one of the most commonly used estimator is the maximum likelihood estimator (MLE), which is known to be consistent and asymptotically efficient.

In order to compute the normal/exponential likelihood function we note that the density for the composed error term ε, is given by (see Kumbhakar and Lovell (2000)),

where Φ( ⋅ ) denotes the standard normal distribution function. Based on this equation, the log-likelihood function for the sample may be written as

Since the log-likelihood function in (12) is non-linear, iterative computational methods are needed to be developed. To this end, a Matlab code was developed in which the unconstrained maximisation of (12) is done using the library function fminunc. For the implementation of this program the Quasi-Newton method is used, instead of the Newton-Raphson method, since the latter requires the calculation of second partial derivatives.

2.3 Tests for the composed error with gamma inefficiency

In this section we consider the test for a SFM with gamma distributed inefficiency term; see for example Tsionas (2000). By way of example we consider the null hypothesis

i.e. we consider testing for a gamma distribution with shape parameter equal to κ = 2, and unspecified value of θ. Recall in this connection the density of the gamma distribution is f(x; κ, θ) = (xκ−1/(Γ(κ)θκ))e−x/θ, with \({{\Gamma }}(\kappa )=\int\nolimits_{0}^{\infty }{x}^{\kappa -1}{e}^{-x}dx\).

Since the CF of a random variable Z following the gamma distribution is given by φZ(t) = (1−itθ)−κ, and by analogous steps as in Section 2 it follows that for κ = 2 the CF of the standardized composed error \(\widetilde{\varepsilon }=\varepsilon /\theta\) satisfies

and therefore suggest a test statistic analogous to (8) with

where Cn and Sn are defined in the same way as in (6) but now the residuals are estimated from the SFM Yj = β + εj under the normal/gamma null hypothesis (13) with κ = 2.

With some further algebra it follows that if we employ the same weight function w(t) = e−λ∣t∣, the test statistic is rendered in the following form which is convenient for computer implementation (refer to Appendix A for details):

with \({\widehat{\widetilde{\varepsilon }}}_{j,k}^{+}\) and \({\widehat{\widetilde{\varepsilon }}}_{j,k}^{-}\,\)j, k = 1, …, n, defined in exactly the same way as in (8).

The comments made in Remark 1 apply here too, i.e. the test defined in (16) may be used with any given law of v with real and non-vanishing CF, be it normal or non-normal, but we reiterate that this generality is conditioned on a proper estimation step that takes into account the specific law of v postulated.

Before closing this part we also wish to emphasize that the tests considered herein, and as far as the law of the inefficiency is concerned, are specific to the null hypotheses as stated, i.e. the test in (8) is specific to the null hypothesis of exponentiality figuring in (2) while the test in (16) is specific to the null hypothesis as stated in (13), and that in both cases the tests are not directional aiming against a specific alternative, but rather they have power against arbitrary deviations from the corresponding null hypothesis. On the other hand these tests may be appropriately modified to test a more general null hypothesis such as testing for a gamma distribution with unspecified value of κ, or to test a separate family of distributions like the popular half-normal specification for the technical efficiency term u. In doing so however, one has to take into account the specific structure of the CF of u under this particular specification and design the test analogously; see Section 4 for some extra discussion on this issue.

3 Simulations

3.1 Simulations for the normal/exponential case

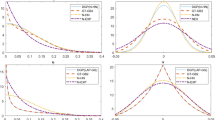

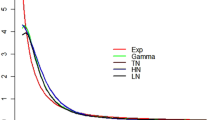

In this section we present the results of Monte Carlo study for the new test statistic given by Eq. (8).Footnote 2 Specifically under the null hypothesis we consider the normal/exponential SFM whereby \(v \sim {{{\mathcal{N}}}}(0,1)\) and \(u \sim {{{\rm{Exp}}}}(\theta )\) for θ = 0.5, 1.0, 3.0, 5.0, 8.0, 10.0, while the power of the test is computed against a normal/half–normal alternative hypothesis with the same Gaussian component, and the half–normal scale parameter set equal to σu = 0.5, 1.0, 3.0, 5.0, 8.0, 10.0.

We also compare the results of the proposed test statistic with those obtained from the classical Kolmogorov – Smirnov (KS) and Cramér–von Mises (CvM) tests. For ease of reference we report the equations defining the KS and CvM test statistics. To this end note that both these statistics utilize the empirical cumulative distribution function \({\widehat{F}}_{n}(\cdot )\) of the residuals \({\widehat{\varepsilon }}_{j}\) and the theoretical (assumed) cumulative distribution function \({F}_{0j}:= {F}_{0}({\widehat{\varepsilon }}_{j};{\widehat{\sigma }}_{v},\widehat{\theta })\) under the null hypothesis \({{{{\mathcal{H}}}}}_{0}\). The respective formulas are given by

and

with the assumed normal/exponential cumulative distribution function F0( ⋅ ) being computed by numerical integration. For recent developments in computing the cumulative distribution function of the composed error see Amsler et al. (2019) and Amsler et al. (2021) that consider the popular case of a normal/half-normal SFM.

We consider the simple location SFM in Eq. (1), Yj = β + εj, with β estimated by MLE. The number of Monte Carlo replications is M = 1000, with sample size n = 100, 200, 300, 500, and nominal level of significance α = 5%. For the new test statistic Tn,λ we consider λ = 0.5, 1.0, 2.0, 3.0, 4.0 and 5.0.

Since however the parameters of the model are considered unknown, we employ a parametric bootstrap version of the tests which resamples from the null distribution with estimated parameters, and thus the extra variation due to parameter estimation is taken into account in computing critical values of test statistics; see for instance Babu and Rao (2004) for theory of the parametric bootstrap. However, the implementation of a Monte Carlo simulation employing the parametric bootstrap will potentially incur a great cost in computational time due to the nested iteration structure involved with attempting to evaluate a bootstrap procedure in a Monte Carlo. To alleviate this computational burden, the so-called “warp speed” bootstrap procedure will be used to approximate the bootstrap critical value in the Monte Carlo study. This bootstrap procedure which has been put on a firm theoretical basis by Giacomini et al. (2013) and Chang and Hall (2015) capitalizes on the repetition inherent in the Monte Carlo simulation to produce bootstrap replications, rather than relying on a separate “bootstrap loop”. More specifically we calculate the bootstrap test statistic for only one bootstrap sample (single double-resampling) for each of the M Monte Carlo iterations, and ultimately obtain M bootstrap sample test statistics at the end of the simulation. The steps in performing the warp-speed version of the parametric bootstrap are itemized below for the normal/exponential case. For the normal/gamma case, step (B3) needs to be modified in an obvious manner.

-

(B1) Draw a Monte Carlo sample \(\{{Y}_{j}^{(m)},{X}_{j}^{(m)}\},\,j=1,...\,,n\), compute the estimator–vector \({\widehat{{{\Theta }}}}^{(m)}\), where \({\widehat{{{\Theta }}}}^{(m)}=({\widehat{\beta }}^{(m)},{\widehat{\sigma }}_{v}^{(m)2},{\widehat{\theta }}^{(m)})\).

-

(B2) On the basis of \({\widehat{{{\Theta }}}}^{(m)}\) calculate the residuals \({\widehat{\varepsilon }}_{j}^{(m)}\) and the corresponding test statistic \({T}_{m}=T({\widehat{\varepsilon }}_{1}^{(m)},\ldots ,{\widehat{\varepsilon }}_{n}^{(m)})\).

-

(B3) Generate i.i.d. bootstrap errors \({\varepsilon }_{j}^{(m)},\,j=1,\ldots ,n\), where \({\varepsilon }_{j}^{(m)}={v}_{j}^{(m)}-{u}_{j}^{(m)}\), with \({v}_{j}^{(m)} \sim {{{\mathcal{N}}}}(0,{\widehat{\sigma }}_{v}^{2})\) and \({u}_{j}^{(m)} \sim {{{\rm{Exp}}}}(\widehat{\theta })\), and independent.

-

(B4) Define the bootstrap observations \({Y}_{j}^{(m)}={\widehat{\beta }}^{(m)}{X}_{j}+{\varepsilon }_{j}^{(m)}\), j = 1, . . . , n.

-

(B5) Based on \(\{{Y}_{j}^{(m)},{X}_{j}\}\) compute the bootstrap estimator \({\widehat{{{\Theta }}}}_{b}^{(m)}=({\widehat{\beta }}_{b}^{(m)},{\widehat{\sigma }}_{b,v}^{2(m)},{\widehat{\theta }}_{b}^{(m)})\), and the corresponding bootstrap residuals, say, \({\widehat{\epsilon }}_{j}^{(m)}\), j = 1, . . . , n.

-

(B6) Compute the test statistic \({\widehat{T}}_{m}:= T({\widehat{\epsilon }}_{1}^{(m)},\ldots ,{\widehat{\epsilon }}_{n}^{(m)})\), based on the bootstrap residuals.

-

(B7) Repeat steps (B1)–(B6), for m = 1, . . . , M, leading to test–statistic values Tm and bootstrap statistic values \({\widehat{T}}_{m},\,m=1,...\,,M\).

-

(B8) Set the critical point equal to \({\widehat{T}}_{(M-\alpha M)}\), where \({\widehat{T}}_{(m)}\), m = 1, …, M, denote the order statistics corresponding to \({\widehat{T}}_{m}\), and α denotes the prescribed size of the test.

In the Table 1 the size results (percentage of rejection rounded to the nearest integer) for the tests Tn,λ, KS and CvM are presented at level of significance α = 5%, corresponding to the \({{{\mathcal{N}}}}(0,1)/{{{\rm{Exp}}}}(\theta )\) null hypothesis. Table 2 shows power results for the normal/half–normal alternative hypothesis \({{{\mathcal{N}}}}(0,1)/{{{\rm{HN}}}}(0,{\sigma }_{u}^{2})\). For power results corresponding to some extra simulation settings we refer the interested reader to the accompanying Supplement. From Table 1 we see that for all three tests the empirical size varies with the value of the exponential parameter θ, while for the new test Tn,λ figures also vary with the weight parameter λ. Overall however and with a few exceptions the nominal size is satisfactorily recovered. Turning to Table 2 we observe that the power is low for all tests when the sample size n is small with lower values of the half–normal parameter σu, but progressively increases with n as σu gets larger, in which case the new test Tn,λ enjoys a clear advantage against its competitors, at least for higher values of the weight parameter λ.

3.2 Simulations for the normal/gamma case

In Table 3 we present level results for the test in (16) under the normal/gamma null hypothesis with \({v}_{j} \sim {{{\mathcal{N}}}}(0,1)\) and uj ~ Gamma(κ = 2, θ) for the same values of θ considered in §3.1. As before estimators of parameters were obtained by maximum likelihood. The results in Table 3 show that the three tests respect the nominal size to a satisfactory degree. The power results are reported in the Tables 4, 5, and 6, and correspond to powers of the test based on Tn,λ in (16) as well as the KS and CM tests for the null hypothesis normal/gamma with κ = 2, against the alternatives normal/exponential (i.e. normal/gamma with κ = 1), normal/gamma with κ = 3 and normal/gamma with κ = 0.5, respectively. The percentage of rejection varies with the alternative under consideration, being relatively low for κ = 3, but increases considerably for the other two alternatives. The message that may be drawn from these results is that the new test with larger values of λ (λ = 4 or 5) seems to be preferable to its competitors almost uniformly with respect to the sample size n and the alternative being considered.

4 Conclusions

We propose goodness–of–fit tests for the distribution of the composed error ε = v − u in stochastic frontier production models. The new test statistics are based on the characteristic function of the composed error term ε and they are omnibus, i.e. they possess non–negligible power asymptotically for any given alternative under test. Moreover, bootstrap versions of the tests are shown to have competitive power compared to the classical Kolmogorov–Smirnov and Cramér-von Mises tests in finite samples.

We wish to close by stressing the fact that the tests presented herein make use of specific properties of the CF underlying the null hypothesis and as such they are tailored for specific hypotheses under test. If they are to be modified to apply to other cases such as the popular normal/half–normal or normal/gamma, or any other specification, then one has to employ alternative properties analogous to (4) and (14) that apply to the CF of the specific distribution under test; see for instance the test for the skew normal distribution suggested by Meintanis (2007) which may be used to test the normal/half-normal SFM. On the other hand there also exists a general formulation for a test statistic based on the CF that may be applied to any specification of the law of the composed error ε. To this end suppose we wish to test a particular specification for the composed error that involves a parameter vector, say Θ, containing regression as well as any distributional parameter of this specification. Then this general formulation is given by

where φn(t) = Cn(t) + iSn(t) is the empirical CF computed from estimated residuals, see for instance below Eq. (6), and φε(t; Θ) is the CF under the null hypothesis, both computed on the basis of the estimator \(\widehat{{{\Theta }}}\) of the parameter vector Θ obtained under the particular parametric specification underlying the null hypothesis. While tests such as the above have the advantage of full generality, this formulation is based on the premise that the null CF φε(t; Θ) is known and has a rather simple expression, so that numerical integration is not necessary. Otherwise tests like the ones defined by (8) and (16) which are tailored, i.e. they make use of the specific structure of the CF under the null hypothesis, may be preferable, at least from the computational point of view.

References

Aigner DJ, Lovell CAK, Schmidt P (1977) Formulation and estimation of stochastic frontier models production function models. J Econometr 6:21–37

Amsler C, Papadopoulos A, Schmidt P (2021) Evaluating the cdf of the skew normal distribution. Empiric Econom 60:3171–3202

Amsler C, Schmidt P, Tsay WJ (2019) Evaluating the CDF of the distribution of the stochastic frontier composed error. J Product Anal 52:29–35

Babu GJ, Rao CR (2004) Goodness–of–fit tests when parameters are estimated. Sankhya 66:63–74

Bera, A. K., Mallick, N. C. (2002) Information matrix tests for the composed error frontier model. In: Balakrishnan N (Ed) Advances on methodological and applied aspects of probability and statistics. Gordon and Breach Science Publishers, London

Chang J, Hall P (2015) Double–bootstrap methods that use a single double-bootstrap simulation. Biometrika 102:203–214

Chen YT, Wang HJ (2012) Centered-residuals-based moment estimator and test for stochastic frontier models. Econometr Rev 31:625–653

Coelli T (1995) Estimators and hypothesis tests for a stochastic frontier function: A Monte Carlo analysis. J Product Anal 6:247–268

Giacomini R, Politis DN, White H (2013) A warp-speed method for conducting Monte Carlo experiments involving bootstrap estimators. Econometr Theory 29:567–589

Henze N, Meintanis SG (2002) Goodness–of–fit tests based on a new characterization of the exponential distribution. Commun Statist - Theor Meth 31:1479–1497

Henze N, Meintanis SG (2005) Recent and classical tests for exponentiality: a partial review with comparisons. Metrika 61:29–45

Jammalamadaka SR, Taufer E (2003) Testing exponentiality by comparing the empirical distribution function of the normalized spacings with that of the original data. J Nonparametr Statist 15:719–729

Jiang J (2010) Large Sample Techniques for Statistics. Springer, New York

Kopp RJ, Mullahy J (1990) Moment-based estimation and testing of stochastic frontier models. J Econometr 46:165–183

Kumbhakar SC, Lovell KCA (2000) Stochastic Frontier Analysis. Cambridge University Press, Cambridge, UK

Lee LF (1983) A test for distributional assumptions for the stochastic frontier functions. J Econometr 22:245–267

Meeusen W, van den Broeck J (1977) Efficiency estimation from Cobb-Douglas production functions with composed error. Inter Econom Rev 18:435–444

Meintanis SG (2007) A Kolmogorov-Smirnov type test for skew normal distributions based on the empirical moment generating function. J Statist Plann Inferen 137:2681–2688

Papadopoulos A, Parmeter CF (2021) Type II failure and specification testing in the stochastic frontier model. Europ J Operat Resear 293:990–1001

Sasvári, Z. (2013) Multivariate Characteristic and Correlation Functions. De Gruyter: Berlin

Schmidt P, Lin T (1984) Simple tests of alternative specifications in stochastic frontier models. J Econometr 24:349–361

Tsionas EG (2000) Full likelihood inference in normal-gamma stochastic frontier models. J Product Anal 13:183–205

Tsionas EG (2012) Maximum likelihood estimation of stochastic frontier models by the Fourier transform. J Econometr 170:234–248

Wang WS, Amsler C, Schmidt P (2011) Goodness of fit tests in stochastic frontier models. J Product Anal 35:95–118

Wheat P, Stead AD, Greene WH (2019) Robust stochastic frontier analysis: a Student’s t half normal model with application to highway maintenance costs in England. J Product Anal 51:21–38

Acknowledgements

The authors express their sincere appreciation for the constructive comments and helpful suggestions of the Associate Editor and two anonymous reviewers.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Appendices

Appendix A

Starting from Eq. (6) we obtain

where we write ∑j,k for the double sum ∑j∑k. Also recall the trigonometric identities

Now plug the above expression for \({D}_{n}^{2}(t)\) into the test statistic (7) and substitute the above product formulae, and integrate term-by-term the resulting expression. Then after some grouping we obtain (8) by making use of the integrals

Equation (16) may be proved by following analogous steps, but we also need the extra integrals

Appendix B

Starting from Eq. (6) and using \(\sin (z)=z-({z}^{3}/3!)+\ldots\) and \(\cos (z)=1-({z}^{2}/2!)+\ldots\), we obtain (in increasing powers of t)

and by squaring

Plugging the above expression in Eq. (7) and integrating term-by-term leads to

and by taking the limit as λ → ∞, we readily obtain (9), where we made use of the integral

Rights and permissions

About this article

Cite this article

Meintanis, S.G., Papadimitriou, C.K. Goodness--of--fit tests for stochastic frontier models based on the characteristic function. J Prod Anal 57, 285–296 (2022). https://doi.org/10.1007/s11123-022-00632-5

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11123-022-00632-5