Abstract

In aerobics competitions, the training and performance of athletes and coaches need to be evaluated with real-time data. Traditional data recording methods have limitations: they rely on manual recording or are constrained by the number and placement of sensors. This study uses light sensors and machine learning techniques to develop an application that detects, records, and simulates aerobics competition data in real time. Light sensors capture the athletes' movement trajectories and posture information, and machine learning algorithms analyze and simulate these data to provide more accurate and comprehensive competition data. The light sensors accurately detect the posture and movements of the human body and transmit the data to a computer through a data transmission module. The sensor data are then processed and analyzed by machine learning algorithms trained to recognize specific movements and postures and compare them with preset criteria. The results show that, through the application, athletes and coaches can obtain competition data in real time and use the resulting analysis and feedback to improve training and the accuracy of performance evaluation.

1 Introduction

Competitive aerobics is a sport performed to music that combines movements of varying difficulty to showcase the athletes' performance (Yue 2021). Although it is not an Olympic event, it grew out of aerobic exercise, and after years of development it has attracted increasing attention worldwide and entered a new era of development. Because athletes need good physical form, the form of competitive aerobics athletes is a particularly important research topic (Masoga and Mphafudi 2022). Real-time monitoring systems, for their part, have evolved from early systems that used dedicated cables as the transmission medium, through first-generation analog monitoring that relied on dedicated storage devices and second-generation digital monitoring, to third-generation networked monitoring enabled by advances in network technology (Yang et al. 2019). Real-time monitoring has therefore progressed from analog to digital to networked systems. Intelligent real-time monitoring lets cameras replace the human eye and computers replace human operators, shifting from passive to active monitoring and from after-the-fact analysis to in-event analysis and early warning. It achieves reliable 24/7 monitoring and frees video operators from the monotonous task of "staring at the screen" (Dodero et al. 2021). It also filters out irrelevant information, reducing the transmission and storage of useless data, and further improves monitoring capability, operators' efficiency in interpreting video, and response speed, while saving financial investment and allowing video resources to play a larger role in decision-making.
Such systems are suited to everyday security management and can respond promptly to abnormal situations (Salih and Younis 2021). In image processing, the task of object detection is to separate the foreground from the background and then locate and classify the foreground targets of interest. Traditional image processing relied on hand-crafted feature representations, such as algorithms based on edge features and image formalization, and classifiers were trained on the features extracted by these manually defined algorithms to perform object detection (Wei et al. 2019). Although such algorithms avoid the main drawbacks of background modeling, they can be strongly affected by environmental factors and are often not robust. As traffic scenes grow more complex, traditional object detection and tracking algorithms can no longer meet the detection accuracy and efficiency requirements of traffic video processing systems (Cao 2016). In recent years, with the spread of machine learning, object detection algorithms based on machine learning have made significant progress in both accuracy and speed, and they are one of the focuses of this article. The practical significance of this research lies in analyzing aerobics competition data in real time on the basis of machine-learning-driven real-time monitoring and precise detection, and in capturing the complete set of movements in an aerobics competition (Ren and Wang 2022).

In aerobics competitions, light sensors can give athletes and coaches more accurate, real-time data and thereby improve training and evaluation. Traditional methods of recording aerobics competition data have several shortcomings. Manual recording is error-prone and adds workload and time cost, while the number and placement of sensors limit the coverage and accuracy of data acquisition. These problems prevent traditional methods from providing comprehensive and accurate data. Optical sensors exploit the properties of light to achieve high sensitivity and accuracy. A light sensor can detect an athlete's movement trajectory and posture in real time and output the data in digital form, so arranging light sensors around the competition venue allows the athletes' movements and postures to be recorded comprehensively and in detail. The rapid development of machine learning has also provided powerful tools for processing and analyzing optical sensor data: deep learning models can be trained to identify specific movements and postures and compare them with preset criteria, and machine learning algorithms can extract patterns and features from large amounts of data, further improving the reliability and accuracy of the results. Combining optical sensor detection with machine learning therefore has great potential for real-time data simulation in aerobics competitions. Real-time detection and simulation of competition data can give athletes and coaches more accurate and comprehensive data, and thus better training and evaluation tools.
This not only helps to further promote the development of aerobics, but also brings more possibilities for the innovation and development of the fitness industry.

2 Related work

Competitive aerobics is derived from traditional aerobic fitness exercise and is likewise performed to music; it showcases continuous, complex, and difficult skills (Heijnen et al. 2016). Aerobics athletes develop flexibility and strength by continuously integrating basic movements and practicing difficult elements. Although competitive aerobics has a relatively short history and is currently a non-Olympic event, it has a unique appeal, a well-developed competition system and rules, and great potential for development (Aleksandraviciene et al. 2015). Aerobics uses sets of movements as its main competitive means; it is highly expressive, and its unique "beauty" can be classified as human beauty and sports beauty (Moshenska and Petrov 2020). Aerobics can improve athletes' performance ability. Standardized movements display beautiful postures, physical beauty is the foundation of aerobics, and the activity level of athletes affects their skill level in competition. Physical data on athletes in competition have therefore attracted wide attention from researchers. One study examined participants in the World Athletic Aerobics Championships and statistically analyzed test data to determine the optimal morphological characteristics of competitive aerobics athletes in terms of height, weight, index, and skinfold thickness (Wang et al. 2018).

As a branch of image processing, moving-object detection and tracking for intelligent real-time monitoring has high application potential and academic value and has therefore received considerable attention from researchers at home and abroad. VSAM (Video Surveillance and Monitoring) is a visual monitoring project that integrates multiple sensors. It is intended mainly for future urban surveillance research and military situation analysis, such as observing dangerous places like battlefields, and it automatically collects and disseminates real-time battlefield information (Zhang et al. 2021). Other work addresses the detection, tracking, and analysis of targets exhibiting abnormal behavior and the raising of alarms for them. Once such an alarm system is switched on, the information captured by the cameras is transmitted to the system software through computers and real-time remote monitoring, and hidden dangers in the monitored environment are detected automatically to trigger the alarm (Kim et al. 2019). Developed in collaboration with the American chip company Texas Instruments (TI) and built on TI DSP chips as its hardware, this system provides automatic recognition. Machine learning has become an effective technology for data analysis, target recognition, and classification in computer systems, encompassing various innovative learning methods and many forms of ensemble learning, and the application prospects of these algorithms continue to grow. The essence of machine learning has been described as a computer searching, within a given set of basis functions, for the one that optimizes an objective function (Mahesh 2020). From manually labeled sample observations, a mathematical model can be built and then used to solve a series of similar new problems.
This approach extracts patterns from a large number of observed samples and uses these features to predict data that cannot be observed directly but can be quantified. On this basis, the perceptron model, a single-layer neural network, was proposed as an early prototype of artificial neural networks (Ghiasi-Shirazi 2019). It can complete only simple linear classification tasks and, limited by the hardware computing power of its time, cannot handle complex computations.

3 Real-time monitoring and machine learning

3.1 Machine learning

The light sensor converts the optical signal into an electrical signal and captures the athlete's movement and posture through spatial measurements. In an aerobics competition, light sensors can be placed at different locations around the field so that the athlete's behavior is fully perceived. The sensors yield large amounts of data, including the athlete's position, speed, and angles. These data are passed to a machine learning algorithm for feature extraction and analysis. In the feature extraction phase, the motion data detected by the light sensors are converted into digital form and the key motion and posture features are extracted. The features are then recalibrated with a squeeze-and-excitation (SE) module. The squeeze operation compresses each two-dimensional feature channel into a single real number that describes the global distribution of the motion features in that channel, so its output has one value per input channel. This lets even the layers close to the input use information from a global receptive field. The excitation operation, similar to the gating mechanism in recurrent neural networks, learns a weight for each channel and thereby models the dependencies between feature channels, which helps capture the interactions between different features in the motion data. Finally, a reweighting step multiplies the learned channel weights onto the original features channel by channel, recalibrating them so that key feature channels are emphasized and less useful ones are suppressed. The SE module thus consists of two operations, Squeeze and Excitation, as shown in formula (1).
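Formula (1) is not reproduced in this text. As a rough illustration of the squeeze, excitation, and reweighting steps, the NumPy sketch below follows the widely used SE design: global average pooling (squeeze), a two-layer bottleneck with ReLU and a sigmoid (excitation), and channel-wise scaling (reweighting). The reduction ratio `r`, the random weights, and the feature-map shape are illustrative assumptions, not values from this study.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def squeeze_excitation(features, w1, w2):
    """Recalibrate a (C, H, W) feature map channel by channel.

    w1 has shape (C // r, C) and w2 has shape (C, C // r), where r is the
    reduction ratio of the bottleneck (an assumed hyperparameter).
    """
    # Squeeze: global average pooling turns each H x W channel into one number.
    z = features.mean(axis=(1, 2))                  # shape (C,)
    # Excitation: FC -> ReLU -> FC -> sigmoid yields one weight in (0, 1) per channel.
    s = sigmoid(w2 @ np.maximum(0.0, w1 @ z))       # shape (C,)
    # Reweighting: scale every channel of the original features by its weight.
    return features * s[:, None, None], s


rng = np.random.default_rng(0)
C, H, W, r = 8, 5, 5, 4
x = rng.standard_normal((C, H, W))      # stand-in for sensor-derived feature maps
w1 = rng.standard_normal((C // r, C))   # hypothetical learned weights
w2 = rng.standard_normal((C, C // r))
y, s = squeeze_excitation(x, w1, w2)
print(y.shape, s.round(3))
```

In a trained network `w1` and `w2` are learned by backpropagation; here they are random only to show the data flow.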

The common metrics for evaluating counting methods are the mean absolute error (MAE) and the mean squared error (MSE), defined in formulas (2) and (3).
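As a minimal sketch, assuming formulas (2) and (3) follow the usual definitions MAE = (1/N) Σ|ŷ_i − y_i| and MSE = (1/N) Σ(ŷ_i − y_i)², the function below computes both for lists of predicted and ground-truth counts. Many counting papers report the square root of the mean squared error under the name MSE, so the sketch returns that value as well; which convention this paper uses is not stated here. The counts are toy numbers.

```python
import math


def counting_errors(predicted, actual):
    """Return (MAE, MSE, RMSE) between predicted and ground-truth counts."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(actual)
    mae = sum(abs(p - a) for p, a in zip(predicted, actual)) / n
    mse = sum((p - a) ** 2 for p, a in zip(predicted, actual)) / n
    return mae, mse, math.sqrt(mse)


# Toy counts for three hypothetical test clips.
mae, mse, rmse = counting_errors([10, 12, 7], [11, 12, 5])
print(mae, round(mse, 4), round(rmse, 4))   # 1.0 1.6667 1.291
```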

The Convolutional Block Attention Module (CBAM) automatically locates key features along both the channel and spatial dimensions and recalibrates the feature map accordingly, strengthening high-response features. The specific operation is shown in formula (4).
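Formula (4) is not reproduced in this text. For reference, the formulation in the original CBAM paper applies channel attention M_c and then spatial attention M_s to a feature map F, where σ is the sigmoid, the MLP is shared between the two pooled descriptors, ⊗ is element-wise multiplication with broadcasting, f^{7×7} is a convolution with a 7 × 7 kernel, and the pooling inside M_s is taken along the channel axis. That this paper uses exactly this form is an assumption.

```latex
\begin{aligned}
M_c(F)  &= \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big),
        &\quad F'  &= M_c(F) \otimes F,\\
M_s(F') &= \sigma\big(f^{7\times 7}([\mathrm{AvgPool}(F');\ \mathrm{MaxPool}(F')])\big),
        &\quad F'' &= M_s(F') \otimes F'.
\end{aligned}
```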

As shown in formula (5), the density map produced at the final output is supervised with a loss function L_d computed between the predicted density estimation map P and the ground-truth density distribution.
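Formula (5) is not reproduced here. A common choice in density-map counting, assumed for this sketch, is the pixel-wise Euclidean loss L_d = (1/2N) Σ_i ‖P_i − G_i‖², where G_i is the ground-truth density map of sample i. The sketch also shows that summing a density map gives the estimated count. The maps are toy arrays.

```python
import numpy as np


def density_loss(pred, gt):
    """Euclidean loss between N predicted and ground-truth density maps.

    pred, gt: arrays of shape (N, H, W). Computes 1/(2N) * sum_i ||P_i - G_i||^2.
    """
    if pred.shape != gt.shape:
        raise ValueError("prediction and ground truth must have the same shape")
    n = pred.shape[0]
    return float(np.sum((pred - gt) ** 2) / (2 * n))


def estimated_counts(pred):
    # Integrating (summing) a density map gives the estimated count per sample.
    return pred.sum(axis=(1, 2))


P = np.full((2, 4, 4), 0.25)   # toy predicted maps: each sums to 4.0
G = np.zeros((2, 4, 4))
G[:, 0, 0] = 4.0               # toy ground truth: same count, all mass in one pixel
print(density_loss(P, G), estimated_counts(P))
```

The example illustrates why a pixel-wise loss is used alongside counting metrics: the counts match exactly, yet the loss is non-zero because the mass is spread differently.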

To further improve the network's ability to distinguish image foreground from background, and thus its counting accuracy, this paper introduces a weighted cross-entropy loss that supervises the foreground and background prediction errors; it is calculated as shown in formula (6).
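A minimal NumPy sketch of a pixel-wise weighted binary cross-entropy for foreground/background separation, assuming the form L = −(1/M) Σ [w_fg · y · log p + w_bg · (1 − y) · log(1 − p)] over M pixels. The weights `w_fg` and `w_bg` are hypothetical defaults, since formula (6) and its weights are not given in this text.

```python
import numpy as np


def weighted_bce(prob, mask, w_fg=2.0, w_bg=1.0, eps=1e-7):
    """Pixel-wise weighted binary cross-entropy.

    prob: predicted foreground probabilities in [0, 1]; mask: 1 = foreground, 0 = background.
    w_fg and w_bg are assumed class weights; raising w_fg penalizes missed foreground more.
    """
    p = np.clip(prob, eps, 1.0 - eps)       # avoid log(0)
    loss = -(w_fg * mask * np.log(p) + w_bg * (1 - mask) * np.log(1 - p))
    return float(loss.mean())


prob = np.array([0.5, 0.5])
mask = np.array([1, 0])
print(weighted_bce(prob, mask))   # (2 + 1) * ln 2 / 2 ≈ 1.0397
```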

The spatial size of a convolutional layer's output is determined by the input size, the kernel size, and the stride (together with any padding), whereas the number of convolutional filters determines only the number of output channels. The output size is therefore given by Eq. (7), where I is the width or height of the input image.
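A small helper for the standard output-size relation O = ⌊(I − K + 2P)/S⌋ + 1, with kernel size K, stride S, and zero padding P per side. Whether Eq. (7) includes a padding term is not visible in this text, so `p` defaults to 0 here as an assumption.

```python
def conv_output_size(i, k, s=1, p=0):
    """Output width/height of a convolution: floor((I - K + 2P) / S) + 1.

    i: input width or height, k: kernel size, s: stride, p: zero padding per side.
    """
    if k > i + 2 * p:
        raise ValueError("kernel larger than padded input")
    return (i - k + 2 * p) // s + 1


print(conv_output_size(224, 7, s=2, p=3))   # 112
print(conv_output_size(5, 3))               # 3
```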

The computation of the convolutional layer, whose output size is given by formula (7), is linear and cannot by itself handle nonlinear cases, so a CNN applies a nonlinear activation function. The most common choice is ReLU, a piecewise linear function that nonetheless provides nonlinear expressive power: after ReLU, some samples yield 0 while others yield WU + B, where U is the input to the activation layer. Although ReLU is piecewise linear, the output space is still a nonlinear transformation of the input space. ReLU converges quickly and is cheap to compute. Its definition is given in (8):
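The definition in (8) is not reproduced in this text; the standard ReLU is f(x) = max(0, x). The sketch below, with made-up values for W, B, and U, shows the two cases described above (an output of 0 and an output of WU + B) and why a piecewise linear function still breaks linearity.

```python
import numpy as np


def relu(x):
    """ReLU activation f(x) = max(0, x), applied element-wise."""
    return np.maximum(0.0, x)


# Toy layer: W, B and the input U are made-up values.
W = np.array([[1.0, -1.0], [0.5, 2.0]])
B = np.array([0.0, -1.0])
U = np.array([1.0, 2.0])
print(relu(W @ U + B))   # [0.  3.5]: one unit outputs 0, the other outputs WU + B

# Piecewise linear but not linear overall: f(a + b) != f(a) + f(b).
a, b = 1.0, -2.0
print(relu(a + b), relu(a) + relu(b))   # 0.0 1.0
```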

Each grid cell predicts B bounding boxes, and the output for each box consists of the target's position coordinates (x, y, w, h) and a confidence value. Here x and y are the offsets of the box center relative to the bounds of its grid cell, usually normalized; w and h are the width and height of the box relative to the whole image. The confidence reflects the YOLO network's assessment of each prediction: whether the box contains a target of interest and how accurately the box is positioned. The confidence is calculated as follows:
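For reference, the original YOLO formulation defines the confidence as Pr(Object) × IoU(pred, truth), which is 0 when no object is present in the cell. The sketch below illustrates the (x, y, w, h) encoding described above for one box; the grid size S = 7 and the 448 × 448 image size are assumptions borrowed from the original YOLO setup, not values stated in this paper.

```python
def encode_box(cx, cy, bw, bh, img_w, img_h, S=7):
    """Encode a box (center cx, cy and size bw, bh, in pixels) YOLO-style.

    Returns the responsible grid cell (row, col) and (x, y, w, h), where x, y are the
    center offsets within that cell in [0, 1) and w, h are normalized by the image size.
    """
    cell_w, cell_h = img_w / S, img_h / S
    col = min(int(cx // cell_w), S - 1)
    row = min(int(cy // cell_h), S - 1)
    x = cx / cell_w - col
    y = cy / cell_h - row
    return row, col, (x, y, bw / img_w, bh / img_h)


row, col, (x, y, w, h) = encode_box(100, 200, 64, 128, img_w=448, img_h=448)
print(row, col, x, y, round(w, 4), round(h, 4))   # 3 1 0.5625 0.125 0.1429 0.2857
```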

Each grid cell also predicts C conditional class probabilities, Pr(Class_i | Object), from which the class probabilities over the whole image are obtained. Each grid cell predicts only one set of class probabilities, regardless of the number of bounding boxes B. At test time, these conditional class probabilities are multiplied by the individual box confidence predictions, as in formula (10):
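Formula (10) is not reproduced in this text. In the original YOLO formulation, multiplying the conditional class probability by the box confidence gives a class-specific confidence score for each box, which encodes both the probability of that class appearing in the box and how well the box fits the object. That this paper uses exactly this form is an assumption.

```latex
\Pr(\mathrm{Class}_i \mid \mathrm{Object}) \cdot \Pr(\mathrm{Object}) \cdot \mathrm{IoU}^{\mathrm{truth}}_{\mathrm{pred}}
  = \Pr(\mathrm{Class}_i) \cdot \mathrm{IoU}^{\mathrm{truth}}_{\mathrm{pred}}
```

With an S × S grid, B boxes per cell, and C classes, the network output is therefore an S × S × (5B + C) tensor.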

The intersection over union (IoU) is calculated as follows:
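Since the IoU formula itself is not reproduced here, the following is a minimal sketch of the standard definition, IoU = area(A ∩ B) / area(A ∪ B), for axis-aligned boxes given by corner coordinates (an assumed box format).

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2), x2 > x1, y2 > y1."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)   # 0 when the boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))   # 1 / 7 ≈ 0.142857
print(iou((0, 0, 1, 1), (2, 2, 3, 3)))   # 0.0
```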

In object detection, the precision P is the proportion of correctly predicted positive samples among all samples predicted as positive, and the recall R is the proportion of correctly recognized objects of interest among all objects of interest in the sample. They are calculated as follows:
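A minimal sketch of the standard definitions P = TP/(TP + FP) and R = TP/(TP + FN), where TP, FP, and FN are the numbers of true positives, false positives, and false negatives. The counts in the example are made up.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from detection counts; returns 0.0 when a denominator is 0."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


p, r = precision_recall(tp=8, fp=2, fn=4)
print(p, round(r, 4))   # 0.8 0.6667
```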

3.2 Real-time monitoring

For the improved C3D network, let D_F denote the spatial width and height of the input feature map, which has size D_F × D_F × M, and let the output have N channels. A standard convolutional layer is parameterized by a kernel K of size D_K × D_K × M × N, so its computational cost depends on the number of input channels M, the number of output channels N (the number of filters), the kernel size D_K × D_K, and the feature-map size D_F × D_F. A standard convolution filters the input features and combines them into new features in a single step. Depthwise separable convolution splits this filtering and combining into two stages: a depthwise convolution applies a single filter to each input channel, and a 1 × 1 × 1 pointwise convolution then creates a linear combination of the outputs of the depthwise layer.
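To make the savings concrete, the sketch below compares multiply-add counts using the 2-D formulation in the text: D_K·D_K·M·N·D_F·D_F for a standard convolution versus D_K·D_K·M·D_F·D_F + M·N·D_F·D_F for depthwise plus pointwise convolution, whose ratio is 1/N + 1/D_K². The layer sizes are illustrative only; for the 3-D kernels of C3D, the kernel and feature-map terms would each gain a temporal dimension.

```python
def standard_conv_cost(dk, m, n, df):
    """Multiply-adds of a standard DK x DK convolution: DK*DK*M*N*DF*DF."""
    return dk * dk * m * n * df * df


def separable_conv_cost(dk, m, n, df):
    """Depthwise (one DK x DK filter per input channel) plus 1x1 pointwise convolution."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise


dk, m, n, df = 3, 32, 64, 56   # illustrative layer sizes, not taken from the paper
std = standard_conv_cost(dk, m, n, df)
sep = separable_conv_cost(dk, m, n, df)
print(std, sep, round(sep / std, 4))   # 57802752 7325696 0.1267, i.e. 1/N + 1/DK^2
```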

Assuming K represents the convolutional kernel of the original C3D network, then:

where the number of rows equals the number of output channels and m denotes the number of feature channels:

A(b) denotes the diagonal matrix whose diagonal elements are b_i, i.e.:

Therefore, the above equation can be written as the following formula:

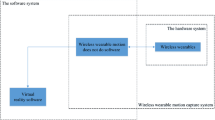

The system architecture for real-time data simulation in aerobics competitions, based on machine learning with light sensor detection, is built on the OpenStack platform and consists of five layers, as shown in Fig. 1. This architecture enables real-time monitoring and analysis of athletes' movements and postures, providing accurate feedback and guidance for athletes and coaches and improving the audience's viewing experience.

Acquiring real-time observation data is central to the whole analysis system. Because monitoring data originate at different levels and are collected in different ways, a monitoring model for the cloud platform must be established that divides the platform into layers and classifies the monitoring items, after which appropriate methods are used to monitor each item. The monitoring model divides the platform into a physical resource layer, a virtual resource layer, a cloud platform management layer, and a user application layer, determines the monitoring items in each layer, and uses a specific collection method for each kind of item. The monitoring data collection layer uses these methods to obtain the relevant monitoring data from the physical and virtual resource layers and transmits them in real time to the analysis engine in the real-time analysis layer.

The analysis engine processes and analyzes the data collected by the optical sensors as they arrive, determines the current situation, and generates the corresponding high-level management actions. This workflow avoids first storing large amounts of observation data in a database and then querying the database to filter the observations. The light sensors sense the athletes' movements and postures and convert them into electrical signals by optical means. They transmit the collected data to the data acquisition system, which collects and processes the sensor data in real time and passes them to the analysis engine. The analysis engine applies predefined identification rules to the incoming monitoring data. These rules are based on machine learning algorithms that, through feature extraction and data analysis, can identify the execution of specific movements, postures, or errors. When a rule is satisfied, the engine generates the corresponding high-level management action, such as an alert, reminder, or guidance. Real-time detection and analysis with optical sensors thus reduce the need to store large amounts of observation data and improve the system's efficiency and response speed. Because specific situations are identified quickly and accurately from the preset rules, timely feedback and guidance can be provided to coaches and competitors.
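The workflow above can be sketched as a streaming loop in which each incoming reading is checked against every rule and then discarded, so no observation database is needed. The `Reading` fields, the rule names, and the thresholds are hypothetical, and the simple lambdas stand in for the trained machine learning classifiers the text describes.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List, Tuple


@dataclass
class Reading:
    athlete: str
    t: float            # timestamp in seconds
    knee_angle: float   # degrees; hypothetical feature from the sensor pipeline
    speed: float        # m/s; hypothetical feature


@dataclass
class Rule:
    name: str
    condition: Callable[[Reading], bool]   # stands in for a trained classifier
    action: str                            # "alert", "reminder" or "guidance"


def analyse_stream(readings: Iterable[Reading], rules: List[Rule]) -> Iterator[Tuple[str, str, float]]:
    """Evaluate every rule on each reading as it arrives; nothing is stored."""
    for r in readings:
        for rule in rules:
            if rule.condition(r):
                yield rule.action, f"{rule.name}: {r.athlete}", r.t


rules = [
    Rule("bent-knee landing", lambda r: r.knee_angle < 150.0, "alert"),
    Rule("travelling too fast", lambda r: r.speed > 3.0, "reminder"),
]
stream = [
    Reading("A", 0.0, 170.0, 1.2),
    Reading("A", 0.1, 140.0, 1.0),
    Reading("B", 0.1, 175.0, 3.5),
]
for event in analyse_stream(stream, rules):
    print(event)
```

Because `analyse_stream` is a generator, actions are emitted as soon as a matching reading arrives, which mirrors the low-latency goal of the workflow.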

In Fig. 2, the light sensor is integrated into the three-dimensional observation framework as a new dimension. This dimension can be represented as a light-sensor segment that measures indicators such as light intensity and the athlete's movement speed at each point in time. Analyzing changes in light intensity and athlete position yields real-time data on the athletes' movements. Combined with distributed alignment, the optical sensor detection component can extract the observation data of all athletes on different physical hosts, as well as the observations of all applications running on a given physical host. In this way, the athletes' status and performance during the competition can be monitored more comprehensively within the cloud platform's monitoring space. At the application layer, the observation indicators derived from the light sensors focus mainly on the accuracy and fluency of the athletes' movements, so the athletes' performance can be evaluated in real time and immediate feedback and guidance can be provided.

Based on these considerations, this article proposes a three-dimensional observation framework. As shown in Fig. 2, its horizontal axis represents the physical host, its vertical axis represents the specific application, and its third axis represents the layer, divided into physical, virtual, application, and service segments. The entire three-dimensional space is the cloud platform monitoring space, which can be divided into subspaces corresponding to different levels of the cloud platform. Given particular analysis parameters, the relevant data can be extracted from the whole space for analysis. For example, distributed alignment makes it possible to obtain the observation data of one application across all physical hosts, as well as the observation data of all applications running on one physical host; together these cover the observation items at the different levels of the applications on the physical hosts. From the operational perspective of the cloud platform, the main concern is whether physical resources can meet users' needs. The logic of users' business code is not the focus of this article, so observation focuses first on monitoring the application-layer indicators in real time and second on ensuring that physical and virtual resources meet the needs of the application layer. Specific situations are identified from combinations of items in these segments. Because a cloud computing platform has a large number of application-related monitoring items, it is unnecessary to analyze every observable item when identifying a specific situation. Some observation items, however, are crucial, and their data must be analyzed when identifying many different situations.
Plain-language descriptions cannot precisely express the observation-item requirements for scene recognition. A real-time observation model must therefore be defined mathematically so that the logical rules of the observation system are expressed clearly and objectively.
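One way to make the three-dimensional observation space concrete, offered as a hypothetical sketch rather than the authors' model: observations are keyed by (host, application, layer), and the two slices mentioned above (one application across all hosts, all applications on one host) become simple filters. A situation rule can then be written as a predicate over a slice. Host names, metric names, and the 200 ms threshold are illustrative assumptions.

```python
from collections import defaultdict


class ObservationSpace:
    """Hypothetical 3-D monitoring space indexed by (host, application, layer)."""

    LAYERS = ("physical", "virtual", "application", "service")

    def __init__(self):
        self._cells = defaultdict(dict)   # (host, app, layer) -> {metric: value}

    def record(self, host, app, layer, metric, value):
        if layer not in self.LAYERS:
            raise ValueError(f"unknown layer: {layer}")
        self._cells[(host, app, layer)][metric] = value

    def by_application(self, app):
        """Slice: one application observed across all physical hosts."""
        return {k: v for k, v in self._cells.items() if k[1] == app}

    def by_host(self, host):
        """Slice: all applications running on one physical host."""
        return {k: v for k, v in self._cells.items() if k[0] == host}


space = ObservationSpace()
space.record("host-1", "scoring", "application", "latency_ms", 120)
space.record("host-1", "scoring", "physical", "cpu_util", 0.7)
space.record("host-2", "scoring", "application", "latency_ms", 90)
space.record("host-1", "video", "application", "latency_ms", 300)

# A situation rule expressed as a predicate over one slice (threshold is illustrative).
slow_apps = [k for k, v in space.by_host("host-1").items() if v.get("latency_ms", 0) > 200]
print(len(space.by_application("scoring")), len(space.by_host("host-1")), slow_apps)
```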

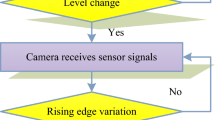

The inter-frame difference method is a moving-object detection method that selects two or more consecutive frames from a video sequence as the objects of processing, computes pixel-wise differences between them, and thereby promptly detects and extracts the changes caused by the target.
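A minimal NumPy sketch of two-frame differencing followed by thresholding, assuming 8-bit grayscale frames and an illustrative threshold T = 25 (the threshold value used in this work is not stated). The frames are synthetic.

```python
import numpy as np


def frame_difference(prev, curr, T=25):
    """Binary change mask from two consecutive grayscale frames.

    prev, curr: 2-D uint8 arrays of equal shape; T: assumed gray-level threshold.
    Returns 1 where |curr - prev| > T (changed, i.e. moving-target pixels), else 0.
    """
    diff = np.abs(curr.astype(np.int16) - prev.astype(np.int16))  # avoid uint8 wrap-around
    return (diff > T).astype(np.uint8)


def bounding_box(mask):
    # Smallest (row_min, col_min, row_max, col_max) box around the changed pixels.
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    return int(rows.min()), int(cols.min()), int(rows.max()), int(cols.max())


prev = np.zeros((5, 5), dtype=np.uint8)
curr = prev.copy()
curr[2:4, 1:3] = 200            # a toy "athlete" region appears in the second frame
mask = frame_difference(prev, curr)
print(mask.sum(), bounding_box(mask))   # 4 (2, 1, 3, 2)
```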

The optical sensor uses the photoelectric effect to convert the optical signal into an electrical signal and obtains real-time data on the athlete's movements by measuring light intensity. In the optical sensor detection part, the received optical signal is first conditioned, usually by filtering and amplification, so that subtle changes in the athlete's movements are captured accurately.
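The filtering and amplification step can be sketched digitally as a moving-average low-pass filter followed by a constant gain. Both the window length and the gain are assumptions; in practice, amplification often happens in analog hardware before digitization, and a longer window suppresses more noise at the cost of blurring fast movements.

```python
import numpy as np


def condition_signal(samples, gain=10.0, window=5):
    """Digitally smooth and scale raw light-intensity samples.

    A moving average stands in for the low-pass filter and a constant gain
    for the amplifier; both window and gain are assumed values.
    """
    x = np.asarray(samples, dtype=float)
    if x.size < window:
        raise ValueError("need at least `window` samples")
    kernel = np.ones(window) / window
    smoothed = np.convolve(x, kernel, mode="valid")   # length = len(x) - window + 1
    return gain * smoothed


raw = [1, 1, 1, 1, 1, 2, 2, 2, 2, 2]     # toy step in light intensity
print(condition_signal(raw))
```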

The difference image is then binarized by comparing each pixel's gray value with a threshold T: pixels above T are treated as target pixels, and pixels below T as background pixels. After the binary difference image is processed, the remaining region is the area occupied by the target.

The gradient-based optical flow method computes a two-dimensional velocity field from the gray-level gradients of the image sequence. It assumes that the gray value of a moving pixel remains constant along its trajectory and that the time interval tends to 0. This yields the optical flow constraint equation (18):
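Equation (18) is not reproduced in this text. The standard gradient-based derivation assumes brightness constancy along the motion and takes a first-order Taylor expansion, with I_x, I_y, I_t the partial derivatives of the gray level I and (u, v) the velocity:

```latex
\begin{aligned}
I(x + \mathrm{d}x,\; y + \mathrm{d}y,\; t + \mathrm{d}t) &= I(x, y, t)
  && \text{(brightness constancy)}\\
I_x u + I_y v + I_t &= 0,
  \qquad u = \frac{\mathrm{d}x}{\mathrm{d}t},\quad v = \frac{\mathrm{d}y}{\mathrm{d}t}
  && \text{(first-order Taylor expansion, } \mathrm{d}t \to 0\text{)}
\end{aligned}
```

This single equation has two unknowns (the aperture problem), so methods such as Horn-Schunck and Lucas-Kanade add a global smoothness or local-window constraint to solve for (u, v).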

The mean method, also known as the image averaging method, is simple and easy to implement. It models the background by directly estimating the gray value of each background pixel: over a given time period, the gray values of the pixel at the same position in N consecutive frames of the video sequence are averaged, and the result is used as the gray value of that pixel in the background image. Its advantages are a simple principle and low computational complexity. The mathematical expression is as follows:
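The expression itself is not reproduced here; the standard form is B(x, y) = (1/N) Σ_{k=1}^{N} f_k(x, y), where f_k is the k-th frame. A minimal NumPy sketch with synthetic constant frames:

```python
import numpy as np


def mean_background(frames):
    """Background estimate B(x, y) = (1/N) * sum_k f_k(x, y) over N grayscale frames."""
    stack = np.stack([np.asarray(f, dtype=np.float64) for f in frames])  # (N, H, W)
    return stack.mean(axis=0)


frames = [np.full((2, 2), v, dtype=np.uint8) for v in (10, 20, 30)]
print(mean_background(frames))   # every pixel is 20.0
```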

The median method is similar in concept to median filtering: it sorts the gray values (or chromaticity or other parameters) of the pixel at the same position across the N buffered frames of the video sequence, takes the median, and uses the result as the gray value of that pixel in the background image.
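A minimal NumPy sketch of the median method on synthetic data. The example tracks one pixel over five frames in which a bright object passes once: the median ignores the outlier, while the mean is pulled upward, which is the usual reason to prefer the median when moving objects briefly occupy background pixels.

```python
import numpy as np


def median_background(frames):
    """Background estimate: per-pixel median over N buffered grayscale frames."""
    stack = np.stack([np.asarray(f, dtype=np.float64) for f in frames])  # (N, H, W)
    return np.median(stack, axis=0)


# One pixel observed over five frames; a bright object passes through in frame 4.
frames = [np.array([[v]], dtype=np.uint8) for v in (10, 10, 10, 250, 10)]
print(median_background(frames)[0, 0])                                  # 10.0
print(float(np.mean([f[0, 0] for f in frames], dtype=np.float64)))      # 58.0
```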

3.3 Simulation experiment analysis

The training records were saved in .log format and then analyzed visually, that is, the changes in the parameters obtained during training were displayed graphically. The resulting training record is shown in Fig. 3.

Fig. 3 shows how the loss of the whole model changes as the number of batches increases. After about 90 iterations, the loss decreased rapidly because YOLO adds batch normalization (BN), which greatly accelerates convergence during training. The loss then decreased gradually as iterations continued, reaching about 0.2 after more than 20,000 iterations. Further iterations brought no additional decrease, and the loss finally stabilized at around 0.2.

Fig. 4 shows that when the recall R is below 0.6, precision is insufficient, and the recall of the person class is low. Many person instances are missing from the annotated bounding boxes, which confirms that the labeling rate of the person class is low under nighttime conditions.

4 Real-time data analysis system for aerobics competitions

4.1 Design of real-time data analysis system for aerobics competitions

Light sensors installed at specific locations on the field monitor the athletes' movements and positions in real time, so the system can accurately capture each athlete's movement trajectory and body posture. During the competition, the light sensor detection part continuously acquires and updates the athletes' position and movement data in real time. These data are transferred to the competition data production subsystem and integrated with other performance data. The competition data production subsystem monitors the progress of the competition and ensures the accuracy and completeness of the data. The data from optical sensor detection are correlated and analyzed together with the competition performance data to further improve the accuracy of data processing and statistical analysis.

The competition performance data analysis system performs statistical analysis of performance data, which presupposes that the performance data have first been collected, that is, produced. The aerobics competition performance data analysis system therefore consists of two subsystems: the competition data production subsystem and the statistical analysis subsystem. In real competitions, the data production subsystem must not only run each individual event but also capture detailed competition performance data. Fig. 5 shows the overall structure of the system; the OVR part is its core. It handles the processing of the competition and takes over the data exchanged with the other interface systems, so that the competition proceeds smoothly. After each event, the operator transfers the results from the competition data production subsystem into the statistical analysis subsystem. Users access the statistical analysis subsystem with a browser from various terminal devices and submit statistical analysis requests. The system performs the requested analyses and returns a results report containing charts and text, which users can print or share with other users, increasing interactivity and serving users better.
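As a hypothetical sketch of the statistical analysis subsystem's core step, the function below aggregates per-athlete statistics from result records transferred after each event. The record layout `(event_id, athlete, score)` and the chosen statistics are assumptions; the paper does not specify its schema.

```python
from collections import defaultdict
from statistics import mean


def summarize(results):
    """Per-athlete statistics from (event_id, athlete, score) tuples."""
    by_athlete = defaultdict(list)
    for _event, athlete, score in results:
        by_athlete[athlete].append(score)
    return {
        athlete: {"events": len(scores), "mean": mean(scores), "best": max(scores)}
        for athlete, scores in sorted(by_athlete.items())
    }


# Toy results transferred from the data production subsystem after each event.
results = [("E1", "athlete-A", 19.2), ("E2", "athlete-A", 20.0), ("E1", "athlete-B", 18.5)]
for athlete, stats in summarize(results).items():
    print(athlete, stats)
```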

The browser/server (B/S) mode enables real-time, accurate, and error-free queries of sports competition results. On the one hand, users need only the IE browser to search the comprehensive database, which protects users; on the other hand, because of the B/S approach, users can perform only simple data queries and cannot add, modify, or delete data, which ensures the security of the database. Adding, modifying, and deleting data are possible only in client/server (C/S) mode. The web server and the database server must therefore be physically separated. Users reach the system through a network protected by a firewall. The web server accesses the database server through the database interface, and the requested data are returned to the web server, completing the access path. Most users need to read data rather than write it, so from a load-balancing perspective, read requests should be distributed so that data can be accessed faster and more conveniently.
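The read-only access described above can be illustrated with SQLite, used here purely as a stand-in since the paper does not name its database system: a connection opened with the URI option `mode=ro` can run queries, but any write fails with `sqlite3.OperationalError`. The table layout and file location are hypothetical.

```python
import os
import sqlite3
import tempfile


def open_read_only(path):
    # SQLite URI with mode=ro: SELECT works, any write raises OperationalError.
    return sqlite3.connect(f"file:{path}?mode=ro", uri=True)


# Hypothetical results database, written through a privileged (C/S-side) connection.
db_path = os.path.join(tempfile.mkdtemp(), "results.db")
admin = sqlite3.connect(db_path)
admin.execute("CREATE TABLE results (athlete TEXT, event TEXT, score REAL)")
admin.execute("INSERT INTO results VALUES ('athlete-A', 'E1', 19.2)")
admin.commit()
admin.close()

# Browser-side queries go through a read-only connection.
viewer = open_read_only(db_path)
rows = viewer.execute("SELECT athlete, score FROM results").fetchall()
try:
    viewer.execute("DELETE FROM results")
    write_blocked = False
except sqlite3.OperationalError:
    write_blocked = True
viewer.close()
print(rows, write_blocked)   # [('athlete-A', 19.2)] True
```

Enforcing read-only access at the connection level, rather than only in the web interface, means a bug in the query layer still cannot modify results.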

The business process of the aerobics competition performance data analysis system is divided into two parts. The first part is data production, that is, the collection and processing of single-event performance data; it not only meets the competition needs of the current event but also collects competition performance data. The second part is statistics and analysis, in which the results of each competition are entered into the statistical analysis subsystem and past competition results are analyzed statistically. Because the two parts involve different types of work and processes, their specific processes are introduced separately. The business process is shown in Fig. 6.

The competition data production subsystem has three stages: pre-competition, mid-competition, and post-competition. Performance data are collected during the competition, and after the competition these data are transferred to the statistical analysis subsystem.
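The ordering of the three stages can be expressed as a small state machine. In this hypothetical Python sketch (`Stage`, `EventSession`, and its methods are illustrative names), data collection is allowed only mid-competition and the hand-over to the statistical analysis subsystem only post-competition; the text does not specify the pre-competition tasks, so the sketch only enforces the stage order.

```python
from enum import Enum
from typing import List


class Stage(Enum):
    PRE = 1
    MID = 2
    POST = 3


class EventSession:
    """Hypothetical event session that moves through the three stages in order."""

    def __init__(self, event: str):
        self.event = event
        self.stage = Stage.PRE
        self.performance_data: List[dict] = []

    def advance(self) -> Stage:
        if self.stage is Stage.POST:
            raise RuntimeError("event already finished")
        self.stage = Stage(self.stage.value + 1)
        return self.stage

    def collect(self, record: dict) -> None:
        # Performance data is only collected while the competition is running.
        if self.stage is not Stage.MID:
            raise RuntimeError("data can only be collected mid-competition")
        self.performance_data.append(record)

    def export_to_analysis(self, archive: List[dict]) -> int:
        # After the competition, the collected data go to the analysis subsystem.
        if self.stage is not Stage.POST:
            raise RuntimeError("export is only allowed post-competition")
        archive.extend(self.performance_data)
        return len(self.performance_data)
```

Rejecting out-of-order calls makes it impossible to export an incomplete event or to mix data collected before the competition started into the results.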

4.2 Real-time data statistics and analysis of aerobics competitions

Light sensors not only capture athletes' positions and movement data but, combined with machine learning algorithms, also provide more detailed and accurate data for the performance data analysis system. By integrating the light sensor detection module, the system recognizes and records athletes' movements in real time and provides their movement trajectories and body posture data. Light sensors can also capture key metrics such as an athlete's speed, strength, and flexibility. These data are fed into machine learning models for analysis and prediction, helping coaches and athletes understand current performance and where there is room for improvement. After the machine learning algorithms are trained and optimized on a large amount of historical and real-time competition data, the system can evaluate athlete performance accurately across different movements and postures. This helps coaches understand the abilities and potential of each athlete and develop more personalized, targeted training plans based on the analysis results. Light sensor detection also enables real-time simulation of competition data: the system can predict an athlete's score and ranking from their actual movements and postures. This makes the competition more engaging and challenging and gives the audience a more exciting viewing experience.
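The text does not name the machine learning algorithm used. As a minimal stand-in, the sketch below uses a nearest-centroid classifier, one of the simplest supervised methods, to recognize a movement from a feature vector and then measures how far the observation lies from a preset reference. The feature vectors (for example, values derived from light sensor trajectories), the movement labels, and the function names are all hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def train_centroids(samples: List[Tuple[Sequence[float], str]]) -> Dict[str, List[float]]:
    """Average the feature vectors of each labeled movement: one centroid per label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + v for s, v in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in vec] for label, vec in sums.items()}


def classify(centroids: Dict[str, List[float]], features: Sequence[float]) -> str:
    """Nearest-centroid rule: pick the movement whose centroid is closest (Euclidean)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


def deviation_from_criterion(features: Sequence[float], criterion: Sequence[float]) -> float:
    """Euclidean distance between an observed movement and its preset reference."""
    return math.dist(features, criterion)
```

With toy training data in which "jump" samples lie near (1, 0) and "turn" samples near (0, 1), an observation at (0.9, 0.2) is classified as "jump"; its deviation from a preset "jump" reference could then feed a score. A production system would likely use a richer model, but the train, recognize, and compare steps stay the same.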

Aerobics requires athletes to complete a variety of complex and difficult movements within a specified time, so an athlete's physical condition directly affects their technical level. With the reform of competitive aerobics and the improvement of athletes' skills, higher demands are placed on athletes' technical ability and physical function. It is therefore particularly important to apply physiological and biochemical analysis comprehensively to guide sports training effectively. Athletes' physical performance is crucial for adjusting training loads scientifically. Based on an analysis of the characteristics of competitive aerobics and the physical demands it places on athletes, physiological indicators for functional testing were selected, and the test results were analyzed statistically, as shown in Table 1.
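A "mean ± SD" summary of the kind typically reported for such indicators can be computed with Python's standard `statistics` module. This is a sketch: the indicator names are placeholders, any values passed in are illustrative rather than the study's measurements, and the use of the sample standard deviation is an assumption.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple


def summarize(indicators: Dict[str, List[float]], digits: int = 2) -> Dict[str, Tuple[float, float]]:
    """Return (mean, sample standard deviation) for each physiological indicator."""
    table = {}
    for name, values in indicators.items():
        if len(values) < 2:
            raise ValueError(f"{name}: at least two measurements are needed for an SD")
        table[name] = (round(mean(values), digits), round(stdev(values), digits))
    return table


def format_row(name: str, mean_sd: Tuple[float, float]) -> str:
    """Render one table row in the common 'mean ± SD' notation."""
    m, sd = mean_sd
    return f"{name}: {m} ± {sd}"
```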

Heart rate and blood pressure are basic physiological indicators that reflect the function of the cardiovascular system, and exercise has a specific effect on both. After long-term exercise training, athletes' resting heart rate and blood pressure may be lower than those of untrained people. This study selected competitive aerobics athletes from a university in a certain province as subjects. The results showed that their heart rate and systolic blood pressure were within the normal range. Their heart rate and blood pressure were higher than those of national team athletes, but the difference was not statistically significant. The results indicate that long-term aerobics training can significantly improve cardiovascular function: at rest, the athletes' heart rate is good and their blood pressure is reduced. Blood lactate is the basis for evaluating athletes' exercise load during training, and by analyzing changes in blood lactate, athletes' physical activity level can be assessed. Blood lactate is an important indicator of muscle metabolism and of exercise capacity during exercise. Table 2 shows that at rest the athletes' blood lactate levels do not differ from those of ordinary individuals, but they rise sharply after exercise, which is directly related to their level of exercise.
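The finding that the provincial athletes' values were higher than the national team's but not significantly different is a two-group comparison. The text does not say which test was used; a common choice for two independent groups with possibly unequal variances is Welch's t-test, sketched below in pure Python as an assumption. The p-value would then come from the t distribution with the returned degrees of freedom (for example via `scipy.stats.t.sf`), or the whole test can be run with `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two groups."""
    if len(a) < 2 or len(b) < 2:
        raise ValueError("each group needs at least two observations")
    va = variance(a) / len(a)  # squared standard error of group a's mean
    vb = variance(b) / len(b)  # squared standard error of group b's mean
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

A difference is usually called significant when the two-sided p-value falls below a chosen level such as 0.05; a higher group mean with a p-value above that level corresponds to the "higher but no statistical difference" result described above.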

Numerous studies have shown that changes in lactate metabolism during exercise are related to the energy system used. Detecting and analyzing blood lactate during training therefore helps reveal how energy is supplied during exercise. The aerobics subjects were divided into high and low self-presentation groups according to their self-presentation ability: those scoring above 50 formed the high self-presentation group, and those scoring 50 or below formed the low self-presentation group. The results are shown in Table 3.
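The grouping rule (scores above 50 in the high group, scores of 50 or below in the low group) can be written directly as code. The sketch also computes a per-group mean of another measurement, such as blood lactate, to show how the groups might then be compared; the function names and the dictionary inputs keyed by subject ID are hypothetical.

```python
from statistics import mean
from typing import Dict, Tuple


def split_by_self_presentation(
    scores: Dict[str, float], threshold: float = 50
) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Scores strictly above the threshold go to the high group; the rest to the low group."""
    high = {sid: s for sid, s in scores.items() if s > threshold}
    low = {sid: s for sid, s in scores.items() if s <= threshold}
    return high, low


def group_mean(measurement: Dict[str, float], group: Dict[str, float]) -> float:
    """Mean of another measurement (e.g. blood lactate) over the subjects in one group."""
    return mean(measurement[sid] for sid in group)
```

Note the boundary: a subject scoring exactly 50 falls into the low group, matching the "less than or equal to 50" rule.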

5 Conclusion

This paper studies the application of machine learning based on light sensor detection to real-time data simulation of aerobics competitions. Light sensor technology provides an accurate and reliable way to capture athletes' movement data, with better accuracy and sensitivity than traditional sensor technology. Machine learning algorithms simulate and analyze these data in real time to provide real-time feedback on and evaluation of the competition. In the experiment, the aerobics athletes' heart rate and blood pressure, together with the intensity and spectral characteristics of the light reflected from their skin as detected by the light sensor, were measured and analyzed. The results show that after long-term aerobics training the athletes' heart rate and blood pressure are in the normal range, indicating that long-term aerobics training can significantly improve cardiovascular function. Light sensor detection provides the intensity and spectral characteristics of skin-reflected light in real time, from which athletes' physical activity level, muscle metabolism, and exercise capacity can be assessed. The detected intensity and spectral features increased significantly with exercise intensity and were closely related to athletes' physical activity level. Machine learning based on light sensor detection therefore plays an important role in real-time data simulation for aerobics competitions. The technology can provide coaches and athletes with real-time monitoring and analysis of physical condition, help optimize training programs, and improve athletic performance. Challenges remain in applying it to actual competitions: the design and placement of the light sensor need further improvement and optimization to increase the accuracy and reliability of the data, and the development and training of the machine learning algorithms require further research to ensure their stability and feasibility in practice.

Data availability

The data will be available upon request.

References

Aleksandraviciene, R., Zaicenkoviene, K., Stasiule, L., Stasiulis, A.: Physiological responses and energetics of competitive group exercise in female aerobic gymnasts with different levels of performance. Percept. Mot. Skills 120(3), 787–803 (2015)

Cao, J.: Research on urban intelligent traffic monitoring system based on video image processing. Int. J. Signal Process. Image Process. Pattern Recogn. 9(6), 393–406 (2016)

Dodero, A., Escher, A., Bertucci, S., Castellano, M., Lova, P.: Intelligent packaging for real-time monitoring of food-quality: current and future developments. Appl. Sci. 11(8), 3532–3537 (2021)

Ghiasi-Shirazi, K.: Competitive cross-entropy loss: a study on training single-layer neural networks for solving nonlinearly separable classification problems. Neural Process. Lett. 50, 1115–1122 (2019)

Heijnen, S., Hommel, B., Kibele, A., Colzato, L.S.: Neuromodulation of aerobic exercise—a review. Front. Psychol. 6, 1890–1894 (2016)

Kim, J., Ham, Y., Chung, Y., Chi, S.: Systematic camera placement framework for operation-level visual monitoring on construction jobsites. J. Constr. Eng. Manag. 145(4), 04019010–04019025 (2019)

Mahesh, B.: Machine learning algorithms-a review. Int. J. Sci. Res. 9, 381–386 (2020)

Masoga, S., Mphafudi, G.P.: Dietary recommendations for active and competitive aerobic exercising athletes: a review of literature. Global J. Health Sci. 14(4), 1–95 (2022)

Moshenska, T., Petrov, D.: Improving the physical qualities of volleyball players using aerobics at the stage of specialized basic training. Slobozhanskyi Herald Sci. Sport 8(1), 50–53 (2020)

Ren, J., Wang, Y.: Overview of object detection algorithms using convolutional neural networks. J. Comput. Commun. 10(1), 115–132 (2022)

Salih, T.A., Younis, N.K.: Designing an intelligent real-time public transportation monitoring system based on IoT. Open Access Library J. 8(10), 1–14 (2021)

Wang, L., Liu, X., Lee, D.J., Tay, J.H., Zhang, Y., Wan, C.L., Chen, X.F.: Recent advances on biosorption by aerobic granular sludge. J. Hazard. Mater. 357, 253–270 (2018)

Wei, X., Jiang, S., Li, Y., Li, C., Jia, L., Li, Y.: Defect detection of pantograph slide based on deep learning and image processing technology. IEEE Trans. Intell. Transp. Syst. 21(3), 947–958 (2019)

Yang, C.T., Chen, S.T., Liu, J.C., Yang, Y.Y., Mitra, K., Ranjan, R.: Implementation of a real-time network traffic monitoring service with network functions virtualization. Futur. Gener. Comput. Syst. 93, 687–701 (2019)

Yue, S.: Image recognition of competitive aerobics movements based on embedded system and digital image processing. Microprocess. Microsyst. 82, 103925–103929 (2021)

Zhang, S., Zhang, Y., Chen, Z., Li, Z.: VSAM-based visual keyword generation for image caption. IEEE Access 9, 27638–27649 (2021)

Funding

This paper was supported by the project "Research on Sports Compound Talent Training Model Based on Internet Plus Health" under the 2019 Colleges and Universities Teaching Reform Innovation Project of Shanxi Province (Project No. J2019187).

Author information

Contributions

Haiying Guo prepared the first version, and Yuzhe Huang and Hui Liu performed the simulations. All authors contributed to the analysis, discussion, writing, and revision of the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Conflict of interest

The authors declare that they have no competing interests.

Ethical approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Guo, H., Huang, Y. & Liu, H. Application of machine learning based on optical sensor detection in real-time data simulation of aerobics competitions. Opt Quant Electron 56, 342 (2024). https://doi.org/10.1007/s11082-023-06027-6

DOI: https://doi.org/10.1007/s11082-023-06027-6