Abstract

It is common for mathematical models of physical systems to possess qualitative properties such as positivity, monotonicity, or conservation of underlying physical behavior. When these models consist of differential equations, it is also common for them to be solved via splitting, i.e., splitting the differential equations into parts that are integrated separately. Not all splitting strategies are created equal, however. In this work, we study the effect of two splitting strategies on qualitative property preservation when applied to the basic susceptible-infected-recovered (SIR) model from epidemiology, as well as the effect of backward-in-time integration in operator-splitting methods on positivity preservation for the Robertson test problem. We find that qualitative property preservation does depend on the splitting strategy, even if the sub-integrations are performed exactly. Accordingly, the choice of splitting strategy may be informed by requirements of qualitative property preservation. The choice of operator-splitting method also depends on the specific properties of the exact solutions of the sub-systems.


1 Introduction

The influence of mathematical models on modern daily life has grown along with the dramatic increase in computing power. Many of these models are based on differential equations, e.g., numerical weather prediction, investment portfolio behavior, and trajectory prediction of astronomical bodies. Such models are often large and complex and accordingly have no closed-form solution. Thus, not only must their solutions be approximated numerically, but it is also often necessary to split the model into multiple parts to make its numerical solution feasible. For example, splitting methods are used to solve advection-diffusion-reaction problems [1] and large-scale chemical reaction systems [2].

A production-destruction system (PDS) is a system of ordinary differential equations (ODEs) that is often used in biology and chemistry to describe the production and destruction mechanisms among its variables.

Definition 1

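A production-destruction system of N constituents can be written as a system of differential equations of the form

$$\frac{\textrm{d}y_i}{\textrm{d}t} = \sum _{j=1}^{N} p_{ij}(\textbf{y}) - \sum _{j=1}^{N} d_{ij}(\textbf{y}), \quad i = 1,2,\dots ,N, \tag{1}$$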

where \(\textbf{y} = [y_1,y_2,\dots ,y_N]^T\) is the vector of constituents. For solutions to be non-negative, we assume that the production terms \(p_{ij}\) and the destruction terms \(d_{ij}\) are non-negative. The production term \(p_{ij}\) is the rate at which constituent j transforms into constituent i, and the destruction term \(d_{ij}\) is the rate at which constituent i transforms into constituent j. We assume \(p_{ij}(y), d_{ij}(y) \ge 0\) for \(y_i(t) \ge 0\), \(i=1,2, \dots , N\) and all \( t \ge 0\). A sufficient condition for y(t) to be non-negative is then \(\lim \limits _{y_i \rightarrow 0^+} d_{ij}(y) = 0\), \(i=1,2, \dots , N\).

Definition 2

A PDS (1) is called non-negative if non-negative initial values \(y_i(0) \ge 0 \) for \(i=1,2,\dots ,N\) imply non-negative solutions \(y_i(t) \ge 0\) for \(i=1,2,\dots ,N\) for all \(t > 0\).

Definition 3

A PDS (1) is called conservative if \(p_{ij}(\textbf{y}) = d_{ji}(\textbf{y})\) for all \(i,j = 1,2,\dots ,N\). The system is called fully conservative if in addition \(p_{ii}(\textbf{y}) = d_{ii}(\textbf{y}) = 0\) for all \(i=1,2,\dots ,N\).

For the purposes of this analysis and without loss of generality, we only consider fully conservative PDSs.
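To make Definitions 1 and 3 concrete, the sketch below assembles the right-hand side of (1) for a fully conservative PDS from its production terms alone, using \(d_{ij} = p_{ji}\). It is a minimal illustration under our own assumptions: the helper names `pds_rhs` and `sir_production` are hypothetical, the production terms are supplied as a dense N × N matrix, and the SIR example anticipates model (8) with the state ordered as [S, I, R].

```python
from typing import Callable, List

Matrix = List[List[float]]


def pds_rhs(y: List[float], production: Callable[[List[float]], Matrix]) -> List[float]:
    """Right-hand side of a fully conservative PDS (1).

    production(y)[i][j] is p_ij(y), the rate at which constituent j turns into
    constituent i. Full conservation is assumed, so d_ij = p_ji and p_ii = 0.
    """
    p = production(y)
    n = len(y)
    # dy_i/dt = sum_j p_ij(y) - sum_j d_ij(y), with d_ij(y) = p_ji(y)
    return [sum(p[i][j] for j in range(n)) - sum(p[j][i] for j in range(n))
            for i in range(n)]


def sir_production(a: float, b: float) -> Callable[[List[float]], Matrix]:
    """Hypothetical helper: the SIR model (8) as a PDS with y = [S, I, R]."""
    def p(y: List[float]) -> Matrix:
        s, i, _ = y
        return [[0.0, 0.0, 0.0],
                [a * s * i, 0.0, 0.0],   # p_IS: S -> I at rate a*S*I
                [0.0, b * i, 0.0]]       # p_RI: I -> R at rate b*I
    return p


rhs = pds_rhs([0.9, 0.1, 0.0], sir_production(a=2.0, b=0.5))
print(rhs, sum(rhs))  # the components sum to zero up to rounding
```

Only the production matrix has to be specified; conservation is then built into the right-hand side rather than checked afterwards.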

Definition 4

Given a numerical method to solve (1), let \(\textbf{y}_n\) denote the approximation of \(\textbf{y}(t_n)\) at time \(t_n\). The numerical method is called

- unconditionally conservative if the sum of all components of \(\textbf{y}_n\) is constant for all \(n \in \mathbb {N}\) and all \(\Delta {t} > 0\);
- unconditionally non-negative if all components of \(\textbf{y}_{n+1}\) are non-negative for all \(\Delta {t} > 0\) whenever all components of \(\textbf{y}_{n}\) are non-negative.

In this paper, we are interested in two examples of PDSs: the susceptible-infected-recovered (SIR) model [3] and the stiff Robertson test problem [4, 5].

Models of PDSs have qualitative properties associated with their variables, such as positivity or conservation of underlying physical behavior. Models such as the SIR model further require monotonicity of certain variables based on the model assumptions. Violation of such properties at best undermines confidence in the model or its solutions and predictions; at worst, it can invalidate the modelling process.

Real-world models of complex phenomena, such as the spread of disease throughout a population, also tend to become complex as the number of processes included or the demands on the predictions increase. Such models generally must be solved numerically. Real-world problems quickly become too unwieldy to solve monolithically, either due to their complexity or size, and splitting is a divide-and-conquer approach to obtain numerical solutions more efficiently (or at all) [1, 6,7,8].

It is well known that numerical solutions generally do not preserve known properties of the exact solution. There are some notable exceptions, however, such as the preservation of linear invariants for linear multi-step and Runge–Kutta methods [9], and a great deal of research has gone into preserving qualitative properties such as positivity, monotonicity, and symplecticity, to name but a few; see, e.g., [1, 10, 11] and references therein. Such methods are also referred to as structure-preserving methods.

Many studies focus on the positivity-preserving property of a method. In [12], the authors consider graph-Laplacian ODEs and propose second-order methods that unconditionally preserve positivity as well as a third-order method that preserves positivity under mild restrictions. These methods are based on Magnus integrators [13]. The authors of [12] propose a splitting strategy for the original system written in an extended space that applies to stiff or non-separable problems and uses the Strang splitting method. The overall method is second order and unconditionally preserves mass (akin to total population in the SIR model) and positivity. Patankar–Runge–Kutta methods (and their modified versions) have been developed to solve PDSs monolithically while preserving positivity and mass conservation [14, 15]. It turns out that such methods can be interpreted as approximations to the methods proposed in [12].

Although methods that preserve qualitative properties may involve splitting, e.g., [10, 16, 17], few studies systematically consider the effect of the splitting strategies used in practice on qualitative property preservation. Given that splitting is so common due to its necessity or utility in practice, we systematically explore the effect of the choice of splitting strategy on the preservation of qualitative properties of the numerical solution of a differential equation. In this study, we limit the strategies considered to process-based splitting and dynamic linearization.

The importance of the choice of splitting strategy on qualitative property preservation is shown indirectly in [12] in the context of insisting on writing the system in graph-Laplacian form. The effect of the choice of splitting strategy on the solution itself is shown more directly and dramatically in [18], where it is shown that two-dimensional rotations can be integrated exactly in time with the use of a splitting strategy based on shear rotations but not with the use of standard directional splitting.

The remainder of this paper is organized as follows. In Section 2, we describe operator-splitting methods, including \(N_{\text {op}}\)-additively split methods for \(N_{\text {op}} >2\), and give the relevant background, definitions, and qualitative properties of interest for the SIR model and the Robertson test problem. We describe two specific splitting strategies applied to the SIR model in Section 2.2: a process-based splitting based on the production and destruction terms and one based on dynamic linearization, which essentially performs a local linearization at every step of a numerical method. We further describe the generalization of process-based splitting to PDSs in Section 2.3. In Section 3, we give the main theoretical results regarding qualitative property preservation by these splitting strategies applied to the SIR model and the Robertson test problem. We find that not all splitting strategies are created equal when it comes to qualitative property preservation. How well an operator-splitting method preserves the desired qualitative properties depends on the splitting strategy (process-based or dynamic linearization), the operator-splitting method, and the form of the exact solutions of the sub-systems. In Section 4, we offer some numerical experiments to support the theoretical results reported in the previous sections. Finally, in Section 5, we summarize our results and offer some conclusions.

2 Theoretical background

In this section, we describe the relevant theoretical background for the study of qualitative property preservation by operator splitting in the context of the production-destruction systems (PDSs). Accordingly, we introduce the necessary background on operator-splitting methods and the qualitative properties of interest. We examine two ways to split the SIR model (process-based and dynamic linearization) and a process-based splitting of the Robertson test problem in detail.

2.1 Operator-splitting methods

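In this section, we introduce the operator-splitting (OS) methods as presented in [10]. We consider the initial value problem (IVP) for a 2-additive ordinary differential equation

$$\frac{\textrm{d}\textbf{y}}{\textrm{d}t} = \textbf{f}^{[1]}(\textbf{y}) + \textbf{f}^{[2]}(\textbf{y}), \quad \textbf{y}(0) = \textbf{y}_0. \tag{2}$$

Let \(\varphi ^{\left[ {\ell }\right] }_{{\Delta {t}}}\) be the exact flow of the sub-system

$$\frac{\textrm{d}\textbf{y}}{\textrm{d}t} = \textbf{f}^{[\ell ]}(\textbf{y})$$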

for \(\ell = 1, 2\). Compositions of \(\varphi ^{\left[ {\ell }\right] }_{{\Delta {t}}}\) can be used to construct numerical solutions to (2). The most commonly known methods are the first-order Godunov (or Lie–Trotter) splitting method,

and the second-order Strang splitting method,

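To construct a general s-stage operator-splitting method, we consider splitting coefficients \(\varvec{\alpha } = [\varvec{\alpha }_1, \varvec{\alpha }_2, \dots , \varvec{\alpha }_s]\), where \(\varvec{\alpha }_k = [\alpha _{k}^{[1]}, \alpha _{k}^{[2]}], \ k=1,2,\dots , s\). An s-stage operator-splitting method that solves (2) can be written as

$$\textbf{y}_{n+1} = \Phi _{\varvec{\alpha }_s \Delta {t}}^{\{s\}} \circ \Phi _{\varvec{\alpha }_{s-1} \Delta {t}}^{\{s-1\}} \circ \cdots \circ \Phi _{\varvec{\alpha }_1 \Delta {t}}^{\{1\}} (\textbf{y}_n), \tag{3}$$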

where \(\Phi _{\varvec{\alpha }_k \Delta {t}}^{\{k\}}:= \varphi ^{\left[ 2\right] }_{\alpha _{k}^{[2]}\Delta {t}} \circ \varphi ^{\left[ 1\right] }_{\alpha _{k}^{[1]}\Delta {t}} \). To achieve an order-\(p_{OS}\) operator-splitting method, the coefficients \(\varvec{\alpha }\) must satisfy a system of polynomial equations derived from the Baker–Campbell–Hausdorff (BCH) formula [10]. For methods up to order \(p_{OS}=3\), the order conditions are:

The application of OS methods is often limited to first- and second-order methods because methods of order three or higher require backward-in-time sub-steps for each operator [19]. In the case of the SIR model, backward-in-time integration makes it more difficult to preserve monotonicity of the numerical solution.

The family of two-stage, second-order operator-splitting methods admits a one-parameter set of solutions, of which the well-known Strang splitting method is a member. This family can be described using a free parameter \(\beta \ne 1\). We denote such a method as OS22\(\beta \), whose coefficients are given in Table 1. By varying the values of \(\beta \), we can derive second-order OS methods with backward-in-time integration in only one or both of the operators. For \(0 \le \beta \le 0.5\), both operators are integrated forward-in-time only. For \(\beta > 0.5\), operator 1 requires backward integration at one sub-step. For \(\beta > 1\) or \(\beta <0\), operator 2 requires backward integration at one sub-step. This makes OS22\(\beta \) a good template to examine the effect of backward integration on the properties (P1)–(P4).

We note that OS22\(\beta (1 - \sqrt{2}/2)\) is the “best” two-stage, second-order OS method in the sense that it has the minimum local error measure for this class of methods [20]. For the purposes of comparison with higher-order methods, we also present numerical solutions from the third-order Ruth (R3) method, whose coefficients are given in Table 2, and the fourth-order Yoshida (Y4) method, whose coefficients are given in Table 3.
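The following Python sketch shows how an s-stage OS step (3) composes the sub-flows, together with one parametrization of OS22\(\beta \). The coefficient formulas are our own derivation from the first- and second-order conditions; they reproduce the sign pattern described above and reduce to a Strang method (with the half steps on operator 2) at \(\beta = 0.5\), but Table 1 remains the authoritative source. The names `os22_coefficients` and `os_step` are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")
Flow = Callable[[T, float], T]  # exact sub-flow: (state, step size) -> new state


def os22_coefficients(beta: float) -> List[Tuple[float, float]]:
    """Assumed OS22beta coefficients [(alpha_k^[1], alpha_k^[2]) for k = 1, 2].

    Derived here from the first- and second-order conditions; not copied from Table 1.
    """
    if beta == 1.0:
        raise ValueError("beta = 1 is excluded from the OS22beta family")
    a21 = 1.0 / (2.0 * (1.0 - beta))        # alpha_2^[1]
    return [(1.0 - a21, 1.0 - beta),         # stage 1: (alpha_1^[1], alpha_1^[2])
            (a21, beta)]                     # stage 2: (alpha_2^[1], alpha_2^[2])


def os_step(flows: Sequence[Flow], alphas: Sequence[Tuple[float, float]], y: T, dt: float) -> T:
    """One step of (3): stage k applies phi^[1] over alpha_k^[1]*dt, then phi^[2] over alpha_k^[2]*dt."""
    for a1, a2 in alphas:
        y = flows[0](y, a1 * dt)
        y = flows[1](y, a2 * dt)
    return y


# Sanity check with commuting flows y' = -0.3y and y' = -0.7y: any consistent method is exact.
flows = [lambda y, h: y * math.exp(-0.3 * h), lambda y, h: y * math.exp(-0.7 * h)]
print(os_step(flows, os22_coefficients(0.75), 1.0, 0.1), math.exp(-0.1))
```

Passing `[(1.0, 1.0)]` as the coefficients gives the Godunov method; negative entries in the returned pairs correspond to the backward-in-time sub-steps discussed above.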

2.1.1 Operator-splitting for \(N_{\text {op}}\)-additive problems

Consider the IVP for an \(N_{\text {op}}\)-additive ODE

The IVP (4) can be solved using a generalized Godunov or Strang \(N_{\text {op}}\)-splitting method,

Remark 1

We note that the Strang splitting method is a composition of the Godunov splitting method with its adjoint over \(\Delta {t}/2\). One approach to generating high-order \(N_{\text {op}}\)-split operator-splitting methods is to use composition methods: basic low-order \(N_{\text {op}}\)-split operator-splitting methods are composed with different step sizes to generate higher-order methods [10]. For example, let \( \gamma _1 = \gamma _3 = \frac{1}{2-2^{1/3}},\) and \( \gamma _2 = - \frac{2^{1/3}}{2-2^{1/3}}\). We can generate a Yoshida-like fourth-order \(N_{\text {op}}\)-split method by composing the Strang splitting method (6):

$$\textbf{y}_{n+1} = \Psi _{\gamma _3\Delta {t}} \circ \Psi _{\gamma _2\Delta {t}} \circ \Psi _{\gamma _1\Delta {t}}(\textbf{y}_n), \tag{7}$$

where \(\Psi _{\Delta {t}}\) denotes one step of the Strang splitting method (6) with step size \(\Delta {t}\).

The explicit coefficients of the \(N_{\text {op}}\)-split Yoshida method can be found in Table 4.
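The composition in Remark 1 can be sketched as a list of (operator, fraction of \(\Delta {t}\)) sub-steps in the order they are applied. This is an illustration under assumptions: we take the \(N_{\text {op}}\)-split Strang method (6) to be the symmetric sequence of half steps for operators 1 through \(N_{\text {op}}-1\) around a full step of operator \(N_{\text {op}}\), operators are indexed from 0 in the code, and the function names are hypothetical. Adjacent sub-steps of the same operator are merged because exact flows satisfy \(\varphi _{s} \circ \varphi _{t} = \varphi _{s+t}\); Table 4 should be consulted for the coefficients actually used.

```python
from typing import List, Tuple

Substep = Tuple[int, float]  # (operator index, fraction of dt), in order of application


def strang_sequence(n_op: int) -> List[Substep]:
    """Assumed N_op Strang method: half steps of operators 0..n_op-2 around a full step of the last."""
    outward = [(ell, 0.5) for ell in range(n_op - 1)]
    return outward + [(n_op - 1, 1.0)] + outward[::-1]


def yoshida_sequence(n_op: int) -> List[Substep]:
    """Triple-jump composition of strang_sequence with gamma_1, gamma_2, gamma_3 from Remark 1."""
    c = 2.0 ** (1.0 / 3.0)
    gammas = (1.0 / (2.0 - c), -c / (2.0 - c), 1.0 / (2.0 - c))
    seq: List[Substep] = []
    for g in gammas:
        for ell, frac in strang_sequence(n_op):
            if seq and seq[-1][0] == ell:
                # Consecutive exact flows of one operator combine into a single sub-step.
                seq[-1] = (ell, seq[-1][1] + g * frac)
            else:
                seq.append((ell, g * frac))
    return seq


for op, frac in yoshida_sequence(2):
    print(op, round(frac, 4))
```

Each operator's fractions sum to one, and some fractions are negative, i.e., the method contains backward-in-time sub-steps, as expected for an order above two.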

2.2 The SIR model

The susceptible-infected-recovered (SIR) model is a basic compartmental model first introduced by Kermack and McKendrick [3] in 1927 to model the spread of infectious diseases. Each living member of a general population is assigned to one of the compartments susceptible (S), infectious (I), or recovered (R) according to whether they have never had the disease, have the disease, or no longer have the disease. The mathematical model is described by the following differential equations:

$$\frac{\textrm{d}S}{\textrm{d}t} = -aSI, \quad \frac{\textrm{d}I}{\textrm{d}t} = aSI - bI, \quad \frac{\textrm{d}R}{\textrm{d}t} = bI, \tag{8}$$

for all \(t>0\), \(a, b > 0\), with the initial condition

$$S(0) = S_0, \quad I(0) = I_0, \quad R(0) = R_0.$$

Despite its simplicity, the SIR model can be used to demonstrate general trends in the evolution of its constituent compartments in response to new data (e.g., suggesting changes in parameter values) and potential interventions (e.g., mandatory masking, limits on gathering sizes, or lockdowns).

2.2.1 Process-based splitting of the SIR model with exact sub-integration

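We first solve the SIR model with an operator-splitting strategy that splits the right-hand side of (8) according to the physical processes between the variables:

$$\frac{\textrm{d}S}{\textrm{d}t} = 0, \quad \frac{\textrm{d}I}{\textrm{d}t} = -bI, \quad \frac{\textrm{d}R}{\textrm{d}t} = bI, \tag{9a}$$

$$\frac{\textrm{d}S}{\textrm{d}t} = -aSI, \quad \frac{\textrm{d}I}{\textrm{d}t} = aSI, \quad \frac{\textrm{d}R}{\textrm{d}t} = 0. \tag{9b}$$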

Remark 2

We note that (9a) describes the transformation of population between I and R and (9b) describes the transformation of population between S and I. In this case, the process-based splitting coincides with a linear-nonlinear splitting of the original system of ODEs. Linear-nonlinear splitting is a common splitting strategy for systems such as reaction-diffusion systems [21].

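At each OS stage k, sub-systems (9a) and (9b) are solved sequentially with time step-sizes \(\alpha _{k}^{[1]}\Delta {t}\) and \(\alpha _{k}^{[2]}\Delta {t}\). For such a splitting strategy, each sub-integration can be performed exactly. The exact solutions to (9a) and (9b) at OS stage k are

$$S^{[1]}_{n,k} = S^{[2]}_{n,k-1}, \quad I^{[1]}_{n,k} = I^{[2]}_{n,k-1}e^{-b\alpha _{k}^{[1]}\Delta {t}}, \quad R^{[1]}_{n,k} = R^{[2]}_{n,k-1} + I^{[2]}_{n,k-1}\left(1-e^{-b\alpha _{k}^{[1]}\Delta {t}}\right), \tag{10a}$$

$$\begin{aligned} S^{[2]}_{n,k} &= \frac{S^{[1]}_{n,k}\left(S^{[1]}_{n,k}+I^{[1]}_{n,k}\right)}{S^{[1]}_{n,k} + I^{[1]}_{n,k}\exp \left[a\left(S^{[1]}_{n,k}+I^{[1]}_{n,k}\right)\alpha _{k}^{[2]}\Delta {t}\right]}, \\ I^{[2]}_{n,k} &= \frac{I^{[1]}_{n,k}\left(S^{[1]}_{n,k}+I^{[1]}_{n,k}\right)}{S^{[1]}_{n,k}\exp \left[-a\left(S^{[1]}_{n,k}+I^{[1]}_{n,k}\right)\alpha _{k}^{[2]}\Delta {t}\right] + I^{[1]}_{n,k}}, \\ R^{[2]}_{n,k} &= R^{[1]}_{n,k}, \end{aligned} \tag{10b}$$

where \(S^{[2]}_{n,0} = S_n\), \(I^{[2]}_{n,0} = I_n\), \(R^{[2]}_{n,0} = R_n\), and \(S_{n+1} = S^{[2]}_{n,s}\), \(I_{n+1} = I^{[2]}_{n,s}\), \(R_{n+1} = R^{[2]}_{n,s}\).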

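The algorithm to advance the numerical solution of (9a) and (9b) using OS22\(\beta \) with exact sub-integration (10) from given \(S_n, I_n, R_n\) values at time \(t_n\) to values \(S_{n+1}, I_{n+1}, R_{n+1}\) at time \(t_{n+1} = t_n + \Delta {t}\) applies (10a) followed by (10b) at each of the two stages \(k = 1, 2\), using the OS22\(\beta \) coefficients in Table 1; we refer to it as (OS22\(\beta \)-process-based).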
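A minimal Python sketch of a process-based OS step with exact sub-integration, assuming the exact sub-flows (10a) and (10b) and states ordered as (S, I, R). The function names are hypothetical; the coefficients are passed as a list of pairs \((\alpha _{k}^{[1]}, \alpha _{k}^{[2]})\), so any OS method of the form (3), including OS22\(\beta \) with the values of Table 1, can be plugged in. Both flows accept negative step sizes, which is what backward-in-time sub-steps require; because `math.exp` overflows for very large arguments, the sketch is intended for moderate values of \(a(S+I)\alpha _{k}^{[2]}\Delta {t}\).

```python
import math
from typing import Sequence, Tuple

State = Tuple[float, float, float]  # (S, I, R)


def flow_recovery(y: State, h: float, b: float) -> State:
    """Exact flow of (9a) over a step h (h may be negative): I -> R at rate b*I."""
    s, i, r = y
    decay = math.exp(-b * h)
    return (s, i * decay, r + i * (1.0 - decay))


def flow_infection(y: State, h: float, a: float) -> State:
    """Exact flow of (9b) over a step h (h may be negative): S -> I with S + I fixed."""
    s, i, r = y
    total = s + i
    if total == 0.0:
        return y  # nothing to exchange
    g = math.exp(a * total * h)  # may overflow for very large a*(S+I)*h
    denom = s + i * g
    # Same expressions as (10b), with the I-formula multiplied through by g.
    return (s * total / denom, i * total * g / denom, r)


def os_sir_step(y: State, dt: float, alphas: Sequence[Tuple[float, float]],
                a: float, b: float) -> State:
    """One OS step with exact sub-integration: stage k applies (10a), then (10b)."""
    for a1, a2 in alphas:
        y = flow_recovery(y, a1 * dt, b)
        y = flow_infection(y, a2 * dt, a)
    return y


y = (0.99, 0.01, 0.0)
for _ in range(10):
    y = os_sir_step(y, 0.5, [(1.0, 1.0)], a=2.0, b=0.5)  # Godunov coefficients
print(y, sum(y))
```

Writing both outputs of the infection flow over the common denominator \(S + Ig\) keeps every factor non-negative, so S and I cannot become negative through cancellation; monitoring `sum(y)` after each step gives a quick numerical check of conservation.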

2.2.2 Dynamic linearization of the SIR model with exact sub-integration

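To solve an ODE \( \frac{\textrm{d}\textbf{y}}{\textrm{d}t} = \textbf{f}(t,\textbf{y})\) using dynamic linearization, we first write the ODE as

$$\frac{\textrm{d}\textbf{y}}{\textrm{d}t} = \textbf{J}\textbf{y} + \left[\textbf{f}(t,\textbf{y}) - \textbf{J}\textbf{y}\right],$$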

where \(\textbf{J}= \partial {\textbf{f}}/\partial {\textbf{y}}\) is the Jacobian matrix. Then, the ODE is split as

Unless \(\textbf{f}(t,\textbf{y})\) is linear and has constant coefficients, \(\textbf{J}\) is generally a function of t and the solution \(\textbf{y}(t)\). In the method of dynamic linearization, \(\textbf{J}\) is evaluated and then frozen at the beginning of each time step. Specifically, when solving the SIR model using dynamic linearization from \(t_n\) to \(t_{n+1}\), we evaluate the Jacobian matrix at \(( t_n, \textbf{y}_n)\), with \(\textbf{y} = [S, I, R]^T\), as

$$\textbf{J}(t_n, \textbf{y}_n) = \begin{bmatrix} -aI_n & -aS_n & 0 \\ aI_n & aS_n - b & 0 \\ 0 & b & 0 \end{bmatrix}.$$

For a splitting based on dynamic linearization of (8), the sub-systems

can again be integrated exactly, and the solutions \(X_{n+1}\) for \(X \in \{S,I,R\}\) are obtained using any desired OS method. The exact solutions to (11a) and (11b) can be generated using a computer algebra system such as Maple. Due to the complexity of these solutions, we do not present them here.
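The sketch below illustrates the dynamic-linearization split for the SIR model with the Jacobian frozen at \(\textbf{y}_n\). It assumes the state ordering [S, I, R] and takes the two parts to be \(\textbf{J}_n\textbf{y}\) and \(\textbf{f}(\textbf{y}) - \textbf{J}_n\textbf{y}\), where \(\textbf{J}_n\) is the frozen Jacobian; which of these corresponds to (11a) and which to (11b) does not matter for this check, and the function names are hypothetical. Because every column of the SIR Jacobian sums to zero, the components of both parts sum to zero, which is why dynamic linearization also conserves total population (Remark 4).

```python
from typing import List, Tuple

Vector = List[float]


def sir_rhs(y: Vector, a: float, b: float) -> Vector:
    """Right-hand side of the SIR model (8) with y = [S, I, R]."""
    s, i, _ = y
    return [-a * s * i, a * s * i - b * i, b * i]


def sir_jacobian(y: Vector, a: float, b: float) -> List[Vector]:
    """Jacobian of (8) evaluated at y = [S, I, R]."""
    s, i, _ = y
    return [[-a * i, -a * s, 0.0],
            [a * i, a * s - b, 0.0],
            [0.0, b, 0.0]]


def linearized_parts(y: Vector, y_n: Vector, a: float, b: float) -> Tuple[Vector, Vector]:
    """Return (J_n y, f(y) - J_n y) with the Jacobian J_n frozen at y_n."""
    jac = sir_jacobian(y_n, a, b)
    linear = [sum(jac[r][c] * y[c] for c in range(3)) for r in range(3)]
    remainder = [fr - lr for fr, lr in zip(sir_rhs(y, a, b), linear)]
    return linear, remainder


y_n = [0.9, 0.1, 0.0]
linear, remainder = linearized_parts([0.7, 0.2, 0.1], y_n, a=2.0, b=0.5)
print(sum(linear), sum(remainder))  # both vanish up to rounding
```

At \(\textbf{y} = \textbf{y}_n\), the remainder evaluates to \((aS_nI_n, -aS_nI_n, 0)\).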

2.3 Robertson test problem and general PDSs

The Robertson test problem is a stiff system of three nonlinear ODEs that describes the kinetics of a chemical reaction involving three species. It is given as follows

where a, b, c are positive constants and the initial conditions \(X(0) = X_0\), \(Y(0) = Y_0\), \(Z(0) = Z_0\) are all positive.

2.3.1 Process-based splitting of the Robertson test problem with exact sub-integration

As in Section 2.2.1, we split the Robertson problem according to its processes into the following two sub-systems:

Similar to the SIR problem, at each OS stage k, sub-systems (13a) and (13b) are solved exactly with time step-sizes \(\alpha _{k}^{[1]}\Delta {t}\) and \(\alpha _{k}^{[2]}\Delta {t}\). The exact solutions to (13a) and (13b) at OS stage k are

where \(\alpha _{k}^{[\ell ]}\), \(k=1,2,\dots ,s\), \(\ell = 1,2\), are the splitting coefficients, and \(X_n, Y_n, Z_n\) are the numerical approximations of the variables X, Y, Z at \(t=t_n\). We note that \(X_{n,0}^{[2]} = X_n\), \(Y_{n,0}^{[2]} = Y_n\), and \(Z_{n,0}^{[2]} = Z_n\). To advance from \(t_n\) to \(t_{n+1}\), we apply (14a) and (14b) consecutively over all s stages of the operator-splitting method and set \(X_{n+1} = X_{n,s}^{[2]}, Y_{n+1} = Y_{n,s}^{[2]}, Z_{n+1} = Z_{n,s}^{[2]}\).

2.3.2 Process-based splitting of the production-destruction systems

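A natural way to solve a fully conservative PDS (1) with N constituents using operator splitting is to split the system into \( \frac{N(N-1)}{2}\) sub-systems,

$$\frac{\textrm{d}y_i}{\textrm{d}t} = p_{ij}(\textbf{y}) - d_{ij}(\textbf{y}), \quad \frac{\textrm{d}y_j}{\textrm{d}t} = d_{ij}(\textbf{y}) - p_{ij}(\textbf{y}), \quad \frac{\textrm{d}y_m}{\textrm{d}t} = 0 \text { for } m \ne i,j, \tag{15}$$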

for \(i = 1,2,\dots ,N-1\) and \(j = i+1,i+2,\dots , N\).
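As an illustration of the pairwise splitting, the sketch below builds one right-hand side per pair (i, j) with i < j from a production matrix, again assuming a fully conservative PDS so that \(d_{ij} = p_{ji}\). The function name is hypothetical and indices start at 0. Pairs whose constituents do not interact yield identically zero sub-systems; for the SIR model, only the (S, I) and (I, R) pairs are nontrivial.

```python
from itertools import combinations
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def pairwise_subsystems(n: int, production: Callable[[Vector], Matrix]) -> List[Callable[[Vector], Vector]]:
    """One right-hand side per pair (i, j), i < j; assumes a fully conservative PDS (d_ij = p_ji)."""
    subsystems = []
    for i, j in combinations(range(n), 2):
        def rhs(y: Vector, i: int = i, j: int = j) -> Vector:
            p = production(y)
            net = p[i][j] - p[j][i]  # p_ij - d_ij, using d_ij = p_ji
            dy = [0.0] * n
            dy[i] = net      # constituent i gains the net transfer from j
            dy[j] = -net     # constituent j loses exactly the same amount
            return dy
        subsystems.append(rhs)
    return subsystems


subs = pairwise_subsystems(4, lambda y: [[0.0] * 4 for _ in range(4)])
print(len(subs))  # 6, i.e., N(N-1)/2 for N = 4
```

The default arguments `i=i, j=j` bind each pair at definition time, so every closure refers to its own pair. Each returned sub-system has components summing to zero, which is the property used in the proof of Theorem 1.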

Remark 3

1. We note that each sub-system describes the rate at which constituents i and j are transformed into one another. If every pair of constituents i and j is connected in both directions, a process-based splitting strategy has \(N(N-1)/2\) operators. For example, a general PDS with three constituents would be split into three sub-systems as depicted in Fig. 1. In the case of the SIR model, the transformations are unidirectional and occur only between S and I and between I and R, as shown in Fig. 2. Therefore, the resulting split system consists of only two sub-systems (each treated as one operator acting on two constituents at a time).

2. The choice of how to split a system of differential equations usually depends on the goals of the simulation, e.g., on the properties to be preserved or the physical or computational characteristics of the solution. Splitting a PDS as described in (15) produces much simpler sub-systems and generally increases the chances of obtaining an exact solution for each sub-system (if desired). Several high-order 2- and 3-split operator-splitting methods are available, e.g., [20, 22]. \(N_{\text {op}}\)-split operator-splitting methods include Godunov (5), Strang (6), and Yoshida (7). We are unaware of general \(N_{\text {op}}\)-split operator-splitting methods beyond these.

2.4 Qualitative properties

2.4.1 Qualitative properties for the SIR model

Numerical solutions to the SIR model must share important properties with the true solution in order for them to have physical interpretations. We denote the numerical solutions \(X_n \approx X(t_n)\), \(X \in \{S, I, R\}\), for \(t_n = n\Delta {t}\), \(n \in \mathbb {N}\) \( := \{ 0, 1, \dots \} \).

1. The dynamics of an epidemic often dominate the dynamics of birth, death, and population immigration. Accordingly, it is justified to omit the effects of births, deaths, and immigration in a simple SIR model, so the total population is conserved. This conservation law follows directly from the differential equations: adding the equations of system (8) gives

$$\begin{aligned} \frac{\textrm{d}S}{\textrm{d}t} +\frac{\textrm{d}I}{\textrm{d}t} +\frac{\textrm{d}R}{\textrm{d}t} = 0 \quad \Rightarrow \quad S(t) + I(t) + R(t) = N_0 \text { for all } t > 0. \end{aligned}$$

We demand the same property from the numerical solutions; i.e., given initial conditions \(S_0 + I_0 + R_0 = N_0\), we have

$$\begin{aligned} S_n + I_n + R_n = N_0 \text { for all } n\in \mathbb {N} \end{aligned} \tag{P1}$$

Failure to satisfy (P1) would undermine the credibility of any results. That being said, satisfaction of (P1) in itself does not guarantee reliable solutions; i.e., (P1) is a necessary but not sufficient indicator of solution quality.

2. Because the functions S, I, R denote population densities, their values should remain non-negative. Hence, we require the same from the numerical solution; i.e., given initial condition \(X_0 \ge 0\), we have

$$\begin{aligned} X_n \ge 0, \text { for all } n\in \mathbb {N} \text { and } X \in \{S,I,R \}. \end{aligned} \tag{P2}$$

3. We assume that infected or recovered individuals develop immunity; therefore, the function S is non-increasing in time. We require that the numerical solution satisfies

$$\begin{aligned} S_{n} \ge S_{n+1} \text { for all } n\in \mathbb {N}. \end{aligned} \tag{P3}$$

4. We assume that recovered individuals do not move to another compartment. Hence, R must be a non-decreasing function of time. We require the same from the numerical solution:

$$\begin{aligned} R_{n} \le R_{n+1} \text { for all } n\in \mathbb {N}. \end{aligned} \tag{P4}$$
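A small helper for checking (P1)–(P4) on a computed trajectory may be useful when experimenting with different splitting strategies. This is a sketch: the name `check_sir_properties`, the trajectory format (a list of (S, I, R) tuples at \(t_0, t_1, \dots \)), and the round-off tolerance are our own choices.

```python
from typing import Dict, Sequence, Tuple

State = Tuple[float, float, float]  # (S, I, R)


def check_sir_properties(traj: Sequence[State], tol: float = 1e-12) -> Dict[str, bool]:
    """Report whether a trajectory satisfies (P1)-(P4) up to a round-off tolerance."""
    n0 = sum(traj[0])
    steps = list(zip(traj, traj[1:]))
    return {
        "P1": all(abs(sum(y) - n0) <= tol * max(1.0, abs(n0)) for y in traj),  # conservation
        "P2": all(min(y) >= -tol for y in traj),                               # non-negativity
        "P3": all(new[0] <= old[0] + tol for old, new in steps),               # S non-increasing
        "P4": all(new[2] >= old[2] - tol for old, new in steps),               # R non-decreasing
    }


traj = [(0.9, 0.1, 0.0), (0.85, 0.12, 0.03), (0.8, 0.1, 0.1)]
print(check_sir_properties(traj))
```

A small tolerance is used because exact equality in (P1) or the monotonicity conditions cannot be expected in floating-point arithmetic.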

2.4.2 Qualitative properties for general PDSs

General production-destruction systems do not require monotonicity in their variables. We are interested in preserving the conservation and positivity properties in the numerical solution, as defined in Definition 4. For the Robertson test problem introduced in Section 2.3, because the original ODE (12) is conservative, we require that, for all \(\Delta {t} > 0 \) and each \(n\in \mathbb {N}\),

$$X_n + Y_n + Z_n = X_0 + Y_0 + Z_0. \tag{Robertson P1}$$

Because each variable X, Y, Z represents a chemical concentration, we also require that, for all \(\Delta {t} > 0 \) and each \(n\in \mathbb {N}\),

$$X_n \ge 0, \quad Y_n \ge 0, \quad Z_n \ge 0.$$

3 Main results: effect of splitting strategy on qualitative property preservation of production-destruction systems

In this section, we give the main results on qualitative property preservation for the two splitting strategies applied to the SIR model and for process-based splitting applied to the Robertson test problem. We also extend the analysis of the positivity-preserving property of OS methods to general production-destruction systems.

3.1 Conservation property of operator-splitting methods

In this section, we discuss the effect of operator-splitting methods in preserving the conservation property of a production-destruction system.

Theorem 1

Assume that each sub-system (15) of a process-based splitting strategy of a PDS (1) has an exact solution. Then, the numerical approximation obtained using an operator-splitting method with exact sub-integration is unconditionally conservative.

Proof

When solving (1) using operator-splitting methods, let \(\textbf{y}_{n,k}^{[\ell ]}\) be the numerical solution obtained by solving the sub-system (15) exactly over a sub-step of size \(\alpha _{k}^{[\ell ]}\Delta {t}\). Because the derivatives in (15) sum to zero, the sum of the components of \(\textbf{y}_{n,k}^{[\ell ]}\) does not change, regardless of the value of \(\alpha _{k}^{[\ell ]}\). Hence, the sum of the components of the numerical solution \(\textbf{y}_{n+1}\) at \(t=t_{n+1}\) is equal to the sum of the components of \(\textbf{y}_{n}\) at \(t=t_{n}\). Therefore, the operator-splitting methods are unconditionally conservative. \(\square \)

Remark 4

We note that the conservation property relies on two facts: 1. each sub-system is conservative, i.e., the sum of its derivatives equals zero, and 2. the underlying numerical method used to solve the sub-systems is unconditionally conservative. In this case, the operator-splitting method does not affect the conservation property. For the same reason, if the SIR model is split using dynamic linearization (11a) and (11b) and solved using operator-splitting methods with exact sub-integration, the numerical solution is still unconditionally conservative. In conclusion, property (P1) is satisfied for the SIR model with both process-based splitting and dynamic linearization, and property (Robertson P1) is satisfied for the Robertson test problem with process-based splitting.

Remark 5

One may contemplate eliminating one variable using the conservation property of the system for the SIR model and solving a system with one less unknown. However, it is unclear that such an approach would significantly simplify the solution of the SIR model or (even more so) other larger and more complex systems, e.g., production-destruction systems.

3.2 Process-based splitting of the SIR model

In this section, we focus on the effect of negative time-stepping on the qualitative properties (P2)–(P4). If no backward stepping is required, properties (P2)–(P4) are satisfied trivially, as stated in Theorem 2.

Theorem 2

Assume that all \(\alpha _{k}^{[\ell ]} \ge 0\). Then, properties (P2)–(P4) are satisfied when we solve the SIR model using (10).

Proof

Property (P2) is satisfied because the exact solutions (10a) and (10b) remain non-negative at all intermediate stages of S, I, R if \(\Delta {t} \ge 0\) and \(\alpha _{k}^{[\ell ]} \ge 0\).

Property (P3) is satisfied because \( \frac{\textrm{d}S^{[1]}}{\textrm{d}t} = 0\) and \( \frac{\textrm{d}S^{[2]}}{\textrm{d}t} \le 0\) when all intermediate values of S, I, R are non-negative. Hence, when the sub-systems (9a) and (9b) are solved exactly, the desired monotonicity property (P3) is preserved.

Similarly, property (P4) is satisfied because \( \frac{\textrm{d}R^{[1]}}{\textrm{d}t} \ge 0\) and \( \frac{\textrm{d}R^{[2]}}{\textrm{d}t} = 0\) when all intermediate values of S, I, R are non-negative. \(\square \)

3.2.1 Solving the SIR model using OS22\(\beta \) with negative coefficients

Lemma 1

If \(S_n \ge 0\) and \(I_n \ge 0\), then \(S_{n+1} \ge 0\) and \(I_{n+1} \ge 0\) for all \(\Delta {t} > 0\) when the SIR model is solved using (OS22\(\beta \)-process-based).

Proof

Equation (10a) implies that at each stage k, after solving the first sub-system (9a) over \(\alpha _{k}^{[1]}\Delta {t}\), \(S^{[1]}_{n,k} \ge 0\) and \(I^{[1]}_{n,k} \ge 0\) if \(S^{[2]}_{n,k-1} \ge 0\) and \(I^{[2]}_{n,k-1} \ge 0\).

Equation (10b) implies that at each stage k, after solving the second sub-system (9b) over \(\alpha _{k}^{[2]}\Delta {t}\), \(S^{[2]}_{n,k} \ge 0\) and \(I^{[2]}_{n,k} \ge 0\) if \(S^{[1]}_{n,k} \ge 0\) and \(I^{[1]}_{n,k} \ge 0\) because exponential functions are positive.

Therefore, if \(S_{n}\ge 0 \) and \(I_n \ge 0\), we can recursively conclude that \(S^{[\ell ]}_{n,k} \ge 0\) and \(I^{[\ell ]}_{n,k} \ge 0\) for \(\ell = 1,2\) and all \(k=1,2,\dots ,s\). Hence, \(S_{n+1} = S^{[2]}_{n,s} \ge 0 \) and \(I_{n+1} = I^{[2]}_{n,s} \ge 0 \). \(\square \)

Proposition 1

Property (P4) holds for the SIR model for all \(\Delta {t} > 0\) when it is solved using (OS22\(\beta \)-process-based) with \(S_n \ge 0\) and \(I_n \ge 0\).

Proof

We consider the following two cases:

- \(\beta \in (-\infty ,0.5] \). When \(\beta \in (-\infty ,0.5]\), \(\alpha _{k}^{[1]} \ge 0 \) for \(k=1,2\). Hence, \(1-e^{-b\alpha _{k}^{[1]}\Delta {t}} \ge 0\) for \(k=1,2\). Furthermore, the proof of Lemma 1 implies that \(I_n \ge 0\) and \(I_{n,1}^{[2]} \ge 0\). Therefore,

$$R_{n+1}- R_n = I_n (1-e^{-b\alpha _{1}^{[1]}\Delta {t}}) + I_{n,1}^{[2]} (1-e^{-b\alpha _{2}^{[1]}\Delta {t}}) \ge 0,$$

and so \(R_{n+1} \ge R_n\) for all n.

- \(\beta \in (0.5,1)\cup (1,\infty )\). When \(\beta \in (0.5,1)\cup (1,\infty )\), solving the SIR model with (OS22\(\beta \)-process-based) yields

$$\begin{aligned} R_{n+1} - R_n = I_n(1-e^{-b\alpha _{1}^{[1]}\Delta {t}}) + \frac{I_n (1-e^{-b\alpha _{2}^{[1]}\Delta {t}})(S_n+I_ne^{-b\alpha _{1}^{[1]}\Delta {t}})e^{-b\alpha _{1}^{[1]}\Delta {t}}}{S_ne^{-a(S_n+I_ne^{-b\alpha _{1}^{[1]}\Delta {t}})\alpha _{1}^{[2]}\Delta {t}} + I_ne^{-b\alpha _{1}^{[1]}\Delta {t}}}. \end{aligned}$$

We first show that

$$\begin{aligned} R_{n+1} - R_n \ge I_n(1-e^{-b\alpha _{1}^{[1]}\Delta {t}}) + \frac{I_n (1-e^{-b\alpha _{2}^{[1]}\Delta {t}})(S_n+I_ne^{-b\alpha _{1}^{[1]}\Delta {t}})e^{-b\alpha _{1}^{[1]}\Delta {t}}}{S_n+ I_ne^{-b\alpha _{1}^{[1]}\Delta {t}}} . \end{aligned} \tag{16}$$

When \(\beta \in (0.5,1)\), \(\alpha _{1}^{[1]} < 0\), \(\alpha _{1}^{[2]} > 0 \), and \(\alpha _{2}^{[1]} > 0 \), and the following inequalities hold:

$$\begin{aligned} \left\{ \begin{array}{ll} I_n(1-e^{-b\alpha _{1}^{[1]}\Delta {t}})< 0, &{} \text { because } \alpha _{1}^{[1]} <0; \\ \frac{I_n (1-e^{-b\alpha _{2}^{[1]}\Delta {t}})(S_n+I_ne^{-b\alpha _{1}^{[1]}\Delta {t}})e^{-b\alpha _{1}^{[1]}\Delta {t}}}{S_ne^{-a(S_n+I_ne^{-b\alpha _{1}^{[1]}\Delta {t}})\alpha _{1}^{[2]}\Delta {t}} + I_ne^{-b\alpha _{1}^{[1]}\Delta {t}}}> 0, &{} \text { because } \alpha _{2}^{[1]}> 0; \\ e^{-a(S_n+I_ne^{-b\alpha _{1}^{[1]}\Delta {t}})\alpha _{1}^{[2]}\Delta {t}} \le 1 , &{} \text { because } \alpha _{1}^{[2]} > 0. \end{array} \right. \end{aligned}$$

Hence, (16) holds. When \(\beta \in (1,\infty )\), \(\alpha _{1}^{[1]} > 0\), \(\alpha _{1}^{[2]} < 0 \), and \(\alpha _{2}^{[1]} < 0 \), and the following inequalities hold:

$$\begin{aligned} \left\{ \begin{array}{ll} I_n(1-e^{-b\alpha _{1}^{[1]}\Delta {t}})> 0, &{} \text { because } \alpha _{1}^{[1]} >0; \\ \frac{I_n(1-e^{-b\alpha _{2}^{[1]}\Delta {t}})(S_n+I_ne^{-b\alpha _{1}^{[1]}\Delta {t}})e^{-b\alpha _{1}^{[1]}\Delta {t}}}{S_ne^{-a(S_n+I_ne^{-b\alpha _{1}^{[1]}\Delta {t}})\alpha _{1}^{[2]}\Delta {t}} + I_ne^{-b\alpha _{1}^{[1]}\Delta {t}}}< 0, &{} \text { because } \alpha _{2}^{[1]}< 0; \\ e^{-a(S_n+I_ne^{-b\alpha _{1}^{[1]}\Delta {t}})\alpha _{1}^{[2]}\Delta {t}} \ge 1 , &{} \text { because } \alpha _{1}^{[2]} < 0. \end{array} \right. \end{aligned}$$

Hence, (16) holds. Finally,

$$\begin{aligned} R_{n+1} - R_n & \ge I_n(1-e^{-b\alpha _{1}^{[1]}\Delta {t}}) + \frac{I_n(1-e^{-b\alpha _{2}^{[1]}\Delta {t}})(S_n+I_ne^{-b\alpha _{1}^{[1]}\Delta {t}})e^{-b\alpha _{1}^{[1]}\Delta {t}}}{S_n+ I_ne^{-b\alpha _{1}^{[1]}\Delta {t}}} \\ & = I_n(1-e^{-b\alpha _{1}^{[1]}\Delta {t}}) + I_n(1-e^{-b\alpha _{2}^{[1]}\Delta {t}})e^{-b\alpha _{1}^{[1]}\Delta {t}} \\ & = I_n - I_ne^{-b\alpha _{1}^{[1]}\Delta {t}} + I_ne^{-b\alpha _{1}^{[1]}\Delta {t}} - I_n e^{-b(\alpha _{1}^{[1]}+\alpha _{2}^{[1]})\Delta {t}} \\ & = I_n(1-e^{-b(\alpha _{1}^{[1]}+\alpha _{2}^{[1]})\Delta {t}}). \end{aligned}$$

We note that \(\alpha _{1}^{[1]}+\alpha _{2}^{[1]} = 1\) from the order conditions. Therefore, \(R_{n+1} - R_n \ge I_n(1-e^{-b\Delta {t}}) \ge 0\), with strict inequality whenever \(I_n > 0\).

In conclusion, \(R_{n+1} \ge R_n\) for all \(\Delta {t} \ge 0\) when the SIR model is solved using (OS22\(\beta \)-process-based). \(\square \)

Corollary 1

Property (P2) holds for all \(\Delta {t} > 0\) when the SIR model is solved using (OS22\(\beta \)-process-based).

Proof

Proposition 1 implies that, for all \(\Delta {t} \ge 0\), \(R_{n+1} \ge 0\) if \(R_n \ge 0\). Together with Lemma 1 and the initial condition \(S_0,I_0,R_0\ge 0\), this implies by induction that \(S_n, I_n, R_n \ge 0\) for all n. \(\square \)

Remark 6

We note that the intermediate stages \(R_{n,k}^{[\ell ]}\) may be negative, but all solution values at \(t = t_{n+1}\) are non-negative.

Furthermore, the proof of Proposition 1 can be generalized to cases where \(\alpha _{k}^{[1]}+\alpha _{k+1}^{[1]} \ge 0\), which is a property both R3 and Y4 satisfy. In particular, it can easily be verified that in R3, we have \(\alpha _{2}^{[1]}+\alpha _{3}^{[1]} > 0\), and in Y4, we have \(\alpha _{1}^{[1]}+\alpha _{2}^{[1]} > 0\) and \(\alpha _{3}^{[1]}+\alpha _{4}^{[1]} > 0\).

Proposition 2

Property (P3) follows from property (P2) for the SIR model solved using (OS22\(\beta \)-process-based) for all \(\beta \ne 1\).

Proof

-

If \(\beta \in [0,1)\), then \(\alpha _{k}^{[2]} \ge 0 \) for \(k=1,2\). Because all intermediate stages \(S_{n,k}^{[\ell ]}\) and \(I_{n,k}^{[\ell ]}\) are non-negative, \( \frac{\textrm{d}S^{[2]}}{\textrm{d}t} = - aS^{[2]} I^{[2]} \le 0\), so \(S\) does not increase during the sub-steps of the second operator, and it is unchanged by the first operator. Therefore,

$$\begin{aligned} S_{n+1} = S_{n,2}^{[2]} \le S_{n,2}^{[1]} = S_{n,1}^{[2]} \le S_{n,1}^{[1]} = S_n. \end{aligned}$$Hence, property (P3) holds for all \(\Delta {t} \ge 0 \).

-

If \(\beta < 0\), then \(\alpha _{1}^{[1]},\alpha _{2}^{[1]},\alpha _{1}^{[2]} > 0\) and \(\alpha _{2}^{[2]} < 0\). To show that \(S_{n+1} \le S_n\), it is sufficient to show that \( \frac{S_{n+1}}{S_n} \le 1\).

$$\begin{aligned} \frac{S_{n+1}}{S_n}{} & {} = \frac{S^{[2]}_{n,2}}{S^{[1]}_{n,1}} = \frac{S^{[2]}_{n,2}}{S^{[1]}_{n,2}} \cdot \frac{S^{[1]}_{n,2}}{S^{[1]}_{n,1}} = \frac{S^{[2]}_{n,2}}{S^{[1]}_{n,2}} \cdot \frac{S^{[2]}_{n,1}}{S^{[1]}_{n,1}} \\{} & {} = \frac{[S^{[1]}_{n,2} + I^{[1]}_{n,2}]}{I^{[1]}_{n,2}\exp [a(S^{[1]}_{n,2} + I^{[1]}_{n,2})( \alpha _{2}^{[2]}\Delta {t})]+S^{[1]}_{n,2}} \cdot \frac{[S^{[1]}_{n,1} + I^{[1]}_{n,1}]}{I^{[1]}_{n,1}\exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( \alpha _{1}^{[2]}\Delta {t})]+S^{[1]}_{n,1}}. \end{aligned}$$To show that \( \frac{S_{n+1}}{S_n} \le 1\), it is sufficient to show that

$$\begin{aligned} & \left( I^{[1]}_{n,2}\exp [a(S^{[1]}_{n,2}+I^{[1]}_{n,2})( \alpha _{2}^{[2]}\Delta {t})]+S^{[1]}_{n,2}\right) \left( I^{[1]}_{n,1}\exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( \alpha _{1}^{[2]}\Delta {t})]+S^{[1]}_{n,1}\right) \\ & \quad - (S^{[1]}_{n,2} + I^{[1]}_{n,2})(S^{[1]}_{n,1} + I^{[1]}_{n,1}) > 0. \end{aligned} \tag{18}$$

Expanding and simplifying the left-hand side of (18), we get

$$\begin{aligned} & \underbrace{I^{[1]}_{n,2} I^{[1]}_{n,1}\exp [a(S^{[1]}_{n,2} + I^{[1]}_{n,2})( \alpha _{2}^{[2]}\Delta {t})]\exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( \alpha _{1}^{[2]}\Delta {t})] - I^{[1]}_{n,2} I^{[1]}_{n,1}}_{\text {part 1}} \\ & + \underbrace{I^{[1]}_{n,2}S^{[1]}_{n,1}\exp [a(S^{[1]}_{n,2} + I^{[1]}_{n,2})( \alpha _{2}^{[2]}\Delta {t})] - I^{[1]}_{n,2}S^{[1]}_{n,1} + I^{[1]}_{n,1}S^{[1]}_{n,2} \exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( \alpha _{1}^{[2]}\Delta {t})] - I^{[1]}_{n,1}S^{[1]}_{n,2}}_{\text {part 2}}. \end{aligned} \tag{19}$$

Before we show that both parts 1 and 2 are positive, we derive some useful equations and inequalities. The order-1 condition is

$$\begin{aligned} \alpha _{1}^{[2]} + \alpha _{2}^{[2]} = 1. \end{aligned} \tag{20}$$

Because \(\alpha _{1}^{[1]}>0\) and \(\alpha _{2}^{[1]} > 0\), \(R^{[1]}_{n,1} < R^{[1]}_{n,2}\). Therefore, (P1) implies that

$$\begin{aligned} S^{[1]}_{n,1} + I^{[1]}_{n,1} > S^{[1]}_{n,2} + I^{[1]}_{n,2} \ge 0 . \end{aligned} \tag{21}$$

Dividing the S and I terms in (10), we get

$$\begin{aligned} \frac{S^{[1]}_{n,2}}{I^{[1]}_{n,2}} = \frac{S^{[1]}_{n,1}}{I^{[1]}_{n,1}}\exp [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})} - a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(1-\alpha _{2}^{[2]})\Delta {t}]. \end{aligned}$$Hence,

$$\begin{aligned} S^{[1]}_{n,2}I^{[1]}_{n,1} = S^{[1]}_{n,1}I^{[1]}_{n,2}\exp [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})} - a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(1-\alpha _{2}^{[2]})\Delta {t}]. \end{aligned} \tag{22}$$

We now consider the exponential terms in part 1:

$$\begin{aligned}{} & {} \exp [a(S^{[1]}_{n,2} + I^{[1]}_{n,2})( \alpha _{2}^{[2]}\Delta {t})]\exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( \alpha _{1}^{[2]}\Delta {t})] \\ ={} & {} \exp [a\Delta {t}(S^{[1]}_{n,2} + I^{[1]}_{n,2})\alpha _{2}^{[2]} + a\Delta {t} (S^{[1]}_{n,1} + I^{[1]}_{n,1})(1-\alpha _{2}^{[2]})] \\ ={} & {} \exp [a\Delta {t}\alpha _{2}^{[2]} (S^{[1]}_{n,2} + I^{[1]}_{n,2} -(S^{[1]}_{n,1} + I^{[1]}_{n,1}) ) + a\Delta {t}(S^{[1]}_{n,1} + I^{[1]}_{n,1})] > 1, \end{aligned}$$using (21) and \(\alpha _{2}^{[2]} < 0\). Therefore, part 1 is positive. We now consider part 2. First, we note that (21) and \(\alpha _{2}^{[2]} < 0\) imply

$$\begin{aligned} \exp [a(S^{[1]}_{n,2} + I^{[1]}_{n,2})\alpha _{2}^{[2]}\Delta {t}]> \exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})\alpha _{2}^{[2]}\Delta {t}] > 0. \end{aligned} \tag{23}$$

Substituting (20), (22), and (23) into part 2, we get

$$\begin{aligned} \text {part 2}{} & {} > I^{[1]}_{n,2}S^{[1]}_{n,1}\exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( \alpha _{2}^{[2]}\Delta {t})] - I^{[1]}_{n,2}S^{[1]}_{n,1} \\{} & {} \quad + I^{[1]}_{n,1}S^{[1]}_{n,2} \exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( (1-\alpha _{2}^{[2]})\Delta {t})] - I^{[1]}_{n,1}S^{[1]}_{n,2} \\{} & {} = I^{[1]}_{n,2}S^{[1]}_{n,1} \left[ \exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( \alpha _{2}^{[2]}\Delta {t})]-1 \right] \\{} & {} \quad + I^{[1]}_{n,2}S^{[1]}_{n,1}\exp \Bigg [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})} - a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(1-\alpha _{2}^{[2]})\Delta {t}\Bigg ]\\{} & {} \quad \times \left[ \exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( (1-\alpha _{2}^{[2]})\Delta {t})] - 1 \right] \\{} & {} = I^{[1]}_{n,2}S^{[1]}_{n,1} \left\{ \exp \Bigg [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})}\Bigg ] - \exp \Bigg [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})} - a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(1-\alpha _{2}^{[2]})\Delta {t}\Bigg ] \right. \\{} & {} \qquad \left. + \exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( \alpha _{2}^{[2]}\Delta {t})]-1 \right\} \\{} & {} = I^{[1]}_{n,2}S^{[1]}_{n,1} \left\{ \exp \Bigg [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})}\Bigg ] \left( 1 - \exp [ a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(\alpha _{2}^{[2]}-1)\Delta {t}] \right) \right. \\{} & {} \qquad \left. -\left( 1 - \exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( \alpha _{2}^{[2]}\Delta {t})]\right) \right\} \\{} & {} > I^{[1]}_{n,2}S^{[1]}_{n,1} \left\{ \exp [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})}] \left( 1 - \exp [ a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(\alpha _{2}^{[2]}-1)\Delta {t}] \right) \right. \\{} & {} \qquad \left. 
-\left( 1 - \exp [ a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(\alpha _{2}^{[2]}-1)\Delta {t}]\right) \right\} \\{} & {} = I^{[1]}_{n,2}S^{[1]}_{n,1} \left\{ \left( \exp [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})}] - 1\right) \left( 1 - \exp [ a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(\alpha _{2}^{[2]}-1)\Delta {t}]\right) \right\} \\{} & {} > 0, \end{aligned}$$because \(\alpha _{2}^{[2]} < 0\). Now because parts 1 and 2 are both positive, \(S_{n+1} \le S_n\).

-

If \(\beta > 1\), then \(\alpha _{1}^{[1]}, \alpha _{2}^{[2]} > 0\) and \(\alpha _{1}^{[2]}, \alpha _{2}^{[1]} < 0\). Similar to the case where \(\beta < 0\), to show property (P3), it is enough to show that \( \frac{S_{n+1}}{S_n} \le 1\), which is equivalent to showing that (18) holds. Equation (18) can be split into two parts as in (19), and again we show that both parts 1 and 2 are positive. We note that when \(\beta > 1\), (20) and (22) still hold. Furthermore, because \(\alpha _{2}^{[1]} < 0\), \(I^{[1]}_{n,2} > I^{[2]}_{n,1}\). Hence,

$$\begin{aligned} S^{[1]}_{n,2} + I^{[1]}_{n,2} = S^{[2]}_{n,1} + I^{[1]}_{n,2} > S^{[2]}_{n,1} + I^{[2]}_{n,1} = S^{[1]}_{n,1} + I^{[1]}_{n,1} \ge 0. \end{aligned} \tag{24}$$

Now the exponential terms in part 1 can be written as

$$\begin{aligned} \exp [a\Delta {t}\alpha _{2}^{[2]} (S^{[1]}_{n,2} + I^{[1]}_{n,2} -(S^{[1]}_{n,1} + I^{[1]}_{n,1}) ) + a\Delta {t}(S^{[1]}_{n,1} + I^{[1]}_{n,1})] > 1, \end{aligned}$$using (24) and \(\alpha _{2}^{[2]} > 0\). Hence, part 1 is positive. We now consider part 2. Using (20), (22), and (24), we get

$$\begin{aligned} \text {part 2}{} & {} = I^{[1]}_{n,2}S^{[1]}_{n,1} \left\{ \exp [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})}] - \exp [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})} - a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(1-\alpha _{2}^{[2]})\Delta {t}] \right. \\{} & {} \qquad \left. + \exp [a(S^{[1]}_{n,2} + I^{[1]}_{n,2})( \alpha _{2}^{[2]}\Delta {t})]-1 \right\} \\{} & {} > I^{[1]}_{n,2}S^{[1]}_{n,1} \left\{ \exp [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})}] - \exp [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})} - a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(1-\alpha _{2}^{[2]})\Delta {t}] \right. \\{} & {} \qquad \left. + \exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( \alpha _{2}^{[2]}\Delta {t})]-1 \right\} \\{} & {} = I^{[1]}_{n,2}S^{[1]}_{n,1} \left\{ -\exp [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})}]\left( \exp [- a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(1-\alpha _{2}^{[2]})\Delta {t}]-1 \right) \right. \\{} & {} \qquad \left. +\left( \exp [a(S^{[1]}_{n,1} + I^{[1]}_{n,1})( \alpha _{2}^{[2]}\Delta {t})] -1 \right) \right\} \\{} & {} > I^{[1]}_{n,2}S^{[1]}_{n,1} \left\{ -\exp [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})}]\left( \exp [- a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(1-\alpha _{2}^{[2]})\Delta {t}]-1 \right) \right. \\{} & {} \qquad \left. +\left( \exp [- a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(1-\alpha _{2}^{[2]})\Delta {t}]-1 \right) \right\} \\{} & {} = I^{[1]}_{n,2}S^{[1]}_{n,1} \left( \exp [- a(S^{[1]}_{n,1} + I^{[1]}_{n,1})(1-\alpha _{2}^{[2]})\Delta {t}]-1 \right) \left( 1- \exp [\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})}]\right) \\{} & {} > 0, \end{aligned}$$because \(\alpha _{2}^{[2]} > 1\). Having shown parts 1 and 2 are both positive, we have the desired property (P3).
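In both cases, relation (22) can be checked directly from the exact sub-flows. Sub-system [2] conserves \(S^{[2]}+I^{[2]}\) and satisfies

$$\begin{aligned} \frac{\textrm{d}}{\textrm{d}t}\ln \frac{S^{[2]}}{I^{[2]}} = \frac{1}{S^{[2]}}\frac{\textrm{d}S^{[2]}}{\textrm{d}t} - \frac{1}{I^{[2]}}\frac{\textrm{d}I^{[2]}}{\textrm{d}t} = -aI^{[2]} - aS^{[2]} = -a(S^{[2]}+I^{[2]}), \end{aligned}$$

while sub-system [1] leaves \(S\) unchanged and multiplies \(I\) by \(\exp (-b\tau )\) over a sub-step of length \(\tau \). Hence

$$\begin{aligned} \frac{S^{[2]}_{n,1}}{I^{[2]}_{n,1}} &= \frac{S^{[1]}_{n,1}}{I^{[1]}_{n,1}}\exp [-a(S^{[1]}_{n,1} + I^{[1]}_{n,1})\alpha _{1}^{[2]}\Delta {t}], & \frac{S^{[1]}_{n,2}}{I^{[1]}_{n,2}} &= \frac{S^{[2]}_{n,1}}{I^{[2]}_{n,1}}\exp [b\alpha _{2}^{[1]}\Delta {t}]. \end{aligned}$$

Combining the two relations and using (20) to write \(\alpha _{1}^{[2]} = 1-\alpha _{2}^{[2]}\) reproduces the ratio stated before (22), where the term \(\frac{b\Delta {t}}{2(1-\alpha _{2}^{[2]})}\) plays the role of \(b\alpha _{2}^{[1]}\Delta {t}\); that is, the exponent in (22) presumes \(\alpha _{2}^{[1]} = \frac{1}{2(1-\alpha _{2}^{[2]})}\).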

\(\square \)
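The decomposition (19) and the signs of parts 1 and 2 can also be spot-checked numerically. The Python sketch below computes the stage values of a single step from \((S_n, I_n) = (800, 200)\) with the parameters of Section 3.3 and evaluates the left-hand side of (18), part 1, and part 2 for \(\beta = -0.25\) and \(\beta = 1.5\). It uses exact flows for the recovery sub-system (\(I' = -bI\), \(S\) unchanged) and the infection sub-system (\(S' = -aSI\), \(I' = aSI\)), and OS22\(\beta \) coefficients \(\alpha _{1}^{[1]} = 1 - \frac{1}{2(1-\beta )}\), \(\alpha _{1}^{[2]} = 1-\beta \), \(\alpha _{2}^{[1]} = \frac{1}{2(1-\beta )}\), \(\alpha _{2}^{[2]} = \beta \); these coefficient formulas are our assumption, chosen to be consistent with (20) and the exponent in (22). A check at a few points is, of course, not a proof.

```python
import math

A, B = 0.0005, 0.05  # a and b from the parameter set of Section 3.3


def coefficients(beta):
    # Assumed OS22beta coefficients: (alpha_1^[1], alpha_1^[2], alpha_2^[1], alpha_2^[2]).
    a21 = 1.0 / (2.0 * (1.0 - beta))
    return 1.0 - a21, 1.0 - beta, a21, beta


def recovery(s, i, tau):
    # Sub-system [1]: S unchanged, I' = -b I.
    return s, i * math.exp(-B * tau)


def infection(s, i, tau):
    # Sub-system [2]: S' = -a S I, I' = a S I, with S + I conserved.
    n = s + i
    s_new = n * s / (s + i * math.exp(A * n * tau))
    return s_new, n - s_new


def parts(s_n, i_n, dt, beta):
    """Return (lhs of (18), part 1, part 2, S_{n+1} / S_n) for one step."""
    a11, a12, a21, a22 = coefficients(beta)
    s11, i11 = recovery(s_n, i_n, a11 * dt)     # S^[1]_{n,1}, I^[1]_{n,1}
    s21, i21 = infection(s11, i11, a12 * dt)    # S^[2]_{n,1}, I^[2]_{n,1}
    s12, i12 = recovery(s21, i21, a21 * dt)     # S^[1]_{n,2}, I^[1]_{n,2}
    s22, _ = infection(s12, i12, a22 * dt)      # S^[2]_{n,2} = S_{n+1}
    e1 = math.exp(A * (s11 + i11) * a12 * dt)
    e2 = math.exp(A * (s12 + i12) * a22 * dt)
    lhs = (i12 * e2 + s12) * (i11 * e1 + s11) - (s12 + i12) * (s11 + i11)
    part1 = i12 * i11 * e2 * e1 - i12 * i11
    part2 = i12 * s11 * e2 - i12 * s11 + i11 * s12 * e1 - i11 * s12
    return lhs, part1, part2, s22 / s_n
```

Computing `lhs` and `part1 + part2` separately, rather than only their sum, makes it easy to see that the expansion (19) is an identity while the sign claims are the substantive part of the proof.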

Remark 7

We note that when the SIR model is solved using OS22\(\beta \) and the sub-systems (9a) and (9b) are solved exactly, all properties (P1)–(P4) are satisfied for all \(\Delta {t} > 0\). As discussed in [17, 23], if the nonlinear sub-system (9b) is instead solved using a Runge–Kutta method, properties (P2)–(P4) are satisfied only under a time-step restriction, because Runge–Kutta methods generally preserve the positivity and monotonicity of the sub-system only for sufficiently small step sizes.
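To illustrate the step-size restriction mentioned in Remark 7, the following Python sketch compares the exact flow of the nonlinear infection sub-system (\(S' = -aSI\), \(I' = aSI\), our reading of (9b) based on the proofs above) with one forward Euler step, the simplest explicit Runge–Kutta method. Forward Euler is our choice for illustration and is not necessarily the Runge–Kutta method used in [17, 23]. With \(a = 0.0005\) from Section 3.3, the Euler update \(S(1 - \tau aI)\) is non-negative only for \(\tau \le 1/(aI)\), whereas the exact flow keeps \(S\) and \(I\) non-negative for every \(\tau \ge 0\).

```python
import math

A = 0.0005  # infection rate a from Section 3.3


def infection_exact(s, i, tau):
    """Exact flow of S' = -a S I, I' = a S I over time tau (S + I is conserved)."""
    n = s + i
    s_new = n * s / (s + i * math.exp(A * n * tau))
    return s_new, n - s_new


def infection_forward_euler(s, i, tau):
    """One forward Euler step of length tau for the same sub-system."""
    rate = A * s * i
    return s - tau * rate, i + tau * rate


def euler_positivity_bound(i):
    """Largest tau with S - tau * a * S * I >= 0, i.e. tau <= 1 / (a I), for I > 0."""
    return 1.0 / (A * i)
```

For example, from \((S, I) = (800, 200)\) the bound is \(\tau \le 10\); a forward Euler step with \(\tau = 15\) gives \(S = -400\), while the exact flow gives a small positive \(S\).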

3.3 Dynamic linearization of the SIR model

In this section, we show that property (P2) does not hold unconditionally for the SIR model when dynamic linearization is applied, even when all \(\alpha _{k}^{[\ell ]} \ge 0\). Because (P3) and (P4) are usually consequences of (P2), possibly under more restrictive step sizes, we do not discuss step-size restrictions for (P3) and (P4) in this section.

Due to the complexity of the exact solution of (11a) and (11b), we demonstrate the failure of property (P2) for the following set of parameters: \(\{ a = 0.0005, b = 0.05, S_0 = 800, I_0 = 200, R_0 = 0 \}\).

Proposition 3

There exists a step-size \(\Delta {t}^*> 0\) such that (P2) does not hold for \(\Delta {t}\ge \Delta {t}^*\) when the SIR model is solved via dynamic linearization with an s-stage operator-splitting method with \(\alpha _{s}^{[1]} \ge 0\).

Proof

We use the parameter values \(\{ a = 0.0005, b = 0.05, S_0 = 800, I_0 = 200, R_0 = 0 \}\). It is enough to show that there is some \(\Delta {t}^*> 0\) such that not all of \(S_1, I_1, R_1\) are non-negative. We show that when \(\Delta {t}\) is sufficiently large, \(R_1\) is either negative or greater than \(N_0\); because the total population is conserved (P1), the latter implies that one of \(S_1\) and \(I_1\) must be negative.

Solving (11a) exactly over \(\alpha _{s}^{[1]}\Delta {t}\), we get

where \(N_0 = S_0+I_0+R_0\) is the conserved total population.

Regardless of the values of \(S_{0,s-1}^{[2]}\) and \(I_{0,s-1}^{[2]}\), \(R_1 \rightarrow \infty \) or \(R_1 \rightarrow -\infty \) as \(\Delta {t} \rightarrow \infty \).

Hence, for sufficiently large \(\Delta {t}\), \(R_1 > N_0 = 1000\) or \(R_1 < 0\), implying that (P2) fails when \(\Delta {t}\) is sufficiently large. \(\square \)

Remark 8

We note that even when all \(\alpha _{k}^{[\ell ]} \ge 0\), property (P2) can fail because the exact sub-integration of R is no longer non-negative for all \(\Delta {t} > 0\). This is a critical difference between the solution of the SIR model using process-based splitting and the solution using dynamic linearization.

Finally, if \(\alpha _{s}^{[1]} < 0\), a plot of \(R_1\) as a function of \(\Delta {t}\) shows that, depending on the values of \(S_{0,s-1}^{[2]}\) and \(I_{0,s-1}^{[2]}\), \(R_1 < 0\) for some choices of \(\Delta {t} > 0 \).

3.4 Positivity-preservation for the Robertson test problem

Proposition 4

The numerical solution to the Robertson test problem (12) is unconditionally positive for all \(\Delta {t} > 0\) when process-based splitting is used provided that all OS coefficients \(\{\alpha _{k}^{[\ell ]}\}_{k=1,2,\dots ,s}^{\ell = 1,2}\) are non-negative.

Proof

Consider the exact solution (14a) to sub-system (13a). Assume that \(X_{n,k-1}^{[2]}, Y_{n,k-1}^{[2]}, Z_{n,k-1}^{[2]} \ge 0 \). Because \(\alpha _{k}^{[\ell ]} \ge 0\), \(\exp (-(aZ^{[2]}_{n,k-1} + b)\alpha _{k}^{[1]}\Delta {t}) \le 1\) for all \(\Delta {t} > 0\). Now, we consider the following two cases:

-

Case 1: If \(aY^{[2]}_{n,k-1}Z^{[2]}_{n,k-1} - bX^{[2]}_{n,k-1} > 0\), then

$$\begin{aligned} X_{n,k}^{[1]} > \frac{-(aY^{[2]}_{n,k-1}Z^{[2]}_{n,k-1} \!- bX^{[2]}_{n,k-1}) \!+\!aZ^{[2]}_{n,k-1}(X^{[2]}_{n,k-1} \!+\! Y^{[2]}_{n,k-1})}{aZ^{[2]}_{n,k-1} + b} \!=\! X^{[2]}_{n,k-1} \!\ge \! 0, \end{aligned}$$$$\begin{aligned} Y^{[1]}_{n,k} = \frac{\exp (-(aZ^{[2]}_{n,k-1} \!+ b)\alpha _{k}^{[1]}\Delta {t})(aY^{[2]}_{n,k-1}Z^{[2]}_{n,k-1} \!- bX^{[2]}_{n,k-1}) \!+\! b(X^{[2]}_{n,k-1} \!+ Y^{[2]}_{n,k-1})}{aZ^{[2]}_{n,k-1} + b} \ge \! 0, \end{aligned}$$again because both the numerator and denominator are positive.

-

Case 2: If \(aY^{[2]}_{n,k-1}Z^{[2]}_{n,k-1} - bX^{[2]}_{n,k-1} < 0\), then

$$\begin{aligned} X_{n,k}^{[1]} \ge 0, \end{aligned}$$because both the numerator and denominator are positive, and

$$\begin{aligned} Y^{[1]}_{n,k} \ge \frac{(aY^{[2]}_{n,k-1}Z^{[2]}_{n,k-1} - bX^{[2]}_{n,k-1}) + b(X^{[2]}_{n,k-1} + Y^{[2]}_{n,k-1})}{aZ^{[2]}_{n,k-1} + b} = Y^{[2]}_{n,k-1} \ge 0, \end{aligned}$$because \(\exp (-(aZ^{[2]}_{n,k-1} + b)\alpha _{k}^{[1]}\Delta {t}) \le 1\) and \(aY^{[2]}_{n,k-1}Z^{[2]}_{n,k-1} - bX^{[2]}_{n,k-1} < 0\).
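Both cases can be read off from an equivalent form of (14a). Writing \(E = \exp (-(aZ^{[2]}_{n,k-1}+b)\alpha _{k}^{[1]}\Delta {t}) \in (0,1]\) and \(D = aY^{[2]}_{n,k-1}Z^{[2]}_{n,k-1} - bX^{[2]}_{n,k-1}\) (symbols introduced here only for brevity), a direct rearrangement gives

$$\begin{aligned} X^{[1]}_{n,k} = X^{[2]}_{n,k-1} + \frac{(1-E)D}{aZ^{[2]}_{n,k-1}+b}, \qquad Y^{[1]}_{n,k} = Y^{[2]}_{n,k-1} - \frac{(1-E)D}{aZ^{[2]}_{n,k-1}+b}. \end{aligned}$$

Thus, for \(\alpha _{k}^{[1]} \ge 0\), the sub-step transfers mass from \(Y\) to \(X\) when \(D > 0\) and from \(X\) to \(Y\) when \(D < 0\), with \(X + Y\) unchanged. The component that gains mass remains non-negative trivially, and the component that loses mass is given by a quotient in (14a) with a non-negative numerator and a positive denominator.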

If \(aY^{[2]}_{n,k-1}Z^{[2]}_{n,k-1} - bX^{[2]}_{n,k-1} = 0\), then (14a) gives \(X^{[1]}_{n,k} = X^{[2]}_{n,k-1}\) and \(Y^{[1]}_{n,k} = Y^{[2]}_{n,k-1}\), so non-negativity is trivially preserved. In all cases, \(Z^{[1]}_{n,k} = Z^{[2]}_{n,k-1} \ge 0 \). The exact solution (14b) of sub-system (13b) is non-negative if \(X_{n,k}^{[1]}, Y_{n,k}^{[1]}, Z_{n,k}^{[1]} \ge 0 \) and \(\alpha _{k}^{[2]} \ge 0 \). Therefore, \(X_{n+1}, Y_{n+1}, Z_{n+1} \ge 0\) if \(X_{n}, Y_{n}, Z_{n} \ge 0\). Because the initial conditions satisfy \(X_0, Y_0, Z_0 \ge 0\), induction gives \(X_{n}, Y_{n}, Z_{n} \ge 0\) for all \(n = 1,2, \dots \). \(\square \)
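A minimal Python sketch of Proposition 4 in action: it applies process-based OS22\(\beta \) splitting to the Robertson problem using the exact sub-flows (14a) and (14b) in the form written out in the proof of Proposition 5, with the parameter values and initial conditions of Section 4.3. Two assumptions are ours: `eps` is taken to be double-precision machine epsilon, and the OS22\(\beta \) coefficients are \(\alpha _{1}^{[1]} = 1 - \frac{1}{2(1-\beta )}\), \(\alpha _{1}^{[2]} = 1-\beta \), \(\alpha _{2}^{[1]} = \frac{1}{2(1-\beta )}\), \(\alpha _{2}^{[2]} = \beta \). For \(\beta = 1 - \sqrt{2}/2\) all of these are non-negative, so the computed \(X, Y, Z\) should stay non-negative, \(X + Y + Z\) should stay at its initial value up to rounding, and \(Z\) should not decrease, even with the large step \(\Delta {t} = 10\) used for Fig. 5 (here over a short interval only).

```python
import math
import sys

# Parameter values and initial data of Section 4.3; eps assumed to be machine epsilon.
A, B, C = 1e4, 0.04, 3e7
EPS = sys.float_info.epsilon
X0, Y0, Z0 = 1.0 - 2.0 * EPS, EPS, EPS


def coefficients(beta):
    # Assumed OS22beta coefficients: (alpha_1^[1], alpha_1^[2], alpha_2^[1], alpha_2^[2]).
    a21 = 1.0 / (2.0 * (1.0 - beta))
    return 1.0 - a21, 1.0 - beta, a21, beta


def flow_13a(x, y, z, tau):
    """Exact solution (14a): X' = -bX + aYZ, Y' = bX - aYZ with Z frozen."""
    e = math.exp(-(A * z + B) * tau)
    d = A * y * z - B * x
    den = A * z + B
    return (-e * d + A * z * (x + y)) / den, (e * d + B * (x + y)) / den, z


def flow_13b(x, y, z, tau):
    """Exact solution (14b): Y' = -cY^2, Z' = cY^2 with X frozen."""
    y_new = y / (C * y * tau + 1.0)
    return x, y_new, y + z - y_new


def os22beta_step(state, dt, beta):
    a11, a12, a21, a22 = coefficients(beta)
    state = flow_13a(*state, a11 * dt)
    state = flow_13b(*state, a12 * dt)
    state = flow_13a(*state, a21 * dt)
    return flow_13b(*state, a22 * dt)


def simulate(beta, dt, n_steps):
    traj = [(X0, Y0, Z0)]
    for _ in range(n_steps):
        traj.append(os22beta_step(traj[-1], dt, beta))
    return traj
```

Because every sub-step length is non-negative here, each exponential in `flow_13a` lies in \((0, 1]\) and each denominator in `flow_13b` is at least 1, which mirrors the two ingredients of the proof.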

We now focus our attention on OS22\(\beta \) and study the effect of backward integration on the Robertson test problem. We use the OS22\(\beta \) method for the analysis because, depending on \(\beta \), it involves backward integration in one or both of the sub-systems. The results can be generalized to other operator-splitting methods using similar arguments.

Proposition 5

We solve the Robertson problem (12) using OS22\(\beta \) with (13a) and (13b) integrated exactly. If \(\alpha _{k}^{[\ell ]} < 0\) for some k and \(\ell \), then one of \(X_1, Y_1, Z_1\) is negative for \(\Delta {t}\) sufficiently large.

Proof

We note that for \(\beta \in [0,0.5]\), all coefficients of OS22\(\beta \) are non-negative. Therefore, we only need to discuss the following three cases: \( \beta \in (0.5, 1)\), \(\beta \in (1,\infty )\), and \(\beta \in (-\infty , 0)\).

-

\( \beta \in (0.5, 1)\): For \(\beta \in (0.5,1)\), \(\alpha _{1}^{[1]} <0\) and \(\alpha _{1}^{[2]}, \alpha _{2}^{[1]}, \alpha _{2}^{[2]} \ge 0 \). We show that \(X_1 < 0\) for \(\Delta {t}\) sufficiently large, treating the cases \(aY_0Z_0 - bX_0 < 0\) and \(aY_0Z_0 - bX_0 > 0\) separately.

-

If \(aY_0Z_0 - bX_0 < 0\), after integrating the first operator (13a) over \(\alpha _{1}^{[1]} \Delta {t}\),

$$\begin{aligned} \left\{ \begin{aligned} X^{[1]}_{0,1}&= \frac{-\exp (-(aZ_0 + b)\alpha _{1}^{[1]}\Delta {t})(aY_0Z_0 - bX_0) +aZ_0(X_0 + Y_0)}{aZ_0 + b}, \\ Y^{[1]}_{0,1}&= \frac{\exp (-(aZ_0 + b)\alpha _{1}^{[1]}\Delta {t})(aY_0Z_0 - bX_0) +b(X_0 + Y_0)}{aZ_0 + b},\\ Z^{[1]}_{0,1}&= Z_0. \end{aligned} \right. \end{aligned} \tag{25}$$

We note that \(Y^{[1]}_{0,1} \rightarrow -\infty \) as \(\Delta {t}\rightarrow \infty \) because \(aY_0Z_0 - bX_0 < 0\), \(\alpha _{1}^{[1]} < 0\), and all initial conditions \(X_0, Y_0, Z_0 \ge 0\). Hence, we can choose \(\Delta {t}\) large enough such that \(Y^{[1]}_{0,1}< 0\) and \(a(Y^{[1]}_{0,1} + Z^{[1]}_{0,1}) + b < 0\). After integrating the second operator (13b) over \(\alpha _{1}^{[2]} \Delta {t}\),

$$\begin{aligned} \left\{ \begin{aligned} X^{[2]}_{0,1}&= X^{[1]}_{0,1}, \\ Y^{[2]}_{0,1}&= \frac{Y^{[1]}_{0,1} }{cY^{[1]}_{0,1} \alpha _{1}^{[2]}\Delta {t} + 1}, \\ Z^{[2]}_{0,1}&= \frac{cY^{[1]}_{0,1}(Y^{[1]}_{0,1}+ Z^{[1]}_{0,1}) \alpha _{1}^{[2]}\Delta {t} + Z^{[1]}_{0,1}}{cY^{[1]}_{0,1} \alpha _{1}^{[2]}\Delta {t} + 1} = -\frac{Y^{[1]}_{0,1} }{cY^{[1]}_{0,1} \alpha _{1}^{[2]}\Delta {t} + 1} + Y^{[1]}_{0,1} + Z^{[1]}_{0,1}. \end{aligned} \right. \end{aligned} \tag{26}$$

We note that \( X^{[2]}_{0,1}>0\) and, for \(\Delta {t}\) sufficiently large, \(Y^{[2]}_{0,1} > 0\) and \(Z^{[2]}_{0,1} < 0\). Moreover, as \(\Delta {t} \rightarrow \infty \), \(Y^{[2]}_{0,1} \rightarrow 0\) and \( aZ^{[2]}_{0,1}+b = a(-\frac{Y^{[1]}_{0,1} }{cY^{[1]}_{0,1} \alpha _{1}^{[2]}\Delta {t} + 1} ) + a(Y^{[1]}_{0,1} + Z^{[1]}_{0,1})+b < 0\). After integrating the first operator (13a) over \(\alpha _{2}^{[1]} \Delta {t}\),

$$\begin{aligned} X^{[1]}_{0,2} = \frac{-\exp (-(aZ^{[2]}_{0,1} + b)\alpha _{2}^{[1]}\Delta {t})(aY^{[2]}_{0,1} Z^{[2]}_{0,1} - bX^{[2]}_{0,1}) +aZ^{[2]}_{0,1}(X^{[2]}_{0,1} + Y^{[2]}_{0,1})}{aZ^{[2]}_{0,1} + b} . \end{aligned} \tag{27}$$

We note that \((aY^{[2]}_{0,1} Z^{[2]}_{0,1} - bX^{[2]}_{0,1}) < 0\) and, because \(aZ^{[2]}_{0,1} + b < 0\) and \(\alpha _{2}^{[1]} > 0\), \(\exp (-(aZ^{[2]}_{0,1} + b)\alpha _{2}^{[1]}\Delta {t})\rightarrow \infty \) as \(\Delta {t} \rightarrow \infty \). Hence, the numerator of \(X^{[1]}_{0,2}\) is positive for \(\Delta {t}\) sufficiently large. Because the denominator of \(X^{[1]}_{0,2}\) is negative, \(X^{[1]}_{0,2}<0\). Therefore, \(X_1 = X^{[2]}_{0,2} = X^{[1]}_{0,2} < 0\).

-

If \(aY_0Z_0 - bX_0 > 0\), then, because \(\alpha _{1}^{[1]} < 0\), \(\exp (-(aZ_0 + b)\alpha _{1}^{[1]}\Delta {t}) \rightarrow \infty \) as \(\Delta {t}\rightarrow \infty \). From the expressions in (25), it follows that \(X^{[1]}_{0,1} < 0\) and \(Y^{[1]}_{0,1}, Z^{[1]}_{0,1} > 0\) for \(\Delta {t}\) sufficiently large. From the expressions (26) for integrating (13b) over \(\alpha _{1}^{[2]}\Delta {t}\), in this case \(X^{[2]}_{0,1} < 0\) and \(Y^{[2]}_{0,1}, Z^{[2]}_{0,1} > 0\). Moreover, \(Y^{[2]}_{0,1} \rightarrow 0 \) as \(\Delta {t} \rightarrow \infty \). Therefore, we can choose \(\Delta {t}\) sufficiently large such that \(X^{[2]}_{0,1}+Y^{[2]}_{0,1}<0\). Now consider (27), the expression for \(X_{0,2}^{[1]}\) after integrating (13a) over \(\alpha _{2}^{[1]}\Delta {t}\). Because \(Z_{0,1}^{[2]} > 0\) and \(\alpha _{2}^{[1]} > 0\), \(\exp (-(aZ^{[2]}_{0,1} + b)\alpha _{2}^{[1]}\Delta {t}) \rightarrow 0\) as \(\Delta {t}\rightarrow \infty \). Because \(X^{[2]}_{0,1}+Y^{[2]}_{0,1}<0\), \(X_{0,2}^{[1]} < 0\) for \(\Delta {t}\) sufficiently large. Therefore, \(X_1 = X^{[2]}_{0,2} = X^{[1]}_{0,2} < 0\).


-

\(\beta \in (1,\infty )\): For \(\beta \in (1,\infty )\), \(\alpha _{1}^{[1]}, \alpha _{2}^{[2]} \ge 0 \) and \(\alpha _{1}^{[2]}, \alpha _{2}^{[1]} < 0\). After integrating the first operator (13a) over \(\alpha _{1}^{[1]} \Delta {t}\), the resulting intermediate values \(X_{0,1}^{[1]}, Y_{0,1}^{[1]},\) and \(Z_{0,1}^{[1]}\) are non-negative for any \(\Delta {t} > 0\). After integrating the second operator (13b) over the negative time-step \(\alpha _{1}^{[2]}\Delta {t}\), \(X_{0,1}^{[2]}\) and \(Z_{0,1}^{[2]}\) are non-negative for all \(\Delta {t} > 0\), and \(Y_{0,1}^{[2]} < 0\) for \(\Delta {t}\) sufficiently large. Therefore, \(aY_{0,1}^{[2]} Z_{0,1}^{[2]} - b X_{0,1}^{[2]} < 0\). Because \(\alpha _{2}^{[1]} < 0\), \(\exp (-(aZ_{0,1}^{[2]} + b)\alpha _{2}^{[1]}\Delta {t}) \rightarrow \infty \) as \(\Delta {t} \rightarrow \infty \). Therefore, \(X_{0,2}^{[1]} \rightarrow \infty \), \(Y_{0,2}^{[1]} \rightarrow -\infty \), and \(Z_{0,2}^{[1]} > 0\) as \(\Delta {t} \rightarrow \infty \). Moreover, because \(X_{0,2}^{[1]} + Y_{0,2}^{[1]} + Z_{0,2}^{[1]}\) remains constant while \(X_{0,2}^{[1]} \rightarrow \infty \), \( Y_{0,2}^{[1]} + Z_{0,2}^{[1]} < 0\) for \(\Delta {t}\) sufficiently large. Now consider \(Z_1 = Z_{0,2}^{[2]}\), obtained by integrating (13b) over \(\alpha _{2}^{[2]}\Delta {t}\) (cf. (26)). Because \(\alpha _{2}^{[2]} > 0\), \(Y_{0,2}^{[1]} < 0\), \(Z_{0,2}^{[1]} > 0\), and \( Y_{0,2}^{[1]} + Z_{0,2}^{[1]} < 0\), the numerator of \(Z_1\) is positive and the denominator of \(Z_1\) is negative for \(\Delta {t}\) sufficiently large. Hence, \(Z_1 < 0\).

-

\(\beta \in (-\infty , 0)\): For \(\beta \in (-\infty , 0)\), \(\alpha _{1}^{[1]}, \alpha _{1}^{[2]}, \alpha _{2}^{[1]} \ge 0 \) and \(\alpha _{2}^{[2]} < 0\). As shown in the proof of Proposition 4, because the initial conditions \(X_0, Y_0, Z_0 \ge 0\), the intermediate values \(X_{0,2}^{[1]}, Y_{0,2}^{[1]},\) and \(Z_{0,2}^{[1]}\) after integrating the first operator (13a) over \(\alpha _{2}^{[1]}\Delta {t}\) are all non-negative for any \(\Delta {t} > 0 \). Because \(\alpha _{2}^{[2]} < 0\), the exact solution (14b) of the second operator indicates that, provided \(Y_{0,2}^{[1]} > 0\), \(Y_1 = Y_{0,2}^{[2]} < 0\) for \(\Delta {t}\) sufficiently large, since its denominator \(cY_{0,2}^{[1]}\alpha _{2}^{[2]}\Delta {t} + 1\) becomes negative.

\(\square \)
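Two of the cases above can be reproduced with a short Python sketch that takes a single OS22\(\beta \) step for the Robertson problem from the initial data of Section 4.3, for which \(aY_0Z_0 - bX_0 < 0\). As in the other sketches, the coefficient formulas \(\alpha _{1}^{[1]} = 1 - \frac{1}{2(1-\beta )}\), \(\alpha _{1}^{[2]} = 1-\beta \), \(\alpha _{2}^{[1]} = \frac{1}{2(1-\beta )}\), \(\alpha _{2}^{[2]} = \beta \) and the reading of `eps` as machine epsilon are our assumptions. With \(\beta = 0.7\) (so \(\alpha _{1}^{[1]} < 0\)) the sketch gives \(X_1 < 0\), and with \(\beta = -0.2\) (so \(\alpha _{2}^{[2]} < 0\)) it gives \(Y_1 < 0\), already for \(\Delta {t} = 1\); we make no claim about the exact thresholds reported in Table 6.

```python
import math
import sys

# Robertson parameters and initial data of Section 4.3 (eps assumed = machine epsilon).
A, B, C = 1e4, 0.04, 3e7
EPS = sys.float_info.epsilon
INITIAL = (1.0 - 2.0 * EPS, EPS, EPS)


def coefficients(beta):
    # Assumed OS22beta coefficients: (alpha_1^[1], alpha_1^[2], alpha_2^[1], alpha_2^[2]).
    a21 = 1.0 / (2.0 * (1.0 - beta))
    return 1.0 - a21, 1.0 - beta, a21, beta


def flow_13a(x, y, z, tau):
    # Exact solution (14a); tau < 0 means backward integration.
    e = math.exp(-(A * z + B) * tau)
    d = A * y * z - B * x
    den = A * z + B
    return (-e * d + A * z * (x + y)) / den, (e * d + B * (x + y)) / den, z


def flow_13b(x, y, z, tau):
    # Exact solution (14b); for tau < 0 the denominator can change sign.
    y_new = y / (C * y * tau + 1.0)
    return x, y_new, y + z - y_new


def first_step(beta, dt):
    """Return (X_1, Y_1, Z_1) after one OS22beta step with exact sub-flows."""
    a11, a12, a21, a22 = coefficients(beta)
    state = flow_13a(*INITIAL, a11 * dt)
    state = flow_13b(*state, a12 * dt)
    state = flow_13a(*state, a21 * dt)
    return flow_13b(*state, a22 * dt)
```

With backward sub-steps, intermediate values in this sketch grow by many orders of magnitude within a single step, which is the blow-up behavior described in Remark 9.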

Remark 9

We note that although the Robertson test problem has exact solutions (14a) and (14b) for each of the sub-systems, these exact solutions are not always positive when \(\alpha _{k}^{[\ell ]} <0\). In fact, they can blow up when \(\alpha _{k}^{[\ell ]} <0\) and \(\Delta {t}\) is large. This instability of the exact solutions under backward integration is a key difference between the Robertson test problem and the SIR model. Moreover, although only the positivity of the variables at the end of each time step \(t_n\) matters, it is beneficial to keep the intermediate variables \(X_{n,k}^{[\ell ]}, Y_{n,k}^{[\ell ]}, Z_{n,k}^{[\ell ]}\) positive because this reduces the risk of blow-up in the next sub-step.

Remark 10

Assume that each sub-system (15) of a process-based splitting strategy of a PDS (1) has a positive exact solution. Then, the numerical results obtained using the Godunov or Strang splitting method with \(N(N-1)/2\) operators and exact sub-integration are unconditionally positive because the exact solution of each sub-system (15) is unconditionally positive. However, if we use a generalized Yoshida method (7), positivity is not guaranteed because the exact solution can be negative when integrated backward in time, and this negativity might not be compensated by the subsequent forward integration.

4 Numerical experiments

In this section, we give the results of some numerical experiments to support the theoretical results reported in the previous sections.

We also performed experiments with the SIR model using the modified Patankar–Runge–Kutta method MPRK22 from [24] as well as the splitting method ES2 from [12] for differential equations that are not split additively. True to the theory, both methods produced results that satisfy (P1)–(P2). However, their accuracy appeared to be significantly worse than that of solving the SIR model using Strang splitting ((OS22\(\beta \)-process-based) with \(\beta =1/2\)) for a given time step.

4.1 Process-based splitting

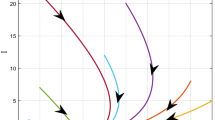

The SIR model is solved using (OS22\(\beta \)-process-based). The splitting methods examined are Strang, OS22\(\beta (1 - \sqrt{2}/2)\) (“Best22”), R3, Y4, OS22\(\beta (-0.25)\), OS22\(\beta \)(0.75), and OS22\(\beta \)(1.5). Figures 3 and 4 display the numerical results for \(\Delta {t}= 15\). In agreement with the theory, properties (P1)–(P4) all hold. Of note, the presence of positive coefficients in the OS methods is neither necessary nor sufficient for qualitative property preservation. We further note that the numerical results for larger \(\Delta {t}\) are similar to those for \(\Delta {t} = 15\), consistent with the results proved in Section 3.2.

4.2 Dynamic linearization

The SIR model is now solved using dynamic linearization. The splitting methods examined are again Strang, OS22\(\beta (1 - \sqrt{2}/2)\), R3, Y4, and OS22\(\beta (-0.25)\). Table 5 summarizes, for each method, the smallest step size at which each property fails. In agreement with the theoretical results, property (P1) holds for all step sizes, and for each of (P2)–(P4) there is a step size \(\Delta {t}\) beyond which that property does not hold.

We note that both Strang and OS22\(\beta (1-\sqrt{2}/2)\) have only positive coefficients. Nonetheless, properties (P2)–(P4) fail for \(\Delta {t}\) sufficiently large. That is, the absence of negative coefficients alone is not sufficient to guarantee qualitative property preservation.

Meanwhile, both R3 and Y4 have negative coefficients in both operators, and OS22\(\beta (-0.25)\) has a negative coefficient only in the second operator. Again, properties (P2)–(P4) fail for sufficiently large \(\Delta {t}\), in agreement with the theory. For this model, however, the presence of negative coefficients appears to cause qualitative property preservation to break down sooner, i.e., for smaller \(\Delta {t}\), than when negative coefficients are absent.

OS22\(\beta (1-\sqrt{2}/2)\) is the method with the smallest splitting error among all OS22\(\beta \) methods. We see from Table 5 that it can take a step size almost 50\(\%\) larger than that of Strang before any of the properties (P2)–(P4) cease to hold.

Finally, we note from the results of Strang, OS22\(\beta (1-\sqrt{2}/2)\), and OS22\(\beta (-0.25)\) that properties (P3) and (P4) do not simply follow from (P2): any of the three properties may be the first to fail.

4.3 Robertson test problem

In this section, we solve the Robertson test problem (12) using process-based splitting. The operator-splitting methods examined here are OS22\(\beta \)(\(-\)0.2), OS22\(\beta \)(0.7), and OS22\(\beta \)(1.2). The sub-systems (13a) and (13b) are solved exactly. For the numerical experiments, we use the parameter values \(a=1\text {e}4\), \(b=0.04\), and \(c=3\text {e}7\) with initial conditions \(X(0) = X_0 = 1-2\texttt{eps}\), \(Y(0) = Y_0 = \texttt{eps}\), \(Z(0) = Z_0 = \texttt{eps}\). In Table 6, we present the step size \(\Delta {t}\) at which one of the three variables \(X_1, Y_1, Z_1\) fails to be positive. As proved in Section 3.4, when one of the \(\alpha _{k}^{[\ell ]}\) is negative, (Robertson P2) fails for \(\Delta {t}\) sufficiently large.

Remark 11

We note that Table 6 indicates that when \(\Delta {t}\) is sufficiently large, at least one of \(X_1\), \(Y_1\), or \(Z_1\) is negative. However, step sizes that preserve positivity of \(X_1, Y_1, Z_1\) do not guarantee positivity of \(X_n, Y_n, Z_n\) for all \(n >1\). In the case of OS22\(\beta (0.7)\), both step sizes \(\Delta {t} = 0.12\) and \(\Delta {t} = 0.13\) fail to produce a positive solution to the Robertson problem over the full interval \([0, 10^{10}]\).

On the other hand, when process-based splitting is employed and all operator-splitting coefficients are positive, positivity of the variables \(X_n, Y_n, Z_n\) for \(n \ge 1\) is satisfied unconditionally, as proved in Proposition 4. Figure 5 presents the numerical solution of the Robertson problem solved using process-based splitting with OS22\(\beta (1 - \sqrt{2}/2)\) for \(\Delta {t} = 10\). Positivity of all three variables and conservation of the sum of X, Y, and Z are satisfied as expected. We note that the numerical results for larger \(\Delta {t}\) are similar to those for \(\Delta {t} = 10\). Although operator-splitting methods with positive coefficients are unconditionally positive for the Robertson test problem, they are less accurate than the MPRK22 method for a given step size on this problem.

Remark 12

Furthermore, the differential equations of the Robertson problem imply that Z should be monotonically increasing. When using process-based splitting and an operator-splitting method with positive coefficients, the monotonicity of Z is satisfied unconditionally for the same reason that R in the SIR model is monotonically increasing, as presented in Theorem 2. When using an operator-splitting method with negative coefficients, this property fails for \(\Delta {t}\) sufficiently large, as shown in Fig. 6.

5 Summary and conclusions

Mathematical modelling is omnipresent in modern daily life. These models are typically large and complex, and their solutions must be approximated by numerical methods. Often, the problems are posed as differential equations that are so large that they must be split into pieces that are solved separately. Furthermore, the numerical solutions may be required to satisfy certain qualitative properties in order to be physically meaningful.

The SIR model is a basic model of infectious disease spread that can be used to illustrate how qualitative properties, such as positivity, monotonicity, or conservation of total population, are affected by the choice of splitting strategy, even when the sub-systems are integrated exactly. Accordingly, an analysis such as this can inform which splitting strategies are most amenable to qualitative property preservation.

We have demonstrated that a process-based splitting, which for the SIR model also happens to correspond to a splitting based on linear/nonlinear terms, unconditionally preserves positivity, monotonicity, and total population. This result has some applicability to understanding qualitative property preservation of the more general class of production-destruction systems. For PDSs, total population and positivity are unconditionally preserved under process-based splitting.

On the other hand, the popular and powerful dynamic linearization method is only conditionally stable; i.e., there is a step size beyond which at least one of the qualitative properties (P1)–(P4) ceases to hold. In practice, these step sizes may be so large as to yield inaccurate solutions, in which case smaller step sizes would be required anyway, and the conditional nature of qualitative property preservation may largely be irrelevant. As usual, the impact of the presence or absence of restrictions due to stability depends on the goals of the simulation.

Comparing the two splitting strategies applied to the SIR model, we conclude that the process-based splitting is preferred over dynamic linearization because the exact solutions of sub-systems of the process-based splitting are unconditionally positive and conservative for all \(\Delta {t} > 0\). By comparing the results of the SIR model and the Robertson test problem, we conclude that when choosing a particular operator-splitting method, if the exact solution to the sub-systems preserves the desired qualitative properties for \(\Delta {t} < 0\), then it is safe to use operator-splitting methods involving backward integration. Otherwise, one should expect a step-size restriction to preserve positivity when using operator-splitting methods with negative coefficients.

Availability of data and materials

The data that support the findings of this study are available from the corresponding author, S.W., upon reasonable request.

References

Hundsdorfer, W., Verwer, J.G.: Numerical solution of time-dependent advection-diffusion-reaction equations, vol. 33. Springer, Berlin (2003)

Lukassen, A.A., Kiehl, M.: Operator splitting for chemical reaction systems with fast chemistry. J. Comput. Appl. Math. 344, 495–511 (2018)

Kermack, W.O., McKendrick, A.G.: A contribution to the mathematical theory of epidemics. Proc. R. Soc. Lond. A 115, 700–721 (1927)

Robertson, H.: The solution of a set of reaction rate equations. In: Numerical Analysis: An Introduction, pp. 178–182 (1966)

Hairer, E., Norsett, S.P., Wanner, G.: Solving ordinary differential equations II: stiff and differential-algebraic problems. Springer, New York (1993). https://books.google.ca/books?id=m7c8nNLPwaIC

Marchuk, G.I.: On the theory of the splitting-up method. In: Numerical Solution of Partial Differential Equations-II, pp. 469–500. Academic Press, Maryland (1971)

Yanenko, N.N.: The Method of Fractional Steps. The Solution of Problems of Mathematical Physics in Several Variables. Translated from the Russian by T. Cheron; English translation edited by M. Holt, p. 160. Springer, New York (1971)

Glowinski, R., Osher, S.J., Yin, W. (eds.): Splitting methods in communication, imaging, science, and engineering. Scientific Computation, p. 820. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-41589-5

Shampine, L.F.: Conservation laws and the numerical solution of ODEs. Comput. Math. Appl. 12(5–6), 1287–1296 (1986)

Hairer, E., Wanner, G., Lubich, C.: Geometric numerical integration: structure preserving algorithms for ordinary differential equations, vol. 31. Springer, Berlin (2006)

Spijker, M.N.: Stepsize conditions for general monotonicity in numerical initial value problems. SIAM J. Numer. Anal. 45(3), 1226–1245 (2007). https://doi.org/10.1137/060661739

Blanes, S., Iserles, A., Macnamara, S.: Positivity-preserving methods for ordinary differential equations. ESAIM: Math. Model. Numer. Anal. 56(6), 1843–1870 (2022). https://doi.org/10.1051/m2an/2022042

Magnus, W.: On the exponential solution of differential equations for a linear operator. Commun. Pure Appl. Math. 7, 649–673 (1954). https://doi.org/10.1002/cpa.3160070404

Kopecz, S., Meister, A.: Unconditionally positive and conservative third order modified Patankar-Runge-Kutta discretizations of production-destruction systems. BIT Numer. Math. 58(3), 691–728 (2018). https://doi.org/10.1007/s10543-018-0705-1

Izgin, T., Kopecz, S., Meister, A.: On the stability of unconditionally positive and linear invariants preserving time integration schemes. SIAM J. Numer. Anal. 60(6), 3029–3051 (2022). https://doi.org/10.1137/22M1480318

Boris, J.P., et al.: Relativistic plasma simulation-optimization of a hybrid code. In: Proceedings: Fourth Conference on Numerical Simulation of Plasmas, pp. 3–67 (1970)

Wei, S., Spiteri, R.J.: Qualitative property preservation of high-order operator splitting for the SIR model. Appl. Numer. Math. 172, 332–350 (2022)

Bernier, J., Casas, F., Crouseilles, N.: Splitting methods for rotations: application to Vlasov equations. SIAM J. Sci. Comput. 42(2), 666–697 (2020). https://doi.org/10.1137/19M1273918

Goldman, G., Kaper, T.J.: Nth-order operator splitting schemes and nonreversible systems. SIAM J. Numer. Anal. 33(1), 349–367 (1996)

Auzinger, W., Hofstätter, H., Ketcheson, D., Koch, O.: Practical splitting methods for the adaptive integration of nonlinear evolution equations. Part I: Construction of optimized schemes and pairs of schemes. BIT Numer. Math. 57(1), 55–74 (2017)

MacNamara, S., Strang, G.: Operator splitting. In: Splitting Methods in Communication, Imaging, Science, and Engineering. Sci. Comput., pp. 95–114. Springer, Cham (2016)

Spiteri, R.J., Tavassoli, A., Wei, S., Smolyakov, A.: Practical 3-splitting beyond Strang (2023)

Csomós, P., Takács, B.: Operator splitting for space-dependent epidemic model. Appl. Numer. Math. 159, 259–280 (2021)

Burchard, H., Deleersnijder, E., Meister, A.: A high-order conservative Patankar-type discretisation for stiff systems of production-destruction equations. Appl. Numer. Math. 47(1), 1–30 (2003)

Funding

This work was supported by the Natural Sciences and Engineering Research Council of Canada through its Discovery Grant program.

Ethics declarations

Ethics approval and consent to participate

Not applicable

Consent for publication

Not applicable

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wei, S., Spiteri, R.J. The effect of splitting strategy on qualitative property preservation. Numer Algor 96, 1391–1421 (2024). https://doi.org/10.1007/s11075-023-01730-7
