Abstract

In recent decades, Volterra series theory has been used to construct reduced-order models of nonlinear systems in engineering and applied sciences. For the particular case of weakly nonlinear aerodynamic and aeroelastic systems, Volterra series theory has been tested as an alternative to the high computing costs of CFD methods. Determining a Volterra series model depends on identifying the kernels associated with the respective convolution integrals. Volterra kernel identification has been attempted in many ways, but most methods address only the direct kernels of single-input, single-output nonlinear systems. However, multiple-input, multiple-output relations are the typical case for many dynamic systems. In this case, the so-called Volterra cross-kernels represent the internal couplings between multiple inputs. Few generalizations of the single-input kernel identification methods to multi-input Volterra kernels are available in the literature. This work proposes a methodology for the identification of Volterra direct kernels and cross-kernels, which is based on time-delay neural networks and the relationship between the kernel functions and the internal parameters of the network. Expressions for the pth-order Volterra direct kernels and cross-kernels in terms of the internal parameters of a trained time-delay neural network are derived. The method is checked with a two-degree-of-freedom, two-input, one-output nonlinear system to demonstrate its capabilities. The application to a mildly nonlinear unsteady aerodynamic loading due to pitching and heaving motions of an airfoil is also evaluated. The Volterra direct kernels and cross-kernels of up to third order are successfully identified using training datasets computed with CFD simulations of the Euler equations. Comparisons between CFD simulations and Volterra model predictions are presented, demonstrating the potential of the method to systematically extract kernels from neural networks.

1 Introduction

The functional series of Volterra is a powerful representation for nonlinear continuous, causal, time-invariant, fading memory systems [1]. Volterra series comprises an infinite expansion of multi-dimensional convolution integrals of increasing order. This approach has been used in a vast range of engineering and applied sciences problems [2,3,4,5].

Survey papers, from Billings [6] to the most recent by Cheng et al. [7], present the progress on Volterra functional-based identification and its applications in engineering. The Wiener series [8,9,10] attains modeling accuracy similar to that of the Volterra-type series and is solved using an expansion into a series of mutually orthonormal polynomial functionals, the so-called G-functionals. Although the Wiener models provide a systematic approach to identification problems, the excessive number of required coefficients, even for lower-order nonlinear systems, undermines the applicability of the method. Nonlinear system identification methods have also been built on block-oriented models [11, 12], which use combinations of linear and nonlinear static subsystems. The LNL cascade systems [13] consist of a linear subsystem followed by a static nonlinearity and another linear subsystem, which allows nonlinear identification from Wiener and Hammerstein cascade models [14]. The block-oriented approaches were developed solely for random processes, in particular for white Gaussian inputs, to systematically assess the parameters of the identified models for the associated class of nonlinear systems. The Adomian decomposition method has also been used as an alternative to the Volterra series [15].

A common drawback of the aforementioned methodologies is the excessive number of terms necessary to identify a nonlinear dynamic behavior. In turn, system identification based on the Volterra theory requires the calculation of the kernel functions. Again, most of the proposed kernel identification methods suffer from a large number of expansion terms [7]. Cheng et al. [7] present a comprehensive review of kernel identification methods in both the time and frequency domains, in which the investigations mostly concern nonlinear SISO dynamics. Marmarelis and Naka [16] were the first to address multi-input Volterra models. Since then, relatively little work on multi-variable Volterra theory has appeared. Applying the Volterra theory to multi-variable systems requires the calculation of the so-called cross-kernels, which identify the internal nonlinear couplings of the system [17].

Generalizations of the existing direct-kernel identification methods for nonlinear SISO systems have been proposed. Among the methods used for cross-kernel assessment, Worden et al. [18] expanded the harmonic probing algorithm, and Chatterjee and Vyas [19] developed harmonic functions for the analysis and identification of higher-order Volterra direct kernels and cross-kernels. In both cases, a large number of terms are involved in the kernel identification.

Alternative ways to overcome the cumbersome development of expansion terms of Volterra direct kernels and cross-kernels have been tried. The application of artificial neural network theory is an example. Neural networks to identify Volterra kernels were proposed by Wray and Green [20]. They proved that particular network architectures are equivalent to a Volterra series representation of a dynamic system. Moreover, the kernels of any order can be extracted from the network parameters. Following similar developments, recent attempts to extract Volterra kernels from neural networks were made in the frequency domain [21, 22] and with Taylor series expansions of a conventional feedforward architecture [23]. Treating the system as a black-box model, training it with supervised schemes, and then extracting the kernels from the network parameters is an appealing, systematic way to obtain a Volterra model.

Volterra theory has been successfully used to assess reduced-order models (ROM) in the field of unsteady aerodynamics and aeroelasticity [3]. Silva was the first to present a comprehensive application of Volterra series to unsteady aerodynamic ROM [24, 25]. The approach was also tried by other researchers [26,27,28], where direct kernels were identified using numerical solutions of fluid mechanics equations for mildly nonlinear transonic aerodynamics. Cross-kernels identification of multi-variable unsteady aerodynamics was only tackled more recently [29, 30] with multi-impulse inputs and sparse Volterra series. In de Paula et al. [31], the direct-kernel identification using time-delay neural networks was performed for an aerodynamic system. The neural network was based on the proposed kernel extraction method by Wray and Green [20].

This paper addresses the identification of Volterra direct kernels and cross-kernels from time-delay neural networks by generalizing the Wray and Green [20] approach and presents an application to the unsteady aerodynamic loads prediction. To the authors’ best knowledge, the identification of multi-variable Volterra cross-kernels via artificial neural networks has not been investigated in the literature. The method considers a polynomial expansion of the neuron activation function. The neural network training follows conventional techniques, and the final converged network furnishes the internal parameters used for direct kernels and cross-kernels determination. To illustrate the method, a two-degree-of-freedom, second-order nonlinear system is used, and the counterpart Volterra-type model is analyzed. For the unsteady aerodynamics problem, the compressible flow around an airfoil is computed with an Euler CFD code. The unsteady pitching moment predicted with the Volterra model admits simultaneous pitch and heave motions and relies upon higher-order direct kernels and cross-kernels assessment.

2 Volterra kernels identification

2.1 Volterra series

Volterra series [1] are nonlinear functionals developed as a generalization of the Taylor series expansion for a function. The basic premise of the Volterra functional series approach is that a representation for a continuous, nonlinear, physically realizable (or causal), time-invariant, single-input, single-output (SISO) system is given by an infinite series of multi-dimensional convolution integrals of increasing order,

where y(t) is the system response, \(u(\cdot )\) is the input to the system, and \(h_p(\cdot )\) is the pth-order Volterra kernel.

The zeroth-order Volterra kernel \(h_0\) is the zero-input response of the system, whereas the first-order Volterra kernel \(h_1\) represents the linear response of the system to a single impulse input. The higher-order kernels are the system multi-dimensional impulse responses, and they lead to measures of the nonlinearity degrees, or the relative influence of a previous input on the current response, that characterizes the temporal effect in the nonlinear dynamics [6, 7]. From the system causality, it results that the kernel \(h_{p}(\tau _{1}, \ldots , \tau _{p})\) is zero if any \(\tau _{i} < 0\), thereby leading the lower limits of the integrals in Eq. (1) to be equal to zero. The causality hypothesis additionally implies that the system response cannot depend on future input values, making it possible to replace the upper limit of the integrals by t. It is also possible to assure that each kernel \(h_{p}(\tau _{1}, \ldots , \tau _{p})\) is symmetric with respect to any permutation of \(\tau _{1}, \ldots , \tau _{p}\).
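The display for Eq. (1) is not reproduced in this text. As a hedged reconstruction, the standard continuous-time form that matches the symbols defined above is given here. The infinite integration limits are an assumption, chosen so that the causality argument about the lower and upper limits applies as described.

```latex
y(t) = h_0 + \sum_{p=1}^{\infty}
  \int_{-\infty}^{\infty} \cdots \int_{-\infty}^{\infty}
  h_p(\tau_1, \ldots, \tau_p) \prod_{i=1}^{p} u(t - \tau_i) \, \mathrm{d}\tau_i
```

Under causality, each integral reduces to the range from 0 to t, as stated in the paragraph above.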

A convenient form to present the Volterra functional series for causal, finite memory, time-invariant, and discrete-time systems is,

where n is the discrete-time variable, and \(h_j[k_1, k_2, \ldots , k_j]\) is the jth-order Volterra kernel.
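The discrete-time series can be illustrated with a minimal sketch truncated at second order. The function name `volterra_siso`, the common memory length M for both kernels, and the kernel values are assumptions made for illustration; they are not taken from the paper.

```python
def volterra_siso(u, h1, h2, h0=0.0):
    """Evaluate a second-order, finite-memory, discrete-time Volterra series.

    u  : list of input samples (the input is taken as zero before n = 0)
    h1 : first-order kernel, h1[k] for k = 0..M
    h2 : second-order kernel, h2[k1][k2] for k1, k2 = 0..M
    h0 : zeroth-order kernel (constant offset)
    """
    M = len(h1) - 1

    def past(n, k):
        # Causal input: samples before the start of the record are zero.
        return u[n - k] if n - k >= 0 else 0.0

    y = []
    for n in range(len(u)):
        linear = sum(h1[k] * past(n, k) for k in range(M + 1))
        quadratic = sum(
            h2[k1][k2] * past(n, k1) * past(n, k2)
            for k1 in range(M + 1)
            for k2 in range(M + 1)
        )
        y.append(h0 + linear + quadratic)
    return y


# Hypothetical kernels with memory M = 1 (illustrative values only).
h1 = [1.0, 0.5]
h2 = [[0.1, 0.0], [0.0, 0.0]]
y = volterra_siso([1.0, 2.0], h1, h2)
```

Each additional kernel order adds one more nested sum over the delays, which is why the number of kernel coefficients grows quickly with both order and memory length.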

The Volterra theory presented previously concerns only SISO systems, but it can be extended to multiple-input, multiple-output (MIMO) systems. Each lth-output, \(y_l[n]\), of a nonlinear system due to q inputs can be modeled using a pth-order, multi-input Volterra series [29]. For a discrete-time representation of the nonlinear system, the multi-input Volterra series is,

where n is the discrete-time variable, \(u_1[\cdot ], u_2[\cdot ], \ldots , u_q[\cdot ]\) are the system inputs, and \(h_p^{(l:j_1 \ldots j_p)}[k_1,\ldots ,k_p]\) is the pth-order, multi-input, discrete-time Volterra kernel. The first superscript of the kernels notation (before the colon) denotes the output of the system and the secondary superscripts (after the colon) identify to which inputs the kernel corresponds. If the secondary superscripts are equal (\(j_1 = j_2 = \cdots = j_p\)), the kernels are the so-called direct kernels. On the other hand, for combined inputs that differ, i.e., at least one secondary superscript is distinct from the others, the kernels are named the cross-kernels. The cross-kernels differentiate the multi-variable Volterra series from the single-input formulation by accounting for the intermodulation terms that arise when a nonlinear system is excited with more than one input.

The zeroth-order kernels are the steady-state responses of the system and can be subtracted from the total system response. Therefore, \(h_0^{(l)}\) can be neglected in Eq. (3) without loss of generality.
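The role of the cross-kernels can be made concrete with a small sketch of a second-order, multi-input Volterra model for a single output, with the zeroth-order term dropped as discussed above. The data layout (a dictionary keyed by ordered input pairs), the common memory length for all inputs, and the numerical values are assumptions for illustration. The sum runs over all ordered input pairs, so the pairs (1, 2) and (2, 1) appear separately.

```python
from itertools import product


def volterra_multi_input(inputs, h1, h2):
    """Second-order multi-input Volterra output for one output channel.

    inputs : list of q input records of equal length
    h1     : h1[j][k], first-order kernel of input j
    h2     : h2[(j1, j2)][k1][k2]; j1 == j2 is a direct kernel,
             j1 != j2 is a cross-kernel
    """
    q = len(inputs)
    n_samples = len(inputs[0])
    M = len(h1[0]) - 1

    def past(j, n, k):
        # Causal inputs: zero before the start of each record.
        return inputs[j][n - k] if n - k >= 0 else 0.0

    y = []
    for n in range(n_samples):
        acc = 0.0
        for j in range(q):
            acc += sum(h1[j][k] * past(j, n, k) for k in range(M + 1))
        for j1, j2 in product(range(q), repeat=2):
            ker = h2[(j1, j2)]
            acc += sum(
                ker[k1][k2] * past(j1, n, k1) * past(j2, n, k2)
                for k1 in range(M + 1)
                for k2 in range(M + 1)
            )
        y.append(acc)
    return y


# Hypothetical memoryless example (M = 0) with two inputs.
h1 = [[1.0], [2.0]]
h2 = {(0, 0): [[0.0]], (0, 1): [[0.5]], (1, 0): [[0.5]], (1, 1): [[0.0]]}
y = volterra_multi_input([[1.0], [3.0]], h1, h2)
```

With the cross-kernels set to zero, the output is the plain sum of the single-input responses; the cross-kernel terms are what couple the two inputs.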

2.2 Multi-variable Volterra kernel identification via time-delay neural networks

Wray and Green [20] have introduced a method to identify the direct kernels of a single-input Volterra series from the internal parameters of a trained time-delay neural network. The method offers a general expression to identify the pth-order Volterra kernel. Recently, de Paula et al. [31] have successfully applied the method to aerodynamic systems and identified direct kernels up to the fourth order.

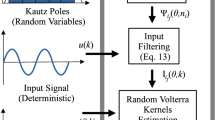

The novelty of the present work is a generalized TDNN approach to systematically identify the multi-variable Volterra direct kernels and cross-kernels. To derive the equations for a system with multiple inputs and multiple outputs, a time-delay neural network (TDNN) architecture is considered, such as the one illustrated in Fig. 1. The TDNN is composed of \((q + \sum _{k=1}^q M_k)\) input units, a hidden layer with N hidden nodes and L output nodes. The input units are grouped into blocks corresponding to each particular input, \(u_k\) (for \(k = 1, 2, \ldots , q\)). Each block contains \(M_k + 1\) time-delayed samples of the kth-input signal. The value \(M_k\) is a measure of the memory length and different values can be assumed for each particular input. In a dynamic system, each particular input corresponds to a degree of freedom (DOF) that is excited. The connecting links between the ith-input unit and the jth-hidden neuron are associated with a synaptic weight \(w_{ij}^{(k)}\), where the superscript index indicates with which particular input the weight is correlated. The numbering of the index i also reflects the block structure in which the input units are grouped. The value of i starts from zero in each new block and goes up to \(M_k\) (for \(k = 1, 2, \ldots , q\)). The connecting links between the jth-hidden unit and the lth-output node are weighted by the amounts \(c_{jl}\). Such a TDNN applies to a system with q inputs and L outputs.

The input into each hidden node (a neuron unit) of the network illustrated in Fig. 1 is a weighted sum over the time-delayed input units in all degrees of freedom,

The basic operation performed by a hidden neuron unit is to process the weighted sum of the inputs (cf. Eq. (4)) with a nonlinear activation function, \(\phi (\cdot )\), as shown in the schematic of Fig. 2. A bias, \(b_j\), is also applied to the linear combination of the inputs. A typical sigmoidal activation function given by a hyperbolic tangent function is assumed. The Taylor series expansion of the activation function around the bias gives an equivalent polynomial representation, \(p_j\),

where \(\tanh ^{(p)}\) represents the pth derivative of \(\tanh \) and \(a_{pj}\) are the coefficients of the polynomial expansion. The calculation of the \(a_{pj}\) values requires the determination of the derivatives of the \(\tanh \) function, which can be obtained analytically or by using a symbolic manipulation software.
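As a minimal sketch of this step, the coefficients up to third order can be computed from the closed-form derivatives of the hyperbolic tangent, assuming the usual Taylor relation \(a_{pj} = \tanh ^{(p)}(b_j)/p!\). The function name and the numerical values of the bias and of the weighted sum are hypothetical.

```python
import math


def tanh_taylor_coeffs(b):
    """Taylor coefficients a_0..a_3 of tanh(b + x) in powers of x."""
    t = math.tanh(b)
    s = 1.0 - t * t  # first derivative of tanh evaluated at b
    derivatives = [
        t,                          # tanh(b)
        s,                          # tanh'(b)   = 1 - t^2
        -2.0 * t * s,               # tanh''(b)  = -2 t (1 - t^2)
        s * (6.0 * t * t - 2.0),    # tanh'''(b) = (1 - t^2)(6 t^2 - 2)
    ]
    return [derivatives[p] / math.factorial(p) for p in range(4)]


b = 0.3    # hypothetical bias of one hidden neuron
x = 0.05   # hypothetical (small) weighted sum of the inputs
a = tanh_taylor_coeffs(b)
approx = sum(a[p] * x**p for p in range(4))
exact = math.tanh(b + x)
```

The truncated polynomial is accurate only while the weighted sum stays small relative to the curvature of the activation around the bias, which is consistent with restricting the method to weakly nonlinear responses.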

The output neurons have linear activation functions, as it is usual for function fitting problems. Assuming their biases as zero, each output of the neural network is described by a linear combination of the output of each hidden unit, each hidden unit corresponding to the response in a degree of freedom of the system,

If it is admitted that the polynomial of Eq. (6) has finite order, the output of the neural network can be expanded (using Eq. (4)) as

where p is an integer value.

The terms related to the power of a sum in Eq. (7) can be further expanded and rearranged as

The form of Eq. (8) is equivalent to the multi-variable Volterra series shown in Eq. (3). The Volterra kernels correspond to the terms shown inside parentheses, that is,

and the general expression for the pth-order kernel is

Note that Eqs. (9)–(12) represent the direct kernels and the cross-kernels. The kernels secondary superscripts, which are related to the combination of inputs, are determined by the block inputs associated with the respective synaptic weights, \(w_{ij}^{(k)}\).
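The kernel expressions themselves are not reproduced in this text. The sketch below assumes the form implied by the derivation, namely that each pth-order kernel is the sum over hidden nodes of \(c_{jl}\, a_{pj}\) times the product of the p synaptic weights selected by the delays and input blocks. All parameter values are hypothetical, and the expansion is truncated at second order. The check at the end confirms that the Volterra series built from the extracted kernels equals the output of the polynomial network.

```python
import math
from itertools import product

# Hypothetical trained parameters: q = 2 inputs, M = 1, N = 2 hidden nodes.
w = [  # w[k][i][j]: input block k, delay i, hidden node j
    [[0.4, -0.2], [0.1, 0.3]],
    [[-0.5, 0.2], [0.25, -0.1]],
]
b = [0.1, -0.3]  # hidden-node biases b_j
c = [0.7, -1.2]  # output weights c_j (single output)
q, n_delay, N = 2, 2, 2  # n_delay = M + 1 samples per input block


def taylor(bj):
    """Coefficients a_0, a_1, a_2 of tanh expanded about the bias bj."""
    t = math.tanh(bj)
    s = 1.0 - t * t
    return [t, s, -t * s]


a = [taylor(bj) for bj in b]  # a[j][p]

# Kernel extraction from the network parameters.
h0 = sum(c[j] * a[j][0] for j in range(N))
h1 = [
    [sum(c[j] * a[j][1] * w[k][i][j] for j in range(N)) for i in range(n_delay)]
    for k in range(q)
]
h2 = {
    (k1, k2): [
        [
            sum(c[j] * a[j][2] * w[k1][i1][j] * w[k2][i2][j] for j in range(N))
            for i2 in range(n_delay)
        ]
        for i1 in range(n_delay)
    ]
    for k1, k2 in product(range(q), repeat=2)
}

# Delayed samples at one time instant: u[k][i] = u_k[n - i] (illustrative).
u = [[0.2, -0.1], [0.05, 0.3]]

# Output of the second-order polynomial network.
x = [
    sum(w[k][i][j] * u[k][i] for k in range(q) for i in range(n_delay))
    for j in range(N)
]
y_net = sum(c[j] * (a[j][0] + a[j][1] * x[j] + a[j][2] * x[j] ** 2) for j in range(N))

# Output of the Volterra series assembled from the extracted kernels.
y_vol = h0
y_vol += sum(h1[k][i] * u[k][i] for k in range(q) for i in range(n_delay))
y_vol += sum(
    h2[(k1, k2)][i1][i2] * u[k1][i1] * u[k2][i2]
    for k1, k2 in product(range(q), repeat=2)
    for i1 in range(n_delay)
    for i2 in range(n_delay)
)
```

In this sketch the cross-kernels for the ordered pairs (1, 2) and (2, 1) are kept separately; they are transposes of each other, which is the symmetry that allows them to be merged into a single term with an integer coefficient.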

The total number of kernels of a specific order depends on the number of input combinations and can be very large for multi-degree-of-freedom systems. However, because of existing symmetries, the number of cross-kernels to be assessed can be significantly reduced [19]. The Volterra kernel formulae based on the time-delay neural network reflect this symmetry as, for the same set of inputs, the kernel will be the same irrespective of the order in which the synaptic weights are multiplied. For example, for a system with two inputs and a single output (MISO-type system), the multi-variable Volterra series of third order can be written as

where the square of a sum and the third power of a sum (appearing in Eq. (7)) can also be expressed as

in which \(z_k = \sum _{i=0}^{M_k}w_{ij}^{(k)}u_k[n-i]\) and \(k_1, k_2, k_3\) are positive integer values. The coefficients 2 and 3 multiplying the terms relative to the cross-kernels are the result of the existing symmetries [18], namely

and, similarly
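The displayed equations referenced in this passage are not reproduced in this text. As a reminder of where the coefficients 2 and 3 originate, the elementary expansions in terms of the two block sums \(z_1\) and \(z_2\) are shown here. The last line states the second-order kernel symmetry in the notation of Sect. 2.1; it is an assumed form and not a copy of the original equations.

```latex
\begin{aligned}
(z_1 + z_2)^2 &= z_1^2 + 2 z_1 z_2 + z_2^2, \\
(z_1 + z_2)^3 &= z_1^3 + 3 z_1^2 z_2 + 3 z_1 z_2^2 + z_2^3, \\
h_2^{(l:12)}[k_1, k_2] &= h_2^{(l:21)}[k_2, k_1].
\end{aligned}
```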

3 Kernels identification for a MISO nonlinear system

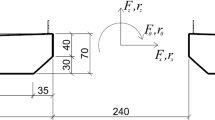

An arbitrary two-degree-of-freedom, second-order coupled nonlinear system with two inputs and a single output is used to illustrate the features of the kernel identification via a neural network. Quadratic terms in each degree of freedom are included to ensure a weak, smooth nonlinearity. The MISO system dynamics are represented by the following set of coupled nonlinear ODEs:

where \(y_1\) and \(y_2\) are the system internal variables that compose the system output y, and \(u_1\) and \(u_2\) are independent inputs.

Equations (18)–(20) are numerically integrated using a third-order Runge–Kutta solver [32] with a fixed step of one millisecond to establish a database for the neural network training process. The training inputs \(u_1\) and \(u_2\) are random signals with a Gaussian distribution. The TDNN architecture parameters were chosen by trial and error, inspecting the predictive performance as the network complexity (number of time delays and hidden neurons) increased, in the manner of the error analysis presented in de Paula et al. [31]. A feedforward neural network with \(M = 50\) time delays per input and \(N=3\) hidden neurons showed suitable performance. The TDNN was trained with the Bayesian regularization algorithm, in which the regularization is performed within the Levenberg–Marquardt algorithm. The regularization constrains the magnitudes of the network parameters to force a smoother response, prevent overfitting, and enhance the generalization properties [33]. The stopping criterion considers the convergence of the mean squared error between the predicted and the numerically simulated outputs.
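The way the two Gaussian input records are arranged into TDNN input rows can be sketched as follows. The function name `delay_embed`, the record length, the unit standard deviation, and the choice to discard the first M samples (rather than zero-pad them) are assumptions for illustration; only \(M = 50\) comes from the text.

```python
import random


def delay_embed(inputs, M):
    """Build TDNN input rows [u_1[n..n-M], ..., u_q[n..n-M]] for n >= M."""
    n_samples = len(inputs[0])
    rows = []
    for n in range(M, n_samples):
        row = []
        for u in inputs:
            # One block per input: current sample followed by M delayed ones.
            row.extend(u[n - i] for i in range(M + 1))
        rows.append(row)
    return rows


random.seed(0)
n_samples, M = 200, 50
u1 = [random.gauss(0.0, 1.0) for _ in range(n_samples)]
u2 = [random.gauss(0.0, 1.0) for _ in range(n_samples)]
X = delay_embed([u1, u2], M)
```

Each row has \(q + \sum _k M_k = 102\) entries for two inputs with 50 delays each, which matches the input-unit count given for the TDNN architecture in Sect. 2.2.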

Figure 3 depicts the comparison between the simulated and predicted signals after completion of the time-delay neural network training and generalization testing (cf. Fig. 3a, b). The training results in Fig. 3a, c demonstrate that the neural network provides a good match to the direct simulation of Eqs. (18)–(20). Figures 3b, d present the network performance in predicting a signal different from those used for the training. Such results ensure that the time-delay neural network satisfactorily represents the MISO two-degree-of-freedom system. The next step concerned the extraction of the Volterra kernels from the neural network internal parameters. The manipulation of Eq. (5), followed by applying Eq. (12), leads to the Volterra direct kernels and cross-kernels.

Volterra kernels up to the second order were extracted for this case through the neural network approach. The first-order direct kernels associated with each input signal are presented in Fig. 4a and b, respectively. The asymmetry of the system can be observed from the differences between the kernels. Figure 5 presents the identified second-order Volterra kernels. The second-order direct kernels and cross-kernels also indicate the asymmetry.

Once the Volterra kernels are identified, they can be used as a reduced-order model to predict the system dynamics. To test the reduced-order model built with the computed kernels, a pair of sinusoidal inputs is used: \(u_1(t) = \cos (t)\) and \(u_2(t) = \cos (0.8 t)\). The numerically simulated signal is compared with the predictions of various combinations of the identified kernels, used in the convolutions in Eq. (3). The results are summarized in Fig. 6. Figure 6a shows how the Volterra reduced model behaves for different kernel combinations, which include some or all of the kernel functions. The importance of each higher-order kernel for predicting the nonlinear behavior of the system is clear. The time histories show that the least accurate prediction is obtained with the first-order kernels alone. Moreover, the intrinsic asymmetry of the MISO 2-DOF system could only be approximated with the inclusion of the second-order kernels. Table 1 presents the \(L^2\)-norms of the error between the numerical simulation of the MISO 2-DOF system and the Volterra model prediction for each kernel combination. Lower error values are obtained when more kernel functions are included in the Volterra model. Figure 6b confirms that the Volterra model prediction of the MISO 2-DOF system improves as the second-order direct and cross-kernels are added.
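The error measure can be sketched as a discrete \(L^2\)-norm of the difference between two sampled signals. Whether the values in Table 1 are normalized (for example by the norm of the reference signal or by the number of samples) is not stated, so the unnormalized form below is an assumption, as is the function name.

```python
import math


def l2_error(reference, prediction):
    """Unnormalized discrete L2-norm of the prediction error."""
    return math.sqrt(sum((r - p) ** 2 for r, p in zip(reference, prediction)))


# Illustrative values only, not data from the paper.
err = l2_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
```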

4 Kernel identification for unsteady nonlinear aerodynamic loading model

Unsteady aerodynamic loads can be assessed using numerical techniques pertaining to the branch of computational fluid dynamics (CFD). However, CFD solutions of practical, complex aerodynamic flow fields are still cumbersome. For that reason, reduced-order models have great appeal for unsteady aerodynamic applications [3]. In this section, the Volterra model with direct kernels and cross-kernels identified via time-delay neural networks is tested for an aerodynamic system. The unsteady aerodynamic loading under the effects of compressible flow around an airfoil is considered. To obtain the signals required for training and testing the Volterra model, an inviscid Euler CFD code [34] was used. The CFD code consists of a finite volume 2D solver based on an explicit second-order time marching scheme. The computational domain was set on the NACA 0012 airfoil with an unstructured triangular O-type mesh comprising 19,886 elements, and extending 20 chords from the profile in all directions.

The unsteady pitching moment coefficient response, \(C_{m}(\tau )\), resulting from the airfoil pitching, \(\alpha (\tau )\), and heaving, \(h(\tau )\), motions is simulated with the CFD code, where \(\tau =(tU_\infty )/c\) is the dimensionless time. Here, t is the instantaneous time, \(U_{\infty }\) is the free-stream speed, and c is the airfoil chord length. The computational grid, domain extension, and time step were adjusted following the solutions presented by Camilo et al. [34]. The neural network training and generalization test signals consist of random-phase multi-sine pitching and heaving motion functions, namely

where \(A_k\) is the randomly distributed motion amplitude (for pitching or heaving motions, respectively), k is the reduced frequency (defined in the Notes), \(\phi _k\) is the randomly distributed phase shift in the interval \([0,2\pi )\), F is the index related to the value of the maximum excitation frequency, and \(f_0=1/(N_s \Delta t)\) is the frequency band obtained from the number of time samples of the signal, \(N_s\), and the time step, \(\Delta t\).
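Equation (21) is not reproduced in this text. A minimal sketch of a random-phase multi-sine generator is given below, assuming the common form in which the signal is a sum of F sinusoids at integer multiples of \(f_0\), each with its own amplitude and phase. The uniform amplitude distribution, the use of the harmonic index in place of the reduced frequency, the function name, and all numerical values are assumptions.

```python
import math
import random


def random_phase_multisine(n_samples, dt, F, amp_max, rng):
    """Sum of F harmonics of f0 = 1 / (n_samples * dt) with random phases."""
    f0 = 1.0 / (n_samples * dt)
    amps = [rng.uniform(0.0, amp_max) for _ in range(F)]
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(F)]
    signal = []
    for n in range(n_samples):
        t = n * dt
        signal.append(
            sum(
                amps[k] * math.sin(2.0 * math.pi * (k + 1) * f0 * t + phases[k])
                for k in range(F)
            )
        )
    return signal


# Independent records for the two motions (hypothetical settings).
pitch = random_phase_multisine(256, 0.01, 5, 1.0, random.Random(1))
heave = random_phase_multisine(256, 0.01, 5, 1.0, random.Random(2))
```

Because every harmonic completes an integer number of cycles within the record, the signal is periodic over the record length and contains energy only at the chosen excitation frequencies.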

The simulations based on the inputs given by Eq. (21) were carried out with a dimensionless integration time step of \(\Delta \tau = 6\times 10^{-4}\). A mildly nonlinear loading condition at Mach number 0.6 was chosen for evaluation of the unsteady pitching moment coefficient response. At that compressible regime, weak effects of shock excursion are observed [34] and there is a reasonable amount of nonlinear behavior for testing the Volterra model. Such flow regime has also been used in other work on aerodynamic modeling via Volterra series [25, 29].

As in the previous example, the neural network architecture was chosen with \(M = 25\) time delays per input and \(N=5\) hidden neurons. The training set consisted of 1,997 points sampled from the full CFD simulation signal at a period of \(\Delta \tau = 6\times 10^{-4}\). The training was performed with the same algorithm as in Sect. 3, namely Bayesian regularization. After training, a generalization test was carried out, and the comparison of the neural network prediction against the CFD simulation is depicted in Fig. 7. The results demonstrate that the neural network fits the CFD simulation signal adequately, thereby allowing the kernel identification.

Despite the weakly nonlinear behavior of the aerodynamic loading, more complex dynamics than in the previous study (cf. Sect. 3) are expected. For this reason, kernels up to third order are examined for the aerodynamic loading prediction. Indeed, more complex first-order direct kernels can be observed in Fig. 8a and b for the pitch and plunge degrees of freedom, respectively. Figure 9 depicts the second-order direct kernels and cross-kernels, which also have complex shapes and contribute to modeling asymmetries in the response. As for the third-order kernels, a major difficulty lies in representing them graphically, because their dimensionality is larger than three. Previous studies [29, 35] with kernel synthesis based on multi-impulse inputs have also revealed non-smooth aerodynamic kernel functions. In de Paula et al. [31], the equivalence between the neural network kernels and the multi-impulse kernels was established in the frequency domain. The analysis also pointed out that the kernels extracted from the TDNN might be contaminated by high-frequency numerical noise, which affected the shape of the kernel functions in the time domain, but not the convolved ROM response.

To test the ability of the identified set of kernels to assess a Volterra model for the unsteady aerodynamic loading prediction, a simulated test case based on the AGARD experimental data is considered. The conditions of the CT2 case [36] were considered by Balajewicz et al. [29] to establish the following prescribed simultaneous oscillation in pitching and heaving, respectively: \(\alpha (\tau ) = 3.16^\circ + 1^\circ \sin (2k_\alpha \tau )\) and \(h(\tau ) = 0.14c \sin (2k_h \tau )\), where \(k_\alpha = 0.08\), \(k_h \approx 0.8k_\alpha \), and \(M_\infty = 0.6\).

Figures 10 and 11 show the influence of the kernel functions on the \(C_{m}(\tau )\) prediction in comparison with the CFD simulation. Figure 10 shows the aerodynamic loading approximation including only the direct kernels. It is reasonable to expect that a Volterra series with kernels of first to third order (referenced as third-order kernels in Fig. 10) represents the system behavior more accurately. The results confirm this, but also highlight the need for more kernel functions to reduce local discrepancies within the \(C_{m}(\tau )\) time history. Moreover, the model including only the direct kernels yields a response equivalent to the superposition of the individual responses in each degree of freedom.

The incorporation of cross-kernel functions in the Volterra model is essential to represent the internal nonlinear couplings of the system when simultaneous inputs are applied. Figure 11 depicts the effect of including the cross-kernels in the Volterra model. With all direct kernels already part of the Volterra model, the results in Fig. 11 show the importance of additionally considering the cross-kernels, particularly the third-order ones. A multi-input Volterra series with direct kernels and cross-kernels up to the third order leads to a satisfactory predictive model of the unsteady pitching moment. To summarize the results in Figs. 10 and 11, error measures based on the \(L^2\)-norm are presented in Table 2 and confirm the importance of the cross-kernels for the Volterra series to accurately predict the aerodynamic loading under the effects of internal couplings.

Figure 12 shows the power spectra of the respective signals of Figs. 10 and 11. It is observed that the first-order kernels capture the fundamental frequencies, that is, \(k/{k_\alpha } = 0.8\) (regarding \(k/{k_h} = 1\)) and \(k/{k_\alpha } = 1\). The 2nd- and 3rd-order direct kernels capture the \(2\times \) and \(3\times \) harmonics of the fundamental frequencies, which are observed at \(k/{k_\alpha } = 1.6\) and \(k/k_{\alpha } = 2\), and at \(k/{k_\alpha } = 2.4\) and \(k/k_{\alpha } = 3\). Moreover, the second- and third-order components lead, respectively, to a nonlinear contribution to the static response (\(k/{k_\alpha } = 0\)) and to contributions in the fundamental frequencies.

The second-order cross-kernels bring the first intermodulation terms, namely \(k/{k_\alpha } = 0.2\) and \(k/{k_\alpha } = 1.8\), which correspond to the combinations \((k_\alpha - k_h)/{k_\alpha }\) and \((k_\alpha + k_h)/{k_\alpha }\), respectively. The third-order cross-kernels are responsible for terms such as \((2k_h - k_\alpha )/{k_\alpha }\) (resulting in \(k/{k_\alpha } = 0.6\)), \((2k_\alpha - k_h)/{k_\alpha }\) (resulting in \(k/{k_\alpha } = 1.2\)), \((2k_h + k_\alpha )/{k_\alpha }\) (resulting in \(k/{k_\alpha } = 2.6\)), and \((2k_\alpha + k_h)/{k_\alpha }\) (resulting in \(k/{k_\alpha } = 2.8\)). The frequency combinations in the intermodulation terms are related to the set of inputs associated with the cross-kernels. For instance, in the case of second-order cross-kernels, there is only one occurrence of each of the inputs, so the intermodulation frequencies correspond to the combination of the fundamental frequencies. In the case of third-order cross-kernels, there are two occurrences of a particular input and only one of the second input. Therefore, the intermodulation frequencies associated with third-order cross-kernels are always determined by the combinations between \(2\times \) harmonics and the remaining fundamental frequency.
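The intermodulation frequencies listed above can be checked with a short sketch. It relies on the fact that a product of sinusoids contains the sums and differences of their frequencies; the function name is hypothetical, and frequencies are normalized by \(k_\alpha \) so that \(k_h = 0.8\), as in the test case.

```python
from itertools import product


def kernel_frequencies(input_freqs):
    """Frequencies produced by a kernel acting on a product of sinusoids.

    input_freqs holds one frequency per kernel argument, for example
    (ka, kh) for a second-order cross-kernel or (ka, ka, kh) for a
    third-order cross-kernel with two occurrences of the pitch input.
    """
    freqs = set()
    for signs in product((1, -1), repeat=len(input_freqs)):
        total = sum(s * f for s, f in zip(signs, input_freqs))
        # Rounding merges values that differ only by floating-point error.
        freqs.add(round(abs(total), 10))
    return sorted(freqs)


ka, kh = 1.0, 0.8  # normalized by k_alpha
second_cross = kernel_frequencies((ka, kh))
third_cross_pitch = kernel_frequencies((ka, ka, kh))
third_cross_heave = kernel_frequencies((kh, kh, ka))
```

The second-order cross-kernel yields 0.2 and 1.8. The third-order cross-kernels yield 1.2 and 2.8 (two pitch occurrences) and 0.6 and 2.6 (two heave occurrences), and they also return a component at the fundamental frequency of the single-occurrence input, consistent with the remark that third-order terms contribute at the fundamental frequencies.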

5 Concluding remarks

This work presented an application of time-delay neural networks for the identification of Volterra direct kernels and cross-kernels. The applicability of the identified kernels to the construction of reduced-order models for aerodynamic loading prediction was tested for the case of a mildly nonlinear unsteady aerodynamic regime. In the proposed method, kernel synthesis is based on the internal parameters of a TDNN after regular supervised training is completed. The extracted direct kernels and cross-kernels are subsequently used in a discrete-time multi-input Volterra series model.

The methodology has been tested with a MISO 2-DOF system to check its potential. Kernels up to second order were identified and applied to a Volterra model to predict the system response to new inputs. Results demonstrated the impact of including the cross-kernel functions in the Volterra series for the prediction of internal system couplings. The direct kernels alone were not capable of accurate approximations of the simulated system response.

The same kernel identification procedure was applied to the prediction of the unsteady pitching moment of an airfoil under compressible flow at Mach number 0.6. The database used to train the neural network was obtained through CFD simulations. In this case, direct kernels and cross-kernels up to third order were necessary to attain an accurate approximation of the aerodynamic system. Moreover, the second- and third-order cross-kernels were essential to represent the features associated with the internal nonlinear couplings resulting from the simultaneous excitation in the pitch and plunge degrees of freedom. Frequency domain analyses of the predicted responses also revealed the importance of the cross-kernels in capturing the intermodulation harmonics of the fundamental frequencies.

Notes

\(k=\left( \frac{\omega b}{U_{\infty }}\right) \), where \(\omega \) is the angular frequency, and \(b=c/2\) is the airfoil semi-chord.

Acknowledgements

The authors acknowledge the financial support of: Grant 2017/02926-9, São Paulo Research Foundation (FAPESP) and Grants 307658/2016-3 and 131493/2016-7, National Council for Scientific and Technological Development (CNPq).

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

About this article

Cite this article

de Paula, N.C.G., Marques, F.D. Multi-variable Volterra kernels identification using time-delay neural networks: application to unsteady aerodynamic loading. Nonlinear Dyn 97, 767–780 (2019). https://doi.org/10.1007/s11071-019-05011-8

DOI: https://doi.org/10.1007/s11071-019-05011-8