Abstract

This article presents and implements two new numerical algorithms for solving multi-term fractional differential equations. The proposed algorithms are based on establishing a novel operational matrix of fractional-order differentiation of generalized Lucas polynomials in the Caputo sense. This operational matrix serves as a powerful tool for obtaining the desired numerical solutions. The resulting solutions are spectral, and they are built using the tau and collocation methods. A new treatment of the convergence and error analysis of the suggested generalized Lucas expansion is presented. The presented numerical results demonstrate the efficiency, applicability and high accuracy of the proposed algorithms.

1 Introduction

Standard spectral methods play a vital role in numerical analysis. These methods are capable of providing numerical solutions to various kinds of differential equations. They employ global polynomials as trial functions. Moreover, they provide very accurate approximate solutions with a relatively small number of unknowns. Many important problems in applied science and engineering can be treated by employing the different versions of spectral methods. For some of these applications, one can consult [1,2,3,4,5]. There are three popular versions of spectral methods: the collocation, tau and Galerkin methods. The choice of the suitable version depends on the type of the differential equation under investigation and also on the initial/boundary conditions that govern it. For some articles that employ spectral methods for solving different kinds of differential equations, see [6,7,8,9].

Fractional calculus is a pivotal branch of mathematical analysis. This kind of calculus deals with derivatives and integrals of arbitrary order (real or complex). Due to the frequent appearance of differential equations of fractional order in various disciplines such as fluid mechanics, biology, engineering and physics, many researchers have focused on studying them from theoretical and practical points of view. Analytical solutions of fractional differential equations (FDEs) can rarely be obtained, so it is a challenging problem to develop efficient and applicable numerical algorithms to handle them. In this regard, several numerical schemes have been proposed to investigate different kinds of FDEs. Some of these schemes are the Taylor collocation method [10], Adomian’s decomposition method [11, 12], the finite difference method [13], the variational iteration method [14], and the homotopy analysis and homotopy perturbation methods [15, 16]. For some relevant recent papers in the area of FDEs and their applications, one can refer to [17,18,19,20,21,22,23,24].

It is well known that many number and polynomial sequences can be generated by recurrence relations of second order. Among these important sequences are the celebrated sequences of Fibonacci and Lucas. These sequences of polynomials and numbers are of great importance in a variety of branches such as number theory, combinatorics and numerical analysis. These sequences have been studied in several papers from a theoretical point of view (see [25,26,27,28]). However, there are very few articles that employ these sequences practically. In this regard, a collocation procedure based on using the Fibonacci operational matrix of derivatives is implemented and presented for solving BVPs in [29, 30]. Recently, a numerical approach with error estimation to solve general integro-differential–difference equations using Dickson polynomials was introduced in [31].

Various kinds of differential equations have been handled by employing spectral methods along with operational matrices of various orthogonal polynomials. This approach has many advantages. It is simple, applicable and yields very efficient solutions. Many articles follow this approach. For example, Abd-Elhameed in [32, 33] has established and used novel operational matrices of derivatives for solving linear and nonlinear even-order BVPs. In addition, Napoli and Abd-Elhameed in [34] have developed another harmonic numbers operational matrix of derivatives to solve initial value problems of any order. The operational matrices are not only used to solve ordinary differential equations, but they are also fruitfully employed to solve FDEs. For some articles in this direction, one can refer, for example, to [18, 35,36,37,38,39].

The principal aims of this research article can be summarized in the following items:

(i) Establishing operational matrices for integer and fractional derivatives of the generalized Lucas polynomials.

(ii) Constructing two numerical algorithms for solving multi-term fractional-order differential equations based on employing spectral methods together with the introduced operational matrices of derivatives.

The rest of the paper is organized as follows. The next section presents some fundamentals and some formulae of the generalized Lucas polynomials which are useful in the sequel. Section 3 establishes operational matrices of integer and fractional derivatives of generalized Lucas polynomials. The treatment of multi-term fractional-order differential equations is discussed in detail in Sect. 4 by presenting two spectral algorithms for solving linear and nonlinear fractional differential equations. In Sect. 5, we investigate carefully the convergence and error analysis of the proposed generalized Lucas expansion. Some numerical tests and comparisons are given in Sect. 6 to validate the efficiency and applicability of the proposed algorithms. Finally, Sect. 7 displays some conclusions.

2 Fundamentals and used formulae

This section is devoted to presenting some fundamentals of the fractional calculus. Besides, some relevant properties and formulae of the introduced generalized Lucas polynomials are stated and proved.

2.1 Some definitions and properties of fractional calculus

Definition 1

The Riemann–Liouville fractional integral operator \(_0I_t^{\nu }\) of order \(\nu \) on the usual Lebesgue space \(L_1[0, 1]\) is defined as: for all \(t\in (0,1)\)

Definition 2

The right side Riemann–Liouville fractional derivative of order \(\nu >0\) is defined by

Definition 3

The fractional differential operator in Caputo sense is defined as

where \( \,n-1\le \nu <n, n\in {\mathbb {N}}\).

Remark 1

It is worth noting here that the fractional derivative in the Caputo sense is the most commonly used definition among the definitions of the fractional derivative. The definition of Caputo is mathematically more rigorous than the Riemann–Liouville definition (see Changpin et al. [40] and Li and Zhao [41]). The Caputo derivative exists in the whole interval (0, 1). In addition, the Caputo definition is widely adopted in applied science and engineering (Changpin et al. [42]). Furthermore, properties of the Caputo derivative are helpful in translating higher fractional-order differential systems into lower ones ([43]). For a comparison between the Caputo and Riemann–Liouville operators, the interested reader is referred to [44].

The following properties are satisfied by the operator \(D^{\nu }\) for \(n-1\le \nu <n,\)

where \(\lceil \nu \rceil \) denotes the smallest integer greater than or equal to \(\nu \). For more properties of fractional derivatives and integrals, see for example, [45, 46].
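The property of \(D^{\nu }\) used most often in the sequel is its action on constants and on powers of t. The following is a minimal sketch, assuming the standard Caputo power rule \(D^{\nu }t^{k}=\frac{\Gamma (k+1)}{\Gamma (k+1-\nu )}\,t^{k-\nu }\) for integers \(k\ge \lceil \nu \rceil \) and \(D^{\nu }t^{k}=0\) for integers \(k<\lceil \nu \rceil \). The function name `caputo_monomial` is illustrative only.

```python
import math

def caputo_monomial(k, nu):
    """Return (coeff, exponent) such that D^nu t^k = coeff * t^exponent.

    Assumes the standard Caputo power rule for integer k >= 0 and nu > 0.
    """
    if k < math.ceil(nu):
        return 0.0, 0.0          # the derivative vanishes identically
    coeff = math.gamma(k + 1) / math.gamma(k + 1 - nu)
    return coeff, k - nu

# Constants are annihilated, as for the integer-order derivative.
print(caputo_monomial(0, 0.5))
# For nu = 1 the rule reduces to d/dt t^3 = 3 t^2.
print(caputo_monomial(3, 1.0))
# Half-derivative of t: Gamma(2)/Gamma(3/2) * t^(1/2).
print(caputo_monomial(1, 0.5))
```

Since the operator is linear, the Caputo derivative of any polynomial follows term by term from this rule.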

2.2 Relevant properties and relations of generalized Lucas polynomials

The sequence of Lucas polynomials \(L_{i}(t)\) may be constructed by means of the recurrence relation:

The Binet’s form of Lucas polynomials is

Also, the Lucas polynomials have the following power form representation:

and the notation \(\left\lfloor z\right\rfloor \) represents the largest integer less than or equal to z.

The first few Lucas polynomials \(L_{i}(t)\) are:

In this paper, we aim to generalize the sequence of Lucas polynomials. For this purpose, let a, b be any nonzero real constants. We define the so-called generalized Lucas polynomials, which may be generated with the aid of the following recurrence relation:

with the initial values: \(\psi ^{a,b}_{0}(t)=2\) and \(\psi ^{a,b}_{1}(t)=a\, t\).

The first few generalized Lucas polynomials \(\psi ^{a,b}_{i}(t)\) are:

It is worth mentioning here that the generalized Lucas polynomials \(\psi ^{a,b}_{i}(t)\) generalize the Lucas polynomials \(L_{i}(t)\). In fact, Lucas polynomials can be deduced from \(\psi ^{a,b}_{i}(t)\) for the case: \(a=b=1\). In addition, some other important polynomials can be deduced as special cases of \(\psi ^{a,b}_{i}(t)\). Explicitly, we have

where \(Q_{i}(t), f_{i}(t),T_{i}(t)\) and \(D_{i}(t,\alpha )\) are, respectively, the Pell–Lucas, Fermat–Lucas, first kind Chebyshev and first kind Dickson polynomials, each of degree i.
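The generalized Lucas polynomials are easy to generate numerically. The following is a minimal sketch, assuming that the recurrence has the three-term form \(\psi ^{a,b}_{i}(t)=a\,t\,\psi ^{a,b}_{i-1}(t)+b\,\psi ^{a,b}_{i-2}(t),\ i\ge 2\), with the initial values stated above. The helper names `gen_lucas_coeffs` and `evaluate` are illustrative. The script checks the case \(a=b=1\) against the Lucas polynomial \(L_3(t)=t^3+3t\), and the choice \(a=2,\ b=-1\) against the first kind Chebyshev polynomials through the identity \(\psi ^{2,-1}_{i}(\cos \theta )=2\cos (i\,\theta )\), which follows from the assumed recurrence.

```python
from fractions import Fraction
import math

def gen_lucas_coeffs(n, a, b):
    """Power-basis coefficients of psi_0..psi_n (list index k <-> t^k).

    Assumes the recurrence psi_i = a*t*psi_{i-1} + b*psi_{i-2},
    with psi_0 = 2 and psi_1 = a*t.
    """
    a, b = Fraction(a), Fraction(b)
    polys = [[Fraction(2)], [Fraction(0), a]]
    for i in range(2, n + 1):
        cur = [Fraction(0)] * (i + 1)
        for k, c in enumerate(polys[i - 1]):   # a * t * psi_{i-1}
            cur[k + 1] += a * c
        for k, c in enumerate(polys[i - 2]):   # b * psi_{i-2}
            cur[k] += b * c
        polys.append(cur)
    return polys[: n + 1]

def evaluate(coeffs, t):
    """Horner evaluation of a power-basis polynomial at a float t."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * t + float(c)
    return result

# a = b = 1 gives the classical Lucas polynomials, e.g. L_3(t) = t^3 + 3 t.
lucas = gen_lucas_coeffs(4, 1, 1)
print([int(c) for c in lucas[3]])   # [0, 3, 0, 1]

# a = 2, b = -1 gives twice the first kind Chebyshev polynomials.
cheb = gen_lucas_coeffs(5, 2, -1)
theta = 0.7
print(evaluate(cheb[5], math.cos(theta)), 2 * math.cos(5 * theta))
```

Exact rational arithmetic is used for the coefficients so that later identities can be checked without rounding error.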

The power form representation of \(\psi ^{a,b}_{i}(t)\) can be written explicitly in the following two equivalent forms

and

where

Moreover, the Binet’s form for \(\psi ^{a,b}_{i}(t)\) is
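A Binet-type closed form can be checked numerically against the recurrence. The sketch below assumes the recurrence \(\psi ^{a,b}_{i}(t)=a\,t\,\psi ^{a,b}_{i-1}(t)+b\,\psi ^{a,b}_{i-2}(t)\) and the closed form \(\psi ^{a,b}_{i}(t)=\frac{\left( a\,t+\sqrt{a^2t^2+4b}\right) ^i+\left( a\,t-\sqrt{a^2t^2+4b}\right) ^i}{2^i}\), which is the form implied by the roots of the characteristic equation of that recurrence. Both function names are illustrative.

```python
import cmath

def gen_lucas_recurrence(i, t, a, b):
    """psi_i(t) from the assumed recurrence psi_i = a*t*psi_{i-1} + b*psi_{i-2}."""
    p0, p1 = 2.0, a * t
    if i == 0:
        return p0
    for _ in range(i - 1):
        p0, p1 = p1, a * t * p1 + b * p0
    return p1

def gen_lucas_binet(i, t, a, b):
    """psi_i(t) from the assumed Binet-type closed form."""
    root = cmath.sqrt(a * a * t * t + 4 * b)   # complex sqrt covers b < 0
    value = ((a * t + root) ** i + (a * t - root) ** i) / 2 ** i
    return value.real                           # imaginary parts cancel

for a, b in [(1, 1), (2, -1), (3, -2)]:
    for i in range(7):
        r = gen_lucas_recurrence(i, 0.4, a, b)
        c = gen_lucas_binet(i, 0.4, a, b)
        assert abs(r - c) < 1e-9
print("Binet form and recurrence agree")
```

The complex square root is used because \(a^2t^2+4b\) may be negative, as in the Chebyshev-type case \(a=2,\ b=-1\) on \([0,1]\).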

Now, the following two theorems are of fundamental importance in establishing our proposed algorithms in this paper. The first theorem gives an inversion formula for the power form representation given in (8), while the second introduces an expression for the first derivative of the generalized Lucas polynomials in terms of the polynomials themselves.

Theorem 1

For every nonnegative integer \(\ell \), the following inversion formula is valid

where \(\delta _{j}\) is defined as

Proof

To prove relation (10), it is enough to prove its alternative form

We proceed by induction on \(\ell \). Identity (12) is obviously satisfied for \(\ell =0\). Now, assume the validity of (12), and therefore to complete the proof, we have to show the validity of the following identity:

If we multiply both sides of (12) by t, and make use of the recurrence relation (7), then we get

The last relation can be written alternatively—after performing some manipulations—as

After some algebraic computations, it can be shown that formula (15) takes the form

Theorem 1 is now proved. \(\square \)
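An inversion formula of this type can be verified exactly for small \(\ell \). The sketch below assumes the recurrence \(\psi ^{a,b}_{i}(t)=a\,t\,\psi ^{a,b}_{i-1}(t)+b\,\psi ^{a,b}_{i-2}(t)\) and tests the candidate form \(t^{\ell }=a^{-\ell }\sum _{i=0}^{\lfloor \ell /2\rfloor }(-1)^i\,b^i\,\delta _{\ell -2i}\,\binom{\ell }{i}\,\psi ^{a,b}_{\ell -2i}(t)\) with \(\delta _0=\frac{1}{2}\) and \(\delta _j=1\) for \(j\ge 1\). This form is our reconstruction and is checked by the script rather than quoted; the helper names are illustrative.

```python
from fractions import Fraction
from math import comb

def gen_lucas_coeffs(n, a, b):
    """Power-basis coefficients of psi_0..psi_n from the assumed recurrence."""
    a, b = Fraction(a), Fraction(b)
    polys = [[Fraction(2)], [Fraction(0), a]]
    for i in range(2, n + 1):
        cur = [Fraction(0)] * (i + 1)
        for k, c in enumerate(polys[i - 1]):
            cur[k + 1] += a * c
        for k, c in enumerate(polys[i - 2]):
            cur[k] += b * c
        polys.append(cur)
    return polys[: n + 1]

def inversion_coeffs(ell, a, b):
    """Coefficients d_j with t^ell = sum_j d_j * psi_j(t) (candidate formula)."""
    a, b = Fraction(a), Fraction(b)
    d = [Fraction(0)] * (ell + 1)
    for i in range(ell // 2 + 1):
        j = ell - 2 * i
        delta = Fraction(1, 2) if j == 0 else Fraction(1)
        d[j] = (-b) ** i * delta * comb(ell, i) / a ** ell
    return d

def expand(d, polys):
    """Power-basis coefficients of sum_j d_j * psi_j."""
    out = [Fraction(0)] * len(d)
    for dj, psi in zip(d, polys):
        for k, c in enumerate(psi):
            out[k] += dj * c
    return out

a, b = 3, -2
polys = gen_lucas_coeffs(6, a, b)
for ell in range(7):
    monomial = [Fraction(0)] * ell + [Fraction(1)]
    assert expand(inversion_coeffs(ell, a, b), polys[: ell + 1]) == monomial
print("inversion formula verified for ell = 0..6")
```

Because the check is carried out in rational arithmetic, agreement is exact and not merely up to rounding.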

Theorem 2

The first derivative of the generalized Lucas polynomials \(\psi ^{a,b}_{j}(t)\) can be expressed as:

Proof

First, we differentiate the power form representation of the generalized Lucas polynomials \(\psi ^{a,b}_{j}(t)\) given in (9) with respect to t to get

Making use of the inversion formula (10), Eq. (17) can be written equivalently as

Expanding the right-hand side of the latter formula and rearranging the similar terms lead to the following relation

where \(H_{j,\ell }\) is given by

In order to obtain a reduction formula for \(H_{j,\ell }\), we note that it can be written equivalently as

Based on Chu-Vandermonde identity (see Koepf [47]), the hypergeometric \(_2F_{1}\) in (20) can be summed to give

and therefore, \(H_{j,\ell }\) takes the following simplified form

Theorem 2 is now proved. \(\square \)

3 Generalized Lucas operational matrix of integer and fractional derivatives

This section is dedicated to establishing operational matrices for both integer and fractional derivatives of the generalized Lucas polynomials. These operational matrices serve to approximate integer and fractional derivatives.

3.1 Operational matrix of integer derivatives

Let u(t) be a square Lebesgue integrable function on (0, 1), and assume that it can be written as a combination of the linearly independent generalized Lucas polynomials, i.e.,

Assume that u(t) can be approximated as

where

and

In order to approximate the successive derivatives of the vector \({\varvec{{\Psi }}}(t)\), first note that \(\displaystyle \frac{\mathrm{d}\,{\varvec{{\Psi }}}(t)}{\mathrm{d}\,t}\) can be expressed as

where \(G^{(1)}=\left( g^{(1)}_{ij}\right) \), is the \((M+1)\times (M+1)\) operational matrix of derivatives. With the aid of Theorem 2, the entries of this matrix are given explicitly as

Equation (25) enables one to express \(\displaystyle \frac{\mathrm{d}^\ell \,{\varvec{{\Psi }}}(t)}{\mathrm{d}\,t^\ell },\, \ell \ge 1\) as powers of the operational matrix \(G^{(1)}\). In fact, for all \(\ell \ge 1\), one has
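The relation between \(G^{(1)}\) and its powers can be checked on a small case. The sketch below assumes the recurrence \(\psi ^{a,b}_{i}(t)=a\,t\,\psi ^{a,b}_{i-1}(t)+b\,\psi ^{a,b}_{i-2}(t)\) and the candidate entries \(g^{(1)}_{ij}=i\,a\,(-b)^{\frac{i-j-1}{2}}\,\delta _j\) for \(i>j\) with \(i+j\) odd, and zero otherwise, where \(\delta _0=\frac{1}{2}\) and \(\delta _j=1\) for \(j\ge 1\). These entries are our reconstruction; the script verifies them exactly against termwise differentiation. All names are illustrative.

```python
from fractions import Fraction

def gen_lucas_coeffs(n, a, b):
    """Power-basis coefficients of psi_0..psi_n from the assumed recurrence."""
    a, b = Fraction(a), Fraction(b)
    polys = [[Fraction(2)], [Fraction(0), a]]
    for i in range(2, n + 1):
        cur = [Fraction(0)] * (i + 1)
        for k, c in enumerate(polys[i - 1]):
            cur[k + 1] += a * c
        for k, c in enumerate(polys[i - 2]):
            cur[k] += b * c
        polys.append(cur)
    return polys[: n + 1]

def derivative_matrix(M, a, b):
    """Candidate (M+1)x(M+1) operational matrix G with dPsi/dt = G Psi."""
    a, b = Fraction(a), Fraction(b)
    G = [[Fraction(0)] * (M + 1) for _ in range(M + 1)]
    for i in range(1, M + 1):
        for j in range(i):
            if (i + j) % 2 == 1:
                k = (i - j - 1) // 2
                delta = Fraction(1, 2) if j == 0 else Fraction(1)
                G[i][j] = i * a * (-b) ** k * delta
    return G

def matmul(A, B):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*B)] for row in A]

def diff_rows(C, M):
    """Differentiate each row of power coefficients termwise."""
    return [[(k + 1) * row[k + 1] for k in range(M)] + [Fraction(0)] for row in C]

M, a, b = 5, 2, -1
polys = gen_lucas_coeffs(M, a, b)
# Row i of C holds the power coefficients of psi_i, padded to length M+1.
C = [p + [Fraction(0)] * (M + 1 - len(p)) for p in polys]
G = derivative_matrix(M, a, b)
dC = diff_rows(C, M)
assert matmul(G, C) == dC                         # dPsi/dt = G Psi
assert matmul(matmul(G, G), C) == diff_rows(dC, M)  # d^2 Psi/dt^2 = G^2 Psi
print("operational matrix of derivatives verified")
```

The matrix is strictly lower triangular, so \(\left( G^{(1)}\right) ^{\ell }\) vanishes for \(\ell >M\), in agreement with the fact that the basis consists of polynomials of degree at most M.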

3.2 Operational matrix of fractional derivatives

We establish in this section an operational matrix of the fractional derivatives which generalizes the operational matrix of integer derivatives. The following theorem displays the fractional derivatives of the vector \({\varvec{{\Psi }}}(t)\), from which a new operational matrix of fractional derivatives can be obtained.

Theorem 3

If \({\varvec{{\Psi }}}(t)\) denotes the generalized Lucas polynomial vector defined in Eq. (24), then the following relation holds for all \(\alpha >0\)

where \(G^{(\alpha )}=(g^{\alpha }_{i,j})\) is a lower triangular matrix of order \((M+1)\times (M+1)\). This matrix is the operational matrix of fractional derivatives of order \(\alpha \) in the Caputo sense. The entries of this matrix can be written explicitly in the form

Moreover, the elements \(\left( g^{\alpha }_{i,j}\right) \) are given explicitly in the form

where

Proof

The application of the fractional differential operator \(D^{\alpha }\) to Eq. (9) together with relation (4) yields

which in turn with the aid of the inversion formula in (10) gives

where \( \theta _{\alpha }(i,j)\) is given in (29).

The last relation can be rewritten in the following vector form:

Moreover, we can write

Now, merging Eq. (32) with Eq. (33) yields the desired formula. \(\square \)
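The construction in the proof can be mirrored numerically: apply the Caputo power rule to the power form of each \(\psi ^{a,b}_{i}(t)\), then re-expand every monomial by an inversion formula. The sketch below assumes that the relation of Theorem 3 has the form \(D^{\alpha }{\varvec{{\Psi }}}(t)=t^{-\alpha }\,G^{(\alpha )}\,{\varvec{{\Psi }}}(t)\), together with the recurrence \(\psi ^{a,b}_{i}(t)=a\,t\,\psi ^{a,b}_{i-1}(t)+b\,\psi ^{a,b}_{i-2}(t)\) and the inversion coefficients \(a^{-\ell }(-b)^i\,\delta _{\ell -2i}\binom{\ell }{i}\). The entries are assembled by summation and are not taken from a closed form; all names are illustrative.

```python
import math

def gen_lucas_coeffs(n, a, b):
    """Power-basis coefficients (floats) of psi_0..psi_n, assumed recurrence."""
    polys = [[2.0], [0.0, float(a)]]
    for i in range(2, n + 1):
        cur = [0.0] * (i + 1)
        for k, c in enumerate(polys[i - 1]):
            cur[k + 1] += a * c
        for k, c in enumerate(polys[i - 2]):
            cur[k] += b * c
        polys.append(cur)
    return polys[: n + 1]

def inversion_coeffs(ell, a, b):
    """d_j with t^ell = sum_j d_j psi_j(t), assumed inversion formula."""
    d = [0.0] * (ell + 1)
    for i in range(ell // 2 + 1):
        j = ell - 2 * i
        delta = 0.5 if j == 0 else 1.0
        d[j] = (-b) ** i * delta * math.comb(ell, i) / a ** ell
    return d

def fractional_matrix(M, alpha, a, b):
    """G such that D^alpha Psi(t) = t^(-alpha) * G * Psi(t) (assumed form)."""
    polys = gen_lucas_coeffs(M, a, b)
    G = [[0.0] * (M + 1) for _ in range(M + 1)]
    for i in range(M + 1):
        for k in range(math.ceil(alpha), i + 1):
            factor = polys[i][k] * math.gamma(k + 1) / math.gamma(k + 1 - alpha)
            for j, dj in enumerate(inversion_coeffs(k, a, b)):
                G[i][j] += factor * dj
    return G

M, alpha, a, b, t = 4, 0.5, 1, 1, 0.6
polys = gen_lucas_coeffs(M, a, b)
psi = [sum(c * t ** k for k, c in enumerate(p)) for p in polys]
G = fractional_matrix(M, alpha, a, b)
for i in range(M + 1):
    # Direct termwise Caputo derivative of psi_i at t.
    direct = sum(c * math.gamma(k + 1) / math.gamma(k + 1 - alpha) * t ** (k - alpha)
                 for k, c in enumerate(polys[i]) if k >= math.ceil(alpha))
    via_matrix = t ** (-alpha) * sum(G[i][j] * psi[j] for j in range(M + 1))
    assert abs(direct - via_matrix) < 1e-10
print("fractional operational matrix verified at t =", t)
```

The matrix built this way is lower triangular, since \(t^k\) with \(k\le i\) only involves \(\psi ^{a,b}_{j}\) with \(j\le k\).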

4 Treatment of FDEs based on the introduced operational matrix

This section focuses on constructing two numerical algorithms for treating linear and nonlinear FDEs. For this purpose, the two spectral methods, namely tau and collocation methods, are utilized. To be more precise, we propose a generalized Lucas tau method (GLTM) for handling linear FDEs, while a generalized Lucas collocation method (GLCM) is proposed for handling nonlinear FDEs.

4.1 Handling linear FDEs

In this section, we are interested in solving the following linear fractional differential equation with variable coefficients

where

governed by the following initial conditions

where \(\lambda _i(t), \mu (t)\) and f(t) are known continuous functions. From (22), it can be assumed that u(t) has the following approximation

Thanks to Theorem 3, \(D^{\alpha _i}\,u(t)\) can be approximated as

With the aid of the approximations in (36) and (37), the residual of (34) can be calculated by the formula

As a result of tau method (see for example [48]), the following system of equations can be obtained

In addition, the initial conditions (35) give

Now, Eqs. (39) and (40) constitute a linear system of algebraic equations in the unknown expansion coefficients \(c_i\) of dimension \((M+1)\). The solution of this system can be obtained through employing any suitable numerical algorithm.
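The structure of the tau system can be illustrated on a small manufactured problem. The sketch below is not the general variable-coefficient equation treated above. It assumes the test problem \(D^{1/2}u(t)+u(t)=f(t),\ u(0)=0\), with f chosen so that \(u(t)=t^2\), the basis with \(a=b=1\) and \(M=2\), the recurrence \(\psi ^{a,b}_{i}(t)=a\,t\,\psi ^{a,b}_{i-1}(t)+b\,\psi ^{a,b}_{i-2}(t)\), and an unweighted inner product on (0, 1) for the tau conditions. All of these choices and all names are illustrative.

```python
import math

ALPHA, A, B, M = 0.5, 1.0, 1.0, 2   # illustrative choices, not from the source

def gen_lucas_coeffs(n, a, b):
    """Power-basis coefficients of psi_0..psi_n from the assumed recurrence."""
    polys = [[2.0], [0.0, a]]
    for i in range(2, n + 1):
        cur = [0.0] * (i + 1)
        for k, c in enumerate(polys[i - 1]):
            cur[k + 1] += a * c
        for k, c in enumerate(polys[i - 2]):
            cur[k] += b * c
        polys.append(cur)
    return polys[: n + 1]

def apply_operator(poly, alpha):
    """Terms (coeff, exponent) of D^alpha p + p for a power-basis polynomial p."""
    terms = [(c, float(k)) for k, c in enumerate(poly)]
    for k, c in enumerate(poly):
        if k >= math.ceil(alpha):
            terms.append((c * math.gamma(k + 1) / math.gamma(k + 1 - alpha), k - alpha))
    return terms

def inner(terms, poly):
    """Exact integral over (0, 1) of (sum of power terms) * polynomial."""
    return sum(c * q / (p + m + 1) for c, p in terms for m, q in enumerate(poly))

def solve(mat, rhs):
    """Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [r] for row, r in zip(mat, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x

psi = gen_lucas_coeffs(M, A, B)
# Right-hand side for the manufactured solution u = t^2.
f_terms = [(math.gamma(3) / math.gamma(3 - ALPHA), 2 - ALPHA), (1.0, 2.0)]

rows, rhs = [], []
for j in range(M):                        # M tau equations: <R, psi_j> = 0
    rows.append([inner(apply_operator(p, ALPHA), psi[j]) for p in psi])
    rhs.append(inner(f_terms, psi[j]))
rows.append([p[0] for p in psi])          # initial condition u(0) = 0
rhs.append(0.0)

c = solve(rows, rhs)
print(c)   # close to [-1, 0, 1], i.e. u(t) = psi_2(t) - psi_0(t) = t^2
```

Since the manufactured solution lies in the span of the basis, the residual vanishes identically and the computed coefficients agree with the exact ones up to rounding.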

4.2 Handling nonlinear FDEs

In this section, we are interested in solving the following nonlinear fractional-order differential equation:

where

governed by the following initial conditions

If \(u(t), D^{\alpha _i}\,u(t)\) are approximated as in Sect. 4.1, then the residual \(\tilde{R}(t)\) of Eq. (41) takes the form

The collocation method is based on enforcing the residual to vanish at certain interior points. There are several choices for these points. For example, they may be selected as: \(\left( \frac{i}{M+1}\right) ,\quad i=1,2,\ldots ,M-q,\) and therefore

Now, Eqs. (43) with (40) constitute a nonlinear system of equations in the unknown expansion coefficients \(c_i\) of dimension \((M+1)\), which may be solved via Newton’s iterative technique, and accordingly, the desired approximate solution can be obtained from (36).
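The collocation step combined with Newton's iteration can be sketched on a small manufactured problem. The sketch assumes the test problem \(D^{1/2}u(t)+u^2(t)=f(t),\ u(0)=0\), with f chosen so that \(u(t)=t^2\), the basis with \(a=b=1\) and \(M=2\), the recurrence \(\psi ^{a,b}_{i}(t)=a\,t\,\psi ^{a,b}_{i-1}(t)+b\,\psi ^{a,b}_{i-2}(t)\), the nodes \(\frac{i}{M+1},\ i=1,\ldots ,M\), a finite-difference Jacobian, and a zero initial guess. None of these choices is taken from the examples of this paper, and all names are illustrative.

```python
import math

ALPHA, A, B, M = 0.5, 1.0, 1.0, 2   # illustrative choices, not from the source

def gen_lucas_coeffs(n, a, b):
    """Power-basis coefficients of psi_0..psi_n from the assumed recurrence."""
    polys = [[2.0], [0.0, a]]
    for i in range(2, n + 1):
        cur = [0.0] * (i + 1)
        for k, c in enumerate(polys[i - 1]):
            cur[k + 1] += a * c
        for k, c in enumerate(polys[i - 2]):
            cur[k] += b * c
        polys.append(cur)
    return polys[: n + 1]

PSI = gen_lucas_coeffs(M, A, B)

def u_and_frac(c, t):
    """Return u(t) and D^ALPHA u(t) for u = sum_i c_i psi_i."""
    u = du = 0.0
    for ci, poly in zip(c, PSI):
        for k, q in enumerate(poly):
            u += ci * q * t ** k
            if k >= math.ceil(ALPHA):
                ratio = math.gamma(k + 1) / math.gamma(k + 1 - ALPHA)
                du += ci * q * ratio * t ** (k - ALPHA)
    return u, du

def f(t):
    # Manufactured so that u(t) = t^2 solves D^ALPHA u + u^2 = f.
    return math.gamma(3) / math.gamma(3 - ALPHA) * t ** (2 - ALPHA) + t ** 4

def residuals(c):
    eqs = [u_and_frac(c, 0.0)[0]]            # initial condition u(0) = 0
    for i in range(1, M + 1):                # collocation nodes i/(M+1)
        t = i / (M + 1)
        u, du = u_and_frac(c, t)
        eqs.append(du + u * u - f(t))
    return eqs

def solve(mat, rhs):
    """Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [r] for row, r in zip(mat, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            fac = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= fac * aug[col][k]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x

def newton(c, steps=25, h=1e-7):
    """Newton iteration with a forward-difference Jacobian."""
    n = len(c)
    for _ in range(steps):
        F = residuals(c)
        J = [[0.0] * n for _ in range(n)]
        for j in range(n):
            cp = c[:]
            cp[j] += h
            Fp = residuals(cp)
            for i in range(n):
                J[i][j] = (Fp[i] - F[i]) / h
        step = solve(J, [-v for v in F])
        c = [ci + si for ci, si in zip(c, step)]
    return c

c = newton([0.0] * (M + 1))
print(c)   # close to [-1, 0, 1], i.e. u(t) = psi_2(t) - psi_0(t) = t^2
```

A nonlinear system of this kind may have several roots, so in practice the computed root should be checked against the problem data, and a different initial guess may lead to a different root.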

5 Investigation of convergence and error analysis

In this section, we investigate carefully the convergence and error analysis of the proposed generalized Lucas expansion. In order to proceed in our study, the following lemmas are required.

Lemma 1

Let f(t) be an infinitely differentiable function at the origin. Then, it has the following generalized Lucas expansion

Proof

First, we expand f(t) as

Inserting the inversion formula (10) into (45) enables one to write

where \(\eta _{r,n}=\displaystyle \frac{(-1)^{\frac{n+r}{2}}\, \delta _{r}\, a^{-n}\, b^{\frac{n-r}{2}}\, n!}{(\frac{n-r}{2})!\, (\frac{n+r}{2})!}\).

Expanding the right-hand side of (46), and rearranging the similar terms, the following expansion is obtained

This immediately proves (44). \(\square \)

Lemma 2

[49] Let \(I_{\mu }(t)\) denote the modified Bessel function of order \(\mu \) of the first kind. The following identity is valid

Lemma 3

[50] The following inequality is satisfied by the modified Bessel function of the first kind \(I_{\mu }(t)\)

Lemma 4

For all \(t\in [0,1]\), the following inequality holds for generalized Lucas polynomials

Proof

The above inequality follows from the Binet’s formula along with the triangle inequality. \(\square \)

Now, we are in a position to state and prove the following two theorems concerning the convergence and error analysis of the proposed generalized Lucas expansion.

Theorem 4

If f(t) is defined on [0, 1] and \(|f^{(i)}(0)|\le L^i\), \(i\ge 0\), where L is a positive constant, and if f(t) has the expansion \(f(t)=\sum _{k=0}^{\infty }c_k\,\psi ^{a,b}_{k}(t)\), then one has:

1. \(|c_k|\le \displaystyle \frac{|a|^{-k}\,L^k\,\cosh (2\,|a|^{-1}\,b^{\frac{1}{2}}\,L)}{k!}\).

2. The series converges absolutely.

Proof

Lemma 1 implies that

and accordingly, and based on the assumption \(|f^{(i)}(0)|\le L^i,\ i\ge 0\), the following inequality holds

which in turn, after the application of Lemma 2 leads to the inequality

If we make use of the last inequality along with Lemma 3, then the following estimate for the expansion coefficients is obtained

The first part of Theorem 4 is now proved.

Now, we prove the second part of the theorem. Starting with the inequality in (52), we have

and therefore, the application of Lemma 4 yields

Since \(\sum \nolimits _{k=0}^{\infty }\left| \displaystyle \frac{|a|^{-k}\,L^k\,\,\left( a+\sqrt{a^2+b}\right) ^k}{k!} \right| =e^{|a^{-1}\,L\,\left( a+\sqrt{a^2+b}\right) |},\) the series converges absolutely. \(\square \)

Theorem 5

Let f(t) satisfy the assumptions stated in Theorem 4. Moreover, let \(e_M(t)=\sum \nolimits _{k=M+1}^{\infty }c_k\,\psi ^{a,b}_{k}(t),\) be the global error. The following inequality holds for \(|e_M(t)|\)

Proof

The first part of Theorem 4 enables one to write

and therefore, we have

where \(\Gamma (.)\) and \(\Gamma (.,.)\) are the so-called gamma and the incomplete gamma functions, respectively, (see [51]). The integral representations of gamma and incomplete gamma functions together with the inequality, \(e^{-t}<1,\ \forall \ t>0,\) lead to the inequality

\(\square \)

Remark 2

If we let \(s=1+\sqrt{1+a^{-2}\,b}\) and \(n=M+1\), we have now \(|e_{n-1}(t)|=\mathcal {O}(s^n/n!)\). From Stirling approximation of factorial function [52], we have

so, it is easy to see that \(|e_{n-1}(t)|=\mathcal {O}((s\,e)^n/n^{n+\frac{1}{2}}),\) which is a very rapid rate of convergence.
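The decay rate in Remark 2 can be tabulated directly. The sketch below compares \(s^n/n!\) with \((s\,e)^n/n^{n+\frac{1}{2}}\) for \(a=b=1\), so that \(s=1+\sqrt{2}\). By the Stirling approximation \(n!\approx \sqrt{2\pi n}\,(n/e)^n\), their ratio should approach \(1/\sqrt{2\pi }\). The function name `error_rate` and the sample values of n are illustrative.

```python
import math

def error_rate(n, a=1.0, b=1.0):
    """Return (s^n / n!, (s e)^n / n^(n + 1/2)) with s = 1 + sqrt(1 + b / a^2)."""
    s = 1.0 + math.sqrt(1.0 + b / a ** 2)
    exact = s ** n / math.factorial(n)
    stirling = (s * math.e) ** n / n ** (n + 0.5)
    return exact, stirling

for n in (5, 10, 20):
    exact, stirling = error_rate(n)
    # The last column tends to 1 / sqrt(2 * pi) as n grows.
    print(n, exact, stirling, exact / stirling)
```

The two quantities differ only by a bounded factor, which is why they describe the same order of convergence.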

6 Numerical examples

This section concentrates on presenting some numerical results accompanied with comparisons with some numerical results in literature in order to validate the efficiency, high accuracy and applicability of the two proposed algorithms. In the following tests, the error is evaluated in maximum norm namely,

Example 1

[53] Consider the following linear fractional initial value problem:

The exact solution of the above equation is \(u(t)=t^3\). If GLTM (generalized Lucas tau method) is applied with \(N=3\), then the residual of Eq. (54) is calculated by the formula

and the operational matrices \(G^{(2)}\) and \(G^{(\alpha )}\) are given explicitly as follows:

The application of GLTM yields the following two equations

Moreover, the initial conditions (55) yield

Equations (55) and (56) can be immediately solved to give

and consequently \(u(t)=t^3,\) which is the exact solution.

Note 1

It is worth noting here that the maximum pointwise errors obtained in [53] for \(N=512\) and \(\alpha =\frac{1}{2},\, \alpha =\frac{3}{4}\) are, respectively, \(1.8626\,\times 10^{-9}\) and \(1.8624\,\times 10^{-9}\), while our method yields the exact solution with \(N=3\). This demonstrates the advantage of our algorithm over the other algorithms.

Example 2

[53] Consider the following multi-term nonlinear higher-order nonhomogeneous initial value problem:

where \(0<\alpha <1\), with the exact smooth solution \(u(t)=t^3/3\). We apply the GLCM proposed in Sect. 4.2 for the case \(N=3\). The residual of Eq. (57) takes the form

and the operational matrix \(G^{(\alpha )}\) is given by:

The application of the collocation method yields the following real solution

and two complex conjugate solutions, which are rejected, and consequently \(u(t)=t^3/3\) which is the exact solution.

Note 2

It is worth noting here that the maximum pointwise error obtained in [53] for the case \(N=256\) is \(2.392\,\times 10^{-6}\), while we obtained the exact solution with \(N=3\). To the best of our knowledge, this is the first numerical algorithm that yields the exact solution for nonlinear fractional problems.

Example 3

Consider the following linear Riccati FDE:

The exact solution of (59) is \(u(x)=e^x,\) where \(\text {erf}(x)\) is the well-known error function, namely

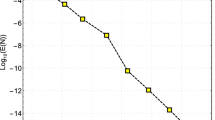

We apply GLTM. Table 1 lists the maximum pointwise error of Eq. (59) for different values of a and b. Figure 1 illustrates the absolute error for the case \(a=b=1\) and \(M=15\).

Fig. 1 Absolute error of Example 3

Example 4

[54] Consider the following nonlinear Riccati FDE:

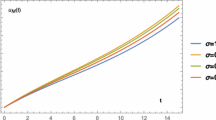

The exact solution of (60) in case \(\alpha =1\) is \(u(x)=\tanh x.\) GLCM is applied for the case \(a=b=1\). Table 2 compares our results with those obtained in [54]. Figure 2 indicates that the approximate solutions for various values of \(\alpha \) near the value 1 have a similar behavior.

Example 5

[55, 56] Consider the following linear fractional oscillator equation

subject to the initial conditions

The exact solution of (61) for \(q=2\) is \(u(t)=\sin (\omega \,t).\) In this example, since the exact solution is not available for \(q\in (1,2)\), we evaluate the stepwise error \(e_N=\max \nolimits _{t\in [0,1]}|u_N(t)-u_{N+1}(t)|\). We consider the following two cases:

Case 1: \(L=1\)

Fig. 2 Different solutions of Example 4

In Table 3, we compare GLTM for the case \(a=b=\omega =1\) with the Legendre tau spectral method (LTSM), where \(\tau \) denotes the computational time of each algorithm. In addition, in Table 4, we list the values of \(e_N\) for different values of q and N.

Case 2: \(L>1\)

We apply GLTM. In order to show the influence of the values of L on the accuracy of the resulting numerical solutions, we list in Table 5 the maximum pointwise errors for the case \(a=b=\omega =1, N=20\) and \(q=2\) for different values of L. In addition, we plot Figs. 3, 4 and 5 to display the behavior of the numerical solutions for the three cases \(L=1,5,25\) for different values of q. The results of these figures along with the results of Table 5 show that the accuracy of the numerical solutions decreases as the value of L increases.

Remark 3

It is worth mentioning here that the stepwise error defined in the above example is used for measuring the error when the exact solution of the FDE is unavailable. This definition is used in many articles, see for example [57].

Example 6

[58] Consider the following nonlinear fractional initial value problem:

whose exact solution is: \(u(x)=x^2.\) We apply GLCM for the case \(M=2\) to get

Fig. 3 Different solutions of Example 5, \(L=1\)

Fig. 4 Different solutions of Example 5, \(L=5\)

Fig. 5 Different solutions of Example 5, \(L=25\)

The expansion coefficients can be calculated by solving the nonlinear system:

The above nonlinear system can be solved exactly to give

and therefore

which is the exact solution.

Example 7

[59] Consider the following linear fractional boundary value problem:

where f(x) is chosen such that the exact solution of (64) is given by

We apply GLTM. Table 6 displays a comparison between the numerical scheme presented in [59] and GLTM for different values of M. The displayed results in this table ascertain that our approximations are closer to the exact one than those obtained by the method derived in [59] in almost all cases. This demonstrates that our method is advantageous if compared with the method developed in [59].

Remark 4

Aiming to illustrate the steps for the implementation of our two proposed algorithms, we add two algorithms. In Algorithm 1, we summarize the steps required for solving the nonlinear FDE in Example 4 by GLCM, while in Algorithm 2, we summarize the steps required for solving the linear FDE in Example 5, Case 1 by GLTM. Mathematica version 10 is employed for executing the required computations.

7 Conclusions

In this paper, the operational matrix of fractional derivatives of generalized Lucas polynomials is established. This operational matrix is novel, and it is fruitfully employed for handling multi-term linear and nonlinear fractional differential equations. Spectral solutions are obtained via the application of the collocation and tau methods. The convergence and error analysis are discussed using a new approach. Furthermore, the numerical results indicate that the proposed algorithms are efficient, applicable and easy to implement. We believe that the proposed algorithms can be applied to treat other kinds of fractional differential equations.

References

Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods in Fluid Dynamics. Springer, Berlin (1988)

Hesthaven, J., Gottlieb, S., Gottlieb, D.: Spectral Methods for Time-Dependent Problems. Cambridge University Press, Cambridge (2007)

Boyd, J.P.: Chebyshev and Fourier Spectral Methods. Courier Corporation, North Chelmsford (2001)

Trefethen, L.N.: Spectral Methods in MATLAB, vol. 10. SIAM, Philadelphia (2000)

Kopriva, D.A.: Implementing Spectral Methods for Partial Differential Equations: Algorithms for Scientists and Engineers. Springer, Berlin (2009)

Doha, E.H., Abd-Elhameed, W.M., Bassuony, M.A.: On using third and fourth kinds Chebyshev operational matrices for solving Lane–Emden type equations. Rom. J. Phys. 60, 281–292 (2015)

Bhrawy, A.H., Abdelkawy, M.A., Alzahrani, A.A., Baleanu, D., Alzahrani, E.O.: A Chebyshev-Laguerre-Gauss- Radau collocation scheme for solving a time fractional sub-diffusion equation on a semi-infinite domain. Proc. Rom. Acad. A 16, 490–498 (2015)

Doha, E.H., Abd-Elhameed, W.M.: On the coefficients of integrated expansions and integrals of Chebyshev polynomials of third and fourth kinds. Bull. Malays. Math. Sci. Soc.(2) 37(2), 383–398 (2014)

Abd-Elhameed, W.M., Doha, E.H., Youssri, Y.H.: Efficient spectral-Petrov-Galerkin methods for third- and fifth-order differential equations using general parameters generalized Jacobi polynomials. Quaest. Math. 36, 15–38 (2013)

Çenesiz, Y., Keskin, Y., Kurnaz, A.: The solution of the Bagley–Torvik equation with the generalized Taylor collocation method. J. Frankl. Inst. 347(2), 452–466 (2010)

Daftardar-Gejji, V., Jafari, H.: Solving a multi-order fractional differential equation using Adomian decomposition. Appl. Math. Comput. 189(1), 541–548 (2007)

Momani, S., Odibat, Z.: Numerical approach to differential equations of fractional order. J. Comput. Appl. Math. 207(1), 96–110 (2007)

Meerschaert, M., Tadjeran, C.: Finite difference approximations for two-sided space-fractional partial differential equations. Appl. Numer. Math. 56(1), 80–90 (2006)

Das, S.: Analytical solution of a fractional diffusion equation by variational iteration method. Comput. Math. Appl. 57(3), 483–487 (2009)

Das, D., Ray, P.C., Bera, R.K., Sarkar, P.: Solution of nonlinear fractional differential equation (NFDE) by homotopy analysis method. Int. J. Sci. Res. Edu. 3(3), 3084–3103 (2015)

Ghazanfari, B., Sepahvandzadeh, A.: Homotopy perturbation method for solving fractional Bratu-type equation. J. Math. Model. 2(2), 143–155 (2015)

Yang, Xiao-Jun, Baleanu, D., Khan, Y., Mohyud-Din, S.T.: Local fractional variational iteration method for diffusion and wave equations on Cantor sets. Rom. J. Phys. 59(1–2), 36–48 (2014)

Abd-Elhameed, W.M., Youssri, Y.H.: New spectral solutions of multi-term fractional order initial value problems with error analysis. CMES Comp. Model. Eng. 105, 375–398 (2015)

Abd-Elhameed, W.M., Youssri, Y.H.: New ultraspherical wavelets spectral solutions for fractional Riccati differential equations. Abs. Appl. Anal. (2014). doi:10.1155/2014/626275

Bhrawy, A.H., Zaky, M.A.: Numerical simulation for two-dimensional variable-order fractional nonlinear cable equation. Nonlinear Dyn. 80(1–2), 101–116 (2015)

Bhrawy, A.H., Taha, T.M., Machado, J.A.T.: A review of operational matrices and spectral techniques for fractional calculus. Nonlinear Dyn. 81(3), 1023–1052 (2015)

Yang, X.J., Machado, J.A.T., Srivastava, H.M.: A new numerical technique for solving the local fractional diffusion equation: two-dimensional extended differential transform approach. Appl. Math. Comput. 274, 143–151 (2016)

Moghaddam, B.P., Machado, J.A.T.: A stable three-level explicit spline finite difference scheme for a class of nonlinear time variable order fractional partial differential equations. Comput. Math. Appl. (2017). doi:10.1016/j.camwa.2016.07.010

Moghaddam, B.P., Yaghoobi, Sh, Machado, J.A.T.: An extended predictor–corrector algorithm for variable-order fractional delay differential equations. J. Comput. Nonlinear Dyn. 11(6), 061001 (2016). doi:10.1115/1.4032574

Wang, W., Wang, H.: Some results on convolved \((p, q)\)-Fibonacci polynomials. Integral Transforms Spec. Funct. 26(5), 340–356 (2015)

Gulec, H.H., Taskara, N., Uslu, K.: A new approach to generalized Fibonacci and Lucas numbers with binomial coefficients. Appl. Math. Comput. 220, 482–486 (2013)

Taskara, N., Uslu, K., Gulec, H.H.: On the properties of Lucas numbers with binomial coefficients. Appl. Math. Lett. 23(1), 68–72 (2010)

Koshy, T.: Fibonacci and Lucas numbers with applications. Wiley, New York (2011)

Koç, A.B., Çakmak, M., Kurnaz, A., Uslu, K.: A new Fibonacci type collocation procedure for boundary value problems. Adv. Differ. Equ. 2013(1), 1–11 (2013)

Mirzaee, F., Hoseini, S.: Application of Fibonacci collocation method for solving Volterra–Fredholm integral equations. Appl. Math. Comp. 273, 637–644 (2016)

Kürkçü, Ö.K., Aslan, E., Sezer, M.: A numerical approach with error estimation to solve general integro-differential–difference equations using Dickson polynomials. Appl. Math. Comput. 276, 324–339 (2016)

Abd-Elhameed, W.M.: On solving linear and nonlinear sixth-order two point boundary value problems via an elegant harmonic numbers operational matrix of derivatives. CMES Comput. Model. Eng. Sci. 101(3), 159–185 (2014)

Abd-Elhameed, W.M.: An elegant operational matrix based on harmonic numbers: effective solutions for linear and nonlinear fourth-order two point boundary value problems. Nonlinear Anal-Model. 21(4), 448–464 (2016)

Napoli, A., Abd-Elhameed, W.M.: An innovative harmonic numbers operational matrix method for solving initial value problems. Calcolo 54, 57–76 (2017)

Youssri, Y.H., Abd-Elhameed, W.M., Doha, E.H.: Ultraspherical wavelets method for solving Lane–Emden type equations. Rom. J. Phys. 60(9–10), 1298–1314 (2015)

Bhrawy, A.H., Doha, E.H., Machado, J.A.T., Ezz-Eldien, S.S.: An efficient numerical scheme for solving multi-dimensional fractional optimal control problems with a quadratic performance index. Asian J. Control 17, 2389–2402 (2015)

Bhrawy, A.H., Ezz-Eldien, S.S.: A new Legendre operational technique for delay fractional optimal control problems. Calcolo 53, 521–543 (2016)

Ezz-Eldien, S.S.: New quadrature approach based on operational matrix for solving a class of fractional variational problems. J. Comput. Phys. 317, 362–381 (2016)

Zaky, M.A., Ezz-Eldien, S.S., Doha, E.H., Machado, J.A.T., Bhrawy, A.H.: An efficient operational matrix technique for multidimensional variable-order time fractional diffusion equations. J. Comput. Nonlinear Dyn. 11(6), 061002 (2016)

Li, C., Qian, D., Chen, Y.Q.: On Riemann–Liouville and Caputo derivatives. Discret. Dyn. Nat. Soc. 2011, 1–15 (2011). doi:10.1155/2011/562494

Li, C.P., Zhao, Z.G.: Introduction to fractional integrability and differentiability. Eur. Phys. J. Spec. Top. 193(1), 5–26 (2011)

Li, C., Dao, X., Guo, P.: Fractional derivatives in complex planes. Nonlinear Anal. 71(5), 1857–1869 (2009)

Li, C., Deng, W.: Remarks on fractional derivatives. Appl. Math. Comput. 187(2), 777–784 (2007)

Ishteva, M.: Properties and applications of the Caputo fractional operator. MSc thesis, Department of Mathematics, Universität Karlsruhe (TH), Sofia, Bulgaria (2005)

Oldham, K.B.: The Fractional Calculus. Elsevier, Amsterdam (1974)

Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications. Academic Press, New York (1998)

Koepf, W.: Hypergeometric Summation, 2nd edn. Springer Universitext Series. http://www.hypergeometric-summation.org (2014)

Doha, E.H., Abd-Elhameed, W.M., Youssri, Y.H.: Second kind Chebyshev operational matrix algorithm for solving differential equations of Lane–Emden type. New Astron. 23, 113–117 (2013)

Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions: With Formulas, Graphs, and Mathematical Tables, vol. 55. Courier Corporation, North Chelmsford (1964)

Luke, Y.L.: Inequalities for generalized hypergeometric functions. J. Approx. Theory 5(1), 41–65 (1972)

Rainville, E.D.: Special Functions. Macmillan, New York (1960)

Dutka, J.: The early history of the factorial function. Arch. Hist. Exact Sci. 43(3), 225–249 (1991)

Shiralashetti, S.C., Deshi, A.B.: An efficient Haar wavelet collocation method for the numerical solution of multi-term fractional differential equations. Nonlinear Dyn. 83(1–2), 293–303 (2016)

Keshavarz, E., Ordokhani, Y., Razzaghi, M.: Bernoulli wavelet operational matrix of fractional order integration and its applications in solving the fractional order differential equations. Appl. Math. Model. 38(24), 6038–6051 (2014)

Blaszczyk, T., Ciesielski, M.: Fractional oscillator equation: analytical solution and algorithm for its approximate computation. J. Vib. Control 22(8), 2045–2052 (2016)

Abd-Elhameed, W.M., Youssri, Y.H.: Spectral solutions for fractional differential equations via a novel Lucas operational matrix of fractional derivatives. Rom. J. Phys. 61(5–6), 795–813 (2016)

Chen, Y., Ke, X., Wei, Y.: Numerical algorithm to solve system of nonlinear fractional differential equations based on wavelets method and the error analysis. Appl. Math. Comput. 251, 475–488 (2015)

Bhrawy, A.H., Zaky, M.A.: Shifted fractional-order Jacobi orthogonal functions: application to a system of fractional differential equations. Appl. Math. Model. 40(2), 832–845 (2016)

Irandoust-Pakchin, S., Lakestani, M., Kheiri, H.: Numerical approach for solving a class of nonlinear fractional differential equation. Bull. Iran. Math. Soc. 42(5), 1107–1126 (2016)

Acknowledgements

The authors would like to thank the three anonymous referees for critically reading the manuscript and also for their constructive comments, which helped substantially to improve the manuscript.

Cite this article

Abd-Elhameed, W.M., Youssri, Y.H. Generalized Lucas polynomial sequence approach for fractional differential equations. Nonlinear Dyn 89, 1341–1355 (2017). https://doi.org/10.1007/s11071-017-3519-9

DOI: https://doi.org/10.1007/s11071-017-3519-9