Abstract

In this paper, an efficient and accurate computational method based on the Legendre wavelets (LWs) together with the Galerkin method is proposed for solving a class of nonlinear stochastic Itô–Volterra integral equations. For this purpose, a new stochastic operational matrix (SOM) for the LWs is derived, and a collocation method based on hat functions (HFs) is employed to obtain a general procedure for forming this matrix. The LWs, their operational matrices of integration and stochastic Itô-integration, and some useful properties of these basis functions are used to transform such problems into nonlinear systems of algebraic equations, whose solution yields the approximate solution of the original problem. The efficiency of the proposed method is demonstrated on several concrete examples, and the results show that the method is accurate and efficient. Furthermore, as applications, the proposed method is used to obtain approximate solutions for some stochastic problems in mathematical finance, biology, physics and chemistry.


1 Introduction

Approximation by orthogonal families of basis functions has found wide applications in science and engineering [1]. The main idea of using an orthogonal basis is that the problem under consideration is reduced to a system of algebraic equations, whose solution yields the solution of the original problem. This is done by expanding the solution in a truncated series of orthogonal basis functions and using the operational matrices of these basis functions [1]. Depending on their structure, orthogonal functions may be classified into three main families [2]. The first family includes sets of piecewise constant orthogonal functions, such as the Walsh functions and block pulse functions. The second family consists of sets of orthogonal polynomials, such as the Laguerre, Legendre and Chebyshev polynomials, and the third family is the widely used set of sine-cosine functions. Approximating a continuous function with piecewise constant basis functions yields a piecewise constant approximation. Conversely, if a discontinuous function is approximated with continuous basis functions, the resulting approximation is continuous and cannot properly model the discontinuities. In remote sensing, for example, images often have properties that vary continuously in some regions and discontinuously in others. To approximate such spatially varying properties properly, one needs approximating functions that can accurately model both continuous and discontinuous phenomena, so neither continuous basis functions nor piecewise constant basis functions alone are adequate. Wavelet basis functions offer considerable advantages over these alternatives and allow us to attack problems that are not accessible with conventional numerical methods.
Their main advantages are [1]: the basis set can be improved in a systematic way, different resolutions can be used in different regions of space, the coupling between different resolution levels is easy, there are few topological constraints for increased resolution regions, the Laplace operator is diagonally dominant in an appropriate wavelet basis, the matrix elements of the Laplace operator are very easy to calculate and the numerical effort scales linearly with respect to the system size.

It is also well known that any smooth function can be approximated by the eigenfunctions of certain singular Sturm–Liouville problems, such as the Laguerre, Legendre or Chebyshev orthogonal polynomials. In this case, the truncation error approaches zero faster than any negative power of the number of basis functions used in the approximation [3]. This phenomenon is usually referred to as spectral accuracy [3]. However, when the function being approximated is not smooth, these basis functions do not work well and spectral accuracy is lost. In such situations, wavelet functions are more effective. It is worth mentioning that the LWs combine spectral accuracy, orthogonality and the other useful properties of wavelets.

Nonlinear stochastic functional equations have been studied extensively for a long time, since they are fundamental for modeling phenomena in science and engineering [4–8]. As computational power increases, it becomes feasible to use more accurate functional equation models and to solve more demanding problems. Moreover, the study of stochastic or random functional equations is very useful in applications, because such equations arise in many situations. For example, stochastic integral equations arise in a wide range of problems, such as the stochastic formulation of problems in reactor dynamics [9, 10], the study of the growth of biological populations [11], the theory of automatic systems resulting in delay-differential equations [12], and many other problems in biology, physics and engineering. There is also an increasing demand to investigate the behavior of ever more sophisticated dynamical systems in physical, medical, engineering and financial applications [13–19]. These systems often depend on a noise source, such as Gaussian white noise, governed by certain probability laws, so modeling such phenomena naturally involves various stochastic differential equations (SDEs) [11, 20–26] or, in more complicated cases, stochastic Volterra integral equations and stochastic integro-differential equations [27–34]. In most cases it is difficult to solve such problems explicitly, so it is necessary to obtain approximate solutions by numerical methods [9–15, 20, 29–31].

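In recent years, the LWs have been used to approximate the solutions of various types of functional equations; see, for instance, [1, 35–40]. In this paper, the LWs are used to solve the following nonlinear stochastic Itô–Volterra integral equation:

\[ X(t)=h(t)+\int _{0}^{t}f(s)\,\mu \left( X(s)\right) \mathrm{d}s+\int _{0}^{t}g(s)\,\sigma \left( X(s)\right) \mathrm{d}B(s),\qquad t\in [0,1], \tag{1} \]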

where X(t), f(t), g(t) and h(t) are stochastic processes defined on the same probability space \((\Omega ,{\mathcal {F}},\mathbf {P})\), X(t) is the unknown stochastic function to be found, B(t) is a Brownian motion process and the second integral in (1) is an Itô integral. Moreover, \(\mu \) and \(\sigma \) are assumed to be analytic functions.

It is worth mentioning that a real-valued stochastic process \(B(t),~t\in [0,1]\), is called Brownian motion if it satisfies the following properties [41]:

- (i) \(B(0)=0\) (with probability 1).

- (ii) For \(0 \le s < t \le 1\), the random variable given by the increment \(B(t)-B(s)\) is normally distributed with mean zero and variance \(t-s\); equivalently, \(B(t)-B(s)\sim \sqrt{t-s}~{\mathcal {N}}(0,1)\), where \({\mathcal {N}}(0,1)\) denotes a normally distributed random variable with zero mean and unit variance.

- (iii) For \(0\le s< t < u < v \le 1\), the increments \(B(t)-B(s)\) and \(B(v)-B(u)\) are independent.

In order to compute an approximate solution of Eq. (1), we first obtain some new useful properties of the LWs and then derive an operational matrix of stochastic Itô-integration for these basis functions, which eliminates the stochastic integral operation and reduces the problem to a system of algebraic equations. The operational matrix of stochastic Itô-integration for the LWs can be expressed as:

\[ \int _{0}^{t}\Psi (s)\,\mathrm{d}B(s)\simeq P_{s}\Psi (t), \tag{2} \]

where B(t) is a Brownian motion process and \(\Psi (t)=[\psi _{1}(t),\psi _{2}(t),\ldots ,\psi _{\hat{m}}(t)]^{T}\), in which \(\psi _{i}(t)\,(i=1,2,\ldots ,\hat{m})\) are LWs and \(P_{s}\) is the operational matrix of stochastic Itô-integration for LWs.

The proposed method reduces the problem under study to a system of nonlinear algebraic equations by expanding the solution in terms of LWs with unknown coefficients and using the operational matrices of integration and stochastic integration. Moreover, a new technique for computing the nonlinear terms in such equations is presented.

This paper is organized as follows: In Sect. 2, the LWs and their properties are described. In Sect. 3, the proposed method is described for solving nonlinear stochastic Itô–Volterra integral equations. In Sect. 4, the proposed method is applied for solving some numerical examples. In Sect. 5, some applications of the proposed computational method are described. Finally, a conclusion is drawn in Sect. 6.

2 The LWs and their properties

In this section, we briefly review the LWs and their properties which are used further in this paper.

2.1 Wavelets and the LWs

Wavelets constitute a family of functions constructed from dilation and translation of a single function \(\psi (t)\) called the mother wavelet. When the dilation parameter a and the translation parameter b vary continuously, we obtain the following family of continuous wavelets [35]:

\[ \psi _{a,b}(t)=|a|^{-\frac{1}{2}}\,\psi \left( \frac{t-b}{a}\right) ,\qquad a,b\in {\mathbb {R}},~a\ne 0. \tag{3} \]

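If we restrict the parameters a and b to the discrete values \(a=a_{0}^{-k}\), \(b=nb_{0}a_{0}^{-k}\), where \(a_{0}>1\), \(b_{0}>0\), we obtain the following family of discrete wavelets:

\[ \psi _{kn}(t)=|a_{0}|^{\frac{k}{2}}\,\psi \left( a_{0}^{k}t-nb_{0}\right) ,\qquad k,n\in {\mathbb {Z}}, \tag{4} \]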

where, for a suitably chosen mother wavelet, the functions \(\psi _{kn}(t)\) form a wavelet basis for \(L^{2}({\mathbb {R}})\). In particular, when \(a_{0}=2\) and \(b_{0}=1\), the functions \(\psi _{kn}(t)\) can be chosen to form an orthonormal basis.

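The LWs \(\psi _{nm}(t)=\psi (k,n,m,t)\) have four arguments: \(n=1,2,\ldots ,2^{k}\), where k is an arbitrary non-negative integer; \(m=0,1,\ldots ,M-1\), the degree of the Legendre polynomials; and the independent variable t, defined on [0, 1]. They are defined on the interval [0, 1] by [1]:

\[ \psi _{nm}(t)={\left\{ \begin{array}{ll} 2^{\frac{k}{2}}\sqrt{2m+1}\,P_{m}\left( 2^{k+1}t-2n+1\right) , & \frac{n-1}{2^{k}}\le t<\frac{n}{2^{k}},\\ 0, & \text{otherwise}, \end{array}\right. } \tag{5} \]

where, for \(n=2^{k}\), the right endpoint t = 1 is included, so that the LWs are defined on the whole interval [0, 1].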

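Here \(P_{m}(t)\) are the well-known Legendre polynomials of degree m, which are orthogonal with respect to the weight function \(w(t)=1\) on the interval \([-1,1]\) and satisfy the following recursive relation [3]:

\[ P_{0}(t)=1,\quad P_{1}(t)=t,\quad P_{m+1}(t)=\frac{2m+1}{m+1}\,t\,P_{m}(t)-\frac{m}{m+1}\,P_{m-1}(t),\qquad m=1,2,3,\ldots \tag{6} \]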

The set of the LWs is an orthogonal set with respect to the weight function \(w(t)=1\).
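The definition (5) and the recursion (6) translate directly into code. The following Python/NumPy sketch is an illustration under our own conventions, not the authors' implementation: the function names `lw_vector` and `lw_gram` are hypothetical, and the point t = 1 is assigned to the last subinterval. It evaluates \(\Psi (t)\) with the ordering \(i=M(n-1)+m+1\) used in Eq. (10) and checks orthonormality with Gauss–Legendre quadrature on each subinterval.

```python
import numpy as np


def lw_vector(t, k, M):
    """Evaluate Psi(t) = [psi_1(t), ..., psi_mh(t)]^T, mh = 2**k * M, for t in [0, 1].

    Entry M*(n-1) + m (0-based) holds psi_{nm}(t), i.e. i = M(n-1) + m + 1.
    Assumption: the point t = 1 is assigned to the last subinterval n = 2**k.
    """
    n = min(int(t * 2**k) + 1, 2**k)        # t lies in [(n-1)/2^k, n/2^k)
    x = 2 ** (k + 1) * t - 2 * n + 1         # affine map of that subinterval onto [-1, 1]
    P = [1.0, x]                             # P_0(x) and P_1(x)
    for m in range(1, M - 1):                # three-term recursion (6)
        P.append(((2 * m + 1) * x * P[m] - m * P[m - 1]) / (m + 1))
    psi = np.zeros(2**k * M)
    for m in range(M):
        psi[M * (n - 1) + m] = 2 ** (k / 2) * np.sqrt(2 * m + 1) * P[m]
    return psi


def lw_gram(k, M):
    """Gram matrix (psi_i, psi_j) computed with Gauss-Legendre quadrature per subinterval."""
    nodes, weights = np.polynomial.legendre.leggauss(M + 1)   # exact up to degree 2M + 1
    G = np.zeros((2**k * M, 2**k * M))
    for n in range(1, 2**k + 1):
        a = (n - 1) / 2**k
        for x, w in zip(nodes, weights):
            v = lw_vector(a + (x + 1) / 2 ** (k + 1), k, M)   # quadrature node in subinterval n
            G += (w / 2 ** (k + 1)) * np.outer(v, v)          # dt = dx / 2^(k+1)
    return G


print(np.allclose(lw_gram(2, 3), np.eye(12)))   # orthonormality check; prints True
```

With the normalization \(2^{k/2}\sqrt{2m+1}\), the Gram matrix is the identity, so the LWs are in fact orthonormal, which is what makes the coefficients in Eq. (8) plain inner products.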

2.2 Function approximation

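A function f(t) defined over [0, 1] may be expanded by the LWs as:

\[ f(t)=\sum _{n=1}^{\infty }\sum _{m=0}^{\infty }c_{nm}\psi _{nm}(t), \tag{7} \]

where

\[ c_{nm}=\left( f(t),\psi _{nm}(t)\right) , \tag{8} \]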

and (., .) denotes the inner product in \(L^{2}[0,1]\).

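By truncating the infinite series in Eq. (7), we can approximate f(t) as follows:

\[ f(t)\simeq \sum _{n=1}^{2^{k}}\sum _{m=0}^{M-1}c_{nm}\psi _{nm}(t)=C^{T}\Psi (t), \tag{9} \]

where T denotes transposition, and C and \(\Psi (t)\) are column vectors of length \(\hat{m}=2^{k}M\).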

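For simplicity, Eq. (9) can also be written as:

\[ f(t)\simeq \sum _{i=1}^{\hat{m}}c_{i}\psi _{i}(t), \tag{10} \]

where \(c_{i}=c_{nm}\) and \(\psi _{i}(t)=\psi _{nm}(t)\), and the index i is determined by the relation \(i=M(n-1)+m+1\).

Thus we have:

\[ \Psi (t)=[\psi _{1}(t),\psi _{2}(t),\ldots ,\psi _{\hat{m}}(t)]^{T}, \tag{11} \]

and

\[ C=[c_{1},c_{2},\ldots ,c_{\hat{m}}]^{T}. \tag{12} \]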

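By substituting the collocation points

\[ t_{i}=\frac{i}{\hat{m}-1},\qquad i=0,1,\ldots ,\hat{m}-1, \]

into Eq. (11), we define the LWs matrix \(\Phi _{\hat{m}\times \hat{m}}\) as:

\[ \Phi _{\hat{m}\times \hat{m}}=\left[ \Psi (t_{0}),\Psi (t_{1}),\ldots ,\Psi (t_{\hat{m}-1})\right] . \tag{13} \]

For example, for \(k=1,\,M=3\), we have:

\[ \Phi _{6\times 6}=\begin{pmatrix} \sqrt{2} & \sqrt{2} & \sqrt{2} & 0 & 0 & 0\\ -\sqrt{6} & -\frac{\sqrt{6}}{5} & \frac{3\sqrt{6}}{5} & 0 & 0 & 0\\ \sqrt{10} & -\frac{11\sqrt{10}}{25} & \frac{\sqrt{10}}{25} & 0 & 0 & 0\\ 0 & 0 & 0 & \sqrt{2} & \sqrt{2} & \sqrt{2}\\ 0 & 0 & 0 & -\frac{3\sqrt{6}}{5} & \frac{\sqrt{6}}{5} & \sqrt{6}\\ 0 & 0 & 0 & \frac{\sqrt{10}}{25} & -\frac{11\sqrt{10}}{25} & \sqrt{10} \end{pmatrix}. \]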
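The LWs matrix can also be generated numerically. In the sketch below (Python/NumPy; `lw_vector` and `lw_matrix` are hypothetical helper names, and t = 1 is assigned to the last subinterval), \(\Phi _{\hat{m}\times \hat{m}}\) is assembled column by column from the collocation points \(t_{i}=i/(\hat{m}-1)\) for k = 1, M = 3, and its condition number is printed, since the method needs \(\Phi _{\hat{m}\times \hat{m}}\) to be invertible.

```python
import numpy as np


def lw_vector(t, k, M):
    """Psi(t) for the LWs of Eq. (5); t = 1 is assigned to the last subinterval (assumption)."""
    n = min(int(t * 2**k) + 1, 2**k)
    x = 2 ** (k + 1) * t - 2 * n + 1
    P = [1.0, x]
    for m in range(1, M - 1):
        P.append(((2 * m + 1) * x * P[m] - m * P[m - 1]) / (m + 1))
    psi = np.zeros(2**k * M)
    for m in range(M):
        psi[M * (n - 1) + m] = 2 ** (k / 2) * np.sqrt(2 * m + 1) * P[m]
    return psi


def lw_matrix(k, M):
    """Phi = [Psi(t_0), ..., Psi(t_{mh-1})] with collocation points t_i = i/(mh-1)."""
    mh = 2**k * M
    t = np.linspace(0.0, 1.0, mh)
    return np.column_stack([lw_vector(ti, k, M) for ti in t])


Phi = lw_matrix(1, 3)
np.set_printoptions(precision=4, suppress=True)
print(Phi)
print("condition number:", np.linalg.cond(Phi))
```

Each subinterval \([(n-1)/2^{k},n/2^{k})\) contains exactly M of the collocation points, so \(\Phi _{\hat{m}\times \hat{m}}\) is block diagonal with invertible Legendre–Vandermonde blocks.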

2.3 Operational matrix of stochastic Itô-integration

The stochastic Itô-integration of the vector \(\Psi (t)\), defined in Eq. (11), may be expressed as:

\[ \int _{0}^{t}\Psi (s)\,\mathrm{d}B(s)\simeq P_{s}\Psi (t), \tag{14} \]

where \(P_{s}\) is the \(\hat{m}\times \hat{m}\) stochastic operational matrix (SOM) for the LWs.

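In the sequel, we give an explicit form of the matrix \(P_{s}\). To this end, we need to introduce another family of basis functions, namely hat functions (HFs). An \(\hat{m}\)-set of these basis functions is defined on the interval [0, 1] as [42–44]:

\[ \phi _{0}(t)={\left\{ \begin{array}{ll} \frac{h-t}{h}, & 0\le t\le h,\\ 0, & \text{otherwise}, \end{array}\right. } \]

\[ \phi _{i}(t)={\left\{ \begin{array}{ll} \frac{t-(i-1)h}{h}, & (i-1)h\le t\le ih,\\ \frac{(i+1)h-t}{h}, & ih\le t\le (i+1)h,\\ 0, & \text{otherwise}, \end{array}\right. }\qquad i=1,2,\ldots ,\hat{m}-2, \]

and

\[ \phi _{\hat{m}-1}(t)={\left\{ \begin{array}{ll} \frac{t-(1-h)}{h}, & 1-h\le t\le 1,\\ 0, & \text{otherwise}, \end{array}\right. } \]

where \(h=\frac{1}{\hat{m}-1}\).

From the definition of the HFs, we have:

\[ \phi _{i}(jh)={\left\{ \begin{array}{ll} 1, & i=j,\\ 0, & i\ne j, \end{array}\right. }\qquad \sum _{i=0}^{\hat{m}-1}\phi _{i}(t)=1,\quad t\in [0,1]. \]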

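An arbitrary function X(t) defined over [0, 1] may be expanded by the HFs as:

\[ X(t)\simeq \sum _{i=0}^{\hat{m}-1}x_{i}\phi _{i}(t)=X^{T}\Phi (t), \tag{19} \]

where

\[ X=[x_{0},x_{1},\ldots ,x_{\hat{m}-1}]^{T}, \tag{20} \]

and

\[ \Phi (t)=[\phi _{0}(t),\phi _{1}(t),\ldots ,\phi _{\hat{m}-1}(t)]^{T}. \tag{21} \]

The main advantage of using the HFs to approximate a function X(t) is that the coefficients \(x_{i}\) in Eq. (19) are simply the values of X(t) at the nodes:

\[ x_{i}=X(ih),\qquad i=0,1,\ldots ,\hat{m}-1. \tag{22} \]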
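The HFs and the interpolation property (22) can be checked numerically with the following Python/NumPy sketch (the name `hat_vector` is ours). It writes each \(\phi _{i}\) as a tent of half-width h centred at ih, clipped to [0, 1], which reproduces the three cases of the definition, and verifies the Kronecker-delta and partition-of-unity properties and the fact that \(X^{T}\Phi (t)\) with \(x_{i}=X(ih)\) is the piecewise-linear interpolant of X.

```python
import numpy as np


def hat_vector(t, mh):
    """Phi(t) = [phi_0(t), ..., phi_{mh-1}(t)] for the HFs with h = 1/(mh-1)."""
    h = 1.0 / (mh - 1)
    i = np.arange(mh)
    return np.maximum(0.0, 1.0 - np.abs(t - i * h) / h)   # tent centred at ih, width 2h


mh = 7
h = 1.0 / (mh - 1)
nodes = np.arange(mh) * h

# Kronecker property: phi_i(jh) = delta_ij, so the matrix of nodal values is the identity
delta = np.array([hat_vector(tj, mh) for tj in nodes])

# Partition of unity: sum_i phi_i(t) = 1 on [0, 1]
t_test = np.linspace(0.0, 1.0, 101)
sums = np.array([hat_vector(t, mh).sum() for t in t_test])

# Coefficients x_i = X(ih): X^T Phi(t) is the piecewise-linear interpolant of X
X = np.sin(np.pi * nodes)
approx = np.array([X @ hat_vector(t, mh) for t in t_test])
print(np.max(np.abs(approx - np.sin(np.pi * t_test))))   # O(h^2) interpolation error
```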

Theorem 2.1

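Let \(\Psi (t)\) be the LWs vector defined in Eq. (11). Then the stochastic Itô-integration of the vector \(\Psi (t)\) can be expressed as follows:

\[ \int _{0}^{t}\Psi (s)\,\mathrm{d}B(s)\simeq \Phi _{\hat{m}\times \hat{m}}\hat{P}_{s}\Phi _{\hat{m}\times \hat{m}}^{-1}\Psi (t)=P_{s}\Psi (t), \tag{23} \]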

where \(\Phi _{\hat{m}\times \hat{m}}\) is the LWs matrix defined in Eq. (13) and \(\hat{P}_{s}\) is the operational matrix of stochastic Itô-integration for the HFs, that is,

\[ \int _{0}^{t}\Phi (s)\,\mathrm{d}B(s)\simeq \hat{P}_{s}\Phi (t), \tag{24} \]

which is given in [44] by:

where

and

Proof

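By considering Eqs. (19) and (22), it can easily be seen that the LWs can be expanded in terms of an \(\hat{m}\)-set of HFs as:

\[ \Psi (t)\simeq \Phi _{\hat{m}\times \hat{m}}\Phi (t). \tag{27} \]

Now, by considering Eq. (14) and using Eqs. (27) and (24), we obtain:

\[ \int _{0}^{t}\Psi (s)\,\mathrm{d}B(s)\simeq \Phi _{\hat{m}\times \hat{m}}\int _{0}^{t}\Phi (s)\,\mathrm{d}B(s)\simeq \Phi _{\hat{m}\times \hat{m}}\hat{P}_{s}\Phi (t). \tag{28} \]

Also, from Eqs. (14) and (28), we have:

\[ P_{s}\Psi (t)\simeq \Phi _{\hat{m}\times \hat{m}}\hat{P}_{s}\Phi (t). \tag{29} \]

Then, by substituting Eq. (27) into the left-hand side of Eq. (29), i.e. \(P_{s}\Phi _{\hat{m}\times \hat{m}}\Phi (t)\simeq \Phi _{\hat{m}\times \hat{m}}\hat{P}_{s}\Phi (t)\), we obtain the LWs operational matrix of stochastic Itô-integration \(P_{s}\) as:

\[ P_{s}=\Phi _{\hat{m}\times \hat{m}}\hat{P}_{s}\Phi _{\hat{m}\times \hat{m}}^{-1}, \tag{30} \]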

which completes the proof. \(\square \)
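The closed form of \(\hat{P}_{s}\) from [44] is not reproduced in this sketch. As a hypothetical numerical stand-in, it uses the fact that, by Eq. (22), the (i, j) entry of \(\hat{P}_{s}\) is the value at t = jh of \(\int _{0}^{t}\phi _{i}(s)\,\mathrm{d}B(s)\), approximates these Itô integrals by left-point sums on a fine simulated Brownian path, and then forms \(P_{s}\) as in Theorem 2.1. The helper names, the fine-grid size `sub` and the random seed are assumptions.

```python
import numpy as np


def lw_vector(t, k, M):
    """Psi(t) for the LWs of Eq. (5); t = 1 is put in the last subinterval (assumption)."""
    n = min(int(t * 2**k) + 1, 2**k)
    x = 2 ** (k + 1) * t - 2 * n + 1
    P = [1.0, x]
    for m in range(1, M - 1):
        P.append(((2 * m + 1) * x * P[m] - m * P[m - 1]) / (m + 1))
    psi = np.zeros(2**k * M)
    for m in range(M):
        psi[M * (n - 1) + m] = 2 ** (k / 2) * np.sqrt(2 * m + 1) * P[m]
    return psi


def hat_som(mh, sub=200, seed=0):
    """Stand-in for the HF SOM: entry (i, j) ~ int_0^{jh} phi_i(s) dB(s).

    The Ito integrals are approximated by left-point sums on a fine simulated
    Brownian path with `sub` steps per node spacing h. Also returns B at the nodes.
    """
    rng = np.random.default_rng(seed)
    h = 1.0 / (mh - 1)
    steps = sub * (mh - 1)
    dt = 1.0 / steps
    dB = rng.normal(0.0, np.sqrt(dt), steps)            # independent N(0, dt) increments
    s = np.arange(steps) * dt                           # left endpoints of the fine steps
    hats = np.maximum(0.0, 1.0 - np.abs(s[None, :] - np.arange(mh)[:, None] * h) / h)
    ito = np.hstack([np.zeros((mh, 1)), np.cumsum(hats * dB, axis=1)])
    B = np.concatenate([[0.0], np.cumsum(dB)])
    idx = np.arange(mh) * sub                           # fine-grid index of node jh
    return ito[:, idx], B[idx]


k, M = 1, 3
mh = 2**k * M
Phi = np.column_stack([lw_vector(t, k, M) for t in np.linspace(0.0, 1.0, mh)])
Ps_hat, B_nodes = hat_som(mh)
Ps = Phi @ Ps_hat @ np.linalg.inv(Phi)                  # Theorem 2.1: P_s = Phi Ps_hat Phi^-1

# Consistency check with int_0^t 1 dB(s) = B(t): the LW coefficients e of the unit
# function satisfy e^T Phi = [1, ..., 1], and e^T P_s Phi gives nodal values.
e = np.ones(mh) @ np.linalg.inv(Phi)
print(np.max(np.abs(e @ Ps @ Phi - B_nodes)))          # round-off level
```

Because the HFs sum to one, the column sums of the stand-in \(\hat{P}_{s}\) reproduce the sampled Brownian path at the nodes, and this property carries over to \(P_{s}\) through the similarity transformation.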

2.4 Operational matrix of integration

The integration of the vector \(\Psi (t)\), defined in Eq. (11), can be expressed as:

\[ \int _{0}^{t}\Psi (s)\,\mathrm{d}s\simeq P\Psi (t), \tag{31} \]

where the \(\hat{m}\times \hat{m}\) matrix P is called the operational matrix of integration for the LWs.

Remark 1

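Following the proof of Theorem 2.1, we can approximate the matrix P as:

\[ P\simeq \Phi _{\hat{m}\times \hat{m}}\hat{P}\Phi _{\hat{m}\times \hat{m}}^{-1}, \tag{32} \]

where the \(\hat{m}\times \hat{m}\) matrix \(\hat{P}\) is called the operational matrix of integration for the HFs and is given in [43] as follows:

\[ \hat{P}=\frac{h}{2}\begin{pmatrix} 0 & 1 & 1 & 1 & \cdots & 1\\ 0 & 1 & 2 & 2 & \cdots & 2\\ 0 & 0 & 1 & 2 & \cdots & 2\\ \vdots & \vdots & \vdots & \ddots & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & 1 & 2\\ 0 & 0 & 0 & \cdots & 0 & 1 \end{pmatrix}. \tag{33} \]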
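Remark 1 can be illustrated in the same way. The sketch below (Python/NumPy, hypothetical helper names) builds \(\hat{P}\) entry by entry from \((\hat{P})_{ij}=\int _{0}^{jh}\phi _{i}(s)\,\mathrm{d}s\), which gives the HF operational matrix of integration, forms P by Eq. (32), and integrates X(t) = t. Because \(X^{T}\hat{P}\) amounts to the trapezoidal rule on the nodes, the nodal values of \(\int _{0}^{t}s\,\mathrm{d}s=t^{2}/2\) are reproduced exactly.

```python
import numpy as np


def lw_vector(t, k, M):
    """Psi(t) for the LWs of Eq. (5); t = 1 is put in the last subinterval (assumption)."""
    n = min(int(t * 2**k) + 1, 2**k)
    x = 2 ** (k + 1) * t - 2 * n + 1
    P = [1.0, x]
    for m in range(1, M - 1):
        P.append(((2 * m + 1) * x * P[m] - m * P[m - 1]) / (m + 1))
    psi = np.zeros(2**k * M)
    for m in range(M):
        psi[M * (n - 1) + m] = 2 ** (k / 2) * np.sqrt(2 * m + 1) * P[m]
    return psi


def hat_int_matrix(mh):
    """HF operational matrix of integration: entry (i, j) = int_0^{jh} phi_i(s) ds."""
    h = 1.0 / (mh - 1)
    Ph = np.zeros((mh, mh))
    Ph[0, 1:] = h / 2                  # phi_0 is a half hat of area h/2
    for i in range(1, mh):
        Ph[i, i] = h / 2               # rising half of phi_i is covered at t = ih
        Ph[i, i + 1:] = h              # full hat area (empty slice for the last HF)
    return Ph


k, M = 1, 3
mh = 2**k * M
t = np.linspace(0.0, 1.0, mh)
Phi = np.column_stack([lw_vector(ti, k, M) for ti in t])
P = Phi @ hat_int_matrix(mh) @ np.linalg.inv(Phi)      # Eq. (32)

X = t @ np.linalg.inv(Phi)          # LW coefficients of X(t) = t (exact, since M >= 2)
print(X @ P @ Phi)                  # nodal values of the approximation of int_0^t s ds
print(t**2 / 2)                     # exact values
```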

2.5 Some new useful results for the LWs

In this section, we obtain some new useful results for the LWs which will be used further in this paper.

Lemma 2.2

Let \(X^{T}\Phi (t)\) and \(Y^{T}\Phi (t)\) be the expansions of X(t) and Y(t) by the HFs, respectively. Then we have:

\[ X(t)Y(t)\simeq H^{T}\Phi (t), \tag{34} \]

where \(H=X\odot Y\) denotes the pointwise (Hadamard) product of X and Y; for any two matrices A and B of the same dimensions, \(A\odot B\) is the matrix of the same dimensions with elements \(\left( A\odot B\right) _{ij}=\left( A\right) _{ij}\left( B\right) _{ij}\).

Proof

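By considering Eqs. (19) and (22), we have:

\[ X(t)Y(t)\simeq \sum _{i=0}^{\hat{m}-1}X(ih)Y(ih)\,\phi _{i}(t)=\sum _{i=0}^{\hat{m}-1}x_{i}y_{i}\,\phi _{i}(t), \]

and

\[ \sum _{i=0}^{\hat{m}-1}x_{i}y_{i}\,\phi _{i}(t)=\left( X\odot Y\right) ^{T}\Phi (t)=H^{T}\Phi (t), \]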

which completes the proof. \(\square \)

Corollary 2.3

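Let \(X^{T}\Phi (t)\) be the expansion of X(t) by the HFs. Then, for any integer \(q\ge 2\), we have:

\[ \left( X(t)\right) ^{q}\simeq \left[ x_{0}^{q},x_{1}^{q},\ldots ,x_{\hat{m}-1}^{q}\right] \Phi (t). \tag{35} \]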

Proof

By considering Lemma 2.2, the proof will be straightforward. \(\square \)

Theorem 2.4

[45] Let F be an analytic function and let \(X^{T}\Phi (t)\) be the expansion of X(t) by the generalized hat basis functions. Then we have:

\[ F\left( X(t)\right) \simeq F\left( X^{T}\right) \Phi (t), \tag{36} \]

where \(F\left( X^{T}\right) =[F(x_{0}),F(x_{1}),\ldots ,F(x_{\hat{m}-1})]\).

Theorem 2.5

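Let F be an analytic function and let \(X^{T}\Psi (t)\) be the expansion of X(t) by the LWs. Then we have:

\[ F\left( X(t)\right) \simeq F\left( \widetilde{X}^{T}\right) \Phi _{\hat{m}\times \hat{m}}^{-1}\Psi (t), \tag{37} \]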

where \(\widetilde{X}^{T}=X^{T}\Phi _{\hat{m}\times \hat{m}}\).

Proof

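By considering Eq. (27), we have \(X(t)\simeq X^{T}\Psi (t)\simeq X^{T}\Phi _{\hat{m}\times \hat{m}}\Phi (t)=\widetilde{X}^{T}\Phi (t)\), so Theorem 2.4 gives:

\[ F\left( X(t)\right) \simeq F\left( \widetilde{X}^{T}\right) \Phi (t). \tag{38} \]

So, from Eqs. (27) and (38), we have:

\[ F\left( X(t)\right) \simeq F\left( \widetilde{X}^{T}\right) \Phi _{\hat{m}\times \hat{m}}^{-1}\Psi (t), \tag{39} \]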

which completes the proof. \(\square \)

Corollary 2.6

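Let \(X^{T}\Psi (t)\) and \(Y^{T}\Psi (t)\) be the expansions of X(t) and Y(t) by the LWs, respectively, and let F and G be two analytic functions. Then we have:

\[ F\left( X(t)\right) G\left( Y(t)\right) \simeq \left( F\left( \widetilde{X}^{T}\right) \odot G\left( \widetilde{Y}^{T}\right) \right) \Phi _{\hat{m}\times \hat{m}}^{-1}\Psi (t), \tag{40} \]

where \(\widetilde{X}^{T}=X^{T}\Phi _{\hat{m}\times \hat{m}}\) and \(\widetilde{Y}^{T}=Y^{T}\Phi _{\hat{m}\times \hat{m}}\).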

Proof

By considering Theorem 2.5, Eq. (27) and Lemma 2.2, the proof will be straightforward. \(\square \)
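Theorem 2.5 and Corollary 2.6 say that nonlinear terms are handled by evaluating at the collocation points and mapping back with \(\Phi _{\hat{m}\times \hat{m}}^{-1}\). The following Python/NumPy sketch applies this to F = exp and to F(X)G(Y) with \(G(y)=y^{2}\), and compares the results with the exact functions on a fine grid. The helper names are hypothetical, and for brevity the LW coefficients of X and Y are obtained by interpolation at the collocation points rather than by the projection (8); this is an assumption of the sketch.

```python
import numpy as np


def lw_vector(t, k, M):
    """Psi(t) for the LWs of Eq. (5); t = 1 is put in the last subinterval (assumption)."""
    n = min(int(t * 2**k) + 1, 2**k)
    x = 2 ** (k + 1) * t - 2 * n + 1
    P = [1.0, x]
    for m in range(1, M - 1):
        P.append(((2 * m + 1) * x * P[m] - m * P[m - 1]) / (m + 1))
    psi = np.zeros(2**k * M)
    for m in range(M):
        psi[M * (n - 1) + m] = 2 ** (k / 2) * np.sqrt(2 * m + 1) * P[m]
    return psi


k, M = 2, 3
mh = 2**k * M
tn = np.linspace(0.0, 1.0, mh)                       # collocation points i/(mh-1)
Phi = np.column_stack([lw_vector(t, k, M) for t in tn])
Phi_inv = np.linalg.inv(Phi)

X = tn @ Phi_inv                 # LW coefficients of X(t) = t
Y = np.cos(tn) @ Phi_inv         # LW coefficients of Y(t) = cos t (by interpolation)
Xt, Yt = X @ Phi, Y @ Phi        # "tilde" vectors = values at the collocation points

F_coef = np.exp(Xt) @ Phi_inv               # Theorem 2.5 with F = exp
FG_coef = (np.exp(Xt) * Yt**2) @ Phi_inv    # Corollary 2.6 with F = exp, G(y) = y**2

t_fine = np.linspace(0.0, 1.0, 201)
err_F = max(abs(F_coef @ lw_vector(t, k, M) - np.exp(t)) for t in t_fine)
err_FG = max(abs(FG_coef @ lw_vector(t, k, M) - np.exp(t) * np.cos(t) ** 2) for t in t_fine)
print(err_F, err_FG)
```

The approximations are exact at the collocation points by construction; between them the error is that of piecewise polynomial interpolation of degree M − 1 on each subinterval.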

3 Description of the proposed computational method

In this section, we apply the operational matrices of integration and stochastic Itô-integration of the LWs, together with some useful properties of these basis functions, to solve the nonlinear stochastic Itô–Volterra integral equation:

\[ X(t)=h(t)+\int _{0}^{t}f(s)\,\mu \left( X(s)\right) \mathrm{d}s+\int _{0}^{t}g(s)\,\sigma \left( X(s)\right) \mathrm{d}B(s),\qquad t\in [0,1], \tag{41} \]

where X(t), f(t), g(t) and h(t) are stochastic processes defined on the same probability space \((\Omega , {\mathcal {F}},\mathbf {P})\), X(t) is the unknown stochastic function to be found, B(t) is a Brownian motion process and the second integral in Eq. (41) is an Itô integral. Moreover, \(\mu \) and \(\sigma \) are assumed to be analytic functions.

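To solve this equation, we approximate X(t), h(t), f(t) and g(t) by the LWs as follows:

\[ X(t)\simeq X^{T}\Psi (t), \tag{42} \]

\[ h(t)\simeq H^{T}\Psi (t), \tag{43} \]

and

\[ f(t)\simeq C^{T}\Psi (t),\qquad g(t)\simeq D^{T}\Psi (t), \tag{44} \]

where X, H, C and D are the LWs coefficient vectors.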

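From Eq. (42) and Theorem 2.5, we have:

\[ \mu \left( X(t)\right) \simeq \mu \left( \widetilde{X}^{T}\right) \Phi _{\hat{m}\times \hat{m}}^{-1}\Psi (t),\qquad \sigma \left( X(t)\right) \simeq \sigma \left( \widetilde{X}^{T}\right) \Phi _{\hat{m}\times \hat{m}}^{-1}\Psi (t), \tag{45} \]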

where \(\widetilde{X}^{T}=X^{T}\Phi _{\hat{m}\times \hat{m}}\).

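Now, from Eqs. (44), (45) and Corollary 2.6, we have:

\[ f(t)\mu \left( X(t)\right) \simeq \left( \widetilde{C}^{T}\odot \mu \left( \widetilde{X}^{T}\right) \right) \Phi _{\hat{m}\times \hat{m}}^{-1}\Psi (t),\qquad g(t)\sigma \left( X(t)\right) \simeq \left( \widetilde{D}^{T}\odot \sigma \left( \widetilde{X}^{T}\right) \right) \Phi _{\hat{m}\times \hat{m}}^{-1}\Psi (t), \tag{46} \]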

where \(\widetilde{C}^{T}=C^{T}\Phi _{\hat{m}\times \hat{m}}\) and \(\widetilde{D}^{T}=D^{T}\Phi _{\hat{m}\times \hat{m}}\).

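Substituting Eqs. (42), (43) and (46) into Eq. (41) and using the operational matrices of integration and stochastic Itô-integration, we can write the residual function R(t) for the stochastic integral equation (41) as follows:

\[ R(t)=\left( X^{T}-H^{T}-\left( \widetilde{C}^{T}\odot \mu \left( \widetilde{X}^{T}\right) \right) \Phi _{\hat{m}\times \hat{m}}^{-1}P-\left( \widetilde{D}^{T}\odot \sigma \left( \widetilde{X}^{T}\right) \right) \Phi _{\hat{m}\times \hat{m}}^{-1}P_{s}\right) \Psi (t). \tag{47} \]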

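As in a typical Galerkin method [3], we generate \(\hat{m}\) nonlinear algebraic equations:

\[ \left( R(t),\psi _{j}(t)\right) =\int _{0}^{1}R(t)\psi _{j}(t)\,\mathrm{d}t=0,\qquad j=1,2,\ldots ,\hat{m}, \tag{48} \]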

where \(\psi _{j}(t)=\psi _{nm}(t)\), and the index j is determined by the relation \(j=M(n-1)+m+1\).

Finally, by solving this system for the unknown vector X, we obtain an approximate solution for the problem by substituting X in Eq. (42).

The algorithm of the proposed method is presented as follows:

Algorithm 1

Input: \(M,\,N\in {\mathbb {N}},\,k\in {\mathbb {Z}}^{+}\); Brownian motion process B(t); the functions \(h,\,f,\, g\in L^{2}\left[ 0,1\right] \) and \(\mu ,\,\sigma \in C^{\infty }\left[ 0,1\right] \).

Step 1: Define the LWs \(\psi _{nm}(t)\) from Eq. (5).

Step 2: Construct the LWs vector \(\Psi (t)\) from Eq. (11).

Step 3: Compute the LWs matrix \(\Phi _{\hat{m}\times \hat{m}}\triangleq \left[ \Psi (0),\Psi \left( \frac{1}{\hat{m}-1}\right) ,\ldots ,\Psi (1)\right] \).

Step 4: Compute the integration operational matrix P using Eqs. (31)–(33) and the SOM \(\hat{P}_s\) using Eq. (25).

Step 5: Compute the LWs stochastic operational matrix \(P_s=\Phi _{\hat{m}\times \hat{m}}\hat{P}_s\Phi ^{-1}_{\hat{m}\times \hat{m}}\).

Step 6: Compute the vectors \(H,\,C\) and D in Eqs. (43) and (44) using Eq. (8).

Step 7: Compute the vectors \(\widetilde{C}^{T}=C^{T}\Phi _{\hat{m}\times \hat{m}}\) and \(\widetilde{D}^{T}=D^{T}\Phi _{\hat{m}\times \hat{m}}\).

Step 8: Put \(R(t)=\left( X^{T}-H^{T}-\left( \widetilde{C}^{T}\odot \mu \left( \widetilde{X}^{T}\right) \right) \Phi _{\hat{m}\times \hat{m}}^{-1}P-\left( \widetilde{D}^{T}\odot \sigma \left( \widetilde{X}^{T}\right) \right) \Phi _{\hat{m}\times \hat{m}}^{-1}P_{s}\right) \Psi (t)\).

Step 9: Construct the nonlinear system of algebraic equations:

\(\qquad \displaystyle \int _{0}^{1}R(t)\psi _{j}(t)\mathrm{d}t=0, \quad j=1,2,\ldots ,\hat{m}.\)

Step 10: Solve the nonlinear system of algebraic equations in Step 9 to obtain the unknown vector X.

Output: The approximate solution \(X(t)\simeq X^T\Psi (t)\).
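Putting the pieces together, the following Python sketch (NumPy and SciPy) runs Algorithm 1 on the special case \(h(t)=x_{0}\), \(f(t)=a\), \(g(t)=b\), \(\mu (x)=\sigma (x)=x\), i.e. geometric Brownian motion, whose exact Itô solution \(x_{0}\exp \left( (a-b^{2}/2)t+bB(t)\right) \) is standard. Several choices are ours, not the paper's: \(\hat{P}_{s}\) is a left-point Itô-sum stand-in rather than the closed form of [44]; the coefficient vectors of h, f and g are taken from nodal values (for constants this agrees with Eq. (8)); and, since the LWs are orthonormal, the Galerkin conditions of Step 9 are equivalent to the vanishing of the coefficient vector of R(t), which is solved with `scipy.optimize.fsolve`.

```python
import numpy as np
from scipy.optimize import fsolve


def lw_vector(t, k, M):
    """Psi(t) for the LWs of Eq. (5); t = 1 is put in the last subinterval (assumption)."""
    n = min(int(t * 2**k) + 1, 2**k)
    x = 2 ** (k + 1) * t - 2 * n + 1
    P = [1.0, x]
    for m in range(1, M - 1):
        P.append(((2 * m + 1) * x * P[m] - m * P[m - 1]) / (m + 1))
    psi = np.zeros(2**k * M)
    for m in range(M):
        psi[M * (n - 1) + m] = 2 ** (k / 2) * np.sqrt(2 * m + 1) * P[m]
    return psi


def solve_sie(k, M, x0, a, b, mu, sigma, sub=200, seed=1):
    """Sketch of Algorithm 1 for X = x0 + int a*mu(X) ds + int b*sigma(X) dB on [0, 1]."""
    mh = 2**k * M
    h = 1.0 / (mh - 1)
    tn = np.linspace(0.0, 1.0, mh)
    Phi = np.column_stack([lw_vector(t, k, M) for t in tn])      # Step 3
    Phi_inv = np.linalg.inv(Phi)
    Ph = np.zeros((mh, mh))                                       # Step 4: HF integration matrix
    Ph[0, 1:] = h / 2
    for i in range(1, mh):
        Ph[i, i] = h / 2
        Ph[i, i + 1:] = h
    rng = np.random.default_rng(seed)                             # stand-in HF SOM (Ito sums)
    steps = sub * (mh - 1)
    dB = rng.normal(0.0, np.sqrt(1.0 / steps), steps)
    s = np.arange(steps) / steps                                  # left endpoints
    hats = np.maximum(0.0, 1.0 - np.abs(s[None, :] - tn[:, None]) / h)
    ito = np.hstack([np.zeros((mh, 1)), np.cumsum(hats * dB, axis=1)])
    idx = np.arange(mh) * sub                                     # fine-grid index of node jh
    Ps_hat = ito[:, idx]
    B = np.concatenate([[0.0], np.cumsum(dB)])[idx]
    P = Phi @ Ph @ Phi_inv                                        # Eq. (32)
    Ps = Phi @ Ps_hat @ Phi_inv                                   # Step 5
    H = np.full(mh, x0) @ Phi_inv                                 # Step 6 (coefficients of h = x0)
    Ct, Dt = np.full(mh, a), np.full(mh, b)                       # Step 7 (nodal values of f, g)

    def residual(X):                                              # Step 8: coefficients of R(t)
        Xt = X @ Phi
        return (X - H - (Ct * mu(Xt)) @ Phi_inv @ P
                - (Dt * sigma(Xt)) @ Phi_inv @ Ps)

    X = fsolve(residual, H)                                       # Steps 9-10
    return X, Phi, tn, B


a, b = 0.1, 0.1
X, Phi, tn, B = solve_sie(k=2, M=3, x0=1.0, a=a, b=b,
                          mu=lambda x: x, sigma=lambda x: x)
exact = np.exp((a - b**2 / 2) * tn + b * B)                       # exact GBM at the nodes
print(np.max(np.abs(X @ Phi - exact)))
```

With only \(\hat{m}=12\) basis functions and a small noise intensity, this is a qualitative sanity check rather than a convergence study; `solve_sie` and all parameter values are illustrative.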

4 Illustrative test problems

In this section, we consider some numerical examples to illustrate the efficiency and reliability of the proposed method. For computational purposes, it is useful to consider discretized Brownian motion, where B(t) is specified at discrete values of t and spline interpolation is then employed to construct B(t). We thus set \(\Delta t = \frac{1}{N}\) for some positive integer N and let \(B_{i}\) denote \(B(t_{i})\) with \(t_{i}=i\Delta t\). Condition (i) in the Introduction says that \(B_{0} = 0\) with probability 1, and Conditions (ii) and (iii) tell us that

\[ B_{i}=B_{i-1}+\mathrm{d}B_{i},\qquad i=1,2,\ldots ,N, \]

where each \(dB_{i}\) is an independent random variable of the form \(\sqrt{\Delta t}{\mathcal {N}}(0,1)\).
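The construction of the discretized Brownian path can be sketched as follows in Python (NumPy and SciPy). The paper does not specify the spline type; `scipy.interpolate.CubicSpline` is our assumption, and `brownian_path` is a hypothetical helper.

```python
import numpy as np
from scipy.interpolate import CubicSpline


def brownian_path(N, seed=None):
    """Discretized Brownian motion on [0, 1]: B_0 = 0 and B_i = B_{i-1} + dB_i."""
    rng = np.random.default_rng(seed)
    dt = 1.0 / N
    dB = np.sqrt(dt) * rng.standard_normal(N)    # independent dB_i ~ sqrt(dt) N(0, 1)
    t = np.linspace(0.0, 1.0, N + 1)             # t_i = i * dt
    B = np.concatenate([[0.0], np.cumsum(dB)])   # B_0 = 0, then cumulative sums
    return t, B


t, B = brownian_path(120, seed=42)
B_of_t = CubicSpline(t, B)                        # continuous B(t) between grid points
print(B_of_t(0.5), B[60])                         # the spline passes through the grid values
```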

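We also report the absolute errors at some points \(t_{j}\in [0,1]\) as:

\[ E(t_{j})=\left| X(t_{j})-X^{T}\Psi (t_{j})\right| , \]

where X(t) is the exact solution and \(X^{T}\Psi (t)\) is the approximate solution obtained by the proposed method.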

The graphs of the exact and approximate solutions for Example 1

Example 1

Let us first consider the following nonlinear stochastic Itô–Volterra integral equation [46]:

where X(t) is an unknown stochastic process defined on the probability space \((\Omega ,{\mathcal {F}},\mathbf {P})\), and B(t) is a Brownian motion process. The exact solution of this problem is given in [46] by:

We solve this problem by the proposed computational method for \(X_{0}=a=\frac{1}{20}\). The graphs of the exact and approximate solutions for \(\hat{m}=96\,(k=5, M=3)\) and \(N=120\) are shown in Fig. 1. The absolute errors of the approximate solution at several points \(t\in [0,1]\), for \(\hat{m}=24\,(k=3, M=3)\), \(\hat{m}=48\,( k=4, M=3)\) and \(\hat{m}=96\,(k=5, M=3)\), are shown in Table 1. Fig. 1 and Table 1 show that the proposed method is efficient and accurate for this problem.

The graphs of the exact and approximate solutions for Example 2

Example 2

Consider the following nonlinear stochastic Itô–Volterra integral equation [46]:

where X(t) is an unknown stochastic process defined on the probability space \((\Omega ,{\mathcal {F}},\mathbf {P})\), and B(t) is a Brownian motion process. The exact solution of this problem is given in [46] by:

We solve this problem by the proposed computational method for \(X_{0}=0\) and \(a=\frac{1}{30}\). The graphs of the exact and approximate solutions for \(\hat{m}=96\) and \(N=82\) are shown in Fig. 2. The absolute errors of the approximate solution at several points \(t\in [0,1]\), for \(\hat{m}=24\), \(\hat{m}=48\) and \(\hat{m}=96\), are shown in Table 2. Fig. 2 and Table 2 show that the proposed method is efficient and accurate for this problem.

The graphs of the exact and approximate solutions for Example 3

Example 3

Consider the following nonlinear stochastic Itô–Volterra integral equation [46]:

where X(t) is an unknown stochastic process defined on the probability space \((\Omega ,{\mathcal {F}},\mathbf {P})\), and B(t) is a Brownian motion process. The exact solution of this problem is given in [46] by:

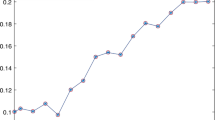

where \(U(t)=\exp \left( \left( a-\frac{c^{2}}{2}\right) t+cB(t)\right) \), and \(a,\,b\) and c are constants. We solve this problem by the proposed computational method for \(X_{0}=\frac{1}{10}\), \(a=\frac{1}{8}\), \(b=\frac{1}{32}\) and \(c=\frac{1}{20}\). The graphs of the exact and approximate solutions for \(\hat{m}=96\) and \(N=60\) are shown in Fig. 3. The absolute errors of the approximate solution at several points \(t\in [0,1]\), for \(\hat{m}=24\), \(\hat{m}=48\) and \(\hat{m}=96\), are shown in Table 3. Fig. 3 and Table 3 show that the proposed method is efficient and accurate for this problem.

The graphs of the exact and approximate solutions for Example 4

Example 4

Consider finally the following nonlinear stochastic Itô–Volterra integral equation [46]:

where X(t) is an unknown stochastic process defined on the probability space \((\Omega ,{\mathcal {F}},\mathbf {P})\), and B(t) is a Brownian motion process. The exact solution of this problem is given in [46] by:

We solve this problem by the proposed computational method for \(X_{0}=\frac{1}{100}\) and \(a=\frac{1}{30}\). The graphs of the exact and approximate solutions for \(\hat{m}=96\) and \(N=65\) are shown in Fig. 4. The absolute errors of the approximate solution at several points \(t\in [0,1]\), for \(\hat{m}=24\), \(\hat{m}=48\) and \(\hat{m}=96\), are shown in Table 4. Fig. 4 and Table 4 show that the proposed method is efficient and accurate for this problem.

5 Some applications of the proposed method

In this section, the computational method proposed in Sect. 3 is applied to obtain approximate solutions for some practical stochastic problems.

5.1 The mathematical finance

A well-known stochastic model, which is used for stock prices, stochastic volatilities and electricity prices, is as follows [47]:

where \(\kappa >0\), \(\bar{\mu }\) and \(\bar{\sigma }\) are constants, and the stochastic process S(t) is positive for all \(t>0\).

It is worth mentioning that stochastic volatility models have become popular for derivative pricing and hedging in the last decade, as the existence of a non-flat implied volatility surface (or term structure) has been noticed and has become more pronounced, especially since the 1987 crash. This phenomenon, which is well documented [48, 49], empirically contradicts the consistent use of the classical Black–Scholes (constant volatility) approach to pricing options and similar securities. However, it is clearly desirable to retain as many as possible of the features that have contributed to this model's popularity and longevity, and the natural extension pursued both in the literature and in practice has been to modify the specification of volatility in the stochastic dynamics of the underlying asset price model.

To solve the stochastic model (49), we use the transformation \(S(t)=X(t)+1\), where X(t) is the unknown stochastic process, and transform Eq. (49) into the nonlinear stochastic differential equation:

where \(X_{0}=S_{0}-1\).

We can then write the integral form of the nonlinear SDE (50) as:

The proposed computational method can therefore be used to obtain X(t) as the solution of (51). Finally, the solution of the original problem is \(S(t)=1+X(t)\).

As a numerical example, we consider the nonlinear stochastic differential equation (49) with \(S_{0}=0.1,\,\kappa =1,\,\bar{\mu }=0.5\) and \(\bar{\sigma }=0.75\). We solve this problem by the proposed computational method for \(\hat{m}=96\) and \(N=100\). The graph of the approximate solution is shown in Fig. 5.

5.2 The biological systems

One of the most popular nonlinear systems in biology is the Lotka–Volterra system [50]. As is well known, it was proposed by Volterra to account for the observed periodic variations in a predator–prey system. The Lotka–Volterra model can serve as a stepping stone toward understanding more realistic, but mathematically less tractable, models of predator–prey systems [50]. The deterministic model is described by the following system of ordinary differential equations [50]:

where \(N_{1}(t)\) denotes the number of prey and \(N_{2}(t)\) the number of predators.

One of the simplest stochastic versions of Eq. (52) is the stochastic Lotka–Volterra model, given as follows [50]:

where \(B_{1}(t)\) and \(B_{2}(t)\) are independent Brownian motions.

We can write the integral form of the two-dimensional SDE (53) as follows:

To solve Eq. (54) using the proposed computational method, we approximate \(N_{1}(t)\) and \(N_{2}(t)\) by the LWs as follows:

\[ N_{1}(t)\simeq N_{1}^{T}\Psi (t),\qquad N_{2}(t)\simeq N_{2}^{T}\Psi (t), \tag{55} \]

where \(N_{1}\) and \(N_{2}\) are the LWs coefficient vectors which should be found, and \(\Psi (t)\) is the vector which is defined in Eq. (11).

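Moreover, from Eq. (55) and Corollary 2.6, we have:

\[ N_{1}(t)N_{2}(t)\simeq \left( \widetilde{N}_{1}^{T}\odot \widetilde{N}_{2}^{T}\right) \Phi _{\hat{m}\times \hat{m}}^{-1}\Psi (t), \tag{56} \]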

where \(\widetilde{N}_{1}^{T}=N_{1}^{T}\Phi _{\hat{m}\times \hat{m}}\) and \(\widetilde{N}_{2}^{T}=N_{2}^{T}\Phi _{\hat{m}\times \hat{m}}\).

Moreover, by expanding \(N_{10}\) and \(N_{20}\) in terms of the LWs, we have:

\[ N_{10}\simeq N_{10}\,e^{T}\Psi (t),\qquad N_{20}\simeq N_{20}\,e^{T}\Psi (t), \tag{57} \]

where e is the LWs coefficients vector for the unit function.

Consequently, by substituting Eqs. (55)–(57) into Eq. (54) and considering Eqs. (14) and (31), we have:

Now, from Eq. (58), we can write the residual functions \(R_{1}(t)\) and \(R_{2}(t)\) for system (54) as follows:

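As in a typical Galerkin method [3], we generate \(2\hat{m}\) nonlinear algebraic equations:

\[ \left( R_{1}(t),\psi _{j}(t)\right) =\int _{0}^{1}R_{1}(t)\psi _{j}(t)\,\mathrm{d}t=0,\qquad \left( R_{2}(t),\psi _{j}(t)\right) =\int _{0}^{1}R_{2}(t)\psi _{j}(t)\,\mathrm{d}t=0,\qquad j=1,2,\ldots ,\hat{m}, \tag{60} \]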

where \(\psi _{j}(t)=\psi _{nm}(t)\), and the index j is determined by the relation \(j=M(n-1)+m+1\).

Finally, by solving system (60) for the unknown vectors \(N_{1}\) and \(N_{2}\), we obtain the approximate solutions of the problem as \(N_{1}(t)\simeq N_{1}^{T}\Psi (t)\) and \(N_{2}(t)\simeq N_{2}^{T}\Psi (t)\).

The algorithm of the proposed computational method is presented as follows:

Algorithm 2

Input: \(M\in {\mathbb {N}},\,k,N\in {\mathbb {Z}}^{+}\); Brownian motion processes \(B_{1}(t)\) and \(B_{2}(t)\); \(a_{i},\,b_{i},\,\bar{\sigma }_{i},\,N_{i0}\) for \(i=1,2\).

Step 1: Define the LWs \(\psi _{nm}(t)\) from Eq. (5).

Step 2: Construct the LWs vector \(\Psi (t)\) from Eq. (11).

Step 3: Compute the LWs matrix \(\Phi _{\hat{m}\times \hat{m}}\triangleq \left[ \Psi (0),\Psi \left( \frac{1}{\hat{m}-1}\right) ,\ldots ,\Psi (1)\right] \).

Step 4: Compute the integration operational matrix P using Eqs. (31)–(33) and the SOM \(\hat{P}_s\) using Eq. (25).

Step 5: Compute the LWs stochastic operational matrix \(P_s=\Phi _{\hat{m}\times \hat{m}}\hat{P}_s\Phi ^{-1}_{\hat{m}\times \hat{m}}\).

Step 6: Compute the vector \(e^{T}\) using Eq. (8).

Step 7: Compute the vectors \(\widetilde{N}_{1}^{T}=N_{1}^{T}\Phi _{\hat{m}\times \hat{m}}\) and \(\widetilde{N}_{2}^{T}=N_{2}^{T}\Phi _{\hat{m}\times \hat{m}}\).

Step 8: Put \(\displaystyle \left\{ \begin{array}{l} \displaystyle R_{1}(t) \!=\!\left( N_{1}^{T}-N_{10}e^{T}\!-\!\left( b_{1}N_{1}^{T}\right. \right. \\ \left. \left. -a_{1}\left( \widetilde{N}_{1}^{T}\odot \widetilde{N}_{2}^{T}\right) \Phi _{\hat{m}\times \hat{m}}^{-1}\right) P\!-\!\bar{\sigma }_{1}N_{1}^{T}P_{s}\right) \Psi (t),\\ \displaystyle R_{2}(t)\!=\!\left( N_{2}^{T}\!-\!N_{20}e^{T}\!-\!\left( b_{2}N_{2}^{T}\right. \right. \\ \left. \left. -a_{2}\left( \widetilde{N}_{1}^{T}\odot \widetilde{N}_{2}^{T}\right) \Phi _{\hat{m}\times \hat{m}}^{-1}\right) P\!-\!\bar{\sigma }_{2}N_{2}^{T}P_{s}\right) \Psi (t). \end{array}\right. \).

Step 9: Construct the nonlinear system of algebraic equations:

\(\displaystyle \left\{ \begin{array}{l} \displaystyle \left( R_{1}(t),\psi _{j}(t)\right) \!=\!\int _{0}^{1}R_{1}(t)\psi _{j}(t)\mathrm{d}t\!=\!0, \quad j\!=\!1,2,\ldots ,\hat{m}, \\ \displaystyle \left( R_{2}(t),\psi _{j}(t)\right) \!=\!\int _{0}^{1}R_{2}(t)\psi _{j}(t)\mathrm{d}t\!=\!0, \quad j\!=\!1,2,\ldots ,\hat{m}, \end{array}\right. \)

Step 10: Solve the nonlinear system of algebraic equations in Step 9 to obtain the unknown vectors \(N_{1}\) and \(N_{2}\).

Output: The approximate solutions \(N_{1}(t)\simeq N_{1}^T\Psi (t)\) and \(N_{2}(t)\simeq N_{2}^T\Psi (t)\).

As a numerical example, we consider the nonlinear system of stochastic integral equations (54) with \(a_{1}=0.3,\,a_{2}=0.1, \,b_{1}=2.0,\,b_{2} = 1.5,\,\bar{\sigma }_{1}=0.2, \,\bar{\sigma }_{2}=0.4\) and \(N_{10}=N_{20}=1.0\). We solve this problem by the proposed method for \(\hat{m}=48\) and \(N=80\). The behavior of the numerical solutions is shown in Fig. 6.

5.3 The Duffing–Van der Pol Oscillator

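We investigate a simplified version of a Duffing–Van der Pol oscillator [46]:

\[ \ddot{x}+\dot{x}-\left( \alpha -x^{2}\right) x=\bar{\sigma }\,x\,\xi (t), \tag{61} \]

driven by the multiplicative white noise \(\xi (t)=\frac{\mathrm{d}B(t)}{\mathrm{d}t}\), where \(\alpha \) is a real-valued parameter. The corresponding Itô stochastic differential equation is two-dimensional, with components \(X_{1}\) and \(X_{2}\) representing the displacement x and the speed \(\dot{x}\), respectively [46]:

\[ \begin{array}{l} \mathrm{d}X_{1}(t)=X_{2}(t)\,\mathrm{d}t,\\ \mathrm{d}X_{2}(t)=\left( X_{1}(t)\left( \alpha -X_{1}^{2}(t)\right) -X_{2}(t)\right) \mathrm{d}t+\bar{\sigma }X_{1}(t)\,\mathrm{d}B(t), \end{array} \tag{62} \]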

where B(t) is a one-dimensional standard Wiener process and \(\bar{\sigma }\) controls the strength of the induced multiplicative noise.
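For orientation, system (62) can also be integrated with the standard Euler–Maruyama scheme. This is not the LW Galerkin method of this paper; it is only an independent reference trajectory that may help when checking results such as those in Figs. 7–10. The function name, step count and seed are our own choices.

```python
import numpy as np


def duffing_vdp_em(alpha, sigma_bar, x10, x20, T=8.0, steps=8000, seed=0):
    """Euler-Maruyama for system (62):
    dX1 = X2 dt,  dX2 = (X1 (alpha - X1^2) - X2) dt + sigma_bar X1 dB.
    """
    rng = np.random.default_rng(seed)
    dt = T / steps
    X = np.empty((steps + 1, 2))
    X[0] = (x10, x20)
    for n in range(steps):
        x1, x2 = X[n]
        dB = np.sqrt(dt) * rng.standard_normal()      # Brownian increment over one step
        X[n + 1, 0] = x1 + x2 * dt
        X[n + 1, 1] = x2 + (x1 * (alpha - x1**2) - x2) * dt + sigma_bar * x1 * dB
    return X


eps = 0.2
det = {kappa: duffing_vdp_em(0.0, 0.0, -kappa * eps, 0.0) for kappa in range(11, 17)}
sto = {kappa: duffing_vdp_em(0.0, 1.0, -kappa * eps, 0.0) for kappa in range(11, 17)}
print(det[11][-1], sto[11][-1])                        # states (X1, X2) at t = 8
```

For \(\bar{\sigma }=0\) and \(\alpha =0\), the energy \(X_{2}^{2}/2+X_{1}^{4}/4\) of the deterministic system is non-increasing, so the deterministic trajectories spiral inward in the phase plane.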

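We can write the integral form of the two-dimensional SDE (62) as follows:

\[ \begin{array}{l} X_{1}(t)=X_{10}+\int _{0}^{t}X_{2}(s)\,\mathrm{d}s,\\ X_{2}(t)=X_{20}+\int _{0}^{t}\left( \alpha X_{1}(s)-X_{1}^{3}(s)-X_{2}(s)\right) \mathrm{d}s+\bar{\sigma }\int _{0}^{t}X_{1}(s)\,\mathrm{d}B(s), \end{array} \tag{63} \]

where \(X_{10}=X_{1}(0)\) and \(X_{20}=X_{2}(0)\).

To solve Eq. (63) using the proposed computational method, we approximate \(X_{1}(t)\) and \(X_{2}(t)\) by the LWs as follows:

\[ X_{1}(t)\simeq X_{1}^{T}\Psi (t),\qquad X_{2}(t)\simeq X_{2}^{T}\Psi (t), \tag{64} \]

where \(X_{1}\) and \(X_{2}\) are the LWs coefficient vectors to be found, and \(\Psi (t)\) is the vector defined in Eq. (11).

Also, from Eq. (64) and Theorem 2.5, we have:

\[ X_{1}^{3}(t)\simeq \left( \widetilde{X}_{1}^{T}\right) ^{3}\Phi _{\hat{m}\times \hat{m}}^{-1}\Psi (t), \tag{65} \]

where \(\widetilde{X}_{1}^{T}=X_{1}^{T}\Phi _{\hat{m}\times \hat{m}}\) and the cube of the vector is taken componentwise.

Moreover, by expanding \(X_{10}\) and \(X_{20}\) in terms of the LWs, we have:

\[ X_{10}\simeq X_{10}\,e^{T}\Psi (t),\qquad X_{20}\simeq X_{20}\,e^{T}\Psi (t), \tag{66} \]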

where e is the LWs coefficients vector for the unit function.

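Therefore, by substituting Eqs. (64)–(66) into (63) and considering Eqs. (14) and (31), we have:

\[ \begin{array}{l} X_{1}^{T}\Psi (t)\simeq X_{10}e^{T}\Psi (t)+X_{2}^{T}P\Psi (t),\\ X_{2}^{T}\Psi (t)\simeq X_{20}e^{T}\Psi (t)+\left( \alpha X_{1}^{T}-\left( \widetilde{X}_{1}^{T}\right) ^{3}\Phi _{\hat{m}\times \hat{m}}^{-1}-X_{2}^{T}\right) P\Psi (t)+\bar{\sigma }X_{1}^{T}P_{s}\Psi (t). \end{array} \tag{67} \]

Now, from Eq. (67), we can write the residual functions \(R_{1}(t)\) and \(R_{2}(t)\) for system (63) as follows:

\[ \begin{array}{l} R_{1}(t)=\left( X_{1}^{T}-X_{10}e^{T}-X_{2}^{T}P\right) \Psi (t),\\ R_{2}(t)=\left( X_{2}^{T}-X_{20}e^{T}-\left( \alpha X_{1}^{T}-\left( \widetilde{X}_{1}^{T}\right) ^{3}\Phi _{\hat{m}\times \hat{m}}^{-1}-X_{2}^{T}\right) P-\bar{\sigma }X_{1}^{T}P_{s}\right) \Psi (t). \end{array} \tag{68} \]

As in a typical Galerkin method, we generate \(2\hat{m}\) nonlinear algebraic equations:

\[ \left( R_{1}(t),\psi _{j}(t)\right) =0,\qquad \left( R_{2}(t),\psi _{j}(t)\right) =0,\qquad j=1,2,\ldots ,\hat{m}. \tag{69} \]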

Finally, by solving system (69) with respect to the unknown vectors \(X_{1}\) and \(X_{2}\), we obtain the approximate solutions of the problem as \(X_{1}(t)\simeq X_{1}^{T}\Psi (t)\) and \(X_{2}(t)\simeq X_{2}^{T}\Psi (t)\).

The algorithm of the proposed computational method is presented as follows:

Algorithm 3

Input: \(M\in {\mathbb {N}},\,k,N\in {\mathbb {Z}}^{+}\); Brownian motion process B(t); \(\alpha ,\,\bar{\sigma },\,X_{10}\) and \(X_{20}\).

Step 1: Define the LWs \(\psi _{nm}(t)\) from Eq. (5).

Step 2: Construct the LWs vector \(\Psi (t)\) from Eq. (11).

Step 3: Compute the LWs matrix \(\Phi _{\hat{m}\times \hat{m}}\triangleq \left[ \Psi (0),\Psi \left( \frac{1}{\hat{m}-1}\right) ,\ldots ,\Psi (1)\right] \).

Step 4: Compute the integration operational matrix P using Eqs. (31)–(33) and the SOM \(\hat{P}_s\) using Eq. (25).

Step 5: Compute the LWs stochastic operational matrix \(P_s=\Phi _{\hat{m}\times \hat{m}}\hat{P}_s\Phi ^{-1}_{\hat{m}\times \hat{m}}\).

Step 6: Compute the vector \(e^{T}\) using Eq. (8).

Step 7: Compute the vector \(\widetilde{X}_{1}^{T}=X_{1}^{T}\Phi _{\hat{m}\times \hat{m}}\).

Step 8: Put \(\displaystyle \left\{ \begin{array}{l} \displaystyle R_{1}(t)= \left( X_{1}^{T}-X_{10}e^{T}-X_{2}^{T}P\right) \Psi (t), \\ \displaystyle R_{2}(t)= \left( X_{2}^{T}-X_{20}e^{T}-\left( \alpha X_{1}^{T}\right. \right. \\ \left. \left. -\left( \widetilde{X}_{1}^{T}\right) ^{3}\Phi _{\hat{m}\times \hat{m}}^{-1}-X_{2}^{T}\right) P-\bar{\sigma } X_{1}^{T}P_{s}\right) \Psi (t) . \end{array}\right. \).

Step 9: Construct the nonlinear system of algebraic equations:

\(\displaystyle \left\{ \begin{array}{c} \displaystyle \left( R_{1}(t),\psi _{j}(t)\right) =\int _{0}^{1}R_{1}(t)\psi _{j}(t)\mathrm{d}t=0, \quad j=1,2,\ldots ,\hat{m}, \\ \displaystyle \left( R_{2}(t),\psi _{j}(t)\right) =\int _{0}^{1}R_{2}(t)\psi _{j}(t)\mathrm{d}t=0, \quad j=1,2,\ldots ,\hat{m}, \end{array}\right. \)

Step 10: Solve the nonlinear system of algebraic equations in Step 9 and obtain the unknown vectors \(X_{1}\) and \(X_{2}\).

Output: The approximate solutions: \(X_{1}(t)\simeq X_{1}^T\Psi (t)\) and \(X_{2}(t)\simeq X_{2}^T\Psi (t)\).
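Eqs. (25) and (31)–(33) are not reproduced in this section, so the sketch below swaps in a hat-function construction for Steps 3–5; it is an assumption, not the authors' formulas. With hat functions \(h_i\) on the nodes \(t_i=i/(\hat{m}-1)\), it uses \(\hat{P}_{ij}=\int _0^{t_j}h_i(s)\,\mathrm{d}s\) (exact for hats) and \((\hat{P}_s)_{ij}\approx \int _0^{t_j}h_i(s)\,\mathrm{d}B(s)\), the latter computed by a left-point (Itô) sum on a simulated Brownian path. It then maps both to the LWs as \(P=\Phi \hat{P}\Phi ^{-1}\) and \(P_s=\Phi \hat{P}_s\Phi ^{-1}\), in the spirit of Step 5. The LWs convention \(\psi _{nm}(t)=2^{k/2}\sqrt{2m+1}\,P_{m}(2^{k+1}t-2n-1)\) on \([n/2^{k},(n+1)/2^{k})\) and all helper names are hypothetical. Two sanity checks follow from \(e^{T}\Psi (t)=1\): \(e^{T}P\Psi (t_j)\) should equal \(t_j\), and \(e^{T}P_s\Psi (t_j)\) should equal the path value \(B(t_j)\).

```python
import numpy as np
from numpy.polynomial import legendre


def lw_basis(t, k, M):
    """Hypothetical helper: Psi(t) under the assumed orthonormal convention."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    n = np.minimum(np.floor(t * 2 ** k).astype(int), 2 ** k - 1)  # t = 1 -> last piece
    x = 2.0 ** (k + 1) * t - 2.0 * n - 1.0
    psi = np.zeros((2 ** k * M, t.size))
    for m in range(M):
        psi[n * M + m, np.arange(t.size)] = (
            2 ** (k / 2) * np.sqrt(2 * m + 1) * legendre.legval(x, np.eye(M)[m]))
    return psi


def hat_values(s, nodes):
    """Hat functions h_i(s) on the uniform grid `nodes`; shape (len(nodes), len(s))."""
    h = nodes[1] - nodes[0]
    return np.clip(1.0 - np.abs(s[None, :] - nodes[:, None]) / h, 0.0, None)


def hat_integration_matrix(nodes):
    """P_hat[i, j] = int_0^{t_j} h_i(s) ds, exact for piecewise-linear hats."""
    m, h = nodes.size, nodes[1] - nodes[0]
    i, j = np.meshgrid(np.arange(m), np.arange(m), indexing="ij")
    P_hat = np.where(j > i, h, 0.0) + np.where((j == i) & (i > 0), h / 2, 0.0)
    P_hat[0, 1:] = h / 2  # the first hat has only its right half in [0, 1]
    return P_hat


def hat_stochastic_matrix(nodes, refine, B_fine):
    """P_s_hat[i, j] ~ int_0^{t_j} h_i(s) dB(s) by a left-point (Ito) sum.

    B_fine is a Brownian path on a grid `refine` times finer than `nodes`.
    """
    s_fine = np.linspace(0.0, 1.0, B_fine.size)
    H = hat_values(s_fine[:-1], nodes)             # integrand at the left points
    run = np.cumsum(H * np.diff(B_fine), axis=1)
    run = np.concatenate([np.zeros((nodes.size, 1)), run], axis=1)
    return run[:, np.arange(nodes.size) * refine]  # running integral at each t_j


k, M, refine = 2, 3, 50
m_hat = 2 ** k * M
nodes = np.linspace(0.0, 1.0, m_hat)               # Step 3 nodes
Phi = lw_basis(nodes, k, M)
Phi_inv = np.linalg.inv(Phi)

rng = np.random.default_rng(seed=1)
n_fine = (m_hat - 1) * refine
B_fine = np.concatenate([[0.0], np.cumsum(rng.normal(0.0, np.sqrt(1.0 / n_fine), n_fine))])

P = Phi @ hat_integration_matrix(nodes) @ Phi_inv                   # stand-in for P
P_s = Phi @ hat_stochastic_matrix(nodes, refine, B_fine) @ Phi_inv  # Step 5 analogue

e = np.zeros(m_hat)
e[::M] = 2 ** (-k / 2)   # unit function under the assumed normalization
```

Because the hat functions sum to one at every point of [0, 1], the second check holds to rounding error for any path, which makes it a convenient unit test for an implementation of Step 5.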

As a numerical example, we consider the Duffing–Van der Pol oscillator (62) with \(\alpha =0\) and the two values \(\bar{\sigma }=0.0\) and \(\bar{\sigma }=1.0\) over the interval [0, 8], starting at \(\left( X_{10},X_{20}\right) =(-\kappa \varepsilon ,0)\) for \(\kappa =11,12,\ldots ,16\) and \(\varepsilon =0.2\). This problem is solved by the proposed method with \(\hat{m}=80\,(k=4, M=5)\) and \(N=16\). The numerical solutions for \(\bar{\sigma }=0.0\) (deterministic solution) and \(\bar{\sigma }=1.0\) (stochastic solution) and several values of \(\kappa \) are shown in Figs. 7, 8 and 9, and their behavior in the phase space is shown in Fig. 10.
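The following is a minimal sketch of Algorithm 3 on \([0,1]\) only; the example above runs on [0, 8], which would need a time rescaling that is not shown here. It assumes the orthonormal convention \(\psi _{nm}(t)=2^{k/2}\sqrt{2m+1}\,P_{m}(2^{k+1}t-2n-1)\) on \([n/2^{k},(n+1)/2^{k})\), hat-function stand-ins for P and \(\hat{P}_s\) (the paper's Eqs. (25) and (31)–(33) are not restated in this section), and that the Galerkin equations of Step 9 reduce to requiring the coefficient vectors of \(R_1\) and \(R_2\) to vanish, which holds when the LWs are orthonormal. Newton's method with an analytic Jacobian stands in for the unspecified nonlinear solver of Step 10, and all function names are hypothetical. For \(\bar{\sigma }=0\) the result is compared with a classical RK4 solution of \(X_1'=X_2\), \(X_2'=\alpha X_1-X_1^3-X_2\), the drift read off from Step 8.

```python
import numpy as np
from numpy.polynomial import legendre


def lw_basis(t, k, M):
    """Hypothetical helper: Psi(t) under the assumed orthonormal convention."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    n = np.minimum(np.floor(t * 2 ** k).astype(int), 2 ** k - 1)  # t = 1 -> last piece
    x = 2.0 ** (k + 1) * t - 2.0 * n - 1.0
    psi = np.zeros((2 ** k * M, t.size))
    for m in range(M):
        psi[n * M + m, np.arange(t.size)] = (
            2 ** (k / 2) * np.sqrt(2 * m + 1) * legendre.legval(x, np.eye(M)[m]))
    return psi


def hat_matrices(nodes, B_fine=None, refine=1):
    """Hat-function stand-ins: exact P_hat and, given a fine path, an Ito-sum P_s_hat."""
    m, h = nodes.size, nodes[1] - nodes[0]
    i, j = np.meshgrid(np.arange(m), np.arange(m), indexing="ij")
    P_hat = np.where(j > i, h, 0.0) + np.where((j == i) & (i > 0), h / 2, 0.0)
    P_hat[0, 1:] = h / 2
    if B_fine is None:
        return P_hat, np.zeros((m, m))
    s = np.linspace(0.0, 1.0, B_fine.size)[:-1]     # left points of the fine grid
    H = np.clip(1.0 - np.abs(s[None, :] - nodes[:, None]) / h, 0.0, None)
    run = np.concatenate([np.zeros((m, 1)), np.cumsum(H * np.diff(B_fine), axis=1)], axis=1)
    return P_hat, run[:, np.arange(m) * refine]


def solve_duffing_vdp(k, M, alpha, sigma, x10, x20, B_fine=None, refine=1, iters=50):
    m = 2 ** k * M
    nodes = np.linspace(0.0, 1.0, m)                          # Step 3 nodes
    Phi = lw_basis(nodes, k, M)
    Phi_inv = np.linalg.inv(Phi)
    P_hat, Ps_hat = hat_matrices(nodes, B_fine, refine)
    P, Ps = Phi @ P_hat @ Phi_inv, Phi @ Ps_hat @ Phi_inv     # Steps 4-5 analogue
    e = np.zeros(m)
    e[::M] = 2 ** (-k / 2)                                    # unit function (Step 6)
    I = np.eye(m)
    X1, X2 = x10 * e, x20 * e                                 # constant initial guess
    for _ in range(iters):
        v = X1 @ Phi                                          # tilde X1 (Step 7)
        cube = (v ** 3) @ Phi_inv
        # coefficient vectors of R1 and R2 from Step 8 (1-D arrays act as rows)
        r1 = X1 - x10 * e - X2 @ P
        r2 = X2 - x20 * e - (alpha * X1 - cube - X2) @ P - sigma * X1 @ Ps
        Jc = Phi_inv.T @ np.diag(3.0 * v ** 2) @ Phi.T        # d(cube)/d(X1)
        J = np.block([[I, -P.T],
                      [-P.T @ (alpha * I - Jc) - sigma * Ps.T, I + P.T]])
        dz = np.linalg.solve(J, -np.concatenate([r1, r2]))
        X1, X2 = X1 + dz[:m], X2 + dz[m:]
        if np.max(np.abs(dz)) < 1e-13:
            break
    return nodes, X1 @ Phi, X2 @ Phi                          # values at the nodes


def rk4_reference(alpha, x10, x20, n=1000):
    """Deterministic (sigma = 0) state at t = 1 by classical RK4."""
    def f(y):
        return np.array([y[1], alpha * y[0] - y[0] ** 3 - y[1]])
    y, dt = np.array([x10, x20], dtype=float), 1.0 / n
    for _ in range(n):
        a = f(y)
        b = f(y + 0.5 * dt * a)
        c = f(y + 0.5 * dt * b)
        d = f(y + dt * c)
        y = y + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
    return y


k, M, refine = 2, 4, 20
nodes, x1, x2 = solve_duffing_vdp(k, M, alpha=0.0, sigma=0.0, x10=-0.5, x20=0.0)
ref = rk4_reference(0.0, -0.5, 0.0)

rng = np.random.default_rng(seed=0)
n_fine = (2 ** k * M - 1) * refine
B_fine = np.concatenate([[0.0], np.cumsum(rng.normal(0.0, np.sqrt(1.0 / n_fine), n_fine))])
_, x1_s, x2_s = solve_duffing_vdp(k, M, 0.0, 1.0, -0.5, 0.0, B_fine=B_fine, refine=refine)
```

Under these particular stand-in matrices the nodal values satisfy the implicit trapezoidal rule, so the agreement with RK4 is second order in \(1/(\hat{m}-1)\); the paper's own operational matrices may behave differently.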

5.4 Stochastic Brusselator problem

The stochastic Brusselator problem is given in [51] as follows:

where \(\alpha \) and \(\beta \) are real constants.

The deterministic Brusselator (\(\alpha =0\)) was developed on the occasion of a scientific congress in Brussels, Belgium, as a simple model for bifurcations in chemical reactions [51].

We can write the integral form of the two-dimensional SDE (70) as follows:

To solve Eq. (71) by the proposed computational method, we approximate X(t) and Y(t) by the LWs as:

where X and Y are the unknown LWs coefficient vectors to be determined, and \(\Psi (t)\) is the vector defined in (11).

Also from Eq. (72) and Theorem 2.5, we have:

where \(\widetilde{X}^{T}=X^{T}\Phi _{\hat{m}\times \hat{m}}\) and \(\widetilde{Y}^{T}=Y^{T}\Phi _{\hat{m}\times \hat{m}}\).
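The nonlinear terms in Step 8 of Algorithm 4 can be formed in the same way as the cubic term of the Duffing–Van der Pol oscillator: \(\widetilde{X}^{T}\) and \(\widetilde{Y}^{T}\) hold the values of X and Y at the nodes of Step 3, products are taken entrywise (\(\odot \)), and multiplication by \(\Phi _{\hat{m}\times \hat{m}}^{-1}\) returns LWs coefficients. The sketch below assumes \(\psi _{nm}(t)=2^{k/2}\sqrt{2m+1}\,P_{m}(2^{k+1}t-2n-1)\) on \([n/2^{k},(n+1)/2^{k})\) (hypothetical helper lw_basis) and checks the result on X(t) = t, Y(t) = 1 − t, for which \(X^2Y+2XY\) and \(X^2\) have degree at most three and so should be reproduced exactly when \(M\ge 4\).

```python
import numpy as np
from numpy.polynomial import legendre


def lw_basis(t, k, M):
    """Hypothetical helper: Psi(t) under the assumed orthonormal convention."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    n = np.minimum(np.floor(t * 2 ** k).astype(int), 2 ** k - 1)  # t = 1 -> last piece
    x = 2.0 ** (k + 1) * t - 2.0 * n - 1.0
    psi = np.zeros((2 ** k * M, t.size))
    for m in range(M):
        psi[n * M + m, np.arange(t.size)] = (
            2 ** (k / 2) * np.sqrt(2 * m + 1) * legendre.legval(x, np.eye(M)[m]))
    return psi


k, M = 2, 4
m_hat = 2 ** k * M
nodes = np.linspace(0.0, 1.0, m_hat)
Phi = lw_basis(nodes, k, M)
Phi_inv = np.linalg.inv(Phi)

X = np.linalg.solve(Phi.T, nodes)         # coefficients of X(t) = t
Y = np.linalg.solve(Phi.T, 1.0 - nodes)   # coefficients of Y(t) = 1 - t
X_t, Y_t = X @ Phi, Y @ Phi               # tilde X^T and tilde Y^T (nodal values)

# coefficient vectors of X^2 Y + 2XY (drift) and X^2 (noise term), as in Step 8
nonlinear = ((X_t ** 2) * Y_t + 2.0 * (X_t * Y_t)) @ Phi_inv
square = (X_t ** 2) @ Phi_inv

t_test = np.array([0.05, 0.3, 0.55, 0.8])
approx_nl = nonlinear @ lw_basis(t_test, k, M)
approx_sq = square @ lw_basis(t_test, k, M)
```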

Moreover, by expanding \(X_{0}\) and \(Y_{0}\) in terms of the LWs, we have:

where e is the LWs coefficient vector of the unit function.

So, by substituting Eqs. (72)–(74) into (71) and using Eqs. (14) and (31), we have:

Now, from Eq. (75), we can write the residual functions \(R_{1}(t)\) and \(R_{2}(t)\) for system (71) as follows:

As in a typical Galerkin method, we generate \(2\hat{m}\) nonlinear algebraic equations:

Finally, by solving system (77) with respect to the unknown vectors X and Y, we obtain the approximate solutions of the problem as \(X(t)\simeq X^{T}\Psi (t)\) and \(Y(t)\simeq Y^{T}\Psi (t)\).

The algorithm of the proposed computational method is presented as follows:

Algorithm 4

Input: \(M\in {\mathbb {N}},\,k,N\in {\mathbb {Z}}^{+}\); Brownian motion process B(t); \(\alpha ,\,\beta ,\,X_{0}\) and \(Y_{0}\).

Step 1: Define the LWs \(\psi _{nm}(t)\) from Eq. (5).

Step 2: Construct the LWs vector \(\Psi (t)\) from Eq. (11).

Step 3: Compute the LWs matrix \(\Phi _{\hat{m}\times \hat{m}}\triangleq \left[ \Psi (0),\Psi \left( \frac{1}{\hat{m}-1}\right) ,\ldots ,\Psi (1)\right] \).

Step 4: Compute the integration operational matrix P using Eqs. (31)–(33) and the SOM \(\hat{P}_s\) using Eq. (25).

Step 5: Compute the LWs stochastic operational matrix \(P_s=\Phi _{\hat{m}\times \hat{m}}\hat{P}_s\Phi ^{-1}_{\hat{m}\times \hat{m}}\).

Step 6: Compute the vector \(e^{T}\) using Eq. (8).

Step 7: Compute the vectors \(\widetilde{X}^{T}=X^{T}\Phi _{\hat{m}\times \hat{m}}\) and \(\widetilde{Y}^{T}=Y^{T}\Phi _{\hat{m}\times \hat{m}}\).

Step 8: Put \(\displaystyle \left\{ \begin{array}{l} \displaystyle R_{1}(t)=\left( X^{T}-X_{0}e^{T}-\left\{ \left( \beta -1\right) X^{T}\right. \right. \\ \left. \left. \!+\!\left[ \left( \left( \widetilde{X}^{T}\right) ^{2}\odot \widetilde{Y}^{T}\right) \!+\!2\left( \widetilde{X}^{T}\odot \widetilde{Y}^{T}\right) \right] \Phi _{\hat{m}\times \hat{m}}^{-1}\!+\!Y^{T}\right\} P\right. \\ \left. \qquad -\alpha \left\{ X^{T}+\left( \widetilde{X}^{T}\right) ^{2}\Phi _{\hat{m}\times \hat{m}}^{-1}\right\} P_{s}\right) \Psi (t),\\ \displaystyle R_{2}(t)\!=\! \left( Y^{T}-Y_{0}e^{T}\!+\!\left\{ \beta X^{T}\!+\!\left[ \left( \left( \widetilde{X}^{T}\right) ^{2}\odot \widetilde{Y}^{T}\right) \right. \right. \right. \\ \left. \left. \left. +2\left( \widetilde{X}^{T}\odot \widetilde{Y}^{T}\right) \right] \Phi _{\hat{m}\times \hat{m}}^{-1}+Y^{T}\right\} P\right. \\ \left. \qquad +\alpha \left\{ X^{T}+\left( \widetilde{X}^{T}\right) ^{2}\Phi _{\hat{m}\times \hat{m}}^{-1}\right\} P_{s}\right) \Psi (t). \end{array}\right. \).

Step 9: Construct the nonlinear system of algebraic equations:

\(\displaystyle \left\{ \begin{array}{c} \displaystyle \left( R_{1}(t),\psi _{j}(t)\right) =\int _{0}^{1}R_{1}(t)\psi _{j}(t)\mathrm{d}t=0, \quad j=1,2,\ldots ,\hat{m}, \\ \displaystyle \left( R_{2}(t),\psi _{j}(t)\right) =\int _{0}^{1}R_{2}(t)\psi _{j}(t)\mathrm{d}t=0, \quad j=1,2,\ldots ,\hat{m}, \end{array}\right. \)

Step 10: Solve the nonlinear system of algebraic equations in Step 9 and obtain the unknown vectors X and Y.

Output: The approximate solutions: \(X(t)\simeq X^{T}\Psi (t)\) and \(Y(t)\simeq Y^T\Psi (t)\).
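A minimal sketch of Algorithm 4 in the deterministic case \(\alpha =0\) on \([0,1]\) follows; the example below runs on [0, 6.5], which would need a time rescaling not shown here. System (70) is not reproduced in this section, so the drift \(X'=(\beta -1)X+X^2Y+2XY+Y\), \(Y'=-(\beta X+X^2Y+2XY+Y)\) used for the RK4 check is read off from Step 8. The sketch further assumes \(\psi _{nm}(t)=2^{k/2}\sqrt{2m+1}\,P_{m}(2^{k+1}t-2n-1)\) on \([n/2^{k},(n+1)/2^{k})\), a hat-function stand-in for P in place of Eqs. (31)–(33), and a plain fixed-point (Picard) iteration on \(R_1=R_2=0\) in place of the unspecified solver of Step 10. The optional Ps_hat argument lets a stochastic operational matrix built as in Step 4 be plugged in for \(\alpha \ne 0\). All function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre


def lw_basis(t, k, M):
    """Hypothetical helper: Psi(t) under the assumed orthonormal convention."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    n = np.minimum(np.floor(t * 2 ** k).astype(int), 2 ** k - 1)  # t = 1 -> last piece
    x = 2.0 ** (k + 1) * t - 2.0 * n - 1.0
    psi = np.zeros((2 ** k * M, t.size))
    for m in range(M):
        psi[n * M + m, np.arange(t.size)] = (
            2 ** (k / 2) * np.sqrt(2 * m + 1) * legendre.legval(x, np.eye(M)[m]))
    return psi


def hat_integration_matrix(nodes):
    """Hat-function stand-in: P_hat[i, j] = int_0^{t_j} h_i(s) ds (exact)."""
    m, h = nodes.size, nodes[1] - nodes[0]
    i, j = np.meshgrid(np.arange(m), np.arange(m), indexing="ij")
    P_hat = np.where(j > i, h, 0.0) + np.where((j == i) & (i > 0), h / 2, 0.0)
    P_hat[0, 1:] = h / 2  # the first hat has only its right half in [0, 1]
    return P_hat


def solve_brusselator(k, M, beta, x0, y0, alpha=0.0, Ps_hat=None, iters=500):
    m = 2 ** k * M
    nodes = np.linspace(0.0, 1.0, m)                  # Step 3 nodes
    Phi = lw_basis(nodes, k, M)
    Phi_inv = np.linalg.inv(Phi)
    P = Phi @ hat_integration_matrix(nodes) @ Phi_inv
    Ps = np.zeros((m, m)) if Ps_hat is None else Phi @ Ps_hat @ Phi_inv
    e = np.zeros(m)
    e[::M] = 2 ** (-k / 2)                            # unit function
    X, Y = x0 * e, y0 * e                             # constant initial guess
    for _ in range(iters):
        Xt, Yt = X @ Phi, Y @ Phi                     # Step 7
        nl = ((Xt ** 2) * Yt + 2.0 * Xt * Yt) @ Phi_inv
        noise = (X + (Xt ** 2) @ Phi_inv) @ Ps
        # Picard update: set R1 = R2 = 0 from Step 8 and solve for X and Y
        X_new = x0 * e + ((beta - 1.0) * X + nl + Y) @ P + alpha * noise
        Y_new = y0 * e - (beta * X + nl + Y) @ P - alpha * noise
        step = max(np.max(np.abs(X_new - X)), np.max(np.abs(Y_new - Y)))
        X, Y = X_new, Y_new
        if step < 1e-13:
            break
    return nodes, X @ Phi, Y @ Phi                    # values at the nodes


def rk4_reference(beta, x0, y0, n=1000):
    """Deterministic (alpha = 0) state at t = 1 by classical RK4."""
    def f(z):
        common = z[0] ** 2 * z[1] + 2.0 * z[0] * z[1] + z[1]
        return np.array([(beta - 1.0) * z[0] + common, -(beta * z[0] + common)])
    z, dt = np.array([x0, y0], dtype=float), 1.0 / n
    for _ in range(n):
        a = f(z)
        b = f(z + 0.5 * dt * a)
        c = f(z + 0.5 * dt * b)
        d = f(z + dt * c)
        z = z + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
    return z


nodes, x, y = solve_brusselator(k=2, M=4, beta=2.0, x0=-0.1, y0=0.0)
ref = rk4_reference(2.0, -0.1, 0.0)
print(x[-1], ref[0], y[-1], ref[1])
```

A fixed-point sweep is adequate for this mild example because the equations are of Volterra type; stiffer parameter choices may call for Newton's method instead.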

As a numerical example, we consider the stochastic Brusselator problem (70) with \(\beta =2\) and several values of \(\alpha \) over the interval [0, 6.5], starting at \(\left( X_{0},Y_{0}\right) =(-0.1,0.0)\). This problem is solved by the proposed method with \(\hat{m}=80\) and \(N=35\). The behavior of the numerical solutions in the phase space is shown in Figs. 11 and 12, where the non-noisy curve is the corresponding deterministic limit cycle.

6 Conclusion

Some SDEs can be written as nonlinear stochastic Volterra integral equations of the form (1). Exact solutions of such problems are often impossible to find, so it is desirable to compute their solutions with a stochastic numerical method. In this paper, the SOM of Itô integration for the LWs was derived and applied to solve nonlinear stochastic Itô–Volterra integral equations. A new technique for computing the nonlinear terms of the problems under study was also presented, and some useful properties of the LWs were derived and used to solve these problems. The applicability and accuracy of the proposed method were checked on several examples, and its results were in good agreement with the exact solutions. Furthermore, the proposed method was applied to obtain approximate solutions of some stochastic problems in mathematical finance, biology, physics and chemistry.

References

Heydari, M.H., Hooshmandasl, M.R., Ghaini, F.M.M., Fereidouni, F.: Two-dimensional Legendre wavelets for solving fractional Poisson equation with Dirichlet boundary conditions. Eng. Anal. Boundary Elem. 37, 1331–1338 (2013)

Sohrabi, S.: Comparison Chebyshev wavelets method with BPFs method for solving Abel’s integral equation. Ain Shams Eng. J. 2, 249–254 (2011)

Canuto, C., Hussaini, M., Quarteroni, A., Zang, T.: Spectral Methods in Fluid Dynamics. Springer, New York (1988)

Spencer, B.F., Jr., Bergman, L.A.: On the numerical solution of the Fokker–Planck equation for nonlinear stochastic systems. Nonlinear Dyn. 4, 357–372 (1993)

Zeng, C., Yang, Q., Chen, Y.Q.: Solving nonlinear stochastic differential equations with fractional Brownian motion using reducibility approach. Nonlinear Dyn. 67, 2719–2726 (2012)

Mamontov, Y.V., Willander, M.: Long asymptotic correlation time for non-linear autonomous Itô stochastic differential equation. Nonlinear Dyn. 12, 399–411 (1997)

van de Wouw, N., Nijmeijer, H., van Campen, D.H.: A Volterra series approach to the approximation of stochastic nonlinear dynamics. Nonlinear Dyn. 4, 397–409 (2002)

Mahmoudkhani, M., Haddadpour, H.: Nonlinear vibration of viscoelastic sandwich plates under narrow-band random excitations. Nonlinear Dyn. 1, 165–188 (2013)

Levin, J.J., Nohel, J.A.: On a system of integro-differential equations occurring in reactor dynamics. J. Math. Mech. 9, 347–368 (1960)

Miller, R.K.: On a system of integro-differential equations occurring in reactor dynamics. SIAM J. Appl. Math. 14, 446–452 (1966)

Khodabin, M., Maleknejad, K., Rostami, M., Nouri, M.: Interpolation solution in generalized stochastic exponential population growth model. Appl. Math. Model. 36, 1023–1033 (2012)

Oğuztöreli, M.N.: Time-Lag Control Systems. Academic Press, New York (1966)

Maleknejad, K., Khodabin, M., Rostami, M.: Numerical solutions of stochastic Volterra integral equations by a stochastic operational matrix based on block pulse functions. Math. Comput. Model. 55, 791–800 (2012)

Khodabin, M., Maleknejad, K., Rostami, M., Nouri, M.: Numerical approach for solving stochastic Volterra–Fredholm integral equations by stochastic operational matrix. Comput. Math. Appl. 64, 1903–1913 (2012)

Maleknejad, K., Khodabin, M., Rostami, M.: A numerical method for solving \(m\)-dimensional stochastic Itô–Volterra integral equations by stochastic operational matrix. Comput. Math. Appl. 63, 133–143 (2012)

Cao, Y., Gillespie, D., Petzold, L.: Adaptive explicit–implicit tau-leaping method with automatic tau selection. J. Chem. Phys. 126, 1–9 (2007)

Rué, P., Villà-Freixa, J., Burrage, K.: Simulation methods with extended stability for stiff biochemical kinetics. BMC Syst. Biol. 4(110), 1–13 (2010)

Elworthy, K., Truman, A., Zhao, H., Gaines, J.: Approximate traveling waves for generalized KPP equations and classical mechanics. Proc. R. Soc. Lond. Ser. A 446(1928), 529–554 (1994)

Platen, E., Bruti-Liberati, N.: Numerical Solution of Stochastic Differential Equations with Jumps in Finance. Springer, Berlin (2010)

Khodabin, M., Maleknejad, K., Rostami, M., Nouri, M.: Numerical solution of stochastic differential equations by second order Runge–Kutta methods. Appl. Math. Model. 53, 1910–1920 (2011)

Kloeden, P.E., Platen, E.: Numerical Solution of Stochastic Differential Equations. Springer, Berlin (1999)

Cortes, J.C., Jodar, L., Villafuerte, L.: Numerical solution of random differential equations: a mean square approach. Math. Comput. Model. 45, 757–765 (2007)

Cortes, J.C., Jodar, L., Villafuerte, L.: Mean square numerical solution of random differential equations: facts and possibilities. Comput. Math. Appl. 53, 1098–1106 (2007)

Oksendal, B.: Stochastic Differential Equations: An Introduction with Applications. Springer, New York (1998)

Holden, H., Oksendal, B., Uboe, J., Zhang, T.: Stochastic Partial Differential Equations. Springer, Berlin (1996)

Abdulle, A., Blumenthal, A.: Stabilized multilevel Monte Carlo method for stiff stochastic differential equations. J. Comput. Phys. 251, 445–460 (2013)

Berger, M., Mizel, V.: Volterra equations with Ito integrals I. J. Integral Equ. 2, 187–245 (1980)

Murge, M., Pachpatte, B.: On second order Ito type stochastic integro-differential equations. Analele Stiintifice ale Universitatii. I. Cuza din Iasi, Mathematica, 30(5), 25–34 (1984)

Murge, M., Pachpatte, B.: Successive approximations for solutions of second order stochastic integro-differential equations of Ito type. Indian J. Pure Appl. Math. 21(3), 260–274 (1990)

Zhang, X.: Euler schemes and large deviations for stochastic Volterra equations with singular kernels. J. Differ. Equ. 244, 2226–2250 (2008)

Jankovic, S., Ilic, D.: One linear analytic approximation for stochastic integro-differential equations. Acta Math. Sci. 30B(4), 1073–1085 (2010)

Zhang, X.: Stochastic Volterra equations in Banach spaces and stochastic partial differential equation. J. Funct. Anal. 258, 1361–1425 (2010)

Yong, J.: Backward stochastic Volterra integral equations and some related problems. Stoch. Process. Appl. 116, 779–795 (2006)

Djordjevic, A., Jankovic, S.: On a class of backward stochastic Volterra integral equations. Appl. Math. Lett. (2013). doi:10.1016/j.aml.2013.07.006

Heydari, M.H., Hooshmandasl, M.R., Mohammadi, F.: Legendre wavelets method for solving fractional partial differential equations with Dirichlet boundary conditions. Appl. Math. Comput. 234, 267–276 (2014)

Heydari, M.H., Hooshmandasl, M.R., Mohammadi, F.: Two-dimensional Legendre wavelets for solving time-fractional telegraph equation. Adv. Appl. Math. Mech. 6(2), 247–260 (2014)

Heydari, M.H., Hooshmandasl, M.R., Cattani, C., Li, M.: Legendre wavelets method for solving fractional population growth model in a closed system. Math. Probl. Eng. 2013, 1–8 (2013)

Heydari, M.H., Hooshmandasl, M.R., Ghaini, F.M., Mohammadi, F.: Wavelet collocation method for solving multiorder fractional differential equations. J. Appl. Math. 2012, 1–19 (2012)

Heydari, M.H., Hooshmandasl, M.R., Ghaini, F.M.M., Cattani, C.: Wavelets method for the time fractional diffusion-wave equation. Phys. Lett. A 379, 71–76 (2015)

Heydari, M.H., Hooshmandasl, M.R., Maalek Ghaini, F.M., Fatehi Marji, M., Dehghan, R., Memarian, M.H.: A new wavelet method for solving the Helmholtz equation with complex solution. Numer. Methods Partial Differ. Equ. 32(3), 741–756 (2016)

Heydari, M.H., Hooshmandasl, M.R., Barid Loghmani, Gh., Cattani, C.: Wavelets Galerkin method for solving stochastic heat equation. Int. J. Comput. Math. (2015). doi:10.1080/00207160.2015.1067311

Babolian, E., Mordad, M.: A numerical method for solving systems of linear and nonlinear integral equations of the second kind by hat basis function. Comput. Math. Appl. 62, 187–198 (2011)

Tripathi, M.P., Baranwal, V.K., Pandey, R.K., Singh, O.P.: A new numerical algorithm to solve fractional differential equations based on operational matrix of generalized hat functions. Commun. Nonlinear Sci. Numer. Simul. 18, 1327–1340 (2013)

Heydari, M.H., Hooshmandasl, M.R., Ghaini, F.M.M., Cattani, C.: A computational method for solving stochastic Itô–Volterra integral equations based on stochastic operational matrix for generalized hat basis functions. J. Comput. Phys. 270, 402–415 (2014)

Heydari, M.H., Hooshmandasl, M.R., Cattani, C., Ghaini, F.M.M.: An efficient computational method for solving nonlinear stochastic Itô–Volterra integral equations: application for stochastic problems in physics. J. Comput. Phys. 283, 148–168 (2015)

Kloeden, P.E., Platen, E.: Numerical Solution of Stochastic Differential Equations. Springer, Berlin (1995)

Schwartz, E.S.: The stochastic behavior of commodity prices: implications for valuation and hedging. J. Finance 52, 923–973 (1997)

Jackwerth, J., Rubinstein, M.: Recovering probability distributions from contemporaneous security prices. J. Finance 51(5), 1611–1631 (1996)

Rubinstein, M.: Nonparametric tests of alternative option pricing models. J. Finance 40(2), 455–480 (1985)

Arató, M.: A famous nonlinear stochastic equation (Lotka–Volterra model with diffusion). Math. Comput. Model. 38, 709–726 (2003)

Henderson, D., Plaschko, P.: Stochastic Differential Equations in Science and Engineering. World Scientific, Singapore (2006)


Cite this article

Heydari, M.H., Hooshmandasl, M.R., Shakiba, A. et al. Legendre wavelets Galerkin method for solving nonlinear stochastic integral equations. Nonlinear Dyn 85, 1185–1202 (2016). https://doi.org/10.1007/s11071-016-2753-x


DOI: https://doi.org/10.1007/s11071-016-2753-x