Abstract

Alzheimer’s disease (AD) is a chronic, degenerative brain disorder for which there is currently no cure, although some medications can slow its progression. Early diagnosis is therefore essential for slowing and controlling the development of AD. The primary objective of the proposed method is to establish a comprehensive model for the early detection of Alzheimer’s disease and the classification of distinct AD stages. This work employs a deep learning methodology, specifically convolutional neural networks (CNNs). Following the transfer learning principle, the proposed approach builds on well-known pre-trained image classification models such as EfficientNetB7. CNNs are commonly designed under a fixed resource budget and then scaled up for greater accuracy when more resources become available. The pre-trained EfficientNetB7 model is built on a CNN architecture and scaling approach that uses a single compound coefficient to scale network depth, width, and input resolution uniformly. The proposed EfficientNetB7-based model is faster, simpler, and more effective than other pre-trained models such as VGG19 and InceptionV3. It has a comparatively simple structure with manageable memory and time requirements, reduced overfitting, low computational complexity, and fast training and inference. The method was comprehensively evaluated on the Alzheimer’s Disease Neuroimaging Initiative (ADNI) dataset using well-known performance metrics, including sensitivity, specificity, and accuracy. The results show improved accuracy compared with existing methods. For multi-class AD stage classification, the enhanced EfficientNetB7 model achieves a sensitivity of 98.08%, a specificity of 98%, an accuracy of 98.2%, and an F-score of 98.95%.


1 Introduction

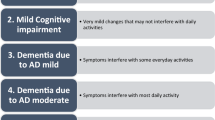

Alzheimer’s disease is a brain disorder that usually progresses slowly and worsens over time [1]. It is the underlying cause of 60–70% of dementia cases. The most frequent early sign is difficulty remembering recent events. As the illness worsens, symptoms may include behavioral problems, mood swings, language difficulties, confusion, self-neglect, and a lack of motivation. Body functions decline gradually, eventually leading to death. Life expectancy after diagnosis typically ranges from three to nine years, and the pace of progression varies between individuals. In the early phases of Alzheimer’s disease, patients may have difficulty recalling specific words or names, remembering the names of people they have just met, and functioning normally in social and occupational settings. Losing or misplacing valuable items, forgetting a paragraph just read in a book, and having to rely on workarounds are all signs that everyday tasks and activities are becoming progressively harder. A 2022 World Health Organization (WHO) assessment estimated that dementia, of which Alzheimer’s disease is the most common cause, affects 55 million people worldwide, with about 10 million new cases per year. Early diagnosis requires a knowledgeable medical expert, extensive data collection, and powerful prediction algorithms. Medical decision support systems can use automated tools, which are less susceptible to human error and can therefore improve diagnostic accuracy. Automating the diagnosis of Alzheimer’s disease is valuable because it speeds up the process and reduces the need for human involvement, which can also lower overall costs. Clinical diagnosis of Alzheimer’s disease is particularly difficult in the early stages. Fig. 1 illustrates the progression of Alzheimer’s disease (AD), from mild cognitive impairment (MCI) to the advanced stages of AD.

Progression from mild cognitive impairment (MCI) to severe Alzheimer’s disease (AD) [2]

Computational neuroscience methods have proved highly beneficial in translational applications to mental health. This broad field of research can help translate the molecular mechanisms that govern both healthy function and pathological states of the human brain into detectable clinical manifestations. Recent advances in machine learning and the rapid creation of large biomedical datasets have opened new opportunities to diagnose and predict neurodegenerative and neuropsychiatric illnesses [3]. These developments have driven the creation of computational tools that aim to improve treatment outcomes for such patients by making predictions from a variety of patient-specific data. Fig. 2 depicts the prevalence of AD in the US by age.

AD prevalence in the US according to age [4]

Some medications are designed to alleviate the effects of Alzheimer’s disease in its earlier stages. Nonetheless, because AD progresses irreversibly, timely detection is vital from clinical, social, and financial perspectives. Advances in imaging and computational technology have made earlier diagnosis, and the clinical procedures that follow it, possible. However, precise and fast computational tools are still needed to diagnose neurological disorders quickly. AD causes both structural and functional abnormalities in the brain. Based on morphological and structural alterations, cases are divided into three groups: Alzheimer’s disease (AD), mild cognitive impairment (MCI), and normal control (NC). MCI is an intermediate stage between NC and AD. In therapeutic contexts, both quantitative and qualitative diagnosis of AD is important, as it can shorten treatment duration and improve quality of life for patients and their caregivers. Only a few of the most recent approaches are designed specifically for early AD prediction. The proposed technique analyzes magnetic resonance images (MRI) using deep learning combined with advanced machine learning algorithms.

Traditionally, feature extraction has been performed manually, which is time-consuming, subjective, and highly dependent on technical expertise. Convolutional neural networks (CNNs) are a promising way to resolve these problems [5]. CNNs have shown exceptional performance in AD diagnosis, and because they extract features automatically, their usefulness can be further increased [6, 7]. This approach primarily aims to provide a robust method for the early identification of the disease and the classification of MRI images into distinct AD stages. The AD spectrum is divided here into four stages. Following the transfer learning principle, the proposed approach uses pre-trained image classification models such as EfficientNetB7. CNNs are commonly designed under a fixed resource budget and then scaled up for improved accuracy when more resources are available. The pre-trained EfficientNetB7 model is based on a CNN architecture and scaling method that uses a compound coefficient to scale depth, width, and resolution equally. The proposed EfficientNetB7 model is faster, simpler, and more effective than other pre-trained models such as VGG19 and InceptionV3. It has a comparatively simple structure with manageable memory and time requirements, reduced overfitting, low computational complexity, and fast training and inference. The proposed method was thoroughly evaluated with all the performance metrics on the Alzheimer’s Disease Neuroimaging Initiative (ADNI) dataset, and it achieved better accuracy than state-of-the-art methods.
The enhanced EfficientNetB7 pre-trained model achieves a sensitivity of 98.08%, a specificity of 98.00%, an accuracy of 98.20%, and an F-score of 98.95% for multi-class AD stage classification.

The contributions of this study can be summarized as follows:

- The primary objective is to present a robust approach for the early identification of Alzheimer’s disease and the classification of MRI images into various AD stages, using a deep learning model, specifically a CNN.
- The CNN architecture and scaling approach underlying the pre-trained EfficientNetB7 model uses a compound coefficient to scale depth, width, and resolution uniformly.
- The model achieves high accuracy with a comparatively simple structure, manageable memory and time requirements, reduced overfitting, low computational complexity, and fast training and inference.

The remainder of this paper is organized as follows. Section 2, "Related Work", reviews relevant prior work. Section 3, "Materials and Methods", presents the problem statement and the proposed techniques. Section 4, "Experimentation and Analysis", evaluates the experiments and their findings. Section 5, "Analysis of Proposed Method", and Section 6, "Conclusion", summarize the paper.

2 Related works

Identifying AD is a complex task and has been the subject of in-depth research. Payan et al. [8] used 3D convolutional neural networks together with a sparse autoencoder to build an algorithm that assesses a person’s disease state from brain MRI. Their main contribution was the use of 3D convolutions, which outperformed 2D convolutions. The convolutional layer was pre-trained with an autoencoder and not fine-tuned afterward; fine-tuning would be expected to improve performance [9].

J. Neelaveni et al. [10] proposed using various machine learning algorithms to predict AD. The model’s inputs included age, education, the Mini-Mental State Examination (MMSE) score, and other psychological variables; a decline in a person’s MMSE score over time is taken as an indication of AD. Decision trees and SVM were used to predict AD, and their performance was compared with that of other machine learning methods. However, only a single metric, accuracy, was considered, and the resulting accuracy was noticeably lower than that of other models; among the methods compared, SVM achieved comparatively high accuracy. The approach can diagnose an individual’s condition and distinguish it from cognitive decline.

Sakshi et al. [11] recommended combining shallow and deep learning approaches to detect Alzheimer’s disease. Various methods use shallow learning techniques, which also provide deeper insight into clinical AD diagnosis. Shallow learning refers to machine learning methods whose performance plateaus as more training instances and data are added. In this investigation, Pearson’s test from the Sabon Library was applied to the relevant attributes, along with the Mann–Whitney test and a correlation matrix. The dataset was tested with XGBoost, gradient boosting, AdaBoost, a random forest classifier (RFC), linear SVM, a decision tree classifier, logistic regression, and radial-basis SVM. Srinivasan Aruchamy et al. [12] proposed a method that applies ML techniques to 3D MRI scans for AD. The study used a novel method to discriminate between gray and white matter in 3D images and to identify the disease at an early stage, since gray and white matter are the brain tissues most commonly affected in AD patients.

Sarraf et al. [13] used the well-established LeNet-5 CNN architecture to distinguish AD brains from NC brains (binary classification). Hosseini et al. conducted the study described in [8], predicting AD with a Deeply Supervised Adaptive (DSA) 3D-CNN classifier. Three-level 3D convolutional autoencoder networks were trained on the CAD-Dementia dataset without prior skull stripping [14], and performance was assessed with tenfold cross-validation. Korolev et al. [15] showed that plain 3D CNN and residual network architectures applied to 3D structural brain MRI can achieve comparable performance, although these deep and complex networks did not reach the anticipated performance levels.

Wang et al. [16] investigated an eight-layer CNN structure in which six convolutional layers and two fully connected layers were used for feature extraction. Khvostikov et al. [17] used DTI and sMRI to evaluate hippocampal regions of interest (ROIs) and compared the efficiency of their 3D Inception-based approach with that of an AlexNet-based network; the 3D Inception network performed better than AlexNet.

Sahumbaiev et al. [18] proposed the HadNet architecture to explore MRI across the Alzheimer’s spectrum. The Statistical Parametric Mapping (SPM) toolbox spatially normalizes the MRI dataset and removes the skull to improve training. Sensitivity and specificity are expected to improve as the HadNet architecture is developed further. Spasov et al. [19] proposed a model that incorporates apolipoprotein E ε4 (APOe4) status, with clinical assessments, MRI scans, and genetic test results as inputs. Compared with pre-trained models such as AlexNet [20] and VGGNet [21], the model had a substantially lower computational cost, memory demand, risk of overfitting, and number of parameters, and allowed faster prototyping. Wang et al. [22] introduced a CNN framework based on multi-modal MRI analysis using either functional MRI or DTI data. The framework was used to classify patients into NC, AD, and amnestic mild cognitive impairment (aMCI) groups. Although the classification accuracy was fairly high, 3D convolution was expected to perform better than 2D convolution.

Khagi et al. [23] proposed shallow fine-tuning of pre-trained models such as AlexNet, GoogLeNet, and ResNet50. Their main objective was to determine how each section of layers affected the results for both natural-image and diagnostic-image classification. Jain et al. [24] proposed a mathematical model, PFSECTL, based on pre-trained CNN and VGG-16 models. The model served as a feature extractor for the classification task, with particular emphasis on transfer learning.

Ge et al. [25] proposed a multi-scale 3D CNN (3DMSCNN), a novel architecture for diagnosing AD, together with a feature fusion method and a multi-scale feature enhancement strategy. Song et al. [26] developed a graph-based CNN classifier grounded in graph theory. To divide the AD spectrum into four groups, they trained and evaluated a multi-class network using structural connectivity graphs as input. Liu et al. [27] developed an AD detection method based on voice data, in which spectrogram features were derived from recordings of elderly speakers and classified with machine learning techniques; logistic regression gave the best results among the models evaluated. Liu et al. [28] also proposed a multi-model deep learning approach that integrates automatic hippocampus segmentation with AD classification on structural MRI data. Features from a multi-task CNN model and a 3D DenseNet model were combined to determine disease status.

Impedovo et al. [29] presented a protocol that administers a "cognitive model" to both healthy volunteers and patients with cognitive impairment in order to assess the correlation between cognitive processes and handwriting. The primary goal was a quick, painless method for detecting and monitoring neurodegenerative dementia during screening and follow-up. Harshit et al. [30] applied a 3D CNN architecture to 4D fMRI data to classify four AD stages (AD, NC, EMCI, and LMCI). Numerous other researchers have proposed novel CNN architectures for 3D MRI that address various AD stage classifications, including densely connected 3D convolutional networks (3D DenseNets) applied to 3D MRI for four-way classification [31,32,33,34,35,36, 12]. Several authors have proposed a variety of deep learning approaches for Alzheimer’s disease [37,38,39,40,41,42,43,44,45,46,47,48], as well as other machine learning approaches [49,50,51,52,53,54,55,56,57,58].

Traditionally, feature extraction has been performed manually, which is time-consuming, subjective, and highly dependent on technical expertise. CNNs are a promising way to resolve these problems: they have shown exceptional performance in AD diagnosis, and because they extract features automatically, their usefulness can be further increased. Although numerous ML and DL methods exist, a robust framework is still needed for detecting Alzheimer’s disease early and classifying MRI images across the various AD stages. Following the transfer learning principle, the proposed method uses pre-trained image classification models such as EfficientNetB7.

3 Materials and methods

3.1 Materials

All training data are taken from the 2D MRI modality of the ADNI dataset, which provides sagittal, coronal, and axial images in DICOM format. The dataset comprises 300 people divided into four classes, AD, NC, EMCI, and LMCI, with 75 patients per class and a total of 21,816 images: 5764 in the AD class, 5817 in EMCI, 3460 in LMCI, and 6775 in NC. Each 2D image is 256 × 256 pixels. The experimental section evaluates the proposed technique with five measures: accuracy, sensitivity, specificity, F-score, and ROC-AUC. Sensitivity and specificity assess the model’s ability to recognize patients with normal control (NC), mild cognitive impairment (MCI), and Alzheimer’s disease (AD), while accuracy reflects the overall effectiveness of the proposed method. The experimental setup of the proposed method is given in Table 1.
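The reported per-class image counts can be cross-checked with a few lines of Python. Summing them gives the dataset total, and the per-class shares show that the classes are imbalanced, with LMCI under-represented relative to NC. This is only an arithmetic check on the figures stated above; the images-per-patient column assumes images are spread evenly over the 75 patients in each class, which the text does not state.

```python
# Per-class image counts for the ADNI subset, as stated in the text.
image_counts = {"AD": 5764, "EMCI": 5817, "LMCI": 3460, "NC": 6775}
patients_per_class = 75

total_images = sum(image_counts.values())
total_patients = patients_per_class * len(image_counts)
print(f"patients: {total_patients}, images: {total_images}")

for label, n in image_counts.items():
    share = 100 * n / total_images
    # Average images per patient, assuming an even spread within the class.
    per_patient = n / patients_per_class
    print(f"{label}: {n} images ({share:.1f}% of data), ~{per_patient:.0f} per patient")
```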

3.2 Methods

As the Related Work section shows, there are many techniques for AD detection and classification. However, most existing works do not employ multi-class classification or transfer learning strategies, and the literature has not thoroughly addressed these aspects. The novelty of this study therefore lies in responding to the need, highlighted by the literature, for new AD detection methods that can accommodate a diverse range of variations. Following the transfer learning principle, the proposed method can use pre-trained image classification models such as EfficientNetB7. CNNs are commonly developed under a fixed resource budget and then scaled up for improved accuracy when more resources become available. The pre-trained EfficientNetB7 model is built on a CNN architecture and scaling approach that uses a compound coefficient to scale network depth, width, and resolution uniformly.

This technique uses a pre-trained EfficientNetB7 model built on a CNN architecture to improve the reliability of early-stage identification of Alzheimer’s disease (AD). The proposed solution has separate offline training and online testing phases. The offline phase extracts features from the training MR images and stores a representation of those features in a database. The testing phase follows the same steps as the offline process up to feature extraction. Finally, the CNN classifier based on the pre-trained EfficientNetB7 model determines whether the input belongs to a patient with Alzheimer’s disease (AD), mild cognitive impairment (MCI), or normal control (NC). Fig. 3 gives a system overview of the proposed Deep Learning Model Based Early Detection of Alzheimer’s Disease Using MRI Images (DL-EDAD) method.

3.2.1 Pre-processing

Gaussian filtering is applied to the AD MRI scans to reduce noise by selectively blurring parts of the image. The Gaussian low-pass filter is implemented as an odd-sized kernel that is applied to each pixel of the region of interest. All images were resized to 256 × 256. Before a Gaussian filter is applied to an AD MRI image, the size of the kernel (the matrix used to process the image) must first be chosen; 3 × 3 and 5 × 5 are common choices. Odd sizes are used so that the kernel has a well-defined center pixel, to which the result of each weighted sum is assigned. Gaussian kernels are square and symmetric about that center. The values inside the kernel are computed with the Gaussian function
\[G\left(x,y\right)=\frac{1}{2\pi {\sigma }^{2}}\mathrm{exp}\left(-\frac{{x}^{2}+{y}^{2}}{2{\sigma }^{2}}\right),\]
where \(x\) and \(y\) are the horizontal and vertical offsets from the center pixel and \(\sigma\) is the standard deviation that controls the amount of smoothing.
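A minimal NumPy sketch of the Gaussian smoothing step, assuming grayscale 2D slices stored as arrays. The kernel uses the standard 2D Gaussian, proportional to exp(−(x² + y²)/(2σ²)), and is normalized so its weights sum to 1, which makes the 1/(2πσ²) factor unnecessary. The kernel size 5 is one of the sizes mentioned in the text; σ = 1.0, edge replication at the borders, and the function names `gaussian_kernel` and `gaussian_filter` are assumptions for illustration. In practice a library routine such as `scipy.ndimage.gaussian_filter` would normally replace the explicit loop.

```python
import numpy as np

def gaussian_kernel(size: int = 5, sigma: float = 1.0) -> np.ndarray:
    """Build a normalized size x size Gaussian kernel (size must be odd)."""
    if size % 2 == 0:
        raise ValueError("kernel size must be odd so it has a center pixel")
    half = size // 2
    # Offsets from the center pixel, e.g. -2..2 for a 5 x 5 kernel.
    ax = np.arange(-half, half + 1, dtype=np.float64)
    xx, yy = np.meshgrid(ax, ax)
    kernel = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    # Normalize so the weights sum to 1 and mean intensity is preserved.
    return kernel / kernel.sum()

def gaussian_filter(image: np.ndarray, size: int = 5, sigma: float = 1.0) -> np.ndarray:
    """Smooth a 2D image; borders are handled by replicating edge pixels."""
    kernel = gaussian_kernel(size, sigma)
    half = size // 2
    padded = np.pad(image.astype(np.float64), half, mode="edge")
    out = np.zeros(image.shape, dtype=np.float64)
    h, w = image.shape
    # Accumulate the weighted neighborhood sum one kernel tap at a time.
    # The kernel is symmetric, so correlation equals convolution here.
    for dy in range(size):
        for dx in range(size):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

rng = np.random.default_rng(0)
noisy_slice = rng.normal(loc=100.0, scale=20.0, size=(256, 256))  # synthetic stand-in for an MRI slice
smoothed = gaussian_filter(noisy_slice, size=5, sigma=1.0)
print(f"noise std before: {noisy_slice.std():.2f}, after: {smoothed.std():.2f}")
```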

3.2.2 Compound model scaling using EfficientNetB7 model

Fig. 4 depicts the CNN architecture of the proposed method based on the EfficientNetB7 model, together with a scaling mechanism that uses a compound coefficient to scale all network dimensions uniformly. Unlike conventional practice, which scales these factors arbitrarily, the chosen scaling method uniformly scales network width, depth, and resolution with a set of fixed scaling coefficients. If \({2}^{N}\) times more computing power is available, the network’s depth can simply be increased by \({\alpha }^{N}\), its width by \({\beta }^{N}\), and its image size by \({\gamma }^{N}\), where \(\alpha , \beta , \gamma\) are constant coefficients determined by a small grid search on the original small model. Let \(M\) be the number of individual models in the compound model scaling approach. The compound model \(C\) is defined as the weighted sum of these individual models:
\[C\left(x\right)={\sum }_{i=1}^{M}{w}_{i}{f}_{i}\left(x\right)\]

where \(x\) is the input AD image, \({f}_{i}\left(x\right)\) is the output of the \(i\)-th EfficientNetB7 model, and \({w}_{i}\) is the weight associated with the \(i\)-th model, with \({\sum }_{i=1}^{M}{w}_{i}=1\). The aim of this computation is to combine the predictions of several EfficientNetB7 models, each with its own learned features, into a more reliable and accurate prediction for the input AD image \(x\).

To determine the optimal weight \({w}_{i}\) for each individual model, a training process minimizes the overall loss function \({L}_{compound}\):
\[{L}_{compound}=\frac{1}{N}{\sum }_{j=1}^{N}L\left(C\left({x}_{j}\right),{y}_{j}\right)\]

where \(N\) is the number of training samples, \({x}_{j}\) is the \(j\)-th training AD image, \({y}_{j}\) is its ground-truth label, and \(L\left(.\right)\) is the chosen loss function, such as cross-entropy or mean squared error. The weights \({w}_{i}\) are updated during training by gradient descent to minimize the compound loss \({L}_{compound}\).
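A minimal NumPy sketch of how the mixing weights \({w}_{i}\) could be learned by gradient descent. Several details are assumptions not stated in the text: the \(M\) member models are already trained and frozen, so only the weights are optimized; \(L\) is cross-entropy; and the constraint that the weights are non-negative and sum to 1 is enforced by writing \(w = \mathrm{softmax}(a)\) over unconstrained logits \(a\) and descending on \(a\). The synthetic probabilities stand in for EfficientNetB7 outputs, and `fit_ensemble_weights` and `ensemble_predict` are hypothetical helper names.

```python
import numpy as np

def softmax(v):
    e = np.exp(v - v.max())  # shift by the max for numerical stability
    return e / e.sum()

def fit_ensemble_weights(probs, labels, lr=0.5, steps=500):
    """probs: (M, N, K) class probabilities from M frozen models on N images.
    labels: (N,) integer ground-truth classes. Returns w with w >= 0, sum(w) = 1."""
    M, N, _ = probs.shape
    logits = np.zeros(M)                     # start from equal weights 1/M
    p_true = probs[:, np.arange(N), labels]  # (M, N): prob. each model gives the true class
    for _ in range(steps):
        w = softmax(logits)
        q = w @ p_true                       # (N,): ensemble prob. of the true class
        # Cross-entropy L = -mean(log q), so dL/dw_i = -mean_j(p_ij / q_j).
        grad_w = -(p_true / q).mean(axis=1)
        # Chain rule through the softmax parameterization of w.
        grad_logits = w * (grad_w - w @ grad_w)
        logits -= lr * grad_logits
    return softmax(logits)

def ensemble_predict(probs, w):
    """C(x) = sum_i w_i f_i(x) for every image; returns an (N, K) array."""
    return np.tensordot(w, probs, axes=1)

# Synthetic example: one informative model and two uninformative ones.
rng = np.random.default_rng(0)
N, K = 200, 4
labels = rng.integers(0, K, size=N)
good = 0.7 * np.eye(K)[labels] + 0.3 / K      # mostly correct, rows sum to 1
noisy = rng.dirichlet(np.ones(K), size=N)     # random class distributions
probs = np.stack([good, noisy, noisy[::-1]])
w = fit_ensemble_weights(probs, labels)
print("learned weights:", np.round(w, 3))
```

The softmax parameterization keeps every gradient step inside the valid weight simplex, so no projection step is needed; with these synthetic inputs nearly all weight should move to the informative model.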

The output \({f}_{i}\left(x\right)\) of each individual EfficientNetB7 model is computed as
\[{f}_{i}\left(x\right)=\mathrm{EfficientNetB7}\left(x;{\theta }_{i}\right)\]

where \({\theta }_{i}\) represents the learnable parameters of the \(i\)-th model and \(x\) is the input AD image.

During training, the parameters \({\theta }_{i}\) of each individual EfficientNetB7 model are updated by backpropagation to minimize that model’s loss function \({L}_{i}\):
\[{L}_{i}=\frac{1}{N}{\sum }_{j=1}^{N}L\left({f}_{i}\left({x}_{j}\right),{y}_{j}\right)\]

where \(N\) is the number of training samples, \({x}_{j}\) is the \(j\)-th training AD input image, \({y}_{j}\) is the corresponding ground truth label, and \(L\left(\bullet \right)\) is the chosen loss function. The definition of optimization of the EfficientNet model is
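The compound scaling rule described at the start of this subsection can be illustrated numerically. In the original EfficientNet formulation, depth, width, and input resolution are multiplied by \({\alpha }^{N}\), \({\beta }^{N}\), and \({\gamma }^{N}\) for a compound coefficient \(N\) (called `phi` in the code). Because the FLOPs of a convolutional network grow roughly in proportion to depth × width² × resolution², the coefficients are chosen so that \(\alpha \cdot {\beta }^{2}\cdot {\gamma }^{2}\approx 2\), so each unit increase of the coefficient roughly doubles the compute. The values α = 1.2, β = 1.1, γ = 1.15 are those reported by the EfficientNet authors for their baseline network; the text does not state which coefficients were used here, so treat them as an assumption.

```python
# Scaling constants reported for the EfficientNet baseline (assumed here).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15

def compound_scale(phi, alpha=ALPHA, beta=BETA, gamma=GAMMA):
    """Return (depth, width, resolution, FLOPs) multipliers for coefficient phi."""
    depth = alpha ** phi
    width = beta ** phi
    resolution = gamma ** phi
    # FLOPs grow roughly linearly with depth and quadratically with
    # width and resolution.
    flops = depth * width ** 2 * resolution ** 2
    return depth, width, resolution, flops

print(f"alpha * beta^2 * gamma^2 = {ALPHA * BETA**2 * GAMMA**2:.3f}")  # close to 2
for phi in range(4):
    d, w, r, f = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution x{r:.2f}, FLOPs x{f:.2f} (2**phi = {2 ** phi})")
```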

3.2.3 Feature Extraction based on Convolutional Neural Network (FE-CNN)

The CNN receives an MRI image, analyzes it, and classifies it into one of the diagnostic categories, such as Alzheimer’s disease (AD), mild cognitive impairment (MCI), and normal control (NC). Each input image is processed by a series of convolutional layers with filters, pooling layers, and fully connected layers, and a softmax function then assigns class probabilities between 0 and 1. A convolutional layer extracts information from the input image; by learning visual features from the input data, it preserves the spatial relationships between pixels. This mathematical operation takes two inputs: an image matrix and a filter. The image matrix has dimensions \(h\times w\times d\) and the filter has dimensions \({f}_{h}{\times f}_{w}\times d\). With a stride of 1 and no padding, the output has dimensions \((h-{f}_{h}+1)\times (w-{f}_{w}+1)\times 1\). The resulting feature maps are then passed to a ReLU layer, which sets each negative value to 0, element by element.
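The convolution and ReLU steps can be sketched in NumPy to make the output-size formula concrete. This is an illustrative, unoptimized version assuming a single filter, stride 1, and no padding; like most deep learning libraries, it computes cross-correlation (the filter is not flipped). The function names and toy shapes are hypothetical.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """'Valid' convolution of an (h, w, d) image with one (f_h, f_w, d) filter.
    Returns a single feature map of shape (h - f_h + 1, w - f_w + 1)."""
    h, w, d = image.shape
    f_h, f_w, f_d = kernel.shape
    if d != f_d:
        raise ValueError("filter depth must match image depth")
    out = np.zeros((h - f_h + 1, w - f_w + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Multiply the filter with one image patch element-wise and sum.
            out[i, j] = np.sum(image[i:i + f_h, j:j + f_w, :] * kernel)
    return out

def relu(x):
    """Set every negative value to 0, element by element."""
    return np.maximum(x, 0.0)

rng = np.random.default_rng(1)
image = rng.normal(size=(8, 8, 3))    # toy h x w x d input
kernel = rng.normal(size=(3, 3, 3))   # one f_h x f_w x d filter
feature_map = relu(conv2d_valid(image, kernel))
print(feature_map.shape)  # (6, 6)
```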

Pooling downsamples the feature map, reducing its dimensionality: a pooling layer is applied to the rectified feature map to produce the pooled feature map. The fully connected layer receives the output of the preceding layers as a flattened vector and maps it to the required number of classes. The output activation map has size \({A}_{H}\times {A}_{W}\), where \({A}_{H}\) is the activation height and \({A}_{W}\) the activation width. \({A}_{H}\) and \({A}_{W}\) can be computed as follows:
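A short NumPy sketch of max pooling and of the activation-size calculation. The formula used here is the standard relation A = (n − f + 2p) / s + 1 for input size n, window size f, padding p, and stride s; it is an assumption that this is what the text’s formula for \({A}_{H}\) and \({A}_{W}\) expresses. With p = 0 and s = 1 it reduces to the h − f_h + 1 used for convolution above. The 2 × 2 window with stride 2 is a common choice, not one stated in the text.

```python
import numpy as np

def output_size(n, f, padding=0, stride=1):
    """Spatial size after a conv or pooling window: (n - f + 2p) // s + 1."""
    return (n - f + 2 * padding) // stride + 1

def max_pool2d(fmap, size=2, stride=2):
    """Max pooling over a 2D feature map without padding."""
    a_h = output_size(fmap.shape[0], size, 0, stride)  # activation height A_H
    a_w = output_size(fmap.shape[1], size, 0, stride)  # activation width A_W
    out = np.empty((a_h, a_w), dtype=fmap.dtype)
    for i in range(a_h):
        for j in range(a_w):
            window = fmap[i * stride:i * stride + size, j * stride:j * stride + size]
            out[i, j] = window.max()  # keep the strongest activation in the window
    return out

fmap = np.arange(16, dtype=float).reshape(4, 4)
pooled = max_pool2d(fmap)       # 2 x 2 map of window maxima: 5, 7, 13, 15
print(pooled)
print(output_size(256, 3))      # 254: valid 3 x 3 conv on a 256 x 256 slice
```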

In a CNN with convolutional and pooling layers, the output of these layers is typically flattened into a one-dimensional vector before it enters the fully connected layer. Denote this flattened vector by \(x\), with \(n\) elements. Consider a fully connected layer with \(m\) units. Its weights can be represented as an \(n\times m\) weight matrix \(W\), and its biases as a vector \(b\) of size \(m\). The output of the fully connected layer before the activation function is
\[o=x\bullet W+b\]

where \(x\) is the flattened vector from the preceding layers, \(W\) is the weight matrix of the fully connected layer, \(b\) is its bias vector, and \(\bullet\) denotes the matrix multiplication of \(x\) and \(W\), with the bias vector added by broadcasting. The output \(o\) is a vector of size \(m\) containing the activations of the \(m\) units in the fully connected layer. This step is a linear transformation of the input features with learned weights and biases. Next, an activation function is applied to introduce non-linearity into the fully connected layer. The rectified linear unit (ReLU) is commonly used in CNN classifiers:
\[\mathrm{ReLU}\left(z\right)=\mathrm{max}\left(0,z\right)\]

where \(z\) is the output of the fully connected layer for each unit. ReLU sets all negative values to zero and leaves positive values unchanged. The final output \(y\) of the fully connected layer after applying ReLU is therefore
\[y=\mathrm{ReLU}\left(o\right)=\mathrm{max}\left(0,o\right),\]
with the maximum taken element-wise.

This non-linear transformation is crucial for the CNN classifier to learn complex relationships and capture intricate patterns in the data, leading to improved performance in early Alzheimer’s disease detection. The output \(y\) is then forwarded to the output layer, where an appropriate activation function (e.g., softmax) is applied to obtain the final class probabilities for AD classification.
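The flatten, fully connected, ReLU, and softmax steps can be traced in a few lines of NumPy. This is a sketch with randomly initialized (untrained) weights, so the printed probabilities carry no diagnostic meaning; the toy feature-map shape, the hidden size m = 32, and the four-class ordering in `CLASSES` are assumptions for illustration.

```python
import numpy as np

CLASSES = ["NC", "EMCI", "LMCI", "AD"]  # hypothetical ordering of the four classes

def dense(x, W, b):
    """o = x . W + b for a flattened input x (n,), W (n, m), b (m,)."""
    return x @ W + b

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

rng = np.random.default_rng(2)
feature_map = rng.normal(size=(4, 4, 8))  # toy output of the last pooling layer
x = feature_map.reshape(-1)               # flatten: n = 4 * 4 * 8 = 128
W1, b1 = rng.normal(scale=0.1, size=(x.size, 32)), np.zeros(32)  # hidden FC layer, m = 32
W2, b2 = rng.normal(scale=0.1, size=(32, len(CLASSES))), np.zeros(len(CLASSES))

y = relu(dense(x, W1, b1))          # y = max(0, o)
probs = softmax(dense(y, W2, b2))   # class probabilities in (0, 1) that sum to 1
print(dict(zip(CLASSES, probs.round(3))))
print("predicted:", CLASSES[int(np.argmax(probs))])
```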

Fig. 5 shows the fully connected layer of the CNN classifier for early Alzheimer’s disease (AD) detection. In this layer, the feature map matrix is flattened into a vector \({x}_{1},{x}_{2},{x}_{3},\dots ,{x}_{n}\). The layer combines these features and uses an activation function, such as softmax or sigmoid, to classify the output as Alzheimer’s disease (AD), mild cognitive impairment (MCI), or normal control (NC).

4 Experimentation and analysis

The experiments evaluate the performance of the proposed method with five measures: sensitivity, specificity, accuracy, F-score, and ROC-AUC. Sensitivity and specificity measure the model’s ability to identify patients with normal control (NC), mild cognitive impairment (MCI), and Alzheimer’s disease (AD), while accuracy reflects the overall effectiveness of the proposed method. All data are taken from the 2D MRI modality of the ADNI dataset, which provides coronal, sagittal, and axial images in DICOM format.
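A minimal sketch of how the per-class measures can be computed from a multi-class confusion matrix. The text does not say how the multi-class figures were aggregated; this sketch assumes a one-vs-rest treatment per class followed by a macro average, and the confusion matrix values are hypothetical, not results from this study. ROC-AUC is omitted because it needs the predicted scores rather than only the hard predictions.

```python
import numpy as np

def per_class_metrics(cm):
    """One-vs-rest metrics from a K x K confusion matrix
    (rows = true class, columns = predicted class)."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp      # true class k, predicted as another class
    fp = cm.sum(axis=0) - tp      # predicted as k, but truly another class
    tn = total - tp - fn - fp
    sensitivity = tp / (tp + fn)  # also called recall
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f_score = 2 * precision * sensitivity / (precision + sensitivity)
    accuracy = tp.sum() / total   # overall multi-class accuracy
    return sensitivity, specificity, precision, f_score, accuracy

# Hypothetical 4-class confusion matrix (illustrative numbers only).
cm = [[50, 2, 0, 0],
      [3, 45, 2, 0],
      [0, 1, 30, 1],
      [0, 0, 2, 60]]
sens, spec, prec, f1, acc = per_class_metrics(cm)
print(f"macro sensitivity {sens.mean():.4f}, macro specificity {spec.mean():.4f}, "
      f"macro F-score {f1.mean():.4f}, accuracy {acc:.4f}")
```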

4.1 Experimental setup

The proposed technique uses the CNN architecture and scaling method of the EfficientNetB7 model, which uses a compound coefficient to scale the network dimensions evenly. A convolutional layer extracts information from the input image, preserving the spatial relationships between pixels by learning visual features; this operation takes an image matrix and a filter as inputs. The resulting feature maps are passed to a ReLU layer, which sets each negative value to 0, element by element, and the rectified feature map introduces non-linearity into the network. A pooling layer is then applied to the rectified feature map to produce a pooled feature map. The fully connected layer receives the output of the preceding layers as a flattened vector and maps it to the required number of classes, and a softmax function assigns class probabilities between 0 and 1. The proposed method was implemented with Keras and TensorFlow. Of the selected AD MRI images, 80% were used for training and 20% for testing.
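The text specifies an 80/20 train/test split of the selected images but not whether the split was made per image or per patient. Because each patient contributes many 2D slices, an image-level split can place near-identical slices of the same subject in both sets and inflate test scores. The sketch below shows a patient-level alternative that keeps the 80/20 ratio within each class; the patient IDs are hypothetical placeholders, and the function name and seed are illustrative assumptions.

```python
import random

def patient_level_split(patients_by_class, test_fraction=0.2, seed=42):
    """Split patient IDs within each class so that all images of one
    patient fall on the same side of the train/test boundary."""
    rng = random.Random(seed)
    train, test = {}, {}
    for label, patients in patients_by_class.items():
        ids = sorted(patients)
        rng.shuffle(ids)
        n_test = round(len(ids) * test_fraction)
        test[label] = ids[:n_test]
        train[label] = ids[n_test:]
    return train, test

# Hypothetical IDs: 75 patients per class, matching the dataset description.
patients_by_class = {
    c: [f"{c}_{i:03d}" for i in range(75)] for c in ("AD", "NC", "EMCI", "LMCI")
}
train, test = patient_level_split(patients_by_class)
for c in patients_by_class:
    print(c, len(train[c]), "train patients,", len(test[c]), "test patients")
```

Images would then be assigned to the training or test set according to their patient ID.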

4.2 Results and comparison with other existing methods

The proposed Deep Learning Model for Early Detection of Alzheimer’s Disease (DL-EDAD), which uses magnetic resonance imaging, was evaluated on the benchmark dataset described in Section 3.1. The DL-EDAD system performs well in detecting changes in AD MRI images, as shown by its sensitivity, specificity, accuracy, F-score, and ROC-AUC results. Table 2 shows a promising accuracy for early Alzheimer’s disease classification and reports the mean of each measure for each AD class. It demonstrates that the proposed approach produces promising results across the different patient classes, including NC, AD, and MCI.

The proposed DL-EDAD technique is compared with the 2D-M2IC model [34], the AD-InceptionV3 model [37], 3D AD detection using ML [12], and 3D CapsNets [36] to show its ability to distinguish patients with early-stage Alzheimer’s disease from those with Alzheimer’s disease, as well as from normal control, early MCI, and late MCI patients. Owing to its CNN-based EfficientNetB7 architecture, the proposed DL-EDAD approach outperforms the existing methods. The proposed system is evaluated on established benchmark datasets against currently used techniques. Fig. 6 and Table 2 present the average accuracy performance of the proposed DL-EDAD method.

The results of the proposed system clearly exceed those of the 2D-M2IC model [34], the AD-InceptionV3 model [37], the 3D AD using ML [12], and 3D CapsNets [36]. The proposed method achieves a sensitivity of 98.08%, a specificity of 98.20%, an accuracy of 98.68%, and an F-score of 98.95%. By comparison, the average accuracy is 97% for the 2D-M2IC model, 93.1% for the AD-InceptionV3 model, 90.9% for the 3D AD technique using ML, and 98.42% for the 3D CapsNets model. Fig. 7 and Table 3 compare the average performance measures of DL-EDAD with those of the other current methods.
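The margins implied by these reported averages can be tabulated directly. This sketch only subtracts the percentages stated in the text; it says nothing about statistical significance, which would also depend on the test-set size and on whether all methods were evaluated on the same data.

```python
# Average accuracies (%) as reported in this section.
reported_accuracy = {
    "DL-EDAD (proposed)": 98.68,
    "3D CapsNets [36]": 98.42,
    "2D-M2IC [34]": 97.0,
    "AD-InceptionV3 [37]": 93.1,
    "3D AD using ML [12]": 90.9,
}

def accuracy_gaps(table, reference):
    """Percentage-point margin of `reference` over every other entry, rounded to 0.01 pp."""
    ref = table[reference]
    return {name: round(ref - acc, 2) for name, acc in table.items() if name != reference}

gaps = accuracy_gaps(reported_accuracy, "DL-EDAD (proposed)")
for name, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{name:<22} +{gap:.2f} pp")
```

The smallest margin, over 3D CapsNets, is 0.26 percentage points.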

Experiments show that the DL-EDAD method yields consistently good and comparable results across the disease classes, including Normal Control (NC), Early Mild Cognitive Impairment (EMCI), and Late Mild Cognitive Impairment (LMCI). As Table 2 shows, it achieves a sensitivity of 98.08%, a specificity of 98.20%, an accuracy of 98.68%, and an F-score of 98.95%. We attribute this improvement to two factors: i) the pre-trained EfficientNetB7 model is based on the CNN compound scaling method, which scales depth, width, and resolution in equal proportion; and ii) it achieves high accuracy while relying on simple building blocks with low computational complexity, manageable time and memory requirements, and fast training and inference. Fig. 7 shows that the proposed system outperforms the other methods under comparison and that its results are more robust across the AD, NC, EMCI, and LMCI classes.

This study presents a comprehensive framework for the early detection of Alzheimer’s disease and the classification of medical images across different AD stages using deep learning, specifically Convolutional Neural Networks (CNNs). The EfficientNetB7 model is built on the CNN architecture and scaling approach that uses a compound coefficient to scale depth, width, and resolution uniformly. By relying on simple building blocks with low computational complexity, reduced overfitting, manageable time and memory requirements, and fast training and inference, it achieves high accuracy.
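The compound scaling rule referred to above sets depth d = α^φ, width w = β^φ and resolution r = γ^φ, with the constraint α·β²·γ² ≈ 2 so that FLOPs grow by roughly 2^φ. The constants below are those published for EfficientNet by Tan & Le (2019). The released B1 to B7 variants use their own rounded multipliers (B7 uses a width coefficient of 2.0, a depth coefficient of 3.1 and 600×600 inputs), so this sketch illustrates the rule rather than reproducing B7 exactly.

```python
# Compound-scaling constants reported by Tan & Le (2019) for EfficientNet,
# found by grid search on the B0 baseline under alpha * beta**2 * gamma**2 ~= 2.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15

def compound_scale(phi, base_resolution=224):
    """Return (depth multiplier, width multiplier, input resolution, approx. FLOPs multiplier)."""
    depth = ALPHA ** phi          # more layers
    width = BETA ** phi           # more channels per layer
    resolution = base_resolution * GAMMA ** phi
    # Conv-net FLOPs grow roughly with depth * width**2 * resolution**2.
    flops = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return depth, width, resolution, flops

for phi in range(4):
    d, w, r, f = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, resolution {r:.0f}px, FLOPs x{f:.2f}")
```

Scaling all three dimensions together with one coefficient is what distinguishes this from enlarging only depth (as in deeper ResNets) or only width.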

The experiments show that the DL-EDAD approach performs well and consistently across different MRI images, and that it is robust across the Alzheimer’s Disease (AD), Early Mild Cognitive Impairment (EMCI), Late Mild Cognitive Impairment (LMCI), and Normal Control (NC) classes. With the enhancements made to the pre-trained EfficientNetB7 model, it classifies AD stages in a multi-class setting with an F-score of 98.95%. Testing confirms that the Deep Learning model for Early Detection of Alzheimer’s Disease (DL-EDAD) provides accurate and consistent results, as depicted in Fig. 8, and that its accuracy exceeds that of the other methods examined. Using the information in Table 3 as input, Fig. 9 illustrates the calculation of the ROC curves and their corresponding AUC values.
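ROC curves and AUC values of this kind can be computed one-vs-rest for each class from the model's predicted probabilities. The section does not specify how the curves in Fig. 9 were produced, so this minimal NumPy sketch assumes the softmax outputs are used as scores; the sample labels and probabilities are made up. Each class must have at least one positive and one negative example.

```python
import numpy as np

def roc_points(y_true, scores):
    """ROC curve (FPR, TPR) for a binary problem, sweeping the threshold from high to low score."""
    y_true = np.asarray(y_true).astype(bool)
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(-scores, kind="mergesort")
    y_sorted, s_sorted = y_true[order], scores[order]
    tps = np.cumsum(y_sorted)
    fps = np.cumsum(~y_sorted)
    # Tied scores share one threshold: keep the last index of each run of equal scores.
    last = np.r_[np.flatnonzero(np.diff(s_sorted) != 0), len(s_sorted) - 1]
    tpr = np.r_[0.0, tps[last] / tps[-1]]
    fpr = np.r_[0.0, fps[last] / fps[-1]]
    return fpr, tpr

def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))

def one_vs_rest_auc(labels, probs):
    """AUC for each class k, treating class k as positive and all other classes as negative."""
    labels, probs = np.asarray(labels), np.asarray(probs, dtype=float)
    return [auc_trapezoid(*roc_points(labels == k, probs[:, k])) for k in range(probs.shape[1])]

# Made-up softmax outputs for six images of three classes.
labels = [0, 0, 1, 1, 2, 2]
probs = [[0.8, 0.1, 0.1], [0.6, 0.3, 0.1], [0.2, 0.7, 0.1],
         [0.3, 0.4, 0.3], [0.1, 0.2, 0.7], [0.2, 0.2, 0.6]]
print(one_vs_rest_auc(labels, probs))
```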

5 Analysis of Alzheimer’s Disease (AD) Early Detection Using EfficientNetB7 Model-Based CNN Architecture (proposed method)

Performance evaluation

This section evaluates the effectiveness of the EfficientNetB7-based CNN architecture for the early detection of Alzheimer’s disease. We evaluate the deep learning performance metrics and the area under the receiver operating characteristic curve (ROC-AUC) on a carefully curated dataset of brain MRI scans. Standard techniques are used to compute the evaluation metrics, which assess the model’s ability to distinguish healthy individuals from those with AD. With substantial enhancements, the pre-trained EfficientNetB7 model classifies AD stages in a multi-class setting with an F-score of 98.95%. Testing shows that the Deep Learning model for Early Detection of AD (DL-EDAD) gives accurate and consistent results, as shown in Fig. 8.
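For reference, the standard definitions assumed for these metrics (the text says only that standard techniques are used) are given below, where TP, TN, FP and FN are the true positives, true negatives, false positives and false negatives for a given class treated one-vs-rest, and TPR and FPR are the true and false positive rates swept over decision thresholds:

```latex
\begin{aligned}
\text{Sensitivity} &= \frac{TP}{TP + FN}, &
\text{Specificity} &= \frac{TN}{TN + FP}, \\
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, &
\text{Precision} &= \frac{TP}{TP + FP}, \\
F\text{-score} &= \frac{2 \cdot \text{Precision} \cdot \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}, &
\text{AUC} &= \int_0^1 \text{TPR} \; d(\text{FPR}).
\end{aligned}
```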

Comparison with baseline models

To validate the effectiveness of the EfficientNetB7 model, we compare its performance with several baseline CNN architectures, including CapsNet- and Inception-based models, and analyze the results to show the advantages of EfficientNetB7 in terms of improved accuracy and reduced computational complexity. The results of the proposed system are clearly superior to those of the 2D-M2IC model [34], the AD-InceptionV3 model [37], the 3D AD using ML [12], and 3D CapsNets [36]. The proposed method achieves higher sensitivity (98.08%), specificity (98.20%), accuracy (98.68%), and F-score (98.95%) than the existing systems. The average accuracy is 97% for the 2D-M2IC model, 93.1% for the AD-InceptionV3 model, 90.9% for the 3D AD technique using ML, and 98.42% for the 3D CapsNets model.

Robustness and generalization

To assess the robustness and generalization of the EfficientNetB7 model, we perform cross-validation experiments and analyze the variance in performance across folds. We also examine the model’s behavior on data from unseen sources or acquired with different scanning equipment, to check its applicability in real-world settings. Across the disease classes, including Normal Control (NC), Early Mild Cognitive Impairment (EMCI), and Late Mild Cognitive Impairment (LMCI), the DL-EDAD method consistently yields good and comparable results. As Table 2 shows, it achieves a sensitivity of 98.08%, a specificity of 98.20%, an accuracy of 98.68%, and an F-score of 98.95%.
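The cross-validation described above can be sketched as stratified k-fold splitting followed by a mean and standard deviation of the metric across folds. The number of folds is not stated in the text, so k = 5 and class stratification are assumptions here, and the class counts are hypothetical. For MRI data, the `labels` array should index subjects rather than individual slices, so that no subject appears in both the training and test folds.

```python
import numpy as np

def stratified_kfold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) for k folds, dealing each class round-robin across the folds."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    fold_of = np.empty(len(labels), dtype=int)
    for cls in np.unique(labels):
        members = rng.permutation(np.flatnonzero(labels == cls))
        fold_of[members] = np.arange(len(members)) % k
    for fold in range(k):
        yield np.flatnonzero(fold_of != fold), np.flatnonzero(fold_of == fold)

def fold_summary(fold_scores):
    """Mean and sample standard deviation of a metric across folds."""
    scores = np.asarray(fold_scores, dtype=float)
    return float(scores.mean()), float(scores.std(ddof=1))

labels = np.array([0] * 50 + [1] * 30 + [2] * 20)   # hypothetical NC / MCI / AD counts
folds = list(stratified_kfold(labels, k=5))
for train_idx, test_idx in folds:
    print(len(train_idx), len(test_idx), np.bincount(labels[test_idx], minlength=3))
```

After training and scoring one model per fold, `fold_summary` reports the spread that the robustness analysis refers to.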

Interpretability and feature visualization

Interpreting the model’s decisions is crucial in medical applications. We explore methods to visualize the learned features and activation maps of the EfficientNetB7 model. This analysis helps identify regions of interest and gives insight into the model’s decision-making process, helping clinicians understand the reasoning behind AD predictions. The proposed DL-EDAD system performs well at detecting changes in AD MRI images, as reflected in its sensitivity, specificity, accuracy, and F-score, and it identifies Normal Control (NC), Mild Cognitive Impairment (MCI), and Alzheimer’s Disease (AD) patients with strong results. Specifically, for Alzheimer’s Disease (AD), the system achieves sensitivity, specificity, accuracy, and F-score values of 98.08%, 98.20%, 98.68%, and 98.95%, respectively. For Early Mild Cognitive Impairment (EMCI), the corresponding values are 98.06%, 97.94%, 98.05%, and 98.07%; for Late Mild Cognitive Impairment (LMCI), they are 96.03%, 97.94%, 96.13%, and 97.22%; and for Normal Control (NC), they are 98.10%, 98.44%, 98.66%, and 98.05%.
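The text does not name the visualization method. One widely used option is Grad-CAM (Selvaraju et al., 2017), sketched here in NumPy as an assumption: it weights each feature map of a chosen convolutional layer by the spatial mean of the class-score gradient and keeps the positive part. Obtaining the activations and gradients from EfficientNetB7 would require the framework itself (in TensorFlow, for example, via `tf.GradientTape`); the toy arrays below stand in for them.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap for one convolutional layer.

    activations: (H, W, K) feature maps A^k of the chosen layer for one image.
    gradients:   (H, W, K) gradients of the target class score w.r.t. those feature maps.
    """
    weights = gradients.mean(axis=(0, 1))   # alpha_k: spatially averaged gradient per channel
    cam = activations @ weights             # sum_k alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)              # keep regions that increase the class score
    peak = cam.max()
    return cam / peak if peak > 0 else cam  # scale to [0, 1] for overlaying on the MRI slice

# Toy example: channel 0 fires in the top-left corner and supports the class,
# channel 1 fires in the bottom-right corner and argues against it.
acts = np.zeros((4, 4, 2))
acts[:2, :2, 0] = 1.0
acts[2:, 2:, 1] = 1.0
grads = np.zeros((4, 4, 2))
grads[..., 0] = 1.0
grads[..., 1] = -1.0
heatmap = grad_cam(acts, grads)
print(heatmap)
```

In practice the heatmap is upsampled to the input resolution before it is overlaid on the scan.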

Impact of data augmentation

Data augmentation techniques, such as rotation, flipping, and zooming, play a vital role in boosting the model’s ability to generalize. The study delves into the influence of various data augmentation strategies on the performance of the EfficientNetB7 model and examines their role in enhancing Alzheimer’s disease detection outcomes.
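The three augmentations named above can be sketched in NumPy as follows. Keras offers equivalent preprocessing layers (`tf.keras.layers.RandomFlip`, `RandomRotation`, `RandomZoom`), but the paper does not state which implementation or parameter ranges were used, so the functions and the 1.2 maximum zoom here are hypothetical. Rotation is simplified to multiples of 90° and assumes square slices; augmentation is applied to training images only.

```python
import numpy as np

def random_flip(img, rng):
    """Mirror the slice left-right with probability 0.5."""
    return img[:, ::-1] if rng.random() < 0.5 else img

def random_rot90(img, rng):
    """Rotate by a random multiple of 90 degrees (assumes a square slice)."""
    return np.rot90(img, k=int(rng.integers(0, 4)))

def center_zoom(img, factor):
    """Zoom in by `factor` >= 1: crop the centre, then resize back with nearest-neighbour sampling."""
    h, w = img.shape[:2]
    ch, cw = max(1, round(h / factor)), max(1, round(w / factor))
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = img[top:top + ch, left:left + cw]
    rows = np.arange(h) * ch // h   # source row for each output row
    cols = np.arange(w) * cw // w   # source column for each output column
    return crop[rows][:, cols]

def augment(img, rng, max_zoom=1.2):
    """Apply flip, rotation and zoom in sequence; the output keeps the input shape."""
    img = random_flip(img, rng)
    img = random_rot90(img, rng)
    return center_zoom(img, rng.uniform(1.0, max_zoom))

rng = np.random.default_rng(0)
slice_2d = np.arange(16.0).reshape(4, 4)   # stand-in for a preprocessed MRI slice
augmented = [augment(slice_2d, rng) for _ in range(3)]
print(center_zoom(slice_2d, 2.0))
```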

Model complexity and inference time

Deep learning models, especially large ones such as EfficientNetB7, often require substantial computational resources for training and inference. We analyze the complexity of the model and evaluate its inference time on different hardware configurations. This information can guide the choice of deployment platform and hardware for practical clinical use.
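Two simple tools support this kind of analysis: counting parameters per layer and timing repeated predictions. For scale, Tan & Le (2019) report about 66 million parameters for EfficientNetB7; much of the family's efficiency comes from depthwise separable convolutions, which the parameter counts below contrast with a standard convolution. The timing helper is a hypothetical sketch; `predict` stands for any callable, for instance `lambda x: model(x, training=False)` for a Keras model, and on a GPU calling `.numpy()` on the result forces the work to finish before the timer stops. The stand-in model here is just a dense layer with made-up dimensions.

```python
import statistics
import time

import numpy as np

def conv_params(k, c_in, c_out, bias=True):
    """Weights of a standard k x k convolution (plus one bias per output channel)."""
    return k * k * c_in * c_out + (c_out if bias else 0)

def separable_params(k, c_in, c_out):
    """Depthwise k x k convolution followed by a 1 x 1 pointwise convolution, without biases."""
    return k * k * c_in + c_in * c_out

def time_inference(predict, batch, warmup=3, repeats=20):
    """Median and minimum wall-clock latency of predict(batch), in milliseconds."""
    for _ in range(warmup):          # exclude one-off costs such as lazy initialisation
        predict(batch)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        predict(batch)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings), min(timings)

# Stand-in "model": one dense layer mapping 512 hypothetical features to 4 classes.
rng = np.random.default_rng(0)
weights = rng.standard_normal((512, 4))
batch = rng.standard_normal((32, 512))
median_ms, best_ms = time_inference(lambda x: x @ weights, batch)
print(conv_params(3, 64, 128, bias=False), separable_params(3, 64, 128))
print(f"median {median_ms:.3f} ms, best {best_ms:.3f} ms")
```

Reporting the median rather than the mean keeps occasional scheduler or garbage-collection pauses from dominating the figure.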

Clinical relevance and diagnostic value

Beyond numerical performance metrics, we assess the clinical relevance and diagnostic value of the EfficientNetB7 model’s predictions, working with medical experts to evaluate the model’s ability to detect early signs of Alzheimer’s disease, its usefulness in helping clinicians make accurate diagnoses, and its potential to improve patient outcomes.

6 Conclusion and future scope

The proposed approach aims to create a systematic framework for the early recognition of Alzheimer’s disease and the classification of medical images for different AD stages. The work uses deep learning, specifically convolutional neural networks (CNNs). The EfficientNetB7 model is built on the CNN architecture and scaling method that uses a compound coefficient to scale depth, width, and resolution uniformly. By relying on simple building blocks with low computational complexity, reduced overfitting, manageable time and memory requirements, and fast training and inference, it achieves high accuracy. Experiments on the Alzheimer’s Disease Neuroimaging Initiative (ADNI) dataset demonstrate the robustness of this strategy for improved early-stage Alzheimer’s disease identification. The proposed system outperforms the 2D-M2IC model [34], the AD-InceptionV3 model [37], the 3D AD using ML [12], and 3D CapsNets [36], with a sensitivity of 98.08%, a specificity of 98.20%, an accuracy of 98.68%, and an F-score of 98.95%. By comparison, the average accuracy is 97% for the 2D-M2IC model, 93.1% for the AD-InceptionV3 model, 90.9% for the 3D AD technique using ML, and 98.42% for the 3D CapsNets model. The DL-EDAD approach performs well on a variety of MRI images and is robust across the Alzheimer’s Disease (AD), Normal Control (NC), Early Mild Cognitive Impairment (EMCI), and Late Mild Cognitive Impairment (LMCI) classes, so the improved pre-trained EfficientNetB7 model supports reliable multi-stage AD classification.
In future work, we plan to apply other pre-trained models to the identification of early-stage Alzheimer’s disease. We will also expand the dataset with straightforward data augmentation techniques and with a Deep Convolutional Generative Adversarial Network (DCGAN). Furthermore, we aim to highlight Alzheimer’s-related characteristics through MRI segmentation before classifying AD stages. Other directions include further work on compound model scaling and medical image analysis, applying the approach to other medical conditions, and integrating multi-modal data.

Data Availability

The data and code are held by the authors; the data are available from the corresponding author on request.

References

Kishore P, Usha Kumari C, Kumar MNVSS, Pavani T (2021) Detection and analysis of Alzheimer’s disease using various machine learning algorithms. Materials Today: proceedings 45(Part 2):1502–1508. https://doi.org/10.1016/j.matpr.2020.07.645

Saraiva C, Praca C, Ferreira R, Santos T, Ferreira L, Bernardino L (2016) Nanoparticle-mediated brain drug delivery: Overcoming blood-brain barrier to treat neurodegenerative diseases. J Controlled Release 235:34–47

Eickhoff S, Nichols TE, Van Horn JD, Turner JA (2016) Sharing the wealth: Neuroimaging data repositories. NeuroImage 124:1065–1068

Physicians PC (2020) Alzheimer’s disease facts and figures. Alzheimer’s Dement 16(3):391–460

Noor MBT, Zenia NZ, Kaiser MS, Al Mamun S, Mahmud M (2020) Application of deep learning in detecting neurological disorders from magnetic resonance images: a survey on the detection of Alzheimer’s disease, Parkinson’s disease, and schizophrenia. Brain Informatics 7(1):11

Li F, Tran L, Thung K-H, Ji S, Shen D, Li J (2015) A robust deep model for improved classification of AD/MCI patients. IEEE J Biomed Health Inform 19(5):1610–1616

Voulodimos A, Doulamis N, Doulamis A, Protopapadakis E (2018) Deep learning for computer vision: a brief review. Comput Intell Neurosci. 2018(1):13

Payan A, Montana G (2015) Predicting Alzheimer's disease: a neuroimaging study with 3D convolutional neural networks. ArXiv:abs/1502.02506. https://api.semanticscholar.org/CorpusID:15570947

Jarrett K, Kavukcuoglu K, Ranzato MA, LeCun Y (2009) What is the best multi-stage architecture for object recognition?. In: 2009 IEEE 12th international conference on computer vision, Kyoto, Japan, pp 2146–2153. https://doi.org/10.1109/ICCV.2009.5459469

Neelaveni J, Devasana MSG (2020) Alzheimer disease prediction using machine learning algorithms. In: 2020 6th international conference on advanced computing and communication systems (ICACCS), Coimbatore, India, pp 101–104. https://doi.org/10.1109/ICACCS48705.2020.9074248

Singh S, Gaikwad K, Nehal A (2022) Detecting Alzheimer’s using shallow learning and deep learning techniques. International journal of advanced research in computer and communication engineering IJARCCE 11(5):2319–5940. https://doi.org/10.17148/IJARCCE.2022.115153

Aruchamy S, Haridasan A, Verma A, Bhattacharjee P, Nandy SN, Vadali SRK (2020) Alzheimer’s disease detection using machine learning techniques in 3D MR images. In: 2020 National conference on emerging trends on sustainable technology and engineering applications (NCETSTEA), Durgapur, India, pp 1–4. https://doi.org/10.1109/NCETSTEA48365.2020.9119923

Sarraf S, Tofighi G (2016) Classification of Alzheimer’s disease structural MRI data by deep learning convolutional neural networks. arXiv:1607.06583. https://doi.org/10.48550/arXiv.1607.06583

Hosseini-Asl E, Keynton RS, El-Baz AS (2016) Alzheimer's disease diagnostics by adaptation of 3D convolutional network. In: 2016 IEEE International Conference on Image Processing (ICIP), pp 126–130. https://api.semanticscholar.org/CorpusID:141953

Korolev S, Safiullin A, Belyaev M, Dodonova Y (2017) Residual and plain convolutional neural networks for 3D brain MRI classification. In: 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), pp 835–838

Wang SH, Phillips P, Sui Y, Liu B, Yang M, Cheng H (2018) Classification of alzheimer's disease based on eight-layer convolutional neural network with leaky rectified linear unit and max pooling. J Med Syst 42(5):85. https://doi.org/10.1007/s10916-018-0932-7

Khvostikov AV, Aderghal K, Krylov AS, Catheline G, Benois-Pineau J (2018) 3D Inception-based CNN with sMRI and MD-DTI data fusion for Alzheimer's Disease diagnostics. ArXiv: abs/1809.03972. https://api.semanticscholar.org/CorpusID:52189460

Sahumbaiev A, Popov J, Ramírez JM, Górriz Ortiz A (2018) 3D-CNN HadNet classification of MRI for alzheimer’s disease diagnosis. In: IEEE Nuclear Science Symposium and Medical Imaging Conference Proceedings (NSS/MIC). Sydney, NSW, Australia, pp 1-4. https://doi.org/10.1109/NSSMIC.2018.8824317

Spasov SE, et al. (2018) A Multi-modal convolutional neural network framework for the prediction of Alzheimer’s disease. 1271–1274.

Kahramanli H (2012) A modified cuckoo optimization algorithm for engineering optimization. Int J Futur Comput Commun, pp 199–201. https://api.semanticscholar.org/CorpusID:14514416

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. CoRR abs/1409.1556. https://api.semanticscholar.org/CorpusID:14124313

Wang Y, et al. (2018) A novel multimodal MRI analysis for Alzheimer’s disease based on convolutional neural network. 2018 40th Annu Int Conf IEEE Eng Med Biol Soc 754–757.

Khagi B, Lee B (2019) CNN models performance analysis on MRI images of OASIS dataset for the distinction between healthy and Alzheimer’s patient. Int Conf Electron Information Commun. 2019:1–4

Jain R, Jain N, Aggarwal A, Hemanth DJ (2019) Convolutional neural network based Alzheimer’s disease classification from magnetic resonance brain images. Cogn Syst Res 57:147–159. https://doi.org/10.1016/j.cogsys.2018.12.015

Ge C, Qu Q, Gu IY-H, Jakola AS (2019) Multiscale deep convolutional networks for characterization and detection of alzheimer’s disease using MR images. In: 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, pp 789–793. https://doi.org/10.1109/ICIP.2019.8803731

Song T, et al. (2019) Graph convolutional neural networks for Alzheimer’s disease. In: 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), pp 414–417

Liu L, Zhao S, Chen H, Wang A (2020) A new machine learning method for identifying Alzheimer’s disease. Simul Model Pract Theory 99:102023

Liu M et al (2018) A multi-model deep convolutional neural network for automatic hippocampus segmentation and classification in Alzheimer’s disease. Neuroimage 208:2020

Impedovo D, Pirlo G, Vessio G, Angelillo MT (2019) A handwriting-based protocol for assessing neurodegenerative dementia. Cognit Comput. 11(4):576–86

Parmar H, Nutter B, Long R, Antani S, Mitra S (2020) Spatiotemporal feature extraction and classification of Alzheimer’s disease using deep learning 3D-CNN for fMRI data. J Med Imaging. 7(05):1–14

Basaia S et al (2018) Automated classification of Alzheimer’s disease and mild cognitive impairment using a single MRI and deep neural networks. NeuroImage Clin 21:101645

Pan D, Zeng A, Jia L, Huang Y, Frizzell T, Song X (2020) Early detection of alzheimer’s disease using magnetic resonance imaging: a novel approach combining convolutional neural networks and ensemble learning. Front Neurosci 14:259. https://doi.org/10.3389/fnins.2020.00259

Vassanelli S, Kaiser MS, Eds NZ, Goebel R (2020) 3D DenseNet ensemble in the 4-way classification of Alzheimer’s disease. Series Editors 2020

Helaly HA, Badawy M, Haikal AY (2022) Deep learning approach for early detection of alzheimer’s disease. Cogn Comput 14:1711–1727. https://doi.org/10.1007/s12559-021-09946-2

Harika S, Yamini T, Nagasaikamesh T, Basha SH, Santosh Kumar S, DurgaKameswari MSS (2022) Alzheimers disease detection using different machine learning algorithms. International Journal for Research in Applied Science & Engineering Technology (IJRASET) 10(X)

Kruthika KR, Rajeswari, Maheshappa HD (2019) Multistage classifier based approach for Alzheimer’s disease prediction and retrieval. Inform Med Unlocked 14:34–42

Jayesh Locharla, Haswanth Kolanuvada, Kona Venkata Sai Ashrith, S Suchitra, (2022) “A Robust Deep Learning Techniques for Alzheimer’s Prediction”, 2022 IC4S.

Jo T, Nho K, Saykin AJ (2019) Deep Learning in Alzheimer’s Disease: Diagnostic Classification and Prognostic Prediction Using Neuroimaging Data. Front. Aging Neurosci. 11:220. https://doi.org/10.3389/fnagi.2019.00220

Liu S, Masurkar AV, Rusinek H (2022) Generalizable deep learning model for early Alzheimer’s disease detection from structural MRIs. Sci Rep 12:17106. https://doi.org/10.1038/s41598-022-20674-x

Patil V, Madgi M, Kiran A (2022) Early prediction of Alzheimer’s disease using convolutional neural network: a review. Egypt J Neurol Psychiatry Neurosurg 58:130. https://doi.org/10.1186/s41983-022-00571-w

Zhao Z, Chuah JH, Lai KW, Chow CO, Gochoo M, Dhanalakshmi S, Wang N, Bao W, Wu X (2023) Conventional machine learning and deep learning in Alzheimer’s disease diagnosis using neuroimaging: a review. Front Comput Neurosci 17:1038636. https://doi.org/10.3389/fncom.2023.1038636

Al-Shoukry S, Rassem TH, Makbol NM (2020) Alzheimer’s Diseases Detection by Using Deep Learning Algorithms: A Mini-Review. IEEE Access 8:77131–77141. https://doi.org/10.1109/ACCESS.2020.2989396

Gao S, Lima D (2022) A review of the application of deep learning in the detection of Alzheimer’s disease. Intl J Cogn Comput Eng 3:1–8. https://doi.org/10.1016/j.ijcce.2021.12.002

Muhammed Raees PC, Thomas V (2021) Automated detection of Alzheimer’s Disease using Deep Learning in MRI. J Phys Conf Ser 1921:012024. https://doi.org/10.1088/1742-6596/1921/1/012024

El-Geneedy M, Moustafa HE-D, Khalifa F, Khater H, AbdElhalim E (2023) An MRI-based deep learning approach for accurate detection of Alzheimer’s disease. Alexandria Eng J 63:211–221. https://doi.org/10.1016/j.aej.2022.07.062

Diogo VS, Ferreira HA, Prata D (2022) Early diagnosis of Alzheimer’s disease using machine learning: a multi-diagnostic, generalizable approach. Alz Res Therapy 14:107. https://doi.org/10.1186/s13195-022-01047-y

Kaur S, Gupta S, Singh S, Gupta I (2022) Detection of Alzheimer’s Disease Using Deep Convolutional Neural Network. Intl J Image Graphics 22(03):2140012. https://doi.org/10.1142/S021946782140012X

Al Shehri W (2022) Alzheimer’s disease diagnosis and classification using deep learning techniques. PeerJ Comput Sci 8:e1177. https://doi.org/10.7717/peerj-cs.1177

Oh K, Chung YC, Kim KW (2019) Classification and Visualization of Alzheimer’s Disease using Volumetric Convolutional Neural Network and Transfer Learning. Sci Rep 9:18150. https://doi.org/10.1038/s41598-019-54548-6

Greenspan H, van Ginneken B, Summers RM (2016) Guest editorial deep learning in medical imaging: overview and future promise of an exciting new technique. IEEE Trans Med Imaging 35:1153–1159

Litjens G, et al. (2017) A survey on deep learning in medical image analysis. Med Image Anal 42:60–88

Abrol A, Bhattarai M, Fedorov A, Du Y, Plis S, Calhoun VD (2018) Deep residual learning for neuroimaging: an application to predict progression to Alzheimer’s disease. bioRxiv. https://doi.org/10.1101/470252

Fedorov A, Hjelm RD, Abrol A, Fu Z, Du Y, Plis S, Calhoun VD (2019) Prediction of progression to Alzheimer’s disease with deep InfoMax. arXiv:1904.10931

Kim J, Calhoun VD, Shim E, Lee J-H (2016) Deep neural network with weight sparsity control and pre-training extracts hierarchical features and enhances classification performance: evidence from whole-brain resting-state functional connectivity patterns of schizophrenia. NeuroImage 124:127–146

Lee G, Nho K, Kang B, Sohn K-A, Kim D (2019) Predicting Alzheimer’s disease progression using multi-modal deep learning approach. Sci Rep 9:1952. https://doi.org/10.1038/s41598-018-37769-z

Liu M, Zhang J, Lian C, Shen D (2020) Weakly supervised deep learning for brain disease prognosis using mri and incomplete clinical scores. IEEE Trans Cybern 50(7):3381–3392. https://doi.org/10.1109/TCYB.2019.2904186

Suk H-I, Lee S-W, Shen D (2017) Deep ensemble learning of sparse regression models for brain disease diagnosis. Med Image Anal 37:101–113

Vieira S, Pinaya WHL, Mechelli A (2017) Using deep learning to investigate the neuroimaging correlates of psychiatric and neurological disorders: method and applications. Neurosci Biobehav Rev 74:58–75

Ethics declarations

Conflicts of Interest

The authors declare that they have no conflicts of interest to report regarding the present study.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Suchitra, S., Krishnasamy, L. & Poovaraghan, R.J. A deep learning-based early alzheimer’s disease detection using magnetic resonance images. Multimed Tools Appl (2024). https://doi.org/10.1007/s11042-024-19677-9

DOI: https://doi.org/10.1007/s11042-024-19677-9