Abstract

Sand-dust color images suffer from poor image visibility and serious color cast that significantly affect the performance of outdoor computer vision systems. Therefore, this paper proposes an integrated enhancement method for the sand-dust image. The proposed method improves the image visibility and removes the sand-dust color cast. It integrates two main processes in two different color models. The adaptive gray world-blue channel (AGW-B) is utilized in the Red-Green-Blue (RGB) color model to remove the sand-dust color cast. Then, the contrast limited adaptive histogram equalization with normalized intensity and saturation correction (CLAHE-NISC) is conducted in a Hue-Saturation-Intensity (HSI) color model to enhance the image visibility. Sand-dust images with weak, medium, and extreme sand-dust color casts were utilized in the subjective and objective evaluations. Results show that the proposed method produced better and clearer enhanced images than the other four current sand-dust image enhancement methods.

1 Introduction

Sand dust is one of the image quality deterioration factors caused by bad weather [1, 2]. Due to sand-dust weather conditions, color images suffer from poor image visibility and low contrast [3,4,5]. Moreover, they have a yellow or even orange color cast due to the absorption of the blue channel by the sand-dust particles [6]. These sand-dust images significantly affect the performance of various outdoor computer vision systems, such as traffic monitoring systems, human and vehicle tracking systems, video surveillance systems, outdoor object recognition systems, and others.

Therefore, various image enhancement methods [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22] have been proposed to address this problem. Fu et al. [1] proposed a fusion-based enhancement method that corrects the color of the sand-dust image using a statistical strategy. Meanwhile, Yan et al. [2] integrated global fuzzy enhancement and band-limited histogram in their method. However, although both methods enhance the image contrast, the overall enhancement was not satisfactory for cases with severe color distortion.

Huang et al. [7] implemented an enhancement method based on the dark channel prior (DCP), but they produced a bluish artifact on the sand-dust images. The same blueness happened to Wang et al. [8] when they implemented their enhancement method in the CIE Lab color model. Shi et al. [12] used an improved DCP to reduce the halo effect and implemented this method in the CIE Lab color model. They achieved good image visibility, but their output images showed a dim phenomenon. Another enhancement method employed in the CIE Lab color model by Shi et al. [14] utilized a normalized gamma transformation-based contrast limited adaptive histogram equalization (CLAHE). Although they managed to get reasonable resulting images, they could not enhance severe sand-dust images.

Based on the atmospheric scattering model (ASM), Yu et al. [10] proposed an enhancement method that improved the image visibility and compensated for the color shift. However, the resulting images produced halo effects and looked unnatural. Meanwhile, Al-Ameen [9] proposed an enhancement method based on tuned tri-threshold fuzzy intensification operators, but it failed to remove the color cast effectively.

Gao et al. [15] and Cheng et al. [16, 17] focused their methods on compensating for the blue channel of the sand-dust images. However, these methods did not enhance and recover the image with an orange sand-dust color cast. Gao et al. [18] also proposed an enhancement method based on the YUV color model. They achieved the color correction using the color components U and V in the YUV color model, and they acquired contrast enhancement via CLAHE and improved Retinex. However, color distortions still exist in their resulting images. Alsaeedi et al. [20] also proposed an image enhancement method based on color correction using a new membership function in the YUV color model. However, the resulting images are bluish because the color correction is applied only to the U and V components. Meanwhile, Wang et al. [3] proposed a fast color balance and multi-path fusion method to enhance sand-dust images. Their method removed the color cast but did not correct the color effectively.

Park et al. [4] proposed a sand-dust enhancement method that aims to obtain a coincident chromatic histogram by removing the color cast and enhancing the contrast and brightness of the sand-dust images. This method removed the color cast and enhanced the image contrast except for the brightness. Meanwhile, Gao et al. [5] proposed a color balance and sand-dust image enhancement algorithm in the CIE Lab color model. They effectively remove the color shift while reducing the blue artifact. However, their method fails when encountering images with an extreme sand-dust color cast. A sand-dust image enhancement based on light attenuation and transmission compensation has been proposed recently by Fei Shi et al. [21]. Although the method performs well on most sand-dust images, it did not produce ideal results when dealing with an extreme sand-dust color cast.

Furthermore, enhancement methods using classic deep learning-based dehazing algorithms have also been proposed [11, 13, 19]. Zhu et al. [11] simultaneously estimated the transmission map and atmospheric light using a generative adversarial network, but their method required large-scale datasets for training and testing [23]. Li et al. [13] utilized three joint subnets to disintegrate the input fuzzy image into three layers. However, there are still errors in the atmospheric light estimation. Recently, Gao et al. [19] proposed a two-step unsupervised approach that utilized a color correction method and a generative adversarial network. However, it produced color distortion and a halo when enhancing images with an extreme sand-dust color cast.

1.1 Motivation and contributions

Although these enhancement methods have varying degrees of success, almost all of the methods mentioned earlier still suffer from color distortion [1,2,3, 13], bluish and halo artifacts [5, 7, 8, 10, 19, 20], ineffective color cast removal [9, 14,15,16,17, 21], dim enhanced images [4, 12], and large dataset requirements [11, 23].

Motivated by these problems, this paper proposes an enhancement method that integrates two main processes in two different color models to improve image visibility and remove the color cast for sand-dust images. Since the sand-dust particles absorb blue light intensively, the blue channel of an image is significantly attenuated [6], resulting in a yellowish to reddish appearance in an extreme sand-dust environment.

The first process addresses the sand-dust color cast issue by utilizing the AGW-B within the RGB color model that effectively removes the sand-dust color cast by incorporating the compensated global and local mean values of the RGB channels. However, the resulting image from the first process lacks visibility.

To address this, CLAHE-NISC is implemented in the HSI color model. It enhances the visibility by modifying the saturation and intensity without affecting the hue. Lastly, the enhanced image is converted back to the RGB color model.

By combining the AGW-B and CLAHE-NISC processes sequentially, the proposed method capitalizes on the strengths of each approach while addressing their respective limitations. This synergistic integration results in a comprehensive image enhancement technique that effectively tackles color cast removal and contrast enhancement challenges encountered in sand-dust imagery.

The proposed method offers an innovative solution for enhancing sand-dust images by introducing novel concepts and improvements to existing processes, ultimately leading to superior image quality and perceptual fidelity.

Thus, the contributions of this work can be summarized as follows:

1. The proposed method integrates two processes in two color models to enhance the sand-dust image.

2. The blue channel compensation technique is introduced to effectively remove different levels of sand-dust color cast.

3. The normalized intensity and saturation correction functions are introduced to minimize color distortion and reduce the over-enhancement of the image brightness.

The proposed method is compared with four existing model-based sand-dust image enhancement methods to evaluate the performance. Moreover, image quality assessments were computed to assess two criteria: image visibility and color restoration. For the experiments, sand-dust images from the sand-dust image dataset [4] were utilized. Results show that the proposed method produces better images than the other four sand-dust image enhancement methods.

The rest of this paper is organized as follows. Section 2 presents the proposed method, while Section 3 provides the experimental results. Section 4 concludes the paper.

2 Proposed method

In this section, the proposed method is discussed. It integrates two main processes in two different color models. The AGW-B is utilized in the first process. It is applied to the image in the RGB color model to remove the sand-dust color cast.

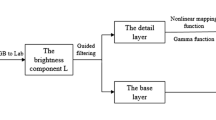

Then, the resulting image is converted from RGB to the HSI color model for the second process. Here, the saturation and intensity of the image are modified using CLAHE-NISC. During this process, the image’s hue is maintained. Lastly, the enhanced image is converted back to the RGB color model. The process flow of the proposed method is illustrated in Fig. 1.

2.1 Adaptive gray world-blue channel (AGW-B)

The proposed method employs the AGW-B approach, a modified version of AGW [24, 25], to remove the sand-dust color cast. In a conventional GW method, scaling factors are determined using a single channel from the RGB color space as a reference, commonly utilized for mitigating color cast in night-time and underwater images [24,25,26].
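As a point of reference for the conventional GW idea described above, the following is a minimal sketch rather than the implementation used in [24,25,26]. It assumes a floating-point RGB image in [0, 1] and takes the green channel as the reference; the function name `gray_world` and the `reference` parameter are hypothetical.

```python
import numpy as np

def gray_world(rgb, reference=1):
    """Conventional gray-world balance (illustrative sketch).

    rgb: float array of shape (H, W, 3) with values in [0, 1].
    reference: index of the reference channel (1 = green, an assumption).
    """
    rgb = np.asarray(rgb, dtype=float)
    # Global mean of each channel.
    means = rgb.reshape(-1, 3).mean(axis=0)
    # Scaling factor that maps each channel mean onto the reference mean.
    gains = means[reference] / np.maximum(means, 1e-8)
    return np.clip(rgb * gains, 0.0, 1.0)

# Usage: a flat yellowish image becomes neutral gray.
cast = np.full((4, 4, 3), [0.6, 0.5, 0.2])
balanced = gray_world(cast)
```

Because a single global gain is applied per channel, this baseline cannot react to spatially varying attenuation, which is the gap the local means in AGW are meant to close.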

In contrast, the AGW method incorporates the global mean of an image and integrates the local mean of individual channels in its calculations. Moreover, to eliminate the color cast, the method compensates for the red and blue channels in underwater images [24, 25], and all three color channels for night-time images [26].

In sand-dust imagery, the blue channel experiences considerable attenuation. This diminished intensity results in a yellow, orange, or red color cast within the sand-dust image. Consequently, to remove the sand-dust color cast, the AGW-B method adjusts the blue channel of the sand-dust image of size \(M \times N\) through the following equations.

where R(i, j), G(i, j) and B(i, j) represent the red, green, and blue color channels of an image with the interval [0,1], \(\bar{G}\) and \(\bar{B}\) are the global mean values of G(i, j) and B(i, j). \(\alpha _b\) is a constant parameter equal to 1, which is deemed suitable for various illumination conditions and acquisition settings [27]. In this computation, the values of the red and green channels remain unchanged, while the blue channel is compensated.
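The compensation equation itself is not reproduced in this text. The sketch below is therefore only an assumption: it borrows the form of the channel compensation in Ancuti et al. [27], which uses exactly the quantities named above (\(\bar{G}\), \(\bar{B}\), and \(\alpha _b\)), and it may differ from the expression used by the proposed method. The function name `compensate_blue` is hypothetical.

```python
import numpy as np

def compensate_blue(rgb, alpha_b=1.0):
    """Blue-channel compensation (assumed form, after Ancuti et al. [27]).

    rgb: float array of shape (H, W, 3) with values in [0, 1].
    Returns an image whose red and green channels are unchanged.
    """
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    g_mean, b_mean = g.mean(), b.mean()
    # Assumed form: the boost grows with the green/blue mean gap, is
    # weighted by the green signal, and fades as blue approaches 1.
    b_comp = b + alpha_b * (g_mean - b_mean) * (1.0 - b) * g
    return np.stack([r, g, np.clip(b_comp, 0.0, 1.0)], axis=-1)

# Usage on a flat yellowish image: blue rises from 0.2 to 0.32.
cast = np.full((4, 4, 3), [0.6, 0.5, 0.2])
compensated = compensate_blue(cast)
```

Under this assumed form, pixels whose blue value is already high receive almost no boost, so the compensation targets only the attenuated regions.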

Then, using these values, the compensated global mean values, \(\bar{R}^g, \bar{G}^g\) and \(\bar{B}^g\) for each color channel of the image are computed using the expressions below.

Next, the compensated local means of each channel are computed.

where \(W_L\) is the moving local average window of size \((2L+1) \times (2L+1)\), with L set to 10 [25]. Here, each pixel value is replaced by the average of all the pixel values in its local neighborhood. Additionally, the image borders are padded with mirror reflections of the edge pixels.
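The local averaging step can be sketched as follows. This is an illustrative version, not the authors' code. It assumes that "mirror reflection" means symmetric padding in which the edge pixel itself is repeated (NumPy's `symmetric` mode), and it uses a summed-area table so that the cost per pixel does not depend on L. The function name `local_mean` is hypothetical.

```python
import numpy as np

def local_mean(channel, L=10):
    """Mean over a (2L+1) x (2L+1) window with mirrored borders (sketch)."""
    channel = np.asarray(channel, dtype=float)
    h, w = channel.shape
    k = 2 * L + 1
    # Mirror-pad by L pixels on every side (assumed padding convention).
    pad = np.pad(channel, L, mode="symmetric")
    # Summed-area table with a leading row and column of zeros.
    ii = np.zeros((pad.shape[0] + 1, pad.shape[1] + 1))
    ii[1:, 1:] = pad.cumsum(axis=0).cumsum(axis=1)
    # Window sum for every output pixel from four table lookups.
    total = (ii[k:k + h, k:k + w] - ii[:h, k:k + w]
             - ii[k:k + h, :w] + ii[:h, :w])
    return total / (k * k)

# Usage: a 3 x 3 window (L = 1) on a small ramp image.
ramp = np.arange(12, dtype=float).reshape(3, 4)
smoothed = local_mean(ramp, L=1)
```

With the summed-area table, each output pixel needs only four lookups, so the whole pass stays linear in the number of pixels regardless of the window size.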

As the light attenuation in a sand-dust image varies globally, compensated global and local means are calculated to ensure adequate compensation for the global and local light attenuation. Utilizing these mean values, the compensated mean values for the red, green, and blue channels are computed.

where \(0<\alpha <1\).

Lastly, the color cast is removed by averaging out the compensated color channels R(i, j), G(i, j), and \(B^c(i,j)\) with the compensated mean values, \(\bar{R}^\theta (i,j), \bar{G}^\theta (i,j)\) and \(\bar{B}^\theta (i,j)\) using the expressions below.

where

2.2 Contrast limited adaptive histogram equalization with normalized intensity and saturation correction (CLAHE-NISC)

The resulting image from the initial process is transformed from RGB to the HSI color model. HSI, representing Hue (H), Saturation (S), and Intensity (I), offers a representation of color images that aligns well with the human visual system [28, 29]. This model carries intuitive advantages for image enhancement and offers more precise color descriptions than the RGB model [30].

In the HSI color model, H signifies the human perception of various colors and spans a range from \(0^\circ \) to \(360^\circ \), where \(0^\circ , 120^\circ \) and \(240^\circ \) correspond to red, green, and blue, respectively. S denotes color purity, with higher saturation indicating a more vibrant color, while I represents color brightness. Both S and I fall within the range of 0 to 1.
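For readers who want to reproduce the color model change, the sketch below uses the standard textbook RGB-to-HSI formulas. The paper does not state which HSI variant it adopts (for example, the exact HSI model of [30]), so this form is an assumption, and the function name `rgb_to_hsi` is hypothetical.

```python
import numpy as np

def rgb_to_hsi(rgb):
    """Textbook RGB -> HSI conversion (sketch).

    rgb: float array of shape (..., 3) with values in [0, 1].
    Returns H in degrees [0, 360), and S and I in [0, 1].
    """
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    i = (r + g + b) / 3.0
    mn = np.minimum(np.minimum(r, g), b)
    # Saturation; black pixels (I = 0) are given S = 0.
    s = 1.0 - np.divide(mn, i, out=np.ones_like(i), where=i > 0)
    num = 0.5 * ((r - g) + (r - b))
    den = np.sqrt(np.maximum((r - g) ** 2 + (r - b) * (g - b), 0.0))
    # Achromatic pixels (den = 0) have no defined hue; use 0 degrees.
    ratio = np.divide(num, den, out=np.ones_like(den), where=den > 0)
    theta = np.degrees(np.arccos(np.clip(ratio, -1.0, 1.0)))
    h = np.where(b > g, 360.0 - theta, theta)
    return h, s, i

# Usage: pure red, green, and blue map to 0, 120, and 240 degrees.
primaries = np.array([[[1, 0, 0], [0, 1, 0], [0, 0, 1]]], dtype=float)
h, s, i = rgb_to_hsi(primaries)
```

Since H is computed separately from S and I, later stages can modify saturation and intensity and leave the hue array untouched.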

After the image is converted to the HSI color model, CLAHE is applied to the S and I components. CLAHE is an adaptive contrast enhancement method based on adaptive histogram equalization (AHE) [31]. It refines the enhancement calculation in AHE by imposing a user-specified maximum (the clip limit) on the height of the local histogram. This refined calculation prevents the edge-shadowing effect of the AHE [32]. It also clips the histogram bins that exceed this maximum and redistributes the excess counts, which effectively reduces noise amplification.
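The clipping step that distinguishes CLAHE from AHE can be illustrated on a single local histogram. This is a simplified single-pass sketch and not a full CLAHE implementation: tiling and the interpolation between tiles are omitted, and the function name `clip_histogram` is hypothetical.

```python
import numpy as np

def clip_histogram(hist, clip_limit):
    """Clip a local histogram and spread the excess evenly (sketch)."""
    hist = np.asarray(hist, dtype=float)
    # Counts above the clip limit are removed from their bins...
    excess = np.maximum(hist - clip_limit, 0.0).sum()
    clipped = np.minimum(hist, clip_limit)
    # ...and shared equally among all bins, so the total is preserved.
    return clipped + excess / hist.size

# Usage: one dominant bin is flattened while the total count is kept.
hist = np.array([10.0, 0.0, 0.0, 2.0])
limited = clip_histogram(hist, clip_limit=4.0)
```

After a single pass some bins can end up slightly above the limit again; practical implementations typically repeat the redistribution or accept the small overshoot.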

However, CLAHE may over-enhance the image brightness and introduce color distortion to the enhanced image [33,34,35]. Thus, to reduce over-enhanced brightness and minimize color distortion, normalized intensity and saturation correction (NISC) functions are introduced.

where \(I_{n}\) and \(S_{n}\) are the normalized intensity and saturation values of the enhanced image, respectively.

During the process, the H component of the image is maintained. Thus, the image visibility and the contrast of the image are improved with minimal color distortion. Lastly, the enhanced image is converted back to the RGB color model. The overview of the two processes is illustrated in Fig. 2. It can be observed that the sand-dust color cast has been completely removed by the AGW-B. Meanwhile, the image visibility and the contrast of the resulting images have been improved by the CLAHE-NISC.

2.3 Computational complexity

The computational complexity of the proposed method primarily hinges on its two key components: the AGW-B method and the CLAHE-NISC method. The AGW-B method involves adjusting the blue channel of the sand-dust image based on global and local mean calculations. These computations require iterating over all pixels in the image, resulting in a complexity of O(N), where N is the number of pixels. Similarly, the CLAHE-NISC method applies adaptive histogram equalization and subsequent corrections to the image’s saturation and intensity components. This method also necessitates processing each pixel in the image, leading to a complexity of O(N). Considering these factors, the overall computational complexity of the proposed method can be concisely summarized as O(N), reflecting the dominant influence of pixel-wise operations in both its constituent methodologies.

3 Experimental results and discussion

This section describes the subjective and objective evaluations employed to assess the performance of the proposed method. Four existing sand-dust image enhancement methods, Park and Eom [4], Wang et al. [3], Shi et al. [14], and Shi et al. [12], were selected for comparison. All the methods belong to the same paradigm as the proposed method, which is model-based. Model-based methods are distinguished by their straightforward mathematical models with minimal complexity, eliminating the need for pre-existing training data [29].

In contrast, learning-based methods necessitate extensive image datasets, leading to higher model and time complexities [36, 37]. Additionally, they may suffer from issues such as local over-enhancement caused by uneven illumination during image acquisition [38, 39] and a failure to accurately represent the image depth information, particularly in edge areas [40]. Moreover, learning-based methods heavily rely on the quality and diversity of the training dataset [23]. In some scenarios, acquiring such data is challenging or impractical.

Furthermore, the results produced by learning-based methods often lack interpretability because learning-based models used in enhancement methods are commonly regarded as black boxes, making it challenging to understand how they arrive at their decisions [41, 42]. This lack of interpretability can be a significant concern, particularly in critical applications where justifications for decisions are necessary.

Given these fundamental differences between model-based and learning-based methods, comparing them falls outside the scope of this paper. Therefore, the evaluation focused solely on comparing the proposed method with other model-based methods to assess its effectiveness.

The experiments were conducted on a laptop with an AMD Ryzen 3 3200U CPU operating at 2.60 GHz with a physical memory of 8.00 GB. Sand-dust images from the sand-dust image dataset [4] were utilized in both experiments. The images in the dataset were categorized into three groups: weak, medium, and extreme sand-dust color cast.

Before the experiments, the clip limit value utilized in the CLAHE-NISC was determined. Figure 3 shows some resulting images from the proposed method using three clip limit values, 0.005, 0.01, and 0.05.

Figure 3 shows that the proposed method enhances the image visibility and contrast as the clip limit value increases. However, noise and blocky artifacts were introduced to the resulting images when the clip limit was 0.05. Therefore, to mitigate the issues, the clip limit value in CLAHE-NISC was set to 0.01 for the experiments. Moreover, it is advisable to consistently utilize a clip limit value of 0.01 whenever employing CLAHE-NISC to ensure satisfactory outcomes.

3.1 Subjective evaluation

In this experiment, we conducted a comparative analysis between the proposed method and four current sand-dust image enhancement methods, Park and Eom [4], Wang et al. [3], Shi et al. [14], and Shi et al. [12]. The sand-dust images from the sand-dust image dataset [4] were enhanced using all the sand-dust image enhancement methods.

Figure 4 shows the enhanced sand-dust images with a weak sand-dust color cast. It can be observed that the enhanced images produced by Shi et al. [12] have a dim phenomenon. Also, the color cast is not effectively removed, as seen in most enhanced images. Meanwhile, Shi et al. [14] generated reasonably enhanced images, but the dust haze still exists from their resulting images. Wang et al. [3] managed to remove the color cast but were unable to correct the color effectively, which made their enhanced images look dull. Lastly, Park and Eom [4] produced dark enhanced images that reduce the image visibility and affect the color restoration.

The enhanced sand-dust images with a medium sand-dust color cast are presented in Fig. 5. Similarly, Shi et al. [12] do not completely remove the sand-dust color cast, resulting in poorly enhanced images. Moreover, their enhanced images still show a slight yellowish color cast. Meanwhile, Park and Eom [4], and Shi et al. [14] produced dull enhanced images. Also, the dust haze still exists in the enhanced images produced by Shi et al. [14]. Wang et al. [3] and the proposed method managed to remove the sand-dust color cast. However, the enhanced images from the proposed method look brighter and more appealing.

Images with an extreme sand-dust color cast have an orange to almost reddish color cast. Figure 6 shows the enhanced sand-dust images of this category. Shi et al. [14] and Shi et al. [12] failed to recover the sand-dust images effectively, and their enhanced images have a light orange color cast. Meanwhile, Park and Eom [4], and Wang et al. [3] produced dull enhanced images that reduced the image visibility.

The proposed method removed the sand-dust color cast and improved the image visibility, such as the edges and the image contrast. Given the challenging nature of enhancing sand-dust images with an extreme sand-dust color cast, the proposed method yields significant results.

The results from Figs. 4 to 6 attest to the effectiveness of the proposed method in sand-dust image enhancement. In particular, the method removes different levels of sand-dust color cast by compensating the global and local mean values of the RGB channels of an image. Moreover, the correction functions introduced in the CLAHE-NISC enhance the intensity and saturation of the image while preserving the hue.

3.2 Objective evaluation

For this experiment, the sand-dust image enhancement methods were evaluated objectively based on image visibility and color restoration. For image visibility, the rate of new visible edges, e, and the quality of contrast restoration, \(\bar{r}\), from [43] were utilized. e represents the increased rate of visible edges after image enhancement, while \(\bar{r}\) denotes the quality of contrast restoration in enhanced images.

The image visibility measurement (IVM) [44] and visual contrast measurement (VCM) [45] were also computed to quantify the degree of the visibility of the enhanced images. IVM uses the visible edge segmentation, while VCM uses the local standard deviation to measure image visibility. Thus, higher e, \(\bar{r}\), IVM, and VCM values refer to a better and clearer enhanced image.

Meanwhile, for color restoration, \(\sigma \), which represents the rate of the saturated pixels after the enhancement, was utilized [43]. A smaller \(\sigma \) value indicates better color restoration performance by the enhancement method [33]. Tables 1, 2 and 3 show the average scores for enhanced sand-dust images with weak, medium, and extreme sand-dust color cast, respectively. Meanwhile, Table 4 shows the average scores for all the enhanced sand-dust images.
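To make the \(\sigma \) indicator concrete, the sketch below counts the pixels that are saturated (pure black or pure white) after enhancement but were not saturated before, and divides by the image size, following the usual reading of the definition in [43]. It assumes single-channel images scaled to [0, 1]; the function name `saturated_pixel_rate` and the thresholds `low` and `high` are hypothetical, and the evaluation code used for the experiments may differ.

```python
import numpy as np

def saturated_pixel_rate(original, enhanced, low=0.0, high=1.0):
    """Fraction of pixels that become saturated after enhancement (sketch)."""
    original = np.asarray(original, dtype=float)
    enhanced = np.asarray(enhanced, dtype=float)
    sat_before = (original <= low) | (original >= high)
    sat_after = (enhanced <= low) | (enhanced >= high)
    # Only pixels that were not already saturated are counted.
    newly_saturated = sat_after & ~sat_before
    return newly_saturated.sum() / newly_saturated.size

# Usage: one of four pixels becomes newly saturated.
before = np.array([[0.2, 0.5], [1.0, 0.0]])
after = np.array([[0.0, 0.5], [1.0, 1.0]])
sigma = saturated_pixel_rate(before, after)
```

A method that stretches contrast aggressively pushes more pixels to the extremes and raises this value, which is why lower scores are read as better color restoration.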

It can be observed that the enhanced images produced by the proposed method achieved better image visibility scores than the other four enhancement methods in all the categories of sand-dust color cast. The proposed method can reveal edges and improve the image details after the enhancement, thus producing images with better visibility and contrast correction.

Meanwhile, for color restoration, the proposed method and Shi et al. [14] achieved the lowest \(\sigma \) values in all three categories. This shows that the proposed method introduces minimal color distortion to the enhanced images. Thus, the proposed method produces brighter and more appealing images than the others.

4 Conclusion

This paper has presented an integrated sand-dust image enhancement method. It integrates two main processes in two different color models. The AGW-B is utilized in the RGB color model to remove the sand-dust color cast. Meanwhile, the CLAHE-NISC was conducted in the HSI color model to enhance the saturation and intensity of the image. The amalgamation of these processes yields a holistic image enhancement method to address the challenges of color cast removal and contrast enhancement typically encountered in sand-dust imagery.

Sand-dust images with three categories of color casts, weak, medium, and extreme, were utilized in the subjective and objective evaluations. The results reveal the superiority of the proposed method compared to the four existing sand-dust enhancement methods in terms of image visibility and color restoration. Also, the enhanced images from the proposed method look brighter and more appealing than the others.

In conclusion, the proposed method presents an innovative solution for improving images affected by sand dust, introducing new concepts and enhancements to established methodologies. As a result, it achieves superior image quality and perceptual fidelity.

Availability of data and code

The data and code generated and/or analyzed during the current study are available from the corresponding author on reasonable request, provided that researchers cite this work in their papers.

References

Fu X, Huang Y, Zeng D, Zhang XP, Ding X (2014) A fusion-based enhancing approach for single sandstorm image. In: 2014 IEEE 16th international workshop on multimedia signal processing (MMSP), pp 1–5

Yan T, Wang L, Wang J (2014) Method to enhance degraded image in dust environment. J Softw 9(10):2672–2677

Wang B, Wei B, Kang Z, Hu L, Li C (2021) Fast color balance and multi-path fusion for sandstorm image enhancement. Sig Image Video Process 15:637–644

Park TH, Eom IK (2021) Sand-dust image enhancement using successive color balance with coincident chromatic histogram. IEEE Access 9:19749–19760

Gao G, Lai H, Wang L, Jia Z (2022) Color balance and sand-dust image enhancement in lab space. Multimed Tools Appl

Liang P, Dong P, Wang F, Ma P, Bai J, Wang B et al (2022) Learning to remove sandstorm for image enhancement. The Visual Computer, pp 1–24

Huang SC, Ye JH, Chen BH (2015) An advanced single-image visibility restoration algorithm for real-world hazy scenes. IEEE Trans Ind Electron 62(5):2962–2972

Wang J, Pang Y, He Y, Liu C (2016) Enhancement for dust-sand storm images. In: Proceedings, Part I, of the 22nd international conference on multimedia modeling, vol 9516. Springer-Verlag, pp 842–849

Al-Ameen Z (2016) Visibility enhancement for images captured in dusty weather via tuned tri-threshold fuzzy intensification operators. Int J Intell Syst Appl 8(8):10–17

Yu S, Zhu H, Wang J, Fu Z, Xue S, Shi H (2016) Single sand-dust image restoration using information loss constraint. J Modern Opt 63(21):2121–2130

Zhu H, Peng X, Chandrasekhar V, Li L, Lim JH (2018) DehazeGAN: when image dehazing meets differential programming. In: Proceedings of the twenty-seventh international joint conference on artificial intelligence, IJCAI-18. International Joint Conferences on Artificial Intelligence Organization. pp 1234–1240

Shi Z, Feng Y, Zhao M, Zhang E, He L (2019) Let you see in sand dust weather: a method based on halo-reduced dark channel prior dehazing for sand-dust image enhancement. IEEE Access 7:116722–116733

Li B, Gou Y, Liu JZ, Zhu H, Zhou JT, Peng X (2020) Zero-shot image dehazing. IEEE Trans Image Process 29:8457–8466

Shi Z, Feng Y, Zhao M, Zhang E, He L (2020) Normalised gamma transformation-based contrast-limited adaptive histogram equalisation with colour correction for sand-dust image enhancement. IET Image Process 14(4):747–756

Gao G, Lai H, Jia Z, Liu Y, Wang Y (2020) Sand-dust image restoration based on reversing the blue channel prior. IEEE Photon J 12(2):1–16

Cheng Y, Jia Z, Lai H, Yang J, Kasabov NK (2020) A fast sand-dust image enhancement algorithm by blue channel compensation and guided image filtering. IEEE Access 8:196690–196699

Cheng Y, Jia Z, Lai H, Yang J, Kasabov NK (2020) Blue channel and fusion for sandstorm image enhancement. IEEE Access 8:66931–66940

Gao G, Lai H, Liu Y, Wang L, Jia Z (2021) Sandstorm image enhancement based on YUV space. Optik - Int J Light Electron Optics 226:1–11

Gao G, Lai H, Jia Z et al (2023) Two-step unsupervised approach for sand-dust image enhancement. Int J Intell Syst 2023

Alsaeedi AH, Hadi SM, Alazzawi Y (2023) Fast dust sand image enhancement based on color correction and new membership function. arXiv:2307.15230

Fei S, Zhenhong J, Huicheng L, K KN, Sensen S, Junnan W (2023) Sand-dust image enhancement based on light attenuation and transmission compensation. Multimed Tools Appl 82(5):7055–7077

Xiang P, Chen C, Liu G, Pang Z, Zhang J, Hu J (2023) Image enhancement of degraded sand-dust images based on channel compensation and brightness partitioning. In: 2023 2nd international conference on robotics, artificial intelligence and intelligent control (RAIIC). IEEE, pp 288–293

Zhang K, Zuo W, Chen Y, Meng D, Zhang L (2017) Beyond a Gaussian denoiser: residual learning of deep CNN for image denoising. IEEE Trans Image Process 26(7):3142–3155

Wong SL, Paramesran R, Taguchi A (2018) Underwater image enhancement by adaptive gray world and differential gray-levels histogram equalization. Adv Electr Comput Eng 18(2):109–116

Wong SL, Paramesran R, Yoshida I, Taguchi A (2019) An integrated method to remove color cast and contrast enhancement for underwater image. IEICE Trans Fundam E102–A:1524–1532

Hassan MF, Adam T, Paramesran R (2023) Lightness enhancement method for low illumination night-time image. In: AIP conference proceedings, vol 2756. AIP Publishing, pp 030008–1–030008–6

Ancuti CO, Ancuti C, De Vleeschouwer C, Bekaert P (2018) Color balance and fusion for underwater image enhancement. IEEE Trans Image Process 27(1):379–393

Zhang W, Liang J, Ren L, Ju H, Bai Z, Wu Z (2017) Fast polarimetric dehazing method for visibility enhancement in HSI colour space. J Opt 19(9):095606

Hassan MF, Adam T, Rajagopal H, Paramesran R (2022) A hue preserving uniform illumination image enhancement via triangle similarity criterion in HSI color space. The Visual Computer, pp 1–12

Chien CL, Tseng DC (2011) Color image enhancement with exact HSI color model. Int J Innov Comput Inf Control 7(12):6691–6710

Zuiderveld K (1994) Contrast limited adaptive histogram equalization. In: Graphics Gems IV. Academic Press Professional

Pisano ED, Zong S, Hemminger BM, DeLuca M, Johnston RE, Muller K et al (1998) Contrast limited adaptive histogram equalization image processing to improve the detection of simulated spiculations in dense mammograms. J Digit Imaging 11(4):193–200

Xu Y, Wen J, Fei L, Zhang Z (2016) Review of video and image defogging algorithms and related studies on image restoration and enhancement. IEEE Access 4:165–188

Wang W, Wu X, Yuan X, Gao Z (2020) An experiment-based review of low-light image enhancement methods. IEEE Access 8:87884–87917

Hassan MF (2022) A uniform illumination image enhancement via linear transformation in CIELAB color space. Multimed Tools Appl

Wang W, Wu X, Yuan X, Gao Z (2020) An experiment-based review of low-light image enhancement methods. IEEE Access 8:87884–87917

Tai Y, Yang J, Liu X, Xu C (2017) Memnet: a persistent memory network for image restoration. In: Proceedings of the IEEE international conference on computer vision, pp 4539–4547

Wang W, Chen Z, Yuan X, Wu X (2019) Adaptive image enhancement method for correcting low-illumination images. Inf Sci 496:25–41

Zhang C, Bengio S, Hardt M, Recht B, Vinyals O (2021) Understanding deep learning (still) requires rethinking generalization. Commun ACM 64(3):107–115

Xu Y, Wen J, Fei L, Zhang Z (2015) Review of video and image defogging algorithms and related studies on image restoration and enhancement. IEEE Access 4:165–188

Li J, Pei Z, Zeng T (2021) From beginner to master: a survey for deep learning-based single-image super-resolution. arXiv:2109.14335

Samek W, Montavon G, Vedaldi A, Hansen LK, Müller KR (2019) Explainable AI: interpreting, explaining and visualizing deep learning. vol 11700. Springer Nature

Hautiere N, Tarel JP, Aubert D, Dumont E (2008) Blind contrast enhancement assessment by gradient ratioing at visible edges. Image Anal Stereology 27:87–95

Yu X, Xiao C, Deng M, Peng L (2011) A classification algorithm to distinguish image as haze or non-haze. In: 2011 Sixth international conference on image and graphics. pp 286–289

Jobson DJ, ur Rahman Z, Woodell GA, Hines GD (2006) A comparison of visual statistics for the image enhancement of FORESITE aerial images with those of major image classes. In: Society of photo-optical instrumentation engineers (SPIE) conference series, vol 6246. pp 624601

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Ethics declarations

Ethics approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Conflicts of interest

No conflict of interest exists in the submission of this manuscript, and the manuscript is approved by all authors for publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hassan, M.F., Siaw Lang, W. & Paramesran, R. An integrated enhancement method to improve image visibility and remove color cast for sand-dust image. Multimed Tools Appl (2024). https://doi.org/10.1007/s11042-024-19245-1

DOI: https://doi.org/10.1007/s11042-024-19245-1