Abstract

Because of the scattering and absorption of light, images captured in sand-dust weather suffer from severe color shift and poor visibility, which limits computer vision applications. To address these problems, the present study proposes a color balance and sand-dust image enhancement algorithm in Lab space. To correct the color of the sand-dust image, a color balance technique is proposed. First, the technique uses the green channel to compensate for the lost values of the blue channel. Then, a statistics-based strategy is applied to remove the color shift. The proposed color balance technique effectively removes the color shift while reducing blue artifacts. Next, the brightness component L is decomposed by guided filtering to obtain the detail component, and a nonlinear mapping function and a gamma function are applied to the detail component to enhance the detail information of the image. Qualitative and quantitative experimental results demonstrate that the proposed method effectively removes color shift, enhances image details and contrast, and outperforms other state-of-the-art methods. Additionally, the proposed algorithm is fast enough for real-time applications and can also be used to restore turbid underwater images and haze images.


1 Introduction

In sand-dust weather, a large number of particles and molecules are suspended in the air. They strongly scatter and absorb light, so the captured images suffer from color shift, low contrast and blurred details. This seriously limits the usability of outdoor surveillance systems, intelligent transportation systems, remote sensing systems and target recognition systems. For example, in extreme weather, people in the scene become blurred and cannot be reliably identified [21]. Therefore, it is important to investigate sand-dust image clarification algorithms that improve image quality and thereby expand the applications of computer vision.

Recently, researchers have proposed several algorithms for sand-dust images that address the problems of color shift and low contrast, as discussed in Section 2. Although these algorithms can improve the visibility of sand-dust images, some problems remain. First, their color correction techniques generate blue artifacts when processing heavy sand-dust images. Second, they have limited ability to enhance the details of distant scenes. Third, and more importantly, some algorithms have high time complexity and cannot satisfy the requirements of real-time processing.

To solve these problems, the present study proposes a fast color balance and sand-dust image enhancement algorithm in Lab space. First, a color balance technique is proposed. Based on the characteristics of sand-dust images, the green channel is used to compensate the severely attenuated blue channel, which reduces blue artifacts. Then, the color shift is removed with a statistical method. Afterwards, the image is converted from RGB space to Lab space, and the brightness component L is decomposed by guided filtering to obtain the detail component. A nonlinear mapping function and a gamma function are then applied to the detail component to enhance the detail and gradient information of the image. The proposed color balance technique can process heavy sand-dust images and avoids the blue artifacts produced by existing color correction techniques. The Lab-space detail enhancement effectively enhances distant details with low algorithmic complexity and a short running time, so the method is suitable for real-time applications. Our main contributions are as follows:

1) A color balance technique is proposed. It can correct the color of heavy sand-dust images while avoiding color distortion.

2) A nonlinear mapping function and a gamma function are introduced to enhance the detail information of the image.

3) The proposed algorithm satisfies real-time requirements and can also be used to restore turbid underwater images and haze images.

The rest of this paper is organized as follows. Section 2 reviews the related literature. Section 3 describes the proposed method in detail. Section 4 presents the experimental results and analysis. Finally, Section 5 concludes the paper.

2 Literature review

At present, there are relatively few algorithms designed for sand-dust images; most related work concentrates on image dehazing, such as [6, 13, 16, 18, 20, 29, 33]. Dehazing algorithms can be divided into three categories. The first category is enhancement-based methods, such as histogram equalization [3] and a Retinex-based wavelet transform method [25]. These methods improve the subjective visual quality of the processed image, but their results are often unrealistic. The second category is restoration-based methods, which build a model of the degraded image from the causes of the degradation. Such models need prior information to restore the image well, such as the dark channel prior [13], the color attenuation prior [34] and the non-local prior [5]. Their results are more realistic, but the solution process is more complicated, and these priors do not apply to sand-dust images. The third category is deep learning-based methods, such as [24, 30, 33]. These methods achieve good results but require large datasets. Because large sand-dust datasets are lacking, no deep learning method has so far been developed for sand-dust image enhancement. Compared with haze images, sand-dust images suffer not only from poor visibility but also from serious color shift, which makes sand-dust image enhancement more challenging. Current sand-dust image clarification algorithms fall into two main categories: enhancement-based methods and restoration-based methods. Whichever approach is used, the color shift must be handled.

Classical color correction techniques, such as gray world [15] and white balance [27], are poor at removing color shifts and tend to introduce color distortion. Several newer color correction techniques exist. Koscevic et al. [17] proposed a convolutional network with an attention mechanism to achieve color constancy. Afifi et al. [1] used a k-nearest neighbor search together with a nonlinear color mapping function to correct color. Both methods work well on color-distorted images, but their training sets are not specific to sand-dust images, so their results on sand-dust images are not ideal. Sand-dust image clarification therefore remains an open research problem.
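One way to see why classical correction struggles with sand-dust images is to look at the gains the gray world assumption produces. The minimal NumPy sketch below (the function name `gray_world` is hypothetical, and float RGB input in [0, 1] is assumed) scales each channel so that its mean equals the mean over all channels. A heavily attenuated blue channel receives a large gain, and that gain amplifies whatever noise the blue channel contains.

```python
import numpy as np


def gray_world(img):
    """Gray-world correction (illustrative sketch, hypothetical name).

    img: float RGB array of shape (H, W, 3) with values in [0, 1].
    Returns the corrected image and the per-channel gains.
    """
    # Mean of each channel over all pixels.
    means = img.reshape(-1, 3).mean(axis=0)
    # Gray-world assumption: every channel mean should equal the overall mean.
    gains = means.mean() / np.maximum(means, 1e-6)
    return np.clip(img * gains, 0.0, 1.0), gains


# A yellowish test image: strong red, weaker green, heavily attenuated blue.
img = np.ones((4, 4, 3)) * np.array([0.8, 0.65, 0.15])
out, gains = gray_world(img)
print(np.round(gains, 2))
```

With channel means of 0.8, 0.65 and 0.15, the overall mean is about 0.533, so the blue channel is multiplied by about 3.56 while the red channel is attenuated.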

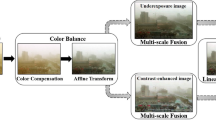

Image enhancement-based methods: Fu et al. [9] proposed a fusion-based sand-dust image enhancement method. It first uses a statistics-based correction method to remove the color shift and then derives two input images with different brightness. The final enhanced image is obtained by weighting the input images with three weight maps and fusing them. Although this method enhances contrast, some details of the image remain dark, and blue artifacts are likely to appear. Al-Ameen [2] proposed a sand-dust image enhancement method based on fuzzy operators. It first normalizes the three channels and then tunes the fuzzy operators to achieve color correction and contrast enhancement. However, it cannot effectively improve visibility, and its color correction is insufficient. Li et al. [19] proposed a robust Retinex model that imposes piecewise smoothness on the illumination and a fidelity term on the gradients of the reflectance to enhance image details. It performs well on low-light images but not on sand-dust images. Gao et al. [11] proposed a sand-dust image enhancement method in YUV space. It uses the color components U and V for color correction and enhances contrast with CLAHE and an improved Retinex. Nevertheless, some distortion remains after the color shift is removed.

Image restoration-based methods: Yang et al. [31] proposed an optical compensation method that corrects color and optimizes the transmittance with a constrained Gaussian function to enhance sand-dust images; however, halos tend to appear in sky regions. Yu et al. [32] proposed a sand-dust image restoration method based on the atmospheric scattering model and an information loss constraint. It updates the atmospheric light, estimates the transmittance under the information constraint, and compensates the color shift with the gray world algorithm. However, its results are unsatisfactory. Gao et al. [10] proposed a sand-dust image restoration method based on the reversed blue channel prior. The method first reverses the blue channel of the sand-dust image and uses the dark channel prior to estimate the atmospheric light and transmission map. Finally, an adaptive color adjustment factor combined with the gray world assumption is introduced into the restoration model to remove the color shift. Although this method improves visibility, it cannot handle heavy sand-dust images. Dhara et al. [8] proposed a restoration method based on adaptive atmospheric light refinement and non-linear color balancing. Although it effectively enhances image sharpness, it tends to cause color distortion during restoration. Similar studies improve the estimation of atmospheric light and transmittance, such as [23, 26]. Nevertheless, none of the above methods performs well on heavy sand-dust images.

3 Details of our method

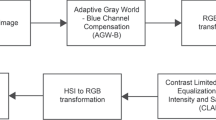

The block diagram of the proposed method is shown in Fig. 1, and the details are described in Sections 3.1 and 3.2.

3.1 Color balance

In sand-dust weather, a large number of sand-dust particles are suspended in the air, and most of the blue light is absorbed and scattered, which makes the image yellowish or even reddish [10, 23]. Figure 2 shows the histogram distribution of a sand-dust image. Scattering and absorption affect all three channels, but the blue channel is attenuated far more severely than the red and green channels. If the gray world algorithm or another classical color correction algorithm is applied directly, blue artifacts appear. Therefore, the blue channel should be compensated first. The color correction in [4] targets underwater images and compensates the red channel. Inspired by the different characteristics of sand-dust and underwater images, we use the green channel to compensate the blue channel. This compensation is based on the characteristics of sand-dust images and effectively reduces blue artifacts. The formula is as follows:
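Assuming intensities normalized to [0, 1], one plausible form of this compensation adapts the red-channel compensation of [4] to the blue channel, with the green channel as the reference and the weighting factor of [4] set to 1 (both choices are assumptions):

\[
I_b'(x) = I_b(x) + \left(\overline{I}_g - \overline{I}_b\right)\left(1 - I_b(x)\right) I_g(x)
\]

In this form, the factor \((1 - I_b(x))\, I_g(x)\) makes the correction largest where blue is weak and green is strong, and the difference of the channel means sets its overall size.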

where \(I_b(x)\) and \(I_g(x)\) are the blue and green channels of the image, \({\overline{I}}_b\) and \({\overline{I}}_g\) are the mean values of the blue and green channels, respectively, and \({I}_{\mathrm{b}}^{\prime }(x)\) denotes the compensated blue channel. After compensation, a color shift still remains and must be removed. In the present study, the statistical method of [9] is used to remove it, as follows:
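Following the mean-and-spread stretch of [9], and again assuming a [0, 1] range, the statistical correction can plausibly be written as

\[
O_a^c = \frac{O^c - O_{\min}^c}{O_{\max}^c - O_{\min}^c}, \qquad
O_{\max}^c = O_{\mathrm{mean}}^c + \eta\, O_{\mathrm{var}}^c, \qquad
O_{\min}^c = O_{\mathrm{mean}}^c - \eta\, O_{\mathrm{var}}^c,
\]

with the result clipped to [0, 1]. Under this form, a larger η widens the interval \([O_{\min}^c, O_{\max}^c]\) and weakens the stretch, which is consistent with the lower contrast reported for η = 2.5.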


where c ∈ {R, G, B}, \(O^c\) is the compensated channel, \({O}_{\mathrm{mean}}^c\) is the mean value of the channel, \({O}_{\mathrm{var}}^c\) is the variance of the channel, \({O}_{\mathrm{max}}^c\) and \({O}_{\mathrm{min}}^c\) are the maximum and minimum values used to remove the color shift, \(O_a\) is the color-corrected image, and η is the parameter that controls saturation and contrast. The influence of this parameter was verified experimentally, as shown in Fig. 3. When η = 1.5, the image contrast is high but the color is oversaturated; for example, the trees in the first column of Fig. 3b and the foreground sea water in the second column turn black. When η = 2.5, the color of the image is natural, but the contrast is insufficient. η = 2 is a compromise that combines the advantages of both, so η = 2 is selected.
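The two color balance steps can be sketched together in NumPy. This is a minimal sketch, not the authors' implementation: it assumes float RGB input in [0, 1], an Ancuti-style compensation \(I_b + (\overline{I}_g - \overline{I}_b)(1 - I_b)\, I_g\), and a stretch of each channel from \([O_{\mathrm{mean}} - \eta s, O_{\mathrm{mean}} + \eta s]\) onto [0, 1]. The spread s is taken as the standard deviation, which is an assumption (the text calls it the variance; on a [0, 1] scale the raw variance would give an almost degenerate interval). The function names are hypothetical.

```python
import numpy as np


def compensate_blue(img):
    """Compensate the blue channel from the green channel (assumed Ancuti-style form).

    img: float RGB array of shape (H, W, 3) with values in [0, 1].
    """
    g, b = img[..., 1], img[..., 2]
    # I'_b = I_b + (mean(I_g) - mean(I_b)) * (1 - I_b) * I_g
    b_comp = b + (g.mean() - b.mean()) * (1.0 - b) * g
    out = img.copy()
    out[..., 2] = np.clip(b_comp, 0.0, 1.0)
    return out


def statistical_stretch(img, eta=2.0):
    """Map [mean - eta*spread, mean + eta*spread] of each channel onto [0, 1].

    The spread is the standard deviation here (assumption; the text says variance).
    """
    out = np.empty_like(img)
    for c in range(3):
        ch = img[..., c]
        spread = ch.std()
        o_min = ch.mean() - eta * spread
        o_max = ch.mean() + eta * spread
        if o_max - o_min < 1e-12:
            out[..., c] = ch  # flat channel: nothing to stretch
        else:
            out[..., c] = np.clip((ch - o_min) / (o_max - o_min), 0.0, 1.0)
    return out


def color_balance(img, eta=2.0):
    """Blue compensation followed by the statistical stretch (hypothetical helper)."""
    return statistical_stretch(compensate_blue(img), eta)
```

Because each channel's mean is mapped to the middle of [0, 1], the stretch also balances the channel means, which is what removes the remaining cast after compensation.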

The color balance technique proposed in this paper effectively corrects the color and reduces blue artifacts. To demonstrate its effectiveness, we compared it with the gray world algorithm, the optical compensation method (OCM) [31], the color balance method [4] and the statistical-based method [9]. The results are shown in Fig. 4.

As shown in Fig. 4, both the gray world method and OCM [31] produce yellowish casts or blue artifacts. Although the method of [4] causes no color distortion, its overall visual effect is not as good as that of our color balance technique. Meanwhile, applying the statistical method [9] directly also leads to blue artifacts or color distortion. Our color balance technique corrects the color and also enhances the contrast of the image to some extent. Overall, the proposed color balance technique outperforms the other color correction techniques in this comparison.

3.2 Detail enhancement in Lab space

After color correction, enhancing the sand-dust image directly in RGB space would introduce color distortion, because the R, G and B channels are strongly correlated. The Lab space consists of a brightness component L and two color components a and b, where a spans the range from magenta to green, b spans the range from yellow to blue, and L takes values in [0, 100]. The model is shown in Fig. 5. Because these components are only weakly correlated, we can adjust the brightness component to enhance details while keeping the color components unchanged, which avoids color distortion during enhancement. In addition, the conversion between RGB and Lab space is relatively simple and therefore satisfies the needs of real-time applications. To enhance the detail information of the image more effectively, guided filtering [14] is used to decompose the brightness component L into a detail layer and a base layer. The detail layer represents the edge information and local gradients of the image, and the base layer represents its overall contrast. Because the details of the color-corrected image are blurred, the detail layer needs to be enhanced. First, guided filtering is used to obtain the base layer, as shown in (5).

where G denotes the guided filtering operator, r is the filter radius and ε is the regularization parameter that controls the degree of smoothing, with r = 20 and ε = 0.25. L is the brightness component and serves as both the input image and the guidance image. Then, the detail layer is obtained by (6).
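Guided filtering [14] can be computed in O(N) time with box filters, which supports the real-time claim. The sketch below follows the standard algorithm of [14] with L used as both guide and input, and takes the detail layer as D = L − B; that subtraction is the usual base/detail split but is an assumption about Eq. (6). The text does not say whether ε = 0.25 applies to L on [0, 100] or to a normalized L, so the scaling is left to the caller. All function names are hypothetical.

```python
import numpy as np


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window, truncated at the borders (integral image)."""
    h, w = img.shape
    s = np.zeros((h + 1, w + 1))
    s[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    rows, cols = np.arange(h), np.arange(w)
    i0, i1 = np.clip(rows - r, 0, h), np.clip(rows + r + 1, 0, h)
    j0, j1 = np.clip(cols - r, 0, w), np.clip(cols + r + 1, 0, w)
    # Window sum from four corners of the integral image.
    total = s[i1][:, j1] - s[i0][:, j1] - s[i1][:, j0] + s[i0][:, j0]
    count = (i1 - i0)[:, None] * (j1 - j0)[None, :]
    return total / count


def guided_filter(guide, src, r, eps):
    """Guided filter of He et al.: local linear model q = a * guide + b."""
    mean_i = box_mean(guide, r)
    mean_p = box_mean(src, r)
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    var_i = box_mean(guide * guide, r) - mean_i ** 2
    a = cov_ip / (var_i + eps)
    b = mean_p - a * mean_i
    return box_mean(a, r) * guide + box_mean(b, r)


def decompose(lum, r=20, eps=0.25):
    """Split luminance into base (guided-filtered) and detail (residual) layers."""
    base = guided_filter(lum, lum, r, eps)
    detail = lum - base
    return base, detail
```

Larger ε pushes the local coefficient a toward 0, so the base layer approaches a plain box blur and more structure moves into the detail layer.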

Following [7, 28], we introduce a nonlinear function: a modified sigmoid function maps the detail layer to enhance the details of the image. It is expressed as follows:

where a is the width parameter of the nonlinear region (a = 0.5), λ is a scale factor (λ = 30), and \(D_{\mathrm{mean}}\) is the mean value of the detail component. The enhanced detail layer can then be written as:

where ξ is the gamma parameter, which fine-tunes the enhancement amplitude; Fig. 7 shows the influence of different values of ξ on the restored image. Finally, the enhanced luminance component L′ is obtained by fusing the enhanced detail layer D′ with the base layer B.
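The exact forms of the modified sigmoid, the gamma step and the fusion cannot be recovered from the text, so the sketch below is HYPOTHETICAL. It uses a sigmoid centered on \(D_{\mathrm{mean}}\) whose output is bounded by a (the text calls a a width parameter; its role here is assumed), a sign-preserving power \(|f|^{\xi}\) for the gamma step, and additive fusion L′ = B + D′ on a luminance normalized to [0, 1]. It only illustrates how λ, a and ξ interact and does not reproduce the paper's equations.

```python
import numpy as np


def map_detail(detail, a=0.5, lam=30.0):
    """HYPOTHETICAL modified sigmoid applied to the detail layer.

    Centered on the detail mean; the output lies in [-a, a].
    a * tanh(x / 2) equals a * (2 * sigmoid(x) - 1) and avoids overflow in exp.
    """
    centered = detail - detail.mean()
    return a * np.tanh(0.5 * lam * centered)


def gamma_detail(mapped, xi=1.2):
    """HYPOTHETICAL gamma step: sign-preserving power of the magnitude."""
    return np.sign(mapped) * np.abs(mapped) ** xi


def fuse(base, enhanced_detail):
    """Assumed additive fusion L' = B + D', clipped to the normalized range [0, 1]."""
    return np.clip(base + enhanced_detail, 0.0, 1.0)
```

Under this assumed form the mapped magnitudes stay below 1, so a smaller ξ yields larger values (0.25 raised to 0.8 is about 0.33, versus about 0.19 for ξ = 1.2). This matches the trend reported for Fig. 7, though the agreement does not confirm the paper's actual equations.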

The image is then converted from Lab space back to RGB space to obtain the final enhanced image. Figure 6 shows the processing pipeline of the proposed algorithm. As illustrated in Fig. 6, the proposed method removes the color shift and effectively enhances the details and contrast of distant regions.
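The color space conversions at the start and end of this stage can be sketched with the standard sRGB to CIELAB formulas. This self-contained NumPy version assumes sRGB input in [0, 1] and the D65 white point; the paper does not state which conversion routine its MATLAB implementation used, and the function names are hypothetical.

```python
import numpy as np

# sRGB (D65) linear RGB -> XYZ matrix and D65 reference white (Y = 1).
_M = np.array([[0.4124564, 0.3575761, 0.1804375],
               [0.2126729, 0.7151522, 0.0721750],
               [0.0193339, 0.1191920, 0.9503041]])
_M_INV = np.linalg.inv(_M)
_WHITE = np.array([0.95047, 1.0, 1.08883])
_DELTA = 6.0 / 29.0


def _srgb_to_linear(c):
    return np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)


def _linear_to_srgb(c):
    c = np.clip(c, 0.0, 1.0)
    return np.where(c <= 0.0031308, 12.92 * c, 1.055 * c ** (1 / 2.4) - 0.055)


def _f(t):
    # CIELAB companding: cube root above the threshold, linear segment below.
    return np.where(t > _DELTA ** 3, np.cbrt(t), t / (3 * _DELTA ** 2) + 4.0 / 29.0)


def _f_inv(t):
    return np.where(t > _DELTA, t ** 3, 3 * _DELTA ** 2 * (t - 4.0 / 29.0))


def rgb_to_lab(rgb):
    """sRGB in [0, 1], shape (..., 3) -> Lab with L in [0, 100]."""
    xyz = _srgb_to_linear(rgb) @ _M.T / _WHITE
    fx, fy, fz = _f(xyz[..., 0]), _f(xyz[..., 1]), _f(xyz[..., 2])
    return np.stack([116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)], axis=-1)


def lab_to_rgb(lab):
    """Inverse of rgb_to_lab; out-of-gamut values are clipped to [0, 1]."""
    fy = (lab[..., 0] + 16.0) / 116.0
    fx = fy + lab[..., 1] / 500.0
    fz = fy - lab[..., 2] / 200.0
    xyz = np.stack([_f_inv(fx), _f_inv(fy), _f_inv(fz)], axis=-1) * _WHITE
    return _linear_to_srgb(xyz @ _M_INV.T)
```

In the enhancement stage, only channel 0 (L) would be modified; the a and b channels are passed to `lab_to_rgb` unchanged, which is what keeps the corrected colors fixed while the details are enhanced.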

As Fig. 7 shows, a smaller ξ enhances details more strongly but also amplifies noise, for example in the sky region. A larger ξ reduces noise but weakens detail enhancement, so the image in Fig. 7e becomes blurred. With ξ = 1.2, noise is effectively suppressed while details are still enhanced. Therefore, ξ = 1.2 is used.

4 Experimental results and analysis

The images used in the experiments are real, non-synthetic, high-quality sand-dust images collected from the Internet and are typical of sand-dust conditions. The algorithm was implemented in MATLAB 2016a on a computer with an Intel(R) Core(TM) i5-6500 CPU @ 3.2 GHz, 8 GB of memory and a 64-bit operating system, and a large number of images were used in the experiments. To verify the performance of the proposed algorithm, we compare it with the methods of Li et al. [19], Al-Ameen [2], Yang et al. [31], Dhara et al. [8] and Gao et al. [10]. The experimental results are shown in Figs. 8, 9, 10, 11. This section provides qualitative and quantitative analysis, an evaluation of algorithm performance, applications of the algorithm in other fields, and the limitations of the study.

4.1 Qualitative evaluation

As shown in Figs. 8a, 9a, 10a and 11a, the original sand-dust images exhibit color shift, blurred details and low contrast. In Figs. 8b, 9b, 10b and 11b, the method of [19] neither removes the color shift nor enhances the contrast. In particular, the distant mountains in Fig. 8b and the animals in Fig. 10b become more blurred, and the color shift in Fig. 11b becomes more serious. The reason is that sand-dust scenes are complex and the robust Retinex model of [19] is not suited to sand-dust image enhancement. In Figs. 8c, 9c, 10c and 11c, the method of [2] removes the color shift only in Fig. 10c; all other results still show a color shift. Although the contrast is improved to some extent, the overall image quality remains low; in Fig. 10c, for example, the distant plants are still blurred. The reason is that the method of [2] uses fuzzy operators for contrast enhancement and color correction and controls them with fixed parameters, which cannot adapt to each sand-dust image, so its robustness is limited. As shown in Figs. 8d, 9d, 10d and 11d, the method of [31] removes the color shift very well, but the overall contrast is low and the details are still blurred. Figures 8d and 9d are overly bright because the red channel values are large and the blue and green channels are matched to the red channel, so their values also become large and the whole image is brightened. The halo in the sky area of Fig. 10d is mainly caused by inaccurate estimation of the transmittance in the sky. The blue artifacts in the foreground of Fig. 11d arise because the blue channel is not compensated under heavy sand-dust, which degrades the visual effect. As shown in Figs. 8e, 9e, 10e and 11e, the method of [8] enhances the contrast and details of the image.

However, Figs. 8e, 9e and 10e show color distortion introduced during enhancement. In particular, the sky area of Fig. 9e contains a large halo, and Fig. 10e does not fully remove the color cast and appears slightly reddish. The reason is that the non-linear color correction used in [8] is not suitable for some sand-dust images. In addition, inaccurate estimation of the atmospheric light reduces the visibility of the image. Figures 8f, 9f, 10f and 11f show that the method of [10] improves the overall contrast but introduces color distortion, for example in the sky areas of Figs. 8f and 11f. Although Fig. 10f removes the color shift well, distant details are still not sufficiently enhanced. In Fig. 9f, a halo appears in the sky region because a fixed threshold is used to estimate the transmittance of the sky, which reduces the accuracy of the transmittance. The color distortion mainly results from the combination with the gray world algorithm, which over-adjusts the sky and other regions with large color values. As shown in Figs. 8g, 9g, 10g and 11g, compared with the other algorithms, the proposed method removes the color shift, improves contrast and enhances details without causing color distortion, yielding better visibility. The contrast of Fig. 11g is enhanced and its color distortion is reduced. In Fig. 8g, the distant mountains are clearly visible and the restored colors are vivid. The distant white shed in Fig. 9g and the plants in Fig. 10g are effectively enhanced and show more detail. This is because the proposed color balance technique corrects the color well, and the nonlinear mapping function and gamma function effectively enhance the image details. Figure 12 shows more enhancement results of the proposed method, which also demonstrate good recovery.

Although the proposed method outperforms the other algorithms, some imperfections can be observed. Some noise is introduced during enhancement and is most visible in the sky areas. Fig. 11g also shows slight color distortion, and the colors of the close-range region in Fig. 10g are not vivid enough.

4.2 Quantitative evaluation

In general, objective indicators of image restoration quality fall into two broad categories: full-reference methods and no-reference methods. Because a reference image is extremely difficult to obtain for a real sand-dust scene, we use no-reference evaluation indices. Specifically, two well-known indices, e and r [12], are used to evaluate the restoration effect of each method on real scenes, and the NBIQA index [22] is adopted to evaluate overall image quality. The index e measures the rate of new visible edges in the restored image relative to the original degraded image, and r is the mean ratio of the gradients at visible edges after and before restoration, which reflects the average gain in visibility. Larger values of e and r indicate better restoration. NBIQA combines a large number of spatial-domain and transform-domain features based on natural scene statistics and predicts an overall image quality score; a higher NBIQA score indicates higher image quality. The three indicators are computed for Figs. 8, 9, 10, 11, and the results are listed in Tables 1, 2 and 3. To further evaluate the algorithms, we compute the average values of e, r and NBIQA over 100 sand-dust images; the results are listed in Table 4.
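The indices of [12] can be sketched as follows. In [12], \(e = (n_r - n_o)/n_o\), where \(n_o\) and \(n_r\) are the numbers of visible edges in the original and restored images, and r is the geometric mean of the gradient ratios at the visible edges of the restored image. The visible-edge segmentation in [12] is more elaborate than a threshold; this sketch substitutes a simple Sobel-magnitude threshold (the value 0.2 for images in [0, 1] is an arbitrary assumption), so its numbers will not match published tables. The function names are hypothetical.

```python
import numpy as np


def sobel_magnitude(img):
    """Sobel gradient magnitude of a 2-D grayscale image (edge-replicated borders)."""
    p = np.pad(img, 1, mode="edge")
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)


def e_and_r(original, restored, thresh=0.2):
    """Simplified e and r: 'visible edges' are pixels whose gradient exceeds thresh."""
    g_o = sobel_magnitude(original)
    g_r = sobel_magnitude(restored)
    vis_o, vis_r = g_o > thresh, g_r > thresh
    n_o, n_r = int(vis_o.sum()), int(vis_r.sum())
    # Rate of newly visible edges.
    e = (n_r - n_o) / n_o if n_o > 0 else float("nan")
    # Geometric mean of gradient ratios at the restored image's visible edges.
    ratios = g_r[vis_r] / np.maximum(g_o[vis_r], 1e-12)
    r = float(np.exp(np.mean(np.log(ratios)))) if n_r > 0 else float("nan")
    return float(e), r
```

For example, doubling the contrast of an image leaves the edge count unchanged (e = 0) and doubles every gradient (r = 2), which is why e and r are reported together: e rewards new edges, while r rewards stronger existing ones.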

According to Table 1, the e index of the proposed method is higher than those of the other algorithms, which shows that our method recovers more edge details; more edge details can be observed in Figs. 8g and 10g in particular. The e value of [19] is the lowest and even negative, indicating that this algorithm loses edge details. According to Table 2, the r index of the proposed algorithm is higher than those of the other algorithms, indicating that our method yields higher contrast. As shown in Table 3, the proposed algorithm has the highest NBIQA index among the compared algorithms, implying that its recovered sand-dust images have the highest quality. This is consistent with Figs. 8, 9, 10, 11, where our results show higher visibility. Table 4 further confirms the superiority of the proposed algorithm.

To evaluate the time complexity of each algorithm, we report the running time of each algorithm for different image sizes. As shown in Fig. 13, as the image size increases, the running time of the method in [19] grows the most, and it is also the slowest for every image size. Compared with the other algorithms, the proposed algorithm has the shortest running time for every image size, and its running time also grows the least as the image size increases. Because the proposed algorithm consists of pixel-wise operations, its processing remains simple. Therefore, the proposed algorithm has the lowest complexity and the fastest running speed among the compared methods, and it can satisfy real-time applications.

4.3 Applications of our algorithm

4.3.1 Application 1: underwater image restoration

As shown in Fig. 14, the proposed algorithm effectively removes the color shift of underwater images and enhances their details, indicating that it also works well for underwater images.

4.3.2 Application 2: haze image restoration

As shown in Fig. 15, the recovered haze images have clear details and high contrast, indicating that the proposed method also achieves reasonable results on haze images.

4.4 Limitation of study

Although the proposed algorithm can process a variety of degraded images and is effective for most sand-dust images, it fails on some heavily degraded images, as shown in Fig. 16. In Fig. 16b, the enhanced results contain large halos and noise, and some show color distortion. These failures partly stem from the images themselves: sand-dust images vary widely in severity, and some already contain substantial noise introduced during acquisition, which is difficult to remove. In addition, the denoising ability of the algorithm itself is insufficient, and for some sand-dust images the proposed color balance method cannot effectively compensate the attenuated blue channel, which leads to color distortion.

5 Conclusion

In this paper, we propose a color balance and sand-dust image enhancement method in Lab space. To correct the color of the sand-dust image, a color balance technique is proposed: first, the blue channel is compensated to reduce blue artifacts, and then the color shift is removed with a statistics-based method. Subsequently, the image is converted to Lab space, and the brightness component is decomposed by guided filtering to obtain the detail component, which is processed with a nonlinear mapping function and a gamma function. Finally, the image with the enhanced brightness component is converted back to RGB space. The method removes the color shift and restores vivid colors, clear details and high contrast. Experimental results demonstrate that the proposed algorithm outperforms several comparative algorithms in both qualitative and quantitative analysis. The algorithm has low complexity and meets real-time processing requirements, which is important for expanding the applications of computer vision. In addition, it is also suitable for underwater image and haze image restoration. However, our method introduces slight noise while enhancing details, and its denoising ability is insufficient. It could be improved by integrating a dedicated denoising method into the model, so that details are preserved while noise is significantly reduced. In future work, we will collect and expand a sand-dust dataset and apply the algorithm to sand-dust video. We also plan to develop a robust algorithm that adapts to various scenes, such as sand-dust images captured at night, and that both enhances details and suppresses noise effectively. Currently, no deep learning method has been proposed for sand-dust images, so our next step is to explore methods for synthesizing sand-dust datasets. Moreover, we expect deep learning methods to achieve better performance on sand-dust images.

References

[1] Afifi M, Price B, Cohen S, Brown MS (2019) When color constancy goes wrong: correcting improperly white-balanced images. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 1535–1544

[2] Al-Ameen Z (2016) Visibility enhancement for images captured in dusty weather via tuned tri-threshold fuzzy intensification operators. International Journal of Intelligent Systems and Applications 8(8):10–17

[3] Al-Sammaraie MF (2015) Contrast enhancement of roads images with foggy scenes based on histogram equalization. In: 2015 10th international conference on computer science & education (ICCSE), pp 95–101

[4] Ancuti CO, Ancuti C, De Vleeschouwer C, Bekaert P (2018) Color balance and fusion for underwater image enhancement. IEEE Trans Image Process 27(1):379–393

[5] Berman D, Avidan S et al (2016) Non-local image dehazing. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1674–1682

[6] Berman D, Treibitz T, Avidan S (2020) Single Image Dehazing Using Haze-Lines. IEEE Trans Pattern Anal Mach Intell 42(2):720–734

[7] Cho Y, Jeong J, Kim A (2018) Model-assisted multiband fusion for single image enhancement and applications to robot vision. IEEE Robot Autom Lett 3(4):2822–2829

[8] Dhara SK, Roy M, Sen D, Biswas PK (2020) Color cast dependent image Dehazing via adaptive Airlight refinement and non-linear color balancing. IEEE Trans Circuits Syst Video Technol 31(5):1–5

[9] Fu X, Huang Y, Zeng D et al (2014) A fusion-based enhancing approach for single sandstorm image. In: 2014 IEEE 16th international workshop on multimedia signal processing (MMSP). IEEE, pp 1–5

[10] Gao G, Lai H, Jia Z, Liu YQ, Wang YL (2020) Sand-dust image restoration based on reversing the Blue Channel prior. IEEE Photonics J 12(2):1–16

[11] Gao GX, Lai HC, Liu YQ, Wang LJ, Jia ZH (2021) Sandstorm image enhancement based on YUV space. Optik (Stuttg) 226:165659

[12] Hautière N, Tarel JP, Aubert D, Dumont É (2008) Blind contrast enhancement assessment by gradient ratioing at visible edges. Image Anal Stereol 27(2):87–95

[13] He K, Sun J, Tang X (2011) Single image haze removal using Dark Channel prior. IEEE Trans Pattern Anal Mach Intell 33(12):2341–2353

[14] He K, Sun J, Tang X (2013) Guided image filtering. IEEE Trans Pattern Anal Mach Intell 35(6):1397–1409

[15] Huo JY, Chang YL, Wang J, Wei XX (2006) Robust automatic white balance algorithm using gray color points in images. IEEE Trans Consum Electron 52(2):541–546

[16] Kim SE, Park TH, Eom IK (2020) Fast single image Dehazing using saturation based transmission map estimation. IEEE Trans Image Process 29:1985–1998

[17] Koscevic K, Subasic M, Loncaric S (2019) Attention-based convolutional neural network for computer vision color constancy. In: Int Symp image signal process anal ISPA, pp 372–377

[18] Li B, Peng X, Wang Z et al (2017) AOD-Net: All-in-One Dehazing Network. In: Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, pp 4770–4778

[19] Li M, Liu J, Yang W, Sun X, Guo Z (2018) Structure-revealing low-light image enhancement via robust Retinex model. IEEE Trans Image Process 27(6):2828–2841

[20] Ning X, Li W, Liu W (2017) A fast single image haze removal method based on human retina property. IEICE Trans Inf Syst E100(1):211–214

[21] Ning X, Gong K, Li W, Zhang L, Bai X, Tian S (2020) Feature refinement and filter network for person re-identification. IEEE Trans Circuits Syst Video Technol 31:3391–3402

[22] Ou FZ, Wang YG, Zhu G (2019) A novel blind image quality assessment method based on refined natural scene statistics. In: 2019 IEEE International Conference on Image Processing (ICIP), pp 1004–1008

[23] Peng Y-T, Cao K, Cosman PC (2018) Generalization of the Dark Channel prior for single image restoration. IEEE Trans Image Process 27(6):2856–2868

[24] Qin X, Wang Z, Bai Y et al (2020) FFA-net: feature fusion attention network for single image dehazing. In: Proceedings of the AAAI Conference on Artificial Intelligence, pp 11908–11915

[25] Rong Z, Jun WL (2014) Improved wavelet transform algorithm for single image dehazing. Optik (Stuttg) 125(13):3064–3066

[26] Shi Z, Feng Y, Zhao M, Zhang E, He L (2019) Let you see in sand dust weather: a method based on halo-reduced Dark Channel prior Dehazing for sand-dust image enhancement. IEEE Access 7:116722–116733

[27] Tai SC, Liao TW, Chang YY, Yeh CP (2012) Automatic white balance algorithm through the average equalization and threshold. In: 2012 8th international conference on information science and digital content technology (ICIDT2012) 3, pp 571–576

[28] Talebi H, Milanfar P (2016) Fast multilayer Laplacian enhancement. IEEE Trans Comput Imaging 2(4):496–509

[29] Wang A, Wang W, Liu J, Gu N (2019) AIPNet: image-to-image single image Dehazing with atmospheric illumination prior. IEEE Trans Image Process 28(1):381–393

[30] Yang X, Xu Z, Luo J (2018) Towards perceptual image dehazing by physics-based disentanglement and adversarial training. In: Proceedings of the AAAI Conference on Artificial Intelligence, pp 7485–7492

[31] Yang Y, Zhang C, Liu L, Chen G, Yue H (2020) Visibility restoration of single image captured in dust and haze weather conditions. Multidimens Syst Signal Process 31(2):619–633

[32] Yu S, Hong Z, Jing W et al (2016) Single sand-dust image restoration using information loss constraint. Opt Acta Int J Opt 63(21):2121–2130

[33] Zhang J, Tao D (2019) FAMED-net: a fast and accurate multi-scale end-to-end Dehazing network. IEEE Trans Image Process 29:72–84

[34] Zhu Q, Mai J, Shao L (2015) A fast single image haze removal algorithm using color attenuation prior. IEEE Trans Image Process 24(11):3522–3533


About this article

Cite this article

Gao, G., Lai, H., Wang, L. et al. Color balance and sand-dust image enhancement in lab space. Multimed Tools Appl 81, 15349–15365 (2022). https://doi.org/10.1007/s11042-022-12276-6


DOI: https://doi.org/10.1007/s11042-022-12276-6