Abstract

One of the difficult tasks in the field of computer vision is the classification and detection of vehicles. Researchers from all over the world are working to create autonomous vehicle detection (AVD) systems due to their numerous practical applications, including highway management and surveillance systems. Deep learning techniques, which require a lot of data for proper model training, are the current AVD trend. However, a number of vehicles are discovered in India, the second-largest nation in terms of population, that are not included in the vehicle detection datasets that are currently in use. Furthermore, India’s overcrowding makes traffic management difficult and unusual. In this research, we present a dataset for still-image-based vehicle detection that includes one class of pedestrians and 13 different types of vehicles that are seen on Indian urban and rural roads. Initially, we provide baseline results using some state-of-the-art deep learning models on this dataset. To improve the accuracy further, we present an ensemble-based object detection and classification model. The dataset consists of 4K images and 14.3K bounding boxes of various vehicles; that is, researchers are provided with appropriately annotated rectangular boxes for use with these vehicles in the future. A 16-megapixel Sony IMX519 high-resolution camera was used to take all images while travelling throughout West Bengal, an Indian state on the eastern side. Dataset can be found at: https://github.com/IRUVD/IRUVD.git.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Vehicles play a crucial role in our daily lives. The most important part of the transportation department is the traffic management system. The initial traffic control system was created in the 1910s. Through the use of computer vision techniques, traffic management systems have been evolved over time to become more intelligent and efficient. In addition to the traffic management system, a lot of data is required for other systems as well including surveillance, pollution control, autonomous vehicle detection (AVD), and vision-based vehicle parking management. Most importantly, the system needs to be reliable and accurate in order to be used in real-world AVD applications. Many datasets [16, 17] have been created in recent years for a variety of object detection purposes including vehicle detection. Autonomous vehicles locates obstacles using camera captures images from the vehicle. The range of the sensor and the size of the target must be considered when detecting vehicles [26]. It should be noted that the complexity of newer make and model vehicles’ shapes, sizes, colors, and textures is making this research field more challenging [11].

The pose and viewing angle make it difficult for a model to identify the precise object class. Additionally, it is challenging to create a generalized AVD dataset due to the numerous country or region-specific issues. The most widely used datasets like Pascal VOC2007 [10] and KITTI [13], only include five and seven vehicle classes that are frequently seen on urban roads, respectively. Only a few datasets are available that were created with Indian traffic conditions in mind. The most popular Indian vehicle dataset, known as IDD [38], is used for segmentation tasks. Images were taken in Bangalore and Hyderabad, two popular Indian cities. There are a total of 34 classes in this dataset, including 8 classes for vehicles. Other classes include the sky, roadside objects (such as walls, fences, billboards, etc.), distant objects (such as buildings and bridges), living things (such as animals and people), drivable and non-drivable objects (such as sidewalks and non-drivable fallbacks). Another Indian vehicle detection dataset is NITCAD [28], which includes 7 different vehicle classes. Images were collected throughout Kerala, a southern state of India, for this dataset. Despite the fact that auto-rickshaws are widely used in both urban and rural areas of India, NITCAD and IDD only included them in their datasets because they are frequently seen in urban areas.

However, more vehicles, including totos, cycle-rickshaws, and motor-rickshaws, are commonly found in rural areas, and those are not incorporated in any of the aforementioned datasets. Due to inadequate traffic control systems, auto-rickshaws and motorcycles frequently break traffic laws in rural areas. In congested urban areas, crosswalks are not always used by pedestrians. These elements contribute to India’s extremely difficult traffic management systems. The fact that the same kind of vehicle is used for multiple purposes in India presents another challenge for vehicle detection, and it confuses the computer vision based learning models. A motor-rickshaw, for instance, can carry both passengers and goods. This study offers a new dataset for AVD to address those problems, and an ensemble of deep-learning models is used to provide baseline results. Vehicle detection using still images is a challenging task due to various factors, such as vehicle orientation, lighting conditions, and scale variations. In the Indian context, there are several additional challenges to consider, such as non-standard vehicles, crowded scenes, and diverse driving behaviors. This article explores the challenges in vehicle detection in the Indian context and proposes solutions to address them. The first step in developing an effective vehicle detection system is to collect a standard dataset. The dataset should cover a range of scenarios, including different lighting conditions, backgrounds, and vehicle types and orientations. In the Indian context, the dataset should also include some uncommon vehicles (in other countries) such as auto-rickshaws and cycle-rickshaws. Figure 1 shows an example of a dataset that considers these factors.

One of the significant challenges in vehicle detection is the orientation of vehicles. Vehicles can have different orientations, such as front-facing, side-facing, or rear-facing, which can make it difficult to detect them using traditional methods. Additionally, variations in lighting conditions can affect the accuracy of detection. Vehicles can appear at different scales in images or videos, making it challenging to detect them using existing datasets. Furthermore, vehicles may blend into complex backgrounds, such as trees, buildings, and other vehicles, which can make it difficult to distinguish them from the surroundings. In the Indian context, traffic congestion is another significant issue. The high volume of traffic, particularly in urban areas, can make it problematic to detect vehicles accurately in crowded scenes. Moreover, in rural areas, we can observe a wide range of sometimes erratic driving behaviours, which can further complicate the detection task. Additionally, India has a diverse range of uncommon vehicles, such as auto-rickshaws and cycle-rickshaws, which can be difficult to detect if a model is trained on existing datasets.

Contributions

With the aforementioned information in mind, in this paper, we have developed the IRUVD: Indian Rural and Urban Vehicle Detection, a new still-image-based dataset for AVD. Specific contrubutions of this paper are as follows:

-

A new vehicle detection dataset that includes 13 vehicle classes and 1 pedestrian object has been introduced. Toto, cycle-rickshaw, and motor-rickshaw classes of vehicles that are frequently seen on Indian roads have been considered.

-

To make the dataset as realistic as possible, we have taken into account both urban and rural areas of India during data collection. This aids in capturing a variety of traffic scenarios, including both low-congested rural areas without a traffic system and highly congested urban areas with a well-maintained traffic system.

-

There are several challenges that are considered while preparing the current dataset, such as vehicle orientation, variations in lighting conditions, scale variation of the objects, complex backgrounds, occlusions, and different traffic diversities.

-

This dataset contains 14343 properly annotated bounding boxes for 4000 labeled images. Figure 1 displays some examples. The images were captured from various locations in West Bengal, a state in eastern India. The images were taken during the day, and the objects were in various poses. The resolution of each image is 1920 × 1080.

-

Utilizing the most up-to-date deep learning-based object detection models, we have benchmarked the IRUVD dataset using the You Only Look Once version 3 (YOLOv3), YOLO version 4 (YOLOv4), Scaled YOLO version 4 (Scaled-YOLOv3), and YOLO version 5 (YOLOv5) (YOLOv5). We have used a variety of object detection metrics, including recall, precision, F1-score, mean average precision (mAP) at intersection over union (IOU) threshold 0.5 (mAP@0.5), mAP score at IOU 0.75 (mAP@0.75), mAP scores at IOU 0.95 in steps of 0.05 (mAP@0.5:0.05:0.95), and mAP scores at IOU 0.95 (mAP@0.75:0.05:0.95).

-

On the IRUVD dataset, we have proposed both weighted and non-weighted ensemble approaches to establish the baseline results. In this case, we have observed that the non-weighted ensemble approach performs better than the weighted ensemble approach.

The rest of the paper is organized as follows. A literature review is given in Section 2. The developed dataset is presented in Section 3. In Section 4, we describe the procedure for benchmarking the dataset. To further improve detection results, we introduce an ensemble technique in Section 5. In Section 6, we tested the proposed ensemble technique on some additional datasets. Finally, in Section 7, we conclude our paper.

2 Literature survey

Researchers have created various datasets to address a variety of difficult problems in the field of computer vision. The most popular datasets for image classification, localization, and segmentation are ImageNet [6], Microsoft COCO [22], ADE20K [48], and Pascal VOC. But only Pascal VOC2007 can be used for vehicle detection tasks. The two categories of existing vehicle detection datasets are segmentation-based datasets and localization-based datasets, which are briefly discussed below.

2.1 Segmentation-based detection dataset

Segmentation implies the finding an exact outline around the object in an image. The most widely used dataset, Pascal VOC2007, has 20 main classes, but only 5 of them can be applied for developing autonomous vehicle and traffic management systems. The most well-known datasets for segmentation-based vehicle detection are Cityscapes [5], Mapillary Vistas [29], and CBCL StreetScenes [2]. The 3.5k images in CBCL StreetScenes, which have 9 classes including cars, pedestrians, bicycles, buildings, trees, sky, roads, sidewalks, and stores, were collected from urban streets in Boston, Massachusetts, in the United States. Another dataset for vehicle detection with 25k images is Vistas. With 11 different vehicle types and a total of 37 classes, the images of this dataset were collected from urban streets across 6 continents with the goal of diversifying the detection models. A segmentation-based dataset that took into account both urban and rural areas of the USA is the Berkeley Deep Drive Video dataset. The videos used to create this dataset totaled 10,000 hours. The largest vehicle dataset, BDD100K [44], was collected from New York City. Each of the 100,000 video sequences in this dataset has a duration of 40 seconds and ten different object classes. The primary driving force behind the creation of this dataset was the desire to experience various time and weather conditions, such as sunny, cloudy, and rainy. The Cityscapes segmentation-based vehicle detection dataset, which was created taking Indian traffic conditions into consideration, is the one that most closely resembles IDD. Only 8 of the 45k images’ 34 object classes are clearly identifiable as vehicle classes. However, some vehicles that are frequently seen on Indian rural roads are not taken into account in this dataset.

2.2 Localization-based detection dataset

Localization is a method for determining an object’s precise location, which is typically indicated by a rectangular box. For the sole purpose of detecting pedestrians, datasets like INRIA [7], Daimler Pedestrian [27], TudBrussels [43], and Citypersons dataset [45] were created. However, most objects encountered on the road must be able to be detected by AVD systems. A 3D car classification dataset with 207 car categories was introduced by Krause et al. [20]. The most well-known vehicle detection dataset, known as KITTI, was developed using images from Karlsruhe, Germany, and eight different vehicle classes. The KITTI dataset has 200k 3D bounding boxes and 15k images with 8 different object classes. The most recent Iranian vehicle detection dataset, LRVD [19], contains 110k images and 5 classes. BIT dataset [8] is another vehicle classification dataset that consists of 6 classes - bus, SUV, minivan, truck, microbus, and Sedan. It has more than 9.8k images which were taken day and night times from a camera installed on the highway. The limitation of this dataset is that it only contains a front view of every vehicle which is not preferable in a real-life scenario. NITCAD is a stereo vision-based autonomous navigation dataset, which was collected from Kerala, India. It has 7.5k distorted images. The main motive to develop this dataset was to provide more information about Indian roads. However, it only mentions one new class than other datasets, i.e., auto-rickshaw. Other vehicles like the toto, cycle-rickshaw, and motor-rickshaw seen on Indian roads were not taken into account when these datasets were made. In most cases, the object detection model predicts the presence of multiple objects within a single bounding box. The most accurate bounding boxes can be determined using either Non-maximum Suppression (NMS) or Soft Non-maximum Suppression (Soft-NMS).

2.2.1 Non-maximum suppression

Every detection model calculates the object’s location using a bounding box or anchor box, the box’s class, and the prediction percentage’s level of confidence. A filtering technique called the NMS is used to get rid of overlapping bounding boxes. IOU is a metric that is typically calculated for each object class to determine the maximum amount of box overlap. The highest IOU box is the only one left for the final prediction. However, this method eliminates partially obscured objects of the same class, which is undesirable. Hence, this type of technique is used in the training process of a detection model.

2.2.2 Soft non-maximum suppression

To predict the most ideal bounding box from multiple bounding boxes, Bodla et al. [4] developed soft-NMS. In contrast to NMS, soft-NMS assigns the score based on the IOU value. In this method, a very low confidence score is given if the IOU value is high, which raises the detection model’s training accuracy. Soft-NMS techniques are not very good at ensembling, though.

To develop an intelligent traffic control system in the Indian context, the above dataset may not be useful because of the lack of information about the vehicles like toto, cycle-rickshaw, motor-rickshaw, and tempo which are frequently seen on Indian roads. To this end, we have created a dataset with 14 classes that include a few special vehicle classes that, to the best of our knowledge, are not included in any other datasets. Since both urban and rural roads were taken into consideration, this dataset effectively captures both structured and unstructured traffic scenarios. This is a crucial component of developing nations like India’s traffic management system. Table 1 compares the widely used datasets with the one we have created. YOLOv3, YOLOv4, Scaled-YOLOv4, and YOLOv5 are the four most recent deep learning-based object detection models used to benchmark the results on this dataset. Additionally, weighted and non-weighted ensembles of these deep learning models are employed to boost the detection accuracy. The ensemble technique, which is primarily used in the machine learning field, combines the decisions of multiple predictive models to make the final prediction that is more accurate than those made by the base models. It has the following main advantages,

-

One of the main benefits of using the ensemble technique is that it improves the performance of the average accuracy of any present member.

-

It reduces the bias-variance trade-off of the contributing members.

-

It improves the robustness of the models.

3 Developed object detection dataset

Data collection and annotation process, quality and statistics of the data, and comparison with other vehicle detection datasets are presented in this section.

3.1 Data collection

Due to the lack of structure in the traffic management system, 11% of all fatalities worldwide occur in India [15]. In order to create more reliable systems that can be applied in the real world, we have created a still-image-based AVD dataset that takes into account the unique characteristics of Indian roads and vehicles. We have gathered information from various locations and at various times of the day to adequately represent the variety of traffic conditions. The traffic control system is more organized and effectively managed in urban areas. However, in rural areas, some vehicles and pedestrians disobey traffic laws, which leads to a high number of traffic accidents. When gathering the data, different perspectives, including the front, side, and back views of the same object, were taken into account. As the images were captured from different angles, the size of the objects varies a lot. We made use of a 16MP Sony IMX519 high-resolution camera to take all of these pictures. To create the database, we watched 1080p footage at 60 frames per second for more than 5 hours (during various times). Some samples images are already shown in Fig. 1.

3.2 Annotation process

A non-iconic image is one that contains multiple objects, which makes it more difficult for researchers to identify objects accurately in such images. To accurately assess the performance of any existing or newly developed methods, the data annotations must be flawless. The majority of the dataset’s images are not iconic in any way. Although the annotation process may be prone to errors, we must make sure that they are kept to a minimum. We struggled to obtain the correct bounding boxes for each object when it was obscured by another. Objects may be regarded as noise because of their small size and light particle illumination. Figure 2 displays a challenging annotation example. Because the object in this example is too small, we have not considered it to be an object. Using the open-source program LabelIMG [36], all of the images have annotations.

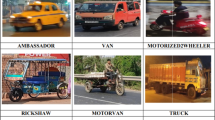

3.3 Statistics of the dataset

Because they are the most varied and common on Indian roadways, we selected 13 vehicle categories and one class for pedestrians from the photographs we collected. They are toto, bike, cyclist, auto-rickshaw, motor-rickshaw, van, tempo, car, bus, taxi, truck, jeep, cycle-rickshaw, and pedestrian. A sample of each category found in the developed dataset is shown in Fig. 3. We took into account every vehicle that was included in datasets like KITTI and NITCAD. Additionally, six new vehicle classes have been added: tempo, taxi, motor-rickshaw, jeep, toto, and cycle-rickshaw. We have annotated 4000 images using the aforementioned process, and a total of 14343 bounding boxes have been labeled manually. Each image in our dataset has an average of 3.58 boxes. Figure 4 shows the frequency of each object. From this figure, it is clear that there are a maximum number of pedestrians and a minimum number of cycle-rickshaws in the dataset.

3.4 Quality of annotation

Thanks to the open-source annotation software LabelImg [36], which made it easier to prepare most accurate annotations. We have annotated the images using this software in accordance with the YOLO format. As seen in Fig. 2, typical situations like occlusion and the small size of the objects, among others, lead to errors in the annotation process. To lessen the uncertainty of the bounding boxes and class labels, we have carefully examined the results.

3.5 Comparison with other vehicle detection datasets

For AVD, a number of datasets have been made available, including CBCL StreetScenes, KITTI, Dataset by Yu P. et al., BDD100k, Mapillary Vistas, IDD, NITCAD, and others. There are mainly two types of datasets: segmentation based datasets and localization based datasets. The dataset developed under the current work can be applied to localization. Our dataset is more comparable to the NITCAD dataset because we have concentrated on Indian vehicle detection and classification. As seen in Table 1, our dataset has 14 classes while NITCAD has only 7 classes. The current dataset has less object classes than datasets like IDD and Mapillary Vistas, but it has more vehicles because it adds new classes like tempo, taxi, motor-rickshaw, jeep, toto, and cycle-rickshaw.

4 Dataset benchmarking

Results of experimentation carried out under the current work are reported in this section. For the performance evaluation, a total of 11 methods have been applied on the IRUVD dataset.

4.1 Deep learning models used to benchmark

Several object detection models, including YOLOv3 [31], YOLOv4 [3], Scaled-YOLOv4 [40], YOLOv5 [18], YOLOX [12], YOLOR [41], YOLOv6 [21] and YOLOv7 [42] etc. have been introduced in recent years. In the current work, we have considered four state-of-the-art object detection models: YOLOv3, YOLOv4, Scaled-YOLOv4 and YOLOv5. The four models are discussed below.

4.1.1 YOLOv3

Joseph Redmon and Ali Farhadi’s [31] YOLOv3 algorithm is the one of the most widely-used object detection techniques in the field of computer vision. As shown in Fig. 5, the detection process is divided into three steps: feature extraction, bounding box prediction, and class prediction. The Darknet-53 method, used by YOLOv3, for feature extraction consists of 53 convolutional layers. In three different levels, YOLOv3 anticipates the boxes to determine the precise size of the object, or prior. K-means clustering is employed to estimate the box prior. Softmax does not discard overlapping boxes, which is useful for detection in more complicated domains like AVD, so it was used in YOLOv3. Figure 5 displays a typical YOLOv3 architecture.

4.1.2 YOLOv4

Bochkovskiy et al. [3] introduced YOLOv4 as a successor of YOLOv3 to better speed and accuracy in terms of mAP [@0.5:0.05:0.95] and mAP [@0.5]. YOLOv4 consists of four sub-blocks, namely, Backbone, Neck, Dense Prediction block, and Sparse Prediction block as shown in Fig. 6. A typical object detection model takes images as input and finds the features through the convolution layer. For this purpose authors of YOLOv4 used SpineNet [9], CSPResNext50 [39], CSPDarknet53 [39], EfficientNet-B3 [34], VGG16 [33] Backbone for the feature extractor. In this paper, we have used pre-trained CSPDarknet53 on ImageNet as the Backbone. Extracted feature maps are mixed in the Neck region to find more generalized characteristics of the objects. YOLOv4 was examined in a few Neck configurations like Feature Pyramid Network (FPN) [23], Path Aggregation Network (PANet) [24], Neural Architecture Search Feature Pyramid Network (NAS-FPN) [14], Bi-directional Feature Pyramid Network (BiFPN) [35], Adaptively Spatial Feature Fusion (ASFF) [25], Scaled-wise Feature Aggregation Module (SFAM) [47]. PANet is the most commonly used neck structure in YOLOv4. Generally, head sections refer to the Dense Prediction block and the Sparse Prediction block. The Sparse Prediction block is used for two-stage detection, while the Dense Prediction block is used for one-stage detection. The location and class are both estimated during one stage of the detection process. Two-stage detection separates each object’s locations and classes. The YOLO head has been used in this essay. The terms “Bag of freebies” and “Bag of specials” are two new techniques introduced in YOLOv4 that improve the model and boost performance. The authors noted that Rectified Linear Unit (ReLU) activation functions did not effectively optimize the features in YOLOv4. The Mish activation function was consequently used for improved performance. The YOLOv4 architecture is demonstrated in Fig. 6.

4.1.3 Scaled-YOLOv4

Bochkovskiy et al. [40] suggested the Scaled-YOLOv4 to detect both small and large objects using the same model. Scaled-YOLOv4 includes CSPDarknet53, CSPUp sampling block, and CSPDown sampling block, as demonstrated in Fig. 7. In addition to the detection bandwidth, both large and small models can use up and down-sampling without sacrificing speed or accuracy. This approach led to the development of two models, tiny-YOLOv4 and large-YOLOv4, which offered cutting-edge outcomes for both small and large object detection models in terms of mAP scores. Scaled-YOLOv4 has a backbone in the form of CSPDarknet53. To lessen the computation at the model’s neck, the PAN architecture on Scaled-YOLOv4 employs the CSP-ize technique. By using this technique, the computation is slashed by about 40%. The neck also makes use of CSPSSP. It is clear that YOLOv4’s training processes heavily rely on data augmentation. In the scaled YOLOv4, the model is only fine-tuned by adding training data after the training is complete. Additionally, it aids in accelerating the training process.

4.1.4 YOLOv5

Glenn Jocher et al. [18] came up with the idea for YOLOv5. It has three main sub-blocks, including Backbone, PANet Head, and Output block, like other YOLO models, as shown in Fig. 8. BottleNeckCSP is used to organize YOLOv5’s backbone into Darknet53, also known as CSPDarknet53. For feature extraction and feature dimension reduction, a large-scale backbone like CSPDarknet53 works best. This improves the model’s detection speed and accuracy. A PANet is present in the model’s second component. The model’s information flow from the backbone to the head is improved by PANet neck. A bottom-up method is used to pass information through the feature pyramid, allowing the model to recognize more low-level features. The model propagates the features to other layers with the aid of skip connections. Last but not least, the YOLO head is used by the YOLOv5’s head or output section. Similar to YOLOv3, YOLOv5 forecasts the output in three distinct scales, namely (72 × 72,36 × 36,and 18 × 18), enabling the model to recognize objects of various sizes. Four distinct models based on trainable parameters are available in YOLOv5.

-

YOLOv5s (small)

-

YOLOv5m (medium)

-

YOLOv5l (large)

-

YOLOv5x (extra-large)

In this paper, we have used YOLOv5s to benchmark the results on the IRUVD dataset.

4.2 Object detection benchmarks

As mentioned earlier, we have reported object detection benchmarks using state-of-the-art deep learning models. Class-wise mAP scores from various detection models at an IOU threshold of 0.5 to 0.95 in steps of 0.05 are displayed in Table 2. For the evaluation of each model, we have used a 3-fold cross-validation scheme in order to get more accurate results. This table clearly shows that the detection models classify toto, truck, bus, and motor-rickshaw with greater accuracy. However, we have found that the accuracy of object detection is lower for cars, taxis, bicycles, and pedestrians. Since the shapes of a car and a taxi are almost identical but their colors differ, the object detection models occasionally fail to classify them properly. In Tables 3 and 4 we have shown the class-specific mAP at IOUs of 0.5 and 0.75. The average precision, recall, F1-score, and mAP score at IOU thresholds of 0.5, 0.75, and 0.5 to 0.95 in steps of 0.05 are displayed in Table 5. YOLOv5s yields the highest score for precision. In contrast, Scaled-YOLOv4 yields the highest rating for recall. The Scaled-YOLOv4 model yields the highest overall ratings.

4.3 Confusion matrix and miss rate

The confusion matrix for the YOLOv5s model on the current AVD dataset is shown in Fig. 9. As can be seen, every object has been detected almost perfectly. However, because to their similar shapes, as already indicated, taxis are sometimes mistaken as cars. Following are a few erroneous classifications between some classes:

-

Car and Taxi

-

Cyclist and Pedestrian

-

Toto and Auto-rickshaw

-

Cycle-rickshaw and Cyclist

-

Cyclist and Motor-Bike

We have shown the total number of the true positive and false positive objects detected by each model. We have presented class-wise log average miss rate for each model to see how the model works. Figure 10(a) shows the total number of true positive and false positive objects detected by the YOLOv3 model for each class, whereas (b) shows the log average miss rate of the YOLOv3 model for each class. Figures 11, 12 and 13 show the similar results produced by Scaled-YOLOv4, YOLOv5 and YOLOv4 models, respectively.

4.4 Precision vs. recall curve

Precision measures the percentage of true positives, whereas recall measures the percentage of false negatives. Low false negative rates indicate a high recall value, and high true positive rates indicate a high precision value. A perfect model needs to be highly accurate and highly reliable. The precision-recall trade-off, on the other hand, states that as precision increases, recall decreases, and vice versa. For each of the 14 classes, the precision vs. recall curve is displayed in Fig. 14. This graph shows that we have achieved encouraging results for the bus, cycle-rickshaw, toto, van, and motor-rickshaw. We have received subpar results for some classes, including auto-rickshaw, pedestrian, taxi, cyclist and jeep, and this indicates a precision-recall trade-off.

5 Ensemble techniques

The proposed ensemble method, which is actually a box estimation technique used to predict the precise bounding box from the N number of bounding boxes provided by the same number of detection algorithms, has been discussed in this section. Even though a single object detection model locates objects fairly accurately, it occasionally classifies the background as an object or becomes confused with objects that have a similar shape. We have suggested an ensemble technique, which is described below, to address this problem.

-

Consider the use of N detection models to create the aforementioned ensemble. At a specific IOU threshold T, each model provides a prediction score for the detected object. In our situation, we have established the limit T >= 0.5. The box that the Nth model returns is designated as BN.

-

Each box includes the object’s height and width as well as the class prediction, prediction confidence, and center coordinates (x,y). The algorithms count how many models classified an object as belonging to the same class for a given object out of N predictions for that class. The class that the majority of models select is the actual class of the object. Now, the true class is estimated using the prediction confidence scores if the same number of models correctly predict an object that belongs to multiple classes. The model with the highest confidence score is considered to have provided the final class for an object. For instance, if four models are used for assembly and three of them identify an object as a car and one as a taxi, our suggested method will label the object as a car. The method considers an object to be a car if two models predict it to be a car and the other two models predict it to be a taxi, but the first two models have the highest confidence scores. False predictions can be decreased by using this method.

-

Our method does not remove overlapping anchor boxes like NMS and Soft-NMS. However, it estimates new anchor box from N number of boxes obtained from different detection models. We propose a technique to estimate the box using a weighted method or a non-weighted method. Let us consider that N number of detection models gives N number of predictions for a particular object as [C1,S1,x1,y1,w1,h1],.............,[CN,SN,xN,yN,wN,hN], where CN = class predicted by Nth model, SN = confidence score given by Nth model, xN = x coordinate given by Nth model, yN = y coordinate given by Nth model, wN = width given by Nth model, hN = height given by Nth model. Then parameters are estimated using (1)—(7). The weighted method takes P1,P2,......,PN as inputs, where P are the parameter which we want to estimate, i.e., x coordinate, y coordinate, width, height, etc., and N is the number of models used in the ensemble technique. On the other hand, P1,P2,......,PN,W1,W2,.......,WN are used as inputs for the non-weighted method, where P is x coordinate, y coordinate, width, height, and WN is the confidence score of Nth model. We have considered the confidence score as the weight in weighted methods. To estimate the parameters, we have used seven different popular functions that are described below:

-

Non-weighted methods:

-

1.

Mean:

$$ f(P_{1},P_{2},......,P_{N}) = \frac{{\sum}_{i=1}^{N} P_{i}}{N} $$(1) -

2.

Harmonic Mean (HM):

$$ f(P_{1},P_{2},......,P_{N}) = \frac{N}{{\sum}_{i=1}^{N}\frac{1}{P_{i}}} $$(2) -

3.

Contraharmonic Mean (CM):

$$ f(P_{1},P_{2},......,P_{N}) = \frac{{\sum}_{i=1}^{N} {P_{N}}^{2}}{{\sum}_{i=1}^{N} P_{N}} $$(3) -

4.

Root Mean Square (RMS):

$$ f(P_{1},P_{2},......,P_{N}) = \sqrt{\frac{1}{N}\sum\limits_{i=1}^{N} {P_{N}}^{2}} $$(4)

-

1.

-

Weighted methods:

-

1.

Weighted Mean (WM):

$$ f(P_{1},.,P_{N},W_{1},.,W_{N}) = \frac{{\sum}_{i=1}^{N} W_{i} P_{i}}{{\sum}_{i=1}^{N} W_{i}} $$(5) -

2.

Weighted Harmonic Mean (WHM):

$$ f(P_{1},..,P_{N},W_{1},..,W_{N}) = \frac{{\sum}_{i=1}^{N} W_{i}}{{\sum}_{i=1}^{N} {\frac{W_{i}}{P_{i}}}} $$(6) -

3.

Weighted Geometric Mean (WGM):

$$ f(P_{1},..,P_{N},W_{1},..,W_{N}) = \frac{{\sum}_{i=1}^{N} {P_{N}}^{2}}{{\sum}_{i=1}^{N} P_{N}} $$(7)

-

1.

Using (1)–(7), all the said parameters are estimated for the newly obtained bounding boxes. An illustration of our ensemble architecture is shown in Fig. 15. Tables 6 and 7 show results of an ensemble of different models using various methods in terms of mAP at IOU threshold 0.5, mAP at IOU threshold 0.75, mAP at IOU threshold ranging from 0.5 to 0.95 in steps of 0.05. We have combined two and more models to form different ensembles. From Table 6, it can be seen that the combination of YOLOv3 and YOLOv4 using the Mean method achieves 1% improvement on mAP@[0.5:0.05:0.95] than YOLOv4 and 8.8% than YOLOv3. Popular techniques like NMS and soft-NMS achieve only 3.8% more than YOLOv3, but 5% less than YOLOv4. Ensemble of YOLOv4 and Scaled-YOLOv4 using the Mean method gives an 83.6% mAP@[0.5:0.05:0.95] which is 7.1% more than YOLOv4 and 2.2% more than Scaled-YOLOv5. Other methods give similar performance, however, NMS and soft-NMS give less mAP than Scaled-YOLOv4 because of the unnecessary elimination of overlapping boxes. Fusion of YOLOv4 and YOLOv5 provides an 82.3% of mAP@[0.5:0.05:0.95], which is 1.5% higher than Scaled-YOLOv4 and 4.7% higher than YOLOv5s. When we have used an ensemble of YOLOv5 and Scaled-YOLOv4 models, NMS and S-NMS display better results than other methods, which is a 5% improvement than YOLOv5 and 1.9% improvement than the Scaled-YOLOv4. Not only two models, but more than two models are also used to form the ensemble, as shown in Table 7. Combinations of YOLOv3, YOLOv4, Scaled-YOLOv4 and YOLOv3, YOLOv4, YOLOv5 provide better results than a single model but do not provide better results than the combination of two models. However, an ensemble of YOLOv4, Scaled-YOLOv4, and YOLOv5 models using the Mean method shows higher performance than all other models. An illustration of a bar plot of mAP@[0.5:0.05:0.95] by the ensemble of different models using different methods for each class is shown in Fig. 16. A number of false positives, true positives and log average miss rate of each class given by the ensemble of YOLOv4, Scaled-YOLOv4, and YOLOv5 using the Mean method are shown in Fig. 17. In Fig. 18, a comparison of class-wise mAP at different thresholds from 0.5 to 0.95 is presented. We have used YOLOv3, YOLOv4, Scaled-YOLOv4, and YOLOv5, an ensemble of YOLOv4, Scaled-YOLOv4 using Mean method and ensemble of YOLOv4, Scaled-YOLOv4, YOLOv5 using Mean method. From Fig. 18 it can be clearly seen that YOLOv3 has the worst performance, whether as the ensemble of YOLOv4, Scaled-YOLOv4, YOLOv5 using the Mean method outperforms other models.

6 Experimentation on other datasets

In this paper, we have presented a dataset for vehicle detection on Indian roads and benchmarked the results using four state-of-the-art deep learning-based detection models. We have also proposed an ensemble technique for the improvement of the benchmark results on the developed IRUVD dataset. For better understanding of how a model trained on existing datasets fails to detect a vehicle in the complex situation on Indian roads, we have tested the YOLOv5 model trained on two datasets, namely Udacity Self-Driving-Car [37] and Otonomarc [32]. Comparative results on the IRUVD dataset are shown in Table 8. In terms of precision, recall, F1-score, and mAP@0.5, the model trained on the proposed dataset outperforms the models trained on the Udacity Self-Driving-Car and Otonomarc datasets. We have analysed predictions of the YOLOv5 model trained on the Udacity Self-Driving-Car, Otonomarc, and IRUVD datasets, as shown in Fig. 19. From Fig. 19, it is clear that the model trained on IRUVD gives the best result, whereas the model trained on the Udacity Self-Driving-Car model shows the worst performance.

We have also evaluated our proposed ensemble of YOLOv4 and YOLOv5 models on Udacity Self-Driving-Car and Otonomarc datasets, and we have obtained 31.05% and 13.29% mAP@0.5 improvements, respectively. Results of this testing are shown in Table 9. From the table, it is observed that in terms of mAP@0.5:0.05:0.95, the proposed ensemble method outperforms the base models, thereby ensuring the effectiveness of the ensemble method for the problem under consideration.

7 Conclusion and future scope

Autonomous vehicles, intelligent traffic management systems, and other technologies have largely taken over our daily lives. Traffic management is becoming more and more challenging as vehicles proliferate, especially in crowded nations like India. Nowadays, deep learning models are primarily used by researchers to build an effective AVD system, which requires a large amount of data. Several datasets that are freely available for this purpose have been found in the literature. However, the majority of them only take into account the traffic patterns and vehicles that are frequently seen on urban roads, making them less useful for creating a comprehensive traffic management system. To this end, in this paper, we have developed a 14-class IRUVD dataset. Many vehicle classes that are frequently seen in rural areas were not taken into account in past datasets. New vehicle classes like the toto, motor-rickshaw, tempo, taxi, and cycle-rickshaw have been included in the dataset. With 14343 popper annotations, we have 4000 high-quality images to offer. Four state-of-the-art deep learning-based object detection models, namely YOLOv3, YOLOv4, Scaled-YOLOv4, and YOLOv5, have been used to benchmark the results on the said dataset. Additionally, we have suggested weighted and non-weighted ensemble techniques that improve mAP@[0.5:0.05:0.95] by 1.9%. Despite our best efforts, some classes like cycle-rickshaw, jeep, taxi, bus, etc. have a very small number of samples. This may result in overfitting of deep learning models. Hence to address this, we may employ several types of data augmentation techniques in future. However, in our research, we have observed that current models operate efficiently without applying any data augmentation techniques because the YOLO models use the focal loss for training, which can deal with imbalance data. [46]. On the other hand ensemble approaches surpass existing models in terms of the performance metrics under consideration. Another gap in our dataset is that we have not consider varied weather conditions such as wet, hazy, or overcast days, as well as the time of day such as evening or night. We would like to resolve these constraints in our future attempts. Our dataset can be expanded by collecting data from new types such as animals, signboards, and so on. It is necessary to conduct research on the development of unique architectures capable of detecting and classifying in a wide range of settings, including numerous edge cases. Our another future plan is to build a video dataset in Indian context for AVD purposes.

Data Availability

The developed dataset can be found at the GitHub repository: https://github.com/IRUVD/IRUVD.git.

References

Bhattacharyya A, Bhattacharya A, Maity S, Singh P, Sarkar R (2023) Juvdsi v1: developing and benchmarking a new still image database in Indian scenario for automatic vehicle detection. Multimed Tools Appl 1–33

Bileschi SM, Wolf L (2006) Cbcl streetscenes. Technical report

Bochkovskiy A, Wang C-Y, Liao H-YM (2020) Yolov4: optimal speed and accuracy of object detection. arXiv:2004.10934

Bodla N, Singh B, Chellappa R, Davis LS (2017) Improving object detection with one line of code. arXiv:1704.04503

Cordts M, Omran M, Ramos S, Rehfeld T, Enzweiler M, Benenson R, Franke U, Roth S, Schiele B (2016) The cityscapes dataset for semantic urban scene understanding. arXiv:1604.01685

Deng J, Dong W, Socher R, Li L-J, Li K, Li F-F (2009) Imagenet: a large-scale hierarchical image database. In: 2009 IEEE conference on computer vision and pattern recognition, pp 248–255

Dollár P, Wojek C, Schiele B, Perona P (2009) Pedestrian detection: a benchmark. In: 2009 IEEE conference on computer vision and pattern recognition. IEEE, pp 304–311

Dong Z, Wu Y, Pei M, Jia Y (2015) Vehicle type classification using a semisupervised convolutional neural network. IEEE Trans Intell Transp Syst 16(4):2247–2256

Du X, Lin T-Y, Jin P, Ghiasi G, Tan M, Cui Y, Le QV, Song X (2019) Spinenet: learning scale-permuted backbone for recognition and localization. arXiv:1912.05027

Everingham M, Van Gool L, Williams CKI, Winn J, Zisserman A (2010) The pascal visual object classes (voc) challenge. Int J Comput Vis 88(2):303–338

Ershad S F (2013) Developing feature representation and respected innovative database collecting algorithm for texture analysis 11

Ge Z, Liu S, Wang F, Li Z, Sun J (2021) Yolox: exceeding yolo series in 2021. arXiv:2107.08430

Geiger A, Lenz P, Urtasun R (2012) Are we ready for autonomous driving? The kitti vision benchmark suite. In: 2012 IEEE conference on computer vision and pattern recognition. IEEE, pp 3354–3361

Ghiasi G, Lin T-Y, Pang R, Le QV (2019) NAS-FPN: learning scalable feature pyramid architecture for object detection. arXiv:1904.07392

India tops the world with 11% of global death in road accidents: World bank report. shorturl.at/goEXZ, February 2021

Jian M, Qi Q, Dong J, Yin Y, Lam K-M (2018) Integrating qdwd with pattern distinctness and local contrast for underwater saliency detection. J Vis Commun Image Represent 53:31–41

Jian M, Qi Q, Yu H, Dong J, Cui C, Nie X, Zhang H, Yin Y, Lam K-M (2019) The extended marine underwater environment database and baseline evaluations. Appl Soft Comput 80:425–437

Jocher G, Stoken A, Borovec J, NanoCode012, Chaurasia A, TaoXie, Liu C., Abhiram V, Laughing, tkianai, yxNONG, Hogan A, Mammana L, AlexWang1900, Hajek J, Diaconu L, Marc Y, Kwon O, Wanghaoyang0106, Defretin Y, Lohia A, ml5ah, Milanko B, Fineran B, Khromov D, Ding Y, Doug D, Ingham F (2021) ultralytics/yolov5: v6.0 - YOLOv5n ‘Nano’ models, Roboflow integration, TensorFlow export, OpenCV DNN support

Khosravi H, Gholamalinejad H (2020) Irvd: a large-scale dataset for classification of iranian vehicles in urban streets 06

Krause J, Stark M, Deng J, Li F-F (2013) 3d object representations for fine-grained categorization. In: 2013 IEEE International conference on computer vision workshops, pp 554–561

Li C, Li L, Jiang H, Weng K, Geng Y, Li L, Ke Z, Li Q, Cheng M, Nie W et al (2022) Yolov6: a single-stage object detection framework for industrial applications. arXiv:2209.02976

Lin T-Y, Maire M, Belongie SJ, Bourdev LD, Girshick RB, Hays J, Perona P, Ramanan D, Dollár P, Zitnick CL (2014) Microsoft COCO: common objects in context. arXiv:1405.0312

Lin T-Yi, Dollár P, Girshick RB, He K, Hariharan B, Belongie SJ (2016) Feature pyramid networks for object detection. arXiv:1612.03144

Liu S, Lu Q, Qin H, Shi J, Jia J (2018) Path aggregation network for instance segmentation. In: 2018 IEEE/CVF conference on computer vision and pattern recognition, pp 8759–8768

Liu S, Di H, Wang Y (2019) Learning spatial fusion for single-shot object detection. arXiv:1911.09516

Maity S, Bhattacharyya A, Singh P, Kumar M, Sarkar R (2022) Last decade in vehicle detection and classification: a comprehensive survey. Arch Comput Methods Eng 1–38

Munder S, Gavrila DM (2006) An experimental study on pedestrian classification. IEEE Trans Pattern Anal Mach Intell 28(11):1863–1868

Namburi S, Joseph A, Umamaheswaran S, Priyanka C h, Malavika M, Sankaran P (2020) Nitcad—developing an object detection, classification and stereo vision dataset for autonomous navigation in indian roads. Procedia Comput Sci 171:207–216 (01)

Neuhold G, Ollmann T, Bulò SR, Kontschieder P (2017) The mapillary vistas dataset for semantic understanding of street scenes. In: 2017 IEEE International conference on computer vision (ICCV), pp 5000–5009

Peng Y, Jin JS, Luo S, Min X, Cui Y (2012) Vehicle type classification using pca with self-clustering. In: 2012 IEEE International conference on multimedia and expo workshops. IEEE, pp 384–389

Redmon J, Farhadi A (2018) Yolov3: an incremental improvement. arXiv:1804.02767

Sener E, Sebatli-Saglam A, Cavdur F (2021) Otonom-paylaşımlı araç yönetim sistemi. J Polytechnic

Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition

Tan M, Le QV (2019) Efficientnet: rethinking model scaling for convolutional neural networks. arXiv:1905.11946

Tan M, Pang R, Le QV (2019) Efficientdet: scalable and efficient object detection. arXiv:1911.09070

Tzutalin (2015) Labelimg. git code. https://github.com/tzutalin/labelImg

Udacity self driving car (2018). https://github.com/udacity/self-driving-car

Varma G, Subramanian A, Namboodiri AM, Chandraker M, Jawahar CV (2018) IDD: a dataset for exploring problems of autonomous navigation in unconstrained environments. arXiv:1811.10200

Wang C-Y, Liao H-Y M, Yeh I-H, Wu Y-H, Chen P-Y, Hsieh J-W (2019) Cspnet: a new backbone that can enhance learning capability of CNN. arXiv:1911.11929

Wang C-Y, Bochkovskiy A, Liao H-Y (2020) Scaled-yolov4: scaling cross stage partial network. arXiv:2011.08036

Wang C-Y, Yeh I-H, Liao H-YM (2021) You only learn one representation: unified network for multiple tasks. arXiv:2105.04206

Wang C-Y, Bochkovskiy A, Liao H-YM (2022) Yolov7: trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv:2207.02696

Wojek C, Walk S, Schiele B (2009) Multi-cue onboard pedestrian detection. In: 2009 IEEE conference on computer vision and pattern recognition, pp 794–801

Yu F, Xian W, Chen Y, Liu F, Liao M, Madhavan V, Darrell T (2018) BDD100K: a diverse driving video database with scalable annotation tooling. arXiv:1805.04687

Zhang S, Benenson R, Schiele B (2017) Citypersons: a diverse dataset for pedestrian detection

Zhang L, Zhang C, Quan S, Xiao H, Kuang G, Li L (2020) A class imbalance loss for imbalanced object recognition. IEEE J Sel Top Appl Earth Obs Remote Sens 13:2778–2792

Zhao Q, Sheng T, Wang Y, Tang Z, Chen Y, Cai L, Ling H (2018) M2det: a single-shot object detector based on multi-level feature pyramid network. arXiv:1811.04533

Zhou B, Zhao H, Puig X, Fidler S, Barriuso A, Torralba A (2016) Semantic understanding of scenes through the ADE20k dataset. arXiv:1608.05442

Acknowledgements

A part of this work is funded by DST (ICPS) CPS-Individual/2018/403(G).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Conflict of interests

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ali, A., Sarkar, R. & Das, D.K. IRUVD: a new still-image based dataset for automatic vehicle detection. Multimed Tools Appl 83, 6755–6781 (2024). https://doi.org/10.1007/s11042-023-15365-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-023-15365-2