Abstract

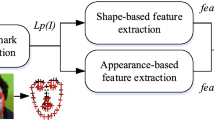

Face recognition remains a critical and active research topic because of its contribution to security. Many descriptors, the key features used for face discrimination, have been proposed, and new ones continue to appear. This article presents a novel and highly discriminative descriptor that maintains high recognition performance even under heavy noise and varying illumination and expression. The image is transformed into a graph, and the feature set is extracted from the resulting graph rather than directly from the image pixels, as is done conventionally. First, the adjacency matrix is created by considering the pixels’ adjacencies and their intensity values. Subsequently, a weighted, directed graph is formed whose vertices denote the pixels and whose edges denote the adjacencies between them; the edge weights express the intensity differences between adjacent pixels. Finally, information is extracted that indicates the importance of each vertex in the graph, and thus of each pixel in the entire image, and this information forms the feature set of the face image. Extensive simulations show that the proposed graph-based descriptor achieves remarkable and competitive recognition accuracy compared with state-of-the-art face recognition methods, even under extreme conditions such as heavy noise and variable expression and illumination.


1 Introduction

Facial data is one of the leading biometrics because of its high discrimination performance and because it can be collected in real time through devices such as cameras, without physical contact, human intervention, or disturbance to the subject [4, 10, 27]. Autonomous facial recognition, in other words facial recognition by machine, remains a field of great research interest due to its difficulties and wide application areas. These application areas include surveillance, human-computer interaction, and commercial and legal identification. The most common challenges that make the process extremely difficult are variations in facial expression, pose, and lighting, and the presence of noise [5, 45, 53, 54, 59].

Since the variability of the number of classes in object recognition is low, the possibility of traditional object recognition methods being successful in face recognition is questionable. That is because in facial recognition, each time a new individual is added to the knowledgebase, the number of classes increases accordingly. Therefore, facial recognition is a multi-class classification problem. Thus, the proposed facial recognition methods should be compatible with this uncertain and variable situation [30].

Although face detection, face alignment, face representation, and face matching are the four main steps of an ordinary face recognition system, face representation and face matching are the most critical. Face images captured in unconstrained environments are often exposed to effects such as noise, occlusions, variation in expressions, and illumination, which degrades the recognition performance seriously by reducing the similarity between the images of the same person while causing the images of different individuals to look similar [34].

Due to the importance of face representation, most completed and ongoing studies focus on this step. Face representation methods are generally divided into holistic and local feature-based approaches [17, 61]. Holistic approaches examine the entire image and consider holistic features, which state the general characteristics of the face [6]. Gray-level co-occurrence matrices (GLCM) [18, 19], principal component analysis (PCA) [50, 52], linear discriminant analysis (LDA) [3], and independent component analysis (ICA) [8] are the most prominent representatives of the holistic branch and have inspired many subsequent studies [20, 52].

In contrast to holistic approaches, local descriptor-based methods focus on knowledge discovery through local patterns in the image. Thus, they are more resistant to difficulties such as changes in facial expression and illumination [7]. Numerous local descriptors have been proposed, including Local Tetra Patterns (LTetP) [41], Monogenic Binary Coding (MBC) [56], Local Monotonic Pattern (LMP) [39], Local Ternary Pattern (LTP) [48], Local Derivative Pattern (LDrvP) [60], Local Directional Pattern (LDP) [25], Local Transitional Pattern (LTrP) [24], Local Phase Quantization (LPQ) [55], Weber Local Descriptor (WLD) [32], Local Gradient Pattern (LGP) [22], Median Binary Pattern (MBP) [16], and Local Arc Pattern (LAP) [23]. Among the local methods, Texton Learning [29, 51], Local Binary Pattern (LBP) [1], Gabor wavelets [38, 57], and Radon transformations [26] are the most prominent.

1.1 Contributions of this study

Graphs are well-known structures with a long history. Besides mathematics, they have been applied in the vast majority of science and engineering areas. In addition, there are many ways of applying graph theory in image processing and computer vision [31]. As noted above, the key component of face recognition is face representation. To qualify as high quality, a face representation must provide high discrimination even in challenging, unconstrained environments.

The contribution of this study can be expressed as follows:

- This study proposes a novel graph-based face descriptor, which preserves its discrimination capability even under high noise, varying facial expressions, and illumination exposure situations.

- The proposed graph-based descriptor shows very high discriminative performance and competes with the other face descriptors. Moreover, even under high noise exposure, changing facial expression, and illumination, the proposed descriptor maintains its discriminatory performance, while that of the others decreases significantly, sometimes collapsing entirely.

1.2 Article outline

The rest of the article is organized as follows. Section 2 describes the proposed descriptor in detail, while Section 3 presents the experimental results and discussions conducted on five primary face datasets. Finally, Section 4 concludes the article.

2 Proposed method

Graph theory is based on mathematics and is applied in many different fields, providing a rich set of powerful abstract techniques that allow the modeling of various natural and human problems, from biology to sociology, and the mathematical examination of systems of interacting elements [11, 40, 62].

A graph provides an abstract representation of the elements of a set and the paired relationships between these elements. Elements of the set are represented by vertices, and the relationships between these elements are denoted by edges.

2.1 Notation

A graph is denoted by G = (V, E), where V and E refer to the sets of vertices and edges, respectively. If there is a relationship between two members (vi, vj ∈ V) of the set, this relationship is depicted by an edge (eij ∈ E). Vertices connected to each other by one or more edges are said to be adjacent.

Depending on the application field of the graph, edges or vertices can be assigned numerical values, which are called weights. Weights can be assigned to vertices only (vertex-weighted graph), to edges only (edge-weighted graph), or to both (double-weighted graph) [46].

The weight of a vertex vi is denoted by w(vi), whereas the weight of an edge eij between two vertices vi and vj is stated as w(vi, vj) or wij. For a graph to be unweighted, the weights of all vertices and edges should be equal to unity, that is, G = (V, E) is unweighted if w(vi) = 1 for ∀vi ∈ V and wij = 1 for ∀eij ∈ E.

An edge is said to be oriented if it consists of an ordered pair of vertices (vi, vj), and it is called directed if w(vi, vj) ≠ w(vj, vi), that is, wij ≠ wji. Accordingly, a graph is directed if ∃eij ∈ E such that eij is directed.

In the proposed method, the graph that results from transforming the image is weighted and directed. A pixel that is not on the border of the image has eight neighbors, so the corresponding vertex has eight incident edges. Hence, the degree of such a vertex is eight, that is, d(vi) = 8, where vi = g(I(pxy)), 1 < x < nr and 1 < y < nc, and nr, nc denote the number of rows and columns of the image, respectively. Border pixels have fewer neighbors: d(vi) = 5 for a non-corner pixel on the border (x ∈ {1, nr} or y ∈ {1, nc}) and d(vi) = 3 for a corner pixel. The transformation of a pixel p(x, y) of the image I of size nr × nc to a vertex v of the graph G is denoted by g(·).
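The neighbor counts for interior, border, and corner pixels can be checked with a few lines of Python. This is only an illustrative sketch: the function name `neighbor_count` and the 0-based coordinates are assumptions made here, not part of the original method description.

```python
def neighbor_count(x, y, n_rows, n_cols):
    """Count the 8-connected neighbors of pixel (x, y), using 0-based indices."""
    count = 0
    for dx in (-1, 0, 1):
        for dy in (-1, 0, 1):
            if (dx, dy) == (0, 0):
                continue  # a pixel is not its own neighbor (no loops)
            if 0 <= x + dx < n_rows and 0 <= y + dy < n_cols:
                count += 1
    return count


# Interior, border (non-corner), and corner pixels of a 5 x 5 image.
interior = neighbor_count(2, 2, 5, 5)
border = neighbor_count(0, 2, 5, 5)
corner = neighbor_count(0, 0, 5, 5)
```

Counting neighbors by bounds checking avoids hard-coding a separate case for each pixel position.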

2.2 Graph and matrix representation

Graphs can be represented in various ways in computer systems. In addition, the data structures used to describe graphs strongly affect processing complexity in terms of time and space. Matrices and lists are the two main data structures used for this purpose. In the matrix representation, on which the most common basic graph operations and methods are based, several matrix types can be used, such as adjacency matrices, Laplacian matrices, incidence matrices, and construction matrices.

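For a graph G = (V, E), where ∣V∣ denotes the total number of vertices in the graph, the adjacency matrix A is of size ∣V∣ × ∣V∣. The components of the adjacency matrix are described as follows [31]:

$$A_{ij} = \begin{cases} w_{ij}, & \text{if } e_{ij} \in E \\ 0, & \text{otherwise} \end{cases}$$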

Inherently, wij = 1 for an unweighted graph.

In the proposed method, there is no loop on the graph representation of the image because a pixel has no relationship with itself. Thus, the elements on the diagonal of the adjacency matrix are all equal to zero, that is Aij = 0 if i = j.

Figure 1 depicts a sample sub-image, its graph transformation, and the adjacency matrix of the formed directed-weighted graph.

In Fig. 1a, a sample sub-image is shown. Each box consists of two triangles. The left triangle includes the index number of the corresponding pixel, which is also the vertex number in the graph, while the right triangle contains the pixel’s intensity value. When determining the weights of the edges on the graph, the pixels’ immediate neighborhood and intensity values are considered. Therefore, there is an edge connection from the vertex representing each pixel only to the vertices representing its adjacent neighboring pixels. The direction of an edge is always from the vertex that refers to the lower-intensity pixel to the vertex representing the higher-intensity pixel, and its weight is equal to the difference between the intensity values of those pixels. With I(pi) denoting the intensity of the pixel represented by vertex vi, the weight of the edge from vi to an adjacent vertex vj is formulated as follows:

$$w_{ij} = \begin{cases} I(p_j) - I(p_i), & \text{if } I(p_j) > I(p_i) \\ \varepsilon, & \text{if } I(p_j) = I(p_i) \\ 0, & \text{otherwise} \end{cases}$$

where ε denotes a small value chosen in the interval (0, 1) to avoid the ambiguity that arises when two adjacent pixels have equal intensity values, in which case the weight of the edge connecting their vertices would be zero. A zero edge weight would state that these pixels are not directly connected. However, there is a connection between these pixels, which should be represented by an edge with a very small nonzero weight.

As seen in Fig. 1b, the adjacency matrix of the image is a typical hollow matrix [14] because all the elements on the diagonal are 0 due to the absence of loops. In addition, since the intensity-difference relationship is expressed in only one direction, the adjacency matrix is non-symmetric.
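The construction described in this section can be sketched in plain Python as follows. Several details are assumptions made for illustration only: the function name `image_to_adjacency`, the row-major vertex numbering, the value `eps=0.5`, the choice to place an ε-weighted edge in both directions between equal-intensity neighbors, and the 2 × 2 sample image, which is hypothetical and is not the sub-image of Fig. 1.

```python
def image_to_adjacency(image, eps=0.5):
    """Build the |V| x |V| adjacency matrix of a grayscale image (list of rows).

    Assumption: pixel (x, y) maps to vertex index x * n_cols + y (row-major).
    """
    n_rows, n_cols = len(image), len(image[0])
    n = n_rows * n_cols
    adj = [[0.0] * n for _ in range(n)]
    for x in range(n_rows):
        for y in range(n_cols):
            i = x * n_cols + y
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    u, v = x + dx, y + dy
                    if (dx, dy) == (0, 0):
                        continue  # no loops, so the diagonal stays zero
                    if not (0 <= u < n_rows and 0 <= v < n_cols):
                        continue  # neighbor falls outside the image
                    j = u * n_cols + v
                    diff = image[u][v] - image[x][y]
                    if diff > 0:
                        adj[i][j] = float(diff)  # edge from darker i to brighter j
                    elif diff == 0:
                        adj[i][j] = eps  # equal intensities: small nonzero weight
    return adj


# Hypothetical 2 x 2 sub-image; vertices 0..3 in row-major order.
sample = [[10, 20],
          [20, 40]]
A = image_to_adjacency(sample)
```

For this sample, the only edges leaving vertex 0 point to its three brighter neighbors, and vertex 3, the brightest pixel, has no outgoing edges, so the matrix is hollow and non-symmetric.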

Pixels are partitioned into nine groups, as illustrated in Fig. 2, regarding their positions on the image. Since pixels in different groups have different adjacencies, treating each pixel the same is not appropriate.

The flow diagram of the image-to-adjacency matrix index-transformation process is illustrated in Fig. 3.

2.3 Centrality analysis

Centrality analysis, which has a wide application area from communication networks to social networks, is used in this study to identify the importance of the edges and vertices of a graph. Several centrality metrics have been proposed; the most popular and best known are described below [13, 28, 33, 58].

Degree centrality: the count of adjacencies of a vertex on an undirected graph, while for directed graphs, it has two branches, in-degree, and out-degree centrality.

Closeness centrality: the measure of how close a vertex is to all other vertices on an undirected graph. A vertex with a lower total sum of distances is more important because the other vertices lie closer to it.

Outcloseness centrality: the total-sum-of-distances to all other reachable nodes from the specified vertex on a directed graph.

Incloseness centrality: the total-sum-of-distances from other nodes that can reach the specified vertex on a directed graph.

Stress centrality: the count of shortest paths passing over the vertex on a directed graph. If a plurality of shortest paths contains a particular vertex, this indicates the importance of that vertex in the network.

Betweenness centrality: a shortest-paths-based metric applied on both directed and undirected graphs. It is the normalized version of the stress centrality and states a vertex’s control over the rest of the network.

Page-rank centrality: developed at Google and applied to the World Wide Web to assign ranks to websites and pages. Page ranking can be applied to both directed and undirected graphs.

Laplacian centrality: gives an idea about the effect of deactivating a node from a graph [42, 44].
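The two directed closeness variants defined above can be illustrated with a small standard-library sketch. The function names and the scaling (A/(N−1))² / C, where A is the number of reachable vertices and C is the total sum of distances, are assumptions chosen here so that larger scores mean "closer"; the original text only specifies the total sum of distances.

```python
import heapq


def shortest_distances(adj, source):
    """Dijkstra on a dense adjacency matrix; adj[i][j] > 0 is an edge i -> j."""
    n = len(adj)
    dist = [float("inf")] * n
    dist[source] = 0.0
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale queue entry
        for v in range(n):
            w = adj[u][v]
            if w > 0 and d + w < dist[v]:
                dist[v] = d + w
                heapq.heappush(heap, (dist[v], v))
    return dist


def out_closeness(adj):
    """Closeness based on distances from each vertex to the vertices it can reach."""
    n = len(adj)
    scores = []
    for i in range(n):
        dist = shortest_distances(adj, i)
        reachable = [d for j, d in enumerate(dist) if j != i and d < float("inf")]
        if not reachable:
            scores.append(0.0)  # vertex reaches nothing
        else:
            scores.append((len(reachable) / (n - 1)) ** 2 / sum(reachable))
    return scores


def in_closeness(adj):
    """Closeness based on distances to each vertex: out-closeness on the reversed graph."""
    transposed = [list(col) for col in zip(*adj)]
    return out_closeness(transposed)


# Directed chain 0 -> 1 -> 2 with edge weights 1 and 2.
chain = [[0, 1, 0],
         [0, 0, 2],
         [0, 0, 0]]
occ = out_closeness(chain)
icc = in_closeness(chain)
```

Computing incloseness on the transposed matrix reuses the same shortest-path routine, since reversing every edge turns "distances to a vertex" into "distances from a vertex".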

In the proposed method, since the generated graph is directed, the page-rank, incloseness, and outcloseness measures are used to analyze the importance of each pixel in the image. Table 1 presents the page-rank, outcloseness, and incloseness ranking results for the graph given in Fig. 1c.
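As an illustration of how a page-rank score could be obtained from a weighted adjacency matrix, the following sketch uses plain power iteration. The damping factor 0.85, the use of edge weights as transition weights, and the uniform redistribution of rank from vertices without outgoing edges are common conventions assumed here; the article does not state which variant or parameters were used.

```python
def pagerank(adj, damping=0.85, tol=1e-10, max_iter=200):
    """Weighted PageRank by power iteration on a dense adjacency matrix."""
    n = len(adj)
    out_weight = [sum(row) for row in adj]
    rank = [1.0 / n] * n
    for _ in range(max_iter):
        # Rank held by vertices with no outgoing edges is spread uniformly.
        dangling = sum(rank[i] for i in range(n) if out_weight[i] == 0)
        new_rank = []
        for j in range(n):
            incoming = sum(rank[i] * adj[i][j] / out_weight[i]
                           for i in range(n) if out_weight[i] > 0)
            new_rank.append((1 - damping) / n + damping * (incoming + dangling / n))
        change = sum(abs(a - b) for a, b in zip(new_rank, rank))
        rank = new_rank
        if change < tol:
            break
    return rank


# Directed chain 0 -> 1 -> 2: rank accumulates toward the end of the chain.
chain = [[0, 1, 0],
         [0, 0, 2],
         [0, 0, 0]]
prc = pagerank(chain)
```

On an image graph, where edges point from darker to brighter pixels, this convention would tend to assign higher rank to locally bright pixels.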

3 Simulation results and discussions

The performance of the proposed descriptor is analyzed by conducting comprehensive simulations on five popular datasets: CAS-PEAL-R1 [12], EXTENDED YALE B [15], FACES95 [47], ORL [49], and JAFFE [36, 37].

The CAS-PEAL-R1 dataset is a subset of CAS-PEAL that contains 30,863 grayscale images of 1040 subjects, organized in folders dedicated to issues such as aging, expression, accessories, and illumination. Among these, the folders dedicated to illumination and expression (5 images per subject) are considered during the simulations. The resolution of each image is 360 × 480. The sample images shown in Fig. 4 illustrate how difficult it is to distinguish the subjects.

The EXTENDED YALE B dataset contains 16,352 images of 28 individuals with a resolution of 360 × 480, exposed to 9 different poses and 64 different lightings. Although images in the EXTENDED YALE B dataset do not include any expression variation, they are exposed to significant posture and illumination changes. Figure 5 presents sample images from the EXTENDED YALE B dataset.

The FACES95 database contains 1440 color face images with a resolution of 180 × 200, belonging to 72 individuals (male and female subjects). The images of the same subject show significant head (scale) and expression variations. Besides, considerable lighting changes occur on the faces due to the artificial lighting arrangement. Figure 6 shows sample images from the FACES95 dataset, which include pose, expression, and lighting changes that reduce the discrimination performance of any face recognition method.

The ORL dataset includes 400 images of size 112 × 92 belonging to 40 individuals. The images were taken at different times under varying illumination and facial expressions. Figure 7 shows sample images from the ORL dataset.

The Japanese Female Facial Expression (JAFFE) dataset contains 213 images having a resolution of 256×256 of 7 facial expressions of 10 Japanese models. Each person gave three or four examples for each of their seven facial expressions (happiness, sadness, surprise, anger, disgust, fear, and neutral) [9]. Figure 8 provides sample images from the JAFFE dataset.

The recognition performance of the proposed descriptor is examined under two headings. First, the behavior of the proposed descriptor under varying pose, expression, and illumination is detailed. The noise resistance of the proposed descriptor is then analyzed.

3.1 Varying pose, expression, and illumination resistance analysis

All the datasets above comprise face images with varying poses, expressions, and illumination. These effects make it considerably harder to discriminate between individuals. The KNN (K-Nearest-Neighbor) algorithm is used for classification.
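A minimal sketch of KNN classification over descriptor vectors is given below. The Euclidean distance, the default `k=1`, the function name `knn_predict`, and the toy feature vectors are all assumptions for illustration; the article does not report the value of k or the distance measure used.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=1):
    """Return the majority label among the k training vectors nearest to query."""
    distances = sorted(
        (math.dist(features, query), label)
        for features, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Toy two-dimensional "descriptors" for two hypothetical subjects.
train = [[0.0, 0.0], [0.1, 0.0], [1.0, 1.0], [0.9, 1.0]]
labels = ["subject_a", "subject_a", "subject_b", "subject_b"]
predicted = knn_predict(train, labels, [0.2, 0.1], k=3)
```

In a face recognition setting, each training vector would be the descriptor computed from one enrolled image, and the query would be the descriptor of the probe image.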

The Lighting folder of the CAS-PEAL-R1 dataset contains 2097 images, nine for each of the 233 subjects. The images include extreme illumination variations, as illustrated in Fig. 9.

Recognition accuracy results of the proposed descriptor and other state-of-the-art descriptors under varying illumination conditions are presented in Fig. 10.

For the proposed descriptor, the page-rank (PRC), outcloseness (OCC), and incloseness (ICC) centralities are considered during the creation of the feature set. As seen in Fig. 10, the feature set that is the concatenation of the three centrality values (PRC + OCC + ICC) shows remarkable performance and significantly outperforms the other state-of-the-art methods. Even under the challenging varying illumination effect, PRC + OCC + ICC achieves a recognition rate of 87%. The recognition performance of all the other methods except LMP decreases severely, which reveals their weakness against lighting variations.

Another dataset containing images exposed to extreme illumination variations is the EXTENDED YALE B. Although the images in this dataset also include pose variations, this dataset’s performance analysis is discussed in this section. Figure 11 depicts sample images that are exposed to illumination from different directions.

As presented in Fig. 12, the proposed descriptor and the other methods perform better than they do on the ORL dataset, because every subject has 574 images, which makes training more effective than on the ORL dataset, which contains nine images per individual. Notably, the proposed descriptor, consisting of PRC + OCC + ICC values, fully distinguishes individuals even under a wide range of lighting exposure.

Pose and expression variations are other essential factors that significantly affect recognition performance. A series of simulations is performed on the FACES95 and JAFFE databases and on the expression folder of CAS-PEAL-R1 to analyze the robustness of the proposed and other descriptors against pose and expression variations. Figures 13, 14 and 15 illustrate the recognition accuracy results, which show how the descriptors respond to expression and pose variations.

When Figs. 13, 14 and 15 are analyzed, it is seen that PRC + OCC + ICC performs consistently well on all datasets. The other consistently high-performing method, not surprisingly, is Gabor, which is well established in the literature and has inspired many subsequent studies. PRC + OCC + ICC achieves recognition rates of 76%, 92%, and 93% on the FACES95, JAFFE, and CAS-PEAL-R1 datasets, respectively, whereas Gabor achieves 83%, 80%, and 90%. The other methods show fluctuating performance across the datasets, indicating that they are inconsistent against pose and expression changes.

3.2 Noise resistance analysis

Another essential consideration in the performance analysis of a face descriptor is how well it resists a demanding factor such as noise without any enhancement or filtering. Therefore, the response of the proposed method to noise is examined by applying two types of artificially produced noise, Gaussian and salt-and-pepper, to each image in the dataset.

First, the salt-pepper-noise resistance of the proposed descriptor is examined in detail. Images may sometimes be subject to impulse noise during acquisition, transfer, or recording. Impulse noise is generally classified as Random-Valued Impulse Noise (RVIN) and Fixed-Valued Impulse Noise (FVIN). These two noise models differ in how the intensity value changes in the noisy pixels. In the FVIN model, each pixel exposed to noise takes a value of 0 or 255, which means that the pixel is black or white. The modeling of FVIN is generally done as follows:

$$x'_{ij} = \begin{cases} 0, & \text{with probability } p/2 \\ 255, & \text{with probability } p/2 \\ x_{ij}, & \text{with probability } 1 - p \end{cases}$$

where xij, x′ij, and p denote the original pixel intensity value at image coordinate (i, j), the noisy pixel intensity value at the same coordinate, and the noise density, respectively [21].

Two types of models have been proposed for RVIN. In the first of these models [43], a noisy pixel can take one of the values in a fixed interval of length m rather than two fixed values, as in FVIN. This model is called Fixed Range Impulse Noise (FRIN) and is formulated as follows:

The second proposition [43] for RVIN is called General Fixed-Valued Impulse Noise (GFN) or Multi-Valued Impulse Noise (MVIN) and is formulated as follows:

Each image in the expression folder of the CAS-PEAL-R1 dataset is artificially exposed to salt-pepper noise, and the recognition accuracy is analyzed on these noisy images. The amount of salt-pepper noise applied to the images is defined by the noise density parameter d. Hence, the total number of pixels that are exposed to noise is defined as:

$$N_{np} = d \times r \times c$$

where Nnp, d, r, and c refer to the number of noisy pixels, the noise density, the number of rows, and the number of columns, respectively. Figures 16 and 17 depict two sample images of an individual and their noisy versions with respect to increasing salt-pepper-noise density.
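The way a given noise density translates into a number of corrupted pixels can be sketched as follows. The function name `add_salt_pepper`, the optional `seed` argument, and the decision to corrupt exactly round(d · r · c) distinct pixels with an equal chance of 0 or 255 are assumptions made for illustration rather than the exact procedure used in the simulations.

```python
import random


def add_salt_pepper(image, density, seed=None):
    """Return a copy of a grayscale image with salt-and-pepper noise applied."""
    rng = random.Random(seed)
    n_rows, n_cols = len(image), len(image[0])
    n_noisy = round(density * n_rows * n_cols)  # N_np = d * r * c
    noisy = [row[:] for row in image]  # leave the input image untouched
    for pos in rng.sample(range(n_rows * n_cols), n_noisy):
        x, y = divmod(pos, n_cols)
        noisy[x][y] = rng.choice((0, 255))  # pepper (black) or salt (white)
    return noisy


# A flat 10 x 10 mid-gray image corrupted at density 0.2 (20 pixels).
clean = [[128] * 10 for _ in range(10)]
noisy = add_salt_pepper(clean, 0.2, seed=1)
changed = sum(noisy[x][y] != clean[x][y] for x in range(10) for y in range(10))
```

Sampling positions without replacement guarantees that the number of corrupted pixels matches the requested density exactly, which a per-pixel coin flip would only do on average.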

Fig. 17 Second sample image of the same individual given in Fig. 16 from the expression folder of the CAS-PEAL-R1 dataset and its salt-pepper-noisy versions

Figures 18 and 19 demonstrate the histograms of the proposed descriptor belonging to the non-noisy images and their noisy versions of the individual shown in Figs. 16 and 17.

Fig. 18 The histograms of the proposed descriptor calculated for the sample non-noisy image and its salt-pepper-noisy versions given in Fig. 16

Fig. 19 The histograms of the proposed descriptor calculated for the sample non-noisy image and its salt-pepper-noisy versions given in Fig. 17

Figure 20 shows the recognition accuracy of the proposed and other methods with respect to increasing noise density. As seen, the proposed descriptor preserves its discrimination ability even under heavy noise, whereas the performance of the other descriptors drops toward zero.

In the second step of the noise-resistance analysis, Gaussian noise is considered. Two predominant noise sources during digital image acquisition are the stochastic nature of photon counting in detectors and the internal electronic fluctuations of the acquisition devices [35]. This most common noise from the image acquisition system can generally be modeled as Gaussian random noise [2]. Gaussian noise is statistical noise with a probability density function (PDF) equal to that of the normal distribution, also known as the Gaussian distribution, named after Carl Friedrich Gauss. In other words, the locations of the pixels exposed to the noise and the scattering of the values are subject to the Gaussian distribution. The PDF of a Gaussian random variable is formulated as follows:

$$p(z) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(z - \mu)^2}{2\sigma^2}\right)$$

where z, μ, σ denote the grey-level, mean value, and standard deviation, respectively.
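The Gaussian model can be made concrete with a short sketch that evaluates the PDF and adds zero-mean Gaussian noise to an image. The function names, the `seed` argument, the variance being expressed on the 0 to 255 intensity scale, and the rounding and clipping of noisy values to that range are assumptions for illustration; the article does not specify these implementation details.

```python
import math
import random


def gaussian_pdf(z, mu=0.0, sigma=1.0):
    """Probability density of a Gaussian random variable at grey level z."""
    return math.exp(-((z - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))


def add_gaussian_noise(image, variance, mean=0.0, seed=None):
    """Return a copy of a grayscale image with additive Gaussian noise."""
    rng = random.Random(seed)
    sigma = math.sqrt(variance)
    return [
        # Round to an integer grey level and clip to the valid range [0, 255].
        [min(255, max(0, round(pixel + rng.gauss(mean, sigma)))) for pixel in row]
        for row in image
    ]


# A flat 8 x 8 mid-gray image with noise of variance 400 (sigma = 20).
clean = [[128] * 8 for _ in range(8)]
noisy = add_gaussian_noise(clean, 400.0, seed=7)
```

Unlike salt-and-pepper noise, every pixel is perturbed here, with small deviations far more likely than large ones.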

Figures 21 and 22 depict two sample images of an individual and their noisy versions for increasing Gaussian variance (σ²); the corresponding histograms of the proposed descriptor are given in Figs. 23 and 24.

Fig. 22 Second sample image of the same individual given in Fig. 21 from the expression folder of the CAS-PEAL-R1 dataset and its Gaussian-noisy versions

Fig. 23 The histograms of the proposed descriptor calculated for the sample non-noisy image and its Gaussian-noisy versions given in Fig. 21

Fig. 24 The histograms of the proposed descriptor calculated for the sample non-noisy image and its Gaussian-noisy versions given in Fig. 22

Figure 25 shows the recognition accuracy of the proposed and other methods for increasing Gaussian variance (σ²). As seen, the proposed descriptor preserves its discrimination ability even under heavy noise, whereas the performance of the other descriptors quickly drops toward zero.

4 Conclusion

Graph theory has been applied in many fields of engineering and science, and image processing is also a potential application field. However, to the best of our knowledge, graph theory has not been exploited in facial recognition. This article proposes a face descriptor that is based on graph theory. First, adjacency matrices are formed from the face images. Then, from the adjacency matrices, the corresponding directed graphs of the images are created, in which each vertex denotes a pixel. Graph-based centrality measures are then calculated, which express the importance of each vertex (pixel in the image) on the graphs. Comprehensive simulations and analyses are performed under varying conditions that make the discrimination process difficult. Even under very high illumination variations and noise, the proposed graph-based descriptor maintains its recognition performance, which demonstrates its robustness to the effects encountered in unconstrained environments.

This field seems promising for future studies, in which we aim to apply more features of graph theory to face recognition.

Data availability

Data sharing does not apply to this article as no datasets were generated or analyzed during the current study.

References

Ahonen T, Hadid A, Pietikainen M (2004) “Face recognition with local binary patterns”, Proceedings of the 8th European Conference on Computer Vision, Prague, Czech Republic, pp. 469–481

Barbu T (2013) Variational image Denoising approach with diffusion porous media flow. Abstr Appl Anal 2013:1–8. https://doi.org/10.1155/2013/856876

Belhumeur P, Hespanha J, Kriegman D (1997) Eigenfaces vs. Fisher-faces: recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell 19(7):711–720

Cevik N, Cevik T (2019) DLGBD: a directional local gradient-based descriptor for face recognition. Multimed Tools Appl 78:15909–15928

Cevik T, Cevik N (2019) RIMFRA: rotation-invariant multi-spectral face retrieval approach by using orthogonal polynomials. Multimed Tools Appl 78(18):26537–26567

Cevik N, Cevik T (2019) “A novel high-performance holistic descriptor for face retrieval”, Pattern Anal Appl, https://doi.org/10.1007/s10044-019-00803-5, pp. 1–13

Cevik N, Cevik T, Gurhanli A (2019) Novel multispectral face descriptor using orthogonal Walsh codes. IET Image Process 13(7):1097–1104

Comon P (1994) Independent component analysis—a new concept? Signal Process 36:287–314

Deng H, Zhu J, Lyu MR, King I (2007) “Two-stage multi-class AdaBoost for facial expression recognition”, proceedings of the international joint conference on neural networks, Orlando, Florida, USA

Dubey SR (2017) “Local directional relation pattern for unconstrained and robust face retrieval”, arXiv:1709.09518 [cs.CV]

Dwyer DB, Harrison BJ, Yücel M, Whittle S, Zalesky A, Pantelis C, Fornito A, Allen NB (2016) “Adolescent Cognitive Control”, In: Stress: Concepts, Cognition, Emotion, and Behavior, pp. 177–185, Elsevier

Gao W, Cao B, Shan S, Chen X, Zhou D, Zhang X, Zhao D (2008) The CAS-PEAL large-scale Chinese face database and baseline evaluations. IEEE Trans Syst Man Cybern (Part A) 38(1):149–161

Gao C, Wei DJ, Hu Y, Mahadevan S, Deng Y (2013) A modified evidential methodology of identifying influential nodes in weighted networks. Physica A 392:5490–5500

Gentle JE (2007) “Matrix algebra: theory, computations, and applications in statistics”, Springer-Verlag

Georghiades A, Belhumeur P, Kriegman D (2001) “From few to many: illumination cone models for face recognition under variable lighting and pose”, PAMI

Hafiane A, Seetharaman G, Zavidovique B (2007) Median Binary Pattern for Textures Classification. In: Proceedings of the International Conference on Image Analysis and Recognition, Lecture Notes in Computer Science, vol. 4633, pp. 387–398. Springer, Berlin, Heidelberg

Handbook of Face Recognition (2005) S.Z. Li and A. K. Jain eds. Springer Verlag

Haralick RM (1979) Statistical and structural approach to texture. Proc IEEE 67(5):786–804

Haralick RM, Shanmugam K, Dinstein I (1973) Textural features for image classification. IEEE Trans Syst Man Cybern 3:610–621

He X, Yan S, Hu Y et al (2005) Face recognition using laplacian faces. IEEE Trans Pattern Anal Mach Intell 27(3):328–340

Hosseini H, Marvasti F (2013) Fast restoration of natural images corrupted by high-density impulse noise. EURASIP J Image Vid Process 15:1–7

Islam MS (2014) Local gradient pattern-a novel feature representation for facial expression recognition. J AI Data Min 2:33–38

Islam MS, Auwatanamongkol S (2014) Facial expression recognition using local arc pattern. Trends Appl Sci Res 9:113–120

Jabid T, Chae OS (2011) “Local transitional pattern: a robust facial image descriptor for automatic facial expression recognition”, Proceedings of the International Conference on Computer Convergence Technology, Seoul, Korea, pp. 333–44

Jabid T, Kabir MH, Chae OS (2010) “Local directional pattern (LDP) for face recognition,” Proceedings of the IEEE Int Conf Consum Electron, pp. 329–330

Jafari-Khouzani K, Soltanian-Zadeh H (2005) Radon transform orientation estimation for rotation invariant texture analysis. IEEE Trans Pattern Anal Mach Intell 27(6):1004–1008

Jafri R, Arabnia HR (2009) A survey of face recognition techniques. J Inf Process Syst 5(2):41–68

Kepner J, Gilbert J (2011) Graph algorithms in the language of linear algebra, Society for Industrial and Applied Mathematics, Philadelphia, PA, USA

Lazebnik S, Schmid C, Ponce J (2005) A sparse texture representation using local affine regions. IEEE Trans Pattern Anal Mach Intell 27(8):1265–1278

Lei Z, Pietikainen M, Li SZ (2014) Learning discriminant face descriptor. IEEE Trans Pattern Anal Mach Intell 36(2):289–302

Lezoray O, Grady L (2017) “Image processing and analysis with graphs: theory and practice”, 1st edition, CRC Press

Liu S, Zhang Y, Liu K (2014) “Facial expression recognition under partial occlusion based on Weber Local Descriptor histogram and decision fusion”, Proceedings of the 33rd Chinese Control Conference (CCC), Nanjing, China, pp. 4664–4668

Liu J, Xiong Q, Shi W, Shi X, Wang K (2016) Evaluating the importance of nodes in complex networks. Physica A 452:209–219

Lu J, Liong VE, Zhou X, Zhou J (2015) Learning compact binary face descriptor for face recognition. IEEE Trans Pattern Anal Mach Intell 37(10):2041–2056

Luisier F (2011) Image Denoising in mixed Poisson–Gaussian noise. IEEE Trans Image Process 20(3):696–708

Lyons MJ, Akamatsu S, Kamachi M, Gyoba J (1998) “Coding Facial Expressions with Gabor Wavelets”, In Proceedings of the 3rd IEEE International Conference on Automatic Face and Gesture Recognition, pp. 200–205, Nara, Japan

Lyons MJ, Budynek J, Akamatsu S (1999) Automatic classification of single facial images. IEEE Trans Pattern Anal Mach Intell 21(12):1357–1362

Melendez J, Garcia MA, Puig D (2008) Efficient distance-based per-pixel texture classification with Gabor wavelet filters. Pattern Anal Applic 11(3):365–372

Mohammad T, Ali ML (2011) “Robust facial expression recognition based on local monotonic pattern (LMP)”, Proceedings of the 14th International Conference on Computer and Information Technology (ICCIT), IEEE, Dhaka, Bangladesh, pp. 572–576

Mulders PC, van Eijndhoven PF, Beckmann CF (2016) “Identifying Large-Scale Neural Networks Using fMRI”, In: Systems Neuroscience in Depression, pp. 209–237, Elsevier

Murala S, Maheshwari RP, Balasubramanian R (2012) Local tetra patterns: a new feature descriptor for content-based image retrieval. IEEE Trans Image Process 21(5):2874–2886

Newman M (2010) Networks—an introduction, Oxford University Press

Ng PE, Ma KK (2006) A switching median filter with boundary discriminative noise detection for extremely corrupted images. IEEE Trans Image Process 15(6):1506–1516

Qi X, Fuller E, Wu Q, Wu Y, Zhang CQ (2012) Laplacian centrality: a new centrality measure for weighted networks. Inf Sci 194:240–253

Saeidi N, Karshenas H, Mohammadi HM (2019) Single sample face recognition using multicross pattern and learning discriminative binary features. J Appl Secur Res 14(2):169–190

Skiena S (1990) “Implementing discrete mathematics: combinatorics and graph theory with Mathematica. Reading”, MA: Addison-Wesley

Spacek DL (2008) Computer vision science research projects, online, www.essex.ac.uk/mv/allfaces/faces95.html

Tan X, Triggs B (2010) Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans Image Process 19(6):1635–1650

The database of faces: Cambridge University Computer Laboratory, online, (2008)

Turk MA, Pentland AP (1991) Eigenfaces for recognition. J Cogn Neurosci 3(1):71–86

Varma M, Zisserman A (2009) A statistical approach to material classification using image patch exemplars. IEEE Trans Pattern Anal Mach Intell 31(11):2032–2047

Wang X, Tang X (2004) A unified framework for subspace face recognition. IEEE Trans Pattern Anal Mach Intell 26(9):1222–1228

Wang Z, Miao Z, Jonathan Wu QM, Wan Y, Tang Z (2014) Low-resolution face recognition: a review. Vis Comput 30(4):359–386

Xu Z, Jiang Y, Wang Y, Zhou Y, Li W, Liao Q (2019) Local polynomial contrast binary patterns for face recognition. Neurocomputing 355:1–2

Yang S, Bhanu B (2011) “Facial expression recognition using emotion avatar image”, In Proceedings of the IEEE Conference on Automatic Face and Gesture Recognition, Santa Barbara, USA, pp. 866–871

Yang M, Zhang L, Shiu SCK, Zhang D (2012) Monogenic binary coding: an efficient local feature extraction approach to face recognition. IEEE Trans Inf Forensic Secur 7(6):1738–1751

Yin QB, Kim JN (2008) Rotation-invariant texture classification using circular Gabor wavelets based local and global features. Chin J Electron 17(4):646–648

Yu H, Liu Z, Li YJ (2013) Key nodes in complex networks identified by multi-attribute decision-making method. Acta Phys Sin 62:020204

Yu YF, Dai DQ, Ren CH, Huang KK (2017) Discriminative multi-layer illumination-robust feature extraction for face recognition. Pattern Recogn 67:201–212

Zhang B, Gao Y, Zhao S, Liu J (2010) “Local Derivative Pattern Versus Local Binary Pattern: Face Recognition with High-Order Local Pattern Descriptor”, IEEE Trans Image Process, vol. 19, no. 2

Zhao W, Chellappa R, Phillips P, Rosenfeld A (2003) Face recognition: a literature survey. ACM Comput Surv 35:399–458

Zoppis I, Mauri G, Dondi R (2019) “Kernel Machines: Introduction”, In: Encyclopedia of Bioinformatics and Computational Biology, pp. 495–502, Elsevier BV

Funding

No funding was received.


Ethics declarations

Conflict of interest

The authors declare no conflict of interest.


About this article

Cite this article

Cevik, T., Cevik, N. & Zontul, M. A local-holistic graph-based descriptor for facial recognition. Multimed Tools Appl 82, 19275–19298 (2023). https://doi.org/10.1007/s11042-022-14152-9


DOI: https://doi.org/10.1007/s11042-022-14152-9