Abstract

Low-resolution face recognition (LR FR) aims to recognize faces from small size or poor quality images with varying pose, illumination, expression, etc. It has received much attention with increasing demands for long distance surveillance applications, and extensive efforts have been made on LR FR research in recent years. However, many issues in LR FR are still unsolved, such as super-resolution (SR) for face recognition, resolution-robust features, unified feature spaces, and face detection at a distance, although many methods have been developed for that. This paper provides a comprehensive survey on these methods and discusses many related issues. First, it gives an overview on LR FR, including concept description, system architecture, and method categorization. Second, many representative methods are broadly reviewed and discussed. They are classified into two different categories, super-resolution for LR FR and resolution-robust feature representation for LR FR. Their strategies and advantages/disadvantages are elaborated. Some relevant issues such as databases and evaluations for LR FR are also presented. By generalizing their performances and limitations, promising trends and crucial issues for future research are finally discussed.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Face recognition (FR) has been widely studied for decades due to its great potential applications. Many technologies focus on dealing with complex conditions such as aging, occlusion, disguise, and variations in pose, illumination, and expression [1]. Although the recognition accuracy of face recognition in controlled environments with cooperative subjects is satisfactory, the performance in real applications such as surveillance is still an unsolved problem partially due to low-resolution (LR) image quality [2]. With the growing installation of surveillance cameras in many places, there are increasing demands for face recognition in surveillance applications from small-scale stand-alone cameras in banks and supermarkets, to large-scale multiple networked close-circuit televisions in public streets [3]. In such cases, subjects are far from cameras, and face regions tend to be small. This issue is called low-resolution face recognition (LR FR).

In this paper, LR FR aims to recognize faces from small size or poor quality images with varying pose, illumination, expression, etc. Traditional methods [4–9] based on high-resolution (HR) face images could not perform well when face images have relatively LR. Compared with other noninvasive biometric authentication techniques such as gait recognition at a distance [10], LR FR is a more difficult task due to the variability of human facial features. The challenges associated with LR FR can be attributed to the following factors:

- Misalignment :

-

Inaccurate alignment will severely affect the performance of HR as well as LR face recognition systems [11] and it is difficult to perform automatic alignment on LR images.

- Noise affection :

-

As a generalized issue, LR problem causes a lot of chain effects for face recognition. The degradation in resolution together with pose, illumination, and expression variations adds complexity to the recognition [12]. In other words, these variations produce much more noises for LR FR.

- Lack of effective features :

-

LR leads to the loss of large amounts of information. Most effective features used in HR FR such as Gabor [13] and local binary pattern (LBP) [14] may fail in LR case, especially very LR case, e.g., 6×6. Novel features insensitive to resolution are essential for LR FR.

- Dimensional mismatch :

-

Different resolutions between gallery images and probe ones in LR FR systems cause dimensional mismatch in traditional subspace learning methods [15].

Due to the challenges and significances for real applications, LR FR has gradually become an active research subarea of face recognition in recent years, and about 150 publications have reported its related contributions. Many promising methods have been proposed, such as multi-modal tensor super-resolution (M2TSR) [12], simultaneous super-resolution and recognition (S2R2) [16], discriminative super-resolution (DSR) [3], RQCr color features for degraded images [17], local frequency descriptor (LFD) [18], coupled locality preserving mappings (CLPMs) [19], multi-dimensional scaling (MDS) [20], and coupled kernel embedding (CKE) [21]. These representative LR FR methods are listed in Table 1. Some comparisons between HR FR and LR FR, including advantages and disadvantages are summarized.

Generally, the straightforward way to solve LR FR problem is super-resolution (SR), which first reconstructs HR faces from several LR faces and then performs recognition with the super-resolved HR images. This kind of methods is steadily developed within the last decade. Since 2005, many researchers have studied simultaneous SR and recognition for including facial features into an SR method as prior information. In recent years, resolution-robust feature representation methods have been gradually considered. However, all of them are limited to different constraints and do not completely solve LR FR problem. Future researches are still necessary for some related problems, such as accurate alignment and unified feature spaces, toward ultimately reaching the goal of resolution-robust face recognition.

Several survey papers [23–28] and books [29, 30] with good reviews on general face recognition have been published. S.Z. Li et al. [31, 32], and Wheeler et al. [33] definitely proposed issues, challenges, and prospects of biometric sensing and recognition at a distance for practical system deployments. However, none of them made specific reviews on LR FR. Thus, the contribution of this paper is to make a comprehensive survey on LR FR with detailed reviews on the existing methods and provide discussions on some open issues within this area. As the topic has attracted researchers since the year 2000, this review generalizes the main contributions during the last decade. No review of this nature can possibly cite every paper that has been published; therefore, we include only what we believe to be representative samples of important works and broad trends from recent years.

The rest of this paper is organized as follows. Section 2 provides a brief overview on LR FR with introductions of concept description, system architecture, method categorization, etc. In Sects. 3 and 4, a detailed review on LR FR methods is presented from two aspects: Super-Resolution for LR FR (Sect. 3) and Resolution-Robust Feature Representation for LR FR (Sect. 4). Section 5 describes the performance evaluations on LR FR methods. Section 6 gives a discussion on existing problems and future trends. Section 7 concludes the review.

2 Overview on LR FR

In this section, an overview on LR FR is given. First, the concept of LR FR is discussed after introducing two concepts: the best resolution and the minimal resolution. Then system architecture including the main strategies considered for LR FR is briefly described. Finally, LR FR methods are categorized, and some representative works are illustrated.

2.1 Concept descriptions

In an ideal scene, an HR face image with an abundance of pixels and details is important for recognition. That is to say, image resolution determines the capacity to discriminate fine features of image to a great extent. Before investigating LR FR, two concepts are introduced for describing the effects of image resolution on face recognition.

The best resolution, on which the optimal performance can be obtained with the perfect trade-off between recognition accuracy and performing speed. The problem of finding “what is the best spatial resolution for face recognition?” was originally raised by Kurita et al. [34]. They observed that faces characterized by global features could often be more easily recognized in lower resolutions. Pyramidal data structure was used to represent image sets in different resolutions from LR to HR with magnification factors from 1 to 64, with the order of 2, and they found that their classifier did not perform the best at the highest resolution. This is a phenomenon worth special attention.

The minimal resolution, also called the threshold resolution, above which the performance remains steady with the resolution decreasing from the best resolution, but below which the performance deteriorates rapidly. Wang et al. [35] demonstrated that face image information could be divided into discriminative information (the individual information compared with other faces) and structure information (the common information of all face images under the same resolution). In addition, similar to the structure information, a new concept called face structural similarity was proposed in [36]. In fact, all of them are from an interesting phenomenon in the real world. When observing a person moving toward us, what we see first is a person moving nearer, then the identity of the person when the distance exceeds a fixed value. Here, the fixed value is just the minimal resolution for the human visual system. In other words, we first obtain the structure information, and then the discriminative information as the resolution exceeds the minimal resolution.

Investigation of the minimal resolution can be found in [35, 37–39]. Lemieux et al. [38] studied principal component analysis (PCA) system with AR database, and observed that the minimal resolution of face size is about 21×16 pixels. Wang et al. [35] explored PCA and linear discriminant analysis (LDA) system with AR database, and indicated the minimal resolution of the face size as 64×48 pixels. Boom et al. [37] investigated PCA and LDA system with FRGC database, and found the minimal resolution of face size to be 32×32 pixels. Fookes et al. [39] examined PCA and elastic bunch graph matching (EBGM) system with XM2VTS database, and obtained the minimal resolution of face size of about 42×32 pixels. From the above investigations, we can draw a conclusion that the minimal resolution depends on different methods and databases.

LR FR we address is automatically recognizing people by their face images in LR. An LR face image means that a face size is smaller than 32×24 pixels (with an eye-to-eye distance about 10 pixels), typically taken by surveillance cameras (maximum resolution 320×240, QVGA) without subject’s cooperation and normally contains noises and motion blurs. In an LR image, exact delineation of facial features is not so trivial both for humans and machines. Generally, LR images discussed here roughly fall into three cases [18] as follows. Such examples of LR are demonstrated in Fig. 1.

-

(1)

Small size, for which the probe images have the insufficient number of pixels. According to the reports of FRVT2000 [40] on resolution experiment, the metric used to quantify resolution is eye-to-eye distance in pixels [41]. Nevertheless, the distance is not usually adopted in most LR FR systems but replaced by face size. In the conventional methodology, small size is sufficient for face recognition. However, when the size of face captured from surveillance camera is smaller than 32×24 pixels, or the size of face down-sampled from HR static image is smaller than 16×16 and even 6×6 pixels, most conventional methods will be of no effect [3, 16, 42].

-

(2)

Poor quality, which is from the fact that probe images are provided in the resized form with blur (e.g., out of focus, interlace, and motion blur), variation of illumination and loss of details. Therefore, the underlying resolutions of the images are comparatively low [43], which means that even if the size of face is 200×200 pixels, there is no guarantee that the face is in HR. From the reports of MBGC2009 [44], the levels of “focus” and “illumination” are taken as image quality measures. However, they just consider LR problem from vision perspective rather than recognition purpose.

-

(3)

Small size & Poor quality, which is from the combination of them naturally. In addition, Han et al. [45] introduced a new form of LR, called low gray-scale resolution.

2.2 System architecture

Similar to conventional HR FR system, LR FR system also includes three main parts. That is LR face detection or tracking, LR feature extraction and LR feature classification, as illustrated in Fig. 2. In general, the latter two are collectively referred to as LR FR, which is the focus in this review.

LR face detection/tracking means that pre-processing, detection, tracking and segmentation of the faces are automatically performed on LR images or videos. State-of-the-art face detection methods such as AdaBoost [46], which are usually able to detect face images larger than 20×20, could fail in LR case. Therefore, building an efficient and effective face detection system is necessary for LR FR especially in surveillance applications. At present, two strategies are considered for this problem as follows.

The first is multicamera active vision systems, which are used for applications with large coverage areas and high detection efficiency [47–50]. Generally, they include two kinds of cameras. One is wide field of view (WFOV) cameras covering a large area to allow the detection and localization of subjects by a combination of motion detection, background modeling and skin detection. The other is narrow field of view (NFOV) cameras actively controlled to capture HR face images using pan, tilt, and zoom (PTZ) commands.

The second is to improve the existing HR face detection methods for solving LR problem. For example, Hayashi et al. [42] proposed the first method to detect very LR faces. They adopted upper-body images and frequency-band limitations of features based on AdaBoost-based detector. The detection rate was improved from 39 % to 73 % on 6×6 with MIT+CMU frontal database. Recently, Zheng et al. [51] developed a modified census transform technique by using boosting classifiers for detecting LR faces in color images.

Besides these two strategies, selection of the most suitable faces is also used for LR problem.

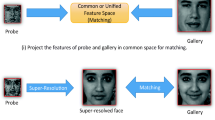

LR FR initially extracts resolution-robust features, and performs classification by matching the features to obtain the identity decision. The steps are similar to HR FR system from general framework. However, as opposed to HR FR, LR FR needs to consider the particular problem of dimensional mismatch. In practical face recognition applications, it is reasonable to assume that all gallery images are in HR. From classification perspective, LR will obviously cause the mismatch problem between gallery/probe pairs, as illustrated in Fig. 3. To deal with the problem, three general ways can be considered as follows:

-

(1)

Up-scaling (or interpolation), such as cubic interpolation, is conventionally adopted in most of the subspace-based face recognition methods. For LR images, it does not introduce any new information but potentially brings noises or artifacts. Therefore, the process of up-scaling can be feasible under high-resolution or middle-resolution, but may drop in performance confronting with much lower resolution. Thus, it is generally not a good way for solving LR problem. For further refined solution, super-resolution or hallucination [52] can be employed to estimate HR faces from LR ones. However, it usually requires a lot of images, which belongs to the same scene with precise alignment, and it also needs large computation cost.

-

(2)

Unified feature space, also called inter-resolution (IR) space [19], is used to project HR gallery images and LR probe ones into a common space. This idea seems to be direct and reasonable for solving LR problem. However, it is difficult to find the optimal inter-resolution space. And the two bidirectional transformations from both HR to IR and LR to IR may bring much more noises.

-

(3)

Down-scaling seems to be a feasible solution for the mismatch problem. Unfortunately, it reduces the amount of available information, especially the high-frequency information mainly for recognition. However, down-scaling on both training/gallery and test/probe may improve the performance under very LR case such as 7×6 [3].

Here, we briefly summarize the three ways.

In the first way, most super-resolution methods are taken as indirect way. They firstly obtain super-resolved HR images from LR images and then for recognition.

The second way is to build unified feature space with optimal mapping techniques, or resolution-robust feature extraction techniques. They can be taken as direct way that performs on the original LR images.

Since the third way, i.e., down-scaling techniques are poor in performance for solving LR problem, and few researches focused on them, which are not our main considerations in this review.

2.3 Categorization and representatives

Based on the above analyses, LR FR methods can generally be classified into two categories, (1) Indirect method: super-resolution for LR FR, (2) Direct method: resolution-robust feature representation for LR FR. Of course, some methods may overlap category boundaries.

-

(1)

Indirect method: super-resolution (SR) is initially used to synthesize the higher-resolution images from the LR ones, and then traditional HR FR methods could be applied for recognition. The landmark works in this category are face hallucination (Baker et al. [52]) and simultaneous SR and recognition (S2R2) (Hennings-Yeomans et al. [16]). Two criteria are considered for SR applications: visual quality and recognition discriminability. However, most of these methods [36, 53–55] aimed to improve face appearance but failed to optimize face images from recognition perspective. Recently, a few attempts were made to achieve these two criteria under very LR case [3].

-

(2)

Direct method: resolution-robust feature representation is the process of directly extracting the discriminative information from LR images. The landmark works are color feature (Choi et al. [17]) and coupled locality preserving mappings (CLPMs) (Li et al. [19]). These methods can be separated into two groups further. One is feature-based method in which the resolution-robust features, such as texture [18], and subspace [56] information, are used to represent faces. However, some features used in traditional HR FR methods are sensitive to resolution. The other is structure-based method, e.g., multidimensional scaling (MDS) [20] in which the relationships between LR and HR are explored in resolution mismatch problem.

This categorization might provide useful information for potential readers. However, it is not unique. Alternative categorizations based on other criteria are also possible, such as relatively LR case and very LR case, single-modality based (against LR only) and multimodality based (against LR and other variations such as pose). Table 2 summarizes representative works of LR FR within the two categories. In Sects. 3 and 4, the general principle and typical methods of each category will be discussed. They are followed by a review of specific methods, including discussions of their pros and cons and suggestions for future researches.

3 Super-resolution for LR FR

Many researchers want to build face recognition systems with LR images obtained by web cameras or close-circuit television. However, the overall performance of LR FR needs great improvement. Compared with the development of resolution-robust face recognition methods, super-resolution (SR), or hallucination methods have gained much more attentions, due to many problems that degrade the quality of face images in LR case. In this section, some typical SR methods specifically satisfying the requirement for face recognition will be reviewed. In the last decade, most of the conventional SR methods called vision-oriented SR were taken as the indirect way, which is reconstruction followed by recognition. Recently, some researchers focused on simultaneous SR and recognition, and SR mainly for recognition, called as recognition-oriented SR obtaining promising results for LR classification.

3.1 Vision-oriented super-resolution for LR FR

The simplest way to increase resolution is direct interpolation of input images with methods such as nearest neighbor, bilinear, and bicubic. However, its performance is usually poor since no new information is added into the process [36]. In contrast to the interpolation, SR increases resolutions of images or video frames using the relationships among several images. Generally, SR can be divided into two classes [73]: reconstruction-based method (from input images alone) [74–77], and learning-based method (from other images) [36, 52–55, 57–59, 73, 78–87].

The reconstruction-based method reconstructs HR images based on sampling theory by simulating the image formation process. However, the method has some fatal shortcomings. Baker et al. [57] pointed out that the method inherits limitations when the magnification factor increases. Lin et al. [88] proposed the problem “Do fundamental limits exist for the reconstruction-based SR?”. They further gave explicit bounds of the magnification factor based on perturbation theory analysis. Recently, Nasrollahi et al. [89] tried to solve the problem and improve the magnification factor from about two to almost four by using multilayer perceptron.

Most of the reconstruction-based methods are more suitable for synthesizing local texture, and they do not incorporate any specific prior information (e.g., face domain) about the super-resolved images. Therefore, they are usually applicable to generic object or scenes rather than face images. Few researchers focused on enhancing face images, though Yu et al. [74] proposed a new method for enhancing LR face videos. Moreover, the performance of the reconstruction-based methods is severely influenced by the following factors [88]: the level of noise existing in the LR images, the accuracy of point spread function (PSF) estimation and the accuracy of alignment. In other words, higher level of noise or poorer PSF estimation and alignment will result in less improvement in resolution.

The learning-based method, also known as face hallucination [52], which is the focus in this review, is always used to enhance resolutions of face images compared with the reconstruction-based method. In essence, the learning-based SR is used to learn the relationships between LR and HR corresponding to different face images in a training set, and then use these learnt relationships to predict fine details for LR probe images (stored by image pixels, image patches, or coefficients of alternative representations). Establishing a good learning model to obtain the prior knowledge is the key to the learning-based method. At present, the commonly used learning models include the PCA model [36], image pyramid model [57], Markov model [82], etc.

How can we evaluate the quality of hallucinated HR images for face recognition? Three goals should be reached step by step. The first goal is to obtain HR images from visual perspective only. This is also the basic target for SR. Then the HR images are expected to be more like face images. Finally, we hope the HR face images are more like someone’s face from recognition perspective.

For realizing the three goals, Liu et al. [58] introduced two different data constraints: soft constraint and hard constraint. The former was to beautify faces and make the results more like the mean face, corresponding to the first two goals. And the latter was to faithfully reproduce facial details to be exactly the same as the input face, similar to the third goal. Enlightened by Liu’s work, Zou et al. [3] designed two new constraints. They included a data constraint and a discriminative constraint. The data constraint was ∥I H −R I L ∥2, to estimate the reconstruction error in the HR image space to make use of the information from HR training images. It was opposed to ∥D I H −I L ∥2 used in the LR image space in the conventional methods. The discriminative constraint was to use class label information to boost recognition performance. For the detailed discussions about the two constraints, please refer to Sect. 3.2.2.

Zou et al. [3] further categorized learning-based SR methods into two classes, namely maximum a posteriori (MAP)-based method [52–54, 57, 58, 73, 79–84] and example-based method [36, 55, 59, 78, 85–87]. It is noticeable that some methods overlap category boundaries. Other categories can also be discussed, such as single-frame-based and multiframe-based, intensity-based and frequency-based, global-based and local-based as well as global&local. In this paper, MAP-based and example-based are taken as the main categorization and other categories as a supplement.

3.1.1 MAP-based method

In this method, the goal is to find an optimal solution maximizing the posterior probability p(I H |I L ) to obtain super-resolved HR images, i.e.,

In this formula, since p(I L ) is a constant for LR image I L is known already, the model can be simplified as follows:

p(I L |I H ) is the probability of obtaining I L when HR image I H is given, and depends on the distribution of the noise deriving from the process of down-sampling. p(I H ) denotes the prior of I H . Therefore, the key of MAP-based method is to estimate p(I H ).

For p(I H ), different algorithms have different solutions. Baker et al. [57] first proposed the idea of face hallucination and led the precedent of learning-based method. They estimated p(I H ) by using an image Gaussian pyramid under Bayesian formulation. The method obtained high-frequency components from a parent structure based on training face images; however, it intrinsically relied on a complicated statistical model. Similar to Baker’s work, Capel et al. [83] also used MAP estimators, with the difference that Capel divided a face image into six unrelated parts, and applied PCA on them separately. Dedeoglu et al. [81] extended Baker’s work to hallucinate face video by exploiting spatiotemporal constraints, and they reported a very high (×16) magnification factor for LR case.

Based on Baker’s work [57] and Freeman’s work [84], Liu et al. [53, 58] proposed a two-step statistical method. It integrated a parametric model called global face image \(I_{H}^{g}\) recording common facial properties, with a nonparametric model called local feature \(I_{H}^{l}\) carrying individualities, to generate the HR image. Then the model (2) could be naturally transformed into

They applied PCA linear inferences to maximize \(p( I_{L} |I_{H}^{g}) \times p(I_{H}^{g})\) and get an optimal global face image \(I_{H}^{g}\), and a Markov random field prior was used to maximize \(p( I_{H}^{l} |I_{H}^{g})\) for obtaining a local feature \(I_{H}^{l}\). However, this method depends on an explicit down-sampling function, which is sometimes unavailable in practice.

Enlightened by Liu’s work, many methods treating face hallucination as a two-step problem have been proposed [73, 80]. They all perform as the following two-step process. First, a global face image containing low-frequency information is obtained, which looks smooth and lacks some detailed features. Second, a residue face image keeping high-frequency information is synthesized. And then the residue image is piled onto the global image to get the final super-resolved face images. For example, Li et al. [80] used a MAP criterion for reconstructing both the global image and the residual image. Jia et al. [73] proposed a unified tensor space representation for hallucinating low-frequency and middle-frequency information, and then recovered high-frequency part by patch learning.

In addition, some methods are performed in transformed feature space rather than pixel density domain. Zhang et al. [82] performed SR in frequency domain with inferring discrete cosine transform (DCT) coefficients instead of estimating pixel intensities in spatial domain. Alternating component (AC) coefficients in DCT were inferred by the Markov network of low-level vision. Subspace methods are also applied to restrict the reconstructed HR image locating within face subspace, such as PCA [54] and kernel PCA subspace [79]. However, most of these SR methods only focus on frontal faces, and fail to deal with unconstrained variations in pose, illumination, and expression.

3.1.2 Example-based method

In this method, the HR image \(\overline{h}\) is reconstructed as a linear or nonlinear combination of the HR training images h i by finding an optimal solution from the given LR image l, i.e., it can be mathematically written as

The key of example-based method is to determine the weight coefficients α i . They minimize the error caused by the linear or nonlinear approximation of the LR training images l i (the pairs of h i ) for l as follows:

For obtaining α i , different algorithms have different models.

Wang et al. [36] proposed a representative example-based method and treated the hallucination problem as a transformation between LR and HR. They used PCA to fit an input LR face image as a linear combination of LR training images. The HR image was then synthesized by replacing the LR training images with their HR counterparts while retaining the same combination coefficients α i . However, the linear PCA model could not capture distinct structures of the input face efficiently and only focused on global estimation without paying attention to local details. Thus, the results seemed unclear, lacked detailed features, and caused some distortions. Moreover, they designed a mask to avoid artifacts on hair and background, and performed hallucination in the interior region of face. In fact, local modeling and appropriate smoothing can be adopted to handle these artifacts properly. That is to say, the idea of two-step in [53] can be used to compensate high-frequency features for the work.

Compared with Wang’s work [36] operated in eigenface space, Liu et al. [55] performed as the two-step way in patch-tensor space. LR image was first partitioned into overlapped patches. HR patches were inferred respectively based on TensorPatch model and then fused together using a local distribution structure to form the hallucinated result. To further enhance the quality of the HR image, the coupled PCA method was developed for residue compensation. While the method added more details to the face, it also introduced more artifacts. Therefore, whether to adopt residue compensation techniques and when to do them is critical for super-resolution (SR).

Besides eigenface and tensor space, manifold learning techniques are used for example-based SR. Manifold learning theory suggests that the subspace of face images has an embedded manifold structure. The high-dimensional structure formed by HR face images is homeomorphic with a geometric structure in LR space. It means that the features of LR and HR face images share a common topological structure, and thus, they are coherent through the structure. Therefore, some ideas of manifold learning such as local linear embedding (LLE) [78] and locality preserving projection (LPP) [85]-[86] are introduced into SR and are discussed as follows.

Chang et al. [78] introduced the idea of LLE into SR with neighbor embedding. They assumed that training LR and HR images form manifolds with similar local geometry in two distinct feature spaces and used the training image pairs to estimate the weight coefficients for reconstruction. However, they treated SR as a patch-based single-step technique without compensation for local image details.

Zhuang et al. [85] developed locality preserving hallucination method based on LPP [7]. It combined LPP and radial basis function (RBF) together to hallucinate a global HR face. Compared with Wang’s work [36], the hallucinated global HR face contained more detailed features. However, there were more noises in the local features such as contour, nostril, and eyebrow, because LPP resulted in the loss of nonfeature information. To improve the details of the synthesized HR face, they developed a residue compensation method based on patch by neighbor embedding [78].

Inspired by Zhuang’s work, Ma et al. [86] employed additional constraints on neighborhood reconstruction for face hallucination. The input LR image determined the position at which the neighbors of a patch were chosen in training step, and then the hallucinated patches were reconstructed using optimal weights of the training image position-patches. The method did not incorporate any residue compensation step into hallucination. They gave the reason and discussed why the conventional two-step methods usually adopted the residue compensation step. According to them, some detailed facial information was lost in the first global reconstruction step.

In addition, some methods performed example-based SR on single-frame LR face image [59, 87]. For example, Park et al. [59] performed SR within PCA feature space with an extended morphable face model. They defined the model by the pixel correspondence between a reference face and other faces. By using the model, all face images were separated into extended 3D-shape and texture. Then the PCA-based SR method was implemented on both shapes and textures of LR input to reconstruct the corresponding HR shapes and textures respectively, and they were further synthesized into the result.

Recently, Hu et al. [87] also developed a single-frame SR method, like Liu’s work [53]. They used both global and local constraints for hallucination; the difference was that their global model was derived from the nonrigid warping of reference face examples and the learning of the pixel structure. The warping could capture a moderate range of face variations. And the effects of warping errors were reduced by the adaptive weighting in the local prior model. Thus, the method could infer more faithful individual structures of the target HR face.

3.1.3 Discussion

Most of the vision-oriented SR methods have attempted to minimize mean-squared error (MSE) or maximize signal-to-noise ratio (SNR) between the original HR and the reconstructed SR images. As we all know, the performances of face recognition systems mostly rely on the ability to identify key facial features, which are typically captured by high-frequency components. However, high-fidelity reconstruction of low-frequency content in SR may dominate the image [90]. Therefore, obtaining a lower MSE or a higher SNR does not necessarily contribute to a better performance. That is to say, the primary goal of vision-oriented SR methods is to obtain a good visual reconstruction, but not usually designed from recognition perspective. As resolution decreases, SR becomes more vulnerable to unconstrained variations. It also introduces noises and distortions that affect recognition, especially when the probe identities could not be included in the process of training selection. Thus, one problem of what relationships exist between SR and recognition is generated.

Some researchers discussed the problem and made attempts to explore the potential of SR in recognition. Baker et al. [52] stated that no new information had been added during resolution enhancement. Also, face recognition methods could be developed to theoretically work as well on the LR images as they did on the hallucination results. Gunturk et al. [54] tried to reconstruct the necessary information required by face recognition system, especially with the consideration of the statistics of noises and motion estimation errors. However, the method is unsuitable for pose variations, and is also heavily time-consuming. Wang et al. [36] explored whether hallucination could contribute to recognition. They found that the hallucinated images performed much better than the LR images but performances of both dropped when the face size decreased from 32×24 to 16×12 pixels with XM2VTS database. However, the improvement in recognition seemed not as significant as that in face appearance. They gave a relatively reasonable explanation that human visual system could better interpret the added high-frequency details in the reconstruction process.

In summary, most of the existing vision-oriented SR methods are not completely suitable for recognition. A promising way to further improve the robustness performance of SR for recognition is to embed SR into recognition. In the following subsection, we turn to recognition-oriented SR.

3.2 Recognition-oriented super-resolution for LR FR

Recognition-oriented SR is not to use SR before recognition in the conventional way. It embeds the elements of SR methods into face recognition. Specifically, it fuses the models of the image formation process and the prior information, together with feature extraction and classification to design methods for recognition [3, 12, 16, 64, 91–93]. Compared with vision-oriented SR, recognition-oriented SR maybe more suitable for LR FR due to the following two observations. Firstly, it simultaneously performs SR and feature extraction with the direct goal of recognition. Secondly, it performs feature SR with the aim of reconstructing not only the low-frequency content (structure information) but also the high-frequency content (discriminative information) for recognition.

3.2.1 Simultaneous super-resolution and feature extraction

The method has drawn much attention. Here, we discuss two representative methods in detail as follows: multimodal tensor SR (M2TSR) [12] and simultaneous SR and recognition (S2R2) [16]. Especially for S2R2, it is the first framework for realizing SR and recognition simultaneously.

Jia et al. [12] made some pioneering explorations in this field and proposed M2TSR method, though it was still sequential and did not achieve complete simultaneity. It initially computed a maximum likelihood vector in the HR tensor space. Although it did not simultaneously perform against pose and illumination variation as illustrated in Fig. 4, face hallucination and recognition were unified in this way. The consideration of multimodality could contribute to LR FR. However, its disadvantage was that the tensor manipulations for reconstruction demanded high computation expenses.

Hennings-Yeomans et al. [16, 60, 61] showed that the performances of conventional SR methods were degraded under very LR case. Thus, they proposed S2R2 method to combine identification with reconstruction for dealing with LR problem by introducing the constraints between LR and HR images in a regularization form, as illustrated in Fig. 5. Formula (6) denotes the base model of S2R2. y p , \(f_{g}^{(k)}\), and x denote the input LR probe image, the gallery image in the kth class, and the output HR image, respectively; B, L, and F represent operators for down-sampling, smoothness and feature extraction, respectively; besides, α and β are the regularization parameters. The goal of the S2R2 model is to obtain a suboptimal output HR image x for satisfying the need of vision and recognition simultaneously.

In addition, the base S2R2 model was improved by involving the cases of multiframes or multicameras version B (i). Furthermore, the base SR prior model l (k) and feature extraction (F L ) were modified based on multiresolutions version (l or L). The modified model is shown in (7), where α,β,γ are the regularization parameters, and B denotes the image formation process. The base S2R2 model and the improved version tested on CMU Multi-PIE database on 6×6 obtained the accuracies of 62.8 % and 73 %, in comparison with the PCA baseline method at 47.1 %.

Compared with the general indirect SR methods, S2R2 improves identification accuracy and gets promising results on 6×6. However, the parametric optimization needs to be repeated for each gallery image in the database, especially for large databases; thus, their formulation is quite time-consuming. Also, this method assumes that gallery and probe images are in the same pose, frontal or localized perfectly, directly resulting in its inefficiency under many general scenarios. Therefore, how to obtain the appropriate regularization parameters and reduce the computational complexity are two important issues in this model.

3.2.2 Feature super-resolution

The method is also called feature hallucination, which was innovatively proposed by Li et al. [93] to reconstruct HR features instead of HR images for face recognition. The kernel version of support vector data description (SVDD) [94] was used to synthesize HR discriminative features both for vision and recognition perspective [64, 65]. SVDD approximated the support of objects belonging to the normal class. Its main idea was to find a ball that could achieve two conflicting goals simultaneously. One was that it should be as small as possible and the other was that it should contain as much training data as possible with equal importance. However, the method is only for frontal faces and its generalization ability remains doubtful.

Besides, some methods are used for video applications. For example, Arandjelovic et al. [91] proposed an extended generic model called shape-illumination manifold (gSIM) framework by separating illumination and down-sampling effects for feature SR. Their experiments on both the Cambridge database [95] and the Toshiba database reported promising results for face recognition. However, the method requiring video sequences at enrollment makes it impractical for surveillance scenarios.

In addition, we introduce two representative recognition-oriented SR methods: nonlinear mappings on coherent features (NMCF) [92] and discriminative SR (DSR) [3]. Both of them introduce classification discriminability into SR process.

Huang et al. [92] proposed NMCF method with canonical correlation analysis (CCA) to establish coherent features between LR and HR images represented by PCA. Motivated by Zhuang’s work [85], they also applied the radial basis function (RBF) mapping to build the regression model by adopting the advantages of RBF, such as fast learning and generalization ability. NMCF was evaluated on 12×12 with FERET database and obtained the accuracy of 84.4 % compared with 36.9 % of the PCA baseline method.

Recently, Zou et al. [3, 63] proposed the DSR method with two constraints (new data constraint and discriminative constraint). It modeled the SR problem as a regression problem in the kernel space under very LR case such as 16×12 and 7×6, as illustrated in Fig. 6. Formula (8) shows the mapping relationships under the two constraints. The former is the new data constraint, while the latter is the discriminative constraint. The conventional SR method employs the data constraint \(\| \mathbf{D}I_{h}^{i} - I_{l}^{i} \|^{2}\) (D is the down-sampling operator) to make full use of the information in LR space for SR. However, this data constraint may not work well for very LR case because of the limited information carried by LR space. So, the data constraint is changed into \(\| I_{h}^{i} - \mathbf{R}I_{l}^{i} \|^{2}\), where DR=I. The discriminative constraint is to use the class label information of the training data for improving the discriminability. DSR shows its superiority from both visual quality and recognition performance. For example, super-resolution results on 16×12 with Extended Yale B database are shown in Fig. 7. Also, DSR obtained the recognition accuracy of 73.5 % on 7×6 with CMU PIE database in comparison with 40.5 % in the PCA baseline method.

A brief discussion on the similarities and differences of DSR and NMCF is as follows: Both DSR and NMCF require a training set containing LR and HR image pairs to learn the nonlinear mappings from LR to HR feature space, followed by the reconstruction of SR images or features. Compared with NMCF, DSR performs more efficiently when LR images are used for training/gallery sets. Conversely, when HR training/gallery sets are used, the performance of NMCF is better than DSR.

3.2.3 Discussion

Some successes have been achieved by recognition-oriented SR methods such as S2R2 [16] and feature SR [93]. However, they just provide the framework for recognition-oriented SR, and their recognition performances largely depend on different reconstruction regularization models and feature extraction techniques. Some common problems are still unsolved in these methods. For example, it is unclear what kind of reconstruction regularization method is more appropriate for recognition. In addition, feature extraction is known to be sensitive to large appearance changes due to pose, illumination, expression, etc. To combine super-resolution and feature extraction perfectly is also a big issue for the future work. A possible way to handle these problems is to adopt more robust feature extraction techniques, which is the focus in the following section.

3.3 Summary of super-resolution for LR FR

A summary of super-resolution (SR) for LR FR is as follows:

-

(1)

Most of the vision-oriented SR methods focus on obtaining a good visual reconstruction rather than a higher recognition rate; however, the essence of the recognition-oriented SR methods is to satisfy the need of recognition with LR images.

-

(2)

Both vision-oriented SR and recognition-oriented SR are sensitive to different variations such as pose, and require lots of training samples of the same scene.

-

(3)

In general, although SR methods require large computation costs, they have the potential advantages in very LR cases like 6×6.

4 Resolution-robust feature representation for LR FR

In contrast to super-resolution for LR FR, researches on resolution-robust feature representation in LR problem started around year 2008. The difficulties of finding the effective features in LR case render face recognition more complicated. Some typical features in HR case such as texture, shape, and color may fail in the LR case. Therefore, the only feasible option is to explore the potential of these features for LR FR. In addition, LR results in a dimensional mismatch problem under the subspace framework. Building interresolution space may provide a promising direction for solving this problem. Resolution-robust feature representation method is classified into two groups: feature-based method [17, 18, 56, 69, 96] and structure-based method [15, 19–21, 43, 97–100].

4.1 Feature-based method

This method identifies an LR face directly using the features extracted from probe images in resized forms. However, all the existing resolution-robust features are improved from the features used in HR FR, such as the improved color space [17] and the improved local binary pattern descriptor [18]. Similar to the categorization that successfully used in [24], we further classify feature-based method into two categories, that is, the global feature-based method and local feature-based method.

4.1.1 Global feature based method

In this method, the whole LR probe image, represented by a single high-dimensional vector containing the global low-frequency information, is taken as input. The advantage of this method is to implicitly preserve all the detailed texture and shape information, which is useful for recognizing LR faces. On the other hand, this method is also easily affected by variations such as pose and illumination like they do as HR FR. Here, two kinds of global methods are introduced: color features and improved dimensionality reduction techniques.

Color features are the representative global features. Choi et al. [17] first demonstrated that color-based features could significantly improve LR FR recognition performance compared with gray-based features. The idea was based on the boosting effects of color features on low-level vision [101]. A new metric called variation ratio gain (VRG) as shown in (9) was further defined to prove the significance of color effect on LR face images within the subspace face recognition framework. In VRG, J lum+chrom(γ) and J lum(γ) represent variation ratio parameterized by face resolution (γ) for color-augmentation-based feature subspace and intensity-based ones respectively. Here, J(γ) is the ratio between the variations of extra-personal covariance matrices and those of intrapersonal covariance matrices for classification tasks. As illustrated in Fig. 8, as γ decreases, VRG (γ) gets larger, indicating that color components can compensate a decreased extra-personal variation by intensity component with LR. Based on the phenomenon, RQCr color space was selected for LR FR. Experiments on the hybrid database collected from CMU PIE, Color FEERT, and XM2VTS with probe resolution 15×15 tested on “RQCr” and “R” space achieved accuracies of 68 % and 54 %, respectively.

VRG(γ) demonstrates the role of color in LR classification. However, no theories can prove that RQCr is more efficient for LR case in comparison with other color spaces. Therefore, to efficiently use color-based features for boosting intensity-based features is still an open issue. It is known that reducing the correlation of different color components is certainly helpful to HR FR, and even LR FR. Yang et al. [102] investigated the potential efficiency of color spaces, and proposed various normalized spaces such as the improved YRB space to enhance face recognition.

Choi et al. [67, 68] improved their work and proposed a color feature selection method by boosting-learning framework. Thirty-six different color components were used to form a color-component pool, and a weighted fusion scheme was used to fuse the selected color features at the feature level. The method was successfully evaluated on very LR images with SCface database. It improved the accuracy with RQCr space from 49.61 % to 62.78 % with the new color pool. The experiment indicated that the framework of color fusion was perhaps beneficial to LR FR. Furthermore, they adopted LBP features in color space for LR FR [66]. However, the role of color features for LR images is degraded by serious illumination variations despite of their successes in face recognition.

In addition, improved dimensionality reduction techniques are also proposed for the LR problem. Abiantun et al. [56] adopted the kernel class-dependence feature analysis (KCFA) method [103] for dealing with very LR case on the FRGC database Experiment 4. KCFA used a set of minimum average correlation energy filters to exploit higher-order correlations between training samples in the kernel space, and obtained the accuracy of 27.1 % on 8×8 compared with the PCA baseline method of 12 % on HR images. Wang et al. [96] proposed a new graph embedding method called FisherNPE for resolution-robust feature extraction, based on LDA and neighborhood preserving embedding (NPE) preserving both global and local structures on the data. Also, Bayesian probabilistic similarity analysis [8] of intensity differences between LR and HR images was used for classification. Photon-counting LDA [104] was proposed for coping with the LR FR, modeling the image pixels with Poisson distribution by the semiclassical theory of photon detection.

4.1.2 Local feature based method

In this method, the LR probe image is represented by a set of low-dimensional vectors containing the local high-frequency information. Compared with global method, local feature based method provides additional flexibility to recognize a face based on its parts, and is more robust to variations.

For example, Hadid et al. [105] proposed a novel discriminative feature space for detecting and recognizing LR faces from video, employing LBP representation and SVM classifier. Ahonen et al. [69] adopted local phase quantization (LPQ) method based on the assumption of point spread function. The method used the phase information of Fourier transformed images for LR FR, revealing that LPQ information in the high-frequency domain was almost invariant to blur. Afterwards, Lei et al. [18] made an improvement on LPQ and proposed local frequency descriptor (LFD) using not only phase information, but also magnitude information. Furthermore, the relative relationships between phase information were adopted without the assumption of point spread function instead of the absolute value. Also, a uniform pattern mechanism [14] was introduced to improve the performance.

4.1.3 Discussion

Finding resolution-robust features is a conventional issue for face recognition in the LR or HR case. Although many researchers concerned resolution-robust feature representation, the performance is far from perfect due to different complicated variations. Most methods mentioned above are only against one variation and not against multiple. For example, compared with local features, global features are more sensitive to illumination variation. With regards to pose and expression variation, local features are more susceptive than global features. A possible way to further improve the robustness may lie in the combination of local-based and global-based features. However, what features should be combined and how to combine them for concentrating their advantages are the future issues for LR FR.

4.2 Structure-based method

Compared with the feature-based method concerning resolution-robust features, the structure-based method focuses on constructing the relationships between LR and HR feature space for facilitating direct comparison of LR probe images with HR gallery ones from a classification perspective. The method aims to build the holistic framework for LR matching by especially solving the particular problem in LR FR, namely dimensional mismatch. Here, we introduce three kinds of structure-based methods. Coupled mappings [19] aim to find the structure relationships. Resolution estimation [43] determines the kinds of structures chosen for building LR FR system. Finally, the sparse representation based method [98] is adopted for representing LR probe images using HR training images from a structure perspective.

4.2.1 Coupled mappings

Choi et al. [15] first pointed out the dimensional mismatch problem, and proposed eigenspace estimation (EE) techniques for obtaining a common LR feature space for matching between LR and HR. Then Li et al. [19, 70] proposed a more general framework called unified feature space based on coupled mappings (CMs) (10). In CMs model, l i and h i represent an LR face image and an HR one, respectively, and A L and A H are two coupled mapping matrices. For LR FR, the mapping between each LR image and the corresponding HR image is expected to be as close as possible in the new unified feature space. Obviously, EE is one special case of CMs with A H of down-sampling and A L of identity matrix. Although CMs provides a promising framework for learning the relationships between LR and HR, it has an obvious shortcoming in poor discriminability for classification. Therefore, Li et al. introduced the locality preserving objective [7] into nonparametric CMs model, and proposed coupled locality preserving mappings (CLPMs) method as shown in (11). It significantly improved the performance by involving the weight relationships (W ij ) among data points. Evaluation on FERET database obtained the accuracy of 90.1 % on 12×12 in comparison with PCA baseline method with 61.8 %. However, CLPMs still exhibits sensitivity to the parameters and pose variations.

Other works with the aim of improving CMs have been developed. Zhou et al. [100] improved the classification discriminability of CMs by introducing linear discriminant relationships [5] between intraclass scattering and interclass scattering into CMs. Ren et al. [106] adopted canonical correlation analysis with local discrimination criterion [107] to compute the two coupled mapping matrices. With the process of regularization and piecewiseness on feature space, the method showed its superiority compared with CMs/CLPMs in both recognition accuracy and time complexity. Furthermore, Ben et al. [108] used the ideas of CMs to couple gait feature with LR face images and map them onto a common space for LR FR.

Recently, Ren at al. [21] further introduced the kernel tricks into CLPMs and proposed coupled kernel embedding (CKE) method for dealing with LR FR. In the CKE model (12), Ψ and Φ represent two different nonlinear mappings such as the Gaussian-quadratic kernel function. Experiments on the CMU Multi-PIE database obtained the accuracy of 84 % on 6×6. Although the Rank-1 accuracy on SCface database is only 11 %, it still outperformed the LR baseline method with 4 %. By the kernel tricks, on one hand, CKE improved the classification performance; on the other hand, it increased the time complexity.

In resolution mismatch problem, there exist three relationships (LR vs. LR, LR vs. HR, and HR vs. HR) involved in data. CMs/CLPMs only considered LR vs. HR. Deng et al. [109] further considered the other two relationships and adopted regularized coupled mappings with two new color spaces to get more information. However, the efficiency of the method empirically depends on the regularized parameters.

Similar to the work in [19, 109], Biswas et al. [20, 71, 72] skillfully utilized the three relationships between LR and HR, as illustrated in Fig. 9. During training, they embedded LR images into a new Euclidean space in order to achieve the best distances between their HR counterparts using multidimensional scaling (MDS) [110], and a transformation matrix W was obtained. During the test, LR gallery and probe images were transformed independently using the learned transformation matrix. Then the matching process was performed. It should be emphasized that they highlighted the pose problem involved in LR recognition. This is an important contribution for researches on LR FR. They evaluated MDS on CMU Multi-PIE (8×6) and SCface database (12×10), and obtained the accuracies of 52 % and 71 %, respectively. In their experiments for LR FR, MDS performed better than sparse representation based super-resolution [111].

For further extension, here we provide a more general CMs model (13), including super-resolution (SR) and resolution-robust feature extraction. F represents feature extraction or subspace dimensionality reduction techniques. Then different stable features can be integrated into the framework. When A L is replaced by SR constraints with the settings of A H =I M , the new model will be turned into SR. That is to say, SR is one special case of the model. Although S2R2 [16] is essentially different from the general indirect SR methods, it can also be represented by the new model when A and F are simultaneously used from both reconstruction and recognition perspectives. When F is provided as the form of kernel processing, the model will be turned into CKE [21] and MDS [20]. Finally, if F is ignored and just A is preserved, it returns to the base CMs model. In short, the new model is more general. However, to efficiently compute the two coupled mapping matrices A H and A L is also the key to the new model like the CMs model.

In fact, ideas similar to CMs are also applied to other problems of face recognition. Lin et al. [112] proposed the idea of common discriminant feature extraction to solve the heterogeneous face recognition problems such as matching between visual (VIS) image and near infrared (NIR) image, and photo-sketch recognition. Recently, Lei et al. [113] proposed coupled spectral regression (CSR) to address VIS-NIR recognition. Furthermore, they improved CSR method in [114], which was also evaluated on LR images.

4.2.2 Resolution estimation

The method is another way proposed for dealing with the dimensional mismatch problem. It determines the kinds of structures chosen for building the LR FR system. Wong et al. [43] proposed two innovations for the LR problem. One was the concept of an underlying resolution, which did not rely on the size of face image. The other was that the local features sensitive to resolution were exploited for LR classification. Based on the innovations, they proposed a resolution detection and compensation framework for dynamically choosing the appropriate face recognition system. A similar method was proposed by Pedro et al. [115]. They developed the concept of estimating the acquisition distance in three different scenarios (close, medium, and far distance). And the distance was taken as the weight to fuse two systems (PCA-SVM system and DCT-GMM system) at the score-level. They demonstrated that training with medium distance images was a good way to control the performance degradation due to the varying distance.

In a way, the compensation frameworks show the potential of multiple face recognition systems for addressing LR FR. For example, color feature selection framework [67] is just a typical one. A combination of different classifiers [116, 117] also provided multimodal fusion at the classifier-level for the LR problem. In addition, a combination of several common methods was also proposed for dealing with the LR problem in [97]. They first adopted sparse representation [98] to describe patches represented by LBP features with different sizes. Then AdaBoost was used to select the most discriminative patches for classification. However, compared with feature selection [118], the method tested on Extended Yale B database showed poor performance. Thus, it remains doubtful whether such combination is efficient for LR FR, even just for HR FR.

4.2.3 Sparse representation based method

Sparse representation is first proposed by Wright et al. [98] for coping with robust classification problem. It is recently warmed and has become one of the standard methods of face recognition within the literature followed by many researchers. They cast the recognition problem as one of classifying among multiple linear regression models. If the number of features was sufficiently large, and the sparse representation was correctly computed, they demonstrated that the choice of features was no longer critical. It was right even in the down-sampled images, though their work was not specialized for the LR case. However, like most of the other methods, sparse representation also requires the training/gallery samples covering different variations such as pose, illumination, and expression. It will be a big obstacle for real applications.

Furthermore, Yang and Wright et al. [111] adopted sparse representation for super-resolution (SRSR) on face images. More recently, inspired by their work, Bilgazyev et al. [90] performed SRSR on high-frequency components learned by wavelet decomposition-based rules. They reported that the recognition performance outperformed SRSR [111] and S2R2 [16] for the CMU PIE database. Moreover, Shekhar et al. [99] proposed an LR FR method with especially handling illumination variations based on sparse representation. In short, sparse representation may provide a new theoretical framework for dealing with LR FR problem in the future.

4.2.4 Discussion

The key of the coupled mappings is to obtain an interresolution space or unified feature space for solving the mismatch problem. The interresolution may be intuitively less than HR but greater than LR. However, EE [15] takes a new LR space as the interresolution space. Also, CLPMs [19] achieves the best performance in the interresolution less than the resolution of probe images. Thus, a question will be naturally generated as which the interresolution is suitable for LR FR. It is difficult to answer due to many factors such as different feature representations, face databases, and applications. Other strategies in the structure-based method, such as resolution estimation, system compensation, and sparse representation may provide the promising directions for addressing LR FR.

4.3 Summary of resolution-robust feature representation for LR FR

A summary of resolution-robust feature representation for LR FR is as follows:

-

(1)

In general, resolution-robust feature representation methods are mainly applicable in relatively LR cases rather than very LR cases like 6×6.

-

(2)

The feature-based methods can be used for multiple resolutions from HR to LR, but they need online training. However, the structure-based methods are more suitable for offline training, but they are mainly used for a single resolution application with the balance between efficiency and speed.

-

(3)

Obviously, the combination of feature-based and structure-based methods will contribute to very LR cases. More characteristics about resolution-robust feature representation methods are shown in Table 3, which also provides a comparison between super-resolution and resolution-robust feature representation.

Table 3 Comparison between super-resolution and resolution-robust feature representation

5 Evaluations on LR FR methods

In order to have a clear idea on various LR FR methods, it is important to evaluate them based on certain evaluation criteria with some standard LR face databases. Unfortunately, such a requirement is seldom satisfied in practice due to the lack of general criteria and databases originally developed for LR FR. At present, LR FR methods are just evaluated based on HR FR criteria and databases.

As for the evaluation criteria for face recognition, generally speaking, face recognition can be described in terms of the following two tasks. One is face verification where the input is a face image and an identity label, and the output is a binary decision, yes or no, to confirm the identity label. Thus, face verification is a 1-to-1 problem. The other is face identification where the input is a face image, and the output is to assign the identity label assigned to the face by pointing out the subject. Evidently, face identification is a 1-to-N problem and also popularly called face recognition. Moreover, some new tasks have been proposed, such as screening and watch list, which are the transformed versions of verification or identification [62].

In this review, face identification (recognition) is our focus. In identification, the cumulative match characteristic (CMC) curve is usually adopted to plot the percentage of identification accuracy (IDA) vs. Rank. (IDA is the number of correctly assigned labels to the total number of input faces.) All these metrics are generally derived from HR FR and can also be used in LR FR with different resolutions. Other metrics, such as the minimal resolution and execution time, are occasionally applied to evaluate LR FR methods. However, the performances of different methods depend on different databases to some extent. Therefore, a few standard LR face databases are necessarily built for fair comparisons, which is the future work. In this section, we evaluate some representative methods on image-based standard databases and video-based real environment, respectively, to find the problems existing in LR FR. The performances and the limitations of these methods are finally summarized.

5.1 Evaluation on image-based standard databases

While HR FR is investigated in depth, many image-based standard databases have been established to compare performances of these methods, such as AR [119], Yale [120], Extended Yale B [121], and CAS-PEAL [122]. However, there is currently no database for LR FR, so that for evaluations on most of the existing LR FR methods, face images with frontal view, neutral expression, and illumination variations are selected and preprocessed such as down-sampling and blurring instead of the actual LR images taken by surveillance cameras. Currently, the widely used databases for LR FR are FERET [123]/Color FERET [124], CMU PIE [125]/CMU Multi-PIE [126], FRGC [127], and SCface [128].

FERET [123] consists of one gallery set and four probe sets (fafb, fafc, dup1, dup2). There are 1,196 images of 1,196 subjects in the gallery set and the four probe sets contain 1,195, 194, 722, and 234 images, respectively. Fafb probe images are obtained as frontal view and expression variation, and usually taken as the experiment data in most LR FR methods such as CLPMs [19], LFD [18], S2R2 [16], and NMCF [92]. As for other methods such as M2TSR [12], the experimental data are collected from the four probe sets. Moreover, Color FERET [124] is also used for evaluating LR FR methods such as RQCr [17]. It is worth noting that S2R2 is just evaluated on 6×6, and LFD and RQCr are tested on blurred images.

CMU PIE [125] includes 41,368 images of 68 subjects (21 samples/subject). Among them, 3,805 images have coordinate information of facial feature points. Some methods such as EE [15], RQCr [17] and DSR [3], evaluated on this database, select the frontal view images with illumination variation for experiments from the 3,805 images. CMU Multi-PIE [126] is a recent extension of CMU PIE database. It has a total of 337 subjects (compared with 68 subjects in CMU PIE) who participated in one to four different recording sessions, separated by at least a month (unlike CMU PIE, where all images of each subject are captured on the same day in a single session). As in CMU PIE, facial pose, expression, and illumination variations due to flashes from different angles are recorded. The frontal view images with neutral expression and illumination variations are chosen for LR FR methods, e.g., S2R2 [16], MDS [20], CKE [21], and evaluated on CMU Multi-PIE, which is also similar to CMU PIE.

FRGC [127] consists of 50,000 images divided into training and validation sets. The training set is designed for training methods and the validation set is used for assessing performance of methods in a laboratory setting. The validation set includes 16,028 images of 466 subjects. The FRGC database consists of six experiments. Among the six experiments, in experiment 1, the gallery consists of a single controlled still image of a person and each probe consists of a single controlled still image. Experiment 2 studies the effect of using multiple still images of a person on performance. In experiment 2, each biometric sample consists of the four controlled images of a person taken in a subject session. Experiment 4 measures recognition performance from uncontrolled images. In experiment 4, the gallery consists of a single controlled still image, and the probe set consists of a single uncontrolled still image. S2R2 [16], KCFA [56], and DSR [3] methods are evaluated on FRGC database experiments 1, 2, and 4, respectively.

SCface [128] is a database of static images of human faces. Images were taken in an uncontrolled indoor environment using five video surveillance cameras of various qualities. The database contains 4,160 static images (in visible and infrared spectrum) of 130 subjects. Images from different quality cameras mimic the real-world conditions and enable robust testing, emphasizing law enforcement and surveillance scenarios. MDS [20], DSR [3], and CKE [21] methods are evaluated on this database.

The performances of LR FR methods tested on FERET, CMU PIE, CMU Multi-PIE, FRGC, and SCface are summarized in Tables 4, 5, 6, 7, and 8, respectively. Moreover, other databases such as XM2VTS [129], UMIST [130], ORL [131], and KFDB [132] are also used to evaluate LR FR methods such as RQCr [17], NMCF [92], and SVDD [64], which are shown in Table 9. Most methods listed in Tables 4–9 aim at face identification (recognition) with CMC (Identification accuracy vs. Rank-1) as the evaluation criterion except for KCFA in Table 7 aiming at face verification with receiver operating characteristic (ROC) curve (Verification accuracy vs. False acceptance rate).

On these databases, different methods are able to be compared on a relatively fair basis. And one can easily pick up the methods with good performances. The direct performance comparison of LR FR methods is not provided in this review mainly due to the lack of standard LR databases and evaluation protocols; however, the performances of baseline methods shown in the above tables can be taken as references for rough comparisons. Here, we discuss the results and attempt to give some relatively reasonable comparisons. Compared with other recent databases, FERET database mainly covers expression variation, which is relatively old. Tested on FERET, resolution-robust feature representation methods such as CLPMs [19] slightly outperform recognition-oriented super-resolution methods such as S2R2 [16] and NMCF [92]. The result further supports that the former is more suitable for simple conditions, e.g., single expression variation. However, for relatively complicated databases such as FRGC and SCface, the situation will be reversed. That is to say, recognition-oriented super-resolution methods obtain much better performance, although the resolution-robust feature representation method MDS [20] tested on SCface is greatly superior to DSR [3]. This result is attributed to the use of frontal view probe images. In addition, the evaluations on CMU PIE or CMU Multi-PIE demonstrate that recognition-oriented super-resolution methods such as S2R2 [16] and DSR [3] are more easily against unconstrained variations, e.g., illumination and expression. However, the resolution-robust feature representation method CKE [21] also obtains promising results on CMU Multi-PIE mainly with the help of the kernel trick.

From the above analyses, we can know that it is very difficult to rank all the methods based on the existing and widely used image-based standard databases. Also, we find that no method can satisfactorily handle the LR problem in face recognition under all complicated variations. For example, M2TSR [12] is the only method specially designed to deal with pose and illumination problem in LR classification. However, its performance on the FERET database with 14×9 is only 74.6 %, which is still far below the requirement of practical use. Therefore, this review mainly focuses on the discussions of different methodologies for LR FR, in hope of providing helpful technical insights and promising directions for interested researchers.

5.2 Evaluation on video-based real environment

After evaluations on image-based standard databases, let us conduct some further experiments on video-based real environment to evaluate the performances of various LR FR methods. Some typical video databases such as CMU MoBo [133], Honda/UCSD [134], and CLEAR2006 [135] are widely used for video-based face recognition even for LR FR. Recently, some researchers attempted to build LR databases to mimic real environment. Huang et al. [136] presented a database named “labeled faces in the wild” (LFW), containing images that were collected from the web. Although it has natural variations in pose, illumination, expression, etc., there is no guarantee that such a database can accurately capture all variations found in the real world [137]. Besides, most objects in LFW only have one or two images, which might not be enough to conduct different face recognition experiments. Yao et al. [138] created a face video database, UTK-LRHM, obtained from long distances and with high magnifications, both indoors and outdoors under uncontrolled surveillance conditions. Also, they developed a wavelet transform based multiscale processing algorithm, which was used to deal with image degradations related to long-distance acquisition and was successful in improving recognition rate. Ni et al. [139] manually put together a remote face database including the face images with variations due to occlusion, blur, pose, and illumination, which were taken from long distances and under unconstrained outdoor environments. The remote database was just evaluated on two state-of-the-art face recognition methods including baseline methods such as PCA, LDA, SVM, and the recently developed methods, e.g., sparse representation. But it did not form into a complete database for LR FR. In a word, there is still no LR benchmark database for public comparisons at present.

To evaluate the performances of the existing LR FR methods for real applications, we construct a video-based face database with uncooperative subjects in an uncontrolled indoor environment using a video camera (QVGA, 320×240). Images from the low-fidelity quality camera mimic the real-world LR FR conditions factually. The testing environment mainly includes two conditions, good/poor illumination, and 2.0/3.0 meters distance, which are illustrated in Fig. 10. The database contains 800 training/gallery images (20 images per subject) and 160 testing/probe videos (4 videos per subject) from 40 subjects. Here, we do not discuss the process of detection for capturing and tracking face frames as that is out of the scope of this paper, though it is very important for video-based recognition.

The illustration of the surveillance camera system for different distances and illuminations. In each scenario, the left is a frame acquired by the surveillance camera, while the right is an up-sampled version of the frame. (a) 2.0 meters distance with good illumination, (b) 2.0 meters distance with poor illumination, (c) 3.0 meters distance with good illumination, (d) 3.0 meters distance with poor illumination

In our experiments, S2R2 [16] and CLPMs [19] are used for the real environment evaluation, as they are the representatives in recognition-oriented super-resolution method and resolution-robust feature representation method, respectively. For S2R2, all the HR training images and the corresponding down-sampled LR images are used for computing Fisherface features for representation, and learning regularization parameters and the discriminant coefficient. For the details of the system implementation, please refer to [16]. In the testing procedure, since the current S2R2 method needs large computation and fails to reach the requirement of real time, frame images are taken as the probe samples at a fixed distance such as 2.0 and 3.0 meters instead of performing on video directly. For CLPMs, all the HR training images and the corresponding down-sampled LR images are used for computing the two coupled mapping matrices. For the details of the training process, please refer to [19]. Compared with S2R2, CLPMs is more suitable for real-time application. In experiments, only one aligned HR image (64×48) with frontal view and good illumination is taken as gallery for each subject. For each subject, five probe images are randomly selected at 2.0 and 3.0 meters distance, and their face sizes are resized into 32×24 and 21×15, respectively.