Abstract

This paper presents a novel enhancement technique for low-light grayscale images. The main goal of this work is to enhance the visual quality and improve the information content (entropy) of the images using a novel Directed Searching Optimized mean-exposure based sub-image histogram equalization technique. Initially, the proposed method clips the original histogram to prevent over-enhancement. The clipped histogram is divided into two sub-histograms based on the mean intensity value. The lower sub-histogram is further divided based on an exposure threshold to avoid unnatural artifacts. Each sub-histogram is then equalized independently, followed by a modified transfer function. Two constraint parameters are used to reduce the information loss during histogram equalization. The Directed Searching Optimization algorithm selects these constraint parameters automatically so as to maximize the fitness function, which makes the proposed technique more adaptive. Finally, the proposed method is compared with other existing histogram equalization based image enhancement techniques. Simulation results show that the proposed method maximizes the information content effectively and preserves the natural appearance of the image. It also yields images of better visual quality, with improved PSNR, SSIM and FSIM and reduced MSE, compared to other state-of-the-art methods.


1 Introduction

Images are captured by different camera sensors for different applications, but manipulating these images without any information loss is an arduous task. The process of transforming a degraded, low-quality input image into an image of better quality is known as image enhancement. It is used to improve the interpretability of the information content for viewers. Degradation in an image is caused by non-uniform environmental illumination, imperfect image acquisition, noise, aperture size, shutter speed, a low-quality camera sensor, or a poorly lit environment [28]. Image quality is also degraded by the distance between the camera sensor and the target. This effect can be observed in images of the earth's surface taken from a satellite or aircraft. Image enhancement is used in image processing to improve the quality of such images.

Image enhancement can be performed either in the spatial domain or in the frequency domain. Spatial-domain methods operate directly on the pixel values of an image. Histograms are the basis for a number of spatial-domain techniques. A histogram describes the frequency of the intensity values that occur in an image, and its shape indicates how much contrast enhancement is possible. Histograms are also used to present the image statistics in a clear visual format. The most commonly used spatial-domain technique is histogram equalization [12]. Frequency-domain methods operate on the Fourier transform of an image. The Fourier transform of the image is multiplied by a filter transfer function, and the enhanced image is obtained by taking the inverse transform of the product. This modifies the distribution of pixel values. The frequency-domain method is based on the convolution theorem. Frequency-domain techniques have lower computational complexity, but they cannot enhance every part of an image [19].

An image captured in a low-light environment is not clearly visible. To enhance such images, many histogram equalization (HE) based methods have been proposed. Histogram equalization is a technique for improving image quality by modifying the intensity distribution of the histogram [6]. It is based on the probability distribution of the input gray levels. Histogram equalization flattens and stretches the image histogram [12], which improves the overall brightness of the image. HE is most widely used in medical and radar image processing. Its main shortcoming is that it generates unnatural enhancement in the output image: the excessive change in brightness makes the image too bright. Because of this flattening property, HE is not suitable for consumer electronics such as TV [17].

To mitigate this limitation of HE, Y. T. Kim [17] introduced an algorithm named Brightness Preserving Bi-Histogram Equalization (BBHE) for contrast enhancement. The main focus of this algorithm is to preserve the mean brightness of the given image while enhancing the contrast. The input image is decomposed into two sub-images based on the mean of the input image, and each sub-image is then equalized independently using its own histogram. To further avoid artifacts and over-enhancement, another histogram equalization based technique, known as equal area Dualistic Sub-Image Histogram Equalization (DSIHE), was introduced [32]. In this method, the image is divided into two sub-images of equal area based on the probability density function. The individual sub-images are then equalized separately, and the enhanced image is obtained by combining the equalized sub-images. It has been observed that this method enhances the image while preserving the original image luminance.

In [7], Soong-Der Chen et al. proposed an enhancement method that extends BBHE, known as Minimum Mean Brightness Error Bi-Histogram Equalization (MMBEBHE). The main goal of this method is to provide maximum brightness preservation. In MMBEBHE, the image is separated at the threshold level for which the Absolute Mean Brightness Error (AMBE) is minimum. The AMBE is the absolute difference between the input mean and the output mean. MMBEBHE can enhance the contrast of the image and preserve the mean brightness in cases that HE, BBHE and DSIHE may not handle well. Chen et al. [8] proposed an image enhancement technique that performs BBHE recursively, subdividing the image based on the mean intensity value at each level. Then, in [25], a new image enhancement algorithm was proposed that recursively divides the histogram based on the median brightness instead of the mean brightness.

The techniques discussed above do not provide any mechanism for controlling the level of enhancement while preserving brightness and entropy. In [26], the authors proposed another technique for contrast enhancement of low exposure images, known as Exposure based Sub-Image Histogram Equalization (ESIHE), which focuses on contrast enhancement and on maximizing the entropy. The work in [27] is mainly concerned with the enhancement of low-light or night vision images. It includes two methods: Recursive Exposure based Sub-Image Histogram Equalization (R-ESIHE) and Recursively Separated Exposure based Sub-Image Histogram Equalization (RS-ESIHE). R-ESIHE applies the ESIHE method [26] recursively until the change in exposure between successive iterations is less than a predefined threshold. RS-ESIHE subdivides the image histogram recursively. Each histogram is divided into sub-histograms based on the respective exposure thresholds, each sub-histogram is equalized independently, and the results are finally integrated into one image.

Another approach to image contrast enhancement and entropy restoration is presented in [28], which is based on swarm intelligence, histogram equalization and gamma correction. In 2018, M. Kanmani [16] proposed a technique for enhancing the information content and improving the visual quality of grayscale images using the particle swarm optimization algorithm and adaptive gamma correction. Then, A. K. Bhandari [5] proposed an image enhancement technique that uses the Social Spider Optimization algorithm to produce a higher contrast image with minimum change in entropy with respect to the original image. In 2019, M. Zarie suggested a robust contrast enhancement technique [36], named image contrast enhancement using triple clipped histogram equalization based on standard deviation. In the last few years, different approaches [1–4, 9–11, 13–15, 18, 20–24, 30, 31, 33–35, 37–39] have been developed to improve the quality of images and to handle the challenges of image enhancement.

From the literature survey [2, 5, 7–9, 12, 13, 16–18, 24–27, 30, 32, 33, 36], it has been observed that most of the existing methods have the following drawbacks.

i. More information loss

ii. Over-enhanced image

iii. No control on enhancement rate

iv. Artifacts in the enhanced image

v. Not adaptive

vi. Loss of the natural appearance of the image, by affecting the structure and feature similarity

In this work, the proposed method aims to meet challenges such as maximizing the entropy, improving the visual quality of the image, controlling the enhancement rate, reducing artifacts and preserving the natural appearance of the image without much affecting its basic structure and features. With these goals in mind, a novel image enhancement technique is proposed in this paper. The main contributions of this work are:

i. Subdivision of the histogram based on the mean and the exposure threshold, in order to preserve the brightness and improve the natural appearance of the image.

ii. Construction of a new transfer function (mapping function) for the enhanced image, using the CDF of each sub-image and the constraint parameters.

iii. Automatic selection of the constraint parameters according to the fitness function, using the DSO algorithm. These parameters are selected without human intervention, which makes the proposed method more adaptive.

The rest of this paper is arranged as follows. Section 2 describes the proposed method, including the clipping technique, image sub-division, HE and the DSO algorithm with their mathematical expressions. Section 3 presents the result analysis and compares the proposed method with different existing methods using several performance parameters. Section 4 concludes the paper.

2 Proposed method

The proposed method is an extension of the histogram equalization technique, and the enhancement problem is formulated as an optimization problem. The main objective is to avoid the shortcomings of HE based techniques. Histogram equalization improves the contrast of the image at the cost of greater information loss. It has no parameter to control the enhancement rate and no specific mechanism to maximize the information content of the enhanced image. It also creates unnatural artifacts and over-enhancement problems. A novel image enhancement technique is therefore proposed in this paper to improve the information content, enhance the quality of the image and control the enhancement rate. The proposed algorithm consists of the following main steps: histogram clipping, and histogram sub-division with equalization and maximization of entropy.

2.1 Histogram clipping technique

To eliminate the over-enhancement observed in histogram equalization and to control the enhancement rate, a clipping technique is used in this paper. This technique clips the original histogram h(k) to form a new histogram hc(k). A clipping threshold is computed using (1), following [26]. Any bin of the histogram whose value is greater than the clipping threshold is limited to the threshold level.

The new clipped histogram is given by (2).
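Equations (1) and (2) are not reproduced in this copy of the text. As a minimal sketch, assuming the threshold follows the ESIHE convention of [26] (the average number of pixels per histogram bin), the clipping step could look like the following. The function name `clip_histogram` is illustrative and does not come from the paper.

```python
def clip_histogram(hist):
    """Clip every bin at the mean bin count.

    Assumption: Eq. (1) is the average bin occupancy, as in ESIHE [26].
    """
    if not hist:
        raise ValueError("histogram must not be empty")
    threshold = sum(hist) / len(hist)            # assumed form of Eq. (1)
    clipped = [min(h, threshold) for h in hist]  # Eq. (2): limit tall bins to the threshold
    return clipped, threshold


# Toy 8-bin histogram with one dominant bin.
hist = [2, 4, 40, 6, 3, 1, 0, 8]
clipped, t_c = clip_histogram(hist)
print(t_c)      # 8.0
print(clipped)  # [2, 4, 8.0, 6, 3, 1, 0, 8]
```

Only the dominant bin is reduced; bins at or below the threshold are left unchanged, which is what limits the slope of the later mapping function.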

2.2 Histogram sub-division with equalization and maximization of entropy

To improve the natural appearance of the image and to avoid unnatural enhancement, the clipped histogram is bisected into two sub-histograms, the lower histogram and the upper histogram, based on the mean intensity value. To avoid unnatural artifacts, the lower histogram is further divided into two sub-histograms, known as the extremely low exposure histogram and the low exposure histogram. Histogram equalization is then performed on each sub-histogram independently. The transfer functions used for the extremely low exposure and low exposure sub-images contain two different constraint parameters, which control the information content of the image during HE in each sub-image.
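The three-way split described above can be sketched as follows. This is an illustration under stated assumptions, not the paper's implementation: the thresholds `x_e` (exposure) and `x_m` (mean) are passed in rather than computed, each threshold is assumed to belong to the lower of the two ranges it separates, and the name `split_pdf_cdf` is hypothetical. The transfer functions themselves are left out because their exact form is not given in this copy of the text.

```python
def split_pdf_cdf(hist, x_e, x_m):
    """Return [(pdf, cdf), ...] for the ranges [0..x_e], [x_e+1..x_m], [x_m+1..last].

    Assumption: each threshold is included in the lower range it bounds.
    """
    if not 0 <= x_e < x_m < len(hist) - 1:
        raise ValueError("need 0 <= x_e < x_m < last gray level")
    ranges = [(0, x_e), (x_e + 1, x_m), (x_m + 1, len(hist) - 1)]
    result = []
    for lo, hi in ranges:
        part = hist[lo:hi + 1]
        n = sum(part)  # number of pixels in this sub-image
        pdf = [h / n if n else 0.0 for h in part]
        cdf, running = [], 0.0
        for p in pdf:
            running += p
            cdf.append(running)
        result.append((pdf, cdf))
    return result


# Toy 6-level histogram split at x_e = 1 and x_m = 3.
for pdf, cdf in split_pdf_cdf([4, 4, 2, 6, 1, 3], x_e=1, x_m=3):
    print(pdf, cdf)
```

Each sub-histogram is normalized by its own pixel count, so every CDF ends at 1 and each sub-image can be equalized within its own intensity range.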

To maximize the information content, an efficient optimization technique known as the Directed Searching Optimization (DSO) algorithm [40] has been used in this paper. The DSO algorithm relies on two operations, position updating and genetic mutation, which are needed to find the best solution in the solution space and to prevent premature convergence. Position updating is used to find the best solution vector among the set of randomly generated solution vectors, and it plays the major role in the convergence of DSO. Genetic mutation increases the diversity of the solution vectors and prevents premature convergence.

To solve any optimization problem, a cost function or fitness function is necessary to compute the fitness of each solution vector. It has been observed that the entropy of an HE-enhanced image is much lower than that of the input image, which indicates that HE loses a large amount of information [12]. As a result, naturalness is lost in the enhanced image. To minimize the entropy difference between the input image and the enhanced image, the objective function used in this paper maximizes the information content (entropy) of the enhanced image, and the maximization is carried out by the Directed Searching Optimization (DSO) algorithm. The fitness function is represented in (3).

Where H represents the entropy of image and \( {p}_i=\frac{n_i}{s\times t} \) is the probability of occurrence of ith intensity level and (s × t) is the total number of pixels present in the image.
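The entropy that serves as the fitness value can be sketched as follows, using the definition of p_i given above. This is a minimal illustration that assumes Eq. (3) is the Shannon entropy of the enhanced image with a base-2 logarithm; the image is represented as a plain list of rows, and the function name `entropy` is illustrative.

```python
import math


def entropy(image):
    """Shannon entropy in bits of a grayscale image given as a list of rows."""
    counts = {}
    total = 0
    for row in image:
        for value in row:
            counts[value] = counts.get(value, 0) + 1
            total += 1
    if total == 0:
        raise ValueError("image must contain at least one pixel")
    # p_i = n_i / (s * t); intensity levels that never occur contribute nothing.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


flat = [[7, 7], [7, 7]]          # a single gray level carries no information
spread = [[0, 85], [170, 255]]   # four equally likely gray levels
print(entropy(flat))    # 0.0
print(entropy(spread))  # 2.0
```

In the optimization loop, each candidate pair of constraint parameters would produce an enhanced image, and this value would be its fitness.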

2.3 Steps involved in proposed method

Here, Fig. 1 represents the flowchart of our proposed method. To simulate the proposed method, the following steps are taken into consideration.

Step 1: Compute the histogram of the input image.

Step 2: Calculate the mean of the image intensity values.

Step 3: Evaluate the clipping threshold using (1) and clip the original histogram using (2) to avoid over-saturation.

Step 4: Decompose the clipped histogram into two sub-histograms based on the mean intensity value Xm [8]. The lower sub-histogram contains the intensity values up to the mean intensity, and the upper sub-histogram contains the intensity values between the mean and the maximum gray level.

Step 5: Sub-divide the lower sub-histogram into two sub-histograms using the exposure threshold Xe [26]. This forms the extremely low exposure sub-histogram and the low exposure sub-histogram. Together, these histogram sub-divisions result in three sub-images, named sub-image-1, sub-image-2 and sub-image-3.

Step 6: Calculate the probability density functions (PDF) of sub-image-1, sub-image-2 and sub-image-3 using Eqs. (4), (5) and (6) respectively.

The variables NLI, NLu, and NU represent the number of pixels in sub-image-1, sub-image-2, and sub-image-3 respectively.

Step 7: Evaluate the cumulative density functions (CDF) of sub-image-1, sub-image-2 and sub-image-3 using (7–9).

Step 8: Determine the transfer function (mapping function) of each sub-image using (10–12).

Transfer function for sub-image-1,

Transfer function for sub-image-2,

Transfer function for sub-image-3,

A complete image Y (13) is formed by integrating all three sub-images.

In (6), l represents the maximum gray level and in (10), Xmin represents the minimum gray level. Two constraint parameters, L and M, are used in the transfer functions (10) and (11). The values of these parameters are taken in the range [0, 1]. The DSO algorithm searches a two-dimensional search space for the values of L and M that give the maximum fitness value.

Step 9: Initialize all the parameters for the DSO algorithm [40]:

i. Population size, or number of solution vectors (PS)

ii. Maximum number of iterations (iter)

iii. Forward probability (Pα)

iv. Forward coefficient (α)

v. Backward coefficient (β)

vi. Genetic mutation probability (Pm)

$$ PM=\begin{bmatrix}{P}_1^1& {P}_2^1& \cdots & {P}_{DM}^1\\ {P}_1^2& {P}_2^2& \cdots & {P}_{DM}^2\\ \vdots & \vdots & \ddots & \vdots \\ {P}_1^{PS}& {P}_2^{PS}& \cdots & {P}_{DM}^{PS}\end{bmatrix} $$

Initialize the population matrix PM, where i = 1, 2, 3, …, DM indexes the dimensions of the problem space and j = 1, 2, 3, …, PS indexes the solution vectors in the population. Figure 2 represents the position updating strategy of the DSO algorithm [40]. In Fig. 2, the region between P and V is named the forward region and the region between P and S is named the backward region. Here \( {P}_i^j(k) \) is the ith component of the jth solution vector at the kth iteration, and \( {P}_i^j\left(k+1\right) \) is the corresponding updated component. The index of the global best solution vector is jg, so \( {P}_i^{j_g}(k) \) is the ith component of the global best solution vector at the kth iteration. PiU and PiL are the upper and lower bounds of the ith component of the position vector. PV and PS are the forward and backward extensions of \( {P}_i^j(k) \). The variable r is a random number in the range [0, 1]. After the parameters have been initialized, the position updating and genetic mutation operators [40] are used to search for the optimal solution.

The position of each solution vector is updated for j ∈ [1, PS] with j ≠ jg, and for i ∈ [1, DM]. A random number is generated and the forward probability condition (Rand() < Pα) is checked. If the forward probability condition is satisfied, the position is updated in the forward direction using (14).

In (14), \( {P}_i^{j_g}(k)-{P}_i^j(k) \) represents the adaptive step size. Initially the solution vectors are scattered across the solution space, so the step size is large, which benefits the global search. At a later stage the solution vectors are close to each other, so the step size is small, which helps the local search of the DSO algorithm. If PV is greater than the upper bound of the ith position component, it is limited to the upper bound PiU. If PV is less than the lower bound, it is limited to the lower bound PiL. The new updated position is then given by (15).

If the forward probability condition is not satisfied, the position is updated in the backward direction using (16).

If PS is greater than the upper bound of the ith position component, it is limited to the upper bound PiU. If PS is less than the lower bound, it is limited to the lower bound PiL. The new updated position in the backward direction is then given by (17).

The backward region is an auxiliary region that is used to slow down the convergence of DSO. After position updating, the genetic mutation operator is applied to improve the DSO performance. The genetic mutation probability condition (Rand() < Pm) is checked, and if it is satisfied, the new updated component is given by (18).
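The position updating and mutation loop described above can be sketched in Python. Equations (14)–(18) are not reproduced in this copy of the text, so the update rules below are assumptions chosen to match the prose: the forward extension is taken as the global best plus α times the adaptive step, the backward extension as the current position minus β times the adaptive step, both are clamped to the bounds, the new position is a random point between the current position and the extension, and mutation re-draws a component uniformly within its bounds. The function name `dso_maximize` and the toy fitness are hypothetical; the default parameter values are the ones reported in Section 3.1.

```python
import random


def dso_maximize(fitness, lower, upper, pop_size=50, iters=30,
                 p_alpha=0.8, alpha=1.0, beta=10.0, p_m=0.01, seed=0):
    """Sketch of a DSO-style search; the update formulas are assumed, not quoted."""
    rng = random.Random(seed)
    dim = len(lower)

    def clamp(value, i):
        return max(lower[i], min(upper[i], value))

    pop = [[rng.uniform(lower[i], upper[i]) for i in range(dim)]
           for _ in range(pop_size)]
    scores = [fitness(p) for p in pop]

    for _ in range(iters):
        g = max(range(pop_size), key=scores.__getitem__)  # index j_g of the global best
        best = pop[g][:]
        for j in range(pop_size):
            if j == g:                       # the global best vector is not moved
                continue
            for i in range(dim):
                step = best[i] - pop[j][i]   # adaptive step size
                if rng.random() < p_alpha:   # forward region (assumed form)
                    target = clamp(best[i] + alpha * step, i)
                else:                        # backward region (assumed form)
                    target = clamp(pop[j][i] - beta * step, i)
                pop[j][i] += rng.random() * (target - pop[j][i])
                if rng.random() < p_m:       # genetic mutation (assumed form)
                    pop[j][i] = rng.uniform(lower[i], upper[i])
            scores[j] = fitness(pop[j])

    g = max(range(pop_size), key=scores.__getitem__)
    return pop[g], scores[g]


def toy_fitness(p):
    """Hypothetical stand-in for the entropy fitness, with its peak at (0.3, 0.7)."""
    return -((p[0] - 0.3) ** 2 + (p[1] - 0.7) ** 2)


best, score = dso_maximize(toy_fitness, [0.0, 0.0], [1.0, 1.0])
print(best, score)
```

Because the global best vector is skipped during updating, the best fitness found never decreases from one iteration to the next. In the proposed method, the two components of each solution vector would be the constraint parameters L and M, and the fitness would be the entropy of the resulting enhanced image.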

3 Results and discussions

In this section, the simulation results of the proposed method are compared with those of existing image enhancement techniques, namely HE [12], BBHE [17], DSIHE [32], MMBEBHE [7], ESIHE [26] and a Particle Swarm Optimization (PSO) based technique [24], using the following performance parameters: entropy, MSE, PSNR, SSIM, FSIM and execution time. For the simulation, one hundred low-light images were taken from the USC-SIPI database [29], and a few randomly selected images are presented in this paper. All analyses were performed on an Intel(R) Core(TM) i3-4005U CPU @ 1.70 GHz with 4.00 GB RAM and a 64-bit operating system, using Matlab 2018.

3.1 Parameter selection for experiment setup

The parameters used to simulate the proposed method are presented in Table 1. The parameters should be selected carefully, so that the optimal or globally best solution can be reached without excessive complexity. For a given optimization problem and a similar time budget, a larger population size may need fewer iterations to converge. Both very large and very small population sizes should be avoided, as they may lead to unnecessarily complex behavior and premature results respectively. Various experiments have been conducted, and it has been observed that a population of moderate size gives the best solution in fewer iterations without wasting resources. So, in this paper the population size and the number of iterations are taken as 50 and 30 respectively.

Genetic mutation works in a random manner and is used to enhance the diversity of individuals. It improves the DSO performance by preventing premature convergence to local optima through exploration of unseen areas of the search space. A higher value of the mutation probability, however, may cause the solution to diverge, so the mutation probability is set to 0.01. Like the PSO algorithm, the DSO algorithm uses a position updating strategy, in which the current position is inclined to mimic the global best position. The forward region, which is a region near the global best position, is considered the main searching region, so the forward probability and forward coefficient are taken as 0.8 and 1 respectively. The backward region is an auxiliary region used to slow down the rapid convergence of the DSO algorithm, which helps to prevent premature convergence, so the backward coefficient is taken as 10. After many experiments, the values of the constraint parameters are restricted to the range [0, 1] to obtain a properly balanced weighted summation.

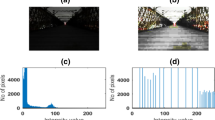

3.2 Performance evaluation based on visual quality

To assess the proposed method, both visual and quantitative analyses are carried out in this paper. Figs. 3, 5, 6, 7, 8 and 9 show the input images and the corresponding enhanced images obtained using different enhancement techniques. Figure 4 shows the histograms (intensity distributions) of the above-mentioned images. From the histograms in Fig. 4, it is noticed that the intensity distribution produced by the proposed method is completely different from that of the other techniques. From the histogram equalized images (Figs. 3b, 5b and 9b) and the histogram of the HE image (Fig. 4b), it is observed that HE introduces a significant change in brightness. Due to the stretching and flattening property of histogram equalization, the dynamic range of the original image is expanded, as shown in Fig. 4b, so the image obtained by histogram equalization is much brighter than the input image. This is because HE maps the gray levels in proportion to the cumulative density function (CDF). It has also been observed that the histogram of the histogram equalized image does not follow the pattern of the input image histogram. The HE technique enhances the contrast of the image but produces some artifacts. The HE, BBHE, DSIHE and MMBEBHE methods have no mechanism to avoid over-enhancement.

These methods therefore produce over-enhanced images. The ESIHE technique produces better enhanced images, but the information loss in such images is higher. The enhanced images produced by the PSO based technique contain many noise points, as shown in Figs. 3, 5, 6, 7, 8 and 9. In the proposed method, the over-enhancement problem is eliminated by the clipping technique.

Some unnatural artifacts have also been noticed in the background of the images enhanced by the HE, BBHE, DSIHE, MMBEBHE, ESIHE and PSO based techniques in Figs. 3, 6 and 8. In Fig. 8h, all three vehicles are clearly visible in the image produced by the proposed method, whereas the third vehicle in Fig. 8 is not visible in the enhanced images produced by the other state-of-the-art techniques. No such unnatural enhancement or artifacts are found in the enhanced image obtained by the proposed method, owing to the novel histogram sub-division technique. The histogram of the proposed method also follows the pattern of the histogram of the input image, as shown in Fig. 4a and 4h, so the enhanced image obtained by the proposed method is more similar to the input image than those of the other methods. From the above simulation results, it has been observed that the proposed method yields more natural-looking images while enhancing the contrast.

Extracting a highly improved image from a low-quality image is the main target of image enhancement. Visual quality is not the only criterion for measuring the performance of different enhancement techniques; quantitative analyses are also required. The following parameters are therefore used to measure the quality of the enhanced images.

3.3 Performance evaluation based on entropy

Entropy represents the average information content of the image. The entropy of each enhanced image is calculated using (19).

Here, p(i) represents the normalized histogram value of the image and H represents the entropy of the enhanced image. A higher value of H indicates that the image contains more information. From Table 2 and Fig. 10a, it has been noticed that the average information content of the images enhanced by BBHE is better than that of HE, DSIHE and MMBEBHE but worse than that of the other techniques used in this paper. Information loss is higher with the histogram equalization technique, which also produces some unusual enhancement. The proposed method gives better entropy preservation than the other methods, because the DSO algorithm searches for the constraint parameters that maximize the entropy.

3.4 Performance evaluation based on mean square error (MSE)

MSE is the mean of the squared error between the input image and the enhanced image [14]. The mean square error between the original image and the enhanced image is calculated using (20). From Table 3 and Fig. 10b, it has been observed that the mean square error between the input image and the histogram equalized image is very high compared to the other HE based algorithms, and that the MSE value is very low for the proposed method.

3.5 Performance evaluation based on peak signal to noise ratio (PSNR)

PSNR is one of the performance measures used to evaluate the quality of the output image [14]. It is defined as the ratio of the maximum possible signal power to the power of the distorting noise, and is calculated using (21). The PSNR is inversely related to the Mean Square Error (MSE). The higher the value of PSNR, the better the image quality. From Table 4 and Fig. 10c, it has been found that the proposed method gives a better PSNR value than the other existing methods.
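Equations (20) and (21) are not reproduced in this copy of the text. The following minimal sketch uses the standard definitions of MSE and PSNR, assuming 8-bit images with a peak value of 255 and images stored as lists of rows. The function names `mse` and `psnr` are illustrative.

```python
import math


def mse(a, b):
    """Mean squared error between two equally sized grayscale images."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("images must have the same shape")
    diffs = [(pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)]
    if not diffs:
        raise ValueError("images must contain at least one pixel")
    return sum(diffs) / len(diffs)


def psnr(a, b, peak=255):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    err = mse(a, b)
    return math.inf if err == 0 else 10 * math.log10(peak ** 2 / err)


original = [[10, 20], [30, 40]]
enhanced = [[12, 18], [33, 40]]
print(mse(original, enhanced))   # 4.25
print(psnr(original, enhanced))
```

The inverse relationship noted above is visible in the formula: the MSE appears in the denominator of the logarithm's argument, so a lower MSE always gives a higher PSNR.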

3.6 Performance evaluation based on structural similarity index measure (SSIM)

It measures the structural similarity between input and output image [9]. It is computed using (22).

Here μx and μy are the means and σx and σy are the standard deviations of the input image and the enhanced image respectively. The sample covariance between the two images is represented by σxy. C1 and C2 are constants used to avoid instability. From Table 5 and Fig. 10d, it has been noticed that the average structural similarity between the input image and the enhanced image obtained using the proposed method is far better than that of the other HE based methods used in this paper. HE gives a very poor SSIM.
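Equation (22) is not reproduced in this copy of the text. For reference, the standard form of SSIM, written with the symbols defined above, is shown below; it is assumed, not confirmed, that (22) takes this form.

$$ \mathrm{SSIM}\left(x,y\right)=\frac{\left(2{\mu}_x{\mu}_y+{C}_1\right)\left(2{\sigma}_{xy}+{C}_2\right)}{\left({\mu}_x^2+{\mu}_y^2+{C}_1\right)\left({\sigma}_x^2+{\sigma}_y^2+{C}_2\right)} $$

The value is 1 when the two images are identical and decreases as their means, variances or structure diverge.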

3.7 Performance evaluation based on feature similarity index measure (FSIM)

The feature similarity between original image and enhanced image is measured by FSIM [39]. The formula used to measure FSIM (23) is

Here X represents the whole image, SL(x) is the similarity between the input image and the enhanced image, and PCm is the phase congruency map. The Feature Similarity Index Measure (FSIM) between the enhanced image and the original image is calculated using (23). Table 6 and Fig. 10e present the average FSIM obtained by the different enhancement techniques. From these results, it has been observed that the feature similarity between the original image and the enhanced image is lowest for histogram equalization and highest for the proposed method.
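Equation (23) is not reproduced in this copy of the text. For reference, the commonly used definition of FSIM, written with the symbols defined above, is shown below; it is assumed, not confirmed, that (23) takes this form.

$$ \mathrm{FSIM}=\frac{\sum_{x\in X}{S}_L(x)\,{PC}_m(x)}{\sum_{x\in X}{PC}_m(x)} $$

The phase congruency map acts as a weight, so locations with strong features contribute more to the overall similarity score.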

3.8 Execution time

Table 7 presents the execution time of the different algorithms, which indicates the computational time complexity of the enhancement techniques [15]. All the experiments were performed on a computer with an Intel i3 1.7 GHz processor, 4 GB RAM and Matlab 2018a. From this table, it has been observed that the HE and ESIHE techniques take the least time to process the images among the existing techniques. The BBHE, DSIHE and MMBEBHE algorithms require almost the same time to generate the enhanced images. However, these techniques are not adaptive in nature, and they do not use any optimization technique to find optimal parameters. Their execution time is therefore much lower than that of optimization based techniques such as PSO and DSO. Finally, the execution time of the proposed technique is compared with that of a PSO based image enhancement technique. It has been found that the proposed method takes less time than the PSO based technique. This is attributed to the position updating strategy of the DSO algorithm and the simplicity of the proposed methodology.

3.9 Convergence performance

Fig. 11a–d shows the convergence performance of the proposed method for images 1–4. The convergence performance shows how fast the global optimum is reached, i.e., the minimum number of iterations required to reach the best value, which here is the maximum entropy value. In each iteration, the fitness (entropy) value is measured using (3). From these convergence plots, it has been observed that, for image enhancement, DSO requires far fewer iterations than PSO to converge and reach the maximum fitness value. In PSO, only position and velocity updating strategies are used to search for the optimal solution, and no mutation operator is used. The faster convergence of DSO is attributed to the simplicity of its methodology, its position updating strategy and its mutation operator.

From the above analysis, it is noticed that the HE method enhances the contrast of the image but is not able to produce a quality image in terms of entropy, SSIM, PSNR, naturalness, FSIM and adaptiveness, although its execution time is very low. The other HE based methods, such as BBHE, DSIHE, MMBEBHE and ESIHE, enhance low-illumination images and preserve the above performance parameters to some extent. The simulation results show the strength of the proposed method, which is due to the novel sub-division of the image, the modified transfer function and the dynamic searching nature of the Directed Searching Optimization technique. From the simulated results shown in Figs. 3, 4, 5, 6, 7, 8, 9, 10 and 11 and Tables 2, 3, 4, 5 and 6, it can be concluded that the proposed method performs better than the other existing methods in terms of visual quality, entropy, SSIM, MSE, PSNR, FSIM, naturalness and adaptiveness.

4 Conclusions

In this paper, a novel mean and exposure based sub-image histogram equalization method is proposed, in which the DSO optimization algorithm is used to maximize the information content of low light grayscale images. To prevent over enhancement, histogram clipping is applied along with HE. The sub-division of the histogram plays the major role in preserving the natural appearance of the image. DSO is used to find the optimal constraint parameters that improve the information content of the enhanced image. Because these parameters are searched without human intervention, the proposed method is more adaptive. The method takes less execution time than PSO but more time than the other methods discussed; however, it produces better results than the other techniques. The visual quality of the enhanced images shows the robustness of the proposed method, and its advantage is also supported by performance parameters such as entropy, SSIM, FSIM, PSNR and MSE. The simulation results show that the Directed Searching Optimized Mean-Exposure based sub-image histogram equalization technique performs better than most of the state-of-the-art techniques.

References

Abdoli M, Nasiri F, Brault P, Ghanbari M (2019) Quality assessment tool for performance measurement of image contrast enhancement methods. IET Image Process 13(5):833–842

Al-Ameen Z (2019) Nighttime image enhancement using a new illumination boost algorithm. IET Image Process 13:1314–1320

Bae TW, Ahn SH, Altunbasak Y (2017) Automatic contrast enhancement by transfer function modification. ETRI J 39(1):76–86

Bhandari AK, Maurya S (2020) Cuckoo search algorithm-based brightness preserving histogram scheme for low-contrast image enhancement. Soft Comput 24(3):1619–1645

Bhandari AK, Maurya S, Meena AK (2018) Social spider optimization based optimally weighted Otsu thresholding for image enhancement. IEEE J Sel Top Appl Earth Obs Remote Sens, 1, 13

Chen SD (2012) A new image quality measure for assessment of histogram equalization-based contrast enhancement techniques. Digit Sig Process 22(4):640–647

Chen SD, Ramli AR (2003) Minimum mean brightness error bi-histogram equalization in contrast enhancement. IEEE Trans Consum Electron 49(4):1310–1319

Chen SD, Ramli AR (2003) Contrast enhancement using recursive mean-separate histogram equalization for scalable brightness preservation. IEEE Trans Consum Electron 49(4):1301–1309

Chen J, Yu W, Tian J, Chen L, Zhou Z (2018) Image contrast enhancement using an artificial bee colony algorithm. Swarm Evol Comput 38:287–294

Deng H, Sun X, Liu M, Ye C, Zhou X (2016) Image enhancement based on intuitionistic fuzzy sets theory. IET Image Process 10(10):701–709

Dhal KG, Das A, Ray S, Gálvez J, Das S (2020) Histogram equalization variants as optimization problems: a review. Arch Comput Methods Eng:1–26

Gonzalez RC, Woods RE (2002) Digital image processing (3rd). Prentice Hall Press, Upper Saddle River, NJ, USA

Hanmandlu M, Verma OP, Kumar NK, Kulkarni M (2009) A novel optimal fuzzy system for color image enhancement using bacterial foraging. IEEE Trans Instrum Meas 58(8):2867–2879

Isa IS, Sulaiman SN, Mustapha M, Karim NKA (2017) Automatic contrast enhancement of brain MR images using average intensity replacement based on adaptive histogram equalization (AIR-AHE). Biocybernetics Biomed Eng 37(1):24–34

Kandhway P, Bhandari AK, Singh A (2020) A novel reformed histogram equalization based medical image contrast enhancement using krill herd optimization. Biomed Sig Process Control 56:101677

Kanmani M, Narsimhan V (2018) An image contrast enhancement algorithm for grayscale images using particle swarm optimization. Multimed Tools Appl 77(18):23371–23387

Kim YT (1997) Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Trans Consum Electron 43(1):1–8

Mandal S, Mitra S, Shankar BU (2019) FuzzyCIE: fuzzy colour image enhancement for low-exposure images. Soft Comput 1–7

Mishra R, Sharma U (2013) Review of image enhancement techniques. Int J Eng Res Technol 2(8)

Muniyappan S, Rajendran P (2019) Contrast enhancement of medical images through adaptive genetic algorithm (AGA) over genetic algorithm (GA) and particle swarm optimization (PSO). Multimed Tools Appl 78(6):6487–6511

Peng F, Liu Y, Long M (2014) Reversible watermarking for 2D CAD engineering graphics based on improved histogram shifting. Comput Aided Des 49:42–50

Peng F, Zhou DL, Long M, Sun XM (2017) Discrimination of natural images and computer generated graphics based on multi-fractal and regression analysis. AEU-Int J Electron Commun 71:72–81

Ren W, Liu S, Ma L, Xu Q, Xu X, Cao X, Du J, Yang MH (2019) Low-light image enhancement via a deep hybrid network. IEEE Trans Image Process 28(9):4364–4375

Shanmugavadivu P, Balasubramanian K, Muruganandam A (2014) Particle swarm optimized bi-histogram equalization for contrast enhancement and brightness preservation of images. The Visual Computer 30(4):387–399

Sim KS, Tso CP, Tan YY (2007) Recursive sub-image histogram equalization applied to gray scale images. Pattern Recogn Lett 28(10):1209–1221

Singh K, Kapoor R (2014) Image enhancement using exposure based sub image histogram equalization. Pattern Recogn Lett 36:10–14

Singh K, Kapoor R, Sinha SK (2015) Enhancement of low exposure images via recursive histogram equalization algorithms. Optik 126(20):2619–2625

Singh H, Kumar A, Balyan LK, Singh GK (2018) Swarm intelligence optimized piecewise gamma corrected histogram equalization for dark image enhancement. Comput Electr Eng 70:462–475

SIPI USC (2016) The usc-sipi image database. 2005-07-12)[2009-08-05]. http://sipi.usc.edu/services/database/data-base.html

Sowjanya K, Kumar RP (2017) Gray level image enhancement using nature inspired optimization algorithm: an objective based approach. World J Model Simul 13:66–80

Wan M, Gu G, Qian W, Ren K, Chen Q, Maldague X (2018) Particle swarm optimization-based local entropy weighted histogram equalization for infrared image enhancement. Infrared Phys Technol 91:164–181

Wang Y, Chen Q, Zhang B (1999) Image enhancement based on equal area dualistic sub-image histogram equalization method. IEEE Trans Consum Electron 45(1):68–75

Xu Y, Liang K, Xiong Y, Wang H (2017) An analytical optimization model for infrared image enhancement via local context. Infrared Phys Technol 87:143–152

Ying Q, Qian Z, Zhang X, Ye D (2019) Reversible data hiding with image enhancement using histogram shifting. IEEE Access 7:46506–46521

Yuan LT, Swee SK, Ping TC (2015) Infrared image enhancement using adaptive trilateral contrast enhancement. Pattern Recogn Lett 54:103–108

Zarie M, Pourmohammad A, Hajghassem H (2019) Image contrast enhancement using triple clipped dynamic histogram equalization based on standard deviation. IET Image Process 13(7):1081–1089

Zhang LB, Peng F, Long M (2017) Identifying source camera using guided image estimation and block weighted average. J Vis Commun Image Represent 48:471–479

Zhang LB, Peng F, Qin L, Long M (2018) Face spoofing detection based on color texture Markov feature and support vector machine recursive feature elimination. J Vis Commun Image Represent 51:56–69

Zhang L, Zhang L, Mou X, Zhang D (2011) FSIM: a feature similarity index for image quality assessment. IEEE Trans Image Process 20(8):2378–2386

Zou D, Liu H, Gao L, Li S (2011) Directed searching optimization algorithm for constrained optimization problems. Expert Syst Appl 38(7):8716–8723

Cite this article

Acharya, U.K., Kumar, S. Directed searching optimized mean-exposure based sub-image histogram equalization for grayscale image enhancement. Multimed Tools Appl 80, 24005–24025 (2021). https://doi.org/10.1007/s11042-021-10855-7
