Abstract

Many histogram equalization (HE) techniques have been proposed for contrast enhancement. In recent years, clipped histogram equalization techniques have been developed to control the degree of over-enhancement and noise. However, these methods are not guaranteed to preserve gray levels, so the output image carries less information than the input image even though it has been enhanced. We propose two new one-to-one gray level mapping (OGM) transformation methods, namely exposure based one-to-one gray level mapping (EOGM) and median based one-to-one gray level mapping (MOGM). In EOGM and MOGM, the histogram is divided into two sub histograms based on the exposure and the median of the image, respectively. Weights for these sub histograms are calculated, and the OGM transformation function is then applied to the sub histograms using the derived weights. This transformation effectively addresses both over-enhancement and gray level loss and also ensures a uniform degree of enhancement. It preserves all the information content and structural details after enhancement and introduces no false contouring. The methods are therefore suitable for medical imaging applications, where information loss can lead to wrong diagnosis. Experimental results show the superiority of our methods over existing HE methods.

1 Introduction

HE [1] is a simple and well-known technique for enhancing the contrast of poorly contrasted images. Its major problem, however, is over-enhancement of the histogram peaks, which also amplifies noise. As a result, the image loses its natural look and the mean brightness of the output image shifts considerably. Preserving mean brightness is important in consumer electronic products such as TVs, digital cameras and camcorders. To preserve mean brightness, various histogram partitioning and equalization techniques have been proposed, viz., brightness preserving bi-histogram equalization (BBHE) [2], minimum mean brightness error bi-histogram equalization (MMBEBHE) [3], dualistic sub-image histogram equalization (DSIHE) [4], recursive mean-separate histogram equalization (RMSHE) [5] and recursive sub-image histogram equalization (RSIHE) [6]. In BBHE, the histogram is divided into two parts at the mean gray level of the image, and the two sub histograms are equalized independently by conventional HE. MMBEBHE is similar to BBHE, but the separation point is chosen so that it yields the smallest absolute mean brightness error (AMBE). Its computational complexity is high because, to find the separation point, it carries out BBHE for every candidate level from the lowest to the highest non-zero frequency gray level. DSIHE is also similar to BBHE, but its separation intensity is the median of the image. RMSHE applies BBHE recursively to each sub histogram, and RSIHE applies DSIHE recursively to each sub histogram. In both RMSHE and RSIHE, the number of sub histograms is restricted to a power of two. A problem common to these methods is that the optimal recursion level is not defined.
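The bi-histogram idea shared by BBHE and DSIHE can be sketched in a few lines of Python/NumPy. This is a minimal illustration under stated assumptions, not the reference implementation of [2] or [4]: an 8-bit image with \( L = 256 \), the lower sub histogram taken as \( [0, t] \) and the upper as \( [t + 1, L - 1] \), and the separation level \( t \) obtained by truncating the mean (BBHE) or the median (DSIHE). The function names are illustrative.

```python
import numpy as np

def bi_histogram_equalize(img, t, L=256):
    """Equalize [0, t] and [t + 1, L - 1] independently (BBHE/DSIHE-style sketch)."""
    t = int(t)                                   # assumption: truncate to an integer level
    f = np.bincount(np.asarray(img).ravel(), minlength=L).astype(np.float64)
    lut = np.zeros(L, dtype=np.int64)
    for lo, hi in ((0, t), (t + 1, L - 1)):
        seg = f[lo:hi + 1]
        if seg.sum() == 0:                       # empty sub histogram: nothing to map
            continue
        c = np.cumsum(seg) / seg.sum()           # CDF of this sub histogram only
        lut[lo:hi + 1] = np.round(lo + (hi - lo) * c).astype(np.int64)
    return lut[img]

def bbhe(img, L=256):
    return bi_histogram_equalize(img, np.mean(img), L)     # split at the mean

def dsihe(img, L=256):
    return bi_histogram_equalize(img, np.median(img), L)   # split at the median
```

Because each half is stretched only within its own range, levels at or below the separation point stay at or below it, which is what limits the mean shift compared with plain HE.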

Unlike the previous methods, dynamic histogram equalization (DHE) [7] uses local minima to separate the sub histograms. If a sub histogram contains a dominant portion, the operation is repeated recursively until no sub histogram has a dominant portion. These sub histograms are assigned new dynamic ranges, and conventional HE is then applied to them. One problem with this approach is a large mean shift, because the sub histograms are allocated to new ranges. To preserve mean brightness, brightness preserving dynamic histogram equalization (BPDHE) [8] was proposed. It first smooths the histogram with a Gaussian filter and then splits it at the local maxima. The sub histograms are allocated new dynamic ranges and equalized by conventional HE. Finally, the image brightness is normalized to preserve the mean brightness. However, if the normalization ratio is less than 1 the result is a poorly contrasted image, and if it is greater than 1 some intensities are rounded to the maximum gray level [9]. To preserve mean brightness while further improving contrast through weighted histogram modification, recursively separated and weighted histogram equalization (RSWHE) [10] was developed.

Clipped histogram equalization methods, viz., weighted thresholded histogram equalization (WTHE) [11], bi-histogram equalization with plateau level (BHEPL) [12], exposure based sub-image histogram equalization (ESIHE) [13] and median-mean based sub-image-clipped histogram equalization (MMSICHE) [14], have been proposed to control the degree of enhancement. In WTHE, the histogram is modified by a weighted and thresholded probability density function, which restricts the modified probabilities to lie within upper and lower thresholds; HE is then performed on the modified histogram. In BHEPL, the histogram is split into two at the mean, each part is clipped at a plateau level, and conventional HE is applied to the clipped histograms to obtain the equalized image.

In ESIHE, the histogram is divided at the exposure threshold [15]. The sub histograms are clipped at a threshold calculated by averaging the histogram frequencies, and conventional HE is applied to the clipped histograms to obtain the overall equalized image. MMSICHE divides the histogram at the median intensity and then divides each of these two sub histograms at its mean intensity. The clipping threshold for the four resulting sub histograms is the median of the occupied gray level frequencies. Finally, conventional HE is applied to the clipped histograms.
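The two clipping rules described above can be sketched as follows, assuming the (sub) histogram is given as an array of frequencies. Averaging over all bins of the array for the ESIHE-style rule (rather than over occupied bins only) is an assumption; the MMSICHE-style rule uses the median of the occupied (non-zero) frequencies as stated. The function name `clip_histogram` is hypothetical, and in MMSICHE it would be applied to each of the four sub histograms separately.

```python
import numpy as np

def clip_histogram(hist, rule="mean"):
    """Clip histogram frequencies at a threshold (hypothetical helper)."""
    h = np.asarray(hist, dtype=np.float64)
    if rule == "mean":                   # ESIHE-style: average frequency (assumed over all bins)
        t = h.mean()
    elif rule == "median_occupied":      # MMSICHE-style: median of non-zero frequencies
        t = np.median(h[h > 0])
    else:
        raise ValueError(f"unknown rule: {rule}")
    return np.minimum(h, t), float(t)    # clipped histogram and the threshold used
```

Clipping flattens the peaks before the CDF is formed, which limits the steep rises that cause over-enhancement; it does not, by itself, prevent neighboring low-frequency levels from merging.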

All the above methods use a cumulative distribution function (CDF) based transformation for equalization. The brightness preserving methods, viz., BBHE, DSIHE, MMBEBHE, RMSHE, RSIHE and BPDHE, preserve the mean brightness, but they give a non-uniform degree of enhancement in certain histogram regions and also introduce gray level loss. Clipped histogram equalization methods were developed to address over-enhancement, but they do not address gray level loss either. To obtain an overall enhancement with a natural look, all the gray levels need to be enhanced.

Since all HE based methods introduce gray level loss, and the lost gray levels overlap with other gray levels, the structural details of the objects to which these gray levels belong are altered, causing false contours. In this paper, we propose two methods, viz., EOGM and MOGM, to address intensity saturation, gray level loss and non-uniform degree of enhancement. These methods ensure no gray level loss and a uniform degree of enhancement while preserving the structural details of all objects in the processed images.

2 Brief Discussion and Commonalities of Different HE Methods

To explain the problems that commonly occur in HE based methods and their resultant images, we consider the Einstein image. We first consider HE operating on the whole histogram of the Einstein image, Fig. 1d.

In the histogram of the Einstein image, Fig. 1a, the rectangular box marks peaks and the oval box marks low frequency gray levels. The corresponding objects in the input image, Fig. 1d, are the suit and the face, respectively. Intensity saturation of the peaks (rectangular box) can be seen in the output image histogram, Fig. 1c. It is caused by the steep rise (rectangular box) in the transformation, Fig. 1b, and results in noise amplification, as seen in the suit in the output image, Fig. 1e. The dips in the transformation function, Fig. 1b, correspond to gray levels with zero frequency. Flat regions in the transformation, Fig. 1b, are due to gray level loss (many-to-one mapping). A wide range of low frequency gray levels (oval box) in Fig. 1a is compressed to a narrow range (oval box) in the output histogram, Fig. 1c. As a result, the face loses its natural look and appears brighter, Fig. 1e. The image degradation is thus caused by steep rises and flat regions in the transformation. General HE methods that operate on the whole histogram have these problems.

We next move to HE methods that operate on partitioned histograms, viz., recursively partitioned sub-image HE (RPHE) methods. Figure 2 shows experimental results of HE operating on partitioned histograms (here we consider a recursively partitioned HE method with recursion level 2 (\( r_{l} = 2 \)), which has \( 2^{r_{l}} = 4 \) sub histograms).

Consider the gray levels marked by the rectangular box (these belong to two sub histograms) in Fig. 2a, the histogram of the input image, Fig. 2d. These gray levels correspond to the suit in Fig. 2d. They are transformed by the marked part of the transformation, Fig. 2b, to the gray levels marked in the output histogram, Fig. 2c. The degree of enhancement in the marked output sub histograms, Fig. 2c, is clearly very small. As a result, the suit in the output image, Fig. 2e, looks almost the same as in the input image, Fig. 2d.

Recursively partitioned sub-image HE methods thus give very little or no enhancement in some sub histogram regions (especially when the gray levels in a sub histogram have frequencies above a certain threshold). In such cases, these gray levels are not enhanced, and that part of the output image looks the same as in the input image. These methods also suffer from intensity saturation and gray level loss.

Clipped histogram equalization (CHE) methods were developed to control over-enhancement and hence intensity saturation, but they also cause gray level loss. Figure 3 shows the analysis of HE operating on partitioned, clipped sub histograms (\( r_{l} = 1 \), two sub histograms).

Consider the oval markings in Fig. 3a–e. The face in the output image, Fig. 3e, loses its natural look due to the flatness of the transformation, Fig. 3b; the corresponding intensity compression can be seen in Fig. 3c.

The following observations are made from the analysis of Figs. 1, 2 and 3:

Figure 1:

- The tie is enhanced well, but the suit and background are noisy due to intensity saturation in the histogram, which corresponds to a steep rise in the transformation.
- The face loses its natural look due to flatness in the transformation, which corresponds to intensity compression in the histogram.
- There is also a loss of detail, as 56 gray levels are lost.

Entropy of input image: 6.5432; Entropy of output image: 6.4411

No. of gray levels in input image: 164

No. of gray levels in output image: 108

No. of gray levels lost: 56 (164 − 108)

No. of gray levels overlapped in output image: 15

Total no. of gray levels affected: 71 (56 + 15)

Fig. 2:

- The tie and collar are enhanced well.
- The face is enhanced well, but not smoothly (the forehead is slightly brightened).
- The middle sub histogram is not enhanced, so the suit looks the same in the input and output images.
- There is also a loss of detail, as 11 gray levels are lost.

Entropy of output image: 6.4787

No. of gray levels in output image: 153

No. of gray levels lost: 11

No. of gray levels overlapped in output image: 8

Total no. of gray levels affected: 19

Fig. 3:

- The suit and tie are enhanced well.
- The face loses its natural look and is over-brightened due to flatness in the transformation.
- There is also a loss of detail, as 39 gray levels are lost.

Entropy of output image: 6.5041

No. of gray levels in output image: 125

No. of gray levels lost: 39

No. of gray levels overlapped in output image: 12

Total no. of gray levels affected: 51

Figures 1, 2 and 3 show that many gray levels are lost or affected, so the processed image loses some or many of its details. The loss of detail is proportional to the number of gray levels affected.

*The affected gray levels also affect the objects to which they belong.
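The gray level counts in the observations above can be computed for any global mapping (each input level sent to one output level) with a small helper. This is a sketch under our own assumptions: "lost" is the number of input gray levels minus the number of output gray levels (as in 164 − 108 for Fig. 1), and "overlapped" is taken to be the number of output gray levels that receive two or more distinct input gray levels, a definition the text does not state explicitly. The helper name `gray_level_analysis` is hypothetical.

```python
import numpy as np

def gray_level_analysis(inp, out):
    """Count lost / overlapped gray levels for a global gray level mapping."""
    inp = np.asarray(inp).ravel()
    out = np.asarray(out).ravel()
    n_in = np.unique(inp).size
    n_out = np.unique(out).size
    # distinct (input level, output level) pairs actually used by the mapping
    pairs = np.unique(np.stack([inp, out], axis=1), axis=0)
    # how many distinct input levels land on each output level
    _, sources = np.unique(pairs[:, 1], return_counts=True)
    overlapped = int(np.sum(sources > 1))   # assumed definition of "overlapped"
    lost = n_in - n_out
    return {"input_levels": n_in, "output_levels": n_out, "lost": lost,
            "overlapped": overlapped, "affected": lost + overlapped}
```

For example, if four occupied input levels are mapped so that two of them share one output level, the helper reports one lost level, one overlapped level and two affected levels.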

2.1 Image Enhancement Using CDF Based Transformation Function

Consider an image \( F\left( {x,y} \right) \) captured in a low contrast environment. A well-known and commonly used method to improve its contrast is HE. The transformation used in HE and the notation used in our algorithm are reviewed below. The gray level of the image at pixel location \( \left( {x, y} \right) \) is denoted \( r \), compactly written as

\[ r = F\left( {x,y} \right) \]

where \( x = 0,1, \ldots ,M - 1 \) and \( y = 0,1, \ldots ,N - 1 \). The total number of pixels in the image is then \( MN \). The number of pixels having the same gray level \( r \) is termed its frequency, denoted \( f_{r} \). It is easy to note that

\[ \sum_{r = 0}^{L - 1} f_{r} = MN \]

The collection of all these frequencies with respect to gray level is the image histogram. Its vector representation is

\[ \varvec{H} = \left[ f_{0} ,f_{1} , \ldots ,f_{L - 1} \right] \]

The component of the histogram \( \varvec{H} \) corresponding to the \( r \)th gray level is

\[ H\left( r \right) = f_{r} \]

The normalized histogram can be viewed as a probability density function (PDF):

\[ p\left( r \right) = \frac{f_{r}}{MN} \]

From the PDF, the CDF is derived as

\[ c\left( r \right) = \sum_{k = 0}^{r} p\left( k \right) \]

HE maps an input gray level \( r \in \left[ {0, L - 1} \right] \) to an output gray level \( s \in \left[ {r_{min} , r_{max} } \right] \) using the CDF as

Different modifications to fundamental histogram equalization have been proposed. However, all CDF based transformations share some common attributes.

1. After digitization, the transformation functions exhibit steep rises at certain gray levels. A steep rise produces a region of zero frequency gray levels in the output image histogram, spanning the range of output gray levels covered by the rise.
2. Flat regions in the transformation function produce peaks in the output image histogram: the range of input gray levels covered by a flat region maps to a single output gray level.
3. Flat regions therefore cause many-to-one gray level mapping, for which no inverse mapping exists. The wider the flat region, the greater the loss of detail in the processed image. This alters the structural details of objects, causing false contouring.
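These attributes can be observed on a toy histogram. The sketch below builds a classical CDF-based HE lookup table, assuming the output spans the full range \( [0, L - 1] \) (the text allows a general \( [r_{min}, r_{max}] \)), and then inspects it at the occupied input levels: a zero step between consecutive occupied levels is a flat region (many-to-one mapping), and a large step is a steep rise that leaves output levels empty. The function names `he_lut` and `inspect_lut` are illustrative.

```python
import numpy as np

def he_lut(hist, L=256):
    """Classical CDF-based HE lookup table: r -> round((L - 1) * c(r))."""
    p = np.asarray(hist, dtype=np.float64) / np.sum(hist)   # PDF p(r)
    c = np.cumsum(p)                                         # CDF c(r)
    return np.round((L - 1) * c).astype(np.int64)

def inspect_lut(lut, hist):
    """Report flat regions and steep rises of a monotone LUT at occupied levels."""
    occupied = np.flatnonzero(hist)     # input gray levels that actually occur
    s = np.asarray(lut)[occupied]       # their output gray levels
    steps = np.diff(s)
    return {
        "merged_pairs": int(np.sum(steps == 0)),              # flat region: many-to-one
        "max_jump": int(steps.max()) if steps.size else 0,    # widest steep rise
        "skipped_output_levels": int(np.sum(np.clip(steps - 1, 0, None))),
    }

# Usage: three rare dark levels next to one dominant level.
hist = np.zeros(256, dtype=np.int64)
hist[[0, 1, 2]] = 1
hist[100] = 997
lut = he_lut(hist)            # levels 1 and 2 both map to 1; level 100 maps to 255
info = inspect_lut(lut, hist)
```

The two rare levels 1 and 2 collapse onto one output level (a flat region), while the dominant level jumps to the top of the range (a steep rise), which is exactly the pattern listed in attributes 1 to 3.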

Because of these attributes, CDF based transformations cause intensity saturation, which leads to noise amplification, and gray level loss, which in turn leads to loss of natural look in the processed image. To address these limitations, a new transformation called one-to-one gray level mapping (OGM) is proposed. Two approaches to OGM (EOGM and MOGM) are discussed in the following section.

3 Proposed OGM Transformations

In OGM transformations, the number of input gray levels is preserved. The transformation function of each method is developed from the histogram vector \( \varvec{H} \).

3.1 EOGM Transformation

Exposure [15] is an objective measure used to estimate the over- and under-exposed regions of an image. It is calculated from the mean gray level \( r_{mean} \) of the image, which can be obtained from the PDF as

\[ r_{mean} = \sum_{r = 0}^{L - 1} r\,p\left( r \right) \]

Image exposure, \( E \), can be defined as

The exposure lies in the range 0 to 1. If the exposure is greater than 0.5, the image has more over-exposed area than under-exposed area; if it is less than 0.5, the image has more under-exposed area. To divide the histogram based on exposure, the exposure is normalized to obtain the exposure threshold gray level \( r_{exposure} \) [15] as

The transformation functions are developed based on the exposure threshold gray level \( r_{exposure} \) of \( F\left( {x,y} \right) \), which separates low exposure gray levels from high exposure gray levels. The histogram \( \varvec{H} \) is then partitioned into two parts, \( \varvec{H}_{L} \) and \( \varvec{H}_{U} \), as

where

The gray levels with \( f_{r} = 0 \), \( r \in \left[ {0,L - 1} \right] \), are identified, and the non-zero frequency gray levels are obtained in each partition. Let \( C_{L} \) and \( C_{U} \) denote the number of non-zero frequency gray levels in \( \varvec{H}_{L} \) and \( \varvec{H}_{U} \), respectively. The numbers of zero frequency gray levels in \( \varvec{H}_{L} \) and \( \varvec{H}_{U} \), \( d_{L} \) and \( d_{U} \) respectively, are then

New vectors \( \varvec{N}_{L} \) and \( \varvec{N}_{U} \) are formed by removing the zero frequencies from \( \varvec{H}_{L} \) and \( \varvec{H}_{U} \). If the \( j \)th non-zero frequency gray level is at the \( i \)th position of \( \varvec{H}_{L} \), then \( \varvec{N}_{L} \) and \( \varvec{H}_{L} \) are related as

\[ N_{L} \left( j \right) = H_{L} \left( i \right) \]

where \( H_{L} \left( i \right) \ne 0 \) and \( j = 0,1,2, \ldots ,C_{L} - 1 \). Similarly, \( \varvec{N}_{U} \) and \( \varvec{H}_{U} \) are related as

\[ N_{U} \left( j \right) = H_{U} \left( i \right) \]

where \( H_{U} \left( i \right) \ne 0 \) and \( j = 0,1,2, \ldots ,C_{U} - 1 \). A new indexed histogram \( \varvec{N} \) is generated by concatenating \( \varvec{N}_{L} \) and \( \varvec{N}_{U} \) as

\[ \varvec{N} = \left[ \varvec{N}_{L} ,\varvec{N}_{U} \right] \]

The transformations are developed on \( \varvec{N} \) using weights given by

The gray levels in \( \varvec{N}_{L} \) are transformed as

Similarly, the gray levels in \( \varvec{N}_{U} \) are transformed as

The method is summarized in the following steps:

1. Calculate \( r_{exposure} \) using Eq. (10).
2. Partition \( \varvec{H} \) into \( \varvec{H}_{L} \) and \( \varvec{H}_{U} \) using Eqs. (12) and (13).
3. Generate \( \varvec{N}_{L} \) and \( \varvec{N}_{U} \) from \( \varvec{H}_{L} \) and \( \varvec{H}_{U} \) using Eqs. (16) and (17).
4. Derive the weights \( \alpha_{L} \) and \( \alpha_{U} \) using Eqs. (19) and (20).
5. Apply the weights to \( \varvec{N}_{L} \) and \( \varvec{N}_{U} \) to obtain the transformed output as in Eqs. (21) and (22).
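Steps 1 to 3 can be sketched in Python/NumPy as follows. The exposure formulas and the split boundary used here are assumptions modeled on the ESIHE formulation [13], [15] (\( E = r_{mean}/L \), \( r_{exposure} = L(1 - E) \) rounded to an integer, and \( r_{exposure} \) assigned to \( \varvec{H}_{L} \)); they may differ in detail from Eqs. (9), (10), (12) and (13). The weights and transforms of steps 4 and 5 are not reproduced. Function names are hypothetical.

```python
import numpy as np

def exposure_threshold(hist, L=256):
    """Assumed ESIHE-style exposure E = r_mean / L and threshold L * (1 - E)."""
    p = np.asarray(hist, dtype=np.float64) / np.sum(hist)   # PDF p(r)
    r_mean = float(np.sum(np.arange(L) * p))                  # mean gray level
    E = r_mean / L
    r_exposure = int(round(L * (1.0 - E)))
    return E, min(max(r_exposure, 0), L - 1)                  # keep inside [0, L-1]

def split_and_compact(hist, r_t):
    """Split H at r_t, drop zero-frequency levels, concatenate N = [N_L, N_U]."""
    H = np.asarray(hist)
    H_L, H_U = H[: r_t + 1], H[r_t + 1 :]     # assumed boundary: r_t belongs to H_L
    idx_L = np.flatnonzero(H_L)                # positions i with H_L(i) != 0
    idx_U = np.flatnonzero(H_U)
    N_L, N_U = H_L[idx_L], H_U[idx_U]          # N_L(j) = H_L(i), N_U(j) = H_U(i)
    counts = {"C_L": idx_L.size, "C_U": idx_U.size,
              "d_L": H_L.size - idx_L.size, "d_U": H_U.size - idx_U.size}
    # also return the absolute gray level of every entry of N_L and N_U
    return np.concatenate([N_L, N_U]), idx_L, idx_U + r_t + 1, counts
```

Keeping the absolute positions alongside \( \varvec{N} \) is what makes a one-to-one mapping easy to apply afterwards: the \( j \)th entry of \( \varvec{N}_{L} \) or \( \varvec{N}_{U} \) can be sent to its new gray level and the result written back through a lookup table.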

3.2 MOGM Transformation

MOGM is the same as EOGM except that the histogram is partitioned at the median gray level of the image, \( r_{median} \). The steps of this method are:

1. Calculate \( r_{median} \).
2. Use \( r_{median} \) to partition \( \varvec{H} \) into \( \varvec{H}_{L} \) and \( \varvec{H}_{U} \) using Eqs. (12) and (13).
3. Generate \( \varvec{N}_{L} \) and \( \varvec{N}_{U} \) from \( \varvec{H}_{L} \) and \( \varvec{H}_{U} \) using Eqs. (16) and (17).
4. Derive the weights \( \alpha_{L} \) and \( \alpha_{U} \) using Eqs. (19) and (20), with \( r_{exposure} \) replaced by \( r_{median} \).
5. Apply the weights to \( \varvec{N}_{L} \) and \( \varvec{N}_{U} \) to obtain the transformed output as in Eqs. (21) and (22).
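Step 1 of MOGM can be sketched as taking the smallest gray level whose CDF reaches 0.5. This tie-breaking convention is an assumption; the text does not specify how \( r_{median} \) is rounded. The function name `median_gray_level` is hypothetical.

```python
import numpy as np

def median_gray_level(hist):
    """Smallest gray level r with c(r) >= 0.5 (assumed median convention)."""
    c = np.cumsum(np.asarray(hist, dtype=np.float64)) / np.sum(hist)   # CDF c(r)
    return int(np.searchsorted(c, 0.5, side="left"))

# Usage: five pixels; the CDF first reaches 0.5 at gray level 20.
hist = np.bincount(np.array([10, 10, 20, 30, 40]), minlength=256)
r_median = median_gray_level(hist)
```

The remaining steps then proceed exactly as for EOGM, with \( r_{median} \) used as the split level.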

Image enhancement based on the OGM transformation function has the following characteristics.

1. The transformation function has no steep rise.
2. It is strictly monotonic. Since there are no flat regions in the transformation function, no gray level loss or gray level overlap occurs. Hence there is no information loss, the structural details of objects are preserved in the processed image, and the processed image is free from false contouring.
3. Inverse mapping is possible.
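The consequences of strict monotonicity can be checked numerically. The mapping below is a hypothetical placeholder, not the EOGM/MOGM transform of Eqs. (21) and (22): it simply spreads the occupied gray levels evenly over \( [0, L - 1] \) in their original order. Any strictly increasing map of the occupied levels behaves the same way: the number of gray levels is unchanged, no two input levels share an output level, and an inverse lookup recovers the input exactly.

```python
import numpy as np

def spread_occupied_levels(img, L=256):
    """Hypothetical one-to-one map: k-th occupied level -> round(k*(L-1)/(K-1))."""
    levels = np.unique(img)                          # occupied levels, ascending
    K = levels.size
    targets = np.round(np.arange(K) * (L - 1) / max(K - 1, 1)).astype(np.int64)
    lut = np.zeros(L, dtype=np.int64)
    lut[levels] = targets                            # forward map
    inverse = dict(zip(targets.tolist(), levels.tolist()))   # output -> input
    return lut[img], targets, inverse

img = np.array([[3, 3, 7], [7, 7, 200]], dtype=np.uint8)
out, targets, inverse = spread_occupied_levels(img)
assert np.all(np.diff(targets) > 0)                  # strictly increasing, no flat region
assert np.unique(out).size == np.unique(img).size    # no gray level lost
restored = np.vectorize(inverse.get)(out)
assert np.array_equal(restored, img)                 # inverse mapping exists
```

Because occupied levels are spaced at least one gray level apart after rounding, the map cannot merge levels; it only relabels histogram bins, so the bin frequencies (and hence the entropy) are unchanged.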

4 Simulation Results

The results of the proposed methods, EOGM and MOGM, are compared with those of existing histogram equalization methods, viz., HE, RMSHE (\( r_{l} = 2 \)), RSIHE (\( r_{l} = 2 \)), RSWHE (\( r_{l} = 2 \) with mean partitioning), ESIHE and MMSICHE. Two widely used image quality measures (IQM), viz., entropy and absolute mean brightness error (AMBE), are used to evaluate the performance of these algorithms. We also use gray level analysis tables to better understand the causes of image quality degradation. In addition, gradient magnitude similarity deviation (GMSD) [16], an efficient perceptual similarity index, is used to measure the structural similarity between the enhanced image and the input image.

Ten test images, viz., Einstein, girl, seeds, copter, jet, house, Foster city, arctic hare, butterfly and kodim21, are considered, and the IQM are tabulated in the following subsections.

4.1 Entropy Assessment

Entropy measures the richness of detail in an image. It is calculated as

\[ Ent = - \sum_{r = 0}^{L - 1} p\left( r \right)\log_{2} p\left( r \right) \]

The entropy values of the original and processed images are presented in Table 1. Tables 2 and 3 give the gray level analysis of the Einstein (Fig. 4a) and girl (Fig. 7a) images, respectively, in terms of the number of gray levels lost and the number of gray levels overlapped in the processed image. In all the other methods (HE, RMSHE, RSIHE, RSWHE, ESIHE and MMSICHE), some gray levels are lost and overlap with existing gray levels, causing loss of information and reduced entropy even though the image has been enhanced. This loss of detail can mainly be observed in the face and hair of the Einstein (Fig. 4b–g) and girl (Fig. 7b–g) images. It can also be observed in the images of Figs. 1b–g, 4b–g and 7b–g in ESM, at some of the objects marked in Figs. 1j, 4j and 7j in ESM.

The proposed methods (EOGM and MOGM) preserve the entropy because they use one-to-one mapping transformations, and they preserve the details in the image because there is no gray level loss. This can be observed in Figs. 4h, i and 7h, i and in Figs. 1h, i, 4h, i and 7h, i in ESM for the Einstein, girl, seeds, copter and jet images, respectively.
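The entropy measure can be computed from the normalized histogram as sketched below. Base-2 logarithms (entropy in bits) are assumed; this is consistent with the values of about 6.5 reported for an image with 164 gray levels, whose maximum possible entropy is \( \log_{2} 164 \approx 7.36 \) bits. The function name `entropy_bits` is illustrative.

```python
import numpy as np

def entropy_bits(img, L=256):
    """Shannon entropy in bits of the gray level distribution (0 log 0 = 0)."""
    p = np.bincount(np.asarray(img).ravel(), minlength=L) / np.size(img)
    p = p[p > 0]                       # empty bins contribute nothing
    return float(-np.sum(p * np.log2(p)))
```

Entropy depends only on the multiset of bin probabilities, so relabeling gray levels one-to-one leaves it unchanged, whereas merging two occupied levels always lowers it.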

4.2 Brightness Preservation

Absolute mean brightness error (AMBE) is a widely used measure of brightness preservation. Preserving brightness after enhancement is essential for consumer electronic products. AMBE is calculated as

\[ AMBE = \left| r_{meanx} - r_{meany} \right| \]

where \( r_{meanx} \) is the mean of the input image and \( r_{meany} \) is the mean of the output image. In Table 4, the values marked in bold represent low AMBE. The proposed methods do not show much brightness deviation: the mean shifts by about eight gray levels between the input and output images. Visual analysis shows, however, that a low AMBE does not always guarantee better image quality. Consider the Einstein image. RSWHE gives the best brightness preservation among the HE methods, but visually, Fig. 4e, the coat is not enhanced well and looks the same as in the input image, Fig. 4a. Conventional HE gives the next lowest AMBE, but visually, Fig. 4b, the image has lost many details in the face, coat and background, has lost its natural look and is too bright. For the proposed OGM methods, the AMBE is slightly higher than for most of the other methods (Table 4), but Fig. 4h, i shows that all the objects, such as the coat, hair, face and background, are enhanced well and look more natural than with the other methods. For the other images as well, Fig. 6h, i and Figs. 1h, i, 4h, i and 7h, i in ESM, all structural details are well enhanced without false contouring and look more natural than in the images processed by the other HE methods.
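AMBE reduces to a difference of image means; a minimal sketch (the function name `ambe` is illustrative):

```python
import numpy as np

def ambe(inp, out):
    """Absolute mean brightness error |mean(input) - mean(output)|."""
    return abs(float(np.mean(inp)) - float(np.mean(out)))
```

For instance, an output in which every pixel is eight gray levels brighter than the input has an AMBE of 8, the size of the mean shift reported above for the OGM methods. AMBE says nothing about how the contrast is distributed, which is why it has to be read together with the visual and structural measures.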

4.3 Assessment of GMSD

HE based methods introduce gray level loss in the output image, so some of the visual content is lost. The lost gray levels affect the objects to which they belong, altering their structural details and causing false contouring and a patchy look in the output images. To evaluate the structural similarity between the input and enhanced images, we therefore use gradient magnitude similarity deviation (GMSD) [16], a simple and efficient metric. GMSD is a full reference image quality assessment method that has been reported [16] to outperform other state-of-the-art full reference methods, viz., PSNR, information fidelity criterion (IFC), geometric structure distortion (GSD), gradient structure similarity (G-SSIM), structural similarity (SSIM), visual information fidelity (VIF), most apparent distortion (MAD), multi-scale SSIM (MS-SSIM), gradient similarity (GS), gradient magnitude similarity mean (GMSM), information content weighted SSIM (IW-SSIM) and feature similarity (FSIM).

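The pixel-wise gradient magnitude similarity (GMS) is given by

\[ GMS\left( i \right) = \frac{2m_{r} \left( i \right)m_{d} \left( i \right) + c}{m_{r}^{2} \left( i \right) + m_{d}^{2} \left( i \right) + c} \]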

where \( m_{r} \) and \( m_{d} \) are the gradient magnitudes of the reference and distorted images, respectively. Here the input image is taken as the reference image and the enhanced image as the distorted image. The constant \( c \) is set to 0.0026. The gray level analysis tables show that the HE methods (HE, RMSHE, RSIHE, RSWHE, ESIHE and MMSICHE) have many affected gray levels. These affected gray levels affect the objects to which they belong: the more gray levels are affected, the greater the structural deviation of the output image. Since all the HE methods have some affected gray levels, the corresponding output images show structural changes. The standard deviation of GMS, GMSD, is given by

\[ GMSD = \sqrt {\frac{1}{K}\sum_{i = 1}^{K} \left( GMS\left( i \right) - GMSM \right)^{2} } ,\quad GMSM = \frac{1}{K}\sum_{i = 1}^{K} GMS\left( i \right) \]

where \( K \) is the number of pixels in the GMS map.

The lower the GMSD, the more similar the input and output images. The entropy (Table 1), gray level analysis (Tables 2 and 3) and GMSD (Table 5) results are closely correlated: fewer affected gray levels mean less loss of detail, higher entropy and less loss of structural detail, which is reflected in a lower GMSD. A low GMSD therefore requires few affected gray levels and high entropy.
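A simplified GMSD computation is sketched below. Following [16], gradients are taken with 3 × 3 Prewitt operators (scaled by 1/3), and \( c = 0.0026 \) is used with intensities assumed to be scaled to \( [0, 1] \). Unlike [16], this sketch omits the 2 × 2 average-and-downsample pre-processing step and replicates edge pixels at the border, so its values should not be expected to match published GMSD scores exactly. Function names are illustrative.

```python
import numpy as np

def prewitt_magnitude(img):
    """Gradient magnitude with 3x3 Prewitt operators (scaled by 1/3), edge padding."""
    x = np.pad(img, 1, mode="edge")
    gx = (x[:-2, :-2] + x[1:-1, :-2] + x[2:, :-2]
          - x[:-2, 2:] - x[1:-1, 2:] - x[2:, 2:]) / 3.0
    gy = (x[:-2, :-2] + x[:-2, 1:-1] + x[:-2, 2:]
          - x[2:, :-2] - x[2:, 1:-1] - x[2:, 2:]) / 3.0
    return np.sqrt(gx ** 2 + gy ** 2)

def gmsd(reference, distorted, c=0.0026):
    """Simplified GMSD: standard deviation of the pixel-wise GMS map (no downsampling)."""
    r = np.asarray(reference, dtype=np.float64) / 255.0   # assume 8-bit input
    d = np.asarray(distorted, dtype=np.float64) / 255.0
    m_r, m_d = prewitt_magnitude(r), prewitt_magnitude(d)
    gms = (2.0 * m_r * m_d + c) / (m_r ** 2 + m_d ** 2 + c)   # GMS(i)
    return float(np.std(gms))   # population std = sqrt(mean((GMS - GMSM)^2))
```

Since only gradients enter the formula, a uniform brightness shift leaves GMSD at (numerically) zero, while merging gray levels flattens local gradients in some regions but not others, which raises the spread of the GMS map.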

For a better analysis of the role of GMSD in assessing the preservation of structural details and false contouring of objects, consider the jet image, Fig. 7a in ESM.

The HE methods have many affected gray levels. This results in high GMSD and produces false contours at the marked objects numbered 1 and 2 (Fig. 7j in ESM). The images processed by HE, RSIHE and MMSICHE, Fig. 7b, d and g in ESM respectively, have lost shadow details and show false contouring at marked objects 1 and 2 (Fig. 7j in ESM). They also have strong background noise, resulting from saturation of gray levels in the histograms, Fig. 9b, d and g in ESM. The images produced by RMSHE and RSWHE, Fig. 7c, e in ESM, are fairly well enhanced at these objects, but they introduce background noise. In the ESIHE processed image, Fig. 7f in ESM, the marked objects are clearly visible, but the whole jet looks noisy and there is also background noise. A common issue for all the above HE based methods is that the uniform background of the input image becomes non-uniform and noisy in the output image.

Some of the HE methods, viz., RMSHE, RSIHE, RSWHE and MMSICHE, give better enhancement than all the other methods at the objects marked with ovals (the number 25 and the > symbol are clearly seen), but at the cost of other objects. Thus they fail to give an overall enhancement with a pleasing look.

The HE methods have many affected gray levels (see Tables 2 and 3, and Tables 1, 2 and 3 in ESM). This results in high GMSD and produces false contours at some or all of the marked objects, Figs. 4b–g and 7b–g and Figs. 1b–g, 4b–g and 10b–g in ESM. RSWHE and the CHE methods, viz., ESIHE and MMSICHE, have fewer affected gray levels than HE, RMSHE and RSIHE, giving a relatively better look and lower GMSD. However, none of these methods preserves the original look of all objects in the image; each fails at some objects due to compression and saturation of intensities.

Although the proposed OGM methods do not give results as good as RMSHE, RSIHE, RSWHE and MMSICHE at the oval-marked objects in the jet image, Fig. 7a in ESM, they give a uniform degree of enhancement for all objects in the image (objects numbered 1 and 2, oval-marked objects and other unmarked objects), including the background; see Fig. 7h, i in ESM. Thus the OGM methods give an overall enhancement with a pleasing look and without a non-uniform background, preserving all structural details (no structural changes at any marked or unmarked object after enhancement), Fig. 7h, i in ESM, and resulting in lower GMSD, Table 5. For the other images, viz., Einstein, girl, seeds and copter, the OGM methods likewise do not alter the structural details of any object, producing naturally enhanced images with an overall pleasing look that are free from false contouring, Figs. 4h, i and 7h, i and Figs. 1h, i and 7h, i in ESM.

4.4 Assessment of Visual Quality

To assess visual quality, we consider two test images, viz., Einstein and girl. Visual quality is an important criterion for analyzing noise amplification, over-enhancement, artifacts such as patches, and unnatural look in the processed images. It is judged by analyzing the input and output histograms and the intensity transformation. The HE, RMSHE, RSIHE, RSWHE, ESIHE and MMSICHE methods are compared with EOGM and MOGM.

Consider the Einstein image, Fig. 4a. In HE there is over-enhancement due to intensity saturation in the middle region of the histogram, Fig. 6b, which results in noise amplification in the suit, Fig. 4b. A steep rise can be observed in the transformation, Fig. 5a. Figure 5a also has a flat region, which causes gray level loss and loss of natural look in the face, Fig. 4b. In the RMSHE and RSIHE processed histograms, Fig. 6c, d, there is intensity saturation around gray level 170 as well as intensity compression, which makes the face look patchy, Fig. 4c, d; these correspond to a steep rise and a flat region in the transformations, Fig. 5b, c. In RSWHE there is very little enhancement in the sub histogram region around gray level 100, Fig. 6e, so the suit is barely enhanced, Fig. 4e, and looks almost the same as in Fig. 4a. In addition, the leftmost gray level is saturated, Fig. 6e.

In ESIHE there is slight intensity saturation around gray level 170 and also slight intensity compression, Fig. 6f. As a result, the face is brighter and noisy and loses its natural look, Fig. 4f. The MMSICHE image, Fig. 4g, shows the combined effect of the RSIHE problem (steep rise and flat region) and the RSWHE problem (very little enhancement in a sub histogram region): the face looks noisy and patchy, and the suit is barely enhanced. In EOGM and MOGM, the transformations have no steep rises or flat regions, Fig. 5g, h, which results in a uniform distribution of gray levels with no gray level loss. Accordingly, there is no intensity saturation or intensity compression in the histograms, Fig. 6h, i. Hence all the objects, viz., hair, face, suit, collar and background, are well enhanced and retain a natural look, Fig. 4h, i.

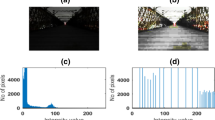

For the girl image, Fig. 7a, there is over-enhancement and noise amplification in the face and background of the HE, RMSHE and RSIHE processed images, Fig. 7b–d, due to steep rises and flat regions in the transformations, Fig. 8a–c. In ESIHE and MMSICHE, over-enhancement at the peaks is controlled to a certain degree, but there is slight intensity saturation around gray level 240, Fig. 9f, g, which leads to slight noise in the background, Fig. 7f, g. The near-flat regions in the transformations, Fig. 8e, f, also cause loss of natural look in the face. The RSWHE image, Fig. 7e, looks better than the images of all the other methods, but it also introduces slight noise amplification in the background, and the middle sub histograms (around gray level 100) are barely expanded, Fig. 9e, so the shirt looks the same in the input and output images. The EOGM and MOGM processed images, Fig. 7h, i, are enhanced and have a natural look, because the gray levels in the histograms, Fig. 9h, i, are enhanced to a uniform degree without any gray level loss. This can be observed in the shirt, hair, face and background regions of the girl images.

5 Conclusion

In this paper two novel and most efficient methods viz., EOGM and MOGM for contrast enhancement are presented to preserve the entropy. In EOGM and MOGM methods, histogram is divided into two sub histograms based on exposure and median intensities of the images respectively. Image dependent weights for these sub histograms are calculated and then OGM transformation function is applied to these sub histograms. Finally these transformed sub images are combined into one to get enhanced image. In HE based methods many gray levels are affected due to gray level loss and gray level overlap, thus causing loss of picture details, resulting in poor visual quality and reduced entropy. This is resulting in altered structural details, causing false contouring of some of the objects in the image, resulting in high GMSD.

The experimental results show that the proposed methods outperform all the compared existing methods in terms of entropy and visual quality, while providing a uniform degree of enhancement. Because the OGM methods preserve all the gray levels and have no flat regions in their transformations, they achieve natural enhancement without loss of picture details. Their histograms show no intensity compression, since there is no gray level overlap, so structural details remain unaltered even after enhancement. Hence the OGM methods are free from false contouring and yield lower GMSD. Since the proposed methods also produce a low brightness deviation between the input and enhanced images, they are well suited for consumer electronic products.
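The three quantities used in these conclusions can be computed as sketched below. Entropy is the Shannon entropy of the gray-level histogram. Brightness deviation is measured here as the absolute mean brightness error (AMBE), which is an assumption about the intended measure. `gmsd` is a simplified re-implementation of Xue et al.'s gradient magnitude similarity deviation: a 2×2 average with downsampling by 2, 3×3 Prewitt gradients, the constant c = 170 for 0 to 255 intensities, and standard-deviation pooling. Its zero padding and cropping are assumptions and may differ from the authors' released code. All function names are hypothetical.

```python
import numpy as np

def entropy(img, L=256):
    """Shannon entropy (bits) of the gray-level histogram."""
    p = np.bincount(img.ravel(), minlength=L) / img.size
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())

def ambe(inp, out):
    """Absolute mean brightness error |E[X] - E[Y]|."""
    return float(abs(inp.astype(float).mean() - out.astype(float).mean()))

def _filter3(x, k):
    """3x3 correlation with zero padding, same output size."""
    p = np.pad(x, 1)
    h, w = x.shape
    out = np.zeros((h, w))
    for i in range(3):
        for j in range(3):
            out += k[i, j] * p[i:i + h, j:j + w]
    return out

def gmsd(ref, dist, c=170.0):
    """Simplified GMSD for 0..255 images; lower means more similar structure."""
    def grad_mag(x):
        x = x.astype(float)
        h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
        x = x[:h, :w]
        # 2x2 average followed by downsampling by 2
        x = 0.25 * (x[0::2, 0::2] + x[1::2, 0::2] + x[0::2, 1::2] + x[1::2, 1::2])
        kx = np.array([[1.0, 0.0, -1.0]] * 3) / 3.0  # Prewitt, horizontal
        return np.hypot(_filter3(x, kx), _filter3(x, kx.T))
    m1, m2 = grad_mag(ref), grad_mag(dist)
    gms = (2.0 * m1 * m2 + c) / (m1 ** 2 + m2 ** 2 + c)  # local similarity map
    return float(gms.std(ddof=1))                         # deviation pooling

ramp = np.tile(np.arange(256, dtype=np.uint8), (16, 1))
merged = (ramp // 2) * 2  # many-to-one: pairs of levels merged
inverted = 255 - ramp     # one-to-one: a permutation of the levels
print(entropy(ramp), entropy(merged), entropy(inverted))  # 8.0 7.0 8.0
print(ambe(ramp, merged), ambe(ramp, inverted))           # 0.5 0.0
print(gmsd(ramp, ramp))                                   # 0.0
```

Any one-to-one mapping leaves the set of histogram probabilities unchanged, so entropy is preserved exactly. Merging levels always lowers it. Note that entropy alone does not capture brightness shift: the inverted ramp keeps full entropy but would be a poor enhancement result, which is why AMBE and GMSD are reported alongside it.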

References

Gonzalez RC, Woods RE (2012) Digital image processing

Kim YT (1997) Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Trans Consum Electron 43(1):1–8

Chen SD, Ramli AR (2003) Minimum mean brightness error bi-histogram equalization in contrast enhancement. IEEE Trans Consum Electron 49(4):1310–1319

Wang Y, Chen Q, Zhang B (1999) Image enhancement based on equal area dualistic sub-image histogram equalization method. IEEE Trans Consum Electron 45(1):68–75

Chen SD, Ramli AR (2003) Contrast enhancement using recursive mean-separate histogram equalization for scalable brightness preservation. IEEE Trans Consum Electron 49(4):1301–1309

Sim KS, Tso CP, Tan YY (2007) Recursive sub-image histogram equalization applied to gray scale images. Pattern Recogn Lett 28(10):1209–1221

Abdullah-Al-Wadud M, Kabir MH, Dewan MA, Chae O (2007) A dynamic histogram equalization for image contrast enhancement. IEEE Trans Consum Electron 53(2):593–600

Ibrahim H, Kong NS (2007) Brightness preserving dynamic histogram equalization for image contrast enhancement. IEEE Trans Consum Electron 53(4):1752–1758

Ooi CH, Isa NA (2010) Quadrants dynamic histogram equalization for contrast enhancement. IEEE Trans Consum Electron 56(4):2552–2559

Kim M, Chung MG (2008) Recursively separated and weighted histogram equalization for brightness preservation and contrast enhancement. IEEE Trans Consum Electron 54(3):1389–1397

Wang Q, Ward RK (2007) Fast image/video contrast enhancement based on weighted thresholded histogram equalization. IEEE Trans Consum Electron 53(2):757–764

Ooi CH, Kong NS, Ibrahim H (2009) Bi-histogram equalization with a plateau limit for digital image enhancement. IEEE Trans Consum Electron 55(4):2072–2080

Singh K, Kapoor R (2014) Image enhancement using exposure based sub image histogram equalization. Pattern Recogn Lett 36:10–14

Singh K, Kapoor R (2014) Image enhancement via median-mean based sub-image-clipped histogram equalization. Optik Int J Light Electron Opt 125(17):4646–4651

Hanmandlu M, Verma OP, Kumar NK, Kulkarni M (2009) A novel optimal fuzzy system for color image enhancement using bacterial foraging. IEEE Trans Instrum Meas 58(8):2867–2879

Xue W, Zhang L, Mou X, Bovik AC (2014) Gradient magnitude similarity deviation: a highly efficient perceptual image quality index. IEEE Trans Image Process 23(2):684–695

About this article

Cite this article

Eswar Reddy, M., Ramachandra Reddy, G. Exposure and Median Based One-to-One Gray Level Mapping Transformation for Entropy Preservation and Contrast Enhancement. Proc. Natl. Acad. Sci., India, Sect. A Phys. Sci. 89, 467–490 (2019). https://doi.org/10.1007/s40010-018-0497-3

DOI: https://doi.org/10.1007/s40010-018-0497-3