Abstract

In this article, passive contrast enhancement detection technique is presented using block based reciprocal singular value curve features. Contrast enhancement operation changes the natural statistics of the image and variation in singular value curve is exploited for constructing the feature vector for forgery detection. Various statistical features using reciprocal singular value curve are extracted after multilevel 2-Dimensional wavelet decomposition. Fisher criterion is employed to choose the most discriminating and to discard the redundant features. Experimental results are presented using gray scale, G component and C b image database and support vector machine classifier. Robustness against anti-forensic algorithm and JPEG compression is also presented. The algorithm outperforms all the existing feature based blind contrast enhancement detection methods in terms of detection accuracy.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Images are everywhere due to the widespread use of internet. Each minute, Instagram users post a combined 48,611 photos and Facebook users upload 208,300 photos [23]. An average of 300 hours of YouTube videos are uploaded every minute. Large number of easy to use image editing tools and extremely powerful yet cheap computational hardware have been developed recently. Because of this, digital images can be created, doctored and distributed easily.

Digital images have generally been accepted as proof of events in many areas including law enforcement, insurance processing, forensic investigation, medical imaging, criminal investigation, surveillance systems and journalism. Image forgery detection is an emerging area to ensure the trustworthiness of images by examining change in the statistics due to the forgery operation. Verifying the integrity of the image is important, as it can lead to the creation of false memories [34].

Image forgery detection approaches are divided into two types. (1) Active image tampering detection methods and (2) blind or passive forgery detection techniques which uses received image only for assessing its authenticity [7, 16, 29, 33]. Active approaches involve use of digital watermarking or digital signatures to insert secret information at the receiver [1,2,3, 19,20,21, 24]. These methods require embedding of information (secret key) in the image and it is verified at the receiver. Major difficulties with such methods are information needs to be embedded before manipulation. Whereas, passive method uses received image only for authentication (without any watermark or signature embedded). Passive methods exploit artifacts resulting in inconsistencies due to the presence of the forgery. Common type of forgeries are copy-move, splicing, resampling and image retouching.

In copy-move or image splicing doctoring, geometrical transformation and contrast enhancement are very likely to be used in order to conceal the traces of manipulations. Blind contrast enhancement detection method is proposed in this article based on reciprocal singular value curve to reveal these forgeries.

Blind contrast enhancement detection algorithms are classified into two types: (a) statistical learning (feature) based blind contrast enhancement detection techniques and (b) histogram based intrinsic fingerprint extraction which finds unique artifacts introduced due to contrast enhancement operation [11, 38], pixel brightness transform [43] and exploring inter-channel correlation [25]. First, recent statistical learning based approaches for blind contrast enhancement detection are reviewed briefly.

Various feature based approaches have been proposed to detect contrast enhancement (CE) blindly. In [4], passive CE detection approach to extract image descriptors independent of original image content is proposed. Image doctoring detection technique based on the neighborhood bit planes of the image is presented in [5]. In [8], Wavelet subband energy and statistical features are computed using multilevel 2-dimension wavelet decomposition for contrast enhancement detection.

CE detection methods using (1) binary similarity measures (BSMs) (2) image quality measures (IQMs) and (3) higher order wavelet statistics (HOWS) are proposed in [6, 10]. In [17], a method is developed using singular value decomposition (SVD) for image forgery detection. Image rows/columns linear dependency is changed due to doctoring operation and the derived features can be used to detect image manipulations.

Although the aforementioned detection algorithms are capable of detecting contrast enhancement forgery, their computational complexity is high due to the large dimension feature vector. In this paper, the properties of Reciprocal Singular Curve (RSV) are investigated for blind forgery detection. Traces of contrast changes are detected using RSV features. Input image is decomposed by applying 2-dimensional Discrete Wavelet Transform (DWT) into various subbands and LL subband is further divided into different block size. RSV based statistical features are extracted using different block sizes and to choose relevant features and to discard non-important features, Fisher criterion based feature selection approach is employed. Finally, image is classified as authentic or contrast enhanced using support vector machine (SVM) classifier. The experimental results confirm the effectiveness of the proposed method for blind image forgery detection. In addition to this, the proposed algorithm is evaluated against robustness to JPEG compression and anti-forensic algorithm.

The major advantages of the proposed technique can be summarized as: 1) good detection accuracy with low dimension feature vector (2) local feature extraction technique resulting in better detection compared to other statistical learning methods (3) method presented is independent of type of image resulting in similar detection accuracy when compared between gray and G component images.

The structure of the paper is organized as follows. Section 2 presents the review of singular value decomposition and reciprocal singular curve. Proposed scheme for blind contrast enhancement detection is described in Section 3. 2D-DWT is discussed in Section 4. Contrast enhancement detection and feature extraction process is discussed in Section 5. Fisher criterion and SVM classifier configuration used for evaluation of the proposed algorithm is presented in the Section 5. Section 6 summarizes the simulation results and finally, Section 7 concludes the paper.

2 Singular value decomposition

In linear algebra, the singular value decomposition (SVD) is one of the most powerful matrix factorization technique used in signal processing applications. In image processing, SVD is used to obtain diagonal matrix from the decomposed image. Singular values are the ordered entries of the diagonal matrix. Few singular values with higher magnitude are enough to represent useful information of an image. SVD has been used in image watermarking [32, 37], steganalysis [18, 31] and passive image forgery detection [17, 41].

In SVD transformation, a matrix A = [A i,j ] of size N × N can be decomposed into the product of three matrices, where 1 ≤ i,j ≤ n. SVD for a matrix A is represented as,

where U and V are orthogonal matrices representing left and right singular vectors respectively and S is a diagonal matrix with rank r. S singular values are the non-negative square roots of the eigenvalues of A T A, denoted by σ where,

Singular value decomposition has following important properties [12, 26, 42]:

-

1.

σ 1 ≥ σ 2 ≥ σ 3 ≥… ≥ σ r ≥ 0.

-

2.

2 − D image luminance component is represented by singular values.

-

3.

Small changes in the singular values will not result in larger variance variation of image.

-

4.

$$ E=\sum\limits_{i,j=1}^{n} \mid A_{i,j} \mid^{2} = \sum\limits_{i=1}^{n} {\sigma_{i}^{2}} $$(3)

Wang and Ping [41] proposed a blind resampling and interpolation detection method using SVD. Singular value decomposition is used to analyze the statistical changes brought into the linear dependencies among image pixels. 44-D feature vector consists of the mean and variance of the number of zero singular values at block level and index level are extracted and SVM classifier is used for forgery detection. Various manipulations including scaling (up-scaling and down-scaling), rotation, brightness, blurring and contrast are detected using correlation among the rows and columns [17]. Mean of normalized singular values of different block sizes (3 to 21) are used for feature extraction.

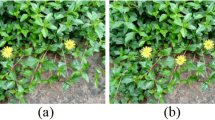

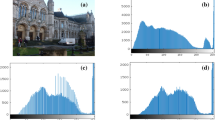

In this article, features of singular values are illustrated to investigate the contrast enhancement operation. In this experiment, an original and two corresponding contrast enhanced images are selected randomly from UCID database [36] which are depicted in Fig. 1. In the Fig. 1, image A is an original image whereas image B and C are contrast enhanced images with γ = 1 and γ = 2 respectively. Here, γ is an exponent of a constant power function used for the image contrast enhancement operation. The reciprocal singular value curve of each image is plotted in Fig. 2 as a function of 1/S i n g u l a r V a l u e(i) (1/S V (i)). As evident, the change in γ is illustrated by the exponential fall-off of the reciprocal singular value curve. This is because of the nonlinear mapping of the image pixels due to contrast enhancement operation.

Reciprocal singular value curves of the contrast enhanced images in Fig. 1

Another example is shown in Fig. 3 which consists of original image A, image B and C modified using γ = 0.2 and 0.6 respectively. Exponential fall-off of the reciprocal singular value curve is observed in the Fig. 4 similar to Fig. 2. This change in the RSV is exploited to detect the forgery operation. RSV based features are used earlier for steganlaysis [31] and no-reference image quality assessment [35].

Reciprocal singular value curves of the contrast enhanced images in Fig. 3

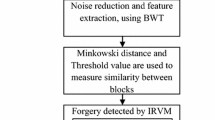

3 Proposed scheme for blind contrast enhancement detection

This section presents proposed scheme for blind contrast enhancement detection using reciprocal singular value curve features and support vector machine classifier. Entire process flow of the technique is described in the Fig. 5. The method involves two steps: training and testing (classification) step. During the training phase, RSV based statistical features from training set images are extracted. SVM classifier is trained using these extracted features. In the second step, statistical features are extracted from unknown test image and input to the trained classifier model which is used to identify authenticity of the test image.

Main computational process for the blind contrast enhancement detection is explained using the following steps:

-

Step 1: In the first step, input training set of images in RGB (Red, Green and Blue) color space of size 512 × 384 has been taken for the processing.

-

Step 2: Input RGB image is pre-processed to obtain (1) grayscale image (2) G component image and (3) C b component image. Experiments are performed using all the three types of images. Input RGB to gray scale image conversion is computed using,

$$ 0.2989 \times R + 0.5870 \times G + 0.1140 \times B $$(4)Three different types images are used to verify the effect of type of image on the detection accuracy and to identify whether the presented scheme is biased to any particular type of image.

-

Step 3: Statistical feature extraction step consists of three different sub-steps. (1) Applying forward DWT (2) block division and (3) RSV based feature extraction.

Multilevel decomposition of input training image is obtained by applying forward discrete wavelet transform resulting in Z ∈{L L,L H,H L,H H}. The LL band is used for further processing as it provides useful information to exploit contrast enhancement operation.

LL band is further subdivided into different block sizes. Here, four types of block sizes are used, N = {8 × 8, 16 × 16, 32 × 32 and 64 × 64}. Higher detection accuracy can be achieved using such kind of local statistical features.

From each block B seven features are extracted resulting in 7 × 4 = 28-D feature vector. Detailed procedure of feature extraction is explained in Section 5.1. The final feature vector based on RSV statistics is expressed as

$$ F\,=\,[ {\left\lbrace f_{1}, f_{2}, \ldots, f_{7}\right\rbrace}_{B_{1}},\, {\left\lbrace f_{1}, f_{2}, \ldots, f_{7}\right\rbrace}_{B_{2}},\, {\left\lbrace f_{1}, f_{2}, \ldots, f_{7}\right\rbrace}_{B_{3}},\, {\left\lbrace f_{1}, f_{2}, \ldots, f_{7}\right\rbrace}_{B_{4}} ] $$(5) -

Step 4: To reduce the feature vector length and which in turn reduces the computation time of the process, Fisher criterion based feature selection techniques is used. Output of this step is most important feature subset consisting of 20 features F s = {f 1,f 2,…,f 20}.

-

Step 5: Support vector machine classifier model is generated by applying step 4 output. While selecting best 20 features, SVM detection accuracy is considered.

Finally to validate the method, test image features are applied to the trained SVM classifier to classify input image as doctored (contrast enhanced) image or authentic image. The algorithm performance is measured in terms of sensitivity, specificity and total detection accuracy (T) and is computed from (6)–(8),

$$ \text{Specificity}=\frac{TN}{TN+FP} $$(6)$$ \text{Sensitivity}=\frac{TP}{TP+FN} $$(7)$$ \text{T}=\frac{TN+TP}{TP+TN+FP+FN} $$(8)TP represents the CE (manipulated) images detected CE, TN represents the correct detection rate of original (unaltered) images, FP represents the original images detected CE and FN represents CE images detected as original.

Pseudo code of the feature extraction scheme for blind contrast enhancement detection is outlined in Algorithm 1 using gray scale images. Similar steps are followed for G and C b component images.

4 2-D discrete wavelet transform (DWT)

Discrete wavelet transform is an effective multiresolution analysis tool in the area of signal and image processing. Multiresolution analysis [30] involves decomposition of the given input signal in four frequency channels of constant bandwidth on a logarithmic scale using the property of translation and dilation. Using first level decomposition, an image is decomposed into four subbands represented as LL, LH, HL and HH. LH (Low-High), HL (High-Low) and HH (High-High) represent the finest scale DWT coefficients and LL (Low-Low) stands for the coarse-level coefficients.

In two-level DWT, sub-image (LL) get decomposed into the approximation sub-band (LL2), the horizontal (LH2), the vertical (HL2) and the diagonal sub-band (HH2). Most of the energy is contained in LL band of the decomposed input image and hence this LL band provides useful band for feature extraction. From the LL sub-band coefficients seven types SVD based features are extracted per block size.

5 CE detection using RSV

Change in the image statistics is illustrated by the exponential fall-off of the RSV curve as described in Section 2. This exponential fall-off characteristics is extracted by using RSV feature vector which can be used to authenticate the image. Feature extraction and selection process is outlined in this section. As the image consists of heterogeneous regions, the input image is decomposed into different size blocks and from each block features are extracted.

5.1 Feature extraction using RSV curve

No-reference image quality assessment based on RSV curve is proposed in [35]. The technique uses no reference quality index obtained from reciprocal singular value curve which resembles inverse power function. The fall-off of the RSV curve characterizes the change in contrast and hence can be employed to detect contrast enhancement forgery. The modified image quality index is given by,

where S1 is the singular value vector, r is the number of singular values and the parameter β 1 and β 2 are the thresholds. β 1 and β 2 values will be discussed in experimental results section. To avoid the loss of discrimination ability, range of singular values are considered in this experiment. Smaller and higher singular values are removed using β.

In addition to this exponential RSV feature, six more features are extracted from the singular values. In total seven features per block size are derived from this image quality index after decomposing the image using 2-D DWT. After the experimental evaluation we found that, contrast enhancement operation can be better investigated in case of block based feature vector extraction. The technique uses various non-overlapping block size N × N. Figure 6 shows feature extraction steps. The input image is first decomposed by applying 2-D DWT. Selected LL band is divided into on-overlapping blocks of size N = 8,16,32,64. And finally, Q e x p features are extracted from these blocks. All the features are tabulated in Table 1.

The mean (μ) of all the singular values for each block size is computed as a second feature. Standard deviation, entropy, energy and variance of all the block based singular values are extracted as other features. As the presence of contrast enhancement operation alters the energy of the image and to detect block based energy and variance features are used.

5.2 Feature selection using Fisher criterion

Feature selection is an important technique used in pattern recognition and machine learning. The goal of feature selection approach is to find the best set of informative and discriminating features from the large dimension input feature set. Feature selection results in the reduction of data dimensionality by removing irrelevant and redundant features and speeding up the classification process.

Feature selection techniques are classified into three types: filter, wrapper and embedded [9, 22, 27]. Filter methods employ some statistical measures of the data for feature selection and the process is independent of classifier. Wrapper techniques include a classifier in the process of feature selection to choose the relevant features. Filter approaches are simple and more general compared to wrapper. But, the classification accuracy of wrapper is better than filter approach as it considers the prediction capability of classification algorithm. In this article, Fisher based filter approach is used to choose the optimal set of features.

Feature selection process using Fisher index is illustrated in the Fig. 7. Feature selection maps the input N-dimensional feature space into M-dimensional optimal output feature space, where M < N. In this experiment, 28-D feature vector is extracted using block based SVD and then Fisher index is used to choose non-redundant feature subset. Finally, 20 features are selected and fed to the classifier (reduction by 28.58%).

Fisher feature selection criterion is employed to analyze the separability of input feature set which is based on variance and means of data samples belonging to each class [28, 39]. Finally, based on the degree of separability called as Fisher Index subset of features are selected. The variance value of the feature corresponding to one class should be as small as possible. In addition to this, the mean of feature values for the input data of different classes should be separated as much as possible for better discrimination. The higher the Fisher index, the better the separability of the feature into different classes.

Let A and M be the two classes corresponding to authentic and manipulated image. Features extracted from these authentic and manipulated image class is represented by feature spaces F A and F M , respectively with dimensions D. In Fisher method, the Fisher index or discrimination coefficient F A M (f) is used for the assessment of the feature f to distinguish between class A and M as,

where μ A and μ M are the mean values of feature f in the class A and M respectively. Standard deviations are represented by σ A and σ M for both A and M classes. Higher values of Fisher index shows good separation capability of the feature f between the two classes.

Before applying Fisher feature selection, all the input features are first scaled to make all the ranges comparable as the various features have different dynamic ranges. Consider feature f having the range [f m i n ,f m a x ]. The the scaled feature \(\bar {f}\) is obtained using,

5.3 Support vector machine (SVM) classifier

The Support Vector Machine is a powerful supervised classifier based on structural risk minimization proposed by Vapnik [40] and Cortes [15]. SVM has been popularly used in pattern classification problem to find best separator between the two classes which are not linearly separable.

In this article, SVM is used to classify the input image into two classes (authentic and forged). Input data sample x is classified using SVM as,

where x i are the training data samples, class labels are represented by y i ∈{+1,−1}, k is the kernel function (linear, Gaussian or radial basis), α i and b are the model parameters.

6 Experimental results and discussions

6.1 Experimental setup

The performance of RSV based statistical features to evaluate blind contrast enhancement detection scheme is demonstrated in this section. For the experimental image database, 800 original RGB uncompressed images are randomly selected of size 384 × 512 from the UCID [36] database. After RGB to gray scale conversion, contrast enhancement operation is carried out on 400 randomly selected images with different γ values ranging from {0.2,0.4,…,3}. Similar procedure is followed for G and C b component images. 70% of the images are used for training the classifier and remaining 30% for testing.

6.2 Parameter tuning

As described in Section 5.1, RSV based statistical feature set of 28-D is extracted after two level DWT decomposition. Four different block size B = {8,16,32,64} is selected in this experiment. For an image of size M × N, total number of blocks are computed as \(NB=\frac {MN}{B^{2}}\) blocks of size B × B. For example, in case of 32 × 32 block size, we have 192 such blocks considering the input image size of 384 × 512. Haar DWT with two level decomposition is employed.

To compute the image quality index feature Q e x p and the subsequent features, two parameters are β 1 and β 2 are important. No variations are observed when the singular value is very small and very high for the reciprocal singular value curves of original (unaltered) and contrast enhanced images. So, range of singular values are chosen to distinguish the original from doctored image. Upper (β 2) and lower (β 1) thresholds are imposed to select medium singular values. After conducting several experiments β 1 = 40 and β 2 = 400 are selected. These values resulted in better detection accuracy.

There are two important SVM parameters in RBF kernel function that directly affects on the detection accuracy. These parameters includes (1) regularization parameter C and (2) gamma (γ) of the kernel function. Grid-search technique is employed using 10-fold cross-validation to find out the optimal parameter values of RBF kernel function [13]. 10-fold cross-validation is used to choose the parameters of C = {2−5,2−3,…,215} and γ = {2−15,2−13,…,21}.

Choosing optimum γ and C is a challenging task and can be obtained by the n-fold cross-validation technique. The grid searching algorithm steps of optimizing γ and C is shown in Fig. 8. Different combinations of pairs of (C,γ) are tested and the one with the highest cross-validation accuracy is selected as the optimal parameter values (C,γ) of RBF kernel create training model. The implementation SVM is carried out using LIBSVM package developed by Chang and Lin [13].

6.3 CE detection results using RSV statistical features

Sensitivity, specificity and total detection accuracy are the various performance measures used to evaluate the detection accuracy of the algorithm. Total detection accuracy results for all three types of images for various γ values are shown in the Table 2. These results are obtained using 28-D feature vector without applying feature selection. We found 93.48% average detection accuracy in case of gray scale image, 92.39% in case of G component image and 78.39% for C b image database. Gray scale resulted in highest detection rate whereas, C b with the minimum detection accuracy. From the table it is also evident that, detection accuracy is maximum in case of lower and higher γ values. Whereas, it decreases near γ = 0.8. The statistical alternations at γ = 0.8 are negligible and difficult to detect these changes. Otherwise, for rest of the γ values, the performance of the method is satisfactory.

Figures 9 and 10 shows the scatter plots of various features of original and doctored gray scale images. Figure 9 depicts scatter plot of original and contrast enhanced images for Q e x p , mean and energy, central moments features. Whereas, standard deviation, variance and entropy, energy features are plotted in Fig. 10. It is observed that authentic image features are clearly separable from the contrast enhanced image features.

6.4 Effect of feature selection

In order to acquire further insight into the role of the feature selection step, three different types of thresholds are applied. Important discriminating features from input 28-D feature set are selected using Fisher criterion as discussed in Section 5.2. 20 features are selected using this step after obtaining normalized Fisher index. As Fisher measure is the ranking method of feature selection, experiments are carried out by using three thresholds T 1, T 2 and T 3.

When threshold is set as T 1, all the features are selected resulting in similar detection accuracy as described in Section 6.3 with 28-D feature vector. In second threshold T 2, all input features having normalized Fisher index of greater than 10 are chosen as the best candidate feature subset. By applying threshold T 2, 20-D feature vector is obtained (removing 8 features). Input features having normalized Fisher score of grater than 5 are selected by setting third threshold T 3, resulting in 23-D feature vector (removing 5 features).

Table 3 depicts effect of different thresholds on total detection accuracy (%) as a function of different γ values for gray scale images. Average detection accuracy found was 93.48% for threshold T 1, 95.02% for threshold T 2 and 91.38% when threshold T 3 is selected. Results demonstrate the effectiveness of feature selection approach. It not only reduces feature dimensionality but also enhances the detection rate.

For G and C b component image database, impact of different thresholds on the detection rate is shown in Figs. 11 and 12 respectively. Forgery detection has been increased in both the cases after employing feature selection step. Average detection accuracy obtained are T 1 = 92.39% (78.39%), T 2 = 93.77% (79.92%) and T 3 = 89.73% (76.35%) for G (C b ) components respectively.

6.5 DWT decomposition and detection accuracy

Experiments are also carried out to identify optimum number of DWT decomposition levels before applying to SVD decomposition. Average detection accuracy is computed with 28-D feature vector and shown in the Table 4. As illustrated in the table, highest accuracy was achieved by using two-level DWT decomposition. No further increase in detection rate was observed by applying higher level decomposition.

6.6 Contrast enhancement detection after JPEG compression

JPEG compression can also be performed after the contrast enhancement operation. Robustness of the proposed algorithm against JPEG compression with different quality factors (QF) 100, 90 and 80 is evaluated in this subsection. As expected, the detection rate was dropped in all three cases.

JPEG compression is carried out after the contrast enhancement operation using QF = 100, 90 and 80 for grayscale, G component and C b image type. 200 images were used for training and 100 for testing the SVM classifier. Table 5 shows total detection accuracy obtained using grayscale image database for different γ values with feature vector dimension of 28. Average detection rate achieved was 89.58%, 92.15% and 93.13% in case of QF=80, QF=90 and QF=100 respectively. It is evident from the Table 5 that the method is robust against JPEG compression resulting in good detection accuracy.

Additional experiments are performed using C b and G component images after JPEG compression. Figures 13 and 14 depicts JPEG compression detection accuracy for G and C b component images respectively using different γ values. As JPEG is a lossy image format resulting in low detection rate. 88.54% (73.9%), 91.43 (75.40%) and 92.79% (77.18%) average detection accuracy was obtained using JPEG QF = 80, QF = 90 and QF = 100 respectively for G (C b ) component images.

Lower detection rate was noticed for lower QF (QF = 80) and it increases in higher QF (QF = 90 and 100). Grayscale and G images resulted in similar detection accuracy, whereas the detection rate is decreased in case of C b image database for all QF. Best JPEG compression detection rate (93.13%) was achieved in case of grayscale with QF = 100.

6.7 Performance against anti-forensics method

To investigate the performance of the proposed technique under anti- forensic scenario, an alternative anti-forensic method proposed in [14] is used. The passive contrast enhancement detection algorithms for global and local contrast enhancement detection are based on uncovering unique peak and gap introduced into the histogram [11, 38].

An anti-forensic method to attack the contrast enhancement detection approach is developed in [14]. Peaks and gaps introduced by the contrast enhancement forgery are avoided preserving good image quality in terms of PSNR. Internal bit depth of the images are incremented to model continuous input pixel intensity value before the contrast enhancement operation. Experiments are performed using the anti-forensic approach developed in [14] to evaluate the proposed method in this article.

Figure 15 depicts detection accuracy (%) under anti-forensic scenario as a function of different γ values for gray scale, G component and C b images. The methods still achieves good detection performance in the given anti-forensic scenario. 85.61%, 83.54% and 72.40% average detection rate was obtained in case of grayscale, G and C b component images. Highest detection rate was resulted in grayscale image type. As the anti-forensic method [14] which relies on modeling of continuous input pixel values to remove peak-gap artifacts, the proposed method does not extract any of the feature based on peaks and gaps of the histogram and hence resulting in good detection rate.

6.8 Performance comparison with existing methods

The algorithm described in this article is compared with all the existing feature based contrast enhancement detection techniques. Table 6 compares all the feature based blind contrast enhancement approaches with proposed method. Compared to [10] and [6] feature vector (FV) dimension is very small, but it is larger than [44] and [4]. In terms of detection accuracy, the method presented outperforms all the existing approaches with 95.02% accuracy.

7 Conclusion

RSV based statistical learning approach for blind contrast enhancement detection is presented in this article. The method achieves highest detection accuracy compared to all the other feature based techniques with moderate feature vector dimenionality. Block based approach for local feature extraction is used to enhance the detection rate. Fisher criterion based feature selection is employed and classification is performed using SVM. The method performs better even after JPEG compression. The detection rate is independent of the type of the image.

References

Abu-Marie W, Gutub A, Abu-Mansour H (2010) Image based steganography using truth table based and determinate array on rgb indicator. Int J Signal & Image Process 1(3):196–204

Al-Otaibi N, Gutub A (2014) 2-layer security system for hiding sensitive text data on personal computers. Lect Notes Inf Theory 2(2):151–157

Al-Otaibi N A, Gutub A A (2014) Flexible stego-system for hiding text in images of personal computers based on user security priority. In: Proceedings of international conference on advanced engineering technologies (AET-2014), pp 250–256

Avcibas I, Bayram S, Memon N, Ramkumar M, Sankur B (2004) A classifier design for detecting image manipulations. In: Proceedings of international conference on image processing, pp 2645–2648

Bayram S, Avcibas I, Sankur B, Memon N (2005) Image manipulation detection with binary similarity measures. In: Proceedings of European signal processing conference, pp 1–4

Bayram S, Avcibas I, Sankur B, Memon N (2006) Image manipulation detection. J Electron Imag 15(4):1–17

Birajdar G K, Mankar V H (2013) Digital image forgery detection using passive techniques: a survey. Digit Investig 10(3):226–245

Birajdar G K, Mankar V H (2016) Passive image manipulation detection using wavelet transform and support vector machine classifier. In: Proceedings of international conference on ICT for sustainable development. Springer, Singapore, pp 447–455

Blum A, Langley P (1997) Selection of relevant features and examples in machine learning. Artif Intell 97(1–2):245–271

Boato G, Natale F, Zontone P (2010) How digital forensics may help assessing the perceptual impact of image formation and manipulation. In: International workshop on video processing and quality metrics for consumer electronics, pps 1–6

Cao G, Zhao Y, Ni R, Li X (2014) Contrast enhancement-based forensics in digital images. IEEE Trans Inf Foren Secur 9(3):515–525

Chandra D (2002) Digital image watermarking using singular value decomposition. In: Proceedings of 45th IEEE midwest symposium on circuits and systems, pp 264–267

Chang C C, Lin CJ (2001) LIBSVM: a library for support vector machines http://www.csie.ntu.edu.tw/cjlin/libsvm

Chun-Wing K, C AO, Sung-Him C (2012) Alternative anti-forensics method for contrast enhancement. Springer, Berlin Heidelberg, pp 398–410

Cortes C, Vapnik V (1995) Support vector networks. Mach Learn 20(3):273–297

Farid H (2009) A survey of image forgery detection. IEEE Signal Process Mag 26(2):16–25

Gul G, Avcibas I, Kurugollu F (2010) SVD based image manipulation detection. In: Proceedings of 17th IEEE international conference on image processing, pp 1765–1768

Gul G, Kurugollu F (2010) SVD-based universal spatial domain image steganalysis. IEEE Trans Inf Foren Secur 5(2):349–353

Gutub A (2010) Pixel indicator technique for rgb image steganography. J Emerg Technolgoies Web Intell 2(1):56–63

Gutub A, Al-Qahtani A, Tabakh A (2009) Triple-a: secure rgb image steganography based on randomization. In: 2009 IEEE/ACS international conference on computer systems and applications, pp 400–403

Gutub A, Ankeer M, Abu-Ghalioun M, Shaheen A, Alvi A (2008) Pixel indicator high capacity technique for rgb image based steganography. In: WoSPA 2008 – 5th IEEE international workshop on signal processing and its applications, pp 1–4

Guyon I, Elisseeff A (2003) An introduction to variable and feature selection. J Mac Learn Res 3:1157–1182

http://www.cio.com (2016). Last accessed on 17 July 2016

Khan F, Gutub A A A (2007) Message concealment techniques using image based steganography. In: 4th IEEE GCC conference and exhibition, gulf international convention centre. Manamah, pp 1–4

Lin X, Li C T, Hu Y (2013) Exposing image forgery through the detection of contrast enhancement. In: 2013 IEEE international conference on image processing, pp 4467–4471

Liu R, Tan T (2002) An SVD-based watermarking scheme for protecting rightful ownership. IEEE Trans Multimed 4(1):121–128

Liu H, Yu L (2005) Toward integrating feature selection algorithms for classification and clustering. IEEE Trans Knowl Data Eng 17(4):491–502

Lu CJ, Liu LF, Luo Y X (2014) Selection of image features for steganalysis based on the fisher criterion. Digit Investig 11(1):57–66

Mahdian B, Saic S (2010) A bibliography on blind methods for identifying image forgery. Signal Process Image Commun 25(6):389–399

Mallat S (1989) The theory for multiresolution signal decomposition: the wavelet representation. IEEE Trans Pattern Anal Mach Intell 11(7):654–693

Nouri R, Mansour A (2016) Digital image steganalysis based on the reciprocal singular value curve. Multimed Tools Appl. 1–12. doi:10.1007/s11042-016-3507-y

Pandey P, Kumar S, Singh S (2014) Rightful owenship through image adaptive DWT-SVD watermarking algorithm and perceptual tweaking. Multimed Tools Appl 72 (1):723–748

Redi J A, Taktak W, Dugelay J L (2011) Digital image forensics: a booklet for beginners. Multimed Tools Appl 51(1):133–162

Sacchi D L, Agnoli F, Loftus E (2007) Changing history: doctored photographs affect memory for past public events. Appl Cogn Psychol 21(8):1005–1022

Sang Q, Wu X, Li C, Bovik A C (2014) Blind image quality assessment using a reciprocal singular value curve. Signal Process Image Commun 29(10):1149–1157

Schaefer G, Stich M (2004) UCID - an uncompressed colour image database. In: Proceedings of SPIE, storage and retrieval methods and applications for multimedia, pp 472–480

Singh D, Singh S (2016) DWT-SVD and DCT based robust and blind watermarking scheme for copyright protection. Multimed Tools Appl 1–24. doi:10.1007/s11042-016-3706-6

Stamm M, Liu K J R (2010) Forensic detection of image manipulation using statistical intrinsic fingerprints. IEEE Trans Inf Foren Secur 5(3):492–506

Staroszczyk T, Osowski S, Markiewicz T (2012) Comparative analysis of feature selection methods for blood cell recognition in leukemia. In: Perner P (ed) Machine learning and data mining in pattern recognition, vol 7376. Springer Berlin, Heidelberg, LNCS, pp 467—481

Vapnik V (1998) Statistical learning theory, 1st edn. Adaptive and learning systems for signal processing, communications, and control. Wiley

Wang R, Ping X (2009) Detection of resampling based on singular value decomposition. In: Proceedings of Fifth international conference on image and graphics, pp 879–884

Wang D, Liu S, Luo X, Li S (2013) A transcoding-resistant video watermarking algorithm based on corners and singular value decomposition. Telecommun Syst 54:359–371

Zhang X, Lyu S (2014) Blind estimation of pixel brightness transform. In: 2014 IEEE International conference on image processing (ICIP), pp 4472–4476

Zontone P, Carli M, Boato G, Natale D (2010) Impact of contrast modification on human feeling: an objective and subjective assessment. In: International Conference on image processing, pp 1757–1760

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Birajdar, G.K., Mankar, V.H. Blind image forensics using reciprocal singular value curve based local statistical features. Multimed Tools Appl 77, 14153–14175 (2018). https://doi.org/10.1007/s11042-017-5021-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-017-5021-2