Abstract

The accuracy of JPEG steganalysis depends on the features extracted from the images. This calls for extracting all features that may change during embedding. The computational complexity caused by such a large number of features necessitates feature set optimization. Existing research in JPEG image steganalysis tends to extract rich feature sets and reduce them with statistical feature reduction techniques. Compared to these techniques, genetic algorithm based optimization techniques are more promising, as they aim to converge to the global optimum rather than a local one. The objective of this paper is to implement genetic based optimization to reduce the high dimensional image features and hence obtain improved classification accuracy. The method extracts image features as co-occurrence matrices of the differences of all possible Discrete Cosine Transform (DCT) coefficients, giving a 200 × 23,230 feature matrix. These features are optimized by a nature inspired meta-heuristic, Ant Lion Optimization (ALO), which treats the features as ants that move randomly in the search space. The fitness function for the antlion to hunt the ants is proportional to the size of the traps built by the antlion. The proposed steganalyser has been tested for classification accuracy with different payloads. The classifiers implemented include Support Vector Machines (SVM), Multi Layer Perceptron (MLP) and fusion classifiers based on the Bayes, Decision template and Dempster-Shafer data fusion schemes. The results show that the highest average classification accuracy is obtained by the Bayes fusion classifier, followed by the Dempster-Shafer fusion classifier. The fusion classifiers perform better than the individual classifiers. Thus the proposed method gives better classification accuracy for JPEG steganalysis than existing methods.

1 Introduction

Image steganography is the mechanism of embedding secret information into a clean (cover) image using a stego key. The embedded image is called the stego image. Because the human eye is insensitive to slight changes in image parameters, image steganography has gained importance and has been misused for covert communication, including by terrorists. Common steganographic algorithms include JSteg, JP Hide and Seek, and OutGuess. Apart from these tools, researchers have developed many application specific steganographic algorithms.

While steganography relates to secret communication, steganalysis involves examining a suspicious image and identifying any secret message. There are two methods of image steganalysis: embedding specific and blind steganalysis. Embedding specific methods know the steganographic algorithm that was used to embed, whereas blind steganalysis has no knowledge of the embedding logic. Hence blind steganalysis is called the universal method, where the suspected image is classified by a two class (clean or stego) classifier. This classification can be based on statistical methods or computational intelligence methods [25]. While statistical blind steganalysis uses Markov, wavelet or DCT coefficients to classify an image, computational intelligence methods are based on neural networks and genetic or evolutionary algorithms [9]. The efficiency of these methods depends on the features selected from the image for classification. Researchers state that acquiring the best feature set has always been a challenge, as different steganographic algorithms change different image parameters [14, 20, 23, 29]. Recent research has presented a method of acquiring a rich set of image features that gives good classification of images but is computationally complex [12]. This creates the need to optimize the data sets for the best possible features. The most prominent statistical feature reduction methods converge to local minima and choose inappropriate features.

To overcome this challenge, this research implements nature inspired optimization techniques for image steganalysis. These are meta-heuristic algorithms that use stochastic operators to search for globally optimal values [2]. Randomness being the main characteristic of these stochastic algorithms, they search for the global optimum by creating a set of candidate solutions and then iteratively fine tuning them until a terminating condition is satisfied [28]. Recent nature inspired algorithms include the Firefly Algorithm (FA) [31], the Cuckoo Search (CS) algorithm [32], the Cuckoo Optimization Algorithm (COA) [24] and the Ray Optimization (RO) algorithm [15,16,17]. This research work implements a modified Ant Lion Optimization for steganalysis of JPEG images. The next section discusses the proposed steganalyser, followed by the extracted image features and then the ALO optimization technique. Finally the classification scheme used for steganalysis is discussed.

2 Implementation of proposed steganalyser

The proposed image steganalyser consists of four main parts. In the first part, stego images are created from clean images using the non shrinkage F5 (nsF5) algorithm. The second part extracts a rich feature set (23,230 features) from the stego and clean images. The third part optimizes this feature set to 232 features by ALO, and the fourth part performs classification. All implementations are done in MATLAB.

2.1 Image database and steganographic embedding

The images used in this research are taken from the standard BOSS (Break Our Steganographic System) database [1]. This database has full resolution images acquired from different cameras such as Panasonic, Leica, Pentax, Canon EOS and Nikon in .cr2 or .dng format and later converted into .pgm format. The original images are resized and cropped to 512 × 512. These images are made public for use by researchers in the field of steganography and steganalysis. From the images available in this standard database (http://dde.binghamton.edu/download/), 100 images are chosen and converted into .jpg format with a quality factor of 75. These 100 images are the cover (clean) images that are used to create another 100 stego images by non shrinkage F5 (nsF5) embedding. The subsequent feature extraction, optimization and classification in this research use these 200 images.

The stego images for this research work have been created with the non shrinkage F5 (nsF5) algorithm. Permutative straddling in F5 enables uniform embedding of the data with a time complexity of order O(n). The sequence of operations in F5 is: choose a DCT coefficient, permute it with a key, embed the secret data and then code it with Huffman coding. For s secret bits and code words of length n = 2^s − 1, the matrix encoding of F5 determines the embedding rate R(s), the change in embedding density E(s) and the embedding efficiency μ.
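For reference, the matrix-encoding relations in Westfeld's description of F5 can be written in the symbols used above as follows. Identifying E(s) with the change density and μ with the number of bits embedded per change is an assumption about the notation, not something the text states.

```latex
\begin{align}
R(s) &= \frac{s}{n} = \frac{s}{2^{s}-1}, \\
E(s) &= \frac{1}{n+1} = \frac{1}{2^{s}}, \\
\mu  &= \frac{R(s)}{E(s)} = \frac{s\,2^{s}}{2^{s}-1}.
\end{align}
```

As a worked instance, s = 3 gives n = 7, R = 3/7 ≈ 0.429, E = 1/8 and μ = 24/7 ≈ 3.43 bits per change.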

Coefficients that become zero due to embedding are a problem in F5, because the decoder at the receiver skips such coefficients (the shrinkage problem). Hence the modified F5 (non shrinkage F5) algorithm uses syndrome coding on the DCT coefficients before applying the F5 logic. This eliminates the shrinkage problem and is superior to other steganographic methods that use side information about the cover. The literature reports nsF5 as the best steganographic algorithm to date for JPEG images [18].

2.2 Extracted image features

The features extracted in this research form a rich set covering all possible changes in the DCT coefficients that have spatial and frequency correlations. For a JPEG image of dimension I × J, the quantized DCT coefficients form a matrix $C \in X^{I \times J}$. Each DCT coefficient $C^{p,q}_{m,n}$ represents the (p,q)-th coefficient in the (m,n)-th 8 × 8 block, where $(p,q) \in \{0,1,\ldots,7\}^2$, $m \in \{1,2,\ldots,I/8\}$ and $n \in \{1,2,\ldots,J/8\}$. For simplicity the individual elements are written as $C_{m,n}$. Matrices of absolute values, intra block differences and inter block differences are derived from C and denoted as follows.

$DC_{m,n}$ represents the difference of absolute values, $DC^{ha}_{m,n}$ is the difference between the intra block horizontal coefficients, $DC^{va}_{m,n}$ is the difference between the intra block vertical coefficients, $DC^{da}_{m,n}$ is the difference between the intra block diagonal coefficients, $DC^{he}_{m,n}$ is the difference between the inter block horizontal coefficients and $DC^{ve}_{m,n}$ is the difference between the inter block vertical coefficients. The proposed feature model is the sub model framed from the 2D co-occurrence matrices calculated from these difference coefficients. There are 10 sub models in each of the co-occurrence matrices, and the final rich feature set has 23,230 features from all possible co-occurrence combinations of DCT coefficients. The details of the features due to each of the sub bands are enumerated in Table 1.
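The construction described above can be illustrated with a minimal Python sketch. It is not the authors' MATLAB code: the function names, the clipping threshold `T` and the toy coefficient matrix are hypothetical, and for brevity the offset-1 difference ignores 8 × 8 block boundaries. An offset of 1 stands in for an intra block neighbour, and an offset of 8 reaches the same DCT mode in the neighbouring block (an inter block difference).

```python
from collections import Counter


def abs_matrix(C):
    """Element-wise absolute values of a 2D list of quantized DCT coefficients."""
    return [[abs(v) for v in row] for row in C]


def difference(A, di, dj):
    """Return A[i][j] - A[i+di][j+dj] wherever both indices are valid."""
    rows, cols = len(A), len(A[0])
    return [[A[i][j] - A[i + di][j + dj] for j in range(cols - dj)]
            for i in range(rows - di)]


def cooccurrence(D, di, dj, T=2):
    """Normalized 2D co-occurrence of (D[i][j], D[i+di][j+dj]), clipped to [-T, T]."""
    def clip(v):
        return max(-T, min(T, v))

    counts = Counter()
    rows, cols = len(D), len(D[0])
    for i in range(rows - di):
        for j in range(cols - dj):
            counts[(clip(D[i][j]), clip(D[i + di][j + dj]))] += 1
    total = sum(counts.values())
    return {pair: c / total for pair, c in counts.items()}


# Toy 8 x 16 "image" (two horizontally adjacent 8 x 8 blocks), values are made up.
C = [[(i * j) % 5 - 2 for j in range(16)] for i in range(8)]
A = abs_matrix(C)
intra_h = difference(A, 0, 1)   # horizontal neighbour (block edges ignored)
inter_h = difference(A, 0, 8)   # same mode in the next block to the right
features = cooccurrence(intra_h, 0, 1, T=2)
```

With clipping to [-T, T], each co-occurrence matrix has at most (2T + 1)² bins, which is what keeps the per-sub-model feature count bounded.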

2.3 ALO optimization

With such a large and diverse feature set, classification is expensive in both time and space. Hence the feature set is reduced by Ant Lion Optimization, a nature inspired meta-heuristic algorithm. The positions of the ants in a given iteration are the features in the search space. The characteristics of the ALO algorithm are:

- The movements of the ants and antlions are random walks in search space.
- The fitness function depends on the size of the pits built by the antlions (probability of ants getting trapped is more if the pit is larger in size).
- In each iteration an elite antlion catches a prey.
- Fitter ants are caught by the antlion (fitter features are selected).
- Antlions start building new pits by repositioning themselves to the latest prey.

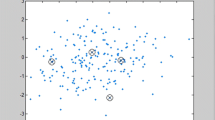

The random walk of the ants is represented mathematically as

$$X(t) = \left[\,0,\ CU\big(2s(t_1) - 1\big),\ CU\big(2s(t_2) - 1\big),\ \ldots,\ CU\big(2s(t_n) - 1\big)\right]$$

Here CU is the cumulative sum of the random function s over n iterations, t is the step of the random walk and s(t) is a random function defined as 1 if rand > 0.5 and 0 if rand ≤ 0.5, where rand is a random number in the interval [0,1]. The nature of the random walk is shown in Fig. 1 for three sets of features extracted with payload 0.5. The randomness can be seen in the fluctuation of the curves around the origin, as expected for the behaviour of ants in search space.
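The random walk described above can be sketched in a few lines of Python. This is an illustrative sketch rather than the authors' MATLAB implementation; the function name `random_walk` and the seeded generator are assumptions made for the example.

```python
import random


def random_walk(n_steps, rng):
    """Cumulative sum of +1/-1 steps starting at 0 (length n_steps + 1)."""
    walk = [0]
    for _ in range(n_steps):
        s = 1 if rng.random() > 0.5 else 0   # s(t) as defined in the text
        walk.append(walk[-1] + 2 * s - 1)    # 2s - 1 maps {0, 1} to {-1, +1}
    return walk


walk = random_walk(400, random.Random(80))   # 400 steps, arbitrary seed
```

Because each step is +1 or −1 with equal probability, the walk has zero mean and fluctuates around the origin, which matches the behaviour described for Fig. 1.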

The position of each ant is stored in a matrix for use in subsequent iterations during optimization. Its entry $A_{n,p}$ is the p-th position of the n-th ant in any iteration. There are n ants, and their p positions correspond to p features. Each ant (feature vector) is evaluated by a fitness function and the fitness values are stored in a second matrix, whose n-th entry $f(A_{n,1}, A_{n,2}, \ldots, A_{n,p})$ is the fitness value of the n-th ant.

According to the ALO algorithm, apart from the ants, antlions also hide in the search space to catch the ants. Their positions need to be tracked and are stored in another matrix, where $L_{n,d}$ is the d-th position of the n-th antlion. The n antlions have d positions for d features. The fitness values of the antlions are stored in a matrix whose n-th entry $f(L_{n,1}, L_{n,2}, \ldots, L_{n,d})$ is the fitness value of the n-th antlion.

The random walks may range widely; to keep them within the search space, the variables are normalized by min-max normalization. Here $M_n$ and $B_n$ are the minimum and maximum values of the n-th feature vector, $C^t$ is the minimum of the feature vector in the t-th iteration and $D^t$ is the maximum of the feature vector in the t-th iteration. Normalization ensures that all the feature values are in the search space. During the random walk, the ants get trapped in an antlion’s pit. Figure 2 shows the pits built by one or more antlions.
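The min-max normalization step can be sketched as below. The formula used is the standard min-max rescaling from the ALO description cited in this paper [22]; the function name and argument names are hypothetical, and mapping the walk's own minimum and maximum onto the current bounds is an assumption about the exact form used here.

```python
def normalize_walk(walk, lower, upper):
    """Rescale a random walk so that it lies inside [lower, upper].

    walk  : list of raw random-walk values for one feature
    lower : minimum allowed value in the current iteration
    upper : maximum allowed value in the current iteration
    """
    a, b = min(walk), max(walk)          # extent of the raw walk
    if a == b:                           # degenerate walk: avoid division by zero
        return [lower for _ in walk]
    scale = (upper - lower) / (b - a)
    return [(x - a) * scale + lower for x in walk]


scaled = normalize_walk([0, 1, 2, 1, 0, -1, -2], 10.0, 20.0)
```

The smallest raw value maps to the lower bound and the largest to the upper bound, so every position stays inside the search space regardless of how far the raw walk drifted.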

A pit is modelled as a hypersphere around each selected antlion, and the movement of ants around the antlion in the hypersphere is modelled as

$$C_i^t = L_n^t + C^t, \qquad D_i^t = L_n^t + D^t$$

where $C_i^t$ and $D_i^t$ are the minimum and maximum of the feature values of the i-th ant, $C^t$ and $D^t$ are the minimum and maximum among all feature values in the t-th iteration, and $L_n^t$ is the n-th antlion in the t-th iteration. When the ants fall into the trap they tend to move away, but the antlions shoot sand upwards to make them slide. This sliding behaviour of the ants is modelled by decreasing the radius of the hypersphere defined by the vectors C and D as

$$C^t = \frac{C^t}{S}, \qquad D^t = \frac{D^t}{S}$$

The parameter S defines the level of accuracy [22] and is defined as

$$S = 10^{u}\,\frac{t}{T}$$

where t is the current iteration, T is the total number of iterations, u = 2 when t/T > 0.1, u = 3 when t/T > 0.5, u = 4 when t/T > 0.75, u = 5 when t/T > 0.9 and u = 6 when t/T > 0.95. The values of C and D decrease iteratively, mimicking the sliding of ants to the bottom of the pit (the global optimal point). To increase the probability of catching the next prey (ant), the antlions update their position to that of the hunted ant if the fitness of the ant is greater than the fitness of the antlion.
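The trap bounds, the shrinking ratio S and the position update described above can be sketched as follows. The forms used (bounds offset by the antlion position, bounds divided by S, and S = 10^u · t/T) follow the ALO description cited as [22] and are an assumption about this paper's exact equations. Taking S = 1 while t/T ≤ 0.1 is also an assumption, since the text gives no value of u for that range. All function names are hypothetical.

```python
def exponent_u(t, T):
    """Return u for the current iteration, or None while t/T <= 0.1."""
    ratio = t / T
    for threshold, u in ((0.95, 6), (0.9, 5), (0.75, 4), (0.5, 3), (0.1, 2)):
        if ratio > threshold:
            return u
    return None


def sliding_ratio(t, T):
    """S = 10**u * t / T; assumed to be 1 (no shrinking) early in the run."""
    u = exponent_u(t, T)
    return 1.0 if u is None else (10 ** u) * t / T


def bounds_around_antlion(antlion, c, d, t, T):
    """Shrink the global bounds (c, d) by S and centre them on the antlion."""
    S = sliding_ratio(t, T)
    c_t, d_t = c / S, d / S
    lower = [x + c_t for x in antlion]
    upper = [x + d_t for x in antlion]
    return lower, upper


def update_antlion(antlion, antlion_fit, ant, ant_fit):
    """Move the antlion to the ant's position if the ant is fitter."""
    if ant_fit > antlion_fit:
        return list(ant), ant_fit
    return antlion, antlion_fit


lower, upper = bounds_around_antlion([1.0, 2.0], -50.0, 50.0, t=50, T=100)
```

Because S grows with both t/T and u, the interval around the antlion narrows sharply in the last iterations, which shifts the search from exploration to exploitation.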

In this research work the fitness function is the sum of squares of the feature values, and the random walk of ants and antlions is implemented with roulette wheel selection. The number of search agents (antlions) is chosen as 100, and hence the rich image feature set of size 23,230 reduces to 232 after optimization. For 100 cover images and 100 stego images, the optimized feature set is 200 × 232. This reduced feature set is given to the fusion classifier system.
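A minimal sketch of the two ingredients named above, the sum-of-squares fitness and roulette wheel selection, is given below. It is illustrative only: the function names are hypothetical, and using the fitness values directly as selection weights is an assumption, since the text does not say how the wheel is weighted.

```python
import random


def fitness(features):
    """Sum of squares of the feature values."""
    return sum(v * v for v in features)


def roulette_wheel(weights, rng):
    """Pick an index with probability proportional to its non-negative weight."""
    total = sum(weights)
    pick = rng.random() * total
    running = 0.0
    for index, w in enumerate(weights):
        running += w
        if pick < running:
            return index
    return len(weights) - 1   # guard against floating point round-off


rng = random.Random(80)
antlion_fitness = [fitness(a) for a in ([0.1, 0.2], [1.0, 2.0], [0.5, 0.5])]
chosen = roulette_wheel(antlion_fitness, rng)   # index of the selected antlion
```

Selection proportional to fitness means that fitter antlions are chosen more often, while weaker ones still have a nonzero chance of attracting an ant.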

2.4 Fusion classifier system

The identification of an image as a clean or stego image is a two class problem (a clean image is classified as 1 and a stego image is classified as 2). The classifiers used are individual classifiers (SVM and MLP) and their fusion by three schemes (Bayes, Decision template and Dempster-Shafer). Fusion classifiers are superior as they exploit the strengths of the individual classifiers while avoiding their weaknesses [19]. The original feature set is divided into 3 folds. Two folds are used for training and 1 fold is used for validation.

Support Vector Machines map the data into a high dimensional feature space and separate the classes by a hyperplane. The SVM in this research uses an RBF kernel with a penalty factor of 100 and a gamma of 10. The Multi Layer Perceptron (MLP) [30] is a feed forward network for non-linearly separable data. The MLP implemented in this research has 10 nodes with sigmoidal activation functions that map the inputs to the output response.
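To make the role of gamma concrete, the sketch below evaluates an RBF kernel for two feature vectors. It assumes the common convention k(x, y) = exp(−γ‖x − y‖²); whether the MATLAB implementation used in this work parametrizes the kernel in exactly this way is not stated in the text. The penalty factor of 100 affects training only and does not appear in the kernel value.

```python
import math


def rbf_kernel(x, y, gamma=10.0):
    """RBF kernel value exp(-gamma * ||x - y||^2) for two equal-length vectors."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)


same = rbf_kernel([0.2, 0.4], [0.2, 0.4])   # identical vectors
near = rbf_kernel([0.2, 0.4], [0.3, 0.4])   # vectors 0.1 apart
```

With gamma = 10 the kernel value falls off quickly with distance, so only training samples close to a test sample influence its classification.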

The classifier fusion methods implemented are Bayes, Decision template and Dempster-Shafer [19]. These are decision based data fusion methods that use the perceived data from many sources. Bayesian inference uses conditional probability according to Bayes' rule in terms of the posterior probability P(Y|X), which requires knowledge of the prior P(Y), the likelihood P(X|Y) and the evidence P(X). Dempster-Shafer fusion deals with uncertainty in terms of changing beliefs, evidence and incomplete knowledge. To combine the effect of two hypotheses (classifiers), the Dempster-Shafer rule is expressed in terms of mass functions or probabilities [19]. The selected classes try to maximize the belief function. Decision template is another fusion scheme that combines classifiers by comparing the output of the classifiers with a reference (decision) template. The reference templates are measured prior to comparison. The comparison is based on a similarity measure and a consistency measure. This method differs from other methods in that it considers the output of all classifiers to make the final support for a class, while other methods consider the output of that class alone to calculate the support [19]. This enables a decision based on the average of the decision profiles of all elements in the training set.

Classification accuracy is used to compare the performance of individual and fusion classifiers. Accuracy is (Tp + Tn)/(Tp + Tn + Fp + Fn), where Tp is True Positive or hit, Tn is True Negative or rejection, Fp is False Positive or false alarm and Fn is False Negative or miss.
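The accuracy measure above can be computed from true and predicted labels as in the following sketch. Treating the stego class (label 2) as the positive class is an assumption; the text only fixes the label values. The function names and the toy labels are illustrative.

```python
def confusion_counts(true_labels, predicted_labels, positive=2):
    """Return (Tp, Tn, Fp, Fn) for a two class problem."""
    tp = tn = fp = fn = 0
    for truth, guess in zip(true_labels, predicted_labels):
        if guess == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1        # false alarm
        else:
            if truth == positive:
                fn += 1        # miss
            else:
                tn += 1
    return tp, tn, fp, fn


def accuracy(tp, tn, fp, fn):
    """(Tp + Tn) / (Tp + Tn + Fp + Fn)."""
    return (tp + tn) / (tp + tn + fp + fn)


truth = [1, 1, 2, 2, 2, 1]      # 1 = clean, 2 = stego (toy data)
guess = [1, 2, 2, 2, 1, 1]
counts = confusion_counts(truth, guess)
acc = accuracy(*counts)
```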

3 Results

The stego images are obtained for specific payloads by embedding in randomly selected DCT coefficients of the cover images, with the PRNG seeds listed in Table 2.

The Appendix shows a few clean (cover) images and their embedded counterparts for a payload of 0.5 bpdct, the embedding output, the extracted features stored in an .xls file and the convergence of the ALO optimizer over 400 iterations. The convergence of the entire ALO for the chosen feature set is shown in Fig. 3.

After optimization the reduced features are classified. The classification accuracies of the single and fusion classifiers for different payloads (0.5, 0.8, 0.01, 0.1) are tabulated in Tables 3, 4, 5, and 6 respectively. The highest accuracy values are in bold under each category.

For a payload of 0.5, the highest classification accuracy is obtained by the Decision template (72.22%) and Dempster-Shafer (72.22%) fusion classifiers, followed by Bayes (69.44%). Considering average accuracy, the Bayes fusion classifier has the highest overall average accuracy (64.44%). When the payload is 0.8, Bayes has the maximum average accuracy (61.66%) of all classifiers. For payloads of 0.01 and 0.1, the Bayes classifier again has the highest average classification accuracy. Table 7 shows the performance of all classifiers for different payloads but a fixed PRNG value (PRNG seed = 80) during embedding. Even in this case, the Bayes classifier has the highest average classification accuracy.

Comparing the average classification accuracies of all classifiers in Table 7, Fig. 4 shows that the Bayes fusion classifier is the best. Considering the average classification accuracies (from Tables 3, 4, 5, and 6) of all classifiers for different payloads, Fig. 5 shows that the Bayes fusion classifier outperforms all other types of classifiers. Thus the Bayes fusion classifier can be considered a universal classifier for JPEG steganalysis.

Table 8 shows the timing calculations for different images for a payload of 0.5 and a PRNG seed value of 80 during embedding. The total time is the sum of the extraction and optimization times of both the cover and stego images along with the embedding time. The average time taken for processing one image is 18.4315 s. The Appendix shows the time calculation for a stego image (image number 74). Running the algorithm for steganographic embedding, feature extraction and optimization, the time for 200 images was found to be 691.34 s or 11.522 min. This is shown in the Appendix. Further, the classification of this optimized feature set took 55.09 s. Thus the total processing time is 746.43 s or 12.44 min for 200 images.
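The totals quoted above follow directly from the two reported components, as the short check below shows. It uses only the figures stated in the text.

```latex
\begin{align}
t_{\text{total}} &= 691.34\ \text{s} + 55.09\ \text{s} = 746.43\ \text{s}, \\
\frac{691.34\ \text{s}}{60\ \text{s/min}} &\approx 11.522\ \text{min}, \\
\frac{746.43\ \text{s}}{60\ \text{s/min}} &\approx 12.44\ \text{min}.
\end{align}
```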

4 Comparison with prior research

In the recent past, steganalysis with feature extraction has used limited statistical features and neural network based classifiers [13, 23]. While some parallel processing video coding algorithms [3,4,5] exist for reducing time and space complexity, their application to image processing would be expensive compared to the simple genetic based ALO optimization proposed here. Though a few research works have concentrated on rich models for universal steganalysis, they use statistical feature reduction techniques such as Fisher Linear Discriminant (FLD) and ensemble classifiers [12]. These ensemble methods take different portions of the feature sets for classification and find the minimal total error (in terms of false alarm and missed detection rate). Table 9 shows the comparison of this research with the earlier research work in this field.

Table 9 shows that this research has better classification accuracy than the other research works (the highest value of average classification accuracy is shown in bold). Compared with recent research in this field, ALO gives better classification accuracy for the most sophisticated nsF5 embedding, while others report steganalysis of HUGO and YASS [26]. Research by Chhikara [7] shows that the feature reduction rate for CCPEV-548 features is 82%. Another work by Chhikara [6] states that the reduction rate is 67% for DCT features and 38% for DWT features, whereas the feature reduction rate in this research is 99%. This high reduction rate enables the use of rich image feature sets (23,230 features) for improved classification accuracy.
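The 99% figure can be checked from the feature counts given in this paper. Defining the reduction rate as the fraction of features removed is an assumption, although it is the usual definition.

```latex
\[
\text{reduction rate} = \frac{23{,}230 - 232}{23{,}230} = \frac{22{,}998}{23{,}230} \approx 0.990 = 99\%.
\]
```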

While most image steganalysis research analyses results in terms of classification accuracy, research by Kodovsky [12] reports the average running time of their ensemble method for the HUGO, EA and ± Embedding algorithms. For the simplest ± Embedding the running time is 1 h 20 min, for HUGO 4 h 35 min and for EA 3 h 09 min [12]. The time complexity calculation in our research shows 12.44 min for extracting, optimizing and classifying all 200 images for the sophisticated nsF5 embedding algorithm. Table 10 shows a comparison of this research with our earlier research.

The research work presented in this paper gives a higher classification accuracy of 64.44% (shown in bold in Table 10) than the earlier methods stated in Tables 8 and 9. Future work could fine tune the parameters of the ALO algorithm or replace the random selection of antlion positions (features) with some other method.

5 Significance of this research

The ALO algorithm promotes exploration of the entire feature space, as it models the features through random walks of ants. There are only a few adjustable parameters in ALO. These characteristics justify the use of ALO over other nature inspired optimization algorithms. ALO based JPEG steganalysis is significant compared to related work in this field for the following reasons:

- The feature reduction rate obtained by ALO is far higher than that of other methods [6, 7, 26].
- The method of direct feature optimization and classification used in this research is simpler than the state-of-the-art ensemble based feature selection method proposed by Kodovsky and Fridrich [12].
- Compared to the various approaches to feature extraction and steganalysis [14, 20, 23, 29], this approach is not complicated, as it considers all possible combinations of feature changes and then optimizes them to give better classification accuracy.

Thus the Bayes fusion classifier, when used with ALO based optimization, gives a significant improvement in classification accuracy and reduced time complexity for JPEG steganalysis.

6 Conclusion

This research has implemented a nature inspired meta-heuristic technique for optimizing high dimensional image features for improved image steganalysis. The steganographic embedding uses the nsF5 algorithm and the extracted features are of dimension 200 × 23,230. The extracted feature model is based on the correlation among the DCT coefficients in the frequency and spatial domains. To tackle the problem of computational complexity, the feature set is reduced by the Ant Lion Optimization (ALO) technique. In this technique, the movements of ants and antlions are considered as random walks in search space and the positions of the ants in each iteration are the features. The fitness function depends on the pits built by the antlions. The reduced feature set of dimension 200 × 232 is classified with individual classifiers (SVM and MLP) and fusion classifiers (Bayes, Decision template, Dempster-Shafer). With classification accuracy and time complexity as the performance measures, results have been analyzed for different payloads and different chosen DCT coefficients (different PRNG seed values). The highest average classification accuracy is obtained by the Bayes fusion classifier (64.44%) when the payload is 0.5. The fusion classifiers perform better than the individual classifiers. Hence for JPEG steganalysis, the Bayes classifier with ALO based optimization gives better classification accuracy than existing research.

References

Bas P, Filler T, Pevny T (2011) Break our steganographic system --- the ins and outs of organizing BOSS. In: Proceedings of Information Hiding Conference 6958:59–70. http://dde.binghamton.edu/download/. Accessed 2 May 2016

Bianchi L, Dorigo M, Gambardella LM, Gutjahr WJ (2009) A survey on metaheuristics for stochastic combinatorial optimization. Nat Comput 8:239–287 http://code.ulb.ac.be/dbfiles/BiaDorGamGut2009natcomp.pdf. Accessed 30 May 2016

Chenggang Y, Yongdong Z, Jizheng X, Feng D, Liang L, Qionghai D, Feng W (2014) A highly parallel framework for HEVC coding unit partitioning Tree decision on many-core processors. IEEE Signal Processing Lett 21(5):573–576

Chenggang Y, Yongdong Z, Jizheng X, Feng D, Jun Z, Qionghai D, Feng W (2014) Efficient parallel framework for HEVC motion estimation on many-Core processors. IEEE Trans Circuits Syst Video Technol 24(12):2077–2089

Chenggang Y, Yongdong Z, Feng D, Jizheng X, Liang L, Qionghai D (2014) Efficient parallel HEVC intra prediction on many-core processor. Electron Lett 50(11):805–806

Chhikara RR, Sharma P, Singh L (2016) An improved dynamic discrete firefly algorithm for blind image steganalysis. Int J Mach Learn Cybern. doi:10.1007/s13042-016-0610-3

Chhikara RR, Singh L (2017) An improved discrete firefly and t-test based algorithm for blind image steganalysis. In: Proc. of 6th International Conference on Intelligent Systems, Modelling and Simulation (ISMS). IEEEXplore Digital library. http://ieeexplore.ieee.org/document/7311210/. Accessed May 24

Christaline JA, Ramesh R, Vaishali D (2014) Steganalysis with classifier combinations. ARPN Journal of Engineering and Applied Sciences 9(12). http://www.arpnjournals.com/jeas/research_papers/rp_2014/jeas_1214_1402.pdf. Accessed 2 May 2016

Christaline JA, Ramesh R, Vaishali D (2015) Critical review of image steganalysis techniques. International Journal of Advanced Intelligence Paradigms, Inderscience 7(3/4):368–381 www.inderscienceonline.com/doi/abs/10.1504/IJAIP.2015.073715. Accessed 27 May 2016

Christaline JA, Ramesh R, Vaishali D (2016) Optimized JPEG Steganalysis. International Journal of Multimedia and Ubiquitous Engineering, SERSC 11(1):385–396. doi:10.14257/ijmue.2016.11.1.37 Accessed 2 May 2016

Christaline JA, Ramesh R, Vaishali D (2016) Bio-inspired computational algorithms for improved image steganalysis. Indian Journal of Science and Technology 9(10). http://www.indjst.org/index.php/indjst/article/viewFile/88995/68459. Accessed 2 May 2016

Fridrich J, Kodovsky J (2012) Rich models for steganalysis of digital images. IEEE Trans Inf Forensics Secur 7(3):868–882 http://dde.binghamton.edu/kodovsky/pdf/TIFS2012-SRM.pdf. Accessed 30 May 2016

Holub V, Fridrich J (2013) Random projections of residuals for digital image steganalysis. IEEE Trans Inf Forensics Secur 8(12):1996–2006 http://dde.binghamton.edu/vholub/pdf/TIFS13_Random_Projections_of_Residuals_for_Digital.pdf. Accessed 22 May 2016

Huang F, Li B, Huang J (2008) Universal JPEG steganalysis based on microscopic and macroscopic calibration. In: Proceedings IEEE International Conference on Image Processing ICIP, 52:2068–2071. http://ieeexplore.ieee.org/document/4712193/. Accessed 19 May 2016

Kaveh A, Khayatazad M (2012) A new meta-heuristic method: ray optimization. Comput Struct 112:283–294 www.sciencedirect.com/science/article/pii/S0045794912002131. Accessed 29 May 2016

Kaveh A, Ghazaan MI, Bakhshpoori T (2013) An improved ray optimization algorithm for design of truss structures. Civ Eng 57:97–112 https://pp.bme.hu/ci/article/viewFile/7166/6159. Accessed 31 May 2016

Kaveh A, Ghazaan MI, Bakhshpoori T (2013) An improved ray optimization algorithm for design of truss structures. Civ Eng 57:97–112. https://pp.bme.hu/ci/article/viewFile/7166/6159. Accessed 1 June 2016

Kodovsky J, Fridrich J (2011) Steganalysis in high dimension: fusing classifiers built on random subspace. In: Proc. of SPIE, Electronic Imaging, Media, Watermarking, Security and Forensics XIII, pp. 23–26. http://dde.binghamton.edu/kodovsky/pdf/Kod11spie.pdf. Accessed 21 May 2016

Kuncheva LI (2002) A theoretical study on six classifier fusion strategies. IEEE Trans Pattern Anal Mach Intell 24(2):281–286 http://machine-learning.martinsewell.com/ensembles/Kuncheva2002a.pdf. Accessed 2 May 2016

Liu Q (2011) Detection of misaligned cropping and recompression with the same quantization matrix and relevant forgery. In: Proceedings of the 3rd international ACM workshop on Multimedia in forensics and intelligence, pp 25–30. http://www.shsu.edu/~qxl005/New/Publications/Mifor2011.pdf. Accessed 29 May 2016

Liu Q (2011) Steganalysis of DCT embedding based adaptive steganography and YASS. In: Proceedings of the 13th ACM workshop on Multimedia and Security, Buffalo. https://pdfs.semanticscholar.org/ca6f/92a55790769a56fe9c4b87179a09fd50df9a.pdf. Accessed 22 May 2016

Mirjalili SA (2015) The ant Lion optimizer. Adv Eng Softw 83:80–98. doi:10.1016/j.advengsoft.2015.01 Accessed 28 May 2016

Pevny T, Fridrich J (2007) Merging Markov and DCT features for multi-class JPEG steganalysis. In: Delp EJ, Wong PW (eds) Proceedings SPIE, Electronic Imaging, Security, Steganography, and Watermarking of Multimedia Contents IX, 6505(1):3–14. https://pdfs.semanticscholar.org/b7a7/700eaf1c2803a511ac4ca71ae128e09b2a18.pdf. Accessed 29 May 2016

Rajabioun R (2011) Cuckoo Optimization Algorithm. Applied Soft Computing 11:5508–5518. dl.acm.org/citation.cfm?id=2039522. Accessed 31 May 2016

Roshini D, Samsudin A (2009) A digital steganalysis: computational intelligence approach. Int J Comput 3(1):161–170 https://www.researchgate.net/profile/Roshidi_Din/publication/228844865_Digital_Steganalysis_Computational_Intelligence_Approach/links/0fcfd5066a469e19ac000000.pdf?origin=publication_list. Accessed 25 May 2016

Sajedi H (2017) Image steganalysis using artificial bee colony algorithm. J Exp Theor Artif Intell. http://tandfonline.com/doi/abs/10.1080/0952813X.2016.1266037. Accessed 7 June 2017

Shi YQ, Chen C, and Chen W (2006) A Markov process based approach to effective attacking JPEG steganography. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.646.5540&rep=rep1&type=pdf. Accessed 22 May 2016

Talbi EG (2009) Metaheuristics: from design to implementation, vol 74. John Wiley & Sons

Wang Y, Liu JF, Zhang WM (2009) Blind JPEG steganalysis based on correlations of DCT coefficients in multi-directions and calibrations. In: Proceedings of the 2009 International Conference on Multimedia Information Networking and Security, 1:495–499. http://ieeexplore.ieee.org/document/5368437/. Accessed 9 June 2016

Windeatt T (2006) Accuracy/diversity and ensemble MLP classifier design. IEEE Trans Neural Netw 17(5):1194–1211 http://ieeexplore.ieee.org/document/1687930/. Accessed 12 May 2016

Yang XS (2010) Firefly algorithm, stochastic test functions and design optimization. International Journal of Bio-Inspired Computation 2(2):78–84 https://arxiv.org/pdf/1003.1409.pdf. Accessed 1 June 2016

Yang XS, Deb S (2010) Engineering optimisation by cuckoo search. International Journal of Mathematical Modelling and Numerical Optimization 1(4):330–343

Appendices

A. A few of the clean and stego images in this research for payload = 0.5 bpdct

Embedding output

B. Features extracted from 200 images (100 clean and 100 stego) for a payload of 0.5

C. Convergence of elite fitness by the ALO optimizer for 400 iterations

D. Time complexity calculation for image number 74

E. Time calculation for 200 images (feature extraction and optimization)

Cite this article

Anita Christaline. J, Ramesh. R, Gomathy. C et al. Nature inspired metaheuristics for improved JPEG steganalysis. Multimed Tools Appl 77, 13701–13720 (2018). https://doi.org/10.1007/s11042-017-4983-4

DOI: https://doi.org/10.1007/s11042-017-4983-4