Abstract

In this paper, we propose a new semantic descriptor based on axiomatic fuzzy set (AFS) to describe facial expressions. The new descriptor has two advantages: The first one is that it does not depend on priori-knowledge, when one uses it to construct semantic concepts. According to the distribution of feature data, one can quickly establish semantic concepts using the fuzzy membership degree. The second one is that the descriptor can describe complex features by implementing operation on semantic concepts. The developed descriptor can provide variations and relations of expression features. Finally, we implement our method on FEI and CK+ database, and make semantic interpretations for various expressions. Meanwhile, the performance is evaluated with the state-of-the-art methods such as C4.5, Bayes, Decision Table, Cart and Reduced error pruning tree.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Facial expression plays an important role in our daily life and provides rich and powerful source of communicative information about human emotions. In the past two decades, it not only has attracted much attention, but also numerous solution approaches have been proposed for expression recognition.

The existing methods for facial expression recognition can be generally categorized into subspace methods [1, 41, 42], local feature methods [6, 43, 45], facial action unit methods [26, 34] and geometric feature methods [3, 28]. The most popular subspace methods mainly focus on principal component analysis (PCA) [39], linear discriminant analysis (LDA) [47] and support vector machine [4]. These algorithms pay much attention on the image global properties and extract the essential features. Based on a derived projection matrix, the original expressions are represented in a new subspace. However, the facial expression images are usually non-linear, and the details are distributed in different regions. Due to emphasis on features of local region, local feature methods are usually applied to solve these troubles. For example, the local binary patterns (LBP) and Gabor [10, 45, 48] have attracted much more attention, because they can well solve expressional variations, illuminations and occlusions. However, all above methods have ignored semantic descriptions/features of facial components. When the facial action coding system (FACS) [9] emerged, it was rapidly used in expression recognition [25, 26]. According to this system, the changes of facial expression could be visually described, and clearly endowed by semantic concepts. However, the numerous landmarks in this system increase difficulties of applications because they are manually marked. Accompanied with the automatic landmark detector [5], geometric feature method has attracted much attention on facial expression again [17, 44]. Generally, the fuzzy methods are used to interpret the concepts of facial components, and related rules will be generated for the corresponding results. Specially, these landmarks can well represent the shape of facial components and bridge semantic gap between low-level visual features of image and high-level semantic concepts. Usually, the combining feature including the facial action unit and geometric feature is applied in facial expression recognition [2, 32, 38]. However, all these methods need priori-knowledge such as fuzzy models and prior-defined semantic features.

After thorough comparisons, Axiomatic fuzzy set (AFS) [18, 20] has broken the limitation of the existing fuzzy models, and it has extensive applications such as text recognition [33] and knowledge discovery [35]. Specially, it has been applied in facial characteristics description [16, 31] because of its advantage in semantic interpretability. In the AFS theory, fuzzy sets (membership functions) and their logic operations are algorithmically determined according to the distributions of original data and the semantics of the fuzzy sets. The AFS framework facilitates the studies on how to convert the information in databases into the membership functions and their fuzzy logic operations, by taking both fuzziness (subjective imprecision) and randomness (subjective uncertainty) into account. The advantage of AFS framework is that it does not be required to define membership function and initial value in advance as all these can be learned from original database.

In this paper, a new descriptor is proposed in framework of AFS. This descriptor not only interprets expression features using semantic concepts, but also serves as a classifier for expression recognition. The contributions of this paper are listed as follows:

-

We propose a new descriptor extraction algorithm for facial expressions. In comparison with traditional methods, it can directly extract semantic concepts by using the data distribution.

-

By considering differences among all these seven expressions, each semantic concept will be built respectively, which can be comprehended by observers intuitively.

-

Moreover, in order to verify our method, we have conducted experiments for analyzing expressions on FEI [36, 37] and CK+ [13, 24]. Finally, there is a comparison about performance with some of state-of-the-art methods such as C4.5 [29], Bayes [30], Decision Table (DT) [12], Cart [15] and Reduced error pruning tree (REP) [8].

The remainder of this paper is organized as follows: in section 2, we give a brief introduction of related works. Section 3 describes facial expression features constructed by landmarks, and details the process of the proposed method. Experimental results are reported in section 4. Finally, we conclude the paper in Section 5.

2 Preliminaries

2.1 AFS theory

AFS theory was proposed by Liu [18,19,20], whose fuzzy sets (membership function) and logic operations are algorithmically defined following the distributions of original data and the semantic concepts of the fuzzy sets. In its framework, AFS does not demand pre-defined membership functions as those can be learned from original data, so that it is suitable for transforming facial characteristics to semantic concepts. In what follows, we offer an illustration to AFS theory with one simple example. Let us assume that there are three attributes “The distance between the points of inner canthus and the points of inner eyebrow”, “The angle between the point of inner canthus and the point of inner eyebrow in right eye” and “The angle between the point of inner canthus and the point of inner eyebrow in left eye” to describe the facial characteristics. Let M = {m j,k ⎹ 1 ≤ j ≤ 3, 1 ≤ k ≤ 3} be a set of simple semantic concepts, where m j,1, m j,2, m j,3 is respectively corresponding to the semantic concept “large”, “medium” and “small” associated with feature f j . We will generate a complex semantic concepts ∏ m∈A m(A ⊆ M) for each set of concept terms using a simple concept set belonged to M, which represents a conjunction of the concept terms in A. For example, let A 1 = {m 1,3, m 2,1, m 3,2} ⊆ M, a new fuzzy set “m 1,3 and m 2,1 and m 3,2” with the linguist interpretation: “The distance between the points of inner canthus and the points of inner eyebrow is smaller and The angle between the point of inner canthus and the point of inner eyebrow in right eye is larger and The angle between the point of inner canthus and the point of inner eyebrow in left eye is medium” can be represented as ∏ m∈A m = m 1,3 m 2,1 m 4,2. Similarly, Let A 2 = {m 1,1, m 2,3, m 3,2}, A 3 = {m 1,3, m 3,1} ⊆ M, a new concept set as the disjunction of ∏ m∈A1 m, ∏ m∈A2 m, ∏ m∈A3 m, i.e., “m 1,3 m 2,1 m 4,2 or m 1,1 m 2,3 m 3,2 or m 1,3 m 3,1” can be represented by ∑3 u= (∏ m∈Au m) = ∏ m∈A1 m + ∏ m∈A2 m + ∏ m∈A3 m. The set EM can be defined in the following way.

In addition, there is an equivalence relation [22, 23] between α and β. For example, there are two complex concepts, γ 1 = m 1,3 m 2,1 m 3,1 + m 1,1 m 2,2 m 3,2 + m 1,3 m 3,1, γ 2 = m 1,3 m 2,1 m 3,1 + m 1,3 m 3,1. They are equivalent according to equivalence relation. This means that the concept “m 1,1 m 2,2 m 3,2” is redundant. Meanwhile, AFS demonstrates that (EM, ˄, ˅) is a completely distributive lattice if the lattice operators “˄” and “˅” are defined as follows: for any fuzzy sets ∑ i∈I (∏ m∈Bi m) ∊EM, ∑ j∈J (∏ m∈C j m) ∊EM.

where for any k∊ I∪J (the disjoint union of I and J, i.e., every element in I and every element in J are always regarded as different elements in I∪J), D k = A k if k ∊ I, and D k = B k if k∊J.

In the AFS framework, the membership function is utilized to interpret concept of m j,k . Its ordered relation is defined as follows. Let X be an observed data set, and M be a set of fuzzy terms on X. For A ⊆ M, x∊ X, we define

where a linearly ordered relation is denoted by “\( \underset{\bar{\mkern6mu}}{\succ } \)”. For m ∊ M, “x \( \underset{\bar{\mkern6mu}}{\succ } \) m y” implies that the degree of x belonging to m is larger than or equal to that of y. \( {A}^{\underset{\bar{\mkern6mu}}{\succ }}(x) \) is the set of all elements in which their degrees of belonging to set ∏ m∈A m are less than or equal to that of x. \( {A}^{\underset{\bar{\mkern6mu}}{\succ }}(x) \) is determined by the semantics of fuzzy set A and the probability distribution of data.

Theorem 1 ([20])

Let v be a fuzzy term on X. v: X ⇒ R+ = [0,∞). ρ v is called a weight function of the simple concept v if ρ v satisfies the following conditions:

-

1.

\( \begin{array}{cc}\hfill For\hfill & \hfill x\in X,{\rho}_v(x)=0\iff x{\underset{\bar{\mkern6mu}}{\nsucc}}_m x;\hfill \end{array} \)

-

2.

\( \begin{array}{cc}\hfill For\hfill & \hfill x, y\in X,{\rho}_v(x)\ge {\rho}_v(y)\iff x{\underset{\bar{\mkern6mu}}{\nsucc}}_m y.\hfill \end{array} \)

The coherence membership function is defined as follows:

where, Nu is the number of observed times of sample u. Some other properties and its applications of AFS are detailed in the literature [20,21,22,23].

2.2 The max-dependency, max-relevance and min redundancy algorithm (mRMR)

In this section, a feature selection algorithm will be briefly introduced, which is established according to mutual information theory [7, 27]. Its aim is to find a feature set M with n features {m i }, which jointly exhibit the largest dependency on the target class C. Usually, it searches the subset M n − 1 with n − 1 features according to the contribution to the largest increase of entropy I(M n ; C), which is presented as follows:

where M n = {M n−1, m n } can be treated as a multivariate variable. Thus, by the definition of mutual information, it can be represented in the form:

where H(·) represents the entropy of the respective multivariate (or univariate) variables. Similarly, the quantity J(M n ) = J(m 1, m 2, …, m n ) is defined for scalar variables m 1, m 2, …, m n

Then, the formula (11) and (10) can be easily derived as follows:

By substituting them in the corresponding term in formula (6), we can rewrite the form of entropy I(M n ;C) as follows:

However, due to insufficient samples, high-dimensional and intensive computing required in above estimation, it is hard to arrive at an accurate estimation. Therefore, the maximal-relevance-minimal-redundancy (mRMR) algorithm [27], is proposed to select a subset of M, by choosing mutually exclusive features via optimizing the maximal relevance criterion D(M,C) and minimal redundancy condition R(M). In summary, by combining the above constraints, the operator Φ(D, R) can be defined. Here, the terms D and R need to be optimized simultaneously. The simplest form is in the following form:

where the mutually exclusive (independent) features are extracted through mining J(Mn) and the max-relevance features will be chosen by maxing the J(Mn, C). Finally, mRMR can select a compact set of superior features. The details are illustrated in literature [7, 27].

3 SDAFS: a semantic descriptor based on AFS

In this section, we will create a semantic descriptor based on AFS (SDAFS). The algorithmic process mainly contains three stages. The first one is to select features. The second stage is to establish semantic description for each individual. The third one is to optimize concept set for obtaining the best interpretation for each expression. We first interpret the key symbols used in this paper in Table 1.

3.1 Construction of expression features construction

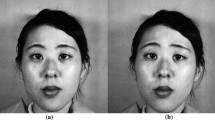

When expression is changed, the geometrical shape of facial components will be varied intuitively (see Fig. 1).

According to literature [10, 14], the characteristics are main concentrated on facial principal regions such as eyes, mouth and nose. Therefore, we construct 30 expression features by landmarks [5] for describing expression variations. Let L K = {l 1, l 2, …, l k } be a set of landmarks extracted from each expression image. l i = (r i , c i ) ∈L K presents the points of facial component. r i and c i are i-th coordinates of point l i . In this case, the d(l h , l k )(l h , l k ∈ L K ) represents the distance between two points. The ∠(l h , l k , l p )(l h , l k , l p ∈L K ) indicates the angle among three points. Their calculations are illustrated as follows:

For all the measurements including d(l h , l k ) and cos∠(l h , l k , l p ), there are 30 expression features F total = {f 1, f 2, · · ·, f 30} to be established, which are listed illustrated in Table 2.

3.2 Construction of compact feature subset by using mRMR

The constructed features can well describe the characteristics of facial components. However, the effectiveness is distinction among various features for different expressions. In this study, mRMR is used to select the compact feature subset for differentiating expressions. The aim of mRMR is to search a subset F∈F total , which could satisfy the minimal redundancy and maximal relevance simultaneously. This strategy consists of two steps.

-

First, according to formula (13), the score S score j is built to evaluate the performance for corresponding f j ∈F total . Then, the score S score = {S score 1, S score 2, · · ·, S score 30} will be obtained.

-

Second, the element S score j∈S score will be sorted in a descent order. Then, according to the score S score j, the feature subset F for each expression can be determined. The f j , whose score is bigger, will be remained.

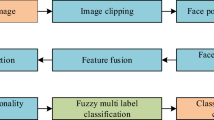

3.3 Semantic description for each expression

By using the mRMR, the compact feature subset F can be obtained. However, any expression characteristics are different from others. Therefore, a new approach is proposed in this section to extract the representative features, and establish semantic concepts for describing various expressions. The process is divided into three steps, and the flow chart is illustrated in Fig. 2.

3.3.1 Step A: features selected from each individual

According to the sample label, it is very important to extract salient features, which can describe x i ∈X effectively. By comparing with literature [31], we propose a new approach here to select the valuable features according to discriminative power of features. Technically, let F = {f 1, f 2, · · ·, f S } be a set of features, X = {x 1, x 2, · · ·, x n } be a set of samples. The \( {\mathcal{R}}_{k/{x}_i}^{f_s} \) (1 ≤ s ≤ S) denotes the k nearest neighbors around x i with f j ∈F, \( {\mathcal{R}}_{k/{x}_i}^F \) presents the k nearest neighbors around x i with F = {f 1, f 2, · · ·, f S }. Here, the distance Dis (fs)(x i -x j ) between x i and x j (x i , x j ∈X Ci , x i ≠ x j ) is estimated by the Euclidean Distance. Similarly, the distance Dis (F)(x i -x j ) between x i and x j is also estimated by the Euclidean Distance.

For each x i, we utilize the mutual neighbors to estimate the influence of f s between \( {\mathcal{R}}_{k/{x}_i}^{f_s} \) and \( {\mathcal{R}}_{k/{x}_i}^F \). If the neighbors of x i in f s are similar to that of x i in F, we consider f s could represent x i in feature set F.

where \( {\mathcal{R}}_N^{f_s} \) is the number of neighbors between f s and F, which can be used to reflect the performance of feature f s . It means that the \( {\mathcal{R}}_N^{f_s} \) contains more discriminative information than F on data X. Its extraction process is described in Algorithm 1.

3.3.2 Step B: semantic concepts for each individual

In section 3.2, we obtained a set of features F′ for each x i . And each f j is divided into three semantic concepts “m j,1”, “m j,2” and “m j,3”, which corresponds to “large”, “medium” and “small” respectively. Then, we can obtain the semantic concept set for every x i as follows.

where δ 1 is a threshold for selecting m j,k , which can describe x i well. However, when the expression happens, several facial components will change their shape simultaneously. Therefore, the conjunction semantic concept set A xi is selected for describing x i by:

where δ 2 is a threshold for selecting the semantic concept A xi. Then, \( {A}_{remain}^{x_i}=\prod {m}_{j, k}\left({m}_{j, k}\subset {M}^{x_i}\right) \) is a set of conjunction concepts set. However, we expect to obtain the best description A x i select∈A xi for describing x i . Assume that \( {\mathcal{R}}_{k/{x}_i}^{A_s^{x_i}} \) is the k neighbors around x i with semantic concept \( {A}_s^{x_i}\in {A}^{x_i} \) and for each x i , we can extract the best semantic concept \( {A}_{select}^{x_i} \) according the number of mutual neighbors.

where \( {\mathcal{R}}_N^{A_s^{x_i}} \) is the number of neighbors between \( {A}_s^{x_i} \) and \( {A}^{x_i} \). Then the \( {A}_{select}^{x_i} \) corresponding to maximum \( {\mathcal{R}}_N^{A_s^{x_i}} \) will be selected for x i . Similarly as in section 3.2, the distance \( {D}^{\left({A}_s^{x_i}\right)}\left({x}_i-{x}_j\right) \) between x i and x j (x i , x j ∈X Ci , x i ≠ x j ) is measured by the Euclidean Distance. However, the feature value, which describes x i under \( {A}_s^{x_i} \), is replaced by membership value calculated by formula (5).

3.3.3 Step C: optimizing the semantic concepts for each group

In this section, the main aim is to search the best semantic concepts for each expression. From section 3.3.2, we have obtained the salient concept for each x i ∈X Ci . Then, we can summarize a set of concepts EM Ci for C i class as follows.

However, there are redundant concepts in EM Ci, which may generate weak influence for C i class. As well known, the compactness within class and separability between classes are very important for discriminant feature selection. However, it is difficult to accomplish compactness and separability simultaneously. Hence, we design a new optimization criterion for selecting the best semantic concepts for each group according to compactness, separability and weighting.

where R Ci(ω η ) is considered as a quotient for satisfying greater separability and smaller compactness simultaneously. Here Class sep and Class com signify the separability and compactness respectively. And the quotient is larger, the result is better.

where \( {\mu}_{\omega_{\eta}}\left({x}_i\right) \) represents the membership degree of x i under semantic concept ω η . n i is the number of samples in i-th class. N is the total number of all samples. \( {\mu}_{\omega_{\eta}}\left(\overline{X_{C_i}}\right) \) is the mean value of all samples in X Ci . Then, we can introduce R Ci(ω η ), W Ci(ω η ) and G Ci(ω η ) as follows:

where, x i ∈X Ci , ω η ∈EM Ci, x t ∈X, x t ∉ X Ci . X Ci represents the i-th class. Then, the process of optimizing semantic concepts for each group is described in detail as Algorithm 2.

3.4 Inference

In order to predict the class label for new samples which are not included in X, we need the semantic concept set ξ expressed over the entire input space U 1 × U 2 × · · · × U s ⊆ R s(X ⊆ U 1 × U 2 × · · · × U s ), where U i is the feature associated with the semantic concept term m j,k in M. If the distribution of data set is given, the membership function of semantic concept set γ can be derived according to the observed data. For any semantic concept ξ∈EM, we expand its universe of discourse X to U 1 × U 2 × · · · × U s . For each x = (u 1, u 2, · · ·, u s ) ∈ U 1 × U 2 × · · · × U s , ξ = ∑ i∈I (∏ m∈Ai m) ∈EM, the lower bound of membership function of semantic concept set γ is defined over U 1 × U 2 × · · · × U s as follows:

where \( {L}_{A_i}^x\subseteq X \), i ∈ I are defined as \( {L}_{A_i}^x=\left\{ y\in X\left| x{\underset{\bar{\mkern6mu}}{\succ}}_m y,\forall m\in {A}_i\right.\right\} \). We call \( {\mu}_{\xi}^L(x) \) the lower bound of membership function of ξ. In virtue of formula (26), we can expand the fuzzy set \( \xi ={\sum}_{i\in I}\left({\prod}_{m\in {A}_i} m\right)\in EM \), from the universe of discourse X to the universe of discourse U 1 × U 2 × · · · × U s . Therefore, by the fuzzy rule-base, we can establish fuzzy-inference systems whose input space is U 1 × U 2 × · · · × U s . The membership function \( {\mu}_{\xi}^L(x) \) is dependent on the distribution of training examples and the AFS fuzzy logic.

When, new pattern x∈U 1 × U 2 × · · · × U s is provided with unknown class label, we can calculate the membership degree \( {\mu}_{\xi}^L(x) \) with (26), where \( {\xi}_{C_k} \) is the fuzzy description of the class C i . Finally, x belongs to the class C q , if \( q= \arg { \max}_{1\le k\le C}\left\{{\mu}_{\xi}^L(x)\right\} \).

4 Experimental results

In all the experiments, the membership functions of semantic concepts are determined by formula (5) in Theorem 1; for any \( {m}_{j, k}\in {M}_j,{\rho}_{m_{j, k}}\left({x}_i\right)=1 \); \( {N}_{x_i}=1 \) denotes x i is observed once. Three semantic concepts are defined on each feature f j . m j,1, m j,2, m j,3 are semantic concept terms, “large”, “medium”, “small” associated with the feature f j in F. m j,1 with the semantic meaning “the value on f j is large”, “m j,2 with the semantic meaning “the value on f j is medium”, and m j,3 with the semantic meaning “the value is closer to the small on f j ”.

We conduct our experiments on database FEI [36, 37] and CK+ [13, 24]. The FEI contains 2800 images coming from 200 different subjects (100 male, 100 female), which are gathered by college students of Brazil. Each frontal face includes two expressions (natural and happy), which are used in our experiment. CK+ [13, 24] is a sequence of images including 123 subjects. In which, the expression is changed from the neutral face to the corresponding peak. In order to compare the differences among expressions, we only use the peak expression.

In addition, we extract the semantic concepts for interpreting the differences of expressions. Meanwhile, we consider SDAFS as a classifier to have a comparison of performance with some state-of-the-art classifiers such as C4.5 [29], Bayes [30], Decision Table (DT) [12], Cart [15] and Reduced error pruning tree (REP) [8].

4.1 The semantic description for expression on FEI

In this section, we select natural and smile images from FEI (NH-FEI), which include 400 samples (natural 200, happy 200), to verify our method. In order to distinguish each expression, the symbol ξ C1 and ξ C2 represent a set of semantic concepts of natural and happy respectively. The result is as follows:

-

ξ C1 = m 17,3. Its semantic interpretation is as follows: “The perimeter of mouth is smaller”;

-

ξ C2 = m 17,1. Its mean is that: “The perimeter of mouth is smaller is larger”.

According to the semantic concepts, we can easily observe that the mouth can obviously differentiate natural and happy (see Fig. 3). The result denotes that the mouth is best important feature for distinguishing natural and happy. In addition, the result also implies the change extent of facial component according to the semantic concept of mouth. For example, the perimeter shows the open extent in happy is larger than that of natural.

In order to observe the effect of SDAFS, we make 10-fold cross-validation on NH-FEI. And the experiments are executed 5 times. Here, “A” represents average accuracy in one experiment; “C” denotes the average number of correct testing samples in one experiment; “N” is the number of testing samples; “std” presents standard deviation of average accuracy. The result is illustrated in Table 3.

4.2 The semantic description for expression on CK+

According to the results in [48], samples are often misclassified because of the similar appearance variations between anger and sadness. In addition, due to the sample number of fear expression is very small, we only select four expressions such as natural, disgust, happy and surprise in our experiment, and the peak expressions of disgust, happy and surprise will be selected. And we execute 10-fold cross-validation on each database to estimate the performance of classifiers.

4.2.1 The difference description among natural, disgust and surprise

In this section, we extract natural, disgust and surprise images from CK+ as a new expression database (NDS-CK+). It contains 225 samples (natural 83, disgust 59, surprise 83). The symbol ξ C1, ξ C2, and ξ C3 denote a set of semantic concepts of natural, disgust and surprise respectively. The results are as follows:

-

ξ C1 = m 23,1 with the semantic concept is that: “The angle between corners of mouth and the down point of mouth is larger”;

-

ξ C2 = m 1,3 + m 8,3 with the semantic concept is that: “The distance between the point of inner canthus point and the point of inner eyebrow” or “The height of eyes is smaller”;

-

ξ C3 = m 18,1 m 19,1 + m 15,1 m 19,1 with the semantic concept is that: “The area of mouth is larger and the angle of right corner of the mouth is larger” or “the height of mouth is larger and the angle of right corner of the mouth is larger”.

According to the above results, we can obtain the differences of various expressions with semantic concepts. For example, ξ C1 denotes three movements of facial components such as eyebrows downed and eyes squinted. ξ C3 presents the mouth is opened. Moreover, the result illustrates the characteristics of disgust expression is concentrated on eyes region and that of surprise is mouth region. The differences are described in Fig. 4.

Then, a 10-fold cross-validation is carried out 5 times for comparing with other classifiers on NDS-CK+. The results are illustrated in Table 4. Here “A” represents average accuracy in one experiment; “C” denotes the average number of correct testing samples in one experiment; “N” is the number of testing samples;“std” presents standard deviation of average accuracy.

4.2.2 The difference description among natural, happy and surprise

In this section, we make a combining database, which includes natural, happy and surprise (NHS-CK+). The database contains 235 samples (natural 83, happy 69, surprise 83). In order to distinguish each expression, the ξ C1, ξ C2 and ξ C3 indicate natural, happy and surprise respectively. The results are as follows.

-

ξ C1 = m 23,1 with the semantic concept is that: “The angle between corners of mouth and the down point of mouth is larger”;

-

ξ C2 = m 21,1 with the semantic concept is that: “The angle between corners of mouth and nasal peak is larger”;

-

ξ C3 = m 15,1 + m 15,1 m 19,1 with the semantic concept is that: “The height of mouth is larger” or “The height of mouth is larger and the angle of right corner of the mouth is larger”.

According to these results, we can obtain the differences among natural, happy and surprise, which are focused on mouth region (see Fig. 5.). For example, ξ C2 denotes the mouth corners will be stretched into sides, when happy happened. It leads to increasing the angle (f 21). However, ξ C3 denotes that the surprise expression is also emphasized on mouth region.

In this case, we execute 5 times 10-fold cross-validation on NHS-CK+. Here, “A” represents average accuracy in once experiment; “C” denotes the average number of correct testing samples in once experiment; “N” is the number of testing samples;“std” presents standard deviation of average accuracy. The result is illustrated in Table 5.

4.2.3 The difference description among natural, disgust, happy and surprise

In this section, we make a combining database, which includes natural, disgust, happy and surprise (NDHS-CK+). The database contains 294 samples (natural 83, disgust 59, happy 69, surprise 83). In order to distinguish each expression, ξ C1, ξ C2, ξ C3 and ξ C4 indicate natural, disgust, happy and surprise respectively. The results are as follows.

-

ξ C1 = m 23,1 + m 16,2 m 23,1 with the semantic concept is that: “The angle between corners of mouth and the down point of mouth is larger” or “The width of mouth is medium and the angle between corners of mouth and the down point of mouth is larger”;

-

ξ C2 = m 8,3 + m 8,3 m 11,3 + m 10,3 m 11,3 with the semantic concept is that: “The height of eyes is smaller” or “The height of eyes is smaller and the angle between points of top and down of eye and inner canthus in left eye is smaller” or “The angle between points of top and down of eye and inner canthus in right eye is smaller”;

-

ξ C3 = m 21,1 + m 16,1 m 21,1 with the semantic concept is that: “The angle between corners of mouth and nasal peak is larger” or “The width of mouth is larger and the angle between corners of mouth and nasal peak is larger”;

-

ξ C4 = m 1,1 m 15,1 + m 15,1 m 19,1 + m 15,1 m 20,1 + m 15,1 m 23,3 + m 19,1 m 23,3 with the semantic concept is that: “The distance between the points of inner canthus and the points of inner eyebrows is larger and the height of mouth is larger” or “The height of mouth is larger and the angle of right corner of the mouth is larger” or “The height of mouth is larger and the angle of left corner of the mouth is larger” or “The height of mouth is larger and the angle between corners of mouth and the down point of mouth is smaller” or “The angle of right corner of the mouth is larger and the angle between corners of mouth and the down point of mouth is smaller”.

According to these semantic results, we can obtain two important observations of facial expression. First, the differences of facial regions can be extracted in various expressions such as natural, disgust, happy and surprise (see Fig. 6). Second, the movements of facial components can be represented using semantic concepts clearly. For example, eyebrows frowned and eyes squinted are two representative action in disgust expression. Grin is a typical action in happy expression. Mouth opened and eyebrows rise are also two facial movements, when surprise happens.

Similarly, we can still execute 5 times 10-fold cross-validation on NDHS-CK+. Here, “A” represents average accuracy in once experiment; “C” denotes the average number of correct testing samples in once experiment; “N” is the number of testing samples;“std” presents standard deviation of average accuracy. The result is illustrated in Table 6.

4.3 Performance analysis

According to these experimental results, we can obtain some insights in the performance of the classifier. However, those results do not provide enough support for drawing a strong conclusion in favor or against any of the studied methods. In order to achieve a convincing conclusion, we resort ourselves to statistical testing of the results. The Friedman Test [11, 40] is a nonparametric test that is based on the relative performance of classification method in terms of their ranks: for each dataset, the methods to be compared are sorted according to their performance, i.e., each method is assigned a rank (in case of ties, average ranks are assigned). Therefore, under the null hypothesis, the Friedman statistic is as follows:

where k is the number of classifiers and N is the number of data sets. \( {r}_i^j \) is the rank of classifier j on the data set i and \( {R}_j=\raisebox{1ex}{$\left({\sum}_{i=1}^N{r}_i^j\right)$}\!\left/ \!\raisebox{-1ex}{$ N$}\right. \) is the average rank of classifier j. The Friedman statistic is asymptotically χ 2-distributed with k − 1 degrees of freedom. If N and k are not large enough, it is recommended to use the following correction that is F-distributed with (k − 1) and (k − 1)(N − 1) degrees of freedom:

We now evaluate the performance using the Friedman test. The values of N and k are set to 3 and 7 respectively. First the average rank R j is determined (see Table 7).

According to formula (28), the value of _F is 11.483, while the critical value for the significance level α = 0.05 is 2.901. Thus, the null-hypothesis can quite safely be rejected, which means that there are significant differences in the classifiers performance. Given the result of the Friedman Test, we conducted the Nemenyi Test [11] as a post-hoc test to compare the classifiers in a pairwise manner. According to this test, the performance of two classifiers is significantly different if the distance of the average ranks exceeds the critical distance CD.

where the q α is taken from the table of normal distribution, the value CD = 3.770. According to R j in Table 7, the performance among classifiers can be observed in detail in Fig. 7. From Fig. 7, we evaluate the performance of various classifiers. Here, the performance of SDAFS and Bayes is similarity; the performance of C4.5 and Cart is similarity; the performance of Cart, REP and DT is similarity. And, the performance of SDAFS and Bayes is better than that of REP and DT.

However, considering the semantic interpretation of expression, the proposed approach is quite convincing. Then, in order to compare the effect, the pixel features will be addressed in expression recognition.

4.4 Expression recognition with holistic features

In last section, we will execute two experiments to show the holistic pixel features are not as effective as the features extracted in this paper. For this purpose, PCA [39], LDA [47] and Local Binary Patterns (LBP) [46] are used to recognize expressions using pixel features. Meanwhile, we carry out 10-fold cross-validation in experiments. In order to ensure the same resolution, all images are cropped into a new size 100 ∗ 100 (see Fig. 8).

Here, “A” represents average accuracy in one experiment; “C” denotes the average number of correct testing samples in one experiment; “N” is the number of testing samples. The result is illustrated in Tables 8, 9, and 10.

By comparing with the performance results by using the geometry features and pixel features on NDS-CK+, NHS-CK+ and NDHS-CK+. The pixel features are much worse than that of geometry features. The results indicate that the illumination intensity can influence pixel values, which could lead to error in recognition process. In addition, another advantage of geometry features is that it breaks the limitation of image resolution and illuminations.

5 Conclusions

In this paper, we propose a new approach for facial expression based on the framework of AFS theory. The developed descriptor not only describes the characteristics of various expressions, but also bridges the gap between primitive features and semantic concepts. When certain expression happened, its shape could directly reflect the differences among expressions. Firstly, we establish expression features using landmarks, and then extract the semantic concepts with its distribution. Secondly, we design a method to select the effective features. In the same class, we estimate representative effectiveness of features for each sample. We finally select the better features as its representation. Eventually, an optimization criterion is built to extract semantic concept sets for each expression. Then, we estimate four combining data sets such as NH-FEI, NDS-CK+, NHS-CK+ and NDHS-CK+, and conduct the experiments for analyzing expression characteristics respectively. The results obtained on NH-FEI show that if one only differentiates the natural and happy, the feature of mouth is obvious (m 17,3 and m 17,1). Then, we have a study on disgust and surprise. For example, the result on NDS-CK+ represents that the differences among natural, disgust and surprise focuses on eyes (m 8,3), and eyebrows (m 1,3) and mouth (m 15,1, m 18,1 and m 19,1). Similarly, the results on NHS-CK+ indicate the main differences among natural, happy and surprise still concentrate on mouth (m 23,1, m 21,1, m 15,1 and m 19,1). Finally, the experiment on NDHS-CK+ denotes the regions of eyes and mouth are important to analyze facial expressions. In addition, the results demonstrate SDAFS can describe the various expressions using semantic concepts, which conforms to real variation of facial components in various expressions.

Furthermore, we have an accuracy rate test between SDAFS and some state-of-the-art methods such as C4.5, Bayes, DT, Cart and REP. In order to compare their performance, the Friedman Test is applied in examination. The results show that the performance of SDAFS and Bayes is similar, and better than that of REP and DT in analyzing facial expressions.

In the future, the expression images will be collected continuously. Meanwhile, more advanced optimization schemes will be researched. Specially, we will have a deep research about facial expression in different cultural backgrounds.

References

An G, Liu S, Jin Y, Ruanc Q, Lu S (2014) Facial expression recognition based on discriminant neighborhood preserving nonnegative tensor factorization and ELM. Mathematical Problems in Engineering 2014:10. doi:10.1155/2014/390328

Chakraborty A, Konar A, Chakraborty UK, Chatterjee A (2009) Emotion recognition from facial expressions and its control using fuzzy logic. IEEE Trans Syst Man Cybern Part A Syst Hum 39:726–743

Cheng S-C, Chen M-Y, Chang H-Y, Chou T-C (2007) Semantic-based facial expression recognition using analytical hierarchy process. Expert Syst Appl 33(1):86–95

Chew SW, Lucey S, Lucey P, Sridharan S, Conn JF (2012) Improved facial expression recognition via uni-hyperplane classification. 2012 I.E. Conference on Computer Vision and Pattern Recognition (CVPR), pp 2554–2561

Cootes TF, Taylor CJ, Cooper DH, Graham J (1995) Active shape models-their training and application. Comput Vis Image Underst 61:38–59

De Marsico M, Nappi M, Riccio D (2010) FARO: face recognition against occlusions and expression variations. IEEE Trans Syst Man Cybern Part A Syst Hum 40:121–132

Ding C, Peng H (2005) Minimum redundancy feature selection from microarray gene expression data. J Bioinforma Comput Biol 3:185–205

Elomaa T, Kaariainen M (2001) An analysis of reduced error pruning. J Artif Intell Res 15:163–187

Ekman P, Friesen WV (1978) Facial action coding system: a technique for the measurement of facial movement. Consulting Psychologists Press, Palo Alto, CA

Hernandez-Matamoros A, Bonarini A, Escamilla-Hernandez E, Nakano-Miyatake M, Perez-Meana H (2016) Facial expression recognition with automatic segmentation of face regions using a fuzzy based classification approach. Knowl-Based Syst 110:1–14

Huhn JC, Hullermeier E (2009) Fr3: a fuzzy rule learner for inducing reliable classifiers. IEEE Trans Fuzzy Syst 17:138–149

Huysmans J, Dejaeger K, Mues C, Vanthienen J, Baesens B (2011) An empirical evaluation of the comprehensibility of decision table, tree and rule based predictive models. Decis Support Syst 51:141–154

Kanade T, Cohn JF, Tian Y (2000) Comprehensive database for facial expression analysis. IEEE International Conference on Automatic Face and Gesture Recognition, pp 46–53

Lee SH, Ro YM (2016) Partial matching of facial expression sequence using over-complete transition dictionary for emotion recognition. IEEE Trans Affect Comput 7:389–408

Lee TS, Chiu C-C, Chou Y-C, Lu C-J (2006) Mining the customer credit using classification and regression tree and multivariate adaptive regression splines. Comput Stat Data Anal 50:1113–1130

Li Q, Ren Y, Li L, Liu W (2016) Fuzzy based affinity learning for spectral clustering. Pattern Recogn 60:531–542

Liang H, Liang R, Song M, He X (2016) Coupled dictionary learning for the detail-enhanced synthesis of 3-d facial expressions. IEEE Trans Cybern 46:890–901

Liu X (1998) The fuzzy sets and systems based on AFS structure, EI algebra and EII algebra. Fuzzy Sets Syst 95:179–188

Liu X (1998) The fuzzy theory based on afs algebras and afs structure. J Math Anal Appl 217:459–478

Liu X, Pedrycz W (2009) Axiomatic fuzzy set theory and its applications. Studies in Fuzziness and Soft Computing 244. doi:10.1007/978-3-642-00402-5

Liu X, Wang W, Chai T (2005) The fuzzy clustering analysis based on AFS theory. IEEE Trans Syst Man Cybern Part B Cybern 35:1013–1027

Liu X, Chai T, Wang W, Liu W (2007) Approaches to the representations and logic operations of fuzzy concepts in the framework of axiomatic fuzzy set theory I. Inf Sci 177:1007–1026

Liu X, Wang W, Chai T, Liu W (2007) Approaches to the representations and logic operations of fuzzy concepts in the framework of axiomatic fuzzy set theory II. Inf Sci 177:1027–1045

Lucey P, Cohn JF, Kanade T, Saragih J, Ambadar Z, Matthews I (2010) The extended cohn-kanade dataset (ck+): A complete dataset for action unit and emotion-specified expression. IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp 94–101

Mohammadi MR, Fatemizadeh E, Mahoor MH (2016) Intensity estimation of spontaneous facial action units based on their sparsity properties. IEEE Trans Cybern 46:817–826

Pantic M, Rothkrantz LJ (2004) Facial action recognition for facial expression analysis from static face images. IEEE Trans Syst Man Cybern Part B Cybern 34:1449–1461

Peng H, Long F, Ding C (2005) Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans Pattern Anal Mach Intell 27:1226–1238

Pu X, Fan K, Chen X, Ji L, Zhou Z (2015) Facial expression recognition from image sequences using twofold random forest classifier. Neurocomputing 168:1173–1180

Quinlan JR (1993) Quinlan J R. C4. 5: Programs for machine learning. Morgan Kaufmann Publishers Inc., San Francisco

Ramoni M, Sebastiani P (2001) Robust bayes classifiers. Artif Intell 125:209–226

Ren Y, Li Q, Liu W, Li L (2016) Semantic facial descriptor extraction via axiomatic fuzzy set. Neurocomputing 171:1462–1474

RodrìGuez RM, MartıNez L, Herrera F (2013) A group decision making model dealing with comparative linguistic expressions based on hesitant fuzzy linguistic term sets. Inf Sci 241:28–42

Sarkhel R, Das N, Saha AK, Nasipuri M (2016) A multi-objective approach towards cost effective isolated handwritten bangla character and digit recognition. Pattern Recogn 58:172–189

Siebers M, Schmid U, Seuß D, Kunz M, Lautenbacher S (2016) Characterizing facial expressions by grammars of action unit sequences–a first investigation using ABL. Inf Sci 329:866–875

Silva C, Vieira SM, Sousa JMC (2015) Fuzzy decision tree to predict readmissions in intensive care unit. Lect Notes Electr Eng 321:365–373

Tenorio EZ, Thomaz CE (2011) Anàlise multilinear discriminante de formas frontais de imagens 2d de face. Proceedings of the X Simposio Brasileiro de Automacao Inteligente SBAI, pp 266–271

Thomaz CE, Giraldi GA (2010) A new ranking method for principal components analysis and its application to face image analysis. Image Vis Comput 28:902–913

Tseng JL (2016) An improved surface simplification method for facial expression animation based on homogeneous coordinate transformation matrix and maximum shape operator. Math Probl Eng 2016:14. doi:10.1155/2016/2370919

Turk MA, Pentland AP (1991) Face recognition using eigenfaces. 1991 I.E. Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, Maui, p 586–591

Wang X, Liu X, Zhang L (2014) A rapid fuzzy rule clustering method based on granular computing. Appl Soft Comput 24:534–542

Wang Z, Ruan Q, An G (2016) Facial expression recognition using sparse local fisher discriminant analysis. Neurocomputing 174:756–766

Wang H, Gao J, Tong L, Yu L (2016) Facial expression recognition based on PHOG feature and sparse representation. 2016 I.E. Conference on Chinese control Conference (CCC), pp 3869–3874

Yang S, Bhanu B (2012) Understanding discrete facial expressions in video using an emotion avatar image. IEEE Trans Syst Man Cybern Part B Cybern 42:980–992

Zhang Z, Wang L, Zhu Q, Chen S-K, Chen Y (2015) Pose-invariant face recognition using facial landmarks and weber local descriptor. Knowl-Based Syst 84:78–88

Zhang K, Mistry S, Neoh C, Lim CP (2016) Intelligent facial emotion recognition using moth-firefly optimization. Knowl-Based Syst 11:248–267

Zhao G, Pietikäinen M (2007) Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans Pattern Anal Mach Intell 29(6):915–928

Zhao H, Yuen PC (2008) Incremental linear discriminant analysis for face recognition. IEEE Trans Syst Man Cybern Part B Cybern 38:210–221

Zhong L, Liu Q, Yang P, Huang J, Metaxas DN (2015) Learning multiscale active facial patches for expression analysis. IEEE Trans Cybern 45:1499–1510

Acknowledgements

This work is supported by Natural Science Foundation of China (No.61370146, 61672132) and Liaoning Science & Technology of Liaoning Province of China (No.2013405003).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Li, Z., Zhang, Q., Duan, X. et al. New semantic descriptor construction for facial expression recognition based on axiomatic fuzzy set. Multimed Tools Appl 77, 11775–11805 (2018). https://doi.org/10.1007/s11042-017-4818-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-017-4818-3