Abstract

Achieving high embedding capacities for information hiding systems while maintaining high perceptual stego quality is a critical challenge in steganography. This quandary is attracting researchers to overcome the trade-off barrier between high capacities and enhanced levels of stego image quality. This work introduces a promising transform-domain hiding scheme that aims to achieve ultimate hiding capacity with premium perceptual quality results. The proposed scheme is based on the fact that highly correlated images are represented by significant coefficients that are strongly packed in the transform-domain of the image. This allows for a large space in the insignificant coefficient areas to embed in. To exploit this feature optimally, a curve-fitting approach is introduced and implemented in various adaptive-region transform-domain embedding schemes. Experimental results demonstrate that this curve-fitting methodology is able to enhance adaptive transform-domain embedding schemes where very high embedding capacities can be achieved that are much higher than competing high-capacity hiding schemes. The other noticeable result is that although the embedding capacity has increased compared to earlier work, the perceptual quality level has also improved over previous methods.

1 Introduction

Steganography is the art of concealing the existence of messages. The art is not new; some historical studies trace it back to 440 BC [17]. However, interest in information security techniques, such as steganography and encryption, has grown widely in recent decades with the massive increase in multimedia data exchanged over insecure networks [6, 14, 20, 21, 40].

Steganography differs from encryption in that encryption is concerned with encoding messages, so that only authorized entities can understand them. Steganography adds a further security step since it conceals the occurrence of the hidden data exchanged. “Watermarking”, on the other hand, is a different category of data hiding techniques. This class is used for authentication and integrity purposes [23].

There are four aspects that researchers try to improve: capacity, perceptibility, robustness, and security. Capacity refers to the size of the hidden data in a cover medium. Perceptibility deals with the amount of “noise” in the stego medium. Robustness refers to the “solidity” of the stego medium and its ability to keep the secret information intact in the presence of noise and impairments. Security refers to an eavesdropper’s inability to detect, and in turn extract or change, the hidden message.

The recent growth in data sizes has attracted many researchers to work on embedding capacity [2, 5, 8, 9, 16, 18, 25, 30]. The drive to improve the embedding capacity is hindered by the fact that an increase in the amount of embedded secret data will typically result in poor stego channel quality.

In this work, we present a transform-domain embedding scheme that addresses the shortcomings in previous high capacity embedding schemes where researchers had to trade-off between higher capacities and reduced perceptual quality or choose higher perceptual quality at the expense of lower capacities.

In images, the core concept behind data embedding is the fact that most images can be partitioned into different regions based on their inter-pixel relations. These regions vary between high frequency areas where pixels have almost no correlation, and low frequency areas (highly correlated). Embedding schemes will usually find the redundancy in the pixel information of the cover image where the correlation is at its minimum level, and use it to hide the secret data.

Recent work by Rabie & Kamel [33, 34] has tried to address the problem of capacity versus perceptual stego quality by estimating adaptive square regions inside fixed and adaptive blocks of the DCT of the cover image. These schemes were able to reach extremely high embedding capacities above 21bpp while maintaining perceptibility at an acceptable level of around 27dB.

The steganography scheme described in this paper is mainly based on the idea that the DCT coefficients of a correlated image will be strongly packed in the top left region of the DCT domain. This suggests segmenting the cover image into different segments based on their inter-pixel correlation level. By transforming each segment using the DCT, we ensure that a very large area is available for embedding, as important coefficients will be concentrated in a small area due to the strong “energy compaction” property of the DCT.

Unlike previous adaptive region embedding approaches [33, 34], where only a square region in the lower-right corner of the possible hiding area in the DCT block is used to embed in, our new scheme introduces a curve-fitting (CF) approach to utilize fully the whole area that is suitable to embed in. This has led to a substantial increase in the available embedding area in the DCT of the cover image and has consequently resulted in improved hiding capacities while achieving very high perceptibility values for the stego image in comparison to these earlier schemes. As a matter of fact, we were able to reach an embedding capacity of 22bpp at 35.83dB, as will be shown in detail in Section 5.

The rest of this paper is organized as follows. Section 2 discusses related research work in the area of high-capacity data hiding. Section 3 briefly reviews the discrete cosine transform and the discrete wavelet transform, and their importance for high-capacity data hiding. The proposed Curve-Fitting methodology is discussed in significant detail in Section 4. Section 5 presents comparison results and demonstrates the highest capacity/perceptibility levels that can be achieved based on the proposed approach. Finally, concluding remarks appear in Section 6.

2 Related work

The traditional methods for embedding information into a cover image are the Least Significant Bit (LSB) methods. The general idea is to embed in the least significant bit of each pixel of the cover image. There are many implementations of this method. In [39], only one of the three channels at each pixel of the cover image is selected by a Sample Pairs analysis, and an LSB Match method is then performed so that the final color is similar to the original one. LSB methods, along with Spread Spectrum and code-based techniques, are examples of spatial techniques [41]. Spatial schemes are simpler and faster than other hiding techniques. However, these methods are less robust and are easier for an attacker to detect.

In [19] the authors propose a gray-scale image hiding scheme where the cover image is divided into blocks of two sequential pixels. These blocks are grouped based on their smoothness and contrast attributes. The amount of information that can be hidden in a block is determined by its smoothness and contrast level. Since it is harder for human perception to detect changes in non-smooth areas, this algorithm embeds more data in edge areas.

Image inpainting was also used for data hiding in [24, 26]. A scheme that performs data hiding and compression simultaneously, using side match vector quantization and image inpainting, is proposed in [24]. Since this method combines data hiding and image compression, the maximum capacity it reached was quite low (0.14bpp for an R,G,B color secret image). Another class of spatial hiding schemes is the reversible data hiding schemes. Examples of such schemes are the works in [27] and [25].

The second embedding category is the transform-domain methods. In these techniques, an image is transformed from its spatial domain to a different domain. The hiding process is then performed in the transformed domain by identifying the less important coefficients and replacing them with scaled bits from the secret image. Finally, a stego image is produced by taking the inverse transform. Several transforms are used in image hiding schemes, for example the discrete Fourier transform, the discrete cosine transform, and the discrete wavelet transform. The advantage of these techniques is their improved security and robustness compared to the spatial domain techniques.

A frequency domain hiding scheme is introduced in [28, 29]. The idea is to use the ‘Matryoshka principle’ or the ‘nested doll principle’, which is a well-known design paradigm. The authors’ theory states that the Fourier phase of the cover image is much more important than its Fourier magnitude when reconstructing the image. Thus, the scheme keeps the Fourier phase intact and embeds in the Fourier magnitude. This has allowed a robust embedding with acceptable quality of the stego image and minor degradation in the extracted secret image.

The same authors have investigated the trade-off between hiding capacity and perceptibility in many papers. In [32] a Fixed-Block-size locally Adaptive-Region (FBAR) DCT approach was implemented to explore this relationship. An improvement over the previous method, using a Fixed-Block-size Globally Adaptive-Region (FB-GAR) DCT method, made it possible to embed and extract with higher capacities and perceptibility [33]. Moreover, the authors took a further step in challenging this trade-off in [34]. The new idea is based on partitioning the cover image into non-overlapping segments using a Quad-Tree Adaptive-Region (QTAR) DCT embedding scheme. This has achieved the highest capacity/perceptibility levels among all of their previous work.

A wavelet transform scheme is presented in [36]. This method encrypts the secret data and then embeds it into the wavelet coefficients of the cover image to produce a stego image. Furthermore, the algorithm increases robustness by selecting the approximation band of the wavelet domain and embedding in that band. In [11], another novel wavelet approach is presented that embeds hidden information in the integer wavelet coefficients of the cover image. To increase security, a pseudorandom function is applied to select the coefficients that will be used in hiding.

A third class of hiding schemes is the compression-based algorithms. The author in [35] introduces a methodology that combines Least Significant Bit (LSB), Discrete Cosine Transform (DCT), and compression techniques. First, the secret data is embedded into the cover image using an LSB algorithm to produce a stego image. Then, the DCT is used to transform the image to the frequency domain. Finally, compression techniques such as quantization and run-length coding are performed to compress the stego image in order to make it more secure.

The embedding scheme introduced in this paper is based on a Curve-Fitting (CF) methodology applied in the transform domain and implemented on the previously published embedding algorithms in [33, 34]. It is shown that this proposed enhancement will allow for higher embedding capacities while improving the quality of the stego image in comparison to results obtained using these previous schemes. A comparison with the embedding capacity and perceptibility of various steganography schemes that have been recently published in the literature is also demonstrated.

3 Theoretical background

3.1 The discrete cosine transform

The strong “energy compaction” property has made the Discrete Cosine Transform (DCT) a widely used utility in signal and image processing applications. When using the DCT transform, most of the signal information is concentrated in the top-left area of the domain, which is the area that has low-frequency coefficients [1, 37]. Since images are two-dimensional signals, the 2-dimensional DCT (2D-DCT), which is an extension of the 1D-DCT, is often used in image processing.

For images, the strong “energy compaction” property of the DCT manifests clearly with highly correlated images. In contrast, uncorrelated images have their energy spread out in the domain where some large values of the DCT coefficients are found in the high frequency areas.
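The contrast between correlated and uncorrelated signals can be illustrated with a minimal one-dimensional sketch. The signals, the function names `dct_1d` and `low_freq_energy_fraction`, and the choice of keeping the first four coefficients are illustrative assumptions, not taken from the scheme itself; the transform is the standard orthonormal DCT-II.

```python
import math


def dct_1d(signal):
    """Orthonormal DCT-II of a 1D sequence (direct O(n^2) evaluation)."""
    n = len(signal)
    coeffs = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        total = sum(x * math.cos(math.pi * (i + 0.5) * k / n)
                    for i, x in enumerate(signal))
        coeffs.append(scale * total)
    return coeffs


def low_freq_energy_fraction(signal, keep):
    """Fraction of the total energy held by the first `keep` coefficients."""
    coeffs = dct_1d(signal)
    total = sum(c * c for c in coeffs)
    return sum(c * c for c in coeffs[:keep]) / total


# Hypothetical zero-mean test signals of length 16.
smooth = [i - 7.5 for i in range(16)]                      # correlated ramp
rough = [7.5 if i % 2 == 0 else -7.5 for i in range(16)]   # alternating samples

print("smooth:", low_freq_energy_fraction(smooth, 4))
print("rough: ", low_freq_energy_fraction(rough, 4))
```

For the ramp, nearly all of the energy sits in the first few coefficients, whereas the alternating signal pushes its energy toward the highest frequencies. This is the one-dimensional analogue of the packed top-left region described above.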

Another property of the DCT transform is the importance of the DCT-phase component for reconstructing an image in the spatial domain. It has been found that the DCT-phase carries a significant amount of the image information. On the other hand, the DCT-magnitude of the image provides much less significant information about an image [3, 4]. Therefore, it is practical to hide in the magnitude of the DCT, and to keep the DCT-phase intact.

The strong energy compaction property of the DCT and the significance of its magnitude and phase spectra can be found with more details in [31] and [33].

3.2 The discrete wavelet transform

The other transform domain that has been widely utilized in recent years in steganography is the discrete wavelet transform (DWT). A Wavelet is a small wave that has its energy concentrated in time to allow dealing with time-varying and non-stationary cases. The Wavelet Transform is a process that can decompose a signal into coefficients that represent the signal within a certain time period [12]. The transform can be defined on an input function f(t) using the following equation:

where W(R,S) represents the coefficients, which are a function of the scale and position transform parameters (R,S), and ψ(t) represents the mother wavelet function.

Since an image is considered as a discrete two-dimensional signal, the 2D Discrete Wavelet Transform (2D-DWT) must be applied instead of the continuous wavelet transform. The 2D-DWT can be defined on an input image x(k,j) in the form:

where D(r,s) represents the 2D-DWT coefficients as a function of the scale and shift transform parameters (r,s), and ψ(k,j) is the mother wavelet basis time function with finite energy and fast decay.

The DWT decomposition can also be obtained by using filter banks. A filter bank is a series of filters that divides an input signal into multiple components. For images, wavelet analysis can help separate the input image into approximate and detailed sub-images. This can be done by applying a 1D low-pass filter on the rows of the input image, which produces the horizontal approximation of the input image. A 1D high-pass filter is also applied on the rows of the input image, which produces the horizontal details of the input image. Next, a second round of filters are applied to both the horizontal approximation and the horizontal details, but this time, these filters are applied on the columns. The second filtering stage results in 4 sub-images; the approximation image (LL), the vertical details (LH), the horizontal details (HL), and the diagonal details (HH). Figure 1 illustrates the filter bank operation on an input image.
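The two filtering stages can be sketched for a single decomposition level as follows. The text does not say which wavelet is used, so this sketch assumes the Haar wavelet in its unnormalized averaging/differencing form; the function names are hypothetical. In each returned band name, the first letter denotes the filter applied along the rows and the second the filter applied along the columns.

```python
def haar_rows(image):
    """Filter each row with the Haar low-pass (pairwise average) and
    high-pass (pairwise half-difference) filters, downsampling by 2."""
    low = [[(row[2 * i] + row[2 * i + 1]) / 2.0 for i in range(len(row) // 2)]
           for row in image]
    high = [[(row[2 * i] - row[2 * i + 1]) / 2.0 for i in range(len(row) // 2)]
            for row in image]
    return low, high


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def haar_dwt2(image):
    """One-level 2D Haar DWT of an image with even height and width."""
    # Stage 1: filter along the rows.
    row_low, row_high = haar_rows(image)
    # Stage 2: filter along the columns (transpose, filter rows, transpose back).
    ll, lh = (transpose(band) for band in haar_rows(transpose(row_low)))
    hl, hh = (transpose(band) for band in haar_rows(transpose(row_high)))
    return ll, lh, hl, hh


# Hypothetical 4 x 4 test image with alternating columns.
stripes = [[0, 8, 0, 8] for _ in range(4)]
ll, lh, hl, hh = haar_dwt2(stripes)
print(ll, lh, hl, hh)
```

Each band has half the height and half the width of the input, so the four bands together hold as many coefficients as the original image.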

3.3 Stego image quality measures

Perceptibility is an embedding system aspect that deals with the amount of “distortion” in the cover medium due to the hiding process. The perceptibility level plays an important role in evaluating the performance of an embedding system. It is important in the evaluation to consider both the visual quality of the stego images and the analytical performance of the hiding scheme.

Detecting the existence of an embedded message defeats the fundamental goal of steganography. The definitive measures of visual fidelity are those tests that relate to human perception. However, these tests give varying results, since the human perception system differs from one person to another.

Tests are performed by people who search for visual differences between the stego and cover images and try to identify the original cover image. According to International Telecommunication Union rules and recommendations [13, 38], if the percentage of success approaches 50%, then it can be considered that the message is securely hidden.

A well-established approach to image fidelity measurement that tries to emulate the human visual perception of image structure is the Structural SIMilarity (SSIM) index. Under the assumption that human visual perception is highly adapted to extracting structural information from a scene, SSIM was introduced as an alternative complementary framework for quality assessment based on the degradation of structural information [42, 43]. Since the human visual system is more sensitive to changes in the luminance or the contrast channel, this technique calculates the similarity based on luminance and contrast measurements.

Contrary to the subjective approach which is based on human perception, another robust measure of image quality that has been widely used by the signal processing community is the Peak-Signal-to-Noise-Ratio (PSNR) in decibels (dB) [7, 22]. This measure is less sensitive to minor deviations between images and, together with the SSIM index, will be adopted for measuring the performance of our embedding scheme.
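As a brief illustration of the PSNR measure, the sketch below uses the standard definition for 8-bit images, PSNR = 10·log10(255² / MSE). The exact formulation adopted by the scheme is given in [33, 34], so the function name `psnr` and the default peak value of 255 should be read as assumptions.

```python
import math


def psnr(cover, stego, peak=255.0):
    """PSNR in dB between two equally sized single-channel images,
    given as lists of rows of pixel values."""
    squared_errors = [(c - s) ** 2
                      for cover_row, stego_row in zip(cover, stego)
                      for c, s in zip(cover_row, stego_row)]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak * peak / mse)


# Hypothetical 2 x 2 example: every stego pixel differs from the cover by 1.
cover = [[100, 100], [100, 100]]
stego = [[101, 101], [101, 101]]
print(psnr(cover, stego))
```

A uniform error of one gray level gives an MSE of 1, which places the PSNR at roughly 48 dB. Larger distortions lower the value.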

A description of each of these quality measures, including mathematical formulations, can be found with more details in [33, 34].

4 The proposed curve-fitting scheme

The essential feature that attracts researchers to transform domain techniques based on the DCT is its energy compaction property. As discussed in Section 3, the DCT domain of a correlated image has few significant coefficients, concentrated at the top left corner. These few coefficients represent the low-frequency component of the image. As a result, a large area for hiding is guaranteed in the high-frequency region of the DCT domain.

The strong compaction property of the DCT for highly correlated images is the key feature allowing our new scheme to break the traditional barrier between capacity and stego fidelity. The merits of the proposed scheme are twofold. First, because the scheme applies a Curve-Fitting (CF) approach in the DCT domain, it can utilize the full high-frequency area made available by the DCT’s strong energy compaction, so a much larger area is available for embedding the secret data, allowing for higher capacity rates. Second, since the scheme embeds in the high-frequency areas, it is able to achieve excellent stego image quality, because the human visual system is less sensitive to distortion around edges or in high-frequency areas.

To clarify this idea, we use the Quad-Tree Adaptive-Region (QTAR) scheme of [34], and the Fixed-Block Global-Adaptive-Region (FB-GAR) method of [33] to show improvements in these methods by re-implementing them using our novel curve-fitting methodology proposed in this work. We thus denote the curve-fitting schemes as (CF-QTAR) and (CF-FB-GAR). To start, CF-QTAR segments the cover image into highly correlated blocks using a quad-tree approach. Then the 2D-DCT is applied to each block to transform the block to the DCT domain. A quantization/thresholding process is performed on each block to obtain a binary image that marks the embedding area.

Unlike previous adaptive-region embedding approaches in [32, 33, 34], where only a block or a segment of the possible hiding area is used for embedding, this scheme fully utilizes the area in which hiding is possible. The CF-QTAR scheme selects 3 points at the edges of the hiding area and fits a piecewise linear curve to these three points, under which the entire embedding area lies. These 3 points are then sent along with the stego image to the receiver, which uses them to determine the embedding area and extract the secret image successfully. This novel curve-fitting approach has allowed the embedding algorithms to achieve capacities that reached 22bpp at PSNR values that reached 35.83dB.

4.1 The embedding process

Segmenting the cover image into coherent regions is the first step in our proposed method. The partitioning technique used follows a quad-tree approach for all of the three (R,G,B) color channels. The quad-tree dividing approach can be classified as a top-down segmentation method that has been widely used in many image processing applications [10, 34].

The quad-tree method starts by dividing an image into four equal-sized blocks. Then, it checks the correlation level in each block. This check is done by simply computing the difference between the maximum pixel value and the minimum pixel value in a block. If the difference is greater than a pre-defined “threshold” value, then this block is further sub-divided into four blocks. Otherwise, the block is assumed to have met the pre-defined coherence standard, and it is not divided any further. This process is performed repeatedly until each block meets the criterion.

Along with the threshold value, there are two more quad-tree parameters; the maximum block size, and the minimum block size. The quad-tree algorithm will not sub-divide a block to a lower block size than the minimum block size even if the block did not meet the criterion. In the same way, the algorithm will force segmenting a block that is larger than the maximum block size even if the difference between the maximum and minimum pixel value in that block is less than the threshold value.
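The splitting rule and its three parameters can be sketched as a short recursive routine. This is an illustrative sketch for a single channel and not the authors' implementation: it assumes a square image whose side is a power of two, and the function name `quadtree_blocks` and the `(row, col, size)` block representation are hypothetical.

```python
def quadtree_blocks(image, threshold, min_size, max_size):
    """Return a list of (row, col, size) square blocks covering `image`,
    a square list of rows of pixel values."""

    def pixel_range(r, c, size):
        values = [image[i][j]
                  for i in range(r, r + size)
                  for j in range(c, c + size)]
        return max(values) - min(values)

    def split(r, c, size):
        half = size // 2
        can_split = half >= min_size      # never go below the minimum size
        must_split = size > max_size      # forced split above the maximum size
        if can_split and (must_split or pixel_range(r, c, size) > threshold):
            blocks = []
            for dr in (0, half):
                for dc in (0, half):
                    blocks.extend(split(r + dr, c + dc, half))
            return blocks
        return [(r, c, size)]

    return split(0, 0, len(image))


# Hypothetical 8 x 8 image: a textured top-left quadrant, flat elsewhere.
image = [[0] * 8 for _ in range(8)]
for i in range(4):
    for j in range(4):
        image[i][j] = 20 * (i + j)

blocks = quadtree_blocks(image, threshold=10, min_size=2, max_size=4)
print(blocks)
```

In this example the 8 × 8 image is split once because it exceeds the maximum block size, the textured quadrant is split again down to the minimum size, and the three flat quadrants are left as 4 × 4 blocks.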

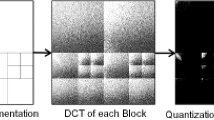

After partitioning the cover image using a quad-tree approach, the 2D-DCT is applied to each block. Next, a quantization process begins to estimate the embedding region in each block. The embedding process is performed by replacing the unimportant DCT coefficients with a scaled version of the secret image. The inverse DCT is then applied to each block, producing the stego image in the spatial domain. Figure 2 shows a general demonstration of the embedding process.

The quantization process is used to locate the region that is appropriate for embedding. CF-QTAR uses a quantization technique that is similar to the one used in the JPEG compression standard. The idea is to choose one of the JPEG quantization matrices and divide the magnitude of the DCT of the current block by the quantization matrix values element by element, after first resizing the quantization matrix to the same size as the current block. We use the Matlab function “imresize” to resize the original 8 × 8 JPEG quantization matrix, shown in step-1 of Fig. 3, to the same size as the current block of the quad-tree-segmented cover image. The main purpose of this quantization step is to distinguish between important and redundant DCT coefficients, masking out the less important coefficients and replacing them with the scaled secret data while keeping the important DCT coefficients intact, so that the scheme can reconstruct the cover image with minimal distortion, which is of utmost importance for the security of steganography applications.

After quantizing the magnitude of the DCT of a block, a thresholding process begins. The quantized DCT block is divided into 8 × 8 non-overlapping segments, and the values inside each segment are checked. If a non-zero value is found in a segment, then the whole segment is turned white. Otherwise, if all values inside the segment are zeros, the segment is kept unchanged. This process converts the quantized matrix to a binary image, where the black area (zeros) is the proposed embedding area. On the other hand, the white region indicates the area that contains the important DCT coefficients that must be kept intact. The quantization and thresholding step is shown in Fig. 3.
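The quantization and thresholding steps can be sketched together as follows. The sketch assumes that the quantization matrix has already been resized to the block size (the resizing step is omitted) and that the element-wise division is followed by rounding to the nearest integer, as in JPEG; the rounding rule and both function names are assumptions.

```python
def quantize(dct_block, q_matrix):
    """Divide the DCT magnitudes by the quantization matrix, element by
    element, and round to the nearest integer (half rounds up)."""
    return [[int(abs(d) / q + 0.5) for d, q in zip(d_row, q_row)]
            for d_row, q_row in zip(dct_block, q_matrix)]


def embedding_mask(quantized, segment=8):
    """Binary mask: 1 (white) marks protected cells, 0 (black) marks
    cells that are candidates for embedding."""
    n = len(quantized)
    mask = [[0] * n for _ in range(n)]
    for r0 in range(0, n, segment):
        for c0 in range(0, n, segment):
            rows = range(r0, min(r0 + segment, n))
            cols = range(c0, min(c0 + segment, n))
            # One non-zero value turns the whole segment white.
            if any(quantized[r][c] != 0 for r in rows for c in cols):
                for r in rows:
                    for c in cols:
                        mask[r][c] = 1
    return mask


# Hypothetical 16 x 16 DCT block and a flat quantization matrix of 10s.
dct_block = [[0.0] * 16 for _ in range(16)]
dct_block[0][0] = -80.0    # strong low-frequency coefficient
dct_block[3][9] = 12.0     # survives quantization
dct_block[12][12] = 3.0    # quantizes to zero
q_matrix = [[10.0] * 16 for _ in range(16)]

mask = embedding_mask(quantize(dct_block, q_matrix))
print(sum(row.count(0) for row in mask), "candidate embedding cells")
```

Here the two upper 8 × 8 segments contain a surviving coefficient and are marked white, while the two lower segments remain black and available for embedding.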

The pre-embedding step is to fit the black area with a piecewise linear curve. This is done by locating 3 points: points 1 and 3 are located at the edges of the black area, and point 2 is the intersection between the diagonal and the black area. These points are then connected by line segments generated by a linear interpolation algorithm. The black area is now bounded from above by this line, and thus the region under the line is the embedding area. Figure 4 shows the curve-fitting procedure. These points must be sent to the receiver so that it can locate the embedding area and extract the secret image. The maximum square size of the secret image that can fit into the proposed area can be estimated as follows:

where S is the maximum dimension of the square secret image, m is the index of the quad-tree blocks, and b_m is the number of pixels that lie under the piecewise linear curve in block m, for m = 1, 2, 3, ⋯, N_blocks.
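The curve-fitting and size-estimation steps can be sketched in a few lines. Several details here are assumptions made for illustration only: points are given as (column, row) pairs with row 0 at the top of the block, a pixel counts as lying under the curve when its row is at or below the interpolated boundary, and S is taken as the integer part of the square root of the total pixel count over all blocks, which is one reading that is consistent with the definitions above. All function names are hypothetical.

```python
import math


def boundary_row(x, points):
    """Row of the piecewise linear curve at column x, or None if x lies
    outside the span of the three points."""
    pts = sorted(points)
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if x0 <= x <= x1:
            if x1 == x0:
                return float(min(y0, y1))
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    return None


def pixels_under_curve(size, points):
    """Count the cells of a size x size block on or below the curve."""
    count = 0
    for x in range(size):
        y = boundary_row(x, points)
        if y is None:
            continue  # no embedding in columns outside the curve
        count += max(0, size - math.ceil(y))
    return count


def max_secret_side(per_block_counts):
    """Assumed estimate of S: floor of the square root of the total."""
    return math.isqrt(sum(per_block_counts))


# Hypothetical 16 x 16 block: point 1 on the bottom edge, point 2 on the
# diagonal, point 3 on the right edge.
points = [(4, 15), (8, 8), (15, 2)]
b = pixels_under_curve(16, points)
print(b, max_secret_side([b, b]))
```

Only the three points need to be transmitted per block, since the receiver can rebuild the same boundary by interpolation and recover the same set of embedding cells.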

(a) The black region represents the area that has less important DCT coefficients in a Quad-Tree block, and can be replaced by scaled pixel values from the secret image without sacrificing the stego image quality. (b) In QTAR [34], the embedding area is selected by finding the largest square block that can fit in the lower-right corner of the black area. On the other hand, CF-QTAR utilizes the whole proposed area by fitting a piecewise linear curve to three points bordering the region, as shown.

Finally, the embedding is done in the magnitude of the DCT in the locations of the black areas of the quantized matrix after thresholding. Coefficients are replaced by scaled pixels from the secret image in the range [1,5] pixel by pixel column-wise from the top left to the bottom right. The re-scaling step is important to allow the hidden secret image values to blend into the natural range of values of the DCT coefficients. Figure 5 shows the pseudocode for the embedding process.
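The re-scaling step and its inverse at the receiver can be sketched as a pair of mappings. The text gives only the target range [1,5]; the linear form of the mapping, the 8-bit source range [0,255], and the function names are assumptions.

```python
def to_embed_range(pixel, lo=1.0, hi=5.0):
    """Map an 8-bit pixel value in [0, 255] linearly onto [lo, hi]."""
    return lo + (hi - lo) * pixel / 255.0


def from_embed_range(value, lo=1.0, hi=5.0):
    """Inverse mapping, rounded back to the nearest integer intensity."""
    return round((value - lo) * 255.0 / (hi - lo))


# Hypothetical secret pixels, scaled for embedding and then recovered.
secret = [0, 17, 128, 255]
scaled = [to_embed_range(p) for p in secret]
recovered = [from_embed_range(v) for v in scaled]
print(scaled, recovered)
```

Without any distortion the round trip recovers every 8-bit value exactly. In practice, the values seen by the receiver also depend on how the stego image is stored and transmitted.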

4.2 The DWT-QTAR and CF-DWT-QTAR schemes

In this section, we investigate our Curve-Fitting hiding approach using wavelets. DWT-QTAR is a modified version of QTAR where the DWT of the quad-tree blocks is used instead of the DCT. First, the cover image is segmented in a quad-tree segmentation fashion similar to QTAR. Then the DWT is applied to each block. This operation produces 4 sub-blocks in each quad-tree block, namely the Approximate Image (LL), Vertical Detail (LH), Horizontal Detail (HL), and Diagonal Detail (HH). After that, the LL band is transformed to the frequency domain using the DCT, so that the whole quad-tree block is represented in the frequency domain. The magnitude of the DCT of the LL band is then quantized to find the area (P) that has the least important DCT coefficients. Then, a contiguous square region in the lower-right corner of this (P) area is selected as a feasible area to hide in. Part of the secret image is embedded in this square region, and the rest of the secret image pixels replace the DWT coefficients of the LH, HL, and HH bands. Figure 6 clarifies the idea of DWT-QTAR.

CF-DWT-QTAR on the other hand, is an implementation of our Curve-Fitting approach on the DWT-QTAR technique. CF-DWT-QTAR tries to utilize the whole feasible area (P) in the LL band, instead of hiding in a square region which would not fully utilize the whole area (P) for hiding. Figure 7 presents the idea of CF-DWT-QTAR. The pseudocode of the CF-DWT-QTAR embedding process is presented in Fig. 8.

4.3 The CF-FB-GAR scheme

A special case of the QTAR scheme of [34] is the FB-GAR scheme introduced in [33], which differs from QTAR by setting the block size for the maximum and minimum quad-tree blocks to the same value. This segments the image into fixed-size blocks. Similar improvements as those achieved by the CF-QTAR can also be obtained for the FB-GAR scheme by using the proposed curve-fitting methodology. We call this the curve-fitted fixed-block global-adaptive-region (CF-FB-GAR) scheme.

Wavelets can also be applied to the FB-GAR scheme of [33] using the same approach described in Section 4.2 for QTAR. We call this DWT-FB-GAR, and experimental results in Section 5 will show that our curve-fitting technique, when applied to these adaptive-region embedding schemes, improves the visual quality of the stego image while allowing higher capacities for data hiding.

4.4 The CF-QTAR extraction process

To successfully extract the secret image, the size of the adaptive blocks, their locations, and their 3 points must be transmitted along with the stego image. Extraction is performed in the reverse order as follows:

- The received stego image is segmented into quad-tree blocks using the received size and locations of each block.

- The 2D-DCT of each block is computed and the magnitude of this DCT is obtained.

- A piecewise linear curve is fitted to each block’s 3 points (which are received with the stego image), as shown in Fig. 4.

- The region under each block’s curve is assumed to have the secret data. The secret data is extracted from each block pixel by pixel column-wise from the top left to the bottom right, in the same order that was used during the embedding process.

- Finally, the values of the pixels must be rescaled from the current range to the original intensity range of [0,255] per color channel.

Figures 9 and 10 show the pseudocode of the extraction process for both CF-QTAR and CF-DWT-QTAR methods.

5 Experimental results and analysis

In this part, we compare results obtained by utilizing our curve-fitting embedding scheme against various other steganography schemes which have been recently published in the literature. Detailed demonstrative results of the CF-FB-GAR, CF-QTAR, and CF-DWT schemes applied at different block-size dimensions of {32 × 32,64 × 64,128 × 128}, and tested on four different color cover host images are presented. Figure 11 shows the four different cover images used: “Balloons”, “TigerPounce”, “F15Large”, and “Zebras”. The three secret images used, “Flower”, “Handwriting”, and “Pasta” are also shown in the same figure.

5.1 Comparative results

For the fixed-block adaptive-region (FBAR) embedding scheme proposed in [32], the authors were able to embed, in a typical cover image of a natural scene, and losslessly extract, from the generated stego image, approximately 6.74bpp per color channel of a three channel (R,G,B) color cover image for a maximum overall 20.22bpp embedding capacity with a perceptibility measured at PSNR of 25dB. This corresponds to embedding a color image of size 470 × 470 inside a color cover image of size 512 × 512.

The fixed-block global-adaptive-region (FB-GAR) scheme, described in [33], was able to embed at a capacity of 20.83bpp with a fixed block size of 128 × 128 and for the “F15Large” cover image at a PSNR of 27.24 dB.

The quad-tree-adaptive-region (QTAR) embedding scheme, proposed in [34], was able to introduce improvements over the FB-GAR scheme for the same perceptibility PSNR of the stego image. It is able to embed, in a typical cover image of a natural scene, and losslessly extract, from the generated stego image, approximately 7bpp per color channel of a three channel (R,G,B) color cover image for a maximum overall 21.01bpp embedding capacity for the same cover image (the “F15Large” cover image) when setting the minimum quad-tree block size to 128 × 128 and maximum to 256 × 256, with a perceptibility measured at PSNR of 27.21 dB. This corresponds to embedding a secret color image of size 479 × 479 inside a color cover image of size 512 × 512.
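The capacity figures quoted above can be reproduced with simple arithmetic, under the assumption that capacity is measured as the number of secret-image bits (3 channels of 8 bits each for a square color secret image) per cover pixel. The function name `capacity_bpp` is hypothetical.

```python
def capacity_bpp(secret_side, cover_side=512, channels=3, bits_per_channel=8):
    """Embedded bits per cover pixel for a square color secret image."""
    secret_bits = channels * bits_per_channel * secret_side ** 2
    return secret_bits / cover_side ** 2


# Secret image sizes quoted in the text for a 512 x 512 cover image.
for side in (470, 479):
    print(side, round(capacity_bpp(side), 2),
          round(capacity_bpp(side, channels=1), 2))
```

A 470 × 470 secret image gives about 6.74bpp per channel and 20.22bpp overall, and a 479 × 479 secret image gives about 7bpp per channel and 21.01bpp overall, in agreement with the values reported for FBAR and QTAR.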

Table 1 shows the different embedding capacities and PSNR values reached by some recent state-of-the-art schemes in comparison to our curve-fitting (CF) methodology implemented in both the QTAR scheme (CF-QTAR and CF-DWT-QTAR) and the FB-GAR scheme (CF-FB-GAR). The table clearly shows that the capacity achieved by our scheme is the highest among all of our previous work. The scheme is able to embed approximately 22.7bpp for the “F15Large” cover image. The quality of the stego image is measured to be 28.15 dB, which is even higher than that of other lower-capacity schemes. Another promising result obtained by our curve-fitting CF-QTAR scheme is a capacity rate of 19.88bpp at a PSNR of 35.02 dB for the “Balloons” cover image and a scale size of 32 × 32. This is an improvement over the curve-fitting CF-FB-GAR scheme, which, for the same “Balloons” cover image and the same scale size of 32 × 32, achieves only 19.54bpp at essentially the same visual stego quality of 35.03 dB, as is clear from Table 1.

5.2 Analysis of results

A sample of the results demonstrating our CF-QTAR curve-fitting scheme is shown in Figs. 12, 13, 14, 15, 16 and 17. The “Flower” image was the embedded secret image used in these example results. Tables 2, 3, 4, 5, 6, 7, 8 and 9 compare our proposed curve-fitting approach in both the DCT and DWT transform domains (namely CF-FB-GAR, CF-DWT-FB-GAR, CF-QTAR, and CF-DWT-QTAR) with the DCT-based Fixed-Block Global-Adaptive-Region (FB-GAR) scheme of [33], the DCT-based Quad-Tree-Adaptive-Region (QTAR) scheme of [34], and the DWT-FB-GAR and DWT-QTAR approaches implemented in Section 3.2. For the quad-tree approaches, experiments were run at various minimum quad-tree block sizes, with blocks allowed to grow to a maximum of 256 × 256.

Hiding the secret image “Flower” into the 512 × 512 “Balloons” cover image. From left to right: 1) The cover image, 2) Quad-tree segmentation with threshold value of 0.1, 3) The curve-fitted DCT of each block after quantization, 4) The stego image with 35.0 dB, 5) The extracted secret image with size of 463 × 463 (19.62bpp)

Hiding the secret image “Flower” into the 512 × 512 “Balloons” cover image using CF-FB-GAR. From left to right: 1) The cover image, 2) FB-GAR segmentation using 64 × 64 block size, 3) The curve-fitted DCT of each block after quantization, 4) The stego image with 34.37 dB, 5) The extracted secret image with size of 468 × 468 (20.05bpp)

Hiding the secret image “Flower” into the 512 × 512 “Balloons” cover image. From left to right: 1) The cover image, 2) Quad-tree segmentation with threshold value of 0.9, 3) The curve-fitted DCT of each block after quantization, 4) The stego image with 34.42 dB, 5) The extracted secret image with size of 466 × 466 (19.88bpp)

Hiding the secret image “Flower” into the 512 × 512 “F15Large” cover image. From left to right: 1) The cover image, 2) Quad-tree segmentation with threshold value of 0.1, 3) The curve-fitted DCT of each block after quantization, 4) The stego image with 28.45 dB, 5) The extracted secret image with size of 495 × 495 (22.43bpp)

Hiding the secret image “Flower” into the 512 × 512 “Balloons” cover image. From left to right: 1) The cover image, 2) Quad-tree segmentation with threshold value of 0.9, 3) The curve-fitted DCT of each block after quantization, 4) The stego image with 34.0 dB, 5) The extracted secret image with size of 462 × 462 (19.54bpp)

Hiding the secret image “Flower” into the 512 × 512 “F15Large” cover image. From left to right: 1) The cover image, 2) Quad-tree segmentation with threshold value of 0.9, 3) The curve-fitted DCT of each block after quantization, 4) The stego image with 28.65 dB, 5) The extracted secret image with size of 491 × 491 (22.07bpp)

The cover images used (“Balloons”, “TigerPounce”, “F15Large”, and “Zebras”) have different levels of correlation and are all of size 512 × 512. Since “F15Large” has the largest highly correlated area of all the cover images used, it yielded the highest capacity. The capacity reached an upper limit of 22.7bpp, which is equivalent to embedding a secret image of size 498 × 498. The tables show that the “Zebras” cover image gave the lowest capacity (19.22bpp) because of its highly uncorrelated nature (too many high-frequency regions). This is a natural consequence of the type of quad-tree segmentation used and shows that our technique is better suited to highly correlated cover images, which are a reasonable choice if high capacity is desired.

The experimental results and comparisons show that our curve-fitting scheme exceeds the maximum capacity achieved by our earlier techniques without sacrificing stego image quality. Analysis of the data in Tables 2–9 shows that 91.97% of the curve-fitting results have improved capacities without sacrificing the quality of the stego images. More specifically, 73.24% of this 91.97% also improved the quality of the stego image, which represents 67.36% of the total recorded results. Our statistics show that only 4.47% of the results have higher capacities at lower PSNR values, and only 3.56% of the results showed no improvement in either the capacity or the quality of the stego image.
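The percentages in this breakdown can be cross-checked with a few lines of arithmetic. The snippet below is only a consistency check on the quoted figures; the variable names are illustrative.

```python
# Percentages quoted in the text (share of all recorded results).
improved_capacity = 91.97      # higher capacity, quality not sacrificed
higher_cap_lower_psnr = 4.47   # higher capacity, lower PSNR
no_improvement = 3.56          # neither capacity nor quality improved

# Share of the improved-capacity results that also improved PSNR.
also_better_psnr = 73.24

# Convert the nested percentage into a share of all results.
share_of_total = improved_capacity * also_better_psnr / 100
total = improved_capacity + higher_cap_lower_psnr + no_improvement

print(f"capacity and PSNR both improved: {share_of_total:.2f}% of all results")
print(f"sum of the three categories: {total:.2f}%")
```

The three categories partition the recorded results, so their shares should sum to 100%.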

However, the DWT methods did not show marked improvements when the CF versions are compared with the non-CF versions (DWT-FB-GAR versus CF-DWT-FB-GAR, and DWT-QTAR versus CF-DWT-QTAR). The reason can be understood by referring to the embedding procedures of both methods, which are clarified in Figs. 6 and 7. Both methods hide in the HH, HL, and LH bands of each block. The only difference is in the LL band, which is 1/4 of a quad-tree block in size, so the difference in embedding area is not very significant.

Another important observation is that the curve-fitting approach showed its greatest improvement when applied to QTAR. For example, referring to Tables 6 and 7, with “TigerPounce” as the cover image, “Flower” as the secret image, and a minimum block size of 32 × 32, QTAR reached a PSNR of 28.85 dB at a capacity rate of 18.8bpp. With CF-QTAR, the capacity increased to 20.74bpp while the PSNR also rose to 32.88 dB.

Another noticeable result is obtained with a minimum block size of 128 × 128 and the “Balloons” cover image: the quality of the stego image improved from 31.81 dB to 34.23 dB, and the capacity rate also increased from 18.29bpp to 19.54bpp. The CF-FB-GAR method ranks second after CF-QTAR. Referring to Tables 2 and 3, the PSNR grew from 27.23 dB to 32.54 dB while the capacity rate also increased from 19.63bpp to 20.83bpp. This result was obtained by setting the minimum block size to 128 × 128 and using “TigerPounce” as the cover image and “Flower” as the secret image.

Table 10 gives detailed results obtained by the CF-QTAR scheme at various threshold values that were not used in Table 7 and that produce improved results. The highest capacity rate reached by CF-QTAR was 22.70bpp with a PSNR value of 28.15 dB. This is achieved when setting the minimum block size to 32 × 32 and using “F15Large” as the cover image. On the other hand, CF-QTAR was able to embed the “Flower” secret image into the “Balloons” cover image with a stego image PSNR of 35.02 dB and a capacity rate of 19.88bpp. This result has the highest PSNR value, and is obtained by setting the minimum block size to 32 × 32. Another attractive result obtained by CF-QTAR uses the “Balloons” cover image with a minimum block size of 64 × 64: the capacity rate reached 20.05bpp with a stego image PSNR of 34.37 dB.

5.3 The curve-fitting advantage

Although CF-QTAR/QTAR schemes and CF-FB-GAR/FB-GAR schemes share the same initial segmentation procedure, the curve-fitting implementations improved the embedding capacity while enhancing the perceptual quality level. The main reason for this improvement is clarified in Fig. 4. Curve-fitting allows for utilizing the whole proposed embedding area, clearly shown in Fig. 4a. As such, the capacity will increase naturally.

On the other hand, curve-fitting schemes achieve a higher PSNR because the scaled secret image values are embedded uniformly, which allows them to blend into the natural range of values of the DCT coefficients that they replace. In curve-fitting schemes this embedding takes place in the whole area under the curve, whereas non-curve-fitting schemes embed in just a square region in the lower-right corner of the DCT (for example, compare Figs. 6 and 7). The square region causes abrupt changes in DCT coefficient values between the region used to embed the scaled secret data and the rest of the DCT coefficients, as is clear from Fig. 4b. When the DCT coefficients are transformed back to the spatial domain, the uniform embedding of curve-fitting schemes generates fewer noise artifacts in the stego image, thus contributing to its improved visual quality.
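The capacity side of this argument can be illustrated by counting coefficients in the two kinds of embedding region. The following is a minimal sketch, not the scheme's actual implementation: the block size, the three boundary points (two axis cuts and a diagonal "knee"), and all function names are hypothetical values chosen only for illustration, with low frequencies assumed to sit at the top-left of the block.

```python
def boundary_row(col, knee, axis_cut):
    """Row at which a hypothetical piecewise-linear boundary crosses `col`.

    The boundary joins three assumed points, written as (col, row):
    (0, axis_cut), (knee, knee) and (axis_cut, 0). Requires
    0 < knee < axis_cut.
    """
    if col <= knee:
        return axis_cut + (knee - axis_cut) * col / knee
    if col <= axis_cut:
        return knee * (axis_cut - col) / (axis_cut - knee)
    return 0.0


def curve_mask(n, knee, axis_cut):
    """True where a coefficient lies on the high-frequency side of the curve."""
    return [[r >= boundary_row(c, knee, axis_cut) for c in range(n)]
            for r in range(n)]


def square_mask(n, knee):
    """True inside the lower-right square whose corner touches the knee."""
    return [[r >= knee and c >= knee for c in range(n)] for r in range(n)]


def count(mask):
    """Number of embeddable coefficients in a mask."""
    return sum(sum(row) for row in mask)


n, knee, axis_cut = 16, 4, 12   # illustrative values only
cf = curve_mask(n, knee, axis_cut)
sq = square_mask(n, knee)
print("square region:", count(sq), "coefficients")
print("curve region: ", count(cf), "coefficients")
```

With these assumed values the square region is fully contained in the curve-bounded region, so the curve-bounded region always offers at least as many coefficients for embedding.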

5.4 Robustness test

In this section we examine the robustness of our curve-fitting embedding scheme when the stego image is attacked by different types of additive noise as well as various geometric deformations.

First, we simulate tampering with the stego image through an information loss attack that deletes a square region of varying size. The results are shown in Fig. 18.

Next, we add salt-and-pepper noise and speckle noise to the stego image. Our embedding scheme also successfully passed the stretching and warping attacks, which are considered geometric attacks, albeit at higher levels of degradation to the extracted hidden image.
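The information loss and noise attacks described above can be simulated along the following lines. This is a sketch under stated assumptions rather than the test harness used in the experiments: images are plain lists of 8-bit grayscale rows, speckle noise is modeled as multiplicative zero-mean Gaussian noise, and all function names and parameter values are illustrative.

```python
import random


def delete_square(image, top, left, size, fill=0):
    """Information loss attack: overwrite a size x size region with `fill`."""
    attacked = [row[:] for row in image]
    for r in range(top, min(top + size, len(attacked))):
        for c in range(left, min(left + size, len(attacked[r]))):
            attacked[r][c] = fill
    return attacked


def salt_and_pepper(image, density, rng):
    """Set roughly a `density` fraction of pixels to pure black or white."""
    attacked = [row[:] for row in image]
    for row in attacked:
        for c in range(len(row)):
            if rng.random() < density:
                row[c] = 255 if rng.random() < 0.5 else 0
    return attacked


def speckle(image, variance, rng):
    """Multiplicative noise: p + p * n, with n drawn from N(0, variance)."""
    sigma = variance ** 0.5
    attacked = []
    for row in image:
        noisy = [p + p * rng.gauss(0.0, sigma) for p in row]
        # Clip back to the valid 8-bit range after adding noise.
        attacked.append([min(255, max(0, round(v))) for v in noisy])
    return attacked


rng = random.Random(0)                    # fixed seed for repeatability
stego = [[128] * 8 for _ in range(8)]     # stand-in for a stego image
cropped = delete_square(stego, top=2, left=2, size=3)
peppered = salt_and_pepper(stego, density=0.05, rng=rng)
speckled = speckle(stego, variance=0.04, rng=rng)
```

Each function returns a modified copy, so the same stego image can be passed through several attacks and the extracted secret images compared afterwards.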

Figure 19 shows the robustness test for the different types of noise applied as well as for the stretching deformation attack. Figure 20 shows the extracted hidden image after degrading the stego image with a warping geometric attack at 20°, 30°, and 40° for the “Flower” and “Handwriting” secret images. In this case the proposed method clearly falls short of extracting an acceptable hidden image, as evidenced by the low SSIM values.

6 Conclusions

This paper has introduced a Curve-Fitting (CF) approach to transform-domain steganography schemes that demonstrated improvements in capacity as well as stego visual fidelity. This work complements previous work published in the literature [32,33,34] investigating the relationship between hiding capacity and stego image quality. The new methodology was implemented as the CF-FB-GAR, CF-QTAR, CF-DWT-FB-GAR, and CF-DWT-QTAR schemes and compared against the non-curve-fitted FB-GAR, QTAR, DWT-FB-GAR, and DWT-QTAR schemes. In CF-QTAR, the idea was to segment the cover image into blocks using the quad-tree segmentation algorithm, thus forming statistically stationary regions of increasing sizes. The secret data is then hidden in the least significant areas of the DCT of each block by using a quantization step followed by piecewise linear curve fitting to three points bordering the full least significant DCT coefficient area. Experimental results and comparative evaluation have confirmed that, although the embedding capacity increased when using curve-fitting compared to earlier work, the perceptual quality also improved over previous methods. This breaks the traditional barrier that has confined the relationship between capacity and perceptibility to either higher capacities with reduced perceptual quality or higher perceptual quality at the expense of lower capacities.

References

Ahmed N, Natarajan T, Rao K (1974) Discrete cosine transform. IEEE Trans Comput 23(1):90–93

Anderson RJ, Petitcolas FA (1998) On the limits of steganography. IEEE J Sel Areas Commun 16(4):474–481

Bracamonte J, Ansorge M, Pellandini F, Farine PA (2000) Low complexity image matching in the compressed domain by using the DCT-phase. In: Proc. of the 6th COST, vol 276, pp 88–93

Bracamonte J, Ansorge M, Pellandini F, Farine PA (2005) Efficient compressed domain target image search and retrieval. In: Image and video retrieval. Springer, pp 154–163

Brisbane G, Safavi-Naini R, Ogunbona P (2005) High-capacity steganography using a shared colour palette. IEE Proc Vis Image Signal Process 152(6):787–792

Chan CK, Cheng L (2004) Hiding data in images by simple LSB substitution. Pattern Recogn 37:469–474

Chang CC, Chen TS, Chung LZ (2002) A steganographic method based upon JPEG and quantization table modification. Inf Sci 141(1):123–138

Chen B, Wornell G (2001) Quantization index modulation: a class of provably good methods for digital watermarking and information embedding. IEEE Trans Information Theory 47(4):1423–1443

Cole E (2003) Hiding in plain sight: Steganography and the art of Covert communication, 1st edn. Wiley, New York

Ebrahimpour-Komleh H, Chandran V, Sridharan S (2001) Face recognition using fractal codes. In: Proceedings of the 2001 international conference on image processing, 2001, vol 3, pp 58–61

El Safy R, Zayed H, El Dessouki A (2009) An adaptive steganographic technique based on integer wavelet transform. In: International conference on networking and media convergence, 2009. ICNM 2009, pp 111–117

Ibaida A, Khalil I (2013) Wavelet-based ECG steganography for protecting patient confidential information in point-of-care systems. IEEE Trans Biomed Eng 60(12):3322–3330

ISO/IEC (1994) Information technology-digital compression and coding of continuous-tone still images: Requirements and guidelines. Standard, ISO/IEC 10918-1

Jain A, Uludag U, Hsu R (2002) Hiding a face in a fingerprint image. In: Proc of the international conference on pattern recognition (ICPR). Quebec City, Canada

Lee Y, Chen L (2000) High capacity image steganographic model. IEE Proc, Vis Image Signal Process 147(3):288–294

Lee YK, Chen LH (2000) High capacity image steganographic model. IEE Proceedings-Vision Image and Signal Process 147(3):288–294

Leng CK, Labadin J, Juan SFS (2008) Steganography: DCT coefficients reparation technique in JPEG image. JDCTA 2(2):35–41

Lin CC, Shiu PF (2010) High capacity data hiding scheme for DCT-based images. J Infor Hiding and Multimed Signal Process 1(3):220–240

Lu P, Luo X, Tang Q, Shen L (2004) An improved sample pairs method for detection of LSB embedding. In: International workshop on information hiding. Springer, pp 116–127

Marvel LM, Boncelet CG Jr, Retter CT (1999) Spread spectrum image steganography. IEEE Trans Image Process 8(8):1075–1083

Nozaki K, Niimi M, Eason RO, Kawaguchi E (1998) A large capacity steganography using color BMP images. In: ACCV ’98: Proceedings of the third Asian conference on computer vision-Volume I. Springer, London, pp 112–119

Pavlidis G, Tsompanopoulos A, Papamarkos N, Chamzas C (2003) JPEG2000 over noisy communication channels thorough evaluation and cost analysis. Signal Process Image Commun 18(6):497–514

Qazanfari K, Safabakhsh R (2013) High-capacity method for hiding data in the discrete cosine transform domain. J Electron Imaging 22(4):043009

Qin C, Chang CC, Chiu YP (2014) A novel joint data-hiding and compression scheme based on SMVQ and image inpainting. IEEE Trans Image Process 23(3):969–978

Qin C, Chang CC, Hsu TJ (2015) Reversible data hiding scheme based on exploiting modification direction with two steganographic images. Multimed Tool Appl 74(15):5861–5872

Qin C, Chang CC, Huang YH, Liao LT (2013) An inpainting-assisted reversible steganographic scheme using a histogram shifting mechanism. IEEE Trans Circ Syst Video Technol 23(7):1109–1118

Qin C, Zhang X (2015) Effective reversible data hiding in encrypted image with privacy protection for image content. J Vis Commun Image Represent 31:154–164

Rabie T (2007) Frequency-domain data hiding based on the matryoshka principle. Special Issue on Advances in Video Processing and Security Analysis for Multimedia Communications. Int J Advan Media Commun 1(3):298–312

Rabie T (2010) Data secrecy: An FFT approach. Advanced Techniques in Multimedia Watermarking: Image, Video and Audio Applications pp 21–35. doi:10.4018/978-1-61520-903-3.ch002

Rabie T (2013) High-capacity steganography. In: 6th international congress on image and signal processing (CISP), vol 2, pp 858–863

Rabie T (2016) Color-secure digital image compression. Multimedia Tools and Applications. doi:10.1007/s11042-016-3942-9

Rabie T, Kamel I (2015) On the embedding limits of the discrete cosine transform. Multimedia Tools and Applications 75(10). doi:10.1007/s11042-015-2557-x

Rabie T, Kamel I (2016) High-capacity steganography: A global-adaptive-region discrete cosine transform approach. Multimedia Tools and Applications 76(5). doi:10.1007/s11042-016-3301-x

Rabie T, Kamel I (2016) Toward optimal embedding capacity for transform domain steganography: A quad-tree adaptive-region approach. Multimedia Tools and Applications 76(6). doi:10.1007/s11042-016-3501-4

Raja K, Chowdary C, Venugopal K, Patnaik L (2005) A secure image steganography using LSB, DCT and compression techniques on raw images. In: 2005 3rd international conference on intelligent sensing and information processing, pp 170–176

Raja K, Venugopal K, Patnaik L, et al (2006) High capacity lossless secure image steganography using wavelets. In: 2006 International conference on advanced computing and communications, pp 230–235

Rao K, Yip P (1990) Discrete cosine transform: algorithms, advantages, applications. Academic Press, Boston. ISBN 0-12-580203-X

Rodrigues J, Rios J, Puech W, et al (2004) SSB-4 system of steganography using bit 4. In: 5th international workshop on image analysis for multimedia interactive services

Roque JJ, Minguet JM (2009) SLSB: Improving the steganographic algorithm LSB. In: WOSIS, pp 57–66

Solanki K, Jacobsen N, Madhow U, Manjunath BS, Chandrasekaran S (2004) Robust image-adaptive data hiding using erasure and error correction. IEEE Trans Image Process 13(12):1627–1639

Sumathi C, Santanam T, Umamaheswari G (2014) A study of various steganographic techniques used for information hiding. arXiv:1401.5561

Wang Z, Bovik AC (2009) Mean squared error: love it or leave it? A new look at signal fidelity measures. IEEE Signal Process Mag 26(1):98–117

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612

Yang B, Schmucker M, Funk W, Busch C, Sun S (2004) Integer DCT-based reversible watermarking for images using companding technique. Proc. SPIE 5306, Security, Steganography and Watermarking of Multimedia Contents 6(405)

Acknowledgments

The authors would like to thank the five anonymous reviewers for their valuable suggestions that helped improve the original manuscript. This work was supported by the College of Graduate Studies and Research at the University of Sharjah.

Cite this article

Rabie, T., Kamel, I. & Baziyad, M. Maximizing embedding capacity and stego quality: curve-fitting in the transform domain. Multimed Tools Appl 77, 8295–8326 (2018). https://doi.org/10.1007/s11042-017-4727-5