Abstract

Due to the huge intra-class variations in visual concept detection, concept learning requires large-scale training data that covers as wide a variety of samples as possible. However, this poses great challenges both in collecting and in training on such large-scale data. In this paper, we propose a novel web image sampling approach and a novel group sparse ensemble learning approach to tackle these two problems, respectively. For data collection, to alleviate manual labeling effort, we propose a web image sampling approach based on dictionary coherence to select coherent positive samples from web images. We measure the coherence in terms of how dictionary atoms are shared, because shared atoms represent common features with regard to a given concept and are robust to occlusion and corruption. For efficient training on large-scale data, and to exploit the hidden group structures of data, we propose a novel group sparse ensemble learning approach based on Automatic Group Sparse Coding (AutoGSC). After AutoGSC, we present an algorithm that uses the reconstruction errors of data instances to calculate the ensemble gating function for ensemble construction and fusion. Experiments show that our proposed methods achieve promising results and outperform existing approaches.


1 Introduction

With the advent of the big data era, the explosive growth of visual content on the Internet presents a challenge in how to manage ever-growing multimedia collections, particularly in how to extract sufficiently accurate semantic metadata (concepts) to make them searchable [9, 11, 25]. Visual concept detection is essentially a classification task in which classifiers are learned with various features extracted from training samples to predict the presence of a certain concept in a video shot or keyframe (image) [25, 29]. Ranging from objects such as “boat” and “car” to scenes such as “sky” and “sea”, semantic concepts can serve as good intermediate semantic metadata for video content indexing and understanding [25]. Establishing a large set of robust concept detectors will yield significant improvements in many challenging applications, such as image/video search and summarization [29].

Due to the well-known semantic gap [18] between low-level visual features and users’ semantic interpretation of diversified visual data, concept detection is a challenging yet essential task that has attracted the attention of many researchers [25]. The visual content for a given concept often possesses huge variations resulting from diversified visual appearances, camera shooting and video editing styles, etc. Such huge intra-class variations hinder the performance of most machine learning approaches [25].

A promising way to cope with huge intra-class variations is to collect large-scale training data that covers as wide a variety of samples as possible. Previous studies on visual concept detection [9] and pedestrian classification [8, 14] indicate that the data matters most; this was highlighted recently by machine learning researchers who stated that “the quickest path to success is often to just get more data, and more data beats a cleverer algorithm” [7]. In order to learn effective concept detectors, a critical step is to acquire a sufficiently large amount of training samples, especially positive training samples [9]. However, collecting and labeling large-scale training data is very challenging, since data collection and manual labeling are laborious and time consuming. Fortunately, with the explosive growth of visual content on the Internet, large amounts of training samples have become available through Web searching [5, 29]. Consequently, how to utilize these abundant web images to improve concept detection has been the subject of intensive research in the multimedia research community, since it offers a promising way to annotate content automatically at relatively low cost [5, 29].

Furthermore, as the training dataset grows, training may become very time consuming, since the time complexity of many machine learning methods, such as the Support Vector Machine (SVM), is between \(O\left (n^{2}\right )\) and \(O\left (n^{3}\right )\) (n is the number of training samples) [4, 25]. This becomes infeasible when the number of training samples is very large, such as over one hundred thousand, and the feature dimension is very high. Therefore, how to train on a large-scale dataset effectively and efficiently is also a big challenge.

In this paper, we propose a novel web image sampling approach and a novel group sparse ensemble learning approach to tackle these two challenging problems, respectively. For data collection, to alleviate manual labeling effort, we propose a web image sampling approach based on dictionary coherence, which selects coherent positive samples from web images according to their degree of coherence with a given concept. We measure the coherence in terms of how dictionary atoms are shared, since shared atoms represent common features with regard to a given concept and are robust to occlusion and corruption. To this end, two kinds of dictionaries are learned through online dictionary learning: a concept dictionary learned from the key-point features of all the positive training samples, and an image dictionary learned from those of each web image. The coherence degree is then calculated as the Frobenius norm of the product matrix of the two dictionaries. For efficient training on large-scale data, and to exploit the hidden group structures of data, we propose a novel group sparse ensemble learning approach based on Automatic Group Sparse Coding (AutoGSC). We first adopt AutoGSC to learn both a common dictionary over different data groups and an individual group-specific dictionary for each data group, which helps capture the discriminative information contained in different data groups. Next, we represent each data instance by a sparse linear combination of both dictionaries. Finally, we propose an algorithm that uses the reconstruction errors of data instances to calculate the ensemble gating function for ensemble construction and fusion.

The main contribution of this paper is a novel web image sampling approach for training data collection and a novel group sparse ensemble learning approach for efficient visual concept detection. The rest of the paper is organized as follows. We first review related work on web image sampling and visual concept learning in Section 2. Then we describe our proposed web image sampling approach based on dictionary coherence and our group sparse ensemble learning method in Section 3. We describe our experiments and give the experimental results in Section 4. Finally, we conclude our work in Section 5.

2 Related work

2.1 Web image sampling

As aforementioned, how to utilize web images to improve concept detection has been the subject of intensive research by a large multimedia research community due to its relatively low cost for manual annotation [5, 29]. [29] empirically studied the effect of exploiting tagged images on concept learning by analyzing tag lists. [5] proposed an automatic concept-to-query mapping method for acquiring training data from online platforms.

However, online web images are very noisy, often cover a wide range of unpredictable content, and have data distributions quite different from those of closed datasets such as the TRECVid dataset [15, 22]. As shown in Fig. 1, for example, the content of web images retrieved from Google Image with the keyword “Airplane-flying” varies greatly. Obviously, the images in the top row of the figure are incoherent with the concept “Airplane-flying” in the TRECVid dataset. Thus these images cannot facilitate the training of the concept and may even harm it. Only the images in the second row are consistent with the dataset and hence helpful. Therefore, selecting coherent positive training samples from diffused web images is a challenging problem for training effective concept detectors [5, 20, 21], due to the cross-domain incoherence resulting from the mismatch of data distributions.

Existing work on video concept learning using web images has mainly focused on leveraging compact features, such as region-based features [21] or image salience [20], to alleviate the visual differences. Since an image is greatly reduced to a very compact feature vector, the benefit of these approaches is limited. In this paper, we propose a novel sampling approach that exploits bundles of local key-point features to measure how coherent a web image is with a given concept, from the perspective of sparse coding and dictionary learning.

2.2 Visual concept learning

Because classical methods based on global classification, such as SVM [6], the Gaussian Mixture Model [1], the Hidden Markov Model [16] and statistical active learning [27], suffer from low efficiency and poor scalability, various ensemble learning methods, such as LDA-SVM [22–24], multi-kernel ensemble learning [19] and sparse ensemble learning [25], were developed for visual concept detection; they exploit the “divide and conquer” strategy to train on large amounts of samples both effectively and efficiently.

In particular, [25] proposed an efficient sparse ensemble learning method that exploits a sparse non-negative matrix factorization process for ensemble construction and fusion. It was shown to achieve state-of-the-art performance on the TRECVid 2008 dataset. However, this approach adopts traditional sparse coding and therefore treats each data instance individually, without considering any data group information. It treats the coding of each visual feature of an image, such as its Bag of Visual Words (BoVW), as a separate problem and does not take into account that the sparse coding of each feature does not guarantee a sparse coding of all images in the dataset.

Each dataset usually consists of many categories and therefore tends to have hidden group structures [28]. Once a dictionary atom has been selected to represent an image, it may as well be used to represent other images of the same category without much additional regularization cost [3]. Therefore, it makes more sense to learn a group-level sparse representation [3]. To exploit the group structures hidden in the data set, [3] proposed Group Sparse Coding (GSC), which learns a sparse representation on the group level as well as a shared dictionary. However, GSC assumes that the data group identities are given in advance, even though they are often hidden in real-world applications, and it can only learn a common dictionary [26]. To discover the hidden data groups, [26] proposed Automatic Group Sparse Coding (AutoGSC), which learns both a common dictionary over different data groups and an individual group-specific dictionary for each data group, helping to capture the discriminative information contained in different data groups.

Inspired by the sparse ensemble learning work [25] and the advantages of AutoGSC [26] in discovering hidden structures of data, in this paper, we propose a novel group sparse ensemble learning approach based on automatic group sparse coding to exploit the hidden group structures of data.

3 Proposed approaches

Due to the huge intra-class variations in visual concept detection, concept learning requires large-scale training data that covers as wide a variety of samples as possible. However, this poses great challenges both in collecting and in training on such large-scale data. In this section, we elaborate on the details of our proposed web image sampling approach and group sparse ensemble learning approach, which tackle these two problems, respectively.

3.1 Web image sampling

3.1.1 Overview

Inspired by the observation that dictionary atoms representing features common to all categories tend to be repeated almost exactly in the dictionaries of different categories, [17] promotes incoherence between dictionary atoms to improve the speed and accuracy of sparse coding.

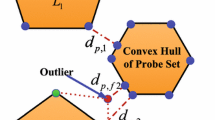

Motivated by this work, and because shared dictionary atoms learned from data represent common features with regard to a given concept (represented by the set of positive training samples) and are robust to occlusion and corruption [13], we propose to measure the degree of coherence between an image and the concept by dictionary coherence, i.e., in terms of how the image and the concept share dictionary atoms. The more atoms they share, the higher the dictionary coherence, and the more probable it is that the web image is coherent with the concept.

In order to compute the dictionary coherence, we learn two kinds of dictionaries through the online dictionary learning method [13]: a concept dictionary learned from the key-point features of all the positive training samples, and an image dictionary learned from those of each web image. The coherence degree is then calculated as the Frobenius norm of the product matrix of the two dictionaries, since it aggregates the inner products between all pairs of atoms from the two dictionaries.

On the basis of the dictionary coherence, we propose a novel adaptive sampling approach to select coherent positive samples from diffused web images for further concept learning.

3.1.2 Algorithm

As shown in the framework of Fig. 2, for each concept, the proposed sampling algorithm consists of the following steps:

- (1) Construction of concept set: Select all the positive training samples from a development dataset, such as the TRECVid development set, to represent the concept.

- (2) Feature extraction of concept set: Extract local key-point features, such as SIFT [12] or SURF [2], and collect each key-point feature \(x_{i} \in \mathbf {R}^{d}\) of all the images in the concept set to form the data matrix \(\mathbf {X_{\mathit {c}}} = [x_{1}, {\ldots } ,x_{n}] \in \mathbf {R}^{d \times n}\). Here, d is the feature dimensionality, and n is the total number of keypoints.

- (3) Concept dictionary learning: Adopt the efficient online dictionary learning method [13] to learn the concept dictionary \(\mathbf {D_{\mathit {c}}} \in \mathbf {R}^{d \times k}\) from the concept data matrix \(\mathbf {X_{\mathit {c}}}\), where k is the size of the dictionary, i.e., the number of atoms. For the SIFT feature (d=128), we set k=192, i.e., 1.5 times d, in line with choosing k at 1.5 to 2.0 times the feature dimensionality [25].

- (4) Collection of web image set: After constructing or mapping [5] a query from the concept name, search for web images and crawl the top-ranked ones.

- (5) Feature extraction of web images: For each image in the web image set, extract the same local key-point features as in step (2), and form the image data matrix \(\mathbf {X_{\mathit {i}}} \in \mathbf {R}^{d \times m}\), where m is the number of keypoints in the image.

- (6) Image dictionary learning: Adopt the same dictionary learning method [13] to learn the image dictionary \(\mathbf {D_{\mathit {i}}} \in \mathbf {R}^{d \times k}\) from the image data matrix \(\mathbf {X_{\mathit {i}}}\).

- (7) Dictionary coherence computing: Use (2) in subsection 3.1.4 to compute the dictionary coherence \(C_{i}\) between the image dictionary \(\mathbf {D_{\mathit {i}}}\) and the concept dictionary \(\mathbf {D_{\mathit {c}}}\).

- (8) Adaptive sampling: Compare the dictionary coherence \(C_{i}\) of the current web image with the adaptive threshold of subsection 3.1.5 to determine whether to add the current web image to the training set.

As shown in Fig. 2, after adding the selected coherent positive web samples (a manual check is advised to ensure it is positive) to the training set, we can do further concept learning for training more effective concept detectors. We will detail the key procedures in the following subsections.

3.1.3 Dictionary learning

In our study, we use the efficient online learning method [13] to learn the dictionary. Owing to the advantage of non-negativity constraints in learning part-based representations [25], which is helpful for object-oriented concept learning, we impose positivity constraints on both the dictionary \(\mathbf {D}\) and the sparse codes \(\alpha_{i}\) when solving the optimization problem below:

while restricting the atoms to have a norm of at most one. The optimization is achieved through an iterative approach that alternates between two steps: the sparse coding step with \(\mathbf {D}\) fixed and the dictionary update step with the \(\alpha_{i}\) fixed [13]. As mentioned above, we learn two types of dictionaries: (1) a concept dictionary \(\mathbf {D_{\mathit {c}}}\); (2) an image dictionary \(\mathbf {D_{\mathit {i}}}\).
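The alternating scheme above can be illustrated with a short NumPy sketch. It is a minimal batch sketch, not the online algorithm of [13]: it assumes the standard objective \(\min_{\mathbf{D}\ge 0,\,\alpha_i\ge 0}\sum_i \tfrac{1}{2}\|x_i-\mathbf{D}\alpha_i\|_2^2+\lambda\|\alpha_i\|_1\) with every atom satisfying \(\|\mathbf{d}_j\|_2\le 1\), solves the sparse coding step with projected ISTA, and replaces the dictionary update of [13] with a single projected gradient step. The function names, \(\lambda=0.1\) and the iteration counts are hypothetical.

```python
import numpy as np

def nonneg_sparse_code(X, D, lam=0.1, n_iter=200):
    """Non-negative codes A (k x n) for the columns of X (d x n) on D (d x k).

    Projected ISTA on 0.5 * ||X - D A||_F^2 + lam * sum(A) subject to A >= 0;
    on the non-negative orthant the l1 norm equals sum(A).
    """
    L = np.linalg.norm(D, 2) ** 2 + 1e-12          # Lipschitz constant of the gradient
    A = np.zeros((D.shape[1], X.shape[1]))
    for _ in range(n_iter):
        grad = D.T @ (D @ A - X)
        A = np.maximum(0.0, A - (grad + lam) / L)  # gradient step, shift by lam/L, clip
    return A

def learn_nonneg_dictionary(X, k, lam=0.1, n_outer=30, seed=0):
    """Alternate sparse coding (D fixed) and a dictionary update (A fixed),
    keeping D >= 0 and every atom's l2 norm <= 1."""
    rng = np.random.default_rng(seed)
    D = np.abs(rng.standard_normal((X.shape[0], k)))
    D /= np.linalg.norm(D, axis=0, keepdims=True)
    for _ in range(n_outer):
        A = nonneg_sparse_code(X, D, lam)
        # One projected gradient step on 0.5 * ||X - D A||_F^2 with respect to D.
        L = np.linalg.norm(A, 2) ** 2 + 1e-12
        D = np.maximum(0.0, D - (D @ A - X) @ A.T / L)
        # Project each atom onto the unit l2 ball (only atoms with norm > 1 shrink).
        D /= np.maximum(np.linalg.norm(D, axis=0, keepdims=True), 1.0)
    return D
```

For SIFT descriptors stacked as the columns of a hypothetical array `X_c`, `learn_nonneg_dictionary(X_c, k=192)` would return a \(128\times 192\) concept dictionary, and the same call on an image's \(\mathbf{X}_i\) gives \(\mathbf{D}_i\). Clipping after the gradient step and then rescaling over-long atoms is the exact projection onto the set of non-negative atoms with norm at most one.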

3.1.4 Dictionary coherence computing

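The natural way to measure the degree of coherence \(C_{i}\) between the image dictionary \(\mathbf {D_{\mathit {i}}}\) and the concept dictionary \(\mathbf {D_{\mathit {c}}}\) is to inspect the product matrix \(\mathbf {D^{T}_{\mathit {i}}}\mathbf {D_{\mathit {c}}}\), where the superscript T denotes matrix transposition. This is because each element \(d_{pq}\) of the product matrix is the inner product between a pair of atoms from the two dictionaries, i.e., \(d_{pq}=\mathbf{d}_{p}\cdot \mathbf{d}_{q}\), where \(\mathbf{d}_{p}\) is the p-th atom of \(\mathbf {D_{\mathit {i}}}\) and \(\mathbf{d}_{q}\) is the q-th atom of \(\mathbf {D_{\mathit {c}}}\). Therefore, as shown in (2), we compute the dictionary coherence \(C_{i}\) as the Frobenius norm of the product matrix, i.e., the square root of the sum of the absolute squares of its elements \(d_{pq}\):

\[ C_{i} = \left\| \mathbf {D^{T}_{\mathit {i}}}\mathbf {D_{\mathit {c}}} \right\|_{F} = \sqrt{\sum_{p=1}^{k}\sum_{q=1}^{k}\left| d_{pq} \right|^{2}}, \qquad (2) \]

where the subscript F denotes the Frobenius norm.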
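Equation (2) is essentially a one-liner in NumPy. The sketch below assumes that both dictionaries store their atoms as columns (\(d\times k\)), as in steps (3) and (6); the function name is hypothetical.

```python
import numpy as np

def dictionary_coherence(D_i: np.ndarray, D_c: np.ndarray) -> float:
    """Dictionary coherence C_i = ||D_i^T D_c||_F.

    Entry (p, q) of D_i^T D_c is the inner product between atom p of the
    image dictionary D_i and atom q of the concept dictionary D_c, so the
    Frobenius norm aggregates all pairwise atom similarities.
    """
    if D_i.shape[0] != D_c.shape[0]:
        raise ValueError("both dictionaries must have atoms in the same R^d")
    return float(np.linalg.norm(D_i.T @ D_c, ord="fro"))
```

For two identical dictionaries of four orthonormal atoms, \(\mathbf{D}_i^{T}\mathbf{D}_c\) is the \(4\times 4\) identity and \(C_i=2\); for dictionaries whose atoms are mutually orthogonal, \(C_i=0\). With non-negative atoms of norm at most one, as required in subsection 3.1.3, every entry lies in \([0,1]\), so \(C_i\le k\).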

3.1.5 Adaptive sampling

After computing the dictionary coherence \(C_{i}\) between the current web image and the concept, we can easily determine whether to add the current web image to the training set by comparing \(C_{i}\) with a threshold \(C_{th}\). If \(C_{i} \ge C_{th}\), meaning that the web image is coherent with the concept, we accept it; otherwise, we discard it.

Instead of fixing \(C_{th}\) by hand, we propose an adaptive off-line method that calculates it automatically from the distribution of the coherence degrees of all the positive training samples. Following the theory of hypothesis testing, the threshold \(C_{th}\) is adaptively determined by:

where \(\mu\) and \(\sigma\) are the mean and standard deviation of all the coherence degrees \(C_{Pos}\) between each positive training sample and the concept, and \(\eta\) is an empirical parameter that can be set once for all concepts. In our experiments, we set \(\eta =\sqrt 3\).
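The sampling decision can be sketched with the Python standard library alone. The sketch ASSUMES the threshold takes the one-sided lower-bound form \(C_{th}=\mu-\eta\sigma\), which accepts any web image whose coherence is not unusually low compared with the positive training samples, and it uses the population standard deviation; both choices and the function names are hypothetical.

```python
import math
import statistics
from typing import List, Sequence

def adaptive_threshold(pos_coherences: Sequence[float],
                       eta: float = math.sqrt(3)) -> float:
    """C_th from the coherence degrees C_Pos of the positive training samples.

    ASSUMPTION: C_th = mu - eta * sigma (lower tail of the C_Pos distribution).
    """
    mu = statistics.mean(pos_coherences)
    sigma = statistics.pstdev(pos_coherences)  # population std (assumption)
    return mu - eta * sigma

def select_web_images(web_coherences: Sequence[float],
                      pos_coherences: Sequence[float],
                      eta: float = math.sqrt(3)) -> List[int]:
    """Indices of web images with C_i >= C_th (accepted); the rest are discarded."""
    c_th = adaptive_threshold(pos_coherences, eta)
    return [idx for idx, c in enumerate(web_coherences) if c >= c_th]
```

With \(C_{Pos}=[1,2,3,4,5]\) this gives \(\mu=3\), \(\sigma=\sqrt{2}\) and \(C_{th}=3-\sqrt{6}\approx 0.551\), so web images with coherence 0.5, 0.6 and 3.0 yield the selection `[1, 2]`.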

3.2 Group sparse ensemble learning

3.2.1 Problem formulation

Ensemble learning refers to the process of combining multiple classifiers to provide a single, unified classification decision [25]. Recent research has demonstrated that a good ensemble of localized classifiers can outperform a single (best) classifier learned over the entire dataset [7, 25]. Additionally, learning a set of “smaller” localized classifiers is usually more efficient in terms of algorithmic complexity than learning one global classifier, which has motivated researchers to adopt the ensemble learning approach for concept detection [25]. As [7] advises, “learn many models not just one”.

In visual concept detection, an image or a keyframe of a video shot is processed to detect the presence of a set of predefined concepts. Without loss of generality, we assume that the data instances are represented as vectors, such as the visual feature vectors of keyframes. Mathematically, we denote the observed data matrix as \(\mathbf{X}=[x_{1},x_{2},\ldots,x_{n}]\in \mathbf{R}^{d\times n}\), where \(x_{i}\in \mathbf{R}^{d}\) represents the i-th data instance vector with dimensionality d. For each concept, we have the labels \(Y=\{y_{i}\in \{\pm 1\},\ i=1,2,\ldots,n\}\) for the training data matrix \(\mathbf{X}\). Consequently, with binary classification in the SVM framework, the ensemble discriminant function \(F(x_{t})\) for a given test sample \(x_{t}\) is [25]:

where k localized classifiers are built on instance localities \(\pi_{c}\), and \(\Psi_{c}(x_{t})\) are the gating functions that govern how the localized classifiers are coordinated for the final classification of test sample \(x_{t}\). Learning the ensemble discriminant function \(F(x_{t})\), i.e., ensemble construction, can be decomposed into two steps [25]: (1) learning the instance localities \(\pi_{c}\) and the gating functions \(\Psi_{c}(x_{t})\), and (2) training the individual classifiers to estimate the kernel classifier parameters, such as the optimal classification hyperplane parameters \(\beta_{i}, b_{c}\).
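Assuming each localized classifier takes the standard kernel-SVM form, the ensemble discriminant function can be written as \(F(x_t)=\sum_{c=1}^{k}\Psi_c(x_t)\big(\sum_{x_i\in\pi_c}\beta_i y_i K(x_i,x_t)+b_c\big)\) and evaluated as in the sketch below with an RBF kernel \(K\). The class and function names, the storage of the products \(\beta_i y_i\) as one coefficient array, and the value of \(\gamma\) are hypothetical.

```python
from dataclasses import dataclass
from typing import Mapping, Sequence

import numpy as np

@dataclass
class LocalSVM:
    """One localized kernel classifier f_c trained on locality pi_c."""
    support_vectors: np.ndarray  # (m, d) support vectors from pi_c
    dual_coef: np.ndarray        # (m,) products beta_i * y_i (assumed layout)
    bias: float                  # b_c
    gamma: float = 1.0           # RBF kernel width (hypothetical value)

    def decision(self, x: np.ndarray) -> float:
        # f_c(x) = sum_i beta_i y_i exp(-gamma * ||x_i - x||^2) + b_c
        sq_dist = np.sum((self.support_vectors - x) ** 2, axis=1)
        return float(self.dual_coef @ np.exp(-self.gamma * sq_dist) + self.bias)

def ensemble_decision(x_t: np.ndarray,
                      classifiers: Sequence[LocalSVM],
                      gates: Mapping[int, float]) -> float:
    """F(x_t) = sum_c Psi_c(x_t) * f_c(x_t), evaluating only non-zero gates."""
    return sum(w * classifiers[c].decision(x_t) for c, w in gates.items() if w != 0.0)
```

Because \(\Psi(x_t)\) has only a few non-zero entries, passing it as a sparse mapping means only those local classifiers are ever evaluated for a test sample.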

3.2.2 Framework of group sparse ensemble learning

Here, we propose to construct the ensemble through AutoGSC [26] to take advantage of the hidden group structures of data. The overall framework is illustrated in Fig. 3.

Specifically, as shown in the figure, after feature extraction, we use AutoGSC to learn both a common shared dictionary \(\mathbf{D}^{S}\) over different data groups and the k individual group-specific dictionaries \(\left \{ \mathbf {D}_{c}^{I} \right \}_{c=1}^{k}\), which help capture the discriminative information contained in the different data groups. We then represent each data instance \(x_{i}\) by a sparse linear combination of both dictionaries, i.e., we obtain the shared sparse code matrices \(\left \{ \mathbf {G}_{c}^{S} \right \}_{c=1}^{k}\) from the common shared dictionary \(\mathbf{D}^{S}\) and the individual sparse code matrices \(\left \{ \mathbf {G}_{c}^{I} \right \}_{c=1}^{k}\) from the individual group-specific dictionaries \(\left \{ \mathbf {D}_{c}^{I} \right \}_{c=1}^{k}\) for the data matrix \(\mathbf{X}\). Finally, we compute the reconstruction errors of the group sparse coding for each data instance and use them to calculate the gating functions \(\Psi_{c}(x_{t})\), which are used for instance grouping during ensemble construction and for fusing the individual classification results during testing.

The following subsections will detail the gating function calculation with AutoGSC, ensemble construction and fusion, and complexity analysis.

3.2.3 Gating function calculation with AutoGSC

AutoGSC tries to discover the hidden group structures of data by solving the optimization problem under the following non-negativity constraints [26]:

where the normalized matrices \(\mathbf{D}^{S}\) and \(\left \{ \mathbf {D}_{c}^{I} \right \}_{c=1}^{k}\) to be solved are the common shared dictionary and the k individual group-specific dictionaries on each group locality \(\pi_{c}\), respectively. The matrices \(\left \{ \mathbf {G}_{c}^{S} \right \}_{c=1}^{k}\) and \(\left \{ \mathbf {G}_{c}^{I} \right \}_{c=1}^{k}\) to be solved are the sparse code (i.e., reconstruction coefficient) matrices decoded by the two dictionaries, respectively. In order to achieve group sparsity, the second term imposes a regularization on the sparse codes, where the function \(\phi\) computes the ℓ1-norms of the row vectors of the input matrix.

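After merging the two kinds of dictionaries and the corresponding sparse codes by:

\[ \mathbf{D}_{c} = \left[ \mathbf{D}^{S},\ \mathbf{D}_{c}^{I} \right], \qquad \mathbf{G}_{c} = \begin{bmatrix} \mathbf{G}_{c}^{S} \\ \mathbf{G}_{c}^{I} \end{bmatrix}, \qquad (6) \]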

the objective function \(\hat {\mathcal {J}}_{0}\) of the optimization problem (5) can be rewritten as:

where \(\mathbf{D}_{c\cdot j}\) is the j-th column of \(\mathbf{D}_{c}\), and \(G_{c}^{ij}\) is the (i,j)-th entry of \(\mathbf{G}_{c}\).

AutoGSC uses a Lloyd-style algorithm [26] to solve the problem by alternating between updating the dictionaries and updating the sparse codes.
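The assignment half of such a Lloyd-style iteration can be sketched as follows: each instance is coded on every merged dictionary \(\mathbf{D}_c=[\mathbf{D}^S,\mathbf{D}^I_c]\) and sent to the locality with the smallest reconstruction error. This is a simplified sketch under stated assumptions: it codes each instance with a plain non-negative ℓ1 penalty (projected ISTA) instead of the row-wise group regularizer \(\phi\), and it omits the dictionary update half. All names and \(\lambda=0.05\) are hypothetical.

```python
import numpy as np

def nn_code(x, D, lam=0.05, n_iter=300):
    """Non-negative l1-regularized code of one instance x on D (projected ISTA)."""
    L = np.linalg.norm(D, 2) ** 2 + 1e-12
    g = np.zeros(D.shape[1])
    for _ in range(n_iter):
        g = np.maximum(0.0, g - (D.T @ (D @ g - x) + lam) / L)
    return g

def reconstruction_errors(x, D_S, D_I_list, lam=0.05):
    """Squared reconstruction error of x under each merged dictionary [D_S, D_c^I]."""
    errs = []
    for D_I in D_I_list:
        D_c = np.hstack([D_S, D_I])  # shared atoms first, then group-specific ones
        g = nn_code(x, D_c, lam)
        errs.append(float(np.sum((x - D_c @ g) ** 2)))
    return np.array(errs)

def assign_groups(X, D_S, D_I_list, lam=0.05):
    """Assignment step: each column of X (d x n) goes to the locality whose
    merged dictionary reconstructs it best. Returns labels and the (n, k) errors."""
    E = np.array([reconstruction_errors(x, D_S, D_I_list, lam) for x in X.T])
    return E.argmin(axis=1), E
```

The error matrix `E` returned here is exactly the kind of per-locality information that the gating function calculation below builds on.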

Since AutoGSC searches each locality π c to obtain the group identity of a data instance x i with the minimum reconstruction error, we propose to use the reconstruction error to calculate the gating function vector \({\Psi }(x_{i})=\{ {\Psi }_{c}(x_{i})\}_{c=1}^{k}\) in the four steps shown in Fig. 4. This makes our proposed algorithm very different from the gating function calculation method proposed in [25], which uses the sparse code to obtain the gating function directly.

To achieve high efficiency, especially at test time, we adopt the Instance-Locality Assignment Algorithm proposed in [24, 25], which scans the membership vector sorted in descending order, detects a sharp decrease between two adjacent elements, and removes the remaining small elements. In this paper, we restrict the maximum number of groups to which a data instance \(x_{i}\) can be assigned with the input replication parameter l, i.e., an instance can be allocated to at most l group localities. After calling the algorithm, the returned gating function vector \(\Psi(x_{i})\) has only r (\(r\le l\ll k\)) non-zero elements, which means \(x_{i}\) is allocated to only r groups at the same time.
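One hypothetical way to turn reconstruction errors into a sparse gating vector in the spirit of this subsection is sketched below; it is not the procedure of Fig. 4 or the exact Instance-Locality Assignment Algorithm of [24, 25]. Memberships are a softmax of negative errors (an assumption, with a hypothetical temperature `tau`), sorted in descending order, capped at \(l\) entries, and cut at the first adjacent pair whose ratio falls below a hypothetical `drop_ratio`; the survivors are renormalized to sum to one.

```python
import numpy as np

def gating_from_errors(errors, l=20, drop_ratio=0.5, tau=1.0):
    """Hypothetical mapping from per-locality reconstruction errors to a sparse
    gating vector Psi(x_i) with r <= l non-zero entries summing to one."""
    errors = np.asarray(errors, dtype=float)
    # Smaller error -> larger membership: softmax of -error / tau (assumption).
    m = np.exp(-(errors - errors.min()) / tau)
    m /= m.sum()
    order = np.argsort(-m)[:l]             # descending memberships, capped at l
    keep = [order[0]]
    for prev, cur in zip(order[:-1], order[1:]):
        if m[cur] < drop_ratio * m[prev]:  # sharp decrease between neighbours
            break                          # discard this and all smaller entries
        keep.append(cur)
    psi = np.zeros_like(m)
    psi[keep] = m[keep] / m[keep].sum()    # renormalize the r surviving gates
    return psi
```

With \(l=20\) and \(k=800\), as in Section 4.3, each \(\Psi(x_i)\) produced this way keeps at most 20 of 800 entries.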

3.2.4 Ensemble construction and fusion

For ensemble construction, we use \(\Psi_{c}(x_{i})\) to allocate each training data instance \(x_{i}\) to r group localities \(\pi_{c}\) out of all k hidden groups, and use SVM to train the individual classifiers, i.e., to estimate the kernel classifier parameters, such as the optimal classification hyperplane parameters \(\beta_{i}, b_{c}\).
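Ensemble construction thus reduces to grouping training instances by the non-zero columns of their gating vectors and fitting one classifier per group. In the sketch below, rows of `X` are instances (unlike the column convention of \(\mathbf{X}\) in the text), the trainer is a caller-supplied function (an RBF-kernel SVM in the paper), and skipping localities that contain only one label is an assumption of this sketch, since an SVM cannot be fit on a single class. All names are hypothetical.

```python
from typing import Any, Callable, Dict, List

import numpy as np

def build_localities(Psi: np.ndarray) -> List[np.ndarray]:
    """pi[c] = indices of training instances whose gate Psi_c(x_i) is non-zero."""
    return [np.flatnonzero(Psi[:, c]) for c in range(Psi.shape[1])]

def train_ensemble(X: np.ndarray, y: np.ndarray, Psi: np.ndarray,
                   train_fn: Callable[[np.ndarray, np.ndarray], Any]) -> Dict[int, Any]:
    """Fit one local classifier per locality.

    X: (n, d) instances, y: (n,) labels in {-1, +1}, Psi: (n, k) gating matrix.
    train_fn(X_c, y_c) is supplied by the caller (e.g. an RBF-kernel SVM).
    """
    models: Dict[int, Any] = {}
    for c, idx in enumerate(build_localities(Psi)):
        if idx.size == 0 or np.unique(y[idx]).size < 2:
            continue  # assumption: skip empty or single-class localities
        models[c] = train_fn(X[idx], y[idx])
    return models
```

In this sketch, a test-time gate that points to a skipped locality would have to be dropped and the remaining gates renormalized.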

For ensemble fusion, we use \(\Psi_{c}(x_{t})\) in (4) to coordinate the classification results of the related r group localities and obtain the ensemble discriminant function \(F(x_{t})\) for a given test sample \(x_{t}\).

3.2.5 Complexity analysis

Similar to the computational complexity analysis in [25], since the theoretical computational complexity of SVM training is between \(O\left (n^{2}\right )\) and \(O\left (n^{3}\right )\) (n is the number of training samples), depending on the value of the hyper-parameter C [25], we can greatly improve the training efficiency with AutoGSC by assigning each training sample to at most l hidden groups. Specifically, a group locality \(\pi_{c}\) contains on average at most \(nl/k\) training samples, and we need to train k individual classifiers in the ensemble. Therefore, the training cost is reduced to only \(l^{2}/k\) to \(l^{3}/k^{2}\) (\(l\ll k\)) times that of global classification. For testing, since we invoke at most l individual classifiers in the ensemble for a given test sample, and these classifiers are compact, with far fewer support vectors than a global classifier, the testing efficiency is also greatly improved compared with global classification. Thus we conclude that our proposed group sparse ensemble learning method is efficient in both training and testing.
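The ratios above follow from simple arithmetic: k classifiers trained on about \(nl/k\) samples each cost \(k\,(nl/k)^p\), against \(n^p\) for one global SVM, i.e., \(k\,(l/k)^p\) times as much. The helper below (hypothetical name) evaluates this for the setting used in Section 4.3, \(k=800\) and \(l=20\).

```python
def ensemble_cost_ratio(k: int, l: int, p: float) -> float:
    """Training cost of k local SVMs, each on about n*l/k samples, relative to
    one global SVM on n samples, when SVM training scales as O(n**p)."""
    return k * (l / k) ** p

for p in (2, 3):
    print(f"p={p}: {ensemble_cost_ratio(800, 20, p):.4f}")
```

This prints 0.5000 for \(p=2\) (\(l^2/k\)) and 0.0125 for \(p=3\) (\(l^3/k^2\)): a 2× saving when training is close to quadratic and an 80× saving when it is close to cubic. Since each instance joins only \(r\le l\) groups, these figures are upper bounds on the relative cost.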

4 Experiments and results

4.1 Experiment setup

To evaluate the performance of our proposed web image sampling and group sparse ensemble learning methods, we selected the same TRECVid 2008 video benchmark collection [15] as [25] to conduct our experiments. TRECVid is now widely regarded as the de facto standard for evaluating the performance of concept-based video retrieval systems [25]. The number of positive training samples for each concept in the TRECVid 08 development set is shown in the column “#DPos” of Table 1 [25]. Refer to [15, 25] for more details about the dataset.

4.2 Web image sampling

First, we used the Google API to search and download the top 1000 web images for each concept by constructing a query with the concept name. Then we annotated the images manually; the number of positive samples for each concept in the initial web image set is shown in the column “#WPos” of Table 1. Finally, we used our proposed sampling method to select the positive samples for each concept; the number of positive samples for each concept selected from the web images is shown in the column “#SPos” of Table 1. To test the effectiveness of our proposed method, we performed three runs for each concept:

- [Baseline]: Use only the positive training samples in the TRECVid 08 development set (“#DPos” in Table 1).

- [AddWeb]: Use the positive training samples of the TRECVid 08 development set and the initial positive web image set (“#DPos+#WPos” in Table 1).

- [Sampling]: Use the positive training samples of the TRECVid 08 development set and the web image set after the proposed sampling (“#DPos+#SPos” in Table 1).

In the above runs, we used SIFT features [12] for dictionary learning during sampling, and the well-known BoW feature [10] based on soft-weighting of SIFT for concept detection, due to its widely reported effectiveness [25].

Figure 5 compares the AP for each concept and the mean AP (MAP) of the three runs. As shown, the proposed run [Sampling] achieved the highest MAP of 0.144, which is 9.92 % higher than the run [Baseline] (MAP 0.131) and 6.67 % higher than the run [AddWeb] without sampling (MAP 0.135). In particular, the proposed method outperformed the others on 9 out of 20 concepts, including Airplane-flying, Dog, Telephone, Demonstration-Or-Protest, Hand, and Flower, for which sufficient visually coherent positive samples were selected, while little was gained for concepts such as Harbor, Kitchen, Bridge, and Emergency-Vehicle, because the appearance of these concepts in the old documentary TRECVid videos may be too outdated for enough matching positive web samples to be obtained. On the other hand, the run [AddWeb] achieved only a 3.05 % improvement in MAP over the run [Baseline].
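The relative improvements quoted above can be checked directly from the MAP values of the three runs; the helper name is hypothetical.

```python
def relative_gain(new_map: float, old_map: float) -> float:
    """Relative MAP improvement of new_map over old_map, in percent."""
    return 100.0 * (new_map - old_map) / old_map

runs = {"Baseline": 0.131, "AddWeb": 0.135, "Sampling": 0.144}
for ref in ("Baseline", "AddWeb"):
    print(f"Sampling vs {ref}: {relative_gain(runs['Sampling'], runs[ref]):.2f} %")
print(f"AddWeb vs Baseline: {relative_gain(runs['AddWeb'], runs['Baseline']):.2f} %")
```

The three printed values are 9.92 %, 6.67 % and 3.05 %, matching the figures in the text.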

Compared with the best runs in TRECVid 2008 [10], significant improvement was obtained for concepts with few TRECVid positive training samples. The experimental results show that the proposed approach achieves a consistent overall improvement despite cross-domain incoherence.

4.3 Group sparse ensemble learning

To test our proposed group sparse ensemble learning method, we conducted comparison experiments with the sparse ensemble learning (SEL) method proposed by [25]. We used the same VIREO-374 BoVW features released by [10] as [25] to train and test our system. Additionally, for direct comparison, we used the same parameters as [25], namely the number of localities k=800, the replication parameter l=20 and the RBF kernel for SVM, as well as the same evaluation criterion, InfAP.

First, to verify the effectiveness of our ensemble fusion method based on the proposed gating function calculation algorithm, we compared it with average fusion. InfAP of each concept and MAP yielded by the two fusion schemes are shown in Fig. 6. It shows that our fusion method, based on the proposed gating function calculation algorithm, yields MAP of 0.147, 6.5% higher than average fusion (MAP=0.138). We can also see that the proposed method clearly outperforms average fusion on most of the concepts, such as “Dog”, “Airplane-flying”, “Cityscape”, “Street”, “Hand”, “Mountain”, “Nighttime”. This superiority does not extend to rare concepts with too few positive training samples such as “Bus” and “Emergency-Vehicle”; their detection rates are low and unstable. Thus we can conclude that our fusion method is effective.

Next, we compared our method with the SEL method [25] and with global SVM classification (Single-SVM). Ours yielded a MAP of 0.147, an improvement of 4.3 % and 12.2 % over SEL (MAP=0.141) and Single-SVM (MAP=0.131), respectively. Figure 7 compares InfAP for each concept. From the figure, we can see that the proposed method clearly outperforms SEL and Single-SVM on 11 out of 20 concepts, including both scene concepts like “Cityscape”, “Harbor”, “Street”, “Mountain”, “Nighttime” and object concepts like “Dog”, “Airplane-flying”, “Two-people”, “Telephone”, “Hand”, “Flower”. For the remaining concepts, such as “Driver” and “Demonstration-Or-Protest”, SEL performs better, which we attribute to the diversified patterns of these concepts. Our conjecture is that the instances of the concepts on which our method performs best are less diversified and tend to form well-separated groups. This reflects the advantage of group sparse coding in discovering hidden group structures.

Additionally, our experiments show that the time cost of our method is almost the same as that of the SEL method.

The above results show that the ensemble learning proposal has achieved promising results and can outperform existing approaches.

5 Conclusion

In this paper, we propose a novel web image sampling approach and a novel group sparse ensemble learning approach to tackle the two challenging problems of large-scale data collection and training, respectively. For data collection, to alleviate manual labeling effort, we propose a web image sampling approach based on dictionary coherence to select coherent positive samples from web images. For efficient training on large-scale data, and to exploit the hidden group structures of data, we propose a novel group sparse ensemble learning approach based on Automatic Group Sparse Coding (AutoGSC). Experiments show that our proposed methods achieve promising results and outperform existing approaches.

References

Amir A, Berg M, Chang S-F, Hsu W, Iyengar G, Lin C-Y, Naphade M, Natsev AP, Neti C, Nock H, Smith JR, Tseng B, Wu Y, Zhang D (2003) IBM research TRECVID-2003 video retrieval system. In: NIST TRECVID Workshop, Nov 2003

Bay H, Ess A, Tuytelaars T, Gool LV (2008) SURF: Speeded up robust features. Comput Vis Image Underst 110(3):346–359

Bengio S, Pereira F, Singer Y, Strelow D (2009) Group sparse coding. In: Neural Information Processing Systems (NIPS 2009)

Bordes A, Ertekin S, Weston J, Bottou L (2005) Fast kernel classifiers with online and active learning. J Mach Learn Res 6:1579–1619

Borth D, Ulges A, Breuel TM (2011) Automatic concept-to-query mapping for web-based concept detector training. In: ACM Multimedia 2011, pp 1453–1456

Cao J, Lan Y, Li J, Li Q, Li X, Lin F, Liu X, Luo L, Peng W, Wang D, Wang H, Wang Z, Xiang Z, Yuan J, Zhang B, Zhang J, Zhang L, Zhang X, Zheng W (2006) Intelligent multimedia group of Tsinghua University at TRECVID 2006. In: NIST TRECVID Workshop, Nov 2006

Domingos P (2012) A few useful things to know about machine learning. Commun ACM 55(10):78–87

Enzweiler M, Gavrila DM (2009) Monocular pedestrian detection: Survey and experiments. IEEE Trans Pattern Anal Mach Intell 31:2179–2195

Huiskes MJ, Thomee B, Lew MS (2010) New trends and ideas in visual concept detection: the MIR Flickr retrieval evaluation initiative. In: Proceedings of the International Conference on Multimedia Information Retrieval (MIR 2010), pp 527–536

Jiang Y-G, Yang J, Ngo C-W, Hauptmann AG (2010) Representations of keypoint-based semantic concept detection: A comprehensive study. IEEE Trans Multimed 12(1):42–53

Li H, Wang X, Tang J, Zhao C (2013) Combining global and local matching of multiple features for precise retrieval of item images. ACM/Springer Multimed Syst J 19(1):37–49

Lowe DG (2004) Distinctive image features from scale-invariant keypoints. Int J Comput Vis 60(2):91–110

Mairal J, Bach F, Ponce J, Sapiro G (2010) Online learning for matrix factorization and sparse coding. J Mach Learn Res 11:19–60

Munder S, Gavrila D (2006) An experimental study on pedestrian classification. IEEE Trans Pattern Anal Mach Intell 28:1863–1868

Over P, Awad G, Rose RT, Fiscus JG, Kraaij W, Smeaton AF (2008) TRECVID 2008: goals, tasks, data, evaluation mechanisms and metrics. In: NIST TRECVID Workshop

Pytlik B, Ghoshal A, Karakos D, Khudanpur S (2005) TRECVID 2005 experiment at Johns Hopkins University: using hidden Markov models for video retrieval. In: NIST TRECVID Workshop, Nov 2005

Ramirez I, Sprechmann P, Sapiro G (2010) Classification and clustering via dictionary learning with structured incoherence and shared features. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2010), pp 3501–3508

Smeulders AWM, Worring M, Santini S, Gupta A, Jain R (2000) Content-based image retrieval at the end of the early years. IEEE Trans Pattern Anal Mach Intell 22(12):1349–1380

Song Y, Zheng Y-T, Tang S, Zhou X, Zhang Y, Lin S, Chua T-S (2011) Localized multiple kernel learning for realistic human action recognition in videos. IEEE Trans Circuits Syst Video Technol 21(9):1193–1202

Sun Y, Kojima A (2011) A novel method for semantic video concept learning using web images. In: ACM Multimedia 2011, pp 1081–1084

Sun Y, Shimada S, Taniguchi Y, Kojima A (2008) A novel region-based approach to visual concept modeling using web images. In: ACM Multimedia 2008, pp 635–638

Tang S, Li J-T, Li M, Xie C, Liu Y, Tao K, Xu S-X (2008) TRECVID 2008 high-level feature extraction by MCG-ICT-CAS. In: NIST TRECVID Workshop, Nov 2008

Tang S, Li J-T, Zhang Y-D, Xie C, Li M, Liu Y, Hua X, Zheng Y-T, Tang J, Chua T-S (2009) PornProbe: an LDA-SVM based pornography detection system. In: ACM Multimedia 2009, Oct 2009

Tang S, Zheng Y-T, Cao G, Zhang Y-D, Li J-T (2012) Ensemble learning with LDA topic models for visual concept detection. Multimedia - A Multidisciplinary Approach to Complex Issues, pp 175–200

Tang S, Zheng Y-T, Wang Y, Chua T-S (2012) Sparse ensemble learning for concept detection. IEEE Trans Multimed 14(1):43–54

Wang F, Lee N, Sun J, Hu J, Ebadollahi S (2011) Automatic group sparse coding. In: Twenty-Fifth AAAI Conference on Artificial Intelligence, Aug 2011

Zha Z-J, Wang M, Zheng Y-T, Yang Y, Hong R, Chua T-S (2012) Interactive video indexing with statistical active learning. IEEE Trans Multimed 14(1):17–27

Zha Z-J, Zhang H, Wang M, Luan H, Chua T-S (2013) Detecting group activities with multi-camera context. IEEE Trans Circuits Syst Video Technol 23(5):856–869

Zhu S, Wang G, Ngo C-W, Jiang Y-G (2010) On the sampling of web images for learning visual concept classifiers. In: Proceedings of the 9th ACM International Conference on Image and Video Retrieval (CIVR 2010), Xi’an, China, pp 50–57

Additional information

The preliminary version of this paper was partly published in the Pacific-Rim Conference on Multimedia (PCM 2013), and partly in the 19th International Conference on Multimedia Modeling (MMM 2013).

About this article

Cite this article

Sun, Y., Sudo, K. & Taniguchi, Y. Visual concept detection of web images based on group sparse ensemble learning. Multimed Tools Appl 75, 1409–1425 (2016). https://doi.org/10.1007/s11042-014-2179-8

DOI: https://doi.org/10.1007/s11042-014-2179-8