Abstract

A novel content-based motion descriptor is proposed. First, multi-view images are captured to represent motion, and a Switching Kalman Filter Model (S-KFM), a type of Dynamic Bayesian Network (DBN), is built using image fusion and optical flow. Second, a graph-based motion descriptor is obtained through S-KFM inference and sequence signal coding. Finally, motion matching results based on the graph-model descriptor show that our method is effective.


1 Introduction

In recent years, computer animation has become very popular because of its growing importance in many applications [20, 24, 25]. Within computer animation, we are particularly interested in human motion. Many methods have been developed to produce human motion data, and a well-known one is motion capture (MoCap). As motion capture devices have become more widely available, large motion databases have started to appear [5–7, 15, 18]. However, as the number of motions grows, it becomes difficult to select an appropriate motion that satisfies given requirements. Hence, motion retrieval has become one of the major research topics in motion capture animation.

Motion retrieval research is still relatively new compared with retrieval research on other multimedia data, and only a few motion retrieval methods exist in the literature. Many motion retrieval systems use Dynamic Time Warping (DTW) as the similarity measure [9]. However, DTW is usually slow because motion capture data consist of many parameters and attributes. To increase the efficiency of DTW-based retrieval, dimension reduction methods are often employed [2]. To let DTW support indexing and further increase retrieval efficiency, [8] proposes an algorithm based on Uniform Scaling to match the query. However, handling motions that contain both local and global differences requires applying DTW and Uniform Scaling separately, which increases the computational cost of the system significantly.
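To make the cost concern concrete, here is a minimal sketch of classic DTW between two frame sequences. It assumes each frame is a scalar or a feature vector and uses the Euclidean distance as the per-frame cost; the function name `dtw_distance` is hypothetical. The doubly nested loop over both sequences is why DTW costs O(n × m) per pair of motions.

```python
import numpy as np

def dtw_distance(a, b):
    """Classic dynamic time warping distance between two sequences of frames."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if a.ndim == 1:          # treat scalar frames as 1-D feature vectors
        a = a[:, None]
    if b.ndim == 1:
        b = b[:, None]
    n, m = len(a), len(b)
    # D[i, j] = cost of the best warping path aligning a[:i] with b[:j]
    D = np.full((n + 1, m + 1), np.inf)
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = np.linalg.norm(a[i - 1] - b[j - 1])   # Euclidean frame distance
            D[i, j] = cost + min(D[i - 1, j], D[i, j - 1], D[i - 1, j - 1])
    return D[n, m]
```

For example, `dtw_distance([1, 2, 3], [1, 1, 2, 2, 3, 3])` is zero because the second sequence is a time-stretched copy of the first.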

Besides DTW-based methods, other works focus on finding logically similar motions. For example, in [11], templates are created to describe motions, and retrieval is based on template matching. In [10], geometric features are used to build an index tree automatically through segmentation and clustering, and motion matching is based on peak points. In [14], a motion index tree is constructed from a hierarchical motion description. The tree serves as a classifier that determines the sub-library containing the motions most likely to be similar to the query. A nearest-neighbor-rule-based dynamic clustering algorithm is used to partition the library and construct the tree, and the similarity between the query and each motion in the sub-library is calculated by elastic matching.

Several recent works on motion sequence analysis and estimation [1, 4, 19, 22, 23] provide a basis for more effective motion retrieval approaches. For example, in [4], a motion-compensated deinterlacing scheme based on hierarchical motion analysis is presented, and a Gaussian noise model is introduced to choose the best motion vector for each block during motion estimation. In [23], a general framework for discovering video shot categories without supervision is studied, and a new feature is proposed to capture local information in videos. In [1], a motion-trajectory-based compact indexing and efficient retrieval mechanism for video sequences is proposed. It handles trajectory representation when only partial trajectory information is available due to occlusion, using a hypothesis-testing method applied to curvature data computed from the trajectories. In [19], a robust logical relevance metric based on the relative distances among joints is discussed. In [22], an adaptive tracking algorithm is presented that learns hybrid object templates online in video. The templates consist of multiple types of features, each describing one specific appearance structure, such as flatness, texture or edge/corner.

In this paper, we are interested in finding motions that are similar to a given query as a whole. Based on multi-view information and image fusion, we convert motion matching into a transportation problem to handle rotation, local scaling and global scaling. Using graph-model inference and sequence coding, we compute the distance between two motions. We compare mainly with DTW and the Uniform Scaling method. Although we have not implemented an indexing scheme, our method can easily be extended to support indexing because our distance function is a metric. Our experimental results show that the proposed method is promising.

The rest of this paper is organized as follows. An overview of our method is presented in Section 2. Section 3 describes our method in detail. Section 4 evaluates its performance through experiments. Section 5 concludes the paper.

2 Overview of our method

Our method is outlined in Fig. 1. The motion retrieval framework consists of two parts: motion descriptor building and motion retrieval.

In the descriptor-building stage, a motion database is first constructed. In this paper, we use the CMU motion database [5] and select character motions that can be distinguished from each other. Next, features are extracted from each motion in three steps (Fig. 1): (1) Processing multi-view motion information. Each animation is placed in a light field [21] to obtain multi-view images, and a PCA-based image fusion algorithm produces the fusion-image sequence. (2) Building the motion graph model. For each frame, the multi-view images are fused into a fusion image, optical flow signals [12] are computed from the differences between adjacent fusion images, and a graph model is constructed from the optical flow signals to represent the motion. (3) Inference and coding. A DBN inference algorithm [13, 16] is applied to the graph model to obtain the hidden-variable sequences, and all variables in the graph model are coded [3] into the motion descriptor.

In the retrieval stage, features of the query motion are extracted in the same way, the χ² test is used to compute the distances between the query and the motions in the database, and the retrieval results are sorted and output.

3 Graph-based motion descriptor

3.1 Motion descriptor building

-

(a)

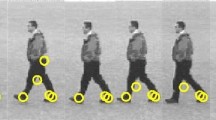

Processing of multi-view motion information. In order to decrease affection of rotating and scaling and represent motion fully, the multi-view images are utilized to describe animation [21]. Firstly, the animation is put into lightfield, as shown in Fig. 2, the m cameras are set on vertexes of polyhedron around the object. In i-th frame of the motion, the images of different viewpoints are acquired around the object (denoted as: I i 1-view, I i 2-view,…, I i m-view). In order to decrease size of motion descriptor and retain useful information, image fusion algorithm based on the Principle Component Analysis (PCA) [17] is used to transform the m images into a fusion image I i . The multi-view information is got from 1st frame to T-th frame, and fusion-image sequence is obtained: I 1, I 2,…, I T .

The multi-view images are fused by combining pixel-based fusion with PCA. The fusion method proceeds as follows.

(1) Let the image \( I^{i\text{-view}} \) be an N × N matrix \( I^{i\text{-view}}=[f_{ij}]_{N\times N} \), where \( f_{ij} \) is the gray value of each pixel. The matrix \( I^{i\text{-view}} \) (1 ≤ i ≤ m) can also be written as a vector \( I^{i\text{-view}}=[f_{11}, f_{12}, \ldots, f_{NN}]^T=[f_1, f_2, \ldots, f_Q]^T \) (Q = N²). Its mean and covariance are \( \mu_f=E[f] \) and \( K_f=E[(f-\mu_f)(f-\mu_f)^T] \).

(2) A matrix X is constructed from the multi-view images \( I^{1\text{-view}}, \ldots, I^{m\text{-view}} \). With m images of n = N × N pixels each:

$$ X=\begin{pmatrix} x_{11} & \cdots & x_{1j} & \cdots & x_{1n} \\ \vdots & & \vdots & & \vdots \\ x_{i1} & \cdots & x_{ij} & \cdots & x_{in} \\ \vdots & & \vdots & & \vdots \\ x_{m1} & \cdots & x_{mj} & \cdots & x_{mn} \end{pmatrix} \tag{1} $$

where \( x_{ij} \) is the gray value of the j-th pixel of the i-th image \( I^{i\text{-view}} \). The m × m covariance matrix of X is:

$$ C=\begin{pmatrix} \sigma_{11} & \cdots & \sigma_{1j} & \cdots & \sigma_{1m} \\ \vdots & & \vdots & & \vdots \\ \sigma_{i1} & \cdots & \sigma_{ij} & \cdots & \sigma_{im} \\ \vdots & & \vdots & & \vdots \\ \sigma_{m1} & \cdots & \sigma_{mj} & \cdots & \sigma_{mm} \end{pmatrix} \tag{2} $$

where \( \sigma_{ij}=\frac{1}{n}\sum_{l=1}^{n}(x_{il}-\bar{x}_i)(x_{jl}-\bar{x}_j) \) and \( \bar{x}_i \) is the average gray value of the i-th image.

(3) Solving \( |\lambda\Psi-C|=0 \) (Ψ is the identity matrix) gives the eigenvalues \( \lambda_1, \lambda_2, \ldots, \lambda_m \), from which the fusion coefficients \( \omega_i=\lambda_i/\sum_{j=1}^{m}\lambda_j \) are obtained. Next, the feature matrix A is calculated:

$$ \Lambda=\begin{pmatrix}\lambda_1 & & \\ & \ddots & \\ & & \lambda_m\end{pmatrix},\quad K_f\Lambda=A\Lambda \;\Rightarrow\; A=\begin{pmatrix}a_1\\ a_2\\ \vdots\\ a_m\end{pmatrix} \tag{3} $$

(4) Finally, the fused image is obtained as \( I=\sum_{i=1}^{m}\omega_i a_i \).
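The steps above can be sketched in NumPy. Eq. (3) does not fully pin down how the rows \( a_i \) of A are formed, so this sketch assumes that \( a_i \) is the mean-centred view data projected onto the i-th eigenvector of C (a principal-component image), ordered by decreasing eigenvalue. The function name `pca_fuse` and this reading of A are assumptions, not the authors' code.

```python
import numpy as np

def pca_fuse(views):
    """Fuse m same-sized grayscale views into one image (one reading of Eqs. (1)-(4))."""
    views = np.asarray(views, dtype=float)          # shape (m, N, N)
    m = views.shape[0]
    X = views.reshape(m, -1)                        # Eq. (1): row i holds the n = N*N pixels of view i
    Xc = X - X.mean(axis=1, keepdims=True)          # subtract each view's average gray value
    C = Xc @ Xc.T / X.shape[1]                      # Eq. (2): m x m covariance with 1/n scaling
    lam, V = np.linalg.eigh(C)                      # eigenvalues solve |lambda*Psi - C| = 0 (C is symmetric)
    order = np.argsort(lam)[::-1]                   # largest eigenvalue first
    lam, V = lam[order], V[:, order]
    V = V * np.where(V.sum(axis=0) < 0, -1.0, 1.0)  # fix the arbitrary sign of each eigenvector
    if lam.sum() <= 0:
        raise ValueError("the views have no variance to fuse")
    omega = lam / lam.sum()                         # fusion coefficients omega_i
    A = V.T @ Xc                                    # ASSUMPTION: row a_i = centred views projected on eigenvector i
    fused = omega @ A                               # Eq. (4): I = sum_i omega_i * a_i
    return fused.reshape(views.shape[1:]), omega
```

Under this reading, fusing m identical views returns \( \sqrt{m} \) times the mean-centred image, since all variance lies on the first eigenvector.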

We illustrate the fusion process with the example in Fig. 3. First, before the fusion computation, all multi-view images are normalized: based on the center of the moving object, we find a rectangle (a × b) that just encloses the object and scale the enclosed pixels to a 100 × 100 image. Second, prior knowledge tells us that images from different viewpoints differ in how well they discriminate motions, so different viewpoint images receive different weights during fusion. For example, as shown in Fig. 3, the viewpoint images are selected and sorted empirically by discrimination efficiency; the weight of the first image is set to 1.0, that of the second to 0.8, and so on. Finally, all multi-view images are combined using pixel-based image fusion and PCA according to Eqs. (1)–(3), as shown in Fig. 3.
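A minimal sketch of the normalization step follows. It assumes the object is brighter than a zero background, so that its enclosing a × b rectangle can be found by thresholding, and it uses nearest-neighbour resampling to reach 100 × 100. How the viewpoint weights enter the fusion is not specified, so applying them as a simple per-view scaling is our assumption. Both function names are hypothetical.

```python
import numpy as np

def normalize_view(img, size=100, thresh=0.0):
    """Crop the tightest a x b box around pixels brighter than `thresh`, then resample to size x size."""
    img = np.asarray(img, dtype=float)
    mask = img > thresh                               # ASSUMPTION: object is brighter than the background
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return np.zeros((size, size))                 # no object found
    crop = img[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]
    # Nearest-neighbour resampling of the a x b crop onto a size x size grid.
    ri = np.arange(size) * crop.shape[0] // size
    ci = np.arange(size) * crop.shape[1] // size
    return crop[np.ix_(ri, ci)]

def weight_views(views, weights):
    """ASSUMPTION: scale each normalized view by its viewpoint weight (e.g. 1.0, 0.8, ...)."""
    views = np.asarray(views, dtype=float)
    w = np.asarray(weights, dtype=float)
    return views * w[:, None, None]
```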

(b) Building motion graph model. Sequence data can be modeled with a Dynamic Bayesian Network (DBN) [16]. In this paper, we select the Switching Kalman Filter Model (S-KFM) [16], a type of DBN, to represent motion.

As shown in Fig. 4, we use the DBN to build the motion description. First, the optical flow signals serve as the observation signals of the DBN. The reason is that in video tracking, optical flow is often used to detect moving objects, and the moving trend can also be described by the difference between adjacent images. Based on this idea, we take the optical flow sequence of the fusion images \( I_1, I_2, \ldots, I_T \) as the observation values of the S-KFM. The optical flow sequence \( L_1, \ldots, L_5 \) can be computed according to [12], as described below.

First, following [12], the motion trend is detected from the optimal shift vectors of corresponding points between adjacent images. In the i-th frame image, let the matching error at point (x, y) be:

$$ e_{x,y}=\sum_{(x',y')\in W}\left(I(t+1)_{x'+\delta x,\,y'+\delta y}-I(t)_{x',y'}\right)^2 \tag{4} $$

where (x′, y′) is any pixel in a given window W, \( I(t+1)_{x'+\delta x,\,y'+\delta y} \) is the pixel at (x′ + δx, y′ + δy) of the fusion image at time t + 1, (δx, δy) is the shift vector of point (x, y), and \( I(t)_{x',y'} \) is the pixel at (x′, y′) of the fusion image at time t. The optimal shift \( (\delta x,\delta y)^* \) minimizes \( e_{x,y} \):

$$ (\delta x,\delta y)^*=\mathop{\arg\min}_{(\delta x,\delta y)}\sum_{(x',y')\in W}\left(I(t+1)_{x'+\delta x,\,y'+\delta y}-I(t)_{x',y'}\right)^2 \tag{5} $$

All optimal shift vectors between adjacent fusion images \( I_i \) and \( I_{i+1} \) are combined to express the motion trend, denoted as the optical flow \( L_i \). \( L_i \) is the input signal of the S-KFM, i.e., the observation signal \( e_i \) of the DBN, so the observation sequence is \( E=\{e_1, e_2, \ldots, e_T\} \).
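A brute-force sketch of Eqs. (4) and (5): for each grid point, every integer shift within ±`max_shift` is tried, and the one with the smallest sum of squared differences over the window W is kept. The grid spacing, window half-width and search range are assumed parameters, the function name is hypothetical, and images are indexed as [row, column] = [y, x].

```python
import numpy as np

def block_matching_flow(prev, nxt, step=4, half_win=2, max_shift=3):
    """Return one (dx, dy) shift per grid point, minimizing Eq. (4) as in Eq. (5)."""
    prev = np.asarray(prev, dtype=float)
    nxt = np.asarray(nxt, dtype=float)
    h, w = prev.shape
    margin = half_win + max_shift          # keeps every shifted window inside the image
    flows = []
    for y in range(margin, h - margin, step):
        for x in range(margin, w - margin, step):
            # Window W around (x, y) in the fusion image at time t
            ref = prev[y - half_win:y + half_win + 1, x - half_win:x + half_win + 1]
            best, best_err = (0, 0), np.inf
            for dy in range(-max_shift, max_shift + 1):
                for dx in range(-max_shift, max_shift + 1):
                    # Same window shifted by (dx, dy) in the fusion image at time t + 1
                    cand = nxt[y + dy - half_win:y + dy + half_win + 1,
                               x + dx - half_win:x + dx + half_win + 1]
                    err = np.sum((cand - ref) ** 2)   # e_{x,y} for this shift
                    if err < best_err:
                        best, best_err = (dx, dy), err
            flows.append(best)
    return np.array(flows)
```

Shifting a random image down by one row and right by two columns with `np.roll` makes every grid point report the shift (2, 1).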

Second, given the input signal E, and similarly to noisy-signal processing, the S-KFM is used to estimate the hidden sequence \( X=\{x_1, x_2, \ldots, x_T\} \) and the state switching sequence \( S=\{s_1, s_2, \ldots, s_T\} \). Based on this analysis, the graph model of the motion is constructed as shown in Fig. 4.

(c) Inference and coding. DBN inference estimates the posterior probability of the hidden states of the system. Given the observation (evidence) sequence E, the hidden sequence X and the switching sequence S are obtained with an inference algorithm, as described below.

We assume that all continuous variables and conditional probability densities in the DBN are Gaussian. Let:

$$ P(x_0)=\frac{1}{\sqrt{2\pi}\,\sigma_0}\exp\!\left(-\frac{(x_0-\mu_0)^2}{2\sigma_0^2}\right)=N(\mu_0,\sigma_0^2) \tag{6} $$

and let \( P(s_0)=N(\mu_{s0},\sigma_{s0}^2) \), the state transition probabilities \( P(x_{t+1}\mid x_t)=N(\mu_{x1},\sigma_{x1}^2) \) and \( P(s_{t+1}\mid s_t)=N(\mu_{s1},\sigma_{s1}^2) \), the conditional transition probability \( P(x_{t+1}\mid x_t,s_t)=N(\mu_{xs},\sigma_{xs}^2) \), and the observation probability \( P(e_t\mid x_t)=N(\mu_{e1},\sigma_{e1}^2) \).

In general, DBN parameter learning can be formulated as a maximum-likelihood (ML) problem. In this paper, the initial system parameters are set from prior knowledge and can be adjusted according to user satisfaction with the retrieval results. Once the retrieval system has run for some time and a large amount of retrieval data has been collected, the EM algorithm [16] is used to find optimal values of the DBN parameters \( \{\mu_{x0}, \sigma_{x0}^2, \mu_{s0}, \sigma_{s0}^2, \mu_{x1}, \sigma_{x1}^2, \mu_{s1}, \sigma_{s1}^2, \mu_{xs}, \sigma_{xs}^2, \mu_{e1}, \sigma_{e1}^2\} \).

As shown in Fig. 4, \( P(x_1) \) can be calculated from \( x_0 \) and \( s_0 \) using Bayes' rule:

$$ P(x_1)=\iint_{x_0,s_0} P(x_1\mid x_0,s_0)\,P(x_0,s_0)\,dx_0\,ds_0=\sum_{s_0=1}^{k} P(s_0)\int_{x_0} P(x_0)\,P(x_1\mid x_0,s_0)\,dx_0 \tag{7} $$

The second equality in Eq. (7) holds because \( P(x_0,s_0)=P(x_0)P(s_0) \) (conditional independence) and s is a discrete signal. Next, the prediction is updated by computing \( P(x_1,s_1\mid e_1) \). According to Bayes' rule and the DBN filtering equation [16], the update is:

$$ P(X_{t+1}\mid e_{1:t+1})=\alpha\,P(e_{t+1}\mid X_{t+1})\,P(X_{t+1}\mid e_{1:t})=\alpha\,P(e_{t+1}\mid X_{t+1})\sum_{X_t} P(X_{t+1}\mid X_t)\,P(X_t\mid e_{1:t}) \tag{8} $$

where α is a normalization constant [16]. Replacing \( X_t \) with \( (x_t, s_t) \) in this equation gives:

$$ \begin{aligned} P(x_{t+1},s_{t+1}\mid e_{1:t+1}) &=\alpha\,P(e_{t+1}\mid x_{t+1},s_{t+1})\sum_{s_t=1}^{k}\int_{x_t}P(x_t,s_t\mid e_{1:t})\,P(x_{t+1},s_{t+1}\mid x_t,s_t)\,dx_t\\ &=\alpha\,P(e_{t+1}\mid x_{t+1})\sum_{s_t=1}^{k}P(s_t\mid e_{1:t})\int_{x_t}P(x_t\mid e_{1:t})\,P(x_{t+1}\mid x_t,s_t)\,P(s_{t+1}\mid x_t,s_t)\,dx_t\\ &=\alpha\,P(e_{t+1}\mid x_{t+1})\sum_{s_t=1}^{k}P(s_t\mid e_{1:t})\,P(s_{t+1}\mid s_t)\int_{x_t}P(x_t\mid e_{1:t})\,P(x_{t+1}\mid x_t,s_t)\,dx_t \end{aligned} \tag{9} $$

According to this formula, \( x_t \) and \( s_t \) can be updated from the observation \( e_t \). Next, since

$$ P(x_{t+1}\mid e_{1:t+1})=\alpha\,P(e_{t+1}\mid x_{t+1})\int_{x_t}P(x_{t+1}\mid x_t)\,P(x_t\mid e_{1:t})\,dx_t \tag{10} $$

and \( P(x_{t+1},s_{t+1}\mid e_{1:t+1})=P(x_{t+1}\mid e_{1:t+1})\,P(s_{t+1}\mid e_{1:t+1}) \), we have:

$$ \begin{aligned} P(s_{t+1}\mid e_{1:t+1}) &=\frac{P(x_{t+1},s_{t+1}\mid e_{1:t+1})}{P(x_{t+1}\mid e_{1:t+1})}=\frac{P(x_{t+1},s_{t+1}\mid e_{1:t+1})}{\alpha\,P(e_{t+1}\mid x_{t+1})\int_{x_t}P(x_{t+1}\mid x_t)\,P(x_t\mid e_{1:t})\,dx_t}\\ &=\frac{P(e_{t+1}\mid x_{t+1})\sum_{s_t=1}^{k}P(s_t\mid e_{1:t})\,P(s_{t+1}\mid s_t)\int_{x_t}P(x_t\mid e_{1:t})\,P(x_{t+1}\mid x_t,s_t)\,dx_t}{P(e_{t+1}\mid x_{t+1})\int_{x_t}P(x_{t+1}\mid x_t)\,P(x_t\mid e_{1:t})\,dx_t} \end{aligned} \tag{11} $$

Now, given the observations \( E=\{e_1, e_2, \ldots, e_T\} \), the hidden sequence \( X=\{x_1, x_2, \ldots, x_T\} \) and the switching sequence \( S=\{s_1, s_2, \ldots, s_T\} \) can be estimated with the recursion of Eqs. (7)–(11). Finally, all sequences are transformed into quantized and normalized signals (denoted \( E_{\mathrm{norm}}, X_{\mathrm{norm}}, S_{\mathrm{norm}} \)), as shown in Fig. 5, and the motion is described by the matrix G:

$$ G=[g(i,j)]_{3\times T}=[E_{\mathrm{norm}};X_{\mathrm{norm}};S_{\mathrm{norm}}]=\begin{bmatrix}\bar{e}_1 & \bar{e}_2 & \cdots & \bar{e}_T\\ \bar{x}_1 & \bar{x}_2 & \cdots & \bar{x}_T\\ \bar{s}_1 & \bar{s}_2 & \cdots & \bar{s}_T\end{bmatrix} \tag{12} $$

with \( \bar{e}_i=e_i/\sum_{j=1}^{T}e_j \), \( \bar{x}_i=x_i/\sum_{j=1}^{T}x_j \) and \( \bar{s}_i=s_i/\sum_{j=1}^{T}s_j \), where \( \bar{e}_i, \bar{x}_i, \bar{s}_i \) are the quantized and normalized values of \( e_i, x_i \) and \( s_i \), respectively, and T in Eq. (12) is the length of the motion.
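As a discretized illustration of the recursion behind Eqs. (8)–(9), the sketch below propagates the joint belief over \( (x_t, s_t) \) exactly, without the factorization used in Eqs. (10)–(11), and then stacks sum-normalized sequences into G as in Eq. (12). Discrete states, the array layouts and the function names are assumptions. The quantization step is omitted, and how E, X and S are reduced to one number per frame (for example, posterior means) is left to the caller.

```python
import numpy as np

def forward_filter(obs, p_x0, p_s0, p_s_trans, p_x_trans, p_e_given_x):
    """Exact forward filtering of P(x_t, s_t | e_{1:t}) for a discretized switching model.

    p_s_trans[s, s2]     = P(s_{t+1} = s2 | s_t = s)
    p_x_trans[x, s, x2]  = P(x_{t+1} = x2 | x_t = x, s_t = s)
    p_e_given_x[x, e]    = P(e_t = e | x_t = x)
    """
    belief = np.outer(p_x0, p_s0)                     # P(x_0, s_0) = P(x_0) P(s_0), as in Eq. (7)
    x_marg, s_marg = [], []
    for e in obs:
        # Prediction: sum over (x_t, s_t) of belief * P(x_{t+1}|x_t,s_t) * P(s_{t+1}|s_t)
        pred = np.einsum("xs,xsy,sz->yz", belief, p_x_trans, p_s_trans)
        # Correction with P(e_{t+1}|x_{t+1}); dividing by the total plays the role of alpha
        belief = pred * p_e_given_x[:, e][:, None]
        belief /= belief.sum()
        x_marg.append(belief.sum(axis=1))             # P(x_t | e_{1:t})
        s_marg.append(belief.sum(axis=0))             # P(s_t | e_{1:t})
    return np.array(x_marg), np.array(s_marg)

def build_descriptor(E, X, S):
    """Stack sum-normalized E, X, S sequences into the 3 x T matrix G of Eq. (12)."""
    rows = []
    for seq in (E, X, S):
        seq = np.asarray(seq, dtype=float)
        total = seq.sum()
        if total == 0:
            raise ValueError("cannot normalize a sequence that sums to zero")
        rows.append(seq / total)                      # e.g. bar{e}_i = e_i / sum_j e_j
    return np.vstack(rows)
```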

We illustrate the DBN inference with a toy example. For ease of calculation and presentation, we use discrete rather than continuous data. As shown in Fig. 6, the DBN parameters are: P(s_0) = P(x_0) = [0.5 0.5], P(s_{t+1} | s_t) = [0.3 0.7; 0.7 0.3], the conditional transition probability P(x_{t+1} | x_t, s_t) = [0.1 0.2 0.3 0.4; 0.9 0.8 0.7 0.6], P(x_{t+1} | x_t) = [0.2 0.8; 0.8 0.2], and the observation probability P(e_t | x_t) = [0.4 0.6; 0.6 0.4].

Suppose all nodes have two states (True = 1, False = 2) and the input signal is e_1 = 1. We calculate P(s_1 = 1 | e_1 = 1) and P(x_1 = 1 | e_1 = 1).

First, approximating the integral over \( x_t \) in Eq. (9) by a sum over the discrete states of \( x_t \), we have:

$$ \begin{aligned} P(x_{t+1},s_{t+1}\mid e_{1:t+1}) &=\alpha\,P(e_{t+1}\mid x_{t+1})\sum_{s_t=1}^{k}P(s_t\mid e_{1:t})\,P(s_{t+1}\mid s_t)\int_{x_t}P(x_t\mid e_{1:t})\,P(x_{t+1}\mid x_t,s_t)\,dx_t\\ &\approx\alpha\,P(e_{t+1}\mid x_{t+1})\sum_{s_t=1}^{k}P(s_t\mid e_{1:t})\,P(s_{t+1}\mid s_t)\sum_{x_t=1}^{k}P(x_t\mid e_{1:t})\,P(x_{t+1}\mid x_t,s_t) \end{aligned} \tag{13} $$

For t = 0:

$$ \begin{aligned} &P(x_1=1,s_1=1\mid e_1=1)\\ &=P(e_1=1\mid x_1=1)\big(P(s_0=1)\,P(s_1=1\mid s_0=1)+P(s_0=2)\,P(s_1=1\mid s_0=2)\big)\\ &\quad\times P(x_1=1\mid e_1=1)\big([P(x_1=1\mid x_0=1,s_0=1)+P(x_1=1\mid x_0=1,s_0=2)]\\ &\qquad+[P(x_1=1\mid x_0=2,s_0=1)+P(x_1=1\mid x_0=2,s_0=2)]\big)\\ &=0.4\times(0.5\times0.3+0.5\times0.7)\times P(x_1=1\mid e_1=1)\,([0.1+0.2]+[0.3+0.4])\\ &=0.4\times0.5\times P(x_1=1\mid e_1=1) \end{aligned} \tag{14} $$

Similarly, \( P(x_1=1,s_1=2\mid e_1=1)=0.2\times P(x_1=1\mid e_1=1) \). Then \( P(x_1=1\mid e_1=1) \) follows from Eq. (10):

$$ \begin{aligned} P(x_{t+1}\mid e_{1:t+1})&=\alpha\,P(e_{t+1}\mid x_{t+1})\int_{x_t}P(x_{t+1}\mid x_t)\,P(x_t\mid e_{1:t})\,dx_t\\ \Rightarrow P(x_1=1\mid e_1=1)&\approx P(e_1=1\mid x_1=1)\sum_{x_0}P(x_1=1\mid x_0)\,P(x_0)\\ &=P(e_1=1\mid x_1=1)\big(P(x_1=1\mid x_0=1)\,P(x_0=1)+P(x_1=1\mid x_0=2)\,P(x_0=2)\big)\\ &=0.4\times(0.2\times0.5+0.8\times0.5)=0.2 \end{aligned} \tag{15} $$

Similarly:

$$ \begin{aligned} P(x_1=2\mid e_1=1)&\approx P(e_1=1\mid x_1=2)\big(P(x_1=2\mid x_0=1)\,P(x_0=1)+P(x_1=2\mid x_0=2)\,P(x_0=2)\big)\\ &=0.6\times(0.8\times0.5+0.2\times0.5)=0.3 \end{aligned} \tag{16} $$

Normalizing gives \( P(x_1\mid e_1=1)=\alpha\,[0.2\;\;0.3]=[0.4\;\;0.6] \). Substituting \( P(x_1=1\mid e_1=1)=0.4 \) into Eq. (14) gives:

$$ P(x_1=1,s_1=1\mid e_1=1)=0.4\times0.5\times0.4=0.08 \tag{17} $$

Then:

$$ P(s_1=1\mid e_1=1)=\frac{P(x_1=1,s_1=1\mid e_1=1)}{P(x_1=1\mid e_1=1)}=\frac{0.08}{0.4}=0.2 \tag{18} $$

$$ P(s_1=2\mid e_1=1)=\frac{P(x_1=1,s_1=2\mid e_1=1)}{P(x_1=1\mid e_1=1)}=\frac{0.08}{0.4}=0.2 \tag{19} $$

Finally, normalizing gives \( P(s_1\mid e_1=1)=\alpha\,[0.2\;\;0.2]=[0.5\;\;0.5] \).
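The hand calculation in Eqs. (14)–(19) can be checked numerically. The sketch below reproduces the simplified steps used in this toy example for t = 0 (including the sum over all four entries of P(x_1 = 1 | x_0, s_0)); it is not exact Bayesian filtering. State index 0 stands for True and 1 for False, and the variable and function names are hypothetical.

```python
import numpy as np

# Toy parameters from Fig. 6 (index 0 = True/1, index 1 = False/2)
P_s0 = np.array([0.5, 0.5])
P_x0 = np.array([0.5, 0.5])
P_s_trans = np.array([[0.3, 0.7], [0.7, 0.3]])    # P(s_1 | s_0), row = s_0
P_x_trans = np.array([[0.2, 0.8], [0.8, 0.2]])    # P(x_1 | x_0), row = x_0
P_x1_true = np.array([0.1, 0.2, 0.3, 0.4])        # P(x_1 = 1 | x_0, s_0) for the four parent combinations
P_e_given_x = np.array([[0.4, 0.6], [0.6, 0.4]])  # P(e | x), row = x

def toy_inference(e1=0):
    lik = P_e_given_x[:, e1]                       # P(e_1 = 1 | x_1) for x_1 = 1, 2: [0.4, 0.6]
    # Eqs. (15)-(16): unnormalized P(x_1 | e_1 = 1), then normalize (alpha)
    x_unnorm = lik * (P_x0 @ P_x_trans)            # [0.2, 0.3]
    x_post = x_unnorm / x_unnorm.sum()             # [0.4, 0.6]
    # Eqs. (14) and (17): P(x_1 = 1, s_1 | e_1 = 1) for s_1 = 1, 2
    s_prior = P_s0 @ P_s_trans                     # [0.5, 0.5]
    joint = lik[0] * s_prior * P_x1_true.sum() * x_post[0]   # [0.08, 0.08]
    # Eqs. (18)-(19): divide by P(x_1 = 1 | e_1 = 1), then normalize
    s_unnorm = joint / x_post[0]                   # [0.2, 0.2]
    s_post = s_unnorm / s_unnorm.sum()             # [0.5, 0.5]
    return x_post, joint, s_post
```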

3.2 Motion retrieval

In the matching (retrieval) stage, features of the query motion are extracted as described above, and the χ² test is used to compute the distances between the query and the database motions; the retrieval results are then sorted and output (Fig. 5). For two motions with descriptors \( G_1 \) and \( G_2 \), we have:

where \( g_1(i,j) \) and \( g_2(i,j) \) are the elements of \( G_1 \) and \( G_2 \), respectively; \( \bar{e}_j^{(1)}, \bar{s}_j^{(1)}, \bar{x}_j^{(1)} \) denote the j-th column elements of \( G_1 \), and \( \bar{e}_j^{(2)}, \bar{s}_j^{(2)}, \bar{x}_j^{(2)} \) those of \( G_2 \). T in Eq. (20) denotes the length of the motion.

Finally, the matching results are sorted by the distance between the query motion and each database motion, and the top-p motions are returned to the user, where p is the number of returned results (usually 20).
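A minimal sketch of this matching step follows. It assumes the common symmetric χ² form \( d(G_1,G_2)=\tfrac{1}{2}\sum_{i,j}\frac{(g_1(i,j)-g_2(i,j))^2}{g_1(i,j)+g_2(i,j)} \) over non-negative entries, which may differ in normalization from Eq. (20). It also assumes descriptors of equal length (the clips are cut to uniform length) and ranks the database to return the top p = 20. Function names are hypothetical.

```python
import numpy as np

def chi2_distance(G1, G2, eps=1e-12):
    """Symmetric chi-squared distance between two 3 x T descriptors (assumed form)."""
    G1 = np.asarray(G1, dtype=float)
    G2 = np.asarray(G2, dtype=float)
    if G1.shape != G2.shape:
        raise ValueError("descriptors must have the same shape (uniform-length clips)")
    num = (G1 - G2) ** 2
    den = G1 + G2
    mask = den > eps                  # skip entries that are zero in both descriptors
    return 0.5 * float(np.sum(num[mask] / den[mask]))

def retrieve_top_p(query, database, p=20):
    """Return the indices and distances of the p database descriptors closest to the query."""
    dists = np.array([chi2_distance(query, G) for G in database])
    order = np.argsort(dists, kind="stable")[:p]   # smallest distance first
    return order, dists[order]
```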

4 Experiments

To evaluate the performance of the proposed motion descriptor on different motion clips, we describe several of the experiments we conducted. We constructed a motion database of 1000 different motion clips. To simplify testing of motion matching and retrieval, all motions were cut to a uniform length. The 1000 motions are grouped into 20 motion categories, such as normal-speed walking, fast walking, slow walking, leg-wild walking and jumping. All experiments were performed on a PC with a Pentium 5 GHz CPU and 1 GB of RAM. The motion files were downloaded from CMU [5].

The motion clips typically contain more than one action each. To obtain more accurate performance results, we manually split each clip into basic motion clips containing a single action. We use these basic clips as input and take their first 50 frames as the query for scale computation. The objective of the experiments is to find the most similar motion clips in the database. For comparison, we implemented Dynamic Time Warping [8] and the Uniform Scaling method [10].

4.1 Performance on motion discrimination

In the first experiment, we compare the retrieval performance of the three methods (Uniform Scaling, DTW and our method) using similarity matrices. To generate each matrix, we first compute the similarity score between every pair of motions in our database and then normalize the results with the maximum and minimum of the corresponding matrix to show the contrast. The darker the cell, the more similar the two motions. Figure 7 shows the similarity matrices of the three methods, from which we make two observations. First, the diagonal entries have the darkest color, which means that our method identifies identical motions well and gives high similarity scores to similar motions. Second, our method gives a larger similarity contrast when comparing two motions from different groups, which suggests that it can distinguish motion groups more easily.
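A minimal sketch of how such a matrix can be built from any symmetric pairwise distance function. Converting distances to similarities by min-max normalization and flipping, so that 1 marks the most similar (darkest) cell, is our assumption about the exact mapping; the function name is hypothetical.

```python
import numpy as np

def similarity_matrix(descriptors, dist):
    """Pairwise distances -> min-max normalized similarities in [0, 1] (1 = most similar)."""
    n = len(descriptors)
    D = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            D[i, j] = D[j, i] = dist(descriptors[i], descriptors[j])   # assumes dist is symmetric
    d_min, d_max = D.min(), D.max()
    if d_max == d_min:
        return np.ones_like(D)          # all motions equally similar
    # Normalize with the matrix minimum and maximum, then flip so small distance = high similarity.
    return 1.0 - (D - d_min) / (d_max - d_min)
```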

4.2 Performance on motion retrieval

In the second experiment, we calculate the average precision and recall values (Fig. 8). These curves are generated by taking each motion in the database as a query, searching for similar motions in the same database, and averaging all precision and recall values.
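The averaging procedure can be sketched as follows, assuming that a retrieved motion is relevant when it belongs to the query's motion group, that the query itself is excluded from its own result list, and that precision and recall are measured at a set of cutoffs k. These choices and the function name are assumptions.

```python
import numpy as np

def average_precision_recall(dist_matrix, labels, ks):
    """Precision and recall at each cutoff k, averaged over every motion used once as the query."""
    D = np.asarray(dist_matrix, dtype=float)
    labels = np.asarray(labels)
    n = len(labels)
    prec = np.zeros(len(ks))
    rec = np.zeros(len(ks))
    for q in range(n):
        # Rank the other motions by distance; the query itself is excluded (assumption).
        order = [i for i in np.argsort(D[q], kind="stable") if i != q]
        relevant = labels[order] == labels[q]
        n_rel = int(relevant.sum())           # size of the query's group, minus the query
        for a, k in enumerate(ks):
            hits = int(relevant[:k].sum())
            prec[a] += hits / k
            rec[a] += (hits / n_rel) if n_rel > 0 else 0.0
    return prec / n, rec / n
```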

Fig. 8 shows part of our precision and recall results, and our method performs well. This confirms the similarity analysis above: our method can distinguish dissimilar motions. Fig. 8 plots the precision and recall curves for 8 motions in our database, and the retrieval results show the following. For simple motions, such as run and walk, all three methods perform best. For motions with an upward or downward direction, such as jump and climb, all three methods perform less well, although our method still outperforms the other two. The proposed algorithm is generally less effective for motions in the vertical direction, mainly because the proposed view-based motion coding discriminates poorly between upward and downward movement. This is a weakness of the proposed algorithm, which we will address in future work. For complex motions, such as dance and throw, our method performs considerably better than the other methods, which indicates that our retrieval framework is suited not only to simple motion retrieval but also to discriminating complex motions.

In Fig. 9, we compare the three methods (our method, DTW and Uniform Scaling) within the proposed retrieval framework using the averaged precision and recall values: all three methods perform well, and our method performs best.

4.3 Speed comparison

In the third experiment, we compare the three methods in terms of retrieval speed. Part of the results is shown in Table 1, which reveals that our method is faster than DTW and Uniform Scaling. This is because DTW and Uniform Scaling process all motion frames, whereas our method, through the graph model and coding algorithm, compares only a small descriptor matrix. This explains why our method takes far less computation time than the other methods.

The computational complexity also explains why our method outperforms existing methods. Uniform Scaling searches for the best scaled match between the query and the candidate, so its time complexity is O(p × (m − n)), where p, m and n are the lengths of a scaled time series, the candidate and the query, respectively. The time complexity of DTW is roughly O(m × n). The time complexity of our method is harder to analyze because it is based on the simplex algorithm; however, if the algorithm is modeled as a matrix matching problem, the complexity is O(n).
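For reference, a minimal sketch of the Uniform Scaling baseline under stated assumptions: the query of length n is stretched by nearest-index resampling to every length l between n and m, and compared with the first l frames of the candidate using a per-frame RMS distance. Trying m − n + 1 scalings at O(l) work each is what gives the O(p × (m − n)) behaviour described above. The distance normalization and the function name are assumptions.

```python
import numpy as np

def uniform_scaling_distance(query, candidate):
    """Smallest per-frame RMS distance between the query stretched to each length l
    (n <= l <= m) and the first l frames of the candidate."""
    q = np.asarray(query, dtype=float)
    c = np.asarray(candidate, dtype=float)
    n, m = len(q), len(c)
    if n > m:
        raise ValueError("query must not be longer than the candidate")
    best = np.inf
    for l in range(n, m + 1):                          # m - n + 1 candidate scalings
        idx = (np.arange(l) * n) // l                  # nearest-index stretch of the query to length l
        d = np.sqrt(np.mean((q[idx] - c[:l]) ** 2))    # O(l) work per scaling
        best = min(best, d)
    return best
```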

5 Conclusion

We have introduced a novel and efficient method for retrieving human motion data. Unlike other approaches, our method uses a graph model to describe motion; through inference and coding, a compact and robust motion descriptor is obtained. Our experiments show encouraging results.

References

[1] Bashir FI, Khokhar AA, Schonfeld D (2007) Real-time motion trajectory-based indexing and retrieval of video sequences. IEEE Trans Multimed 9:58–65

[2] Chakrabarti K, Keogh E, Mehrotra S, Pazzani M (2002) Locally adaptive dimensionality reduction for indexing large time series databases. ACM Trans Database Syst 27(2):188–228

[3] Chao M-W, Lin C-H, Assa J, Lee T-Y (2012) Human motion retrieval from hand-drawn sketch. IEEE Trans Vis Comput Graph 18(5):729–740

[4] Feng L, Yueting Z, Fei W, Yunhe P (2003) 3D motion retrieval with motion index tree. Comput Vis Image Underst 92(2):265–284

[5] Gao Y, Tang J, Hong R, Yan S, Dai Q, Zhang N, Chua T-S (2012) Camera constraint-free view-based 3-D object retrieval. IEEE Trans Image Process 21(4):2269–2281

[6] Gao Y, Wang M, Zha Z-J, Tian Q, Dai Q, Zhang N (2011) Less is more: efficient 3-D object retrieval with query view selection. IEEE Trans Multimed 13(5):1007–1018

[7] Graphics Lab, Carnegie Mellon University. Motion capture database. http://mocap.cs.cmu.edu/

[8] Keogh E, Palpanas T, Zordan V, Gunopulos D, Cardle M (2004) Indexing large human-motion databases. In: Proc VLDB, pp 780–791

[9] Kovar L, Gleicher M, Pighin F (2002) Motion graphs. In: Proc ACM SIGGRAPH, pp 473–482

[10] Lin Y (2006) Efficient human motion retrieval in large databases. In: Proc ACM GRAPHITE, pp 31–37

[11] Müller M, Röder T (2006) Motion templates for automatic classification and retrieval of motion capture data. In: Proc ACM SCA

[12] Nixon MS, Aguado AS (2008) Feature extraction and image processing, 2nd edn. Elsevier, pp 135–140

[13] Pavlović V, Rehg JM, Murphy KP, Cham T-J (1999) A dynamic Bayesian network approach to figure tracking using learned dynamic models. In: Proc Int Conf Comput Vis, pp 94–101

[14] Qian H, Debin Z, Siwei M, Wen G, Huifang S (2010) Deinterlacing using hierarchical motion analysis. IEEE Trans Circ Syst Video Technol 20(5):673–686

[15] Qinkun X, Haiyun W, Fei L, Yue G (2011) 3D object retrieval based on a graph model descriptor. Neurocomputing 74(17):2340–2348

[16] Russell S, Norvig P (2004) Artificial intelligence: a modern approach, 2nd edn. Pearson Education Asia Limited, pp 430–436

[17] Shah VP, Younan NH, King RL (2008) An efficient pan-sharpening method via a combined adaptive PCA approach and contourlets. IEEE Trans Geosci Remote Sens 46(5):1323–1335

[18] Tam GKL, Lau RWH (2007) Deformable model retrieval based on topological and geometric signatures. IEEE Trans Vis Comput Graph 13(3):470–482

[19] Tang JKT, Leung H (2012) Retrieval of logically relevant 3D human motions by adaptive feature selection with graded relevance feedback. Pattern Recognit Lett 33(4):420–430

[20] Tian J, Qi W, Liu X (2011) Retrieving deep web data through multi-attributes interfaces with structured queries. Int J Softw Eng Knowl Eng 21(4):523–542

[21] Xiao QK, Dai QH, Wang HY (2008) 3D object retrieval approach based on directed acyclic graph lightfield feature. Electron Lett 44(14):847–849

[22] Xiaobai L, Liang L, Shuicheng Y, Hai J (2011) Adaptive object tracking by learning hybrid template on-line. IEEE Trans Circ Syst Video Technol 21(11):1588–1599

[23] Xiaohua D, Liang L, Hongyang C (2013) Discovering video shot categories by unsupervised stochastic graph partition. IEEE Trans Multimed 15(1):167–180

[24] Yang Y, Nie F, Xu D, Luo J, Zhuang Y, Pan Y (2012) A multimedia retrieval framework based on semi-supervised ranking and relevance feedback. IEEE Trans Pattern Anal Mach Intell 34(4):723–742

[25] Zhang Z, Tao D (2012) Slow feature analysis for human action recognition. IEEE Trans Pattern Anal Mach Intell 34(3):436–450

Acknowledgment

This work is partly supported by the National Basic Research Project of China (No. 2010CB731800) and the China National Foundation (No. 60972095, 61271362).


Cite this article

Xiao, Q., Luo, Y. & Wang, H. Motion retrieval based on Switching Kalman Filters Model. Multimed Tools Appl 72, 951–966 (2014). https://doi.org/10.1007/s11042-013-1416-x
