Abstract

Although deep reinforcement learning has become a promising machine learning approach for sequential decision-making problems, it is still not mature enough for high-stake domains such as autonomous driving or medical applications. In such contexts, a learned policy needs, for instance, to be interpretable, so that it can be inspected before any deployment (e.g., for safety and verifiability reasons). This survey provides an overview of various approaches to achieve higher interpretability in reinforcement learning (RL). To that end, we distinguish interpretability (as an intrinsic property of a model) and explainability (as a post-hoc operation) and discuss them in the context of RL with an emphasis on the former notion. In particular, we argue that interpretable RL may embrace different facets: interpretable inputs, interpretable (transition/reward) models, and interpretable decision-making. Based on this scheme, we summarize and analyze recent work related to interpretable RL with an emphasis on papers published in the past 10 years. We also briefly discuss some related research areas and point to some promising research directions, notably related to the recent development of foundation models (e.g., large language models, RL from human feedback).

1 Introduction

Reinforcement learning (RL) (Sutton & Barto, 2018) is a general machine learning framework for designing systems with automatic decision-making capabilities. Research in RL has soared since its combination with deep learning, called deep RL (DRL), which has achieved several impressive recent successes (e.g., AlphaGo (Silver et al., 2017), video games (Vinyals et al., 2019), or robotics (OpenAI et al., 2019)). These achievements were made possible notably by the powerful approximation capability of deep learning and its adoption for sequential decision-making and adaptive control.

However, this combination has simultaneously brought all the drawbacks of deep learning to RL. Indeed, as noted in much recent work in DRL, policies learned via a DRL algorithm may suffer from various weaknesses, e.g.:

- They are generally hard to understand because of the black-box nature of deep neural network architectures (Zahavy et al., 2016).
- They are difficult to train and require a large amount of data, and DRL experiments are often difficult to replicate (Henderson et al., 2018).
- They may overfit the training environment and may not generalize well to new situations (Zhang et al., 2018b).
- Consequently, they may be unsafe and vulnerable to adversarial attacks (Huang et al., 2017).

These observations reveal why DRL is currently not ready for real-world high-stake applications such as autonomous driving or healthcare, and explain why interpretable and explainable RL has recently become a very active research direction. In this survey, we view interpretability as an intrinsic property of a model and explainability as a post-hoc operation (see Sect. 3).

Most real-world deployments of RL algorithms require learned policies to be intelligible, since intelligibility provides an answer (or a basis for an answer) to various concerns encompassing ethical, legal, operational, or usability viewpoints:

- Ethical concerns: When designing an autonomous system, it is essential to ensure that its behavior follows ethical and fairness principles discussed and agreed upon beforehand by the stakeholders according to the context (Crawford et al., 2016; Dwork et al., 2012; Friedler et al., 2021; Leslie, 2020; Lo Piano, 2020; Morley et al., 2020; Yu et al., 2018). The growing discussion about bias and fairness in machine learning (Mehrabi et al., 2019) suggests that mitigating measures must be taken in every aspect of an RL methodology as well. In this regard, intelligibility is essential to help assess how moral values are embedded into autonomous systems, and to evaluate and debate their equity and social impact in context.

- Legal concerns: As autonomous systems start to be deployed, legal issues arise regarding notably safety (Amodei et al., 2016), accountability (Commission, 2019; Doshi-Velez et al., 2019), or privacy (Horvitz & Mulligan, 2015). For instance, fully autonomous cars should be permitted on the streets only once proven safe with high confidence. The questions of risk management (Bonnefon et al., 2019) and of responsibility in the case of an accident involving such systems have become more pressing and complex. Verification, accountability, and privacy can only be ensured with more transparent systems.

- Operational concerns: Since transparent systems are inspectable and verifiable, they can be examined before deployment to ensure that their decision-making is based on meaningful (ideally causal) relations and not on spurious features, ensuring higher reliability and increased robustness. From the vantage point of researchers or engineers, such systems have the advantage of being more easily debugged and corrected. Moreover, one may expect such systems to be easier to train, more data efficient, and more generalizable and transferable to new domains thanks to interpretability inductive biases.

- Usability concerns: Interpretable and explainable models can form an essential component for building more interactive systems, where an end-user can request more information about the outcome or decision-making process. In particular, explainable systems would arguably be more trustworthy, which is a key requirement for their integration and acceptance (Mohseni et al., 2020), although the question of trust touches on many other contextual and non-epistemic factors (e.g., risk aversion or goals) beyond intelligibility.

In addition to this high-level list of concerns, we refer the interested reader to Whittlestone et al. (2021) for a more thorough discussion about the potential societal impact of the deployment of DRL-based systems. Although interpretability is a pertinent instrument to achieve more accountable AI systems, the debate around their real-life implementation should stay active and include diverse expertise from legal, ethical, and socio-political fields, whose coverage goes beyond the scope of this survey.

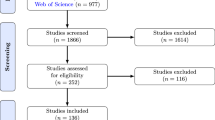

Motivated by the importance of these concerns, the number of publications in DRL specifically tackling interpretability issues has increased significantly in recent years. The surging popularity of this topic also explains the recent publication of three survey papers (Alharin et al., 2020; Heuillet et al., 2021; Puiutta & Veith, 2020) on interpretable and explainable RL. Puiutta and Veith (2020) and Heuillet et al. (2021) provide a short overview with a limited scope, notably in terms of surveyed papers, while Alharin et al. (2020) cover more studies, organized by explanation type. The presentation of those surveys generally leans towards explainability as opposed to interpretability (see Sect. 3 for the definitions adopted in this survey) and focuses on understanding the decision-making part of RL.

In contrast, this survey aims at providing a more comprehensive view of what may constitute interpretable RL, which we here specifically distinguish from explainable RL (see Sect. 3). In particular, while decision-making is indeed an important aspect of RL, we believe that achieving interpretability in RL should involve a more encompassing discussion of every component involved in these algorithms, and should stand on three pillars: interpretable inputs (e.g., percepts or other structural information provided to the agent), interpretable transition/reward models, and interpretable decision-making.

Based on this observation, we organize previous work that proposes methods for achieving greater interpretability in RL along those three components, with an emphasis on DRL papers published in the last 10 years. Thus, in contrast to the previous three surveys, we cover additional work that belongs to interpretable RL, such as relational RL or neuro-symbolic RL, and also draw connections to other work that naturally falls under this designation, such as object-based RL, physics-based models, or logic-based task descriptions. We also briefly examine the potential impact of large language models on interpretable and explainable RL. One goal of this survey is to discuss the work in (deep) RL that is specifically identified as belonging to interpretable RL and to draw connections to previous work in RL that is related to interpretability. Since the latter covers a very broad research space, we can only provide a succinct account of it.

The remainder of this survey is organized as follows. In the next section, we recall the necessary definitions and notions related to RL. Next, we discuss the definition of interpretability (and explainability) in the larger context of artificial intelligence (AI) and machine learning (Sect. 3.1), and apply it in the context of RL (Sect. 3.2). In the following sections, we present the studies related to interpretable inputs (Sect. 4) and models (Sect. 5). The work tackling interpretable decision-making, which constitutes the core part of this survey, is discussed in Sect. 6. For the sake of completeness, we also provide a succinct review of explainable RL (Sect. 7), which helps us contrast it with interpretable RL. Based on this overview, we provide in Sect. 8 a list of open problems and future research directions, which we deem particularly relevant. Finally, we conclude in Sect. 9.

2 Background

In RL, an agent interacts with an environment through an interaction loop. The agent repeatedly receives an observation from the environment, chooses an action, and receives a new observation and usually an immediate reward. Although most RL methods solve this problem by considering the RL agent as reactive (i.e., given an observation, choose an action), Fig. 1 lists some other potential problems that an agent may tackle on top of decision-making: perception if the input is high-dimensional (e.g., image), learning from past experience, knowledge representation (KR) and reasoning, and finally planning if the agent has a model of its environment.
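To make the interaction loop concrete, here is a minimal sketch in Python. The environment (`ToyCorridorEnv`, a five-cell corridor with a reward at the right end and occasional slips) and the helper `run_episode` are hypothetical illustrations, not part of any RL library; the `reset`/`step` interface only loosely mirrors common conventions.

```python
import random


class ToyCorridorEnv:
    """Hypothetical 1D corridor: states 0..length-1, reward 1 at the right end."""

    def __init__(self, length=5, slip=0.1, seed=0):
        self.length = length
        self.slip = slip                  # probability that a move has no effect
        self.rng = random.Random(seed)
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state                 # fully observable: observation == state

    def step(self, action):
        """action 0 = left, 1 = right; returns (observation, reward, done)."""
        move = 1 if action == 1 else -1
        if self.rng.random() >= self.slip:
            self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def run_episode(env, policy, max_steps=100):
    """One episode of the interaction loop: observe, act, receive reward."""
    obs = env.reset()
    total_reward = 0.0
    for t in range(max_steps):
        action = policy(obs)                    # decision-making
        obs, reward, done = env.step(action)    # environment transition
        total_reward += reward
        if done:
            return total_reward, t + 1
    return total_reward, max_steps


if __name__ == "__main__":
    ret, steps = run_episode(ToyCorridorEnv(), policy=lambda obs: 1)
    print(f"return={ret}, steps={steps}")
```

Here the agent is purely reactive: `policy` maps the current observation to an action, and none of the other capabilities listed in Fig. 1 (perception, reasoning, planning) is involved.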

This RL problem is generally modeled as a Markov decision process (MDP) or one of its variants, notably the partially observable MDP (POMDP).Footnote 1 An MDP is defined as a tuple \(({\mathcal {S}}, {\mathcal {A}}, T, R)\) with a set \({\mathcal {S}}\) of states, a set \({\mathcal {A}}\) of actions, a transition function \(T: {\mathcal {S}} \times {\mathcal {A}} \times {\mathcal {S}} \rightarrow [0, 1]\), and a reward function \(R: {\mathcal {S}} \times {\mathcal {A}} \rightarrow {\mathbb {R}}\). The sets of states and actions, which may be finite, infinite, or even continuous, specify respectively the possible world configurations for the agent and the possible actions that it can perform. In a POMDP, the agent does not observe the state directly, but has access to an observation that probabilistically depends on the hidden state. The difficulty in RL is that the transition and reward functions are not known to the agent. The goal of the agent is to learn to choose actions (i.e., encoded in a policy) so as to maximize its expected (discounted) sum of rewards \(\mathbb E\big [\sum _{t=0}^{\infty } \gamma ^t R(S_t, A_t)\big ]\), where \(\gamma \in [0, 1]\) is a discount factor, and \(S_t\) and \(A_t\) are random variables representing the state and action at time step t. The expectation is with respect to the transition probabilities T, the action selection, and a possible distribution over initial states. When conditioned on the initial state, this expected sum of rewards defines the value function of the policy. A policy may choose actions based on states or observations in a deterministic or randomized way.
In RL, the value function often takes the form of a so-called Q-function, which measures the value of taking action a in state s and following the policy thereafter, i.e., \(\mathbb E\big [\sum _{t=0}^{\infty } \gamma ^t R(S_t, A_t) \mid S_0 = s, A_0 = a\big ]\). To solve this RL problem, model-based and model-free algorithms have been proposed, depending on whether a model of the environment (i.e., transition/reward model) is explicitly learned or not.
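As a worked illustration of the Q-function definition, the sketch below computes \(Q^\pi\) for a tiny MDP by repeatedly applying the Bellman expectation backup \(Q(s,a) \leftarrow R(s,a) + \gamma \sum_{s'} T(s,a,s') \sum_{a'} \pi(a' \mid s') Q(s',a')\). Unlike the RL setting described above, it assumes that T and R are known; the two-state MDP and the function name `evaluate_q` are hypothetical.

```python
def evaluate_q(T, R, pi, gamma=0.9, tol=1e-10, max_iter=10_000):
    """Iterative evaluation of Q^pi for a finite MDP whose T and R are known.

    T[s][a][s2] is the transition probability, R[s][a] the reward, and
    pi[s][a] the probability that the policy picks action a in state s.
    """
    n_s, n_a = len(R), len(R[0])
    Q = [[0.0] * n_a for _ in range(n_s)]
    for _ in range(max_iter):
        # V^pi(s2) = sum_a2 pi(a2 | s2) * Q(s2, a2)
        V = [sum(pi[s2][a2] * Q[s2][a2] for a2 in range(n_a)) for s2 in range(n_s)]
        # Bellman expectation backup: Q(s, a) = R(s, a) + gamma * sum_s2 T(s, a, s2) V(s2)
        new_Q = [[R[s][a] + gamma * sum(T[s][a][s2] * V[s2] for s2 in range(n_s))
                  for a in range(n_a)]
                 for s in range(n_s)]
        delta = max(abs(new_Q[s][a] - Q[s][a]) for s in range(n_s) for a in range(n_a))
        Q = new_Q
        if delta < tol:
            break
    return Q


# Two-state example: in state 0, action 0 stays put (reward 0) and action 1
# moves to the absorbing state 1 (reward 1); state 1 yields no further reward.
T = [[[1.0, 0.0], [0.0, 1.0]],
     [[0.0, 1.0], [0.0, 1.0]]]
R = [[0.0, 1.0],
     [0.0, 0.0]]
uniform = [[0.5, 0.5], [0.5, 0.5]]

if __name__ == "__main__":
    print(evaluate_q(T, R, uniform))
```

With \(\gamma = 0.9\) and a uniform policy, \(Q(0,1) = 1\) and the value of staying in state 0 solves \(Q(0,0) = 0.9\,(0.5\,Q(0,0) + 0.5)\), i.e., \(Q(0,0) = 9/11\).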

The success of DRL is explained partly by the use of neural networks (NN) to approximate value functions or policies, but also by various algorithmic advances. Deep RL algorithms can be divided into two main categories: value-based methods and policy gradient methods, in particular in their actor-critic version. For the first category, the model-free methods are usually variations of the DQN algorithm (Mnih et al., 2015). For the second one, the current state-of-the-art model-free methods are PPO (Schulman et al., 2017) for learning a stochastic policy, TD3 (Fujimoto et al., 2018) for learning a deterministic policy, and SAC (Haarnoja et al., 2018) for entropy-regularized learning of a stochastic policy. Model-based methods range from simple approaches, such as first learning a model and then applying a model-free algorithm using the learned model as a simulator, to more sophisticated methods that leverage the learned model to accelerate solving an RL problem (Francois-Lavet et al., 2019; Scholz et al., 2014; Veerapaneni et al., 2020).
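The simplest model-based scheme mentioned above (learn a model, then exploit it) can be sketched in a few lines for a tabular problem. This is a minimal sketch under stated assumptions: the model is a maximum-likelihood estimate built from transition counts, and, to keep the example short, the learned model is exploited with Q-value iteration (dynamic programming) rather than by running a model-free learner inside it as a simulator. All names and the logged data are hypothetical.

```python
from collections import defaultdict


def estimate_model(transitions, n_states, n_actions):
    """Maximum-likelihood tabular model from observed (s, a, r, s2) tuples.

    Unvisited (s, a) pairs keep an all-zero transition row and zero reward,
    i.e., they are treated as terminal: a simplifying assumption of this sketch.
    """
    counts = defaultdict(lambda: [0] * n_states)
    reward_sum = defaultdict(float)
    for s, a, r, s2 in transitions:
        counts[(s, a)][s2] += 1
        reward_sum[(s, a)] += r
    T = [[[0.0] * n_states for _ in range(n_actions)] for _ in range(n_states)]
    R = [[0.0] * n_actions for _ in range(n_states)]
    for (s, a), c in counts.items():
        n = sum(c)
        T[s][a] = [x / n for x in c]
        R[s][a] = reward_sum[(s, a)] / n
    return T, R


def q_value_iteration(T, R, gamma=0.9, tol=1e-10, max_iter=10_000):
    """Plan in the learned model: Q(s, a) = R(s, a) + gamma * sum_s2 T(s, a, s2) max_a2 Q(s2, a2)."""
    n_s, n_a = len(R), len(R[0])
    Q = [[0.0] * n_a for _ in range(n_s)]
    for _ in range(max_iter):
        V = [max(row) for row in Q]
        new_Q = [[R[s][a] + gamma * sum(T[s][a][s2] * V[s2] for s2 in range(n_s))
                  for a in range(n_a)]
                 for s in range(n_s)]
        delta = max(abs(new_Q[s][a] - Q[s][a]) for s in range(n_s) for a in range(n_a))
        Q = new_Q
        if delta < tol:
            break
    return Q


# Logged experience from a two-state task: in state 0, action 1 reaches
# state 1 with reward 1; action 0 stays in state 0; state 1 is absorbing.
data = [(0, 0, 0.0, 0), (0, 1, 1.0, 1), (1, 0, 0.0, 1), (1, 1, 0.0, 1)] * 10
T_hat, R_hat = estimate_model(data, n_states=2, n_actions=2)
Q = q_value_iteration(T_hat, R_hat)
greedy = [max(range(2), key=lambda a: Q[s][a]) for s in range(2)]
```

On this data, value iteration converges to \(Q(0,1) = 1\) and \(Q(0,0) = 0.9\), so the greedy policy in state 0 moves to state 1.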

For complex decision-making tasks, hierarchical RL (HRL) (Barto & Mahadevan, 2003) has been proposed to exploit temporal and hierarchical abstractions, which may facilitate learning and transfer, but also promote intelligibility. Although various architectures have been proposed, decisions in HRL are usually made at two or more levels. In the most popular framework, a higher-level controller (also called meta-controller) chooses temporally-extended macro-actions (also called options), while a lower-level controller chooses the primitive actions. Intuitively, an option can be understood as a policy with some starting and ending conditions. When this policy is known, it directly corresponds to the policy applied by the lower-level controller. An option can also be interpreted as a subgoal chosen by the meta-controller for the lower-level controller to reach.
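The two-level decision scheme can be illustrated with a minimal options sketch. It assumes a hypothetical ten-cell corridor with a door at cell 5 and a goal at cell 9; the options `go_to_door` and `go_to_goal` and the hand-written meta-controller are illustrative choices rather than learned components. Each option bundles an initiation set, an intra-option policy, and a termination condition.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List, Tuple

CORRIDOR_LENGTH = 10  # hypothetical corridor with states 0..9, door at 5, goal at 9


@dataclass(frozen=True)
class Option:
    """Option: where it may start, which primitive actions it takes, when it stops."""
    name: str
    initiation: FrozenSet[int]
    policy: Callable[[int], int]       # state -> primitive action (-1 or +1)
    terminates: Callable[[int], bool]  # state -> True when the option ends


def primitive_step(state: int, action: int) -> int:
    """Deterministic corridor dynamics, clipped to the corridor bounds."""
    return min(max(state + action, 0), CORRIDOR_LENGTH - 1)


def run_option(state: int, option: Option, max_steps: int = 100) -> int:
    """Lower-level controller: execute the option's policy until it terminates."""
    if state not in option.initiation:
        raise ValueError(f"{option.name} cannot start in state {state}")
    for _ in range(max_steps):
        if option.terminates(state):
            break
        state = primitive_step(state, option.policy(state))
    return state


GO_TO_DOOR = Option("go_to_door", frozenset(range(0, 5)), lambda s: +1, lambda s: s == 5)
GO_TO_GOAL = Option("go_to_goal", frozenset(range(5, 10)), lambda s: +1, lambda s: s == 9)


def meta_controller(state: int) -> Option:
    """Higher-level controller: picks an option (macro-action), not a primitive action."""
    return GO_TO_DOOR if state < 5 else GO_TO_GOAL


def solve(start: int = 0, goal: int = 9) -> Tuple[List[str], int]:
    """Alternate between the two levels until the goal is reached."""
    state, plan = start, []
    while state != goal:
        option = meta_controller(state)
        plan.append(option.name)
        state = run_option(state, option)
    return plan, state
```

`solve()` returns `(['go_to_door', 'go_to_goal'], 9)`: the high-level trace is a two-step plan over named options, which is one way HRL can make behavior easier to read.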

3 Interpretability and explainability

In this section, we first discuss the definition of interpretability and explainability as proposed in the explainable AI (XAI) literature. Then, we focus on the instantiations of those notions in RL.

3.1 Definitions

Various terms have been used in the literature to qualify the capacity of a model to make itself understandable, such as interpretability, explainability, intelligibility, comprehensibility, transparency, or understandability. Since no consensus about the nomenclature in XAI has been reached yet, they are not always distinguished and are sometimes used interchangeably in past work or surveys on XAI. Indeed, interpretability and explainability are for instance often used as synonyms (Miller, 2019; Molnar, 2019; Ribeiro et al., 2016a). For better clarity and specificity, in this survey, we only employ the two most common terms, interpretability and explainability, and clearly distinguish those two notions, which we define below. This distinction allows us to provide a clearer view of the different work in interpretable and explainable RL. Moreover, we use intelligibility as a generic term that encompasses those two notions. For a more thorough discussion of the terminology in the larger context of machine learning and AI, we refer the interested readers to surveys on XAI (Barredo Arrieta et al., 2020; Chari et al., 2020; Gilpin et al., 2019; Lipton, 2017).

Following Barredo Arrieta et al. (2020), we simply understand interpretability as a passive quality of a model, while explainability here refers to an active notion that corresponds to any external, usually post-hoc, methodology or proxy aiming at providing insights into the workings and decisions of a trained model. Importantly, the two notions are not mutually exclusive. Indeed, explainability techniques can be applied to interpretable models, and explanations may potentially be more easily generated from more interpretable models. This is why one may argue that model interpretability is more desirable than post-hoc explainability (Rudin, 2019). Moreover, both notions are epistemologically inseparable from both the observer and the context. Indeed, what is intelligible and what constitutes a good explanation may be completely different for an end-user, a system designer (e.g., AI engineer or researcher), or a legislator, for instance. Except in our discussion on explainable RL, we will generally take the point of view of a system designer.

While interpretability is achieved by resorting to intrinsically more transparent models, explainability requires carrying out additional processing steps to explicitly provide a kind of explanation aiming to clarify, justify, or rationalize the decisions of a trained black-box model. At first sight, it seems that interpretability involves an objectual and mechanistic understanding of the model, whereas explainability mostly restricts itself to a more functionalFootnote 2 (and often model-agnostic) understanding of the outcomes of a model. Yet, as advocated by Páez (2019), post-hoc intelligibility in AI should require some degree of objectual understandingFootnote 3 of the model, since a thorough understanding of a model’s decisions also encompasses the ability to think counterfactually (“What if...”) and contrastively (“How could I alter the data to get outcome X?”).

Since the main focus of this review is interpretability, we further clarify this notion by recalling three potential definitions as proposed by Lipton (2017): simulatability, decomposability, and algorithmic transparency.

A model is simulatable if its inner working can be simulated by a human. Examples of simulatable models are small linear models or decision trees. The concept of simplicity, and thus quantitative aspects, consequently underlie any definition of simulatability. In that sense, a hypothesis class is not inherently interpretable with respect to simulatability. Indeed, a decision tree may not be simulatable if its depth is huge, whereas a NN may be simulatable if it has only a few hidden nodes. A model is decomposable if each of its parts (input, parameter, and calculation) can be understood intuitively. Since a decomposable model assumes its inputs to be intelligible, any simple model based on complex, highly engineered features is not decomposable. Examples of decomposable models are linear models or decision trees using interpretable features. While the first two definitions focus on the model, the third one shifts the attention to the learning process and requires it to be intelligible. Thus, an algorithm is transparent if its properties (e.g., convergence) are well understood. In that sense, standard learning methods for linear regression or support vector machines may be considered transparent. However, since the training of deep learning models is still not well understood, these algorithms currently suffer from a regrettable lack of transparency.
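To illustrate simulatability and decomposability together, the sketch below encodes a depth-2 decision-tree policy over named features. The features (`pole_angle`, `pole_angular_velocity`, loosely inspired by a cart-pole task), the thresholds, and the actions are hypothetical and hand-chosen, not learned; the point is that a human can trace any decision in at most two comparisons, each on a meaningful quantity.

```python
# Internal node: (feature, threshold, subtree_if_le, subtree_if_gt); leaf: action name.
# Feature names and thresholds are hypothetical, chosen by hand for illustration.
TREE = ("pole_angle", 0.0,
        ("pole_angular_velocity", 0.5, "push_left", "push_right"),
        ("pole_angular_velocity", -0.5, "push_left", "push_right"))


def act(tree, obs):
    """Follow the tree: at most depth(tree) comparisons, each on a named feature."""
    while not isinstance(tree, str):
        feature, threshold, if_le, if_gt = tree
        tree = if_le if obs[feature] <= threshold else if_gt
    return tree


def depth(tree):
    """Number of comparisons on the longest root-to-leaf path."""
    if isinstance(tree, str):
        return 0
    return 1 + max(depth(tree[2]), depth(tree[3]))


def n_leaves(tree):
    """Number of distinct decision rules encoded by the tree."""
    if isinstance(tree, str):
        return 1
    return n_leaves(tree[2]) + n_leaves(tree[3])
```

The helpers `depth` and `n_leaves` give simple size measures; as noted above, the same kind of tree grown to a large depth would lose simulatability even though its hypothesis class is unchanged.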

Although algorithmic transparency is important, the most relevant notions for this survey are the first two, since our main focus is the intelligibility of trained models. More generally, it would be useful and interesting to try to provide finer definitions of those notions; however, this is out of the scope of this paper, whose goal is to provide an overview of work aiming at enhancing interpretability in (deep) RL.

3.2 Interpretability in RL

Based on the previous discussion of interpretability in the larger context of AI, we now turn to the RL setting. To solve an RL problem, the agent may need to solve different AI tasks (notably perception, knowledge representation, reasoning, learning, planning) depending on the assumptions made about the environment and the capability of the agent (see Fig. 1).

For this whole process to be interpretable, all its components arguably have to be intelligible: (1) the inputs (e.g., observations or any other information the agent may receive) and their processing, (2) the transition and preference models, and (3) the decision-making model (e.g., policy and value functions). The preference model describes which actions or policies are preferred. It can simply be based on the usual reward function, but can also take more abstract forms such as logic programs. Note that making those components more intelligible presupposes a certain disentanglement of the underlying factors and entails a certain representation structure. With this consideration, this survey can be understood as discussing methods to achieve structure in RL, which consequently enhances interpretability.

The three definitions of interpretability (i.e., simulatability, decomposability, and algorithmic transparency) discussed previously can be applied in the RL setting. For instance, for an RL model to be simulatable, it has to involve simple inputs, simple preference (possibly also transition) models, and simple decision-making procedures, which may be hard to achieve in practical RL problems. In this regard, applying those definitions of interpretability at the global level in RL does not, in our opinion, lead to interesting insights. However, because RL is based on different components, it may be judicious to apply the different definitions of interpretability to each of them, possibly in different ways. A more modular view provides a more revealing analysis framework to understand previous and current work related to interpretable RL. Thus, an RL approach can be categorized, for instance, as based on a non-interpretable input model but simulatable reward and decision-making models (Penkov & Ramamoorthy, 2019), or as based on simple inputs, a simulatable reward model, and decomposable transition and decision-making models (Degris et al., 2006).

In the end, interpretability is a difficult notion to delineate, whose definition may depend on both the observer and the context. Moreover, it is generally not a Boolean property, but there is a continuum from black-box (e.g., deep NN) to undoubtedly interpretable (e.g., structured program) models. For these reasons, we discuss interpretable methods, but also DRL approaches that are not necessarily considered interpretable, but bring some intelligibility in the RL framework (e.g., via NN architectural bias or regularization). We organize these studies along three categories: interpretable inputs, interpretable transition and preference models, and interpretable decision-making. We argue that they are essential aspects of interpretable RL since they each pave the way towards higher interpretability in RL. As in any classification attempt, the boundary between the different clusters is not completely sharp. Indeed, some propositions could arguably belong to several categories. However, to avoid repetition, we generally discuss them only once with respect to their most salient contributions.

4 Interpretable inputs

A first step towards interpretable RL regards the inputs that an RL agent uses to learn and make its decisions. Arguably, these inputs must be intelligible if one wants to understand the decision-making process later on. Note that we define inputs in a very general sense: they can be any interpretable information specified by the system designer. Thus, they include the typical (interpretable) RL observations, but also any structural information that makes the data provided to the agent more intelligible, such as the relational or hierarchical structure of the problem.

Interpretable numeric observations (e.g., kinematic information) can be directly provided by sensors or estimated from high-dimensional observations (e.g., depth from images in Michels et al., 2005). To keep this survey concise, we do not cover such methods, which are more related to the state estimation problem. Instead, we mainly focus on RL approaches exploiting (pre-specified or learned) symbolic and structural information, since they specifically enforce intelligibility in the agents’ inputs. Moreover, such information can speed up learning, improve generalization and transfer, and be smoothly integrated with reasoning and planning.

Diverse approaches have been investigated to provide interpretable inputs to the agent (see Fig. 2). They may be pre-given as seen in the literature of structured RL (Sect. 4.1), or may need to be extracted from high-dimensional observations (Sect. 4.2). Tangentially, additional interpretable knowledge can be provided to help the RL agent, in addition to the observations (Sect. 4.3).

4.1 Structured approaches

The literature explored in this subsection assumes a pre-given structured representation of the environment which may be modelled through a collection of objects, and their relations as in object-oriented or relational RL (RRL, Dzeroski et al., 1998). Thus, the MDP is assumed to be structured and the problem is solved within that structure: task, reward, state transition, policies and value function are defined over objects and their interactions—e.g., using first-order logicFootnote 4 (FOL, Barwise, 1977) as in RRL. A non-exhaustive overview of these approaches is provided in Table 1.

A first question, tied to knowledge representation (Swain, 2013), is the choice of the specific structured representations for the different elements (i.e., state, transition, rewards, value function, policy). For instance, a first step in this literature was to depart from propositional representations and turn to relational ones, which not only seem to better fit the way we reason about the environment (in terms of objects and relations) but may also bring other benefits, such as the easy incorporation of logical background knowledge. Indeed, in propositional representations, the number of objects is fixed and all relations have to be grounded (a computationally heavy operation); most importantly, such representations cannot generalize over objects and relations, and are unable to capture the structural aspect of the domain (e.g., Blocks World, Slaney & Thiébaux, 2001). Variations of this structure are presented below.
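The contrast between propositional and relational representations can be made concrete in Blocks World. In the minimal sketch below (hypothetical helper names; states given as sets of `on(x, y)` facts), a grounded encoding must enumerate \(n(n-1) + n = n^2\) atoms `on(x, y)` for n blocks, whereas the relational rule defining `clear` is written once with variables and applies unchanged to any number of blocks.

```python
from itertools import permutations


def ground_on_atoms(blocks):
    """Every propositional atom on(x, y) that a grounded encoding must enumerate."""
    atoms = [f"on({x},{y})" for x, y in permutations(blocks, 2)]
    atoms += [f"on({x},table)" for x in blocks]
    return atoms


def clear(on_facts, blocks):
    """Relational rule clear(X) <- not exists Y: on(Y, X).

    The rule is written once with variables and applies to any number of blocks.
    """
    covered = {below for (_, below) in on_facts}
    return {x for x in blocks if x not in covered}
```

For instance, with `on(a, b)`, `on(b, table)`, and `on(c, table)`, the rule yields `{a, c}`; adding blocks requires no change to the rule, only to the set of facts, while the grounded atom count grows quadratically.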

Relational MDP

Relational MDPs (RMDPs) (Guestrin et al., 2003) are first-order representations of factored MDPs (Boutilier et al., 2000) and are based on probabilistic relational modelsFootnote 5 (PRM, Koller, 1999). The representation involves different classes of objects (over which binary relations are defined), each having attributes that take values in a specific domain. Transition and reward models are assumed given, e.g., as a dynamic Bayesian network (DBN, Dean & Kanazawa, 1990), although the specific representational language may vary.Footnote 6 Closely related, Object-Oriented MDPs (see Diuk et al., 2008, presented in Sect. 5.1), later extended to deictic representations (Marom & Rosman, 2018), use a similar state representation yet differ in the way their transition dynamics are described: transitions are assumed deterministic and are learned within a specific propositional form in the first step of their algorithm.

Relational RL

Following the initial work on Relational RL (Dzeroski et al., 1998), a substantial line of work, summarized below, extends previous work on MDPs modelled in a relational language to the learning setting, at the crossroads of RL and logical machine learning, such as inductive logic programming (ILP, Cropper et al., 2020) and probabilistic logic learning (De Raedt & Kimmig, 2015). We also refer the interested readers to the surveys by van Otterlo (2009, 2012).

In Džeroski et al. (2001), the Q-function is learned with a relational regression tree using Q-learning extended to situations where states, actions, and policies are represented using first-order logic. However, explicitly representing value functions in relational learning is difficult, partly due to concept drift (van Otterlo, 2005), which occurs because the policy providing examples for the Q-function is constantly being updated. This may motivate turning towards policy learning (as in Dzeroski et al., 1998, relying on P-trees) and employing approximate policy iteration methods, which keep an explicit representation of the policy but not of the value function, for larger probabilistic domains.
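A relational regression tree used as a Q-function can be pictured as follows. This is a minimal sketch: the tree structure below is hand-specified for the goal `on(a, b)` (in Džeroski et al. (2001), the tree itself is induced from examples generated by Q-learning, which is not shown here), and only the leaf values receive Q-learning-style updates. The class, test, and leaf names are hypothetical.

```python
# State: frozenset of (x, y) facts meaning on(x, y); action: ("move", x, y).


def is_clear(state, x):
    """clear(X): the table is always clear; a block is clear if nothing is on it."""
    return x == "table" or all(below != x for (_, below) in state)


class RelationalQTree:
    """Hand-specified relational regression tree for the goal on(a, b).

    Each internal test is a first-order condition over the state and the
    action's arguments; each leaf stores one Q-value shared by every
    (state, action) pair that reaches it, whatever the number of blocks.
    """

    def __init__(self):
        self.leaves = {"achieves_goal": 0.0, "unstacks_from_b": 0.0, "other": 0.0}

    def leaf(self, state, action):
        _, x, y = action
        if x == "a" and y == "b" and is_clear(state, "a") and is_clear(state, "b"):
            return "achieves_goal"          # move(a, b) is applicable
        if (x, "b") in state and is_clear(state, x):
            return "unstacks_from_b"        # moves the clear block sitting on b
        return "other"

    def q(self, state, action):
        return self.leaves[self.leaf(state, action)]

    def update(self, state, action, reward, next_state, next_actions, gamma=0.9, lr=0.5):
        """Q-learning-style update of the leaf reached by (state, action)."""
        future = max((self.q(next_state, a2) for a2 in next_actions), default=0.0)
        target = reward + gamma * future
        key = self.leaf(state, action)
        self.leaves[key] += lr * (target - self.leaves[key])
```

Because each leaf is reached through first-order tests rather than a fixed propositional state vector, the value learned for `move(a, b)` in a two-block state is reused as-is in a state with more blocks.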

Diverse extensions of relational MDPs and of Relational RL (RRL) have been proposed: exact or approximate, model-free or model-based, with more or less expressive representations, and within a flat or more hierarchical approach (Driessens & Blockeel, 2001). Regarding the representations, previous model-free RRL work is based on explicit logical representations such as logical regression trees (in FOL or, more rarely, Higher Order Logic (HOL, e.g., Cole et al., 2003)), which recursively partition the state space in a top-down way; in contrast, other bottom-up and feature-based approaches (Sanner, 2005; Walker et al., 2004) aim to learn useful relational features, which they combine to estimate the value function, either by feeding them to a regression algorithm (Walker et al., 2004) or into a relational naive Bayes network (Sanner, 2005). Other alternatives to regression trees have been implemented, such as Gaussian processes (incrementally learnable Bayesian regression) with graph kernels defined over states and actions (Driessens et al., 2006). Finally, other work in quest of more expressivity turns towards neural representations. For instance, after extracting a graph instance expressed in RDDL\(^{7}\) (Sanner, 2011), Garg et al. (2020) compute node embeddings via graph propagation steps, which are then fed to value and policy decoders (multi-layer perceptrons, MLP) attached to each action symbol. In Janisch et al. (2021), graph NNs are similarly used to build a relational state representation in relational problems. The authors resort to auto-regressive policy decomposition (Vinyals et al., 2017) to tackle multi-parameter actions (attached to unary or binary predicates).
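The neural alternative can be sketched with plain Python lists. The snippet below shows one generic form of message passing (sum aggregation, ReLU) over an object graph, followed by one decoder per action symbol that scores grounded actions from node embeddings. It is a simplified illustration, not the architecture of Garg et al. (2020) or Janisch et al. (2021): the decoders are linear rather than MLPs, and the weights are set by hand instead of being learned. All names are hypothetical.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def vec_add(u, v):
    return [a + b for a, b in zip(u, v)]


def message_passing(features, edges, W_self, W_msg, steps=1):
    """Sum-aggregation message passing over an object graph.

    h_i <- relu(W_self h_i + W_msg * sum_{(j, i) in edges} h_j), repeated `steps`
    times. W_self and W_msg are square so embeddings keep their dimension.
    """
    h = {node: list(vec) for node, vec in features.items()}
    for _ in range(steps):
        new_h = {}
        for i, h_i in h.items():
            agg = [0.0] * len(h_i)
            for src, dst in edges:
                if dst == i:
                    agg = vec_add(agg, h[src])   # messages flow along src -> dst
            new_h[i] = relu(vec_add(matvec(W_self, h_i), matvec(W_msg, agg)))
        h = new_h
    return h


def action_scores(h, decoders, grounded_actions):
    """Score each grounded unary action act(obj) with the decoder of its action symbol."""
    return {(act, obj): sum(w * x for w, x in zip(decoders[act], h[obj]))
            for act, obj in grounded_actions}
```

Since the same weights are shared by all nodes, the computation applies unchanged to graphs with any number of objects.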

Let us point out that, despite the “reinforcement” appellation, a significant proportion of work in RRL assumes that environment models (transitions and reward structures) are known to the agent, which may be unrealistic. RRL has also been applied to diverse domains, such as efficient exploration in robotics (Martínez et al., 2017b).

Discussion

In structured MDPs and relational RL, by borrowing from symbolic reasoning, most work leads to agents that can learn and reason about objects. Such an explicit and logical representation of learned structures may help agents generalize or transfer efficiently and robustly to similar representational frameworks, e.g., by reusing learned representations or policies. However, major drawbacks are that these approaches require the symbolic representation to be hand-designed and often rely on non-differentiable operations. They are therefore not very flexible with respect to framework variations (e.g., in the task or input) and not well suited for more complex tasks or noisy real-life environments.

4.2 Learning symbolic representations

When inputs are given as high-dimensional raw data, it seems judicious, although challenging, to extract explicit symbolic representations on which we can arguably reason and plan in a more efficient and intelligible way. This process of abstraction, which is very familiar to human cognition,Footnote 7 reduces the complexity of an environment to low-dimensional, discrete, abstract features. By abstracting away lower-level details and irrelevant variations, this paradigm brings clear advantages and could greatly improve the learning and generalization abilities of the agent. Moreover, it makes it possible to reuse high-level features across environments, space, and time.

Some promising new research directions, tackling the key problem of symbol grounding (Harnad, 1990), adopt end-to-end training, thereby tying the emergence of symbols not only to control but also to efficient high-level planning (Andersen & Konidaris, 2017; Konidaris et al., 2014, 2015) or to model-based learning (Francois-Lavet et al., 2019), in order to encourage more meaningful abstractions. The work presented below (see Table 2) ranges from extracting symbols to extracting relational representations, which are in turn used for control or planning. In the next two sections, we distinguish actual high-level (HL) decomposability, meaning that the HL module is decomposable, from HL modularity, which denotes the gain in interpretability brought by task decomposition and may be seen as partial high-level decomposability.

Symbol grounding

Some previous approaches (Andersen & Konidaris, 2017; Konidaris et al., 2014, 2015, 2018) have tackled the problem of learning, from raw data, symbolic representations suited to high-level planning. As they are concerned with evaluating the feasibility and success probability of a high-level plan, they only need to construct symbols for the initiation set and the termination set of each option. There, the state variables are gathered into factors, which can be seen as sub-goals, and are tied to a set of symbols. Through unsupervised clustering, each option is attached to a partition of the symbolic state, which leads to a probabilistic distribution over symbolic options (Sutton et al., 1999) that guides the higher-level policy in evaluating the plan.

In a different direction, some researchers have involved human teaching (Kulick et al., 2013) or programs (Penkov & Ramamoorthy, 2019; Sun et al., 2020) to guide the learning of symbols. For instance, in the work by Penkov and Ramamoorthy (2019), the pre-given program mapping the perceived symbols (from an auto-encoder network) to actions imposes semantic priors on the learned representations and may be seen as a regularization that structures the latent space. Meanwhile, Sun et al. (2020) present a perception module that answers the conditional queries (“if”) within the program, which accordingly provides a symbolic goal to the low-level controller. However, by relying on a human-designed program or on human teaching, these studies partly bypass the problem of autonomous and enacted symbol extraction.

Object Recognition

When the raw input is an image, diverse computer vision techniques, such as Object Recognition (OR) or Instance Segmentation (combining semantic segmentation and object localization), are helpful for extracting a symbolic representation. Such extracted information, fed as input to the policy or Q-network, should arguably lead to more interpretable networks. OR aims specifically to find and identify objects in an image or video sequence, despite possible changes in size, scale, or occlusion when objects move; it ranges from classical techniques, such as template matching (Brunelli, 2009) or the Viola-Jones algorithm (Viola & Jones, 2001), to more advanced ones.

As an example of recent RL work exploiting symbolic representations, O-DRL (Iyer et al., 2018; Li et al., 2017b) can incorporate object characteristics such as the presence and positions of game objects, which are extracted with template matching before being fed into the Q-network. In the case of moving rigid objects, techniques have been developed to exploit information from object movement to further improve object recognition. For instance, Goel et al. (2018) first autonomously detect moving objects by exploiting structure from motion, and then use this information for action selection.
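To make this kind of pipeline concrete, here is a minimal sketch (illustrative only) of template matching turning a frame into symbolic object features for a Q-network. It assumes a grayscale frame stored as nested lists, a sum-of-squared-differences score and an exact-match threshold; the function names, the `[present, row, col]` encoding and the toy templates are hypothetical, and the cited works may use different scores and encodings.

```python
from typing import Dict, List, Optional, Tuple

Grid = List[List[int]]


def match_template(frame: Grid, template: Grid) -> Tuple[Optional[Tuple[int, int]], float]:
    """Slide the template over the frame; return the best top-left (row, col) and its SSD score."""
    fh, fw = len(frame), len(frame[0])
    th, tw = len(template), len(template[0])
    best_pos: Optional[Tuple[int, int]] = None
    best_score = float("inf")
    for r in range(fh - th + 1):
        for c in range(fw - tw + 1):
            # Sum of squared differences: 0 means a pixel-perfect match.
            score = sum(
                (frame[r + i][c + j] - template[i][j]) ** 2
                for i in range(th)
                for j in range(tw)
            )
            if score < best_score:
                best_pos, best_score = (r, c), score
    return best_pos, best_score


def object_features(frame: Grid, templates: Dict[str, Grid], max_ssd: float = 0.0) -> List[float]:
    """Symbolic input for a Q-network: [present, row, col] per object, in sorted name order."""
    features: List[float] = []
    for name in sorted(templates):
        pos, score = match_template(frame, templates[name])
        if pos is not None and score <= max_ssd:
            features.extend([1.0, float(pos[0]), float(pos[1])])
        else:
            features.extend([0.0, 0.0, 0.0])  # object not found in this frame
    return features


frame = [[0] * 5 for _ in range(5)]
frame[2][3] = 9  # a one-pixel "ball"
templates = {"ball": [[9]], "paddle": [[7, 7]]}
print(object_features(frame, templates))  # [1.0, 2.0, 3.0, 0.0, 0.0, 0.0]
```

The resulting fixed-length vector, rather than raw pixels, is what the Q-network would see, so each input dimension has a readable meaning.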

Aiming to delineate a general end-to-end RL framework, Deep Symbolic RL (DSRL, Garnelo et al., 2016) combines a symbolic front end with a neural back end that learns to map high-dimensional raw sensor data into a symbolic representation in a lower-dimensional conceptual space. Such a proposal fits the scheme of emblematic neuro-symbolic approaches (as presented in Bader & Hitzler, 2005, Fig. 4), where a symbolic system and a connectionist system share information back and forth. The authors also reflect on a few key notions for an ideal implementation, such as conceptual abstraction (e.g., how to detect high-level similarity), compositional structure, common sense priors, or causal reasoning. However, their first prototype is relatively limited, with a symbolic front end carrying out very little high-level reasoning and a simple neural back end for unsupervised symbol extraction. Further work has questioned the generalization abilities of DSRL (Dutra & d’Avila Garcez, 2017) or aimed to incorporate common sense within DSRL (d’Avila Garcez et al., 2018) to improve learning efficiency and accuracy, albeit still within quite restricted settings.

Relational Representations

To obtain more interpretable inputs for the policy or Q-value network, some work deals specifically with relation-centric representations, e.g., graph-based representations. Such relational representations can be leveraged for decision-making, e.g., by feeding them to the value or policy network, or even in a hierarchical setting. Within this line of research, graph networks (Battaglia et al., 2018) stand out as an effective way to compute interactions between entities and can support combinatorial generalization to some extent (Battaglia et al., 2018; Cranmer et al., 2020; Gilmer et al., 2017; Li et al., 2017c; Sanchez-Gonzalez et al., 2018; Scarselli et al., 2009). Roughly, the inference procedure is a propagation process similar to message passing. Thanks to their high capacity, graph networks have been exploited extensively in a diverse range of problem domains, in supervised and unsupervised learning as well as in model-free and model-based RL, for tasks ranging from visual scene understanding and physical system dynamics to chemical molecule properties, image segmentation, point cloud data, combinatorial optimization, and multi-agent system dynamics.
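The propagation process can be illustrated with one round of message passing. This is a minimal sketch, assuming sum aggregation and two hand-written stand-ins (`diff_message` and `half_update`, both hypothetical) for what would be learned MLPs in a graph network.

```python
from typing import Callable, Dict, List, Tuple

Vector = List[float]


def message_passing_step(
    nodes: Dict[str, Vector],
    edges: List[Tuple[str, str]],
    edge_fn: Callable[[Vector, Vector], Vector],
    node_fn: Callable[[Vector, Vector], Vector],
) -> Dict[str, Vector]:
    """One round: a message per directed edge, summed at the receiver, then a node update."""
    dim = len(next(iter(nodes.values())))
    inbox = {name: [0.0] * dim for name in nodes}
    for sender, receiver in edges:
        message = edge_fn(nodes[sender], nodes[receiver])
        inbox[receiver] = [acc + m for acc, m in zip(inbox[receiver], message)]
    return {name: node_fn(nodes[name], inbox[name]) for name in nodes}


# Hypothetical stand-ins for learned functions: the message is the sender-receiver
# difference, and the update moves the node halfway along its aggregated message.
def diff_message(sender: Vector, receiver: Vector) -> Vector:
    return [s - r for s, r in zip(sender, receiver)]


def half_update(state: Vector, aggregated: Vector) -> Vector:
    return [x + 0.5 * m for x, m in zip(state, aggregated)]


nodes = {"a": [0.0], "b": [2.0], "c": [4.0]}
edges = [("a", "b"), ("c", "b"), ("b", "a")]
print(message_passing_step(nodes, edges, diff_message, half_update))
# {'a': [1.0], 'b': [2.0], 'c': [4.0]}
```

Stacking several such rounds lets information travel further along the graph; the entity-and-relation structure remains explicit, even when the functions themselves are neural.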

Aiming to represent relations between objects, relational modules have been designed to inform the Q-network (Adjodah et al., 2018) and/or the policy network (Zambaldi et al., 2019). In the work by Zambaldi et al. (2019), pairwise interactions are computed via a self-attention mechanism (Vaswani et al., 2017) and used to update each entity representation, which, according to the authors, comes to reflect important structure about the problem and the agent’s intention. Unlike most prior work on relational inductive biases (e.g., Wang et al., 2018), it does not rely on a priori knowledge of the problem and its relations, yet it is hard to scale to large input spaces, since its complexity is quadratic in the number of entities. Other relational NN modules, which aim to factorize the dynamics of physical systems into pairwise interactions (e.g., Chang et al., 2017; Santoro et al., 2017), could easily be incorporated into any RL framework.
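A minimal sketch of an entity-level self-attention update in the spirit of the relational module described above. It assumes a single head and identity query/key/value projections (the cited work learns these projections); the returned attention matrix has one row and one column per entity, which is where the quadratic cost comes from, and is also what one may inspect when looking for interpretable relations.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def entity_self_attention(entities: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention over entity vectors.

    Queries, keys and values are the raw entity vectors (identity projections,
    a simplification). Returns the updated entities and the n x n attention matrix.
    """
    d = len(entities[0])
    updated: Matrix = []
    attention: Matrix = []
    for query in entities:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in entities]
        weights = softmax(scores)
        attention.append(weights)
        updated.append([sum(w * v[j] for w, v in zip(weights, entities)) for j in range(d)])
    return updated, attention


entities = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical per-object feature vectors
updated, attention = entity_self_attention(entities)
```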

Discussion

Extracting symbolic and relational representations from high-dimensional raw data is crucial, as it would avoid the need for hand-designing the symbolic domain and could therefore unlock enhanced adaptability when facing new environments. Indeed, the symbolic way of representing the environment inherently benefits from its compositional and modular perspective, and abstraction makes it possible to reduce the complexity of the state space. Moreover, most of the modules presented in this section may be incorporated as a preprocessing step for any object-oriented or relational RL method, either trained beforehand or, more smoothly, end-to-end with the subsequent model.

Nevertheless, despite a certain history of work in this area, in the absence of pre-given hand-crafted schemes, it remains a considerable challenge for an agent to autonomously extract relevant abstractions from a high-dimensional, continuous, complex, and noisy environment. Advanced computer vision techniques could be leveraged in RL, e.g., for object detection (e.g., YOLO, Redmon et al., 2016), tracking (e.g., Deep SORT, Wojke et al., 2017), or extracting structured representations (e.g., scene graphs, Bear et al., 2020).

For scene interpretation, going beyond the traditional spatial or subsumption (“part-of”) relations, some logic-based approaches have emerged that use decidable fragments of FOL, such as Description Logic (DL), in order to infer new facts in a scene from basic components (object types or spatial relations), e.g., to redefine labels. For instance, Donadello et al. (2017), extending the work of Serafini and d’Avila Garcez (2016), employ fuzzy FOL. Another idea would be to first learn, ideally causally, disentangled subsymbolic representations from low-level data (e.g., Higgins et al., 2018), to bootstrap the subsequent learning of higher-level symbolic representations, on which more logical and reasoning-based frameworks can then be deployed. In partially observable domains, an alternative approach is to enforce interpretability of the agent’s memory (Paischer et al., 2023).

As a side note, this line of work touches on sensitive questions about how symbols acquire their meaning, e.g., the well-known symbol grounding problem, which asks how to ground representations and symbolic entities in raw observations. Besides the challenges of “when” and “how” to invent a new symbol, there is also the question of how to assess its quality.

4.3 Hierarchical approaches

Instead of working entirely within a symbolic structure as in Sect. 4.1, many researchers have tried to combine elements of symbolic knowledge with sub-symbolic components, with notable examples within hierarchical RL (HRL, e.g., Hengst, 2010). Their aim was notably to leverage both the symbolic and neural worlds, with a more structured high level and a more flexible low level. In this type of work, the agent can be understood as taking this interpretable structural information as input in addition to its usual observations. One may argue that this hierarchical structure helps make more sense of the low-level, high-dimensional observations.

Table 3 introduces these approaches, focusing notably on their high-level interpretability, as their lower-level components, especially when based on neural networks, rarely claim to be interpretable. Indeed, the different levels of temporal and hierarchical abstraction in human decision-making arguably contribute to making it more intelligible. For instance, Beyret et al. (2019) demonstrate how a meta-controller providing subgoals to a controller achieves both performance and interpretability for robotic tasks.

Since it works at a higher level of abstraction than the controller, it seems reasonable for the high-level module (e.g., meta-controller, planner) to manipulate symbolic representations and handle the reasoning part, while the low-level controller could still benefit from the flexibility of neural approaches. Yet, we could also imagine less dichotomous architectures: for instance, another neural module may coexist at the high level along with the logic-based module to better inform decisions under uncertainty (as in Sridharan et al., 2019).

Various kinds of symbolic domain knowledge may be incorporated into HRL frameworks in order to leverage both learning and high-level reasoning: high-level domain knowledge (e.g., with high-level transitions and mappings from low level to high level), high-level plans, task decomposition, or specific decision rules (e.g., for safety filtering). High-level domain knowledge may be described through symbolic logic-based or action languages such as PDDL or RDDL (Sanner, 2011), or via temporal logic (Camacho et al., 2019; Li et al., 2019).

Modularity

Modular approaches in HRL have been applied to decompose a possibly complex task domain into different regions of specialization, but also to multitask DRL, in order to reuse previously learned skills across tasks (Andreas et al., 2017; Shu et al., 2018; Wu et al., 2019a). Tasks may be annotated with handcrafted instructions (as “policy sketches” in Andreas et al., 2017), and symbolic subtasks may be associated with subpolicies, which a full task-specific policy aims to combine successfully. Distinctively, Shu et al. (2018) propose to train (or provide as a prior) a stochastic temporal grammar (STG) in order to capture temporal transitions between tasks; an STG encodes the priorities of sub-tasks over others and makes it possible to better learn how to switch between base or augmented subpolicies. The work in Wu et al. (2019a) seeks to learn a mixture of subpolicies by modeling the environment with a given set of (imperfect) models specialized by regions, referred to as model primitives. Each policy is specialized on those regions, and the weights in the mixture correspond to the posterior probability of a model given the current state. Echoing the hierarchical abstractions involved in human decision-making, such modularity and task decomposability (referred to earlier as high-level modularity) would arguably contribute to making decision-making more intelligible.
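The mixture weighting can be sketched as follows, assuming discrete actions and that each model primitive assigns a likelihood to the most recent transition, so that Bayes' rule gives the posterior weight of each primitive. The helper names and this particular likelihood choice are hypothetical; Wu et al. (2019a) may compute the posterior differently.

```python
from typing import Dict, List


def posterior_weights(priors: List[float], likelihoods: List[float]) -> List[float]:
    """Bayes' rule: the weight of primitive k is proportional to prior_k * likelihood_k."""
    joint = [p * lik for p, lik in zip(priors, likelihoods)]
    total = sum(joint)
    if total == 0.0:
        # No primitive explains the data: fall back to uniform weights.
        return [1.0 / len(priors)] * len(priors)
    return [j / total for j in joint]


def mixture_policy(weights: List[float], subpolicies: List[Dict[str, float]]) -> Dict[str, float]:
    """Mix the subpolicies' action distributions using the posterior weights."""
    mixed: Dict[str, float] = {}
    for w, policy in zip(weights, subpolicies):
        for action, prob in policy.items():
            mixed[action] = mixed.get(action, 0.0) + w * prob
    return mixed


# Two hypothetical primitives; the last transition is far more likely under the first one.
weights = posterior_weights([0.5, 0.5], [0.9, 0.1])
mixed = mixture_policy(weights, [{"left": 1.0}, {"right": 1.0}])  # about {"left": 0.9, "right": 0.1}
```

Because each weight is a posterior over a named primitive, one can read off which region-specific model is currently driving the behavior.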

Symbolic Planning

A specific line of work within HRL has emerged that tries to fuse symbolic planning (SP, Cimatti et al., 2008) with RL (SP+RL), to guide the agent’s task execution and learning (Leonetti et al., 2016). In classical SP, an agent uses a symbolic planner to generate a sequence of symbolic actions (a plan) based on its symbolic knowledge. Yet, this pre-defined notion of planning seems unfit for most RL or real-world domains, which present both domain uncertainties and execution failures. Recent work therefore usually interleaves RL and SP, sending feedback signals to the planner in order to handle such scenarios. Planning agents often carry prior knowledge of the high-level dynamics, which is typically hand-designed and assumed to be consistent with the low-level environment.

Framing SP in the context of automatic option discovery, PEORL (Yang et al., 2018a) uses a constraint answer set solver to generate a symbolic plan, which is then turned into a sequence of options to guide reward-based learning. Unlike earlier work in SP+RL (e.g., Leonetti et al., 2016), RL is intertwined with SP, so that more suitable options can be selected. Some work has extended PEORL, with two planning-RL loops for more robust and adaptive task-motion planning (Jiang et al., 2018), or with an additional meta-controller in charge of subtask evaluation that proposes new intrinsic goals to the planner (Lyu et al., 2019). Recent work (Jin et al., 2022) has started to address the learning of action models within the RL loop.
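The interleaving of planning and learning can be sketched with a small uniform-cost planner over STRIPS-style symbolic actions, each action naming an option. This is not the answer set solver used in PEORL: search is a stand-in, and the domain, the costs and the feedback rule (raising the cost of an option that proved expensive) are hypothetical. The point is only that RL feedback on option costs can change the next plan.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional, Tuple


@dataclass(frozen=True)
class SymbolicAction:
    """STRIPS-style action; each name is assumed to index an option (a learned sub-policy)."""
    name: str
    pre: FrozenSet[str]     # facts that must hold
    add: FrozenSet[str]     # facts made true
    delete: FrozenSet[str]  # facts made false


def plan(start: FrozenSet[str], goal: FrozenSet[str],
         actions: List[SymbolicAction], cost: Dict[str, float]) -> Optional[List[str]]:
    """Uniform-cost search for the cheapest sequence of symbolic actions reaching the goal."""
    frontier: List[Tuple[float, int, FrozenSet[str], List[str]]] = [(0.0, 0, start, [])]
    best = {start: 0.0}
    counter = 1  # unique tie-breaker so the heap never compares frozensets
    while frontier:
        g, _, state, steps = heapq.heappop(frontier)
        if goal <= state:
            return steps
        if g > best.get(state, float("inf")):
            continue  # stale queue entry
        for a in actions:
            if a.pre <= state:
                nxt = (state - a.delete) | a.add
                ng = g + cost[a.name]
                if ng < best.get(nxt, float("inf")):
                    best[nxt] = ng
                    heapq.heappush(frontier, (ng, counter, nxt, steps + [a.name]))
                    counter += 1
    return None


acts = [
    SymbolicAction("go_via_hall", frozenset({"at_door"}), frozenset({"in_room"}), frozenset({"at_door"})),
    SymbolicAction("go_via_garden", frozenset({"at_door"}), frozenset({"in_room"}), frozenset({"at_door"})),
    SymbolicAction("pick_key", frozenset({"in_room"}), frozenset({"has_key"}), frozenset()),
]
cost = {"go_via_hall": 1.0, "go_via_garden": 2.0, "pick_key": 1.0}
start, goal = frozenset({"at_door"}), frozenset({"has_key"})
first_plan = plan(start, goal, acts, cost)   # ['go_via_hall', 'pick_key']
cost["go_via_hall"] = 5.0  # hypothetical feedback: executing this option proved costly
second_plan = plan(start, goal, acts, cost)  # ['go_via_garden', 'pick_key']
```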

Declarative Domain Knowledge

Declarative and common sense knowledge has been incorporated into RL frameworks in diverse ways to guide exploration, such as filtering out unreasonable or risky actions with a finite-state automaton (Li et al., 2019) or with high-level rules (Zhang et al., 2019). In contrast, Furelos-Blanco et al. (2021) propose to learn a finite-state automaton for the higher level with an ILP method and to solve the lower level with an RL method. There, the automaton is used to generate subgoals for the lower level. A deeper integration of knowledge representation and reasoning with model-based RL has been advocated in Lu et al. (2018), where the learned dynamics are fed into the logical-probabilistic reasoning module to help it select a task for the controller. In a different direction, exploiting declarative knowledge to construct action sequences can also help reward shaping find the optimal policy, as a few studies (Camacho et al., 2019; Grzes & Kudenko, 2008) have demonstrated. We refer to the survey by Zhang and Sridharan (2020) for further examples of studies in both probabilistic planning and RL that aim to reason with declarative domain knowledge.
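Action filtering with a finite-state automaton can be sketched as follows. The automaton encodes a hypothetical rule (a gripper must be closed before lifting) as a transition table in which missing (state, action) pairs are forbidden; the greedy choice over Q-values is then restricted to permitted actions. The names and the rule are illustrative assumptions, not the constructions of the cited works.

```python
from typing import Dict, List, Tuple

# Hypothetical safety automaton: (automaton state, action) -> next automaton state.
# Any (state, action) pair missing from the table is forbidden.
Transitions = Dict[Tuple[str, str], str]


def allowed_actions(automaton: Transitions, q_state: str, actions: List[str]) -> List[str]:
    """Actions for which the automaton has a transition from its current state."""
    return [a for a in actions if (q_state, a) in automaton]


def filtered_greedy(q_values: Dict[str, float], automaton: Transitions, q_state: str) -> str:
    """Greedy action selection restricted to actions the automaton permits."""
    candidates = allowed_actions(automaton, q_state, list(q_values))
    if not candidates:
        raise ValueError(f"no permitted action in automaton state {q_state!r}")
    return max(candidates, key=lambda a: q_values[a])


# Rule (illustrative): the gripper must be closed before lifting.
automaton: Transitions = {
    ("open", "close"): "closed", ("open", "move"): "open",
    ("closed", "lift"): "closed", ("closed", "move"): "closed", ("closed", "release"): "open",
}
q_values = {"lift": 5.0, "close": 1.0, "move": 0.5}
a1 = filtered_greedy(q_values, automaton, "open")        # "close": "lift" is filtered out
next_q_state = automaton[("open", a1)]                   # "closed"
a2 = filtered_greedy(q_values, automaton, next_q_state)  # "lift"
```

The automaton state is advanced alongside the environment, so the filter itself stays a small, inspectable object.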

Discussion

Symbolic knowledge and reasoning elements have been consistently incorporated into (symbolic) planning or hierarchical decision-making, in models that may reach a certain high-level interpretability, albeit neglecting action-level interpretability. Moreover, most work still typically relies on manually crafted symbolic knowledge (or has to rely on a pretrained or jointly trained perception model for symbol grounding), assuming a pre-given symbolic structure hand-engineered by a human expert. Due to the similarities shared by the majority of discrete dynamic domains, some researchers (Lyu et al., 2019) have argued that laboriously crafting a symbolic model is not always necessary, as the symbolic formulation could adapt to different problems by instantiating new types of objects and a few additional rules for each new task; such a claim would still need to be backed by further work demonstrating this flexibility. Moreover, when adopting a symbolic or logical high-level framework, one also needs to face a new trade-off between the expressivity and the complexity of the symbolic representation.

5 Interpretable transition/preference models

In this section, we overview the work that focuses on exploiting an interpretable model of the environment or task. This model can take the form of a transition model (Sect. 5.1) or a preference model (Sect. 5.2). Such interpretable models can help an RL agent reason about its decision-making, but also help humans understand and explain it. As such, they can be used in an interpretable RL algorithm, but also in a post-hoc procedure to explain the agent’s decision-making. Note that these models may or may not be learned, and when they are not learned, they may or may not be fully provided to the RL agent. For instance, the reward function, which is one typical way of defining the preference model, is generally specified by the system designer in order to guide the RL agent to learn and perform a specific task. This function is usually not learned directly by the RL agent (except in inverse RL, Ng & Russell, 2000), but it still has to be intelligible in some sense: a non-intelligible reward function is hard to verify and may be incorrectly specified, in which case the agent’s decision-making may be based on spurious reasons and the agent may not accomplish the desired task.

5.1 Interpretable transition models

Interpretable transition models can help discover the structure and potential decomposition of a problem, which are useful for more data-efficient RL (e.g., via more effective exploration), and can also allow for greater generalizability and better transfer learning. The work discussed here either focuses solely on model learning or belongs to model-based RL. Various interpretable representations have been considered for learning transition models, such as decision trees or graphical models for probabilistic models, physics-based or graph-based models for deterministic models, or NNs with architectural inductive biases. The NN-based approaches, which are more recent, are presented separately to emphasize them. We provide an overview of all the methods for interpretable transition models in Table 4.

Probabilistic Models

While the setting of factored MDPs generally assumes that the structure is given, Degris et al. (2006) propose a general model-based RL approach that can learn both the structure of the environment and its dynamics. This method is instantiated with decision trees to represent the environment model. Statistical \(\chi ^2\) tests are used to decide on the structural decomposition.
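As an illustration of the kind of test involved, here is a minimal \(\chi^2\) independence test deciding whether a binary state variable at time \(t\) influences a binary variable at \(t+1\), which is the question behind splitting a tree node. It assumes binary variables, a 2×2 contingency table without continuity correction, and a hypothetical significance level of 0.05; Degris et al. (2006) may use a different setup. With one degree of freedom, the p-value of a statistic \(x\) has the closed form \(\mathrm{erfc}(\sqrt{x/2})\).

```python
import math
from typing import Sequence, Tuple


def chi2_2x2(pairs: Sequence[Tuple[int, int]]) -> Tuple[float, float]:
    """Chi-square independence test on binary (x, y) samples; returns (statistic, p-value)."""
    n = len(pairs)
    if n == 0:
        return 0.0, 1.0
    table = [[0, 0], [0, 0]]
    for x, y in pairs:
        table[x][y] += 1
    rows = [sum(table[i]) for i in range(2)]
    cols = [table[0][j] + table[1][j] for j in range(2)]
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            if expected > 0:
                stat += (table[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, P(chi2 > stat) = erfc(sqrt(stat / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))


def should_split(pairs: Sequence[Tuple[int, int]], alpha: float = 0.05) -> bool:
    """Split on x when independence between x and the next-state value y is rejected."""
    _, p_value = chi2_2x2(pairs)
    return p_value < alpha


dependent = [(0, 0)] * 20 + [(1, 1)] * 20             # y copies x: x matters
independent = [(0, 0), (0, 1), (1, 0), (1, 1)] * 10   # y ignores x
```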

Various approaches are based on generative models in the form of graphical models. In Metzen (2013), a graph-based representation of the transition model is learned in continuous domains. In Kansky et al. (2017), schema networks are proposed as generative models to represent the transition and reward models in a problem described in terms of entities (e.g., objects or pixels) and their attributes. Since schema networks are graphical models, the authors explain how to learn their structure and how to use them for planning via inference. The work in Kaiser et al. (2019) learns, via variational inference, an interpretable transition model by encoding high-level knowledge in the structure of a graphical model.

In the relational setting, a number of studies have explored the idea of learning a relational probabilistic model to represent the effects of actions (see Walsh, 2010 for a discussion of older work). In summary, those approaches are based either on batch learning (e.g., Pasula et al., 2007; Walker et al., 2008) or on online methods (e.g., Walsh, 2010), with or without guarantees, using more or less expressive relational languages. Some recent work (Martínez et al., 2016, 2017a) proposes to learn a relational probabilistic model via ILP and uses optimization to select the best planning operators.

Deterministic Models

In Diuk et al. (2008), an efficient model-based approach is proposed for an object-oriented representation of the world. This approach is extended by Marom and Rosman (2018) to deictic object-oriented representations, which use partially grounded predicates, in the KWIK framework (Walsh, 2010).

Alternatively, Scholz et al. (2014) explore the use of a physics engine as a parametric model for representing the deterministic dynamics of the environment. The parameters of this engine are learned by a Bayesian learning approach. Finally, the control problem is solved using the A* algorithm.

In a hierarchical setting, several recent studies have proposed to rely on search algorithms on state-space graphs or planning algorithms for the higher-level policy. Given a mapping from the state space to a set of binary high-level attributes, Zhang et al. (2018a) learn a model of the environment predicting if a low-level policy would successfully transition from an initial set of binary attributes to another set. The low-level policy observes the current state and the desired set of attributes to reach. Once the transition model and policy are learned, a planning module can be applied to reach the specified high-level goals. Thus, the high-level plan is interpretable, but the low-level policy is not.

Eysenbach et al. (2019) extract a state-space graph from a replay buffer and apply Dijkstra’s algorithm to find a shortest path to a goal. This graph represents the state space for the high level, while the low level is handled by a goal-conditioned policy. The approach is validated on navigation problems with high-dimensional inputs.
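The search-on-graph idea can be sketched as follows, assuming 2-D states and using Euclidean distance as a hypothetical stand-in for the learned distance estimate that would decide which replay-buffer states are connected. Dijkstra's algorithm then returns a sequence of waypoints, each of which would be handed to the goal-conditioned low-level policy.

```python
import heapq
import math
from typing import Dict, List, Optional, Sequence, Tuple

State = Tuple[float, float]
Graph = Dict[int, List[Tuple[int, float]]]


def build_graph(buffer: Sequence[State], max_edge: float) -> Graph:
    """Connect buffer states whose estimated distance is at most max_edge.

    Euclidean distance stands in for the learned distance estimate.
    """
    graph: Graph = {i: [] for i in range(len(buffer))}
    for i, a in enumerate(buffer):
        for j, b in enumerate(buffer):
            d = math.dist(a, b)
            if i != j and d <= max_edge:
                graph[i].append((j, d))
    return graph


def dijkstra(graph: Graph, source: int, target: int) -> Optional[List[int]]:
    """Shortest path from source to target as a list of node indices (waypoints)."""
    dist = {source: 0.0}
    prev: Dict[int, int] = {}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == target:
            path = [u]
            while u in prev:
                u = prev[u]
                path.append(u)
            return path[::-1]
        if d > dist.get(u, math.inf):
            continue  # stale heap entry
        for v, w in graph[u]:
            nd = d + w
            if nd < dist.get(v, math.inf):
                dist[v], prev[v] = nd, u
                heapq.heappush(heap, (nd, v))
    return None


buffer = [(0, 0), (1, 0), (2, 0), (2, 1), (0, 2)]  # hypothetical replay-buffer states
graph = build_graph(buffer, max_edge=1.0)
waypoints = dijkstra(graph, 0, 3)  # [0, 1, 2, 3]
```

The plan itself is a readable list of previously visited states, which is what makes the high level interpretable here.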

NN-based Model

Various recent proposals have tried to learn dynamics models using NNs with specific architectural inductive biases, taking graphs as inputs (Battaglia et al., 2016; Sanchez-Gonzalez et al., 2018). While this line of work can provide high-fidelity simulators, the learned models may lack interpretability.

The final set of work we would like to mention aims at learning object-based dynamics models from low-level inputs (e.g., frames) using NNs. In that sense, this work can be understood as extending the work in relational domains, where the input is generally high-level, to low-level inputs. Early work (Finn et al., 2016; Finn & Levine, 2017) tries to take moving objects into account. However, the model predicts pixels and does not take relations between objects into account, which limits its generalizability. Zhu et al. (2018) propose a novel NN, which can be trained in an unsupervised way, for object detection and object dynamics prediction conditioned on actions and object relations. This work has been extended to deal with multiple dynamic objects (Zhu et al., 2020).

Another work (Agnew & Domingos, 2018) proposes an unsupervised method called Object-Level Reinforcement Learner (OLRL), which detects objects from pixels and learns a compact object-level dynamics model. The method works as follows. Frames are first segmented into blobs of pixels, which are then tracked over time. An object is defined as a group of blobs with similar dynamics. The dynamics of those objects are then predicted with a gradient boosting decision tree.
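The first OLRL steps can be sketched on small integer frames. Segmentation into 4-connected regions of equal value, nearest-centroid tracking, and grouping by identical displacement are simplifying assumptions of this sketch (the helper names are hypothetical), and the gradient boosting dynamics model is not shown.

```python
from typing import Dict, List, Tuple

Frame = List[List[int]]
Point = Tuple[float, float]


def blobs(frame: Frame) -> List[Point]:
    """Centroids of 4-connected regions of equal non-zero value (0 is background)."""
    h, w = len(frame), len(frame[0])
    seen = [[False] * w for _ in range(h)]
    centroids: List[Point] = []
    for r in range(h):
        for c in range(w):
            if frame[r][c] == 0 or seen[r][c]:
                continue
            color, stack, cells = frame[r][c], [(r, c)], []
            seen[r][c] = True
            while stack:  # flood fill one blob
                y, x = stack.pop()
                cells.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < h and 0 <= nx < w and not seen[ny][nx] and frame[ny][nx] == color:
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            centroids.append((sum(y for y, _ in cells) / len(cells),
                              sum(x for _, x in cells) / len(cells)))
    return centroids


def group_by_motion(before: List[Point], after: List[Point]) -> Dict[Point, List[int]]:
    """Track each blob to its nearest centroid in the next frame, then group equal displacements."""
    groups: Dict[Point, List[int]] = {}
    for i, (y, x) in enumerate(before):
        ny, nx = min(after, key=lambda p: (p[0] - y) ** 2 + (p[1] - x) ** 2)
        groups.setdefault((ny - y, nx - x), []).append(i)
    return groups


f1 = [[1, 0, 0, 0, 2, 0, 0], [0] * 7, [0] * 7, [0, 0, 0, 0, 0, 0, 3]]
f2 = [[0, 1, 0, 0, 0, 2, 0], [0] * 7, [0] * 7, [0, 0, 0, 0, 0, 0, 3]]
objects = group_by_motion(blobs(f1), blobs(f2))
# {(0.0, 1.0): [0, 1], (0.0, 0.0): [2]}: blobs 0 and 1 move together, so they form one object
```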

In Veerapaneni et al. (2020), an end-to-end object-centric perception, prediction, and planning (OP3) framework is developed. The model has several jointly trained components: for (dynamic) entity grounding, for modeling the dynamics, and for modeling the observation distribution. The variable binding problem is treated as an inference problem: inferring the posterior distribution of the entity variables given a sequence of observations and actions. A further specificity of this work is that it models a scene not globally but locally, i.e., for each entity and its local interactions (locally-scoped entity-centric functions), avoiding the complexity of working with the full combinatorial space and enabling generalization to various configurations and numbers of objects.

Discussion

Diverse approaches have been considered for learning an interpretable transition model. They are designed to represent either deterministic or stochastic environments. Recent work is based on NNs in order to process high-dimensional inputs (e.g., images) and has adopted an object-centric approach. While NNs hinder the intelligibility of the method, the decomposition into object dynamics can help scale and add transparency to the transition model.

5.2 Interpretable preference models

In RL, the usual approach to describe the task to be learned or performed by an agent consists in defining suitable rewards, which is often a hard problem for the system designer. The difficulty of reward specification was recognized early on (Russell, 1998). Indeed, careless reward engineering can lead to undesired learned behavior (Randlov & Alstrom, 1998) and to value misalignment (Arnold et al., 2017). Different approaches have been proposed to circumvent or tackle this difficulty: imitation learning (or behavior cloning) (Osa et al., 2018; Pomerleau, 1989), inverse RL (Arora & Doshi, 2018; Ng & Russell, 2000), learning from human advice (Kunapuli et al., 2013; Maclin & Shavlik, 1996), or preference elicitation (Rothkopf & Dimitrakakis, 2011; Weng et al., 2013). Table 5 summarizes the related work for interpretable preference models. Note that some of the approaches in Sect. 5.1 apply here as well (e.g., Walker et al., 2008).

Closer to interpretable RL, Munzer et al. (2015) extend inverse RL to relational RL, while Martínez et al. (2017a) learn from demonstrations in relational domains by active learning. In Srinivasan and Doshi-Velez (2020), more interpretable rewards are learned using tree-structured representations (Bewley & Lecue, 2022; Yang et al., 2018b).

Recent work has started to investigate the use of temporal logic (or variants) to specify an RL task (Aksaray et al., 2016; Littman et al., 2017; Li et al., 2017a, 2019). Related to this direction, Kasenberg and Scheutz (2017) investigate the problem of learning, from demonstrations, an interpretable description of an RL task in the form of linear temporal logic (LTL) specifications.

In related work, Toro Icarte et al. (2018a) propose to specify and represent a reward function as a finite-state machine, called a reward machine, which makes the structure of the reward function explicit. Reward machines can be specified using inputs in a formal language, such as LTL (Camacho et al., 2019; Hasanbeig et al., 2020). The model has also been extended to stochastic reward machines (Corazza et al., 2022). Reward machines can also be learned by local search (Toro Icarte et al., 2019) or with various automata learning techniques (Gaon & Brafman, 2020; Xu et al., 2020). Inspired by reward machines, Illanes et al. (2020) propose the notion of taskable RL, where RL tasks can be described as symbolic plans.
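A reward machine can be sketched as a small transition table. This minimal version assumes that edges are labeled by single propositions emitted by a hypothetical labeling function and that the first matching proposition (in sorted order) fires; reward machines in the cited work allow propositional formulas on edges. The example task, getting coffee and then reaching the office, is illustrative.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Tuple


@dataclass
class RewardMachine:
    """Finite-state machine over propositions; each transition emits a reward."""
    initial: str
    delta: Dict[Tuple[str, str], Tuple[str, float]]  # (u, proposition) -> (next u, reward)
    terminal: FrozenSet[str] = frozenset()

    def step(self, u: str, labels: FrozenSet[str]) -> Tuple[str, float]:
        """Fire the first matching proposition (sorted order); otherwise stay in u with reward 0."""
        for prop in sorted(labels):
            if (u, prop) in self.delta:
                return self.delta[(u, prop)]
        return u, 0.0

    def done(self, u: str) -> bool:
        return u in self.terminal


# The propositions true at each step would come from a labeling function of the
# environment state (hypothetical here).
coffee_rm = RewardMachine(
    initial="u0",
    delta={("u0", "coffee"): ("u1", 0.0), ("u1", "office"): ("u_done", 1.0)},
    terminal=frozenset({"u_done"}),
)
u, total = coffee_rm.initial, 0.0
for labels in [frozenset(), frozenset({"office"}), frozenset({"coffee"}), frozenset({"office"})]:
    u, r = coffee_rm.step(u, labels)
    total += r
print(u, total)  # u_done 1.0  (the early visit to the office earns nothing)
```

An RL agent would then learn over pairs of environment state and machine state, so the task structure stays explicit rather than hidden in the reward signal.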

Using a different approach, Tasse et al. (2020) show how a Boolean task algebra, when such a structure holds for a problem, can be exploited to generate solutions to new tasks by task composition (Todorov, 2009). Such an approach, which was extended to the lifelong RL setting (Tasse et al., 2022), can arguably provide interpretability of the solutions thus obtained.
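The composition operators can be sketched on tabular extended value functions. As we understand Tasse et al. (2020), disjunction is an element-wise maximum, conjunction an element-wise minimum, and negation reflects a value function between the values of the maximal and minimal tasks. This sketch assumes the conditions of the algebra hold and uses hypothetical dictionary keys in place of (state, goal, action) triples.

```python
from typing import Dict, Hashable

# Tabular extended value function; keys stand in for (state, goal, action) triples.
QTable = Dict[Hashable, float]


def q_or(q1: QTable, q2: QTable) -> QTable:
    """Disjunction of tasks: element-wise maximum."""
    return {k: max(q1[k], q2[k]) for k in q1}


def q_and(q1: QTable, q2: QTable) -> QTable:
    """Conjunction of tasks: element-wise minimum."""
    return {k: min(q1[k], q2[k]) for k in q1}


def q_not(q: QTable, q_max: QTable, q_min: QTable) -> QTable:
    """Negation: reflect q between the values of the maximal and minimal tasks."""
    return {k: (q_max[k] + q_min[k]) - q[k] for k in q}


# Hypothetical values for two base tasks and for the maximal/minimal tasks.
q_blue = {"s,g1,a": 1.0, "s,g2,a": -1.0}
q_square = {"s,g1,a": -1.0, "s,g2,a": 1.0}
q_max = {"s,g1,a": 1.0, "s,g2,a": 1.0}
q_min = {"s,g1,a": -1.0, "s,g2,a": -1.0}
blue_or_square = q_or(q_blue, q_square)  # both goals now valued at 1.0
```

A composed solution is thus literally a Boolean expression over named base tasks, which is where the interpretability argument comes from.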

Discussion

As can be seen, research on interpretable preference representations has been less developed than for transition models. However, we believe that, when trying to understand the action selection of an RL agent, the interpretability of preference models is as important as, if not more important than, the interpretability of models describing the environment dynamics. Thus, more work is needed in this direction to obtain more transparent RL-based systems.

6 Interpretable decision-making

We now turn to the main part of this survey paper, which deals with the question of interpretable decision-making. Among the various approaches that have been explored to obtain interpretable policies (or value functions), we distinguish four main families (see Fig. 3). Interpretable policies can be learned directly (Sect. 6.1) or indirectly (Sect. 6.2), and in addition, in DRL, interpretability can also be enforced or favored at the architectural level (Sect. 6.3) or via regularization (Sect. 6.4).

6.1 Direct approaches

Work in the direct approach aims at directly searching for a policy in a policy space that the system designer chooses and accepts as interpretable. This work can be categorized according to its search space and the method used to search this space (Table 6). Several models have been considered in the literature:

Decision Trees

A decision tree is a directed acyclic graph whose nodes can be categorized into decision nodes and leaf nodes. It is interpretable by nature, but learning it can be computationally expensive. The decision nodes determine the path to follow in the tree until a leaf node is reached; this selection is mostly based on the state features. Decision trees can represent value functions or policies. For instance, some older work (Ernst et al., 2005) uses a decision tree to represent the Q-value function, where each leaf node represents the Q-value of an action in a state. Its optimization method is based on tree-based supervised learning methods, which do not rely on differentiability.
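A decision-tree policy is simple to represent and execute. The following minimal sketch assumes axis-aligned threshold tests and an action at each leaf; a Q-value tree would store action values in the leaves instead. The feature indices, thresholds and cart-pole-style reading are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence, Union


@dataclass
class Leaf:
    action: int  # a Q-value tree would store per-action values here instead


@dataclass
class Node:
    feature: int      # index of the state feature tested at this decision node
    threshold: float
    left: "Tree"      # followed when state[feature] <= threshold
    right: "Tree"     # followed otherwise


Tree = Union[Node, Leaf]


def act(tree: Tree, state: Sequence[float]) -> int:
    """Follow decision nodes from the root until a leaf is reached, then return its action."""
    while isinstance(tree, Node):
        tree = tree.left if state[tree.feature] <= tree.threshold else tree.right
    return tree.action


# Hypothetical policy over (position, velocity, angle, angular velocity):
# IF angle <= 0 AND angular velocity <= 0.5 THEN push left (0), ELSE push right (1).
policy = Node(feature=2, threshold=0.0,
              left=Node(feature=3, threshold=0.5, left=Leaf(0), right=Leaf(1)),
              right=Leaf(1))
```

Every decision can be traced as a short conjunction of feature tests, which is precisely the property that makes this policy class attractive.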

In contrast, Likmeta et al. (2020) propose to learn parameterized decision nodes. In this approach, the decision tree represents the policy instead of the value function, and each leaf represents the action to take. The structure of the tree is assumed to be given by experts. To update the tree parameters, policy gradient with parameter-based exploration is employed. Similarly, Silva et al. (2020) design a method to discretize differentiable decision trees such that policy gradient can be used during learning. Therefore, the whole structure of the tree can be learned. The analysis in Silva et al. (2020) also suggests that representing the policy, rather than the value function, with a decision tree is more beneficial. Expert or other prior knowledge may also bootstrap the learning process, as demonstrated by Silva and Gombolay (2020), where the policy tree is initialized from human-provided knowledge before being dynamically learned. In addition, Topin et al. (2021) introduce a method that defines a meta-MDP from a base MDP with additional actions, such that any policy in the meta-MDP can be transformed into a decision tree policy in the base MDP. In this way, the meta-MDP can be solved by classic DRL algorithms. Recently, Pace et al. (2022) proposed to learn a tree-based policy in the offline and partially observable setting.
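The idea behind differentiable decision trees can be sketched with a single node. In the soft version, a sigmoid of a weighted sum of features routes the state, so action probabilities are differentiable in the node parameters; the crisp version keeps only the feature with the largest absolute weight and a hard threshold, and takes the leaf's most probable action. This depth-one sketch, its temperature parameter and its names are hypothetical simplifications in the spirit of Silva et al. (2020), not their implementation.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def soft_node(state: Sequence[float], weights: Sequence[float], bias: float,
              left_probs: Sequence[float], right_probs: Sequence[float],
              temperature: float = 1.0) -> List[float]:
    """Depth-one differentiable tree: blend the two leaves' action distributions.

    p_left = sigmoid((w . s + b) / temperature); lower temperatures make routing sharper.
    """
    z = (sum(w * s for w, s in zip(weights, state)) + bias) / temperature
    p_left = sigmoid(z)
    return [p_left * l + (1.0 - p_left) * r for l, r in zip(left_probs, right_probs)]


def crisp_node(state: Sequence[float], weights: Sequence[float], bias: float,
               left_probs: Sequence[float], right_probs: Sequence[float]) -> int:
    """Discretized node: test only the feature with the largest |weight|, return the leaf's argmax action."""
    k = max(range(len(weights)), key=lambda i: abs(weights[i]))
    leaf = left_probs if weights[k] * state[k] + bias > 0 else right_probs
    return max(range(len(leaf)), key=lambda a: leaf[a])


w, b = [2.0, 0.1], -1.0
left, right = [0.9, 0.1], [0.2, 0.8]  # action distributions at the two leaves
soft = soft_node([1.0, 0.0], w, b, left, right)  # about [0.71, 0.29]
```

Dropping the smaller weights can change the routing of some states, which is why the crisp tree's performance may need to be checked after discretization.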

In a different approach, Gupta et al. (2015) propose to learn a binary decision tree where each leaf is itself a parametric policy. Linear Gibbs softmax policies are learned in discrete action spaces, while in continuous action spaces Gaussian distributions are learned. These parametric policies remain interpretable since their parameters are directly interpretable (probabilities for Gibbs softmax policies, mean and standard deviation for Gaussian distribution) and do not depend on states. Hence, the composition of the decision tree with the parametric policies is interpretable. Policy gradient is employed to update the parametric policies and to choose how the tree should grow.

Formulas

Maes et al. (2012a) represent the Q-value function (used to define a greedy policy) with a simple closed-form formula constructed from a pre-specified set of allowed components: binary operations (addition, subtraction, multiplication, division, minimum, and maximum), unary operations (square root, logarithm, absolute value, negation, and inverse), variables (components of states or actions, both described as vectors), and a fixed set of constants. Because of the combinatorial explosion, the total number of operators, constants, and variables occurring in a formula was limited to 6 in their experiments. To search this space, the authors formulate a multi-armed bandit problem and use a depth-limited search approach (Maes et al., 2012b).
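
The sketch below shows how a formula built from these components can serve as a Q-function for a greedy policy. The formula Q(s, a) = -|s + a| is hand-written for illustration and is not a result from Maes et al. The "protected" variants of division, square root, logarithm, and inverse are an assumption that keeps evaluation defined everywhere. The bandit-based search is not shown.

```python
import math

# Allowed components, mirroring the list in the text. The protected versions
# of div, sqrt, log and inv are an assumption to avoid math errors.
BINARY = {
    "add": lambda x, y: x + y,
    "sub": lambda x, y: x - y,
    "mul": lambda x, y: x * y,
    "div": lambda x, y: x / y if y != 0 else 0.0,
    "min": min,
    "max": max,
}
UNARY = {
    "sqrt": lambda x: math.sqrt(abs(x)),
    "log": lambda x: math.log(abs(x)) if x != 0 else 0.0,
    "abs": abs,
    "neg": lambda x: -x,
    "inv": lambda x: 1.0 / x if x != 0 else 0.0,
}


def evaluate(formula, env):
    """Evaluate a formula given as nested tuples, e.g. ("neg", ("abs", "s"))."""
    if isinstance(formula, str):
        return env[formula]          # variable
    if isinstance(formula, (int, float)):
        return float(formula)        # constant
    op, *args = formula
    if op in BINARY:
        return BINARY[op](evaluate(args[0], env), evaluate(args[1], env))
    return UNARY[op](evaluate(args[0], env))


def size(formula):
    """Number of operators, constants and variables occurring in the formula."""
    if not isinstance(formula, tuple):
        return 1
    return 1 + sum(size(arg) for arg in formula[1:])


def greedy_action(q_formula, s, actions):
    return max(actions, key=lambda a: evaluate(q_formula, {"s": s, "a": a}))


# Illustrative formula Q(s, a) = -|s + a| with 5 components (within the limit of 6).
q = ("neg", ("abs", ("add", "s", "a")))
print(size(q))                            # 5
print(greedy_action(q, 0.6, [-1, 0, 1]))  # -1
```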

In Hein et al. (2018, 2019), a formula is used to directly represent a policy. In this work, expressivity is improved by adding more operators (tanh, if, and, or) and allowing deeper formulas (a maximum depth of 5 and around 30 possible variables). Genetic programming is used to search for the formula when a batch of RL transitions is available.

A different approach is proposed in the context of traffic light control by Ault et al. (2020) who design a dedicated interpretable polynomial function where the parameters are learned by a variant of DQN. This function is then used similarly to a Q-value function to derive a policy.
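
The sketch below illustrates only the general idea; it does not reproduce Ault et al.'s features, dimensions, or training details. The Q-function is a degree-2 polynomial of a hypothetical two-dimensional state, with one coefficient vector per action. It is trained with the semi-gradient Q-learning update on which DQN variants build. Replay buffers and target networks are omitted.

```python
def poly_features(s):
    # Degree-2 polynomial features of a hypothetical 2-D state (e.g. two queue lengths).
    x1, x2 = s
    return [1.0, x1, x2, x1 * x2, x1 * x1, x2 * x2]


class PolyQ:
    """Q(s, a) = w[a] . phi(s): one readable coefficient per monomial and action."""

    def __init__(self, n_actions, n_features=6):
        self.w = [[0.0] * n_features for _ in range(n_actions)]

    def q(self, s, a):
        return sum(wi * fi for wi, fi in zip(self.w[a], poly_features(s)))

    def greedy(self, s):
        return max(range(len(self.w)), key=lambda a: self.q(s, a))

    def td_update(self, s, a, r, s_next, done=False, gamma=0.9, lr=0.01):
        # Q-learning target; DQN machinery (replay, target network) is omitted.
        bootstrap = 0.0 if done else gamma * max(self.q(s_next, b) for b in range(len(self.w)))
        delta = r + bootstrap - self.q(s, a)
        # Semi-gradient step: dQ(s, a)/dw[a] = phi(s) because Q is linear in w.
        for i, f in enumerate(poly_features(s)):
            self.w[a][i] += lr * delta * f
        return delta


qf = PolyQ(n_actions=2)
qf.td_update([1.0, 2.0], a=1, r=1.0, s_next=[0.0, 0.0], done=True, lr=0.1)
print(qf.w[1])                # coefficients of action 1 after one update
print(qf.greedy([1.0, 2.0]))  # 1
```

Because the function is linear in its coefficients, each learned weight can be inspected as the contribution of one monomial to the value of an action.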

Fuzzy controllers

Fuzzy controllers define a policy as a set of fuzzy rules of the form “\(\text {IF } fuzzy\_condition(state) \text { DO } action\).” Akrour et al. (2019) assume that states are assigned to a finite number of clusters through a fuzzy membership function. Then \(fuzzy\_condition(state)\) is defined as a distance to a centroid. The policy is a Gaussian distribution whose mean combines the means associated with the centroids: the closer a state is to a centroid, the more the mean associated with that centroid contributes to the overall mean. The mean associated with each cluster is learned via policy gradient given a non-interpretable critic. Similarly, Hein et al. (2017) learn fuzzy rules for deterministic policies with particle swarm optimization in continuous action domains. In both approaches, the number of rules (and clusters) is adapted automatically.
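
The sketch below computes the policy mean under an assumption: memberships are Gaussian-shaped and normalized over clusters, which is an illustrative choice, and Akrour et al. may use a different membership function. Each cluster pairs a centroid with an action mean. The policy mean is the membership-weighted average of these means, so each rule "IF the state is close to centroid k DO an action near mean k" is blended with the others. The centroids and means are hypothetical, and the policy-gradient training is not shown.

```python
import math
import random


def memberships(state, centroids, bandwidth=1.0):
    # Normalized fuzzy memberships (assumed form): softmax of negative squared
    # distances, computed stably by subtracting the largest logit.
    logits = [-sum((s - c) ** 2 for s, c in zip(state, cen)) / (2.0 * bandwidth ** 2)
              for cen in centroids]
    m = max(logits)
    raw = [math.exp(lg - m) for lg in logits]
    z = sum(raw)
    return [r / z for r in raw]


def policy_mean(state, centroids, means, bandwidth=1.0):
    # Rule k reads: IF state is close to centroids[k] DO action near means[k].
    weights = memberships(state, centroids, bandwidth)
    return sum(w * mu for w, mu in zip(weights, means))


def sample_action(state, centroids, means, std, rng, bandwidth=1.0):
    # Gaussian policy around the membership-weighted mean.
    return rng.gauss(policy_mean(state, centroids, means, bandwidth), std)


centroids = [[0.0], [1.0]]  # hypothetical cluster centroids
means = [-1.0, 1.0]         # hypothetical action mean per cluster
print(policy_mean([0.5], centroids, means))  # 0.0 (equidistant from both centroids)
print(policy_mean([0.0], centroids, means))  # negative: closer to the first centroid
print(sample_action([0.0], centroids, means, std=0.1, rng=random.Random(0)))
```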

Logic Rules

Neural Logic Reinforcement Learning (NLRL; Jiang & Luo, 2019) aims at representing policies by first-order logic. NLRL combines policy gradient methods with a new differentiable ILP architecture adapted from Evans and Grefenstette (2018). All possible rules are generated from expert-designed rule templates. To represent the importance of rules in the deduction, a weight is associated with each rule. As all rules are applied with a softmax over their weights, the resulting predicate takes its value in the continuous interval [0, 1] during learning. This approach is able to generalize to domains with more objects than it was trained on, but computing all the applications of all possible rules during training is costly.
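
The following sketch shows why the valuation stays in [0, 1]. It is a simplification in the spirit of differentiable ILP, not the NLRL architecture. Two hypothetical candidate rules for a single head atom are evaluated with a product t-norm. Their results are then mixed by a softmax over rule weights, and the highest-weighted rule is read off as the symbolic rule. The atoms, valuations, and weights are invented for illustration.

```python
import math


def softmax(ws):
    m = max(ws)
    e = [math.exp(w - m) for w in ws]
    z = sum(e)
    return [x / z for x in e]


# Fuzzy valuations in [0, 1] of ground atoms (hypothetical blocks-world facts).
valuation = {"on(a,b)": 1.0, "top(a)": 1.0, "on(b,floor)": 1.0, "top(b)": 0.0}

# Hypothetical candidate bodies for the head move(a,floor), as if generated from
# a template allowing two atoms per body.
candidate_bodies = [("top(a)", "on(a,b)"), ("top(b)", "on(b,floor)")]


def body_value(body):
    # Product t-norm: a differentiable conjunction of the two body atoms.
    x, y = body
    return valuation[x] * valuation[y]


def head_value(rule_weights):
    # Softmax-weighted combination of the rules, hence a value in [0, 1].
    probs = softmax(rule_weights)
    return sum(p * body_value(b) for p, b in zip(probs, candidate_bodies))


def extract_rule(rule_weights):
    # After training, the highest-weighted rule is read off as the symbolic rule.
    k = max(range(len(rule_weights)), key=rule_weights.__getitem__)
    return "move(a,floor) :- " + ", ".join(candidate_bodies[k])


print(head_value([0.0, 0.0]))    # 0.5
print(head_value([5.0, 0.0]))    # close to 1
print(extract_rule([5.0, 0.0]))  # move(a,floor) :- top(a), on(a,b)
```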

In the previous approach, to limit the number of possible rules, the templates are generally formulated such that the number of atoms in the body of a rule is restricted to two. To overcome this limitation, Payani and Fekri (2019a, 2019b) design an alternative model that enforces formulas to be in disjunctive normal form, where weights are associated with atoms (instead of rules) in a clause, and extend it to RL (Payani & Fekri, 2020). Zimmer et al. (2021) also associate weights with atoms, but adapt the architecture proposed by Dong et al. (2019) to enforce interpretability, relying on a Gumbel-Softmax distribution to select the arguments of a predicate. This approach can be more interpretable than previous similar work, since it can learn a logic program instead of a weighted combination of logic formulas. Recently, alternative approaches (Delfosse et al., 2023; Glanois et al., 2022) have been explored.
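
Atom-level weights can be sketched with soft conjunction and disjunction operators in the spirit of Payani and Fekri's DNF model; their exact parameterization may differ. A membership \(m_i = \mathrm{sigmoid}(w_i)\) decides whether atom \(i\) takes part in a clause. A conjunction then computes \(\prod_i (1 - m_i(1 - x_i))\), and a disjunction computes \(1 - \prod_i (1 - m_i y_i)\). Thresholding the memberships turns the weights into a readable formula. The atoms and weights are hypothetical.

```python
import math


def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def soft_and(x, weights):
    # Factor i is ~1 when atom i is left out (m_i ~ 0) and ~x_i when kept (m_i ~ 1).
    out = 1.0
    for xi, wi in zip(x, weights):
        out *= 1.0 - sigmoid(wi) * (1.0 - xi)
    return out


def soft_or(y, weights):
    # 1 - prod(1 - m_i * y_i): true as soon as one kept clause is true.
    out = 1.0
    for yi, wi in zip(y, weights):
        out *= 1.0 - sigmoid(wi) * yi
    return 1.0 - out


def dnf(x, conj_weights, disj_weights):
    clauses = [soft_and(x, w) for w in conj_weights]
    return soft_or(clauses, disj_weights)


def read_formula(atom_names, conj_weights, disj_weights):
    # Keep atoms and clauses whose membership sigmoid(w) exceeds 0.5, i.e. w > 0.
    terms = []
    for w_clause, w_d in zip(conj_weights, disj_weights):
        if w_d > 0:
            atoms = [n for n, w in zip(atom_names, w_clause) if w > 0]
            terms.append(" AND ".join(atoms) if atoms else "TRUE")
    return " OR ".join(terms) if terms else "FALSE"


# Hypothetical trained weights over atoms p, q, r and two clauses.
conj_w = [[10.0, 10.0, -10.0], [-10.0, -10.0, 10.0]]
disj_w = [10.0, -10.0]
print(read_formula(["p", "q", "r"], conj_w, disj_w))  # p AND q
print(dnf([1.0, 1.0, 0.0], conj_w, disj_w) > 0.99)    # True
```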

Alternatively, Yang and Song (2019) propose a differentiable ILP method extending multi-hop reasoning (Lao & Cohen, 2010; Yang et al., 2017). Instead of performing forward chaining on predefined templates, weights are associated with every possible relational path, where a path corresponds to a multi-step chain-like logic formula. Compared to previous work, this method is less expressive, since it cannot represent full Horn clauses, but it scales better. This approach is extended by Ma et al. (2020) to the RL setting.
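
Relation matrices make the path-based view concrete, as in multi-hop reasoning. If each relation is a 0/1 adjacency matrix over entities, a chain-like formula such as grandparent(X, Z) ← parent(X, Y) ∧ parent(Y, Z) corresponds to a matrix product. A weighted sum over candidate paths then scores a query. The entities, relation, and weights below are hypothetical; in a differentiable method, the weights would be learned by gradient descent.

```python
def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def path_matrix(relations, path):
    # A chain R1(X, Y1), R2(Y1, Y2), ... corresponds to the product M_R1 M_R2 ...
    M = relations[path[0]]
    for r in path[1:]:
        M = matmul(M, relations[r])
    return M


def score(relations, weighted_paths, x, z):
    # Weighted sum, over candidate chains, of the number of x -> z paths following each chain.
    return sum(w * path_matrix(relations, path)[x][z] for w, path in weighted_paths)


# Hypothetical entities: 0 = alice, 1 = bob, 2 = carol.
relations = {"parent": [[0, 1, 0], [0, 0, 1], [0, 0, 0]]}
# Hypothetical learned weights for candidate rules of grandparent(X, Z).
weighted_paths = [(0.9, ("parent", "parent")), (0.1, ("parent",))]
print(score(relations, weighted_paths, 0, 2))  # 0.9: alice -> bob -> carol
```

Enumerating paths instead of arbitrary rule bodies is what restricts expressivity to chain-like formulas while keeping the computation to matrix products.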

Programs

Verma et al. (2019) propose a novel approach to directly learn a policy written as a program. Their approach can be seen as inspired by (constrained functional) mirror descent. Their algorithm iteratively updates the current policy with a gradient step in a continuous policy space that mixes neural and programmatic representations, then projects the resulting policy onto the space of programmatic policies via imitation learning. This approach is extended by Anderson et al. (2020) to safe RL, using formal verification to avoid unsafe states during exploration. Recently, Qiu and Zhu (2022) proposed a differentiable method to learn a programmatic policy, while Cao et al. (2022) proposed a framework to synthesize hierarchical and cause-effect logic programs.
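
The update-then-project structure can be sketched on a toy problem. The simplifying assumptions below are ours, not Verma et al.'s:

- programs are restricted to the sketch "a = c0 + c1 * s";
- an unrestricted table of per-state actions stands in for the neural component;
- the cost is a squared distance to a hypothetical reference action rather than an RL objective;
- projection is least-squares imitation.

With a Euclidean geometry, this is the simplest instance of a projected functional gradient step.

```python
def fit_linear(xs, ys):
    # Projection: least-squares imitation within programs "a = c0 + c1 * s".
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    c1 = sxy / sxx
    return (my - c1 * mx, c1)


def program(theta, s):
    c0, c1 = theta
    return c0 + c1 * s


def reference_action(s):
    # Hypothetical ideal action, used only to define a differentiable cost.
    return 2.0 * s + 1.0


def update_then_project(states, iters=50, lr=0.25):
    theta = (0.0, 0.0)
    for _ in range(iters):
        # Update: start from the program's actions and take a gradient step on
        # (a - reference)^2 in an unrestricted per-state action table, which
        # plays the role of the neural component in this toy example.
        actions = [program(theta, s) for s in states]
        actions = [a - lr * 2.0 * (a - reference_action(s)) for a, s in zip(actions, states)]
        # Project: imitate the updated actions with a programmatic policy.
        theta = fit_linear(states, actions)
    return theta


states = [i / 10 for i in range(11)]
print(update_then_project(states))  # approaches (1.0, 2.0)
```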

Graphical Models

Most of the previously discussed work uses a deterministic interpretable representation. However, graphical models can also be considered interpretable. For instance, in the context of autonomous driving, Chen et al. (2020) solve the corresponding DRL problem as a probabilistic inference problem (Levine, 2018): for both the RL model and policy, they learn graphical models with hidden states, which are trained to be interpretable by enforcing semantic meanings available at training time. The drawback of this approach is that it only provides interpretability for the learned latent space.

Discussion

Using the direct approach to find interpretable policies is hard since we must be able to solve two potentially conflicting problems at the same time: (1) finding a good policy for the given (PO)MDP and (2) keeping that policy interpretable. These two objectives may become contradictory when the RL problems are large, resulting in a scalability issue with the direct approach. Most work learning a fully interpretable policy focuses only on small toy problems.

The direct approach is related to discrete optimization, where the objective function is not differentiable, which makes searching for a policy in such a space very difficult. Another limitation of these approaches is their poor robustness to noise. To overcome these issues, several approaches use a continuous relaxation to make the objective function differentiable (i.e., to search in a smoother space) and more robust to noise, but the scalability issue remains open.

6.2 Indirect approach

In contrast to the direct approach, the indirect approach follows two steps: first, train a non-interpretable policy with any efficient RL algorithm; then, transfer this trained policy to an interpretable one. Thus, this approach is related to imitation learning (Hussein et al., 2017) and policy distillation (Rusu et al., 2016). Note that a similar two-step approach can be found in post-hoc explainability for RL. However, a key difference concerns how the obtained interpretable policy is used, either as a final controller or as a policy that explains a black-box controller, which leads to different considerations about how to learn and evaluate such interpretable policies (see Sect. 7). Just as RL algorithms can be subdivided into value-based and policy-based methods, the indirect approach may aim to obtain an interpretable representation of either a learned Q-value function (which implicitly represents a policy) or a learned policy (often called oracle), although most work focuses on the latter case. Regarding the types of interpretable policies, decision trees (or variants) are often chosen due to their interpretability, although other representations such as programs have also been considered.
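
The two steps can be sketched in a minimal form, assuming step 1 has already produced a black-box policy; a hypothetical hand-written function replaces it here. The teacher labels a set of states, and a depth-1 decision tree is fitted to these labels by minimizing disagreement. The student is then evaluated by its fidelity, i.e., the fraction of states where it agrees with the teacher. Real methods use deeper trees and choose the labeled states more carefully.

```python
from collections import Counter


def teacher_policy(s):
    # Stand-in for a trained black-box policy (hypothetical).
    return 1 if s[0] > 0.35 else 0


def majority(labels):
    return Counter(labels).most_common(1)[0][0] if labels else 0


def fit_stump(states, actions):
    """Depth-1 decision tree minimizing disagreement with the teacher's actions."""
    best = None
    for f in range(len(states[0])):
        for t in sorted({s[f] for s in states}):
            left = [a for s, a in zip(states, actions) if s[f] <= t]
            right = [a for s, a in zip(states, actions) if s[f] > t]
            la, ra = majority(left), majority(right)
            err = sum(a != la for a in left) + sum(a != ra for a in right)
            if best is None or err < best[0]:
                best = (err, f, t, la, ra)
    _, f, t, la, ra = best
    return (f, t, la, ra)


def stump_act(stump, s):
    f, t, la, ra = stump
    return la if s[f] <= t else ra


# Step 1 is assumed done (teacher trained); step 2: label states, fit the student.
states = [(i / 10, j / 10) for i in range(11) for j in range(11)]
labels = [teacher_policy(s) for s in states]
stump = fit_stump(states, labels)
fidelity = sum(stump_act(stump, s) == a for s, a in zip(states, labels)) / len(states)
print(stump, fidelity)  # (0, 0.3, 0, 1) 1.0
```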

Decision Trees and Variants

The work of Liu et al. (2018) is representative of value-based methods using decision trees. The authors introduce Linear Model U-Trees (LMUTs) to approximate Q-functions estimated by NNs in DRL. LMUTs are based on U-Trees (Maes et al., 1996), a tree-structured representation specifically designed to approximate a value function. A U-Tree, whose structure and parameters are learned online, can be viewed as a compact decision tree where each arc corresponds to the selection of a feature of a current or past observation, and each path from the root to a leaf represents a cluster of observation histories with the same Q-values. LMUTs extend U-Trees by having a linear model in each leaf, trained by stochastic gradient descent. Although an LMUT is undoubtedly more interpretable than a NN, it shows its limits when dealing with high-dimensional feature spaces (e.g., images). In Liu et al. (2018), rule extraction and super-pixels (Ribeiro et al., 2016b) are used to explain the decision-making of the resulting LMUT-based agent.
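
The leaf models of an LMUT can be sketched as follows, as a simplification under stated assumptions. The split is fixed by hand, so the U-Tree split search and the use of observation histories are omitted. Only a single action's Q-values are approximated. The teacher Q-function is a hypothetical piecewise-linear stand-in for a trained network. Each leaf's linear model is fitted by SGD, and its coefficients can then be read directly.

```python
class LinearLeaf:
    """Leaf of a model tree: a linear model w . x + b trained by SGD."""

    def __init__(self, n_features):
        self.w = [0.0] * n_features
        self.b = 0.0

    def predict(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def sgd_step(self, x, target, lr):
        err = self.predict(x) - target  # gradient of 0.5 * err**2 w.r.t. the prediction
        self.w = [wi - lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= lr * err


class OneSplitModelTree:
    """Hand-fixed split on feature 0 (assumption); U-Tree split search omitted."""

    def __init__(self, threshold, n_features):
        self.threshold = threshold
        self.left, self.right = LinearLeaf(n_features), LinearLeaf(n_features)

    def leaf(self, x):
        return self.left if x[0] <= self.threshold else self.right

    def predict(self, x):
        return self.leaf(x).predict(x)

    def fit(self, xs, targets, epochs=500, lr=0.1):
        for _ in range(epochs):
            for x, t in zip(xs, targets):
                self.leaf(x).sgd_step(x, t, lr)


def teacher_q(x):
    # Hypothetical Q-values of one action, as if produced by a trained network.
    return 2.0 * x[0] if x[0] <= 0.0 else 1.0 - x[0]


xs = [[-1.0 + 0.1 * i] for i in range(21)]
tree = OneSplitModelTree(threshold=0.0, n_features=1)
tree.fit(xs, [teacher_q(x) for x in xs])
print(tree.left.w, tree.left.b, tree.right.w, tree.right.b)  # about [2.0] 0.0 [-1.0] 1.0
```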

Many policy-based methods propose to learn a decision tree policy. The difficulty of this approach is that a high-fidelity policy may require a large decision tree. To overcome this difficulty, Bastani et al. (2018) present a method called VIPER that builds on DAgger (Ross et al., 2011), a state-of-the-art imitation learning algorithm, but exploits the available learned Q-function. The authors show that their method can achieve performance comparable to the original non-interpretable policy, and that the resulting tree is amenable to verification. As an alternative way to control the decision tree size, Roth et al. (2019) propose to increase its size only if the new decision tree sufficiently increases performance. To improve on methods like VIPER that use a single decision tree, Vasic et al. (2019) use a Mixture of Expert Trees (MOET). The approach is based on a gating function that partitions the state space; within each partition, a decision tree expert (learned via VIPER) approximates the policy.
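
VIPER's use of the Q-function can be sketched through its sample weighting, assuming the oracle is greedy so that its value is \(\max_a Q(s,a)\). Each state receives the weight \(\max_a Q(s,a) - \min_a Q(s,a)\), and the training set for the tree is resampled proportionally to these weights. The DAgger-style data aggregation and the tree fitting itself are omitted, and the Q-values below are hypothetical.

```python
import random


def viper_weights(q_values):
    # l(s) = max_a Q(s, a) - min_a Q(s, a): states where a wrong action is
    # costly receive more weight (assumes a greedy oracle).
    return [max(q) - min(q) for q in q_values]


def resample(states, oracle_actions, q_values, n, rng):
    w = viper_weights(q_values)
    if sum(w) == 0:
        w = [1.0] * len(w)  # all actions equally good everywhere: fall back to uniform
    idx = rng.choices(range(len(states)), weights=w, k=n)
    return [states[i] for i in idx], [oracle_actions[i] for i in idx]


states = ["s0", "s1", "s2"]
oracle_actions = [0, 1, 0]
q_values = [[1.0, 1.0], [0.0, 5.0], [2.0, 1.0]]  # hypothetical oracle Q-values
print(viper_weights(q_values))  # [0.0, 5.0, 1.0]
S, A = resample(states, oracle_actions, q_values, n=6, rng=random.Random(0))
print(S, A)  # "s0" is never drawn because its weight is 0
```

The weighting focuses the limited capacity of a small tree on the states where imitation errors matter most.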

For completeness, we mention a few other relevant studies, mostly based on imitation learning: Natarajan et al. (2011) learn a set of relational regression trees in relational domains by functional gradient boosting; Cichosz and Pawełczak (2014) learn decision tree policies for car driving; Nageshrao et al. (2019) extract a set of fuzzy rules from a neural oracle.

Programs

As an alternative to decision trees, Verma et al. (2018) introduce Programmatically Interpretable RL (PIRL) to generate policies represented in a high-level, domain-specific programming language. To find a program that can reproduce the performance of a neural oracle, they propose a new method, Neurally Directed Program Search (NDPS). NDPS performs a local search over the non-smooth space of programmatic policies in order to minimize a distance from this neural oracle, computed over a set of adaptively chosen inputs. To restrict the search space, a policy sketch is assumed to be given. Unlike the imitation learning setting, where the goal is to match the expert demonstrations perfectly, a key feature of NDPS is that the expert trajectories only guide the local search in the program space toward a good policy.
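
A guided local search of this kind can be sketched under simplifying assumptions. The policy sketch has a single numeric hole (a hypothetical proportional controller), and the neural oracle is a hand-written stand-in. The inputs form a fixed grid rather than being adaptively chosen. The search is plain hill climbing with a shrinking step, not the exact NDPS procedure.

```python
import math


def oracle(s):
    # Stand-in for a trained neural policy (hypothetical).
    return math.tanh(1.5 * (0.5 - s))


def sketch(k, s):
    # Hypothetical policy sketch with one hole k: a proportional controller around 0.5.
    return k * (0.5 - s)


def distance(k, inputs):
    return sum((sketch(k, s) - oracle(s)) ** 2 for s in inputs) / len(inputs)


def local_search(inputs, k=0.0, step=1.0, min_step=1e-4):
    # Hill climbing on the hole; the step shrinks when no neighbor improves.
    best = distance(k, inputs)
    while step > min_step:
        for cand in (k + step, k - step):
            d = distance(cand, inputs)
            if d < best:
                k, best = cand, d
                break
        else:
            step /= 2.0
    return k, best


inputs = [i / 10 for i in range(11)]  # NDPS chooses inputs adaptively; fixed here
k, d = local_search(inputs)
print(round(k, 2), d)  # k close to the least-squares slope, about 1.33
```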

Zhu et al. (2019) also propose a search technique to find a program that mimics a trained NN policy, for verification and shielding (Alshiekh et al., 2018). The novelty of their approach is to exploit information about safe states, which are assumed to be given. If a generated program is found to be unsafe from an initial state, this information is used to guide the generation of subsequent programs.
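
The shielding pattern itself can be sketched as follows. This is the generic idea, not Zhu et al.'s synthesis or verification procedure. The dynamics, safe set, and both policies are hypothetical, and the program is simply assumed to be verified safe.

```python
def shielded_action(state, neural_policy, program_policy, step, is_safe):
    # Keep the neural action if its predicted successor stays in the safe set;
    # otherwise fall back to the programmatic policy (assumed verified safe).
    action = neural_policy(state)
    return action if is_safe(step(state, action)) else program_policy(state)


# Hypothetical 1-D system x' = x + a whose safe set is |x| <= 1.
def step(x, a):
    return x + a


def is_safe(x):
    return abs(x) <= 1.0


def neural_policy(x):
    return 0.8  # aggressive learned behavior (hypothetical)


def program_policy(x):
    return -0.5 * x  # simple program, assumed verified safe (hypothetical)


print(shielded_action(0.0, neural_policy, program_policy, step, is_safe))  # 0.8
print(shielded_action(0.5, neural_policy, program_policy, step, is_safe))  # -0.25
```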

In Burke et al. (2019), a method is proposed to learn a program from demonstration for robotics tasks that are solvable by applying a sequence of low-level proportional controllers. In a first step, the method fits a sequence of such controllers to a demonstration using a generative switching controller task model. This sequence is then clustered to generate a symbolic trace, which is then used to generate a programmatic representation by a program induction method.

Finally, although strictly speaking not a program, Koul et al. (2019) propose to extract from a trained recurrent NN policy a finite-state representation (i.e., Moore machine) that can approximate the trained policy and possibly match its performance by fine-tuning if needed. This representation is arguably more interpretable than the original NN.
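
Suppose the hidden states and observations have already been quantized into discrete codes, which is assumed here. Building the finite-state representation then amounts to collecting transitions and outputs from rollouts. The codes and rollout are hypothetical, and the minimization of the resulting machine is omitted.

```python
def extract_moore_machine(trajectories):
    """Build a Moore machine from discretized rollouts of a recurrent policy.

    Each step is (h_prev, obs, h, action): the transition (h_prev, obs) -> h and
    the output h -> action, where h and obs are already quantized codes.
    """
    delta, output = {}, {}
    for traj in trajectories:
        for h_prev, obs, h, action in traj:
            if delta.setdefault((h_prev, obs), h) != h:
                raise ValueError(f"non-deterministic transition from {(h_prev, obs)}")
            if output.setdefault(h, action) != action:
                raise ValueError(f"state {h!r} emits several actions")
    return delta, output


def run(delta, output, h0, observations):
    # Moore semantics: the emitted action depends only on the current state.
    h, actions = h0, []
    for obs in observations:
        h = delta[(h, obs)]
        actions.append(output[h])
    return actions


traj = [("h0", "x", "h1", 0), ("h1", "y", "h0", 1)]  # hypothetical quantized rollout
delta, output = extract_moore_machine([traj])
print(run(delta, output, "h0", ["x", "y", "x"]))  # [0, 1, 0]
```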

Discussion

As mentioned previously, the direct approach requires tackling two difficulties simultaneously: (1) solving the RL problem and (2) obtaining an interpretable policy. In contrast, the indirect approach avoids tackling both at once, at the cost of solving two consecutive (hopefully easier) problems: (1) solve the RL problem with any efficient RL algorithm, then (2) mimic the learned policy with an interpretable one by solving a supervised learning problem. Therefore, any imitation learning (Hussein et al., 2017) or policy distillation (Rusu et al., 2016) method could be applied to obtain an interpretable policy in the indirect approach. However, the indirect approach is more flexible than standard imitation learning because of the unrestricted access to (1) an already-trained expert policy using the same observation/action spaces and (2) its value function. The teacher-student framework (Torrey & Taylor, 2013) fits this setting particularly well. As such, it would be worthwhile to investigate the application of techniques proposed for this framework (e.g., Zimmer et al., 2014) to the indirect approach.

In addition, the work by Verma et al. (2019) seems to be a promising way to combine the direct and indirect approaches. While the authors show that their method outperforms NDPS, it is still not completely clear whether a direct or an indirect method should be preferred to learn good interpretable policies.

6.3 Architectural inductive bias

To favor interpretable decision-making, specific architectural choices may be adopted for the policy network or value function, be it through relational, logical, or attention-based biases; some examples are presented in Table 7.

Relational Inductive Bias