Abstract

Simple logistic regression can be adapted to deal with right-censoring by inverse probability of censoring weighting (IPCW). We here compare two such IPCW approaches, one based on weighting the outcome, the other based on weighting the estimating equations. We study the large sample properties of the two approaches and show that which of the two weighting methods is the most efficient depends on the censoring distribution. We show by theoretical computations that the methods can be surprisingly different in realistic settings. We further show how to use the two weighting approaches for logistic regression to estimate causal treatment effects, for both observational studies and randomized clinical trials (RCT). Several estimators for observational studies are compared and we present an application to registry data. We also revisit interesting robustness properties of logistic regression in the context of RCTs, with a particular focus on the IPCW weighting. We find that these robustness properties still hold when the censoring weights are correctly specified, but not necessarily otherwise.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

To handle right censored data when fitting logistic regression models, it has been suggested to weight the estimating equations, see. e.g. Zheng et al. (2006); Uno et al. (2007), or to weight the outcome, see e.g. Scheike et al. (2008). In this manuscript we review these two approaches and we study and compare their large sample properties. We further present their potential to estimate meaningful treatment effects in observational studies and randomized clinical trials.

The two weighting approaches can be used very similarly with or without competing risks, which is convenient. As pointed out by e.g. Schumacher et al. (2016), competing risks are very common in medical research. The two weighting approaches have the advantage that they directly extend the usual logistic regression method for binary data to both survival data and competing risks data.

Logistic regression is appealing both for its simplicity and its direct interpretation when the interest lies in estimating a risk or survival probability at a fixed time-point. We refer to this as a t-year risk or survival, in what follows. Unlike alternative methods based on hazards regression, the parameters of the model lead to a direct interpretation for the t-year risk or survival (probability) being modeled. The modeling assumptions are also easier to communicate and check, in our opinion. This is in contrast to hazard-based regression, where model parameters do not have a direct interpretation for the t-year risk or survival, and are commonly misunderstood by clinicians. This is true without competing risks (Sutradhar and Austin 2018), and even more so with competing risks. Standard approaches to model hazards involve structural assumptions, typically proportional hazards, whose consequences for the risk of interest are typically not clear. In addition, it can be challenging to assess the validity of these structural assumptions. Stensrud and Hernán (2020) also mentioned that treatment effects calculated from t-year risks are often more helpful for clinical decision-making and more easily understood by patients than hazard ratios.

The rest of the manuscript is organized as follows. In Sect. 2, we introduce the estimating equations to fit a logistic regression model and we study the large sample properties of the two different weighting approaches. To derive the large sample results, we use only “simple” arguments based on Taylor expansions and “standard” martingales theory (Aalen et al. 2008, Sec. 2.2). We avoid the use of projection arguments as in e.g. Tsiatis (2006), which we hope facilitates the understanding of the origins of the key results. Building on the comparison of the asymptotic properties of each weighting approach, in Sect. 2.8, we show that the two approaches sometimes have very different performances, in terms of efficiency. In Sect. 3, we further show how to use logistic regression to build G-computation (standardized) or double robust estimators to account for confounding in observational studies, when estimating treatment effects. Here we focus on the situation with a baseline treatment and baseline confounders. We do not cover the more complex settings with time-dependent treatment and/or time-dependent confounding. As compared to similar approaches building on hazard-based regression, as that of Ozenne et al. (2020), here logistic regression enables a direct parametrization of the outcome model. This can facilitate modeling choices and the discussion of their strengths and limitations. In Sect. 4, we present an application to Danish registry data (Holt et al. 2021), where we compared the 33-months risk of cardiovascular death among patients who have initiated a beta-blocker treatment to those who have not. In Sect. 5, we revisit interesting robustness properties of logistic regression fitted to randomized clinical trials (RCT) data. We find that these robustness properties still hold with the two weighting approaches, when the censoring weights are correctly specified. Finally, Sect. 6 presents a discussion.

2 Logistic regression with censored data

2.1 Observed data

We assume to observe \(({\widetilde{T}}_i,\Delta _i,\widetilde{\eta _i},{\varvec{L}}_i,A_i)\), \(i\!=\!1,\dots ,n\), i.i.d. copies of \(({\widetilde{T}},\Delta ,{\widetilde{\eta }},{\varvec{L}},A)\). Here T is the time-to-event with cause of death \(\eta \in \{ 1,2\}\), that is observed subject to right censoring. Specifically, because of a censoring time C, we observe \({\widetilde{T}}=\min (T,C)\), the right censored time to event, \(\Delta = 1\!\! 1 \{ T \le C \}\), the event (or non-censoring) indicator and the observed cause of death \(\widetilde{\eta }=\Delta \eta \). In addition to \(({\widetilde{T}},\Delta ,\widetilde{\eta })\), we also observe baseline covariates \({\varvec{L}}\) and a binary treatment group A.

In the worked example we consider in Sect. 4, T is the time from start of follow-up to death, with \(\eta \in \{ 1,2\}\) indicating either a cardiovascular death, when \(\eta =1\), or a non-cardiovascular death, when \(\eta =2\). The censoring time C is the time from start of follow-up to either administrative censoring at end of follow-up (e.g. 31 December 2018) or loss of follow-up due to other reasons (here only emigration), whichever comes first. The variable A indicates whether a patient has initiated a beta-blocker treatment (\(A=1\)) or not (\(A=0\)) within 3 months before start of follow-up. As follow-up starts 3 months after a myocardial infarction (MI), the variable A indicates whether a patient has initiated the treatment within 3 months after MI. Here \({\varvec{L}}\) denotes a vector of many baseline (pre-treatment) variables, such as age and diabetes history (see Sect. 4 for the full list).

Finally, note that the case without competing risk simply corresponds to the case where \(\eta =1\) with probability 1. In that case, the clinical interpretation of the statistical results will often be simpler. The fact that we assume only two competing events is also without loss of generality as all competing events can always be grouped in a single one.

2.2 Additional notations

Further, for a given time horizon t of interest (e.g. t=1 year) we also define the binary indicator of experiencing a main event within t-year as \(D(t)=1\!\! 1 \{ T\le t, \eta =1 \}\). Because of censoring, D(t) is not always fully observed, but we do observe \({\widetilde{D}}(t)=1\!\! 1 \{ {{\widetilde{T}}}\le t, {\widetilde{\eta }}=1 \}\). These two indicators are related via the equation \({\widetilde{D}}(t)=D(t)\cdot \Delta (t)\), where \(\Delta (t)=1\!\! 1 \{ t \wedge T \le C \}=1-1\!\! 1 \{ {\widetilde{T}}\le t \}\cdot (1-\Delta )\) is the indicator of not observing a censored time before t. We use the notation \(x \wedge y\) to indicate the minimun of x and y. To present technical results, we further introduce the notation \(\lambda _c(t,A,{\varvec{L}})\) for the hazard rate of C given the covariates \(({\varvec{L}},A)\) and we let \(M^c(t,A,{\varvec{L}})=1\!\! 1 \{ {\widetilde{T}}\le t, \Delta =0 \} - \int _0^tY(v)\lambda _c(v,A,{\varvec{L}})dv\) be the related standard martingale, where \(Y(v)=1\!\! 1 \{ {\widetilde{T}}\ge v \}\). When the hazard rate \(\lambda _c(t,A,{\varvec{L}})\) is assumed to not depend on either \({\varvec{L}}\) or A (or both), we will omit them in the notation, for conciseness. We will also use the notation \({\varvec{V}}^2={\varvec{V}}{\varvec{V}}^T\), for any vector \({\varvec{V}}\), and \(\text{ Var }({\varvec{V}})\) to denote the variance-covariance matrix of \({\varvec{V}}\). We also use the “expit” and “logit” functions, defined as \(\text{ expit }(x)=\exp (x)/\{1+\exp (x) \}\) and \(\text{ logit }(x)=\text{ expit}^{-1}(x)=\log \{x/(1-x)\}\).

2.3 Assumptions about the censoring

Throughout the manuscript, we assume the usual independent censoring assumption that C is independent of the outcome \((T,\eta )\) given \(({\varvec{L}},A)\). This allows some dependency between C and \(({\varvec{L}},A)\) that is present in many practical situations such as the study of cardiovascular death presented in Sect. 4. In some sections, we will make the stronger assumption that C is independent of T to derive specific results. This assumption is more restrictive but nevertheless still often plausible and used in practice. For identifiability, we further assume that not all subjects of any subgroup defined at baseline are systematically censored before t, i.e., \(P\{C>t \vert {\varvec{L}}={\varvec{l}}, A=a \}>0\) for all \({\varvec{l}}\) and \(a=0,1\).

2.4 Logistic regression & estimating equations

In this section, we aim to estimate a conditional t-year risk of event, often known as the absolute risk (Pfeiffer and Gail 2017) and defined as \(F_1(t,a,{\varvec{l}})=P\{D(t)=1 \vert A=a, {\varvec{L}}={\varvec{l}}\}\). Within our context presented in Sect. 2.1, it has a simple interpretation as the proportion of patients who are expected to die from a cardiovascular death within the 33 months of follow-up, among patients from treatment group \(A=a\), with baseline characteristics \({\varvec{L}}={\varvec{l}}\). Both Geskus (2016) and Young et al. (2020) discuss the use of this quantity as well as various other relevant quantities. We assume that the absolute risk \(F_1(t,a,{\varvec{l}})\) can be modeled by a logistic model, that is,

where \({\varvec{x}}\) is a pre-specified vector of p random variables constructed from \(({\varvec{l}},a)\) and \({\varvec{x}}^T \varvec{\beta }\) denotes the linear predictor associated to \({\varvec{X}}={\varvec{x}}\). For example, \({\varvec{X}}=(1,A, {\varvec{L}})^T\) is used in the simplest model and \({\varvec{X}}=(1, A,{\varvec{L}}, A {\varvec{L}})^T\) can be used to model interactions for heterogeneous treatment effects. Note that we implicitly assume that the parameter vector \( \varvec{\beta }\) depends on t. For ease of notation we will sometimes write \(Q(t,{\varvec{x}})\) for \(Q(t,{\varvec{x}}, \varvec{\beta })\), without emphasizing that it depends on the parameter \(\varvec{\beta }\). Whenever convenient, we will also use \(F_1(t,{\varvec{x}})\) to denote the conditional risk \(P\{D(t)=1 \vert {\varvec{X}}={\varvec{x}}\}\).

For uncensored data, i.e. assuming \(P(\Delta =1)=1\), the binary variable \(D_i(t)\) is observed for all subjects \(i=1,\dots ,n\). In that case, we can simply fit a usual generalized linear model (glm) and it is well known that maximizing the likelihood leads to the following p estimating equations, see e.g. McCullagh and Nelder (1989),

For right censored data, \(D_i(t)\) is not observed for all subjects, but only for subjects i such that \(\Delta _i(t)=1\). Two modifications of the estimating equations have been proposed to obtain consistent estimators via inverse probability of censoring weighting. The first consists of weighting each individual contribution in (1) as follows,

using subject specific weight

where \({\widehat{G}}_c(u, {\varvec{x}}_i)\) denotes a consistent estimator of \(G_c(u, {\varvec{x}}_i)=P(C_i > u \vert {\varvec{X}}_i={\varvec{x}}_i)\) for all \(u\le t\). We refer to this approach below as IPCW-GLM. Although \(D_i(t)\) is not observed for all subjects, we note that (2) can be computed since \({\widehat{W}}_i(t) \cdot {\widetilde{D}}_i(t)=\widehat{W}_i(t) \cdot D_i(t)\).

Often, a Kaplan-Meier estimator is used to compute \({\widehat{G}}_c\). It may be computed from the entire dataset, when the censoring is independent of the covariates, or within strata defined from \({\varvec{x}}_i\) (e.g. in each treatment group) when this is not the case. Alternatively, a Cox model can be used to model the censoring distribution. This approach has been used by Zheng et al. (2006) and Uno et al. (2007) in a context without competing risks and by Azarang et al. (2017) in presence of competing risks.

A second approach consists of weighting each individual outcome in (1) as follows,

Here again, we could equivalently write \(D_i(t)\) instead of \({\widetilde{D}}_i(t)\) in (3) to clarify that the computation can be done using the observed censored data. We refer to this approach as the outcome weighted IPCW approach or the OIPCW below. This approach has been used by Scheike et al. (2008), among others, in a context with competing risks.

2.5 Properties and comparison of the two approaches

In this section, we study and compare some properties of the two different IPCW adjustements given by (2) or (3). Surprisingly, the two weighting approaches can lead to very different performance in some settings, as it will be illustrated in Sect. 2.8. But, first, we start by noting a situation in which the two approaches are identical. This happens when \({\widehat{G}}_c(t,{\varvec{x}}_i)\) is fully non-parametric, that is, when for all values of \({\varvec{x}}_i\), the survival probability \(G_c(t,{\varvec{x}}_i)\) can estimated with the Kaplan-Meier estimator. Indeed, for each strata \(s({\varvec{x}}_i)\) of \(n_{s({\varvec{x}}_i)}\) subjects having this covariate value \({\varvec{x}}_i\), we note that \(\sum _{j \in s( {\varvec{x}}_i )} \widehat{W}_j(t) = n_{s({\varvec{x}}_i)} \) for all t such that \({\widehat{G}}_c(t,{\varvec{x}}_i) > 0\), see e.g. Appendix A of Cortese et al. (2017). Therefore it follows that

which makes the two set of estimating Eqs. (2) and (3) equivalent and give the same estimator.

Second, we study the large sample properties of the two IPCW adjustments given by (2) or (3). In the remainder of this section, we will restrict our attention to the case in which a simple Kaplan-Meier estimator is used to estimate the censoring distribution and the distribution of C does not depend on the covariates \((A,{\varvec{L}})\). We will not necessarily assume that the logistic model is correctly specified. That is, we allow for \(Q(t,{\varvec{x}},\varvec{\beta })\ne F_1(t,{\varvec{x}})\). In that case, \(\varvec{\beta }\) denotes the limit of the solution to the estimating Eq. (1), when the sample size n tends to infinity. Such a \(\varvec{\beta }\) exists under mild conditions, see e.g. Uno et al. (2007). Following similar lines as those of Bang and Tsiatis (2000), in Appendix A.1, we provide i.i.d. decompositions for the score equations, in Theorem 1 below.

Theorem 1

The scores \({\widehat{U}}_{oipcw}(\varvec{\beta })\) and \({\widehat{U}}_{ipcw-glm}(\varvec{\beta })\) have the i.i.d. representation

where the subscript m denotes either “oipcw” or “ipcw-glm”, with

where

From the above Theorem 1, the asymptotic normality of the estimators follows and the asymptotic variances are calculated following standard martingale theory (Aalen et al. 2008, Sec. 2.2). Note that similar results have been given before in Scheike et al. (2008) for the OIPCW case and by Azarang et al. (2017) for the IPCW-GLM case, although the studied estimation equations were slightly different.

Corollary 1

Let \({\widehat{\varvec{\beta }}}_m\) be the estimator that solves \({\widehat{U}}_{m}(\varvec{\beta })={\varvec{0}}\). Asymptotically, \(\sqrt{n} \big ({\widehat{\varvec{\beta }}}_m - \varvec{\beta }\big ) \sim \mathcal {N}\big ({\varvec{0}} , \varvec{\Sigma }_m \big )\), with the usual sandwich form of the variance, \(\varvec{\Sigma }_m= \varvec{{{\mathcal {I}}}}^{-1}\varvec{\Omega }_m\varvec{{{\mathcal {I}}}}^{-1}\), with “bread” \(\varvec{{{\mathcal {I}}}}= E\left[ {\varvec{X}}^{2} Q(t,{\varvec{X}}) \left\{ 1 - Q(t,{\varvec{X}}) \right\} \right] \) and “meat” \(\varvec{\Omega }_m= \varvec{{{\mathcal {J}}}}+ \int _0^t {\varvec{f}}_m(s) f_c(s) ds\), where \(f_c(s)= \lambda _c(s)/G_c(s)\), \(\varvec{{{\mathcal {J}}}}=E\big (\big [ {\varvec{X}}\big \{ D(t) - Q(t,{\varvec{X}})\big \} \big ]^2\big )\) and \({\varvec{f}}_m(s)=E\big [ \big \{\varvec{\varphi }_{m}\big ({\varvec{X}}, D(t),s\big )\big \}^2 1\!\! 1 \{ T\ge s \} \big ]\). A consistent estimator \({\widehat{\varvec{\Sigma }}}_m\) of \(\varvec{\Sigma }_m\) is provided in Appendix A.2. Further, if the model is well-specified, that is, if \(Q(t,{\varvec{x}},\varvec{\beta })= F_1(t,{\varvec{x}})\) for all \({\varvec{x}}\), then \(\varvec{{{\mathcal {J}}}}=\varvec{{{\mathcal {I}}}}\).

Proof

Following standard martingale theory (Aalen et al. 2008, Sec. 2.2), the second term of the two in the right-hand side of (4) is a martingale relative to the filtration \(\{\mathcal {F}_u\}\), where \(\mathcal {F}_u\) is the \(\sigma \)-algebra generated by \(\big \{ 1\!\! 1 \{ C \le t \}, t\le u \, ; (T, \eta , {\varvec{X}}) \big \}\). Further, the two terms in the right-hand side of (4) therefore are uncorrelated as the first term is \(\mathcal {F}(0)\) measurable. With notation \(\varvec{\Omega }_m=\text{ Var }\Big [ \varvec{\Phi }_m\big \{{\varvec{X}}, D(t), {{\widetilde{T}}}, {\widetilde{\eta }} , t \big \}\Big ]\), as the two terms are also 0 mean, it follows

By definition \(E\big (\left[ {\varvec{X}}\big \{ D(t) - Q(t,{\varvec{X}})\big \} \right] ^2\big )= \varvec{{{\mathcal {J}}}}\) and we note that that \(\varvec{{{\mathcal {J}}}}=\varvec{{{\mathcal {I}}}}\) follows from the law of iterated expectations, when \(Q(t,{\varvec{x}},\varvec{\beta })= F_1(t,{\varvec{x}})\) for all \({\varvec{x}}\). Again following standard variance calculation for martingales (Aalen et al. 2008, Sec. 2.2) and the law of iterated expectations, it further follows

where \({\varvec{f}}_m(s)=E\left[ \big \{\varvec{\varphi }_{m}\big ({\varvec{X}}, D(t),s\big )\big \}^2 1\!\! 1 \{ T\ge s \} \right] \) and \(f_c(s)= \lambda _c(s)/G_c(s)\).

Finally, the delta-method implies that, asymptotically, the variance of the estimator \({\widehat{\varvec{\beta }}}_m\) solving \({\widehat{U}}_{m}(\varvec{\beta })={\varvec{0}}\) will be proportional, in the sample size, to the usual sandwich form of the variance, \(\varvec{\Sigma }_m= {\varvec{{{\mathcal {I}}}}}^{-1} \varvec{\Omega }_m{\varvec{{{\mathcal {I}}}}}^{-1}\), since \({\varvec{{{\mathcal {I}}}}}\) is equal to the mean of the derivative of the estimating Eqs. (1), (2) and (3), see e.g., Stefanski and Boos (2002). That is, asymptotically, \(\sqrt{n} \big ({\widehat{\varvec{\beta }}}_m - \varvec{\beta }\big ) \sim \mathcal {N}\big ({\varvec{0}} , \varvec{\Sigma }_m \big )\). \(\square \)

We now compare the asymptotic efficiency of the two IPCW adjustements given by (2) or (3), when the model is well specified, and show that which of the two is the most efficient depends on the censoring time distribution. Specifically, there exists a function \({\varvec{g}}\) such that \(\varvec{\Omega }_{ipcw-glm} - \varvec{\Omega }_{oipcw} = \int _0^t {\varvec{g}}(s) f_c(s)ds\) and the average \(\int _0^t {\varvec{g}}(s) f_c(s)ds\) can be either positive definite or negative definite, depending on the shape of \(f_c=\lambda _c/G_c\), and hence of the density of the censoring time C within [0, t]. Therefore, for some censoring time distributions the estimator \({\widehat{\varvec{\beta }}}_{ipcw-glm}\) will be more efficient than \({\widehat{\varvec{\beta }}}_{oipcw}\), but for other distributions it will be the other way around. Hence, a general recommendation about which method to prefer between OIPCW and IPCW-GLM cannot be made based on general efficiency criteria. To the best of our knowledge, this result, which we encapsulated in Corollary below, is new. For illustration, in Fig. 1 of Sect. 2.8 we show the diagonal of \({\varvec{{{\mathcal {I}}}}}^{-1} {\varvec{g}}(s) {\varvec{{{\mathcal {I}}}}}^{-1}\) versus \(s \in [0,t]\), from which originates the difference in asymptotic variance between \({\widehat{\varvec{\beta }}}_{ipcw-glm}\) and \({\widehat{\varvec{\beta }}}_{oipcw}\), in a specific setting with two binary covariates.

Corollary 2

When the model is well-specified, the difference of the asymptotic variance of the two scores \({\widehat{U}}_{oipcw}(\varvec{\beta })\) and \({\widehat{U}}_{ipcw-glm}(\varvec{\beta })\), that is, \(\varvec{\Omega }_{ipcw-glm} - \varvec{\Omega }_{oipcw}\), can be either positive definite or negative definite, depending on the shape of the density of the censoring time within the interval [0, t]. This means that for some distributions of the censoring time \({\widehat{\varvec{\beta }}}_{oipcw}\) is more efficient than \({\widehat{\varvec{\beta }}}_{ipcw-glm}\), but for some others it is the other way around.

Proof

In the following, we will make extensive use of the law of iterated expectations, without explicitly mentioning it each time. We first note that \(E\big [ {\varvec{X}}D(t)1\!\! 1 \{ T\ge s \} \big ] =E\left[ {\varvec{X}}H_1(s,t,{\varvec{X}}) \right] \), with \(H_1(s,t,{\varvec{X}}) = F_1(t,{\varvec{X}})- F_1(s,{\varvec{X}})\). Further, it follows

Similarly, we obtain

with,

where \(S(s,{\varvec{X}})=P(T\ge s|{\varvec{X}})\), since we assumed \(Q(t,{\varvec{X}})=F_1(t,{\varvec{X}})\). Interestingly, because \(S(0)=S(0,{\varvec{X}})=1\) and \(H_1(0,t,{\varvec{X}})=F_1(t,{\varvec{X}})\), we note that the function \({\varvec{g}}: {\mathbb {R}} \rightarrow {\mathbb {R}}^{p\times p}\) starts being negative definite in \(s=0\), where

and because \(H_1(t,t,{\varvec{X}})=0\), it ends up being positive definite in \(s=t\), with

The consequence of this is that the average \(\int _0^t {\varvec{g}}(s) f_c(s)ds\) can be either positive definite or negative definite, depending on the shape of \(f_c(\cdot )\) within the interval [0, t]. This means that which set of estimating equations to prefer, between (2) and (3), depends on how the censoring times are distributed. \(\square \)

2.6 On naive standard error computation obtained using standard software

Most statistical software for fitting logistic models have a “weight” option. That is for instance the case of the function glm in R and of procedure LOGISTIC in SAS, which can be used to solve \({\widehat{U}}_{ipcw-glm}(\varvec{\beta }) = {\varvec{0}}\), using the weights \({\widehat{W}}_i(t), i=1,\dots ,n\). Other software can solve \({\widehat{U}}_{oipcw}(\varvec{\beta }) = {\varvec{0}}\) by solving the usual score equations of a logistic model for the weighted outcomes \({\widehat{W}}_i(t){\widetilde{D}}_i(t), i=1,\dots ,n\). That is the case of the function geese in R (from the geepack package) and of procedure GENMOD in SAS. Standard software can therefore be used to compute \({\widehat{\varvec{\beta }}}_{m}\) by solving \({\widehat{U}}_{m}(\varvec{\beta }) = {\varvec{0}}\), for \(m=oipcw\) and \(ipcw-glm\), once the weights have been computed in a previous step, e.g. using Kaplan-Meier.

From a practical point of view, it is of interest to know how the “naive” standard errors, which the software will compute by default in that case, will compare to the correct values given by the diagonal of \({\widehat{\varvec{\Sigma }}}_m\) in Corollary 1. In short, we expect them to be different as the software will ignore the fact that the weights \({\widehat{W}}_i(t)\) are estimated from the data and therefore are not known in advance. We show by simple arguments that the “naive” estimator of the variance of \({\widehat{\varvec{\beta }}}\) is conservative. As it will be illustrated in Sect. 2.8, and perhaps surprisingly, it can be very conservative. This emphasizes that using \({\widehat{\varvec{\Sigma }}}_m\) of Corollary 1 or Bootstrapping is important for correct standard error computation.

In the rest of this section, we restrict our attention to the case in which the censoring weights have been computed by simple Kaplan-Meier estimates, thus assuming C does not depend on any of the covariates. We first note that the “naive” computation of the software will estimate the variance of \(\sqrt{n} \big ({\widehat{\varvec{\beta }}}_m - \varvec{\beta }\big )\) by a variance that converges towards \(\varvec{{{\mathcal {I}}}}^{-1}\varvec{\Omega }_m' \varvec{{{\mathcal {I}}}}^{-1}\), where

where \(W(t)= \Delta (t)/G_c(t \wedge {{\widetilde{T}}})\). This follows from usual theory of estimating equations, see. e.g. Stefanski and Boos (2002), when a robust standard error is computed by the software. The fact that the “naive” robust standard errors are (asymptotically) systematically computed too large is therefore a consequence of the following proposition.

Proposition 1

The difference \(\varvec{\Omega }_{m}- \varvec{\Omega }_{m}'\) is negative definite for both \(m=oipcw\) and \(ipcw-glm\).

Proof

First, note that \(\text{ Var }\left\{ n^{-1/2}U_{m}(\varvec{\beta })\right\} =\varvec{\Omega }_{m}'\), where \(U_{m}(\varvec{\beta })\) is defined by replacing \({\widehat{W}}_i(t)\) by \(W_i(t)\) in \({\widehat{U}}_{m}(\varvec{\beta })\). Second, we note that \(\sqrt{n}\, U_m(\varvec{\beta })\) can be re-written as the same i.i.d. representation as that of \(\sqrt{n}\, {\widehat{U}}_m(\varvec{\beta })\) except for the absence of the conditional expectations terms in \(\varvec{\varphi }_{m}\big ({\varvec{X}}, D(t),s\big )\), that is, without the term \(E( {\varvec{X}}D(t) | T > t)\) for the OIPCW estimator or the term \(E( {\varvec{X}}\left[ D(t) - Q(t,{\varvec{X}})\right] | T > t)\) for the IPCW-GLM estimator. This follows from calculations already presented in Appendix A.1 and especially the identity \(W_i(t)=1 - \int _0^t \{1/G_c(u)\}dM_i^c(u)\). Consequently, following the same martingale arguments as in the proof of Corollary 1, it follows that

with \({\varvec{h}}_{oipcw}({\varvec{X}})={\varvec{X}}D(t)\) and \({\varvec{h}}_{ipcw-glm}({\varvec{X}})={\varvec{X}}\{ D(t) - Q(t,{\varvec{X}})\}\). This shows that \(\varvec{\Omega }_{m}- \varvec{\Omega }_{m}'\) is negative definite. \(\square \)

2.7 Augmented estimators

Following ideas from Robins and Rotnitzky (1992) and Bang and Tsiatis (2000), we now construct modifications of estimating Eqs. (2) and (3) which could lead to (asymptotically) more efficient estimators. Here we will again restrict our attention to the case where the Kaplan-Meier estimator is used to estimate the weights and C is independent of the covariates \((A,{\varvec{L}})\), as in the two previous sections. To do this, we first define augmented equations \({\widehat{U}}_m^{Aug}(\varvec{\beta })\) of the form

where \({\varvec{e}}_m({\varvec{X}},s)\) is an arbitrary function of \({\varvec{X}}\) and s. These modified equations still have mean zero (asymptotically) and thus any choice of \({\varvec{e}}_m({\varvec{X}},s)\) will provide a consistent estimator of \(\varvec{\beta }\). A natural question is therefore which choices lead to the most efficient estimators. The following proposition answers this question and shows that the optimal choice of \({\varvec{e}}_m({\varvec{X}},s)\) also makes the two augmented equations for OIPCW and IPCW-GLM become asymptotically equivalent.

Proposition 2

Let \({\widehat{\varvec{\beta }}}_m^{Aug}\) be the solution of \({\widehat{U}}_m^{Aug}(\varvec{\beta })\). Then, the asymptotic variance of \({\widehat{\varvec{\beta }}}_m^{Aug}\) attains its minimum when

In that case, \(\sqrt{n} \, \widehat{U}_{oipcw}^{Aug}(\varvec{\beta })=\sqrt{n} \, \widehat{U}_{ipcw-glm}^{Aug}(\varvec{\beta }) + o_p(1)\) and the asymptotic variances of \({\widehat{\varvec{\beta }}}_{oipcw}^{Aug}\) and \({\widehat{\varvec{\beta }}}_{ipcw-glm}^{Aug}\) are equal.

Proof

From the i.i.d representation of \(\sqrt{n} \, \widehat{U}_m(\varvec{\beta })\) given in Theorem 1, we get that of the augmented score, that is, \(\sqrt{n}\,{\widehat{U}}_{m}^{Aug}(\varvec{\beta }) = n^{-1/2} \sum _{i=1}^n \varvec{\Phi }_m^{Aug}\big \{{\varvec{X}}_i,D_i(t), {{\widetilde{T}}}_i, {\widetilde{\eta }}_i, t \big \} + o_p(1)\), with influence function

with \(\varvec{\varphi }_{m}^{Aug}({\varvec{X}}, D(t),s)={\varvec{e}}_m({\varvec{X}},s) + \varvec{\varphi }_{m}({\varvec{X}}, D(t),s)\). Similarly to (5) in the Proof of Corollary 1, it follows

where

which achieves its minimum for

Therefore, we have

hence the first result of the proposition. As \(\varvec{\varphi }_{m}^{Aug}({\varvec{X}}, D(t),s)={\varvec{e}}_m({\varvec{X}},s) + \varvec{\varphi }_{m}({\varvec{X}}, D(t),s)\), it further follows

This shows that \(\sqrt{n} \, \widehat{U}_{oipcw}^{Aug}(\varvec{\beta })=\sqrt{n} \, \widehat{U}_{ipcw-glm}^{Aug}(\varvec{\beta }) + o_p(1)\) and therefore also that the asymptotic variances of \({\widehat{\varvec{\beta }}}_{oipcw}^{Aug}\) and \({\widehat{\varvec{\beta }}}_{ipcw-glm}^{Aug}\) are equal. \(\square \)

When using the optimal choice of \({\varvec{e}}_m({\varvec{X}},s)\) of Proposition 2, the augmented equations become

One cannot solve \({\widehat{U}}^{Aug}(\varvec{\beta })={\varvec{0}}\) in practice, since the augmentation term depends on unknown parameters. However, one can instead solve a similar equation after replacing \(M_i^c(s)\) and \(G_c(s)\) by \({\widehat{M}}_i^c(s)\) and \({\widehat{G}}_c(s)\) (as defined in Appendix A.2) and the two terms in the brackets \(\{ \, \}\) above by consistent estimators of each. While the second term \(E\big [ {\varvec{X}}D(t) \, \big | \, T \ge s \big ]\) can be estimated nonparametrically in a simple fashion (as described in Appendix A.2), this is not necessarily the case for the first term because of the conditioning on \({\varvec{X}}_i\), especially if the dimension of \({\varvec{X}}_i\) is not small and/or some components of \({\varvec{X}}_i\) are continuous covariates. In that case, one will often need to model \(F_1(s,{\varvec{X}})\) for all \(s\in [0,t]\), build on the equality \(E\big [ {\varvec{X}}D(t) \, \big | \, {\varvec{X}}, T\ge s \big ]={\varvec{X}}H_1(s,t,{\varvec{X}})/S(s,{\varvec{X}})\) and instead solve

where \({\widehat{H}}_1(s,t,{\varvec{X}}) = \widehat{F}_1(t,{\varvec{X}})- \widehat{F}_1(s,{\varvec{X}})\) and \({\widehat{S}}(s,{\varvec{X}})\) is an estimator of \(S(s,{\varvec{X}})=P(T>s|{\varvec{X}})\). Popular methods to estimate \(F_1(s,{\varvec{X}})\) and \(S(s,{\varvec{X}})\) simultaneously over all time \(s\in [0,t]\) are based on cause-specific hazards modeling, see e.g. Ozenne et al. (2017). Although \({\widehat{E}}\big [ {\varvec{X}}D(t) \, \big | \, T \ge s \big ]\) can be computed non-parametrically, it might be more natural to compute it as \(\left\{ \sum _{i=1}^n Y_i(s) {\varvec{X}}_i \widehat{H}_1(s,t,{\varvec{X}}_i)\big /\widehat{S}(s,{\varvec{X}}_i)\right\} \) \(/ \big \{ \sum _{i=1}^n Y_i(s)\big \}\) in that case. From a computational point of view, it is also interesting to note that \(d\widehat{M}_i^c(s)\) in (7) can be replaced by \(dN_i^c(s)\), where \(N_i^c(s)=1\!\! 1 \{ {\widetilde{T}}_i\le s, \Delta _i=0 \}\).

Following Robins and Rotnitzky (1992), one could rigorously show that Eq. (7) provide the best augmented estimators and that this will provide more efficient estimators than (2) and (3), if the plugged-in estimates \({\widehat{F}}_1(s,{\varvec{X}})\) and \({\widehat{S}}(s,{\varvec{X}})\) are close enough to their counterpart for all \(s \in [0,t]\).

On one hand, this efficiency result makes the augmented estimating Eq. (7) appealing. This is especially the case when \(F_1(s,{\varvec{X}})\) and \(S(s,{\varvec{X}})\) do not appear particularly difficult to estimate consistently for all \(s \in [0,t]\). On the other hand, in practice it might be challenging to estimate all these quantities precisely. This might often happen when the dimension of \({\varvec{X}}\) is not small and/or some components of \({\varvec{X}}\) are continuous covariates. The prespecification of modeling choices, which is often deemed important in medical research (FDA 2021; Loder et al. 2010), might further complicate the task. This should be kept in mind when considering the use of the augmented approach in practice, since \({\widehat{\varvec{\beta }}}_{m}^{Aug}\) might be less efficient than \({\widehat{\varvec{\beta }}}_{m}\) when \(F_1(s,{\varvec{X}})\) and \(S(s,{\varvec{X}})\) are not estimated consistently for all \(s \in [0,t]\).

Finally, the Proposition 3 below provides a simple computational trick to solve estimating equations asymptotically equivalent to the augmented Eq. (6), using standard software, when \({\varvec{X}}\) is a vector of a few categorical variables. The results and its proof might also help to get some intuition for more general results, which state that efficiency gains can generally be obtained by using inverse probability of censoring weights that depends on \({\varvec{X}}\), even when the censoring time C is independent of \({\varvec{X}}\) (Robins and Rotnitzky 1992).

Proposition 3

Assume that \({\varvec{X}}\) is a vector of categorical variables defining K strata and let \(s({\varvec{x}}) \in \{ s_1,\dots ,s_K\}\) denote the strata corresponding to \({\varvec{x}}\). Further assume that a stratified Kaplan-Meier estimator, stratified on \(s({\varvec{X}})\), is used to compute the inverse probability of censoring weights. In that case, solving the equations \({\widehat{U}}_m(\varvec{\beta })={\varvec{0}}\) (given by (2) and (3)) is asymptotically equivalent to solving the augmented augmented \({\widehat{U}}_m^{Aug}(\varvec{\beta })\) (given by (6)), for which a marginal Kaplan-Meier estimator is used to to compute the weights. That is, \(\sqrt{n}\,\widehat{U}_{m}(\varvec{\beta })=\sqrt{n}\,{\widehat{U}}_{m}^{Aug}(\varvec{\beta }) + o_p(1)\).

Proof

First, as pointed out before at the beginning of Sect. 2.5, we note that \({\widehat{U}}_m(\varvec{\beta })\) is identical for both choices of m in that case. Second, using that \(\sum _{i=1}^n=\sum _{k=1}^K \sum _{i: s({\varvec{X}}_i)=s_k}\) and proceeding as in Appendix A.1 for each sum \(\sum _{i: s({\varvec{X}}_i)=s_k}\), we obtain a similar i.i.d decomposition as in Theorem 1 and it follows that \(\sqrt{n}\,{\widehat{U}}_{m}(\varvec{\beta }) = n^{-1/2} \sum _{i=1}^n \varvec{\Theta }_m\big \{{\varvec{X}}_i,D_i(t), {{\widetilde{T}}}_i, {\widetilde{\eta }}_i, t \big \} + o_p(1)\) with

We now note that conditioning on \({\varvec{X}}\) or \(s({\varvec{X}})\) is equivalent and that assuming C independent of \({\varvec{X}}\) implies \(M^c\big (s,s({\varvec{X}})\big )=M^c(s)\) and \(G_c\big (s,s({\varvec{X}})\big )=G_c(s)\). This shows that \(\sqrt{n}\,{\widehat{U}}_{m}(\varvec{\beta })=\sqrt{n}\,\widehat{U}_{m}^{Aug}(\varvec{\beta }) + o_p(1)\). \(\square \)

2.8 Theoretical computation and comparison of efficiency

In this section, we compare the asymptotic variances of \({\widehat{\varvec{\beta }}}_{ipcw-glm}\) and \({\widehat{\varvec{\beta }}}_{oipcw}\) in specific settings, to illustrate their theoretical differences detailed above. For a simple illustration, we consider \({\varvec{X}}=(X_1,X_2)\) where \(X_1\) and \(X_2\) are two independent binary covariates, with \(P(X_1=-1)=P(X_1=1)=0.5\) and \(P(X_2=0)=P(X_2=1)=0.5\). We assume that \(F_1(t,{\varvec{X}}) = \text{ expit }(\beta _0(t) + \beta _1X_1 + \beta _2X_2)\) with \(\beta _0(t) = \log [\rho _1 (1-e^{-t})]\), \(\beta _1=0.5\) and \(\beta _2=-0.5\). For the absolute risk of the competing event, i.e. \(F_2(t,{\varvec{X}})= P(T \le t, \eta =2 \vert {\varvec{X}})\), we assume \(F_2(t,{\varvec{X}})= \text{ expit }(\mu (t) - 0.5 \cdot X_1 + 0.5\cdot X_2)\cdot \{ 1 - F_1(6,{\varvec{X}})\}\) with \(\mu (t) = \log [\rho _2 (1-e^{-t})]\). This parametrization satisfies the constraint \(F_1(t,{\varvec{x}})+F_2(t,{\varvec{x}}) \le 1\) for all \({\varvec{x}}=(x_1,x_2)\) and \(t \in [0,6]\). To generate independent censoring, we used a constant hazard \(\lambda _c(t,{\varvec{X}})=r_c \).

To illustrate several scenarios, we use two values of each parameter \(\rho _1\), \(\rho _2\) and \(r_c\) and two time horizons \(t=1\) and 5. This leads to 16 scenarios with a marginal risk \(F_1(t)=E\{F_1(t,{\varvec{X}})\}\) ranging from 5% to 42% and a proportion of censored observations before time t, i.e. \(P({\widetilde{T}}\le t,\widetilde{\eta }=0)\), ranging from 4% to 80% (Table 1). We consider the estimation of the well-specified logistic model for \(F_1(t,{\varvec{X}})\) at time \(t=1\) and 5 and we compute the corresponding asymptotic variances, when using OPICW and IPCW-GLM. The computation was made using the theoretical (asymptotic) formulae derived in Sect. 2.5. In addition, we performed simulations that led to similar results with sample sizes \(n=400\) and \(n=1600\) (not shown), which confirmed the results presented below. In the simulation results, the gain for \(n=400\) for the OIPCW approach were slightly better than in the theoretical (asymptotic) computations.

The theoretical computations, presented in Table 1, reveal which type of IPCW adjustment gave the smallest asymptotic variances in our settings. Overall, OIPCW appears to be preferable as the gain using this method is sometimes considerable. For a high risk \(F_1(t)=42\%\) and a low risk of competing event (\(\rho _2=0.1\), which corresponds to 8% competing risk) the parameters \(\beta _0(t)\), \(\beta _1\) and \(\beta _2\) are estimated with a variance that is between 2.17 and 2.75 times larger when using IPCW-GLM instead of OIPCW.

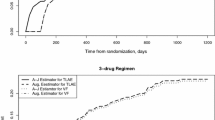

Diagonal terms for \((1/n){\varvec{{{\mathcal {I}}}}}^{-1} {\varvec{g}}(s) {\varvec{{{\mathcal {I}}}}}^{-1}\), for \(s\in [0,t]\), from which originates the difference in asymptotic variance between each parameter estimator, when using either OIPCW or IPCW-GLM (with \(n=400\)). The \(1^{st}\), \(2^{nd}\) and \(3^{rd}\) diagonal terms relate to the differences in variance for estimators of \(\beta _0(t)\), \(\beta _1\) and \(\beta _2\), respectively. The gray area and the right axis additionally show \(f_c(s)\) for \(s\in [0,t]\), which weights the contribution of \((1/n){\varvec{{{\mathcal {I}}}}}^{-1} {\varvec{g}}(s) {\varvec{{{\mathcal {I}}}}}^{-1}\), at each time \(s\in [0,t]\), to the variance differences given by \((1/n){\varvec{{{\mathcal {I}}}}}^{-1} \big \{ \int _0^t {\varvec{g}}(s) f_c(s)ds \big \} {\varvec{{{\mathcal {I}}}}}^{-1}\). The right and left plots correspond to situations with data generating parameters \(\rho _1,\rho _2,r_c\) and t of lines 14 and 8 of Table 1, respectively

In Fig. 1 we show the 3 diagonal components of the matrix \((1/n){\varvec{{{\mathcal {I}}}}}^{-1} {\varvec{g}}(s) {\varvec{{{\mathcal {I}}}}}^{-1}\), together with \(f_c(s)\), for \(s\in [0,t]\), for the two settings in which we saw the largest difference in asymptotic variance between the two methods favoring OIPCW or IPCW-GLM, respectively. This provides insights on the origin of the variance differences. As seen in Sect. 2.5, the differences in asymptotic variance, for each parameter estimator, are given by the diagonal of \((1/n){\varvec{{{\mathcal {I}}}}}^{-1} \big \{ \int _0^t {\varvec{g}}(s) f_c(s)ds \big \} {\varvec{{{\mathcal {I}}}}}^{-1}\), where \(f_c\) depends only on the censoring time distribution. In our settings, \(f_c(s)=r_ce^{r_c\cdot s}\), which increases fast with s. This makes the values of \({\varvec{g}}(s)\) for late times s particularly influential in the variance differences. This explains why the left plot corresponds to large variance differences whereas the right plot corresponds to smaller differences. On the left plot of Fig. 1, there is a large interval in which the diagonal terms of \({\varvec{g}}(s)\) are positive and \(f_c(s)\) is large. By contrast, on the right plot, the large interval for which the diagonal terms of \({\varvec{g}}(s)\) are negative contains a wide range of time s for which \(f_c(s)\) is rather small.

Figure 1 also illustrates the result that which method is asymptotically the most efficient depends on the censoring time distribution. Graphically, which method is most efficient depends on the location of the gray area and different distributions will lead to different shapes of the gray area. For instance, if the censoring times were all early with respect to the time-point t of interest, then the IPCW-GLM would be more efficient, as in that case \(f_c(s)\) would be non zero only for early times s. Conversely, if they were all located just before t, then OIPCW would be more efficient.

Finally, we compare the “naive” variance, which standard software can estimate by default, to the true variance (asymptotically). This exemplifies the asymptotic overestimation of the variance showed in Sect. 2.6. The results are provided in Table 2, for all scenarios with time \(t=5\). The table provides the results when using a simple (marginal) Kaplan-Meier estimator or a (fully) stratified Kaplan-Meier estimator to compute the weights. We note that for all settings and both estimators the variance is overestimated for \(\beta _0(t)\), and this is particularly so for the IPCW-GLM method. For instance, this will make the confidence interval for the predicted risk in the reference group too wide. The variance of covariate effect estimators are well estimated for the OPICW using the simple naive variance estimator, when using a simple Kaplan-Meier to compute the weight. However, when using the stratified Kaplan-Meier, we see differences. The IPCW-GLM “naive” standard errors were considerably off when compared to the truth when using the stratified Kaplan-Meier weights. We conclude that it is important to use appropriate methods to compute standard errors.

Comparing the results obtained using the stratified versus those using the simpler Kaplan-Meier estimator also illustrates the efficiency gain that can be obtained using the augmented estimators. This is because using the (fully) stratified Kaplan-Meier is asymptotically equivalent to using the augmented estimator, as seen in Proposition 3.

3 Average causal treatment effect and confounding

We now present estimators of the average causal treatment effect. We use potential outcome notations, as e.g. in Ozenne et al. (2020) or Young et al. (2020), to define the associated target parameters. Let \((T^a,\eta ^a)\) and \(D^a(t)\), for \(a=0,1\), denote the potential outcome variables \((T,\eta )\) and D(t) that are, or would have been observed, under the treatment value a. Averaged causal risks are defined by \(F_1(t,a)=P\{D^a(t)=1\}\), for \(a=0\) and 1, where the term “average” emphasizes that we average over baseline covariates, i.e. \(F_1(t,a)=E[P\{D^a(t)=1\vert {\varvec{L}}\}]\). The term “causal” emphasizes that \(F_1(t,a)\) relates to the potential outcome \(D^a(t)\). Within our context presented in Sect. 2.1, the interpretation of \(F_1(t,a)\) is that of the proportion of patients that would have been expected to die from a cardiovascular death within the 33 months of follow-up, had all the patients initiated a beta-blocker treatment, when \(a=1\), or had none of them been treated with beta-blocker, when \(a=0\). The corresponding risk difference \(\text{ Diff }(t)=F_1(t,1)-F_1(t,0)\), and risk ratio \(\text{ RR }(t)=F_1(t,1)\big /F_1(t,0)\), therefore each defines an average treatment effect.

Specific assumptions, in addition of those of Sect. 2.3, are required to make valid causal inference. In the rest of the manuscript, we will assume the usual “no unmeasured confounding”, “consistency” and “positivity” assumptions. No unmeasured confounding means that \((T^a,\eta ^a)\), and thus also \(D^a(t)\), are independent of A conditionally on \({\varvec{L}}\). Positivity means that \(\pi (a,{\varvec{l}}) >0\) for all l and \(a=0,1\), where \(\pi (a,{\varvec{l}})=P\{ A=a \vert {\varvec{L}}={\varvec{l}}\}\). Consistency means that \((T,\eta )=(1-A)\cdot (T^0,\eta ^0) + A\cdot (T^1,\eta ^1) \). See, e.g., Hernán and Robins (2020) for more details on these standard assumptions and counterfactual notations.

3.1 G-computation

As in Ozenne et al. (2020) and Zhang and Zhang (2011), for \(a=0,1\), we can estimate \(F_1(t,a)\) using the G-computation estimator

where \({\widehat{Q}}(t,a,{\varvec{L}}_i)=Q(t,a,{\varvec{L}}_i, {\widehat{\varvec{\beta }}})\), using any consistent estimator \({\widehat{\varvec{\beta }}}\) of \(\varvec{\beta }\) seen above, e.g., \({\widehat{\varvec{\beta }}}_{oipcw}\) or \({\widehat{\varvec{\beta }}}_{oipcw}\). With a slight abuse of notations, we will often write \(Q(t,A,{\varvec{L}},\varvec{\beta })\) for \(Q(t,{\varvec{X}},\varvec{\beta })\) in what follows, to emphasize that it depends on both A and \({\varvec{L}}\). Similarly, we will also write \(Q(t,a,{\varvec{L}},\varvec{\beta })\) in the case A is either observed with, or set to, the value a. The rational for \({\widehat{F_1}}^g(t,a)\) is that, under the above assumptions, \(F_1(t,a)=E\{P\{D(t)=1\vert A=a,{\varvec{L}}\}\}\). The g-computation estimators of \(\text{ Diff }(t)\) and RR(t) are then obtained as the difference or the ratio of \({\widehat{F_1}}(t,1)\) and \({\widehat{F_1}}(t,0)\). Instead of using a logistic model, Ozenne et al. (2020) previously suggested to combine two cause-specific Cox models to compute \({\widehat{F_1}}(t,a)\) for \(a=0,1\), whereas Zhang and Zhang (2011) suggested to use a Fine and Gray (1999) model.

Many asymptotic properties of \({\widehat{F_1}}^g(t,a)\), and of the corresponding estimators of \(\text{ Diff }(t)\) and \(\text{ RR }(t)\), can be derived directly from Taylor-expansions and the results of the previous sections. We especially note the following result, which provides insights to compare the asymptotic efficiency of different versions of the estimator \({\widehat{F_1}}^g(t,a)\), defined by plugging-in different estimators \({\widehat{\varvec{\beta }}}\) of \(\varvec{\beta }\). Maybe unsurprisingly, this result implies that using the most efficient estimator \({\widehat{\varvec{\beta }}}\) will lead to the most efficient estimator \({\widehat{F_1}}^g(t,a)\), when the model is well-specified.

Proposition 4

Assume that the model is well-specified, that is, \(Q(t,a,{\varvec{l}},\varvec{\beta })=F_1(t,a,{\varvec{l}})\) for all \({\varvec{l}}\), and that C does not depend on the covariates \((A,{\varvec{L}})\). Asymptotically, \(\sqrt{n}\left\{ \widehat{F_1}^g(t,a) - F_1(t,a)\right\} \) is normally distributed with variance

where \({\varvec{B}}_{\varvec{\beta }}(a)=E\big \{ \partial /\partial \varvec{\theta }^TQ(t,a,{\varvec{L}},\varvec{\theta })\vert _{\varvec{\theta }=\varvec{\beta }} \big \}\). Consequently, if we consider any two versions of \({\widehat{F_1}}^g(t,a)\), each defined by plugging-in a different estimator \({\widehat{\varvec{\beta }}}\) of \(\varvec{\beta }\), the most efficient of the two is obtained by plugging-in the most efficient estimator \({\widehat{\varvec{\beta }}}\) (asymptotically).

Proof

A proof can be found in Bartlett (2018, Appendix A.2), in a slightly different context. We repeat the main arguments in our context in Appendix A.3, for completeness. \(\square \)

3.2 Double robust estimating equations

The above g-computation estimator requires the model for \(F_1(t,a,{\varvec{l}})\) to be well-specified to be consistent (unless specific additional assumptions hold, as in Sect. 5). To relax this assumption, so called double-robust (DR) estimators have been proposed. These estimators build on estimators for both \(F_1(t,a,{\varvec{l}})\) (the “outcome model”) and \(\pi (a,{\varvec{l}})\) (the “propensity score model”) and requires only one of the two to be consistent for the DR estimator to be consistent. See e.g. Hernán and Robins (2020). Let \({\widehat{\pi }}(a,{\varvec{L}}_i)\) denote an estimator of \(\pi (a,{\varvec{L}}_i)\). For binary (uncensored data), the DR estimators of \(F_1(t,a)\) is

In short, the double robustness comes from the following properties. If the outcome model is correctly specified, the law of iterated expectations and the law of large number together imply that the first average on the right-hand side of Eq. (9) converges to zero. Specifically, the first average in the right-hand side of (9) will converge to

which will also converge to zero when \({\widehat{Q}} (t,A_i,{\varvec{L}}_i)\) converges to \(E[ D_i(t)|A_i,{\varvec{L}}_i]=F_1(t,A_i,{\varvec{L}}_i)\). Hence, in that case the DR-estimator has asymptotically the same mean as the g-computation estimator. If the propensity model is correctly specified, then the two ways of averging, using the empirical average \((1/n)\sum _i\) or the weighted average \((1/n)\sum _i \frac{1\!\! 1 \{ A_i=a \}}{\widehat{\pi }(a,{\varvec{L}}_i)}\), are both consistent for the population average. Hence the weighted and empirical average of \({\widehat{Q}} (t,a,{\varvec{L}}_i)\) cancel each other out (in the limit) and the DR estimator converges to the expectation of \((1/n)\sum _{i=1}^n \frac{1\!\! 1 \{ A_i=a \}}{\widehat{\pi }(a,{\varvec{L}}_i) } D_i(t)\), which is indeed \(F_1(t,a)\).

To define an estimator with right censored data, we note that only the first of the two averages on the right-hand side of Eq. (9) needs to be modified. Further, the formula of this first average resembles very much that of the estimating equation for binary data of Eq. (1). Hence we can build on the same idea: we can either weight the individual contribution to the average (as in (2)) or the censored outcome (as in (3)). The first option leads to

the second to

This second approach is similar to a DR estimator suggested by Ozenne et al. (2020). The only difference is that we suggest to use a logistic model for the outcome model \(Q(t,a,{\varvec{L}}_i)\), whereas they instead suggest a model based on two cause-specific Cox regressions. Estimators of \({\widehat{\text{ Diff }}}(t)\) and \({\widehat{RR}}(t)\) are then obtained as the difference or the ratio of the estimator for each group.

Standard errors may be computed either by bootstrapping or via asymptotic decompositions, which follow after Taylor expanding in the direction of the different parameters, similarly to what we did for the G-computation estimator in Appendix A.3.

3.3 Regression based DR estimators via clever covariates

Building on the work of Scharfstein et al. (1999, p. 1140–1441), Bang and Robins (2005) pointed out that a DR estimator closely related to that of Eq. (9) can be computed via simple averages of predicted risks similar to that of the G-computation estimator in (8). They suggested to proceed as follows. First, fit a logistic model using, in addition to the covariates \((A,{\varvec{L}})\), the two constructed covariates defined by \(h_a\{A,{\varvec{L}}\}=1\!\! 1 \{ A=a \}/\widehat{\pi }(a,{\varvec{L}})\), for \(a=0,1\), which Moore and van der Laan (2009) referred to as “clever covariates”. That is, fit the logistic regression model

where \(Q(t,A,{\varvec{L}})\) is the same logistic model as before. Then, instead of (8), compute,

Below, we refer to this estimator as the regression-based DR estimator. The rational for this approach is that \({\widehat{F_1}}^{rdr}(t,a)\) can be re-written almost exactly as the right-hand side of Eq. (9), with the only difference that \({\widehat{Q}}\) should be replaced by \({\widehat{Q}}'\). Indeed,

when fitting the logistic model with the clever covariates, using estimating Eq. (1). This is because the equation corresponding to the clever covariate \(h_a\{A,{\varvec{L}}\}\), for \(a=0,1\), is nothing else than (13), by definition of \(h_a\{A_i,{\varvec{L}}_i\}\) and because \(h_a\{A_i,{\varvec{L}}_i\}\cdot \widehat{Q} (t,A_i,{\varvec{L}}) = h_a\{A_i,{\varvec{L}}_i\}\cdot \widehat{Q}(t,a,{\varvec{L}})\).

Of course, the minor difference between \({\widehat{F_1}}^{rdr}(t,a)\) and \({\widehat{F_1}}^{dr}(t,a)\) has no consequence for the double robustness property. Indeed, if the outcome model \(Q(t,A,{\varvec{L}})\) is well specified, then \(Q'(t,A,{\varvec{L}})\) is also well-specified and adding the clever covariates or not into the model does not matter much, because estimators of parameter \(\beta _{h_0}\) and \(\beta _{h_1}\) will converge towards zero, as the sample size n increases. If the propensity score model is well specified, then the outcome model does not need to be correctly specified and thus it does not matter either for consistency.

Scharfstein et al. (1999) and Bang and Robins (2005) also note that if we only aim to estimate the causal difference \(\text{ Diff }(t)\) and not each of the two risks, then we can use a single clever covariate instead of two, when fitting the logistic model. That is, one can fit the logistic regression model

with the single clever covariate \(h_{\{1-0\}}\{A,{\varvec{L}}\}=h_1\{A,{\varvec{L}}\}-h_0\{A,{\varvec{L}}\}\). The risk difference can then be estimated by a simple average of differences,

The rational is similar to that of using two clever covariates. This becomes clear after noticing that

which holds because \(1\!\! 1 \{ A_i=a \}\cdot \widehat{Q} (t,A_i,{\varvec{L}}_i)=1\!\! 1 \{ A_i=a \}\cdot \widehat{Q} (t,a,{\varvec{L}}_i)\). Scharfstein et al. (1999) pointed out that this approach can be slightly more efficient than that based on the two clever covariates. However, we believe that often we do not aim to estimate only the risk difference but also the risk in each group. Hence, using two clever covariates is probably often the most attractive option to compute the three estimates in a unified way.

To define regression-based DR estimators for right censored data, it is sufficient to use either the estimating Eqs. (2) or (3) to fit the logistic model and otherwise proceed as in the binary uncensored case described above. The rational is exactly the same: the first average on the right-hand side of Eqs. (10) and (11) are similar to the estimation equation induced by the clever covariates in either (2) or (3). Finally, note that the DR estimators may further be censoring augmented along the lines of Sect. 2.7, and this has been done in Ozenne et al. (2020).

3.4 tMLE-like estimators

Moore and van der Laan (2009) suggested a very similar yet different approach to the regression-based DR approach. The regression models used for the outcome, i.e., \(Q'(t,A,{\varvec{L}})\), and the propensity score, i.e., \(\pi (A,{\varvec{L}})\), are the same. The estimator used for the propensity score, and thus to construct the clever covariates, are the same and the averaging step (12) is also exactly the same. However, the procedure to estimate the parameters of the outcome model \(Q'(t,A,{\varvec{L}})\), and hence to compute \({\widehat{Q}}'\big (t,a,{\varvec{L}}_i\big )\), is slightly different. Moore and van der Laan (2009) proposed to first estimate \(F_1(t,A_i,{\varvec{L}}_i)\) by fitting the model without the clever covariates, that is, \(Q(t,A,{\varvec{L}})\). Then, in a second step, the model is updated by regressing on the clever covariates while including \(\text{ logit }\big \{\widehat{Q} (t,A,{\varvec{L}})\big \}\), obtained from the previous step, as an offset in the model. Hence, the final estimate of the individual predicted risk \({\widehat{Q}}'\big (t,a,{\varvec{L}}_i\big )\) closely resembles that of the regression based DR, except for the fact that not all regression parameters have been estimated simultaneously. Instead, a two steps approach was used. First the parameters in \(Q(t,A_i,{\varvec{L}})\) were estimated, and then the parameters \(\beta _{h_0}\) and \(\beta _{h_1}\). A similar two steps approach is also suggested to compute \({\widehat{Q}}''\big (t,a,{\varvec{L}}_i\big )\), when using one clever covariate instead of two.

The double robustness property is of course preserved with this approach. If the outcome regression model is well specified, then consistency follows from the same argument as for the regression-based DR approach. If the propensity score model is consistent, here again the consistency follows because, in the second step, the estimating equations induced by the clever covariates are similar to the first average on the right-hand side of Eq. (9).

This estimator is referred to as the tMLE estimator by Moore and van der Laan (2009). This stands for “targeted maximum likelihood estimation”. Overviews about tMLE, in general, can be found in Van Der Laan and Rubin (2006) and Van der Laan and Rose (2011). The computation of the tMLE estimator described above is presented in details in a tutorial by Luque-Fernandez et al. (2018).

Interestingly, with right censored data, a similar approach to that of Moore and van der Laan (2009) can easily be used. One can just use the estimating Eqs. (2) or (3) to fit the logistic models and otherwise proceed as in the binary uncensored case.

4 Application to Danish registry data

We re-analyze data from Holt et al. (2021), who investigated the long-term cardio-protective effect associated with beta-blocker treatment in stable, optimally treated, myocardial infarction (MI) patients without heart failure. The motivation for this study was that the evidence of a protective beta-blocker effect relies on data from old randomized trials, which were conducted before catheter-based reperfusion became standard care during hospital admission for MI. Consequently, the evidence relates to a rather different patient population than the current population of MI patients. This has led to increasing skepticism about the benefit of initiating a beta-blocker treatment and to increasingly less systematic treatment initiations (Rossello et al. 2015).

As in Holt et al. (2021), in our analysis follow-up starts 3 months after MI and we compare the \(t=\) 33-months risk of cardiovascular death among patients who have initiated a beta-blocker treatment (\(A=1\)) to those who have not (\(A=0\)), among patients alive 3 months after MI. The data were collected from nationwide registers and we included Danish patients with first-time MI discharged between 2003 and 2018. Following inclusion criteria detailed in Holt et al. (2021), this results into 24,770 patients included in the treated group (\(A=1\)) and 5,407 in the untreated group (\(A=0\)). Follow-up ended at death, emigration, 33 months after inclusion or 31 December 2018, whichever came first. Here non-cardiovascular death is a competing risk. The definition of each variable \(T,\eta ,A\) and C within this context was already presented in Sect. 2.1.

We assume that the no unmeasured confounding assumption holds when adjusting on the following baseline variables \({\varvec{L}}\): age group (30-60, 60-70, 70-80, 80-85), sex, calendar year at inclusion (2003-2008, 2009-2013, 2014-2018), educational level (3 levels), procedure during MI hospital admission (3 types), diabetes, history of stroke, hypertension, peripheral arterial disease, kidney and liver disease, gastrointestinal bleeding and cancer.

Another important assumption of the methods discussed in this paper is the consistency of the estimator of the censoring probabilities. That is, the consistency of \({\widehat{G}}_c(u, {\varvec{x}})\), for all \(u\le t\) and \({\varvec{x}}\). This means that the choice of estimator matters and we therefore illustrate four choices, referred to as C1, C2, C3 and C4, in what follows.

4.1 Considered estimators for the censoring distribution

First, we consider the simplest and most common choice, that is, the use of a (marginal) Kaplan-Meier estimator (C1). Here the covariate values \({\varvec{x}}\) do not play any role. Second, we use a Kaplan-Meier estimator stratified by treatment and age group (C2). The rationale for stratifying by treatment group is the growing skepticism about the benefit of initiating beta-blocker treatment during the study period (2003-2018). Hence, patients included recently, e.g. in 2010’s, are more likely to be non treated than those included less recently, say in the 2000’s. The rationale for further stratifying by age group is to try to increase efficiency, see e.g. Malani (1995) and related remarks in Sect. 2.7. The third choice consists to use a Kaplan-Meier estimator stratified by group of inclusion year, before or after 1st of January 2015 (C3). The rationale for this choice is that patients included before 2015 can be lost of follow-up within 33-months only if they leave Denmark, which is extremely rare in the study population. By contrast, those included after March 2016 will all be censored within 33-months due to study end on 31 December 2018. The arbitrary cutoff in 2015 was chosen to strike a good balance between two conflicting objectives. The first is to define a strata containing only patients similar enough to those included after March 2016. The second is to include enough patients in this strata to obtain reliable estimates. These patients need to be similar enough with respect to standard health care received, including the propensity to receive a beta-blocker treatment. The fourth choice is similar to the third, except that we further stratified by age and treatment group in the strata of patients included in 2015-2018 (C4). Here the rationale is to both adjust for the increasing skepticism and increase efficiency.

Note that choices C3 and C4 closely relate to the work of Rotnitzky et al. (2007) and Lok et al. (2018), who modeled two competing risks of censoring, to rely on weaker assumptions when estimating the IPCW weights. In our context, two competing risks of censoring exist: one due to study end, the other due to emigration. With choices C3 and C4, we approximately modeled these two competing risks of censoring, by stratifying on the inclusion year (before or after 2015). We therefore believe that choices C3 and C4 are more suitable than C1 and C2. The choice C4 makes less assumptions than C3, hence we believe that it leads to the most trustworthy results.

4.2 Logistic models for the outcome and propensity models

All baseline variables described above were included in the logistic model for the 33-months risk of CV death. To capture potentially different treatment effect among patients of different groups of age, year of inclusion, procedure during MI hospital admission and sex, interaction terms between each of these variables and the treatment variable were added. The same model choices were used in Holt et al. (2021), up to minor differences in the definition of some groups. For the double robust estimators, modeling choices are also needed for the probably of initiating a beta-blocker treatment. We used a logistic model and all baseline variables were also included, without any interaction term.

4.3 Compared methods

We present results obtained for all estimators introduced in Sect. 3. For comparison purpose, we also present results obtained using three other approaches to estimate the risk \(F_1(t,a)\), for \(a=0,1\), and the risk difference \(F_1(t,1)-F_1(t,0)\). The first method is to estimate the risk in each treatment group using the Aalen-Johanssen estimator, as an unadjusted (crude) analysis. Note that this is algebraically equivalent to using a logistic model that only includes the treatment variable, when fitting the model using a Kaplan-Meier estimator stratified by treatment groups to compute the weights (Scheike et al. 2008; Geskus 2016). The second approach consists in using G-computation based on Cox regression for each cause-specific hazard, instead of logistic regression, as detailed in Ozenne et al. (2020). Here we used the same variables and interaction terms as in the logistic model, to model each cause specific hazard. Finally, the third approach uses inverse probability of treatment and censoring weighting, to estimate each risk \(F_1(t,a)\) as

By contrast to the approaches described in Sect. 3, the first of these approaches does not account for confounding and it is expected to lead to biased results. The second adjusts for confounding, but it relies on a correct model specification of the cause-specific hazards of the two competing risks, at any time within the 33 month of follow-up. However, it does not rely on any specific modeling assumption for the censoring distribution or the propensity score. Only the assumption of independent censoring is needed, which allows the censoring time to arbitrarily depend on the treatment variable A as well as baseline variables \({\varvec{L}}\). The third relies on a correct model specification of the propensity score and of the censoring distribution, but it does not rely on any modeling assumption about the conditional risk \(F_1(t,A,{\varvec{L}})\).

Note that for the initial (main) analysis of these data, whose results are detailed in Holt et al. (2021), we chose to use G-computation via logistic regression. We will now briefly explained why we preferred this approach to alternatives, in this specific context. Despite the large sample size (\(n=24,770 + 5,407\)), the number of observed cardiovascular deaths was not very large (\(333+72\)) and the prevalence of some of the many important baseline characteristic we needed to adjust for were rare (see Table 1 in Holt et al. (2021)). This seemed to rule out the use of very flexible modeling approaches, which generally need more data in each subgroup. Therefore, parametric modeling assumptions seemed needed and important to pre-specify carefully, using background knowledge, to obtain reliable results. We found the background clinical knowledge easier to translate into reliable parametric assumptions for the 33-months absolute risk of CV death than for the two cause-specific hazards at any time within the follow-up. This was especially the case when considering parametric assumptions related to the inclusion of interaction terms, which can be important. Consequently, we chose to pre-specify a G-computation approach based on logistic regression of the absolute risk over an alternative based on regressions of the two cause-specific hazards. That is, we chose the approach presented in Sect. 3.1 over that presented in Ozenne et al. (2020). Pre-specifying a reliable model for the likelihood of initiating a Beta-Blocker treatment appeared also rather challenging in our context, using background knowledge. That is why we pre-specified the choice of an analysis that did not use propensity scores either.

Finally, also for similar reasons, it would have been challenging to use the augmented estimators of Sect. 2.7 in a meaningful way. Therefore, we did not pursue the use of these interesting estimators in this application. All results building on logistic regressions that we present are based on estimators solving the estimating Eqs. (2) or (3).

4.4 Results

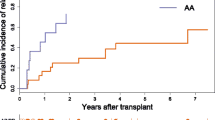

Figure 2 displays all results obtained from the different methods, with censoring modeling choice C4, which we believe to be the best. Standard errors (se) were computed by Bootstrap (400 samples) and 95% confidence intervals (CI) for the risk differences were computed as CI=estimate ± \(1.96\cdot \)se. Similar figures for choices C1, C2 and C3 are provided in the supplementary material.

Estimates risks per treatment group and risk differences, with 95% confidence intervals. Here the IPCW weights are based on choice C4. The labels on the Y-axis indicate the method used to obtained the results: “Crude”: unadjusted (crude) analysis using a stratified Aalen-Johanssen estimator; “Cox G-computation”: G-computation based on Cox regression for each cause-specific hazard; “IPTCW”:inverse probability of censoring and treatment weighting (\({\widehat{F_1}}^{iptcw}(t,a)\)); “G-computation”: G-computation based on logistic regression for the 33-month risk of CV death (\({\widehat{F_1}}^g(t,a)\)); “DR”: DR estimator of Sect. 3.2 (\({\widehat{F_1}}^{dr}(t,a)\)); “DR-reg: 1 cov” and “DR-reg: 2 cov”: regression based DR estimators of Sect. 3.3 with one, respectively two, clever covariates; “tmle: 1 cov” and “tmle: 2 cov”: tMLE-like estimators of Sect. 3.4 with one, respectively two, clever covariates

Figure 2 does not show large differences between the results obtained when using OIPCW or IPCW-GLM. The results of the crude analysis is surprisingly close to the results obtained with the other methods that adjust for confounding. The reason is probably that the distribution of the baseline covariates \({\varvec{L}}\) is actually quite similar in the two treatment groups, which is what Table 1 in Holt et al. (2021) suggests. That might also partly explain why all the other results are also quite similar. We note that the narrowest confidence interval is obtained when using G-computation based on Cox regressions for the two cause-specific hazards. This is not surprising, since this approach makes stronger parametric assumptions. However, the difference is modest and we believe that it is has a negligible impact on the clinical interpretation of the results.

Figure 3 provides an overview of the sensitivity of the results to choices C1-C4, when using the G-computation and tMLE-like estimators of Sects. 3.1 and 3.4, using either IPCW-GLM or OIPCW. Unlike in Fig. 2, here we show the results for only one of the five double robust estimators. This is because the results are quite similar for the others (see Figures in the supplementary material). Interestingly, Fig. 3 and choices C1-C4 illustrate that incorrectly modeling some truly existing associations between censoring and baseline covariates can lead to more bias than to not model them. Indeed, if we consider the choice C4 as the most rational in our context, we see that choice C2 leads to results further away from those of choice C4 than choice C1. This is the case although choice C2 accounts for the strong dependence between censoring and treatment in a flexible way, whereas choice C1 does not account for any dependence between censoring and baseline covariates. Figure 3 also illustrates that using OIPCW or IPCW-GLM can give substantially different results. For instance, the difference is substantial with censoring adjustment C2.

5 Efficient and robust analysis of RCT

When the data come from a randomized trial, one can estimate \(F_1(t,a)\), for \(a=0\) and 1, directly by an unadjusted logistic regression. This is because unlike in observational studies, A is independent of \({\varvec{L}}\) in RCTs, because of randomization. Hence, \(F_1(t,a)=P(D(t)=1 \vert A=a)\).

Note that also with censored data, when using a stratified Kaplan-Meier estimator, stratified on treatment A, to compute the IPCW weights, fitting the unadjusted logistic regression model is algebraically equivalent to a widespread approach. Indeed, it reduces to using a stratified Kaplan-Meier estimator, with survival data, or a stratified Aalen-Johanssen estimator, with competing risks data, to estimate \(F_1(t,a)\), for \(a=0,1\) (Geskus 2016).

However, instead of using an unadjusted analysis, one can gain efficiency by leveraging the information contained in the baseline covariates \({\varvec{L}}\). See, e.g., Robinson and Jewell (1991); Zhang et al. (2008). The simplest way to do that is to add the baseline covariates \({\varvec{L}}\) into the model, in addition to A, to model and estimate \(F_1(t,a,{\varvec{l}})\). Interestingly, even if this is done using an incorrect model, that is, even if \(Q(t,{\varvec{l}},a) \ne F_1(t,{\varvec{l}},a)\), the type-I error rate for testing a treatment effect will be controlled, as long as the Wald-type test statistic is computed with a robust standard error. Furthermore, the g-computation estimator \({\widehat{F_1}}^g(t,a)\) defined in (8) will still be consistent under arbitrary model misspecification (Moore and van der Laan 2009; Rosenblum and Steingrimsson 2016). Consequently, adjusting for baseline covariates \({\varvec{L}}\) when analyzing RCTs is recommended by regulatory agencies such as the Food and Drug Administration (FDA 2021) and the European Medicine Agency (EMA 2015). The latter states that “Variables known a priori to be strongly, or at least moderately, associated with the primary outcome and/or variables for which there is a strong clinical rationale for such an association should also be considered as covariates in the primary analysis.”.

In the next section, we start by briefly reviewing these robustness results, in the binary uncensored case. We then provide related results for the right censored case, when an additional censoring adjustment is made based on our IPCW weighted logistic regression models.

To the best of our knowledge, previous work on similar robustness properties with right-censored data focused on hazards modeling, not on risk modeling as we do below (Struthers and Kalbfleisch 1986; Lin and Wei 1989; DiRienzo and Lagakos 2001; Lu and Tsiatis 2008; Kim 2013; Vansteelandt et al. 2014).

5.1 Robustness for binary uncensored data

We will assume the use a simple logistic model, where \({\varvec{X}}=(1, A, {\varvec{L}})\). That is, the risk of \(D(t)=1\) given \({\varvec{x}}=(1, a, {\varvec{l}})\) being modeled by

Further, we assume that, as expected by design, randomization makes the treatment variable A independent of the baseline covariates \({\varvec{L}}\). With binary uncensored data and when fitting the above model (14) via usual maximum likelihood, i.e. by solving the estimating Eq. (1), then Rosenblum and Steingrimsson (2016) established the interesting robustness properties that follow. First, \(\beta _A\) converges in probability towards 0 if and only if the null hypothesis of no treatment effect, \(H_0 : F_1(t,0) = F_1(t,1)\), holds, under any model misspecification. Furthermore, the G-computation estimator \({\widehat{F_1}}^g(t,a)= (1/n)\sum _{i=1}^n Q(t,a,{\varvec{L}}_i,{\widehat{\varvec{\beta }}})\) converges in probability towards \(F_1(t,a)\), for \(a=0,1\), also under any model misspecification. The estimated treatment effects \({\widehat{F_1}}^g(t,1)-\widehat{F_1}^g(t,0)\) and \({\widehat{F_1}}^g(t,1)/\widehat{F_1}^g(t,0)\) are therefore also consistent.

As pointed out by Rosenblum and Steingrimsson (2016), the robustness property of the G-computation estimator is very useful in practice. It provides a simple and transparent approach to leverage the information contained in baseline variables which i) does not rely on any modeling assumptions and ii) estimates the same average treatment effect as that estimated by an unadjusted analysis. The use of this G-computation is therefore now promoted by the most recent FDA guidelines (FDA 2021).

For computing confidence intervals and p-values, Rosenblum and Steingrimsson (2016) note that one can compute robust standard errors via nonparametric bootstrap and exploit the asymptotic normal distribution of the estimators. Finally, note that Rosenblum and Steingrimsson (2016) also showed that the two hypothesis tests for \(H_0\), defined via the G-computation estimator \({\widehat{F_1}}^g(t,1)-\widehat{F_1}^g(t,0)\) and via the maximum likelihood estimator \({\widehat{\beta }}_A\) of \(\beta _A\), do not only both control the type-I error rate, but they are also equally powerful, asymptotically, under any model misspecification.

5.2 Results for right-censored data

With right-censored data, the robustness properties still hold under additional conditions, but not necessarily without. This the topic of the following theorems, for which we sketch the proofs in Appendix B.

Theorem 2

For both approaches, OPICW and IPCW-GLM, when the censoring adjustment model is correct, then the G-computation estimator \({\widehat{F_1}}^g(t,a)\) is consistent, for \(a=0,1\). Further, \({\widehat{\beta }}_A\) converges to 0 if and only if the null hypothesis of no treatment effect, \(H_0 : F_1(t,0) = F_1(t,1)\), is true. The two results hold under arbitrary misspecification of the working model \(Q(t,a,{\varvec{l}})\), in randomized trials where A is independent of \({\varvec{L}}\).

Consequently, hypothesis testing for a treatment effect based on either the average risk difference estimator \({\widehat{F_1}}^g(t,1)-\widehat{F_1}^g(t,0)\) or \({\widehat{\beta }}_A\) will control the type-I error, asymptotically, as long as an appropriate (robust) standard error is used in the Wald-type test statistic. However, the estimated value \({\widehat{\beta }}_a\) will usually not have a meaningful interpretation under model misspecification, unlike \({\widehat{F_1}}^g(t,1)-\widehat{F_1}^g(t,0)\). Indeed, if \(F_1(t,a,{\varvec{l}}) \ne Q(t,a,{\varvec{l}})\), the limit of \({\widehat{\beta }}_a\) does no longer have a simple interpretation as the logarithm of a conditional odds ratio.

For many clinical trials, the censoring distribution is not expected to depend on \((A,{\varvec{L}})\). This is for instance the case when only administrative censoring occurs. Consequently, using a Kaplan-Meier estimator, possibly stratified by treatment group, should be sufficient to ensure a correct censoring adjustment and thus guarantee the above robustness properties.

Without a correct censoring model, some of the robustness properties still hold under specific assumptions, when using a stratified Kaplan-Meier estimator to compute the IPCW weights. The next theorem identifies two such assumptions. The first is that the hazard of the censoring time C has an additive structure. The second is that there is no conditional treatment effect given baseline covariates, neither on the risk of the event of interest nor on the competing risk. Without these assumptions, it can be seen from the proof of the theorem (or simple simulations) that the robustness properties will generally no longer hold.

Theorem 3

Assume that i) \(\lambda _c(t,A,{\varvec{L}})= \lambda _0(t) + A \lambda _1(t) + \lambda _2(t,{\varvec{L}})\), where \(\lambda _0(t)\) and \(\lambda _1(t)\) do not depend on \((A,{\varvec{L}})\) and \(\lambda _2(t,{\varvec{L}})\) does not depend on A, and ii) \(F_k(s,0,{\varvec{l}})=F_k(s,1,{\varvec{l}})\) for all \({\varvec{l}}\), \(s \le t\) and \(k=1,2\), where \(F_k(s,a,{\varvec{l}})= P(T \le s, \eta =k \vert A=a, {\varvec{L}}={\varvec{l}})\). Further, assume that a stratified Kaplan-Meier estimator is used to compute the IPCW weights, stratified on A. Then, for both approaches, OPICW and IPCW-GLM, both the G-computation estimator of the risk difference, \({\widehat{F_1}}^g(t,1)-\widehat{F_1}^g(t,0)\), and \({\widehat{\beta }|}_A\), converge to 0. The results hold under arbitrary misspecification of the working model \(Q(t,a,{\varvec{l}})\), in randomized trials where A is independent of \({\varvec{L}}\).