Abstract

We provide explicit formulas for the Levi-Civita connection and Riemannian Hessian for a Riemannian manifold that is a quotient of a manifold embedded in an inner product space with a non-constant metric function. Together with a classical formula for projection, this allows us to evaluate the Riemannian gradient and Hessian for several families of metrics on classical manifolds, including a family of metrics on Stiefel manifolds connecting both the constant and canonical ambient metrics with closed-form geodesics. Using these formulas, we derive Riemannian optimization frameworks on quotients of Stiefel manifolds, including flag manifolds, and a new family of complete quotient metrics on the manifold of positive-semidefinite matrices of fixed rank, considered as a quotient of a product of Stiefel and positive-definite matrix manifolds with affine-invariant metrics. The method is procedural, and in many instances, the Riemannian gradient and Hessian formulas could be derived by symbolic calculus. The method extends the list of potential metrics that could be used in manifold optimization and machine learning.

1 Introduction

In the foundational paper [9], the authors introduced Riemannian optimization frameworks for Stiefel and Grassmann manifolds, where the Riemannian gradient and Hessian are given globally using a projection operator and a Christoffel function, a bilinear operator on the matrix space containing the Stiefel manifolds. The introduction of these global operators allows efficient computation when the manifolds are defined by constraints, or as quotients of constrained manifolds, as opposed to computation using local charts in an abstract manifold. The frameworks helped popularize the field of Riemannian optimization. Since then, similar frameworks have been introduced for many manifolds, with several software packages [8, 16, 26] implementing aspects of these frameworks. The Riemannian Hessian calculation is in general difficult. It could be computed using the calculus of variations. "Doing so is tedious" [9], so the detailed calculations for Stiefel manifolds were not included in that paper; a generalization and full derivation recently appeared in [11].

In this article, we attempt to address the following problem: given a manifold described by equality constraints in a vector space, or a quotient of such a manifold, and a metric also defined by an analytic formula, compute the Riemannian gradient and Hessian for a function on the manifold. Recall that the Riemannian Hessian is computed using the Levi-Civita connection. By computing, we mean a procedural, not necessarily closed-form, approach. We are looking for a sequence of equations, operators, and expressions to solve and evaluate, rather than starting from a distance-minimizing problem. We believe that the approach we take, using a classical formula for projections together with an adaptation of the Christoffel symbol calculation to the ambient space, addresses the problem effectively for many manifolds encountered in applications. This method provides a very explicit and transparent procedure that we hope will be helpful to researchers in the field. Its main feature is that it can handle manifolds with non-constant embedded metrics, such as Stiefel manifolds with the canonical metric, or the manifold of positive-definite matrices with the affine-invariant metrics. The method allows us to compute the Riemannian gradient and Hessian for several new families of metrics on manifolds often encountered in applications, including optimization and machine learning. While the effect of changing metrics on first-order optimization methods has been considered previously, we hope this will lead to future work on adapting metrics to second-order methods. The approach is also suitable when the gradient formula is not in closed form. We also provide several useful identities known in special cases.

As an application of the method developed here, we give a short derivation of the gradient and Hessian of a family of metrics studied recently in [11], extending both the canonical and embedded metrics on the Stiefel manifolds with a closed-form geodesic formula. (We were unaware of [11], which was completed before we started this project in 2020.) We derive the Riemannian framework for the induced metrics on certain quotients of Stiefel manifolds, including flag manifolds. We also give complete metrics on the fixed-rank positive-semidefinite matrix manifolds, with efficiently computable gradient, Hessian, and geodesics.

When the metric on a submanifold of a vector space (called the ambient space) is induced from the Euclidean metric on the vector space, the Riemannian gradient and Hessian correspond to the projected gradient and the projected Hessian in the literature of constrained optimization [9, section 4.9], [10]. When the metric is given by an analytic expression (which is a metric when restricted to the constrained set, but not necessarily on the whole vector space), using [22, Lemma 4.3], we can compute the Riemannian Hessian by projecting the Riemannian Hessian on the ambient space, and [22, Lemma 7.45] gives us a similar computation for a quotient manifold. Representing the metric as an operator defined on the quotient/constrained manifold, we directly derive formulas for the Levi-Civita connection and the Riemannian Hessian that depend on the operator values on the manifold alone, giving us a procedural and simple approach, as explained. The projection approach has also been advocated in [1]. However, its usage depends on an extension with a computable Levi-Civita connection in the ambient space. This last step seems to restrict its use; a tricky extension is sometimes used, for example, in the case of the Grassmann manifold in [1]. We address this issue by working directly with the metric expression, giving an explicit formula for the connection in Eq. (3.10). This formula generalizes the connection formula using the Weingarten map in [2] for constant metrics.

Our framework works for both real and complex cases. The paper [15] gave the original treatment of the Hessian for the unitary/complex case. The case of Stiefel manifolds was studied in [9, 11]; we reprove the results using our framework and provide complete formulas for the Hessian. Optimization on flag manifolds was studied in [21, 28], but a second-order method had not been given in an efficient form: an expression for the Riemannian Hessian vector product was not given or implemented. We provide second-order methods for the flag manifolds with the full family of metrics of [11], including efficient formulas for the Riemannian Hessian.

The affine-invariant metric on positive-definite matrices has also been widely studied, for example in [12, 23, 24, 25]. There are numerous metrics on the fixed-rank positive-semidefinite (PSD) manifolds, among which we mention [7], which motivated our approach. Although working with the same product of Stiefel and positive-definite manifolds with the affine-invariant metric, that paper did not use the Riemannian submersion metric on the quotient and focused on first-order methods. We compute the Levi-Civita connection for second-order methods. In [13, 27], two different families of metrics on PSD manifolds are studied. They both require solving Lyapunov equations but have different behaviors on the positive-definite part. Articles [15, 17] discuss the effect of adapting metrics to optimization problems ([17] adapts ambient metrics to the objective function using first-order methods).

In the next section, we provide some background and summarize notations. In Sect. 3, we formulate and prove the main theoretical results of the paper. We then identify the adjoints of common operators on matrix spaces. We apply the theory developed to the manifolds discussed above. We then discuss numerical results and implementation. We conclude with a discussion of future directions.

2 Preliminaries

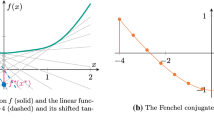

First-order approximation of a function f on \({\mathbb {R}}^n\) relies on the computation of the gradient and the second-order approximation relies on the Hessian matrix or Hessian-vector product. When a function f is defined on a Riemannian manifold \({\mathcal {M}}\), the relevant quantities are the Riemannian gradient, which provides a first-order approximation for f, and Riemannian Hessian, providing the second-order term.

When a manifold \({\mathcal {M}}\) is embedded in an inner product space \({\mathcal {E}}\) with the inner product denoted by \(\langle \cdot ,\cdot \rangle _{{\mathcal {E}}}\), if we have a function \({{\textsf{g}}}\) from \({\mathcal {M}}\) with values in the set of positive-definite operators operating on \({\mathcal {E}}\), we can define an inner product of two vectors \(\omega _1, \omega _2\in {\mathcal {E}}\) by \(\langle \omega _1, {{\textsf{g}}}(Y)\omega _2\rangle _{{\mathcal {E}}}\) for \(Y\in {\mathcal {M}}\). This induces an inner product on each tangent space \(T_Y{\mathcal {M}}\) and hence a Riemannian metric on \({\mathcal {M}}\), assuming sufficient smoothness. In this setup, if f is extended to a function \(\hat{f}\) on an open neighborhood of \({\mathcal {M}}\subset {\mathcal {E}}\), then the Riemannian gradient relates to the gradient of \(\hat{f}\) through a projection to \(T_Y{\mathcal {M}}\). In the theory of generalized least squares (GLS) [4], it is well known that a projection to the nullspace of a full-rank matrix J in an inner product space equipped with a metric g (also represented by a matrix) is given by the formula \(I_{{\mathcal {E}}}-g^{-1}J^{{{\textsf{T}}}}(Jg^{-1}J^{{{\textsf{T}}}})^{-1}J\) (\(I_{{\mathcal {E}}}\) is the identity matrix/operator of \({\mathcal {E}}\)). If the tangent space is the nullspace of an operator \({{\,\textrm{J}\,}}\), and an operator \({{\textsf{g}}}\) is used to describe the metric instead of matrices J and g, we have a similar formula where the transposed matrix \(J^{{{\textsf{T}}}}\) is replaced by the adjoint operator \({{\,\textrm{J}\,}}^{{\mathfrak {t}}}\). Here, \({{\,\textrm{J}\,}}\) is the Jacobian of a full-rank equality constraint. This projection formula is not often used in the literature; the projection is usually derived directly by minimizing the distance to the tangent space. 
It turns out that when \({{\,\textrm{J}\,}}\) is given by a matrix equation, \({{\,\textrm{J}\,}}^{{\mathfrak {t}}}\) is simple to compute. For manifolds common in applications, \({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}\) can often be inverted efficiently. Thus, this will be our main approach to computing the Riemannian gradient.
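
The matrix form of the GLS projection can be checked numerically. The following is a minimal sketch, assuming a randomly generated full-rank `J` and a random positive-definite `g` (both are illustrative stand-ins, not objects from the paper). It compares the closed-form projection with the solution of the equivalent distance-minimizing problem, solved here through its KKT system.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k = 6, 2                       # ambient dimension, number of constraints

J = rng.standard_normal((k, n))   # full-rank constraint matrix (rank k almost surely)
B = rng.standard_normal((n, n))
g = B @ B.T + n * np.eye(n)       # symmetric positive-definite metric matrix


def gls_projection(J, g):
    """Return I - g^{-1} J^T (J g^{-1} J^T)^{-1} J as a dense matrix."""
    g_inv_Jt = np.linalg.solve(g, J.T)           # g^{-1} J^T
    middle = np.linalg.solve(J @ g_inv_Jt, J)    # (J g^{-1} J^T)^{-1} J
    return np.eye(J.shape[1]) - g_inv_Jt @ middle


P = gls_projection(J, g)
v = rng.standard_normal(n)

# Distance-minimizing formulation: minimize (w - v)^T g (w - v) subject to J w = 0.
# Its KKT system is [[g, J^T], [J, 0]] [w; lam] = [g v; 0].
K = np.block([[g, J.T], [J, np.zeros((k, k))]])
rhs = np.concatenate([g @ v, np.zeros(k)])
w_kkt = np.linalg.solve(K, rhs)[:n]
```

Both routes give the same vector. The closed form only requires solving a `k × k` system once `g` can be inverted cheaply, which is the situation the text describes for manifolds common in applications.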

The Levi-Civita connection of the manifold, which allows us to take covariant derivatives of the gradient, is used to compute the Riemannian Hessian. A vector field \(\xi \) in our context could be considered as a \({\mathcal {E}}\)-valued function from \({\mathcal {M}}\), such that \(\xi (Y)\in T_Y{\mathcal {M}}\) for all \(Y\in {\mathcal {M}}\). For two vector fields \(\xi , \eta \) on \({\mathcal {M}}\), the directional derivative \({\textrm{D}}_{\xi }\eta \) is an \({\mathcal {E}}\)-valued function but generally not a vector field (i.e. \(({\textrm{D}}_{\xi }\eta )(Y)\in T_Y{\mathcal {M}}\) may not hold). A covariant derivative [22] (or connection) associates a vector field \(\nabla _{\xi }\eta \) to two vector fields \(\xi , \eta \) on \({\mathcal {M}}\). The association is linear in \(\xi \), \({\mathbb {R}}\)-linear in \(\eta \) and satisfies the product rule

\[\nabla _{\xi }(f\eta ) = f\nabla _{\xi }\eta + ({\textrm{D}}_{\xi }f)\eta \]

for a function f on \({\mathcal {M}}\), where \({\textrm{D}}_{\xi }f\) denotes the Lie derivative of f (the directional derivative of f along direction \(\xi _x\) at each \(x\in {\mathcal {M}}\)). For a Riemannian metric \(\langle ,\rangle _R\) on \({\mathcal {M}}\), the Levi-Civita connection is the unique connection that is compatible with the metric, \({\textrm{D}}_{\xi }\langle \eta , \phi \rangle _R = \langle \nabla _{\xi } \eta ,\phi \rangle _R +\langle \eta , \nabla _{\xi }\phi \rangle _R\) (\(\phi \) is another vector field), and torsion-free, \(\nabla _{\xi }\eta - \nabla _{\eta }\xi = [\xi , \eta ]\). If a coordinate chart of \({\mathcal {M}}\) is identified with an open subset of \({\mathbb {R}}^n\) and \(\langle ,\rangle _R\) is given by a positive-definite operator \({{\textsf{g}}}_R\) (i.e. \(\langle \xi , \eta \rangle _R = \langle \xi ,{{\textsf{g}}}_R \eta \rangle _{{\mathbb {R}}^n}\)), then the Levi-Civita connection is given by

\[\nabla _{\xi }\eta = {\textrm{D}}_{\xi }\eta + \frac{1}{2}{{\textsf{g}}}_R^{-1}\left( ({\textrm{D}}_{\xi }{{\textsf{g}}}_R)\eta + ({\textrm{D}}_{\eta }{{\textsf{g}}}_R)\xi - {\mathcal {X}}(\xi , \eta )\right) \]

where \({\mathcal {X}}(\xi , \eta )\in {\mathbb {R}}^n\) (uniquely defined) satisfies \(\langle ({\textrm{D}}_{\phi }{{\textsf{g}}}_R)\xi , \eta \rangle _{{\mathbb {R}}^n} = \langle \phi , {\mathcal {X}}(\xi , \eta )\rangle _{{\mathbb {R}}^n}\) for all vector fields \(\phi \). The formula is valid for each coordinate chart, and it is often given in terms of Christoffel symbols in index notation ([22], Proposition 3.13). We will generalize this operator formula. The gradient and \({\mathcal {X}}\) are examples of index-raising, translating from a (multi)linear scalar function h to a (multi)linear vector-valued function of one less variable, that evaluates back to h using the inner product pairing.
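
As a small worked illustration (added here, not taken from the paper), consider the one-dimensional chart \((0, \infty )\subset {\mathbb {R}}\) with \({{\textsf{g}}}_R(x) = x^{-2}\), the scalar version of the affine-invariant metric. The computation assumes the standard chart expression \(\nabla _{\xi }\eta = {\textrm{D}}_{\xi }\eta + \frac{1}{2}{{\textsf{g}}}_R^{-1}(({\textrm{D}}_{\xi }{{\textsf{g}}}_R)\eta + ({\textrm{D}}_{\eta }{{\textsf{g}}}_R)\xi - {\mathcal {X}}(\xi , \eta ))\) of the Levi-Civita connection, with \({\mathcal {X}}\) obtained by index-raising as described above.

```latex
\begin{aligned}
({\textrm{D}}_{\xi }{\textsf{g}}_R)\eta &= -2x^{-3}\xi \eta , \qquad
({\textrm{D}}_{\eta }{\textsf{g}}_R)\xi = -2x^{-3}\xi \eta ,\\
\langle ({\textrm{D}}_{\phi }{\textsf{g}}_R)\xi , \eta \rangle _{{\mathbb {R}}} &= -2x^{-3}\phi \,\xi \eta
\;\Longrightarrow \; {\mathcal {X}}(\xi , \eta ) = -2x^{-3}\xi \eta ,\\
\nabla _{\xi }\eta &= {\textrm{D}}_{\xi }\eta + \tfrac{1}{2}x^{2}\left( -2x^{-3}\xi \eta \right)
= {\textrm{D}}_{\xi }\eta - \frac{\xi \eta }{x}.
\end{aligned}
```

The resulting geodesic equation \(\ddot{x} - \dot{x}^2/x = 0\) is solved by \(x(t) = x_0e^{at}\), which matches the familiar exponential geodesics of this metric on the positive reals.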

The Riemannian Hessian could be provided in two formats, as a bilinear form \({\textsf{rhess}}_f^{02}(\xi , \eta )\), returning a scalar function to every two vector fields \(\xi , \eta \) on the manifold, or a (Riemannian) Hessian vector product \({\textsf{rhess}}^{11}_f\xi \), an operator returning a vector field given a vector field input \(\xi \). In optimization, as we need to invert the Hessian in second-order methods, the Riemannian Hessian vector product form \({\textsf{rhess}}_f^{11}\) is more practical. However, \({\textsf{rhess}}^{02}_f\) is directly related to the Levi-Civita connection (see Eq. (3.12) below), and can be read from the geodesic equation: In [9], the authors showed the geodesic equation (for a Stiefel manifold) is given by \(\ddot{Y} + {\varGamma }(\dot{Y}, \dot{Y})=0\) where the Christoffel function \({\varGamma }\) (defined below) maps two vector fields to an ambient function and the bilinear form \({\textsf{rhess}}^{02}_f\) is \(\hat{f}_{YY}(\xi , \eta ) - \langle {\varGamma }(\xi , \eta ), \hat{f}_{Y}\rangle _{{\mathcal {E}}}\). Here, \(\hat{f}_{Y}\) and \(\hat{f}_{YY}\) are the ambient gradient and Hessian, see Sect. 3.
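
The relation between the bilinear Hessian and the Christoffel function can be sketched on the simplest example, the unit sphere with the embedded metric, where the geodesic equation \(\ddot{Y} + Y\langle \dot{Y}, \dot{Y}\rangle = 0\) gives \({\varGamma }(\xi , \eta ) = Y\langle \xi , \eta \rangle \). The cost function, the matrix `A`, and all function names below are illustrative assumptions. The check compares \(\hat{f}_{YY}(\xi , \xi ) - \langle {\varGamma }(\xi , \xi ), \hat{f}_{Y}\rangle \) with a finite-difference second derivative of f along a great circle.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 5
M = rng.standard_normal((n, n))
A = (M + M.T) / 2                      # symmetric matrix defining f(Y) = 0.5 * Y^T A Y


def f(Y):
    return 0.5 * Y @ A @ Y


def christoffel_sphere(Y, xi, eta):
    """Gamma(xi, eta) = Y <xi, eta> for the unit sphere with the embedded metric."""
    return Y * (xi @ eta)


def rhess02(Y, xi, eta):
    """Bilinear Hessian f_YY(xi, eta) - <Gamma(xi, eta), f_Y>."""
    f_Y = A @ Y                        # ambient gradient
    f_YY = xi @ A @ eta                # ambient Hessian bilinear form
    return f_YY - christoffel_sphere(Y, xi, eta) @ f_Y


def geodesic(Y, xi, t):
    """Great circle through Y with nonzero tangent initial velocity xi."""
    s = np.linalg.norm(xi)
    return np.cos(s * t) * Y + np.sin(s * t) * xi / s


Y = rng.standard_normal(n)
Y /= np.linalg.norm(Y)
xi = rng.standard_normal(n)
xi -= Y * (Y @ xi)                     # make xi tangent to the sphere at Y

h = 1e-4
second_derivative = (f(geodesic(Y, xi, h)) - 2 * f(Y) + f(geodesic(Y, xi, -h))) / h**2
hess_value = rhess02(Y, xi, xi)
```

Along a geodesic the second derivative of f equals the bilinear Hessian evaluated on the velocity, so the two numbers agree up to finite-difference error.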

In applications, we also work with quotient manifolds, an example of Riemannian submersion. Recall ([22], Definition 7.44) that a Riemannian submersion \(\pi :{\mathcal {M}}\rightarrow {\mathcal {B}}\) between two manifolds \({\mathcal {M}}\) and \({\mathcal {B}}\) is a smooth, onto mapping, such that the differential \(d\pi \) is onto at every point \(Y\in {\mathcal {M}}\), the fiber \(\pi ^{-1}(b), b\in {\mathcal {B}}\) is a Riemannian submanifold of \({\mathcal {M}}\), and \(d\pi \) preserves scalar products of vectors normal to fibers. An important example is the quotient space by a free and proper action of a group of isometries. At each point \(Y\in {\mathcal {M}}\), the tangent space of \(\pi ^{-1}(\pi Y)\) is called the vertical space, and its orthogonal complement with respect to the Riemannian metric is called the horizontal space. The collection of horizontal spaces \({\mathcal {H}}_Y\) (\(Y\in {\mathcal {M}}\)) of a submersion is a subbundle \({\mathcal {H}}\). The horizontal lift, identifying a tangent vector \(\xi \) at \(b=\pi (Y)\in {\mathcal {B}}\) with a horizontal tangent vector \(\xi _{{\mathcal {H}}}\) at Y, is a linear isometry between the tangent space \(T_b{\mathcal {B}}\) and \({\mathcal {H}}_Y\), the horizontal space at Y. The Riemannian framework for a quotient of embedded manifolds is studied through the horizontal lifts: the focus is on the horizontal bundle \({\mathcal {H}}\) instead of the tangent bundle \(T{\mathcal {M}}\).
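
A familiar instance of this setup, used here purely as an illustration, is the real Grassmann manifold viewed as the quotient of the Stiefel manifold by the right action \(Y\mapsto YQ\) of the orthogonal group, with the embedded (Frobenius) metric. In that case the vertical space at Y consists of the matrices \(YA\) with A antisymmetric, and the horizontal space is \(\{\eta : Y^{{\textsf{T}}}\eta = 0\}\). The sketch below, with hypothetical function names, checks orthogonality and the compatibility of horizontal lifts along a fiber.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p = 6, 2

Y, _ = np.linalg.qr(rng.standard_normal((n, p)))    # Stiefel point: Y^T Y = I_p


def horizontal_projection(Y, omega):
    """Project an ambient matrix onto the horizontal space {eta : Y^T eta = 0}."""
    return omega - Y @ (Y.T @ omega)


omega = rng.standard_normal((n, p))
eta_h = horizontal_projection(Y, omega)

S = rng.standard_normal((p, p))
vertical = Y @ (S - S.T)                  # tangent to the fiber {Y Q : Q orthogonal}

Q, _ = np.linalg.qr(rng.standard_normal((p, p)))    # an orthogonal p x p matrix
lift_at_YQ = horizontal_projection(Y @ Q, omega @ Q)
```

The last line reflects the fact that moving along the fiber from `Y` to `Y @ Q` transforms the horizontal lift by the same right multiplication, so computations can be carried out at any representative.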

The reader can consult [1, 9] for details of Riemannian optimization, including the basic algorithms once the Euclidean and Riemannian gradient and Hessian are computed. In essence, it has been recognized that many popular equation-solving and optimization algorithms on Euclidean spaces can be extended to a manifold framework ([9, 10]). Steepest descent on real vector spaces could be extended to manifolds using the Riemannian gradient defined above together with a retraction. Here, a retraction R is a sufficiently smooth map sending \(X\in {\mathcal {M}}, \eta \in T_X{\mathcal {M}}\) to \(R(X, \eta )\in {\mathcal {M}}\) for sufficiently small \(\eta \). Also, using the Riemannian Hessian, second-order optimization methods, for example, Trust-Region ([1]), could be extended to the manifold context. At the i-th iteration step, an optimization algorithm produces a tangent vector \(\eta _{i}\) at the manifold point \(Y_i\), which will produce the next iteration point \(Y_{i+1}=R(Y_i, \eta _i)\) ([3], chapter 4 of [1]). For manifolds considered in this article, computationally efficient retractions are available.
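
The iteration \(Y_{i+1}=R(Y_i, \eta _i)\) can be sketched for steepest descent on the unit sphere. This is a minimal illustration, assuming the cost \(f(Y) = \frac{1}{2}Y^{{\textsf{T}}}AY\), a fixed step size, and the normalization retraction; none of these choices are prescribed by the text.

```python
import numpy as np

A = np.diag([1.0, 2.0, 3.0, 4.0, 5.0])   # f(Y) = 0.5 * Y^T A Y; minimum value is 0.5


def rgrad(Y):
    """Riemannian gradient on the unit sphere: tangential part of the ambient gradient A Y."""
    G = A @ Y
    return G - Y * (Y @ G)


def retract(Y, eta):
    """Retraction R(Y, eta): step in the ambient space, then renormalize."""
    Z = Y + eta
    return Z / np.linalg.norm(Z)


rng = np.random.default_rng(3)
Y = rng.standard_normal(5)
Y /= np.linalg.norm(Y)

step = 0.2                               # fixed step size, chosen for this A
for _ in range(500):
    eta = -step * rgrad(Y)               # tangent vector eta_i produced by the algorithm
    Y = retract(Y, eta)                  # next iterate Y_{i+1} = R(Y_i, eta_i)

f_final = 0.5 * Y @ A @ Y
```

A second-order method would replace the line computing `eta` with the solution of a Newton or trust-region subproblem built from the Riemannian Hessian vector product, while the retraction step stays the same.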

2.1 Notations

We will attempt to state and prove statements for both the real and Hermitian cases at the same time when there are parallel results, as discussed in Sect. 4.1. The base field \({\mathbb {K}}\) will be \({\mathbb {R}}\) or \({\mathbb {C}}\). We use the notation \({\mathbb {K}}^{n\times m}\) to denote the space of matrices of size \(n\times m\) over \({\mathbb {K}}\). We consider both real and complex vector spaces as real vector spaces, and by \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}\) we denote the trace of a matrix in the real case or the real part of the trace in the complex case. A real matrix space is a real inner product space with the Frobenius inner product \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}ab^{{{\textsf{T}}}}\), while a complex matrix space becomes a real inner product space with inner product \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}ab^{{{\textsf{H}}}}\) (see Sect. 4.1). We will use the notation \({\mathfrak {t}}\) to denote the real adjoint \({{\textsf{T}}}\) for a real vector space, and Hermitian adjoint \({{\textsf{H}}}\) for a complex vector space, both for matrices and operators. We denote \({\textrm{sym}}_{{\mathfrak {t}}}X = \frac{1}{2}(X + X^{{\mathfrak {t}}})\), \({\textrm{skew}}_{{\mathfrak {t}}}X = \frac{1}{2}(X - X^{{\mathfrak {t}}})\). We denote by \({\textrm{Sym}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\) the space of \({\mathfrak {t}}\)-symmetric matrices \(X\in {\mathbb {K}}^{n\times n}\) with \(X^{{\mathfrak {t}}} = X\). The \({\mathfrak {t}}\)-antisymmetric space \({\textrm{Skew}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\) is defined similarly. Symbols \(\xi , \eta \) are often used to denote tangent vectors or vector fields, while \(\omega \) is used to denote a vector in the ambient space. The directional derivative in direction v is denoted by \({\textrm{D}}_{v}\); it applies to scalar, vector, or operator-valued functions. 
If \(\texttt{X}\) is a vector field and f is a function, the Lie derivatives will be written as \({\textrm{D}}_{\texttt{X}}f\). We also apply Lie derivatives on scalar or operator-valued functions when \(\texttt{X}\) is a vector field, and write \({\textrm{D}}_{\texttt{X}}{{\textsf{g}}}\) for example, where \({{\textsf{g}}}\) is a metric operator. Because the vector field \(\texttt{X}\) may be a matrix, we prefer the notation \({\textrm{D}}_{\texttt{X}}{{\textsf{g}}}\) to the usual Lie derivative notation \(\texttt{X}{{\textsf{g}}}\) which may be ambiguous. By \({\textrm{U}}_{{\mathbb {K}}, d}\) we denote the group of \({\mathbb {K}}^{d\times d}\) matrices U satisfying \(U^{{\mathfrak {t}}}U = I_d\) (called \({\mathfrak {t}}\)-orthogonal), thus \({\textrm{U}}_{{\mathbb {K}}, d}\) is the real orthogonal group \({\textrm{O}}(d)\) when \({\mathbb {K}}={\mathbb {R}}\) and unitary group \({\textrm{U}}(d)\) when \({\mathbb {K}}={\mathbb {C}}\).
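
The notation can be mirrored in a few helper functions that treat the real and complex cases uniformly. This is a small sketch with hypothetical names (`adj`, `sym`, `skew`, `inner`), where `adj` plays the role of \({\mathfrak {t}}\) and `inner` the role of \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}ab^{{\mathfrak {t}}}\).

```python
import numpy as np


def adj(X):
    """The adjoint X^t: transpose for real input, conjugate transpose for complex input."""
    return X.conj().T


def sym(X):
    """t-symmetric part (X + X^t) / 2."""
    return 0.5 * (X + adj(X))


def skew(X):
    """t-antisymmetric part (X - X^t) / 2."""
    return 0.5 * (X - adj(X))


def inner(a, b):
    """Real inner product Tr_R(a b^t) on K^{n x m}."""
    return np.trace(a @ adj(b)).real


rng = np.random.default_rng(4)
X = rng.standard_normal((3, 3)) + 1j * rng.standard_normal((3, 3))
Q, _ = np.linalg.qr(X)        # a unitary matrix, i.e. a t-orthogonal matrix for K = C
```

With this real inner product the symmetric and antisymmetric parts of a matrix are orthogonal in both the real and the complex case, which is what makes the two cases run in parallel.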

In our approach, a subspace \({\mathcal {H}}_Y\) of the tangent space at a point Y on a manifold \({\mathcal {M}}\) is defined as either the nullspace of an operator \({{\,\textrm{J}\,}}(Y)\), or the range of an operator \({\textrm{N}}(Y)\), both are operator-valued functions on \({\mathcal {M}}\). Since we most often work with one manifold point Y at a time, we sometimes drop the symbol Y to make the expressions less lengthy. Other operator-valued functions defined in this paper include the ambient metric \({{\textsf{g}}}\), the projection \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}\) to \({\mathcal {H}}\), the Christoffel metric term \({\textrm{K}}\), and their directional derivatives. We also use the symbols \(\hat{f}_{Y}\) and \(\hat{f}_{YY}\) to denote the ambient gradient and Hessian (Y is the manifold variable). We summarize below symbols and concepts related to the main ideas in the paper, with the Stiefel case as an example (details explained in sections below).

| Symbol | Concept |
|---|---|
| \({\mathcal {E}}\) | Ambient space, \({\mathcal {M}}\) is embedded in \({\mathcal {E}}\) (e.g. \({\mathbb {K}}^{n\times p}\)). |
| \({\mathcal {E}}_{{{\,\textrm{J}\,}}}, {\mathcal {E}}_{{\textrm{N}}}\) | Inner product spaces, range of \({{\,\textrm{J}\,}}(Y)\) and domain of \({\textrm{N}}(Y)\) below. |
| \({\mathcal {H}}\) | A subbundle of \(T{\mathcal {M}}\). Either \(T{\mathcal {M}}\) or a horizontal bundle in practice. |
| \({{\,\textrm{J}\,}}(Y)\) | Operator from \({\mathcal {E}}\) onto \({\mathcal {E}}_{{{\,\textrm{J}\,}}}\), \({{\,\textrm{Null}\,}}({{\,\textrm{J}\,}}(Y))={\mathcal {H}}_Y\subset T_Y{\mathcal {M}}\) (e.g. \(Y^{{\mathfrak {t}}}\omega + \omega ^{{\mathfrak {t}}}Y\)). |
| \({\textrm{N}}(Y)\) | Injective operator from \({\mathcal {E}}_{{\textrm{N}}}\) to \({\mathcal {E}}\) onto \({\mathcal {H}}_Y\subset T_Y{\mathcal {M}}\) (e.g. \({\textrm{N}}(A, B) = Y A + Y_{\perp } B\)). |
| \({{\,\textrm{xtrace}\,}}\) | Index-raising operator for the trace (Frobenius) inner product. |
| \({{\textsf{g}}}(Y)\) | Metric given as a self-adjoint operator on \({\mathcal {E}}\) (e.g. \((\alpha _1YY^{{\mathfrak {t}}} + \alpha _0 Y_{\perp }Y_{\perp }^{{\mathfrak {t}}})\eta \)). |
| \({\varPi }_{{{\textsf{g}}}}, {\varPi }_{{\mathcal {H}},{{\textsf{g}}}}\) | Projection to \({\mathcal {H}}\subset T{\mathcal {M}}\) in Proposition 3.2. |
| \({\textrm{K}}(\xi , \eta )\) | Christoffel metric term \(\frac{1}{2}(({\textrm{D}}_{\xi }{{\textsf{g}}})\eta + ({\textrm{D}}_{\eta }{{\textsf{g}}})\xi -{{\,\textrm{xtrace}\,}}(\langle ({\textrm{D}}_\phi {{\textsf{g}}})\xi , \eta \rangle _{{\mathcal {E}}}, \phi ))\). |
| \({\varGamma }_{{\mathcal {H}}}(\xi , \eta )\) | Christoffel function \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}{{\textsf{g}}}^{-1}{\textrm{K}}(\xi , \eta )-({\textrm{D}}_{\xi }{\varPi }_{{\mathcal {H}}, {{\textsf{g}}}})\eta \). |
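
The Stiefel entries of the table can be assembled into a small numerical sketch for the real case. It assumes \({\mathcal {E}}_{{{\,\textrm{J}\,}}}\) is the space of symmetric \(p\times p\) matrices, takes \(Y_{\perp }Y_{\perp }^{{\textsf{T}}} = I - YY^{{\textsf{T}}}\), and uses the projection formula \(I_{{\mathcal {E}}}-{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}})^{-1}{{\,\textrm{J}\,}}\) from Sect. 2. The parameter values, the random data, and all function names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(5)
n, p = 7, 3
alpha0, alpha1 = 1.0, 0.5    # illustrative values; (1, 1/2) gives the canonical metric

Y, _ = np.linalg.qr(rng.standard_normal((n, p)))    # Stiefel point: Y^T Y = I_p


def g(omega):
    """Metric operator: alpha1 on the range of Y, alpha0 on its orthogonal complement."""
    YYt_omega = Y @ (Y.T @ omega)
    return alpha0 * (omega - YYt_omega) + alpha1 * YYt_omega


def g_inv(omega):
    YYt_omega = Y @ (Y.T @ omega)
    return (omega - YYt_omega) / alpha0 + YYt_omega / alpha1


def J(omega):
    """Constraint Jacobian; tangent vectors at Y satisfy J(omega) = 0."""
    return Y.T @ omega + omega.T @ Y


def J_adj(a):
    """Adjoint of J on symmetric p x p matrices under the Frobenius inner product."""
    return 2 * Y @ a


def project(omega):
    """omega - g^{-1} J^t (J g^{-1} J^t)^{-1} J omega, using J g^{-1} J^t a = (4 / alpha1) a."""
    a = (alpha1 / 4) * J(omega)
    return omega - g_inv(J_adj(a))


def inner(a, b):
    return np.sum(a * b)


omega1 = rng.standard_normal((n, p))
omega2 = rng.standard_normal((n, p))
xi = project(omega1)                     # a tangent vector at Y

S = rng.standard_normal((p, p))
a_sym = S + S.T                          # an element of E_J

egrad = rng.standard_normal((n, p))      # stand-in for an ambient gradient
rgrad = project(g_inv(egrad))            # projection applied to g^{-1} egrad
```

In this example \({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}\) is a multiple of the identity, so the inversion is trivial and the projection reduces to \(\omega - Y{\textrm{sym}}_{{\mathfrak {t}}}(Y^{{\mathfrak {t}}}\omega )\) regardless of the parameters.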

3 Ambient Space and Optimization on Riemannian Manifolds

If \(\hat{f}\) is a scalar function on an open subset \({\mathcal {U}}\) of a Euclidean space \({\mathcal {E}}\), its gradient \({\textsf{grad}}\hat{f}\) satisfies \(\langle {\hat{\eta }}, {\textsf{grad}}\hat{f}\rangle _{{\mathcal {E}}} = {\textrm{D}}_{{\hat{\eta }}} \hat{f}\) for all vector fields \({\hat{\eta }}\) on \({\mathcal {U}}\), where \({\textrm{D}}_{{\hat{\eta }}} \hat{f}\) is the Lie derivative of \(\hat{f}\) with respect to \({\hat{\eta }}\). As is well known [1, 9], the Riemannian gradient and Hessian product of a function f on a submanifold \({\mathcal {M}}\subset {\mathcal {E}}\) could be computed from the Euclidean gradient and Hessian, which are evaluated by extending f to a function \(\hat{f}\) on a region of \({\mathcal {E}}\) near \({\mathcal {M}}\). The process is independent of the extension \(\hat{f}\).

Definition 3.1

We call an inner product (Euclidean) space \(({\mathcal {E}}, \langle ,\rangle _{{\mathcal {E}}})\) an embedded ambient space of a Riemannian manifold \({\mathcal {M}}\) if there is a differentiable (not necessarily Riemannian) embedding \({\mathcal {M}}\subset {\mathcal {E}}\).

Let f be a function on \({\mathcal {M}}\) and \(\hat{f}\) be an extension of f to an open neighborhood of \({\mathcal {M}}\) in \({\mathcal {E}}\). We call \({\textsf{grad}}\hat{f}\) an ambient gradient of f. It is a vector-valued function from \({\mathcal {M}}\) to \({\mathcal {E}}\) such that for all vector fields \(\eta \) on \({\mathcal {M}}\)

\[\langle \eta (Y), {\textsf{grad}}\hat{f}(Y)\rangle _{{\mathcal {E}}} = ({\textrm{D}}_{\eta (Y)} f)(Y)\quad \text {for all } Y\in {\mathcal {M}}, \tag{3.1}\]

or equivalently \(\langle \eta , {\textsf{grad}}\hat{f}\rangle _{{\mathcal {E}}} = {\textrm{D}}_\eta f\). Given an ambient gradient \({\textsf{grad}}\hat{f}\) with continuous derivatives, we define the ambient Hessian to be the map \({\textsf{hess}}\hat{f}\) associating to a vector field \(\xi \) on \({\mathcal {M}}\) the derivative \({\textrm{D}}_{\xi }{\textsf{grad}}\hat{f}\). We define the ambient Hessian bilinear form \({\textsf{hess}}\hat{f}^{02}(\xi , \eta )\) to be \(\langle ({\textrm{D}}_{\xi }{\textsf{grad}}\hat{f}),\eta \rangle _{{\mathcal {E}}}\). If \(Y\in {\mathcal {M}}\) is considered as a variable, we also use the notation \(\hat{f}_{Y}\) for \({\textsf{grad}}\hat{f}\) and \(\hat{f}_{YY}\) for \({\textsf{hess}}\hat{f}\).
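
The defining relations for the ambient gradient and Hessian can be checked by finite differences. The sketch below assumes the extension \(\hat{f}(Y) = \frac{1}{2}{{\,\textrm{Tr}\,}}(Y^{{\textsf{T}}}AY)\) on \({\mathbb {R}}^{n\times p}\) with a symmetric matrix `A`; the function names and test directions are illustrative, and the directions are arbitrary ambient matrices rather than tangent vectors of a particular manifold.

```python
import numpy as np

rng = np.random.default_rng(6)
n, p = 5, 2
M = rng.standard_normal((n, n))
A = (M + M.T) / 2


def f_hat(Y):
    """Extension f_hat(Y) = 0.5 * Tr(Y^T A Y), defined on all of R^{n x p}."""
    return 0.5 * np.trace(Y.T @ A @ Y)


def egrad(Y):
    """Ambient gradient f_Y under the Frobenius inner product."""
    return A @ Y


def ehess(Y, xi):
    """Ambient Hessian f_YY applied to xi: the directional derivative of egrad along xi."""
    return A @ xi


Y = rng.standard_normal((n, p))
xi = rng.standard_normal((n, p))
eta = rng.standard_normal((n, p))
h = 1e-6

directional = (f_hat(Y + h * eta) - f_hat(Y - h * eta)) / (2 * h)   # D_eta f_hat
pairing = np.sum(eta * egrad(Y))                                    # <eta, grad f_hat>

grad_change = (egrad(Y + h * xi) - egrad(Y - h * xi)) / (2 * h)     # D_xi grad f_hat
hess02 = np.sum(ehess(Y, xi) * eta)                                 # bilinear form
```

In practice `egrad` and `ehess` are the only problem-specific inputs; the Riemannian quantities are then obtained from them through the projection and the Christoffel function.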

By the Whitney embedding theorem, any manifold has an ambient space. Coordinate charts could be considered as a collection of compatible local ambient spaces.

From the embedding \({\mathcal {M}}\subset {\mathcal {E}}\), the tangent space of \({\mathcal {M}}\) at each point \(Y\in {\mathcal {M}}\) is considered as a subspace of \({\mathcal {E}}\). Thus, a vector field on \({\mathcal {M}}\) could be considered as an \({\mathcal {E}}\)-valued function on \({\mathcal {M}}\) and we can take its directional derivatives. This derivative is dependent on the embedding and hence not intrinsic. For a function f and two vector fields \(\xi , \eta \) on \({\mathcal {M}}\subset {\mathcal {E}}\) we have:

\[{\textsf{hess}}\hat{f}^{02}(\xi , \eta ) = {\textrm{D}}_{\xi }({\textrm{D}}_{\eta } f) - \langle {\textrm{D}}_{\xi }\eta , \hat{f}_{Y}\rangle _{{\mathcal {E}}}. \tag{3.2}\]

This follows from \({\textrm{D}}_{\xi }\langle \eta , \hat{f}_{Y}\rangle _{{\mathcal {E}}} = \langle {\textrm{D}}_{\xi }\eta , \hat{f}_{Y}\rangle _{{\mathcal {E}}} + \langle \eta , {\textrm{D}}_{\xi }(\hat{f}_{Y})\rangle _{{\mathcal {E}}} = {\textrm{D}}_{\xi }({\textrm{D}}_{\eta } f)\) by taking directional derivatives of Eq. (3.1), thus \(\langle \eta , {\textrm{D}}_{\xi }(\hat{f}_{Y})\rangle _{{\mathcal {E}}}\) can be evaluated by Eq. (3.2).

We begin with a standard result of inner product spaces. Recall that the adjoint of a linear map A between two inner product spaces V and W is the map \(A^{{\mathfrak {t}}}\) such that \(\langle Av, w\rangle _W = \langle v, A^{{\mathfrak {t}}}w\rangle _V\) where \(\langle ,\rangle _V, \langle ,\rangle _W\) denote the inner products on V and W, respectively. If A is represented by a matrix also called A in two orthonormal bases in V and W respectively, then \(A^{{\mathfrak {t}}}\) is represented by its transpose \(A^{{{\textsf{T}}}}\). A projection from an inner product space V to a subspace W is a linear operator \({\varPi }_W\) on V such that \({\varPi }_W v\in W\) and \(\langle v, w\rangle _V = \langle {\varPi }_W v, w\rangle _V\) for all \(w\in W\), \(v\in V\). It is well known that a projection always exists and is unique, and that \({\varPi }_W v\) minimizes the distance from v to W.
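
The adjoint identity and the defining property of a projection can be checked directly for matrix operators. The sketch below assumes the map \(v\mapsto AvB\) between real matrix spaces with Frobenius inner products, whose adjoint is \(w\mapsto A^{{\textsf{T}}}wB^{{\textsf{T}}}\), and an orthogonal projection onto the column space of a random matrix `C`; all names and data are illustrative.

```python
import numpy as np

rng = np.random.default_rng(7)
n, m, k = 4, 3, 5
A = rng.standard_normal((k, n))
B = rng.standard_normal((m, m))


def op(v):
    """Linear map from R^{n x m} to R^{k x m}: v -> A v B."""
    return A @ v @ B


def op_adj(w):
    """Its adjoint under the Frobenius inner products: w -> A^T w B^T."""
    return A.T @ w @ B.T


def frob(a, b):
    return np.sum(a * b)


v = rng.standard_normal((n, m))
w = rng.standard_normal((k, m))
lhs = frob(op(v), w)         # <A v, w>_W
rhs = frob(v, op_adj(w))     # <v, A^t w>_V

# Projection onto the column space of C, built from an orthonormal basis of that space
C = rng.standard_normal((n, 2))
Qc, _ = np.linalg.qr(C)
proj = Qc @ Qc.T
x = rng.standard_normal(n)
c = rng.standard_normal(2)   # coefficients of an arbitrary competitor C c in the subspace
```

The same pattern, pairing an operator with a candidate adjoint on random inputs, is a cheap way to validate adjoint formulas derived by hand before using them in a projection.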

Proposition 3.1

Let \({\mathcal {E}}\) be a vector space with an inner product \(\langle ,\rangle _{{\mathcal {E}}}\). Let \({{\textsf{g}}}\) be a self-adjoint positive-definite operator on \({\mathcal {E}}\), thus \(\langle {{\textsf{g}}}e_1, e_2\rangle _{{\mathcal {E}}}= \langle e_1, {{\textsf{g}}}e_2\rangle _{{\mathcal {E}}}\). The operator \({{\textsf{g}}}\) defines a new inner product on \({\mathcal {E}}\) by \(\langle e_1, e_2\rangle _{{\mathcal {E}}, {{\textsf{g}}}}:= \langle e_1, {{\textsf{g}}}e_2\rangle _{{\mathcal {E}}}\). If \(W = {{\,\textrm{Null}\,}}({{\,\textrm{J}\,}})\) for a map \({{\,\textrm{J}\,}}\) from \({\mathcal {E}}\) onto an inner product space \({\mathcal {E}}_{{{\,\textrm{J}\,}}}\), the projection \({\varPi }_{{{\textsf{g}}}}={\varPi }_{{{\textsf{g}}}, W}\) from \({\mathcal {E}}\) to W under the inner product \(\langle , \rangle _{{\mathcal {E}}, {{\textsf{g}}}}\) is given by \({\varPi }_{{{\textsf{g}}}}e= e- {{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}})^{-1}{{\,\textrm{J}\,}}e\) for all \(e\in {\mathcal {E}}\), where \({{\,\textrm{J}\,}}^{{\mathfrak {t}}}\) is the adjoint map of \({{\,\textrm{J}\,}}\).

Alternatively, if \({\textrm{N}}\) is a one-to-one map from an inner product space \({\mathcal {E}}_{{\textrm{N}}}\) to \({\mathcal {E}}\) such that \(W={\textrm{N}}({\mathcal {E}}_{{\textrm{N}}})\), then the projection to W could be given by \({\varPi }_{{{\textsf{g}}}}e= {\textrm{N}}({\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}{\textrm{N}})^{-1}{\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}e\).

The operators \({{\textsf{g}}}{\varPi }_{{{\textsf{g}}}}\) and \({\varPi }_{{{\textsf{g}}}}{{\textsf{g}}}^{-1}\) are self-adjoint under \(\langle ,\rangle _{{\mathcal {E}}}\).
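
The statements of the proposition can be verified numerically in the matrix setting. The following sketch assumes a random full-row-rank `J`, a random positive-definite `g`, and takes `N` to be an orthonormal basis of the nullspace of `J` obtained from a singular value decomposition; these choices are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(8)
n, k = 6, 2

J = rng.standard_normal((k, n))            # onto map E -> E_J (full row rank almost surely)
B = rng.standard_normal((n, n))
g = B @ B.T + n * np.eye(n)                # self-adjoint positive-definite operator

# Columns of N span W = Null(J): the last n - k right singular vectors of J
_, _, Vt = np.linalg.svd(J)
N = Vt[k:].T                               # one-to-one map from E_N = R^{n-k} into E

g_inv = np.linalg.inv(g)
P_J = np.eye(n) - g_inv @ J.T @ np.linalg.solve(J @ g_inv @ J.T, J)   # nullspace form
P_N = N @ np.linalg.solve(N.T @ g @ N, N.T @ g)                       # range form
```

Which form is preferable depends on which of \({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}\) and \({\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}{\textrm{N}}\) is easier to invert for the manifold at hand.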

Proof

The assumption that \({{\,\textrm{J}\,}}\) is onto \({\mathcal {E}}_{{{\,\textrm{J}\,}}}\) shows \({{\,\textrm{J}\,}}^{{\mathfrak {t}}}\) is injective (as \({{\,\textrm{J}\,}}^{{\mathfrak {t}}}a = 0\) implies \(\langle a, {{\,\textrm{J}\,}}\omega \rangle _{{\mathcal {E}}_{{{\,\textrm{J}\,}}}} = 0\) for all \(\omega \in {\mathcal {E}}\), and since \({{\,\textrm{J}\,}}\) is onto this implies \(a = 0\)). This in turn implies \({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}\) is invertible: if \({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}} a = 0\), then \(\langle {{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}a, a\rangle _{{\mathcal {E}}_{{{\,\textrm{J}\,}}}} = 0\), so \(\langle {{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}a, {{\,\textrm{J}\,}}^{{\mathfrak {t}}}a\rangle _{{\mathcal {E}}} = 0\) and hence \({{\,\textrm{J}\,}}^{{\mathfrak {t}}}a = 0\) as \({{\textsf{g}}}\) (hence \({{\textsf{g}}}^{-1}\)) is positive-definite, which gives \(a = 0\) by injectivity. We can show \({\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}{\textrm{N}}\) is invertible similarly.

For the first case, if \(e_W \in W={{\,\textrm{Null}\,}}({{\,\textrm{J}\,}})\) and \(e\in {\mathcal {E}}\),

\[\langle {{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}})^{-1}{{\,\textrm{J}\,}}e, {{\textsf{g}}}e_W\rangle _{{\mathcal {E}}} = \langle {{\,\textrm{J}\,}}^{{\mathfrak {t}}}({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}})^{-1}{{\,\textrm{J}\,}}e, e_W\rangle _{{\mathcal {E}}} = \langle ({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}})^{-1}{{\,\textrm{J}\,}}e, {{\,\textrm{J}\,}}e_W\rangle _{{\mathcal {E}}_{{{\,\textrm{J}\,}}}}\]

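where the last term is zero because \(e_W\in {{\,\textrm{Null}\,}}({{\,\textrm{J}\,}})\), so \(\langle {\varPi }_{{{\textsf{g}}}} e, {{\textsf{g}}}e_W\rangle _{{\mathcal {E}}} = \langle e, {{\textsf{g}}}e_W\rangle _{{\mathcal {E}}}\). For the second case, assuming \(e_W ={\textrm{N}}(e_{{\textrm{N}}})\) for \(e_{{\textrm{N}}}\in {\mathcal {E}}_{{\textrm{N}}}\), then (using \((AB)^{{\mathfrak {t}}} = B^{{\mathfrak {t}}}A^{{\mathfrak {t}}}\)):

\[\langle {\textrm{N}}({\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}{\textrm{N}})^{-1}{\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}e, {{\textsf{g}}}{\textrm{N}}e_{{\textrm{N}}}\rangle _{{\mathcal {E}}} = \langle ({\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}{\textrm{N}})^{-1}{\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}e, {\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}{\textrm{N}}e_{{\textrm{N}}}\rangle _{{\mathcal {E}}_{{\textrm{N}}}} = \langle {\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}e, e_{{\textrm{N}}}\rangle _{{\mathcal {E}}_{{\textrm{N}}}} = \langle e, {{\textsf{g}}}e_W\rangle _{{\mathcal {E}}}.\]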

The last statement follows from the defining equations of \({\varPi }_{{{\textsf{g}}}}\). \(\square \)

Recall the Riemannian gradient of a function f on a manifold \({\mathcal {M}}\) with Riemannian metric \(\langle ,\rangle _R\) is the vector field \({\textsf{rgrad}}_f\) such that \(\langle {\textsf{rgrad}}_f(Y), \xi (Y)\rangle _R = ({\textrm{D}}_{\xi } f)(Y)\) for any point \(Y\in {\mathcal {M}}\), and any vector field \(\xi \). Let \({\mathcal {H}}\) be a subbundle of the tangent bundle \(T{\mathcal {M}}\). We recall this means \({\mathcal {H}}\) is a collection of subspaces (fibers) \({\mathcal {H}}_Y\subset T_Y{\mathcal {M}}\) for \(Y\in {\mathcal {M}}\) such that \({\mathcal {H}}\) is itself a vector bundle on \({\mathcal {M}}\), i.e. \({\mathcal {H}}\) is locally a product of a vector space and an open subset of \({\mathcal {M}}\), together with a linear coordinate change condition (see [22], Definition 7.24 for details). We can define the \({\mathcal {H}}\)-Riemannian gradient \({\textsf{rgrad}}_{{\mathcal {H}}, f}\) of f as the unique \({\mathcal {H}}\)-valued vector field such that \(\langle {\textsf{rgrad}}_{{\mathcal {H}}, f}, \xi _{{\mathcal {H}}}\rangle _R = {\textrm{D}}_{\xi _{{\mathcal {H}}}} f\) for any \({\mathcal {H}}\)-valued vector field \(\xi _{{\mathcal {H}}}\). Uniqueness follows from nondegeneracy of the inner product restricted to \({\mathcal {H}}\). Clearly, when \({\mathcal {H}}=T{\mathcal {M}}\), \({\textsf{rgrad}}_{{\mathcal {H}}, f}={\textsf{rgrad}}_{T{\mathcal {M}}, f}\) is the usual Riemannian gradient. We have:

Proposition 3.2

Let \(({\mathcal {E}}, \langle ,\rangle _{{\mathcal {E}}})\) be an embedded ambient space of a manifold \({\mathcal {M}}\) as in definition 3.1. Let \({{\textsf{g}}}\) be a smooth operator-valued function associating each \(Y\in {\mathcal {M}}\) a self-adjoint positive-definite operator \({{\textsf{g}}}(Y)\) on \({\mathcal {E}}\). Thus, each \({{\textsf{g}}}(Y)\) defines an inner product on \({\mathcal {E}}\), which induces an inner product on \(T_Y{\mathcal {M}}\) and hence \({{\textsf{g}}}\) induces a Riemannian metric on \({\mathcal {M}}\). Let \({\mathcal {H}}\) be a subbundle of \(T{\mathcal {M}}\). Define \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}\) to be the operator-valued function such that \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}(Y)\) is the projection associated with \({{\textsf{g}}}(Y)\) from \({\mathcal {E}}\) to the fiber \({\mathcal {H}}_Y\), and for the case \({\mathcal {H}}=T{\mathcal {M}}\), define \({\varPi }_{{\mathcal {M}},{{\textsf{g}}}} = {\varPi }_{T{\mathcal {M}},{{\textsf{g}}}}\). For an ambient gradient \({\textsf{grad}}\hat{f}\) of f, the \({\mathcal {H}}\)-Riemannian gradient of f can be evaluated as:

If there is an inner product space \({\mathcal {E}}_{{{\,\textrm{J}\,}}}\) and a map \({{\,\textrm{J}\,}}\) from \({\mathcal {M}}\) to the space \({\mathfrak {L}}({\mathcal {E}}, {\mathcal {E}}_{{{\,\textrm{J}\,}}})\) of linear maps from \({\mathcal {E}}\) to \({\mathcal {E}}_{{{\,\textrm{J}\,}}}\), such that for each \(Y\in {\mathcal {M}}\), the range of \({{\,\textrm{J}\,}}(Y)\) is precisely \({\mathcal {E}}_{{{\,\textrm{J}\,}}}\), and its nullspace is \({\mathcal {H}}_Y\) then \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}(Y)e\) for \(e\in {\mathcal {E}}\) could be given by:

If there is an inner product space \({\mathcal {E}}_{{\textrm{N}}}\) and a map \({\textrm{N}}\) from \({\mathcal {M}}\) to the space \({\mathfrak {L}}({\mathcal {E}}_{{\textrm{N}}}, {\mathcal {E}})\) of linear maps from \({\mathcal {E}}_{{\textrm{N}}}\) to \({\mathcal {E}}\) such that for each \(Y\in {\mathcal {M}}\), \({\textrm{N}}(Y)\) is one-to-one and its range is precisely \({\mathcal {H}}_Y\), then:

Proof

For any \({\mathcal {H}}\)-valued vector field \(\xi _{{\mathcal {H}}}\), we have:

because \({\varPi }_{{\mathcal {H}}, {{\textsf{g}}}}{{\textsf{g}}}^{-1}\) is self-adjoint and the projection is idempotent. The remaining statements are just a parametrized version of Proposition 3.1. \(\square \)

Note, we are not making any smoothness assumption on \({{\,\textrm{J}\,}}\) or \({\textrm{N}}\) yet, although \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}\) is assumed to be sufficiently smooth. In fact, \({\textrm{N}}\) is often not smooth. \({{\,\textrm{J}\,}}\) is usually smooth as it is constructed from a smooth constraint on \({\mathcal {M}}\), or on the horizontal requirements of a vector field.
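As a numerical illustration of Proposition 3.2, the sketch below builds the projection in both ways and checks that they agree. It assumes the displayed formulas are the standard ones, \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}} = I - {{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}})^{-1}{{\,\textrm{J}\,}}\) and \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}} = {\textrm{N}}({\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}{\textrm{N}})^{-1}{\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}\), evaluated at a single point with \({\mathcal {E}}={\mathbb {R}}^n\). The dimensions, the random metric, and the variable names are hypothetical choices made only for this example.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k = 6, 2  # hypothetical dimensions of E and E_J

# Random self-adjoint positive-definite metric operator g on E = R^n.
M = rng.standard_normal((n, n))
g = M @ M.T + n * np.eye(n)
g_inv = np.linalg.inv(g)

# J: full-rank map E -> E_J; its nullspace plays the role of the fiber H_Y.
J = rng.standard_normal((k, n))

# N: one-to-one map E_N -> E with range Null(J), read off from the SVD of J.
_, _, Vt = np.linalg.svd(J)
N = Vt[k:].T

# Constraint form: I - g^{-1} J^t (J g^{-1} J^t)^{-1} J
P_J = np.eye(n) - g_inv @ J.T @ np.linalg.solve(J @ g_inv @ J.T, J)
# Parametrized form: N (N^t g N)^{-1} N^t g
P_N = N @ np.linalg.solve(N.T @ g @ N, N.T @ g)

same = np.allclose(P_J, P_N)                 # both forms give one projection
idempotent = np.allclose(P_J @ P_J, P_J)     # projection property
g_self_adjoint = np.allclose(g @ P_J, (g @ P_J).T)
pg_inv_self_adjoint = np.allclose(P_J @ g_inv, (P_J @ g_inv).T)
print(same, idempotent, g_self_adjoint, pg_inv_self_adjoint)
```

The last check is the self-adjointness of \({\varPi }_{{\mathcal {H}}, {{\textsf{g}}}}{{\textsf{g}}}^{-1}\) used in the proof above.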

Definition 3.2

A triple \(({\mathcal {M}}, {{\textsf{g}}}, {\mathcal {E}})\) with \({\mathcal {E}}\) an inner product space, \({\mathcal {M}}\subset {\mathcal {E}}\) a differentiable submanifold, and \({{\textsf{g}}}\) a positive-definite operator-valued function from \({\mathcal {M}}\) to \({\mathfrak {L}}({\mathcal {E}}, {\mathcal {E}})\) is called an embedded ambient structure of \({\mathcal {M}}\). \({\mathcal {M}}\) is a Riemannian manifold with the metric induced by \({{\textsf{g}}}\).

From the definition of Lie brackets, for an embedded ambient space \({\mathcal {E}}\) of \({\mathcal {M}}\) we have

Recall if \({\mathcal {M}}, \langle ,\rangle _R\) is a Riemannian manifold with the Levi-Civita connection \(\nabla \), the Riemannian Hessian (vector product) of a function f is the operator sending a tangent vector \(\xi \) to the tangent vector \({\textsf{rhess}}_f^{11}\xi = \nabla _{\xi }{\textsf{rgrad}}_f\). The Riemannian Hessian bilinear form is the map evaluating on two vector fields \(\xi , \eta \) as \(\langle \nabla _{\xi }{\textsf{rgrad}}_f, \eta \rangle _R\). For a subbundle \({\mathcal {H}}\) of \(T{\mathcal {M}}\) and a \({\mathcal {H}}\)-valued vector field \(\xi _{{\mathcal {H}}}\), we define the \({\mathcal {H}}\)-Riemannian Hessian similarly as \({\textsf{rhess}}_{{\mathcal {H}}, f}^{11}\xi _{{\mathcal {H}}} = {\varPi }_{{\mathcal {H}}, {{\textsf{g}}}}\nabla _{\xi _{{\mathcal {H}}}}{\textsf{rgrad}}_{{\mathcal {H}}, f}\) and we call \({\textsf{rhess}}_{{\mathcal {H}}, f}^{02}(\xi _{{\mathcal {H}}}, \eta _{{\mathcal {H}}}) = \langle {\varPi }_{{\mathcal {H}}, {{\textsf{g}}}}\nabla _{\xi _{{\mathcal {H}}}}{\textsf{rgrad}}_{{\mathcal {H}}, f}, \eta _{{\mathcal {H}}}\rangle _R = \langle \nabla _{\xi _{{\mathcal {H}}}}{\textsf{rgrad}}_{{\mathcal {H}}, f}, \eta _{{\mathcal {H}}}\rangle _R\) the \({\mathcal {H}}\)-Riemannian Hessian bilinear form. The next theorem shows how to compute the Riemannian connection and the associated Riemannian Hessian.

Theorem 3.1

Let \(({\mathcal {M}}, {{\textsf{g}}}, {\mathcal {E}})\) be an embedded ambient structure of a Riemannian manifold \({\mathcal {M}}\). There exists an \({\mathcal {E}}\)-valued bilinear form \({\mathcal {X}}\) sending a pair of vector fields \((\xi , \eta )\) to \({\mathcal {X}}(\xi , \eta )\in {\mathcal {E}}\) such that for any vector field \(\xi _0\):

Let \({\varPi }_{{\mathcal {M}}, {{\textsf{g}}}}\) be the projection from \({\mathcal {E}}\) to the tangent bundle of \({\mathcal {M}}\). Then \({\varPi }_{{\mathcal {M}}, {{\textsf{g}}}}{{\textsf{g}}}^{-1}{\mathcal {X}}(\xi , \eta )\) is uniquely defined given \(\xi , \eta \) and \({\mathcal {X}}(\xi , \eta )\) is also unique if we require \({\mathcal {X}}(\xi (Y), \eta (Y))\) to be in \(T_Y{\mathcal {M}}\) for all \(Y\in {\mathcal {M}}\). For two vector fields \(\xi , \eta \) on \({\mathcal {M}}\), define

Then \(\nabla _{\xi }\eta \) is the covariant derivative associated with the Levi-Civita connection. It could be written using the Christoffel function \({\varGamma }\):

If \({\mathcal {H}}\) is a subbundle of \(T{\mathcal {M}}\), and \(\xi _{{\mathcal {H}}}, \eta _{{\mathcal {H}}}\) are two \({\mathcal {H}}\)-valued vector fields, we have:

If f is a function on \({\mathcal {M}}\), \(\hat{f}_{Y}\) is an ambient gradient of f and \(\hat{f}_{YY}\) is the ambient Hessian operator, then \({\textsf{rhess}}_{{\mathcal {H}}, f}^{11}\xi _{{\mathcal {H}}}:= {\varPi }_{{\mathcal {H}};{{\textsf{g}}}}\nabla _{\xi _{{\mathcal {H}}}}{\textsf{rgrad}}_{{\mathcal {H}}, f}\) and \({\textsf{rhess}}_{{\mathcal {H}}, f}^{02}\) are given by:

The form \({\varGamma }(\xi , \eta )\) appeared in [9] and was computed for the case of a Stiefel manifold, and was called a Christoffel function. It includes the Christoffel metric term \({\textrm{K}}\) and the derivative of \({\varPi }_{{\mathcal {M}}, {{\textsf{g}}}}\). Evaluated at \(Y\in {\mathcal {M}}\), it depends only on the tangent vectors \(\eta (Y)\) and \(\xi (Y)\), not on the whole vector fields. Equation (2.57) in that reference is the expression of \({\textsf{rhess}}^{02}_f\) in terms of \({\varGamma }\) above. In [9], \({\varGamma }_{{\mathcal {H}}}\) was computed for a Grassmann manifold. The formulation for subbundles allows us to extend the result to Riemannian submersions and quotient manifolds. Equation 3.11 generalizes the Weingarten map formula in [2, equations 7,10] when \({{\textsf{g}}}=I_{{\mathcal {E}}}\), since by product rule

and \({\textsf{rhess}}_{{\mathcal {H}}, f}^{11}\xi _{{\mathcal {H}}}\) becomes

Here, \(V:= (I_{{\mathcal {E}}} -{\varPi }_{{\mathcal {H}};{{\textsf{g}}}})\hat{f}_{Y}\) is vertical (\({\varPi }_{{\mathcal {H}};{{\textsf{g}}}} V = 0\)) and \(({\textrm{D}}_{\xi _{{\mathcal {H}}}}{\varPi }_{{\mathcal {H}}, {{\textsf{g}}}})V\) is horizontal.

Proof

\({\mathcal {X}}\) is the familiar index-raising term: for \(Y\in {\mathcal {M}}\) and \(v_0, v_1, v_2\in T_Y{\mathcal {M}}\), as \(\langle v_1, ({\textrm{D}}_{v_0}{{\textsf{g}}}) v_2\rangle _{{\mathcal {E}}}\) is a tri-linear function on \(T_Y{\mathcal {M}}\) and the Riemannian inner product on \(T_Y{\mathcal {M}}\) is nondegenerate, the index-raising bilinear form \({\tilde{{\mathcal {X}}}}\) with value in \(T_Y{\mathcal {M}}\) is uniquely defined, so \({\mathcal {X}}(\xi (Y), \eta (Y))={\tilde{{\mathcal {X}}}}(\xi (Y), \eta (Y))\) satisfies Eq. (3.7), where we consider \(T_Y{\mathcal {M}}\) as a subspace of \({\mathcal {E}}\). Thus, we have proved the existence of \({\mathcal {X}}\). If we take another \({\mathcal {E}}\)-valued function \({\mathcal {X}}_1\) satisfying the same condition but not necessarily in the tangent space, the expression \({\varPi }_{{\mathcal {M}},{{\textsf{g}}}}{{\textsf{g}}}^{-1}{\mathcal {X}}_1\), hence \({\varPi }_{{\mathcal {M}},{{\textsf{g}}}}{{\textsf{g}}}^{-1}{\textrm{K}}\) is independent of the choice of \({\mathcal {X}}_1\), as for three vector fields \(\xi _0, \xi , \eta \)

We can verify directly that \(\nabla _{\xi }\eta \) satisfies the conditions of a covariant derivative: it is linear in \(\xi \) and satisfies the product rule with respect to \(\eta \). Similar to the calculation with coordinate charts, we can show \(\nabla \) is compatible with the metric: for two vector fields \(\eta , \xi \), \(2\langle \nabla _{\xi }\eta , {{\textsf{g}}}\eta \rangle _{{\mathcal {E}}}= 2\langle {\varPi }_{{\mathcal {M}},{{\textsf{g}}}}{\hat{\nabla }}_{\xi } \eta , {{\textsf{g}}}\eta \rangle _{{\mathcal {E}}}\), which is \(2\langle {\textrm{D}}_{\xi } \eta +{{\textsf{g}}}^{-1}{\textrm{K}}(\xi , \eta ), {{\textsf{g}}}\eta \rangle _{{\mathcal {E}}}\) by definition and by property of the projection. Expanding the last expression and using \(\langle {\mathcal {X}}(\xi , \eta ), \eta \rangle _{{\mathcal {E}}}=\langle ({\textrm{D}}_{\eta }{{\textsf{g}}})\xi ,\eta \rangle _{{\mathcal {E}}}\)

Torsion-free follows from the fact that \({\textrm{K}}\) is symmetric and Eq. (3.6):

For Eq. (3.9), we note \({\varPi }_{{\mathcal {M}},{{\textsf{g}}}}{\textrm{D}}_{\xi }\eta = {\textrm{D}}_{\xi }({\varPi }_{{\mathcal {M}},{{\textsf{g}}}}\eta ) - ({\textrm{D}}_{\xi }{\varPi }_{{\mathcal {M}},{{\textsf{g}}}})\eta \) so

For Eq. (3.10), \({\varPi }_{{\mathcal {H}}, {{\textsf{g}}}}{\varPi }_{{\mathcal {M}}, {{\textsf{g}}}}= {\varPi }_{{\mathcal {H}}, {{\textsf{g}}}}\) if \(Y\in {\mathcal {M}}\), \({\mathcal {H}}_Y\subset T_Y{\mathcal {M}}\). Hence, Eq. (3.8) implies

and as before, we use \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}{\textrm{D}}_{\xi _{{\mathcal {H}}}}\eta _{{\mathcal {H}}}= {\textrm{D}}_{\xi _{{\mathcal {H}}}}({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}\eta _{{\mathcal {H}}}) - ({\textrm{D}}_{\xi _{{\mathcal {H}}}}{\varPi }_{{\mathcal {H}},{{\textsf{g}}}})\eta _{{\mathcal {H}}}\) and \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}\eta _{{\mathcal {H}}}=\eta _{{\mathcal {H}}}\). The first line of Eq. (3.11) is by definition and \({\textrm{D}}_{\xi }({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}{{\textsf{g}}}^{-1}\hat{f}_{Y}) = {\textrm{D}}_{\xi }({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}{{\textsf{g}}}^{-1})\hat{f}_{Y}+{\varPi }_{{\mathcal {H}},{{\textsf{g}}}}{{\textsf{g}}}^{-1}\hat{f}_{YY}\). Expand, note \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}{{\textsf{g}}}^{-1}({{\textsf{g}}}{\varPi }_{{\mathcal {H}},{{\textsf{g}}}}{\textrm{D}}_{\xi _{{\mathcal {H}}}}{{\textsf{g}}}^{-1})\hat{f}_{Y}= -{\varPi }_{{\mathcal {H}},{{\textsf{g}}}}{{\textsf{g}}}^{-1}({\textrm{D}}_{\xi _{{\mathcal {H}}}}{{\textsf{g}}}){{\textsf{g}}}^{-1}\hat{f}_{Y}\) (as \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}\) is idempotent), we have the second line. For Eq. (3.12):

from compatibility with metric, idempotency of \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}\), Eqs. (3.10) and (3.2). \(\square \)

When the projection is given in terms of \({{\,\textrm{J}\,}}\), and \({{\,\textrm{J}\,}}\) is sufficiently smooth we have:

Proposition 3.3

If \({{\,\textrm{J}\,}}\) as in Proposition 3.2 is of class \(C^2\) then:

for two \({\mathcal {H}}\)-valued tangent vectors \(\xi _{{\mathcal {H}}},\eta _{{\mathcal {H}}}\) at \(Y\in {\mathcal {M}}\). We can evaluate \({\textsf{rhess}}_{{\mathcal {H}}, f}^{11}\xi _{{\mathcal {H}}}\) by setting \(\omega =\hat{f}_{Y}\) in the following formula, which is valid for all \({\mathcal {E}}\)-valued functions \(\omega \):

Proof

The first expression follows by expanding \({\textrm{D}}_{\xi _{{\mathcal {H}}}}{\varPi }_{{\mathcal {H}},{{\textsf{g}}}}\) in terms of \({{\,\textrm{J}\,}}\), noting \({{\,\textrm{J}\,}}\eta _{{\mathcal {H}}}= 0\). For the second, expand \({\varPi }_{{\mathcal {H}}, {{\textsf{g}}}}{\hat{\nabla }}_{\xi _{{\mathcal {H}}}}({\varPi }_{{\mathcal {H}}, {{\textsf{g}}}}{{\textsf{g}}}^{-1}\omega )={\varPi }_{{\mathcal {H}}, {{\textsf{g}}}}{\textrm{D}}_{\xi _{{\mathcal {H}}}} ({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}{{\textsf{g}}}^{-1}\omega )+ {\varPi }_{{\mathcal {H}},{{\textsf{g}}}}{{\textsf{g}}}^{-1}{\textrm{K}}(\xi _{{\mathcal {H}}}, {\varPi }_{{\mathcal {H}}, {{\textsf{g}}}}{{\textsf{g}}}^{-1}\omega )\), then expand the first term and use \({\varPi }_{{\mathcal {H}}, {{\textsf{g}}}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}} = 0\). \(\square \)

The following proposition allows us to apply the results so far in familiar contexts:

Proposition 3.4

Let \(({\mathcal {M}}, {{\textsf{g}}}, {\mathcal {E}})\) be an embedded ambient structure.

1. Fix an orthonormal basis \(e_i\) of \({\mathcal {E}}\), let f be a function on \({\mathcal {M}}\), which is a restriction of a function \(\hat{f}\) on \({\mathcal {E}}\), and define \(\hat{f}_{Y}\) to be the function from \({\mathcal {M}}\) to \({\mathcal {E}}\) whose i-th component is the directional derivative \({\textrm{D}}_{e_i}\hat{f}\). Then \(\hat{f}_{Y}\) is an ambient gradient. If \({\mathcal {M}}\) is defined by the equation \(C(Y) = 0\) (\(Y\in {\mathcal {M}}\)) with a full rank Jacobian, then the nullspace of the Jacobian \({{\,\textrm{J}\,}}_{C}(Y)\) is the tangent space of \({\mathcal {M}}\) at Y, hence \({{\,\textrm{J}\,}}_{C}(Y)\) could be used as the operator \({{\,\textrm{J}\,}}(Y)\).

2. (Riemannian submersion) Let \(({\mathcal {M}}, {{\textsf{g}}}, {\mathcal {E}})\) be an embedded ambient structure. Let \(\pi :{\mathcal {M}}\rightarrow {\mathcal {B}}\) be a Riemannian submersion, with \({\mathcal {H}}\) the corresponding horizontal subbundle of \(T{\mathcal {M}}\). If \(\xi , \eta \) are two vector fields on \({\mathcal {B}}\) with \(\xi _{{\mathcal {H}}}, \eta _{{\mathcal {H}}}\) their horizontal lifts, then the Levi-Civita connection \(\nabla ^{{\mathcal {B}}}_{\xi }\eta \) on \({\mathcal {B}}\) lifts to \({\varPi }_{{\mathcal {H}},{{\textsf{g}}}}\nabla _{\xi _{{\mathcal {H}}}}\eta _{{\mathcal {H}}}\), hence Eq. (3.10) applies. Also, Riemannian gradients and Hessians on \({\mathcal {B}}\) lift to \({\mathcal {H}}\)-Riemannian gradients and Hessians on \({\mathcal {M}}\).

Proof

The construction of \(\hat{f}_{Y}\) ensures \(\langle \hat{f}_{Y}, e_i\rangle _{{\mathcal {E}}} = {\textrm{D}}_{e_i}f\). The statement about the Jacobian is simply the implicit function theorem. Isometry of horizontal lift and [22], Lemma 7.45, item 3, give us Statement 2. \(\square \)
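The component-wise construction of the ambient gradient in Statement 1 can be sketched numerically. The example below assumes \({\mathcal {E}}={\mathbb {R}}^{n\times d}\) with the standard (orthonormal) basis of elementary matrices and a hypothetical cost \(\hat{f}(Y) = {{\,\textrm{Tr}\,}}(Y^{{{\textsf{T}}}}AY)\) with symmetric A. The helper `ambient_gradient_fd` is an illustrative name and approximates each directional derivative by a central difference.

```python
import numpy as np

def ambient_gradient_fd(f_hat, Y, h=1e-6):
    """Assemble f_hat_Y from directional derivatives D_{e_i} f_hat along the
    standard orthonormal basis of R^{n x d}, using central differences."""
    G = np.zeros_like(Y)
    for idx in np.ndindex(*Y.shape):
        E = np.zeros_like(Y)
        E[idx] = 1.0  # basis element e_i
        G[idx] = (f_hat(Y + h * E) - f_hat(Y - h * E)) / (2 * h)
    return G

rng = np.random.default_rng(1)
n, d = 5, 2
A = rng.standard_normal((n, n))
A = (A + A.T) / 2                        # symmetric, hypothetical data
f_hat = lambda Y: np.trace(Y.T @ A @ Y)  # hypothetical cost on the ambient space

Y = rng.standard_normal((n, d))
G_fd = ambient_gradient_fd(f_hat, Y)
G_exact = 2 * A @ Y                      # closed-form gradient of Tr(Y^T A Y)
print(np.max(np.abs(G_fd - G_exact)))
```

In practice one would use the closed form (or automatic differentiation); the finite-difference version only illustrates the definition.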

In practice, \(\hat{f}_{Y}\) is computed by index-raising the directional derivative. For clarity, so far we have used the subscript \({\mathcal {H}}\) to indicate the relation to a subbundle \({\mathcal {H}}\). For the rest of the paper, we will drop the subscripts \({\mathcal {H}}\) on vector fields (referring to \(\xi \) instead of \(\xi _{{\mathcal {H}}}\)) as it will be clear from the context whether we discuss a vector field in \({\mathcal {H}}\) or just a regular vector field.

Remark 3.1

The results of this section offer two theoretical insights in deciding potential metric candidates in optimization problems:

(1.) Non-constant ambient metrics may have the same big-O time complexity as the constant one. This is the case with the examples in this paper when the constraint and the metrics are given in matrix polynomials or inversion. If the ambient Hessian could be computed efficiently, in many cases the (maybe tedious) Riemannian Hessian expressions could be computed by operator composition with the same order-of-magnitude time complexity as the Riemannian gradient. This suggests non-constant metrics may be competitive if the improvement in convergence rate is significant. For certain problems involving positive-definite matrices, a non-constant metric is a better option ([25]).

(2.) There is a theoretical bound for the cost of computing the gradient, assuming that the metric \({{\textsf{g}}}\) is easy to invert. If the complexity of computing \({{\textsf{g}}}\) and \({{\,\textrm{J}\,}}\) is known, it remains to estimate the cost of inverting \({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}\) (or \({\textrm{N}}^{{\mathfrak {t}}}{{\textsf{g}}}{\textrm{N}}\)). In our examples these operators are reduced to simple ones that could be inverted efficiently. Otherwise, linear systems with \({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}\) could be solved by a conjugate gradient (CG) method (one example is in [14]). In that case, the time cost is proportional to the rank of \({{\,\textrm{J}\,}}\) (or \({\textrm{N}}\)) times the cost of each CG step, which can be estimated depending on the problem.
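The CG route in item (2.) can be sketched as follows. This is a minimal illustration, not the implementation of [14]: it assumes a diagonal (hence trivially invertible) metric, a random full-rank \({{\,\textrm{J}\,}}\), and the standard projection formula \({\varPi }\omega = \omega - {{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}})^{-1}{{\,\textrm{J}\,}}\omega \). The function names are hypothetical, and the operator \({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}\) is only applied, never formed.

```python
import numpy as np

def conjugate_gradient(apply_A, b, tol=1e-12, max_iter=None):
    """Solve A x = b for a symmetric positive-definite operator given as a callable."""
    x = np.zeros_like(b)
    r = b.copy()
    p = r.copy()
    rs = r @ r
    for _ in range(max_iter or 10 * b.size):
        if np.sqrt(rs) <= tol:
            break
        Ap = apply_A(p)
        alpha = rs / (p @ Ap)
        x = x + alpha * p
        r = r - alpha * Ap
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x

rng = np.random.default_rng(2)
n, k = 8, 3
g_diag = rng.uniform(1.0, 2.0, size=n)  # diagonal metric, easy to invert
J = rng.standard_normal((k, n))         # full-rank constraint operator

def apply_JgJt(a):
    # a -> J g^{-1} J^t a, applied as a composition of operators
    return J @ ((J.T @ a) / g_diag)

def project(omega):
    lam = conjugate_gradient(apply_JgJt, J @ omega)
    return omega - (J.T @ lam) / g_diag

omega = rng.standard_normal(n)
p_cg = project(omega)
# Dense reference: form J g^{-1} J^t explicitly and solve directly.
p_dense = omega - (J.T @ np.linalg.solve((J / g_diag) @ J.T, J @ omega)) / g_diag
print(np.linalg.norm(p_cg - p_dense), np.linalg.norm(J @ p_cg))
```

In exact arithmetic CG terminates in at most k steps here, where k is the rank of \({{\,\textrm{J}\,}}\), which is the source of the cost estimate in the remark.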

Example 3.1

Let \({\mathcal {M}}\) be a submanifold of \({\mathcal {E}}\), defined by a system of equations \(C(x)=0\), where \(C\) is a map from \({\mathcal {E}}\) to \({\mathbb {R}}^k\) (\(x\in {\mathcal {M}}\)). In this case, \({{\,\textrm{J}\,}}_{C}=C_{x}\) is the Jacobian of \(C\), assumed to be of full rank. The projection of \(\omega \in {\mathcal {E}}\) to the tangent space \(T_x{\mathcal {M}}\) is given by

and the covariant derivative is given by \(\nabla _{\xi }\eta = {\textrm{D}}_{\xi }\eta + C_{x}^{{{\textsf{T}}}}(C_{x}C_{x}^{{{\textsf{T}}}})^{-1}({\textrm{D}}_\xi C_{x})\eta \) for two vector fields \(\xi , \eta \). With \({\varGamma }(\xi , \eta ) = C_{x}^{{{\textsf{T}}}}(C_{x}C_{x}^{{{\textsf{T}}}})^{-1}({\textrm{D}}_\xi C_{x})\eta \), the Riemannian Hessian bilinear form is computed from Eq. (3.12), and the Riemannian Hessian operator is:

The expression \((C_{x}C_{x}^{{{\textsf{T}}}})^{-1}C_{x}\hat{f}_x\) is often used as an estimate for the Lagrange multiplier; this result was discussed in section 4.9 of [9]. When \(C(x) = x^{{{\textsf{T}}}}x- 1\) (the unit sphere), \({{\,\textrm{J}\,}}_{C}\omega = 2x^{{{\textsf{T}}}}\omega \), and the Riemannian connection is thus \(\nabla _{\xi }\eta = {\textrm{D}}_{\xi }\eta +x\xi ^{{{\textsf{T}}}}\eta \), a well-known result.
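The sphere case can be checked numerically from the general constraint formulas of this example. The sketch below assumes the constant ambient metric, so that the projection is \(\omega - C_{x}^{{{\textsf{T}}}}(C_{x}C_{x}^{{{\textsf{T}}}})^{-1}C_{x}\omega \), and uses \(C_x = 2x^{{{\textsf{T}}}}\), \({\textrm{D}}_{\xi }C_x = 2\xi ^{{{\textsf{T}}}}\) for \(C(x)=x^{{{\textsf{T}}}}x-1\). The function names `project` and `christoffel` are illustrative.

```python
import numpy as np

def project(C_x, omega):
    # omega - C_x^T (C_x C_x^T)^{-1} C_x omega
    return omega - C_x.T @ np.linalg.solve(C_x @ C_x.T, C_x @ omega)

def christoffel(C_x, DC_x, eta):
    # Gamma(xi, eta) = C_x^T (C_x C_x^T)^{-1} (D_xi C_x) eta
    return C_x.T @ np.linalg.solve(C_x @ C_x.T, DC_x @ eta)

rng = np.random.default_rng(3)
n = 5
x = rng.standard_normal(n)
x /= np.linalg.norm(x)             # a point on the unit sphere

C_x = 2 * x[None, :]               # Jacobian of C(x) = x^T x - 1, shape (1, n)
xi = project(C_x, rng.standard_normal(n))
eta = project(C_x, rng.standard_normal(n))
DC_x = 2 * xi[None, :]             # derivative of the Jacobian in direction xi

gamma = christoffel(C_x, DC_x, eta)
print(np.linalg.norm(gamma - x * (xi @ eta)))
```

The factor 2 in the Jacobian cancels, so the Christoffel term reduces to \(x\xi ^{{{\textsf{T}}}}\eta \).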

Our main interest is to study matrix manifolds. As seen, we need to compute \({\textrm{N}}^{{\mathfrak {t}}}\) or \({{\,\textrm{J}\,}}^{{\mathfrak {t}}}\). We will review adjoint operators for basic matrix operations.

4 Matrix Manifolds: Inner Products and Adjoint Operators

4.1 Matrices and Adjoints

We will use the trace (Frobenius) inner product on matrix vector spaces considered here. Again, the base field \({\mathbb {K}}\) is either \({\mathbb {R}}\) or \({\mathbb {C}}\). We use the letters m, n, p to denote the dimensions of vector spaces. We will prove results for both the real and complex cases together, as often there is a complex result using the Hermitian transpose corresponding to a real result using the real transpose. The reason is that when \({\mathbb {C}}^{n\times m}\), as a real vector space, is equipped with the real inner product \({{\,\textrm{Re}\,}}{{\,\textrm{Tr}\,}}(ab^{{{\textsf{H}}}})\) (for \(a, b\in {\mathbb {C}}^{n\times m}\), \({{\textsf{H}}}\) is the Hermitian transpose), the adjoint of the scalar multiplication operator by a complex number c is the multiplication by the conjugate \({\bar{c}}\). To fix notation, we use the symbol \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}\) to denote the real part of the trace, so for a matrix \(a\in {\mathbb {K}}^{n\times n}\), \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}a = {{\,\textrm{Tr}\,}}a\) if \({\mathbb {K}}={\mathbb {R}}\) and \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}a = {{\,\textrm{Re}\,}}({{\,\textrm{Tr}\,}}a)\) if \({\mathbb {K}}={\mathbb {C}}\). The symbol \({\mathfrak {t}}\) will be used on either an operator, where it specifies the adjoint with respect to these inner products, or on a matrix, where it specifies the corresponding adjoint matrix. When \({\mathbb {K}}={\mathbb {R}}\), we take \({\mathfrak {t}}\) to be the real transpose, and when \({\mathbb {K}}={\mathbb {C}}\) we take \({\mathfrak {t}}\) to be the Hermitian transpose. The inner product of two matrices a, b is \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}(ab^{{\mathfrak {t}}})\).
Recall that we denote by \({\textrm{Sym}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\) the space of all \({\mathfrak {t}}\)-symmetric matrices (\(A^{{\mathfrak {t}}} = A\)), and \({\textrm{Skew}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\) the space of all \({\mathfrak {t}}\)-antisymmetric matrices (\(A^{{\mathfrak {t}}} = -A\)). We consider both \({\textrm{Sym}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\) and \({\textrm{Skew}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\) inner product spaces under \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}\). We defined the symmetrizer \({\textrm{sym}}_{{\mathfrak {t}}}\) and antisymmetrizer \({\textrm{skew}}_{{\mathfrak {t}}}\) in Sect. 2.1, with the usual meaning.

Proposition 4.1

With the above notations, let \(A_i, B_i, C_i, D_i, X\) be matrices such that the functional \(L(X)= \sum _{i=1}^k {{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}(A_i X B_i) + {{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}(C_i X^{{\mathfrak {t}}} D_i)\) is well-formed. We have:

1. The matrix \({{\,\textrm{xtrace}\,}}(L, X) = \sum _{i=1}^k A_i^{{\mathfrak {t}}}B_i^{{\mathfrak {t}}} + D_i C_i\) is the unique matrix \(L_1\) such that \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}L_1 X^{{\mathfrak {t}}}=L(X)\) for all \(X\in {\mathbb {K}}^{n\times m}\) (this is the gradient of L).

2. The matrix \({{\,\textrm{xtrace}\,}}^{{\textrm{sym}}}(L, X) = {\textrm{sym}}_{{\mathfrak {t}}}(\sum _{i=1}^k A_i^{{\mathfrak {t}}}B_i^{{\mathfrak {t}}} + D_i C_i)\) is the unique matrix \(L_2\in {\textrm{Sym}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\) satisfying \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}(L_2X^{{\mathfrak {t}}}) = L(X)\) for all \(X\in {\textrm{Sym}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\).

3. The matrix \({{\,\textrm{xtrace}\,}}^{{\textrm{skew}}}(L, X) = {\textrm{skew}}_{{\mathfrak {t}}}(\sum _{i=1}^k A_i^{{\mathfrak {t}}}B_i^{{\mathfrak {t}}} + D_i C_i)\) is the unique matrix \(L_3\in {\textrm{Skew}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\) satisfying \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}(L_3X^{{\mathfrak {t}}}) = L(X)\) for all \(X\in {\textrm{Skew}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\).

There is an abuse of notation: \({{\,\textrm{xtrace}\,}}(L, X)\) is not a function of two variables; rather, X should be considered a (symbolic) variable and L a function of X. However, this notation is convenient in symbolic implementation.

Proof

We have \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}({{\,\textrm{xtrace}\,}}(L)X^{{\mathfrak {t}}}) = L(X)\) from

and \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}(C_i X^{{\mathfrak {t}}} D_i) = {{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}( D_iC_i X^{{\mathfrak {t}}})\). Uniqueness follows from the fact that \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}\) is a non-degenerate bilinear form. The last two statements follow from

- \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}({{\,\textrm{xtrace}\,}}(L)X^{{\mathfrak {t}}})={{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}({{\,\textrm{xtrace}\,}}(L)^{{\mathfrak {t}}}X^{{\mathfrak {t}}})\) if \(X^{{\mathfrak {t}}} = X\).

- \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}({{\,\textrm{xtrace}\,}}(L)X^{{\mathfrak {t}}})=-{{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}({{\,\textrm{xtrace}\,}}(L)^{{\mathfrak {t}}}X^{{\mathfrak {t}}})\) if \(X^{{\mathfrak {t}}} = -X\).

\(\square \)
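The three statements of Proposition 4.1 can be checked numerically in the real case, where \({\mathfrak {t}}\) is the transpose and \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}={{\,\textrm{Tr}\,}}\). The sketch below uses random square \(X\in {\mathbb {R}}^{n\times n}\) so that the symmetric and antisymmetric cases can be tested with the same coefficient matrices. The sizes and the variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(4)
n, p, q, k = 4, 3, 2, 2
A = [rng.standard_normal((p, n)) for _ in range(k)]
B = [rng.standard_normal((n, p)) for _ in range(k)]
C = [rng.standard_normal((q, n)) for _ in range(k)]
D = [rng.standard_normal((n, q)) for _ in range(k)]

def L(X):
    # L(X) = sum_i Tr(A_i X B_i) + Tr(C_i X^T D_i)
    return sum(np.trace(a @ X @ b) + np.trace(c @ X.T @ d)
               for a, b, c, d in zip(A, B, C, D))

def sym(M):
    return (M + M.T) / 2

def skew(M):
    return (M - M.T) / 2

# xtrace(L, X) = sum_i A_i^T B_i^T + D_i C_i
L1 = sum(a.T @ b.T + d @ c for a, b, c, d in zip(A, B, C, D))

X = rng.standard_normal((n, n))
X_sym, X_skew = sym(X), skew(X)

err_full = abs(np.trace(L1 @ X.T) - L(X))
err_sym = abs(np.trace(sym(L1) @ X_sym.T) - L(X_sym))
err_skew = abs(np.trace(skew(L1) @ X_skew.T) - L(X_skew))
print(err_full, err_sym, err_skew)
```

All three errors are at rounding level, reflecting that symmetric and antisymmetric matrices are orthogonal under the trace inner product.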

Remark 4.1

The index-raising operation/gradient \({{\,\textrm{xtrace}\,}}\) could be implemented as a symbolic operation on matrix trace expressions, as it involves only linear operations, matrix transpose, and multiplications. It could be used to compute an ambient gradient, for example. For another application, let \({\mathcal {M}}\) be a manifold with ambient space \({\mathbb {K}}^{n\times m}\), and recall \({\textsf{rhess}}_f^{02}(\xi , \eta ) = \hat{f}_{YY}(\xi , \eta ) -\langle {\varGamma }(\xi , \eta ),\hat{f}_{Y}\rangle _{{\mathcal {E}}}\). Assume \(\langle {\varGamma }(\xi , \eta ),\hat{f}_{Y}\rangle _{{\mathcal {E}}} = \sum _i{{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}(A_i \eta B_i) + {{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}(C_i \eta ^{{\mathfrak {t}}} D_i)\) with \(A_i, B_i, C_i, D_i\) not depending on \(\eta \), and identify tangent vectors with their images in \({\mathbb {K}}^{n\times m}\). We have:

as the inner product of the right-hand side with \(\eta \) is \({\textsf{rhess}}_f^{02}(\xi , \eta )\), and the projection ensures it is in the tangent space. If the ambient space is identified with \({\textrm{Sym}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\), \({\textrm{Skew}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\) or a direct sum of matrix spaces, we also have similar statements.

Proposition 4.2

With the same notations as Proposition 4.1:

1. The adjoint of the left multiplication operator by a matrix \(A\in {\mathbb {K}}^{m\times n}\), sending \(X\in {\mathbb {K}}^{n\times p}\) to \(AX\in {\mathbb {K}}^{m\times p}\) is the left multiplication by \(A^{{\mathfrak {t}}}\), sending \(Y\in {\mathbb {K}}^{m\times p}\) to \(A^{{\mathfrak {t}}}Y \in {\mathbb {K}}^{n\times p}\).

2. The adjoint of the right multiplication operator by a matrix \(A\in {\mathbb {K}}^{m\times n}\) from \({\mathbb {K}}^{p\times m}\) to \({\mathbb {K}}^{p\times n}\) is the right multiplication by \(A^{{\mathfrak {t}}}\).

3. The adjoint of the operator sending \(X\mapsto X^{{\mathfrak {t}}}\) for \(X\in {\mathbb {K}}^{m\times m}\) is again the operator \(Y\mapsto Y^{{\mathfrak {t}}}\) for \(Y\in {\mathbb {K}}^{m\times m}\). Adjoint is additive, and \((F\circ G)^{{\mathfrak {t}}} = G^{{\mathfrak {t}}}\circ F^{{\mathfrak {t}}}\) for two linear operators F and G.

4. The adjoint of the left multiplication operator by \(A\in {\mathbb {K}}^{n\times p}\) sending \(X\in {\textrm{Sym}}_{{\mathfrak {t}}, {\mathbb {K}}, p}\) to \(AX\in {\mathbb {K}}^{n\times p}\) is the operator sending \(Y\mapsto \frac{1}{2}(A^{{\mathfrak {t}}}Y+Y^{{\mathfrak {t}}}A)\) for \(Y\in {\mathbb {K}}^{n\times p}\). Conversely, the adjoint of the operator \(Y\mapsto \frac{1}{2}(A^{{\mathfrak {t}}}Y+Y^{{\mathfrak {t}}}A)\in {\textrm{Sym}}_{{\mathfrak {t}}, {\mathbb {K}}, p}\) is the operator \(X\mapsto AX\).

5. The adjoint of the left multiplication operator by \(A\in {\mathbb {K}}^{n\times p}\) sending \(X\in {\textrm{Skew}}_{{\mathfrak {t}}, {\mathbb {K}}, p}\) to \(AX\in {\mathbb {K}}^{n\times p}\) is the operator sending \(Y\mapsto \frac{1}{2}(A^{{\mathfrak {t}}}Y-Y^{{\mathfrak {t}}}A)\) for \(Y\in {\mathbb {K}}^{n\times p}\). Conversely, the adjoint of the operator \(Y\mapsto \frac{1}{2}(A^{{\mathfrak {t}}}Y-Y^{{\mathfrak {t}}}A)\in {\textrm{Skew}}_{{\mathfrak {t}}, {\mathbb {K}}, p}\) is the operator \(X\mapsto AX\).

6. Adjoint is linear on the space of operators. If \(F_1\) and \(F_2\) are two linear operators from a space V to two spaces \(W_1\) and \(W_2\), then the adjoint of the direct sum operator (operator sending X to \(\begin{bmatrix}F_1 X&F_2 X\end{bmatrix}\)) is the map sending \(\begin{bmatrix}A\\ B\end{bmatrix}\) to \(F_1^{{\mathfrak {t}}}A + F_2^{{\mathfrak {t}}}B\). The adjoint of the map sending \(\begin{bmatrix}X_1 \\ X_2 \end{bmatrix}\) to \(FX_1\) is the map \(Y\mapsto \begin{bmatrix}F^{{\mathfrak {t}}}Y \\ 0 \end{bmatrix}\), and more generally, the adjoint of a map sending a row block \(X_i\) of a matrix X to \(FX_i\) is the map sending Y to a matrix whose i-th block is \(F^{{\mathfrak {t}}}Y\) and which is zero outside of this block.
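Statements 1, 4 and 5 can be verified numerically in the complex case, where \({\mathfrak {t}}\) is the Hermitian transpose and the inner product is \({{\,\textrm{Re}\,}}{{\,\textrm{Tr}\,}}(ab^{{{\textsf{H}}}})\). In the sketch below the matrix A in statements 4 and 5 is taken with n rows and p columns so that the product AX with a \(p\times p\) matrix X is defined. The helper names (`inner`, `crandn`, `H`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(5)

def inner(a, b):
    # real inner product Re Tr(a b^H)
    return np.trace(a @ b.conj().T).real

def crandn(*shape):
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

def H(M):
    return M.conj().T

m, n, p = 4, 5, 3

# Statement 1: <A X, Y> = <X, A^H Y>
A = crandn(m, n)
X = crandn(n, p)
Y = crandn(m, p)
lhs1, rhs1 = inner(A @ X, Y), inner(X, H(A) @ Y)

# Statement 4: X Hermitian, adjoint is Y -> (A^H Y + Y^H A) / 2
A4 = crandn(n, p)
Y4 = crandn(n, p)
S = crandn(p, p)
S = (S + H(S)) / 2
lhs4, rhs4 = inner(A4 @ S, Y4), inner(S, (H(A4) @ Y4 + H(Y4) @ A4) / 2)

# Statement 5: X skew-Hermitian, adjoint is Y -> (A^H Y - Y^H A) / 2
K = crandn(p, p)
K = (K - H(K)) / 2
lhs5, rhs5 = inner(A4 @ K, Y4), inner(K, (H(A4) @ Y4 - H(Y4) @ A4) / 2)

print(abs(lhs1 - rhs1), abs(lhs4 - rhs4), abs(lhs5 - rhs5))
```

The adjoint in statement 1 is complex-linear in Y, while the inner product itself is only real-valued, which is why the real part of the trace is the right pairing.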

Most of the proof is just a simple application of trace calculus. For the first statement, the real case follows from \({{\,\textrm{Tr}\,}}(Aab^{{{\textsf{T}}}})={{\,\textrm{Tr}\,}}(a(A^{{{\textsf{T}}}}b)^{{{\textsf{T}}}})\), and \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}(Aab^{{{\textsf{H}}}})={{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}(a(A^{{{\textsf{H}}}}b)^{{{\textsf{H}}}})\) gives us the complex case. Statement 2 is proved similarly, and statement 3 is standard. Statements 4 and 5 are checked by direct substitution, and 6 is just the operator version of the corresponding matrix statement, observing for example:

5 Application to Stiefel Manifold

The Stiefel manifold \({\textrm{St}}_{{\mathbb {K}}, d, n}\) on \({\mathcal {E}}={\mathbb {K}}^{n\times d}\) is defined by the equation \(Y^{{\mathfrak {t}}}Y= I_{d}\), where the tangent space at a point \(Y\) consists of matrices \(\eta \) satisfying \(\eta ^{{\mathfrak {t}}}Y+ Y^{{\mathfrak {t}}}\eta = 0\). When \({\mathbb {K}}={\mathbb {R}}\) we assume \(d< n\), so the manifold is connected. We apply the results of Sect. 3 for the full tangent bundle \({\mathcal {H}}=T{\textrm{St}}_{{\mathbb {K}}, d, n}\). We can consider an ambient metric:

for \(\omega \in {\mathcal {E}}={\mathbb {K}}^{n\times d}\). It is easy to see \(\omega _0-YY^{{\mathfrak {t}}}\omega _0\) is an eigenvector of \({{\textsf{g}}}(Y)\) with eigenvalue \(\alpha _0\), and \(YY^{{\mathfrak {t}}}\omega _1\) is an eigenvector with eigenvalue \(\alpha _1\), for any \(\omega _0,\omega _1\in {\mathcal {E}}\), and these are the only eigenvalues and vectors. Hence, \({{\textsf{g}}}(Y)^{-1}\omega = \alpha _0^{-1}(I_n - YY^{{\mathfrak {t}}})\omega + \alpha ^{-1}_1YY^{{\mathfrak {t}}}\omega \) and \({{\textsf{g}}}\) is a Riemannian metric if \(\alpha _0, \alpha _1\) are positive. We can describe the tangent space as a nullspace of \({{\,\textrm{J}\,}}(Y)\) with \({{\,\textrm{J}\,}}(Y)\omega = \omega ^{{\mathfrak {t}}}Y+ Y^{{\mathfrak {t}}}\omega \in {\mathcal {E}}_{{{\,\textrm{J}\,}}}:={\textrm{Sym}}_{{\mathfrak {t}}, {\mathbb {K}}, d}\). We will evaluate everything at Y, so we will write \({{\,\textrm{J}\,}}\) and \({{\textsf{g}}}\) instead of \({{\,\textrm{J}\,}}(Y)\) and \({{\textsf{g}}}(Y)\), etc. By Proposition 4.2, \({{\,\textrm{J}\,}}^{{\mathfrak {t}}}a= (aY^{{\mathfrak {t}}})^{{\mathfrak {t}}} + Ya=2Ya\) for \(a\in {\mathcal {E}}_{{{\,\textrm{J}\,}}}\). We have \({{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}a=\alpha _0^{-1}2Ya+(\alpha _1^{-1}-\alpha _0^{-1})2Ya= 2\alpha _1^{-1}Ya\). Thus \({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}a= {{\,\textrm{J}\,}}(2\alpha _1^{-1}Ya) =4\alpha _1^{-1}a\) and by Proposition 3.1:

In this case, the ambient gradient \(\hat{f}_{Y}\) is the matrix of partial derivatives of an extension of f on the ambient space \({\mathbb {K}}^{n\times d}\). More conveniently, using the eigenspaces of \({{\textsf{g}}}\), \({\varPi }_{{{\textsf{g}}}}\nu = (I_n-YY^{{\mathfrak {t}}})\nu + Y{\textrm{skew}}_{{\mathfrak {t}}}Y^{{\mathfrak {t}}}\nu \) and \({{\textsf{g}}}^{-1}\hat{f}_{Y}= \alpha _0^{-1}(I_n-YY^{{\mathfrak {t}}})\hat{f}_{Y}+\alpha _1^{-1}YY^{{\mathfrak {t}}}\hat{f}_{Y}\).
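The operators of this section are straightforward to code. The sketch below treats the real case and assumes the ambient metric acts as \({{\textsf{g}}}\omega = \alpha _0\omega + (\alpha _1-\alpha _0)YY^{{{\textsf{T}}}}\omega \), which is consistent with the eigenvalue description above, and that the Riemannian gradient is \({\varPi }_{{{\textsf{g}}}}{{\textsf{g}}}^{-1}\hat{f}_{Y}\) as in Proposition 3.2. The cost \({{\,\textrm{Tr}\,}}(Y^{{{\textsf{T}}}}AY)\), the parameter values, and all function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(6)
n, d = 6, 3
a0, a1 = 1.0, 0.5  # alpha_0, alpha_1 (these values give the canonical metric)

def skew(M):
    return (M - M.T) / 2

def g(Y, w):
    return a0 * w + (a1 - a0) * Y @ (Y.T @ w)

def g_inv(Y, w):
    return w / a0 + (1 / a1 - 1 / a0) * Y @ (Y.T @ w)

def proj(Y, w):
    # eigenspace form: (I - Y Y^T) w + Y skew(Y^T w)
    return w - Y @ (Y.T @ w) + Y @ skew(Y.T @ w)

def proj_J(Y, w):
    # J-based form, using J w = w^T Y + Y^T w, g^{-1} J^t a = (2/a1) Y a
    # and (J g^{-1} J^t)^{-1} a = (a1/4) a
    Jw = w.T @ Y + Y.T @ w
    return w - (2 / a1) * Y @ ((a1 / 4) * Jw)

def inner(a, b):
    return np.trace(a.T @ b)

Y, _ = np.linalg.qr(rng.standard_normal((n, d)))  # a point on the Stiefel manifold
A = rng.standard_normal((n, n))
A = (A + A.T) / 2
f_Y = 2 * A @ Y                                   # ambient gradient of Tr(Y^T A Y)

rgrad = proj(Y, g_inv(Y, f_Y))
xi = proj(Y, rng.standard_normal((n, d)))         # a random tangent vector
w = rng.standard_normal((n, d))

tangent_residual = np.linalg.norm(rgrad.T @ Y + Y.T @ rgrad)
gradient_residual = abs(inner(rgrad, g(Y, xi)) - inner(f_Y, xi))
print(tangent_residual, gradient_residual)
```

The second residual checks the defining property of the Riemannian gradient: its metric pairing with a tangent vector equals the ambient directional derivative.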

If \(\xi \) and \(\eta \) are vector fields, \(({\textrm{D}}_{\xi }{{\textsf{g}}}) \eta = (\alpha _1-\alpha _0)(\xi Y^{{\mathfrak {t}}}+Y\xi ^{{\mathfrak {t}}})\eta \). Using Proposition 4.1, we can take the cross term \({\mathcal {X}}(\xi , \eta ) = (\alpha _1-\alpha _0)(\xi \eta ^{{\mathfrak {t}}}+\eta \xi ^{{\mathfrak {t}}})Y\), thus:

By the tangent condition, \((\xi Y^{{\mathfrak {t}}}\eta +\eta Y^{{\mathfrak {t}}}\xi )= -(\xi \eta ^{{\mathfrak {t}}}+\eta \xi ^{{\mathfrak {t}}})Y\), hence \({\textrm{K}}(\xi , \eta ) = \frac{\alpha _1-\alpha _0}{2}F\) with \(F = Y(\xi ^{{\mathfrak {t}}}\eta + \eta ^{{\mathfrak {t}}}\xi ) - 2(\xi \eta ^{{\mathfrak {t}}} + \eta \xi ^{{\mathfrak {t}}})Y\). We see \(Y^{{\mathfrak {t}}}F\) is symmetric so \({\textrm{skew}}_{{\mathfrak {t}}}Y^{{\mathfrak {t}}}F = 0\), therefore

Using \({{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}})^{-1}({\textrm{D}}_\xi {{\,\textrm{J}\,}})\eta = \frac{1}{2}Y(\xi ^{{\mathfrak {t}}}\eta +\eta ^{{\mathfrak {t}}}\xi )\) to evaluate Eq. (3.13), the connection for two vector fields \(\xi , \eta \) is:

Let \({\varPi }_0 = I_n-YY^{{\mathfrak {t}}}\) and let \(\hat{f}_{YY}\) be the ambient Hessian. From Eq. (3.12):

By Remark 4.1, \({\textsf{rhess}}^{11}_f\xi \) is \({\varPi }_{{{\textsf{g}}}}{{\textsf{g}}}^{-1}{{\,\textrm{xtrace}\,}}({\textsf{rhess}}^{02}_{\xi , \eta }, \eta )\), thus

We note the term inside \({\varPi }_{Y, {{\textsf{g}}}}{{\textsf{g}}}^{-1}\) can be modified by any expression sent to zero by \({\varPi }_{Y, {{\textsf{g}}}}{{\textsf{g}}}^{-1}\). The case \(\alpha _0 = 1, \alpha _1=\frac{1}{2}\) corresponds to the canonical metric on a Stiefel manifold, where the connection is given by formula 2.49 of [9] in a slightly different form; the two agree, as one sees by noting \(YY^{{\mathfrak {t}}}(\xi \eta ^{{\mathfrak {t}}}+\eta \xi ^{{\mathfrak {t}}})Y=Y(\xi ^{{\mathfrak {t}}}YY^{{\mathfrak {t}}}\eta + \eta ^{{\mathfrak {t}}}YY^{{\mathfrak {t}}}\xi )\), which follows from the tangent constraint. The case \(\alpha _0 = \alpha _1 = 1\) corresponds to the constant trace metric, where we do not need to compute \({\textrm{K}}\). This family of metrics has been studied in [11], where a closed-form geodesic formula is provided. In [20] we also provide efficient closed-form geodesic formulas similar to those in [9].
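The identity used in this comparison can be tested numerically. The sketch below, for the real case, assumes a Christoffel function of the form \(\varGamma (\xi , \eta ) = \frac{1}{2}Y(\xi ^{{\mathfrak {t}}}\eta +\eta ^{{\mathfrak {t}}}\xi ) + \frac{\alpha _0-\alpha _1}{\alpha _0}(I_n-YY^{{\mathfrak {t}}})(\xi \eta ^{{\mathfrak {t}}}+\eta \xi ^{{\mathfrak {t}}})Y\), which is our reading of the computation above and not a quotation of the displayed formula; the function names `tangent` and `gamma` are hypothetical. The quadratic form \(\varDelta \varDelta ^{{\mathfrak {t}}}Y + Y\varDelta ^{{\mathfrak {t}}}(I_n-YY^{{\mathfrak {t}}})\varDelta \) is how we read formula 2.49 of [9].

```python
import numpy as np

rng = np.random.default_rng(1)
n, d = 7, 3
Y, _ = np.linalg.qr(rng.standard_normal((n, d)))
P0 = np.eye(n) - Y @ Y.T

def tangent(w):
    """Project an arbitrary n x d matrix onto the Stiefel tangent space at Y."""
    return w - Y @ (0.5 * (Y.T @ w + w.T @ Y))

def gamma(xi, eta, alpha0, alpha1):
    """Assumed Christoffel function for the (alpha0, alpha1) family of metrics."""
    s = xi.T @ eta + eta.T @ xi
    c = xi @ eta.T + eta @ xi.T
    return 0.5 * Y @ s + (alpha0 - alpha1) / alpha0 * (P0 @ c @ Y)

xi = tangent(rng.standard_normal((n, d)))
eta = tangent(rng.standard_normal((n, d)))

# Identity quoted in the text, valid under the tangent constraint.
lhs = Y @ Y.T @ (xi @ eta.T + eta @ xi.T) @ Y
rhs = Y @ (xi.T @ Y @ Y.T @ eta + eta.T @ Y @ Y.T @ xi)
err_identity = np.linalg.norm(lhs - rhs)

# Differentiating eta^T Y + Y^T eta = 0 along xi shows the Christoffel term G must
# satisfy G^T Y + Y^T G = xi^T eta + eta^T xi for the derivative to stay tangent.
G = gamma(xi, eta, 1.0, 0.3)
err_compat = np.linalg.norm(G.T @ Y + Y.T @ G - (xi.T @ eta + eta.T @ xi))

# Canonical metric (alpha0 = 1, alpha1 = 1/2) compared with the form from [9].
canon = xi @ xi.T @ Y + Y @ xi.T @ P0 @ xi
err_canonical = np.linalg.norm(gamma(xi, xi, 1.0, 0.5) - canon)
```

The compatibility check holds for every positive \(\alpha _0, \alpha _1\), since the \(\alpha \)-dependent term is annihilated by \(Y^{{\mathfrak {t}}}\).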

6 Quotients of a Stiefel Manifold and Flag Manifolds

Continuing with the setup in the previous section, consider a Stiefel manifold \({\textrm{St}}_{{\mathbb {K}}, d, n}\) (we will assume \(0<d < n\)). The metric induced by the operator \({{\textsf{g}}}\) in Eq. (5.1), \(\alpha _0{{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}\omega _1^{{\mathfrak {t}}}\omega _2 + (\alpha _1-\alpha _0){{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}\omega _1^{{\mathfrak {t}}}YY^{{\mathfrak {t}}}\omega _2\) with \(Y\in {\textrm{St}}_{{\mathbb {K}}, d, n}\), \(\omega _1, \omega _2\in {\mathcal {E}}\), is preserved if we replace \(Y, \omega _1, \omega _2\) by \(YU, \omega _1U, \omega _2U\) for \(U^{{\mathfrak {t}}}U = I_d\). In other words, if we define the \({\mathfrak {t}}\)-orthogonal group by \({\textrm{U}}_{{\mathbb {K}}, d}:= \{U\in {\mathbb {K}}^{d\times d}| U^{{\mathfrak {t}}}U = I_d\}\), then it acts by isometries of \({{\textsf{g}}}\). Therefore, any subgroup G of \({\textrm{U}}_{{\mathbb {K}}, d}\) acting on \({\textrm{St}}_{{\mathbb {K}}, d, n}\) by right multiplication also preserves the metric, and if G is compact, we can consider the quotient manifold \({\textrm{St}}_{{\mathbb {K}}, d, n}/G\), identifying \(Y\in {\textrm{St}}_{{\mathbb {K}}, d, n}\) with YU for \(U\in G\).

If the cost function f on the Stiefel manifold is invariant when applying transformations by a group G, it may be advantageous to consider optimization on a quotient manifold. The case of the Rayleigh quotient cost function \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}Y^{{\mathfrak {t}}}A Y\) for \(Y\in {\textrm{St}}_{{\mathbb {K}}, d, n}\), with A a positive-definite matrix, is well known. As the cost function is invariant if we replace Y by YU for \(U\in G={\textrm{U}}_{{\mathbb {K}}, d}\), we can optimize over the Grassmann manifold \({\textrm{St}}_{{\mathbb {K}}, d, n}/{\textrm{U}}_{{\mathbb {K}}, d}\). When \(Y\in {\textrm{St}}_{{\mathbb {K}}, d, n}\) is divided into two column blocks \(Y=[Y_1|Y_2]\) of \(d_1 + d_2=d\) columns, the cost function \(f_1={{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}Y_1^{{\mathfrak {t}}}A_1Y_1 + {{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}Y_2^{{\mathfrak {t}}}A_2Y_2\) for two positive-definite matrices \(A_1, A_2\in {\mathbb {K}}^{n\times n}\) is invariant if we replace \(Y_1, Y_2\) by \(Y_1U_1, Y_2U_2\) for \(U_1\in {\textrm{U}}_{{\mathbb {K}}, d_1}, U_2\in {\textrm{U}}_{{\mathbb {K}}, d_2}\), while the cost function \(f_2 = {{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}Y_1^{{\mathfrak {t}}}A_1Y_1 + {{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}Y_2B_2Y_2^{{\mathfrak {t}}}\) for positive-definite matrices \(A_1\in {\mathbb {K}}^{n\times n}, B_2\in {\mathbb {K}}^{d_2\times d_2}\) is invariant if we replace \(Y_1\) by \(Y_1U_1\) only, for a generic \(B_2\). In the first case, we can optimize over \({\textrm{St}}_{{\mathbb {K}}, d, n}/({\textrm{U}}_{{\mathbb {K}}, d_1}\times {\textrm{U}}_{{\mathbb {K}}, d_2})\) and in the second case we can optimize over \({\textrm{St}}_{{\mathbb {K}}, d, n}/{\textrm{U}}_{{\mathbb {K}}, d_1}\).
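The block invariance of \(f_1\) is easy to observe numerically. The following sketch, for the real case, uses randomly generated positive-definite \(A_1, A_2\) and orthogonal factors; the helper names `rand_spd` and `rand_orth` are our own, and the sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(2)
n, d1, d2 = 8, 2, 3
d = d1 + d2

def rand_spd(k):
    """A random symmetric positive-definite k x k matrix."""
    m = rng.standard_normal((k, k))
    return m @ m.T + k * np.eye(k)

def rand_orth(k):
    """A random k x k orthogonal matrix (Q factor of a Gaussian matrix)."""
    q, _ = np.linalg.qr(rng.standard_normal((k, k)))
    return q

A1, A2 = rand_spd(n), rand_spd(n)
Y, _ = np.linalg.qr(rng.standard_normal((n, d)))

def f1(Y):
    """Sum of two Rayleigh-type terms, one per column block of Y."""
    Y1, Y2 = Y[:, :d1], Y[:, d1:]
    return np.trace(Y1.T @ A1 @ Y1) + np.trace(Y2.T @ A2 @ Y2)

# Block-diagonal transformation: each column block is rotated separately.
U1, U2 = rand_orth(d1), rand_orth(d2)
Y_block = np.hstack([Y[:, :d1] @ U1, Y[:, d1:] @ U2])
gap_block = abs(f1(Y_block) - f1(Y))

# A generic element of the full orthogonal group mixes the two blocks.
gap_generic = abs(f1(Y @ rand_orth(d)) - f1(Y))
```

Here `gap_block` vanishes up to round-off, while `gap_generic` is generically nonzero when \(A_1\ne A_2\), which is why \(f_1\) descends to the quotient by \({\textrm{U}}_{{\mathbb {K}}, d_1}\times {\textrm{U}}_{{\mathbb {K}}, d_2}\) but not to the Grassmann manifold.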
We will define an optimization framework for a quotient of \({\textrm{St}}_{{\mathbb {K}}, d, n}\) by a group G, \(\{I_d\}\subset G\subset {\textrm{U}}_{{\mathbb {K}}, d}\), where G consists of block-diagonal matrices with \(q+1\) diagonal blocks, \(q\ge 0\), at most one of which is trivial. The case \(G =\{I_d\}\) corresponds to the Stiefel manifold, \(G={\textrm{U}}_{{\mathbb {K}}, d}\) corresponds to the Grassmann manifold, and the intermediate cases include flag manifolds. Background material for optimization on flag manifolds is in [21, 28], but the examples just described and the review below should be sufficient to understand the setup and the results. We generalize the formula for \({\textsf{rhess}}^{02}\) in [28, Proposition 25] to the full family of metrics in [11] and provide a formula for \({\textsf{rhess}}^{11}\).

Let us describe the group G of block-diagonal matrices considered here. Assume there is a sequence of positive integers \(\varvec{\hat{d}}= \{d_1,\cdots , d_q\}\), \(d_i > 0\) for \(1\le i\le q\), such that \(\sum _{i=1}^q d_i \le d\). Set \(d_{q+1} = d - \sum _{i=1}^q d_i\), thus \(d_{q+1}\ge 0\). This sequence allows a partition of a matrix \(A\in {\mathbb {K}}^{d\times d}\) into \((q+1)\times (q+1)\) blocks \(A_{[ij]}\in {\mathbb {K}}^{d_i\times d_j}\), \(1\le i, j\le q+1\). The right-most or bottom blocks, corresponding to i or j equal to \(q+1\), are empty when \(d_{q+1} = 0\). Consider the subgroup \(G={\textrm{U}}_{{\mathbb {K}}, \varvec{\hat{d}}}= {\textrm{U}}_{{\mathbb {K}}, d_1}\times {\textrm{U}}_{{\mathbb {K}}, d_2}\times \cdots \times {\textrm{U}}_{{\mathbb {K}}, d_q}\times \{I_{d_{q+1}}\}\) of \({\textrm{U}}_{{\mathbb {K}}, d}\) of block-diagonal matrices U, with the i-th diagonal block from the top \(U_{[ii]}\in {\textrm{U}}_{{\mathbb {K}}, d_i}\), \(1\le i\le q\), and \(U_{[q+1, q+1]} = I_{d_{q+1}}\). An element \(U\in {\textrm{U}}_{{\mathbb {K}}, \varvec{\hat{d}}}\) has the form
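Writing \(U_i := U_{[ii]}\) for the diagonal blocks, the description above amounts to the following block-diagonal matrix (our rendering of the stated conditions):

\[
U = \begin{bmatrix} U_1 & & & \\ & \ddots & & \\ & & U_q & \\ & & & I_{d_{q+1}} \end{bmatrix}, \qquad U_i\in {\textrm{U}}_{{\mathbb {K}}, d_i},\quad 1\le i\le q,
\]

where the last block is absent when \(d_{q+1} = 0\).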

and transforms \(Y = [Y_1| \cdots |Y_q|Y_{q+1}]\) to \([Y_1U_1| \cdots |Y_qU_q|Y_{q+1}]\). When \(q=0\), we define \(\varvec{\hat{d}}=\emptyset \) and \({\textrm{U}}_{{\mathbb {K}}, \varvec{\hat{d}}}= \{I_{d}\}\). We will consider the manifold \({\textrm{St}}_{{\mathbb {K}}, d, n}/G\) for \(G={\textrm{U}}_{{\mathbb {K}}, \varvec{\hat{d}}}\). Thus, when \(\varvec{\hat{d}}= \emptyset \), this quotient is the Stiefel manifold \({\textrm{St}}_{{\mathbb {K}}, d, n}\) itself; when \(\varvec{\hat{d}}= \{d\}\), it is the Grassmann manifold. When \(d_{q+1} = 0\), i.e. \(\sum _{i=1}^q d_i = d\), the quotient is called a flag manifold, denoted by \({{\,\textrm{Flag}\,}}(d_1,\cdots , d_q; n, {\mathbb {K}})\). In the example above for \(q=2\), if \(d_1 + d_2 = d\), \({\textrm{St}}_{{\mathbb {K}}, d, n}/({\textrm{U}}_{{\mathbb {K}}, d_1}\times {\textrm{U}}_{{\mathbb {K}}, d_2})\) is a flag manifold (we do not have a special name if \(d_1 + d_2 < d\)). Therefore, these quotients can be considered as intermediate objects between a Stiefel and a Grassmann manifold, as we will soon see more clearly.

Define the operator \({\textrm{symf}}\) acting on \({\mathbb {K}}^{d\times d}\), sending \(A\in {\mathbb {K}}^{d\times d}\) to \(A_{{\textrm{symf}}}\) such that \((A_{{\textrm{symf}}})_{[ij]} = \frac{1}{2}(A_{[ij]} +A_{[ji]}^{{\mathfrak {t}}})\) if \(1\le i\ne j\le q+1\) or \(i=j=q+1\), and \((A_{{\textrm{symf}}})_{[ii]} = A_{[ii]}\) if \(1 \le i\le q\). Thus, \({\textrm{symf}}\) preserves the diagonal blocks for \(1\le i \le q\), but symmetrizes the off-diagonal blocks and the \(q+1\)-th diagonal block. The following illustrates the operation when \(q=2\) for \(A = (A_{[ij]})\in {\mathbb {K}}^{d\times d}\).
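Spelled out blockwise from the definition just given (a direct transcription for \(q=2\), so that the blocks are indexed by \(1\le i, j\le 3\)):

\[
A_{{\textrm{symf}}} = \begin{bmatrix}
A_{[11]} & \frac{1}{2}(A_{[12]}+A_{[21]}^{{\mathfrak {t}}}) & \frac{1}{2}(A_{[13]}+A_{[31]}^{{\mathfrak {t}}})\\
\frac{1}{2}(A_{[21]}+A_{[12]}^{{\mathfrak {t}}}) & A_{[22]} & \frac{1}{2}(A_{[23]}+A_{[32]}^{{\mathfrak {t}}})\\
\frac{1}{2}(A_{[31]}+A_{[13]}^{{\mathfrak {t}}}) & \frac{1}{2}(A_{[32]}+A_{[23]}^{{\mathfrak {t}}}) & \frac{1}{2}(A_{[33]}+A_{[33]}^{{\mathfrak {t}}})
\end{bmatrix}.
\]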

For the case \(\varvec{\hat{d}}=\emptyset \) of the full Stiefel manifold, \({\textrm{symf}}\) is just \({\textrm{sym}}_{{\mathfrak {t}}}\) and for the case \(\varvec{\hat{d}}=\{d\}\) of the Grassmann manifold, \({\textrm{symf}}\) is the identity map. We show these quotients share similar Riemannian optimization settings.
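A minimal sketch of \({\textrm{symf}}\) for real matrices follows, with the block sizes passed as a tuple; the function name `symf` and its signature are our own choices. It relies on the observation that the \((i,j)\) block of \(\frac{1}{2}(A+A^{{\mathfrak {t}}})\) is \(\frac{1}{2}(A_{[ij]}+A_{[ji]}^{{\mathfrak {t}}})\), so it is enough to symmetrize the whole matrix and then restore the first q diagonal blocks.

```python
import numpy as np

def symf(A, d_hat):
    """Apply symf for block sizes d_hat = (d_1, ..., d_q).

    The trailing block has size d - sum(d_hat), possibly zero.
    """
    A = np.asarray(A, dtype=float)
    d = A.shape[0]
    if A.shape != (d, d) or sum(d_hat) > d:
        raise ValueError("A must be square with sum(d_hat) <= d")
    out = 0.5 * (A + A.T)            # symmetrize every block
    start = 0
    for di in d_hat:                 # restore the first q diagonal blocks unchanged
        sl = slice(start, start + di)
        out[sl, sl] = A[sl, sl]
        start += di
    return out

rng = np.random.default_rng(5)
d = 5
A = rng.standard_normal((d, d))
B = rng.standard_normal((d, d))
d_hat = (2, 1)                       # q = 2, trailing block of size 2

# Special cases named in the text.
err_stiefel = np.linalg.norm(symf(A, ()) - 0.5 * (A + A.T))      # d_hat empty: sym
err_grassmann = np.linalg.norm(symf(A, (d,)) - A)                # d_hat = {d}: identity

# symf is idempotent and self-adjoint for the Frobenius inner product.
err_idempotent = np.linalg.norm(symf(symf(A, d_hat), d_hat) - symf(A, d_hat))
err_selfadjoint = abs(np.sum(symf(A, d_hat) * B) - np.sum(A * symf(B, d_hat)))
```

The self-adjointness checked in the last line is the property used repeatedly in the proof of Theorem 6.1.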

Theorem 6.1

With the metric in Eq. (5.1), the horizontal space \({\mathcal {H}}_Y\) at \(Y\in {\textrm{St}}_{{\mathbb {K}}, d, n}\) of the quotient \({\textrm{St}}_{{\mathbb {K}}, d, n}\rightarrow {\textrm{St}}_{{\mathbb {K}}, d, n}/{\textrm{U}}_{{\mathbb {K}}, \varvec{\hat{d}}}\) consists of matrices \(\omega \in {\mathcal {E}}:={\mathbb {K}}^{n\times d}\) such that

or equivalently, \(Y^{{\mathfrak {t}}}\omega \) is \({\mathfrak {t}}\)-antisymmetric with the first q diagonal blocks \(((Y^{{\mathfrak {t}}}\omega )_{{\textrm{symf}}})_{[ii]}\), \(1\le i\le q\), vanishing. For \(\omega \in {\mathcal {E}}={\mathbb {K}}^{n\times d}\), the projection \({\varPi }_{{\mathcal {H}}}\) from \({\mathcal {E}}\) to \({\mathcal {H}}_Y\) and the Riemannian gradient are given by

Let \({\varPi }_0 = I_n - YY^{{\mathfrak {t}}}\). For two vector fields \(\xi , \eta \), the horizontal lift of the Levi-Civita connection and Riemannian Hessians are given by

Proof

First we note that \({\textrm{symf}}\) is a self-adjoint operator, as both the identity operator on the first q diagonal blocks and the symmetrization operator on the remaining blocks are self-adjoint. The orbit of \(Y\in {\textrm{St}}_{{\mathbb {K}}, d, n}\) under the action of \({\textrm{U}}_{{\mathbb {K}}, \varvec{\hat{d}}}\) is \(Y{\textrm{U}}_{{\mathbb {K}}, \varvec{\hat{d}}}\), thus the vertical space consists of matrices of the form YD with D block-diagonal, \({\mathfrak {t}}\)-skewsymmetric and \(D_{[(q+1),(q+1)]} = 0\). Since \({{\textsf{g}}}YD = \alpha _1 YD\), a horizontal vector \(\omega \) satisfies the tangent condition \({\textrm{sym}}_{{\mathfrak {t}}}(Y^{{\mathfrak {t}}}\omega ) = 0\) and \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}(\omega ^{{\mathfrak {t}}}YD) = 0\) for all such D. This shows the first q diagonal blocks of \(Y^{{\mathfrak {t}}}\omega \) are zero, hence \((Y^{{\mathfrak {t}}}\omega )_{{\textrm{symf}}} = 0\).

For the projection, we proceed as in the Stiefel case, with the map \({{\,\textrm{J}\,}}\omega = (Y^{{\mathfrak {t}}}\omega )_{{\textrm{symf}}}\), mapping \({\mathcal {E}}\) to \({\mathcal {E}}_{{{\,\textrm{J}\,}}}= \{ A\in {\mathbb {K}}^{d\times d}| A_{[ij]} =A_{[ji]}^{{\mathfrak {t}}}, 1\le i \ne j\le q+1 \text { or } i=j=q+1\}\). Since \({\textrm{symf}}\) is self-adjoint, \({{\,\textrm{J}\,}}^{{\mathfrak {t}}} A = YA_{{\textrm{symf}}} = YA\) for \(A\in {\mathcal {E}}_{{{\,\textrm{J}\,}}}\). From here we get \(({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}})A = \alpha _1^{-1}A\) and Eq. (6.2) follows. Equation (6.3) is obtained by substituting \({{\textsf{g}}}^{-1}\hat{f}_{Y}\) into Eq. (6.2), noting \((Y^{{\mathfrak {t}}}({{\textsf{g}}}^{-1}\hat{f}_{Y}))_{{\textrm{symf}}} = \alpha _1^{-1}(Y^{{\mathfrak {t}}}\hat{f}_{Y})_{{\textrm{symf}}}\), using the eigendecomposition of \({{\textsf{g}}}\).
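The projection argument can be tried out numerically. The sketch below, for the real case, assumes that the computation above yields \({\varPi }_{{\mathcal {H}}}\omega = \omega - Y(Y^{{\mathfrak {t}}}\omega )_{{\textrm{symf}}}\) (what one gets by inserting \({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}} = \alpha _1^{-1}\,\textrm{id}\) into the projection formula of Proposition 3.1); the names `proj_h` and `random_vertical`, the block sizes and the random stand-in for \(\hat{f}_{Y}\) are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(3)
n, d = 7, 4
d_hat = (2, 1)                       # q = 2; the trailing block has size d - 3 = 1
alpha0, alpha1 = 1.0, 0.7
Y, _ = np.linalg.qr(rng.standard_normal((n, d)))

def symf(A):
    """Symmetrize all blocks except the first q diagonal blocks."""
    out = 0.5 * (A + A.T)
    start = 0
    for di in d_hat:
        sl = slice(start, start + di)
        out[sl, sl] = A[sl, sl]
        start += di
    return out

def g(omega):
    yyt = Y @ (Y.T @ omega)
    return alpha0 * (omega - yyt) + alpha1 * yyt

def g_inv(omega):
    yyt = Y @ (Y.T @ omega)
    return (omega - yyt) / alpha0 + yyt / alpha1

def proj_h(omega):
    """Assumed horizontal projection: omega - Y (Y^T omega)_symf."""
    return omega - Y @ symf(Y.T @ omega)

def random_vertical():
    """Y D with D block-diagonal, skew-symmetric, and zero trailing block."""
    D = np.zeros((d, d))
    start = 0
    for di in d_hat:
        B = rng.standard_normal((di, di))
        D[start:start + di, start:start + di] = B - B.T
        start += di
    return Y @ D

omega = rng.standard_normal((n, d))
h = proj_h(omega)

err_idempotent = np.linalg.norm(proj_h(h) - h)
err_horizontal = np.linalg.norm(symf(Y.T @ h))            # (Y^T h)_symf = 0
err_vertical = abs(np.sum(h * g(random_vertical())))      # g-orthogonal to the orbit

# Gradient: projecting g^{-1} f_Y agrees with the expanded eigenspace expression.
fY = rng.standard_normal((n, d))                          # stand-in ambient gradient
rgrad = proj_h(g_inv(fY))
expanded = (fY - Y @ (Y.T @ fY)) / alpha0 + (Y @ (Y.T @ fY) - Y @ symf(Y.T @ fY)) / alpha1
err_grad = np.linalg.norm(rgrad - expanded)
```

With `d_hat = ()` the same code reduces to the Stiefel projection, and with `d_hat = (d,)` to the Grassmann projection \((I_n-YY^{{\mathfrak {t}}})\omega \).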

For the Levi-Civita connection, we use Eq. (3.10). For two horizontal vector fields \(\xi , \eta \), \(({\textrm{D}}_{\xi }{\varPi }_{{\mathcal {H}}})\eta = -\xi (Y^{{\mathfrak {t}}}\eta )_{{\textrm{symf}}} - Y(\xi ^{{\mathfrak {t}}}\eta )_{{\textrm{symf}}}=-Y(\xi ^{{\mathfrak {t}}}\eta )_{{\textrm{symf}}}\) and \({\varPi }_{{\mathcal {H}}}={\varPi }_{{\mathcal {H}}}{\varPi }\), where \({\varPi }\) is the Stiefel projection Eq. (5.2), hence \({\varPi }_{{\mathcal {H}}}{{\textsf{g}}}^{-1}{\textrm{K}}(\xi , \eta ) = {\varPi }{{\textsf{g}}}^{-1}{\textrm{K}}(\xi , \eta ) - Y(Y^{{\mathfrak {t}}}{\varPi }{{\textsf{g}}}^{-1}{\textrm{K}}(\xi , \eta ))_{{\textrm{symf}}}\). The last term vanishes from Eq. (5.3), and we have Eq. (6.4).

Equation (6.5) follows from Eq. (3.12). We derive Eq. (6.6) from Remark 4.1 and the self-adjointness of \({\textrm{symf}}\), expanding \({{\,\mathrm{Tr_{{\mathbb {R}}}}\,}}\hat{f}_{Y}Y(\xi ^{{\mathfrak {t}}}\eta )_{{\textrm{symf}}}\) to

\(\square \)

7 Positive-Definite Matrices

Consider the manifold \({\textrm{S}}^{+}_{{\mathbb {K}}, n}\) of \({{\mathfrak {t}}}\)-symmetric positive-definite matrices in \({\mathbb {K}}^{n\times n}\). In our approach, we take \({\mathcal {E}}={\mathbb {K}}^{n\times n}\) with its Frobenius inner product. The metric \({{\textsf{g}}}\) is \(\langle \xi , {{\textsf{g}}}\eta \rangle _{{\mathcal {E}}} = {{\,\textrm{Tr}\,}}(\xi Y^{-1}\eta Y^{-1})\), with the metric operator \({{\textsf{g}}}:\eta \mapsto Y^{-1}\eta Y^{-1}\) for two vector fields \(\xi , \eta \). The full tangent bundle \({\mathcal {H}}=T{\textrm{S}}^{+}_{{\mathbb {K}}, n}\) is identified fiber-wise with the nullspace of the operator \({{\,\textrm{J}\,}}:\eta \mapsto {{\,\textrm{J}\,}}\eta = \eta - \eta ^{{\mathfrak {t}}}\), with \({\mathcal {E}}_{{{\,\textrm{J}\,}}} = {\textrm{Skew}}_{{\mathfrak {t}}, {\mathbb {K}}, n}\). By item 5 in Proposition 4.2, we have \({{\,\textrm{J}\,}}^{{\mathfrak {t}}} a= 2a\) where a is a \({\mathfrak {t}}\)-antisymmetric matrix. From here \({{\,\textrm{J}\,}}{{\textsf{g}}}^{-1}{{\,\textrm{J}\,}}^{{\mathfrak {t}}}a= 4YaY\) and (write \({\varPi }\) for \({\varPi }_{{{\textsf{g}}}}\)):

Thus, the Riemannian gradient is \({\varPi }{{\textsf{g}}}^{-1}\hat{f}_{Y}=\frac{1}{2}Y(\hat{f}_{Y}+\hat{f}_{Y}^{{\mathfrak {t}}})Y\). Next, we compute \(({\textrm{D}}_{\xi }{{\textsf{g}}})\eta = {\textrm{D}}_{\xi |\eta \text { constant}}(Y^{-1}\eta Y^{-1}) = -Y^{-1}\xi Y^{-1}\eta Y^{-1} - Y^{-1}\eta Y^{-1}\xi Y^{-1}\), where we keep \(\eta \) constant in the derivative, as we evaluate \({\textrm{D}}_{\xi }{{\textsf{g}}}\) as an operator-valued function. From here, \(({\textrm{D}}_{\eta }{{\textsf{g}}})\xi =({\textrm{D}}_{\xi }{{\textsf{g}}})\eta \). We note for three vector fields \(\xi , \eta , \xi _0\)

Thus, we can take \({\mathcal {X}}(\xi , \eta )=-Y^{-1}\eta Y^{-1}\xi Y^{-1} - Y^{-1}\xi Y^{-1}\eta Y^{-1}\) and
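As a worked sketch (assuming the same convention \({\textrm{K}}(\xi , \eta ) = \frac{1}{2}(({\textrm{D}}_{\xi }{{\textsf{g}}})\eta + ({\textrm{D}}_{\eta }{{\textsf{g}}})\xi - {\mathcal {X}}(\xi , \eta ))\) that the Stiefel computation in Section 5 is consistent with), the three terms coincide up to sign, so

\[
{\textrm{K}}(\xi , \eta ) = -\frac{1}{2}\left( Y^{-1}\xi Y^{-1}\eta Y^{-1} + Y^{-1}\eta Y^{-1}\xi Y^{-1}\right) ,\qquad {{\textsf{g}}}^{-1}{\textrm{K}}(\xi , \eta ) = -\frac{1}{2}\left( \xi Y^{-1}\eta + \eta Y^{-1}\xi \right) .
\]

For \({\mathfrak {t}}\)-symmetric \(\xi , \eta \) the last expression is already \({\mathfrak {t}}\)-symmetric, hence fixed by \({\varPi }\), and \({{\,\textrm{J}\,}}\) does not depend on Y, so \({\textrm{D}}_{\xi }{{\,\textrm{J}\,}}= 0\). This recovers the familiar connection of the affine-invariant metric,

\[
\nabla _{\xi }\eta = {\textrm{D}}_{\xi }\eta - \frac{1}{2}\left( \xi Y^{-1}\eta + \eta Y^{-1}\xi \right) .
\]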

Hence, the Riemannian Hessian bilinear form \({\textsf{rhess}}^{02}(\xi , \eta )\) is

Using a symmetric version of Remark 4.1, \({\textsf{rhess}}^{11}_f\xi = {\varPi }_{{{\textsf{g}}}}{{\textsf{g}}}^{-1}{\textrm{sym}}_{{\mathfrak {t}}}(\hat{f}_{YY}\xi + \frac{1}{2}(\hat{f}_{Y}\xi Y^{-1} + Y^{-1}\xi \hat{f}_{Y}))\). We get the following formula, as in [8]:
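The gradient and Hessian expressions of this section can be compared against finite differences along a geodesic. The sketch below treats real symmetric matrices and uses the standard geodesic \(Y^{1/2}\exp (tY^{-1/2}\xi Y^{-1/2})Y^{1/2}\) of the affine-invariant metric; the test function \(f(Y) = {{\,\textrm{Tr}\,}}(AY) + \frac{1}{2}{{\,\textrm{Tr}\,}}(Y^{{\mathfrak {t}}}Y)\), the helper names, and the step size are our own assumptions.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 4
M = rng.standard_normal((n, n))
Y = M @ M.T + n * np.eye(n)                  # a symmetric positive-definite point
A = rng.standard_normal((n, n))
A = 0.5 * (A + A.T)
xi = rng.standard_normal((n, n))
xi = 0.5 * (xi + xi.T)                       # a tangent vector (symmetric matrix)

def sym(a):
    return 0.5 * (a + a.T)

def f(P):
    return np.trace(A @ P) + 0.5 * np.trace(P.T @ P)

def egrad(P):                                # ambient gradient of f
    return A + P

def ehess(P, v):                             # ambient Hessian of f applied to v
    return v

def metric(P, u, v):
    """Affine-invariant inner product Tr(u P^{-1} v P^{-1})."""
    Pinv = np.linalg.inv(P)
    return np.trace(u @ Pinv @ v @ Pinv)

def rgrad(P):
    return 0.5 * P @ (egrad(P) + egrad(P).T) @ P

def rhess11(P, v):
    """Pi g^{-1} sym(f_YY v + (f_Y v P^{-1} + P^{-1} v f_Y) / 2), i.e. P sym(.) P."""
    Pinv = np.linalg.inv(P)
    fY = egrad(P)
    inner = ehess(P, v) + 0.5 * (fY @ v @ Pinv + Pinv @ v @ fY)
    return P @ sym(inner) @ P

def sym_fun(S, fn):
    """Apply a scalar function to a symmetric matrix through its eigenvalues."""
    w, V = np.linalg.eigh(sym(S))
    return (V * fn(w)) @ V.T

def geodesic(t):
    R = sym_fun(Y, np.sqrt)
    Rinv = sym_fun(Y, lambda w: 1.0 / np.sqrt(w))
    return R @ sym_fun(t * (Rinv @ xi @ Rinv), np.exp) @ R

# Gradient: <rgrad, xi>_g equals the directional derivative Tr(f_Y^T xi).
err_grad = abs(metric(Y, rgrad(Y), xi) - np.trace(egrad(Y).T @ xi))

# Hessian: rhess02(xi, xi) equals the second derivative of f along the geodesic.
h = 1e-3
second = (f(geodesic(h)) - 2.0 * f(geodesic(0.0)) + f(geodesic(-h))) / h**2
rhess02 = metric(Y, xi, rhess11(Y, xi))
err_hess = abs(second - rhess02)
```

The Hessian comparison is only accurate to the order of the finite-difference error, so `err_hess` should be small relative to `rhess02` without reaching round-off level.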

8 A Family of Metrics for the Manifold of Positive-Semidefinite Matrices of Fixed Rank