Abstract

In this paper, we study a partially observed recursive optimization problem, which is time inconsistent in the sense that it does not admit the Bellman optimality principle. To obtain the desired results, we establish the Kalman–Bucy filtering equations for a family of parameterized forward and backward stochastic differential equations, which is a Hamiltonian system derived from the general maximum principle for the fully observed time-inconsistency recursive optimization problem. By means of the backward separation technique, the equilibrium control for the partially observed time-inconsistency recursive optimization problem is obtained, which is a feedback of the state filtering estimation. To illustrate the applications of theoretical results, an insurance premium policy problem under partial information is presented, and the observable equilibrium policy is derived explicitly.

1 Introduction

The time-inconsistency control problem has a long research history. Among the first systematic treatments of this problem was the pioneering work of Strotz [1], in which a deterministic Ramsey problem is considered by viewing it within a game theoretic framework and looking for Nash equilibrium points. Further work along this line in continuous and discrete time was done in [2–6]. Recently, a renewed interest in these problems has arisen, as for the first time, a precise definition of equilibrium among the class of feedback controls in continuous time was introduced in [7, 8], and [9]. The authors derived some generalized forms of the Hamilton–Jacobi–Bellman equations, which are systems of partial differential equations, and they also provided rigorous verification theorems.

However, besides the dynamic programming principle, there exists another powerful tool, the maximum principle, to deal with optimal control problems. Peng [10] established the general maximum principle for a completely observed forward stochastic control system. It is well known that the optimal state equation and the associated adjoint equation constitute a Hamiltonian system, which is a forward and backward stochastic differential equation (FBSDE). Hu et al. [11] adopted the maximum principle method to investigate a time-inconsistency linear-quadratic stochastic control problem, where a family of parameterized FBSDEs, also called the Hamiltonian system, was introduced.

The works mentioned above all assume that the information of dynamic systems is fully available to the controllers, i.e., they can observe the random noises and state equations completely. However, controllers can usually obtain only partial information, which renders this assumption unrealistic. Accordingly, forward stochastic control problems under partial information have been studied in [12–16]. Yu [17] investigated a model of optimal investment and consumption with both habit formation and partial observations in incomplete Itô process markets by applying the Kalman–Bucy filtering theorem and dynamic programming arguments. Relying on a direct calculation for the derivative of the cost functional, Huang, Wang, and Xiong [13] obtained a new kind of stochastic maximum principle for partial information control problems. Combining Girsanov’s theorem with a classical method used in the full information cases (see, e.g., [10, 18]), Li and Tang [15] and Tang [16] derived some global maximum principles. To get an observable maximum principle, Tang [16] used backward stochastic partial differential equations to describe the corresponding Hamiltonian system, which is a special forward and backward stochastic differential equation. In fact, it is natural to characterize the Hamiltonian system under partial information by filtering techniques for FBSDEs. However, there are only a few papers dealing with this problem: Wu [19] established a local maximum principle for partially observed recursive optimal control problems, and Wang and Wu [20] proposed a backward separation technique for forward linear-quadratic Gaussian control systems and obtained the filtering estimation of the Hamiltonian system. In the present paper, we also adopt this technique to solve filtering estimation problems of FBSDEs.

Nonlinear backward stochastic differential equations (BSDEs) have been introduced by Pardoux and Peng [21] and Duffie and Epstein [22] independently. Duffie and Epstein [22] presented a concept of stochastic differential recursive utility, which is an extension of standard additive utility, with the instantaneous utility depending not only on the instantaneous consumption rate, but also on the future utility. As has been noted by El Karoui et al. [23], the stochastic differential recursive utility process can be regarded as the solution of a special BSDE. From the BSDEs’ point of view, El Karoui et al. [23] gave the formulation of recursive utilities and their properties. By virtue of solutions of BSDEs in describing the cost functionals of control systems, we establish the recursive optimal control problems. In the case of linear system, we study a partially observed time-inconsistency recursive control problem. A classical solving method is to combine celebrated Wonham’s separation theorem with a direct construction method introduced by Bensoussan [12]. In our framework, instead, we adopt a backward separation technique, which was proposed by Wang and Wu [20], to obtain the equilibrium control as a feedback of the state filtering estimation.

The rest of this paper is organized as follows. In the next section, we formulate a completely observed time-inconsistency recursive optimization problem. The verification theorem and a family of parameterized forward and backward stochastic differential equations are presented. As a preliminary to studying the partially observed recursive optimization problem, in Sect. 3, we derive the Kalman–Bucy filtering equations corresponding to the aforementioned parameterized FBSDEs. In Sect. 4, we obtain an observable equilibrium control of a partially observed time-inconsistency recursive optimization problem, which is a linear feedback of the state filtering estimation. In Sect. 5, an insurance optimal premium policy problem under partial information is investigated. We explicitly derive the observable equilibrium premium policy, which illustrates the applied prospect of our theoretical results. The last section summarizes the novelty and distinctive features of the paper and discusses future research topics in related fields.

2 A Time Inconsistency Optimization Problem with Full Information

In this section, we first introduce a fully observed time-inconsistency control problem of forward and backward stochastic system.

Let \((\varOmega,\mathcal{F},P)\) be a filtered complete probability space equipped with the natural filtration \(\mathcal{F}_{s}=\sigma\{\xi ,W_{1}(r),W_{2}(r),0\le r\le s\}\), where \((W_{1}(\cdot),W_{2}(\cdot))\) is a two-dimensional standard Brownian motion defined on the space, and \(T>0\) is a fixed real number. Here \(\xi\) is a Gaussian random variable, independent of \((W_{1}(\cdot),W_{2}(\cdot))\), with mean \(m_{0}\) and variance \(n_{0}\).

Throughout the paper, for the sake of convenience, we only consider the one-dimensional stochastic system. For the multidimensional case, similar results can be obtained by the same method.

Consider the forward and backward stochastic control system whose evolution is described by the following equation:

where the coefficients \(A(\cdot)\), \(B(\cdot)\), \(a(\cdot)\), \(b(\cdot)\), \(c(\cdot)\), \(C_{1}(\cdot)\), \(C_{2}(\cdot)\), \(f_{1}(\cdot)\), \(f_{2}(\cdot)\) are \(\mathcal {F}_{t}\)-adapted processes, and \(u(\cdot)\in\mathcal{U}_{ad}\), defined by

Every element in \(\mathcal{U}_{ad}\) is called an admissible control.

At any time t∈[0,T], the payoff corresponding to \(u(\cdot)\in \mathcal{U}_{ad}\) is a functional given by

where \(\mathbb{E}_{t}[\cdot]:=\mathbb{E}[\cdot|\mathcal{F}_{t}]\) is the conditional expectation with respect to \(\mathcal{F}_{t}\), and (X(⋅),Y(⋅)) is the state trajectory under control u(⋅).

The first term \(\mathbb{E}_{t}[Y(t)]\) in the cost functional (2) represents the recursive utility of \(u(\cdot)\) in a classical control problem, whereas the last two are unconventional. Specifically, the term \(-h e^{\int^{T}_{t}b(r)\,dr}(\mathbb{E}_{t}[X(T)])^{2}\) is motivated by the variance term in a mean-variance portfolio choice model [24, 25], and the term \(-(\mu_{1}X_{t}+\mu_{2})e^{\int^{T}_{t}b(r)\,dr}\mathbb{E}_{t}[X(T)]\), which depends on the state \(X_{t}\) at time \(t\), stems from a state-dependent utility function in economics [26]. Here, \(b(\cdot)\) can be regarded as a discounting factor.

Each of these two terms introduces time-inconsistency of the underlying model. Thus, the notion “optimality” needs to be defined in another appropriate way. Motivated by [11], we adopt the concept of equilibrium solution, which is, for any t∈[0,T[, optimal only for spike variation in an infinitesimal way.

Given a control \(u^{*}\), for any \(t\in[0,T[\), \(\varepsilon>0\), and \(v\in L^{4}_{\mathcal{F}_{t}}(\varOmega;\mathbb{R})\), define

Definition 2.1

Let \(u^{*}\in\mathcal{U}_{ad}\) be a given control, and \(X^{*}\) be the state process corresponding to \(u^{*}\). The control \(u^{*}\) is called an equilibrium iff

where \(u^{t,\varepsilon,v}\) is defined by (3), for any \(t\in[0,T[\) and \(v\in L^{4}_{\mathcal{F}_{t}}(\varOmega;\mathbb{R})\).

Remark 2.1

For a standard time-consistent optimization control problem, if there exists an optimal control \(u^{*}\in\mathcal{U}_{ad}\), it must be an equilibrium, from which we can see that the concept of equilibrium is a natural generalization of optimal control for time-inconsistent control problems.

Finding out the equilibrium control subject to \(u(\cdot)\in\mathcal{U}_{ad}\) formulates a fully observed time-inconsistency recursive control problem. We also need the following assumption:

Assumption 2.1

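\(a(\cdot)\ge0\), \(c(\cdot)\ge\varepsilon>0\), \(A(\cdot)\), \(B(\cdot)\), \(C_{1}(\cdot)\), \(C_{2}(\cdot)\), \(f_{1}(\cdot)\), and \(f_{2}(\cdot)\) are uniformly bounded deterministic functions with respect to \(s\in[0,T]\). Besides, \(g\ge h\ge0\), \(\mu_{1}\ge0\), and \(\mu_{2}\) are all constants.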

Since the drift term of dY(s) in (1) contains (Z 1(⋅),Z 2(⋅)), it brings us some trouble to express the cost functional. To simplify it, we redefine the probability measure Q on the space \((\varOmega ,\mathcal{F})\) by

According to Girsanov’s theorem, it follows that (U(⋅),V(⋅)) defined by

is a two-dimensional standard Brownian motion on the space \((\varOmega ,\mathcal{F},Q)\). It is easy to verify that (U(⋅),V(⋅)) and ξ remain mutually independent and ξ keeps the same probability law as before on \((\varOmega,\mathcal{F},Q)\).

Then we can rewrite the system as follows:

By the definition we know that if \(u(\cdot)\in\mathcal{U}_{ad}\), then \(\mathbb{E}^{Q}\int^{T}_{0}u^{4}(s)\,ds<+\infty\). In this case, \(\mathbb{E}^{Q} X^{4}(\cdot)<+\infty\), i.e., \(\mathbb{E}^{Q} Y^{2}(T)<+\infty\). So there exists a unique solution of (4). Therefore, the corresponding cost functional is rewritten as

where \(\mathbb{E}^{Q}_{t}[\cdot]:=\mathbb{E}^{Q}[\cdot|\mathcal{F}_{t}]\) denotes the conditional expectation with respect to \(\mathcal{F}_{t}\) on the space \((\varOmega,\mathcal{F},Q)\).

In the sequel, we present a general sufficient condition for equilibriums. We derive this condition by a second-order expansion in spike variation, with the same spirit of proving the stochastic Pontryagin maximum principle (see, e.g., [10, 18]).

Let \(u^{*}\) be a fixed control, and \(X^{*}\) be the corresponding state process. For any \(t\in[0,T[\), define on the time interval \([t,T]\) the processes

and

satisfying the following equations:

Note that, for each fixed t∈[0,T[, the above equations are backward stochastic differential equations (BSDEs). So they form a family of parameterized BSDEs. Since a(⋅)≥0 and g≥0 by Assumption 2.1, it follows that P(⋅;t)≥0.

Proposition 2.1

For any \(t\in[0,T[\), \(\varepsilon>0\), and \(v\in L^{4}_{\mathcal {F}_{t}}(\varOmega;\mathbb{R})\), define \(u^{t,\varepsilon,v}\) by (3). Then

where \(\varLambda(s;t):= B(s) p(s;t)+2c(s)e^{\int^{s}_{t}b(r)\,dr} u^{*}(s)\).

Proof

Let \(X^{t,\varepsilon,v}\) be the state process corresponding to \(u^{t,\varepsilon,v}\). Then, by the standard perturbation approach (see, e.g., [18]) we have

where \(Z:=Z^{t,\varepsilon,v}\) satisfies

Moreover, by Gronwall’s inequality, it is easy to verify that

Then we can calculate

Recalling that (p(⋅;t),k 1(⋅;t),k 2(⋅;t)) solves (6), we have

This proves (8). □

Since c(s)≥0, in view of (8), a sufficient condition for equilibrium is

Under some condition, the second equality in (9) is ensured by

The following theorem gives a sufficient condition of equilibrium for the fully observed time-inconsistency recursive optimization problem.

Theorem 2.1

Suppose that the system of stochastic differential equations

admits a solution \((u^{*},X^{*},p,k_{1},k_{2})\) for any \(t\in[0,T[\). If

satisfies condition (9) and \(u^{*}\in\mathcal{U}_{ad}\), then u ∗ is an equilibrium control.

Proof

Given \((u^{*},X^{*},p,k_{1},k_{2})\) satisfying the condition in the theorem at any time \(t\), for any \(v\in L^{4}_{\mathcal{F}_{t}}(\varOmega ;\mathbb{R})\), define \(\varLambda\) as in Proposition 2.1. Then

and this concludes the proof. □

3 Kalman–Bucy Filtering Equations

In this section, we derive the Kalman–Bucy filtering equations for parameterized forward and backward stochastic system (11) under partial information. It is crucial for solving the partially observed optimization problem, which will be formally established in the next section. For simplicity, we keep the same notation as before.

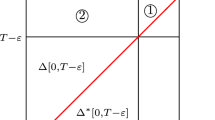

Suppose that the state variable \((X^{*}(\cdot),p(\cdot;t),k_{1}(\cdot;t),k_{2}(\cdot;t))\) cannot be observed directly. However, we can observe a noisy process \(Z(\cdot)\) related to \(X(\cdot)\) whose dynamics are described by the equation

or, equivalently,

We introduce the following assumption.

Assumption 3.1

\(D(\cdot)\), \(F(\cdot)\), \(|H(\cdot)|\ge\varepsilon>0\), and \(H^{-1}(\cdot)\) are uniformly bounded deterministic functions with respect to \(s\).

Obviously, if Assumption 3.1 holds, then there exists a unique solution of (12) or (13).

Our filtering problem is to find the best estimation (in the sense of square error) of \((X^{*}(s),p(s;t),k_{1}(s;t),k_{2}(s;t))\) with respect to the observation \(Z(\cdot)\) up to time \(s\), denoted by \((\hat{X}^{*}(s),\hat{p}(s;t),\hat {k}_{1}(s;t),\hat{k}_{2}(s;t))\), i.e., we want to find the explicit expressions for

and their square error estimation. Here, \(\mathcal{Z}_{s}=\sigma\{Z(r), 0\le r\le s\}\).

Our method is first to look for the relations of (X ∗(s),p(s;t),k 1(s;t),k 2(s;t)) and then to compute \((\hat{X}^{*}(s),\hat{p}(s;t),\hat{k}_{1}(s;t),\hat {k}_{2}(s;t))\) by classical filtering theory for forward SDEs.

By the terminal condition of (11), given any t∈[0,T], we conjecture that

where M(⋅), N(⋅), Γ(⋅), and ϕ(⋅) are deterministic functions with respect to s∈[0,T].

For any fixed t, applying Itô’s formula to (14) in the time variable s, we get

Comparing the \(dU(s)\) and \(dV(s)\) terms with those of \(dp(s;t)\) in (11), we obtain

Now we ignore the difference between conditions (9) and (10), and put conjecture (14) of p(s;t) into (10). Then we have

from which we formally deduce

Next, comparing the ds term in (15) with that in (11) (we suppress the argument s here), we obtain

This leads to the following equations for M,N,Γ, and ϕ (again, the argument s is suppressed):

Obviously, the solution of (19) is \(\varGamma(s)=\mu_{1} e^{\int ^{T}_{s}(A(r)+b(r))\,dr}\). By setting J=M−N we note that it satisfies the Riccati equation

Thanks to Theorem 7.2 in Chap. 6 in Yong and Zhou [18], under Assumption 2.1, (21) admits a unique solution over [0,T]. Substituting the solutions of (19) and (21) into (17), (18), and (20), these three equations can also be solved.
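The closed form for Γ can be checked numerically. The following is a minimal sketch, assuming that (19) is the linear terminal-value problem Γ'(s) = −(A(s) + b(s))Γ(s), Γ(T) = μ₁, which is the form implied by the stated solution. The function names and the sample coefficients in the usage note are illustrative only and do not come from the paper.

```python
import math

def gamma_closed_form(s, T, mu1, A, b, n=2000):
    """Gamma(s) = mu1 * exp(int_s^T (A(r) + b(r)) dr), integral by the trapezoidal rule."""
    h = (T - s) / n
    total = 0.0
    for i in range(n):
        r0, r1 = s + i * h, s + (i + 1) * h
        total += 0.5 * h * (A(r0) + b(r0) + A(r1) + b(r1))
    return mu1 * math.exp(total)

def gamma_backward_euler(T, mu1, A, b, n=20000):
    """Integrate the ASSUMED ODE Gamma' = -(A + b) * Gamma backward from Gamma(T) = mu1.

    Returns an explicit-Euler approximation of Gamma(0).
    """
    h = T / n
    g, s = mu1, T
    for _ in range(n):
        # One step from s to s - h: Gamma(s - h) ~ Gamma(s) - h * Gamma'(s).
        g += h * (A(s) + b(s)) * g
        s -= h
    return g
```

For example, with the hypothetical coefficients A(r) = 0.3 + 0.1r, b(r) = 0.05, T = 1, and μ₁ = 2, both functions approximate Γ(0) = 2e^{0.4}, and `gamma_closed_form(T, T, ...)` returns the terminal value μ₁.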

We can check that M(⋅), N(⋅), Γ(⋅), and ϕ(⋅) are all uniformly bounded, and hence \(u^{*}\in\mathcal{U}_{ad}\) and \(X^{*}\in L^{2}(\varOmega;C(0,T;\mathbb{R}))\). By plugging p and u ∗ represented by (14) and (16) into Λ, defined as in Proposition 2.1, we have

Clearly, Λ satisfies the first condition in (9). Furthermore, we have

Hence, \(\varLambda\) satisfies the second condition in (9). By Theorem 2.1, \(u^{*}\) is an equilibrium, and we obtain the relations of \((X^{*}(s),p(s;t),k_{1}(s;t),k_{2}(s;t))\) as in (14).

In the following, we establish the filtering equations for forward–backward stochastic system (11). Obviously,

Then we only need to compute \(\hat{X}^{*}(s)\) and \(\hat{p}(s;t)\). From (4) it is easy to see that X ∗(⋅) is Gaussian, so are Z(⋅) and p(⋅;t). Let \(\gamma(s)=E^{Q}_{t}(X^{*}(s)-\hat{X}^{*}(s))^{2}\) be the square error of the estimation. From the facts that \((X^{*}(s)-\hat{X}^{*}(s))\perp\mathcal{Z}_{s}\) and \(X^{*}(s)-\hat{X}^{*}(s)\) is Gaussian we know that \(X^{*}(s)-\hat{X}^{*}(s)\) is independent of \(\mathcal {Z}_{s}\). So

By the classical filtering theory for forward SDEs (see, e.g., [27, 28]) we obtain the following equations for \(\hat{X}^{*}(s)\) and γ(s):

where the process

is an observable one-dimensional standard Brownian motion defined on \((\varOmega,\mathcal{Z},Q)\) with respect to the filtration \(\mathcal{Z}_{s}\), which is the so-called innovation process.
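To make the structure of such filtering equations concrete, here is a minimal simulation sketch of the textbook scalar Kalman–Bucy filter. It is not the paper's system (23)–(24): it assumes constant coefficients, a state dX = AX ds + C dW, an observation dZ = DX ds + H dV, and independent noises W and V, whereas the paper's state and observation may share a noise source. All names are illustrative.

```python
import math
import random

def kalman_bucy(A, C, D, H, m0, n0, T=10.0, dt=1e-3, seed=0):
    """Euler simulation of a scalar Kalman-Bucy filter (assumed textbook form).

    State:        dX = A X ds + C dW
    Observation:  dZ = D X ds + H dV      (W, V independent by assumption)
    Filter:       dXhat = A Xhat ds + (gamma D / H^2) (dZ - D Xhat ds)
    Riccati:      gamma' = 2 A gamma + C^2 - (gamma D / H)^2
    """
    rng = random.Random(seed)
    n = int(round(T / dt))
    x = rng.gauss(m0, math.sqrt(n0))   # hidden initial state, N(m0, n0)
    x_hat, gamma = m0, n0              # filter starts at the prior mean and variance
    sq = math.sqrt(dt)
    for _ in range(n):
        dW = rng.gauss(0.0, sq)
        dV = rng.gauss(0.0, sq)
        dZ = D * x * dt + H * dV                  # observation increment
        gain = gamma * D / (H * H)
        innovation = dZ - D * x_hat * dt          # unnormalized innovation increment
        x_hat += A * x_hat * dt + gain * innovation
        gamma += (2 * A * gamma + C * C - (gamma * D / H) ** 2) * dt
        x += A * x * dt + C * dW                  # advance the hidden state
    return x, x_hat, gamma
```

In this sketch the error variance `gamma` follows a deterministic Riccati equation, so it does not depend on the simulated path. With A = −1 and C = D = H = 1 it settles at the positive root of γ² + 2γ − 1 = 0, namely √2 − 1.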

At the same time, we can get

where \(\hat{\mathbb{E}}^{Q}_{t}[\cdot]:=\mathbb{E}^{Q}[\cdot|\mathcal{Z}_{t}]\) denotes the conditional expectation with respect to \(\mathcal{Z}_{t}\) on the space \((\varOmega,\mathcal{Z},Q)\).

Taking conditional expectations on both sides of (14), we get

where \(\hat{X}^{*}(s)\) and \(\hat{\mathbb{E}}^{Q}_{t}[\hat{X}^{*}(s)]\) are the solutions of (23) and (25), respectively.

Therefore, we obtain the filtering estimation for parameterized FBSDEs (11).

Theorem 3.1

Let Assumptions 2.1 and 3.1 hold. Then the filtering estimation \((\hat{X}^{*}(s),\hat{p}(s;t),\hat{k}_{1}(s;t),\hat {k}_{2}(s;t))\) for solutions of parameterized forward and backward stochastic system (11) is given by (22), (23), (25), and (26), where M(s),N(s), Γ(s), and ϕ(s) are solutions of (17), (18), (19), and (20), respectively.

4 A Partially Observed Time-Inconsistency Recursive Control Problem

The objective of this section is to study the time-inconsistency recursive optimization control problem under partial information, which is closely related to the results in Sect. 3. We adopt the backward separation technique introduced by Wang and Wu [20] to find the equilibrium control.

Let us consider the following state and observation equations:

where \(u(\cdot)\in\mathcal{U}_{ad}\) and all coefficients satisfy Assumptions 2.1 and 3.1. For any \(u(\cdot)\in\mathcal{U}_{ad}\), it is easy to check that \(X_{1}(\cdot)+X_{2}(\cdot)\) and \(\bar{Z}_{1}(\cdot)+\bar{Z}_{2}(\cdot)\) are unique solutions of (4) and (13), respectively, i.e., \(X(\cdot)=X_{1}(\cdot)+X_{2}(\cdot)\) and \(Z(\cdot)=\bar{Z}_{1}(\cdot)+\bar {Z}_{2}(\cdot)\).

Set \(\bar{\mathcal{Z}}_{s}=\sigma\{\bar{Z}_{1}(r),0\le r\le s\}\). We present the following:

Definition 4.1

A control variable u(⋅) is called admissible iff u(⋅) is an \(\mathbb{R}\)-valued stochastic process adapted to \(\mathcal{Z}_{s}\) and \(\bar{\mathcal{Z}}_{s}\) such that \(\mathbb {E}\int^{T}_{0}u^{4}(t)\,dt<+\infty\). The set of admissible controls is denoted by \(\bar{\mathcal{U}}_{ad}\).

Remark 4.1

By Definition 4.1 we see that if \(u(\cdot)\in\bar{\mathcal {U}}_{ad}\), then \(\mathcal{Z}_{s}=\bar{\mathcal{Z}}_{s}\), 0≤s≤T. So we can determine the control by observable process, but the observable process does not depend on the control. It is the main reason that the state and observation equations are decoupled. This kind of “decoupled” technique is inspired by Bensoussan [12]. Otherwise, there is an immediate difficulty when the observable process depends on the control.

It follows from Definition 4.1 and Remark 4.1 that

Again, by the classical filtering theory for forward SDEs, we can easily get the following result.

Proposition 4.1

For any \(u(\cdot)\in\bar{\mathcal{U}}_{ad}\), let Assumptions 2.1 and 3.1 hold. Then the state variable X(⋅), which is the solution of (4), has a filtering estimation

where the observable one-dimensional standard Brownian motion \(\bar {U}(\cdot)\) is defined as

and \(\Delta(\cdot)=\mathbb{E}^{Q}(X(\cdot)-\hat{X}(\cdot))^{2}\) satisfies

Remark 4.2

Obviously, the solution Δ(⋅) of (30) does not depend on admissible control \(u(\cdot)\in\bar{\mathcal{U}}_{ad}\). This is very important to solve our problem.

Under partially observed circumstance, the payoff of time-inconsistency recursive control problem corresponding to \(u(\cdot)\in\bar{\mathcal {U}}_{ad}\) is given by

Our problem is to seek an equilibrium control \(\bar{u}^{*}(\cdot)\in \bar{\mathcal{U}}_{ad}\) for the cost functional (31) subject to state system (4) and observation (13). Obviously, \(\bar{\mathcal{U}}_{ad}\subseteq\mathcal{U}_{ad}\), i.e., the equilibrium control \(\bar{u}^{*}(\cdot)\) is an element of \(\mathcal {U}_{ad}\). In order to obtain an observable equilibrium control, our intuition is to replace \(\bar{X}^{*}(s)\) by its filtering estimation \(\hat{\bar{X}}^{*}(s)\) in the equilibrium control (16) for full information:

where \(\bar{X}^{*}(s),\bar{p}(s;t),\bar{k}_{1}(s;t),\bar{k}_{2}(s;t)\) satisfies the FBSDEs

Although the drift term of forward SDE in (33) contains \(\hat{\bar {X}}^{*}(\cdot)\), fortunately, it is observable. So it does not bring any difficulty to compute \((\hat{\bar {X}}^{*}(s),\hat{\bar{p}}(s;t))\). From Proposition 4.1 we easily derive that

Solving (33) by usual techniques for BSDEs, we get

We now claim that

where M(⋅), N(⋅), Γ(⋅), and ϕ(⋅) are solutions of (17), (18), (19), and (20) respectively. In fact, if we let Ψ(⋅) be the fundamental solution of

combining (33) with (34), we have

where

It is easy to check that

Substituting (37) into (35), we have

with

From the existence and uniqueness of solutions to (17), (18), (19), and (20) it is easy to verify that \(\bar {M}(\cdot),\bar{N}(\cdot),\bar{\varGamma}(\cdot)\), and \(\bar{\phi}(\cdot)\) satisfy (17), (18), (19), and (20), i.e., \(M(\cdot)\equiv\bar{M}(\cdot),N(\cdot)\equiv\bar{N}(\cdot),\varGamma(\cdot )\equiv\bar{\varGamma}(\cdot),\phi(\cdot)\equiv\bar{\phi}(\cdot)\), concluding the claim (36).

Next, we will prove that the candidate (32) is indeed an equilibrium control of this problem, where \(\hat{\bar {X}}^{*}(\cdot)\) satisfies (34).

Since \(\hat{X}(\cdot)\perp(X(\cdot)-\hat{X}(\cdot))\), the cost functional (31) can be rewritten as

with

where \(\hat{X}(\cdot)\) and Δ(⋅) satisfy (29) and (30), respectively.
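The orthogonality used in this rewriting can be illustrated by a static Gaussian analogue. The sketch below is not the paper's dynamic filter: it assumes a single observation Z = X + noise, with X ~ N(m, n₀) and independent noise of variance r (all hypothetical parameters), and checks by Monte Carlo that E[X²] splits into E[X̂²] plus the error variance.

```python
import random

def orthogonal_split(m, n0, r, n_samples=200_000, seed=0):
    """Monte Carlo check of E[X^2] = E[Xhat^2] + E[(X - Xhat)^2] in a static Gaussian model.

    X ~ N(m, n0), Z = X + noise with noise ~ N(0, r), and Xhat = E[X | Z],
    which is linear in Z for jointly Gaussian variables.
    Returns sample means of (X^2, Xhat^2, (X - Xhat)^2, Xhat * (X - Xhat)).
    """
    rng = random.Random(seed)
    gain = n0 / (n0 + r)
    s_x2 = s_hat2 = s_err2 = s_cross = 0.0
    for _ in range(n_samples):
        x = rng.gauss(m, n0 ** 0.5)
        z = x + rng.gauss(0.0, r ** 0.5)
        x_hat = m + gain * (z - m)      # conditional mean of X given Z
        err = x - x_hat                 # estimation error, orthogonal to x_hat
        s_x2 += x * x
        s_hat2 += x_hat * x_hat
        s_err2 += err * err
        s_cross += x_hat * err
    return tuple(v / n_samples for v in (s_x2, s_hat2, s_err2, s_cross))
```

In this analogue the error variance equals n₀r/(n₀ + r), which depends only on the model parameters and not on the observed value. This mirrors the role played by Δ(⋅) in the decomposition above.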

For any \(u^{t,\varepsilon,v}(\cdot)\in\bar{\mathcal{U}}_{ad}\) defined by (3) and the corresponding state process X t,ε,v, we easily derive that

with

Since all terms depending on Δ(⋅) have disappeared and the first three terms on the right-hand side of (39) are nonnegative, we only have to prove that

which implies that \(\bar{u}^{*}(\cdot)\) defined by (32) is an equilibrium control, and our intuition is true. In fact, noting (17), (18), (19), (20), and (36), it follows from Itô’s formula that

and

Substituting (42) and (43) into (40) and noting (32) and (36), we get

Thanks to Theorem 6.3 in Chap. 1 in Yong and Zhou [18], we have

where the constants K 1>0 and K 2>0 are independent of ε.

Recalling Assumptions 2.1 and 3.1, we confirm that Θ=o(ε). Then the conclusion (41) follows. Thus, we obtain the equilibrium for the time-inconsistency recursive optimization control problem under partial information.

Theorem 4.1

The equilibrium control for the partially observed time-inconsistency recursive optimization problem is given by (32), which is a feedback of the state filtering estimation, where M(⋅), N(⋅), Γ(⋅), and ϕ(⋅) are solutions of (17), (18), (19), and (20), respectively. Besides, \(\hat{\bar{X}}^{*}(\cdot)\) is the filtering state trajectory with respect to \(\mathcal{Z}_{s}\) under the equilibrium control, whose evolution is described by (34).

Remark 4.3

Recalling the cost functional (31), if the last two terms are deleted, then it becomes a standard time-consistent recursive optimization problem under partial information, which was deeply studied by Wang and Wu [20]. Theorem 4.1 generalizes the results obtained there.

5 Application in Insurance Premium Policy Problem

To illustrate the application of results obtained in this paper, we study a kind of optimal premium policy problem for an insurance firm under partial information.

We fix a finite time horizon \([0,T]\) and a filtered probability space \((\varOmega, \mathcal {F}^{W_{1},W_{2}},\{\mathcal{F}^{W_{1},W_{2}}_{s}\}_{0\le s\le T},P)\), on which two independent one-dimensional standard Brownian motions \(\{W_{1}(s)\}_{0\le s\le T}\) and \(\{W_{2}(s)\}_{0\le s\le T}\) are defined. Let \(\mathcal{F}^{W_{1},W_{2}}_{s}=\sigma\{W_{1}(t),W_{2}(t);0\le t\le s\}\) and \(\mathcal{F}^{W_{1},W_{2}}=\mathcal{F}^{W_{1},W_{2}}_{T}\). Now consider an insurance firm whose liability process is denoted by \(L(s)\). Recall that an insurance portfolio consists of a large number of independent individual claims, none of which can affect the total returns significantly, and thus, by the law of large numbers, \(L(s)\) can be approximated by (see Norberg [29] for more details)

Here, the liability rate l(s)>0 represents the expected liability per unit time due to premium loading, the premium rate (premium policy) v(s) acts as the control variable, while the volatility rate σ(s)>0 measures the liability risk. Note that we allow for v(s)<0, and this can be explained as “reward rate” or “dividend rate” to the claim holder. We assume that the insurance firm is not allowed to invest in any risky asset due to the supervisory regulations. Accordingly, the insurance firm only invests in a money account with compounded interest rate δ(s), and hence its cash-balance process X(s) is

where \(\varPhi(s)=\int^{s}_{0}\delta(r)\,dr\), and x 0>0 represents the initial reserve. According to Itô’s formula, we have

This is a controlled Ornstein–Uhlenbeck process, where the control v(⋅) is fully admissible in the sense of

Definition 5.1

An \(\mathbb{R}\)-valued premium policy v(⋅)={v(s)}0≤s≤T is called fully admissible iff v(s) is \(\mathcal{F}^{W_{1}}_{s}\)-adapted and \(\mathbb{E}\int^{T}_{0}v^{4}(s)\,ds<+\infty\). The set of all fully admissible policies is denoted by \(\mathcal{U}_{F}\).
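As an illustration of the controlled cash-balance dynamics, the following Euler–Maruyama sketch simulates one path under a user-supplied premium policy. It rests on assumptions that should be checked against (44): constant coefficients and dynamics of the form dX = (δX + v − l) ds − σ dW₁. The function name, the `policy(s, x)` signature, and the parameter values in the usage note are hypothetical, and for simplicity the policy here sees the true state rather than a filtered estimate.

```python
import math
import random

def simulate_cash_balance(x0, delta, l, sigma, policy, T=1.0, n=10_000, seed=0):
    """Euler-Maruyama path of the ASSUMED dynamics dX = (delta*X + v - l) ds - sigma dW1.

    policy(s, x) returns the premium rate v at time s given the current balance x.
    Returns the terminal cash balance X(T).
    """
    rng = random.Random(seed)
    dt = T / n
    x = x0
    for i in range(n):
        s = i * dt
        v = policy(s, x)                          # premium rate chosen at time s
        dW = rng.gauss(0.0, math.sqrt(dt))        # Brownian increment over [s, s + dt]
        x += (delta * x + v - l) * dt - sigma * dW
    return x
```

With σ = 0 and a constant policy v, the assumed dynamics reduce to a linear ODE with solution X(T) = x₀e^{δT} + (v − l)(e^{δT} − 1)/δ, which gives a simple sanity check of the discretization.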

Let us now turn to the preferences of a policymaker. For \(v(\cdot)\in \mathcal{U}_{F}\), we assume that the cost functional is

Here, \(\beta\) is a discounting factor, \(c_{0}\) is some preset target, and \(R(s)\), \(G\), and \(Q\) are the weighting factors that make the cost functional (45) more general and flexible to accommodate the preference of a policymaker.

From (45), we can see that the insurance firm has three objectives: first, to minimize the cost of premium policy over the whole time horizon; second, to minimize the terminal deviation of cash-balance process from the preset target; and, finally, to minimize the terminal variance of cash-balance process. In what follows, we adopt the following assumption.

Assumption 5.1

\(R(s)>0\), \(R^{-1}(s)\), \(\delta(s)\), \(b(s)\), and \(\sigma(s)\) are all deterministic and uniformly bounded on \([0,T]\); the terminal weights \(G>0\) and \(Q>0\), and the discounting factor \(\beta>0\).

However, in fact, it is only possible for the policymaker to partially observe the cash-balance process, due to the physical inaccessibility to underlying economic parameters, inaccuracies in measurements, discreteness of account information or possible delay in the actual payments. As a response, we study this premium problem with partial information for practice. In this regard, we confine ourselves to the following linear factor model (see, e.g., Bielecki and Pliska [30], Nagai and Peng [31], etc.):

where the cash-balance process X(s) is the underlying factor, which is partially observed through the observation S(s) with instantaneous volatility ρ(s). One typical example of S(s), in practice, is the stock price of the insurance firm. This is supported by Boswijk et al. [32], where the stock price is closely related to the underlying cash-balance process through the price-to-cash ratio, which is linear. We make the following assumption.

Assumption 5.2

Both \(c\neq0\) and \(a\) are constants, and \(\rho(s)\) and \(\rho^{-1}(s)\) are both bounded deterministic functions.

For fixed X(s), clearly, (46) admits a unique solution under Assumption 5.2. Setting Z(s)≜logS(s) and applying Itô’s formula, we have

By the results obtained in Sect. 4 we decouple the state and observation equations as follows:

where \(v(\cdot)\in\mathcal{U}_{F}\), and all coefficients satisfy Assumptions 5.1 and 5.2. For any \(v(\cdot)\in\mathcal {U}_{F}\), it is easy to check that X 1(⋅)+X 2(⋅) and Z 1(⋅)+Z 2(⋅) are unique solutions of (44) and (47). Set \(\mathcal{F}^{Z}_{s}=\sigma\{ Z(r);0\le r\le s\}\) and \(\mathcal{F}^{Z_{1}}_{s}=\sigma\{Z_{1}(r);0\le r\le s\}\). To avoid that v(s) has an effect on \(\mathcal{F}^{Z}_{s}\), we introduce the following:

Definition 5.2

An \(\mathbb{R}\)-valued premium policy v(⋅)={v(s)}0≤s≤T is called partially admissible iff v(s) is \(\mathcal{F}^{Z}_{s}\)- and \(\mathcal{F}^{Z_{1}}_{s}\)-adapted with \(\mathbb{E}\int^{T}_{0}v^{4}(s)\,ds<+\infty\). The set of all partially admissible policies is denoted by \(\mathcal{U}_{P}\).

Let us now return to the cost functional (45). Introduce the backward SDE coupled with forward SDE (44)

Thus, the cost functional (45) can be rewritten as

Then, our goal is to find an equilibrium premium policy \(v^{*}(\cdot)\in\mathcal{U}_{P}\) for cost functional (50) subject to (44) and (47).

According to Theorem 4.1, we have the equilibrium policy in the form of

where \(\hat{X}^{*}(\cdot)\) is the filtering estimation of state process with respect to \(\mathcal{F}^{Z}_{s}\) under control \(v^{*}(\cdot)\in\mathcal{U}_{P}\), which satisfies the forward SDE

where \(\bar{W}_{1}(\cdot)\) is an observable standard Brownian motion defined as

and \(\Delta_{1}(\cdot)=\mathbb{E}^{Q}(X^{*}(\cdot)-\hat{X}^{*}(\cdot))^{2}\) satisfies

Besides, \(M_{1}(\cdot)\), \(N_{1}(\cdot)\), \(\varGamma_{1}(\cdot)\), and \(\phi_{1}(\cdot)\) are solutions of the following ODEs, respectively:

Obviously, \(\varGamma_{1}(s)\equiv0\). Let \(J_{1}=M_{1}-N_{1}\). Then it satisfies

Solving (57) by usual techniques for ODEs, we get

Then we can also get

Therefore, we obtain the equilibrium premium policy as follows:

Theorem 5.1

Let Assumptions 5.1 and 5.2 hold. Then the observable equilibrium premium policy is

where \(J_{1}(\cdot)\) and \(\phi_{1}(\cdot)\) are given by (58) and (59) explicitly, and \(\hat{X}^{*}(s)\) is the filtering cash-balance process with respect to \(\mathcal{F}^{Z}_{s}\) under the equilibrium premium policy (60), whose evolution is described by (52).

6 Conclusions

To the authors’ knowledge, this is the first attempt to study a partially observed time-inconsistency recursive optimization problem. On the whole, there are three distinguishing features of our paper: (1) The Kalman–Bucy filtering equations are obtained for the first time for the Hamiltonian system, which is a family of parameterized FBSDEs; (2) The backward separation technique is introduced to overcome the difficulty of the partial information optimization problem, and thus an observable equilibrium control is given as a feedback of the state filtering estimation; (3) An insurance optimal premium policy problem under partial information is considered, and the observable equilibrium premium policy is obtained explicitly, which illustrates the applied prospect of our theory. Besides, our results have potential applications in many areas, especially in mathematical finance.

In our paper, the Hamiltonian system, which is a family of parameterized FBSDEs, is of independent interest. We obtained one of its solutions by means of Riccati equations, whereas the general existence and uniqueness of its solutions call for systematic investigation. We also note that there are no state or control variables in the diffusion coefficients of the Hamiltonian system (11) and the control system (1). When such variables do appear, we cannot get the explicit filtering estimation for this kind of forward and backward stochastic system or the equilibrium control for the partially observed time-inconsistency recursive optimization problem. To the best of our knowledge, this is still an open problem. We hope to develop this theory further and find more applications in future work.

References

Strotz, R.: Myopia and inconsistency in dynamic utility maximization. Rev. Econ. Stud. 23, 165–180 (1955)

Goldman, S.: Consistent plans. Rev. Econ. Stud. 47, 533–537 (1980)

Krusell, P., Smith, A.: Consumption and savings decisions with quasi-geometric discounting. Econometrica 71, 366–375 (2003)

Peleg, B., Yaari, M.E.: On the existence of a consistent course of action when tastes are changing. Rev. Econ. Stud. 40, 391–401 (1973)

Pollak, R.: Consistent planning. Rev. Econ. Stud. 35, 185–199 (1968)

Vieille, N., Weibull, J.: Multiple solutions under quasi-exponential discounting. Econ. Theory 39, 513–526 (2009)

Ekeland, I., Lazrak, A.: Being serious about non-commitment: subgame perfect equilibrium in continuous time. Preprint, University of British Columbia (2006)

Ekeland, I., Pirvu, T.: Investment and consumption without commitment. Math. Financ. Econ. 2, 57–86 (2008)

Björk, T., Murgoci, A.: A general theory of Markovian time inconsistent stochastic control problems. SSRN: http://ssrn.com/abstract=1694759 (2010)

Peng, S.G.: A general stochastic maximum principle for optimal control problems. SIAM J. Control Optim. 28(4), 966–979 (1990)

Hu, Y., Jin, H., Zhou, X.: Time-inconsistent stochastic linear-quadratic control. Preprint (2011)

Bensoussan, A.: Stochastic Control of Partially Observable Systems. Cambridge University Press, Cambridge (1992)

Huang, J., Wang, G., Xiong, J.: A maximum principle for partial information backward stochastic control problems with applications. SIAM J. Control Optim. 48(4), 2106–2117 (2009)

Xiong, J., Zhou, X.: Mean-variance portfolio selection under partial information. SIAM J. Control Optim. 46(1), 156–175 (2007)

Li, X., Tang, S.: General necessary conditions for partially observed optimal stochastic controls. J. Appl. Probab. 32, 1118–1137 (1995)

Tang, S.: The maximum principle for partially observed optimal control of stochastic differential equations. SIAM J. Control Optim. 36(5), 1596–1617 (1998)

Yu, X.: An explicit example of optimal portfolio-consumption choices with habit formation and partial observations. arXiv:1112.2939 (2012)

Yong, J., Zhou, X.: Stochastic Controls: Hamiltonian Systems and HJB Equations. Springer, New York (1999)

Wu, Z.: The stochastic maximum principle for partially observed forward and backward stochastic control systems. Sci. China 53(11), 2205–2214 (2010)

Wang, G., Wu, Z.: Kalman–Bucy filtering equations of forward and backward stochastic systems and applications to recursive optimal control problems. J. Math. Anal. Appl. 342, 1280–1296 (2008)

Pardoux, E., Peng, S.G.: Adapted solutions of a backward stochastic differential equation. Syst. Control Lett. 14, 55–61 (1990)

Duffie, D., Epstein, L.: Stochastic differential utility. Econometrica 60, 353–394 (1992)

El Karoui, N., Peng, S.G., Quenez, M.C.: Backward stochastic differential equations in finance. Math. Finance 7, 1–71 (1997)

Hu, Y., Zhou, X.: Constrained stochastic LQ control with random coefficients and application to portfolio selection. SIAM J. Control Optim. 44, 444–466 (2005)

Zhou, X., Li, D.: Continuous-time mean-variance portfolio selection: a stochastic LQ framework. Appl. Math. Optim. 42, 19–33 (2000)

Björk, T., Murgoci, A., Zhou, X.: Mean-variance portfolio optimization with state dependent risk aversion. Math. Finance (2012). doi:10.1111/j.1467-9965.2011.00515.x

Liptser, R.S., Shiryayev, A.N.: Statistics of Random Processes. Springer, New York (1977)

Xiong, J.: An Introduction to Stochastic Filtering Theory. Oxford University Press, London (2008)

Norberg, R.: Ruin problems with assets and liabilities of diffusion type. Stoch. Process. Appl. 81, 255–269 (1999)

Bielecki, T., Pliska, S.: Risk-sensitive dynamic asset management. Appl. Math. Optim. 39, 337–360 (1999)

Nagai, H., Peng, S.: Risk-sensitive dynamic portfolio optimization with partial information on infinite time horizon. Ann. Appl. Probab. 12, 173–195 (2002)

Boswijk, H., Hommes, C., Manzan, S.: Behavioral heterogeneity in stock prices. J. Econ. Dyn. Control 31, 1938–1970 (2007)

Acknowledgements

This work is supported by the Natural Science Foundation of China (11221061 and 61174092) and the Natural Science Fund for Distinguished Young Scholars of China (11125102).

Additional information

Communicated by Francesco Zirilli.

Wang, H., Wu, Z. Partially Observed Time-Inconsistency Recursive Optimization Problem and Application. J Optim Theory Appl 161, 664–687 (2014). https://doi.org/10.1007/s10957-013-0326-4
