Abstract

This study investigated to what extent the implementation of a Google Earth (GE)-based earth science curriculum increased students’ understanding of volcanoes, earthquakes, plate tectonics, scientific reasoning abilities, and science identity. Nine science classrooms participated in the study. In eight of the classrooms, pre- and post-assessments of earth science content, scientific reasoning, and science identity were completed. In one classroom, a staggered implementation of the curriculum was completed to control for student and teacher variables. In all nine classrooms, implementation of the GE curriculum advanced students’ science identity, earth science understanding, and science reasoning, but the curriculum was most transformative in terms of scientific reasoning. Two factors were identified related to student success. Students with strong science identities and high reading proficiencies demonstrated greater science learning outcomes. Math proficiency and gender did not affect learning outcomes.

Introduction

Increasing access to satellite imagery tools such as Google Earth is revolutionizing the work of scientists and changing the way we understand Earth systems. Released in 2005 and downloaded over a billion times by 2011 (Google 2012), Google Earth allows users to access high-quality global information and visualize spatial data patterns (Elvidge and Tuttle 2008; Rakshit and Ogneva-Himmelberger 2008). While science teachers are increasingly incorporating Google Earth (GE) into their teaching, the majority of classroom applications limit students’ involvement to passive observation of locations in space, missing the opportunity to enable learners to manipulate, represent, and analyze spatial data (Bodzin et al. 2014; Bodzin 2011; Hall-Wallace and McAuliffe 2002; Trautmann and MaKinster 2010). In response, project leaders secured National Science Foundation funding to develop, implement, and evaluate a GE-based curriculum designed to foster teacher and student use of GE as a geoscientist would—to view, explore, and create geospatial representations that advance earth science understanding.

Google Earth and Science Learning

The growing research literature on using GE to advance science learning indicates the use of GE promotes spatial thinking and advances science interest and understanding (Bodzin et al. 2013; Thompson et al. 2006; Bailey and Chen 2011; Treves and Bailey 2012). Kulo and Bodzin (2013) developed an 8-week energy unit where eighth-grade students used GE to determine the best location for new solar, wind, and geothermal power facilities. Using pre-/post-content knowledge assessments, findings indicated a significant increase in energy content knowledge with large effect sizes. Wilson et al. (2009) designed an inquiry-based curriculum to introduce middle school students to landscape change. Using GE, students explored changes in plant and animal diversity over time at sites located across the globe. Survey results showed that using GE provided a motivational tool to advance students’ understanding of the relationships between land cover and biological diversity. Cruz and Zellers (2006) compared the use of GE and standard textbook instruction when teaching undergraduate students about landforms. Students in the GE condition demonstrated deeper understanding of the related science content. Barnett et al. (2014) developed an urban ecology curriculum where middle school students used GE to locate and analyze data patterns regarding water quality, urban street trees, bioacoustics, and soil quality. While the data varied across the three study years, the general trend in post-assessment results showed an increase in understanding of urban ecology. Gobert et al. (2012) developed GE curriculum to foster undergraduate student understanding of Iceland’s geology and geography. Using a pre-/post-design, learning gains were identified, regardless of prior knowledge or gender.

Other researchers move beyond the goal of science content learning and contend that the ability to use geospatial technologies such as GE is a basic citizenship requirement given the ubiquitous use of geospatial images and databases across many professional fields (Bednarz and van der Schee 2006; NRC 2006; Makinster et al. 2014). While GE is but one geospatial technology tool, it is widely recognized as the easiest entry point for teachers and students to begin exploring the field of geospatial technologies before pursuing more sophisticated geospatial technologies such as geographic information systems (Kolvoord et al. 2014; Schulz et al. 2008; Whitmeyer et al. 2010; Blank et al. 2012).

Within the growing collection of GE curricula, few attempts have been made to embed GE within a holistic curriculum that scaffolds investigations to systematically build students’ science content understanding, scientific reasoning abilities, and science identities. Further, limited research exists on what factors affect student success within a GE-centered curriculum. Consequently, this study focused on understanding to what extent the implementation of a GE-based curriculum increased students’ science content understanding, scientific reasoning abilities, and science identity and identified factors affecting student success.

Background

Google Earth Curriculum

Project leaders developed a six-week, Google Earth-based, middle school science curriculum covering volcanoes, earthquakes, and plate tectonics. The curriculum targeted three Next Generation Science Standard (NGSS) performance expectations:

- MS-ESS2-2: construct an explanation based on evidence for how geoscience processes have changed Earth’s surface at varying time- and spatial scales;
- MS-ESS2-3: analyze and interpret data on the distribution of fossils and rocks, continental shapes, and seafloor structures to provide evidence of the past plate motions;
- MS-ESS3-2: analyze and interpret data on natural hazards to forecast future catastrophic events and inform the development of technologies to mitigate their effects (NGSS Lead States 2013).

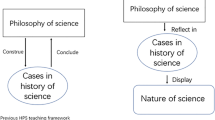

Three modules with three investigations each were developed to introduce and apply twenty-two earth science concepts, seventeen discrete GE skills, and thirty-nine data analysis opportunities (Table 1). In contrast to most curricula addressing an understanding of plate tectonics, the GE curriculum in this study begins with volcanoes, proceeds to earthquakes, and ends with plate tectonics. This was a deliberate attempt to (1) help students experience the historical process of discovery scientific communities have engaged in as they struggled to delineate the mechanisms responsible for observable Earth processes, and (2) foster students’ appreciation and understanding of a scientific worldview.

All materials are available on the curriculum companion Web site, which includes a curriculum overview, teacher’s guide, introductory GE activity, introductory video, GE (kmz) files, student field notebooks, notebook grading rubric, challenge activities (assessments), and answer keys. A detailed description of the curriculum, an overview of the curriculum development process, and analysis of students’ use of GE and development of geospatial skills have been published elsewhere (Blank et al. 2012; Almquist et al. 2012).

Theoretical Framework

A Learning-for-Use design framework (a variant of the Learning Cycle and the Five Es) guided the curriculum development process (Edelson 2001). This design framework was expected to promote engagement and motivation, and foster the development of useful science understandings that students could then apply in new contexts. Four basic principles from cognitive science research guide the Learning-for-Use design framework:

1. Learning takes place through the construction and modification of knowledge structures.
2. Knowledge construction is a goal-directed process that is guided by a combination of conscious and unconscious understanding of goals.
3. The circumstances in which knowledge is constructed and subsequently used determine its accessibility for future use.
4. Knowledge must be constructed in a form that supports use before it can be applied.

These principles form the foundation for a learning process involving (1) motivation, (2) knowledge construction, and (3) knowledge refinement. In this first step, teachers motivate students by creating a need for specific content understanding. Students recognize the usefulness of what they are learning and then develop meaningful constructs for applying new and valuable understandings. In the second step, students build new knowledge structures that are anchored to previous understandings. In the third step, students organize, connect, and reinforce knowledge structures (Anderson 1983; Glaser 1992; Kolodner 1993; Schank 1982; Simon 1980).

As an example, the “Volcano Hazards and Benefits” module challenges students to consider, “Are all volcanoes dangerous?” Students then use GE to visit a series of volcanoes, explore their respective benefits and hazards, collect data (e.g., volcano width, height, and slope), and view a series of related animations highlighting volcano types (e.g., cinder cone, shield, or composite). Students then apply this knowledge to answer the initial question and develop a plan for monitoring volcanic activity in Yellowstone National Park.

Scientific Reasoning

Fostering students’ ability to cumulatively evaluate evidence and construct science explanations through scaffolded investigations served as a central organizing feature for the GE curriculum. Engaging students in the practice of seeking evidence and reasons for their knowledge claims deepens their content knowledge and understanding of science as a social process where knowledge is constructed, not compiled and memorized (Zohar and Nemet 2002; Bell and Linn 2000). Consequently, each investigation in each module included activities calling for the construction of scientific explanations using evidence collected from GE. To support teachers in establishing the use of scientific explanations as a classroom norm, students used a claims, evidence, and reasoning (Table 2) science explanation framework (McNeill and Krajcik 2009, 2012; McNeill et al. 2006).

Prior research has shown that when teachers explicitly define a science explanation and then delineate and model its components, students successfully construct science explanations (Erduran et al. 2004; Lizotte et al. 2004; McNeill and Krajcik 2009). In the first GE module, teachers introduced science explanations and explicitly defined each component: claim—statement in response to a problem or question posed; evidence—scientific data that are appropriate and sufficient to support the claim; reasoning—justification using appropriate and sufficient scientific principles showing why the data count as evidence to support the claim. Teachers then modeled how to construct a science explanation using the claims, evidence, and reasoning (CER) framework, completed science explanations for each investigation as a whole-class activity, and evaluated those explanations as a whole class, providing explicit instruction on features that make a strong or weak claim, evidence, or reasoning.

In the second module, students were again provided the CER framework and grading rubric, but independently developed science explanations and peer-edited their science explanations using the grading rubric. In the third module, students independently developed science explanations without being provided the CER framework and self-assessed their science explanations using a grading rubric.

Science Identity

In developing the curriculum, it was assumed that science is inherently a “community of practice into which members must be acculturated” and that a student’s science identity can be a powerful predictor of science interest and persistence (Calabrese Barton et al. 2013; Carlone and Johnson 2007; Lave and Wenger 1991). Research by Tai et al. (2006) reported that science interest and identity are often more important than achievement in science courses for predicting who will initiate and persist in a science major. They explain this is because increased interest and motivation develop a scientific self-concept (i.e., I am someone who is interested in science) and a scientific possible self (i.e., I can see myself as a scientist one day). Students with strong science identities can imagine themselves as scientists, recognize themselves as competent in the understanding of scientific principles, and are often publicly recognized by others for their abilities (Britner and Pajares 2006). When students have a strong vision of themselves as scientists, they more often pursue and persist in a science major or career.

Given these assumptions, each GE investigation challenged students to explore questions unique to specific scientific careers (e.g., volcanologist and seismologist) and included diverse images and narratives of working scientists (e.g., female and African American).

Math and Reading Proficiency

Using education, labor, and census data, the US STEM Education Model examined 200 variables unique to the goal of increasing the number of students earning a STEM degree (Wells et al. 2007). From their analysis, two dominant variables were identified: proficiency in mathematics and student interest in STEM. Students who are proficient in math and interested in STEM are more likely to pursue and successfully sustain a STEM major or career. Given this finding, it was assumed that math proficiency would be a significant factor in students’ earth science content understanding and scientific reasoning outcomes.

Regarding reading proficiency, it is widely recognized that “The root of deep understanding of science concepts and scientific processes is the ability to use language to form ideas, theorize, reflect, share and debate with others, and ultimately, communicate clearly to different audiences” (Douglas et al. 2006, p. xi). Students with proficient reading levels have more access to content area text and, consequently, experience more success in building science understanding and explanations (Beck et al. 2002). Multimedia environments that offer a variety of reading supports including animation, embedded dictionaries, and videos can enhance content area reading comprehension outcomes (Chambers et al. 2006).

Because the GE curriculum included rich and abundant non-text learning episodes (e.g., GE, video, and flash animations) and an embedded “mouse-over” glossary for all science vocabulary, it was expected that reading proficiency would play a reduced role in students’ science understanding and reasoning outcomes.

Research Questions

Using the curriculum described above, the following research questions were explored:

1. Do students who complete the curriculum experience increased knowledge of volcanoes, earthquakes, and plate tectonics after module completion?
2. Do students who complete the curriculum experience increased scientific reasoning abilities [as defined by the claims, evidence, and reasoning (CER) strategy] after module completion?
3. Do students who complete the curriculum experience increased science identity after module completion?
4. Do science identity, math proficiency, reading proficiency, and gender influence earth science learning outcomes?

Methods

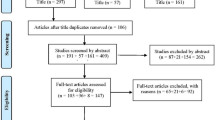

Participants

Teachers were recruited from a large network of Pacific Northwest middle school science teachers who had participated in STEM-related professional development courses sponsored by the authors over the past 8 years. Nine middle school science teachers participated in the study, including five females and four males, all indicating Euro-American as race. Most were experienced teachers with seven having taught earth science for 15 years or more. One was just beginning her second year of teaching.

Participating classes represented both rural and urban communities and ranged in size from six to twenty-nine students per class. A total of 233 students completed the curriculum and all associated assessments: 123 (53 %) males, 110 (47 %) females, 212 Euro-American students (90 %), fourteen (6 %) Native American students, and seven Latino students (Table 3). The share of students receiving free and reduced lunch (FRL) ranged from 10 to 43 percent across classroom sites.

Teacher Training

In April 2011, prior to beginning the curriculum, participating teachers attended a two-day orientation workshop. There they became familiar with the curriculum framework and resources, including the project Web site, teacher’s guides, field notebooks, and GE files. They worked through portions of each module to gain a sense of how the curriculum was set up and of the various types of activities. Teachers were encouraged to ask questions and offer suggestions for curriculum improvements.

Curriculum Implementation and Technical Support

Teachers implemented the six-week curriculum during the 2011–2012 school year. Three teachers covered the material in the fall semester, five in the spring, and one both fall and spring, depending on where it fit into their course schedules and other curricula (102 students in fall; 131 students in spring). As teachers implemented the curriculum, they were provided ongoing technical support from project staff. This assistance primarily concerned hardware and software compatibility, bandwidth, and Internet access issues. Teachers also “checked in” with project leaders several times during their implementations by videoconference to address questions pertaining to scientific content and pedagogy.

Measures

All Classrooms

All students in the nine participating classrooms were assessed for changes in science content understanding, scientific reasoning, and science identity before and after completing the curriculum.

Earth Science Content Understanding Assessment

The earth science content understanding assessment instrument consisted of 30 selected-response (SR) items, including eight items dealing with volcanoes, 10 items on earthquakes, and 12 items on plate tectonics. All SR items were selected from the Misconceptions Oriented Standards-Based Assessment Resources for Teachers (MOSART) database (Sadler et al. 2010). Developed and validated by a team of researchers in the Harvard University Science Education Department, MOSART assessment items are available free to users in the areas of K-12 physical science and earth science content. The MOSART earth science assessment instrument includes a series of SR items linked to national content standards and the research literature on students’ science misconceptions. Each of the 30 SR items in the assessment employed in this study had four choices worth one point for a total of 30 possible points.
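The scoring scheme described above (30 items, one point each, grouped by topic) can be illustrated with a minimal sketch. The item order, the answer key, and the student responses below are hypothetical; the source reports only the number of items per topic.

```python
# Hypothetical item layout: the source gives only the counts per topic
# (8 volcano, 10 earthquake, 12 plate tectonics), not the item order.
TOPIC_ITEMS = {
    "volcanoes": range(0, 8),
    "earthquakes": range(8, 18),
    "plate_tectonics": range(18, 30),
}


def score_assessment(responses, answer_key):
    """Return total, percent, and per-topic scores (one point per correct item)."""
    if len(responses) != len(answer_key):
        raise ValueError("responses and answer key must have the same length")
    correct = [int(r == k) for r, k in zip(responses, answer_key)]
    by_topic = {
        topic: sum(correct[i] for i in items)
        for topic, items in TOPIC_ITEMS.items()
    }
    total = sum(correct)
    return {
        "total": total,
        "percent": 100.0 * total / len(answer_key),
        "by_topic": by_topic,
    }


# Made-up answer key and student responses, for illustration only.
key = ["A", "B", "C", "D"] * 7 + ["A", "B"]  # 30 items
student = list(key)
student[0] = "D"   # one volcano item answered incorrectly
student[10] = "A"  # one earthquake item answered incorrectly

result = score_assessment(student, key)
print(result["total"], result["by_topic"])
```

Reporting sub-scores by topic alongside the total is a design choice of this sketch, not something the study describes.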

Scientific Reasoning Assessment

The curriculum endeavored to advance students’ scientific reasoning skills by promoting and scaffolding student use of the CER strategy when constructing science explanations. While several valid and reliable instruments exist to measure scientific reasoning, none include the CER framework used in the GE curriculum. Consequently, a constructed-response item (CR) measuring students’ ability to develop a science explanation was adapted specifically for this project and administered as part of the science understanding assessment (Fig. 1).

In the CR item, students considered four recordings of seismic waves generated by an earthquake at point R and recorded at two locations, W and X. Based on this information, students were asked to select the seismograph recording that was most accurate and then construct a science explanation to support their decision. A successful CER science explanation included an accurate and complete claim, appropriate and sufficient evidence to support the claim, and reasoning that linked the evidence to the claim including relevant scientific principles.

The CR item was worth 12 points, including four points for each CER dimension. Table 4 delineates the grading rubric used including representative student responses. It should be noted that an inaccurate claim received a score of one, rather than a two, to maintain consistent values when applying the rubric across all three criteria.

Science Identity Survey

The science identity survey instrument was adapted from one developed by Aschbacher and Roth (2009) to measure tenth-grade students’ interests, experiences, and attitudes that influence science identity, science participation, and college and career choices as part of the Caltech Precollege Science Initiative. For the current study, project leaders adapted the reading level (e.g., shorter sentences and concrete and age-appropriate vocabulary) and eliminated questions that did not apply to middle school age learners (e.g., science course selection). The adapted survey included 11 Likert scale items and six SR items covering five science identity dimensions: competence, recognition, performance, perception of scientists, and interest in a science career. For each Likert scale item, students rated their reactions to statements as: agree strongly, agree somewhat, disagree somewhat to disagree strongly (Table 5).

Staggered Implementation Case Study

It was anticipated that (1) teaching style would affect student outcomes, and (2) students would increase scientific content knowledge during the course of the year as a result of hearing stories on the news (e.g., tsunami in Japan) and other engagements with plate tectonics-related content in everyday life. Therefore, in one of the nine classrooms, a staggered implementation case study was used to study the impact of the curriculum while controlling for teacher variables and threats to internal validity. The teacher having the most students (124 of 233) in the overall study agreed to participate in this case study. He was an experienced teacher, having taught science for 15 years. He implemented the GE curriculum in six sections (A–F) of seventh-grade science.

The six sections were divided into two conditions. Condition One included 60 students in sections A, B, and C. These students engaged in the GE curriculum during the fall semester and in the regular curriculum during the spring semester. Condition Two included 64 students in sections D, E, and F. These students participated in the regular curriculum during the fall semester and in the GE curriculum during the spring semester (Table 6).

Students’ science content understanding and scientific reasoning were assessed three times during the school year. Pretests were administered at the beginning of the school year; mid-tests were administered at the end of the fall semester; and posttests were administered at the end of the school year. The pre-, mid-, and posttests were administered to all students at the same time. In addition, fall semester reading and math Measures of Academic Progress® (MAP) test scores (described below) were collected for these students. Not all of the students were present during the administration of each of the tests. There were 124 students at pretest, 113 at mid-test, and 115 at posttest.

Measures of Academic Progress® (MAP) Test Scores

Measures of math and reading proficiency were assessed using the Measures of Academic Progress assessment (MAP). MAP is a standardized, state-aligned, computerized, adaptive assessment program for grades 3–10. Developed by the Northwest Evaluation Association, the MAP measures on- or off-grade proficiency and growth over time in critical reading and math content. It is administered annually by participating school districts to all students in September, February, and May.

Based on item response theory, MAP scores report a RIT (Rasch unit) score that measures individual item difficulty values and a percentile score that indicates where a student falls in relation to the norming group for his or her particular grade level. Scores depend on two things: how many questions are answered correctly and the difficulty of each question. Because the system adapts to the students’ responses based on the accuracy of their answers, the number of questions varies for each student with an average of 20 items/test. MAP data are available only for the case study students, as the other participating schools either did not collect MAP data or did not consent to the use of the MAP data.

Analysis

Paired-Sample t Test

These analyses were conducted to compare pre- and post-implementation test scores in science content understanding and scientific reasoning. Levels of significance were set at p < .05. Effect sizes (ES) are reported as a standardized metric for quantifying the effectiveness of the curricular intervention, where an ES of 0.2 is small, 0.5 is medium, and 0.8 is large (Bodzin 2011; Kantner 2009; Coe 2002).
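As a rough illustration of this analysis, the sketch below computes a paired-sample t statistic and a standardized effect size from pre/post scores. The source does not state which effect size formula was used; the version here (mean difference divided by the standard deviation of the differences) is an assumption, and the scores are invented.

```python
import math
import statistics


def paired_t_and_effect_size(pre, post):
    """Return (t statistic, degrees of freedom, effect size) for paired scores.

    Assumption: the effect size is the mean difference divided by the sample
    standard deviation of the differences. The source does not specify
    which variant it reports.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equally long lists with at least two pairs")
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 in the denominator)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    effect_size = mean_diff / sd_diff
    return t_stat, n - 1, effect_size


def label_effect_size(es):
    """Label an effect size using the 0.2 / 0.5 / 0.8 thresholds cited above."""
    es = abs(es)
    if es >= 0.8:
        return "large"
    if es >= 0.5:
        return "medium"
    if es >= 0.2:
        return "small"
    return "negligible"


# Invented pre/post scores for five students.
pre_scores = [10, 12, 9, 14, 11]
post_scores = [15, 16, 12, 20, 15]

t_stat, df, es = paired_t_and_effect_size(pre_scores, post_scores)
print(round(t_stat, 3), df, round(es, 3), label_effect_size(es))
```

The t statistic would then be compared against the t distribution with n - 1 degrees of freedom to obtain the p value; that step is left to a statistics package.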

Wilcoxon Signed Rank Test

This nonparametric related samples test is used when the dependent data are ordinal, which is the case for science identity outcomes. Consequently, this test was conducted to understand any pre-/posttest differences in students’ content understanding and scientific reasoning abilities related to science identity.
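To make the mechanics of the signed rank test concrete, the following sketch computes the positive and negative rank sums for paired ordinal responses. The numeric coding of the Likert responses (1 = disagree strongly through 4 = agree strongly) and the data are assumptions for illustration; zero differences are dropped and tied absolute differences share an average rank, which is one common convention.

```python
def wilcoxon_signed_rank(pre, post):
    """Return (W_plus, W_minus, n) for paired ordinal scores.

    Zero differences are discarded; tied absolute differences receive
    the average of the ranks they span.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    diffs = [after - before for before, after in zip(pre, post) if after != before]
    ordered = sorted(diffs, key=abs)
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        # Extend j to cover the run of tied absolute differences.
        while j + 1 < len(ordered) and abs(ordered[j + 1]) == abs(ordered[i]):
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    w_plus = sum(r for d, r in zip(ordered, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(ordered, ranks) if d < 0)
    return w_plus, w_minus, len(ordered)


# Invented Likert responses for eight students on one survey item.
pre_item = [2, 3, 1, 2, 4, 3, 2, 1]
post_item = [3, 3, 2, 4, 3, 4, 3, 2]

w_plus, w_minus, n_nonzero = wilcoxon_signed_rank(pre_item, post_item)
print(w_plus, w_minus, n_nonzero)
```

The smaller of the two rank sums is the usual test statistic; obtaining a p value from it requires a reference table or a statistics package.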

Between-Subject ANOVA

The factorial analysis of variance was used to test whether the independent variables of pretest earth science content understanding, gender, math proficiency, reading proficiency, and science identity affected the posttest earth science content understanding. Then, a general linear model was used to explore the combination and degree these items might play in predicting student earth science content understanding outcomes. Partial eta-squared values are provided to understand effect sizes. A small ES is 0.01, medium is 0.06, and large is 0.14 (Cohen 1988, 1992).
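Partial eta-squared is conventionally defined as the effect sum of squares divided by the sum of the effect and error sums of squares. The sketch below applies that standard definition and the thresholds cited above; the sums of squares are invented and do not come from the study.

```python
def partial_eta_squared(ss_effect, ss_error):
    """Standard definition: SS_effect / (SS_effect + SS_error)."""
    return ss_effect / (ss_effect + ss_error)


def partial_eta_squared_from_f(f_value, df_effect, df_error):
    """Equivalent form computed from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def label_partial_eta_squared(value):
    """Label using the 0.01 / 0.06 / 0.14 thresholds cited above."""
    if value >= 0.14:
        return "large"
    if value >= 0.06:
        return "medium"
    if value >= 0.01:
        return "small"
    return "negligible"


# Invented sums of squares for a single one-degree-of-freedom effect.
ss_effect, ss_error = 12.0, 88.0
df_effect, df_error = 1, 87

eta_p2 = partial_eta_squared(ss_effect, ss_error)
f_value = (ss_effect / df_effect) / (ss_error / df_error)
eta_p2_from_f = partial_eta_squared_from_f(f_value, df_effect, df_error)
print(round(eta_p2, 3), label_partial_eta_squared(eta_p2))
```

Both functions return the same value for the same model, which is a quick consistency check when only F and the degrees of freedom are reported.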

Results

Earth Science Content Understanding: All Students

The paired-sample t test for science content understanding for all students indicated statistically significant gains with a large effect size (Table 7). Specifically, the average test score increased from 65 % on the pretest to 94 % on the posttest. The independent samples Mann–Whitney test showed no difference in the distribution of scores for science content understanding across gender. As well, no differences were noted in a fall versus a spring curriculum implementation or across schools.

Scientific Reasoning: All Students

One rater first scored all of the science explanations using the grading rubric in Table 4. Then, a second independent rater scored 20 percent of the assessed science explanations. Inter-rater reliability was calculated by percent agreement. The inter-rater agreement was 100 % for claim, 97 % for evidence, and 93 % for reasoning. Scientific reasoning scores for all students indicated statistically significant gains with large effect sizes (Table 8). The average scores for all students increased from 20 % on the pretest to 47 % on the posttest, a 135 % relative change.
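The two calculations in this paragraph are simple enough to sketch directly: percent agreement between two raters, and the relative change from the pretest mean to the posttest mean. The rater scores below are invented; the 20 % and 47 % figures are the ones reported above.

```python
def percent_agreement(rater_a, rater_b):
    """Percentage of items on which two raters assigned the same score."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return 100.0 * (after - before) / before


# Invented rubric scores from two raters on ten explanations.
first_rater = [4, 3, 2, 4, 1, 3, 2, 4, 3, 2]
second_rater = [4, 3, 2, 4, 1, 3, 3, 4, 3, 2]

agreement = percent_agreement(first_rater, second_rater)
change = percent_change(20, 47)  # pretest and posttest means reported above
print(agreement, change)
```

Percent agreement does not correct for chance agreement; a chance-corrected statistic would be a different measure from the one reported here.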

On the pretest, the majority of student responses included only a claim and one piece of evidence with no reasoning provided. On the posttest, the majority of students’ science explanations included a claim, evidence, and reasoning. For those students who struggled, this was either because: (1) they did not include at least three pieces of evidence, or (2) their reasoning was appropriate, but not sufficient.

To be considered sufficient evidence, students were expected to provide the following three pieces of evidence: (1) The seismograph shows that the seismic waves reached location X before location W; (2) Location X is closer to point R than location W is to point R; and (3) The seismic waves are traveling at the same rate of speed from point R to points W and X. While most students successfully identified the first two pieces of evidence, many failed to include rate of wave speed.

Student science explanation scores were highest in those classrooms where: (1) teachers reported high levels of self-efficacy in creating and teaching science explanations; and (2) teachers first modeled the construction of science explanations as a whole-class activity (scaffold level 1) followed by independent practice (scaffold level 2) with guided peer editing (scaffold level 3) and self-assessment opportunities (scaffold level 4) using the CER grading rubric.

While this scaffolding was outlined in the curriculum, many teachers reported that the development of science explanations took too long so they elected to develop only whole-class science explanations and eliminated the peer edit and self-assessing of science explanations. These proved to be critical decisions in terms of science explanation outcomes. Teachers with the lowest student science explanations scores employed limited scaffolding opportunities for students (Fig. 2). No differences were noted in a fall versus a spring implementation.

Science Identity: All Students

Results for all students indicated that students’ perceptions of themselves as scientists aggregated across the five dimensions increased on average from pretest to posttest (p = .022). When these results were disaggregated by dimension, the related samples Wilcoxon signed rank test showed that one dimension of science identity—performance—significantly contributed to the variance observed in posttest scores for science content understanding and scientific reasoning. Students who viewed themselves as capable of being a good scientist one day—performance—had higher combined scores on the science understanding and scientific reasoning. The Wilcoxon signed rank test yielded no significant differences for the remaining science identity dimensions. As well, no differences were noted in a fall versus a spring implementation or across schools (Table 9).

Earth Science Content Understanding and Math/Reading Proficiencies: Case Study Students

Average September reading and math proficiency scores for all case study students ranged from 226 to 238 in math and from 212 to 220 in reading (Table 10). In seventh grade, a math MAP RIT score of 226 meets the state’s expected performance level; a reading MAP RIT score of 218 meets the state’s expected performance level. These results show that all students met the performance expectations for math, but not for reading. Students from Condition One had an average reading score of 219 and an average math score of 233. Students from Condition Two had an average reading score of 219 and an average math score of 231.

To better understand any role that math and reading proficiency might play in student earth science content understanding outcomes, case study students’ pretest earth science content understanding scores between the two conditions were adjusted using the MAP math and reading scores as covariates. The SPSS general linear model results showed no statistically significant differences in pretest scores at the p < .05 level. The average score for students from Condition One was 36.1 % correct and that for Condition Two students was 34.3 % correct (Table 11).

All students were administered the mid-test right after Condition One students completed the curriculum. As anticipated, Condition One students (who had engaged in the GE curriculum) scored, on average, significantly higher at the p < .05 level than did Condition Two students. Specifically, Condition One students’ average score increased by 8.4 %, whereas Condition Two students’ average score increased by only 1.5 % (Table 12).

Once Condition Two students completed the curriculum, all students were administered the posttest. Condition Two students’ average score increased 12.2 % from mid-test to posttest, while Condition One students’ average score decreased by 2.9 %.

When earth science understanding posttest scores were adjusted by pretest score, and MAP reading and math scores, the data continued to show a significant difference in posttest scores between the two conditions (Table 13) confirming that the curriculum was responsible for the increased earth science content understanding.

Factors Affecting Earth Science Content Understanding and Scientific Reasoning: Case Study Students

A between-subject ANOVA was used to further understand how the independent variables of pretest earth science content understanding, gender, math proficiency, reading proficiency, and science identity dimensions affected the posttest earth science content understanding and scientific reasoning scores. Table 14 shows a significant main effect for reading proficiency, F(1,8) = .001 and science identity, F(1,87) = .022, p < .05.

Science identity and reading proficiency explained 46 % of the variance in earth science content understanding and scientific reasoning scores. Students with strong science identities and high reading proficiencies demonstrated higher learning outcomes on the posttest assessments. The ES for reading (.122) was medium strong, while the ES for science identity was medium (.06).
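The effect size labels can be checked against commonly cited benchmarks. The sketch below assumes the reported ES values are (partial) eta-squared, which the text does not state, and uses the conventional thresholds attributed to Cohen (1988): .01 small, .06 medium, .14 large. The function name is hypothetical.

```python
def classify_eta_squared(es):
    """Label an eta-squared value using conventional benchmarks (assumed)."""
    if es >= 0.14:
        return "large"
    if es >= 0.06:
        return "medium"
    if es >= 0.01:
        return "small"
    return "negligible"

reading_label = classify_eta_squared(0.122)    # reading proficiency
identity_label = classify_eta_squared(0.06)    # science identity

print(reading_label, identity_label)
```

On this reading, the reading proficiency value falls in the upper part of the medium band, which fits the description "medium strong," and the science identity value sits at the medium threshold.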

Discussion

The GE-based curriculum examined in this study was designed to foster teacher and student use of GE as a geoscientist would—to view, explore, and create geospatial representations that advance earth science understanding. Four research questions were asked:

1. Do students who complete the curriculum experience increased knowledge of volcanoes, earthquakes, and plate tectonics after module completion?
2. Do students who complete the curriculum experience increased scientific reasoning abilities (as defined by the claims, evidence, and reasoning (CER) strategy) after module completion?
3. Do students who complete the curriculum experience increased science identity after module completion?
4. Do science identity, math proficiency, reading proficiency, and gender influence earth science learning outcomes?

In answer to research questions one through three, implementation of the GE curriculum advanced students’ science identity; their understanding of volcanoes, earthquakes, and plate tectonics; and their science reasoning abilities as defined by the CER framework. The large effect size for science content understanding is consistent with the findings of previous GE-based curriculum research and confirms that GE can be a useful tool for advancing student science learning.

Still, the curriculum was most transformative in terms of scientific reasoning. A 135 % increase in science reasoning scores confirms this, but an average post-score of 47 % suggests more work is needed to elevate the quality of students’ CER science explanations. Of the three CER components, scientific reasoning proved to be the most challenging. Similar to McNeill et al.’s (2006) findings, students’ reasoning was appropriate, but insufficient. Students successfully related location and timing of the observed seismic activity, but failed to relate the data recordings on the seismograph to the seismic waves generated by the earthquake. Sandoval (2001) suggests insufficient evidence is particularly common for students when they are trying to make sense of data within a new content domain, as was the case for this curriculum.
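To put the 135 % figure in context, the implied starting point can be back-calculated. This is a minimal sketch, assuming the 135 % is a relative increase in the mean score and that 47 % is the corresponding post-score mean; the implied pre-score is derived here and is not reported in this section. The function name is hypothetical.

```python
def implied_pre_score(post_score, percent_increase):
    """Back out the pre-score from a post-score and a relative percent increase."""
    return post_score / (1 + percent_increase / 100)

# Post-score of 47 % after a 135 % relative increase.
pre = round(implied_pre_score(47, 135), 1)
print(f"Implied average pre-score: {pre} %")
```

Under these assumptions, a large relative gain is compatible with a modest absolute post-score because the starting point was low.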

It is also important to note the high standard deviations observed in the science reasoning scores. Several explanations are possible. While the CER strategy was new for all participating teachers, they reported varying levels of self-efficacy in using the CER framework. Five of the nine did not feel completely successful in modeling a science explanation because they were still developing their own explanations. These results suggest the need for continuing teacher professional development in formulating science explanations that use sufficient evidence for claims and reasoning, along with targeted classroom discussion of science explanation criteria and their establishment as a classroom norm (Tabak 2004; Erduran et al. 2004).

Finally, the GE curriculum scaffolding for scientific reasoning included explicit CER definitions, modeling, and rationale, but science explanations were not connected to everyday explanations—a component other researchers have indicated as helpful in developing high-quality science explanations (Moje et al. 2007; McNeill and Krajcik 2009). Adding this component into the GE curriculum and related teacher professional development activities may elevate future science reasoning outcomes.

In terms of research question four, two factors were identified related to student success in using a GE-based curriculum: (1) the capacity of students to see themselves as scientists—a scientific possible self, and (2) reading proficiency. Gender and math proficiency were not important factors. Given that students’ math proficiency levels prior to curriculum implementation were above the expected state proficiency level while the average reading scores were at or below the proficiency level in several classes, this outcome is understandable, but problematic.

Even though the GE curriculum provided rich and abundant non-text learning episodes (e.g., GE, video, and flash animations), reading proficiency remained a stable factor in science understanding outcomes. Cates et al. (2003) found that low-proficiency readers become easily distracted in technology-rich environments when more than one browser window is open. Within the GE curriculum, students often had a second window open. Future curriculum revisions are planned to limit these kinds of distractions.

As well, developing science explanations requires students to sift through competing relevant evidence—an advanced literacy task. Perhaps this is an area where science and language arts teachers can collaborate to effect greater science reasoning and ELA Common Core outcomes. Being able to “read, write, and speak grounded in evidence” is a performance expectation outlined in the English Language Arts (ELA) Common Core. What level of reading proficiency is needed for construction of high-quality science explanations? What strategies successfully help students identify competing relevant evidence?

Regarding a scientific possible self, students with strong science identities on the performance dimension had higher science understanding and reasoning outcomes. Spiegel et al. (2013) argue this is because students with strong science identities are more receptive to and engaged with science materials as a form of self-verification. In this study, low science identity students selected “agree strongly” with the science identity assessment item, “Being a scientist takes too much time.” When students “… can’t see a clear, transparent connection between their program of study and tangible opportunities in the labor market,” they often discard the idea of a STEM career (Harvard Graduate School of Education Pathways to Prosperity 2011). Given the plethora of jobs in science that require a two-year degree, perhaps the curriculum would have been more successful in developing positive science identities if science career opportunities highlighted a full range of training and career pathways. As it was, a review of the curriculum revealed few examples of scientists working with a two-year degree.

Targeting this aspect of science identity could increase student engagement in the GE curriculum. Hidi and Renninger (2006) confirm that low science identity can be enhanced by first triggering attention to a topic—situational interest. Providing near-peer scientific role models, rather than interfacing only with working scientists well into their careers, as was the case in the GE curriculum, may help students appreciate that completing a science career pathway is personally achievable. Possible future research questions include “What kinds of interactions with near-peers create situational interest?” “How many interactions are needed?”

Finally, it is interesting to compare these results with Bodzin and Fu (2014). They implemented a climate change GE curriculum with eighth-grade students and also reported increased science understanding using a pre-/post-design. In contrast to our results, Bodzin and Fu found gender, students’ initial climate change knowledge, and teaching years to be significant predictors for students’ posttest scores, with students’ pretest scores being the strongest predictor. Continued research efforts focused on identifying factors that predict student outcomes are recommended to help guide future GE curriculum efforts.

References

Almquist H, Blank L, Estrada J (2012) Developing a scope and sequence for using Google Earth in the middle school earth science classroom. Geol Soc Am Spec Pap 492:403–412

Anderson JR (1983) The architecture of cognition. Harvard University Press, Cambridge

Aschbacher P, Roth E (2009) Is science me? High school students’ identities, participation and aspirations in science, engineering, and medicine. J Res Sci Teach 47(5):564–582

Bailey J, Chen A (2011) The role of virtual globes in geosciences. Comput Geosci 37(1):1–2

Barnett M, Houle M, Hirsch L, Minner D, Strauss E, Mark S, Hoffman E (2014) Participatory professional development: geospatially enhanced urban ecological field studies. In: Makinster J, Trautmann N, Barnett M (eds) Teaching science and investigating environmental issues with geospatial technology: designing effective professional development for teachers. Springer, Dordrecht, pp 13–33

Beck IL, McKeown MG, Kucan L (2002) Improving reading comprehension: research-based principles and practices. York Press, Baltimore

Bednarz SW, van der Schee Joop (2006) Europe and the United States: the implementation of Geographical Information Systems in secondary education in two contexts. Technol Pedag Educ 15(2):191–206

Bell P, Linn MC (2000) Scientific arguments as learning artifacts: designing learning from the Web with KIE. Int J Sci Educ 22:797–817

Blank LM, Plautz M, Almquist H, Crews J, Estrada J (2012) Using Google Earth as a gateway to teach plate tectonics and science explanations. Sci Scope 35(9):41–48

Bodzin A (2011) The implementation of a geospatial information technology (GIT)-supported land use change curriculum with urban middle school learners to promote spatial thinking. J Res Sci Teach 48(3):281–300

Bodzin A, Fu Q (2014) The effectiveness of the geospatial curriculum approach on urban middle-level students’ climate change understandings. J Sci Educ Technol 23(4):575–590

Bodzin A, Anastasio D, Kulo V (2013) Designing Google Earth activities for learning earth and environmental science. In: Makinster J, Trautmann N, Barnett M (eds) Teaching science and investigating environmental issues with geospatial technology: designing effective professional development for teachers. Springer, Dordrecht, pp 213–232

Bodzin A, Fu Q, Kulo V, Peffer T (2014) Examining the effect of enactment of a geospatial curriculum on students’ geospatial thinking and reasoning. J Sci Educ Technol 23(4):575–590

Britner SL, Pajares F (2006) Sources of self-efficacy beliefs of middle school students. J Res Sci Teach 43(5):485–499

Calabrese Barton A, Kang H, Tan E, O’Neil T, Guerra JB, Brecklin C (2013) Crafting a future in science. Am Educ Res J 50(1):37–75

Carlone H, Johnson A (2007) Understanding the science experiences of successful women of color: science identity as an analytic lens. J Res Sci Teach 44(8):1187–1218

Cates M, Price B, Bodzin A (2003) Implementing technology-rich materials: findings from the exploring Life project. Comput Schools 20(1/2):153–169

Chambers B, Cheung A, Madden N, Slavin RE, Gifford R (2006) Achievement effects of embedded multimedia in a Success for All reading program. J Educ Psychol 98(1):232–237

Coe R (2002) It’s the effect size, stupid: what effect size is and why it is important. Paper presented at the British Educational Association: University of Exeter

Cohen J (1988) Statistical power analysis for the behavioral sciences, 2nd edn. Erlbaum, Hillsdale

Cohen J (1992) A power primer. Psychol Bull 112(1):155–159

Cruz D, Zellers SD (2006) Effectiveness of Google Earth in the study of geologic landforms. Geol Soc Am Abstr Programs 38(7):498

Douglas R, Klentschy M, Worth K, Binder W (eds) (2006) Linking science and literacy in the K-8 classroom. NSTA Press, Arlington

Edelson D (2001) Learning-for-use: a framework for the design of technology-supported inquiry activities. J Res Sci Teach 38(3):355–385

Elvidge CD, Tuttle BT (2008) How virtual globes are revolutionizing earth observation data access and integration. Int Arch Photogramm Remote Sens Spat Inf Sci 37(B6a):137–140

Erduran S, Simon S, Osborne J (2004) TAPping into argumentation: developments in the application of Toulmin’s argument pattern for studying science discourse. Sci Educ 88:915–933

Glaser R (1992) Expert knowledge and process of thinking. In: Halpern DF (ed) Enhancing thinking skills in the sciences and mathematics. Erlbaum, Hillsdale

Gobert J, Wild S, Rossi L (2012) Testing the effects of prior coursework and gender on geoscience learning with Google Earth. Geol Soc Am Spec Pap 492:453–468

Google (2012) Google in education: a new and open world for learning. Retrieved from http://www.google.com/edu/about.html. 27 May 2013

Hall-Wallace MK, McAuliffe CM (2002) Design, implementation, and evaluation of GIS-based learning materials in an introductory geoscience course. J Geosci Educ 50(1):5–14

Harvard Graduate School of Education Pathways to Prosperity Project (2011) Meeting the Challenge of Preparing Young Americans for the 21st Century. Harvard, Boston

Hidi S, Renninger KA (2006) The four-phase model of interest development. Educ Psychol 41(2):111–127

Kantner DE (2009) Doing the project and learning the content. Designing project-based curricula for meaningful understanding. Sci Educ 94(3):525–551

Kolodner JL (1993) Case-based reasoning. Morgan Kaufmann, San Mateo

Kolvoord J, Charles M, Purcell S (2014) What happens after the professional development: case studies on implementing GIS in the classroom. In: Makinster J, Trautmann N, Barnett M (eds) Teaching science and investigating environmental issues with geospatial technology: designing effective professional development for teachers. Springer, Dordrecht, pp 13–33

Kulo V, Bodzin A (2013) The impact of a geospatial technology-supported energy curriculum on middle school students’ science achievement. J Sci Educ Technol 22(1):25–36

Lave J, Wenger E (1991) Situated learning: legitimate peripheral participation. Cambridge University Press, Cambridge

Lizotte DJ, McNeill KL, Krajcik J (2004) Teacher practices that support students’ construction of scientific explanations in middle school classrooms. In: Kafai Y, Sandoval W, Enyedy N, Nixon A, Herrera F (eds) Proceedings of the Sixth International Conference of the Learning Sciences. Lawrence Erlbaum, Mahwah, pp 310–317

Makinster J, Trautmann N, Barnett GM (2014) Teaching science and investigating environmental issues with geospatial technology: designing effective professional development for teachers, edited volume. Springer, Dordrecht

McNeill KL, Krajcik J (2009) Synergy between teacher practices and curricular scaffolds to support students in using domain specific and domain general knowledge in writing arguments to explain phenomena. J Learn Sci 18(3):416–460

McNeill KL, Krajcik J (2012) Supporting grade 5–8 students in constructing explanations in science: the claim, evidence, and reasoning framework for talk and writing. Pearson Education, Upper Saddle River

McNeill KL, Lizotte DJ, Krajcik J, Marx RW (2006) Supporting students’ construction of scientific explanations by fading scaffolds in instructional materials. J Learn Sci 15(2):153–191

Moje EB, Tucker-Raymond E, Varelas M, Pappas CC (2007) FORUM: giving oneself over to science—exploring the roles of subjectivities and identities in learning science. Cult Sci Edu 1:593–601

NGSS Lead States (2013) Next generation science standards: for states, by states. The National Academies Press, Washington

NRC (2006) Learning to think spatially: GIS as a support system in K-12 education. National Academy Press, Washington

Rakshit R, Ogneva-Himmelberger Y (2008) Application of virtual globes in education. Geogr Compass 2(6):1995–2010

Sadler P, Coyle H, Miller J, Cook-Smith N, Dussault M, Gould R (2010) The astronomy and space science concept inventory: development and validation of assessment instruments aligned with the K-12 National Science Standards. Astron Educ Rev, 8:010111-1, 10.3847. http://www.cfa.harvard.edu/smgphp/mosart/images/sadler_article.pdf

Sandoval W (2001) Conceptual and epistemic aspects of students’ scientific explanations. J Learn Sci 12(1):5–51

Schank RC (1982) Dynamic memory. Cambridge University Press, Cambridge

Schulz R, Kerski J, Patterson T (2008) The use of virtual globes as a spatial teaching tool with suggestions for metadata standards. J Geogr 107(1):27–34

Simon HA (1980) Problem solving and education. In: Tuma DT, Reif R (eds) Problem solving and education: issues in teaching and research. Erlbaum, Hillsdale, pp 81–96

Spiegel A, McQuillan J, Halpin P, Matuk C, Diamond J (2013) Engaging teenagers with science through comics. Res Sci Educ 43(6):2310–2326

Tabak I (2004) Synergy: a complement to emerging patterns in distributed scaffolding. J Learn Sci 13(3):305–335

Tai R, Liu C, Maltese A, Fan X (2006) Planning early for careers in science. Science 312:1143–1144

Thompson K, Swan RH, Hambia WK (2006) Linking geoscience visualization tools: Google Earth, oblique aerial panoramas, and illustrations and mapping software. Geol Soc Am Abstr Programs 38(7):325

Trautmann NM, MaKinster JG (2010) Flexibly adaptive professional development in support of teaching science with geospatial technology. J Sci Teacher Educ 21:351–370. doi:10.1007/s10972-009-9181-4

Treves R, Bailey J (2012) Best practices on how to design Google Earth tours for education. Geol Soc Am Spec Pap 492:383–394

Wells B, Sanchez A, Attridge J (2007) Systems engineering the US Education System. Raytheon Co, Waltham

Whitmeyer SJ, Nicoletti J, De Paor DG (2010) The digital revolution in geologic mapping. GSA Today 20(4):4–10

Wilson C, Murphy J, Trautmann N, Makinster J (2009) Local to global: a bird’s eye view of changing landscapes. Am Biol Teach 71(7):412–417

Zohar A, Nemet F (2002) Fostering students’ knowledge and argumentation skills through dilemmas in human genetics. J Res Sci Teach 39:35–62

Acknowledgments

We sincerely thank the dedicated classroom teachers who made this project possible. This material is based upon work supported by the National Science Foundation, Grant No. DRL-0918683. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Cite this article

Blank, L.M., Almquist, H., Estrada, J. et al. Factors Affecting Student Success with a Google Earth-Based Earth Science Curriculum. J Sci Educ Technol 25, 77–90 (2016). https://doi.org/10.1007/s10956-015-9578-0

DOI: https://doi.org/10.1007/s10956-015-9578-0