Abstract

A fast two-level linearized scheme with nonuniform time-steps is constructed and analyzed for an initial-boundary-value problem of semilinear subdiffusion equations. The two-level fast L1 formula of the Caputo derivative is derived based on the sum-of-exponentials technique. The resulting fast algorithm is computationally efficient in long-time simulations or small time-steps because it significantly reduces the computational cost \(O(MN^2)\) and storage O(MN) for the standard L1 formula to \(O(MN\log N)\) and \(O(M\log N)\), respectively, for M grid points in space and N levels in time. The nonuniform time mesh would be graded to handle the typical singularity of the solution near the time \(t=0\), and Newton linearization is used to approximate the nonlinearity term. Our analysis relies on three tools: a recently developed discrete fractional Grönwall inequality, a global consistency analysis and a discrete \(H^2\) energy method. A sharp error estimate reflecting the regularity of solution is established without any restriction on the relative diameters of the temporal and spatial mesh sizes. Numerical examples are provided to demonstrate the effectiveness of our approach and the sharpness of error analysis.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A two-level linearized method is considered to numerically solve the following semilinear subdiffusion equation on a bounded domain

where \(\partial \Omega \) is the boundary of \(\Omega :=(x_{l},x_{r})\times (y_{l},y_{r})\), and the nonlinear function f(u) is smooth. In (1.1a), \({\mathcal {D}}^{\alpha }_t={}_{~0}^{C}\!{\mathcal {D}}_t^{\alpha }\) denotes the Caputo fractional derivative of order \(\alpha \):

where the weakly singular kernel \(\omega _{1-\alpha }\) is defined by \(\omega _{\mu }(t):=t^{\mu -1}/{\Gamma (\mu )}\). It is easy to verify that \(\omega _{\mu }^{\prime }(t)=\omega _{\mu -1}(t)\) and \(\int _0^t\omega _{\mu }(s)\,\mathrm {d}s=\omega _{\mu +1}(t)\) for \(t>0\).

In any numerical methods for solving semilinear fractional diffusion equations (1.1a), a key consideration is the singularity of the solution near the time \(t=0\), see [5, 9, 19, 23]. For example, under the assumption that the nonlinear function f is Lipschitz continuous and the initial data \(u^0\in H^2(\Omega )\cap H_0^1(\Omega )\), Jin et al. [5, Theorem 3.1] prove that problem (1.1) has a unique solution u for which \(u\in C\left( [0,T];H^2(\Omega )\cap H_0^1(\Omega )\right) \), \({\mathcal {D}}^{\alpha }_tu\in C\left( [0,T];L^2(\Omega )\right) \) and \( \partial _t u \in L^2(\Omega )\) with

where \(C_u>0\) is a constant independent of t but may depend on T. Their analysis of numerical methods for solving (1.1) is applicable to both the L1 scheme and backward Euler convolution quadrature on a uniform time grid of diameter \(\tau \); a lagging linearized technique is used to handle the nonlinearity f(u), and [5, Theorem 4.5] shows that the discrete solution is \(O(\tau ^{\alpha })\) convergent in \(L^\infty (L^2)\).

This work may be considered as a continuation of [14], in which a sharp error estimate for the L1 formula on nonuniform meshes was obtained for linear subdiffusion-reaction equations based on a discrete fractional Grönwall inequality and a global consistency analysis. In this paper, we combine the L1 formula and the sum-of-exponentials (SOEs) technique to develop a one-step fast difference algorithm for the semilinear subdiffusion problem (1.1) by using the Newton’s linearization to approximate nonlinear term, and present the corresponding sharp error estimate of the proposed scheme without any restriction on the relative diameters of temporal and spatial mesh sizes.

It is known that the Caputo fractional derivative involves a convolution kernel. The total number of operations required to evaluate the sum of L1 formula is proportional to \(O(N^2)\), and the active memory to O(N) with N representing the total time steps, which is prohibitively expensive for the practically large-scale and long-time simulations. Recently, a simple fast algorithm based on SOEs approximation is proposed to significantly reduce the computational complexity to \(O(N \log N)\) and \(O(\log N)\) when the final time \(T\gg 1\), see [4, 10]. For an evolution equation with memory, a fast summation algorithm was also proposed [17] by interval clustering. Very recently, another fast algorithm for the evaluation of the fractional derivative has been proposed in [1], where the compression is carried out in the Laplace domain by solving the equivalent ODE with some one-step A-stable scheme. Here the technique of SOEs approximation is used to develop a two-level fast L1 formula by combining a nonuniform mesh suited to the initial singularity with a fast time-stepping algorithm for the historical memory in (1.2). As an interesting property, this scheme computes the current solution by only using the solution at previous time-level, so it would be useful to develop efficient parallel-in-time algorithms, cf. [21], for time-fractional differential equations.

On the other hand, the nonlinearity of the problem also results in the difficulty for the numerical analysis. To establish an error estimate of the two-level linearized scheme at time \(t_n\), it always requires to prove the boundedness of the numerical solution at the previous time level, that is \(\Vert u^{n-1}\Vert _{\infty }\le C_u\). Traditionally it is gotten by using the mathematical induction and some inverse estimate, assuming the underlaying scheme is accurate of order \(O(\tau ^{\beta }+h^2)\) with \(\beta \) representing the temporal convergence order,

This leads to a restriction of the time-space grid \(\tau =O(h^{1/\beta })\) in the theoretical analysis even though it is nonphysical and may be unnecessary in numerical simulations. In this paper, we will extend the discrete \(H^2\) energy method developed in [11,12,13] to prove unconditional convergence of our fully discrete solution without any time-space grid restrictions. The main idea of discrete \(H^2\) energy method is to separately treat the temporal and spatial truncation errors. This method avoids some nonphysical time-space grid restrictions in the error analysis. Another related approach in a finite element setting is discussed in [6,7,8].

The convergence rate of L1 formula for the Caputo derivative is limited by the smoothness of the solution. We approximate the Caputo fractional derivative (1.2) on a (possibly nonuniform) time mesh \(0=t_0<\cdots<t_{k-1}<t_{k}<\cdots <t_N=T\), with the time-step sizes \(\tau _k:=t_k-t_{k-1}\) for \(1\le k\le N\), the maximum time-step \(\tau =\max _{1\le k\le N}\tau _k\) and the step size ratios

The analysis here is based on the following assumptions on the continuous solution

for \(0< t \le T\), where \(\sigma \in (0,1)\cup (1,2)\) is a regularity parameter. To satisfy the regularity conditions in (1.3), appropriate regularity and compatibility assumptions should be imposed on the given data in problem (1.1). Investigating this is beyond the scope of the paper. Noting that, the first two regularity conditions in (1.3) can be relaxed by using the finite elements instead of finite differences [15]. Throughout the paper, any subscripted C, such as \(C_u\), \(C_{\gamma }\), \(C_{\Omega }\), \(C_v\), \(C_0\) and \(C_{F}\), denotes a generic positive constant, not necessarily the same at different occurrences, which is always dependent on the given data and the continuous solution u, but independent of the time-space grid steps.

To resolve the singularity at \(t=0\), it is reasonable to use a nonuniform mesh that concentrates grid points near \(t=0\), see [2, 3, 14, 16, 20]. We make the following assumption on the time mesh:

- AssG :

-

For a parameter \(\gamma \ge 1\), there are positive constant \(C_{\gamma }\) and \({\widetilde{C}}_{\gamma }\), independent of k, such that \(\tau _k\le C_{\gamma }\tau \min \{1,t_k^{1-1/\gamma }\}\) for \(1\le k\le N\), and \(t_{k}\le {\widetilde{C}}_{\gamma }t_{k-1}\) for \(2\le k\le N\).

The assumption AssG implies that \(\tau _1=O(\tau ^{\gamma })\), and allows the time-step size \(\tau _k\) to increase as the time \(t_k\) increases, meanwhile, one may has \(\tau _k=O(\tau )\) as those bounded \(t_k\) away from \(t=0\). The parameter \(\gamma \) controls the distribution density of the grid points concentrated near \(t=0\): increasing \(\gamma \) will refine the time-step sizes near \(t=0\) and so move mesh points closer to \(t=0\). A simple example of a family of meshes satisfying AssG is the graded grid \(t_k=T(k/N)^{\gamma }\), see discussions in [2, 14, 16, 18,19,20]. Although nonuniform time meshes are flexible and reasonably convenient for practical implementations, they also significantly complicate the numerical analysis of schemes, both with respect to stability and consistency. In this paper, our analysis will rely on a generalized fractional Grönwall inequality [15], which would be applicable for any discrete fractional derivatives having the discrete convolution form. As the main result shown in Theorem 4.2, the proposed two-level linearized fast scheme is proved to be unconditionally convergent in the sense that (\(\epsilon \) is the SOE approximation error and h is the maximum spatial length)

where \(C_u\) may depend on u and T, but is uniformly bounded with respect to \(\alpha \) and \(\sigma \).

The paper is organized as follows. Section 2 presents the two-level fast L1 formula and the corresponding linearized fast scheme. The global consistency analysis of fast L1 formula and the Newton’s linearization are presented in Sect. 3. A sharp error estimate for the linearized fast scheme is proved in Sect. 4. Two numerical examples in Sect. 5 are given to demonstrate the sharpness of our analysis.

2 A Two-Level Fast Method

In space we use a standard finite difference method on a tensor product grid. Let \(M_1\) and \(M_2\) be two positive integers. Set \(h_{1}=(x_{r}-x_{l})/M_1, \ h_{2}=(y_{r}-y_{l})/ M_2\) and the maximum spatial length \(h=\max \{h_1,h_2\}\). Then the fully discrete spatial grid

Set \({\Omega }_h={{\bar{\Omega }}}_h\cap \Omega \) and the boundary \(\partial \Omega _h={{\bar{\Omega }}}_h\cap \partial \Omega \). Given a grid function \(v = \{v_{ij}\}\), define

Also, discrete operators \(v_{i,j-\frac{1}{2}},\ \delta _yv_{i,j-\frac{1}{2}}, \ \delta _x\delta _yv_{i-\frac{1}{2},j-\frac{1}{2}}\) and \(\delta _y^2v_{ij}\) can be defined analogously. The second-order approximation of \(\Delta v(\varvec{x}_{h})\) for \(\varvec{x}_h\in \Omega _h\) is \(\Delta _hv_{h} := (\delta _x^2+\delta _y^2)v_{h}\). Let \({\mathcal {V}}_{h}\) be the space of grid functions,

For \(v, w \in {\mathcal {V}}_h\), define the discrete inner product \(\left\langle v,w\right\rangle =h_1h_2\sum _{\varvec{x}_h\in \Omega _h}v_{h}w_{h}\), the discrete \(L^2\) norm \(\Vert v\Vert :=\sqrt{\left\langle v,v\right\rangle }\), the discrete \(H^1\) seminorms

\(\Vert \nabla _h v\Vert =\sqrt{\Vert \delta _xv\Vert ^2+\Vert \delta _yv\Vert ^2}\) and the maximum norm \(\Vert v\Vert _{\infty }=\max _{\varvec{x}_h\in \Omega _h}\left| v_h\right| \). For any \(v\in {\mathcal {V}}_{h}\), by [13, Lemmas 2.1, 2.2 and 2.5] there exists a constant \(C_{\Omega }>0\) such that

2.1 A Fast Variant of the L1 Formula

On our nonuniform mesh, the standard L1 approximation of the Caputo derivative is

where \(\nabla _{\tau }v^k:=v^k-v^{k-1}\) and the convolution kernel \(a_{n-k}^{(n)}\) is defined by

Lemma 2.1

For any fixed integer \(n\ge 2\), the convolution kernel \(a^{(n)}_{n-k}\) of (2.3) satisfies

-

(i)

\(\displaystyle a_{n-k-1}^{(n)}>\omega _{1-\alpha }(t_n-t_{k})>a_{n-k}^{(n)},\quad 1\le k\le n-1;\)

-

(ii)

\(\displaystyle a^{(n)}_{n-k-1}-a^{(n)}_{n-k}>\tfrac{1}{2}\left[ \omega _{1-\alpha }(t_{n}-t_k) -\omega _{1-\alpha }(t_{n}-t_{k-1})\right] ,\quad 1\le k\le n-1.\)

Proof

The integral mean value theorem yields

which implies the result (i) directly since the kernel \(\omega _{1-\alpha }\) is decreasing, also see [14, 23]. For any function \(q\in C^2[t_{k-1},t_{k}]\), let \(\Pi _{1,k}q\) be the linear interpolant of q(t) at \(t_{k-1}\) and \(t_k\). Let

be the error in this interpolant. For \(q(s)=\omega _{1-\alpha }(t_{n}-s)\) one has \(q^{\prime \prime }(s)=\omega _{-\alpha -1}(t_{n}-s)>0\) for \(0<s<t_n\), so the Peano representation of the interpolation error [14, Lemma 3.1] shows that

Thus the definition (2.3) of \(a^{(n)}_{n-k}\) yields

Subtract this inequality from (i) to obtain (ii) immediately. \(\square \)

One can see that the direct evaluation of the L1 formula (2.2) is quite inefficient as it requires the information of solutions at all previous time levels while solving problem (1.1). This motivates us to develop a fast L1 formula based on the SOEs approach given in [4, 10, 22]. A basic result of SOE approximation (see [4, Theorem 2.5] or [22, Lemma 2.2]) is as follows:

Lemma 2.2

Given \(\alpha \in (0,1)\), an absolute tolerance error \(\epsilon \ll 1\), a cut-off time \(\Delta {t}>0\) and a final time T, there exists a positive integer \(N_{q}\), positive quadrature nodes \(\theta ^{\ell }\) and positive weights \(\varpi ^{\ell }\)\((1\le \ell \le N_q)\) such that

where the number \(N_q\) of quadrature nodes satisfies

To design the fast L1 algorithm, we divide the Caputo derivative \(({\mathcal {D}}^{\alpha }_tv)(t_n)\) of (1.2) into a sum of a local part (an integral over \([t_{n-1}, t_n]\)) and a history part (an integral over \([0, t_{n-1}]\)), and approximate \(v^{\prime }\) by linear interpolation in the local part (as the same as the standard L1 method) and use the SOE technique of Lemma 2.2 to approximate the kernel \(\omega _{1-\alpha } (t-s)\) in the history part. It arrives at

where \({\mathcal {H}}^{\ell }(t_k) := \int _0^{t_{k}}e^{-\theta ^{\ell }(t_k-s)}u^{\prime }(s)\,\mathrm {d}s\) with \({\mathcal {H}}^{\ell }(t_0)=0\) for \(1\le \ell \le N_q\,\). To compute \({\mathcal {H}}^{\ell }(t_k)\) efficiently we apply linear interpolation in each cell \([t_{k-1}, t_{k}]\) to have

where the positive coefficient is given by

In summary, we now have the two-level fast L1 formula

where \(H^{\ell }(t_{k})\) satisfies \(H^{\ell }(t_{0})=0\) and the recurrence relationship

2.2 The Two-Level Linearized Scheme

Let \(U_{h}^n=u(\varvec{x}_h,t_n)\) for \(\varvec{x}_h\in {\bar{\Omega }}_h\), \(0\le n\le N\), and \(u_{h}^n\) be the discrete approximation of \(U_{h}^n\). Using the fast L1 formula (2.5) and the Newton linearization, we obtain a linearized scheme for problem (1.1): find \(\{u_h^{N}\}\in {\mathcal {V}}_{h}\) such that

The Newton linearization of a general nonlinear function \(f=f(\varvec{x},t,u)\) at \(t=t_n\) is taken as the form

The scheme (2.6) is a two-level procedure for computing \(\{u_h^{n}\}\), because (2.6a) can be equivalently reformulated as

Thus, once the values \(\{u_h^{n-1},\;H_h^{\ell }(t_{n-1})\}\) at the previous time-level \(t_{n-1}\) are available, the current solution \(\{u_h^{n}\}\) can be found by (2.7) with a fast matrix solver and the historic term \(\{H_h^{\ell }(t_{n})\}\) will be updated explicitly by the recurrence formula (2.8).

Remark 2.3

At each time level the scheme (2.6) requires \(O(MN_q)\) storage and \(O(MN_q)\) operations, where \(M=M_1M_2\) is the total number of spatial grid points. Given a tolerance error \(\epsilon _0\), by virtue of Lemma 2.2, the number of quadrature nodes \(N_q=O(\log N)\) if the final time \(T\gg 1\). Hence our fast method is efficient for long time simulations since it computes the final solution using in total \(O(M\log N)\) storage and \(O(MN\log N)\) operations.

2.3 Discrete Fractional Grönwall Inequality

Our analysis relies on a generalized discrete fractional Grönwall inequality developed in [15], which is applicable for any discrete fractional derivative having the discrete convolution form

provided that \(A^{(n)}_{n-k}\) and the time-steps \(\tau _n\) satisfy the following three assumptions:

- Ass1 :

-

The discrete kernel is monotone, that is,

$$\begin{aligned} A^{(n)}_{k-2}\ge A^{(n)}_{k-1}>0\quad \text {for }2\le k\le n\le N. \end{aligned}$$ - Ass2 :

-

There is a constant \(\pi _A>0\) such that

$$\begin{aligned} A^{(n)}_{n-k}\ge \frac{1}{\pi _A}\int _{t_{k-1}}^{t_k} \frac{\omega _{1-\alpha }(t_n-s)}{\tau _k}\,\mathrm {d}{s}\quad \text {for }1\le k\le n\le N. \end{aligned}$$ - Ass3 :

-

There is a constant \(\rho >0\) such that the time-step ratios

$$\begin{aligned} \rho _k\le \rho \quad \text {for }1\le k\le N-1. \end{aligned}$$

The complementary discrete kernel \(P^{(n)}_{n-k}\) was introduced by Liao et al. [14, 15]; it satisfies the following identity

Rearranging this identity yields a recursive formula that defines \(P^{(n)}_{n-k}\) :

From [15, Lemma 2.2] one can see that \(P^{(n)}_{n-k}\) is well-defined and non-negative if the assumption Ass1 holds true. Furthermore, if Ass2 holds true, then

Recall that the Mittag–Leffler function \(E_\alpha (z) = \sum _{k=0}^\infty \frac{z^k}{\Gamma (1+k\alpha )}\). We state the following (slightly simplified) version of [15, Theorem 3.2]. This result differs substantially from the fractional Grönwall inequality of Jin et al. [5, Theorem 4] since it is valid on very general nonuniform time meshes.

Theorem 2.4

Let Ass1–Ass3 hold true. Suppose that the sequences \((\xi _1^n)_{n=1}^N\), \((\xi _2^n)_{n=1}^N\) are nonnegative. Assume that \(\lambda _0\) and \(\lambda _1\) are non-negative constants and the maximum step size \(\tau \le 1/\root \alpha \of {2\pi _A\Gamma (2-\alpha )(\lambda _0+\lambda _1)}\). If the nonnegative sequence \((v^k)_{k=0}^N\) satisfies

then it holds that for \(1\le n\le N\),

To facilitate our analysis, we now eliminate the historic term \(H^{\ell }(t_{n})\) from the fast L1 formula (2.5a) for \( (D^{\alpha }_fu)^n\). From the recurrence relationship (2.5b), it is easy to see that

Inserting this in (2.5a) and using the definition (2.4), one obtains the alternative formula

where the discrete convolution kernel \(A_{n-k}^{(n)}\) is henceforth given as

The formula (2.13) takes the form of (2.9), and we now verify that our \(A_{n-k}^{(n)}\) defined by (2.14) satisfy Ass1 and Ass2, allowing us to apply Theorem 2.4 and establish the convergence of our computed solution. Part (I) of the next lemma ensures that Ass1 is valid, while part (II) implies that Ass2 holds true with \(\pi _A=\frac{3}{2}\).

Lemma 2.5

If the tolerance error \(\epsilon \) of SOE satisfies \(\epsilon \le \min \left\{ \frac{1}{3}\omega _{1-\alpha }(T),\alpha \,\omega _{2-\alpha }(T)\right\} \), then the discrete convolutional kernel \(A_{n-k}^{(n)}\) of (2.14) satisfies

-

(I)

\( A_{k-1}^{(n)}>A_{k}^{(n)}>0,\;\;1\le k\le n-1;\)

-

(II)

\( A_{0}^{(n)}=a_{0}^{(n)}\) and \(A_{n-k}^{(n)}\ge \frac{2}{3}a_{n-k}^{(n)},\;\; 1\le k\le n-1.\)

Proof

The definition (2.3) and Lemma 2.1 (i) yield

The definition (2.14) and Lemma 2.2 imply that \(A_{0}^{(n)}=a_{0}^{(n)}>a_{1}^{(n)}+\epsilon >A_{1}^{(n)}\). Lemma 2.2 also shows that \(\theta ^{\ell }, \varpi ^{\ell }>0\) for \(\ell =1,\dots , N_q\); the mean-value theorem now yields property (I). By Lemma 2.1 (i) and our hypothesis on \(\epsilon \) we have

Hence Lemma 2.2 gives

The proof is complete. \(\square \)

3 Global Consistency Error Analysis

We now proceed with the consistency error analysis of our fast linearized method, and begin with the consistency error of the standard L1 formula \((D^{\alpha }_\tau u)^n\) of (2.2).

Lemma 3.1

For \(v\in C^2(0,T]\) with \(\int _0^T t \,|v^{\prime \prime }(t)|\,\mathrm {d}s < \infty \), one has

where the L1 kernel \(a_{n-k}^{(n)}\) is defined by (2.3) and \(G^k :=2\int _{t_{k-1}}^{t_k}\left( t-t_{k-1}\right) \left| v^{\prime \prime }(t)\right| \,\mathrm {d}t\).

Proof

From Taylor’s formula with integral remainder, the truncation error of the standard L1 formula at time \(t=t_n\) is (see [14, Lemma 3.3])

where \(Q(t)=\omega _{2-\alpha }(t_n-t)\) and we use the notation of the proof of Lemma 2.1. By the error formula for linear interpolation [14, Lemma 3.1], we have

where the Peano kernel \(\chi _k(t,y)=\max \{t-y,0\}-\frac{t-t_{k-1}}{\tau _k}(t_{k}-y)\) satisfies

Observing that for each fixed \(n\ge 1\) the function Q is decreasing and \(Q^{\prime \prime }(t)=\omega _{-\alpha }(t_n-t)<0\), we arrive at the interpolation error \(\big (\widetilde{\Pi _{1,k}}Q\big )(t)\ge 0\) for \(1\le k\le n\), with

where Lemma 2.1 (ii) is used in the last inequality. Thus, (3.1) yields

and the desired result follows from the definition of \(G^k\). \(\square \)

Remark 3.2

Compared with the previous estimate in [14, Lemma 3.3], Lemma 3.1 removes the restriction of time-step ratios \(\rho _k\le 1\), which is an undesirable restriction on the mesh for problems that allow the rapid growth of the solution at the time far away from \(t=0\).

We now focus on the fast L1 method by taking the initial singularity into account. Here and hereafter, we denote \({\hat{T}}=\max \{1,T\}\) and \({\hat{t}}_{n}=\max \{1,t_{n}\}\) for \(1\le n\le N\). Next lemma presents the estimate of the global consistency error \(\sum _{j=1}^nP_{n-j}^{(n)}\big |\Upsilon ^j\big |\) accumulating from \(t=t_1\) to \(t=t_n\) with the discrete convolution kernel \(P_{n-j}^{(n)}\).

Lemma 3.3

Assume that \(v\in C^2((0,T])\) and that there exists a constant \(C_v>0\) such that

where \(\sigma \in (0,1)\cup (1,2)\) is a parameter. Let

denote the local consistency error of the fast L1 formula (2.13). Assume that the SOE tolerance error satisfies \(\epsilon \le \frac{1}{3}\min \{\omega _{1-\alpha }(T),3\alpha \,\omega _{2-\alpha }(T)\}\). Then the global consistency error can be bounded by

for \(1\le n\le N\). Moreover, if the mesh satisfies AssG, then

Proof

The main difference between the fast L1 formula (2.13) and the standard L1 formula (2.2) is that the convolution kernel is approximated by SOEs with an absolute tolerance error \(\epsilon \). Thus, comparing the standard L1 formula (2.2) with the corresponding fast L1 formula (2.13), by Lemma 2.2 and the regularity assumption (3.2) one has

Lemma 2.2 implies that \(\big |A_{n-k}^{(n)}-a_{n-k}^{(n)}\big |\le \epsilon \) for \(1\le k\le n-1\). Recalling that \(A_{0}^{(n)}=a_{0}^{(n)}\), one has

Then Lemma 3.1 and the regularity assumption (3.2) lead to

Now a triangle inequality gives

Multiplying the above inequality (3.4) by \(P_{n-j}^{(n)}\) and summing the index j from 1 to n, one can exchange the order of summation and apply the definition (2.11) of \(P_{n-j}^{(n)}\) to obtain

where the property (2.12) with \(\pi _A=3/2\) is used in the last inequality. If the SOE approximation error \(\epsilon \le \frac{1}{3}\min \{\omega _{1-\alpha }(T),3\alpha \,\omega _{2-\alpha }(T)\},\) Lemma 2.5 (II) and Lemma 2.1 (i) imply that

and then

Furthermore, the identical property (2.10) for the complementary kernel \(P^{(n)}_{n-j}\) gives

The regularity assumption (3.2) gives

Thus it follows from (3.5) that

The claimed estimate (3.3) is verified. In particular, if AssG holds, one has

where \(\beta =\min \{2-\alpha ,\gamma \sigma \}\). The final estimate follows since \(\tau _1^{\sigma }\le C_{\gamma }\tau ^{\gamma \sigma }\le C_{\gamma }\tau ^{\beta }\). \(\square \)

Next lemma describes the global consistency error of Newton’s linearized approach, which is smaller than that generated by the above L1 approximation. In addition, there is no error in the linearized approximation if \(f=f(u)\) is a linear function.

Lemma 3.4

Assume that \(v\in C([0,T])\cap C^2((0,T])\) satisfies the regularity condition (3.2), and the nonlinear function \(f=f(u)\in C^2({\mathbb {R}})\). Denote \(v^n=v(t_n)\) and the local truncation error

such that the global consistency error

Moreover, if the assumption AssG holds, one has

Proof

Applying the formula of Taylor expansion with integral remainder, one has

Under the regularity conditions, one has

and

Note that, Lemma 2.5 (II) and the definition (2.3) give \(A_{0}^{(k)}=a_{0}^{(k)}=\omega _{2-\alpha }(\tau _k)/\tau _k\), so the identical property (2.10) shows

Moreover, the bounded estimate (2.12) with \(\pi _A=\frac{3}{2}\) gives

Thus, it follows that

If AssG holds, one has

and

where \(\beta =\min \{2,2\gamma \sigma \}\). The second estimate follows since \(\tau _1^{2\sigma }\le C_{\gamma }\tau ^{2\gamma \sigma }\le C_{\gamma }\tau ^{\beta }\). \(\square \)

4 Unconditional Convergence

Assume that the time mesh fulfills Ass3 and AssG in the error analysis. We here extend the discrete \(H^2\) energy method in [11,12,13] to prove the unconditional convergence of discrete solutions to the two-level linearized scheme (2.6). In this section, \(K_0\), \(\tau ^{*}\), \(\tau _0^{*}\), \(\tau _1^{*}\), \(h_0\), \(\epsilon _0\) and any numeric subscripted c, such as \(c_0\), \(c_1\), \(c_2\) and so on, are fixed values, which are always dependent on the given data and the continuous solution, but independent of the time-space grid steps and the inductive index k in the mathematical induction as well. To make our ideas more clearly, four steps are listed to obtain unconditional error estimate as follows.

4.1 STEP 1: Construction of Coupled Discrete System

We introduce a function \(w:={\mathcal {D}}^{\alpha }_tu-f(u)\) with the initial-boundary values \(w(\varvec{x},0):=\Delta u^0(\varvec{x})\) for \(\varvec{x}\in \Omega \) and \(w(\varvec{x},t):=-f(0)\) for \(\varvec{x}\in \partial \Omega \). The problem (1.1a) can be formulated into

Let \(w_{h}^n\) be the numerical approximation of function \(W_{h}^n=w(\varvec{x}_h,t_n)\) for \(\varvec{x}_h\in {\bar{\Omega }}_h\). As done in subsection 2.2, one has an auxiliary discrete system: to seek \(\{u_h^{n},\,w_h^n\}\) such that

Obviously, by eliminating the auxiliary function \(w_h^n\) in above discrete system, one directly arrives at the computational scheme (2.6). Alternately, the solution properties of two-level linearized method (2.6) can be studied via the auxiliary discrete system (4.1)–(4.3).

4.2 STEP 2: Reduction of Coupled Error System

Let \({\tilde{u}}_{h}^n=U_{h}^n-u_{h}^n\), \({\tilde{w}}_{h}^n=W_{h}^n-w_{h}^n\) be the solution errors for \(\varvec{x}_h\in {\bar{\Omega }}_h\). The solution errors satisfy the governing equations as

where \(\xi _h^n\) and \(\eta _h^n\) denote temporal and spatial truncation errors, respectively, and

Acting the difference operators \(\Delta _h\) and \(D^{\alpha }_f\) on the Eqs. (4.4)–(4.5), respectively, gives

By eliminating the term \((D^{\alpha }_f\Delta _h{\tilde{u}}_h)^n\) in the above two equations, one gets

where the initial and boundary conditions are derived from the error system (4.4)–(4.6).

4.3 STEP 3: Continuous Analysis of Truncation Error

According to the first regularity condition in (1.3), one has

Since the spatial error \(\eta _h^n\) is defined uniformly at the time \(t=t_n\) [there is no temporal error in the Eq. (4.2)], we can define a continuous function \(\eta _{h}(t)\) for \(\varvec{x}_h=(x_i,y_j)\in \Omega _h,\)

such that \(\eta _h^n=\eta _h(t_n)\). The second condition in (1.3) implies

Hence, applying the fast L1 formula (2.13) and the equality (2.10), one has

Since the time truncation error \(\xi _h^n\) in (4.4) is defined uniformly with respect to grid point \(\varvec{x}_h\in {{\bar{\Omega }}}_h\), we can define a continuous function \(\xi ^n(\varvec{x})=\xi _1^n(\varvec{x})+\xi _2^n(\varvec{x})\), where \(\xi _1^n\), \(\xi _2^n\) denote the truncation errors of fast L1 formula and Newton’s linearized approach, namely,

such that \(\xi _h^n=\xi ^n(x_i,y_j)\) for \(\varvec{x}_h\in {{\bar{\Omega }}}_h\). By the Taylor expansion formula, one has

Applying Lemma 3.3 with the second and third regularity conditions in (1.3), we have

Similarly, one may have an integral expression of \(\Delta _h\big (\xi _{2}^n\big )_{ij}\) by using the Taylor expansion. Assuming \(f\in C^4({\mathbb {R}})\) and taking the maximum time-step size

we apply Lemma 3.4 with the second regularity condition in (1.3) to find,

Thus, the triangle inequality leads to

4.4 STEP 4: Error Estimate by Mathematical Induction

For a positive constant \(C_0\), let \({\mathcal {B}}(0,C_0)\) be a ball in the space of grid functions on \({{\bar{\Omega }}}_h\) such that

for any grid function \(\{\psi _h\}\in {\mathcal {B}}(0,C_0)\). Always, we need the following result to treat the nonlinear terms but leave the proof to Appendix A.

Lemma 4.1

Let \(F\in C^2({\mathbb {R}})\) and a grid function \(\{\psi _h\}\in {\mathcal {B}}(0,C_0)\). Thus there is a constant \(C_F>0\) dependent on \(C_0\) and \(C_{\Omega }\) such that,

Under the regularity assumption (1.3) with \(U_h^k=u(\varvec{x}_h,t_k)\), we define a constant

For a smooth function \(F\in C^2({\mathbb {R}})\) and any grid function \(\{v_h\}\in {\mathcal {V}}_{h}\), we denote the maximum value of \(C_F\) in Lemma 4.1 as \(c_0\) such that

Let \(c_5\) be the maximum value of \(C_{\Omega }\) to verify the embedding inequalities in (2.1), and

Also let

and

For the simplicity of presentation, we define the following notations for \(1\le k\le N\),

We now apply the mathematical induction to prove that

if the time-space grids and the SOE approximation satisfy

Here \(\tau _0^{*}\), \(\tau ^{*}\), \(h_0\) and \(\epsilon _0\) are fixed constants defined by (4.14)–(4.15). Note that, the restrictions in (4.17) ensures the error function \(\{{\tilde{u}}_h^{k}\}\in {\mathcal {B}}(0,1)\) for \(1\le k\le N\).

Consider \(k=1\) firstly. Since \({\tilde{u}}_h^0=0\), \(\{u_h^0\}\in {\mathcal {B}}(0,K_0)\subset {\mathcal {B}}(0,K_0+1)\) and the nonlinear term (4.7) gives \({\mathcal {N}}_h^1=f^{\prime }(u_h^{0}){\tilde{u}}_h^{1}\). For the function \(f\in C^3({\mathbb {R}})\), the inequality (4.13) implies

where the Eq. (4.5) and the estimate (4.10) are used. Taking the inner product of the Eq. (4.8) (for \(n=1\)) by \({\tilde{w}}_h^{1}\), one gets

because the zero-valued boundary condition in (4.9) leads to \(\big \langle \Delta _h{\tilde{w}}^{1},{\tilde{w}}^{1}\big \rangle \le 0\). With the view of Cauchy–Schwarz inequality and (4.18), one has

and then

Setting \(\tau _1\le \tau _0^{*}\le 1/\root \alpha \of {3\Gamma (2-\alpha )c_0}\), we apply Theorem 2.4 (discrete fractional Grönwall inequality) with \(\xi _1^1=\big \Vert (D^{\alpha }_f\eta )^1-\Delta _h\xi ^1\big \Vert \) and \(\xi _2^1=c_0c_1h^2\) to get

where the initial condition (4.9) and the error estimates (4.10)–(4.12) are used. Thus, the Eq. (4.5) and the inequality (4.10) yield the estimate (4.16) for \(k=1\),

Assume that the error estimate (4.16) holds for \(1\le k\le n-1\) (\(n\ge 2\)). Thus we apply the embedding inequalities in (2.1) to get

Under the priori settings in (4.17), we have the error function \(\{{\tilde{u}}_h^{k}\}\in {\mathcal {B}}(0,1)\), the discrete solution \(\{u_h^{k}\}\in {\mathcal {B}}(0,K_0+1)\) for \(1\le k\le n-1\), and the continuous solution

Then, for the function \(f\in C^4({\mathbb {R}})\), one applies the inequality (4.13) to find that

where \(0\le s\le 1\). From the expression (4.7) of \({\mathcal {N}}^n\) and the triangle inequality, one has

where the Eq. (4.5) and the estimate (4.10) are used.

Now, taking the inner product of (4.8) by \({\tilde{w}}_h^{n}\), one gets

because the zero-valued boundary condition in (4.9) leads to \(\big \langle \Delta _h{\tilde{w}}^{n},{\tilde{w}}^{n}\big \rangle \le 0\). Lemma 2.5 (I) says that the kernels \(A^{(n)}_{n-k}\) are decreasing, so the Cauchy–Schwarz inequality gives

Thus with the help of Cauchy–Schwarz inequality and (4.19), it follows from (4.20) that

Setting the maximum time-step size

we apply Theorem 2.4 with \(\xi _1^n=\big \Vert (D^{\alpha }_f\eta )^n-\Delta _h\xi ^n\big \Vert \) and \(\xi _2^n=(2K_0+3)c_0c_1h^2\) to get

where the initial data (4.9) and the three estimates (4.10)–(4.12) are used. Then the error equation (4.5) with (4.10) implies that the claimed error estimate (4.16) holds for \(k=n\),

The principle of induction and the third inequality in (2.1) give the following result.

Theorem 4.2

Assume that the nonlinear function \(f\in C^4({\mathbb {R}})\) and the solution of nonlinear subdiffusion problem (1.1) fulfills the regularity assumption (1.3) with a regularity parameter \(\sigma \in (0,1)\cup (1,2)\). Suppose that the SOE approximation error \(\epsilon \), the maximum time-step size \(\tau \), and the maximum spatial length h satisfy

where \(\epsilon _0\), \(\tau _0^{*}\), \(\tau ^{*}\) and \(h_0\) are fixed constants defined by (4.14)–(4.15). Then the discrete solution of two-level linearized fast scheme (2.6), on the nonuniform time mesh satisfying Ass3 and AssG, is unconditionally convergent in the maximum norm, that is,

for \(1\le k\le N\), where

The numerical solution achieves an optimal time accuracy of order \(O(\tau ^{2-\alpha })\) if the grading parameter is taken by \(\gamma \ge \max \{1,(2-\alpha )/\sigma \}\).

5 Numerical Experiments

Two numerical examples are reported here to support our theoretical analysis. The two-level linearized scheme (2.6) runs for solving the fractional Fisher equation

subject to zero-valued boundary data, with two different initial data and exterior forces:

-

(Example 1) \(u^0(\varvec{x})=\sin x \sin y\) and \(g(\varvec{x},t)=0\) such that no exact solution is available;

-

(Example 2) \(g(\varvec{x},t)\) is specified such that \(u(\varvec{x},t)=\omega _{\sigma }(t)\sin x \sin y\), \(0<\sigma <2\).

Note that, Example 2 with the regularity parameter \(\sigma \) is set to examine the sharpness of predicted time accuracy on nonuniform meshes. Actually, our present theory also fits for the semilinear problem with a nonzero force \(g(\varvec{x},t)\in C({\bar{\Omega }}\times [0,T])\).

In our simulations, the spatial domain \(\Omega \) is divided uniformly into M parts in each direction \((M_1 = M_2=M)\) and the time interval [0, T] is divided into two parts \([0, T_0]\) and \([T_0, T]\) with total \(N_T\) subintervals. According to the suggestion in [14], the graded mesh \(t_k=T_0\left( k/N\right) ^{\gamma }\) is applied in the cell \([0, T_0]\) and the uniform mesh with time step size \(\tau \ge \tau _{N}\) is used over the remainder interval. Given certain final time T and a proper number \(N_T\), here we would take \(T_0=\min \{1/\gamma ,T\}\), \(N=\big \lceil \frac{N_T}{T+1-\gamma ^{-1}}\big \rceil \) such that

Always, the absolute tolerance error of SOE approximation is set to \(\epsilon =10^{-12}\) such that the two-level L1 formula (2.5a) is comparable with the L1 formula (2.2) in time accuracy.

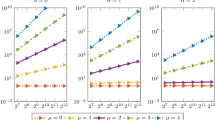

In Example 1, we investigate the asymptotic behavior of solution near \(t=0\) and the computational efficiency of the linearized method (2.6). Setting \(M = 100\), \(T=1/\gamma \) and \(N_T= 100\), Figs. 1 and 2 depict, in log–log plot, the numerical behaviors of first-order difference quotient \(\nabla _{\tau }u_h^n/\tau _n\) at three spatial points near the initial time for different fractional orders and grading parameters. Observations suggest that \(\log \left| u_t(\varvec{x},t)\right| \approx C_u(\varvec{x})+(\alpha -1)\log t \) as \(t\rightarrow 0\), and the solution is weakly singular near the initial time. Compared with the uniform grid, the graded mesh always concentrates much more points in the initial time layer and provides better resolution for the initial singularity.

To see the effectiveness of our linearized method (2.6), we also consider another linearized method by replacing the two-level fast L1 formula \((D^{\alpha }_fu_h)^n\) with the nonuniform L1 formula \((D^{\alpha }_\tau u_h)^n\) defined in (2.2). Setting \(\alpha = 0.5\), \(\gamma =2\), and \(M = 50\), the two schemes are run for Example 1 to the final time \(T = 50\) with different total numbers \(N_T\). Figure 3 shows the CPU time in seconds for both linearized procedures versus the total number \(N_T\) of subintervals. We observe that the proposed method has almost linear complexity in \(N_T\) and is much faster than the direct scheme using traditional L1 formula.

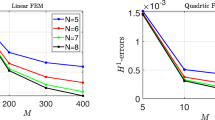

Since the spatial error \(O(h^{2})\) is standard, the time accuracy due to the numerical approximations of Caputo derivative and nonlinear reaction is examined in Example 2 with \(T=1\). The maximum norm error \(e(N,M)=\max _{1\le l\le N}\big \Vert U(t_l)-u^l\big \Vert _{\infty }.\) To test the sharpness of our error estimate, we consider three different scenarios, respectively, in Tables 1, 2, and 3:

-

Table 1: \(\sigma =2-\alpha \) and \(\gamma =1\) with fractional orders \(\alpha =0.4\), 0.6 and 0.8.

-

Table 2: \(\alpha =0.4\) and \(\sigma =0.4\) with grid parameters \(\gamma =1\), \(\frac{3}{4}\gamma _{\texttt {opt}}\), \(\gamma _{\texttt {opt}}\) and \(\frac{5}{4}\gamma _{\texttt {opt}}\).

-

Table 3: \(\alpha =0.4\) and \(\sigma =0.8\) with grid parameters \(\gamma =1\), \(\frac{3}{4}\gamma _{\texttt {opt}}\), \(\gamma _{\texttt {opt}}\) and \(\frac{5}{4}\gamma _{\texttt {opt}}\).

Table 1 lists the solution errors, for \(\sigma =2-\alpha \), on the gradually refined grids with the coarsest grid of \(N=50\). Numerical data indicates that the optimal time order is of about \(O(\tau ^{2-\alpha })\), which dominates the spatial error \(O(h^{2})\). Always, we take \(M=N\) in Tables 1, 2, and 3 such that \(e(N,M)\approx e(N)\). The experimental rate (listed as Order in tables) of convergence is estimated by observing that \(e(N)\approx C_u\tau ^{\beta }\) and then \(\beta \approx \log _{2}\left[ {e(N)}/{e(2N)}\right] .\)

Numerical results in Tables 2 and 3 (with \(\alpha =0.4\) and \(\sigma <2-\alpha \)) support the predicted time accuracy in Theorem 4.2 on the smoothly graded mesh \(t_k=T(k/N)^{\gamma }\). In the case of a uniform mesh \((\gamma =1)\), the solution is accurate of order \(O(\tau ^{\sigma })\), and the nonuniform meshes improve the numerical precision and convergence rate of solution evidently. The optimal time accuracy \(O(\tau ^{2-\alpha })\) is observed when the grid parameter \(\gamma \ge (2-\alpha )/\sigma \).

References

Baffet, D., Hesthaven, J.S.: A kernel compression scheme for fractional differential equations. SIAM J. Numer. Anal. 55(2), 496–520 (2017)

Brunner, H.: Collocation Methods for Volterra Integral and Related Functional Differential Equations, vol. 15. Cambridge University Press, Cambridge (2004)

Brunner, H., Ling, L., Yamamoto, M.: Numerical simulations of 2D fractional subdiffusion problems. J. Comput. Phys. 229, 6613–6622 (2010)

Jiang, S., Zhang, J., Zhang, Q., Zhang, Z.: Fast evaluation of the Caputo fractional derivative and its applications to fractional diffusion equations. Commun. Comput. Phys. 21(3), 650–678 (2017)

Jin, B., Li, B., Zhou, Z.: Numerical analysis of nonlinear subdiffusion equations. SIAM J. Numer. Anal. 56(1), 1–23 (2018)

Li, B., Sun, W.: Error analysis of linearized semi-implicit Galerkin finite element methods for nonlinear parabolic equations. Int. J. Numer. Anal. Model. 10, 622–633 (2013)

Li, B., Sun, W.: Unconditional convergence and optimal error estimates of a Galerkin-mixed FEM for incompressible miscible flow in porous media. SIAM J. Numer. Anal. 51, 1959–1977 (2013)

Li, B., Wang, J., Sun, W.: The stability and convergence of fully discrete Galerkin FEMs for porous medium flows. Commun. Comput. Phys. 15, 1141–1158 (2014)

Li, C., Yi, Q., Chen, A.: Finite difference methods with non-uniform meshes for nonlinear fractional differential equations. J. Comput. Phys. 316, 614–631 (2016)

Li, J.: A fast time stepping method for evaluating fractional integrals. SIAM J. Sci. Comput. 31, 4696–4714 (2010)

Liao, H.-L., Sun, Z.Z., Shi, H.S.: Error estimate of fourth-order compact scheme for solving linear Schrödinger equations. SIAM. J. Numer. Anal. 47(6), 4381–4401 (2010)

Liao, H.-L., Sun, Z.Z., Shi, H.S.: Maximum norm error analysis of explicit schemes for two-dimensional nonlinear Schrödinger equations. Sci. China Math. 40(9), 827–842 (2010). (in Chinese)

Liao, H.-L., Sun, Z.Z.: Maximum norm error bounds of ADI and compact ADI methods for solving parabolic equations. Numer. Methods PDEs 26, 37–60 (2010)

Liao, H.-L., Li, D., Zhang, J.: Sharp error estimate of nonuniform L1 formula for linear reaction–subdiffusion equations. SIAM J. Numer. Anal. 56(2), 1112–1133 (2018)

Liao, H.-L., McLean, W., Zhang, J.: A discrete Grönwall inequality with application to numerical schemes for reaction–subdiffusion problems. SIAM J. Numer. Anal. 57(1), 218–237 (2019)

McLean, W., Mustapha, K.: A second-order accurate numerical method for a fractional wave equation. Numer. Math. 105, 481–510 (2007)

McLean, W.: Fast summation by interval clustering for an evolution equation with memory. SIAM J. Sci. Comput. 34, A3039–A3056 (2012)

Mustapha, K., McLean, W.: Discontinuous Galerkin method for an evolution equation with a memory term of positive type. Math. Comput. 78(268), 1975–1995 (2009)

Mustapha, K., Mustapha, H.: A second-order accurate numerical method for a semilinear integro-differential equation with a weakly singular kernel. IMA J. Numer. Anal. 30(2), 555–578 (2010)

Stynes, M., O’Riordan, E., Gracia, J.L.: Error analysis of a finite difference method on graded meshes for a time-fractional diffusion equation. SIAM J. Numer. Anal. 55(2), 1057–1079 (2017)

Xu, Q., Hesthaven, J.S., Chen, F.: A parareal method for time-fractional differential equations. J. Comput. Phys. 293(C), 173–183 (2015)

Yan, Y., Sun, Z.Z., Zhang, J.: Fast evaluation of the Caputo fractional derivative and its applications to fractional diffusion equations: a second-order scheme. Commun. Comput. Phys. 22, 1028–1048 (2017)

Zhang, Y.N., Sun, Z.Z., Liao, H.-L.: Finite difference methods for the time fractional diffusion equation on nonuniform meshes. J. Comput. Phys. 265, 195–210 (2014)

Acknowledgements

The authors gratefully thank Professor Martin Stynes for his valuable discussions and fruitful suggestions during the preparation of this paper. Hong-lin Liao would also thanks for the hospitality of Beijing CSRC during the period of his visit.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Hong-lin Liao was partially supported by a Grant 1008-56SYAH18037 from NUAA Scientific Research Starting Fund of Introduced Talent, and a Grant DRA2015518 from 333 High-level Personal Training Project of Jiangsu Province. Jiwei Zhang was partially supported by NSFC under Grants 11771035, 91430216 and NSAF U1530401.

A Proof of Lemma 4.1

A Proof of Lemma 4.1

Proof

Consider \(F(\psi )=\psi \) firstly. It is easy to check that, at point \(\varvec{x}_h=(x_i,y_j)\in \Omega _h\),

so that

Similarly,

Moreover, one has

due to the fact

Noticing that \(\left\| \Delta _hv\right\| ^2=\Vert \delta _x^2v\Vert ^2+2\Vert \delta _x\delta _yv\Vert ^2+\Vert \delta _y^2v\Vert ^2\), we apply the embedding inequalities in (2.1) to obtain, also see [11, Lemma 2.2],

where the constant \(C_F\) is dependent on \(C_0\) and \(C_{\Omega }\). For the general case \(F\in C^2({\mathbb {R}})\), one has

The formula of Taylor expansion with integral remainder gives

such that \(\Vert \delta _xF(\psi )\Vert \le C_{F}\) and \(\Vert \delta _x^2F(\psi )\Vert \le C_{F}\,\). Therefore, simple calculations arrive at

By presenting similar arguments as those in the above simple case, it is straightforward to get claimed estimate and complete the proof. \(\square \)

Rights and permissions

About this article

Cite this article

Liao, Hl., Yan, Y. & Zhang, J. Unconditional Convergence of a Fast Two-Level Linearized Algorithm for Semilinear Subdiffusion Equations. J Sci Comput 80, 1–25 (2019). https://doi.org/10.1007/s10915-019-00927-0

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-019-00927-0