Abstract

We consider the nonconvex nonsmooth minimization problem over abstract sets, whose objective function is the sum of a proper lower semicontinuous biconvex function of the entire variables and two smooth nonconvex functions of their private variables. Fully exploiting the problem structure, we propose an alternating structure-adapted Bregman proximal (ASABP for short) gradient descent algorithm, where the geometry of the abstract set and the function is captured by employing generalized Bregman function. Under the assumption that the underlying function satisfies the Kurdyka–Łojasiewicz property, we prove that each bounded sequence generated by ASABP globally converges to a critical point. We then adopt an inertial strategy to accelerate the ASABP algorithm (IASABP), and utilize a backtracking line search scheme to find “suitable” step sizes, making the algorithm efficient and robust. The global O(1/K) sublinear convergence rate measured by Bregman distance is also established. Furthermore, to illustrate the potential of ASABP and its inertial version (IASABP), we apply them to solving the Poisson linear inverse problem, and the results are promising.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let \(X\subset {\mathbb {R}}^n\) and \(Y\subset {\mathbb {R}}^m\) be nonempty open convex sets whose closures are denoted by \({\overline{X}}\), \({\overline{Y}}\) respectively. Let \(F:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\cup \{+\infty \}\) and \(G:{\mathbb {R}}^m\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be proper lower semicontinuous nonconvex functions; and let \(H:{\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be a proper lower semicontinuous biconvex function. In this paper, we consider the optimization problem

which captures numerous applications, such as Poisson inverse problems (see, for instance, the recent review paper [6, 8] and references therein), nonnegative matrix factorization [1, 2], parallel magnetic resonance imaging [7], multi-modal learning for image classification [29].

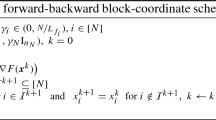

An intuitive method for solving problem (1) is the alternating minimization method (also known as the Gauss-Seidel method or the block coordinate descent method), which was originally proposed for the case \(X={\mathbb {R}}^n,~Y={\mathbb {R}}^m\),

If \(\Phi \) is continuously differentiable and strictly convex with respect to one argument while the other one is fixed, every limit point of the sequence \(\left\{ (x^k,y^k)\right\} \) generated by scheme (2) minimizes \(\Phi \) [9, 10]. A classical technique to relax the strict convexity condition is the proximal alternating minimization method [3, 4]. However, minimizing \(\Phi (\cdot , y)\) or \(\Phi (x, \cdot )\) which is the sum of two functions per iteration is not an easy task. To alleviate the computational cost, Bolte et al. [11] considered linearizing H and proposed the proximal alternating linearized minimization (PALM) algorithm,

The step sizes \(\varsigma _k\) and \(\varrho _k\) are computed according to

where “Lip(\(\cdot \))” denotes the Lipschitz constant. Under the assumption that \(\Phi \) satisfies the Kurdyka–Łojasiewicz (KL) property (see Def. 2.14 for its definition) and a few other conditions, PALM globally converges to a critical point of \(\Phi \). This scheme has great advantage when the functions F and G are simple in the sense that their proximal operators have closed-form expressions or can be evaluated via a fast scheme, while the stepsizes need to change with the iteration sequence.

When the coupling term H has a good structure, e.g., it is a quadratic function [11, 21, 27], the difficulties in solving the minimization problems of (2) and (3) may be caused by the component functions F and G. For this case, Nikolova and Tan [20] proposed the alternating structure-adapted proximal (ASAP) gradient descent algorithm,

where

ASAP (4) is much suitable for the case that the component function H has a good structure in the sense of being simple with respect to each variable x and y so that the proximal operators of \(H(\cdot ,y)\) and \(H(x,\cdot )\) have closed-form expressions.

ASAP (4) is a good alternative to PALM (3). Nevertheless, both algorithms are restricted to the case that there is no abstract sets, while in many applications, there are abstract sets in the model (1), i.e., either \(X\ne {\mathbb {R}}^n\) or \(Y\ne {\mathbb {R}}^m\), or both \(X\ne {\mathbb {R}}^n\) and \(Y\ne {\mathbb {R}}^m\) [6, 8]. Certainly, one can use the indicator functions \(\delta _{{\overline{X}}}(x)\) and \(\delta _{{\overline{Y}}}(y)\) to equivalently convert problem (1) to

where

However, in this case, the subproblems in ASAP algorithm (4) are constrained minimization problems, which unavoidably degrades its efficiency. Another key point of the ASAP algorithm is that, F and G are assumed to be globally Lipschitz smooth on the entire spaces, which is very restrictive and excludes many applications. Furthermore, the proximal parameters \({\bar{\varsigma }}\) and \({\bar{\varrho }}\) in (4), playing essential roles in numerical implementations, depend on the estimation of the Lipschitz constants of \(\nabla F\) and \(\nabla G\), which is not an easy task.

There are several techniques that can be utilized to handle some of these difficulties [6, 12, 13, 15, 25]. For example, [13, 15] chose Legendre or Bregman functions to absorb the abstract sets and hence automatically kept the iterates in the abstract sets; Bauschke et al. [6] introduced a NoLips algorithm, avoiding the dependence of the Lipschitz smoothness, which is then extended to the nonconvex case [5, 12, 17, 18, 25, 26, 28]. However, the convergence of this powerful algorithm is limited to the convex case, or the nonconvex case but with the assumptions that \(X={\mathbb {R}}^n, Y={\mathbb {R}}^m\). Moreover, for the nonconvex case, the Lipschitz smoothness is still required, which contradicts to the original main intention of NoLips algorithm.

In this paper, we propose an alternating structure-adapted Bregman proximal (ASABP) gradient descent algorithm,

where we choose the generalized Bregman functions h and \(\psi \), such that the pairs (F, h) and \((G,\psi )\) are GL-smad on X and Y (see Definition 2.6 and Definition 2.12). By associating the generalized Bregman functions h and \(\psi \) to the objective functions F and G in a suitable way, ASABP can absorb the abstract sets and result in the subproblems unconstrained ones. Merely assuming that the underlying function satisfies the Kurdyka–Łojasiewicz (KL) property yet without the Lipschitz smoothness, we establish the global convergence to a critical point. This result paves a new way for analyzing the NoLips algorithms in solving nonsmooth nonconvex optimization problems with abstract sets.

Numerically, the vectors \(x^k\) and \(y^k\) and the parameters \(\tau _k\) and \(\sigma _k\) influent the performance of the algorithm greatly. To improve the numerical performance, we merge an inertial strategy [22] into the proposed ASABP algorithm, leading to the inertial alternating structure-adapted Bregman proximal (IASABP) gradient descent algorithm (see Algorithm 2). The inertial stepsizes are judiciously selected by backtracking line search strategy. Under mild conditions, we can get the O(1/K) convergence rate of the sequence generated by IASABP measured by Bregman distance.

The rest of this paper is organized as follows. In Sect. 2, we list some basic notations and preliminary materials. In Sect. 3, we present the new alternating structure-adapted Bregman proximal (ASABP) gradient descent algorithm and its inertial version IASABP in detail, and give the convergence analysis results. To show the ability of IASABP and ASABP in dealing with non-Lipschitz problem with abstract sets, we implement them to solve the Poisson linear inverse problem and perform numerical experiment in Sect. 4. Finally, we make some conclusions in Sect. 5.

2 Preliminaries

In this section, we summarize some notations and elementary facts to be used for further analysis.

The Euclidean scalar product in \({\mathbb {R}}^d\) and its corresponding norm are respectively denoted by \(\langle \cdot ,\cdot \rangle \) and \(\Vert \cdot \Vert \). If \(F:{\mathbb {R}}^n\rightrightarrows {\mathbb {R}}^m\) is a point-to-set mapping, its graph is defined by

Similarly the graph of a real-extended-valued function \(f:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\cup \{+\infty \}\) is defined by

Given a function \(f:~{\mathbb {R}}^d\rightarrow {\mathbb {R}}\cup \{+\infty \}\), its domain is

and f is proper if and only if \(\text {dom}f\ne \emptyset \). The proximal operator associated with f is given by

The indicator function of a nonempty set C is defined by

The normal cone of a nonempty closed convex set \(C\subset {\mathbb {R}}^d\) at \(x\in C\) is defined by

and if \(x\not \in C\), we set \( N_{C}(x)=\emptyset \). For any subset \(C\subset {\mathbb {R}}^d\) and any \(x\in {\mathbb {R}}^d\), the distance from x to C, denoted by \(\hbox {dist}(x,C)\), is defined as

When \(C=\emptyset \), we set \(\hbox {dist}(x,C)=+\infty \) for all \(x\in {\mathbb {R}}^d\).

2.1 Subdifferentials and partial subdifferentials

Let us first recall a few definitions and results concerning subdifferentials of a given function.

Definition 2.1

[3] Let \(f:~{\mathbb {R}}^d\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be a proper lower semicontinuous function.

-

(i)

For each \(x\in \text {dom}f\), the Fréchet subdifferential of f at x, written as \({\hat{\partial }} f(x)\), is the set of vectors \(u\in {\mathbb {R}}^d\) which satisfy

$$\begin{aligned} \liminf _{y\ne x ~y\rightarrow x}\frac{f(y)-f(x)-\langle u,y-x\rangle }{\Vert y-x\Vert }\ge 0. \end{aligned}$$If \(x\notin \text {dom}f\), we set \({\hat{\partial }}f(x)=\emptyset \).

-

(ii)

The set

$$\begin{aligned} \partial f(x):=\left\{ u\in {\mathbb {R}}^d:\exists ~ x^k\rightarrow x,~f(x^k)\rightarrow f(x),~u^k\in {\hat{\partial }}f(x^k)\rightarrow u\right\} , \end{aligned}$$is the limiting subdifferential, or simply the subdifferential for short, of f at \(x\in \text {dom}f\).

Remark 2.2

[24] From Definition 2.1, we have the following conclusions.

-

(i)

\({\hat{\partial }}f(x) \subset \partial f(x)\) for each \(x\in {\mathbb {R}}^d\); both sets are closed; and \({\hat{\partial }}f(x)\) is convex while \(\partial f(x)\) is not necessarily convex.

-

(ii)

Let \((x^k,u^k)\in \text {Graph}~\partial f\) be a sequence that converges to (x, u). If \(f(x^k)\) converges to f(x) as \(k\rightarrow +\infty \), then \((x,u)\in \text {Graph}~\partial f\).

-

(iii)

(Fermat’s rule) A necessary but not sufficient condition for \(x\in {\mathbb {R}}^d\) to be a local minimizer of f is that x is a critical point, that is,

$$\begin{aligned} 0\in \partial f(x). \end{aligned}$$The set of critical points of f is denoted by crit f.

-

(iv)

If \(f:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\cup \{+\infty \}\) is proper lower semicontinuous and \(h:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\) is continuously differentiable, then for any \(x\in \text {dom}f\), \(\partial (f+h)(x)=\partial f(x)+\nabla h(x)\).

In first order alternating methods (e.g., scheme (5)), each iteration is equivalent to finding a minimizer of the auxiliary function, which is characterized by Fermat’s rule. Since Fermat’s rule is defined on subgradients, applying alternating schemes leads us to consider partial subgradients defined as follows.

Definition 2.3

[20] Given \(H:{\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\cup \{+\infty \}\), the subdifferential of H at \(\left( x^{+},y^{+}\right) \) is denoted by \(\partial H\left( x^{+},y^{+}\right) \). In addition, \(\partial _{x}H\left( x^{+},y^{+}\right) \) and \(\partial _{y}H\left( x^{+},y^{+}\right) \) are the partial subdifferentials of \(H(\cdot ,y^{+})\) at \(x^{+}\) and \(H(x^{+},\cdot )\) at \(y^{+}\) respectively.

Remark 2.4

Note that the desired critical point is characterized by its whole subgradient, namely \((0,0)\in \partial H(x^*,y^*)\). Let \(H:{\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be proper function. Then

holds naturally [24]. If the function H also satisfies

at least for \((x,y)=(0,0)\), then \(0\in \partial _x H(x^*,y^*)\times \partial _y H(x^*,y^*)\) means that \((x^*,y^*)\) is a critical point. (7) is automatically satisfied in the form as shown in the following example, which gives a sufficient condition.

Example 1

Let \(H:~{\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be of the form

where \(h:~{\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\) is continuously differentiable; \(\xi :~{\mathbb {R}}^n\rightarrow {\mathbb {R}}\cup \{+\infty \}\) and \(\eta :~ {\mathbb {R}}^m\rightarrow {\mathbb {R}}\cup \{+\infty \}\) are proper lower semicontinuous functions. Then, for any \((x, y) \in \textrm{dom}H=\textrm{dom}\xi \times \textrm{dom}\eta \), H satisfies (7).

More details and examples can refer to [20]. Besides condition (7), another property, closely linked to the closedness of the partial subdifferential, is crucial.

Definition 2.5

[20] (Parametric closedness of the partial subdifferentials) Let \(H:~{\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be a function, \(\{(x^k,y^k)\}_{k\in {\mathbb {N}}}\) and \((x^+,y^+)\in {\textrm{dom}}H\) such that for \(k\rightarrow +\infty \),

The x-partial subdifferentail of H is said to be parametrically closed at \((x^+,y^+)\) with respect to the sequence \(\{(x^k,y^k)\}_{k\in {\mathbb {N}}}\) if, for any sequence \(\{p_x^k\}_{k\in {\mathbb {N}}}\),

then \(p_x^+\in \partial _x H(x^+,y^+)\). Similarly, we can define the parametrically closeness of y-partial subdifferentail of H at \((x^+,y^+)\) with respect to the sequence \(\{(x^k,y^k)\}_{k\in {\mathbb {N}}}\). The x-partial and y-partial subdifferentials of H are said to be parametrically closed if they are parametrically closed at any point \((x^+,y^+)\in {\textrm{dom}}H\) with respect to any sequence satisfying (8).

Example 2

[20] Let \(H:~{\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be a biconvex function continuous on its domain. Then its partial subdifferentials are parametrically closed at any point \((x^+,y^+)\in {\textrm{dom}}H\).

2.2 Generalized Bregman function and Bregman distance

To define the Bregman distance, we first give the definition of generalized Bregman function.

Definition 2.6

(Generalized Bregman function) Let \(\Omega \) be a nonempty convex open subset of \({\mathbb {R}}^d\), and \({\overline{\Omega }}\) denotes its closure. Associated with \(\Omega \), a function \(h:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\cup \{+\infty \}\) is called a generalized Bregman function if it satisfies the following:

-

(i)

h is proper lower semicontinuous and convex with \(\textrm{dom} h\subset {\overline{\Omega }}\).

-

(ii)

\({\textrm{dom}}\partial h=\Omega =int~{\textrm{dom}}h\).

-

(iii)

h is strictly convex on \(int~{\textrm{dom}}h\).

-

(iv)

If \(\{x^k\}\in \Omega \) converges to \({\bar{x}}\in \partial \Omega \) (the boundary of \(\Omega \)), then \(\displaystyle \lim _{k\rightarrow +\infty }\langle \nabla h(x^k), u-x^k\rangle =-\infty \) for all \(u\in \Omega \).

We denote the class of generalized Bregman functions by \({\mathcal {G}}(\Omega )\).

Definition 2.7

(Bregman distance) Given \(h\in {\mathcal {G}}(\Omega )\), the Bregman distance associated to h, denoted by \(D_h\): \({\textrm{dom}}h\times int~{\textrm{dom}}h\rightarrow {\mathbb {R}}_{+}\), is defined by

Remark 2.8

We give some remarks on Definition 2.6 and Definition 2.7.

-

(i)

The class of functions which satisfy (i) and (ii) in Definition 2.6 are the Kernel generating distance [12]. And the class of functions satisfying (i)-(iii) are Legendre functions [6].

-

(ii)

Definition 2.6 (ii) is equivalent to the statement that h is essentially smooth [23, 25], which means that \(\partial h(x)=\{\nabla h(x)\}\) when \(x\in int~\textrm{dom}h\), while \(\partial h(x)=\emptyset \) when \(x\notin int~\textrm{dom}h\).

-

(iii)

Given \(h\in {\mathcal {G}}(\Omega )\). If h is continuous on \({\overline{\Omega }}\), Definition 2.6 (iv) holds if and only if \(\displaystyle \lim _{k\rightarrow +\infty }D_{h}(u, x^k)=+\infty \) for all \(u\in \Omega \) and all sequence \(\{x^k\}\in \Omega \) which converges to a point \({\bar{x}}\in \partial \Omega \).

-

(iv)

Note that the structural form of \(D_h\) is also useful when h is not convex, and still enjoys two simple but remarkable properties,

-

The three point identity: For any \(y,z\in int~\textrm{dom}h\) and \(x\in {\textrm{dom}}h\), we have

$$\begin{aligned} D_h(x,z)-D_h(x,y)-D_h(y,z)=\langle \nabla h(y)-\nabla h(z), x-y \rangle . \end{aligned}$$ -

Linear additivity: For any \(\alpha ,\beta \in {\mathbb {R}}\), and any functions \(h_1\) and \(h_2\), we have

$$\begin{aligned} D_{\alpha h_1+\beta h_2}(x,y)=\alpha D_{h_1}(x,y)+\beta D_{h_2}(x,y), \end{aligned}$$for any \((x,y)\in ({\textrm{dom}}h_1\bigcap {\textrm{dom}}h_2)^2\) such that both \(h_1\) and \(h_2\) are differentiable at y.

It is obvious that Bregman distance is, in general, not symmetric. It is thus natural to introduce a measure for the symmetry of \(D_h\).

Definition 2.9

[6] (Symmetry coefficient) Given \(h\in {\mathcal {G}}(\Omega )\), its symmetry coefficient is defined by

Remark 2.10

The symmetry coefficient has the following properties:

-

(i)

Clearly, the closer \(\alpha (h)\) gets to 1, the more symmetric \(D_h\) is.

-

(ii)

For any \(x,y \in int~{\textrm{dom}}h\),

$$\begin{aligned} \alpha (h) D_h(x,y)\le D_h(y,x) \le \frac{1}{\alpha (h)} D_h(x,y), \end{aligned}$$where we have adopted the convention that \(\frac{1}{0}=+\infty \) and \(+\infty \times r = +\infty \) for any \(r\ge 0\).

Lemma 2.11

Given \(h\in {\mathcal {G}}(\Omega )\), for any proper lower semicontinuous convex function \(\Gamma :{\mathbb {R}}^d\rightarrow {\mathbb {R}}\cup \{+\infty \}\) and any \(z \in int~{\textrm{dom}}h\), if

then for any \(x\in {\textrm{dom}}h\),

2.3 Generalized L-smooth adaptable and extended descent lemma

We denote \({\mathcal {G}}(f,h)\) the set of pair of functions (f, h) satisfies

-

(i)

\(h\in {\mathcal {G}}(\Omega )\),

-

(ii)

\(f:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\cup \{+\infty \}\) is proper lower semicontinuous nonconvex with \(\textrm{dom}h\subset {\textrm{dom}}f\), which is continuously differentiable on \({\overline{\Omega }}\).

Definition 2.12

(Generalized L-smooth adaptable) A pair of functions \((f,h)\in {\mathcal {G}}(f,h)\) is called generalized L-smooth adaptable (GL-smad) on \(\Omega \) if there exists \(L>0\) such that \(Lh+f\) and \(Lh-f\) are convex on \(\Omega \).

From the above definition we immediately obtain the two-sided descent lemma, which complements and extends the NoLips descent lemma derived in [6].

Lemma 2.13

(Extended descent lemma) The pair of functions \((f,h)\in {\mathcal {G}}(f,h)\) is GL-smad on \(\Omega \) if and only if

Due to the structural form of \(D_f\), the extended descent lemma reads equivalently as

When the function f is assumed to be convex, the convexity condition of \(Lh+f\) naturally holds. In this case, the NoLips descent lemma given in [6] is recovered. When \(h(z)=\frac{1}{2}\Vert z\Vert ^2\) and consequently \(D_h(x,y)=\frac{1}{2}\Vert x-y\Vert ^2\), Lemma 2.13 would be reduced to the descent lemma [19],

2.4 Kurdyka–Łojasiewicz property

Let \(f:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be a proper lower semicontinuous function. For \(-\infty<\eta _1<\eta _2\le +\infty \), define

Definition 2.14

[4] (Kurdyka–Łojasiewicz property) The function f is said to have the Kurdyka–Łojasiewicz (KL) property at \({\bar{x}}\in \text {dom}\partial f\) if there exist \(\eta \in (0,+\infty ]\), a neighborhood U of \({\bar{x}}\) and a continuous concave function \(\varphi :[0,\eta )\rightarrow {\mathbb {R}}_+\) such that

-

(i)

\(\varphi (0)=0\);

-

(ii)

\(\varphi \) is \(C^1\) on \((0,\eta )\);

-

(iii)

for all \(s\in (0,\eta )\), \({\varphi }'(s)>0\);

-

(iv)

for all x in \(U\cap [f({\bar{x}})<f<f({\bar{x}})+\eta ]\), the Kurdyka–Łojasiewicz inequality holds, i.e.,

$$\begin{aligned} {\varphi }'(f(x)-f({\bar{x}}))\hbox {dist}(0,\partial f(x))\ge 1. \end{aligned}$$

Remark 2.15

-

(i)

If f satisfies the KL property at each point of \(\text {dom}\partial f\), then f is called a KL function;

-

(ii)

For convenience, denote the class of functions which satisfy (i), (ii) and (iii) in Definition 2.14 as \(\Phi _\eta \).

The following lemma gives the important uniformized KL property. More properties and examples can refer to [3, 11, 14].

Lemma 2.16

[11] (Uniformized KL property) Let C be a compact set and let \(f:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be a proper lower semicontinuous function. Assume that f is constant on C and satisfies the KL property at each point of C. Then, there exist \(\varepsilon >0\), \(\eta >0\) and \(\varphi \in \Phi _\eta \) such that for any \(\bar{x} \in C\) and all x in the following intersection,

one has,

3 Algorithms and convergence

In this section, we first describe our alternating structure-adapted Bregman proximal (ASABP) gradient descent algorithm and its inertial version (IASABP algorithm) for solving

where \(X\subset {\mathbb {R}}^n\) and \(Y\subset {\mathbb {R}}^m\) are open convex sets with nonempty interiors, and \({\overline{X}}\) and \({\overline{Y}}\) are their closures, respectively. Then we present their convergence rate results and establish the global convergence result for ASABP algorithm. Throughout the rest of this section, we suppose the following assumption, which guarantees that the algorithms are well-defined.

Assumption 3.1

-

(i)

\(\inf \{\Phi (x,y): (x,y)\in {\overline{X}}\times {\overline{Y}}\}>-\infty \) and \(\Phi \) is coercive.

-

(ii)

\(h\in {\mathcal {G}}(X)\) and \(\psi \in {\mathcal {G}}(Y)\) such that the pairs \((F,h)\in {\mathcal {G}}(F,h)\) and \((G,\psi )\in {\mathcal {G}}(G,\psi )\) are GL-smad on X and Y with coefficients \(L_h\) and \(L_{\psi }\) respectively.

-

(iii)

For the sequence \(\{x^k\}_{k\in {\mathbb {N}}}\in int~{\textrm{dom}}h\) and \(x\in {\textrm{dom}}h\), \(\Vert x^k-x\Vert \rightarrow 0 \Longleftrightarrow D_{h}(x,x^k)\rightarrow 0\). Similarly, \(\Vert y^k-y\Vert \rightarrow 0 \Longleftrightarrow D_{\psi }(y,y^k)\rightarrow 0\) for any sequence \(\{y^k\}_{k\in {\mathbb {N}}}\in int~{\textrm{dom}}\psi \) and \(y\in \textrm{dom}\psi \),.

-

(iv)

\(H:{\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\cup \{+\infty \}\) is a proper lower semicontinuous biconvex function.

3.1 The ASABP and IASABP algorithm

We now first introduce the alternating structure-adapted Bregman proximal (ASABP) gradient descent algorithm.

Under Assumption 3.1, \(x^{k+1}\) will be automatically in X and \(y^{k+1}\) will be automatically in Y. Hence, the problems (9) and (10) are essentially unconstrained optimization problems, which in many cases enable these problems to possess closed-form solutions.

Note that the efficiency of ASABP depends on the vectors \(x^k\) and \(y^k\), and the parameters \(\tau _k\) and \(\sigma _k\). For the first order algorithms, it was found that extrapolation can accelerate the convergence, and the adaptive line search scheme can alleviate the difficulty in choosing the parameters. Hence, we give the inertial variant of ASABP algorithm, i.e, the inertial alternating structure-adapted Bregman proximal gradient descent algorithm, which we denote as IASABP for short.

Remark 3.1

-

(i)

Notice that (11) and (13) hold naturally when taking \(\alpha _k^0 = 0\) and \(\beta _k^0=0\) for all \(k\ge 0\). In this case, the inertial strategy makes no difference, i.e., \({\bar{x}}^k=x^k,~{\bar{y}}^k=y^k\). IASABP algorithm then reduces to the ASABP algorithm.

-

(ii)

(Finite termination of line search) There exists \(J_{k}\in {\mathbb {N}}\) in step (11) such that for any \(j\ge J_{k}\), \(\alpha _k=\eta ^j\alpha ^0_k\) satisfies

$$\begin{aligned} D_h(x^k,x^{k}+\alpha _k(x^{k}-x^{k-1}))\le \theta C_k^1 D_h(x^k,x^{k-1}). \end{aligned}$$Similarly, we can get \(\beta _k\) via finite number of step (13).

We then verify that the ASABP and IASABP algorithms are well defined. To this end, choose arbitrarily \(u\in int~{\textrm{dom}}h\) and stepsize \(0<\tau < \frac{1}{L_h}\). Fixed \(y\in int~{\textrm{dom}}\psi \), we define the Bregman proximal mapping,

where the second equality follows from the fact that \(\textrm{dom}h\subset {\overline{X}}\). Observe that \(x^{k+1}\in {\mathcal {B}}_{\tau _k}(u^k; y^k)\), where \(u^k=x^k\) in ASABP algorithm and \(u^k={\bar{x}}^k\) for IASABP algorithm. Hence from Proposition 3.2, (9) and (12) are well defined, and so are the (10) and (14).

Proposition 3.2

Suppose that Assumption 3.1 holds. Let \(y\in int~\textrm{dom}\psi \), \(u\in int~{\textrm{dom}}h\) and \(0<\tau < \frac{1}{L_h}\). Then the set \({\mathcal {B}}_{\tau }(u; y)\) is a nonempty compact single valued mapping from \(\hbox {int}~{\textrm{dom}}h\) to \(\hbox {int}~{\textrm{dom}}h\).

Proof

Fix any \(y\in int~{\textrm{dom}}\psi \), \(u\in int~{\textrm{dom}}h\) and \(0<\tau <\frac{1}{L_h}\). For any \(x\in {\mathbb {R}}^n\), we define

so that \({\mathcal {B}}_{\tau }(u; y)=\arg \min _{x\in {\mathbb {R}}^n}\Phi _{h}(x, u; y)\). Since h is strictly convex and H is biconvex as assumed in Assumption 3.1, the objective \(\Phi _{h}(x, u; y)\) have at most one minimizer. Taking into account that (F, h) is GL-smad with \(L_h\),

According to Assumption 3.1 (i), it holds that

Since \(\Phi _h\) is also proper and lower semicontinuous, invoking Weierstrass’s theorem, it follows that the set \({\mathcal {B}}_{\tau }(u; y)\) is nonempty and compact. Furthermore, the optimality condition for \({\mathcal {B}}_{\tau }(u; y)\) implies that \(\partial h({\mathcal {B}}_{\tau }(u; y))\) must be nonempty, and Definition 2.6 indicates that \({\mathcal {B}}_{\tau }(u; y)\) belongs to \(\hbox {int}~{\textrm{dom}}h\). \(\square \)

3.2 Rate of convergence

In this subsection, we establish the global sublinear rate of convergence for ASABP and IASABP, measured by Bregman distance. Using the extended descent lemma, we obtain the following key estimation for the objective function \(\Phi \), which will play an important role in deriving our main convergence results.

Lemma 3.3

Suppose that Assumption 3.1 holds. Let \(\left\{ (x^k,y^k)\right\} _{k\in {\mathbb {N}}}\) be the sequence generated by IASABP, then we have

where \(\alpha (h)\) and \(\alpha (\psi )\) are the symmetric coefficients of \(h,~\psi \) respectively.

Proof

Applying Lemma 2.11 with \(\Gamma (x)=\tau _k\left( H(x, y^{k})+\langle \nabla F({\bar{x}}^{k}), x-{\bar{x}}^k\rangle \right) \), it yields that for any \(x\in \textrm{dom}h\),

where the second inequality follows from Lemma 2.13 and the last inequality follows from the GL-smad property of (F, h). Particularly, taking \(x=x^k\), together with \(0<\tau _k<\frac{1}{L_h}\) and the nonnegativity of Bregman distance \(D_h\), we obtain

If \({\bar{x}}^k=x^k\) we get \(D_h(x^k,{\bar{x}}^k)=0\). Hence,

If \({\bar{x}}^k\ne x^k\), according to (11), we have \(D_{h}(x^k, {\bar{x}}^{k})\le \theta C_k^1 D_h(x^k,x^{k-1})\). Recalling the definition of \(C_k^1\), we have

Consequently, for any case we conclude that for any \(k\in {\mathbb {N}}\),

We can obtain the following inequality in a similar way,

Adding (15) and (16) and we can obtain that for any \(k\in {\mathbb {N}}\),

Combining with Remark 2.10 (ii) completes the proof. \(\square \)

Equipped with Lemma 3.3, we introduce the following useful notations,

And we define an auxiliary sequence by

where \(M_1=\frac{1}{2}\left( \frac{\theta }{{\underline{\tau }}}+\frac{\alpha (h)}{{\bar{\tau }}}\right) \) and \(M_2=\frac{1}{2}\left( \frac{\theta }{{\underline{\sigma }}}+\frac{\alpha (\psi )}{{\bar{\sigma }}}\right) \). Choosing \(\theta < \min \left\{ \frac{{\underline{\tau }}\alpha (h)}{{\bar{\tau }}},\frac{{\underline{\sigma }}\alpha (\psi )}{{\bar{\sigma }}}\right\} \), we have

Lemma 3.4

Suppose that Assumption 3.1 holds. Let \(\left\{ z^k_{\mathfrak {I}}= (x^k,y^k)\right\} _{k\in \mathbb {N}}, \left\{ \bar{z}^k_{\mathfrak {I}}=(\bar{x}^k,\bar{y}^k) \right\} _{k\in \mathbb {N}}\) be the sequences generated by IASABP.

-

(i)

The sequence \(\{J(u_{\small {{\mathfrak {I}}}}^k)\}_{k\in {\mathbb {N}}}\) is monotonically nonincreasing and in particular for all \(k\ge 0\),

$$\begin{aligned} \begin{aligned}&\delta _{{\mathfrak {I}}}\left( D_h(x^k,x^{k-1})+D_\psi (y^k,y^{k-1})+D_{h}( x^{k+1},x^k)+D_{\psi }( y^{k+1},y^k)\right) \\&\quad \le J(u_{\small {{\mathfrak {I}}}}^k)-J(u_{\small {{\mathfrak {I}}}}^{k+1}), \end{aligned} \end{aligned}$$(18)where \(\delta _{{\mathfrak {I}}}=\min \{\delta _1,~\delta _2\}\) and \(\delta _1, \delta _2\) are defined as (17). Moreover, \(\left\{ J(u_{\small {{\mathfrak {I}}}}^k)\right\} _{k\in {\mathbb {N}}}\) converges to some finite value, denoted by \(J^*\).

-

(ii)

We have

$$\begin{aligned} \sum _{k=0}^{+\infty }\left( D_{h}( x^{k+1},x^k)+D_{\psi }( y^{k+1},y^k)\right) <+\infty , \end{aligned}$$and hence \(\displaystyle \lim _{k\rightarrow +\infty }D_{h}( x^{k+1},x^k)=0\), \(\displaystyle \lim _{k\rightarrow +\infty }D_{\psi }( y^{k+1},y^k)=0\). Moreover, \(\displaystyle \lim _{k\rightarrow +\infty }\Vert z^{k+1}_{{\mathfrak {I}}}-z^k_{{\mathfrak {I}}}\Vert =0\) and \(\displaystyle \lim _{k\rightarrow +\infty }\Vert z^{k}_{{\mathfrak {I}}}-{\bar{z}}^k_{{\mathfrak {I}}}\Vert =0\).

-

(iii)

(Convergence rate) For any \(K\ge 0\), it holds that

$$\begin{aligned} \min _{0\le k\le K}\left\{ D_{h}( x^{k+1},x^k)+D_{\psi }( y^{k+1},y^k)\right\} \le \frac{1}{\delta _{{\mathfrak {I}}}(K+1)}\left( J(u_{\small {{\mathfrak {I}}}}^0)-J^*\right) . \end{aligned}$$

Proof

(i) Choose \(\theta < \min \left\{ \frac{{\underline{\tau }}\alpha (h)}{{\bar{\tau }}},\frac{{\underline{\sigma }}\alpha (\psi )}{{\bar{\sigma }}}\right\} \) and \(\delta _1,~\delta _2\) as in (17), then (18) follows immediately from Lemma 3.3. Meanwhile, \(\Phi \) is assumed to be bounded below and J is also bounded below. Hence \(\left\{ J(u_{\small {{\mathfrak {I}}}}^k)\right\} _{k\in {\mathbb {N}}}\) converges to some real number \(J^*\).

(ii) Using the inequality (18), we have for all \(k\ge 0\),

For \(K\ge 0\), summing from \(k=0\) to K and using the statement (i) yields

Taking the limit as \(K\rightarrow +\infty \) leads to

which deduces that \(\lim _{k\rightarrow +\infty }\Vert z^{k}_{{\mathfrak {I}}}-z^{k+1}_{{\mathfrak {I}}}\Vert =0\). And from IASABP algorithm, we have \(\displaystyle \lim _{k\rightarrow +\infty }\Vert z^{k}_{{\mathfrak {I}}}-{\bar{z}}^k_{{\mathfrak {I}}}\Vert =0\).

(iii) Using (19) yields

Hence, we obtain

\(\square \)

Similar to Lemma 3.3 and Lemma 3.4, we have the following sublinear rate of convergence for ASABP.

Lemma 3.5

Suppose that Assumption 3.1 holds. Let \(\left\{ (x^k,y^k)\right\} _{k\in {\mathbb {N}}}\) be the sequence generated by ASABP. Then

-

(i)

The sequence \(\left\{ \Phi (x^k,y^k)\right\} _{k\in {\mathbb {N}}}\) is monotonically nonincreasing and in particular for all \(k\ge 0\),

$$\begin{aligned} \begin{aligned} \Phi (x^{k+1},y^{k+1})&\le \Phi (x^{k},y^{k})-\frac{1}{\tau _k} D_{h}(x^k,x^{k+1})-\frac{1}{\sigma _k}D_{\psi } (y^k,y^{k+1})\\&\le \Phi (x^{k},y^{k} )-\frac{1}{{\bar{\tau }}} D_{h}(x^k,x^{k+1})-\frac{1}{{\bar{\sigma }}} D_{\psi }(y^k,y^{k+1})\\&\le \Phi (x^{k},y^{k})-\delta \left( D_{h}( x^k,x^{k+1})+D_{\psi }(y^k,y^{k+1})\right) , \end{aligned} \end{aligned}$$(20)where \(\delta =\min \{\frac{1}{{\bar{\tau }}},\frac{1}{{\bar{\sigma }}}\}\). And \(\left\{ \Phi (x^k,y^k)\right\} _{k\in {\mathbb {N}}}\) converges to some finite value, denoted by \(\Phi ^*\).

-

(ii)

(Convergence rate) For any \(K\ge 0\), it holds that

$$\begin{aligned} \min _{0\le k\le K}\left\{ D_{h}( x^k, x^{k+1})+D_{\psi }( y^k, y^{k+1})\right\} \le \frac{1}{\delta (K+1)}\left( \Phi (x^0,y^0)-\Phi ^*\right) . \end{aligned}$$

3.3 Global convergence of ASABP

The analysis in Sect. 3.2 can only give the monotonically nonincreasing property of the potential function of ASABP and IASABP algorithms. In this subsection, without the Lipschitz continuity requirement on the gradients of F and G, we aim to prove that the sequence generated by ASABP algorithm is a Cauchy sequence, which hence converges to a critical point of model (1). It is worth noticing that all the NoLips algorithms for the nonconvex case [5, 12, 18, 25], the global convergence results were limited to the case that \(X= {\mathbb {R}}^n,~Y={\mathbb {R}}^m\) and the Lipschitz smoothness condition holds.

We start our analysis by specializing the assumptions on the coupling term H.

Assumption 3.2

-

(i)

The subdifferential of H obeys

$$\begin{aligned} \partial _xH(x,y)\times \partial _yH(x,y)\subset \partial H(x,y),~~~\forall (x,y)\in {\textrm{dom}}H. \end{aligned}$$ -

(ii)

The domain of H is closed.

Moreover, H satisfies one of the following conditions.

-

(iii)

H is continuous on its domain.

-

(iv)

\(H:~{\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\cup \{+\infty \}\) has the form

$$\begin{aligned} H(x,y)=\phi (x,y)+w(x), \end{aligned}$$where \(w:~{\mathbb {R}}^n\rightarrow {\mathbb {R}}\cup \{+\infty \}\) is continuously differentiable on its domain; \(\phi :~{\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\cup \{+\infty \}\) is continuous on \({\textrm{dom}}H\), such that \(\phi (\cdot ,y)\) is differentiable on \({\mathbb {R}}^n\) of gradient \(\nabla _x\phi \) for any \( y\in {\mathcal {D}}_y=\{y\in {\mathbb {R}}^m|(x,y)\in \textrm{dom}H\}\ne \emptyset \). For each bounded subset \({\mathcal {B}}_x\times {\mathcal {B}}_y\subset {\textrm{dom}}H\), there exists \(\xi >0\) such that, for any \({\bar{x}}\in {\mathcal {B}}_x\) and any \((y,{\bar{y}})\in {\mathcal {B}}_y^2\),

$$\begin{aligned} \Vert \nabla _x\phi ({\bar{x}},y)-\nabla _x\phi ({\bar{x}},{\bar{y}})\Vert \le \xi \Vert y-{\bar{y}}\Vert . \end{aligned}$$

Remark 3.6

We give some remarks on Assumption 3.2.

-

(i)

Since F and G are continuous differentiable on \({\overline{X}}\) and \({\overline{Y}}\) respectively, Assumption 3.2 (i) is equivalent to having \(\partial _x\Phi (x,y)\times \partial _y\Phi (x,y)\subset \partial \Phi (x,y)\) for any \((x,y)\in {\overline{X}}\times {\overline{Y}}\cap \textrm{dom}H\), which is nonempty and closed.

-

(ii)

Assumption 3.2 (iii) and (iv) aim at guaranteeing that the cluster points of the sequence generated by the algorithm are critical points. However, they are sufficient but not necessary, and the results presented in this paper can be established for a broader class of functions H.

-

(iii)

Note that for Assumption 3.2 (iv), the regularity assumptions on H are not symmetric in x and y. In particular, \(\phi (x,\cdot )\) does not need to be differentiable.

Theorem 3.7

Suppose that Assumptions 3.1 and 3.2 hold. If the sequence \(\left\{ z^k=(x^k,y^k)\right\} _{k\in {\mathbb {N}}}\) generated by ASABP converges to \({\hat{z}}=({\hat{x}},{\hat{y}})\), then it is a critical point of the problem (1) with the abstract sets \({\overline{X}},~{\overline{Y}}\), i.e.,

where \(N_{{\overline{X}}\times {\overline{Y}}}({\hat{z}})\) denotes the normal cone of \({\overline{X}}\times {\overline{Y}}\) at \({\hat{z}}\).

Proof

The first order optimality condition of the subproblem (9) shows that there exists \({\hat{H}}_{x}^{k+1}\in \partial _xH(x^{k+1},y^k)\),

or equivalently,

We first investigate the behavior of the following term,

Fix some arbitrary \(x\in {\overline{X}}\). We consider the following two possible cases.

The fist case is that the sequence \(\{D_{h}(x,x^k)\}\) converges. For this case, the three point identity (see Remark 2.8) yields

Combining with the facts that H is biconvex and F is continuously differentiable on \({\overline{X}}\), passing to the limit in the optimality condition (21), we obtain

i.e.,

In particular, if \(x^k\rightarrow {\hat{x}}\in X\), one has that \(\lim _{k\rightarrow +\infty }\Vert \nabla h(x^{k+1})-\nabla h(x^k)\Vert =0\) because of the continuity of \(\nabla h\) on X. The problem (1) in this case is equivalent to an unconstrained problem and (22) holds naturally. Certainly, the sequence \(\{D_{h}(x,x^k)\}\) must be convergent for this situation.

The second case is that the sequence \(\{D_{h}(x,x^k)\}\) fails to converge. For this case, since \(\{D_{h}(x,x^k)\}\) is nonnegative, it cannot be monotonically decreasing. Thus, there are infinitely many \(k\in {\mathbb {N}}\) such that \(D_h(x,x^{k+1})\ge D_{h}(x,x^k)\). Let \(\{k_{l}\}\subset {\mathbb {N}}\) be a subsequence with \(D_h(x,x^{k_{l}+1})\ge D_{h}(x,x^{k_{l}})\) for arbitrary l. Then for all \(x\in {\overline{X}}\),

This means that

Hence,

Since the corresponding subsequence also converges to \({\hat{x}}\), passing to the limit \(l\rightarrow +\infty \) in the optimality condition, \(0\in \partial _xH({\hat{z}})+ \nabla F({\hat{x}})+N_{{\overline{X}}}({\hat{x}})\) holds. Especially, if \(x^k\rightarrow {\hat{x}}\in \partial X\) (the boundary of X), for any \(x\in X\), the sequence \(\{D_{h}(x,x^k)\}\) fails to converge due to Remark 2.8 (iii). It provides a sufficient condition for this case.

Similarly, we have

Combining with Assumption 3.2, we complete the proof. \(\square \)

Denote the set of cluster points of the sequence \(\left\{ z^k=(x^k,y^k)\right\} _{k\in {\mathbb {N}}}\) generated by ASABP by \(\Omega ^*\), i.e.,

Lemma 3.8

Suppose that Assumptions 3.1 and 3.2 hold. Let \(\left\{ z^k=(x^k,y^k)\right\} _{k\in {\mathbb {N}}}\) be the sequence generated by ASABP which is assumed to be bounded. Then the following assertions hold.

-

(i)

\(\Omega ^*\) is a nonempty compact set, and

$$\begin{aligned} \lim _{k\rightarrow +\infty }{\textrm{dist}}(z^k,\Omega ^*)=0. \end{aligned}$$(23) -

(ii)

\(\lim _{k\rightarrow +\infty }\Phi (z^k)=\Phi (z^*)\) and \(\Phi \) is constant on \(\Omega ^*\).

Proof

(i) \(\Omega ^*\) is nonempty because the sequence \(\left\{ z^k\right\} _{k\in {\mathbb {N}}}\) generated by ASABP is bounded. By definition, it is trivial that the set \(\Omega ^*\) is compact. We now prove (23) by contradiction. Suppose that \(\{{\textrm{dist}}(z^k,\Omega ^*)\}_{k\in {\mathbb {N}}}\) does not converge to zero. Then there exist \(C>0\) and an increasing sequence \(\{k_j\}_{j\in {\mathbb {N}}}\) such that, for any \(j\in {\mathbb {N}}\), \({\textrm{dist}}(z^{k_j},\Omega ^*)>C\). However, since \(\left\{ z^{k_j}\right\} _{j\in \mathbb {N}}\) is a subsequence of the bounded sequence \(\left\{ z^{k}\right\} _{k\in {\mathbb {N}}}\), it has a convergent subsequence \(\left\{ z^{k_{j_n}} \right\} _{n\in \mathbb {N}}\) with limit \(z^*\in \Omega ^*\). Then,

which leads to a contradiction.

(ii) Taking an arbitrary point \(z^*\in \Omega ^*\), then there exists a subsequence \(\left\{ z^{k_j}\right\} _{j\in {\mathbb {N}}}\) of \(\left\{ z^{k}\right\} _{k\in {\mathbb {N}}}\) converging to \(z^*\). Notice that \((x^{k_j},y^{k_j})\in {\overline{X}}\times {\overline{Y}}\cap {\textrm{dom}}H\), which is assumed to be closed. Hence, \((x^*,y^*)\in {\overline{X}}\times {\overline{Y}}\cap {\textrm{dom}}H\), and by continuity one has

Since \(\left\{ \Phi (z^{k})\right\} _{k\in {\mathbb {N}}}\) is a convergent sequence by Lemma 3.5, this proves assertion (ii). \(\square \)

To establish the convergence of the sequence generated by ASABP, we need some extra assumptions on the Bregman functions h and \(\psi \), which are also required in [5, 12, 18, 25].

Assumption 3.3

-

(i)

\(h,~\psi \) are strongly convex functions with coefficients \(\mu _h,~\mu _{\psi }\) respectively.

-

(ii)

\(\nabla h,~\nabla \psi \) are Lipschitz continuous with coefficients \(L_1,~L_2\) respectively on any bounded subsets.

Remark 3.9

-

(i)

It is worth noticing that Assumption 3.3 (i) is not actually stringent. For example, Burg’s entropy \(h(x)=-\log x\) with \({\textrm{dom}}h=(0,+\infty )\) is a generalized Bregman function which is not strongly convex. However, regularized Burg’s entropy \({\hat{h}}(x)=-\mu \log x+\frac{\sigma }{2}x^2\) with \(\sigma , \mu >0\), is strongly convex with coefficient \(\sigma \) on \(\textrm{dom}h=(0,+\infty )\).

-

(ii)

Combining linear additivity of Bregman distance (see Remark 2.8), Assumption 3.1 (ii) and Assumption 3.3 (ii), \(\nabla F\) is Lipschitz continuous with coefficient \(L_{h}L_{1}\) on any bounded subset in \({\overline{X}}\). Similarly, \(\nabla G\) is Lipschitz continuous with coefficient \(L_{\psi }L_2\) on any bounded subset in \({\overline{Y}}\).

Proposition 3.10

Suppose that Assumptions 3.1, 3.2 with (iv) and Assumption 3.3 hold. Let \(\left\{ z^k=(x^k,y^k)\right\} _{k\in {\mathbb {N}}}\) be the sequence generated by ASABP which is assumed to be bounded. For all \(k\ge 0\), define

where

Then \(A^{k+1}\in \partial \Phi (z^{k+1})\) and there exists \({\hat{\delta }}>0\) such that

where \({\hat{\delta }}=\sqrt{2}\max \left\{ L_{h}L_1+\frac{L_1}{{\underline{\tau }}},~L_{\psi }L_2+\frac{L_2}{{\underline{\sigma }}}+\xi \right\} \).

Proof

The first order optimality conditions of (9) and (10) yield

and

respectively. Following from these relations and Assumption 3.2, it is clear that \(A^{k+1}\in \partial \Phi (z^{k+1})\). Combining with Assumption 3.3, we get that

Similarly, we can get

Rearranging the terms and completing the proof. \(\square \)

We are now ready for proving the main result of this section.

Theorem 3.11

Let the objective function \(\Phi \) be a KL function. Suppose that Assumptions 3.1, 3.2 with (iv) and Assumption 3.3 hold. Let \(\left\{ z^k=(x^k,y^k)\right\} _{k\in {\mathbb {N}}}\) be the sequence generated by ASABP which is assumed to be bounded. Then

and as a sequence, we have \(\{z^k\}_{k\in {\mathbb {N}}}\) converges to a critical point of model (1) with the abstract sets \({\overline{X}},~{\overline{Y}}\).

Proof

From Lemma 3.8, we get that

Then we consider the following two cases.

First, if there exists an integer \(k'\) for which \(\Phi (z^{k'})=\Phi (z^*)\), according to the strongly convexity of h and \(\psi \) and (20), we have that for any \(k>k'\),

where \({\bar{\delta }}=\delta \min \left\{ \frac{\mu _h}{2},~\frac{\mu _{\psi }}{2}\right\} \). Combining with the monotonically nonincreasing of \(\Phi (z^{k})\), one can obtain that \(z^{k+1}=z^k\) for any \(k\ge k'\), and the assertion holds.

Then we consider the case that \(\Phi (z^k)>\Phi (z^*)\) for all \(k\ge 0\). Since \(\lim _{k\rightarrow +\infty }\Phi (z^k)=\Phi (z^*)\), it follows that for any \(\eta >0\), there exists a nonnegative integer \(k_0\) such that \(\Phi (z^k)<\Phi (z^*)+\eta \) for all \(k\ge k_0\). From (23), we know that \(\lim _{k\rightarrow +\infty }\textrm{dist}(z^k,\Omega ^*)=0\). This means that for any \(\varepsilon >0\), there exists a positive integer \(k_1\) such that \(\textrm{dist}(z^k,\Omega ^*)<\varepsilon \) for all \(k\ge k_1\). Consequently, for all \(k>l:=\max \{k_0,k_1\}\),

Applying Lemma 2.16 and Lemma 3.8, we deduce that for any \(k>l\),

From Proposition 3.10,

On the other hand, from the concavity of \(\varphi \), we get that

For convenience, we define

Combining (24) with (25) and (26) yields that, for any \(k>l\),

or equivalently,

Using the fact that \(2\sqrt{\alpha \beta }\le \alpha +\beta \) for all \(\alpha , \beta > 0\), we have

Summing up (27) for \(k=l+1,\cdots ,m\) yields

where the last inequality due to \(\varphi (\Phi (z^{m+1})-\Phi (z^*))\ge 0\). Letting \(m\rightarrow +\infty \) yield

Equipped with the fact that \(\bar{\delta } \) is bounded below, we have

and the sequence \(\{z^k\}_{k\in {\mathbb {N}}}\) is a Cauchy sequence. Combining with Theorem 3.7, \(\{z^k\}_{k\in {\mathbb {N}}}\) converges to a critical point of model (1) with the abstract sets \({\overline{X}},~{\overline{Y}}\). \(\square \)

4 Numerical experiment

In this section, we numerically verify the ability of ASABP and IASABP analyzed in previous sections. All experiments are performed in MATLAB R2014a on a 64-bit PC with an Intel Core i7-8550U CPU (1.80GHz) and 16GB of RAM.

We consider the Poisson linear inverse problems [6], which can be conveniently described as follows. Given a matrix \(A\in {\mathbb {R}}_{+}^{m\times n}\) modeling the experimental protocol, and \(b\in {\mathbb {R}}^{m}_{+}\) the vector of measurement, the goal is to reconstruct the signal or image \(x\in {\mathbb {R}}_{+}^{n}\) from the noisy measurement b such that \(Ax\simeq b\). Often these problems can be represented as a minimize problem like

where \(\lambda >0\) is used to control the trade-off between matching the data fidelity criteria and the weight given to its regularizer, and \(d(\cdot ,\cdot )\) denotes a convex proximity measure between two vectors. Poisson linear inverse problems emerged in many fields, like astronomy, nuclear medicine, electronic microscopy, statistical and image science [6, 8, 16]. Therefore, the design of methods for such problems has been studied intensively.

By introducing an auxiliary variable \(y\in {\mathbb {R}}^{n}\), we can solve (28) approximately according to the following optimization problem

by defining

where \(\mu \) is a positive penalization parameter.

Adopting the above model (29), we take the first component in the objective function based on the Boltzmann-Shannon entropy,

It is easy to find that \(F(x)=d(b,Ax)\) has no globally Lipschitz continuous gradient [6], but satisfies GL-samd condition with a generalized Bregman function called Burg’s entropy, denoted as

and so now the Bregman distance is given by

and for any \(L_h\) satisfying \(L_h\ge \Vert b\Vert _{1}=\sum _{i=1}^{m}b_{i}\), the function \(L_h h-F\) is convex on \({\mathbb {R}}^{n}_{+}\). In this case, the iterates in subproblem (9) and (12) reduce to solve n one-dimensional convex problems. Note that Assumption 3.3 does not hold for this case.

We consider Tikhonov regularization in model (29), i.e., the regularizer is \(G(y)=\lambda g(y)=\frac{\lambda }{2}\Vert y\Vert ^2\). Take Energy \(\psi =\frac{1}{2}\Vert y\Vert ^2\), which corresponding Bregman distance is defined as \(D_{\psi }(y,z)=\frac{1}{2}\Vert y-z\Vert ^2\), and for any \(L_{\psi }\) satisfying \(L_{\psi }\ge \lambda \), the function \(L_{\psi }\psi -G\) is convex on \({\mathbb {R}}^{n}\). It is worth noticing that the objective function in model (29) in this case is KL function according to [3, 11].

Now we give the setting for the model to be tested. For the model (29), the entries of \(A\in {\mathbb {R}}_{+}^{m\times n}\) and \(b\in {\mathbb {R}}^{m}_{+}\) are generated following independent uniform distributions over the interval [0, 1]; we set \(\lambda =1\) and the penalization parameter \(\mu \) as large as the conditions permit. We initialize all the algorithms at the vector generated by uniform distribution over the interval (0, 1), and terminate it when \(Error:=\frac{\Vert x^k-x^{k-1}\Vert }{\max \{1,\Vert x^k\Vert \}} \le 10^{-6}\). We also terminate the algorithms when the number of iteration hits 100.

As these methods can be applied to overdetermined (\(m>n\)) and underdetermined (\(m<n\)) problems, we have preformed numerical tests on both cases. As commented before, the main parameters in IASABP are setting as follows: \(\eta \) is a random number between 0.90 and 0.95 and \(\theta = 0.99\); \(\alpha _k^0=\beta _{k}^{0}=1\) for all k; \(L_h=\Vert b\Vert _1\) and \(L_{\psi }=\lambda \).

For this Poisson linear inverse problem, Fig. 1 records the trend of the residual functions of ASABP and IASABP with respect to the overdetermined and underdetermined situations. We can see that IASABP converges faster than ASABP. It shows that the IASABP algorithm has an advantage over ASABP, giving an interesting option for real problems.

5 Conclusions

We have proposed an alternating structure-adapted Bregman proximal (ASABP) gradient descent algorithm for solving the nonconvex nonsmooth minimization problems with abstract sets. An improved version with inertial accelerated scheme and line search strategy (IASABP) was also presented. Under mild conditions, we proved that both ASABP and IASABP possess an O(1/K) sublinear rate of convergence, measured by Bregman distance. Furthermore, if the objective function has Kurdyka–Łojasiewicz property, we proved that the sequence generated by ASABP converges to the critical point of the constrained problem. Theoretically, our results are extension of the recent NoLips algorithms, which are either limited to the convex case, or the nonconvex case but there is no abstract sets. Numerically, our results avoid the estimation of the Lipschitz constants, an annoying task in implementation. We applied ASABP and IASABP to Poisson linear inverse problem, and their performance is very promising.

Data availability

All data generated or analysed during this study are included in the paper.

References

Ahookhosh, M., Hien, L., Gillis, N., Patrinos, P.: A block inertial Bregman proximal algorithm for nonsmooth nonconvex problems with application to symmetric nonnegative matrix tri-factorization. J. Optim. Theory Appl. 190, 234–258 (2021)

Ahookhosh, M., Hien, L., Gillis, N., Patrinos, P.: Multi-block Bregman proximal alternating linearized minimization and its application to orthogonal nonnegative matrix factorization. Comput. Optim. Appl. 79, 681–775 (2021)

Attouch, H., Bolte, J., Redont, P., Soubeyran, A.: Proximal alternating minimization and projection methods for nonconvex problems: an approach based on the Kurdyka-Łojasiewicz inequality. Math. Oper. Res. 35, 438–457 (2010)

Attouch, H., Bolte, J., Svaiter, B.: Convergence of descent methods for semi-algebraic and tame problems: proximal algorithms, forward-backward splitting, and regularized gauss-seidel methods. Math. Program. 137, 91–129 (2013)

Bauschke, H., Bolte, J., Chen, J., Teboulle, M., Wang, X.: On linear convergence of non-Euclidean gradient methods without strong convexity and Lipschitz gradient continuity. J. Optim. Theory Appl. 182, 1068–1087 (2019)

Bauschke, H., Bolte, J., Teboulle, M.: A descent lemma beyond Lipschitz gradient continuity: first-order methods revisited and applications. Math. Oper. Res. 42, 330–348 (2016)

Benning, M., Betcke, M., Ehrhardt, M., Schonlieb, C.: Choose your path wisely: gradient descent in a Bregman distance framework. SIAM J. Imaging Sci. 14, 814–843 (2021)

Bertero, M., Boccacci, P., Desiderà, G., Vicidomini, G.: Image deblurring with Poisson data: from cells to galaxies. Inverse Probl 25, 123006 (2009)

Bertsekas, D.: Nonlinear programming. J. Oper. Res. Soc. 48, 334–334 (1997)

Bertsekas, D., Tsitsiklis, J.: Parallel and Distributed Computation: Numerical Methods. Athena Scientific (2015)

Bolte, J., Sabach, S., Teboulle, M.: Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 146, 459–494 (2014)

Bolte, J., Sabach, S., Teboulle, M., Vaisbourd, Y.: First order methods beyond convexity and Lipschitz gradient continuity with applications to quadratic inverse problems. SIAM J. Optim. 28, 2131–2151 (2018)

Censor, Y., Iusem, A., Zenios, S.: An interior point method with Bregman functions for the variational inequality problem with paramonotone operators. Math. Program. 81, 373–400 (1998)

Gao, X., Cai, X., Han, D.: A Gauss-Seidel type inertial proximal alternating linearized minimization for a class of nonconvex optimization problems. J. Global Optim. 76, 863–887 (2020)

Han, D.: A new hybrid generalized proximal point algorithm for variational inequality problems. J. Global Optim. 26, 125–140 (2003)

Hohage, T., Werner, F.: Inverse problems with Poisson data: statistical regularization theory, applications and algorithms. Inverse Probl 32, 093001 (2016)

Lu, H., Freund, R., Nesterov, Y.: Relatively smooth convex optimization by first-order methods, and applications. SIAM J. Optim. 28, 333–354 (2018)

Mukkamala, M., Ochs, P., Pock, T., Sabach, S.: Convex-concave backtracking for inertial Bregman proximal gradient algorithms in nonconvex optimization. SIAM J. Math. Data Sci. 2, 658–682 (2020)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course. Springer (2003)

Nikolova, M., Tan, P.: Alternating structure-adapted proximal gradient descent for nonconvex nonsmooth block-regularized problems. SIAM J. Optim. 29, 2053–2078 (2019)

Pock, T., Sabach, S.: Inertial proximal alternating linearized minimization (iPALM) for nonconvex and nonsmooth problems. SIAM J. Imaging Sci. 9, 1756–1787 (2016)

Polyak, B.: Some methods of speeding up the convergence of iteration methods. USSR Comput. Maths. Math. Phys. 4, 1–17 (1964)

Rockafellar, R.: Convex Analysis. Princeton University Press, Princeton (1970)

Rockafellar, R., Wets, R.: Variational Analysis. Springer (2009)

Teboulle, M.: A simplified view of first order methods for optimization. Math. Program. 170, 67–96 (2018)

Wu, Z., Li, C., Li, M., Lim, A.: Inertial proximal gradient methods with bregman regularization for a class of nonconvex optimization problems. J. Global Optim. 79, 617–644 (2021)

Xu, Y., Yin, W.: A block coordinate descent method for regularized multiconvex optimization with applications to nonnegative tensor factorization and completion. SIAM J. Imaging Sci. 6, 1758–1789 (2013)

Zhang, H., Dai, Y., Guo, L., Peng, W.: Proximal-like incremental aggregated gradient method with linear convergence under Bregman distance growth conditions. Math. Oper. Res. 46, 61–81 (2021)

Zhang, X., Zhang, X., Li, X., Li, Z., Wang, S.: Classify social image by integrating multi-modal content. Multimed. Tools. Appl. 77, 7469–7485 (2018)

Acknowledgements

The authors are grateful to the editor and the anonymous reviewer for their valuable comments and suggestions toward the improvement of this paper. This work was supported by National Natural Science Foundation of China (12201173, 12131004, 12126603).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Gao, X., Cai, X., Wang, X. et al. An alternating structure-adapted Bregman proximal gradient descent algorithm for constrained nonconvex nonsmooth optimization problems and its inertial variant. J Glob Optim 87, 277–300 (2023). https://doi.org/10.1007/s10898-023-01300-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-023-01300-0

Keywords

- Proximal gradient decent

- Bregman distance

- Inertial

- Kurdyka–Łojasiewicz property

- Nonconvex nonsmooth optimization