Abstract

A new type of decomposition by population subgroup is proposed for the Gini inequality index. The decomposition satisfies the completely identical distribution (CID) condition, whereby the between-group inequality is null if and only if the distribution within each subgroup is identical to all the others. Thus, this decomposition contrasts strikingly with the subgroup decomposition of the generalized entropy measures, which satisfy the condition that the between-group inequality is null if the mean within each subgroup equals those of all the others. The new decomposition can be generalized to the distance-Gini index and the volume-Gini index, two multivariate Gini indices introduced by Koshevoy and Mosler, with some modification of the index definition and a somewhat loosened CID condition in the latter case. The source decomposition is also generalized to these multi-dimensional indices. Interaction terms appear among sources of different attributes in the decomposition for the modified volume–Gini index.


1 Introduction

Decomposability of inequality measures into contributions of population subgroups and contributions of sources is a desirable property for studies of economic inequality status and trends in populations. In fact, several types of subgroup decomposition of the Gini inequality index have been proposed so far. However, these decompositions have disadvantages such as inconsistency and impracticality. In contrast, the new type of decomposition presented in this paper has good properties. It is notable that the new decomposition satisfies the completely identical distribution (CID) condition, whereby the between-group inequality is null if and only if the distribution within each subgroup is identical to all the others. This is in striking contrast to the well-known subgroup decomposition of the Theil index or the generalized entropy measures, which satisfy the condition whereby the between-group inequality is null if the mean within each subgroup equals those of all the others. It should also be noted that the new decomposition can be generalized to multivariate Gini indices while essentially maintaining its properties, indicating its suitability for the Gini decomposition.

Generalization of the Gini index to multivariate settings is a relatively new research issue, although the Gini index has been the most popular inequality measure for many years. Koshevoy and Mosler [11] proposed two types of multivariate Gini index, the distance-Gini index and the volume-Gini index. The former was formulated using an approach involving generalization of the univariate relative mean difference. The latter is a modification of the multivariate index proposed by Oja [14], which can be formulated with an approach using generalization of the Lorenz curve. Koshevoy and Mosler [11] showed that their indices are decomposable into subgroups in a similar way as the two-term decomposition of the ordinary univariate Gini index [see (14) in the next section] proposed by several researchers, including Rao [16] and Dagum [4]. However, their decomposition has disadvantages in terms of practicality and consistency. The new decomposition in this paper can easily be generalized based on studies of the multivariate Cramér test [2] in the case of the distance-Gini index. The generalization can also be achieved in the case of the volume-Gini index based on the Brunn–Minkowski inequality or Minkowski’s first inequality concerning mixed volume, with some modifications of the index definition. The CID condition needs to be loosened somewhat in the latter case. The source decomposition of Rao [16] can also be generalized to both multivariate indices. It is notable that interaction terms appear among sources of different attributes in the source decomposition of the modified volume-Gini index.

The paper is organized as follows. The next section is devoted to subgroup decomposition of the usual univariate Gini index. Section 2.1 introduces the new type of subgroup decomposition, which is extended to the multilevel decomposition in section 2.2, and compared with other types of subgroup decomposition previously proposed in section 2.3. In section 2.4, the new decomposition is applied to Japanese household income data. The results for age-group decomposition and regional decomposition are presented. Section 3 is devoted to subgroup and source decomposition of the multivariate Gini indices. The new subgroup decomposition is generalized to the distance-Gini index in section 3.1, and to the volume-Gini index in section 3.2, with modifications of the index definition. Source decomposition of both indices is introduced in section 3.3, followed by applications of the subgroup decomposition to Japanese household income and expenditure data in section 3.4. Section 4 concludes the discussion with some remarks concerning multivariate inequality measures.

2 Subgroup decomposition of the Gini index

2.1 New type of subgroup decomposition

Let F(y) represent the cumulative distribution function of a nonnegative random variable Y such as income, with a finite positive expectation μ. The Gini mean difference M(F) can be presented in several ways, as follows:

In the literature, twice M(F) is often called the Gini mean difference; however, M(F) is defined as the Gini mean difference in this paper. Among the four equivalent representations in Eq. 1, the first is the original expression of the Gini mean difference, and the fourth expresses it as the covariance between the variable Y and its rank F(Y) (see [13]). Strictly speaking, the fourth representation does not hold for non-continuous distributions. The second representation expresses M(F) as the co-moment between the rank function F(y) and its reverse rank function 1−F(y). Since the integrand of the second representation \( F(y)(1 - F(y)) \) equals the expected variance of the binary variable “whether the random variable Y takes a value less than or equal to y”, the second representation is also interpretable as the total of the expected variance of the binary variable over various values of Y. The second representation can be proved using Lemma 2.1 of Baringhaus and Franz [2]. The third and fourth representations are derived from the second using integration by parts. As for the Gini relative mean difference, in other words the Gini inequality index \( R(F) = M(F)/\mu \), the corresponding representations are obtained by dividing those in Eq. 1 by μ.
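As a minimal numerical sketch of the equivalence between the first two representations, the following Python code evaluates both on an empirical distribution. It assumes, from the description above, that the first representation is half the expected absolute difference between two independent draws and that the second is the integral of \( F(y)(1 - F(y)) \); the function names are illustrative and do not come from the paper.

```python
import numpy as np

def gmd_pairwise(y):
    """Half the mean absolute difference over all ordered pairs (assumed first representation)."""
    y = np.asarray(y, dtype=float)
    return 0.5 * float(np.abs(y[:, None] - y[None, :]).mean())

def gmd_cdf(y):
    """Integral of F(1 - F) for the empirical distribution function (assumed second representation)."""
    y = np.sort(np.asarray(y, dtype=float))
    n = y.size
    # The empirical CDF equals k/n on the interval [y_(k), y_(k+1)).
    F = np.arange(1, n) / n
    return float(np.sum(F * (1.0 - F) * np.diff(y)))

sample = [1.0, 2.0, 4.0]
print(gmd_pairwise(sample), gmd_cdf(sample))  # the two values agree up to rounding
```

For an empirical distribution the integrand is a step function, so the second representation reduces to a finite sum and no numerical quadrature is needed.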

Assume that the population consists of groups 1,2,...,n. Let \( F_i(y) \), \( \mu_i \), and \( p_i \) represent the cumulative distribution function, the expected value and the share of group i in the overall population, respectively. Note that \( F(y) = \sum_i p_i F_i(y) \). Then, using the second representation in Eq. 1, the Gini mean difference M(F) and the Gini index R(F) can be decomposed by subgroup, respectively, as follows:

The proof is by direct calculation. The first term on the right-hand side of Eq. 3 corresponds to the within-group inequality, and the second term corresponds to the between-group inequality. The contribution of each group to the between-group inequality can be naturally defined as follows:

\( cv(G, F) := \int (G(y) - F(y))^2 \, dy \) satisfies the following equality [2], which is useful for generalizing decompositions 2 and 3 to multivariate settings, as shown in the next section:

Equality 5 forms the basis of the Cramér two-sample test and its generalization to multivariate settings [2]. In this connection, I call the between-group inequality, the second term on the right-hand side of Eq. 3, the Cramér coefficient of variation among groups 1,...,n.
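The within/between split can be checked numerically. The sketch below assumes that Eq. 2 has the form \( M(F) = \sum_i p_i M(F_i) + \sum_i p_i \, cv(F_i, F) \), which is what follows from the second representation in Eq. 1 together with the definition of cv(G, F); the helper names are hypothetical, and group shares are taken as sample-size proportions.

```python
import numpy as np

def ecdf(sample, points):
    """Empirical distribution function of `sample` evaluated at `points`."""
    s = np.sort(np.asarray(sample, dtype=float))
    return np.searchsorted(s, points, side="right") / s.size

def decompose_gmd(groups):
    """Return (total, within, between) for the assumed form of Eq. 2."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    pooled = np.concatenate(groups)
    grid = np.unique(pooled)      # every step function is constant between grid points
    left, widths = grid[:-1], np.diff(grid)
    F = ecdf(pooled, left)
    total = float(np.sum(F * (1.0 - F) * widths))
    within = between = 0.0
    for g in groups:
        p = g.size / pooled.size  # population share of the group
        Fi = ecdf(g, left)
        within += p * float(np.sum(Fi * (1.0 - Fi) * widths))
        between += p * float(np.sum((Fi - F) ** 2 * widths))  # p_i * cv(F_i, F)
    return total, within, between

total, within, between = decompose_gmd([[1.0, 2.0, 4.0], [3.0, 5.0]])
print(total, within + between)  # equal up to rounding
```

Dividing each term by the overall mean gives the corresponding split of the Gini index under the same assumption.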

It may be felt that the second term should not be regarded as the between-group inequality because its functional form appears different from that of the Gini index. However, the second term has essentially the same form as the Gini index, because the Gini index satisfies the following equality, which can be regarded as a special case of Eq. 3 with null within-group inequality:

where \( I_{[x,\infty)}(y) = 1 \) if y ≥ x, or 0 if y < x. Equality 6 expresses the notion that the Gini index is identical to the Cramér coefficient of variation if each population unit forms an individual group. Note that the indicator function \( I_{[x,\infty)} \) in Eq. 6 corresponds to the one-point distribution function for a random variable that takes the value x almost surely. For the proof, note that \( \int (I_{[x,\infty)}(y) - F(y))^2 \, dF(x) = F(y)(1 - F(y)) \), since for fixed y the indicator equals 1 with probability F(y).

The following decompositions are also true:

These decompositions can be proved by direct calculation. Decomposition 8 attributes the between-group inequality to the relative mean squared difference of distribution functions between each pair of groups. From Eqs. 5 and 1, the following equality is obtained for \( cv(F_i, F) \):

Thus, \( cv(F_i, F) \) is four times the surplus of dispersion, in terms of the Gini mean difference, produced by the horizontal merger of group i and the overall population. Assuming an ɛ to 1−ɛ merger ratio, where ɛ is a small positive number, the following representation is also obtained for \( cv(F_i, F) \) by substituting ɛ, 1−ɛ, \( F_i \), F and \( \varepsilon F_i + (1 - \varepsilon) F \) for \( p_1 \), \( p_2 \), \( F_1 \), \( F_2 \) and F, respectively, in Eq. 7:

Thus, \( cv(F_i, F) \) equals the surplus of the dispersion relative to the merger ratio when group i is merged at an infinitely small merger ratio.

Obviously, the between-group inequality in decompositions 3 and 8 is null if and only if the distribution within each group is identical to those of all other groups. I call this condition the completely identical distribution (CID) condition. For this reason, the decomposition is quite different from that of the generalized entropy measures of inequality, in which the between-group inequality is null if and only if the mean within each group equals those of all the others. Bhattacharya and Mahalanobis [3] mentioned that, intuitively, it is reasonable to lay down that the between-group component should not change if the group distributions \( F_i \) are changed while keeping \( \mu_i \) fixed. However, Dagum [4] was opposed to taking the income means of subpopulations as their representative values to estimate the between-subpopulation inequality because income distributions significantly depart from normality. I believe that the new decomposition 3 favors Dagum’s view, although he pursued a different approach that added an extra component besides the between-group component of Bhattacharya and Mahalanobis, as shown in section 2.3.
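A small example may make the CID condition concrete. The sketch below computes the between-group term \( \sum_i p_i \, cv(F_i, F) \) for two groups that share the same mean but have different distributions, and then for two identical groups. The function name and the sample values are illustrative assumptions, and shares are again taken as sample-size proportions.

```python
import numpy as np

def between_group_cv(groups):
    """Sum over groups of p_i * integral of (F_i - F)^2, using empirical distribution functions."""
    groups = [np.sort(np.asarray(g, dtype=float)) for g in groups]
    pooled = np.sort(np.concatenate(groups))
    grid = np.unique(pooled)
    left, widths = grid[:-1], np.diff(grid)
    F = np.searchsorted(pooled, left, side="right") / pooled.size
    total = 0.0
    for g in groups:
        Fi = np.searchsorted(g, left, side="right") / g.size
        total += (g.size / pooled.size) * float(np.sum((Fi - F) ** 2 * widths))
    return total

same_mean = between_group_cv([[1.0, 3.0], [2.0, 2.0]])  # both groups have mean 2
identical = between_group_cv([[1.0, 3.0], [1.0, 3.0]])
print(same_mean, identical)  # positive for the first pair, zero for the second
```

A mean-based between-group term would be zero in both cases, which is the contrast with the generalized entropy decomposition drawn above.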

2.2 Extension to multilevel decomposition

Let \( F_{ij}(y) \), \( \mu_{ij} \), and \( p_{ij} \) represent the cumulative distribution function, the expected value and the share of subgroup j in group i, respectively. Noting that \( F_i(y) = \sum_j p_{ij} F_{ij}(y) \) and \( \mu_i = \sum_j p_{ij} \mu_{ij} \), the two-level decomposition of the Gini index R(F) is derived by further decomposing the Gini index within each group \( R(F_i) \) in Eq. 3 as follows:

The second term on the right-hand side of Eq. 11 corresponds to the sum of the between-subgroup inequalities within groups. One of the advantages of decomposition 3 is that the between-group inequality is consistently defined with hierarchical grouping systems (Footnote 1), since the between-subgroup inequality in the overall population equals the sum of the between-subgroup inequalities within groups and the between-group inequality in the overall population, i.e. the following equality is true for each group:

Obviously, decomposition 11 can be further extended to thicker-layered decompositions, retaining the consistency.
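The consistency property can be verified in one line. The derivation below assumes that the per-group equality has the form shown on its first and last lines, which is what the verbal statement in section 2.2 describes; it uses only \( F_i = \sum_j p_{ij} F_{ij} \) and \( \sum_j p_{ij} = 1 \).

```latex
\begin{aligned}
\sum_j p_{ij}\, cv(F_{ij}, F)
 &= \sum_j p_{ij} \int \bigl( (F_{ij} - F_i) + (F_i - F) \bigr)^2 \, dy \\
 &= \sum_j p_{ij} \int (F_{ij} - F_i)^2 \, dy
    + 2 \int (F_i - F) \Bigl( \sum_j p_{ij} F_{ij} - F_i \Bigr) dy
    + \int (F_i - F)^2 \, dy \\
 &= \sum_j p_{ij}\, cv(F_{ij}, F_i) + cv(F_i, F).
\end{aligned}
```

The cross term vanishes because the weighted average of the subgroup distribution functions is the group distribution function. Weighting by \( p_i \) and summing over groups gives the corresponding statement for the overall population.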

2.3 Comparison with other types of subgroup decomposition

Several researchers, such as Pyatt [15] and Dagum [4], proposed the following three-term decomposition:

where \( D_{ij} = \int dF_j(x) \int_0^x (x - y) \, dF_i(y) \) if \( \mu_i > \mu_j \), or \( \int dF_i(x) \int_0^x (x - y) \, dF_j(y) \) if \( \mu_i < \mu_j \). The first term can be regarded as the contribution of the within-group inequality since the term is a weighted sum of the within-group inequality values. The third term on the right-hand side of Eq. 13 equals the between-group inequality defined by Bhattacharya and Mahalanobis [3]. The second term is regarded as the contribution of the trans-variation intensity, which measures a degree of overlap between the within-group distributions. This three-term decomposition is less satisfactory because it is inconsistent with multilevel groupings, and the weights assigned to the subgroups in the first term do not sum to one.

The sum of the second and third terms is called the gross between-group Gini index, which, with the first term, comprises the following two-term decomposition:

Although the two-term decomposition 14 can be extended to a multilevel decomposition consistently in a sense, the gross between-group Gini index cannot be regarded as the between-group inequality because it does not take the minimum constant value (usually normalized to null) when the within-group distributions or means are identical to each other.

Making use of the fourth representation of the Gini mean difference in Eq. 1, Yitzhaki and Lerman [24] proposed a different type of three-term decomposition, which yields a between-group inequality that is much closer to the counterpart of the new decomposition 3, as shown in section 2.4.

where \( O_{0i} = \frac{\int (y - \mu_i) F(y) \, dF_i(y)}{\int (y - \mu_i) F_i(y) \, dF_i(y)} \) and \( G_i = \int F(y) \, dF_i(y) \). In Eq. 15, \( O_{0i} \) measures the degree to which the overall distribution is included in the range of the within-group distribution i, and \( G_i \) is the expected rank of observations belonging to group i if they are ranked according to the ranking of the overall population. The third term on the right-hand side of Eq. 15 is the covariance between the within-group means and the average ranks of the respective groups. Thus, the third term can be regarded as the between-group inequality, which vanishes if the within-group means \( \mu_i \) are all equal or the average ranks \( G_i \) are all equal. Although their decomposition has disadvantages in that the between-group inequality may take a negative value and there is inconsistency with multilevel groupings, it notably takes a step towards the new decomposition presented in this paper, in that the between-group inequality is defined using more than a single type of aggregate.

The contribution of each group to the between-group inequality in decomposition 3 shows the following relation to the components in decomposition 15 if \( F_i \) is continuous for every group:

where \( O_{i0} = \frac{\int (y - \mu) F_i(y) \, dF(y)}{\int (y - \mu) F(y) \, dF(y)} \). The proof is given in the Appendix. Note that the third term on the right-hand side of Eq. 16 vanishes on summation.

2.4 Applications to Japanese family income data

2.4.1 Decomposition of income inequality into age groups for household heads

The most recent survey results drew attention to a sharp rise in income inequality among the young generation in Japan, although the overall inequality has not risen notably if age effects are excluded. Table 1 shows trends in income inequality within each age group for household heads measured by the Gini index and the squared coefficient of variation (SCV). These indices measure the annual income inequality among households with two or more members. The estimates of the indices are derived from the National Survey of Family Income and Expenditures, a large-scale family budget survey of approximately 50,000 households, conducted by the Statistics Bureau, Ministry of Internal Affairs and Communications every 5 years. The Gini indices are estimated from two-way tables of income class by age group of the household head using the composite Simpson’s rule for approximations of Lorenz domains in a similar manner to the official Gini estimates. SCVs are taken from the existing statistical tables, so those estimates are in effect calculated from the micro data. There are 10 income classes, ranging from <2 million yen to ≥15 million yen. Such Gini estimates are empirically considered to be good approximations to those estimated from the micro data.

As shown in Table 2, decomposition into age groups for household heads (Footnote 2) reveals that the youngest group, with a household head <30 years old, did not contribute to the slight increase in overall inequality between 1999 and 2004, despite the sharp increase in the within-group inequality. The increase in relative income of the youngest group rather contributed to the decrease in the between-group inequality, which canceled out the positive contribution of the within-group inequality. Thus, the slight increase in overall inequality between 1999 and 2004 should be attributed to contributions of other age groups, which did not draw much attention.

The between-group inequality and contribution of each age group measured by the Gini decomposition of Yitzhaki and Lerman [24] are approximately two-fold greater than their counterparts in the new decomposition with the same sign.

2.4.2 Regional inequality in income distribution

It has recently been speculated that the between-region inequality, in particular the gap between the metropolitan areas including Greater Tokyo and other areas, is increasing, as well as the between-household inequality. However, the actual trend may differ somewhat from this speculation according to the results derived from the new decomposition. Table 3 shows the recent trends in regional income inequality in Japan, measured by three types of the Gini decomposition and decomposition of the generalized entropy measures. The single-parameter entropy family is defined as follows:

where \( \varphi_c(y/\mu) = \frac{(y/\mu)^c - 1}{c(c - 1)} \) if c ≠ 0,1, \( \varphi_1(y/\mu) = (y/\mu) \log(y/\mu) \), and \( \varphi_0(y/\mu) = \log(\mu/y) \). The inequality indices drawn from the entropy family 17 satisfy the following subgroup decomposition:

The first and second terms on the right-hand side of Eq. 18 correspond to the within- and between-group inequality, respectively. In Table 3, the between-region inequality indices in the cases of c = 0, 1 and 2 are denoted by \( E_0 \), \( E_1 \) and \( E_2 \), respectively. \( E_2 \) is equivalent to SCV.
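For comparison with the Gini decomposition, the entropy-family split can be sketched as follows. The code assumes that Eq. 17 is the expectation of \( \varphi_c(y/\mu) \) and that Eq. 18 has the standard form with within-group weights \( p_i (\mu_i/\mu)^c \) and between-group term \( \sum_i p_i \varphi_c(\mu_i/\mu) \); the function names are hypothetical.

```python
import numpy as np

def phi(r, c):
    """The function phi_c applied to relative incomes r = y / mu."""
    r = np.asarray(r, dtype=float)
    if c == 0:
        return np.log(1.0 / r)
    if c == 1:
        return r * np.log(r)
    return (r ** c - 1.0) / (c * (c - 1.0))

def ge_index(y, c):
    """Entropy-family index as the mean of phi_c(y / mu) (assumed form of Eq. 17)."""
    y = np.asarray(y, dtype=float)
    return float(np.mean(phi(y / y.mean(), c)))

def ge_decompose(groups, c):
    """Return (within, between) under the assumed form of Eq. 18."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    pooled = np.concatenate(groups)
    mu = pooled.mean()
    within = between = 0.0
    for g in groups:
        p, ratio = g.size / pooled.size, g.mean() / mu
        within += p * ratio ** c * ge_index(g, c)
        between += p * float(phi(ratio, c))  # depends on the group mean only
    return within, between

groups = [[1.0, 2.0, 4.0], [3.0, 5.0]]
for c in (0, 1, 2):
    w, b = ge_decompose(groups, c)
    print(c, ge_index(np.concatenate(groups), c), w + b)
```

The between-group term here is a function of the group means alone, so it vanishes whenever the means coincide, regardless of the shapes of the within-group distributions.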

A two-level regional grouping is used for calculation of the regional inequality. The whole country consists of 47 prefectures. Each prefecture was subdivided into approximately 3,000 municipalities (cities, wards, towns and villages) before many municipality mergers took place in 2005. The municipalities in each prefecture were grouped for the National Survey of Family Income and Expenditures, based on the more detailed municipality grouping used for the Establishment and Enterprise Census. Each municipality group consists of neighboring municipalities within the same prefecture. These groups were determined by taking into consideration spheres of residential life or economic relations among municipalities. There are 274 municipality groups in total. Similar to the application in section 2.4.1, Gini indices and their breakdowns by the new decomposition are estimated from two-way tables of income class by regions using the composite Simpson’s rule for approximations of Lorenz domains.

The Gini decomposition of Bhattacharya and Mahalanobis [3] and decomposition of the generalized entropy measures indicate an increase in the between-prefecture inequality between 1999 and 2004 as well as an increase in the between-municipality-group inequality. In contrast, the new Gini decomposition indicates a continuance of the downtrend of the between-prefecture inequality, despite an upturn of the between-municipality-group inequality. The Gini decomposition of Yitzhaki and Lerman shows little change in the between-prefecture inequality in the same period. Thus, the new decomposition and the decomposition of Yitzhaki and Lerman imply that the regional inequality within prefectures should be an issue rather than the gaps among prefectures. If attaching greater importance to consistency with the measurement of between-household inequality usually made by the Gini index in Japan, the implication derived from the new Gini decomposition should be noted, and it deserves further investigation.

Shorrocks and Wan [19] pointed out that the Gini decomposition of Bhattacharya and Mahalanobis produces considerably greater shares for the between-group inequality in the overall inequality compared to the decompositions of other indices. However, the new Gini decomposition produces slightly smaller shares for the between-group inequality compared to the decompositions of other indices, as shown in Table 4. The Gini decomposition of Yitzhaki and Lerman produces slightly greater shares. Similar to the decomposition into age groups for household heads shown in section 2.4.1, the between-prefecture inequality derived from the Yitzhaki and Lerman decomposition is approximately two-fold greater than that of the new decomposition. However, for the between-municipality-group inequality, the relative difference is less than double. It seems intuitive to suppose that the more finely the population is subdivided, the smaller the relative difference becomes.

3 Decomposition of the multivariate Gini index

3.1 Subgroup decomposition of the distance-Gini index

In this section, the new type of subgroup decomposition for the Gini index is generalized to multivariate Gini indices. First, the corresponding decomposition of the distance-Gini index, a variation of the multivariate Gini index proposed by Koshevoy and Mosler [11], is introduced, applying the achievement of Baringhaus and Franz [2] concerning the multivariate Cramér test.

Let \( x = \{x_k\} \) and \( y = \{y_k\} \) be d-dimensional vectors, and let F(y) represent the distribution function of a d-variate random variable Y on the orthant \( R^d_+ \) with a finite positive expectation vector \( \mu = \{\mu_k\} \). Koshevoy and Mosler [11] defined the distance-Gini mean difference \( M_D(F) \) as follows:

Let x/μ be the vector \( \{x_k/\mu_k\} \), and \( \widetilde{F}(y) \) be the distribution function of the random variable Y/μ. Then Koshevoy and Mosler [11] defined the distance-Gini relative mean difference (the distance-Gini index) \( R_D(F) \) as \( M_D(\widetilde{F}) \). In the univariate case (d = 1), the distance-Gini index is identical to the ordinary Gini index.

The Euclidean norm can be represented as follows (e.g. [9]; the proof is also given in a more general form in the Appendix):

where υ is the uniform distribution on the unit sphere \( S^{d-1} = \{ a \in R^d \mid \|a\| = 1 \} \), and \( C_d = \frac{\Gamma((d + 1)/2)}{2\pi^{(d - 1)/2}} \). Equation 20 allows the distance-Gini mean difference to be represented as follows [12]:

where F(·, a) denotes the distribution function of a·X, the projection of the random variable X on the line spanned by vector a. The corresponding representation for the distance-Gini index is obtained by substituting \( \widetilde{F}(\cdot, a) \) for F(·, a), where \( \widetilde{F}(\cdot, a) \) denotes the distribution function of a·X/μ. Similar to the second representation of the Gini mean difference in Eq. 1, the second representation of \( M_D(F) \) in Eq. 21 can be interpreted as the total expected variance for whether or not a·X ≤ u occurs over the relative level u and the projection direction a.
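The projection representation of the Euclidean norm can be checked numerically in two dimensions. The sketch below assumes that Eq. 20 reads \( \|x\| = C_d \int_{S^{d-1}} |a \cdot x| \, d\upsilon(a) \) and that υ is the surface (arc-length) measure on the sphere rather than a probability measure, since that is the normalization consistent with the constant \( C_d \) stated above. The function names and the quadrature scheme are illustrative choices.

```python
import math
import numpy as np

def c_d(d):
    """The constant C_d = Gamma((d + 1) / 2) / (2 * pi ** ((d - 1) / 2))."""
    return math.gamma((d + 1) / 2) / (2.0 * math.pi ** ((d - 1) / 2))

def norm_via_projections_2d(x, n_angles=20000):
    """Approximate C_2 times the arc-length integral of |a . x| over the unit circle."""
    x = np.asarray(x, dtype=float)
    # Midpoint rule on equally spaced angles covering the whole circle.
    theta = 2.0 * np.pi * (np.arange(n_angles) + 0.5) / n_angles
    a = np.stack([np.cos(theta), np.sin(theta)], axis=1)
    integral = float(np.abs(a @ x).mean()) * 2.0 * np.pi
    return c_d(2) * integral

x = np.array([3.0, 4.0])
print(norm_via_projections_2d(x), float(np.linalg.norm(x)))  # close to each other
```

Under this reading, the distance-Gini mean difference becomes an average of univariate Gini mean differences of the projections a·X over all directions a.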

Koshevoy and Mosler [12] mentioned that the distance-Gini index is decomposable by subgroup in a similar manner to the two-term decomposition 14. However, such a decomposition has the same disadvantages as Eq. 14.

An extension of Eq. 5 to multivariate settings allows the (new) subgroup decomposition for the distance-Gini index. Let G(y) represent another d-variate distribution function. Baringhaus and Franz [2, Theorem 2.1] proved the following inequality, where the equality holds if and only if F = G:

Using Eqs. 4, 5 and 20, \( cv_D(G, F) \) can be represented as follows:

Assume the population consists of groups 1,2,...,n. Let \( F_i(y) \), \( \mu_i \), and \( p_i \) represent the d-variate distribution function, the expectation vector and the share of group i in the overall population, respectively. Let \( \widetilde{F}_i(y) \) and \( \breve{F}_i(y) \) be the distribution functions of \( Y/\mu_i \) and Y/μ within group i, respectively. Then, the distance-Gini mean difference \( M_D(F) \) and the distance-Gini index \( R_D(F) \) can be decomposed as follows:

where \( r_D(F_i) = 0 \) if \( R_D(F_i) = 0 \), or \( M_D(\breve{F}_i) / M_D(\widetilde{F}_i) \) otherwise. The proof is given in the Appendix. \( r_D(F_i) \) in Eq. 25 corresponds to the average relative level of group i. If \( \mu_i = \mu \), then \( r_D(F_i) = 1 \). However, if \( \mu_i \ne \mu \), \( r_D(F_i) \) depends on the distribution \( F_i \), unlike the univariate case. The second term on the right-hand side of Eq. 25 corresponds to the between-group inequality. It is null if and only if \( F_i = F \) for every group. That is, subgroup decomposition 25 satisfies the CID condition.
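The inequality of Baringhaus and Franz that underlies the CID property can be illustrated on small samples. The sketch below assumes that the inequality has the familiar form \( E\|X - Y\| - \tfrac{1}{2} E\|X - X'\| - \tfrac{1}{2} E\|Y - Y'\| \ge 0 \), with X, X' drawn from F and Y, Y' from G; the normalization used for \( cv_D \) in the paper may differ from this quantity by a constant factor, and the function names and sample points are hypothetical.

```python
import numpy as np

def mean_pairwise_distance(a, b):
    """Average Euclidean distance over all pairs (row of a, row of b)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    diff = a[:, None, :] - b[None, :, :]
    return float(np.linalg.norm(diff, axis=2).mean())

def cramer_gap(x, y):
    """E|X - Y| - E|X - X'| / 2 - E|Y - Y'| / 2 for two empirical distributions."""
    return (mean_pairwise_distance(x, y)
            - 0.5 * mean_pairwise_distance(x, x)
            - 0.5 * mean_pairwise_distance(y, y))

x = [[1.0, 1.0], [2.0, 3.0], [4.0, 2.0]]
y = [[1.0, 2.0], [3.0, 3.0], [5.0, 6.0]]
print(cramer_gap(x, y))  # positive, since the two samples differ
print(cramer_gap(x, x))  # zero for identical samples
```

Because the gap vanishes only when the two distributions coincide, a between-group term built from it cannot be zero merely because the group mean vectors agree.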

The distance-Gini mean difference and the distance-Gini index also have decompositions that correspond to Eqs. 7 and 8, respectively. The decomposition 8 is extended as follows:

Decomposition 26 can be proved in a similar manner to the proof of Eq. 25. \( cv_D(F_i, F) \) satisfies Eq. 27, which corresponds to Eq. 10.

3.2 Subgroup decomposition of the modified volume-Gini index

3.2.1 Modified Torgersen index

Several types of multivariate Gini index proposed in the past can be defined based on the generalized Lorenz domain. For the introduction of such indices, let \( \gamma = \{\gamma_i\} \) be a non-negative d(≥2)-dimensional constant vector, and define the quantity \( M_T(F|\gamma) \) as follows:

Thus, a type of multivariate Gini index \( R_T(F|\gamma) \) is defined as \( M_T(\widetilde{F}|\gamma) \). \( R_T(F|\gamma) \) ranges from zero to unity, as proved in the Appendix. Since \( R_T(F|\gamma) \) equals the multivariate Gini index of Torgersen [21] if γ = 0, \( R_T(F|\gamma) \) is called the modified Torgersen index. \( M_T(F|\gamma) \), which corresponds to the Gini mean difference, is hereafter called the modified Torgersen mean difference or mean volume. As explained later, \( R_T(F|1) \), where 1={1,...,1}, is preferable to the original Torgersen index \( R_T(F|0) \). Similarly, \( M_T(F|\mu) \) is preferable to \( M_T(F|0) \). Oja [14] proposed a different generalization, as follows:

\( R_O(F) = M_O(\widetilde{F}) \). If d = 1, \( M_O(F) \) is equivalent to the univariate Gini mean difference, and \( R_O(F) \) is equivalent to the ordinary Gini index. \( M_O(F) \) was introduced as a variation of the generalized variance of Wilks [23], which can be presented as follows:

The third representation (rightmost side) in Eq. 30 expresses the notion that the generalized variance equals the determinant of the variance–covariance matrix of distribution F. \( M_O(F) \) and \( M_T(F|\mu) \) can be regarded as counterparts of the first and second representations of the generalized variance, respectively.

3.2.2 Multidimensional Lorenz domain

The modified Torgersen index and the Oja index can be defined based on the volume of the Lorenz zonoids, which is a generalization of the Lorenz domain, introduced by Koshevoy and Mosler [10]. The relation between the indices and the Lorenz zonoids was studied by Koshevoy and Mosler [11]. This relation is utilized to derive the subgroup decomposition of the indices in this paper. For a measurable function \( \phi : R^d_+ \to [0,1] \), consider a d-dimensional vector z(φ,F|γ), where

The following set \( Z_T(F|\gamma) \), consisting of all z(φ,F|γ), is called the γ-zonoid of the distribution F.

\( Z_T(\widetilde{F}|\gamma) \), the γ-zonoid of \( \widetilde{F} \), which is the distribution function of Y/μ, is called the γ-Lorenz zonoid of F. \( Z_O(F) \), the lift zonoid of F, is defined in the (d+1)-dimensional space \( [0,1] \times R^d_+ \) as follows:

\( Z_O(\widetilde{F}) \) is called the Lorenz zonoid of F. The γ-zonoids and lift zonoids belong to the family of convex bodies, i.e. nonempty, compact and convex subsets of \( R^d \) (regardless of whether they contain interior points or not). \( Z_O(F) \) and \( Z_O(\widetilde{F}) \) are projected onto \( Z_T(F|\gamma) \) and \( Z_T(\widetilde{F}|\gamma) \), respectively, by the following linear transformation from \( [0,1] \times R^d_+ \) onto \( R^d_+ \):

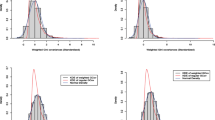

In the univariate case (d = 1), the Lorenz zonoid has the shape shown in Fig. 1. The boundary of the univariate Lorenz zonoid consists of the Lorenz curve and the inverse Lorenz curve, which is equivalent to the Lorenz curve if rotated on the center point (1/2, 1/2) at an angle of 180°.

The volumes of \( Z_T(F|\gamma) \) and \( Z_T(\widetilde{F}|\gamma) \) multiplied by the reciprocal of \( 1 + \sum_i \gamma_i \) equal the modified Torgersen mean difference \( M_T(F|\gamma) \) and the modified Torgersen index \( R_T(F|\gamma) \), respectively. Similarly, the volumes of \( Z_O(F) \) and \( Z_O(\widetilde{F}) \) equal the Oja mean difference \( M_O(F) \) and the Oja index \( R_O(F) \), respectively. The relation between the Lorenz zonoid and the Oja index was proved by Koshevoy and Mosler [11, Theorem 5.1]. The relation between the γ-Lorenz zonoid and the modified Torgersen index can also be proved along the same lines. In the case of finite-point distributions, it is essentially the relation between the Minkowski sum of line segments and its volume (e.g. [20]). Koshevoy and Mosler generalized this using the existence of a sequence of finite-point distributions, which converges weakly to any distribution.
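In the univariate case the volume relation can be checked directly. The following sketch builds the Lorenz zonoid of an empirical distribution as the polygon bounded by the Lorenz curve and the inverse Lorenz curve, computes its area with the shoelace formula, and compares the result with the ordinary Gini index. It is an illustration for finite samples under equal weights; the function names are hypothetical.

```python
import numpy as np

def gini_index(y):
    """Ordinary Gini index: mean absolute difference divided by twice the mean."""
    y = np.asarray(y, dtype=float)
    return float(np.abs(y[:, None] - y[None, :]).mean() / (2.0 * y.mean()))

def lorenz_zonoid_area(y):
    """Area of the region between the Lorenz curve and the inverse Lorenz curve."""
    y = np.sort(np.asarray(y, dtype=float))
    n = y.size
    pop = np.arange(n + 1) / n
    lower = np.concatenate([[0.0], np.cumsum(y)]) / y.sum()        # Lorenz curve
    upper = np.concatenate([[0.0], np.cumsum(y[::-1])]) / y.sum()  # inverse Lorenz curve
    # Polygon: along the lower boundary from (0, 0) to (1, 1), then back along the upper one.
    xs = np.concatenate([pop, pop[::-1][1:-1]])
    ys = np.concatenate([lower, upper[::-1][1:-1]])
    # Shoelace formula for the area of a simple polygon.
    return 0.5 * abs(float(np.dot(xs, np.roll(ys, -1)) - np.dot(ys, np.roll(xs, -1))))

sample = [1.0, 2.0, 4.0]
print(gini_index(sample), lorenz_zonoid_area(sample))  # the two values agree up to rounding
```

Each observation contributes one line segment to the boundary, which is the finite-point picture of the zonoid as a Minkowski sum of segments.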

3.2.3 Subgroup decomposition of the modified Torgersen index

To introduce the subgroup decomposition of the modified Torgersen index, we define the mixed volume of Z T(F|γ) and Z T(G|γ) with d−1 repetitions of Z T(F|γ) as follows:

Definition 35 is equivalent to the following ordinary definition (e.g. [8]):

where \( Z_{{\text{T}}} {\left( {F\left| \gamma \right.} \right)} + \varepsilon Z_{{\text{T}}} {\left( {G\left| \gamma \right.} \right)} = {\left\{ {x + \varepsilon y{\text{ }}\left| {x \in {\text{ }}Z_{{\text{T}}} {\left( {F\left| \gamma \right.} \right)}} \right.,{\text{ }}y \in {\text{ }}Z_{{\text{T}}} {\left( {G\left| \gamma \right.} \right)}} \right\}} \) is the Minkowski sum of Z T(F|γ) and ɛZ T(G|γ), and vol(•) denotes the volume of the γ-zonoid. Note that \( {\text{vol}}{\left( {Z_{T} {\left( {F\left| \gamma \right.} \right)}} \right)}{\text{ }} = {\left( {1 + \Sigma \gamma _{i} } \right)}M_{T} {\left( {F\left| \gamma \right.} \right)} \). According to Minkowski’s first inequality concerning the mixed volume (e.g. [8]), the following inequality is true if M T(F|γ) > 0.

The equality holds if and only if Z T(F|γ) and Z T(G|γ) are homothetic – i.e. \( Z_{T} {\left( {F\left| \gamma \right.} \right)}{\text{ }} = {\text{ }}\alpha Z_{T} {\left( {G\left| \gamma \right.} \right)} \), where α is a positive constant.
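For planar zonotopes (finite Minkowski sums of line segments), the mixed volume and Minkowski's first inequality can be illustrated directly. The sketch below is not the paper's Definition 35: it assumes the usual convex-geometry normalization, in which area(K + εL) = area(K) + 2εV(K, L) + ε² area(L), and that normalization may differ from the paper's by a constant. The generators are hypothetical.

```python
def det2(a, b):
    """Determinant of the 2 x 2 matrix with columns a and b."""
    return a[0] * b[1] - a[1] * b[0]


def zonotope_area(generators):
    """Area of the Minkowski sum of the segments [0, g] in the plane."""
    return sum(abs(det2(generators[i], generators[j]))
               for i in range(len(generators))
               for j in range(i + 1, len(generators)))


def mixed_area(gens_k, gens_l):
    """Mixed area V(K, L) of two planar zonotopes (standard normalization)."""
    return 0.5 * sum(abs(det2(a, b)) for a in gens_k for b in gens_l)


K = [(1.0, 0.0), (0.0, 1.0)]        # unit square
L_homothetic = [(2.0, 0.0), (0.0, 2.0)]  # K scaled by 2
L_other = [(1.0, 0.0), (1.0, 1.0)]       # parallelogram, not homothetic to K

for name, L in [("homothetic", L_homothetic), ("other", L_other)]:
    v = mixed_area(K, L)
    # Minkowski's first inequality in the plane: V(K, L)^2 >= area(K) * area(L)
    print(name, v ** 2, zonotope_area(K) * zonotope_area(L))
```

In this sketch the inequality is an equality for the scaled square and strict for the parallelogram, in line with the homothety condition stated above.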

Assume that the population consists of groups 1, 2, ..., n. Let F i (y), μ i , and p i represent the d-variate distribution function, the expectation vector and the share of group i in the overall population, respectively. Let \( \breve{F}_{i} {\left( y \right)} \) be the distribution function of Y/μ within group i. Then inequality 37 allows the following decompositions by subgroup:

where \( r_{\text{T}} \left( F_{i} \mid \gamma \right) = 0 \) if \( R_{\text{T}} \left( F \mid \gamma \right) = 0 \), or \( M_{\text{T}} \left( \breve{F}_{i} \mid \gamma \right)^{1/d} / R_{\text{T}} \left( F_{i} \mid \gamma \right)^{1/d} \) otherwise, and cvT(G,F|γ) = 0 if M T(F|γ)=0. Note the following equality for the derivation of decompositions 38:

The corresponding equality for the derivation of Eq. 39 is obtained by substituting \( \widetilde{F} \) and \( \breve{F}_{i} \) for F and F i , respectively. The second term in decomposition 39 corresponds to the between-group inequality. According to Minkowski’s first inequality concerning the mixed volume, the second terms on the right-hand side of Eqs. 38 and 39 vanish if and only if Z T(F i |γ) is homothetic to Z T(F|γ) for any group. This is true if M T(F|γ) > 0 – i.e. Z T(F|γ) has interior points. If M T(F|γ) = 0 – i.e. Z T(F|γ) is on some hyperplane, the Brunn-Minkowski inequality (e.g. [8]) asserts that Z T(F i |γ) is on the same hyperplane as Z T(F|γ); however, Z T(F i |γ) does not need to be homothetic to Z T(F|γ) in this case.

On the assumption that R T(F|γ) > 0, if the between-group inequality is null, the mean within each group μ i equals \( \alpha _{i} \mu + {\left( {1 - \alpha _{i} } \right)}\gamma \mu \) with some homothetic ratio α i > 0. Thus, if γ = 1, μ i equals μ if the between-group inequality is null, while μ i equals α i μ if γ = 0. On this basis, R T(F|1) is preferable to the original Torgersen index R T(F|0), whereas r T(F i |γ) can be expressed in the simpler form \( (\Pi \mu_{i}/\mu)^{1/d} \) in the latter case.

It may be reasonable to assert that \( R_{\text{T}}(F|\gamma)^{1/d} \) should be used as a multivariate inequality index instead of R T(F|γ), taking the decomposability into consideration. This question is left open in this paper, since the subsequent discussions do not require any specific decision on this, although \( R_{\text{T}}(F|\gamma)^{1/d} \) is used for the definition of the modified volume-Gini index.

cvT(F i ,F|γ) can be regarded as the contribution of each group to the between-group mean difference relative to its population share. It has the following representation, which corresponds to Eq. 10 for the univariate Gini mean difference and Eq. 27 for the distance-Gini mean difference:

The proof is given in the Appendix. The representation 41 expresses the notion that cvT(F i ,F|γ) equals the surplus of the dispersion relative to the merger ratio when a merger with an infinitely small ratio of group i takes place.

3.2.4 Modified volume-Gini index and its subgroup decomposition

The Oja and the modified Torgersen indices vanish not only when the distribution is egalitarian – i.e. for a one-point distribution – but also when the distribution is on a hyperplane. For example, in the extreme case in which only one population unit monopolizes all income and property, these indices measure zero inequality. To avoid this drawback, Koshevoy and Mosler [11] proposed the volume-Gini mean difference, as follows:

where \( F^{{j_{1} \cdots j_{s} }} \) is the marginal distribution in the space of sub-coordinate axes \( {\left\{ {\,j_{1} , \cdots ,j_{s} } \right\}} \). The volume-Gini index R KM(F) is defined as \( M_{{KM}} {\left( {\widetilde{F}} \right)} \), namely, the average of the Oja sub-indices for the distribution F and all its marginal distributions in the spaces of the sub-coordinate axes. Since the Oja sub-index for any univariate marginal distribution (identical to the ordinary Gini index) vanishes if R KM(F) equals zero, R KM(F) vanishes if and only if the distribution is egalitarian. Thus, the drawback is overcome. However, further modification seems to be desirable, considering that the Oja sub-indices for marginal distributions vary in homothetic degree with respect to the following enlargement with dilation factor λ (> 0) and center at the mean μ:

Note that the d-variate Oja index is of homothetic degree d – i.e. \( R_{{\text{O}}} {\left( {T_{{\lambda ,\mu }} {\left( F \right)}} \right)} = \lambda ^{d} R_{{\text{O}}} {\left( F \right)} \). For this reason, the greater the dilation, the higher is the relative contribution of higher sub-dimensional marginal distributions, although the shape of the distribution and the mean remain the same. Furthermore, the decomposability of the volume-Gini index is also questionable. Koshevoy and Mosler [12] showed a type of generalization of the two-term decomposition 14. However, their decomposition has a very complex form, in addition to the same disadvantages as Eq. 14.
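The homothetic degree can be illustrated on a finite sample. The following is a minimal sketch under two assumptions that are not spelled out in this section: the enlargement is read as y ↦ μ + λ(y − μ), and the Oja index of an equally weighted n-point sample is computed as the volume of its Lorenz zonoid using the determinant formula for a Minkowski sum of line segments (e.g. [20]). All function names and the sample are hypothetical.

```python
from itertools import combinations

import numpy as np


def lorenz_zonoid_volume(points):
    """Volume of the Lorenz zonoid of an equally weighted n-point sample in R^d_+."""
    points = np.asarray(points, dtype=float)
    n, d = points.shape
    relative = points / points.mean(axis=0)                  # Y / mu
    generators = np.hstack([np.ones((n, 1)), relative]) / n  # segments [0, (1, y_i/mu) / n]
    # Sum of |det| over all choices of d + 1 generators.
    return sum(abs(np.linalg.det(generators[list(idx)]))
               for idx in combinations(range(n), d + 1))


def dilate_about_mean(points, lam):
    """Assumed form of the enlargement: y -> mu + lam * (y - mu)."""
    points = np.asarray(points, dtype=float)
    mu = points.mean(axis=0)
    return mu + lam * (points - mu)


rng = np.random.default_rng(0)
sample = rng.uniform(1.0, 5.0, size=(7, 2))  # hypothetical bivariate sample
lam = 0.5
dilated = dilate_about_mean(sample, lam)

ratio_bivariate = lorenz_zonoid_volume(dilated) / lorenz_zonoid_volume(sample)
ratio_marginal = lorenz_zonoid_volume(dilated[:, :1]) / lorenz_zonoid_volume(sample[:, :1])
print(ratio_bivariate, lam ** 2)  # bivariate sub-index scales with lam squared
print(ratio_marginal, lam)        # univariate sub-index scales with lam
```

Because the bivariate and univariate sub-indices scale by different powers of λ, their relative weights in an unweighted average shift with the dilation, which is the concern raised above.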

Thus, the volume-Gini mean difference should be modified by replacing the Oja mean sub-volumes, except for the univariate cases, with the modified Torgersen mean sub-volumes, power-transformed by the reciprocal of the dimensions, as follows:

Strictly speaking, \( M_{T} {\left( {F^{{j_{1} \cdots j_{s} }} \left| \gamma \right.} \right)}^{{1/s}} \) in Eq. 44 should be denoted as \( M_{T} {\left( {F^{{j_{1} \cdots j_{s} }} \left| {\gamma ^{{j_{1} \cdots j_{s} }} } \right.} \right)}^{{1/s}} \), where \( \gamma ^{{j_{1} \cdots j_{s} }} = {\left\{ {\gamma _{{j_{1} }} , \cdots ,\gamma _{{j_{s} }} } \right\}} \); however, the above notation is used for simplicity. The modified volume-Gini index R V(F|γ) is defined as \( M_{V} {\left( {\widetilde{F}\left| \gamma \right.} \right)} \). R V(F|γ) as well as the original volume-Gini index R KM(F) equals zero if and only if F is egalitarian. In addition, if γ = 1, R V(F) is of homothetic degree one with respect to the enlargement T λ,μ , and the relative contribution of any sub-index is invariant to T λ,μ . Furthermore, M V(F|γ) and R V(F|γ) are decomposable into subgroups, as shown below. If only the homotheticity is considered, the Oja sub-indices need not be replaced and the power transformations by reciprocal dimensions are sufficient. However, the decomposability is an open question in this case.

Assume M T(F|γ)>0. Then, according to inequalities 4 and 37, the following inequality is true:

The equality holds if and only if G s = F s for any coordinate axis and Z T(F|γ) = Z T(G|γ). Proof of the condition for the equality is given in the Appendix.

Assume that the population consists of groups 1, 2, ..., n. Let F i (y), μ i , and p i represent the d-variate distribution function, the expectation vector and the share of group i in the overall population, respectively. Let \( \breve{F}_{i} {\left( y \right)} \) be the distribution function of Y/μ within group i. Then inequality 45 allows the following subgroup decomposition of the modified volume-Gini mean difference and the modified volume-Gini index:

and

where r V(F i |γ)=0 if R V(F|γ)=0, or \( M_{V} \left( \breve{F}_{i} \mid \gamma \right) / R_{V} \left( F_{i} \mid \gamma \right) \) otherwise. The second term on the right-hand side of Eq. 47, which corresponds to the between-group inequality, equals zero if and only if \( F^{s}_{i} = F^{s} \) for any group and any coordinate axis, and Z T(F i |γ)=Z T(F|γ) for any group. The proof is given in the Appendix. Thus, M V(F i |γ)=M V(F|γ), and R V(F i |γ)=R V(F|γ) for any group if the between-group inequality is null. Unfortunately, equality of the γ-zonoids plus equality of the univariate marginal distributions is not equivalent to equality of the multivariate distributions. This is dissimilar to the equality of the lift zonoids [11]. A counterexample is given below.

Example

Let F 1 be a bivariate distribution evenly distributed at the six points {1,1}, {4,4}, {0,2}, {3,2}, {2,0} and {2,3}, and let F 2 be another bivariate distribution evenly distributed at the six points {0,0}, {3,3}, {1,2}, {4,2}, {2,1} and {2,4}. Let λ and 1-λ be the population share of F 1 and F 2, respectively – i.e. \( F = \lambda F_{1} + {\left( {1 - \lambda } \right)}F_{2} \). Then, F 1 and F 2 have the identical marginal distributions to F. Their means equal 2={2,2}. Their γ-zonoids Z T(F 1|2) and Z T(F 2|2) are also identical to Z T(F|2) (Fig. 2).
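Parts of this example can be checked numerically. The sketch below verifies that the two six-point distributions differ, share their univariate marginals and means, and are point reflections of each other about {2,2}. As an assumption (the zonoid definition, Eq. 32, is not reproduced in this section), it then treats the zonoid in the example as the zonotope generated by the deviations from {2,2} with weight 1/6 each, and compares the two zonotopes through their support functions.

```python
import numpy as np

F1 = np.array([[1, 1], [4, 4], [0, 2], [3, 2], [2, 0], [2, 3]], dtype=float)
F2 = np.array([[0, 0], [3, 3], [1, 2], [4, 2], [2, 1], [2, 4]], dtype=float)
centre = np.array([2.0, 2.0])


def same_marginals(a, b):
    """True if every coordinate has the same sorted values in both samples."""
    return all(np.array_equal(np.sort(a[:, j]), np.sort(b[:, j]))
               for j in range(a.shape[1]))


def support_function(generators, directions):
    """Support function of the zonotope sum_i [0, g_i], evaluated in each direction."""
    return np.maximum(generators @ directions.T, 0.0).sum(axis=0)


points_1 = {tuple(p) for p in F1}
points_2 = {tuple(p) for p in F2}
reflected_1 = {tuple(p) for p in 2 * centre - F1}

angles = np.linspace(0.0, 2.0 * np.pi, 360, endpoint=False)
directions = np.column_stack([np.cos(angles), np.sin(angles)])
h1 = support_function((F1 - centre) / len(F1), directions)
h2 = support_function((F2 - centre) / len(F2), directions)

print("distributions differ:", points_1 != points_2)
print("same marginals:", same_marginals(F1, F2))
print("same means:", np.allclose(F1.mean(axis=0), F2.mean(axis=0)))
print("F2 is the reflection of F1 about the centre:", reflected_1 == points_2)
print("support functions agree:", np.allclose(h1, h2))
```

The agreement of the support functions follows from the reflection: the deviations sum to zero, so the zonotope they generate is centrally symmetric about the origin and is unchanged when every generator changes sign.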

A pair of bivariate distributions evenly distributed within triangles with vertices at {0,2}, {2,0}, {4,4} and {0,0}, {4,2}, {2,4}, respectively, is also a counterexample, yet decomposition 47 can be considered to nearly satisfy the CID condition, since the condition of the null between-group inequality ensures some equivalence among within-group distributions in terms of dilation ordering defined by the γ-zonoid 32 in addition to the equivalence of the univariate marginal distributions. At least, the mean and dispersion of any group measured by the modified volume-Gini index must agree with each other if the between-group inequality vanishes.

Before closing this subsection, the representation of cvV(F i ,F|γ), which corresponds to that of cvT(F i ,F|γ) in Eq. 41, is given below:

Similar to Eq. 41, representation 48 expresses the notion that cvV(F i ,F|γ) equals the surplus of the dispersion relative to the merger ratio for a merger with an infinitely small ratio of group i.

3.3 Source decomposition of the multivariate Gini indices

In this subsection, source decomposition of the distance-Gini index and the modified volume-Gini index is introduced.

3.3.1 Source decomposition of the distance-Gini index

Assume that attribute i consists of contributions from m i types of sources (i = 1,...,d). Let \( x^{{{\left( {k_{i} } \right)}}}_{i} \) and \( y^{{{\left( {k_{i} } \right)}}}_{i} \) be the expected contributions from source k i to attribute i for the conditions x={x i } and y={y i }, respectively, and \( \mu ^{{{\left( {k_{i} } \right)}}}_{i} \) be the unconditional mean of the contribution. Taking it into consideration that the distance-Gini mean difference is proportional to the average of the Gini mean differences for univariate marginal distributions on lines in all directions (see Eq. 21), it is intuitive to define the contribution of each source as the average of the quasi-Gini mean differences for univariate marginal distributions on lines in all directions with the same multiplier as Eq. 21, as follows:

where υ is the uniform distribution on the unit sphere \( S^{{d - 1}} = {\left\{ {a \in R^{d} \left| {{\left\| a \right\|}} \right. = 1} \right\}} \), and sgn(x)=1 if x ≥ 0, or −1 otherwise. \( M^{{{\left( {k_{i} } \right)}}}_{D} {\left( F \right)} \), the contribution of each source to M D(F), should be called the quasi–distance-Gini mean difference. The corresponding decomposition for the distance-Gini index is obtained by substituting \( \widetilde{F} \) for F. Taking the definition of the univariate quasi-Gini index into consideration, the quasi-distance-Gini index \( R^{{{\left( {k_{i} } \right)}}}_{D} {\left( F \right)} \) should be defined as the contribution of each source to R D(F) relative to the amount share, as follows: \( M^{{{\left( {k_{i} } \right)}}}_{D} {\left( {\widetilde{F}} \right)} = \frac{{\mu ^{{{\left( {k_{i} } \right)}}}_{i} }} {{\mu _{i} }}R^{{{\left( {k_{i} } \right)}}}_{D} {\left( F \right)} \). Since the following equality is true (the proof is given in the Appendix),

the quasi-distance-Gini mean difference can be expressed as follows:

Note that the integrand in Eq. 51 is assumed to be zero if x = y.

The quasi-distance-Gini mean difference of each source is also derived in the following manner. Assume that the contributions from source k i increase by an infinitely small rate ɛ uniformly – i.e. \( x^{{{\left( {k_{i} } \right)}}}_{i} \) increases to \( {\left( {1 + \varepsilon } \right)}x^{{{\left( {k_{i} } \right)}}}_{i} \) on any population unit; then the increase in the distance-Gini mean difference relative to rate ɛ equals \( M^{{{\left( {k_{i} } \right)}}}_{D} {\left( F \right)} \). This derivation is also applicable to the univariate Gini source decomposition of Rao [16].
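This derivative interpretation can be checked on simulated data. The following is a minimal sketch, not the paper's Eq. 51: it assumes that the mean difference is the plain mean Euclidean distance between two independently drawn units (any constant multiplier is omitted) and that the contribution of a source is the mean of (x_i − y_i)(x_i^(k) − y_i^(k))/‖x − y‖, set to zero when x = y. The data and names are hypothetical.

```python
import numpy as np


def mean_distance(x):
    """Mean Euclidean distance between two independently drawn units."""
    diff = x[:, None, :] - x[None, :, :]
    return np.linalg.norm(diff, axis=2).mean()


def source_contribution(sources, attr, src):
    """Assumed contribution of source `src` of attribute `attr` to the mean distance."""
    x = sources.sum(axis=2)                     # attribute totals per unit
    diff = x[:, None, :] - x[None, :, :]
    dist = np.linalg.norm(diff, axis=2)
    s = sources[:, attr, src]
    s_diff = s[:, None] - s[None, :]
    safe = np.where(dist > 0, dist, 1.0)        # avoid division by zero when x = y
    integrand = np.where(dist > 0, diff[:, :, attr] * s_diff / safe, 0.0)
    return integrand.mean()


rng = np.random.default_rng(0)
# Hypothetical data: 50 units, 2 attributes, 2 sources per attribute.
sources = rng.uniform(0.5, 2.0, size=(50, 2, 2))
base = mean_distance(sources.sum(axis=2))

total = sum(source_contribution(sources, i, k) for i in range(2) for k in range(2))

# Finite-difference check: raise source 1 of attribute 0 uniformly by a small rate eps.
eps = 1e-6
scaled = sources.copy()
scaled[:, 0, 1] *= 1.0 + eps
finite_difference = (mean_distance(scaled.sum(axis=2)) - base) / eps

print(total, base)
print(finite_difference, source_contribution(sources, 0, 1))
```

Under these assumptions the contributions add up to the mean distance, and the finite-difference rate of increase matches the contribution of the scaled source up to discretization error.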

3.3.2 Source decomposition of the modified volume-Gini index

The modified volume-Gini mean difference and the modified volume-Gini index seemingly do not have such intuitive derivation of source decomposition as the distance-Gini mean difference and the distance-Gini index. However, if adhering to the reasoning that the contribution of each source to the mean difference is derived from differentiation by rate of a uniform increase in amount for each source as mentioned in section 3.3.1, then a type of source decomposition is obtained for the modified volume-Gini mean difference and the modified volume-Gini index if \( M_{T} {\left( {F^{{i_{1} \cdots i_{s} }} \left| \gamma \right.} \right)} \) > 0 for any marginal distribution in space of any sub-coordinate axes. Due to space limitations, only the result of the derivation for the modified volume-Gini index is presented here.

For introduction of the source decomposition, the following s × s matrices are first defined:

where \( y^{{(k_{{i1}} \cdots k_{{is}} )}}_{ \bullet } = {\left\{ {y^{{(k_{{il}} )}}_{{ \bullet i_{l} }} } \right\}}_{{l = 1, \cdots ,s}} \), and \( \boldsymbol{\mu} ^{{(k_{{i1}} \cdots k_{{is}} )}} = {\left\{ {\mu ^{{(k_{{il}} )}}_{{i_{l} }} } \right\}}_{{l = 1, \cdots ,s}} \). Note that abbreviated notations are used in (52) for simplicity. \( {\left[ {\mathbf{y}_{1} - \boldsymbol{\gamma} , \cdots ,\mathbf{y}_{s} - \boldsymbol{\gamma} } \right]} \) is an abbreviation of \( {\left[ {\mathbf{y}_{1} - \boldsymbol{\gamma} ^{{i_{1} \cdots i_{s} }} , \cdots ,\mathbf{y}_{s} - \boldsymbol{\gamma} ^{{i_{1} \cdots i_{s} }} } \right]} \), for instance. In the t-th row of \( N^{{{\left( {k_{{i1}} \cdots k_{{is}} } \right)}}} {\left( {\mathbf{y}_{1} , \cdots ,\mathbf{y}_{s} \left| {i_{1} \cdots i_{s} } \right.} \right)} \), each element is equal to the conditional expected contribution of source k it to attribute i t relative to the unconditional expected contribution \( \mu ^{{{\left( {k_{{it}} } \right)}}}_{{i_{t} }} \) minus γ it .

By performing some manipulation after the above-mentioned derivation, the source decomposition of the modified volume-Gini index can be expressed as follows:

where \( R^{{{\left( {k_{i} } \right)}}} {\left( {F^{i} } \right)} = {\int {{\int {\operatorname{sgn} {\left( {x_{i} - y_{i} } \right)}{\left( {\frac{{x^{{{\left( {k_{i} } \right)}}}_{i} }} {{\mu ^{{{\left( {k_{i} } \right)}}}_{i} }} - \frac{{y^{{{\left( {k_{i} } \right)}}}_{i} }} {{\mu ^{{{\left( {k_{i} } \right)}}}_{i} }}} \right)}{\text{d}}F^{i} {\left( {x_{i} } \right)}{\text{d}}F^{i} {\left( {y_{i} } \right)}} }} } \) and \(R_T^{\left( {k_{i1} \cdots k_{is} } \right)} \left( {F^{i_1 \cdots i_s } \left| \gamma \right.} \right) = \frac{1}{{s!\left( {1 + \sum\limits_{l = 1}^s {\gamma _{i_l } } } \right)}}\int { \cdots \int {\operatorname{sgn} \left( {\det \left( {M\left( {y_1 , \cdots ,y_s \left| {i_1 \cdots i_s } \right.} \right)} \right)} \right)\det \left( {N^{\left( {k_{i1} \cdots k_{is} } \right)} \left( {y_1 , \cdots ,y_s \left| {i_1 \cdots i_s } \right.} \right)} \right){\text{d}}F\left( {y_1 } \right) \cdots {\text{d}}F\left( {y_s } \right)} .} \) \( R^{{{\left( {k_{i} } \right)}}} \) (F i) in the first term is the quasi-Gini index of the univariate marginal distribution in subspace of attribute i. The second term can be regarded as an interaction term among sources of different attributes, which makes a notable difference with the decomposition of the distance-Gini index.

3.4 Application to Japanese family budget data

Several types of multivariate Gini indices are estimated for annual income and consumption of Japanese households with two or more members using tabulated data from the National Survey of Family Income and Expenditures. Three-way tables of consumption class by income class by age class of household head are available for the household distribution. However, owing to the unavailability of the three-way table for average income and consumption, average income in two-way tables of income class by age class of household head is used for the estimation instead, irrespective of consumption class, and the intermediate value between the lower and upper limits of each consumption class is used as average consumption, irrespective of income class and age class of the household head. The estimates after adjustment by excluding the age effects are presented in Table 5. These estimates should be treated carefully because of the above-mentioned approximation; nevertheless, it is notable that the multivariate Gini indices for 2004 relative to 1989 are higher than the Gini indices of both univariate marginal distributions for income and consumption. For example, the distance-Gini index and the modified volume-Gini index for 2004 relative to 1989 are 98.4 and 98.6, respectively, while the Gini indices for annual income and consumption are 98.3 and 98.1, respectively. This indicates that consumption tends to vary more widely than before within the same income class, although the consumption distribution as a whole is no more dispersed than before.

It is also notable that the modified volume-Gini index is relatively close to the distance-Gini index in comparison with the Oja index, the modified Torgersen index or their 1/2-th power transformations.

The contributions of age groups for household heads to changes in these indices are also estimated using the subgroup decomposition technique (Table 6). The multivariate Gini indices show similar tendencies to the univariate Gini indices, although the magnitudes of the contributions vary somewhat.

4 Concluding remarks

In this paper, a new type of subgroup decomposition for the Gini index is proposed. The new decomposition is consistent with multilevel sub-groupings (see footnote 3), and is characterized by the CID condition – i.e. the between-group inequality vanishes if and only if distributions within groups are identical to all the others.

The new decomposition is then generalized to two types of multivariate Gini indices introduced by Koshevoy and Mosler [11]. In the case of the distance-Gini index, the decomposition strictly satisfies the CID condition. For the volume-Gini index, the decomposition satisfies the condition not strictly but nearly, after the index definition is modified to be of homothetic degree one with respect to enlargement with the center at the mean.

Source decompositions of the two types of multivariate Gini indices are also introduced as a generalization of the Gini decomposition of Rao [16].

I hope this new decomposition will advance studies of economic inequality. The following remarks concerning the definition or concept of multivariate Gini indices may be helpful for further research.

Anderson [1] used the following multivariate Gini index, which is similar to the distance-Gini index:

where \( {\left\| {\frac{x} {\mu } - \frac{y} {\mu }} \right\|}_{W} = {\sqrt {{\sum\limits_{i = 1}^d {w_{i} {\left( {\frac{{x_{i} }} {{\mu _{i} }} - \frac{{y_{i} }} {{\mu _{i} }}} \right)}^{2} } }} } \), \( w_{i} > 0 \) and \( {\sum\limits_{i = 1}^d {w_{i} } } = d \).

The differences between Eq. 54 and the distance-Gini index highlight two issues. First, the Anderson index (Eq. 54) almost equals unity if the amounts for all attributes are monopolized by only one population unit and all other units have no contributions. The index exceeds unity if all the amounts for each attribute belong to only one population unit, but the monopolist for each attribute differs. The index almost equals \( \frac{1} {{{\sqrt d }}}{\sum\limits_{i = 1}^d {{\sqrt {w_{i} } }} }{\left( { > 1} \right)} \) in this case. The index can be limited to less than unity by substituting d for \( {\sqrt d } \) in Eq. 54 and \( w^{2}_{i} \) for w i in the definition of the between-unit distance. Note that the weights constraint \( {\sum\limits_{i = 1}^d {w_{i} } } = d \) need not be replaced. Such an index can be regarded as the weighted distance-Gini index. The subgroup and source decomposition for the distance-Gini index presented in this paper can easily be extended to it. However, the following naïve question still remains open:

Which situation should be judged to be higher in inequality: the one in which only one population unit makes all contributions to all attributes (the absolute monopolistic situation), or the one in which different monopolists exist for each attribute?

Since the Lorenz zonoid in the latter situation is wider than that in the former, the Oja index, the (modified) Torgersen index and the (modified) volume-Gini index are higher in the latter case. So is the distance-Gini index because of its consistency with the dilation ordering of the Lorenz zonoid [11]. This characteristic is seemingly not necessarily a disadvantage, at least in terms of the weighting problem described in the next paragraph. Nevertheless, several researchers have pursued multivariate inequality measures that are higher in the absolute monopolistic situation. Tsui [22] studied the multidimensional generalized entropy measures satisfying a condition of consistency with the correlation increasing majorization (CIM) as well as some other conditions. If a multidimensional inequality measure satisfies the CIM condition, the absolute monopolistic situation is judged to be higher in inequality. However, imposition of this condition is seemingly too restrictive (see also [7]). For example, Tsui’s multidimensional extension of the Theil measure is outside the restriction. The above question may rarely arise if wealthy population units contributing to one attribute tend to contribute to other attributes in practice. It thus seems likely that one of the approaches to determine the appropriateness of the CIM condition is to verify whether a set of attributes or the subject to be studied can be accounted for by uni- or multi-dimensional factors after excluding measurement errors. If multiple factors are identified, then the way to extract each factor with mutual relations should be explored for measurement of inequality. In usual cases, attributes seem to be determined by one major common factor and some additional factors peculiar to individual attributes.
In this context, it is notable that Easterlin [5, 6] pointed out that correlation between income and self-reported well-being is weak at least over the life cycle, implying that (subjective) well-being is affected by multiple factors.
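The two monopolistic situations compared above can be illustrated numerically for the Anderson-type index. Since Eq. 54 is not reproduced in this section, the sketch below assumes the form (1/(2√d)) times the mean weighted distance between the mean-normalized attribute vectors of two independently drawn units; this assumed form reproduces the limiting values quoted in the text. The modified variant divides by 2d and uses squared weights inside the distance. The function names, population size and weights are hypothetical.

```python
import numpy as np


def weighted_distance_index(x, weights, divisor, square_weights=False):
    """Assumed index: mean weighted distance of x/mu between two units, over 2 * divisor."""
    x = np.asarray(x, dtype=float)
    w = np.asarray(weights, dtype=float)
    if square_weights:
        w = w ** 2
    rel = x / x.mean(axis=0)
    diff = rel[:, None, :] - rel[None, :, :]
    dist = np.sqrt((w * diff ** 2).sum(axis=2))
    return dist.mean() / (2 * divisor)


def absolute_monopoly(n, d):
    """One unit holds the whole amount of every attribute."""
    x = np.zeros((n, d))
    x[0, :] = 1.0
    return x


def separate_monopolists(n, d):
    """Unit i holds the whole amount of attribute i (a different monopolist per attribute)."""
    x = np.zeros((n, d))
    x[np.arange(d), np.arange(d)] = 1.0
    return x


n_units, d = 400, 2
weights = [1.0, 1.0]  # satisfies the constraint sum(w) = d

anderson_abs = weighted_distance_index(absolute_monopoly(n_units, d), weights, np.sqrt(d))
anderson_sep = weighted_distance_index(separate_monopolists(n_units, d), weights, np.sqrt(d))
modified_abs = weighted_distance_index(absolute_monopoly(n_units, d), weights, d, True)
modified_sep = weighted_distance_index(separate_monopolists(n_units, d), weights, d, True)

print(anderson_abs, anderson_sep)  # close to 1 and to sqrt(2), respectively
print(modified_abs, modified_sep)  # both below 1
```

Under these assumptions, the unmodified index exceeds unity when the monopolists differ, while the modified index stays below unity in both situations and still ranks the situation with different monopolists higher.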

Another issue raised by the Anderson index is the weight assignment to attributes concerned. If economic inequality is measured in terms of income, consumption and education level achieved, it seems to be reasonable to assign smaller weights to income and consumption relative to educational attainment because of their similarity. However, as the multivariate Gini indices automatically have lower outcomes if attributes are correlated with each other – in other words, the indices run counter to the CIM condition – the weighting problem is not considered to be serious relative to multivariate inequality measures that satisfy the CIM condition. The way to determine appropriate weights is not a trivial matter.

Thus, further research is still needed for applications of the multivariate Gini indices or other multivariate inequality measures.

Notes

The definition of “consistency with hierarchical grouping systems” in this paper is entirely different from that of “subgroup consistency” defined by Shorrocks [18].

As noted above, estimates for the new decomposition are made from aggregates using the composite Simpson’s rule (with Eq. 9 for calculations of the between-group inequalities).

See footnote 1.

References

Anderson, G.: Indices and tests for multidimensional inequality: multivariate generalizations of the Gini coefficient and Kolmogorov–Smirnov two sample test. Paper prepared for the 28th general conference of the IARIW, Cork, Ireland, 22–28 Aug. 2004

Baringhaus, L., Franz, C.: On a new multivariate two-sample test. J. Multivar. Anal. 88, 190–206 (2004)

Bhattacharya, N., Mahalanobis, B.: Regional disparities in household consumption in India. J. Am. Stat. Assoc. 62, 143–161 (1967)

Dagum, C.: A new approach to the decomposition of the Gini income inequality ratio. Emp. Econ. 22, 515–531 (1997)

Easterlin, R.: Does economic growth improve the human lot? Some empirical evidence. In: David, P., Reder, M. (eds.) Nations and households in economic growth: essays in honour of Moses Abramovitz, pp. 89–125. Academic, New York (1974)

Easterlin, R.: Building a better theory of well-being. In: Bruni, L., Porta, P.L. (eds.) Economics and happiness: framing the analysis, pp. 29–64. Oxford University Press, New York (2006)

Gajdos, T., Weymark, J.A.: Multidimensional generalized Gini indices. Vanderbilt University Working Paper 3-W11R, Department of Economics, Vanderbilt University, Nashville, Tennessee (2003)

Gardner, R.J.: The Brunn–Minkowski inequality. Bull. Am. Math. Soc. 39, 355–405 (2002)

Helgason, S.: The Radon Transform. Birkhauser, Boston (1980)

Koshevoy, G.A., Mosler, K.: The Lorenz zonoid of a multivariate distribution. J. Am. Stat. Assoc. 91, 873–882 (1996)

Koshevoy, G.A., Mosler, K.: Multivariate Gini indices. J. Multivar. Anal. 60, 252–276 (1997)

Koshevoy, G.A., Mosler, K.: Multivariate Lorenz dominance based on zonoids. AStA Advances in Statistical Analysis 91, 57–76 (2007)

Lerman, R.I., Yitzhaki, S.: A note on the calculation and interpretation of the Gini index. Econ. Lett. 15, 363–368 (1984)

Oja, H.: Descriptive statistics for multivariate distributions. Stat. Probab. Lett. 1, 327–332 (1983)

Pyatt, G.: On the interpretation and disaggregation of Gini coefficients. Econ. J. 86, 243–255 (1976)

Rao, V.M.: Two decompositions of concentration ratio. J. R. Stat. Soc. A 132, 418–425 (1969)

Schneider, R.: Convex Bodies: The Brunn-Minkowski Theory. Cambridge University Press, New York (1993)

Shorrocks, A.: Aggregation issues in inequality measurement. In: W. Eichhorn (ed.) Measurement in Economics, pp. 429–451. Physica-Verlag, Heidelberg (1988)

Shorrocks, A., Wan, G.: Spatial decomposition of inequality. J. Economic Geography 5, 59–81 (2005)

Shephard, G.C.: Combinatorial properties of associated zonotopes. Can. J. Math. 26, 302–321 (1974)

Torgersen, E.: Comparison of Statistical Experiments. Cambridge University Press, Cambridge (1991)

Tsui, K.-Y.: Multidimensional inequality and multidimensional generalized entropy measures: an axiomatic derivation. Soc Choice Welf 16, 145–157 (1999)

Wilks, S.S.: Multidimensional statistical scatter. In: Olkin, I., et al. (eds.) Contributions to Probability and Statistics, pp. 486–503. Stanford University Press, Stanford (1960)

Yitzhaki, S., Lerman, R.I.: Income stratification and income inequality. Rev. Income Wealth 37, 313–329 (1991)

Acknowledgement

I wish to thank the editors and anonymous reviewers at this journal whose comments and suggestions have been very helpful to improve the paper.

Appendix

1.1 Proofs

1.1.1 Proof of Eq. 16

On the assumption that F i is continuous, by applying integration by parts, cv(F i ,F) can be expanded as follows:

Note that ∫F i dF i =∫FdF=1/2. Then, using the equality \( \int F\,{\text{d}}F_{i} - 1/2 = - \int F_{i}\,{\text{d}}F + 1/2 \) and the notation in section 2.3, Eq. 16 is obtained.

1.1.2 Proof of Eqs. 24 and 25

By applying the definition of cvD(F i ,F) in Eq. 22, the distance-Gini mean difference M D(F) can be expanded as follows:

Note that F = Σp i F i . Subtracting M D(F) from both sides after doubling, decomposition 24 is obtained. Equation 25 is easily derived by replacing F with \( \widetilde{F} \).

1.1.3 Range of the modified Torgersen index

Since

where \( {\sum\limits_i {{\left[ {0,e_{i} } \right]}} } + {\left[ {0, - \gamma } \right]} \) is the Minkowski sum of the line segments [0,e i ] (i = 1,...,d) and [0,−γ], and e i = {e ij } is a unit vector consisting of elements e ij = 0 if j ≠ i, or 1 if j = i, then, the volume of \( Z_{{\text{T}}} {\left( {\widetilde{F}\left| \gamma \right.} \right)}\) is less than or equal to the volume of \( {\sum\limits_i {{\left[ {0,e_{i} } \right]}} } + {\left[ {0, - \gamma } \right]} \), which is calculated as 1 + Σγ i by using the following formula: \( {\text{vol}}{\left( {{\sum\limits_{i = 1}^n {{\left[ {0,a_{i} } \right]}} }} \right)} = {\sum\limits_{1 \leqslant i_{1} < \cdots < i_{d} \leqslant n} {{\left| {\det {\left( {a_{{i_{1} }} , \cdots ,a_{{i_{d} }} } \right)}} \right|}} }\) (e.g. [20]). Thus, the modified Torgersen index R T(F|γ) is less than or equal to unity.
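The value 1 + Σγ i can be reproduced directly from the determinant formula. The sketch below is an illustrative check with hypothetical function names, assuming nonnegative γ i: it sums the absolute determinants over all choices of d generators among the unit vectors e 1, ..., e d and the vector −γ.

```python
from itertools import combinations

import numpy as np


def zonotope_volume(generators):
    """Volume of the Minkowski sum of segments [0, a_i] in R^d via the determinant formula."""
    generators = np.asarray(generators, dtype=float)
    n, d = generators.shape
    return sum(abs(np.linalg.det(generators[list(idx)]))
               for idx in combinations(range(n), d))


def bounding_zonotope_volume(gamma):
    """Volume of sum_i [0, e_i] + [0, -gamma] for a given vector gamma."""
    gamma = np.asarray(gamma, dtype=float)
    d = gamma.size
    generators = np.vstack([np.eye(d), -gamma])  # e_1, ..., e_d and -gamma
    return zonotope_volume(generators)


for gamma in ([1.0, 1.0, 1.0], [0.5, 0.25], [0.0, 0.0]):
    print(gamma, bounding_zonotope_volume(gamma), 1.0 + sum(gamma))
```

Choosing all d unit vectors contributes a determinant of one, and replacing e i by −γ contributes |γ i|, which gives the total 1 + Σγ i.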

Assume that F is an n-point d-variate distribution in which there is a different monopolist for each variable (attribute). Then, R T(F|γ) → 1 if n → ∞.

1.1.4 Proof of Eq. 41

By applying Eq. 38, Eq. 58 is derived.

where \( G{\left( \varepsilon \right)} = \varepsilon F_{i} + {\left( {1 - \varepsilon } \right)}F \). The modified Torgersen mean difference M T(G(ε)|γ) and the mixed volume MV d−1(F,G(ε)|γ) have the following derivatives at ε = 0:

The derivative of \({\text{cv}}_{{\text{T}}} {\left( {F,G{\left( \varepsilon \right)}\left| \gamma \right.} \right)} \) at ε = 0 equals zero from its definition and Eq. 59. Thus, the second term on the right-hand side of Eq. 58 → 0 if ε → 0. Since the left-hand side → \( {\text{cv}}_{{\text{T}}} {\left( {F_{i} ,F\left| \gamma \right.} \right)} \) if ε→0, Eq. 41 is derived.

1.1.5 Proof of the equality condition in Eq. 45 and the condition for null between-inequality in Eq. 47

It is trivial that \( {\text{cv}}_{{\text{V}}} {\left( {G,F\left| \gamma \right.} \right)} = 0 \) if \( G^{s} = F^{s} \) for any coordinate axis and \( Z_{{\text{T}}} {\left( {G\left| \gamma \right.} \right)} = Z_{{\text{T}}} {\left( {F\left| \gamma \right.} \right)} \). Conversely, if \( {\text{cv}}_{{\text{V}}} {\left( {G,F\left| \gamma \right.} \right)} = 0 \), all terms constituting \( {\text{cv}}_{{\text{V}}} {\left( {G,F\left| \gamma \right.} \right)} \) in Eq. 45 must be zero, including \( {\text{cv}}_{{\text{T}}} {\left( {G,F\left| \gamma \right.} \right)} \). On the assumption that \( M_{{\text{T}}} {\left( {F\left| \gamma \right.} \right)} > 0 \), this means that \( Z_{{\text{T}}} {\left( {G\left| \gamma \right.} \right)} = \alpha Z_{{\text{T}}} {\left( {F\left| \gamma \right.} \right)}\), where α is a positive constant, according to Minkowski’s first inequality concerning the mixed volume. Since \( {\text{cv}}{\left( {G^{s} ,F^{s} } \right)} \) must also be zero at the same time, \( G^{s} = F^{s} \) for any coordinate axis. This forces α to be unity. Thus, the equality condition in Eq. 45 is proved.

If \( M_{{\text{T}}} {\left( {F\left| \gamma \right.} \right)} > 0 \), the equality condition in Eq. 45 can be applied to the proof of the condition for the null between-inequality in Eq. 47. If \( M_{{\text{T}}} {\left( {F\left| \gamma \right.} \right)} = 0 \), the Brunn-Minkowski inequality (e.g. [17]) asserts that \( Z_{{\text{T}}} {\left( {F_{i} \left| \gamma \right.} \right)} \) is on the same hyperplane as \( Z_{{\text{T}}} {\left( {F\left| \gamma \right.} \right)} \). Let \( k_{1} , \ldots ,k_{t} \) be the highest-dimensional sub-coordinate axes under the condition that \( M_{{\text{T}}} {\left( {F^{{j_{1} \cdots j_{s} }} \left| \gamma \right.} \right)} > 0 \). The equality condition in Eq. 45 asserts that \( Z_{{\text{T}}} {\left( {F^{{k_{1} \cdots k_{t} }}_{i} \left| \gamma \right.} \right)} = Z_{{\text{T}}} {\left( {F^{{k_{1} \cdots k_{t} }} \left| \gamma \right.} \right)} \) (note that \( {\text{cv}}_{{\text{V}}} {\left( {F^{{k_{1} \cdots k_{t} }}_{i} ,F^{{k_{1} \cdots k_{t} }} \left| \gamma \right.} \right)} = 0 \) if \( {\text{cv}}_{{\text{V}}} {\left( {F_{i} ,F\left| \gamma \right.} \right)} = 0 \)). From this, \( Z_{{\text{T}}} {\left( {F_{i} \left| \gamma \right.} \right)} \) should be identical to \( Z_{{\text{T}}} {\left( {F\left| \gamma \right.} \right)} \). \( G^{s} = F^{s} \) is true for any coordinate axis, irrespective of the value of \( M_{{\text{T}}} {\left( {F\left| \gamma \right.} \right)} \). Thus, the condition for null between-inequality in Eq. 47 is proved.

1.1.6 Proof of Eqs. 20 and 50

First, Eq. 50 is proved by induction on the dimension d. Without loss of generality, we can assume that i = 1 and \( {\bf x} = {\left\{ {x_{1} ,x_{2} ,0, \ldots ,0} \right\}} \) because of invariance under rotation about axis i and under axis permutation. If d = 2, Eq. 50 can be proved as follows:

where \( {\bf a} = {\left\{ {\cos \theta ,\sin \theta } \right\}} \), and \( {\bf x} = {\left\{ {x_{1} ,x_{2} } \right\}} = {\left\{ {{\left\| \bf x \right\|}\cos \varphi ,{\text{ }}{\left\| \bf x \right\|}\sin \varphi } \right\}} \). Assume that Eq. 50 holds in dimension d−1. Then,

Equation 20 is derived by summing Eq. 50 over i after multiplying by \( x_{i} \).


Okamoto, M. Decomposition of gini and multivariate gini indices. J Econ Inequal 7, 153–177 (2009). https://doi.org/10.1007/s10888-007-9069-5
