Abstract

Common method variance (CMV) is an ongoing topic of debate and concern in the organizational literature. We present four latent variable confirmatory factor analysis model designs for assessing and controlling for CMV—those for unmeasured latent method constructs, Marker Variables, Measured Cause Variables, as well as a new hybrid design wherein these three types of method latent variables are used concurrently. We then describe a comprehensive analysis strategy that can be used with these four designs and provide a demonstration using the new design, the Hybrid Method Variables Model. In our discussion, we comment on different issues related to implementing these designs and analyses, provide supporting practical guidance, and, finally, advocate for the use of the Hybrid Method Variables Model. Through these means, we hope to promote a more comprehensive and consistent approach to the assessment of CMV in the organizational literature and more extensive use of hybrid models that include multiple types of latent method variables to assess CMV.


It is generally well understood, but useful to remember, that social and organizational scientists test theories that specify relations among constructs and that this process may become compromised when indicators used to represent the constructs are assessed with a “common” or shared measurement “method.” The potential compromise may occur because the use of a common measurement method can affect the observed relation between the indicators, in which case it becomes difficult to determine if the observed relation among the latent variables (e.g., factor correlation) accurately represents the true relation among the constructs. In other words, to the extent that common method variance (CMV) exists in the relations among study constructs, the factor correlation may be biased (common method bias) and the validity of findings regarding the magnitude of those relations may be called into question. As a result, organizational researchers have sought “a magic bullet that will silence editors and reviewers” (Spector and Brannick 2010, p. 2), who often emphasize the prospect of a CMV-based explanation for obtained substantive findings when rejecting papers during the manuscript review process.

In terms of what contributes to potential CMV, Podsakoff et al. (2003) and Spector (2006) described characteristics of instruments, people, situations, and the nature of constructs; Podsakoff et al. (2003) and Podsakoff et al. (2012) also described “procedural remedies” to address these causes. We strongly recommend that researchers consider these procedural remedies when designing their studies and build such remedies into their data collections. In conjunction with, or as a less desirable alternative to, procedural remedies, researchers now commonly turn to statistical methods for assessing and controlling for CMV as their “magic bullet,” seeking to assuage reviewers’ and editors’ concerns about common method bias. However, the use of these statistical methods appears to be incomplete and inconsistent. In most studies, the full range of statistical information available for reaching conclusions about CMV and potential bias is not examined, and, across studies, authors focus on different types of statistical information. This variability in how researchers use statistical remedies decreases confidence in study conclusions and makes it harder for cumulative knowledge to emerge across studies.

Overview

Within this context, we review the emergence of confirmatory factor analysis (CFA) approaches to assessing CMV and provide an overview of four specific research designs with which they may be used. Note that when we refer to a “design,” we mean both the variables included in the model and the associated steps in the data analysis, not the different sources of information often considered in the MTMM design. The last of these four designs, which we refer to as the Hybrid Method Variables Model, combines the other three in that it includes three types of latent variables representing method effects. The Hybrid Method Variables Model may be the best of these designs for attempting statistical control to address CMV concerns, yet details on its implementation have not yet appeared in the literature. We then describe a Comprehensive Analysis Strategy that can be used with all four designs and demonstrate this strategy with a sample dataset using the Hybrid Method Variables Design. Perhaps most importantly, we close with a discussion of practical recommendations, along with issues that should be considered by those using CFA to test for CMV and associated bias. Thus, the goals of this paper align with those of other recent Methods Corner articles that have sought to clarify methodological techniques and provide readers with recommendations regarding their implementation, including one on common method variance (Conway and Lance 2010) and others on moderation analysis (Dawson 2014), combining meta-analysis and SEM (Landis 2013), and historiography (Zickar 2015).

Emergence of Latent Variable Approaches to CMV

Concern with CMV and its potential biasing effects on research inferences increased with the development of the multitrait-multimethod matrix (MTMM) by Campbell and Fiske (1959). In subsequent years, as confirmatory factor analysis (CFA) techniques became popular, their use with MTMM was seen as having several advantages (e.g., Schmitt 1978; Schmitt and Stults 1986), and CFA techniques for assessing CMV are still widely used today. See Fig. 1a for a basic CFA model. CMV may be represented by adding another latent variable representing the common measurement method, together with relations between this latent variable and the indicators of the substantive latent variables (method factor loadings, or MFLs; see Fig. 1b). (See Footnote 1.)

Fig. 1 (a) CFA model. A and B are latent variables; a1–a3 and b1–b3 are measured indicators. FC substantive factor correlation, SFL substantive factor loading, EV error variance. (b) CFA model with method variable. A and B are latent variables; a1–a3 and b1–b3 are measured indicators. SFL substantive factor loading, EV error variance, FC factor correlation, M method variable, MFL1–MFL6 method factor loadings
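The way a shared method factor can distort relations between constructs can be illustrated with the standardized model-implied correlation between one indicator of A and one indicator of B. The sketch below assumes a fully standardized version of Fig. 1b with the method factor M orthogonal to A and B; the function name and all numeric values are hypothetical and chosen only for illustration.

```python
# Standardized single-method-factor CFA (as in Fig. 1b): each indicator's variance is
# SFL**2 + MFL**2 + EV = 1, and the method factor M is orthogonal to A and B.

def implied_corr(sfl_a: float, sfl_b: float, fc: float,
                 mfl_a: float = 0.0, mfl_b: float = 0.0) -> float:
    """Model-implied correlation between an indicator of A and an indicator of B."""
    substantive_part = sfl_a * fc * sfl_b  # path through the factor correlation FC
    method_part = mfl_a * mfl_b            # path through the shared method factor M
    return substantive_part + method_part


# Illustrative values only (not from any study).
without_method = implied_corr(0.7, 0.7, 0.30)
with_method = implied_corr(0.7, 0.7, 0.30, mfl_a=0.4, mfl_b=0.4)
print(round(without_method, 3), round(with_method, 3))  # 0.147 0.307
```

With these illustrative values the shared method factor roughly doubles the observed indicator correlation while the true factor correlation is unchanged; a model that omitted M would tend to absorb much of that extra covariation into FC.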

Currently used CFA techniques for CMV vary based on whether (a) a presumed source of CMV is not included in the data (commonly referred to as the unmeasured latent method construct and which we refer to as the ULMC), (b) an included source is an indirect measure of some variable presumed to underlie CMV (Marker Variable) or a direct measure (Measured Cause Variable), and (c) multiple types of latent method variables are included in the same design (e.g., Hybrid Method Variables Model). Please see Table 1 for a summary of each design. For historical purposes, we present the ULMC first; yet, as we explain later, we do not mean to imply that the ULMC is superior to the other designs.

Design 1: Unmeasured Latent Method Construct (ULMC)

For single unmeasured method factor models, multiple indicators of substantive variables/factors are required, wherein each indicator might be a single question, a parcel based on subsets of questions from a scale, or the scale itself, yet the method factor does not have any indicators of its own. Podsakoff et al. (2003) referred to such a model as controlling for the effects of a single unmeasured latent method construct. An early example of this approach was provided by Podsakoff et al. (1990), and a recent review indicates it has been used over 50 times since 2010 (McGonagle et al. 2014). An example is presented in Fig. 2.

Bagozzi (1984) noted a disadvantage of this model, namely that the effects of systematic error are accounted for but the source of the error is not explicitly identified. As further discussed by Podsakoff et al. (2003), and noted in our Table 1, the method factor in the ULMC design may capture not only CMV but also additional shared variance arising from relations other than those associated with the method causes. One case in which this can occur is when one or more substantive constructs are multidimensional but each is represented with a single latent variable. Some of the residual systematic variance in the indicators of such a latent variable may then be due to unmodeled substantive variance associated with its multiple dimensions (Williams 2014). In this situation, including a ULMC method factor would likely control for some substantive variance and overstate the amount of CMV.

Although we do not know the particular source(s) of variance accounted for by the ULMC, the notion that it does present a way to capture multiple sources of extraneous shared method variance may be seen as an advantage of this design. Said differently, a wide range of factors, including instruments, people, situations, and constructs have been offered as causes of CMV (see Podsakoff et al. 2003)—the fact that the specific source(s) or cause(s) accounted for by the ULMC is not known may be less important than the fact that multiple sources can be captured. It may be better to incorrectly attribute some bias to common method variance, when it is actually substantive variance, than to completely ignore the shared variance due to uncertainty as to its specific source(s). Additionally, the ULMC has been supported in recent simulation work; Ding and Jane (2015) reported that the technique worked well and recovered correct parameter values when properly specified models were evaluated.

Design 2: Marker Variables

The second design, the Marker Variable Design, addresses the limitation of the ULMC noted above. Specifically, it partially avoids ambiguity about what form of common variance exists by including an indirect measure of the variable(s) presumed to underlie the CMV. For instance, a Marker Variable such as community satisfaction may tap into sources of common method variance related to affect-driven response tendencies that influence measurement of the substantive variable job satisfaction, but it does not measure this affective response tendency directly. Simmering et al. (2015) reported that Marker Variables have been used in 62 studies since 2001.

Marker Variables were initially defined as being theoretically unrelated to substantive variables in a given study and for which the expected correlations with such substantive variables are zero (Lindell and Whitney 2001). Therefore, any non-zero correlation (representing shared covariance) of the marker with the substantive variables is assumed to be due to CMV. With respect to our example Marker Variable of community satisfaction, since it would not be expected to be substantively related to typical organizational behavior variables, any shared variance with these variables would be presumed to reflect affect-based CMV. More recently, Richardson et al. (2009) referred to these variables with expected correlations of zero with substantive measures as “ideal markers.” Williams et al. (2010) advocated that researchers consider what specific measurement biases may exist in their data when selecting Marker Variables for inclusion in their data collections, noting that it is incomplete to choose a Marker Variable only on the basis of a lack of substantive relation of the marker with substantive variables. Therefore, Williams et al. (2010) expanded Lindell and Whitney’s (2001) definition of Marker Variables to include “…capturing or tapping into one or more of the sources of bias that can occur in the measurement context for given substantive variables being examined, given a model of the survey response process…” (p. 507). In our example, community satisfaction satisfies this requirement because, as mentioned, it taps into affectively based sources of CMV.

Although Lindell and Whitney (2001) originally proposed examining Marker Variables using a partial correlation approach, researchers later incorporated Marker Variables into CFA models. As noted by Richardson et al. (2009), this approach was proposed and discussed by Williams et al. (2003a, b). Subsequently, Williams et al. (2010) refined this approach and developed a comprehensive strategy with the CFA Marker Technique, and it is now the preferred analytical approach for Marker Variables (e.g., Podsakoff et al. 2012). The CFA Marker Model is shown in Fig. 3. Although the Marker Variable approach has an advantage over the ULMC in that it includes something that is measured, its effective use depends on linking it with a theory of measurement and sources of CMV (Simmering et al. 2015; Spector et al. 2015; Williams et al. 2010). If it is linked appropriately, it may allow researchers to tap a broader range of causes of CMV than when the attempt is made to measure one or more specific causes, as described in the next section. Additionally, the CFA marker technique has recently been validated; it was found to work very well at recovering true substantive relations while appropriately correcting for CMV (Williams and O’Boyle 2015).
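For comparison with the CFA approach, the partial correlation adjustment associated with Lindell and Whitney (2001) can be sketched in a few lines. It treats r_M, the marker's correlation with the substantive variables, as an estimate of CMV and removes it from an observed correlation r_U. Which marker correlation to use (for example, the smallest one) is a choice the researcher must justify; the function name and the numbers below are hypothetical.

```python
def marker_adjusted_r(r_observed: float, r_marker: float) -> float:
    """Marker-adjusted correlation: (r_U - r_M) / (1 - r_M)."""
    if not -1.0 < r_marker < 1.0:
        raise ValueError("r_marker must lie strictly between -1 and 1")
    return (r_observed - r_marker) / (1.0 - r_marker)


# Hypothetical: job satisfaction and commitment correlate .40, and the marker
# (community satisfaction) correlates .10 with the substantive variables.
print(round(marker_adjusted_r(0.40, 0.10), 3))  # 0.333
```

Unlike the CFA Marker Technique, this adjustment works on observed correlations and assumes the same amount of CMV in every pair of measures.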

Design 3: Measured Cause Variables

A third design for assessing CMV involves including measured variables that are direct sources of method effects—specifically, measurements of one or more variables presumed to be sources or causes of CMV (e.g., Simmering et al. 2015). An advantage of this approach over the marker approach is that the researcher may directly assess and control for identified source(s) of CMV, such as negative affect or social desirability. Williams and Anderson (1994) referred to these types of variables as measured method effect variables. Simmering et al. (2015) refer to “measured CMV cause models” (p. 21); we follow Simmering et al. and use the term “Measured Cause” to refer to this type of method latent variable. One of the earliest examples of a Measured Cause Variable was social desirability, as discussed by Ganster et al. (1983) and Podsakoff and Organ (1986). Similar to Marker Variables, partial correlation (and, later, regression analysis) approaches to analysis of social desirability effects were common (see Brief et al. 1988; Chen and Spector 1991).
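The partial correlation approach to a Measured Cause Variable removes the linear association of a presumed cause, such as social desirability (z), from both substantive measures (x and y). A minimal sketch using the standard first-order partial correlation formula follows; the correlations are invented for illustration and the function name is hypothetical.

```python
import math


def partial_corr(r_xy: float, r_xz: float, r_yz: float) -> float:
    """First-order partial correlation of x and y controlling for z."""
    denom = math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))
    if denom == 0:
        raise ValueError("z is perfectly correlated with x or y")
    return (r_xy - r_xz * r_yz) / denom


# Hypothetical: x and y correlate .40; social desirability (z) correlates
# .30 with x and .20 with y.
print(round(partial_corr(0.40, 0.30, 0.20), 3))  # 0.364
```

This observed-score adjustment treats z as error-free and cannot separate method effects on individual indicators, which is part of what the CFA treatment described next adds.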

Bagozzi (1984) was among the first to describe the use of CFA techniques for data involving Measured Cause Variables. In the organizational literature, CFA approaches to Measured Cause Variables were first discussed with negative affectivity by Schaubroeck et al. (1992), Williams and Anderson (1994), and Williams et al. (1996). Social desirability was also studied by Barrick and Mount (1996) as well as Smith and Ellingson (2002), and different sources of method bias in selection processes were examined by Schmitt et al. (1995) and Schmitt et al. (1996). Finally, Simmering et al. (2015) have discussed acquiescence response bias as a Measured Cause source of method variance. As noted in Table 1, a strength of the Measured Cause Variable design is that it allows a more definitive test of specific method variance effects (Spector et al. 2015) by directly representing potential CMV causes, so that researchers can expressly identify the types of CMV present (Simmering et al. 2015). Yet only a limited number of CMV causes can be directly measured, and those that can be measured do not cover the full range of sources of CMV. Because both the Marker Variable Model and the Measured Cause Variable Model include a method variable that is assessed using its own measure(s), their path models are identical (see Fig. 3).

Design 4: Hybrid Method Variables Model

The preceding sections have described the evolution of three latent variable approaches and associated designs for addressing CMV in organizational research. Our next design includes method variables of all three types: ULMC, Marker, and Measured Cause. Although such a design has not been implemented in the studies we have reviewed, the possibility of including multiple types of method variables in the same latent variable model has been considered. For example, Williams et al. (2010) discussed examining multiple sources of method variance within the same study and suggested adding a Marker Variable to designs that include Measured Cause Variables or multiple methods (MTMM). More recently, Simmering et al. (2015) stated, “In reality though, the most comprehensive and broadly useful approach could be to include both multiple markers and multiple measurable CMV causes” (p. 33). Similarly, Spector et al. (2015) described what they refer to as the “hybrid approach” as being based on a combination of Marker Variables and method effect variables. We adopt the term hybrid to convey that this model includes multiple types of method variables, but we extend the discussions of Spector et al. (2015) and Simmering et al. (2015) by including the ULMC in our demonstrated Hybrid Method Variables Model. This addition allows a researcher to potentially control for common method sources beyond those accounted for by Measured Cause Variables and (measured) Marker Variables. We believe that the Hybrid Method Variables Design should be a first choice in most research situations because it incorporates the strengths of all three types of method variables while overcoming some of the limitations of each.

Before presenting our Hybrid Method Variables Model, we note that researchers have investigated effects due to more than one latent Measured Cause Variable (e.g., Barrick and Mount 1996; Williams and Anderson 1994). Similarly, studies have included multiple Marker Variables; Simmering et al. (2015, Table 1) report six studies using multiple markers of mixed types. More recently, researchers have also examined different types of method variables within the same article, but they did not include all the method variables in the same model with the same sample (Johnson et al. 2011; Simmering et al. 2015). Therefore, no example is available in the literature that includes all three types of method variable, nor have recommendations been provided for analyzing data from such complex designs.

A path model representation of our Hybrid Method Variables Model is provided in Fig. 4. This model includes latent method variables of all three types: Marker (M), Measured Cause (C), and ULMC (U). Note that each of the three latent method variables affects all of the substantive indicators through MFLs. Further, all three latent method variables are uncorrelated with the two substantive latent variables, as is also the case when each type is used on its own. The two latent method variables represented with their own indicators (M and C) are allowed to correlate with each other (MFC), but both must be uncorrelated with the ULMC latent method factor to achieve model identification. As noted in Table 1, the Hybrid Method Variables Model has an advantage over other multiple method variable models in that it allows researchers to potentially control for all three types of method variance: that associated with indirectly and directly measured sources as well as that due to unmeasured sources. Using all three types of latent method variables within the same design gives researchers a useful balance of specificity and breadth, and in combination they can provide good overall information about the role that CMV processes potentially play in their studies.
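The pattern of loadings and factor covariances in Fig. 4 can be written down as a plain data structure, which may be a convenient check before translating the model into a particular SEM program's syntax. The sketch below is not tied to any software; factor and indicator names follow Fig. 4, the function name is hypothetical, and it describes the version of the model in which all MFLs are present (in the Baseline Model these MFLs would instead be fixed at zero).

```python
from itertools import combinations

# Factor and indicator names follow Fig. 4.
SUBSTANTIVE = {"A": ["a1", "a2", "a3"], "B": ["b1", "b2", "b3"]}
MEASURED_METHOD = {"M": ["m1", "m2", "m3"], "C": ["c1", "c2", "c3"]}  # Marker, Measured Cause
UNMEASURED_METHOD = "U"  # ULMC: no indicators of its own


def hybrid_spec():
    """Return (loadings, free_covariances, zero_covariances) for the Hybrid model."""
    substantive_inds = [i for inds in SUBSTANTIVE.values() for i in inds]
    loadings = {f: list(inds) for f, inds in SUBSTANTIVE.items()}
    for f, own in MEASURED_METHOD.items():
        # Own indicators (fixed in Phase 1b) plus MFLs to every substantive indicator.
        loadings[f] = own + substantive_inds
    loadings[UNMEASURED_METHOD] = list(substantive_inds)  # MFLs only

    factors = list(loadings)
    free = {("A", "B"), ("M", "C")}  # substantive FC and method factor correlation (MFC)
    zero = {pair for pair in combinations(factors, 2) if pair not in free}
    return loadings, free, zero


loadings, free_cov, zero_cov = hybrid_spec()
n_loadings = sum(len(v) for v in loadings.values())
print(n_loadings, len(free_cov), len(zero_cov))  # 30 2 8
```

Every method-substantive pair, and every pair involving U, lands in the zero set, which mirrors the orthogonality requirements stated above.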

Fig. 4 Hybrid Method Variables Model. A and B are latent substantive variables; a1–a3 and b1–b3 are measured substantive indicators. M latent Marker Variable, C latent Measured Cause Variable, U unmeasured latent method construct, m1–m3 Marker Variable indicators, c1–c3 Measured Cause Variable indicators, MFC method factor correlation

A Comprehensive Analysis Strategy for CFA Method Variance Models

Given the similarity of the four research designs and associated CFA models, we seek to promote completeness and consistency in how analyses are implemented across the designs, helping advance better tests of underlying substantive theories and improve understanding of CMV processes and effects. Toward this end, we now describe a Comprehensive Analysis Strategy that may be used with any of the four aforementioned designs and use labels of the various components of the strategy following the general framework originally developed for the CFA Marker Technique by Williams et al. (2010). A summary of the strategy is provided in Table 2 and a diagrammatic overview of specific model tests is provided in Fig. 5. The current framework extends Williams et al. (2010) by adding steps within each phase, placing new emphasis on the evaluation of the initial measurement model (Phase 1a), adding an intermediate step for MFL equality comparisons in the model comparisons (Phase 1c), and explicitly examining item reliability (Phase 2a).

Phase 1a: Evaluate the Measurement Model

In this phase, the goal is to examine the measurement model linking the latent variables to their indicators, ensuring that the latent variables have adequate discriminant validity and that the latent variables and their indicators have desirable measurement properties. This phase has its roots in Anderson and Gerbing (1988). It is based on the evaluation of a CFA Measurement Model that includes all latent variables and their indicators (and control variables, if measured with self-reports), including the method variables that are represented with their own indicators (Marker Variable, Measured Cause Variable). For all analyses, we recommend achieving identification by standardizing latent variables (setting each factor variance equal to 1.0) rather than by fixing a referent factor loading to 1.0, because in our experience fewer estimation and convergence problems occur. The evaluation of CFA results should focus on overall model fit using established criteria, including the Chi-square test, which assesses exact (absolute) fit, and fit indices such as the CFI, RMSEA, and SRMR, which assess approximate fit (e.g., Kline 2010). There are advocates of both the exact and the approximate fit approaches, although most researchers give priority to the latter (e.g., West et al. 2012).
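The RMSEA and CFI values reported by SEM software can be reproduced from Chi-square statistics using their common textbook definitions (e.g., Kline 2010). The sketch below is illustrative only: some programs use N rather than N − 1 in the RMSEA, SRMR is omitted because it requires the residual correlation matrix, and the "null model" here is the independence model used by the CFI, not the Baseline Model of Phase 1b. All input values are hypothetical.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Root mean square error of approximation (N - 1 convention)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2: float, df: int, chi2_null: float, df_null: int) -> float:
    """Comparative fit index relative to the independence (null) model."""
    d_model = max(chi2 - df, 0.0)
    d_null = max(chi2_null - df_null, d_model)
    return 1.0 if d_null == 0 else 1.0 - d_model / d_null


# Hypothetical output for a 12-indicator measurement model (null model df = 66).
print(round(rmsea(85.2, 48, 300), 3), round(cfi(85.2, 48, 1500.0, 66), 3))  # 0.051 0.974
```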

If the model shows adequate fit, the factor correlations linking all latent variables should be examined to determine whether any are high enough to raise concerns about discriminant validity (e.g., greater than .80; Brown 2006), which might lead the researcher to drop or combine one or more latent variables. Following Anderson and Gerbing (1988), we suggest that elements of the residual covariance matrix for the indicators may also be used to judge model fit and may be helpful in understanding any CMV that is subsequently found. However, this is not the same as allowing covariances among indicator residuals, especially when the latter is done to improve model fit (which we do not recommend). Finally, the reliabilities of the indicators, given by the squared multiple correlation for each indicator (the proportion of its variance attributable to its latent variable), should be shown to be adequate (e.g., greater than .36, corresponding to a standardized factor loading of .60; Kline 2010). The composite reliability of each latent variable (e.g., Williams et al. 2010) should also be computed to demonstrate that each latent variable is well represented by its multiple indicators.
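Indicator and composite reliability follow directly from the standardized solution. The sketch below assumes standardized loadings from a model in which each indicator loads on a single factor, so each error variance is 1 minus the squared loading; composite reliability uses the familiar (Σλ)² / [(Σλ)² + Σθ] form (e.g., Fornell and Larcker 1981). The function names and loadings are hypothetical, and .36 is the example cutoff given above.

```python
def indicator_reliability(std_loading: float) -> float:
    """Squared multiple correlation for an indicator with one standardized loading."""
    return std_loading ** 2


def composite_reliability(std_loadings, error_variances=None) -> float:
    """(sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    if error_variances is None:
        error_variances = [1.0 - lam ** 2 for lam in std_loadings]
    s = sum(std_loadings)
    return s ** 2 / (s ** 2 + sum(error_variances))


loadings_a = [0.55, 0.80, 0.90]  # hypothetical standardized loadings for factor A
flagged = [lam for lam in loadings_a if indicator_reliability(lam) < 0.36]
print(flagged, round(composite_reliability(loadings_a), 3))  # [0.55] 0.802
```

Here the first indicator falls below the .36 guideline even though the composite as a whole is reasonably reliable, which is why both indicator-level and factor-level values are worth reporting.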

Phase 1b: Establish the Baseline Model

The goal of this phase is to establish a Baseline Model that will be used in subsequent tests for the presence of CMV effects. Implementing the Baseline Model requires two actions, which we do not separate into different steps because they can be carried out at the same time. First, the meaning of latent method variables with their own indicators needs to be established. Specifically, for Marker and Measured Cause Variables, the latent method variables are linked via factor loadings both to their own indicators and to the indicators of the substantive latent variables. This creates an interpretational problem: if all of these factor loadings are freely estimated simultaneously, the latent method variable comes to represent a mix of whatever is measured by the method indicator(s) and the substantive indicators, and its meaning is ambiguous. This compromises the interpretation of the MFLs, because it is not clear what type of variance is being “extracted” from the substantive indicators.

Fortunately, this problem is easily resolved by fixing the factor loading(s) and error variance(s) of the latent method variable's own indicator(s) before examining the full model in which the method factors also affect the substantive indicators. If there is only a single method indicator, then to achieve model identification its factor loading should be fixed at 1 and its error variance at (1 − reliability of the indicator) × (variance of the indicator). If the single method indicator is a composite of a multiple-item scale, coefficient alpha should be used as the estimate of its reliability. However, if multiple indicators are used to represent the latent method variables (e.g., Fig. 4), values are needed for fixing the multiple factor loadings of the method indicators and their associated error variances. Specifically, the unstandardized factor loadings and error variances of each latent method variable's indicators are estimated in the CFA Measurement Model examined in Phase 1a, using the model with standardized latent variables (but unstandardized indicators), and are then entered as fixed parameters in the Baseline Model (see Footnote 2). Finally, because a ULMC method factor does not have its own indicators, the only action required for it at this step is to add an orthogonal latent method variable to the basic measurement model.
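For the single-indicator case, the fixed error variance is simple arithmetic once coefficient alpha is available. The sketch below computes alpha from an item covariance matrix for a summed scale and then the error variance that would be fixed in the Baseline Model (with the loading fixed at 1). The function names and the covariance matrix are hypothetical.

```python
def cronbach_alpha(cov):
    """Coefficient alpha from a k x k item covariance matrix (list of lists)."""
    k = len(cov)
    if k < 2:
        raise ValueError("alpha requires at least two items")
    item_variances = sum(cov[i][i] for i in range(k))
    total_variance = sum(sum(row) for row in cov)  # variance of the summed scale
    return (k / (k - 1)) * (1 - item_variances / total_variance)


def fixed_error_variance(reliability: float, indicator_variance: float) -> float:
    """(1 - reliability) * variance of the single method indicator."""
    return (1 - reliability) * indicator_variance


# Hypothetical 3-item marker scale: item variances 1.0, covariances 0.5.
cov = [[1.0, 0.5, 0.5],
       [0.5, 1.0, 0.5],
       [0.5, 0.5, 1.0]]
alpha = cronbach_alpha(cov)
scale_variance = sum(sum(row) for row in cov)
print(alpha, fixed_error_variance(alpha, scale_variance))  # 0.75 1.5
```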

Second, all three types of latent method variables have to be made orthogonal to the substantive latent variables. Specifically, the Baseline Model assumes that each latent method variable is uncorrelated with the substantive latent variables, while the substantive latent variables are allowed to correlate with each other. Forcing the correlations between method and substantive factors to zero (in other words, creating an orthogonal method factor) is necessary for the model to be identified, and it simplifies the partitioning of indicator variance in a later step. For the Hybrid Design, the Marker and Measured Cause method variables may be allowed to correlate with each other, but each must be orthogonal to the substantive latent variables and to the ULMC for identification purposes. Finally, we emphasize that the Measurement and Baseline Models are not directly compared. Because of its restrictive nature, the Baseline Model may fit relatively poorly; this should not preclude further use of our strategy as long as the initial CFA Measurement Model demonstrates adequate fit.

Phase 1c: Test for Presence and Equality of Method Effects

The first two phases provide information about the adequacy of the latent variables and their indicators (Phase 1a) and establish a model that will serve as the comparison for subsequent tests of method variance effects (Phase 1b). Phase 1c requires three additional models that add MFLs (MethodU, MethodI, and MethodC, implemented in three steps) and an associated set of nested model comparisons using Chi-square difference tests (e.g., Kline 2010). We use the term “Method” as a generic label that can be applied to any type of latent method variable, borrowing from Williams et al. (2010), who used this label with Marker Variables to indicate their use of MFLs linking the method variable to the substantive indicators. Whereas Williams et al. used hyphenated labels such as “Method-C” and “Method-U,” we use U, I, and C as subscripts to facilitate discussion of hybrid models with different types of method latent variables. For Hybrid Method Variables Models, an additional label is needed to supplement the subscript and indicate the type of latent variable [ULMC (U), Marker (M), and Measured Cause (C)]. A complete description of our labeling scheme for identifying types of latent variables and patterns of equality in MFLs can be found in Table 1.

A key distinction between the MethodU, MethodI, and MethodC Models is the assumption made about the equality of method effects, both within and between substantive latent variables. The MethodU Model is based on the models shown in Figs. 3 and 4 and includes the MFLs linking the latent method variable to the substantive indicators that were restricted to zero in the Baseline Model (labeled MFL). In the MethodU Model label, U stands for “unrestricted,” because all of the MFLs are freely estimated and allowed to take different values. This model is often described as examining congeneric method effects (Richardson et al. 2009). In Step 1 of Phase 1c, a significant Chi-square difference test between the Baseline and MethodU Models leads to rejection of the hypothesis that the MFLs are zero and to the tentative conclusion that unequal method effects are present.

If the MethodU Model is retained, the researcher proceeds to Step 2 of Phase 1c, which includes examination of a MethodI (“intermediate”) Model, in which the MFLs to substantive indicators are allowed to vary between substantive factors but are constrained to be equal within these factors. In other words, MFL1, MFL2, and MFL3 would be constrained equal and MFL4, MFL5, and MFL6 would be (separately) constrained to be equal. The MethodU and MethodI Models are nested, so the fit of the MethodI model is compared with that of the MethodU Model using a Chi-square difference test, and the hypothesis that the MFLs are equal within factors is tested. If the MethodI model is rejected, the researcher concludes that unequal method effects are present within the substantive latent variables and moves on to Phase 1d analyses that test for bias in substantive relations using results from MethodU. If the MethodI Model is retained and within-factor equal method effects are supported, the researcher proceeds to test a third model in this phase (Step 3, Phase 1c), the MethodC Model.
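The degrees of freedom for these nested comparisons follow from counting the distinct MFL parameters under each equality pattern. The sketch below does this for a single latent method variable with two substantive factors of three indicators each, matching the MFL1 to MFL6 layout used above (an assumption; other layouts change the counts accordingly). The function name is hypothetical.

```python
def n_mfl_parameters(indicators_per_factor, pattern: str) -> int:
    """Distinct MFL parameters for one method factor under pattern U, I, or C."""
    if pattern == "U":  # all MFLs freely estimated
        return sum(indicators_per_factor)
    if pattern == "I":  # equal within, free between substantive factors
        return len(indicators_per_factor)
    if pattern == "C":  # one common value for all MFLs
        return 1
    raise ValueError("pattern must be 'U', 'I', or 'C'")


layout = [3, 3]  # factors A and B, three indicators each
u, i, c = (n_mfl_parameters(layout, p) for p in "UIC")
print("Baseline vs MethodU:", u, "| MethodI vs MethodU:", u - i,
      "| MethodC vs MethodI:", i - c)
```

For this layout the three comparisons have 6, 4, and 1 degrees of freedom, respectively, since the Baseline Model contains no MFLs.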

The “C” in the label for this model reflects the fact that all of the MFLs are constrained to be equal (both within and across substantive factors). Thus, this model is referred to as testing for non-congeneric method effects (Richardson et al. 2009). In Step 3 of Phase 1c, a direct comparison of the MethodI Model with the MethodC Model provides a test of the presence of equal method effects across the substantive latent variables. The null hypothesis is that the MFLs are equal both within and between substantive factors. If the obtained Chi-square difference exceeds the critical Chi-square value for the associated degrees of freedom, the null hypothesis of equal CMV effects across substantive latent variables is rejected. In this case, the MethodI Model with its within substantive latent variable equality constraints is tentatively retained, and the researcher proceeds to Phase 1d. If the Chi-square difference test is not significant, the MethodC Model is retained and the researcher concludes that non-congeneric (equal) method effects are present within and between substantive latent variables and proceeds to Phase 1d.
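The three steps of Phase 1c amount to a short decision sequence over nested Chi-square difference tests. The sketch below encodes that sequence under stated assumptions: the (Chi-square, df) values are hypothetical numbers of the kind copied from SEM output, α = .05, returning "Baseline" when Step 1 is not significant is our reading of the procedure, and the Chi-square upper-tail probability uses a standard recurrence for integer degrees of freedom so that only the Python standard library is needed. All function names are hypothetical.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with integer df >= 1."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    # Start at df = 1 or df = 2, then climb in steps of 2 using
    # Q(x; k + 2) = Q(x; k) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1).
    k = 2 if df % 2 == 0 else 1
    q = math.exp(-x / 2) if k == 2 else math.erfc(math.sqrt(x / 2))
    while k < df:
        q += math.exp((k / 2) * math.log(x / 2) - x / 2 - math.lgamma(k / 2 + 1))
        k += 2
    return q


def diff_test_p(fits, restricted: str, general: str) -> float:
    """p value of the Chi-square difference test between two nested models."""
    chi_r, df_r = fits[restricted]
    chi_g, df_g = fits[general]
    return chi2_sf(chi_r - chi_g, df_r - df_g)


def select_method_model(fits, alpha: float = 0.05) -> str:
    """Follow Steps 1 to 3 of Phase 1c and return the label of the retained model."""
    if diff_test_p(fits, "Baseline", "MethodU") >= alpha:
        return "Baseline"  # Step 1: MFLs not shown to differ from zero
    if diff_test_p(fits, "MethodI", "MethodU") < alpha:
        return "MethodU"   # Step 2: unequal method effects within factors
    if diff_test_p(fits, "MethodC", "MethodI") < alpha:
        return "MethodI"   # Step 3: equal within, unequal between factors
    return "MethodC"       # equal method effects within and between factors


# Hypothetical (chi-square, df) values for a single method factor.
fits = {"Baseline": (150.0, 56), "MethodU": (110.0, 50),
        "MethodI": (115.0, 54), "MethodC": (116.5, 55)}
print(select_method_model(fits))  # MethodC
```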

Phase 1d: Test for Common Method Bias in Substantive Relations

If CMV is found to be present based on the comparison of the Baseline Model with the MethodU, MethodI, or MethodC Model, the focus shifts to whether the factor correlations from models with and without controls for method variance differ (i.e., whether they are biased by the presence of CMV). In the context of our Comprehensive Analysis Strategy, the substantive factor correlations from the Baseline Model are compared with those from the MethodU, MethodI, or MethodC Model (depending on which is retained in Phase 1c). Specifically, the researcher conducts an overall statistical test of differences between the two sets of factor correlations. This test requires another model, Method-R, where the “R” indicates that this model contains “restrictions” on key parameters, the substantive factor correlations. We use the Method-R label for consistency with Williams et al. (2010). In the Method-R Model, the substantive factor correlations from the Baseline Model in Phase 1b are entered as fixed parameter values in a model with latent method variables specified as determined in Phase 1c (again, MethodU, MethodI, or MethodC, depending on which is retained). The Method-R Model is then compared with the retained method model using a Chi-square difference test, with degrees of freedom equal to the number of fixed factor correlations. If this test is significant, the null hypothesis of equal sets of factor correlations is rejected, and we conclude that the substantive factor correlations are biased due to CMV. If the model comparison supports the presence of bias, researchers can consider alternative Method-R Models that restrict only one factor correlation at a time to identify specific instances of bias. To avoid conducting an excessive number of model comparison tests, researchers may want to limit these specific tests to correlations between the key exogenous and endogenous variables in their substantive model. If several of these tests are conducted, a Bonferroni adjustment to the significance level may be used to control the inflation of the Type I error rate.
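When the overall Method-R test is significant, each follow-up model fixes a single factor correlation, so each Chi-square difference has 1 degree of freedom and its p value is erfc(√(Δχ²/2)). The sketch below applies a Bonferroni-adjusted significance level to a set of such tests; the correlation labels (including a third factor, D) and the Δχ² values are hypothetical, as are the function names.

```python
import math


def p_value_df1(delta_chi2: float) -> float:
    """Upper-tail p value for a Chi-square difference with 1 degree of freedom."""
    return math.erfc(math.sqrt(max(delta_chi2, 0.0) / 2.0))


def bonferroni_bias_tests(delta_chi2_by_corr, alpha: float = 0.05):
    """Flag single-correlation Method-R tests that are significant at alpha / m."""
    m = len(delta_chi2_by_corr)
    adjusted_alpha = alpha / m
    return {name: p_value_df1(d) < adjusted_alpha
            for name, d in delta_chi2_by_corr.items()}


# Hypothetical Delta chi-square values, one per restricted factor correlation.
tests = {"A-B": 9.2, "A-D": 2.1, "B-D": 5.0}
print(bonferroni_bias_tests(tests))  # {'A-B': True, 'A-D': False, 'B-D': False}
```

In this example B-D would be significant at .05 on its own (p ≈ .025) but not at the adjusted level of about .0167.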

Phase 2a: Quantify Amount of Method Variance in Substantive Indicators

It is also important to quantify the amount of CMV that exists in both the (a) substantive indicators and (b) substantive latent variables. Note that researchers should conduct Phases 2a and 2b whenever the Phase 1c Step 1 results indicate the presence of CMV, regardless of whether bias in the substantive correlations was found in Phase 1d, and regardless of whether the method effects were shown in Phase 1c to be unequal (MethodU), unequal between but not within substantive factors (MethodI), or equal within and between substantive factors (MethodC).

The process for quantifying the amount of CMV associated with a method latent variable in each substantive indicator was developed by those applying CFA techniques to MTMM designs (e.g., Schmitt and Stults 1986; Widaman 1985). To obtain estimates, the researcher simply squares the standardized factor loadings linking each substantive indicator to the associated substantive or method factor from the retained MethodC, MethodI, or MethodU model. This is done for each substantive indicator, and results are often summarized across all the indicators for a given latent variable or across all substantive indicators in the study using an average or median value. This process can be followed for all four of the research designs, but if multiple correlated latent method variables are included, results for the variance partitioning of each indicator should be calculated separately for each method latent variable based on the final retained method model, and not aggregated across the different method variables.
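As a minimal sketch of this indicator-level partitioning, assuming the standardized MFLs for a single method factor have already been extracted from the retained model's standardized solution, the code below squares each loading and summarizes the results by substantive factor and overall. The loading values and the function name `method_variance_summary` are hypothetical. With several correlated method factors, the function would be called once per method factor and the results kept separate, as recommended above.

```python
from statistics import mean, median


def method_variance_summary(mfl_by_factor):
    """Square standardized MFLs for ONE method factor and summarize them.

    mfl_by_factor maps each substantive factor to the standardized loadings
    of its indicators on the method factor. Returns (per-factor means,
    overall mean, overall median) of the proportion of indicator variance
    attributable to that method factor."""
    squared = {f: [lam ** 2 for lam in lams] for f, lams in mfl_by_factor.items()}
    per_factor = {f: mean(vals) for f, vals in squared.items()}
    pooled = [v for vals in squared.values() for v in vals]
    return per_factor, mean(pooled), median(pooled)


# Hypothetical standardized MFLs (illustrative values, not from Table 5).
marker_mfls = {
    "job_control": [0.40, 0.35, 0.45],
    "coworker_support": [0.20, 0.25, 0.30],
    "engagement": [0.50, 0.50, 0.50],
}
per_factor, overall_mean, overall_median = method_variance_summary(marker_mfls)
```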

Phase 2b: Quantify Amount of Method Variance in Substantive Latent Variables

It is also valuable to consider how CMV influences the measurement of latent variables. An important advantage of latent variable procedures is that they allow estimation of what is called the reliability of the composite (e.g., Bagozzi 1982). This composite reliability estimate is seen as superior to coefficient alpha because it rests on less restrictive assumptions about the equality of the items involved (e.g., Fornell and Larcker 1981; Heise and Bohrnstedt 1970; Jöreskog 1971). Williams et al. (2010) described “reliability decomposition” as a way to determine how much of the composite reliability estimate is due to substantive variance as compared with the particular form of method variance being examined. It partitions variance at the latent variable level in a way that parallels the partitioning described earlier at the indicator level. As noted in Williams et al. (2010), the equations for composite (total), substantive, and method reliability, respectively, are as follows:

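$$R_{\text{tot}} = \frac{\left(\sum \lambda\right)^{2}}{\left(\sum \lambda\right)^{2} + \sum \theta} \tag{1}$$

$$R_{\text{sub}} = \frac{\left(\sum \lambda_{\text{sub}}\right)^{2}}{\left(\sum \lambda_{\text{sub}}\right)^{2} + \left(\sum \lambda_{\text{meth}}\right)^{2} + \sum \theta} \tag{2}$$

$$R_{\text{meth}} = \frac{\left(\sum \lambda_{\text{meth}}\right)^{2}}{\left(\sum \lambda_{\text{sub}}\right)^{2} + \left(\sum \lambda_{\text{meth}}\right)^{2} + \sum \theta} \tag{3}$$

where, for the indicators of a given latent variable, $\lambda$ denotes the factor loadings, $\lambda_{\text{sub}}$ the substantive factor loadings, $\lambda_{\text{meth}}$ the method factor loadings, and $\theta$ the error variances.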

If the design involves multiple correlated latent method variables, it is still appropriate to decompose effects due to each source separately, but results should not be aggregated across the latent method variables, because their inter-correlations are not accounted for in the formulas above (see Footnote 3).
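Equations 1 to 3 can be sketched in code as follows, assuming standardized loadings and error variances and a single method factor per decomposition. The function names and the three-indicator example values are hypothetical. In the example, each error variance is set to 1 minus the squared loadings, which holds for standardized indicators only when the substantive and method factors are orthogonal, as they are specified in these models.

```python
def composite_reliability(loadings, error_variances):
    """Equation 1: total composite reliability."""
    true_part = sum(loadings) ** 2
    return true_part / (true_part + sum(error_variances))


def reliability_decomposition(sub_loadings, meth_loadings, error_variances):
    """Equations 2 and 3: substantive and method reliability for one latent
    variable and ONE method factor. Do not add R_meth values across
    correlated method factors; these formulas ignore their inter-correlations."""
    sub_part = sum(sub_loadings) ** 2
    meth_part = sum(meth_loadings) ** 2
    denom = sub_part + meth_part + sum(error_variances)
    return sub_part / denom, meth_part / denom


# Hypothetical standardized estimates for a three-indicator latent variable.
sub = [0.8, 0.8, 0.8]
meth = [0.3, 0.3, 0.3]
err = [1 - s ** 2 - m ** 2 for s, m in zip(sub, meth)]
r_sub, r_meth = reliability_decomposition(sub, meth, err)

# Equation 1 for the same indicators in a model without the method factor.
r_tot = composite_reliability(sub, [1 - s ** 2 for s in sub])
```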

Hybrid Method Variables Model Demonstration

Next, we demonstrate the Comprehensive Analysis Strategy applied to the Hybrid Method Variables Model. We used three substantive variables: job control, coworker support, and work engagement. Theoretical and empirical support exists for the inter-relatedness of these three variables in the organizational literature (particularly for the relations of job control and coworker support with engagement, although we examine all three correlations as potentially affected by CMV). We chose negative affectivity as our Measured Cause Variable because of concerns that it may directly affect responses to each of our substantive variables, possibly biasing their correlations. We chose life satisfaction as our Marker Variable because it is affect-based and does not directly address work-domain satisfaction or engagement (as opposed to, for example, supervisor satisfaction or pay satisfaction).

Method

Participants and Procedure

Participants were working adults in various occupations recruited through the MTurk site run by Amazon.com to complete an online survey. Data were collected for another published study examining predictors of work ability perceptions (McGonagle et al. 2015); however, the data have not previously been used to apply the methods described herein (i.e., their use in this demonstration is unique). The final sample was N = 752. Participants were, on average, 35.65 years of age (SD = 9.82) and worked 38.27 h per week (SD = 9.30). A slight majority of the participants were male (n = 407, 56 %).

Substantive Variable Measures

Job Control

Three items from the Decision Latitude scale (Karasek 1979; reported in Smith et al. 1997) were used to measure job control, e.g., “My job allows me to make a lot of decisions on my own.” A four-point Likert-type response scale was used, ranging from 1 (strongly disagree) to 4 (strongly agree). Composite reliability was .90.

Coworker Support

Three items from Haynes et al. (1999) were used to measure coworker support. A sample item is, “My coworkers listen to me when I need to talk about work-related problems.” The response scale ranged from 1 (strongly disagree) to 4 (strongly agree). Composite reliability was .83.

Work Engagement

The nine-item version of the Utrecht Work Engagement Scale (UWES; Schaufeli and Bakker 2003) was used to measure work engagement. Three sub-dimensions are included, each measured with three items: vigor (e.g., “At my job, I feel strong and vigorous”), dedication (e.g., “I am proud of the work that I do”), and absorption (e.g., “I get carried away when I am working”). The Likert-type response scale ranged from 1 (never) to 7 (always—every day). Despite the existence of sub-dimensions, due to high inter-correlations among the dimensions and equivocal differences in model fit when all items load on one latent variable versus three separate latent variables, Schaufeli et al. (2006) promote the use of the nine-item UWES as a single overall measure of work engagement particularly when the goal of the study is to examine overall work engagement.

We used a domain representativeness approach to create parcels for the engagement variable (see Williams and O’Boyle 2008), which is appropriate due to the theoretical three-factor dimensionality of the UWES and the strong correlations between the three dimensions found in other research studies (Schaufeli et al. 2006) and in this study (vigor-dedication r = .96; absorption-vigor r = .97; absorption-dedication r = .91). We included items from each of the three dimensions within every parcel, using a factorial algorithm (Rogers and Schmitt 2004). Composite reliability was .96.
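A minimal sketch of domain-representative parceling follows: each parcel receives one item from every engagement dimension, and parcel scores are item means. The item names are hypothetical, and the item-to-parcel pairing here simply follows item order; it does not implement the factorial algorithm of Rogers and Schmitt (2004) that was used to decide which items to combine.

```python
from statistics import mean


def domain_representative_parcels(items_by_dimension):
    """Build parcels that each contain one item from every dimension.

    items_by_dimension maps dimension -> list of item names; all lists must
    have the same length k, which becomes the number of parcels."""
    lengths = {len(items) for items in items_by_dimension.values()}
    if len(lengths) != 1:
        raise ValueError("each dimension needs the same number of items")
    k = lengths.pop()
    return [[items[j] for items in items_by_dimension.values()] for j in range(k)]


def parcel_scores(responses, parcels):
    """Average one respondent's item responses within each parcel."""
    return [mean(responses[item] for item in parcel) for parcel in parcels]


uwes = {
    "vigor": ["vig1", "vig2", "vig3"],
    "dedication": ["ded1", "ded2", "ded3"],
    "absorption": ["abs1", "abs2", "abs3"],
}
parcels = domain_representative_parcels(uwes)
# parcels[0] == ["vig1", "ded1", "abs1"], and so on.

# Hypothetical responses for one participant on the 1-7 scale.
respondent = {"vig1": 5, "ded1": 6, "abs1": 4, "vig2": 7, "ded2": 7,
              "abs2": 7, "vig3": 3, "ded3": 4, "abs3": 5}
scores = parcel_scores(respondent, parcels)
```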

Method Variable Measures

Measured Cause Variable

Five items from the brief PANAS (Thompson 2007; see also Watson et al. 1988) were used to measure negative affectivity. Participants rated the extent to which they had felt each of a series of states in the last 30 days (e.g., “ashamed”) on a 5-point scale ranging from 1 (not at all) to 5 (very much). Composite reliability was .87.

Marker Variable

The five-item Satisfaction with Life scale was used to measure life satisfaction (Diener et al. 1985). A sample item is, “In most ways my life is close to ideal.” The response scale ranged from 1 (strongly disagree) to 7 (strongly agree). Composite reliability was .94.

Analysis and Results

Descriptive statistics, bivariate correlations, and composite reliabilities are presented in Table 3. As expected, both job control and coworker support were moderately positively correlated with work engagement (r = .39 and .50, respectively), and job control was weakly to moderately correlated with coworker support (r = .26).

Phase 1a: Evaluate the Measurement Model

We first estimated a CFA measurement model with the three substantive variables, the Measured Cause Variable (negative affectivity), and the Marker Variable (life satisfaction). The fit of the measurement model was good according to guidelines from Kline (2010): χ²(142) = 390.90, p < .001; CFI = .976; RMSEA = .048 (.043, .054); SRMR = .03. None of the factor correlations was high enough to raise concerns about discriminant validity (see Table 3; none exceeded .80; Brown 2006). The variance in indicators accounted for by the latent variables ranged from .67 to .81 (job control), .41 to .80 (coworker support), .83 to .88 (engagement), .46 to .66 (negative affectivity), and .48 to .84 (life satisfaction), all exceeding .36 (Kline 2010).
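As a quick arithmetic check on the reported fit, the RMSEA point estimate can be recomputed from the chi-square, its degrees of freedom, and N with the commonly used formula sqrt(max(χ² − df, 0) / (df(N − 1))). This is a sketch only: some programs use N instead of N − 1 (which makes no practical difference at this sample size), and the confidence interval requires the noncentral chi-square distribution and is not reproduced here.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of RMSEA from a model chi-square (N - 1 convention)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Phase 1a measurement model values reported in the text.
estimate = rmsea(390.90, 142, 752)
print(f"RMSEA = {estimate:.3f}")  # RMSEA = 0.048
```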

Phase 1b: Establish the Baseline Model

We estimated a Baseline Model by fixing the factor loadings and error variances for the indicators of the Measured Cause Variable and the Marker Variable to their respective unstandardized values obtained in Phase 1a, and by constraining the correlations between (a) the Measured Cause Variable and the substantive variables and (b) the Marker Variable and the substantive variables to zero. Note that the two method variables were allowed to inter-correlate, as were the substantive latent variables, but the method factors were assumed to be orthogonal to the substantive latent variables. The Baseline Model fit statistics, which are needed for later model comparisons, are reported in Table 4. The standardized substantive factor loadings from the Baseline Model are reported in Table 5, and the Baseline Model substantive factor correlations (also needed for later comparisons) are reported in Table 6. In Table 6, we also present the correlation values obtained from our other model tests so that readers can see how these change with each sequential model. Readers should also be aware that these step-by-step changes depend on the order in which the method variables are entered into the Hybrid Method Variables Model.

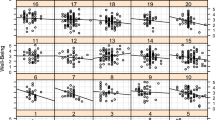

Phase 1c: Test for Presence and Equality of Method Effects

In Phase 1c, we conducted a series of sequential model comparisons (Steps 1, 2, and 3; see Table 2). For the order of entry of the multiple method variables, we started with the Measured Cause Variable because of its relative superiority over our other two method variables: it identifies the specific source of CMV whose effects we are estimating. For Step 1 of this phase, we added nine MFLs from the Measured Cause Variable to the substantive indicators, estimating them freely with no equality constraints (Method CU Model in Table 4, where the C label indicates a Measured Cause Variable and the U subscript indicates unconstrained MFLs). Because the Method CU Model fit significantly better than the Baseline Model (see Table 4, comparison 1), we retained the Method CU Model; we also note that all but one of the Measured Cause Variable MFLs were significant (values ranging from −.06 to −.27).

Next, we added unrestricted Marker Variable MFLs to each substantive indicator, represented by adding MU to the model label. As shown in Table 4 (comparison 2), this Method CUMU Model fit the data significantly better than the Method CU Model; therefore, we retained the Method CUMU Model with the Marker Variable added. Notably, with the addition of the nine Marker Variable MFLs, all nine of the Measured Cause Variable MFLs became non-significant and now ranged from 0 to −.06, whereas the Marker Variable MFLs were all statistically significant and ranged from .20 to .48. We then added the ULMC MFLs to the substantive indicators (Method CUMUUU Model, where the final UU denotes the ULMC with unconstrained MFLs). The Method CUMUUU Model fit better than the Method CUMU Model and was retained (see comparison 3 in Table 4). With the addition of the ULMC MFLs, the Marker Variable MFLs remained significant and changed little in value (standardized values ranging from .20 to .48). Note that these values are not presented in Table 5, which includes only the MFLs from our final retained model. Seven of the nine ULMC factor loadings were statistically significant (standardized values ranging from .05 to .59). In sum, for Step 1, we found evidence supporting the inclusion of each of our method variables with unconstrained MFLs, although the Measured Cause Variable factor loadings became non-significant. Despite these non-significant loadings, we did not drop the Measured Cause Variable from the model at this point (see our discussion of model trimming in the Discussion section).

Next, we determined whether the MFLs for each method variable were equal within and across substantive factors via the MethodI (Step 2) and MethodC (Step 3) Models. For Step 2, we added equality constraints to the MFLs within each substantive factor, again starting with the Measured Cause Variable (Method CIMUUU Model). The results in Table 4 indicated a non-significant decline in fit (comparison 4), so we failed to reject the within-factor constraints for the Measured Cause Variable and retained the Method CIMUUU Model. Using this model, we then constrained the Marker Variable MFLs to be equal within substantive factors (Method CIMIUU Model). Again, we observed a non-significant decrease in model fit (Table 4, comparison 5), so we retained the Method CIMIUU Model and proceeded to test the equality of the ULMC MFLs within substantive factors (Method CIMIUI Model). As shown in Table 4 (comparison 6), fit declined significantly with the Method CIMIUI Model; we therefore rejected the within-factor ULMC MFL equality constraints and retained the Method CIMIUU Model for further testing. At this point, we concluded that the Marker Variable MFLs do not vary within substantive factors. We also examined the Measured Cause Variable MFLs at this step; as in Step 1, they remained small in magnitude and non-significant.

Because the MethodI constraints were retained for the Measured Cause and Marker Variables (Method CIMIUU Model), we proceeded to Step 3, in which we further tested the equality of the Measured Cause and Marker Variable MFLs by sequentially constraining the MFLs for each to be equal both within and between substantive factors. Table 4 displays the results. The Method CCMIUU Model (in which the Measured Cause Variable MFLs were constrained to be equal within and between substantive factors) showed a non-significant change in fit from the Method CIMIUU Model (comparison 7); we therefore failed to reject the hypothesis that these MFLs are equal within and between substantive factors and retained the Method CCMIUU Model. By contrast, the Method CCMCUU Model fit significantly worse than the Method CCMIUU Model (Table 4, comparison 8); we therefore rejected the hypothesis of MFL equality both within and between substantive factors for the Marker Variable and retained the Method CCMIUU Model as our final Phase 1c model. As shown in Table 5, the Measured Cause Variable MFLs are small in magnitude and non-significant, the Marker Variable MFLs are equal within but not between substantive variables, and the ULMC factor loadings vary both within and between substantive variables. We again note that many of the ULMC and Marker Variable MFLs were statistically significant, whereas the Measured Cause Variable factor loadings were not (see Table 5).
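The sequential logic of Steps 1 to 3 can be summarized as a small decision procedure. The sketch below is illustrative only: the hypothetical callback `fit_worse` stands in for a chi-square difference test between a more restricted and a less restricted model (returning True when the restricted model fits significantly worse), and the outcome table simply encodes the test decisions reported above for comparisons 1 to 8. Labels follow the paper's convention of a method-variable letter (C, M, U) followed by a constraint level (U, I, C).

```python
from typing import Callable, Dict, List


def model_label(spec: Dict[str, str], order: List[str]) -> str:
    """E.g. {'C': 'I', 'M': 'U'} with order C, M, U -> 'CIMU'."""
    return "".join(m + spec[m] for m in order if m in spec) or "Baseline"


def phase_1c(order: List[str], fit_worse: Callable[[str, str], bool]) -> str:
    """Return the label of the retained method model.

    fit_worse(restricted, less_restricted) is True when the restricted model
    fits significantly worse (significant chi-square difference)."""
    spec: Dict[str, str] = {}
    # Step 1: add unconstrained MFLs for each method variable in order of
    # entry; a method variable that does not improve fit is dropped.
    for m in order:
        candidate = {**spec, m: "U"}
        if fit_worse(model_label(spec, order), model_label(candidate, order)):
            spec = candidate
    # Step 2 (U -> I) and Step 3 (I -> C): keep each equality constraint
    # unless it produces a significant decline in fit.
    for old, new in (("U", "I"), ("I", "C")):
        for m in order:
            if spec.get(m) != old:
                continue
            candidate = {**spec, m: new}
            if not fit_worse(model_label(candidate, order), model_label(spec, order)):
                spec = candidate
    return model_label(spec, order)


# Decisions reported in the demonstration (True = significant difference).
reported = {
    ("Baseline", "CU"): True,     # comparison 1
    ("CU", "CUMU"): True,         # comparison 2
    ("CUMU", "CUMUUU"): True,     # comparison 3
    ("CIMUUU", "CUMUUU"): False,  # comparison 4
    ("CIMIUU", "CIMUUU"): False,  # comparison 5
    ("CIMIUI", "CIMIUU"): True,   # comparison 6
    ("CCMIUU", "CIMIUU"): False,  # comparison 7
    ("CCMCUU", "CCMIUU"): True,   # comparison 8
}
retained = phase_1c(["C", "M", "U"], lambda r, f: reported[(r, f)])
print(retained)  # CCMIUU
```

Encoding the order of entry as the `order` argument makes explicit that a different entry sequence would route through different intermediate models.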

Phase 1d: Test for Common Method Bias in Substantive Relations

We estimated the Method-R Model by constraining the factor correlations in the model retained in Phase 1c, the Method CCMIUU Model, to equal those estimated in the Baseline Model. In the Baseline Model, the three correlations were .26, .50, and .39, whereas in the Method CCMIUU Model their respective values were .18, .15, and .27 (Table 6). The result of this model comparison is included in Table 4. Because we found a significant decline in model fit (see comparison 9), we rejected the hypothesis that the two sets of correlations do not differ and concluded that our substantive correlations are biased by the presence of CMV. Given the pattern of differences between the two sets of factor correlations and the lack of substantive hypotheses regarding structural paths, we did not examine alternative Method-R Models to identify specific cases of bias, although researchers may opt to do so.

Phase 2a: Quantify Amount of Method Variance in Substantive Indicators

To quantify the amount of CMV that exists in the substantive indicators, we calculated the average variance accounted for in the indicators by each of the method variables by squaring the standardized MFLs from our retained model in this example, the Method CCMIUU Model (see Table 5) and computing means. The ULMC accounted for an average of 17.2 % of the variance in the substantive indicators, the Measured Cause Variable accounted for an average of 0 % of the variance in the substantive indicators, and the Marker Variable accounted for an average of 10.5 % of the variance in the substantive indicators overall.

Phase 2b: Quantify Amount of Method Variance in Substantive Latent Variables

Next, we decomposed the total reliability of each substantive latent variable into substantive reliability and method reliability. In this Hybrid Method Variables Model example, we present the decomposition results for each method variable separately, using estimates from our retained model, the Method CCMIUU Model. We do not combine them into an estimate of total method reliability, because the equations for substantive and method reliability assume orthogonal method factors, an assumption that is not met here. Table 7 displays the method (ULMC, Measured Cause, Marker Variable) reliability coefficients for each substantive latent variable, obtained using the equations above. The method reliability attributable to the Measured Cause Variable was zero for all three substantive variables; for the Marker Variable, it was .06 (coworker support), .08 (job control), and .32 (engagement); and for the ULMC, it was .02 (coworker support), .28 (engagement), and .38 (job control).

Conclusions and Recommendations

One key purpose of this paper is to explain and demonstrate our Comprehensive Analysis Strategy, which provides an overarching framework for conducting CFA analyses of four types of designs involving different types of latent method variables and assessment of CMV. Additionally, we address the lack of examples and guidance on how to implement the Hybrid Method Variables design in the current organizational literature with our demonstration. In the paragraphs that follow, we provide some general comments on CFA models for assessing CMV, in some instances linking them to our model results, as well as offer guidelines and recommendations for researchers related to the use and interpretation of CMV models.

Considerations for Researchers When Implementing CFA Models for CMV

We recommend the Hybrid Method Variables Model Design because it provides a comprehensive view of the degree to which various types of CMV exist and bias study findings, including types associated with both measured (direct and indirect) and unmeasured sources. As noted earlier, including all three types of method variables in this design balances the strengths and weaknesses of the three single method variable designs. Yet, researchers may find that the Hybrid Method Variables Model is not feasible in all situations. The two most likely obstacles are (a) the need to include items measuring conceptually relevant Measured Cause and Marker Variables and (b) the need for a relatively large sample size, given the number of parameters to be estimated. Recommendations for minimum sample sizes are typically based on a ratio of cases to free parameters and range from 10:1 to 20:1 (Kline 2010).
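The cases-to-parameters heuristic reduces to simple arithmetic. As a hedged illustration, the hypothetical helpers below report how many free parameters a given sample supports under a chosen ratio, and the minimum N for a given parameter count; the number of free parameters in a particular Hybrid Model must still be counted from its actual specification.

```python
def max_free_parameters(n_cases: int, cases_per_parameter: int) -> int:
    """Largest number of free parameters allowed by a cases-to-parameters ratio."""
    return n_cases // cases_per_parameter


def min_sample_size(n_free_parameters: int, cases_per_parameter: int) -> int:
    """Smallest N satisfying the ratio for a given number of free parameters."""
    return n_free_parameters * cases_per_parameter


# With the demonstration sample (N = 752) under the 10:1 and 20:1 ratios:
print(max_free_parameters(752, 10), max_free_parameters(752, 20))  # 75 37
```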

We generally recommend that researchers think carefully about likely sources of CMV prior to implementing their studies and include measures of those corresponding Marker Variables and Measured Cause Variables in their data collection. Likely sources of CMV will vary based on the data collection strategy and the substantive study variables. For example, a study of work stress relating to job satisfaction may elicit mood-based CMV; therefore, researchers should consider including a mood-based Measured Cause Variable (e.g., negative affect) in their surveys. If the survey is not anonymous, researchers may consider including a measure of social desirability as another Measured Cause Variable. An appropriate Marker Variable may be a measure of community satisfaction, which would tap into affect-based response tendencies. More generally, Spector et al. (2015) have emphasized the importance of linking specific sources of method variance to specific substantive variables.

Yet, we also realize that survey space is limited and that researchers will need to decide whether to include additional substantive variables or method variables in a Hybrid Model. To this end, we recommend that researchers use validated short forms of scales whenever possible to measure method variables, such as the brief PANAS used in our demonstration. Further, parceling (although controversial) may be used for method variable indicators (see Williams and O’Boyle 2008), as may the single-indicator approach based on reliability information described earlier. Appropriate decisions about variable inclusion will ultimately depend on the study question and method. Survey length constraints may require researchers to weigh the potential for model misspecification due to omitted substantive variables against the benefit of including multiple method variables (Marker and Measured Cause Variables) to test for CMV. We also remind readers that Hybrid Models must meet the statistical assumptions and data requirements of CFA models (including sample size), as discussed in the context of Marker Variables by Williams et al. (2010). Finally, if study variables are non-normally distributed, researchers should apply appropriate corrections to the chi-square values used for difference testing or use other appropriate model difference testing approaches (e.g., see Bryant and Satorra 2012).
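When a scaled chi-square is used for non-normal data, the plain difference of two scaled chi-squares is not itself chi-square distributed. One widely used correction, commonly attributed to Satorra and Bentler, is sketched below under the assumption that the software reports each model's uncorrected ML chi-square and its scaling correction factor; the function name and all numbers are hypothetical, and Bryant and Satorra (2012) discuss the computation in more detail. This version can yield a non-positive correction factor in some samples, in which case an alternative test is needed.

```python
def scaled_chi2_difference(t0, df0, c0, t1, df1, c1):
    """Scaled chi-square difference for nested models.

    Model 0 is the more restricted model (df0 > df1); t0 and t1 are the
    uncorrected ML chi-squares, and c0 and c1 are the scaling correction
    factors (uncorrected chi-square divided by scaled chi-square).
    Returns (scaled difference statistic, difference in df)."""
    if df0 <= df1:
        raise ValueError("model 0 must be the more restricted model")
    cd = (df0 * c0 - df1 * c1) / (df0 - df1)
    if cd <= 0:
        raise ValueError("non-positive correction factor; use an alternative test")
    return (t0 - t1) / cd, df0 - df1


# Hypothetical values for two nested models.
trd, ddf = scaled_chi2_difference(420.0, 145, 1.20, 390.0, 142, 1.19)
```

The resulting statistic is referred to a chi-square distribution with `ddf` degrees of freedom, exactly as in an unscaled difference test.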

Regarding the execution of the Hybrid Method Variables Design, researchers may wonder about the sequence of variable entry, that is, which method variable to enter first, second, and third, because the order will affect the results and conclusions drawn. The sequence should be determined by both conceptual considerations and the relative utility of each type of method variable, with some caveats. Because of the relative superiority of the Measured Cause Variable design over the other two approaches (based on knowing what is being measured and controlled for), we advocate entering the Measured Cause Variable first, followed by the Marker Variable latent variable and its associated effects, and finally the ULMC MFLs. As a variation on the Hybrid Method Variables Model, if a researcher has multiple Measured Cause or multiple Marker Variables, the one deemed most conceptually relevant as a source of CMV should be entered first. This logic should apply to all subsequent model comparisons. Finally, if adding unconstrained MFLs for a particular method variable (Phase 1c, Step 1) does not significantly improve model fit, that method variable may be dropped from subsequent stages of the analysis.

If a researcher has specific hypotheses regarding one or more method variables, the order may be different depending on his/her research question. In our case, our questions asked what marker variance was present beyond Measured Cause, and then what ULMC variance was present beyond that associated with Measured Cause and Marker Variables. Researchers should also note that, for the sake of limiting the overall number of model comparison tests, we chose not to examine each substantive variable separately for equivalence in MFLs. In other words, when we tested for unconstrained factor loadings, we freed all of the MFLs to substantive factors simultaneously, rather than stepwise, e.g., first freeing the coworker support MFLs, then the job control MFLs, then the engagement MFLs. However, there may be circumstances in which the researcher chooses to introduce non-simultaneous testing for equivalence of MFLs by substantive variable. This would be indicated only when theory suggests that differences exist; e.g., that MFLs should be equal for one substantive variable but unequal for another.

We would like to discuss two other issues related to MFLs that are relevant for the Hybrid Method Variables Model Design (as well as the three designs on which it is based). First, as we proceeded through our Phase 1c model comparisons, all MFLs from a given model were retained in the subsequent models examined, even those found not to be significantly different from zero. There is little guidance in the SEM technical literature on this issue; in some substantive studies researchers have followed this practice, whereas in others they have “trimmed” as they went and dropped non-significant parameters. Whether such parameters are retained in subsequent models has little impact on model fit, given that they have already been found to be non-significant. However, their inclusion may increase the standard errors of other parameters (e.g., Bentler and Mooijaart 1989), which may be especially important given the complexity of these measurement models and the expected correlations among the various MFL estimates. Second, the literature on CFA analysis of MTMM matrices has established that MFLs that are equal or even nearly equal may create estimation problems and empirical underidentification, which should be taken into account and may prevent these models from being estimated successfully.

We now raise some additional considerations for each of the three method variables included in the Hybrid Method Variables Model Design; these comments also apply when the method variables are used separately. As noted, when ULMC latent method variables are used, the effects of systematic error are accounted for, but the source of the error is not identified. This means that the ULMC may capture not only CMV but also shared variance due to other sources, potentially leading to ambiguous results. The inability to identify specific sources of variance with the ULMC is generally viewed quite negatively and has led some researchers to denounce its use. However, the ULMC does offer a way to capture any source of misspecification, and it therefore provides a conservative test of the substantive hypotheses, which may be desirable in some circumstances. In other words, it may sometimes lead to the incorrect conclusion that CMV effects exist because the ULMC method factor actually reflects substantive variance (a Type-I error), but this may be seen as less serious than a Type-II error in which real CMV effects are missed. When considering the ULMC, researchers should also be aware that ULMC models with equality constraints on MFLs may suffer from estimation problems (e.g., Kenny and Kashy 1992), which can make their interpretation ambiguous.

As noted, the use of Marker Variables addresses a limitation of the ULMC by including an indirect measure of what is presumed to cause the CMV. Yet, good markers are difficult to identify. Researchers must carefully choose which Marker Variables to include in their studies, noting that “ideal” markers have been touted as those that are conceptually unrelated to the substantive study variables. However, it is simplistic and incomplete to choose a Marker Variable solely because it lacks a substantive relation with the substantive variables (Williams et al. 2010). Specifically, if a Marker Variable is chosen because it is not theoretically linked to the substantive variables, but its link to the measurement process is also weak, it will share little or no variance with the substantive variables. Researchers should therefore be very cautious about claiming that they do not have a CMV problem based on Marker Variables: a lack of CMV effects may simply reflect the use of poor Marker Variables.

We strongly encourage researchers considering a Marker Variable to have a clear rationale for their choice at the time they finalize their design and data collection procedures, and to link that choice to one or more of the sources of CMV described above. In our demonstration, we used life satisfaction as a Marker Variable because it is only indirectly conceptually related to our work-related substantive variables and is an indirect measure of affective response tendencies. Because of that indirect conceptual relation, however, it may also have captured substantive variance shared among our substantive variables, and it may therefore overestimate the degree to which CMV biased our substantive correlations.

Measured Cause Variables offer improvements over both the ULMC and Marker Variables because they directly (as opposed to indirectly) assess the source of method effects. When using a Measured Cause Variable, researchers should remember that they are not controlling for any other sources of CMV that may be present, so conclusions about overall CMV effects should be offered quite tentatively. We also reiterate that researchers should choose which Measured Cause Variables to include on the basis of a conceptual rationale before starting data collection. For example, if a study asks individuals to report counterproductive work behaviors, then socially desirable responding is a likely concern, particularly if the study is not anonymous.

The very small CMV effects we found for our measured method variable, negative affectivity, were unexpected, given that our substantive variables, particularly work engagement, have conceptual linkages to affect. These small effects may be due to the nature of our substantive variables: some research has suggested that negative affect may have weaker effects on more positively framed substantive variables (e.g., Williams et al. 1996) and that positive affect may be a more important driver of method variance for such variables (e.g., Chan 2001; Williams and Anderson 1994). This explanation may also account for why the significant MFLs for negative affectivity became non-significant when life satisfaction was added; the latter may have more completely tapped the positive affect linked to our positively framed substantive variables.

What Researchers Should Do After Finding Evidence of CMV Biasing Results

If you do not find any evidence of CMV (i.e., no significant change in model fit when entering any of the MethodU MFLs), you do not need to include method variables in any further model testing. However, if you do find evidence of CMV and associated bias (as demonstrated by significant differences in substantive variable correlations in Phase 1d), you have several alternatives for testing the structural (theoretical) model while attempting to control for CMV. A first alternative is to estimate the structural model with the method variable specification supported by the Comprehensive Analysis Strategy model testing. However, researchers may encounter identification and estimation difficulties when multiple MFLs must be estimated in a model with exogenous and endogenous latent variables and their indicators. Several remedies can be applied sequentially: (1) providing starting values for the MFLs and other parameters, (2) dropping all non-significant MFLs from the model, and/or (3) fixing the MFLs and error variances to the values obtained in the final retained model from the Comprehensive Analysis Strategy. A different strategy, not previously discussed for CMV, is a two-step process in which the factor correlations from the final method variance model, which correct for CMV, are used as input to a path model that does not incorporate indicator variables. This approach was recommended as a way to address measurement problems other than CMV, but it may also work for CMV biases (e.g., McDonald and Ho 2002).
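To illustrate the two-step idea under strong simplifying assumptions, the sketch below treats a factor correlation matrix as input and computes standardized path coefficients for a model in which engagement is regressed on job control and coworker support. The correlations are the Baseline and Method CCMIUU values reported in Phase 1d; the regression structure itself is a hypothetical example (the demonstration did not test structural hypotheses), and the function name is illustrative. A full two-step analysis would also need appropriate standard errors, which this closed-form sketch omits.

```python
def two_predictor_paths(r_x1x2: float, r_x1y: float, r_x2y: float):
    """Standardized coefficients and R^2 for y regressed on x1 and x2,
    computed directly from a correlation matrix."""
    denom = 1.0 - r_x1x2 ** 2
    if denom <= 0:
        raise ValueError("predictors are perfectly correlated")
    beta1 = (r_x1y - r_x1x2 * r_x2y) / denom
    beta2 = (r_x2y - r_x1x2 * r_x1y) / denom
    r_squared = beta1 * r_x1y + beta2 * r_x2y
    return beta1, beta2, r_squared


# x1 = job control, x2 = coworker support, y = engagement.
baseline = two_predictor_paths(r_x1x2=0.26, r_x1y=0.39, r_x2y=0.50)
corrected = two_predictor_paths(r_x1x2=0.18, r_x1y=0.27, r_x2y=0.15)
for name, (b1, b2, r2) in (("Baseline", baseline), ("Method CCMIUU", corrected)):
    print(f"{name}: beta_JC = {b1:.2f}, beta_CS = {b2:.2f}, R^2 = {r2:.2f}")
```

Comparing the two rows shows how method-corrected correlations can change the structural conclusions, most visibly for the coworker support path in this example.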

What Researchers Should Report in their Results Regarding CMV

Because tests for CMV are often seen as secondary to tests of theoretical substantive variable relations, authors may be reluctant to report all of the information presented in this demonstration paper, and reviewers and editors may ask authors to reduce the manuscript space devoted to the assessment and treatment of CMV. We recommend that authors include a summary of the information described in our Comprehensive Analysis Strategy steps. At a minimum, researchers should report the results of Phase 1c model testing (which model is retained and why) and of Phase 1d testing for bias in substantive relations, along with a summary of the differences between the substantive correlation values obtained from the Baseline Model and from the model retained in Phase 1c. Authors should also summarize the standardized MFLs, their squared values, the average variance accounted for by the method variable(s), and the method reliability values for each substantive latent variable. A recent example of such a summary can be found in Ragins et al. (2014).
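The quantities recommended for reporting can be computed from completely standardized estimates, as in the minimal sketch below. It assumes that the method factor is orthogonal to the substantive factor and decomposes composite reliability in the manner of Heise and Bohrnstedt (1970) and Fornell and Larcker (1981): total reliability splits into a substantive part, (sum of substantive loadings)^2, and a method part, (sum of MFLs)^2, each divided by the same denominator. The exact computations the authors used are not shown in this section, so the function and its inputs are hypothetical.

```python
def cmv_summary(substantive_loadings, method_loadings, error_variances):
    """Summarize method effects for the indicators of one substantive latent variable.

    All inputs are completely standardized estimates, one value per indicator,
    and the method factor is assumed to be orthogonal to the substantive factor.
    """
    squared_mfls = [m ** 2 for m in method_loadings]     # indicator variance due to method
    avg_method_variance = sum(squared_mfls) / len(squared_mfls)
    sub = sum(substantive_loadings) ** 2                  # substantive component
    meth = sum(method_loadings) ** 2                      # method component
    err = sum(error_variances)                            # random error component
    denom = sub + meth + err
    return {
        "squared_mfls": squared_mfls,
        "avg_method_variance": avg_method_variance,
        "total_reliability": (sub + meth) / denom,
        "substantive_reliability": sub / denom,
        "method_reliability": meth / denom,
    }


if __name__ == "__main__":
    lam = [0.80, 0.70, 0.75]   # hypothetical substantive loadings
    mfl = [0.20, 0.10, 0.15]   # hypothetical method factor loadings
    theta = [1 - s ** 2 - m ** 2 for s, m in zip(lam, mfl)]  # standardized error variances
    for key, value in cmv_summary(lam, mfl, theta).items():
        print(key, value)
```

Running the function once per substantive latent variable produces the rows of a compact summary table of the kind described above.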

Conclusions

Through this paper, we hope to provide some clarity regarding the use of latent variable models to assess common method variance and bias through our explanation and demonstration of the Comprehensive Analysis Strategy. We also provide guidance on the use of the Hybrid Method Variables Model. Throughout, we have highlighted the need to place more emphasis on theory related to method effects as a key to optimal implementation of our Comprehensive Analysis Strategy, and the need to think through issues related to CMV during the study design process. We hope to see a more unified approach to assessing and controlling for CMV and greater use of the Hybrid Method Variables Model in the organizational literature.

Notes

It is preferable to test for CMV using a CFA model rather than a full structural equation model with exogenous and endogenous latent variables. The CFA model is the least restrictive in terms of latent variable relations (all latent variables are allowed to correlate with each other), so there is no risk that a misspecified path model will compromise the CMV tests. In addition, estimation and convergence problems arising from the complex method variance measurement model are likely to be less frequent in a CFA than in a path model.
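The difference in restrictiveness can be made concrete by counting the constraints a path model places on the latent variable relations that a CFA leaves free. The minimal sketch below assumes a recursive path model in which all exogenous latent variables covary and disturbances are uncorrelated; the function name is hypothetical. Each constraint it counts is a point at which a misspecified path model could distort the latent relations against which CMV is evaluated, whereas the CFA imposes none.

```python
def latent_restrictions(n_exogenous, n_endogenous, n_paths):
    """Number of latent-level constraints a recursive path model adds relative to a CFA.

    Assumes the CFA estimates every factor correlation, the path model lets all
    exogenous latent variables covary, and disturbances are uncorrelated.
    """
    k = n_exogenous + n_endogenous
    cfa_relations = k * (k - 1) // 2                        # all factor correlations free
    exo_covariances = n_exogenous * (n_exogenous - 1) // 2
    path_relations = exo_covariances + n_paths               # free relational parameters
    return cfa_relations - path_relations


if __name__ == "__main__":
    # Hypothetical model: X1 -> Y1, X2 -> Y1, Y1 -> Y2 (three structural paths).
    print(latent_restrictions(n_exogenous=2, n_endogenous=2, n_paths=3))
    # Adding X1 -> Y2 and X2 -> Y2 saturates the latent relations.
    print(latent_restrictions(n_exogenous=2, n_endogenous=2, n_paths=5))
```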

With LISREL, latent variable standardization is the default, and the factor loadings and error variances to be used are those reported as LISREL Estimates. With Mplus, the default identification fixes a referent factor loading to 1.0 and estimates the factor variance, so this default must be overridden: the referent factor loading is freed and the corresponding factor variance is fixed to 1.0. Once this is done, the Mplus unstandardized estimates are used as the fixed values for the relevant factor loadings and error variances.
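Why the two identification choices are interchangeable can be seen in a minimal sketch. A solution with the referent loading fixed at 1.0 and factor variance phi maps onto a unit-variance solution by multiplying every loading by the square root of phi, leaving error variances unchanged and implying identical indicator covariances. In Mplus syntax, the unit-variance version is commonly requested by freeing the first loading with an asterisk and fixing the factor variance with @1 (e.g., `f1 BY y1* y2 y3; f1@1;`, where f1 and y1 to y3 are hypothetical names). The function names and numbers below are hypothetical.

```python
import math


def to_unit_variance(loadings, factor_variance):
    """Rescale loadings from referent-indicator to unit-variance identification.

    `loadings` come from a solution with the referent loading fixed at 1.0 and
    the factor variance (`factor_variance`) estimated. Error variances are not
    affected by this rescaling.
    """
    scale = math.sqrt(factor_variance)
    return [lam * scale for lam in loadings]


def implied_covariance(l_i, l_j, factor_variance=1.0):
    """Model-implied covariance between two indicators of the same factor."""
    return l_i * l_j * factor_variance


if __name__ == "__main__":
    ref_loadings = [1.0, 0.9, 1.1]  # hypothetical: referent loading fixed at 1.0
    phi = 0.64                      # hypothetical estimated factor variance
    uv = to_unit_variance(ref_loadings, phi)
    print(uv)
    # Both parameterizations imply the same covariance between indicators 2 and 3.
    print(implied_covariance(ref_loadings[1], ref_loadings[2], phi),
          implied_covariance(uv[1], uv[2]))
```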

As part of the original CFA Marker Technique, Williams et al. (2010) also included a Phase III Sensitivity Analysis based on Lindell and Whitney (2001) to address the degree to which conclusions might be influenced by sampling error (see Williams et al. 2010, pp. 500, 503). Sensitivity Analysis is not included in our current strategy, but it can be treated as optional. Based on the results of Williams et al. (2010), researchers may consider including it only if their sample size is very small and there is concern that sampling error may be influencing their point estimates, such that method variance effects might be underestimated.
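The logic of the sensitivity idea can be sketched with the correlation-based marker adjustment of Lindell and Whitney (2001), r_A = (r_U - r_M) / (1 - r_M), where r_U is the uncorrected correlation and r_M the marker correlation. The Phase III analysis in Williams et al. (2010) is a latent variable analogue that differs in detail, and the Fisher z confidence interval used here for r_M is an assumption of this sketch rather than a documented step of either procedure. Replacing r_M with its upper confidence limit gives a more conservative adjusted correlation, and because the interval widens as n shrinks, the gap between the two values grows in small samples, which is the situation the note describes. Function names and values are hypothetical.

```python
import math


def lw_adjusted_r(r_u, r_m):
    """Marker-adjusted correlation (Lindell and Whitney 2001): (r_U - r_M) / (1 - r_M)."""
    return (r_u - r_m) / (1 - r_m)


def upper_limit_r(r, n, z=1.96):
    """Upper confidence limit for a correlation via the Fisher z transform (assumed here)."""
    se = 1 / math.sqrt(n - 3)
    return math.tanh(math.atanh(r) + z * se)


def sensitivity(r_u, r_m, n, z=1.96):
    """Return the point-adjusted correlation and the one using the upper-limit r_M."""
    return lw_adjusted_r(r_u, r_m), lw_adjusted_r(r_u, upper_limit_r(r_m, n, z))


if __name__ == "__main__":
    # Hypothetical values: observed correlation, marker correlation, sample size.
    point, conservative = sensitivity(r_u=0.40, r_m=0.10, n=103)
    print(round(point, 3), round(conservative, 3))
```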

References

Anderson, J. C., & Gerbing, D. W. (1988). Structural equation modeling in practice: A review and recommended two-step approach. Psychological Bulletin, 103(3), 411–423.

Bagozzi, R. P. (1982). A field investigation of causal relations among cognitions, affect, intentions, and behavior. Journal of Marketing Research, 19, 562–584.

Bagozzi, R. P. (1984). Expectancy-value attitude models: An analysis of critical measurement issues. International Journal of Research in Marketing, 1(4), 295–310.

Barrick, M. R., & Mount, M. K. (1996). Effects of impression management and self-deception on the predictive validity of personality constructs. Journal of Applied Psychology, 81, 261–272.

Bentler, P. M., & Mooijaart, A. (1989). Choice of structural model via parsimony: A rationale based on precision. Psychological Bulletin, 106, 315–317.

Brief, A. P., Burke, M. J., George, J. M., Robinson, B. S., & Webster, J. (1988). Should negative affectivity remain an unmeasured variable in the study of job stress? Journal of Applied Psychology, 73, 193–198.

Brown, T. A. (2006). Confirmatory factor analysis for applied research. New York: Guilford Press.

Bryant, F. B., & Satorra, A. (2012). Principles and practice of scaled difference chi-square testing. Structural Equation Modeling: A Multidisciplinary Journal, 19, 372–398.

Campbell, D. T., & Fiske, D. W. (1959). Convergent and discriminant validation by the multitrait-multimethod matrix. Psychological Bulletin, 56(2), 81–105.

Chan, D. (2001). Method effects of positive affectivity, negative affectivity, and impression management in self-reports of work attitudes. Human Performance, 14(1), 77–96.

Chen, P. Y., & Spector, P. E. (1991). Negative affectivity as the underlying cause of correlations between stressors and strains. Journal of Applied Psychology, 76, 398–407.

Conway, J. M., & Lance, C. E. (2010). What reviewers should expect from authors regarding common method bias in organizational research. Journal of Business and Psychology, 25, 325–334.

Dawson, J. F. (2014). Moderation in management research: What, why, when, and how. Journal of Business and Psychology, 29, 1–19.

Diener, E., Emmons, R. A., Larsen, R. J., & Griffin, S. (1985). The satisfaction with life scale. Journal of Personality Assessment, 49, 71–75.

Ding, C., & Jane, T. (2015, August). Re-examining the effectiveness of the ULMC technique in CMV detection and correction. In L. J. Williams (Chair), Current topics in common method variance, Academy of Management Conference, Vancouver, BC.

Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50.

Ganster, D. C., Hennessey, H. W., & Luthans, F. (1983). Social desirability response effects: Three alternative models. Academy of Management Journal, 26, 321–331.

Haynes, C. E., Wall, T. D., Bolden, R. I., Stride, C., & Rick, J. E. (1999). Measures of perceived work characteristics for health services research: Test of a measurement model and normative data. British Journal of Health Psychology, 4, 257–275.

Heise, D. R., & Bohrnstedt, G. W. (1970). Validity, invalidity, and reliability. Sociological Methodology, 2, 104–129.

Johnson, R. E., Rosen, C. C., & Djurdjevic, E. (2011). Assessing the impact of common method variance on higher order multidimensional constructs. Journal of Applied Psychology, 96, 744–761.

Jöreskog, K. G. (1971). Simultaneous factor analysis in several populations. Psychometrika, 36, 409–426.

Karasek, R. (1979). Job demands, job decision latitude, and mental strain: Implications for job re-design. Administrative Science Quarterly, 24, 285–306.

Kenny, D. A., & Kashy, D. A. (1992). Analysis of the multitrait-multimethod matrix by confirmatory factor analysis. Psychological Bulletin, 112, 165–172.

Kline, R. B. (2010). Principles and practice of structural equation modeling (3rd ed.). New York: Guilford Press.

Landis, R. S. (2013). Successfully combining meta-analysis and structural equation modeling: Recommendations and strategies. Journal of Business and Psychology, 28, 251–261.

Lindell, M. K., & Whitney, D. J. (2001). Accounting for common method variance in cross-sectional research designs. Journal of Applied Psychology, 86, 114–121.

McDonald, R. P., & Ho, M. H. R. (2002). Principles and practice in reporting structural equation analyses. Psychological Methods, 7, 64–82.

McGonagle, A. K., Fisher, G. G., Barnes-Farrell, J. L., & Grosch, J. W. (2015). Individual and work factors related to perceived work ability and labor force outcomes. Journal of Applied Psychology, 100, 376–398. doi:10.1037/a0037974.

McGonagle, A., Williams, L. J., & Wiegert, D. (2014, August). A review of recent studies using an unmeasured latent method construct in the organizational literature. In L. J. Williams (Chair), Current issues in investigating common method variance. Presented at annual Academy of Management conference, Philadelphia, PA.

Podsakoff, P. M., MacKenzie, S. B., Lee, J. Y., & Podsakoff, N. P. (2003). Common method biases in behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology, 88, 879–903.

Podsakoff, P. M., MacKenzie, S. B., Moorman, R. H., & Fetter, R. (1990). Transformational leader behaviors and their effects on followers’ trust in leader, satisfaction, and organizational citizenship behaviors. Leadership Quarterly, 1(2), 107–142.

Podsakoff, P. M., MacKenzie, S. B., & Podsakoff, N. P. (2012). Sources of method bias in social science research and recommendations on how to control it. Annual Review of Psychology, 63, 539–569.

Podsakoff, P. M., & Organ, D. W. (1986). Self-reports in organizational research: Problems and prospects. Journal of Management, 12, 531–544.

Ragins, B. R., Lyness, K. S., Williams, L. J., & Winkel, D. (2014). Life spillovers: The spillover of fear of home foreclosure to the workplace. Personnel Psychology, 67, 763–800.

Richardson, H. A., Simmering, M. J., & Sturman, M. C. (2009). A tale of three perspectives: Examining post hoc statistical techniques for detection and correction of common method variance. Organizational Research Methods, 12, 762–800.

Rogers, W. M., & Schmitt, N. (2004). Parameter recovery and model fit using multidimensional composites: A comparison of four empirical parceling algorithms. Multivariate Behavioral Research, 39, 379–412.

Schaubroeck, J., Ganster, D. C., & Fox, M. L. (1992). Dispositional affect and work-related stress. Journal of Applied Psychology, 77, 322–335.

Schaufeli, W. B., & Bakker, A. B. (2003). The Utrecht Work Engagement Scale (UWES). Test manual. Utrecht: Department of Social & Organizational Psychology.

Schaufeli, W. B., Bakker, A. B., & Salanova, M. (2006). The measurement of work engagement with a short questionnaire: A cross-national study. Educational and Psychological Measurement, 66, 701–716.

Schmitt, N. (1978). Path analysis of multitrait-multimethod matrices. Applied Psychological Measurement, 2, 157–173.

Schmitt, N., Nason, E., Whitney, D. J., & Pulakos, E. D. (1995). The impact of method effects on structural parameters in validation research. Journal of Management, 21, 159–174.

Schmitt, N., Pulakos, E. D., Nason, E., & Whitney, D. J. (1996). Likability and similarity as potential sources of predictor-related criterion bias in validation research. Organizational Behavior and Human Decision Processes, 68(3), 272–286.

Schmitt, N., & Stults, D. M. (1986). Methodology review: Analysis of multitrait-multimethod matrices. Applied Psychological Measurement, 10(1), 1–22.

Simmering, M. J., Fuller, C. M., Richardson, H. A., Ocal, Y., & Atinc, G. M. (2015). Marker variable choice, reporting, and interpretation in the detection of common method variance: A review and demonstration. Organizational Research Methods, 18, 473–511. doi:10.1177/1094428114560023.

Smith, D. B., & Ellingson, J. E. (2002). Substance versus style: A new look at social desirability in motivating contexts. Journal of Applied Psychology, 87, 211–219.

Smith, C. S., Tisak, J., Hahn, S. E., & Schmieder, R. A. (1997). The measurement of job control. Journal of Organizational Behavior, 18, 225–237.

Spector, P. E. (2006). Method variance in organizational research: Truth or urban legend? Organizational Research Methods, 9, 221–232.