Abstract

External electric and mechanical stimuli can induce shape deformation in excitable media because of their intrinsic flexibility. When signal propagation in the media is described by a neural network, the creation of heterogeneity or defects can be regarded as the effect of shape deformation caused by the accumulation or release of energy in the media. In this paper, a temperature-light sensitive neuron model is developed from a nonlinear circuit composed of a phototube and a thermistor, and the physical energy is kept in capacitive and inductive terms. The Hamilton energy function for this functional neuron is then obtained theoretically. A regular neural network is built on a square array by activating electric synapses between adjacent neurons, and a few neurons in a local area are excited by noisy disturbance, which induces local energy diversity; continuous coupling then enables energy propagation and diffusion between neurons in the network. To keep local energy balance, one intrinsic parameter is regulated adaptively until the energy diversity in this local area is greatly decreased. Regular pattern formation indicates that local energy balance creates heterogeneity or defects, and a few neurons show a continuous parameter shift to keep energy balance in a local area, which supports a gradient energy distribution for propagating waves in the network.


1 Introduction

Artificial neuron models help to explore the working mechanisms of biological neurons by taming their firing patterns and revealing their energy characteristics. Neuron models improved or proposed by applying mathematical and physical theories have potential practical implications for understanding brain working patterns and constructing brain-like networks. In 1952, Hodgkin and Huxley [1] proposed the Hodgkin-Huxley (HH) neuron model by capturing and analyzing experimental data for membrane potential series from the squid axon. Neural networks consisting of HH neurons have been used to clarify the mechanisms of neural diseases [2, 3] and the stability of collective behaviors in networks [4, 5]. However, the many parameters of the HH neuron make it inconvenient for studying a single aspect of neuronal electrical activity. In 1961, FitzHugh [6] designed the simple FitzHugh-Nagumo (FHN) model based on the biological features of the HH model. The FHN model is composed of two ordinary differential equations, and a variety of firing patterns can be induced by adjusting the amplitude or frequency of an external periodic stimulus. In 1984, Hindmarsh and Rose [7] obtained the 3D Hindmarsh-Rose (HR) neuron model from voltage clamp experiments on snail nerve cells. The HR model neglects ion channel effects and is able to mimic the irregular firing behavior produced by mollusk neurons. Subsequently, many other types of neuron models have been proposed, for example, the Morris-Lecar neuron model [8,9,10], the Chay neuron model [11, 12], the memristive HR model [13], and the memristive FHN model [14].

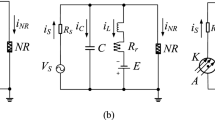

Among these neuron models, the FHN model has a simple structure, so its equivalent circuit is easy to realize, and rich firing behaviors and other characteristics can be explored by changing the external stimulus. Functional neuron models have been obtained by introducing electronic components with special functions into simple FHN neural circuits. For instance, in Refs. [15, 16], a photosensitive neuron model is proposed by connecting a photocell into different branches of a simple neural circuit. An auditory neuron model [17] is proposed by connecting a piezoelectric ceramic into a neural circuit. Xu et al. [18] designed a thermosensitive neuron by using a thermistor to replace the resistor in different branches of the FHN neural circuit. In Ref. [19], an ideal Josephson junction and a magnetic flux-controlled memristor are connected in parallel into an FHN neural circuit for estimating the effect of an external magnetic field. Yang et al. [20] developed a physical neuron for estimating electric field disturbance by connecting a charge-controlled memristor into a simple circuit. An improved photosensitive memristive neural circuit [21] is introduced by connecting a memristor in parallel into a photosensitive neural circuit.

Neurons can be excited to show rich dynamic behaviors, such as the resting state, spiking, bursting, chaotic firing, and mixed firing. For memristive neurons, multistability supports the coexistence of multiple firing patterns. Electrical activity requires a supply of energy. The Hamilton energy function for a neuron in oscillator form can be obtained by applying Helmholtz's theorem [22], and the energy function can also be mapped from the field energy of its equivalent neural circuit. In Refs. [23, 24], the dependence of energy on the firing modes of neuron models was discussed. Energy feedback control of neuron dynamics is investigated in Refs. [25,26,27]. The energy of a memristor-coupled neuron model is calculated in Ref. [28]. For two or more neurons, energy propagation and exchange drive the system toward energy balance, so that the coupled neurons can be tamed into synchronous firing patterns. For example, energy balance between neurons [29,30,31,32,33] and energy balance in neural networks [34] have been explored. According to Ref. [35], the exact Hamilton energy function is the most suitable Lyapunov function for a system. For further guidance, approaches to the Hamilton energy functions of some chaotic systems are presented in Refs. [36,37,38]. Energy flow is used to control the growth of synapses [39] and synchronization between neurons [40, 41]; in this way, the adaptive regulation mechanism of parameter and energy shifts in neurons is clarified. The recent work in Ref. [42] claimed that the four main firing modes in the Hindmarsh-Rose neuron are related to four energy levels, obtained by calculating the average energy under different firing modes. Mathematical neuron models seldom provide exact energy functions, and generic Lyapunov functions are used instead. When specific electric components are connected into nonlinear circuits to develop neural circuits [43,44,45,46], the field energy kept in the capacitor, inductor, and memristor can be classified into capacitive and inductive forms. An ideal Josephson junction can discern an external magnetic field, but it stores hardly any energy. Based on these memristive neurons, diverse electromagnetic induction [47,48,49,50,51] and uniform and non-uniform electromagnetic radiation [52,53,54,55] can be discerned by measuring pattern stability and wave propagation in memristive networks. Furthermore, field coupling between memristive neurons sheds light on the self-organization of neural networks without synaptic connections [56,57,58,59]. In a neural network, heterogeneity [60] can be formed when a finite number of neurons accumulate energy, and a defect area [61] is obtained when the energy of a finite number of neurons is reduced.

The shape deformation of neurons is irregular because of their flexible structures and their absorption of energy from external stimuli. It is interesting to investigate energy diffusion when the shape deformation of neurons occurs under noisy disturbance and non-uniform stimuli. When an energy function is available, the energy distribution and the energy balance between neurons provide clues for exploring wave propagation and self-organization in a functional neural network composed of functional neurons. In this work, a regular network composed of temperature-light sensitive neurons is designed, an energy function is defined, and an adaptive law is presented to control the parameter shift for keeping local energy balance, which supports the emergence of a gradient energy distribution for emitting wave fronts. This study offers guidance for exploring and understanding the transition of collective behaviors in a neural network controlled by energy flow.

2 Model and scheme

A temperature-light sensitive neural circuit is designed by connecting a phototube and a thermistor into a simple nonlinear circuit. This neuron can perceive external light and changes in temperature, and the connection between its electric components is displayed in Fig. 1.

According to Kirchhoff's laws, the circuit variables in Fig. 1 obey

where the current through the nonlinear resistor RN in Fig. 1 can be estimated by

where ρ and V0 are normalization parameters, and the time-varying voltage Vs for the phototube is taken in the periodic form

where B0 is a constant, and A0 and f0 represent the amplitude and angular frequency of the periodic voltage source. The resistance of the thermistor can be estimated by

where q is the activation energy, T denotes the temperature, K represents the Boltzmann constant, and R∞ is a constant corresponding to the thermistor resistance in the limit of infinitely high temperature (T→∞). The physical parameters and variables in Eqs. (1)–(4) are replaced with dimensionless forms as follows

where T0 is the reference temperature, whose value is selected as the material constant B′ (=q/K). Therefore, the neuron model controlled by photocurrent and temperature can be described by

Furthermore, the physical field energy in the neural circuit in Fig. 1 is calculated by

By applying the scale transformation in Eq. (5), the dimensionless Hamilton energy function is mapped from Eq. (7) as

From Eq. (8), the Hamilton energy function of an isolated neuron mainly depends on the variables (x, y) and the parameter c. To obtain the Hamilton energy function for the neuron theoretically, Helmholtz's theorem requires the neuron model in Eq. (6) to be written in vector form as follows

Helmholtz's theorem requires that the Hamilton energy function H meets the following criterion

A unique Hamilton energy function can be obtained as the exact solution of the following formula

The superscript T indicates the transpose of the gradient of the energy. As a result, a solution of Eq. (11) can be obtained, and it matches the Hamilton energy function presented in Eq. (8).
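As a minimal worked sketch of how the Helmholtz criterion is checked, suppose the energy takes a quadratic form in (x, y) weighted by c. This form is an assumption, chosen only because the text states that H depends on the variables (x, y) and the parameter c; the conservative field F_c used below is likewise a hypothetical FHN-type cross-coupling and should be compared against the vector form of the model before use.

```latex
\begin{aligned}
&\text{Assumed energy: } H = \tfrac{1}{2}x^{2} + \tfrac{1}{2c}\,y^{2},
\qquad \nabla H = \left(x,\ \tfrac{y}{c}\right)^{T},\\[2pt]
&\text{Decomposition: } \frac{dX}{d\tau} = F(X) = F_c(X) + F_d(X),\qquad X = (x, y)^{T},\\[2pt]
&\text{Criterion: } \nabla H^{T} F_c = 0,
\qquad \frac{dH}{d\tau} = \nabla H^{T} F_d,\\[2pt]
&\text{Check with } F_c = (-y,\ c\,x)^{T}:\quad
\nabla H^{T} F_c = x(-y) + \tfrac{y}{c}\,(c\,x) = 0 .
\end{aligned}
```

Under these assumptions the conservative part exchanges energy between the capacitive term x²/2 and the inductive term y²/(2c) without changing H, while all energy gain or loss comes from the dissipative part F_d.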

When the neurons are activated by different external stimuli, energy diversity between neurons can be generated. The connecting channels will then open and transmit energy to keep energy balance. In the presence of resistive coupling between these neurons, a regular network on a square array can be described by

where the subscripts (i, j) denote the node position, Gij denotes the regulation from adjacent nodes on the node (i, j) in the regular network, and k is the coupling intensity of the electric synaptic connection. For an isotropic network, all nodes have the same parameter setting, while a gradient distribution of temperature may induce spatial diversity and heterogeneity in the network.

3 Results and discussion

In this section, numerical solutions for a single functional neuron and for the regular network of coupled neurons are obtained on the MATLAB platform by applying the fourth-order Runge-Kutta algorithm with time step h=0.01. Bifurcation diagrams for the single neuron under changes of one parameter are shown in Fig. 2.

Fig. 2 Bifurcation diagrams for a neuron with different parameter settings. (a) ξ=0.175, B=0.6, A=0.9; (b) T′=5.5, B=0.6, A=0.9; (c) T′=5.5, ξ=0.175, A=0.9; (d) ξ=0.175, T′=5.5, B=0.6. Other parameters are chosen as a=0.7, b=0.8, c=0.1, f=1, and the initial value is set as (0.1, 0.3). xpeak denotes the peak values of the membrane potential x
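The numerical procedure behind such a bifurcation diagram can be sketched in Python as follows: integrate the neuron with the classical fourth-order Runge-Kutta scheme at h=0.01, discard a transient, and collect the local maxima of x. The function `neuron_rhs` is a hypothetical FHN-type stand-in, not the exact form of Eq. (6); only the parameter values and the initial state (0.1, 0.3) are taken from the text, and the names `rk4_step` and `peaks_of_x` are illustrative.

```python
import math

def rk4_step(f, t, state, h):
    """Advance `state` by one classical fourth-order Runge-Kutta step of size h."""
    k1 = f(t, state)
    k2 = f(t + h / 2, [s + h / 2 * k for s, k in zip(state, k1)])
    k3 = f(t + h / 2, [s + h / 2 * k for s, k in zip(state, k2)])
    k4 = f(t + h, [s + h * k for s, k in zip(state, k3)])
    return [s + h / 6 * (a + 2 * b + 2 * c + d)
            for s, a, b, c, d in zip(state, k1, k2, k3, k4)]

def neuron_rhs(t, state, a=0.7, b=0.8, c=0.1, xi=0.175,
               A=0.9, B=0.6, f=1.0, T=5.5):
    """HYPOTHETICAL FHN-type right-hand side (assumed, not the paper's Eq. (6))."""
    x, y = state
    drive = B + A * math.cos(f * t)          # assumed periodic phototube voltage
    dx = x * (1 - xi) - x ** 3 / 3 - y + xi * drive
    dy = c * (x + a - b * math.exp(1 / T) * y)  # assumed thermistor factor exp(1/T')
    return [dx, dy]

def peaks_of_x(n_steps=60000, h=0.01, transient=20000, **params):
    """Return local maxima of x after discarding the first `transient` steps."""
    state, t = [0.1, 0.3], 0.0
    xs = []
    for step in range(n_steps):
        state = rk4_step(lambda tt, s: neuron_rhs(tt, s, **params), t, state, h)
        t += h
        if step >= transient:
            xs.append(state[0])
    return [xs[i] for i in range(1, len(xs) - 1)
            if xs[i - 1] < xs[i] >= xs[i + 1]]
```

Calling `peaks_of_x(T=5.5)` for a sweep of one parameter and plotting the returned peaks against that parameter gives a diagram of the same kind as Fig. 2, although the detailed structure depends on the true model equations.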

The results in Fig. 2 show that the photocurrent can excite the neuron to present periodic and chaotic firing patterns when parameter values, including those of the forcing signal and the temperature, are adjusted. Furthermore, Fig. 3 plots the largest Lyapunov exponent (LLE) for different parameters.

In Fig. 3, an LLE greater than zero indicates a chaotic state, while an LLE less than zero indicates periodic patterns. In fact, bursting and spiking firing patterns are periodic modes. Furthermore, the sampled time series for the membrane potential and the Hamilton energy under different frequencies f are shown in Fig. 4.

Fig. 4 illustrates that the neuron presents three kinds of firing modes as the frequency is adjusted. The neuron has a higher mean Hamilton energy in bursting and spiking firing patterns, and a lower mean Hamilton energy in chaotic modes. To investigate the collective dynamical behaviors of the neural network presented in Eq. (12), the firing patterns in the network under different coupling intensities k are calculated, and the results are displayed in Fig. 5 for a network composed of 120×120 neurons with no-flux boundary conditions. For simplicity, all nodes start from random values within 0~1.
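The lattice setup described here can be sketched in Python as follows. The five-point nearest-neighbour difference used for the coupling term is an assumption (the explicit expression of Eq. (12) is not restated in this text), and the no-flux boundary is implemented by clamping indices at the edges so that a border node sees its own value in place of a missing neighbour. The helper names `coupling_term` and `random_lattice` are illustrative.

```python
import random

def coupling_term(x, i, j, k):
    """Assumed diffusive coupling k*(sum of 4 neighbours - 4*x[i][j]) with no-flux edges."""
    n_rows, n_cols = len(x), len(x[0])
    up = x[max(i - 1, 0)][j]                 # clamped index mirrors the border node
    down = x[min(i + 1, n_rows - 1)][j]
    left = x[i][max(j - 1, 0)]
    right = x[i][min(j + 1, n_cols - 1)]
    return k * (up + down + left + right - 4.0 * x[i][j])

def random_lattice(n, seed=0):
    """n-by-n lattice of initial values drawn uniformly from [0, 1)."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(n)] for _ in range(n)]
```

With this boundary treatment the coupling terms sum to zero over the whole lattice, so the coupling only redistributes the membrane potential between nodes and does not inject or remove it at the edges.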

Fig. 5 demonstrates that spiral wave segments can coexist with target waves in a local area of the network as the coupling intensity is adjusted. With a further increase of coupling intensity, the target waves are broken and a spiral wave develops close to the border of the network. The energy diffusion and energy distribution in the network with different coupling intensities k are shown in Fig. 6.

Similar to the results in Fig. 5, random diffusion of energy leads to the coexistence of target waves and spiral waves. When the coupling intensity continues to increase, the coexisting travelling waves evolve into a single spiral wave. To discern the collective response of the neural network under noisy excitation, the dynamic equations of the coupled functional neural network are expressed by

where ζ(τ) denotes Gaussian white noise with zero mean. Its statistical properties are <ζ(τ)>=0 and <ζ(τ)ζ(τ′)>=2Dδ(τ−τ′), where D is the noise intensity, and D=exp(−1/T′) accounts for temperature-dependent noisy disturbance. When noisy disturbance is applied on different regions, the energy injection from external stimuli breaks the energy balance and induces parameter diversity in the network. The noise is imposed on the network in two ways. In the first case, the noise is applied on the center of the network, and the region is selected as N/2−10≤i, j≤N/2+10. In the second case, the noise is added on the boundary region of the network, and the region is fixed as 1≤i≤10, 1≤j≤N. First, the case in which noise is imposed on the center of the network is investigated. The firing patterns in the network in the presence of different noise intensities are displayed in Fig. 7.
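A hedged Python sketch of the noise setup is given below. It computes D=exp(−1/T′), selects the two noisy regions with 1-based node indices, and draws a discrete noise increment. Two points are assumptions rather than statements from the text: the center region is taken as the square from N/2−10 to N/2+10 (matching the heterogeneity bounds m=N/2−10, n=N/2+10 quoted later), and the increment uses the Euler-Maruyama scaling sqrt(2Dh), since the way noise enters the integrator is not specified. All function names are illustrative.

```python
import math
import random

def noise_intensity(T):
    """Temperature-dependent noise intensity D = exp(-1/T')."""
    return math.exp(-1.0 / T)

def in_center(i, j, N, half_width=10):
    """True if node (i, j), 1-based, lies in the assumed central square."""
    lo, hi = N // 2 - half_width, N // 2 + half_width
    return lo <= i <= hi and lo <= j <= hi

def in_boundary(i, j, N, width=10):
    """True if node (i, j), 1-based, lies in the strip 1<=i<=width, 1<=j<=N."""
    return 1 <= i <= width and 1 <= j <= N

def noise_increment(D, h, rng):
    """Assumed Euler-Maruyama increment for <zeta zeta'> = 2 D delta: variance 2*D*h."""
    return math.sqrt(2.0 * D * h) * rng.gauss(0.0, 1.0)
```

For N=120 the central square covers 21×21 nodes and the boundary strip covers 10×120 nodes. Because D=exp(−1/T′) grows monotonically with T′, raising the temperature in this sketch strengthens the noise.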

It is confirmed that a stable and perfect target wave can be formed in the network as the temperature T′ increases, which changes the noise intensity according to D=exp(−1/T′). Indeed, the noise applied on the center area captures external energy for further spatial diffusion, so that waves can propagate in the network. Furthermore, the energy propagation in the network is analyzed by carefully changing the noise intensity, and the energy patterns are plotted in Fig. 8 to show the energy distribution under synaptic coupling.

The results in Fig. 8 show that energy diffusion in the network forms stable and perfect target waves when noise is applied on the center region of the network. It is also interesting to investigate the case in which the noise is added on the boundary region of the network, and the firing patterns of the network under different noise intensities are displayed in Fig. 9.

Fig. 9 demonstrates that the modes in the network are switched from a heterogeneous state to a stable traveling wave when noisy excitation is applied on the boundary region of the network. This result indicates that noisy excitation on the boundary of the network can effectively induce a wave similar to a plane wave as the temperature in the network is further increased. Fig. 10 shows the energy propagation in the network during the wave propagation. It is found that the profile of energy levels has a distribution similar to that of the membrane potential; that is, firing patterns are closely related to the energy level.

Similar to the results in Fig. 9, a stable traveling wave can be formed by applying noise on the boundary region of the network. Based on the adaptive property of biological neurons, a neural network in which energy diversity controls parameter shifts is described as follows

where the superscripts and subscripts (i, j) denote the node position in the regular network, and m and n represent the heterogeneity size or defect size. g denotes the gain for adjusting the internal parameter a. As presented in Eq. (6), the neuron model has several adjustable parameters including (a, b, c), and two parameters (A, B) are related to temperature. The parameter a in Eqs. (6) and (14) accounts for the reverse voltage of the neural circuit and the resting potential of one ion channel. In the presence of energy diversity or external energy injection from electric stimuli, the excitable media undergoes depolarization, and thus the reverse potential of ion channels can be switched. Therefore, Eq. (14) describes the adaptive growth of parameter a under energy flow. In extended studies, readers can explore similar cases of mode transition, pattern formation, and energy shift when other adjustable intrinsic parameters (b, c) are regulated by a similar adaptive law. The Heaviside function θ(*) is used to control the growth of parameter a. For a finite number of neurons, higher energies occur in heterogeneous regions, while lower energies occur in defect regions. When m1=m2=N/2−10 and n1=n2=N/2+10, the parameter a in the center of the network is increased from 0.1, and the parameter a for other areas remains 0.7. In this case, firing patterns in the network are shown in Fig. 11.
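The idea of a Heaviside-gated adaptive law can be illustrated with a short Python sketch. Everything specific here is hypothetical: the quadratic energy in `hamilton_energy`, the use of the mean neighbour energy as the reference, the threshold `eps`, and the explicit Euler update are assumptions chosen to show the mechanism, not the exact form of Eq. (14). Only the roles of the gain g, the parameter a, and the Heaviside switch are taken from the text.

```python
def heaviside(z):
    """Heaviside step: 1 for z > 0, otherwise 0."""
    return 1.0 if z > 0 else 0.0

def hamilton_energy(x, y, c=0.1):
    """ASSUMED quadratic energy with capacitive (x) and inductive (y) terms."""
    return 0.5 * x * x + y * y / (2.0 * c)

def adapt_parameter(a, H_node, H_neighbours, g, h, eps=0.0):
    """One Euler step of a hypothetical law da/dtau = g * theta(|H - <H>| - eps)."""
    mean_H = sum(H_neighbours) / len(H_neighbours)
    gap = abs(H_node - mean_H)               # local energy diversity
    return a + h * g * heaviside(gap - eps)  # a grows only while the gap exceeds eps
```

For example, `adapt_parameter(0.1, 1.0, [0.2, 0.2, 0.2, 0.2], g=0.5, h=0.01, eps=0.1)` raises a by g·h, whereas a node whose energy already matches its neighbours keeps its value, which mirrors the saturation of the parameter once local energy balance is reached.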

From Fig. 11, continuous target waves can be induced by the parameter shift in the center of the network, whether the network presents chaotic or periodic states. Heterogeneous regions form target waves in the network within a finite transient period. In this case, energy diffusion in the network is shown in Fig. 12.

It is confirmed that the propagation of target waves in the network is supported by the energy diversity formed at the center of the network. Furthermore, when m1=m2=1, n1=10, and n2=N, the parameter a on the boundary region of the network is increased from 0.1, and the parameter a for other areas remains 0.7. The firing patterns in the network in this case are shown in Fig. 13.

Similar to the case in which noise is applied on the boundary region of the network, stable and perfect traveling waves can be formed. The coexistence of traveling and spiral waves appears within finite periods. The energy propagation in the network is shown in Fig. 14.

The results in Fig. 14 show that energy diversity exists on the boundary of the network, and a parameter shift is induced to regulate the energy diversity for reaching local energy balance and synchronization. The distribution of energy values is similar to the profile of membrane potential patterns because the firing patterns are related to the energy levels of the neurons.

In summary, the connection of a phototube and a thermistor makes the photocurrent dependent on the temperature. As a result, any change of temperature modifies the excitability, and mode selection is controlled completely. Consequently, the energy level is switched during changes of temperature in a single neuron. When more neurons are clustered, synaptic coupling helps to decrease energy diversity, and energy balance supports complete synchronization for developing homogeneous states in the network. Local noisy disturbance injects energy and breaks the energy balance to develop wave fronts, which diffuse the energy in the network. To approach energy balance quickly, one intrinsic controllable parameter grows adaptively under energy diversity until the neurons reach a stable energy balance, after which the parameter keeps a saturation value; this supports stable wave propagation and regular spatial patterns in the neural network.

4 Open problem

As is known, thermistors are divided into two types according to the sign of dR/dT. For a negative temperature coefficient thermistor (NTCT), dR/dT<0 and its resistance decreases with increasing temperature. A positive temperature coefficient thermistor (PTCT) requires dR/dT>0, and its resistance increases with temperature. When the thermistor in Fig. 1 is considered as a PTCT, the neural circuit in Fig. 1 is still described by Eq. (1). The channel current along RN in Fig. 1 can be estimated by Eq. (2) or other forms, and the time-varying voltage Vs across the phototube is taken as in Eq. (3). The resistance of the PTCT can be estimated by

R∞ measures the maximal resistance for the PTCT at T→0. With a similar scale transformation, the physical variables and intrinsic parameters are replaced with a group of dimensionless variables as follows

As a result, the temperature-light sensitive neuron model can be updated as follows

The field energy is mainly kept in two energy storage components, the capacitor and the induction coil. The two kinds of neural circuits (and the equivalent neuron models) therefore have the same energy function, even though temperature changes have different impacts on mode transition and energy propagation in the neurons. This neuron model can be further used to explore the collective patterns and energy distribution in neural networks with a gradient temperature distribution. Furthermore, memristive terms can also be introduced into Eq. (17), and the energy is then shared by the memristive channel in addition to the capacitive and inductive components. When the functional neural network is excited by spatial stimuli, for example, a gradient distribution of illumination and temperature, all the neurons become non-identical, and the self-organization and emergence of regularity in the network can give clues for understanding the regulatory role of energy flow in the network. Besides functional electric components such as the thermistor and piezoelectric ceramic, the memristor is considered an important candidate for intelligent computation in AI design and adaptive control [62].

5 Conclusion

In this work, a temperature-light sensitive neuron is obtained by connecting a phototube and a thermistor into a simple capacitive-inductive circuit coupled with a nonlinear resistor. A regular network on a square array is built to discern the energy distribution during pattern formation and wave propagation under synaptic coupling. Heterogeneity and defects are created along with the shift of the parameter a in a few neurons when noisy excitation is applied on a local area of the network. Energy diffusion from the center area and from the boundary area of the network is investigated, respectively. The results indicate that the energy diversity of different regions of the network forms stable and perfect target and traveling waves. Adaptive growth of one or more intrinsic parameters helps to decrease energy diversity, and a stable energy balance in the network is effective in inducing continuous wave fronts, so that regular patterns can develop in the network. As a result, external signals can be perceived and then propagated in the network effectively.

Data availability

Data are available upon reasonable request from the corresponding author.

References

Hodgkin, A.L., Huxley, A.F.: A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 117, 500 (1952)

Zhang, X., Gu, H., Wu, F.: Memristor reduces conduction failure of action potentials along axon with Hopf bifurcation. Eur. Phys. J. Special Topics 228, 2053–2063 (2019)

Khodashenas, M., Baghdadi, G., Towhidkhah, F.: A modified Hodgkin-Huxley model to show the effect of motor cortex stimulation on the trigeminal neuralgia network. J. Math. Neurosci. 9, 1–23 (2019)

Bao, H., Zhang, Y.Z., Liu, W.B., Bao, B.C.: Memristor synapse-coupled memristive neuron network: synchronization transition and occurrence of chimera. Nonlinear Dyn. 100, 937–950 (2020)

Baysal, V., Saraç, Z., Yilmaz, E.: Chaotic resonance in Hodgkin-Huxley neuron. Nonlinear Dyn. 97, 1275–1285 (2019)

FitzHugh, R.: Impulses and physiological states in theoretical models of nerve membrane. Biophys. J. 1, 445–466 (1961)

Hindmarsh, J.L., Rose, R.M.: A model of neuronal bursting using three coupled first order differential equations. Proc. Royal Soc. London Ser. B 221, 87–102 (1984)

Tsumoto, K., Kitajima, H., Yoshinaga, T., Aihara, K., Kawakami, H.: Bifurcations in Morris-Lecar neuron model. Neurocomput. 69(4–6), 293–316 (2006)

Hu, X., Liu, C., Liu, L., Ni, J., Li, S.: An electronic implementation for Morris-Lecar neuron model. Nonlinear Dyn. 84, 2317–2332 (2016)

Hayati, M., Nouri, M., Haghiri, S., Abbott, D.: Digital multiplierless realization of two coupled biological Morris-Lecar neuron model. IEEE T. Circuits-I 62, 1805–1814 (2015)

Xu, Q., Tan, X., Zhu, D., Bao, H., Hu, Y.H., Bao, B.C.: Bifurcations to bursting and spiking in the Chay neuron and their validation in a digital circuit. Chaos Solitons Fract. 141, 110353 (2020)

Shadizadeh, S.M., Nazarimehr, F., Jafari, S., Rajagopal, K.: Investigating different synaptic connections of the Chay neuron model. Physica A 607, 128242 (2022)

Bao, H., Liu, W., Ma, J., Wu, H.: Memristor initial-offset boosting in memristive HR neuron model with hidden firing patterns. Int. J. Bifurcat. Chaos 30, 2030029 (2020)

Fang, X., Duan, S., Wang, L.: Memristive FHN spiking neuron model and brain-inspired threshold logic computing. Neurocomput. 517, 93–105 (2023)

Liu, Y., Xu, W., Ma, J., Alzahrani, F., Hobiny, A.: A new photosensitive neuron model and its dynamics. Front. Inform. Technol. Electron. Eng. 21, 1387–1396 (2020)

Xie, Y., Yao, Z., Hu, X.K., Ma, J.: Enhance sensitivity to illumination and synchronization in light-dependent neurons. Chin. Phys. B 30, 120510 (2021)

Guo, Y., Zhou, P., Yao, Z., Ma, J.: Biophysical mechanism of signal encoding in an auditory neuron. Nonlinear Dyn. 105, 3603–3614 (2021)

Xu, Y., Guo, Y., Ren, G., Ma, J.: Dynamics and stochastic resonance in a thermosensitive neuron. Appl. Math. Comput. 385, 125427 (2020)

Zhang, Y., Xu, Y., Yao, Z., Ma, J.: A feasible neuron for estimating the magnetic field effect. Nonlinear Dyn. 102, 1849–1867 (2020)

Yang, F., Xu, Y., Ma, J.: A memristive neuron and its adaptability to external electric field. Chaos 33, 023110 (2023)

Njitacke, Z.T., Ramadoss, J., Takembo, C.N., Rajagopal, K., Awrejcewicz, J.: An enhanced FitzHugh–Nagumo neuron circuit, microcontroller-based hardware implementation: Light illumination and magnetic field effects on information patterns. Chaos Solitons Fract. 167, 113014 (2023)

Kobe, D.H.: Helmholtz’s theorem revisited. Am. J. Phys. 54, 552–554 (1986)

Wang, Y., Wang, C.N., Ren, G.D., Tang, J., Jin, W.Y.: Energy dependence on modes of electric activities of neuron driven by multi-channel signals. Nonlinear Dyn. 89, 1967–1987 (2017)

Yang, Y., Ma, J., Xu, Y., Jia, Y.: Energy dependence on discharge mode of Izhikevich neuron driven by external stimulus under electromagnetic induction. Cogn. Neurodyn. 15, 265–277 (2021)

Usha, K., Subha, P.A.: Energy feedback and synchronous dynamics of Hindmarsh-Rose neuron model with memristor. Chin. Phys. B 28, 020502 (2019)

An, X.L., Qiao, S.: The hidden, period-adding, mixed-mode oscillations and control in a HR neuron under electromagnetic induction. Chaos Solitons Fract. 143, 110587 (2021)

Ma, J., Wu, F.Q., Jin, W.Y., Zhou, P., Hayat, T.: Calculation of Hamilton energy and control of dynamical systems with different types of attractors. Chaos 27, 053108 (2017)

Njitacke, Z.T., Koumetio, B.N., Ramakrishnan, B., Leutcho, G.D., Fozin, T.F., Tsafack, N., Rajagopal, K., Kengne, J.: Hamiltonian energy and coexistence of hidden firing patterns from bidirectional coupling between two different neurons. Cogn. Neurodyn. 16, 899–916 (2022)

Xie, Y., Yao, Z., Ma, J.: Phase synchronization and energy balance between neurons. Front. Inform. Technol. Electron. Eng. 23, 1407–1420 (2022)

Wang, Y., Sun, G.P., Ren, G.D.: Diffusive field coupling-induced synchronization between neural circuits under energy balance. Chin. Phys. B 32, 040504 (2023)

Sun, G.P., Yang, F.F., Ren, G.D., Wang, C.N.: Energy encoding in a biophysical neuron and adaptive energy balance under field coupling. Chaos Solitons Fract. 169, 113230 (2023)

Yang, F., Ma, J.: Creation of memristive synapse connection to neurons for keeping energy balance. Pramana 97, 55 (2023)

Wang, C., Sun, G., Yang, F., Ma, J.: Capacitive coupling memristive systems for energy balance. AEU-Int. J. Electron. Commun. 153, 154280 (2022)

Yang, F., Ma, J.: Synchronization and energy balance of star network composed of photosensitive neurons. Eur. Phys. J. Spec. Top. 231(22–23), 4025–4035 (2022)

Zhou, P., Hu, X., Zhu, Z., Ma, J.: What is the most suitable Lyapunov function? Chaos Solitons Fract. 150, 111154 (2021)

Zhang, G., Wang, C., Alsaedi, A., Ma, J., Ren, G.: Dependence of hidden attractors on non-linearity and Hamilton energy in a class of chaotic system. Kybernetika 54, 648–663 (2018)

He, F., Abdullah, Z.K., Saberi-Nik, H., Awrejcewicz, J.: The bounded sets, Hamilton energy, and competitive modes for the chaotic plasma system. Nonlinear Dyn. 111, 4847–4862 (2023)

Leutcho, G.D., Khalaf, A.J.M., Tabekoueng, Z.N., Fozin, T.F., Kengne, J., Jafari, S., Hussain, I.: A new oscillator with mega-stability and its Hamilton energy: infinite coexisting hidden and self-excited attractors. Chaos 30, 033112 (2020)

Ma, X., Xu, Y.: Taming the hybrid synapse under energy balance between neurons. Chaos Solitons Fract. 159, 112149 (2022)

Xie, Y., Zhou, P., Ma, J.: Energy balance and synchronization via inductive-coupling in functional neural circuits. Appl. Math. Model. 113, 175–187 (2023)

Yao, Z., Zhou, P., Zhu, Z., Ma, J.: Phase synchronization between a light-dependent neuron and a thermosensitive neuron. Neurocomput. 423, 518–534 (2021)

Li, X., Xu, Y.: Energy level transition and mode transition in a neuron. Nonlinear Dyn. 112, 2253–2263 (2024)

Wu, F., Wang, R.: Synchronization in memristive HR neurons with hidden coexisting firing and lower energy under electrical and magnetic coupling. Commun. Nonlin. Sci. Numer. Simulat. 126, 107459 (2023)

Wu, F., Kang, T., Shao, Y., Wang, Q.: Stability of Hopfield neural network with resistive and magnetic coupling. Chaos Solitons Fract. 172, 113569 (2023)

Wu, F., Yao, Z.: Dynamics of neuron-like excitable Josephson junctions coupled by a metal oxide memristive synapse. Nonlinear Dyn. 111, 13481–13497 (2023)

Yao, Z., Sun, K., He, S.: Firing patterns in a fractional-order FitzHugh-Nagumo neuron model. Nonlinear Dyn. 110, 1807–1822 (2022)

Kafraj, M.S., Parastesh, F., Jafari, S.: Firing patterns of an improved Izhikevich neuron model under the effect of electromagnetic induction and noise. Chaos Solitons Fract. 137, 109782 (2020)

Baysal, V., Yilmaz, E.: Effects of electromagnetic induction on vibrational resonance in single neurons and neuronal networks. Physica A 537, 122733 (2020)

Upadhyay, R.K., Sharma, S.K., Mondal, A., Mondal, A.: Emergence of hidden dynamics in different neuronal network architecture with injected electromagnetic induction. Appl. Math. Model. 111, 288–309 (2022)

Rostami, Z., Jafari, S.: Defects formation and spiral waves in a network of neurons in presence of electromagnetic induction. Cogn. Neurodyn. 12, 235–254 (2018)

Wang, G., Yang, L., Zhan, X., Li, A., Jia, Y.: Chaotic resonance in Izhikevich neural network motifs under electromagnetic induction. Nonlinear Dyn. 107, 3945–3962 (2022)

Guo, Y., Xie, Y., Ma, J.: Nonlinear responses in a neural network under spatial electromagnetic radiation. Physica A 626, 129120 (2023)

Xu, Y., Ren, G., Ma, J.: Patterns stability in cardiac tissue under spatial electromagnetic radiation. Chaos Solitons Fract. 171, 113522 (2023)

Vignesh, D., Ma, J., Banerjee, S.: Multi-scroll and coexisting attractors in a Hopfield neural network under electromagnetic induction and external stimuli. Neurocomput. 564, 126961 (2024)

Guo, Y., Lv, M., Wang, C., Ma, J.: Energy controls wave propagation in a neural network with spatial stimuli. Neural Netw. 171, 1–13 (2024)

Xu, Y., Jia, Y., Wang, H., Liu, Y., Wang, P., Zhao, Y.: Spiking activities in chain neural network driven by channel noise with field coupling. Nonlinear Dyn. 95, 3237–3247 (2019)

Wu, Y., Ding, Q., Yu, D., Li, T., Jia, Y.: Pattern formation induced by gradient field coupling in bi-layer neuronal networks. Eur. Phys. J. Special Topics 231(22–23), 4077–4088 (2022)

Ge, M., Jia, Y., Xu, Y., Lu, L., Wang, H., Zhao, Y.: Wave propagation and synchronization induced by chemical autapse in chain Hindmarsh-Rose neural network. Appl. Math. Comput. 352, 136–145 (2019)

Zhou, P., Zhang, X., Hu, X., Ren, G.: Energy balance between two thermosensitive circuits under field coupling. Nonlinear Dyn. 110, 1879–1895 (2022)

Xie, Y., Yao, Z., Ma, J.: Formation of local heterogeneity under energy collection in neural networks. Sci. China Technol. Sci. 66, 439–455 (2023)

Yang, F., Wang, Y., Ma, J.: Creation of heterogeneity or defects in a memristive neural network under energy flow. Commun. Nonlin. Sci. Numer. Simulat. 119, 107127 (2023)

Poznanski, R.R., Cacha, L.A., Sbitnev, V.I., Iannella, N., Parida, S., Brandas, E.J., Achimowicz, J.Z.: Intentionality for better communication in minimally conscious AI design. J. Multis. Neurosci. 3, 1–12 (2024)

Funding

National Natural Science Foundation of China, 12072139.

Author information

Contributions

Feifei Yang finished the definition of dynamical model, numerical results, and figures. Qun Guo verified the proof and numerical solutions. Guodong Ren edited the draft and presented analysis. Jun Ma suggested this research, edited this writing and explained the results.

Ethics declarations

Ethics approval

This is a theoretical study. The Research Ethics Committee of Lanzhou University of Technology has confirmed that no ethical approval is required.

Informed consent

All the authors (Feifei Yang, Qun Guo, Guodong Ren, and Jun Ma) agree to submit and publish this work in the Journal of Biological Physics.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yang, F., Guo, Q., Ren, G. et al. Wave propagation in a light-temperature neural network under adaptive local energy balance. J Biol Phys (2024). https://doi.org/10.1007/s10867-024-09659-1


DOI: https://doi.org/10.1007/s10867-024-09659-1