Abstract

A multiple baseline design was used across probe sets to evaluate the effect of detect, practice, and repair (DPR) on the math fact fluency rates of a fourth-grade class. DPR was applied with a large group (n = 11) selected from an intact class (n = 17) in a manner that allowed each student to work on specific problems from a specific basic fact operation (i.e., subtraction, multiplication, or division) based on a daily assessment of their instructional needs. Prior to the implementation of DPR, students were computing an average of 18.4 digits correct per minute (DCPM). After using DPR for 11 sessions, students were computing an average of 31.2 DCPM. This 12.8 DCPM increase over 36 problems required approximately 132 min of instruction (12 min per session). Discussion focuses on the need for, and challenges associated with, developing intervention strategies and procedures that efficiently increase student skills at the class-wide level, with an emphasis on simultaneously meeting the needs of both high and low achievers by differentiating instruction.

Introduction

According to the most recent findings presented by the United States Department of Education, students are struggling with basic mathematical skills (National Center for Education Statistics (NCES), 2011). Results showed that only 40 % of fourth-graders and 35 % of eighth-graders performed at or above the proficient level on the National Assessment of Educational Progress (NAEP). These results converge to support the need for empirically validated interventions to increase mathematics skills. Furthermore, the pervasive occurrence of math skill deficiencies suggests that researchers need to identify educational treatments that remedy these deficits not only for at-risk students and students with disabilities, but also at the classroom level.

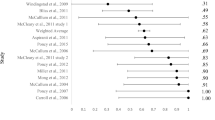

Two recent meta-analyses by Codding et al. (2009) and Burns et al. (2010) collectively identified 40 studies spanning eight intervention procedures designed to increase fact skills. The results showed moderate to large effect sizes for most of the studies, which targeted basic as well as advanced computation skills. While these data are encouraging, a majority of these studies demonstrated the efficacy of these interventions with small groups or individual students. Given the prevalence of math skill deficiencies across the general school population (NCES 2011), researchers need to supplement these data with studies investigating procedures designed to prevent and/or remedy math fact deficits at the class-wide level (Hawkins 2010; Poncy et al. 2010a; Poncy and Skinner 2011).

One group-oriented intervention targeting math facts that has been empirically validated is Detect, Practice, and Repair (DPR) (Poncy et al. 2006, 2010b). DPR is a set of procedures designed to individualize math fact instruction for each student within a class-wide setting that targets a specific basic fact group (e.g., subtraction). To achieve this goal, a three-phase test-teach-test procedure is used (i.e., Detect–Practice–Repair).

The detect phase uses a paced pre-test to identify the items each student will practice. Specifically, an apparatus (e.g., a metronome) is used to prompt student responding to each math problem at a predetermined rate (e.g., one problem every 1.5–2 s). After the defined time period elapses, students are prompted to go to the next problem until all of the items have been attempted. The paced assessment was designed to differentiate between items that could be completed with automaticity (i.e., under 1.5–2 s) and items that a student would complete accurately but slowly (i.e., more than 2 s). To successfully complete the detect phase, students need to respond automatically to each problem. Problems that cannot be completed automatically are identified and practiced during the next phase. After the detect phase is completed, each student identifies the first five uncompleted problems on his/her particular pre-test and uses these targeted facts in the practice phase.

During the practice phase, Cover, Copy, and Compare (CCC) procedures (Skinner et al. 1989) are used to practice each of the five dysfluent items identified in the detect phase five times. To maximize the effectiveness of the practice phase, students should be instructed to complete the items starting with the first item and progressing to the fifth item and then repeating the process until they complete all 25 items or a pre-determined amount of time expires (i.e., “Do not do the first problem five consecutive times, then the second problem five times,” see Belfiore et al. 1995). Lastly, the students complete the repair phase. The repair phase consists of an explicit timing (ET) procedure (Rhymer et al. 2002), with an alternate form of the detect assessment to elicit generalized responding with the five targeted fact problems within the larger pool of items. Student performance on this math sprint is totaled and graphed by each student.
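The ordering rule can be made concrete. The Python sketch below (function names and target facts are hypothetical, not drawn from published DPR materials) builds the 25-trial sequence for five targets under the recommended interleaved order and under the massed order students are told to avoid.

```python
def interleaved_schedule(targets, passes=5):
    """Recommended CCC order: items 1-5 in turn, repeated once per pass."""
    return [item for _ in range(passes) for item in targets]


def massed_schedule(targets, passes=5):
    """Order to avoid: each item practiced `passes` times in a row."""
    return [item for item in targets for _ in range(passes)]


# Hypothetical detect-phase targets for one student.
targets = ["6x8", "7x9", "8x4", "9x6", "7x7"]
order = interleaved_schedule(targets)
print(len(order), order[:7])
```

With five targets and five passes, both functions return 25 trials; only the order differs, so each fact recurs after four other facts in the interleaved version rather than immediately.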

To date, there have been four published studies investigating DPR (Axtell et al. 2009; Parkhurst et al. 2010; Poncy et al. 2006, 2010b). DPR was first introduced by Poncy et al. (2006) in a study that targeted basic subtraction facts with a class of 14 third-grade students. Results supported the use of DPR as an effective class-wide procedure, as the average digits correct per minute (DCPM) score rose from 11 to 20.5 across a 6-week period. However, there were several limitations. Most notably, the investigation used a non-experimental (A–B) design, which does not control for threats to internal validity. Another serious limitation was the researchers' failure to collect treatment integrity measures from the involved teachers (Poncy et al. 2006). Although these preliminary findings favored the use of DPR as a class-wide treatment, experimental studies were needed to confirm the A–B results.

Axtell et al. (2009) used an experimental group design to investigate the effect of DPR on 36 middle school students’ division fluency. Results showed that students made significant gains compared to a control group across a 17-day period (p = .016). The treatment group showed an increase of approximately 10 DCPM (pre-test score of 14 DCPM and post-test score of 24 DCPM). These researchers were the first to experimentally validate DPR as a means of increasing math fact fluency, targeting the division fluency of a group of low-performing middle school students in a remedial summer program.

Poncy et al. (2010b) used a multiple-probe-across-problem-sets design with a group of seven third-grade students in need of remediation with multiplication facts. Phase averages indicated that students grew from a baseline average of 20.9 DCPM to 33.3 DCPM over an 11-day period targeting 36 distinct problems. This 12.4 DCPM increase occurred over approximately 132 min (12 min per session) of instruction and resulted in an average increase of 5.7 DCPM per hour of instructional time.
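The efficiency index is the DCPM gain divided by instructional time in hours. A minimal sketch follows; the function name is ours, not from the source. With the rounded phase averages given above (20.9 and 33.3 DCPM over 11 sessions of 12 min), it yields about 5.6 DCPM per hour; the small difference from the reported 5.7 presumably reflects rounding in the published averages.

```python
def gain_per_hour(baseline_dcpm, intervention_dcpm, sessions, minutes_per_session):
    """DCPM gained per hour of instruction (gain / instructional hours)."""
    gain = intervention_dcpm - baseline_dcpm
    hours = sessions * minutes_per_session / 60
    return gain / hours


rate = gain_per_hour(20.9, 33.3, sessions=11, minutes_per_session=12)
print(f"{rate:.2f} DCPM per hour")
```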

Parkhurst et al. (2010) used DPR to target multiplication fact fluency using a multiple baseline design with a group of 10 low-achieving fifth-grade students. Although they largely adhered to previous administration guidelines for DPR, they altered the pacing procedure in an effort to more validly identify the problems used in the practice phase. Specifically, they replaced the use of a metronome with a paced PowerPoint slide that showed each problem for 3 s. Students were given a record sheet with 12 spots to write the answer for the 12 corresponding problems provided by the slide show. To control for students who would work ahead or fall behind the metronome, no fact problems were provided on the detect assessment sheet. Thus, each problem could be seen as a distinct single-problem automaticity assessment. When they finished the detect phase, a PowerPoint slide with the problems and correct answers was displayed so that students could identify the first five problems that they did not complete accurately within 3 s. Results supported this variation with students in the study growing an average of 21 DCPM over 14 days of instruction on 36 problems.

DPR is a relatively new intervention package that has been empirically validated across grades, skills, and research designs. Although these data are encouraging, more studies investigating DPR need to be conducted to provide evidence of internal, external, and contextual validity (i.e., evidence that teachers can apply the procedures in the classroom, see Foster and Skinner 2011). For example, Poncy et al. (2006) originally described DPR as an intervention package that used class-wide procedures to differentiate curricular items for each student (i.e., each student works on problems specific to his/her needs). To date, researchers have demonstrated curricular differentiation but have targeted groups of students in need of remediation (i.e., Tier 2 application) and have targeted a single operation (e.g., multiplication facts). In later elementary grades (e.g., 4–6), it is not uncommon to find students with varying levels of skill development across basic facts (Howell et al. 1993); for example, some students may need to work on enhancing subtraction fact fluency, while others need to work on division fact fluency. The purpose of the current study is to examine the effects of DPR on the basic fact fluency of a large group (n = 11) of students selected from an intact fourth-grade classroom (n = 17), with each student assigned the basic fact operation that best matched his or her needs.

Method

Participants and Setting

Participants included 11 fourth-grade students with ages ranging from 8 to 10 years old who attended an elementary school in north-central Iowa. Ten (91 %) of the students were Caucasian, and one (9 %) student was Asian. Seven (64 %) were female, and four (36 %) were male. Lastly, one (9 %) of the students received special education services in the area of mathematics. The study was conducted during the last month of the fourth-grade school year and all students had received instruction in all basic fact problems. The school psychologist assigned to the elementary building conducted the intervention with the assistance of an undergraduate student majoring in psychology. Intervention sessions were conducted once per day and occurred in the general education classroom during the first 15 min of the scheduled math period. All assessment data were collected at the beginning of each school day. Convenience sampling was used as the school psychologist invited the teacher and her students to participate.

All students in the class (n = 17) were screened for participation in the study using a worksheet containing 80 math fact problems in the areas of division, subtraction, and multiplication. Students were classified as proficient if they were able to finish the targeted skill test in 2 min with a minimum of 90 % accuracy. Initially, all students were given a division test. Five students demonstrated proficient responding (i.e., they finished the test in 2 min with at least 90 % accuracy) and were excluded from the study. Next, the 12 remaining students were tested on subtraction. All but two met the proficiency criteria for subtraction. The 10 students who met subtraction criteria were tested on multiplication. Seven of these students met the multiplication criteria. These screening assessments consequently identified two students in need of subtraction, three students in need of multiplication, and seven in need of division fact fluency instruction. The five students excluded from the study went with the classroom teacher and worked on multi-digit multiplication problems during the course of the study. The data for one student who was deficient in multiplication were dropped from the study due to three consecutive absences.
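The screening sequence amounts to a simple decision rule for assigning each student's target operation. The sketch below is our reading of that rule, not code used in the study; the function name and the dictionary of proficiency results are hypothetical. A student counts as proficient on an operation if he or she finished its 2-min test with at least 90 % accuracy.

```python
def assign_target(proficient):
    """Return the operation to practice, or None if the student is excluded.

    `proficient` maps an operation name to True/False. Tests are read in the
    order they were given (division, subtraction, multiplication), and later
    keys are only consulted when the earlier results make them necessary.
    """
    if proficient["division"]:
        return None              # fluent in division: excluded from the study
    if not proficient["subtraction"]:
        return "subtraction"     # earliest deficient operation is targeted
    if not proficient["multiplication"]:
        return "multiplication"
    return "division"            # fluent in subtraction and multiplication only


print(assign_target({"division": False, "subtraction": True, "multiplication": False}))
```

Applied to the screening results described above, this rule yields the reported split of two subtraction, three multiplication, and seven division students among the 12 who were not excluded.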

Materials

Materials used for the intervention consisted of an assessment packet, an intervention packet, and a student folder with a graph. Other materials included an assessment and intervention integrity checklist (see “Appendix”), a stopwatch to time each phase of the intervention, and a metronome used to prompt students during the tap-a-problem phase of DPR.

Assessment Packet

Baseline and intervention assessment data were collected using experimenter-constructed probes at the beginning of each school day. Basic facts used in the assessment probes were divided into three mutually exclusive sets containing 12 problems each. This was done for each of the operations used in the study (see Table 1). Fact sets were selected by first eliminating all problems containing a zero or a one. Next, reciprocal facts were removed (e.g., 6 × 8 was selected, and therefore, 8 × 6 was excluded). Last, the experimenters attempted to distribute problems containing specific numbers (e.g., facts containing 4) evenly across the sets. This is consistent with previous research that has used specific math fact sets with single case designs to evaluate student growth (see McCallum et al. 2006; Poncy and Skinner 2011; Poncy et al. 2010b, 2007).
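For multiplication, the selection rules above leave exactly 36 facts (all factor pairs from 2 through 9 with reciprocals dropped), which is consistent with three sets of 12. The Python sketch below reproduces that count. The round-robin split is a hypothetical stand-in for the experimenters' hand balancing: it spreads facts across sets but does not guarantee that each number appears equally often in every set.

```python
from itertools import combinations_with_replacement


def multiplication_facts():
    """Factor pairs from 2 to 9 with reciprocals removed.

    combinations_with_replacement yields pairs (a, b) with a <= b, so 6 x 8
    is kept and its reciprocal 8 x 6 never appears; 0 and 1 are excluded
    by the range itself.
    """
    return list(combinations_with_replacement(range(2, 10), 2))


def split_round_robin(facts, n_sets=3):
    """Deal facts into n_sets mutually exclusive sets (hypothetical rule)."""
    return [facts[i::n_sets] for i in range(n_sets)]


facts = multiplication_facts()
set_a, set_b, set_c = split_round_robin(facts)
print(len(facts), [len(s) for s in (set_a, set_b, set_c)])
```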

For each set of problems, six different assessment probes were constructed. Each probe contained 48 problems in 8 rows of 6 problems, with each of the 12 problems appearing four times on the page. Problems were arranged so that the same problem never appeared directly above or below itself and never appeared twice in succession (i.e., the last problem of a row was never the first problem of the next row). During data collection for the dependent variable, each student received a packet containing three probes (i.e., one probe each from sets A, B, and C) in his or her identified target skill (i.e., subtraction, multiplication, or division). All probes were counterbalanced.
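The two layout rules can be enforced mechanically. The sketch below is one possible construction, not the procedure the experimenters necessarily used; the function names and the use of seeded reshuffling are our assumptions. Each 12-problem block fills two rows, so repeats can only arise where one block meets the next, and a block is reshuffled until the growing grid still satisfies both rules.

```python
import random

ROWS, COLS = 8, 6  # 48 positions per probe, read left to right, top to bottom


def is_valid(grid):
    """Check the two layout rules on a row-major list of problems."""
    for i in range(len(grid)):
        # Rule 1: the same problem is never directly above/below itself.
        if i + COLS < len(grid) and grid[i] == grid[i + COLS]:
            return False
        # Rule 2: no immediate repeat in reading order, including row wraps.
        if i + 1 < len(grid) and grid[i] == grid[i + 1]:
            return False
    return True


def build_probe(problem_set, seed=None):
    """Lay out each problem 48 / len(problem_set) times (4 for 12 items).

    Each block is a shuffled copy of the set; a block is reshuffled until
    appending it keeps the partial grid valid.
    """
    rng = random.Random(seed)
    grid = []
    for _ in range(ROWS * COLS // len(problem_set)):
        while True:
            block = list(problem_set)
            rng.shuffle(block)
            if is_valid(grid + block):
                grid.extend(block)
                break
    return grid


# Hypothetical 12-problem set labelled P1..P12.
probe = build_probe([f"P{n}" for n in range(1, 13)], seed=1)
for r in range(ROWS):
    print("  ".join(probe[r * COLS:(r + 1) * COLS]))
```

Alternate forms can be produced by changing the seed; the sketch does not attempt the counterbalancing across forms mentioned above.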

Intervention Packet

When completing the intervention, students recorded responses on a corresponding packet of three sheets. The first page was the detect sheet, an intervention probe taken from a pool of six alternate forms constructed from the problems of set A, set B, or set C for the corresponding operation. While the problems were the same for the intervention and assessment probes, all problems on the intervention forms were in different sequences than those used on the assessment probes. This initial intervention probe was used in the detect phase of DPR. The second sheet, used in the practice phase, was a CCC sheet that contained 36 boxes arranged in six rows and six columns. The box in the top left-hand corner labeled the column where the problem and answer were to be written. The five boxes in the first column under the problem and answer label were for the student to write the five problems identified during the detect procedure. To the right of each problem box were five empty spaces to write responses using the CCC procedure. This resulted in a total of 25 empty boxes for students to write their responses when using the CCC procedures. The third sheet, used in the repair phase, was an alternate intervention probe on which students completed as many problems as possible in 1 min (i.e., a math sprint).

Student Folder and Graph

Each intervention packet was housed in a plain folder labeled with the student’s name, with a time series graph stapled on the inside. Students used the graph to record the number of problems completed during the daily 1-min math sprint.

Dependent Measures and Scoring Procedures

The dependent measure for the intervention was DCPM. A digit was scored as correct when the appropriate number was written in the proper column (Deno and Mirkin 1977; Shinn 1989). When a student finished the probe before 1 min had elapsed, the number of correct digits was multiplied by 60 and divided by the number of seconds taken to complete the assessment probe. Scores were rounded to the nearest whole number.
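The scoring rules can be expressed compactly. In this sketch (function names hypothetical), digits are compared column by column from the ones place, so only digits written in the proper place-value column count, and the prorating step follows the formula described above. It scores answer digits only, which suffices for single-step basic facts. Because the source does not say how halves were rounded, we assume round-half-up; Python's built-in round() would instead round halves to the nearest even number.

```python
import math


def digits_correct(answer, response):
    """Count answer digits written in the correct place-value column.

    Strings are compared right to left (ones, tens, ...), so a response of
    "54" to 7 x 8 = 56 earns 1 digit (the tens digit) and "65" earns 0.
    """
    key = str(answer)[::-1]
    written = str(response).strip()[::-1]
    return sum(1 for k, w in zip(key, written) if k == w)


def dcpm(total_digits_correct, seconds=60):
    """Digits correct per minute, prorated when a probe is finished early."""
    rate = total_digits_correct * 60 / seconds
    return math.floor(rate + 0.5)  # assumed: halves round up


# Hypothetical student who finished the whole probe in 48 s with 40 correct digits.
print(dcpm(40, seconds=48))
```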

At the beginning of the day, students were handed their folders which contained a packet of three assessment probes to collect DCPM data on their specified target skill. Students were instructed to take out their assessment packet, place it face down on their desk, and write their name on the back. Students were then read the following directions, “The sheets on your desk are math facts. When I say ‘Begin’ start answering the problems. Begin with the first problem and work across the page, then go to the next row. If you cannot answer a problem, mark an ‘X’ through it and go to the next one. Are there any questions? Turn your packets over. Begin.” The experimenter started a stopwatch and told the students to stop after 1 min had passed. Students were observed during the assessment period to determine whether any of the students finished before the 1 min expired. When this occurred, the experimenter would record the number of seconds it took for the student to complete the probe. At the end of the 1 min timing, packets were collected and scored.

Experimental Design and Analysis

A multiple baseline design was used across three mutually exclusive probe sets to evaluate the effect of the class-wide implementation of DPR on basic fact fluency rates (Poncy et al. 2007). Because the purpose of the study was to investigate the overall impact of DPR on a group of students, the class-wide average DCPM scores were plotted on a time series graph and visual analysis was used to interpret the data (McCallum et al. 2006; Poncy et al. 2012). To supplement the visual analysis of the group data, individual data were also examined. To analyze individual student data, within-phase mean comparisons were used to provide descriptive data about student growth (Parkhurst et al. 2010; Poncy et al. 2010a, b; Poncy and Skinner 2011).
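The within-phase comparison used for individual students reduces to phase means and their difference. The sketch below adds a simple overlap check that mirrors the visual-analysis question of whether any intervention point falls at or below the highest baseline point (assuming, as here, that improvement means higher DCPM). The function name is hypothetical, and the scores in the usage line are made up for illustration; they are not data from this study.

```python
from statistics import mean


def phase_summary(baseline, intervention):
    """Within-phase means, their difference, and a simple overlap check."""
    b_mean = mean(baseline)
    t_mean = mean(intervention)
    return {
        "baseline_mean": b_mean,
        "intervention_mean": t_mean,
        "difference": t_mean - b_mean,
        # True if any intervention point is at or below the best baseline point.
        "overlap": min(intervention) <= max(baseline),
    }


# Hypothetical DCPM scores for one student on one probe set (illustrative only).
summary = phase_summary([17, 19, 18], [28, 31, 33, 30])
print(summary)
```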

DPR Intervention Procedures

The goal of DPR is to focus a student’s instructional time on problems that cannot be completed accurately and/or fluently and promote the efficient use of time spent practicing basic fact problems. DPR consists of three activities: (1) the detect phase, (2) the practice phase using CCC, and (3) the repair phase consisting of a 1-min math sprint (i.e., explicit timing procedure) with self-graphing. The intervention packets were pre-made with each student’s target operation materials placed in their folders. The experimenter began the intervention by passing out the folders, and the students would take out their packets and lay them on their desks face down.

The Detect Phase

During the detect phase, students took out their initial sheet and were instructed to try to write their answer before the click of the metronome. The metronome was set at 30 beats per min, and the tap-a-problem phase took approximately 96–100 s to complete. Students were instructed to move from problem to problem with the click of the metronome until they were instructed to stop. After this phase was completed, students were instructed to circle the first five problems that were left uncompleted. If fewer than five problems were left blank, students were told to select the problems that they felt were most problematic for them. These methods were used to identify and supply the problems for the CCC portion of DPR.
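Two details of the detect phase can be checked directly. At 30 beats per minute the metronome clicks every 2 s, so a sheet of 48 problems (assuming the detect sheet uses the same 48-item layout as the assessment probes) takes 48 × 2 = 96 s, consistent with the reported 96–100 s. The selection step is sketched below. The function names are hypothetical, we assume that repeated copies of the same fact are counted once so that five distinct facts reach the CCC sheet, and the student's own judgment in the fallback case is represented by a hypothetical ranked list.

```python
METRONOME_BPM = 30     # beats per minute, as reported
ITEMS_ON_SHEET = 48    # assumption: same 8 x 6 layout as the assessment probes


def detect_duration_seconds(items=ITEMS_ON_SHEET, bpm=METRONOME_BPM):
    """Length of the paced detect phase at one problem per metronome beat."""
    return items * 60 / bpm


def select_targets(problems, responses, student_picks=(), k=5):
    """Pick up to k distinct problems for CCC practice.

    problems:      facts in the order they appear on the detect sheet
    responses:     the student's answer, or None where the item was left blank
    student_picks: hypothetical ranked list of facts the student finds hardest,
                   used only when fewer than k distinct facts were left blank
    """
    targets = []
    # First pass: the first k distinct facts left blank, in sheet order.
    for problem, response in zip(problems, responses):
        if response is None and problem not in targets:
            targets.append(problem)
            if len(targets) == k:
                return targets
    # Fallback: fill any remaining slots from the student's own choices.
    for problem in student_picks:
        if len(targets) == k:
            break
        if problem not in targets:
            targets.append(problem)
    return targets


sheet = ["6x8", "7x9", "4x7", "6x8", "8x9", "3x7", "9x9"]
answers = [48, None, None, None, None, None, None]
print(detect_duration_seconds())
print(select_targets(sheet, answers))
```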

The Practice Phase: Cover, Copy, Compare

During CCC, the students wrote the identified problems in the “problem and answer” boxes of the CCC sheet (first column). If a student was unsure of an answer, he or she was instructed to raise his or her hand and the experimenter would check the accuracy of the answer. After the students wrote in the problems, the experimenter read a scripted set of directions (see “Appendix”) and the students used CCC procedures to practice the identified problems. Specifically, students read the printed problem and answer, covered the problem and answer, wrote the problem and answer, and then checked the accuracy of the response by comparing it to the model. If they finished before the 5 min had expired, they were instructed to continue to use CCC, but instead of writing the problem and answer, they were to say the problem and answer quietly to themselves (see Belfiore et al. 1995). After 5 min had transpired, the class was told to stop and turn to the third sheet of the intervention packet.

The Repair Phase: Math Sprint with Self-Graphing

Next, students turned to the final sheet and were instructed to complete as many problems as possible in 1 min, referred to as a “math sprint.” Specifically, students were told “You will have 1 min to do as many problems as possible, while it is important to go fast it is more important to write down the correct answer, so work with an emphasis on being accurate. Ready. Go!” When students were finished with the math sprint, they were instructed to count the number of problems they had completed and correctly document that number on the graph stapled to the inside of their folder. After this was done, they shut their folders and handed them to the front for the experimenter to collect.

Procedural Integrity and Interscorer Agreement

A second observer was in the classroom and used the implementation integrity checklist (“Appendix”) to monitor procedural fidelity during 4 of the 15 days (27 %) for the DPR intervention as well as the morning collection of the dependent measure. These data showed that the experimenter correctly implemented 100 % of the steps involved in the interventions and collection of assessment data.

To calculate interscorer agreement (IA), 100 of the 477 administered probes (21 %) were photocopied and independently scored by a trained researcher. For each probe, IA was calculated by dividing the number of agreements on digits correct by the number of agreements plus disagreements and multiplying by 100. The percentage IA values for the 100 probes were then summed and divided by the number of probes scored by the independent scorer. This resulted in an average IA of approximately 98 % with a range of 84–100 %.
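The agreement calculation described above is a mean of per-probe percentages rather than a single pooled percentage. A minimal sketch, with hypothetical function names and made-up counts; each probe is given as a pair of agreement and disagreement counts on digits correct.

```python
from statistics import mean


def probe_agreement(agreements, disagreements):
    """Percentage agreement on digits correct for one double-scored probe."""
    total = agreements + disagreements
    if total == 0:
        raise ValueError("probe has no scored digits")
    return 100 * agreements / total


def mean_agreement(probes):
    """Average of per-probe IA; probes is an iterable of (agree, disagree)."""
    return mean(probe_agreement(a, d) for a, d in probes)


# Hypothetical counts for three double-scored probes.
print(mean_agreement([(50, 0), (49, 1), (42, 8)]))
```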

Results

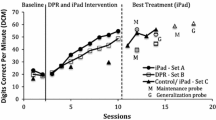

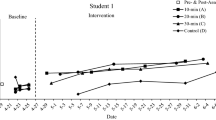

Figure 1 displays the time series of class-wide DCPM data on assessment probes across baseline, intervention, and maintenance phases. Visual analysis of the aggregated treatment group data in Fig. 1 shows that DPR produced an immediate level change in student DCPM across problem sets that was maintained after DPR was removed. Specifically, baseline data were relatively stable for problem sets A and B, while set C showed a slight increase. Immediately after the first DPR intervention was applied, data for the targeted set showed a sharp increase relative to baseline. Across all three sets of problems, we found no overlap between baseline and intervention phase data. Although there was a marked increase when DPR was implemented, trends were inconsistent across the sets: data from set A showed no increasing trend, whereas data from both sets B and C showed increasing trends. When DPR was withdrawn, DCPM data for sets A and B remained stable. Because the school year ended, maintenance data were not collected for set C. Regardless, the immediate and sharp increases as treatment was applied to each set were not accompanied by similar increases in sets still in baseline. This suggests that the intervention, as opposed to some uncontrolled threat to internal validity, caused the increases in DCPM. Additionally, the aggregated maintenance phase data showed that students largely maintained their improved fluency for sets A and B over time.

Visual analysis of graphed data was done only at the group level for two primary reasons. First, the question under investigation was the effect of DPR as a group intervention; second, because phase-change decisions were made on the basis of group data patterns, individually graphed data may not correspond to the phase-change decisions that would have been made had DPR been used with an individual student. An obvious disadvantage of this analysis technique is that meaningful variations in individual data are lost. We supplemented the visual analysis of group data with phase averages for both individuals and skill groups (e.g., multiplication) to provide some insight into individual performance across baseline, intervention, and maintenance conditions (Gast 2010).

The visual analysis of group data was supported by phase average data. The aggregated baseline average across the problem sets was 18.5 DCPM, the intervention phase average was 30.9 DCPM, and the maintenance phase average was 30.2 DCPM. This resulted in an average difference score of 12 DCPM between the baseline and intervention phases and a difference of 11.4 DCPM between the baseline and maintenance phases. In addition to the class-wide phase averages, data were also examined across fact operations (i.e., subtraction, multiplication, and division) and for individual students.

DPR was applied to a group of 11 students, with two students working on subtraction, two working on multiplication, and seven working on division. Phase average data are summarized in Table 2. Baseline phase averages were 9.7, 18.3, and 20.7 DCPM for subtraction, multiplication, and division, respectively, indicating that students working on subtraction had substantially lower fluency scores than those working on multiplication and division. Intervention phase averages were 12.5, 29.3, and 35.6 DCPM, respectively. Differences between baseline and intervention data suggest that DPR was effective for students working on multiplication (11 DCPM difference) and division (14.9 DCPM difference), but was not effective for the students working on subtraction (2.8 DCPM difference).

Individual phase average data are reported in Table 3. Differences between baseline and intervention data showed that DPR resulted in gains of approximately 9–19 DCPM for 9 of the 11 students (82 %). The two students who showed the smallest gains, 2.5 DCPM (student 1) and 3 DCPM (student 2), were working on subtraction; one already had a mathematics goal on his individualized education program (IEP), and the other was receiving remedial support services from the math specialist for approximately 20 min per day, 3 days per week.

Discussion

To meet a variety of different skill levels in the general education setting (i.e., Universal Instruction, or Tier 1), teachers need to differentiate instruction (Poncy et al. 2011). This can be done in many ways, with the primary methods focusing on instruction (i.e., how we teach) and/or curriculum (i.e., what we teach). The goal of differentiation is to increase student learning rates by providing an appropriate skill/instruction match. For example, a teacher may assign a math-related task from a text that is geared to the mean skill level of the class. In this scenario, class-wide instruction may be inefficient as high achieving students practice items that have already been mastered while low-achieving students may become frustrated as they attempt to perform tasks that they cannot do. To enhance learning, classroom teachers need procedures that allow them to provide more complex generalized tasks for high-skill students and additional practice and feedback for low-skill students. The goal of the current study was to demonstrate and evaluate DPR to determine whether this procedure could simultaneously meet the needs of students both across and within basic fact computation skills.

The current study used DPR with a group of 11 students working on one of three computation skills (division, multiplication, or subtraction). All students increased DCPM, although increases varied across skill groups. Students working on division facts had an average increase of 14.9 DCPM, students working on multiplication facts showed an average increase of 11 DCPM, and students working on subtraction facts grew an average of 2.8 DCPM. Because Poncy et al. (2006) found that DPR increased subtraction fact fluency, the differential learning rates between the students receiving instruction in division and multiplication (14.9 and 11 DCPM) and subtraction (2.8 DCPM) were likely due to student characteristics rather than operational differences. Specifically, the lack of response by the two students working on subtraction was likely due to a combination of low entry skills (i.e., prior knowledge) and learning problems; one student was diagnosed with a learning disability (LD), and the other was diagnosed with an LD the following year. Future researchers should investigate whether students with disabilities consistently fail to respond to DPR and, if so, whether the lack of response can be attributed to procedural or curricular issues. For example, from a procedural standpoint, perhaps the pace of the tap-a-problem phase was too fast for these students, and a slower pace may result in more accurate identification of the items that need to be targeted. From a curricular standpoint, the 12-item sets in the current study may have contained a high ratio of difficult to easy problems for the subtraction group, so that consistent practice on specific items never occurred. This hypothesis may be supported by the large jump in the data on the first intervention day for the multiplication and division groups. It could be that those groups were struggling with only a handful of problems. Once these “few” problems were identified and practiced, mastery was achieved.

Another aspect of the data that deserves attention is the large and consistent level change upon introduction of DPR. It is hypothesized that the focus on the specific problems each student needed may explain the large level jumps across the data. For example, although each set contained 12 problems, for many students a small number of those problems may have been responsible for depressing DCPM scores. Hence, students did not need massed practice on all items of an operation, just on a selected few. After these were practiced, students were able to meaningfully increase DCPM scores. Future researchers could investigate this hypothesis by conducting pre-test assessments that measure automatic responding to each item to determine whether DPR is more effective when the pool of targeted items is reduced.

There are various empirically validated interventions that have been shown to increase math fact fluency scores, including CCC (Poncy et al. 2010a; Poncy and Skinner 2011; Skinner et al. 1989), Taped Problems (TP; McCallum et al. 2006; Poncy et al. 2010b), and ET (Van Houten and Thompson 1976). DPR differs from these other approaches because it uses a group-format paced assessment to differentiate instruction by identifying problems for each individual student. After the detect phase, DPR combines CCC to provide immediate feedback, ET for high rates of practice, and self-graphing to provide performance feedback. This combination was designed to maximize instructional efficiency across a classroom and to avoid some of the obstacles to differentiation inherent in traditional classroom instruction, TP, CCC, and/or ET.

Traditional math text-based instruction results in the whole class doing the same assignment and practicing the same problems, usually receiving no feedback until the next school day. A benefit of this widely used approach is that it requires little response effort from teachers, as materials are already available. TP interventions are effective because students are engaged in high rates of responding with immediate feedback; however, all students must work on the same problems when the audio is played for the classroom (McCallum et al. 2006; Poncy et al. 2012). Teachers also need to create, or have access to, worksheets and an audio recording that are paired. CCC sheets unique to each student can be made but require a significant amount of teacher time and effort to achieve differentiation. Explicit timing can be more easily differentiated, as teachers can use prior assessment to identify the skills each student needs (e.g., multiplication, division), and a generic worksheet for each operation can be selected (DPR selects worksheets for the tap-a-problem phase this way). However, instructional efficiency may be compromised because the worksheets would be generic and contain rule-governed problems (e.g., zeros and ones) or other problems that are easy for the student and do not need practice. In addition, time spent on already mastered problems may leave insufficient practice on problems the student finds difficult (Skinner 2008). No one approach is ideal, as each has strengths and weaknesses, and future research is needed to compare learning rates across interventions while taking into account contextual validity (e.g., time, effort, resources) (Skinner et al. 2013).

Limitations and Future Research

Although DPR is unique in having procedures to identify specific facts for individuals in a group setting, these procedures are also associated with some limitations and concerns, specifically regarding implementation integrity, teacher acceptability, and student fidelity. DPR requires teachers to manage a four-part intervention. They run the detect phase and then transition students to the CCC phase, in which students identify the items they did not answer within the time allotted and transfer them to the CCC sheet. After 5 min of practice during the CCC phase, the teacher reads the directions for, and times, the ET phase. Lastly, the teacher assists students with self-graphing. In other words, DPR is labor intensive. To support teachers and increase the probability that DPR is used, consultants would need to provide materials (e.g., worksheets, folders, graphs, implementation checklists), model implementation, reward teacher performance, and provide consistent performance feedback.

All DPR studies have been run by researchers, so no data exist on whether teachers would be willing to run such a complex intervention and, if so, whether they would continue to do so for any significant amount of time. Future researchers should conduct additional applied studies in which trained teachers independently apply DPR while researchers collect data on integrity, acceptability, and the degree to which teachers sustain and/or adapt these procedures (see Mason et al. 2009). Also, after training teachers to use the procedure, researchers could check back later in the school year or the next school year to determine whether teachers choose to sustain the procedure, alter it, or cease using it altogether (see Fudge et al. 2008).

Because students carry out the DPR procedure independently, student fidelity and acceptability are also concerns (Skinner and Smith 1992). For the appropriate items to be selected, students need to keep pace with the metronome and move correctly from one fact item to the next. Students could fall behind or jump ahead, which would prevent accurate identification of the problems that need practice (Parkhurst et al. 2010). Additionally, if students find the DPR procedure unacceptable, they may choose not to perform one or more of the four stages. Consequently, researchers should conduct additional studies designed to measure student acceptability and fidelity.

Researchers may also want to conduct studies designed to evaluate whether DPR procedures can be modified to enhance learning rates. For example, research may find that targeting more or fewer than five problems at a time for CCC practice enhances learning rates. Researchers may also want to manipulate the number of CCC trials completed with each problem; perhaps most of the learning occurs during the first two or three trials.
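The rule DPR uses to move from the tap-a-problem sheet into CCC practice (circle the first five blank problems; if fewer than five are blank, add the problems the student finds most challenging) can be written so that the number of targets is a single parameter a study could vary. The sketch below is a hypothetical illustration only: DPR in this study used paper worksheets, and the function name, data layout, and the use of `None` to mark a blank item are assumptions.

```python
from typing import Optional, Sequence


def select_ccc_targets(
    problems: Sequence[str],
    responses: Sequence[Optional[str]],
    nominated: Sequence[str] = (),
    k: int = 5,
) -> list[str]:
    """Choose up to k facts for cover, copy, and compare (CCC) practice.

    Hypothetical helper: responses[i] is the student's answer to problems[i]
    on the tap-a-problem sheet, or None if the item was left blank because
    the metronome moved on before the student answered.
    """
    if len(problems) != len(responses):
        raise ValueError("problems and responses must be the same length")
    if k < 0:
        raise ValueError("k must be non-negative")

    # Rule 1: take the first k blank items, in the order they appear on the sheet.
    targets = [p for p, r in zip(problems, responses) if r is None][:k]

    # Rule 2: if fewer than k items were blank, fill the remaining slots with
    # problems the student nominates as most challenging, skipping duplicates.
    for p in nominated:
        if len(targets) >= k:
            break
        if p not in targets:
            targets.append(p)
    return targets


if __name__ == "__main__":
    sheet = ["7x8", "6x7", "9x6", "8x4", "3x9", "7x7", "6x8"]
    answers = ["56", None, "54", None, "27", "49", None]
    # Three blanks, so two nominated problems fill the remaining slots.
    print(select_ccc_targets(sheet, answers, nominated=["7x8", "8x4", "9x9"]))
    # ['6x7', '8x4', '6x8', '7x8', '9x9']
```

Because `k` is an argument, a study that manipulates the number of targeted problems would change only that value; the number of CCC trials per problem would remain a separate variable.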

The current study also has external validity limitations. Researchers should apply similar procedures across students, classrooms, and target behaviors (e.g., spelling). Additionally, researchers may find that some steps can be completed in less time using technology. For example, computers could be used during the detect phase to measure the response latency for each problem and either rank order facts from easiest to hardest or list each problem a student was unable to complete within a specified amount of time (see Parkhurst et al. 2010). Such procedures may enhance both fidelity and acceptability. In the current study, DPR was compared only with the passage of time; therefore, significant increases are not surprising. Future researchers should begin to evaluate and compare DPR with other empirically validated treatments (e.g., CCC, TP, direct instruction approaches) using both single-case and randomized group designs to determine which treatments work most efficiently.
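A computer-assisted detect phase of the kind described above could be sketched as follows. This is a minimal illustration under assumptions not taken from the study: the data structure, function names, and the 3-second limit are hypothetical, an incorrect answer is treated the same as no answer, and the code does not reproduce the software used by Parkhurst et al. (2010).

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FactTrial:
    """One fact from a hypothetical computer-administered detect phase."""
    problem: str
    # Seconds from presentation to a correct response; None means the
    # student never answered correctly (error or no response).
    latency_s: Optional[float]


def rank_easiest_to_hardest(trials: List[FactTrial]) -> List[str]:
    """Order facts by latency; facts never answered correctly go last."""
    def key(t: FactTrial) -> float:
        return math.inf if t.latency_s is None else t.latency_s
    # sorted() is stable, so ties keep their original sheet order.
    return [t.problem for t in sorted(trials, key=key)]


def facts_over_limit(trials: List[FactTrial], limit_s: float) -> List[str]:
    """List facts not answered correctly within limit_s seconds, in sheet order."""
    return [
        t.problem
        for t in trials
        if t.latency_s is None or t.latency_s > limit_s
    ]


if __name__ == "__main__":
    detect = [
        FactTrial("6x7", 1.2),
        FactTrial("8x7", None),
        FactTrial("9x6", 4.5),
        FactTrial("3x4", 0.8),
    ]
    print(rank_easiest_to_hardest(detect))  # ['3x4', '6x7', '9x6', '8x7']
    print(facts_over_limit(detect, limit_s=3.0))  # ['8x7', '9x6']
```

Either list could then feed CCC target selection; ranking by latency distinguishes slow-but-correct facts from fluent ones, which a paced paper sheet records only as answered or blank.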

We found that nine students benefited from DPR; however, the two students with perhaps the weakest math skills benefited little. While this lack of response is discouraging, DPR does support the inclusion of struggling students in core instructional activities and provides teachers with a model for differentiating instruction for struggling students. Future researchers should therefore consider conducting aptitude-by-treatment interaction or skill-development-by-treatment interaction studies to determine which students may and may not benefit from these procedures (Rhymer et al. 2002). Such studies may encourage researchers to adapt DPR procedures so that they can be applied successfully with students who fail to benefit.

Conclusion

The current study provides some initial evidence supporting the use of DPR as a Tier 1 procedure for effectively differentiating math fact instruction for a large group of students, both within and across fact skills. As educators look for efficient ways to differentiate instruction in class-wide settings, DPR offers one example of how this can be accomplished. While DPR shows promise for use in classroom settings, several limitations remain. Future research is needed to refine the procedures and increase student learning outcomes across settings (e.g., intact classrooms), populations (e.g., students with disabilities), and skills, thereby strengthening, supporting, and extending the evidence base for DPR.

References

Axtell, P. K., McCallum, R. S., Bell, S. M., & Poncy, B. C. (2009). Developing math automaticity using a class-wide fluency building procedure for middle-school students. Psychology in the Schools, 46, 526–538.

Belfiore, P. J., Skinner, C. H., & Ferkis, M. (1995). Effects of response and trial repetition on sight-word training for students with learning disabilities. Journal of Applied Behavior Analysis, 28, 347–348.

Burns, M. K., Codding, R. S., Boice, C. H., & Lukito, G. (2010). Meta-analysis of acquisition and fluency math interventions with instructional and frustration level skills: Evidence for a skill-by-treatment interaction. School Psychology Review, 39, 69–83.

Codding, R. S., Hilt-Panahon, A., Panahon, C. J., & Benson, J. L. (2009). Addressing mathematics computation problems: A review of simple and moderate intensity interventions. Education and Treatment of Children, 32, 279–312.

Deno, S. L., & Mirkin, P. K. (1977). Data-based program modification: A manual. Reston, VA: Council for Exceptional Children.

Foster, L. N., & Skinner, C. H. (2011). Evidence supporting the internal, external, and contextual validity of a writing program targeting middle school students with disabilities. Evidence-Based Communication Assessment and Intervention, 5, 37–43.

Fudge, D. L., Skinner, C. H., Williams, J. L., Cowden, D., Clark, J., & Bliss, S. L. (2008). The color wheel classroom management system: Increasing on-task behavior in every student in a second-grade classroom. Journal of School Psychology, 46, 575–592.

Gast, D. L. (2010). Single subject research methodology in behavioral sciences. New York: Routledge.

Hawkins, R. O. (2010). Introduction to the special issue: Identifying effective class wide interventions to promote positive outcomes for all students. Psychology in the Schools, 47, 869–870.

Howell, K. W., Fox, S. L., & Morehead, M. K. (1993). Curriculum-based evaluation: Teaching and decision making (2nd ed.). Columbus, OH: Charles E. Merrill.

Mason, L. H., Kubina, R. M., & Taft, R. J. (2009). Developing quick writing skills of middle school students with disabilities. Journal of Special Education, 44(4), 205–220.

McCallum, E., Skinner, C. H., Turner, H., & Saecker, L. (2006). The taped-problems intervention: Increasing multiplication fact fluency using a low-tech, class-wide, time-delay intervention. School Psychology Review, 35, 419–434.

National Center for Education Statistics (2011). The nation’s report card: Mathematics 2011 (NCES 2012-458). National Center for Education Statistics, Institute of Education Sciences, U.S. Department of Education: Washington, DC.

Parkhurst, J., Skinner, C. H., Yaw, J., Poncy, B. C., Adcock, W., & Luna, E. (2010). Efficient class-wide remediation: Using technology to identify idiosyncratic math facts for additional automaticity drills. International Journal of Behavioral Consultation and Therapy, 6, 111–123.

Poncy, B. C., McCallum, E., & Schmit, A. (2010a). Cover, copy, and compare versus facts that last: Evaluating and comparing a behavioral and a constructivist intervention targeting computational fluency. Psychology in the Schools, 47, 917–930.

Poncy, B. C., McCallum, L. E., & Skinner, C. H. (2011). Advocating for effective instruction: School psychologists as an instructional leader. In T. Lionetti, E. Snyder, & R. Christner (Eds.), A practical guide to building professional competencies in school psychology (pp. 155–173). New York: Springer.

Poncy, B. C., & Skinner, C. H. (2011). Combining class-wide cover, copy, and compare (CCC) with an interdependent group contingency to enhance addition-fact fluency in a first-grade classroom. Journal of Applied School Psychology, 27, 1–20.

Poncy, B. C., Skinner, C. H., & Axtell, P. K. (2010b). An investigation of detect, practice, and repair (DPR) to remedy math fact deficits in third-grade students. Psychology in the Schools, 47, 342–353.

Poncy, B. C., Skinner, C. H., & Jaspers, K. E. (2007). Evaluating and comparing interventions designed to enhance math fact accuracy and fluency: Cover, copy, and compare versus taped problems. Journal of Behavioral Education, 16, 27–37.

Poncy, B. C., Skinner, C. H., & McCallum, E. (2012). A comparison of class-wide taped problems and cover, copy, and compare for enhancing mathematic fluency. Psychology in the Schools, 49, 744–755.

Poncy, B. C., Skinner, C. H., & O’Mara, T. (2006). Detect, practice and repair: The effects of a class-wide intervention on elementary students’ math fact fluency. Journal of Evidence Based Practices for Schools, 7, 47–68.

Rhymer, K. N., Skinner, C. H., Jackson, S., McNeill, S., Smith, T., & Jackson, B. (2002). The 1-minute explicit timing intervention: The influence of mathematics problem difficulty. Journal of Instructional Psychology, 29, 305–311.

Shinn, M. R. (Ed.). (1989). Curriculum-based measurement: Assessing special children. New York: Guilford Press.

Skinner, C. H. (2008). Theoretical and applied implications of precisely measuring learning rates. School Psychology Review, 37, 309–315.

Skinner, C. H., McCleary, D. F., Skolits, G. J., Poncy, B. C., & Cates, G. (2013). Enhancing our remediation evidence-based via response-to-intervention: On the verge of a breakthrough? Psychology in the Schools, 50, 272–289.

Skinner, C. H., & Smith, E. S. (1992). Issues surrounding the use of self-managed interventions for increasing academic performance. School Psychology Review, 21, 202–210.

Skinner, C. H., Turco, T. L., Beatty, K. L., & Rasavage, C. (1989). Cover, copy, and compare: An intervention for increasing multiplication performance. School Psychology Review, 18, 212–220.

Van Houten, R., & Thompson, C. (1976). The effects of explicit timing on math performance. Journal of Applied Behavior Analysis, 9, 227–230.


Appendix: Treatment Integrity Checklists

Assessment Procedures

Have a stopwatch. _____

Pass out the student folders containing assessment probes to students. Have the students take out their assessment packets and place them face down on their desks. Make sure the students have a functional writing utensil. _____

Read the following directions verbatim: “The sheets on your desk are math facts. When I say ‘Begin,’ start answering the problems. Begin with the first problem, work across the page, then go to the next row. Do each problem, then go to the next. Turn your packets over. Ready. Begin.” For the last two assessments, prompt students by saying, “Ready. Begin.” _____

Intervention Procedures

Pass out student folders containing the intervention packets. _____

Read the following directions: “Here are your intervention worksheets. We will begin by doing the tap-a-problem. Remember to start on the first click and move to the next problem with each click; remember, it is OK if problems are blank, as these will be used in the next phase of the intervention. Ready (start metronome). Begin.” _____

After the tap-a-problem is finished, read the following directions: “Now you need to circle the first five blank problems on your tap-a-problem sheet. If you do not have five incomplete problems, I want you to select the problems that you feel are most challenging for you. Write these problems in the column of boxes on the cover, copy, and compare sheet. You will have 5 min to do as many problems as you can using the cover, copy, and compare worksheet. If you finish the worksheet before the 5 min are up, you will need to continue using cover, copy, and compare, but instead of writing the answer you will need to say it quietly to yourself. Ready. Begin.” _____

Stop them after 5 min, have them turn to the math sprint worksheet, and read the following directions: “Now you are going to do a 1-minute math sprint. When I say ‘Go!’ I want you to do as many problems as possible in 1 min. Remember, although it is important to go fast, your answers also need to be correct. Ready, Set, Go!” _____

Stop the class after 1 min has expired and read the following directions, “Now it is time to see how many problems you did compared to the last time we did a math sprint. Count up the number of problems you did and chart it on the graph located on the inside of your folder.” _____

Instruct the students to place their packets in their folders and hand in their folders. _____

*Remember to walk around the room constantly to prompt and/or help students who are not working. Look for any mistakes and give students feedback so they can correct any incorrect responses. ______


Cite this article

Poncy, B.C., Fontenelle, S.F. & Skinner, C.H. Using Detect, Practice, and Repair (DPR) to Differentiate and Individualize Math Fact Instruction in a Class-Wide Setting. J Behav Educ 22, 211–228 (2013). https://doi.org/10.1007/s10864-013-9171-7
