Abstract

The need for efficient normal integration methods is driven by several computer vision tasks such as shape-from-shading, photometric stereo, and deflectometry. In the first part of this survey, we select the most important properties that one may expect from a normal integration method, based on a thorough study of two pioneering works by Horn and Brooks (Comput Vis Graph Image Process 33(2): 174–208, 1986) and Frankot and Chellappa (IEEE Trans Pattern Anal Mach Intell 10(4): 439-451, 1988). Apart from accuracy, an integration method should at least be fast and robust to a noisy normal field. In addition, it should be able to handle several types of boundary condition, including the case of a free boundary, and a reconstruction domain of any shape, i.e., one which is not necessarily rectangular. It is also desirable that only a minimal number of parameters, or even none at all, have to be tuned. Finally, it should preserve the depth discontinuities. In the second part of this survey, we review most of the existing methods in view of this analysis and conclude that none of them satisfies all of the required properties. This work is complemented by a companion paper entitled Variational Methods for Normal Integration, in which we focus on the problem of normal integration in the presence of depth discontinuities, a problem which occurs as soon as there are occlusions.

1 Introduction

Computing the 3D-shape of a surface from a set of normals is a classical problem of 3D-reconstruction called normal integration. This problem is well posed, except that a constant of integration has to be fixed, but its resolution is not as straightforward as it could appear, even in the case where the normal is known at every pixel of an image. One may well be surprised that such a simple problem has given rise to such a large number of papers. This is probably due to the fact that, like many computer vision problems, it must simultaneously meet several requirements. Of course, a method of integration is expected to be accurate, fast, and robust to noisy data or outliers, but we will see that several other criteria are important as well.

In this paper, we thoroughly study two pioneering works: a paper by Horn and Brooks based on variational calculus [29], and another one by Frankot and Chellappa resorting to Fourier analysis [19]. This preliminary study allows us to select six criteria apart from accuracy, through which we intend to qualitatively evaluate the main normal integration methods. Our survey is summarized in Table 1. Knowing that no existing method is completely satisfactory, this preliminary study impels us to suggest several new methods of integration which will be found in a companion paper [48].

The present paper is organized as follows. We derive the basic equations of normal integration in Sect. 2. Horn and Brooks’ and Frankot and Chellappa’s methods are reviewed in Sect. 3. This allows us, in Sect. 4, to select several properties that are required by any normal integration method and to comment on the most relevant related works. In Sect. 5, we conclude that a completely satisfactory method of integration is still lacking.

2 Basic Equations of Normal Integration

Suppose that, in each point \(\overline{\mathbf {x}} = [u,v]^\top \) of the image of a surface, the outer unit-length normal \(\mathbf {n}(u,v) = [n_1(u,v), n_2(u,v), n_3(u,v)]^\top \) is known. Integrating the normal field \(\mathbf {n}\) amounts to searching for three functions x, y and z such that the normal to the surface at the surface point \(\mathbf {x}(u,v) = [x(u,v), y(u,v), z(u,v)]^\top \), which is conjugate to \(\overline{\mathbf {x}}\), is equal to \(\mathbf {n}(u,v)\). Following [28], let us rigorously formulate this problem when the projection model is either orthographic, weak-perspective or perspective.

2.1 Orthographic Projection

We attach to the camera a 3D-frame \(\mathbf {c}xyz\) whose origin \(\mathbf {c}\) is located at the optical center, and such that \(\mathbf {c}z\) coincides with the optical axis (see Fig. 1).

The origin of pixel coordinates is taken as the intersection \(\mathbf {o}\) of the optical axis \(\mathbf {c}z\) and the image plane \(\overline{\pi }\). In practice, \(\overline{\pi }\) coincides with the focal plane \(z = f\), where f denotes the focal length.

Assuming orthographic projection, a 3D-point \(\mathbf {x}\) projects orthogonally onto the image plane, i.e.,

\[\mathbf {x}(u,v) = [u, v, z(u,v)]^\top . \tag{1}\]

By normalizing the cross product of both partial derivatives \(\partial _u \mathbf {x}\) and \(\partial _v \mathbf {x}\), and choosing the sign so that \(\mathbf {n}\) points toward the camera, we obtain (the dependencies in u and v are omitted, for the sake of simplicity):

\[\mathbf {n} = \frac{1}{\sqrt{\Vert \nabla z\Vert ^2+1}}\,[\partial _u z, \partial _v z, -1]^\top , \tag{2}\]

where \(\nabla z = [\partial _u z,\partial _v z]^\top \) denotes the gradient of the depth map z. From (2), we conclude that \(n_3 < 0\).

Of course, 3D-points such that \(n_3 \ge 0\) also exist. Such points are non-visible if \(n_3 > 0\). If \(n_3 = 0\), they are visible and project onto the occluding contour (see point \(\overline{\mathbf {x}}^o_2\) in Fig. 1). Thus, even if the normal \(\mathbf {n}\) is easily determined on the occluding contour, since \(\mathbf {n}\) is both parallel to \(\overline{\pi }\), and orthogonal to the contour, computing the depth z by integration in such points is impossible.

Now, let us consider the image points which do not lie on the occluding contour. Equation (2) immediately gives the following pair of linear PDEs in z:

\[\nabla z = [p,q]^\top , \tag{3}\]

where

\[p = -\frac{n_1}{n_3}, \qquad q = -\frac{n_2}{n_3}. \tag{4}\]

Equation (3) shows that integrating a normal field, i.e., computing z from \(\mathbf {n}\), amounts to integrating the vector field \([p,q]^\top \). The solution of (3) is straightforward:

\[z(u,v) = z(u_0,v_0) + \int _{(u_0,v_0)}^{(u,v)} \left[ p(r,s)\,\mathrm {d}r + q(r,s)\,\mathrm {d}s \right] , \tag{5}\]

regardless of the integration path between some point \((u_0,v_0)\) and (u, v), as soon as p and q satisfy the constraint of integrability \(\partial _v p = \partial _u q\) (Schwarz's theorem). If they do not, the integral in Eq. (5) depends on the integration path.

If there is no point \((u_0,v_0)\) where z is known, it follows from (5) that z(u, v) is computable up to an additive constant. This constant can be chosen so as to minimize the root-mean-square error (RMSE) in z, provided that the ground-truth is available.
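The path integral and the choice of the additive constant can be illustrated with a minimal sketch. It assumes that `p[u][v]` and `q[u][v]` sample the gradient on a unit grid, uses one particular path (along the u axis, then along the v axis) with the trapezoidal rule, and picks the constant as the mean residual, which is the value minimizing the RMSE. The function names are hypothetical.

```python
def integrate_along_paths(p, q, z0=0.0):
    """Integrate (p, q) from pixel (0, 0): first along v = 0, then along v for each u.

    p[u][v] and q[u][v] are assumed to sample dz/du and dz/dv on a unit grid.
    The trapezoidal rule is used between neighbouring pixels.
    """
    m, n = len(p), len(p[0])
    z = [[0.0] * n for _ in range(m)]
    z[0][0] = z0
    for u in range(1, m):  # move along the u axis at v = 0
        z[u][0] = z[u - 1][0] + 0.5 * (p[u - 1][0] + p[u][0])
    for u in range(m):  # then move along the v axis for every u
        for v in range(1, n):
            z[u][v] = z[u][v - 1] + 0.5 * (q[u][v - 1] + q[u][v])
    return z


def best_constant(z, z_true):
    """Additive constant c minimizing the RMSE between z + c and z_true (mean residual)."""
    diffs = [zt - zr for row, row_t in zip(z, z_true) for zr, zt in zip(row, row_t)]
    return sum(diffs) / len(diffs)


# Usage on the integrable gradient field of z(u, v) = u**2 + 3*v + 5
m, n = 5, 4
z_true = [[u**2 + 3 * v + 5 for v in range(n)] for u in range(m)]
p = [[2.0 * u for v in range(n)] for u in range(m)]
q = [[3.0 for v in range(n)] for u in range(m)]
z = integrate_along_paths(p, q)
c = best_constant(z, z_true)
```

With a non-integrable field, a different path would generally give a different depth map, which is why a single path is rarely used in practice.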

2.2 Weak-Perspective Projection

Weak-perspective projection assumes that the camera is focused on a plane \(\pi \) of equation \(z = \mathsf {d}\), supposed to match the mean location of the surface. Any visible point \(\mathbf {x}\) projects first orthogonally onto \(\pi \), then perspectively onto \(\overline{\pi }\), with \(\mathbf {c}\) as projection center (see Fig. 2).

Assuming weak-perspective projection, we have:

Fig. 2 Weak-perspective projection: \(\mathbf {x}_1\) and \(\mathbf {x}_2\) are conjugate to \(\overline{\mathbf {x}}_1^w\) and \(\overline{\mathbf {x}}_2^w\), respectively. Point \(\overline{\mathbf {x}}_2^w\) lies on the occluding contour. The plane \(\pi \), which is conjugate to \(\overline{\pi }\), is supposed to match the mean location of the surface

Denoting by \(\mathsf {m}\) the image magnification \(\mathsf {f}/\mathsf {d}\), the outer unit-length normal \(\mathbf {n}\) now reads:

For the image points which do not lie on the occluding contour, the pair of PDEs (3) becomes:

\[\nabla z = \frac{1}{\mathsf {m}}\,[p,q]^\top , \tag{8}\]

which explains why “weak-perspective projection” is also called “scaled orthographic projection.” From (8), analogously to (5):

2.3 Perspective Projection

We now consider perspective projection (see Fig. 3).

The major difference from weak-perspective projection is that \(\mathsf {d}\) must be replaced with z(u, v) in Eq. (6):

The cross product of \(\partial _u{\mathbf {x}}\) and \(\partial _v{\mathbf {x}}\) is a little more complicated than in the previous case:

Writing that this vector is parallel to \(\mathbf {n}(u,v)\), this provides us with the three following equations:

Since this system is homogeneous in z, and knowing that \(z>0\), we introduce the change of variable:

which makes (12) linear with respect to \(\partial _u \tilde{z}\) and \(\partial _v \tilde{z}\):

System (14) is non-invertible if it has rank less than 2, i.e., if its three determinants are zero:

As \(n_1\), \(n_2\) and \(n_3\) cannot simultaneously vanish, because vector \(\mathbf {n}\) is unit-length, the equality (15) holds true if and only if \(u\,n_1+v\,n_2+\mathsf {f}\,n_3 = 0\). Knowing that \([u,v,\mathsf {f}]^\top \) are the coordinates of the image point \(\overline{\mathbf {x}}^p\) in the 3D-frame \(\mathbf {c}xyz\), this happens if and only if \(\overline{\mathbf {x}}^p\) lies on the occluding contour (see point \(\overline{\mathbf {x}}_3^p\) in Fig. 3). It is noticeable that for a given object, the occluding contour depends on which projection model is assumed.

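For the image points which do not lie on the occluding contour, System (14) is easily inverted, which gives us the following pair of linear PDEs in \(\tilde{z}\):

\[\nabla \tilde{z} = [\tilde{p},\tilde{q}]^\top , \tag{16}\]

where

\[\tilde{p} = -\frac{n_1}{u\,n_1+v\,n_2+\mathsf {f}\,n_3}, \qquad \tilde{q} = -\frac{n_2}{u\,n_1+v\,n_2+\mathsf {f}\,n_3}. \tag{17}\]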

Hence, under perspective projection, integrating a normal field \(\mathbf {n}\) amounts to integrating the vector field \([\tilde{p}, \tilde{q}]^\top \). The solution of Eq. (16) is straightforward:

from which we deduce, using (13):

It follows from (19) that z is computable up to a multiplicative constant. Notice also that (17), and therefore (19), require that the focal length \(\mathsf {f}\) is known, as well as the location of the principal point \(\mathbf {o}\), since the coordinates u and v depend on it (see Fig. 3).

The similarity between (3), (8) and (16) shows that any normal integration method can be extended to weak-perspective or perspective, provided that the intrinsic parameters of the camera are known. Let us emphasize that such extensions are generic, i.e., not restricted to a given method of integration. We can thus limit ourselves to solving the following pair of linear PDEs, which we consider as the model problem:

\[\nabla z = \mathbf {g}, \tag{20}\]

where \((z,\mathbf {g})\) means \((z,[p,q]^\top )\), \((z,\frac{1}{\mathsf {m}}\,[p,q]^\top )\), or \((\tilde{z},[\tilde{p},\tilde{q}]^\top )\), depending on whether the projection model is orthographic, weak-perspective, or perspective, respectively.
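As an illustration, the datum \(\mathbf {g}\) of the model problem can be computed from a normal for each projection model. The sketch below is hedged: it assumes \(p = -n_1/n_3\), \(q = -n_2/n_3\) in the orthographic case, the same divided by \(\mathsf {m} = \mathsf {f}/\mathsf {d}\) in the weak-perspective case, and \(\tilde{p} = -n_1/(u\,n_1+v\,n_2+\mathsf {f}\,n_3)\), \(\tilde{q} = -n_2/(u\,n_1+v\,n_2+\mathsf {f}\,n_3)\) in the perspective case, which is what the derivations of this section lead to. The function name and argument layout are hypothetical.

```python
import math


def gradient_from_normal(n, u=0.0, v=0.0, model="orthographic", f=1.0, d=1.0):
    """Return the datum g of the model problem for one pixel (assumed formulas, see text).

    n = (n1, n2, n3) is the unit normal; (u, v) are pixel coordinates relative to
    the principal point; f is the focal length and d the depth of the mean plane.
    In the perspective case, the returned field is the gradient of log(z).
    """
    n1, n2, n3 = n
    if model == "orthographic":
        denom = n3
    elif model == "weak-perspective":
        denom = (f / d) * n3  # magnification m = f / d
    elif model == "perspective":
        denom = u * n1 + v * n2 + f * n3
    else:
        raise ValueError("unknown projection model: " + model)
    if denom == 0.0:
        raise ValueError("pixel lies on the occluding contour")
    return (-n1 / denom, -n2 / denom)


# Usage: the surface z = exp(0.1 * u) seen under perspective projection (f = 50)
# has a normal proportional to [f * 0.1, 0, -(1 + 0.1 * u)] at pixel (u, v).
u0, v0, f0 = 2.0, -1.0, 50.0
raw = (f0 * 0.1, 0.0, -(1.0 + 0.1 * u0))
norm = math.sqrt(sum(c * c for c in raw))
n_unit = tuple(c / norm for c in raw)
p_tilde, q_tilde = gradient_from_normal(n_unit, u0, v0, model="perspective", f=f0)
```

In this usage example, `p_tilde` should match the derivative of \(\log z\) with respect to u, i.e., 0.1, and `q_tilde` should vanish.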

2.4 Integration Using Quadratic Regularization

From now on, we no longer need to consider the projection model. We just have to solve the generic Eq. (20).

As already noticed, the respective solutions (5), (9) and (18) of Eqs. (3), (8) and (16), are independent of the integration path if and only if the constraint of integrability \(\partial _v p = \partial _u q\), or \(\partial _v \tilde{p} = \partial _u \tilde{q}\), is satisfied. In practice, a normal field is never rigorously integrable (or curl-free). Apart from using several integration paths and averaging the integrals [12, 26, 60], a natural way to deal with the lack of integrability is to turn (20) into an optimization problem [29]. Using quadratic regularization, this amounts to minimizing the functional:

\[\mathcal {F}_{L_2}(z) = \iint _{(u,v)\in {\varOmega }} \Vert \nabla z(u,v) - \mathbf {g}(u,v)\Vert ^2 \,\mathrm {d}u\,\mathrm {d}v, \tag{21}\]

where \({\varOmega }\subset \mathbb {R}^2\) is the reconstruction domain, and the gradient field \(\mathbf {g} = [p, q]^\top \) is the datum of the problem.

The functional \(\mathcal {F}_{L_2}\) is strictly convex in \(\nabla z\), but does not admit a unique minimizer \(z^*\) since, for any \(\kappa \in \mathbb {R}\), \(\mathcal {F}_{L_2}(z^*+\kappa ) = \mathcal {F}_{L_2}(z^*)\). Its minimization requires that the associated Euler–Lagrange equation is satisfied. The calculus of variations provides us with the following:

\[\nabla \cdot (\nabla z - \mathbf {g}) = 0. \tag{22}\]

This necessary condition is rewritten as the following Poisson equation:

\[\Delta z = \nabla \cdot \mathbf {g}. \tag{23}\]

Solving Eq. (23) is not a sufficient condition for minimizing \(\mathcal {F}_{L_2}(z)\), except if z is known on the boundary \(\partial {\varOmega }\) (Dirichlet boundary condition), see [40] and the references therein. Otherwise, the so-called natural boundary condition, which is of the Neumann type, must be considered. In the case of \(\mathcal {F}_{L_2}(z)\), this condition is written [29]:

\[(\nabla z - \mathbf {g}) \cdot \varvec{\eta } = 0, \tag{24}\]

where vector \(\varvec{\eta }\) is normal to \(\partial {\varOmega }\) in the image plane.

Using different boundary conditions, one could expect that the different solutions of (23) would coincide on most of \({\varOmega }\), but this is not true. For a given gradient field \(\mathbf {g}\), the choice of a boundary condition has a great influence on the recovered surface. This is noted in [29]: “Eq. (23) does not uniquely specify a solution without further constraint. In fact, we can add any harmonic function to a solution to obtain a different solution also satisfying (23).” A harmonic function is a solution of the Laplace equation:

\[\Delta z = 0. \tag{25}\]

As an example, let us search for the harmonic functions taking the form \(z(u,v) = z_1(u)\,z_2(v)\). Knowing that \(z_1\ne 0\) and \(z_2\ne 0\), since \(z > 0\), Eq. (25) gives:

Both sides of Eq. (26) are thus equal to the same constant \(K\in \mathbb {R}\). Two cases may occur, according to the sign of K. If \(K<0\), we set \(K = -\,\omega ^2\), \(\omega \in \mathbb {R}\):

where \(\mathsf {j}\) is such that \(\mathsf {j}^2 = -1\). Finally, we obtain:

Note that a real harmonic function defined on \(\mathbb {R}^2\) can be considered as the real part or as the imaginary part of a holomorphic function. All the functions of the form (28) are indeed holomorphic. Their real and imaginary parts thus provide us with the following two families of harmonic functions:

Adding to a given solution of Eq. (23) any linear combination of these harmonic functions (many other such functions exist), we obtain other solutions. The way to select the right solution is to carefully manage the boundary.
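As a quick sanity check, the harmonicity of one separable function of this kind can be verified directly. The function chosen here is only an example of a real-valued separable harmonic function, with \(\omega \in \mathbb {R}\) as above:

\[z(u,v) = e^{\omega u}\cos (\omega v) \;\Rightarrow \; \partial ^2_{uu} z = \omega ^2\,z, \quad \partial ^2_{vv} z = -\,\omega ^2\,z, \quad \text {hence} \quad \Delta z = \omega ^2 z - \omega ^2 z = 0.\]

Adding any multiple of this function to a solution of the Poisson equation therefore leaves the Laplacian unchanged, although the surface itself changes everywhere in \({\varOmega }\), not only near the boundary.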

In the case of a free boundary, the variational calculus tells us that minimizing \(\mathcal {F}_{L_2}(z)\) requires that (24) is imposed on the boundary, but the solution is still non-unique, since it is known up to an additive constant. The same conclusion holds true for any Neumann boundary condition. On the other hand, as soon as \({\varOmega }\) is bounded, a Dirichlet boundary condition ensures existence and uniqueness of the solution.

3 Two Pioneering Normal Integration Methods

Before a more exhaustive review, we first make a thorough study of two pioneering normal integration methods which have very different peculiarities. This will allow us to detect the most important properties that one may expect from any method of integration.

3.1 Horn and Brooks’ Method

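A well-known method due to Horn and Brooks [29], which we denote by \(\mathcal {M}_{\text {HB}}\), attempts to solve the following discrete analogue of the Poisson Eq. (23), where u and v denote the pixels of a square 2D-grid:

\[z_{u+1,v}+z_{u-1,v}+z_{u,v+1}+z_{u,v-1}-4\,z_{u,v} = \frac{p_{u+1,v}-p_{u-1,v}}{2} + \frac{q_{u,v+1}-q_{u,v-1}}{2}. \tag{30}\]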

The left-hand and right-hand sides of (30) are second-order finite differences approximations of the Laplacian and of the divergence, respectively. As stated in [25], other approximations can be considered, as long as the orders of the finite differences are consistent.

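In [29], Eq. (30) is solved using a Jacobi iteration, for \((u,v) \in \mathring{{\varOmega }}\), i.e., for the pixels \((u,v) \in {\varOmega }\) whose four nearest neighbors are inside \({\varOmega }\):

\[z^{k+1}_{u,v} = \frac{z^{k}_{u+1,v}+z^{k}_{u-1,v}+z^{k}_{u,v+1}+z^{k}_{u,v-1}}{4} - \frac{p_{u+1,v}-p_{u-1,v}}{8} - \frac{q_{u,v+1}-q_{u,v-1}}{8}. \tag{31}\]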

The values of z for the pixels \((u,v) \in \partial {\varOmega }\) can be deduced from a discrete analogue of the natural boundary condition (24), “provided that the boundary curve is polygonal, with horizontal and vertical segments only.”

It is standard to show the convergence of this scheme [53], whatever the initialization, but it converges very slowly if the initialization is far from the solution [16].

However, it is not so easy to discretize the natural boundary condition properly, because many cases have to be considered (see, for instance, [7]).
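The Jacobi scheme for the interior pixels can be sketched as follows. This is a simplified illustration, not Horn and Brooks' complete method: it assumes a rectangular grid, central differences for the divergence, and it keeps the boundary pixels fixed to their initial values (a Dirichlet simplification) instead of discretizing the natural boundary condition. The function name is hypothetical.

```python
def jacobi_poisson(p, q, z_init, n_iter=500):
    """Jacobi sweeps for the 5-point discrete Poisson equation on a rectangular grid.

    Only interior pixels are updated; boundary pixels keep the values of z_init
    (a Dirichlet simplification assumed here, not the natural boundary condition).
    """
    m, n = len(z_init), len(z_init[0])
    z = [row[:] for row in z_init]
    for _ in range(n_iter):
        z_new = [row[:] for row in z]
        for u in range(1, m - 1):
            for v in range(1, n - 1):
                # central-difference approximation of the divergence of (p, q)
                div = 0.5 * (p[u + 1][v] - p[u - 1][v]) + 0.5 * (q[u][v + 1] - q[u][v - 1])
                z_new[u][v] = 0.25 * (z[u + 1][v] + z[u - 1][v]
                                      + z[u][v + 1] + z[u][v - 1] - div)
        z = z_new
    return z


# Usage on z(u, v) = u**2 + 2*v**2 + 3*u, for which the discretization is exact
m, n = 7, 7
z_true = [[u * u + 2.0 * v * v + 3.0 * u for v in range(n)] for u in range(m)]
p = [[2.0 * u + 3.0 for v in range(n)] for u in range(m)]
q = [[4.0 * v for v in range(n)] for u in range(m)]
z_init = [[z_true[u][v] if u in (0, m - 1) or v in (0, n - 1) else 0.0
           for v in range(n)] for u in range(m)]
z = jacobi_poisson(p, q, z_init)
max_err = max(abs(z[u][v] - z_true[u][v]) for u in range(m) for v in range(n))
```

On this tiny grid a few hundred sweeps suffice. The number of sweeps needed grows quickly with the grid size, which is consistent with the slow convergence reported above.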

3.2 Improvement in Horn and Brooks’ Method

Horn and Brooks’ method can be extended in order to more properly manage the natural boundary condition, by discretizing the functional \(\mathcal {F}_{L_2}(z)\) defined in (21), and then solving the optimality condition, rather than discretizing the continuous optimality condition (23).

This simple idea allowed Durou and Courteille to design in [16] an improved version of \(\mathcal {M}_{\text {HB}}\), denoted by \(\mathcal {M}_{\text {DC}}\), which attempts to minimize the following discrete approximation of \(F_{L_2}(z)\):

where \(\delta \) is the distance between neighboring pixels (from now on, the scale is chosen so that \(\delta = 1\)), \({\varOmega }_1\) and \({\varOmega }_2\) contain the pixels \((u,v) \in {\varOmega }\) such that \((u+1,v)\in {\varOmega }\) or \((u,v+1)\in {\varOmega }\), respectively, and \(\mathbf {z} = [z_{u,v}]_{(u,v) \in {\varOmega }}\).

In (32), the values of p and q are averaged using forward finite differences, in order to ensure the equivalence with \(\mathcal {M}_{\text {HB}}\). Indeed, one gets from (32) and from the characterization \(\nabla F_{L_2} = 0\) of an extremum, the same optimality condition (30) as discretized by Horn and Brooks, for the pixels \((u,v) \in \mathring{{\varOmega }}\).

However, handling the boundary is much simpler than with \(\mathcal {M}_{\text {HB}}\), because the appropriate discretization along the boundary naturally arises from the optimality condition \(\nabla F_{L_2} = 0\). Indeed, for a pixel \((u,v) \in \partial {\varOmega }\), the equation \(\partial F_{L_2}/\partial z_{u,v} = 0\) does not take the form (30) any more. Figure 4 shows the example of a pixel \((u,v) \in \partial {\varOmega }\) such that \((u+1,v)\) and \((u,v+1)\) are inside \({\varOmega }\), while \((u-1,v)\) and \((u,v-1)\) are outside \({\varOmega }\).

Fig. 5 a Test surface \(\mathcal {S}_{\text {vase}}\). b Gradient field \(\mathbf {g}_{\text {vase}}\), obtained by sampling of the analytically computed gradient of \(\mathcal {S}_{\text {vase}}\). c Reconstructed surface obtained by integration of \(\mathbf {g}_{\text {vase}}\), using as reconstruction domain the image of the vase, at convergence of \(\mathcal {M}_{\text {DC}}\) (\(\hbox {RMSE}=0.93\)). d Same test, but on the whole grid (RMSE = 4.51). In both tests, the constant of integration is chosen so as to minimize the RMSE

In this case, Eq. (30) must be replaced with:

Since \(\varvec{\eta } = -\sqrt{2}/2\,[1,1]^\top \) is a plausible unit-length normal to the boundary \(\partial {\varOmega }\) in this case, the natural boundary condition (24) reads:

It is obvious that (33) is a discrete approximation of (34). More generally, it is easily shown that the equation \(\partial F_{L_2}/\partial z_{u,v} = 0\), for any \((u,v) \in \partial {\varOmega }\), is a discrete approximation of the natural boundary condition (24).
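The idea of discretizing the functional rather than the optimality condition can be sketched on an arbitrary pixel set. The sketch assumes that the discrete functional sums, over every pair of 4-neighbors inside \({\varOmega }\), the squared difference between the forward difference of z and the average of the gradient at both pixels (with \(\delta = 1\)). It minimizes this functional by Gauss–Seidel sweeps, which is one possible solver chosen here for brevity and not necessarily the one used in [16]. All names are hypothetical.

```python
def integrate_on_domain(p, q, domain, n_sweeps=2000):
    """Gauss-Seidel minimization of a discrete quadratic functional on a pixel set.

    p, q: dicts mapping (u, v) to gradient samples; domain: set of (u, v) pixels.
    Each pair of 4-neighbours inside the domain contributes one squared residual,
    so boundary pixels need no special rule: they simply have fewer neighbours.
    The result is defined up to an additive constant.
    """
    z = {px: 0.0 for px in domain}
    order = sorted(domain)
    for _ in range(n_sweeps):
        for (u, v) in order:
            estimates = []  # values of z[(u, v)] predicted by each available neighbour
            if (u + 1, v) in domain:
                estimates.append(z[(u + 1, v)] - 0.5 * (p[(u + 1, v)] + p[(u, v)]))
            if (u - 1, v) in domain:
                estimates.append(z[(u - 1, v)] + 0.5 * (p[(u - 1, v)] + p[(u, v)]))
            if (u, v + 1) in domain:
                estimates.append(z[(u, v + 1)] - 0.5 * (q[(u, v + 1)] + q[(u, v)]))
            if (u, v - 1) in domain:
                estimates.append(z[(u, v - 1)] + 0.5 * (q[(u, v - 1)] + q[(u, v)]))
            if estimates:
                # the minimizer with respect to this pixel is the mean of the estimates
                z[(u, v)] = sum(estimates) / len(estimates)
    return z


# Usage on an L-shaped (non-rectangular) domain, with z(u, v) = u**2 + 3*v
domain = {(u, v) for u in range(6) for v in range(6) if not (u >= 3 and v >= 3)}
z_true = {(u, v): float(u * u + 3 * v) for (u, v) in domain}
p = {(u, v): 2.0 * u for (u, v) in domain}
q = {(u, v): 3.0 for (u, v) in domain}
z = integrate_on_domain(p, q, domain)
offsets = [z_true[px] - z[px] for px in domain]
```

In the usage example, `offsets` should be nearly constant over the domain, which reflects the fact that the free-boundary problem is solved up to an additive constant.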

Let us test \(\mathcal {M}_{\text {DC}}\) on the surface \(\mathcal {S}_{\text {vase}}\) shown in Fig. 5a, which models a half-vase lying on a flat ground. To this end, we sample the analytically computed gradient of \(\mathcal {S}_{\text {vase}}\) on a regular grid of size \(312\times 312\), which provides the gradient field \(\mathbf {g}_{\text {vase}}\) (see Fig. 5b). We suppose that a preliminary segmentation allows us to use as reconstruction domain the image of the vase, which constitutes an additional datum. The reconstructed surface obtained at convergence of \(\mathcal {M}_{\text {DC}}\) is shown in Fig. 5c.

On the other hand, it is well known that quadratic regularization is not well adapted to discontinuities. Let us now test \(\mathcal {M}_{\text {DC}}\) using as reconstruction domain the whole grid of size \(312\times 312\), which contains discontinuities at the top and at the bottom of the vase. The reconstructed surface at convergence is shown in Fig. 5d. It is not satisfactory, since the discontinuities are not preserved. This is numerically confirmed by the RMSE, which is much higher than that of Fig. 5c.

Fig. 6 a Gradient field \(\mathbf {g}_{\text {face}}\) of a face estimated via photometric stereo. b Reconstructed surface obtained by integration of \(\mathbf {g}_{\text {face}}\), using Frankot and Chellappa’s method [19]. Since a periodic boundary condition is assumed, the depth is forced to be the same on the cheek and on the nose. As a consequence, the result is strongly distorted

We know that removing the flat ground from the reconstruction domain suffices to reach a better result (see Fig. 5c), since this eliminates any depth discontinuity. However, this requires a preliminary segmentation, which is known to be a hard task, and also requires that the integration can be carried out on a non-rectangular reconstruction domain.

Otherwise, it is necessary to appropriately handle the depth discontinuities, in order to limit the bias. The lack of integrability of the vector field \([p,q]^\top \) is a basic idea to detect the discontinuities, as shown in our companion paper [48]. Unfortunately, this characterization of the discontinuities is neither necessary nor sufficient. On the one hand, shadows can induce outliers if \([p,q]^\top \) is estimated via photometric stereo [49], which can therefore be non-integrable along shadow limits, even in the absence of depth discontinuities. On the other hand, the vector field \([p,q]^\top \) of a scale seen from above is uniform, i.e., perfectly integrable.

3.3 Frankot and Chellappa’s Method

A more general approach to overcome the possible non-integrability of the gradient field \(\mathbf {g} = [p,q]^\top \) is to first define a set \(\mathcal {I}\) of integrable vector fields, i.e., of vector fields of the form \(\nabla z\), and then compute the projection \(\nabla \bar{z}\) of \(\mathbf {g}\) on \(\mathcal {I}\), i.e., the vector field \(\nabla \bar{z}\) of \(\mathcal {I}\) the closest to \(\mathbf {g}\), according to some norm. Afterward, the (approximate) solution of Eq. (20) is easily obtained using (5), (9) or (18), since \(\nabla \bar{z}\) is integrable.

Nevertheless, the boundary conditions can be complicated to manage, because \(\mathcal {I}\) depends on which boundary condition is imposed (including the case of the natural boundary condition). It is noticed in [2] that minimizing the functional \(\mathcal {F}_{L_2}(z)\) amounts to following this general approach, in the case where \(\mathcal {I}\) contains all integrable vector fields and the Euclidean norm is used.

The most cited normal integration method, due to Frankot and Chellappa [19], follows this approach in the case where the Fourier basis is considered. Let us use the standard definition of the Fourier transform:

where \((\omega _u,\omega _v) \in \mathbb {R}^2\). Computing the Fourier transforms of both sides of (23), we obtain:

In Eq. (36), the data \(\hat{p}\) and \(\hat{q}\), as well as the unknown \(\hat{z}\), depend on the variables \(\omega _u\) and \(\omega _v\). For any \((\omega _u,\omega _v)\) such that \(\omega _u^2+\omega _v^2\ne 0\), this equation gives us the following expression of \(\hat{z}(\omega _u,\omega _v)\):

Indeed, computing the inverse Fourier transform of (37) will provide us with a solution of (23). This method of integration [19], which we denote by \(\mathcal {M}_{\text {FC}}\), is very fast thanks to the FFT algorithm.
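A minimal NumPy sketch of this Fourier-domain integration is given below. It assumes the Fourier convention in which differentiation with respect to u corresponds to multiplication by \(\mathsf {j}\,\omega _u\) (the convention of `numpy.fft`), unit pixel spacing, and an array layout where axis 0 is u and axis 1 is v. The zero-frequency coefficient is arbitrarily set to zero, so the recovered depth has zero mean. The function name is hypothetical.

```python
import numpy as np


def frankot_chellappa(p, q):
    """Integrate the gradient field (p, q) in the Fourier domain.

    Assumes a rectangular grid, periodicity, unit pixel spacing, axis 0 = u and
    axis 1 = v. The zero-frequency term is set to 0 (zero-mean depth).
    """
    m, n = p.shape
    wu = 2.0 * np.pi * np.fft.fftfreq(m)[:, None]  # angular frequencies along u
    wv = 2.0 * np.pi * np.fft.fftfreq(n)[None, :]  # angular frequencies along v
    denom = wu**2 + wv**2
    denom[0, 0] = 1.0  # avoid 0/0; this coefficient is overwritten below
    z_hat = (wu * np.fft.fft2(p) + wv * np.fft.fft2(q)) / (1j * denom)
    z_hat[0, 0] = 0.0
    return np.real(np.fft.ifft2(z_hat))


# Usage on a periodic, band-limited surface with zero mean
m, n = 16, 12
u = np.arange(m)[:, None]
v = np.arange(n)[None, :]
z_true = np.sin(2.0 * np.pi * u / m) + np.cos(4.0 * np.pi * v / n)
p = (2.0 * np.pi / m) * np.cos(2.0 * np.pi * u / m) + 0.0 * v
q = -(4.0 * np.pi / n) * np.sin(4.0 * np.pi * v / n) + 0.0 * u
z = frankot_chellappa(p, q)
```

The factor \(2\pi \) applied to `np.fft.fftfreq` converts frequencies into pulsations. Omitting it rescales the depth, which is the kind of scale issue discussed in the following paragraphs.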

The definition of the Fourier transform may be confusing, because several definitions exist. Instead of pulsations \((\omega _u,\omega _v)\), frequencies \((n_u,n_v)\) can be used. Knowing that \((\omega _u,\omega _v) = 2\pi (n_u,n_v)\), Eq. (37) then becomes:

It is written in [41] that the accuracy of Frankot and Chellappa’s method “relies on a good input scale.” In fact, it only happens that, in a publicly available code of this method, the \(2\pi \) coefficient in (38) is missing.

Since the right-hand side of Eq. (37) is not defined if \((\omega _u,\omega _v) = (0,0)\), Frankot and Chellappa assert that it “simply means that we cannot recover the average value of z without some additional information.” This is true but incomplete, because \(\mathcal {M}_{\text {FC}}\) provides the solution of Eq. (23) up to the addition of a harmonic function over \({\varOmega }\). Saracchini et al. note in [51] that “the homogeneous version of [(23)] is satisfied by an arbitrary linear function of position \(z(u,v) = a\,u+b\,v+c\), which when added to any solution of [(23)] will yield infinitely many additional solutions.” Affine functions are harmonic indeed, but we know from Sect. 2.4 that many other harmonic functions also exist.

Applying \(\mathcal {M}_{\text {FC}}\) to \(\mathbf {g}_{\text {vase}}\) would give the same result as that of Fig. 5d, but much faster, when the reconstruction domain is equal to the whole grid. On the other hand, such a result as that of Fig. 5c could not be reached, since \(\mathcal {M}_{\text {FC}}\) is not designed to manage a non-rectangular domain \({\varOmega }\).

Besides, \(\mathcal {M}_{\text {FC}}\) works well if and only if the surface to be reconstructed is periodic. This clearly appears in the example of Fig. 6: the gradient field \(\mathbf {g}_{\text {face}}\) of a face (see Fig. 6a), estimated via photometric stereo [57], is integrated using \(\mathcal {M}_{\text {FC}}\), which results in the reconstructed surface shown in Fig. 6b. Since the face is non-periodic, but the depth is forced to be the same on the left and right edges of the face, i.e., on the cheek and on the nose, the result is much distorted.

This failure of \(\mathcal {M}_{\text {FC}}\) was first exhibited by Harker and O’Leary in [23], who show on some examples that the solution provided by \(\mathcal {M}_{\text {FC}}\) is not always a minimizer of the functional \(\mathcal {F}_{L_2}(z)\). They moreover explain: “The fact that the solution [of Frankot and Chellappa] is constrained to be periodic leads to a systematic bias in the solution.” (This periodicity is clearly visible on the recovered surface of Fig. 6b.) Harker and O’Leary also conclude that “any approach based on the Euler–Lagrange equation is only valid for a few special cases.” The improvements in \(\mathcal {M}_{\text {FC}}\) that we describe in the next section make this assertion highly questionable.

3.4 Improvements in Frankot and Chellappa’s Method

A first improvement in \(\mathcal {M}_{\text {FC}}\) suggested by Simchony et al. in [52] amounts to solving the discrete approximation (30) of the Poisson equation using the discrete Fourier transform, instead of discretizing the solution (37) of the Poisson Eq. (23).

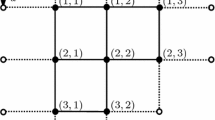

Consider a rectangular 2D domain \({\varOmega }= [0,d_u]\times [0,d_v]\), and choose a lattice of equally spaced points \((u\frac{d_u}{m},v\frac{d_v}{n})\), \(u\in [0,m]\), \(v\in [0,n]\). Let us denote by \(f_{u,v}\) the value of a function \(f : {\varOmega }\rightarrow \mathbb {R}\) at \((u\frac{d_u}{m},v\frac{d_v}{n})\). The standard definition of the discrete Fourier transform of f is as follows, for \(k\in [0,m-1]\) and \(l\in [0,n-1]\):

The inverse transform of (39) reads:

Replacing any term in (30) by its inverse discrete Fourier transform of the form (40), and knowing that the Fourier family is a basis, we obtain:

The expression in brackets of the left-hand side is zero if and only if \((k,l) = (0,0)\). As soon as \((k,l)\ne (0,0)\), Eq. (41) provides us with the expression of \(\hat{z}_{k,l}\):

which is rewritten by Simchony et al. as follows:

Comparing (35) and (39) shows us that \(\omega _u\) corresponds to \(2\pi \frac{k}{m}\) and \(\omega _v\) to \(2\pi \frac{l}{n}\). Using these correspondences, we would expect to be able to identify (37) and (43). This is true if \(\sin \left( 2\pi \frac{k}{m}\right) \) and \(\sin \left( 2\pi \frac{l}{n}\right) \) tend toward 0, and \(\cos ^2\left( \pi \frac{k}{m}\right) \) and \(\cos ^2\left( \pi \frac{l}{n}\right) \) tend toward 1, which occurs if k takes either the first values or the last values inside \([0,m-1]\), and the same for l inside \([0,n-1]\), which is interpreted by Simchony et al. as follows: “At low frequencies our result is similar to the result obtained in [19]. At high frequencies we attenuate the corresponding coefficients since our discrete operator has a low-pass filter response [...] the surface z obtained in [19] may suffer from high frequency oscillations.” Actually, the low values of k and l correspond to the lowest values of \(\omega _u\) and \(\omega _v\) inside \(\mathbb {R}^+\), and the high values of k and l may be interpreted as the lowest absolute values of \(\omega _u\) and \(\omega _v\) inside \(\mathbb {R}^-\).

Moreover, using the inverse discrete Fourier transform (40), the solution of (30) which follows from (42) will be periodic in u with period \(d_u\), and in v with period \(d_v\). This means that \(z_{m,v} = z_{0,v}\), for \(v\in [0,n]\), and \(z_{u,n} = z_{u,0}\), for \(u\in [0,m]\). The discrete Fourier transform is therefore appropriate only for problems which satisfy periodic boundary conditions.
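The discrete variant can be sketched in the same way. The sketch assumes that the discrete Poisson equation uses the 5-point Laplacian and central differences of p and q, with wrap-around indices to express the periodic boundary condition. In the Fourier basis, the 5-point Laplacian then acts as a multiplication by \(2\cos (2\pi k/m) + 2\cos (2\pi l/n) - 4\), which vanishes only for \((k,l) = (0,0)\). The function name is hypothetical, and m and n denote here the numbers of distinct grid points per period.

```python
import numpy as np


def poisson_dft_periodic(p, q):
    """Solve the discrete Poisson equation with periodic boundary conditions via the DFT."""
    m, n = p.shape
    # right-hand side: central differences of p (axis 0 = u) and q (axis 1 = v), wrapped
    g = 0.5 * (np.roll(p, -1, axis=0) - np.roll(p, 1, axis=0)) \
        + 0.5 * (np.roll(q, -1, axis=1) - np.roll(q, 1, axis=1))
    k = np.arange(m)[:, None]
    l = np.arange(n)[None, :]
    # eigenvalues of the periodic 5-point Laplacian in the Fourier basis
    lam = 2.0 * np.cos(2.0 * np.pi * k / m) + 2.0 * np.cos(2.0 * np.pi * l / n) - 4.0
    lam[0, 0] = 1.0  # the (0, 0) coefficient is undetermined; fixed to 0 below
    z_hat = np.fft.fft2(g) / lam
    z_hat[0, 0] = 0.0
    return np.real(np.fft.ifft2(z_hat))


# Usage on an arbitrary (non-integrable) gradient field
rng = np.random.default_rng(0)
p = rng.standard_normal((8, 6))
q = rng.standard_normal((8, 6))
z = poisson_dft_periodic(p, q)
```

Even for a non-integrable field, the returned z satisfies the discrete equation at every pixel, but only in the periodic sense: the neighbors of a pixel on one edge are taken on the opposite edge.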

More relevant improvements in \(\mathcal {M}_{\text {FC}}\) due to Simchony et al. are to suggest the use of the discrete sine or cosine transforms if the problem involves, respectively, Dirichlet or Neumann boundary conditions.

Dirichlet Boundary Condition It is easily checked that any function that is expressed as an inverse discrete sine transform of the following form:

satisfies the homogeneous Dirichlet condition \(f_{u,v} = 0\) on the boundary of the discrete domain \({\varOmega }= [0,m] \times [0,n]\), i.e., for \(u = 0\), \(u = m\), \(v = 0\), and \(v = n\).

To solve (30) on a rectangular domain \({\varOmega }\) with the homogeneous Dirichlet condition \(z_{u,v} = 0\) on \(\partial {\varOmega }\), we can therefore write \(z_{u,v}\) as in (44). This is still possible for a non-homogeneous Dirichlet boundary condition:

A first solution would be to solve a pair of problems. We could search for both a solution \(z^0_{u,v}\) of (30) satisfying the homogeneous Dirichlet boundary condition, and a harmonic function \(h_{u,v}\) on \({\varOmega }\) satisfying the boundary condition (45). Then, \(z^0_{u,v}+h_{u,v}\) is a solution of Eq. (30) satisfying this boundary condition.

But it is much easier to replace \(z_{u,v}\) with \(z'_{u,v}\), such that \(z'_{u,v} = z_{u,v}\) everywhere on \({{\varOmega }}\), except on its boundary \(\partial {\varOmega }\) where \(z'_{u,v} = z_{u,v}-b^D_{u,v}\). The Dirichlet boundary condition satisfied by \(z'_{u,v}\) is homogeneous, so we can actually write \(z'_{u,v}\) under the form (44).

In practice, we just have to change the right-hand side \(g_{u,v}\) of Eq. (30) for the pixels \((u,v) \in {\varOmega }\) which are adjacent to \(\partial {\varOmega }\). Either one or two neighbors of these pixels lie on \(\partial {\varOmega }\). For example, only the neighbor (0, v) of pixel (1, v) lies on \(\partial {\varOmega }\), for \(v \in [2,n-2]\). In such a pixel, the right-hand side of Eq. (30) must be replaced with:

On the other hand, among the four neighbors of the corner pixel (1, 1), both (0, 1) and (1, 0) lie on \(\partial {\varOmega }\). Therefore, the right-hand side of Eq. (30) must be modified as follows, in this pixel:

Knowing that the products of sine functions in (44) form a linearly independent family, we get from (30) and (44), \(\forall (k,l) \in [1,m-1] \times [1,n-1]\):

From (48), we easily deduce \(z'_{u,v}\) using the inverse discrete sine transform (44), thus \(z_{u,v}\).
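The homogeneous Dirichlet case can be sketched with explicit sine matrices. The sketch assumes that the inverse discrete sine transform expands the unknown on the products \(\sin (\pi k u/m)\,\sin (\pi l v/n)\), for \(k\in [1,m-1]\) and \(l\in [1,n-1]\), and that the right-hand side `g` is given at the interior pixels only, already modified near the boundary if the condition is non-homogeneous. Dense matrix products are used for readability; a fast sine transform would be preferred in practice. The function name is hypothetical.

```python
import numpy as np


def poisson_dst_dirichlet(g):
    """Solve the 5-point Poisson equation with z = 0 on the boundary of [0, m] x [0, n].

    g holds the right-hand side at the (m - 1) x (n - 1) interior pixels.
    Returns z at the same interior pixels.
    """
    mi, ni = g.shape
    m, n = mi + 1, ni + 1
    k = np.arange(1, m)
    l = np.arange(1, n)
    Su = np.sin(np.pi * np.outer(k, k) / m)  # sine basis along u; Su @ Su = (m / 2) I
    Sv = np.sin(np.pi * np.outer(l, l) / n)  # sine basis along v; Sv @ Sv = (n / 2) I
    g_hat = (4.0 / (m * n)) * Su @ g @ Sv    # forward sine transform of g
    # eigenvalues of the 5-point Laplacian on the sine basis (always negative)
    lam = 2.0 * np.cos(np.pi * k / m)[:, None] + 2.0 * np.cos(np.pi * l / n)[None, :] - 4.0
    return Su @ (g_hat / lam) @ Sv           # inverse sine transform


# Usage: build a right-hand side from a known z that vanishes on the boundary
rng = np.random.default_rng(0)
z_true = np.zeros((7, 6))  # grid [0, 6] x [0, 5]
z_true[1:-1, 1:-1] = rng.standard_normal((5, 4))
lap = (z_true[2:, 1:-1] + z_true[:-2, 1:-1] + z_true[1:-1, 2:] + z_true[1:-1, :-2]
       - 4.0 * z_true[1:-1, 1:-1])
z_rec = poisson_dst_dirichlet(lap)
```

Unlike the periodic case, no coefficient is undetermined here, which reflects the uniqueness of the solution under a Dirichlet boundary condition.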

Neumann Boundary Condition The reasoning is similar, yet a little bit trickier, in the case of a Neumann boundary condition. Any function that is expressed as an inverse discrete cosine transform of the following form:

satisfies the homogeneous Neumann boundary condition \(\nabla f_{u,v} \cdot \varvec{\eta }_{u,v} = 0\) on \(\partial {\varOmega }\), where \(\varvec{\eta }_{u,v}\) is the outer unit-length normal to \(\partial {\varOmega }\) in pixel (u, v). To solve (30) on a rectangular domain \({\varOmega }\) with the homogeneous Neumann condition on \(\partial {\varOmega }\), we can thus write \(z_{u,v}\) as in (49). Consider now a non-homogeneous Neumann boundary condition:

A similar trick as before consists in defining an auxiliary function \(z''_{u,v}\) such that \(z''_{u,v}\) is equal to \(z_{u,v}\) on \({\varOmega }\), but differs from \(z_{u,v}\) outside \({\varOmega }\) and satisfies the homogeneous Neumann boundary condition on \(\partial {\varOmega }\). Let us first take the example of a pixel \((0,v) \in \partial {\varOmega }\), \(v \in [1,n-1]\). By discretizing (50) using first-order finite differences, it is easily verified that \(z''\) satisfies the homogeneous Neumann boundary condition in such a pixel if \(z''_{-1,v} = z_{-1,v}+b^N_{0,v}\). Similar definitions of \(z''\) arise on the other three edges of \({{\varOmega }}\).

Fig. 7 Reconstructed surfaces obtained by integration of \(\mathbf {g}_{\text {face}}\) (see Fig. 6a), using Simchony, Chellappa and Shao’s method [52], and imposing two different boundary conditions. (a) Since the homogeneous Dirichlet boundary condition \(z = 0\) is clearly false, the surface is much distorted. (b) The (Neumann) natural boundary condition provides a much more realistic result

Let us now take the example of a corner of \({\varOmega }\), for instance pixel (0, 0). Knowing that \(\varvec{\eta }_{0,0} = -\frac{1}{\sqrt{2}}\,[1,1]^\top \), one can easily check, by discretizing (50) using first-order finite differences, that appropriate modifications of z in this pixel are \(z''_{-1,0} = z_{-1,0} + \frac{\sqrt{2}}{2} \, b^N_{0,0}\) and \(z''_{0,-1} = z_{0,-1} + \frac{\sqrt{2}}{2} \, b^N_{0,0}\). With these modifications, the function \(z''_{u,v}\) indeed satisfies the homogeneous Neumann boundary condition in pixel (0, 0).

In practice, we just have to change the right-hand side \(g_{u,v}\) of Eq. (30) for the pixels \((u,v) \in \partial {\varOmega }\). Either one or two neighbors of these pixels lie outside \({\varOmega }\). For example, only the neighbor \((-1,v)\) of pixel (0, v) lies outside \({\varOmega }\), for \(v \in [1,n-1]\). In such a pixel, the right-hand side of Eq. (30) must be replaced with:

A problem with this expression is that \(p_{-1,v}\) is unknown. Using a first-order discretization of the homogeneous Neumann boundary condition (on p, not on z) \(\nabla p_{0,v} \cdot \varvec{\eta }_{0,v} = 0\), we obtain the approximation \(p_{-1,v} \approx p_{0,v}\), and thus (51) is turned into:

On the other hand, among the four neighbors of pixel (0, 0), which is a corner of \({\varOmega }\), both \((0,-1)\) and \((-1,0)\) lie outside \({\varOmega }\). Therefore, the right-hand side of Eq. (30) must be modified as follows:

Knowing that the products of cosine functions in (49) form a linearly independent family, we get from (30) and (49), \(\forall (k,l) \in [0,m-1] \times [0,n-1]\):

except for \((k,l) = (0,0)\). Indeed, we cannot determine the coefficient \(\bar{\bar{z}}''_{0,0}\), which simply means that the solution of the Poisson equation using a Neumann boundary condition (as, for instance, the natural boundary condition) is computable up to an additive constant, because the term which corresponds to the coefficient \(\bar{\bar{f}}_{0,0}\) in the double sum of (49) does not depend on (u, v). Keeping this point in mind, we can deduce \(z''_{u,v}\) from (54) using the inverse discrete cosine transform (49), thus \(z_{u,v}\).
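The cosine-based resolution, including the undetermined coefficient at \((k,l) = (0,0)\), can be sketched in the same spirit. This is a minimal illustration under assumptions: Eq. (30) is taken to be the five-point stencil, the homogeneous Neumann condition is discretized by first-order differences (a pixel outside \({\varOmega }\) takes the value of its neighbor on \(\partial {\varOmega }\)), and the basis in (49) is assumed to be \(\cos (\pi k (2u+1)/(2m))\,\cos (\pi l (2v+1)/(2n))\). The function name is hypothetical, and the additive constant is fixed by imposing a zero mean.

```python
import numpy as np

def solve_poisson_neumann(g):
    """Solve the five-point Poisson equation on an m x n grid with a
    homogeneous Neumann condition (values mirrored across the border).

    The solution is defined up to an additive constant; it is returned
    with zero mean. g is assumed to have zero mean (compatibility).
    """
    m, n = g.shape
    u = np.arange(m)
    v = np.arange(n)
    # Cosine matrices: Cu[u, k] = cos(pi*k*(2u+1)/(2m)).
    Cu = np.cos(np.pi * np.outer(2 * u + 1, u) / (2 * m))
    Cv = np.cos(np.pi * np.outer(2 * v + 1, v) / (2 * n))
    # Cu.T @ Cu = diag(m, m/2, ..., m/2): weights that invert the expansion.
    wu = np.full(m, 2.0 / m); wu[0] = 1.0 / m
    wv = np.full(n, 2.0 / n); wv[0] = 1.0 / n
    g_hat = wu[:, None] * (Cu.T @ g @ Cv) * wv[None, :]
    # Eigenvalues of the mirrored five-point Laplacian; zero at (k, l) = (0, 0).
    lam = (2 * np.cos(np.pi * u / m) - 2)[:, None] \
        + (2 * np.cos(np.pi * v / n) - 2)[None, :]
    lam[0, 0] = 1.0            # avoid 0/0; this coefficient is set below
    z_hat = g_hat / lam
    z_hat[0, 0] = 0.0          # free additive constant, chosen as zero mean
    return Cu @ z_hat @ Cv.T
```

Setting `z_hat[0, 0]` to any other value only shifts the whole surface, which reflects the fact that the coefficient of index \((0,0)\) cannot be determined.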

Accordingly, the method \(\mathcal {M}_{\text {SCS}}\) designed by Simchony, Chellappa and Shao in [52] works well even in the case of a non-periodic surface (see Fig. 7). Knowing moreover that this method is as fast as \(\mathcal {M}_{\text {FC}}\), we conclude that it considerably improves Frankot and Chellappa’s original method.

On the other hand, the useful property of the solutions (44) and (49), i.e., that they satisfy a homogeneous Dirichlet or Neumann boundary condition, is obviously valid only if the reconstruction domain \({\varOmega }\) is rectangular. This trick hence cannot be used for any other shape of domain \({\varOmega }\). It is claimed in [52] that embedding techniques can extend \(\mathcal {M}_{\text {SCS}}\) to non-rectangular domains, but this is neither detailed nor really proved. As a consequence, such a result as that of Fig. 5c could not be reached by applying \(\mathcal {M}_{\text {SCS}}\) to the gradient field \(\mathbf {g}_{\text {vase}}\): the result would be the same as that of Fig. 5d.

4 Main Normal Integration Methods

4.1 A List of Expected Properties

This may appear as a truism, but a basic requirement of 3D-reconstruction is accuracy. However, evaluating and comparing 3D-reconstruction methods is a difficult challenge. Firstly, some methods may require more data than others, which biases the comparison. Secondly, the choice of the benchmark usually has a great influence on the final ranking. Finally, it is hard to implement a method just from its description, which in practice gives a substantial advantage to the designers of a ranking process, whatever their methodology, when they also promote their own method. In Sect. 4.3, we will review the main existing normal integration methods. However, in accordance with these remarks, we do not intend to evaluate their accuracy. We will instead quote their main features.

In view of the detailed reviews of the methods of Horn and Brooks and of Frankot and Chellappa (see Sect. 3), we may expect, apart from accuracy, five other properties from any normal integration method:

- \(\mathcal {P}_{\text {Fast}}\): The desired method should be as fast as possible.

- \(\mathcal {P}_{\text {Robust}}\): It should be robust to a noisy normal field.Footnote 7

- \(\mathcal {P}_{\text {FreeB}}\): The method should be able to handle a free boundary. Accordingly, each method aimed at solving the Poisson Eq. (23) should be able to solve the natural boundary condition (30) at the same time.

- \(\mathcal {P}_{\text {Disc}}\): The method should preserve the depth discontinuities. This property could allow us, for example, to use photometric stereo on a whole image, without segmenting the scene into different parts without discontinuity.

- \(\mathcal {P}_{\text {NoRect}}\): The method should be able to work on a non-rectangular domain. This happens for example when photometric stereo is applied to an object with background. This property could partly remedy a method which would not satisfy \(\mathcal {P}_{\text {Disc}}\), knowing that segmentation is usually easier to manage than preserving the depth discontinuities.

An additional property would also be much appreciated:

- \(\mathcal {P}_{\text {NoPar}}\): The method should have no parameter to tune (only the critical parameters are involved here). In practice, tuning more than one parameter often means that an expert of the method is needed. One parameter is often considered as acceptable, but no parameter is even better.

4.2 Integration and Integrability

Among the required properties, we did not explicitly mention the ability of a method to deal with non-integrable normal fields, but this is implicitly expected through \(\mathcal {P}_{\text {Robust}}\) and \(\mathcal {P}_{\text {Disc}}\). In other words, the two sources of non-integrability that we consider are noise and depth discontinuities. A normal field estimated using shape-from-shading could be very far from being integrable, because of the ill-posedness of this technique, to such a point that integrability is sometimes used to disambiguate the problem [19, 29]. The uncalibrated photometric stereo problem is also ill-posed and can be disambiguated by imposing integrability [63]. However, we are above all interested in calibrated photometric stereo with \(n \ge 3\) images, which is well posed without resorting to integrability. An error in the intensity of one light source is enough to cause a bias [31], and outliers may appear in shadow regions [49], thus providing normal fields that can be highly non-integrable, but we argue that such defects do not have to be compensated by the integration method itself. In other words, we suppose that the only outliers of the normal field we want to integrate are located on depth discontinuities.

In order to know whether a normal integration method satisfies \(\mathcal {P}_{\text {Disc}}\), a shape like \(\mathcal {S}_{\text {vase}}\) (see Fig. 5a) is well suited, but a practical mistake must be avoided, which is not obvious. A discrete approximation of the integrability term \(\iint _{(u,v) \in {\varOmega }} [\partial _v p(u,v) -\partial _u q(u,v)]^2 \,\mathrm {d}u\,\mathrm {d}v\), which is used in [29] to measure the departure of a gradient field \([p,q]^\top \) from being integrable, is as follows:

\[E_{\text {int}} = \sum _{(u,v) \in {\varOmega }_3} \left[ p_{u,v+1} - p_{u,v} - q_{u+1,v} + q_{u,v} \right] ^2 \qquad (55)\]

where \({\varOmega }_3\) denotes the set of pixels \((u,v)\in {\varOmega }\) such that \((u,v+1)\) and \((u+1,v)\) are inside \({\varOmega }\). Let us suppose in addition that the discrete values \(p_{u,v}\) and \(q_{u,v}\) are numerically approximated using the following finite differences:

Substituting the expressions (56) of \(p_{u,v}\) and \(q_{u,v}\) into (55) always yields \(E_{\text {int}} = 0\). Using such a numerically approximated gradient field is thus biased, since it is integrable even in the presence of discontinuities,Footnote 8 whereas for instance, \(E_{\text {int}} = 390\) in the case of \(\mathbf {g}_{\text {vase}}\).
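This pitfall is easy to reproduce numerically. The sketch below is only illustrative and rests on two assumptions, since Eqs. (55) and (56) are not reproduced here: the integrability term is taken to be the sum over \({\varOmega }_3\) of \([p_{u,v+1} - p_{u,v} - q_{u+1,v} + q_{u,v}]^2\), and the finite differences are taken to be forward differences, \(p_{u,v} = z_{u+1,v} - z_{u,v}\) and \(q_{u,v} = z_{u,v+1} - z_{u,v}\). The function names are hypothetical.

```python
import numpy as np

def forward_gradient(z):
    """Forward differences (assumed form of Eq. (56)); the first array axis is u.

    Entries that would need a pixel outside the grid are left at zero and
    are never read by integrability_energy below.
    """
    p = np.zeros_like(z)
    q = np.zeros_like(z)
    p[:-1, :] = z[1:, :] - z[:-1, :]    # p[u,v] = z[u+1,v] - z[u,v]
    q[:, :-1] = z[:, 1:] - z[:, :-1]    # q[u,v] = z[u,v+1] - z[u,v]
    return p, q

def integrability_energy(p, q):
    """Assumed form of Eq. (55), summed over pixels whose neighbors
    (u, v+1) and (u+1, v) are inside the rectangular grid."""
    r = p[:-1, 1:] - p[:-1, :-1] - q[1:, :-1] + q[:-1, :-1]
    return float(np.sum(r ** 2))

# A depth map with a sharp step of height 5 between columns 3 and 4.
z = 0.1 * np.arange(8, dtype=float)[:, None] + np.zeros((8, 8))
z[:, 4:] += 5.0
p, q = forward_gradient(z)
E_step = integrability_energy(p, q)     # vanishes up to rounding, despite the step
```

The energy vanishes because each term of the sum telescopes to zero once \(p\) and \(q\) are differences of the same array \(z\). A gradient field meant to test \(\mathcal {P}_{\text {Disc}}\) should therefore come from analytical derivatives or from measurements, not from differencing the ground-truth depth.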

4.3 Most Representative Normal Integration Methods

The problem of normal integration is sometimes considered as solved, because its mathematical formulation is well established (see Sect. 2), but we will see that none of the existing methods simultaneously satisfies all the required properties. In 1996, Klette and Schlüns stated that “there is a remarkable deficiency of literature about integration techniques, at least in computer vision” [37]. Many contributions have appeared afterward, but a detailed review is still missing.

The way to cope with a possibly non-integrable normal field was seen as a property of primary importance in the first papers on normal integration. The most obvious way to solve the problem amounts to using different paths in the integrals of (5), (9) or (18) and averaging the different values. Apart from this approach, which has given rise to several heuristics [12, 26, 60], we propose to separate the main existing normal integration methods into two classes, depending on whether they care about discontinuities or not.

4.3.1 Methods Which Do Not Care About Discontinuities

According to the discussion conducted in Sect. 2.4, the most natural way to overcome non-integrability is to solve the Poisson Eq. (23). This approach has given rise to the method \(\mathcal {M}_{\text {HB}}\) (see Sect. 3.1), pioneered by Ikeuchi in [33] and then detailed by Horn and Brooks in [29], which has been the source of inspiration of several subsequent works. The method \(\mathcal {M}_{\text {HB}}\) satisfies the property \(\mathcal {P}_{\text {Robust}}\) (much better than the heuristics cited above), as well as \(\mathcal {P}_{\text {NoPar}}\) (there is no parameter), \(\mathcal {P}_{\text {NoRect}}\) and \(\mathcal {P}_{\text {FreeB}}\). A drawback of \(\mathcal {M}_{\text {HB}}\) is that it does not satisfy \(\mathcal {P}_{\text {Fast}}\), since it uses a Jacobi iteration to solve a large linear system whose size is equal to the number of pixels inside \(\mathring{{\varOmega }} = {\varOmega }\backslash \partial {\varOmega }\). Unsurprisingly, \(\mathcal {P}_{\text {Disc}}\) is not satisfied by \(\mathcal {M}_{\text {HB}}\), since this method does not care about discontinuities.
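To make the cost of such an iterative scheme concrete, here is a generic Jacobi iteration for the five-point Poisson equation. It is a minimal sketch under assumptions, not the exact scheme of \(\mathcal {M}_{\text {HB}}\) described in Sect. 3.1: the stencil is assumed, the boundary values are simply kept fixed (whereas \(\mathcal {M}_{\text {HB}}\) handles a free boundary), and the function name is hypothetical.

```python
import numpy as np

def jacobi_poisson(g, z0, n_iter):
    """Jacobi iteration for z[u+1,v] + z[u-1,v] + z[u,v+1] + z[u,v-1] - 4 z[u,v] = g[u,v]
    at the interior pixels. Border values of z0 are kept unchanged
    (fixed boundary, an assumption made for this illustration)."""
    z = z0.astype(float)
    for _ in range(n_iter):
        z_new = z.copy()
        # Each interior pixel is replaced by the value that satisfies its own
        # equation, using the neighbors from the previous iterate.
        z_new[1:-1, 1:-1] = 0.25 * (
            z[2:, 1:-1] + z[:-2, 1:-1] + z[1:-1, 2:] + z[1:-1, :-2]
            - g[1:-1, 1:-1]
        )
        z = z_new
    return z
```

Each sweep only propagates information by one pixel, so the number of iterations needed grows with the size of the domain, which is why \(\mathcal {P}_{\text {Fast}}\) is difficult to satisfy with this kind of solver.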

Frankot and Chellappa address shape-from-shading using a method which “also can be used as an integrator” [19]. The gradient field is projected on a set \(\mathcal {I}\) of integrable vector fields \(\nabla z\). In practice, the set of functions z is spanned by the Fourier basis. The method \(\mathcal {M}_{\text {FC}}\) (see Sect. 3.3) not only satisfies \(\mathcal {P}_{\text {Robust}}\) and \(\mathcal {P}_{\text {NoPar}}\), but also \(\mathcal {P}_{\text {Fast}}\), since it is non-iterative and, thanks to the FFT algorithm, much faster than \(\mathcal {M}_{\text {HB}}\). On the other hand, the reconstruction domain is implicitly supposed to be rectangular, even if the following is claimed: “The Fourier expansion could be formulated on a finite lattice instead of a periodic lattice. The mathematics are somewhat more complicated [...] and more careful attention could then be paid to boundary conditions.” Hence, \(\mathcal {P}_{\text {NoRect}}\) is not really satisfied, any more than \(\mathcal {P}_{\text {Disc}}\). Finally, \(\mathcal {P}_{\text {FreeB}}\) is not satisfied, since the solution is constrained to be periodic.Footnote 9
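The projection on the Fourier basis can be sketched in a few lines. This is a minimal illustration of the commonly used form of the Frankot and Chellappa integrator, assuming continuous-derivative frequency responses \(j\omega _u\) and \(j\omega _v\) and a periodic surface; the exact formula of Sect. 3.3 is not reproduced here, and the function name is hypothetical.

```python
import numpy as np

def frankot_chellappa(p, q):
    """Least-squares projection of the gradient field (p, q) onto integrable
    fields, computed in the Fourier domain (periodic boundary assumed).

    p approximates dz/du (first array axis), q approximates dz/dv (second axis).
    The result has zero mean, since the constant of integration is free.
    """
    m, n = p.shape
    wu = 2 * np.pi * np.fft.fftfreq(m)[:, None]   # angular frequencies along u
    wv = 2 * np.pi * np.fft.fftfreq(n)[None, :]   # angular frequencies along v
    P = np.fft.fft2(p)
    Q = np.fft.fft2(q)
    denom = wu ** 2 + wv ** 2
    denom[0, 0] = 1.0                             # avoid 0/0 at the zero frequency
    Z = (-1j * wu * P - 1j * wv * Q) / denom
    Z[0, 0] = 0.0                                 # fix the additive constant
    return np.real(np.fft.ifft2(Z))
```

The whole computation costs three FFTs, which explains why \(\mathcal {P}_{\text {Fast}}\) is satisfied, while the implicit periodicity of the FFT is the reason why \(\mathcal {P}_{\text {FreeB}}\) is not.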

Simchony, Chellappa and Shao suggest in [52] a non-iterative way to solve the Poisson Eq. (23) using direct analytical methods. It is noteworthy that the resolution of the discrete Poisson equation described in Sect. 3.4, in the case of a Dirichlet or Neumann boundary condition, does not exactly match the original description in [52], but is rather intended to be pedagogic. As observed by Lee in [39], the discretized Laplacian operator on a rectangular domain is a symmetric tridiagonal Toeplitz matrix if a Dirichlet boundary condition is used, whose eigenvalues are analytically known. Simchony, Chellappa and Shao show how to use the discrete sine transform to diagonalize such a matrix. They design an efficient solver for Eq. (23), which satisfies \(\mathcal {P}_{\text {Fast}}\), \(\mathcal {P}_{\text {Robust}}\) and \(\mathcal {P}_{\text {NoPar}}\). Another extension of \(\mathcal {M}_{\text {SCS}}\) to Neumann boundary conditions using the discrete cosine transform is suggested, which allows \(\mathcal {P}_{\text {FreeB}}\) to be satisfied. Even if embedding techniques are supposed to generalize this method to non-rectangular domains, \(\mathcal {P}_{\text {NoRect}}\) is not satisfied in practice. Neither is \(\mathcal {P}_{\text {Disc}}\).

In [30, 31], Horovitz and Kiryati improve \(\mathcal {M}_{\text {HB}}\) in two ways. They show how to incorporate the depth in some sparse control points, in order to correct a possible bias in the reconstruction. They also design a coarse-to-fine multigrid computation, in order to satisfy \(\mathcal {P}_{\text {Fast}}\).Footnote 10 This acceleration technique, however, requires a parameter to be tuned, which loses \(\mathcal {P}_{\text {NoPar}}\).

As in [19], Petrovic et al. enforce integrability [47], but the normal field is directly handled under its discrete writing, in a Bayesian framework. “Imposing the integrability over elementary loops in [a] graphical model will correct the irregularities in the data.” An iterative algorithm known as belief propagation is used to converge toward the MAP estimate of the unknown surface. It is claimed that “discontinuities are maintained,” but too few results are provided to evaluate \(\mathcal {P}_{\text {Disc}}\).

In [36], Kimmel and Yavneh show how to accelerate the multigrid method designed by Horovitz and Kiryati [30] in the case where “the surface height at specific coordinates or along a curve” is known, using an algebraic multigrid approach. Basically, their method has the same properties as [30], although \(\mathcal {P}_{\text {Fast}}\) is even better satisfied.

An alternative derivation of Eq. (37) is given by Wei and Klette in [56], in which the preliminary derivation of the Euler–Lagrange Eq. (23) associated to \(\mathcal {F}_{L_2}(z)\) is not needed. They claim that “to solve the minimization problem, we employ the Fourier transform theory rather than variational approach to avoid using the initial and boundary conditions,” but since a periodic boundary condition is actually used instead, \(\mathcal {P}_{\text {FreeB}}\) is not satisfied. They also add two regularization terms to the functional \(\mathcal {F}_{L_2}(z)\) given in (21), “in order to improve the accuracy and robustness.” The property \(\mathcal {P}_{\text {NoPar}}\) is thus lost. On the other hand, even if Wei and Klette note that “[\(\mathcal {M}_{\text {HB}}\)] is very sensitive to abrupt changes in orientation, i.e., there are large errors at the object boundary,” their method does not satisfy \(\mathcal {P}_{\text {Disc}}\) either, whereas we will observe that losing \(\mathcal {P}_{\text {NoPar}}\) is often the price to pay to satisfy \(\mathcal {P}_{\text {Disc}}\).

Another method inspired by \(\mathcal {M}_{\text {FC}}\) is that of Kovesi [38]. Instead of projecting the given gradient field on a Fourier basis, Kovesi suggests computing the correlations of this gradient field with the gradient fields of a bank of shapelets, which are in practice a family of Gaussian surfaces.Footnote 11 This method globally satisfies the same properties as \(\mathcal {M}_{\text {FC}}\) but, in addition, it can be applied to an incomplete normal field, i.e., to normals whose tilts are known up to a certain ambiguity.Footnote 12 Although photometric stereo computes the normals without ambiguity, this peculiarity could indeed be useful when only a couple of images is used, or a fortiori a single image (shape-from-shading), since the problem is not well posed in both these cases.

In [27], Ho et al. derive from (20) the eikonal equation \(\Vert \nabla z\Vert ^2 = p^2+\,q^2\), and aspire to use the fast marching method for its resolution. Unfortunately, this method requires that the unknown z has a unique global minimum over \({\varOmega }\). Thereby, a more general eikonal equation \(\Vert \nabla (z+\lambda \, f)\Vert ^2 = (p+\lambda \, \partial _u f)^2+(q+\lambda \, \partial _v f)^2\) is solved, where f is a known function and the parameter \(\lambda \) has to be tuned so that \(z+\lambda \, f\) has a unique global minimum. The main advantage is that \(\mathcal {P}_{\text {Fast}}\) is (widely) satisfied. Nothing is said about robustness, but we guess that error accumulation occurs as the depth is computed level set by level set.

As already explained in Sect. 3.2, Durou and Courteille improve \(\mathcal {M}_{\text {HB}}\) in [16], in order to better satisfy \(\mathcal {P}_{\text {FreeB}}\).Footnote 13 A very similar improvement in \(\mathcal {M}_{\text {HB}}\) is proposed by Harker and O’Leary in [23]. The latter loses the ability to handle any reconstruction domain, whereas the reformulation of the problem as a Sylvester equation provides two appreciable improvements. First, it deals with matrices of the same size as the initial (regular) grid and resorts to very efficient solvers dedicated to Sylvester equations, thus satisfying \(\mathcal {P}_{\text {Fast}}\). Moreover, any form of discrete derivatives is allowed. In [24, 25], Harker and O’Leary moreover propose several variants including regularization, which are still written as Sylvester equations, but one of them loses the property \(\mathcal {P}_{\text {FreeB}}\), whereas the others lose \(\mathcal {P}_{\text {NoPar}}\).

In [17], Ettl et al. propose a method specifically designed for deflectometry, which aims at measuring “height variations as small as a few nanometers” and delivers normal fields “with small noise and curl,” but the normals are provided on an irregular grid. This is why Ettl et al. search for an interpolating/approximating surface rather than for one unknown value per sample. Of course, this method is highly parametric, but \(\mathcal {P}_{\text {NoPar}}\) is still satisfied, since the parameters are the unknowns. Its main problem is that \(\mathcal {P}_{\text {Fast}}\) is rarely satisfied, depending on the number of parameters that are used.

The fast marching method [27] is improved in [20] by Galliani et al. in three ways. First, the method by Ho et al. is shown to be inaccurate, due to the use of analytical derivatives \(\partial _u f\) and \(\partial _v f\) in the eikonal equation, instead of discrete derivatives. An upwind scheme is more appropriate to solve such a PDE. Second, the new method is more stable and the choice of \(\lambda \) is no longer a cause for concern. This implies that \(\mathcal {P}_{\text {NoPar}}\) is satisfied de facto. Finally, any form of domain \({\varOmega }\) can be handled, but it is not clear whether \(\mathcal {P}_{\text {NoRect}}\) was already satisfied by the former method. Not surprisingly, this new method is not robust, even if its robustness is improved in a more recent paper [6], but it can be used as initialization for more robust methods based, for instance, on quadratic regularization [7].

The integration method proposed by Balzer in [9] is based on second-order shape derivatives, allowing for the use of a fast Gauss–Newton algorithm. Hence, \(\mathcal {P}_{\text {Fast}}\) is satisfied. A careful meshing of the problem, as well as the use of a finite element method, allows \(\mathcal {P}_{\text {NoRect}}\) to be satisfied. However, for the very same reason, \(\mathcal {P}_{\text {NoPar}}\) is not satisfied. Moreover, the method is limited to smooth surfaces, and therefore \(\mathcal {P}_{\text {Disc}}\) cannot be satisfied. Finally, \(\mathcal {P}_{\text {Robust}}\) can be achieved thanks to a preliminary filtering step. Balzer and Mörwald design in [10] another finite element method, where the surface model is based on B-splines. It satisfies the same properties as the previous method.Footnote 14

In [61], Xie et al. deform a mesh “to let its facets follow the demanded normal vectors,” resorting to discrete geometry processing. As a nonparametric surface model is used, \(\mathcal {P}_{\text {FreeB}}\), \(\mathcal {P}_{\text {NoPar}}\) and \(\mathcal {P}_{\text {NoRect}}\) are satisfied. In order to avoid oversmoothing, sharp features can be preserved. However, \(\mathcal {P}_{\text {Disc}}\) is not addressed. On the other hand, \(\mathcal {P}_{\text {Fast}}\) is not satisfied since the proposed method alternates local and global optimization.

In [62], Yamaura et al. design a new method based on B-splines, which has the same properties as that proposed in [10]. But since the latter “relies on second-order partial differential equations, which is inefficient and unnecessary, as normal vectors consist of only first-order derivatives,” a simpler formalism with higher performance is proposed. Moreover, a nice application to surface editing is exhibited.

4.3.2 Methods Which Care About Discontinuities

The first work which really addresses the problem of \(\mathcal {P}_{\text {Disc}}\) is by Karaçali and Snyder [34, 35], who show how to define a new orthonormal basis of integrable vector fields which can incorporate depth discontinuities. They moreover show how to detect such discontinuities, in order to partially enforce integrability. The designed method thus satisfies \(\mathcal {P}_{\text {Disc}}\), as well as \(\mathcal {P}_{\text {Robust}}\) and \(\mathcal {P}_{\text {FreeB}}\), but \(\mathcal {P}_{\text {Fast}}\) is lost, despite the use of a block processing technique inspired by the work of Noakes et al. [43, 45]. In accordance with a previous remark, since this is often the price to pay to satisfy \(\mathcal {P}_{\text {Disc}}\), \(\mathcal {P}_{\text {NoPar}}\) is also lost.

In [1], Agrawal et al. consider the pixels as a weighted graph, such that the weights are of the form \(p_{u,v+1}-p_{u,v}-q_{u+1,v}+q_{u,v}\). Each edge whose weight is greater than a threshold is cut. A minimal number of suppressed edges are then restored, in order to reconnect the graph while minimizing the total weight. As soon as an edge is still missing, one gradient value \(p_{u,v}\) or \(q_{u,v}\) is considered as possibly corrupted. It is shown how these suspected gradient values can be corrected, in order to enforce integrability “with the important property of local error confinement.” Neither \(\mathcal {P}_{\text {FreeB}}\) nor \(\mathcal {P}_{\text {NoPar}}\) is satisfied, and \(\mathcal {P}_{\text {Fast}}\) is not guaranteed either, even if the method is non-iterative. Moreover, the following is asserted in [49]: “Under noise, the algorithm in [1] confuses correct gradients as outliers and performs poorly.” Finally, \(\mathcal {P}_{\text {Disc}}\) may be satisfied since strict integrability is no longer uniformly imposed over the entire gradient field.

In [2], Agrawal et al. propose a general framework “based on controlling the anisotropy of weights for gradients during the integration.”Footnote 15 They are much inspired by classical image restoration techniques. It is shown how the (isotropic) Laplacian operator in Eq. (23) must be modified using “spatially varying anisotropic kernels,” thus obtaining four methods: two based on robust estimation, one on regularization, and one on anisotropic diffusion.Footnote 16 A homogeneous Neumann boundary condition \(\nabla z \cdot \varvec{\eta } = 0\) is assumed, which looks rather unrealistic. Thus, the four proposed methods satisfy neither \(\mathcal {P}_{\text {FreeB}}\), nor \(\mathcal {P}_{\text {Fast}}\), nor \(\mathcal {P}_{\text {NoPar}}\). Nevertheless, special attention is given to satisfying \(\mathcal {P}_{\text {Robust}}\) and \(\mathcal {P}_{\text {Disc}}\).

A similar method to the first one proposed in [2] is designed by Fraile and Hancock in [18]. A minimum spanning tree is constructed from the same graph of pixels as in [1], except that the weights are different (several weights are tested). The integral in (6) along the unique path joining each pixel to a root pixel is then computed. Of course, this method is less robust than those based on quadratic regularization (or than the weighted quadratic regularization proposed in [2]), since “the error due to measurement noise propagates along the path,” and it is rather slow because of the search for a minimum spanning tree. But, depending on which weights are used, it could preserve depth discontinuities: in such a case as that of Fig. 5a, each pixel could be reached from a root pixel without crossing any discontinuity.

In [59], Wu and Tang try to find the best compromise between integrability and discontinuity preservation. In order to segment the scene into pieces without discontinuities, “one plausible method [...] is to identify where the integrability constraint is violated,” but “in real case, [this] may produce very poor discontinuity maps rendering them unusable at all.” A probabilistic method using the expectation-maximization (EM) algorithm is thus proposed, which provides a weighted discontinuity map. The alternating iterative optimization is very slow and a parameter is used, but this approach is promising, even if the evaluation of the results remains qualitative.

In [41, 42], Ng et al. do not enforce integrability over the entire domain, because with such an enforcement “sharp features will be smoothed out and surface distortion will be produced.” Since “either sparse or dense, residing on a 2D regular (image) or irregular grid space” gradient fields may be integrated, it is concluded that “a continuous formulation for surface-from-gradients is preferred.” Gaussian kernel functions are used, in order to linearize the problem and to avoid the need for extra knowledge on the boundary.Footnote 17 Unfortunately, at least two parameters must be tuned. Also \(\mathcal {P}_{\text {Fast}}\) is not satisfied, any more than \(\mathcal {P}_{\text {FreeB}}\), as shown in [10] on the basis of several examples. On the other hand, the proposed method outputs “continuous 3D representation not limited to a height field.”

In [49], Reddy et al. propose a method specifically designed to handle heavily corrupted gradient fields, which combines “the best of least squares and combinatorial search to handle noise and correct outliers, respectively.” Even if it is claimed that “\(L_1\) solution performs well across all scenarios without the need for any tunable parameter adjustments,” \(\mathcal {P}_{\text {NoPar}}\) is not satisfied in practice. Neither is \(\mathcal {P}_{\text {FreeB}}\).

In [15], Durou et al. are mainly concerned with \(\mathcal {P}_{\text {Disc}}\). Knowing that quadratic regularization works well in the case of smooth surfaces, but is not well adapted to discontinuities, the use of other regularizers, or of other variational models inspired by image processing, as in [2], allows \(\mathcal {P}_{\text {Disc}}\) to be satisfied. This is detailed in the companion paper [48].

The integration method proposed in [50, 51] by Saracchini et al.Footnote 18 is a multi-scale version of \(\mathcal {M}_{\text {HB}}\). A system is solved at each scale using a Gauss–Seidel iteration in order to satisfy \(\mathcal {P}_{\text {Fast}}\). Since reliability in the gradient is used as local weight, “each equation can be tuned to ignore bad data samples and suspected discontinuities,” thus allowing \(\mathcal {P}_{\text {Disc}}\) to be satisfied. Finally, setting the weights outside \({\varOmega }\) to zero allows \(\mathcal {P}_{\text {FreeB}}\) and \(\mathcal {P}_{\text {NoRect}}\) to be satisfied as well. However, this method “assumes that the slope and weight maps are given.” Yet such a weight map is a crucial clue, and the following assertion somehow avoids the problem: “practical integration algorithms require the user to provide a weight map.”
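The role played by a weight map can be illustrated with a small weighted least-squares integrator. This is only a sketch of the general idea, assuming forward-difference equations and a dense solver; it is not the multi-scale Gauss–Seidel scheme of [50, 51], and the function name, the weight arrays `wp` and `wq`, and the gauge `z[0, 0] = 0` are all hypothetical choices made for the illustration.

```python
import numpy as np

def weighted_integration(p, q, wp, wq):
    """Minimize sum wp*(z[u+1,v]-z[u,v]-p[u,v])^2 + sum wq*(z[u,v+1]-z[u,v]-q[u,v])^2.

    Equations with zero weight are dropped, so suspected discontinuities
    (or pixels outside the domain) simply do not constrain the surface.
    The additive constant is fixed by imposing z[0, 0] = 0.
    """
    m, n = p.shape
    idx = np.arange(m * n).reshape(m, n)
    rows, rhs = [], []

    def add_equation(i, j, value, w):
        # One weighted equation sqrt(w) * (z[j] - z[i]) = sqrt(w) * value.
        if w > 0:
            row = np.zeros(m * n)
            row[j], row[i] = np.sqrt(w), -np.sqrt(w)
            rows.append(row)
            rhs.append(np.sqrt(w) * value)

    for u in range(m):
        for v in range(n):
            if u + 1 < m:
                add_equation(idx[u, v], idx[u + 1, v], p[u, v], wp[u, v])
            if v + 1 < n:
                add_equation(idx[u, v], idx[u, v + 1], q[u, v], wq[u, v])

    gauge = np.zeros(m * n)
    gauge[0] = 1.0                      # z[0, 0] = 0
    rows.append(gauge)
    rhs.append(0.0)
    z, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return z.reshape(m, n)
```

With uniform weights, a gradient field that does not encode a depth jump forces the solver to smooth the jump away. Setting the weights to zero on the edges that cross the discontinuity removes the conflicting equations, provided the two sides remain connected elsewhere, and this is precisely the information that the user is asked to supply.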

A similar approach is followed by Wang et al. in [55], but the weight map is binary and automatically computed. In addition to the gradient map, the photometric stereo images themselves are required. Eight cues are used by two SVM classifiers, which have to be trained using synthetic labeled data. Even if the results are nice, the proposed method seems rather difficult to manage in practice, and clearly loses \(\mathcal {P}_{\text {Fast}}\) and \(\mathcal {P}_{\text {NoPar}}\).

In [4, 5], Badri et al. resort to \(L_p\) norms, \(p\in \,]0,1[\). As p decreases, the \(L_0\) norm is approximated, which is a sparse estimator well adapted to outliers. Indeed, the combination of four terms allows Badri et al. to design a method which simultaneously handles noise and outliers, thus ensuring that \(\mathcal {P}_{\text {Robust}}\) and \(\mathcal {P}_{\text {Disc}}\) are satisfied. However, since the problem becomes non-convex, the proposed half-quadratic resolution is iterative and requires a good initialization: \(\mathcal {P}_{\text {Fast}}\) is lost. \(\mathcal {P}_{\text {NoPar}}\) is not satisfied either. Finally, each iteration resorts to FFT, which implies a rectangular domain and a periodic boundary condition; hence, \(\mathcal {P}_{\text {FreeB}}\) and \(\mathcal {P}_{\text {NoRect}}\) are not satisfied either.

4.4 Summary of the Review

Our discussion on the most representative methods of integration is summarized in Table 1, where the methods are listed in chronological order. It appears that none of them satisfies all the required properties, which is not surprising. Moreover, even if accuracy is the most basic property of any 3D-reconstruction technique, let us recall that it would have been impossible in practice to numerically compare all these methods.

In view of Table 1, almost every method differs from all the others, regarding the six selected properties. Of course, it may appear that such a binary (\(+\)/−) table is hardly informative, but more levels in each criterion would have led to more arbitrary scores. On the other hand, the number of \(+\) should not be considered as a global score for a given method: it happens that a method perfectly satisfies a subset of properties, while it does not care at all about the others.

5 Conclusion and Perspectives

Even if robustness to outliers was not selected in our list of required properties, let us cite a paper specifically dedicated to this problem. In [14], Du et al. compare the \(L_2\) and \(L_1\) norms, as well as a number of M-estimators, in the presence of outliers in the normal field: \(L_1\) is shown to be the best remedy overall. This paper being worthwhile, one may wonder why it does not appear in Table 1. On the one hand, even if \(\mathcal {P}_{\text {Robust}}\) is satisfied at best, none of the other criteria are considered. On the other hand, let us recall why we did not select robustness to outliers as a pertinent feature: the presence of outliers in the normal field does not have to be compensated by the integration method. In [5], it is said that “[photometric stereo] can fail due to the presence of shadows and noise,” but recall that photometric stereo can be robust to outliers [32, 58].

Another property was ignored: whether the depth can be fixed at some points or not. As integration is a well-posed problem without any additional knowledge on the solution, we considered that this property is appreciable, although not required. Let us however quote, once again, the papers by Horovitz and Kiryati [31] and by Kimmel and Yavneh [36], in which this problem is specifically dealt with.

Some other works on normal integration have not been mentioned in our review, since they address other problems or do not face the same challenges. Let us first cite a work by Balzer [8], which highlights a specific problem with the normals delivered by deflectometry: as noted by Ettl et al. in [17], such normals are usually not noisy, but Balzer points out that they are distance-dependent, which means that the gradient field \(\mathbf {g} = [p, q]^\top \) also depends on the depth z. The iterative method proposed in [8] to solve this more general problem seems to be quite limited, but an interesting extension to normals provided by photometric stereo is suggested: “one could abandon the widespread assumption that the light sources are distant and the lighting directions thus constant.”

Finally, Chang et al. address in [11] the problem of multiview normal integration, which aims at reconstructing a full 3D-shape in the framework of multiview photometric stereo. The original variational formulation (21) is extended to such normal fields, and the resulting PDE is solved via a level set method, which has to be soundly initialized. The results are convincing, but they are limited to synthetic multiview photometric stereo images. Nevertheless, this approach deserves to be pursued, since it provides complete 3D-models. Moreover, it is noted in [11] that the use of multiview inputs is the most intuitive way to satisfy \(\mathcal {P}_{\text {Disc}}\).

As noted by Agrawal et al. about the range of solutions proposed in [2], and this is true more generally, “the choice of using a particular algorithm for a given application remains an open problem.” We hope that this review will help readers make up their own minds when faced with so many existing approaches.

Finally, even if none of the reviewed methods satisfies all the selected criteria, this work helped us to develop some new normal integration methods. In a companion paper entitled Variational Methods for Normal Integration [48], we particularly focus on the problem of normal integration in the presence of discontinuities, which occurs as soon as there are occlusions.

Notes

The same procedure is used by Klette and Schlüns in [37]: “The reconstructed height values are shifted in the range of the original surface using LSE optimization.”

A MATLAB implementation of the methods presented in this section is available at https://github.com/yqueau/normal_integration.

Whereas the Fourier transform coefficients of f are denoted by \(\hat{f}\), the sine and cosine transform coefficients are denoted by \(\bar{f}\) and \(\bar{\bar{f}}\), respectively.

If the normal field is estimated via photometric stereo, we suppose that the images are corrupted by additive Gaussian noise, as recommended in [44]: “in previous work on photometric stereo, noise is [wrongly] added to the gradient of the height function rather than camera images.”

This problem is also noted by Saracchini et al. in [51]: “Note that taking finite differences of the reference height map will not yield adequate test data.”

Goldman et al. [21] do the same using conjugate gradient.

According to [2], Kovesi uses “a redundant set of non-orthogonal basis functions.”

A preliminary version of this method was already described in [13].

A similar approach will be detailed in [48].

References

Agrawal, A., Chellappa, R., Raskar, R.: An algebraic approach to surface reconstruction from gradient fields. In: Proceedings of the 10th IEEE International Conference on Computer Vision, vol. I, pp. 174–181. Beijing, China (2005)

Agrawal, A., Raskar, R., Chellappa, R.: What is the range of surface reconstructions from a gradient field? In: Proceedings of the 9th European Conference on Computer Vision, vol. I. Lecture Notes in Computer Science, vol. 3951, pp. 578–591. Graz, Austria (2006)

Aubert, G., Aujol, J.F.: Poisson skeleton revisited: a new mathematical perspective. J. Math. Imaging Vis. 48(1), 149–159 (2014)

Badri, H., Yahia, H.: A non-local low-rank approach to enforce integrability. IEEE Trans. Image Process. 25, 3562 (2016)

Badri, H., Yahia, H., Aboutajdine, D.: Robust surface reconstruction via triple sparsity. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Columbus, Ohio, USA (2014)

Bähr, M., Breuß, M.: An improved Eikonal equation for surface normal integration. In: Proceedings of the 37th German Conference on Pattern Recognition. Aachen, Germany (2015)

Bähr, M., Breuß, M., Quéau, Y., Boroujerdi, A.S., Durou, J.D.: Fast and accurate surface normal integration on non-rectangular domains. Comput. Vis. Media 3, 107–129 (2017)

Balzer, J.: Shape from specular reflection in calibrated environments and the integration of spatial normal fields. In: Proceedings of the 18th IEEE International Conference on Image Processing, pp. 21–24. Brussels, Belgium (2011)

Balzer, J.: A Gauss–Newton method for the integration of spatial normal fields in shape space. J. Math. Imaging Vis. 44(1), 65–79 (2012)

Balzer, J., Mörwald, T.: Isogeometric finite-elements methods and variational reconstruction tasks in vision—a perfect match. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Providence, Rhode Island, USA (2012)

Chang, J.Y., Lee, K.M., Lee, S.U.: Multiview normal field integration using level set methods. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Workshop on Beyond Multiview Geometry: Robust Estimation and Organization of Shapes from Multiple Cues. Minneapolis, Minnesota, USA (2007)

Coleman, E.N., Jain, R.: Obtaining 3-dimensional shape of textured and specular surfaces using four-source photometry. Comput. Graph. Image Process. 18(4), 309–328 (1982)

Crouzil, A., Descombes, X., Durou, J.D.: A multiresolution approach for shape from shading coupling deterministic and stochastic optimization. IEEE Trans. Pattern Anal. Mach. Intell. 25(11), 1416–1421 (2003)

Du, Z., Robles-Kelly, A., Lu, F.: Robust surface reconstruction from gradient field using the L1 norm. In: Proceedings of the 9th Biennial Conference of the Australian Pattern Recognition Society on Digital Image Computing Techniques and Applications, pp. 203–209. Glenelg, Australia (2007)

Durou, J.D., Aujol, J.F., Courteille, F.: Integration of a normal field in the presence of discontinuities. In: Proceedings of the 7th International Workshop on Energy Minimization Methods in Computer Vision and Pattern Recognition. Lecture Notes in Computer Science, vol. 5681, pp. 261–273. Bonn, Germany (2009)

Durou, J.D., Courteille, F.: Integration of a normal field without boundary condition. In: Proceedings of the 11th IEEE International Conference on Computer Vision, 1st Workshop on Photometric Analysis for Computer Vision. Rio de Janeiro, Brazil (2007)

Ettl, S., Kaminski, J., Knauer, M.C., Häusler, G.: Shape reconstruction from gradient data. Appl. Opt. 47(12), 2091–2097 (2008)

Fraile, R., Hancock, E.R.: Combinatorial surface integration. In: Proceedings of the 18th International Conference on Pattern Recognition, vol. I, pp. 59–62. Hong Kong (2006)

Frankot, R.T., Chellappa, R.: A method for enforcing integrability in shape from shading algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 10(4), 439–451 (1988)

Galliani, S., Breuß, M., Ju, Y.C.: Fast and robust surface normal integration by a discrete Eikonal equation. In: Proceedings of the 23rd British Machine Vision Conference. Guildford, UK (2012)

Goldman, D.B., Curless, B., Hertzmann, A., Seitz, S.M.: Shape and spatially-varying BRDFs from photometric stereo. In: Proceedings of the 10th IEEE International Conference on Computer Vision, vol. I. Beijing, China (2005)

Grisvard, P.: Elliptic Problems in Nonsmooth Domains, Monographs and Studies in Mathematics. Pitman, London (1985)

Harker, M., O’Leary, P.: Least squares surface reconstruction from measured gradient fields. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Anchorage, Alaska, USA (2008)

Harker, M., O’Leary, P.: Least squares surface reconstruction from gradients: direct algebraic methods with spectral, Tikhonov, and constrained regularization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Colorado Springs, Colorado, USA (2011)

Harker, M., O’Leary, P.: Regularized reconstruction of a surface from its measured gradient field. J. Math. Imaging Vis. 51(1), 46–70 (2015)

Healey, G., Jain, R.: Depth recovery from surface normals. In: Proceedings of the 7th International Conference on Pattern Recognition, pp. 894–896. Montreal, Canada (1984)

Ho, J., Lim, J., Yang, M.H.: Integrating surface normal vectors using fast marching method. In: Proceedings of the 9th European Conference on Computer Vision, vol. III. Lecture Notes in Computer Science, vol. 3953, pp. 239–250. Graz, Austria (2006)

Horn, B.K.P.: Robot Vision. MIT Press, Cambridge (1986)

Horn, B.K.P., Brooks, M.J.: The variational approach to shape from shading. Comput. Vis. Graph. Image Process. 33(2), 174–208 (1986)

Horovitz, I., Kiryati, N.: Bias correction in photometric stereo using control points. Technical report, Department of Electrical Engineering Systems, Tel Aviv University, Israel (2000)

Horovitz, I., Kiryati, N.: Depth from gradient fields and control points: bias correction in photometric stereo. Image Vis. Comput. 22(9), 681–694 (2004)

Ikehata, S., Wipf, D., Matsushita, Y., Aizawa, K.: Photometric stereo using sparse Bayesian regression for general diffuse surfaces. IEEE Trans. Pattern Anal. Mach. Intell. 36(9), 1816–1831 (2014)

Ikeuchi, K.: Constructing a depth map from images. Technical Memo AIM-744, Artificial Intelligence Laboratory, Massachusetts Institute of Technology. Cambridge, Massachusetts, USA (1983)

Karaçali, B., Snyder, W.: Reconstructing discontinuous surfaces from a given gradient field using partial integrability. Comput. Vis. Image Underst. 92(1), 78–111 (2003)

Karaçali, B., Snyder, W.: Noise reduction in surface reconstruction from a given gradient field. Int. J. Comput. Vis. 60(1), 25–44 (2004)

Kimmel, R., Yavneh, I.: An algebraic multigrid approach for image analysis. SIAM J. Sci. Comput. 24(4), 1218–1231 (2003)

Klette, R., Schlüns, K.: Height data from gradient fields. In: Proceedings of the Machine Vision Applications, Architectures, and Systems Integration, Proceedings of the International Society for Optical Engineering, vol. 2908, pp. 204–215. Boston, Massachusetts, USA (1996)

Kovesi, P.: Shapelets correlated with surface normals produce surfaces. In: Proceedings of the 10th IEEE International Conference on Computer Vision, vol. II, pp. 994–1001. Beijing, China (2005)

Lee, D.: A provably convergent algorithm for shape from shading. In: Proceedings of the DARPA Image Understanding Workshop, pp. 489–496. Miami Beach, Florida, USA (1985)

Miranda, C.: Partial Differential Equations of Elliptic Type, 2nd edn. Springer, Berlin (1970)

Ng, H.S., Wu, T.P., Tang, C.K.: Surface-from-gradients with incomplete data for single view modeling. In: Proceedings of the 11th IEEE International Conference on Computer Vision. Rio de Janeiro, Brazil (2007)

Ng, H.S., Wu, T.P., Tang, C.K.: Surface-from-gradients without discrete integrability enforcement: a Gaussian kernel approach. IEEE Trans. Pattern Anal. Mach. Intell. 32(11), 2085–2099 (2010)

Noakes, L., Kozera, R.: The 2-D leap-frog: integrability, noise, and digitization. In: Bertrand, G., Imiya, A., Klette, R. (eds.) Digital and Image Geometry. Lecture Notes in Computer Science, vol. 2243, pp. 352–364. Springer (2001)

Noakes, L., Kozera, R.: Nonlinearities and noise reduction in 3-source photometric stereo. J. Math. Imaging Vis. 18(2), 119–127 (2003)

Noakes, L., Kozera, R., Klette, R.: The lawn-mowing algorithm for noisy gradient vector fields. In: Vision Geometry VIII, Proceedings of the International Society for Optical Engineering, vol. 3811, pp. 305–316. Denver, Colorado, USA (1999)

Pérez, P., Gangnet, M., Blake, A.: Poisson image editing. ACM Trans. Graph. 22(3), 313–318 (2003)

Petrovic, N., Cohen, I., Frey, B.J., Koetter, R., Huang, T.S.: Enforcing integrability for surface reconstruction algorithms using belief propagation in graphical models. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, vol. I, pp. 743–748. Kauai, Hawaii, USA (2001)

Quéau, Y., Durou, J.D., Aujol, J.F.: Variational methods for normal integration (2017). https://arxiv.org/abs/1709.05965/

Reddy, D., Agrawal, A., Chellappa, R.: Enforcing integrability by error correction using l1-minimization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Miami, Florida, USA (2009)

Saracchini, R.F.V., Stolfi, J., Leitão, H.C.G., Atkinson, G.A., Smith, M.L.: Multi-scale depth from slope with weights. In: Proceedings of the 21st British Machine Vision Conference. Aberystwyth, UK (2010)

Saracchini, R.F.V., Stolfi, J., Leitão, H.C.G., Atkinson, G.A., Smith, M.L.: A robust multi-scale integration method to obtain the depth from gradient maps. Comput. Vis. Image Underst. 116(8), 882–895 (2012)

Simchony, T., Chellappa, R., Shao, M.: Direct analytical methods for solving Poisson equations in computer vision problems. IEEE Trans. Pattern Anal. Mach. Intell. 12(5), 435–446 (1990)

Stoer, J., Bulirsch, R.: Introduction to Numerical Analysis. Texts in Applied Mathematics. Springer, Berlin (2002)

Tankus, A., Kiryati, N.: Photometric stereo under perspective projection. In: Proceedings of the 10th IEEE International Conference on Computer Vision, vol. I, pp. 611–616. Beijing, China (2005)

Wang, Y., Bu, J., Li, N., Song, M., Tan, P.: Detecting discontinuities for surface reconstruction. In: Proceedings of the 21st International Conference on Pattern Recognition, pp. 2108–2111. Tsukuba, Japan (2012)