Abstract

We consider a class of linear integral operators with impulse responses varying regularly in time or space. These operators appear in a large number of applications ranging from signal/image processing to biology. Evaluating their action on functions is a computationally intensive task required in many practical problems. We analyze a technique called product-convolution expansion: The operator is locally approximated by a convolution, which makes it possible to design fast numerical algorithms based on the fast Fourier transform. We design various types of expansions and provide their explicit rates of approximation and their complexity depending on the smoothness of the time-varying impulse response. This analysis suggests novel wavelet-based implementations of the method with numerous assets such as optimal approximation rates, low complexity and storage requirements, as well as adaptivity to the regularity of the kernel. The proposed methods are an alternative to more standard procedures such as panel clustering, cross-approximations, wavelet expansions or hierarchical matrices.

1 Introduction

We are interested in the compact representation and fast evaluation of a class of space- or time-varying linear integral operators with regular variations. Such operators appear in a large number of applications ranging from wireless communications [28, 37] to seismic data analysis [23], biology [22] and image processing [38].

In all these applications, a key numerical problem is to efficiently evaluate the action of the operator and its adjoint on given functions. This is necessary, for instance, to design fast solvers for inverse problems. The main objective of this paper is to analyze the complexity of a set of approximation techniques coined product-convolution expansions.

We are interested in bounded linear integral operators \(H:L^2(\varOmega )\rightarrow L^2(\varOmega )\) defined from a kernel K by:

\[Hu(x) = \int _{\varOmega } K(x,y)\,u(y)\,dy \tag{1}\]

for all \(u\in L^2(\varOmega )\), where \(\varOmega = {{\mathbb {R}}}/{{\mathbb {Z}}}\) is the one-dimensional torus. Extensions to bounded and higher-dimensional domains will be mentioned at the end of the paper. Evaluating integrals of type (1) is a major challenge in numerical analysis, and many methods have been developed in the literature. Nearly all methods share the same basic principle: decompose the operator kernel as a sum of low-rank matrices with a multi-scale structure. This is the case in panel clustering methods [24], hierarchical matrices [6], cross-approximations [35] or wavelet expansions [3, 13, 14]. The method proposed in this paper basically shares the same idea, except that the time-varying impulse response T of the operator is decomposed instead of the kernel K. The time-varying impulse response (TVIR) T of H is defined by:

\[T(x,y) = K(x+y,y) \quad \text{for all } (x,y)\in \varOmega \times \varOmega . \tag{2}\]

The TVIR representation of H allows formalizing the notion of regularly varying integral operator: The functions \(T(x,\cdot )\) should be “smooth” for all \(x\in \varOmega \). Intuitively, the smoothness assumption means that two neighboring impulse responses should only differ slightly. Under this assumption, it is tempting to approximate H locally by a convolution. Two different approaches have been proposed in the literature to achieve this. The first one is called convolution-product expansion of order m and consists of approximating H by an operator \(H_m\) of type:

\[H_m u = \sum _{k=1}^m w_k \odot (h_k \star u), \tag{3}\]

where \(h_k\) and \(w_k\) are real-valued functions defined on \(\varOmega \), \(\odot \) denotes the standard multiplication for functions and the Hadamard product for vectors, and \(\star \) denotes the convolution operator. The second one, called product-convolution expansion of order m, is at the core of this paper and consists of using an expansion of type:

\[H_m u = \sum _{k=1}^m h_k \star (w_k \odot u). \tag{4}\]

Function \(w_k\) is usually chosen as a windowing function localized in space, while \(h_k\) is a kernel describing the operator on the support of \(w_k\). These two types of approximations have been used for a long time in the field of imaging (and to a lesser extent mobile communications and biology) and have been progressively refined [1, 17, 21, 22, 27, 28, 33, 34, 40]. In particular, the recent work [17] provides a nice overview of existing choices for the functions \(h_k\) and \(w_k\) as well as new ideas leading to significant improvements. Many different names have been used in the literature to describe expansions of type (3) and (4) depending on the communities: sectional methods, overlap-add and overlap-save methods, piecewise convolutions, anisoplanatic convolutions, parallel product-convolution, filter flow, windowed-convolutions... The term product-convolution comes from the field of mathematics [7] (see Footnote 1). We believe that it precisely describes the set of expansions of type (4) and therefore chose this naming. It was already used in the field of imaging by [1]. Now that product-convolution expansions have been described, natural questions arise:

(i) How to choose the functions \(h_k\) and \(w_k\)?
(ii) What is the numerical complexity of evaluating products of type \(H_m u\)?
(iii) What is the resulting approximation error \(\Vert H_m-H\Vert \), where \(\Vert \cdot \Vert \) is a norm over the space of operators?
(iv) How many operations are needed in order to obtain an approximation \(H_m\) such that \(\Vert H_m-H\Vert \le \epsilon \)?

Elements (i) and (ii) have been studied thoroughly and improved over the years in the mentioned papers. The main questions addressed herein are points (iii) and (iv). To the best of our knowledge, they have been ignored until now. They are however necessary in order to evaluate the theoretical performance of different product-convolution expansions and to compare their respective advantages precisely.

The main outcome of this paper is the following: Under smoothness assumptions of type \(T(x,\cdot ) \in H^s(\varOmega )\) for all \(x\in \varOmega \) (the Hilbert space of functions in \(L^2(\varOmega )\) with s derivatives in \(L^2(\varOmega )\)), most methods proposed in the literature, if implemented correctly, ensure a decay of type \(\Vert H_m-H\Vert _{HS}= O(m^{-s})\), where \(\Vert \cdot \Vert _{HS}\) is the Hilbert–Schmidt norm. Moreover, this bound cannot be improved uniformly on the considered smoothness class. By adding a support condition of type \(\mathrm {supp}(T(\cdot ,y)) \subseteq [-\kappa /2,\kappa /2]\) for all \(y\in \varOmega \), the bound becomes \(\Vert H_m-H\Vert _{HS}= O(\sqrt{\kappa } m^{-s})\). More importantly, bounded supports reduce the computational burden. After discretization on n time points, we show that the number of operations required to satisfy \(\Vert H_m-H\Vert _{HS}\le \epsilon \) varies from \(O\left( \kappa ^{\frac{1}{2s}}n\log _2(n)\epsilon ^{-1/s}\right) \) to \(O\left( \kappa ^{\frac{2s+1}{2s}} n\log _2(\kappa n)\epsilon ^{-1/s}\right) \) depending on the choices of \(w_k\) and \(h_k\). We also show that the compressed operator representations of Meyer [32] can be used under additional regularity assumptions.

An important difference of product-convolution expansions compared to most methods in the literature [3, 6, 20, 24, 35] is that they are insensitive to the smoothness of \(T(\cdot ,y)\). The smoothness in the x direction is a useful property to control the discretization error, but not the approximation rate. The proposed methodology might therefore be particularly competitive in applications with irregular impulse responses.

The paper is organized as follows. In Sect. 2, we describe the notation and introduce a few standard results of approximation theory. In Sect. 3, we precisely describe the class of operators studied in this paper, show how to discretize them and provide the numerical complexity of evaluating product-convolution expansions of type (4). Sections 4 and 5 contain the full approximation analysis for two different kinds of approaches called linear or adaptive methods. Section 6 contains a summary and a few additional comments.

2 Notation

Let a and b denote functions depending on some parameters. The relationship \(a\asymp b\) means that a and b are equivalent, i.e., that there exist constants \(0<c_1\le c_2\) such that \(c_1 a \le b \le c_2 a\). Constants appearing in inequalities will be denoted by C and may vary at each occurrence. If a constant depends on a parameter (e.g., \(\epsilon \)), we will use the notation \(C(\epsilon )\).

In most of the paper, we work on the unit circle \(\varOmega = {{\mathbb {R}}}/{{\mathbb {Z}}}\), sometimes identified with the interval \(\left[ -\frac{1}{2},\frac{1}{2}\right] \). This choice is driven by simplicity of exposition, and the results can be extended to bounded domains such as \(\varOmega =[0,1]^d\) (see Sect. 6.2). Let \(L^2(\varOmega )\) denote the space of square integrable functions on \(\varOmega \). The Sobolev space \(H^s(\varOmega )\) is defined as the set of functions in \(L^2(\varOmega )\) with weak derivatives up to order s in \(L^2(\varOmega )\). The k-th weak derivative of \(u\in H^s(\varOmega )\) is denoted \(u^{(k)}\). The norm and semi-norm of \(u\in H^s(\varOmega )\) are defined by:

The sequence of functions \((e_k)_{k \in {{\mathbb {Z}}}}\) where \(e_k:x \mapsto \exp (-2i\pi k x)\) is an orthonormal basis of \(L^2(\varOmega )\) (see, e.g., [29]).

Definition 1

Let \(u\in L^2(\varOmega )\) and \(e_k:x \mapsto \exp (-2i\pi k x)\) denote the k-th Fourier atom. The Fourier series coefficients \({\hat{u}}[k]\) of u are defined for all \(k\in {{\mathbb {Z}}}\) by:

The space \(H^s(\varOmega )\) can be characterized through Fourier series.

Lemma 1

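(Fourier characterization of Sobolev norms) For all \(u\in H^s(\varOmega )\),

\[\Vert u\Vert _{H^s(\varOmega )}^2 \asymp \sum _{k\in {{\mathbb {Z}}}} (1+|k|^2)^s\, |{\hat{u}}[k]|^2.\]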

Definition 2

(B-spline of order \(\alpha \)) Let \(\alpha \in {{\mathbb {N}}}\) and \(m\ge \alpha +2\) be two integers. The B-spline of order 0 is defined by

The B-spline of order \(\alpha \in {{\mathbb {N}}}^*\) is defined by recurrence by:

The set of cardinal B-splines of order \(\alpha \) is denoted \({\mathcal {B}}_{\alpha ,m}\) and defined by:

In this work, we use the Daubechies wavelet basis for \(L^2({{\mathbb {R}}})\) [15]. We let \(\phi \) and \(\psi \) denote the scaling and mother wavelets and assume that the mother wavelet \(\psi \) has \(\alpha \) vanishing moments, i.e.,

\[\int _{{{\mathbb {R}}}} t^k \psi (t)\,dt = 0 \quad \text{for all } 0\le k<\alpha .\]

Daubechies wavelets satisfy \(\mathop {\mathrm {supp}}(\psi )=[-\alpha +1,\alpha ]\), see [31, Theorem 7.9, p. 294]. Translated and dilated versions of the wavelets are defined, for all \(j > 0\) by

The set of functions \((\psi _{j,l})_{j\in {{\mathbb {N}}}, l\in {{\mathbb {Z}}}}\) is an orthonormal basis of \(L^2({{\mathbb {R}}})\) with the convention \(\psi _{0,l}=\phi (x-l)\). There are different ways to construct a wavelet basis on the interval \([-1/2,1/2]\) from a wavelet basis of \(L^2({{\mathbb {R}}})\). Here, we use boundary wavelets defined in [12]. We refer to [16, 31] for more details on the construction of wavelet bases. This yields an orthonormal basis \((\psi _\lambda )_{\lambda \in \varLambda }\) of \(L^2(\varOmega )\), where

We let \(I_\lambda = \mathop {\mathrm {supp}}(\psi _\lambda )\) and for \(\lambda \in \varLambda \), we use the notation \(|\lambda |=j\).

Let u and v be two functions in \(L^2(\varOmega )\). The notation \(u\otimes v\) will be used to denote either the function \(w\in L^2(\varOmega \times \varOmega )\) defined by

\[w(x,y) = u(x)\,v(y)\]

or the Hilbert–Schmidt operator \(w:L^2(\varOmega )\rightarrow L^2(\varOmega )\) defined for all \(f\in L^2(\varOmega )\) by:

The meaning can be inferred from the context. Let \(H:L^2(\varOmega )\rightarrow L^2(\varOmega )\) denote a linear integral operator. Its kernel will always be denoted K and its time-varying impulse response T. The linear integral operator with kernel T will be denoted J.

The following result is an extension of the singular value decomposition to operators.

Lemma 2

(Schmidt decomposition [36, Theorem 2.2] or [26, Theorem 1 p. 215]) Let \(H:L^2(\varOmega ) \rightarrow L^2(\varOmega )\) denote a compact operator. There exist two finite or countable orthonormal systems \(\{e_1, \ldots \}\), \(\{f_1, \ldots \}\) of \(L^2(\varOmega )\) and a finite or infinite sequence \(\sigma _1 \ge \sigma _2 \ge \ldots \) of positive numbers (tending to zero if it is infinite), such that H can be decomposed as:

A function \(u\in L^2(\varOmega )\) is denoted in regular font, whereas its discretized version \(\varvec{u}\in {{\mathbb {R}}}^n\) is denoted in bold font. The value of function u at \(x\in \varOmega \) is denoted u(x), while the i-th coefficient of vector \(\varvec{u}\in {{\mathbb {R}}}^n\) is denoted \(\varvec{u}[i]\). Similarly, an operator \(H : L^2(\varOmega ) \rightarrow L^2(\varOmega )\) is denoted in upper-case regular font, whereas its discretized version \(\varvec{H} \in {{\mathbb {R}}}^{n \times n}\) is denoted in upper-case bold font.

3 Preliminary Facts

In this section, we gather a few basic results necessary to derive approximation results.

3.1 Assumptions on the Operator and Examples

All the results stated in this paper rely on the assumption that the TVIR T of H is a sufficiently simple function. By simple, we mean that i) the functions \(T(x,\cdot )\) are smooth for all \(x\in \varOmega \), and ii) the impulse responses \(T(\cdot ,y)\) have a bounded support or a fast decay for all \(y\in \varOmega \).

There are numerous ways to capture the regularity of a function. In this paper, we assume that \(T(x,\cdot )\) lives in the Hilbert spaces \(H^s(\varOmega )\) for all \(x\in \varOmega \). This hypothesis is deliberately simple to clarify the proofs and the main ideas.

Definition 3

(Class \({\mathcal {T}}^s\)) We let \({\mathcal {T}}^s\) denote the class of functions \(T : \varOmega \times \varOmega \rightarrow {{\mathbb {R}}}\) satisfying the smoothness condition: \(T(x,\cdot ) \in H^s(\varOmega ), \ \forall x \in \varOmega \) and \(\Vert T(x,\cdot )\Vert _{H^s(\varOmega )}\) is uniformly bounded in x, i.e.,

\[\sup _{x\in \varOmega } \Vert T(x,\cdot )\Vert _{H^s(\varOmega )} < +\infty .\]

Note that if \(T\in {\mathcal {T}}^s\), then H is a Hilbert–Schmidt operator since:

We will often use the following regularity assumption.

Assumption 1

The TVIR T of H belongs to \({\mathcal {T}}^s\).

In many applications, the impulse responses have a bounded support, or at least a spatial decay fast enough that the tails can be neglected. This property will be exploited to design faster algorithms. This hypothesis can be expressed by the following assumption.

Assumption 2

\(T(x,y)=0, \forall |x|>\kappa /2\).

3.2 Examples

We provide three examples of kernels that may appear in applications. Figure 1 shows each kernel as a 2D image, the associated TVIR and the spectrum of the operator J (the linear integral operator with kernel T) computed with an SVD.

Example 1

A typical kernel that motivates our study is defined by:

The impulse responses \(K(\cdot ,y)\) are Gaussian for all \(y\in \varOmega \). Their variance \(\sigma (y)>0\) varies depending on the position y. The TVIR of K is defined by:

The impulse responses \(T(\cdot ,y)\) are not compactly supported, therefore, \(\kappa =1\) in Assumption 2. However, it is possible to truncate them by setting \(\kappa =3\sup _{y\in \varOmega } \sigma (y)\) for instance. This kernel satisfies Assumption 1 only if \(\sigma :\varOmega \rightarrow {{\mathbb {R}}}\) is sufficiently smooth. In Fig. 1, left column, we set \(\sigma (y) = 0.08+ 0.02\cos (2\pi y)\).
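To make Example 1 concrete, the following NumPy sketch samples a Gaussian TVIR on a regular grid and computes the singular values of the resulting matrix. It is only an illustration under stated assumptions: the Gaussian is taken to be normalized with width \(\sigma (y)\), periodic wrap-around of the tails is ignored, and the function name `gaussian_tvir` is hypothetical.

```python
import numpy as np

def gaussian_tvir(n, sigma):
    """Sample T[i, j] ~ T(x_i, y_j) on an n x n grid of [-1/2, 1/2)^2.

    Assumed form (an illustration, not the paper's exact formula):
    a normalized Gaussian in x whose width sigma(y) depends on y.
    Periodic wrap-around of the Gaussian tails is ignored.
    """
    x = (np.arange(n) - n // 2) / n      # offsets x_i
    y = (np.arange(n) - n // 2) / n      # positions y_j
    s = sigma(y)[None, :]                # width of each impulse response
    return np.exp(-x[:, None] ** 2 / (2 * s ** 2)) / (np.sqrt(2 * np.pi) * s)

def sigma_example(y):
    """Width function used for the left column of Fig. 1."""
    return 0.08 + 0.02 * np.cos(2 * np.pi * y)

T = gaussian_tvir(256, sigma_example)
# Singular values of the sampled TVIR, sorted in decreasing order.
singular_values = np.linalg.svd(T, compute_uv=False)
```

Because this TVIR is smooth in both variables, the singular values drop quickly, which is the behaviour that low-rank expansions of T exploit.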

Example 2

The second example is given by:

The impulse responses \(T(\cdot ,y)\) are cardinal B-splines of degree 1 and width \(\sigma (y)>0\). They are compactly supported with \(\kappa =\sup _{y\in \varOmega } \sigma (y)\). This kernel satisfies Assumption 1 only if \(\sigma :\varOmega \rightarrow {{\mathbb {R}}}\) is sufficiently smooth. In Fig. 1, central column, we set \(\sigma (y) = 0.1 + 0.3 (1-|y|)\). With this choice, the kernel satisfies Assumption 1 with \(s=1\).

Fig. 1 Different kernels K, the associated TVIR T and the spectrum of the operator J. The left, central and right columns correspond to Examples 1, 2 and 3, respectively. (a) Kernel 1, (b) kernel 2, (c) kernel 3, (d) TVIR 1, (e) TVIR 2, (f) TVIR 3, (g) spectrum 1, (h) spectrum 2, (i) spectrum 3

Example 3

The last example is a discontinuous TVIR. We set:

where \(g_{\sigma }(x) =\frac{1}{\sqrt{2\pi }} \exp \left( -\frac{x^2}{\sigma ^2}\right) \). This corresponds to the last column in Fig. 1, with \(\sigma _1=0.05\) and \(\sigma _2=0.1\). For this kernel, both Assumptions 1 and 2 are violated. Notice, however, that T is the sum of two tensor products and can therefore be represented using only four 1D functions. The spectrum of J should have only 2 non zero elements. This is verified in Fig. 1i, where the spectrum is 0 (up to numerical errors of order \(10^{-13}\)), except for the first two elements.

3.3 Product-Convolution Expansions as Low-Rank Approximations

Though similar in spirit, convolution-product (3) and product-convolution (4) expansions have a quite different interpretation captured by the following lemma.

Lemma 3

The TVIR \(T_m\) of the convolution-product expansion in (3) is given by:

\[T_m(x,y) = \sum _{k=1}^m h_k(x)\, w_k(x+y). \tag{25}\]

The TVIR \(T_m\) of the product-convolution expansion in (4) is given by:

\[T_m(x,y) = \sum _{k=1}^m h_k(x)\, w_k(y). \tag{26}\]

Proof

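We only prove (26) since the proof of (25) relies on the same arguments. By definition:

\[(H_m u)(x) = \sum _{k=1}^m \big (h_k\star (w_k\odot u)\big )(x) = \int _{\varOmega } \sum _{k=1}^m h_k(x-y)\, w_k(y)\, u(y)\,dy.\]

By identification, the kernel \(K_m\) of \(H_m\) is:

\[K_m(x,y) = \sum _{k=1}^m h_k(x-y)\, w_k(y),\]

so that

\[T_m(x,y) = K_m(x+y,y) = \sum _{k=1}^m h_k(x)\, w_k(y).\]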

\(\square \)

As can be seen in (26), product-convolution expansions consist of finding low-rank approximations of the TVIR. This interpretation was already proposed in [17] for instance and is the key observation to derive the forthcoming results. The expansion (25) does not share this simple interpretation, and we do not investigate it further in this paper.
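The low-rank interpretation can be checked numerically in a discrete, circular setting. The sketch below is an illustration with hypothetical function names: it builds the dense matrix of a product-convolution operator, maps it to a discrete TVIR with the convention \(T[i,j] = K[(i+j) \bmod n, j]\) (an assumed discrete analogue of the change of variables between K and T), and compares the result with the sum of outer products of the \(h_k\) and \(w_k\).

```python
import numpy as np

def product_convolution_matrix(h, w):
    """Dense matrix of u -> sum_k h_k * (w_k . u) with circular convolution.

    h, w: arrays of shape (m, n).
    Entry K[i, j] = sum_k h[k, (i - j) % n] * w[k, j].
    """
    m, n = h.shape
    i = np.arange(n)[:, None]
    j = np.arange(n)[None, :]
    K = np.zeros((n, n))
    for k in range(m):
        K += h[k][(i - j) % n] * w[k][j]
    return K

def kernel_to_tvir(K):
    """Discrete TVIR T[i, j] = K[(i + j) % n, j]."""
    n = K.shape[0]
    i = np.arange(n)[:, None]
    j = np.arange(n)[None, :]
    return K[(i + j) % n, j]

rng = np.random.default_rng(0)
h = rng.standard_normal((3, 16))
w = rng.standard_normal((3, 16))
T = kernel_to_tvir(product_convolution_matrix(h, w))
# T coincides with sum_k outer(h_k, w_k), a matrix of rank at most m = 3.
low_rank = h.T @ w
```

The kernel matrix K itself is generally of full rank; only after the change of variables does the rank-m structure appear.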

3.4 Discretization

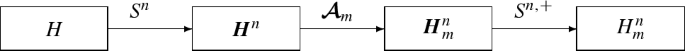

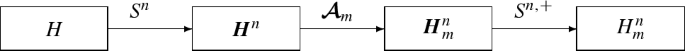

In order to implement a product-convolution expansion of type (4), the problem first needs to be discretized. We address this problem with a Galerkin formalism. Let \((\varphi _1,\ldots , \varphi _n)\) be a basis of a finite dimensional subspace \(V^n\) of \(L^2(\varOmega )\). Given an operator \(H:L^2(\varOmega )\rightarrow L^2(\varOmega )\), we can construct a matrix \(\varvec{H}^n\in {{\mathbb {R}}}^{n\times n}\) defined for all \(1\le i,j\le n\) by \(\varvec{H}^n[i,j] = \langle H\varphi _j, \varphi _i \rangle .\) Let \(S^n:H\mapsto \varvec{H}^n\) denote the discretization operator. From a matrix \(\varvec{H}^n\), an operator \(H^n\) can be reconstructed using, for instance, the pseudo-inverse \(S^{n,+}\) of \(S^n\). We let \(H^n=S^{n,+}(\varvec{H}^n)\). For instance, if \((\varphi _1,\ldots , \varphi _n)\) is an orthonormal basis of \(V^n\), the operator \(H^n\) is given by:

This paper is dedicated to analyzing methods denoted \({\mathcal {A}}_m\) that provide an approximation \(H_m={\mathcal {A}}_m(H)\) of type (4), given an input operator H. Our analysis provides guarantees on the distance \(\Vert H-H_m\Vert _{HS}\) depending on m and the regularity properties of the input operator H, for different methods. Depending on the context, two different approaches can be used to implement \({\mathcal {A}}_m\).

- Compute the matrix \(\varvec{H}_m^n = S^n(H_m)\) using numerical integration procedures. Then, create an operator \(H_m^n=S^{n,+}(\varvec{H}_m^n)\). This approach suffers from two defects. First, it is only possible by assuming that the kernel of H is given analytically. Moreover, it might be computationally intractable. It is illustrated below.

- In many applications, the operator H is not given explicitly. Instead, we only have access to its discretization \(\varvec{H}^n\). Then, it is possible to construct a discrete approximation algorithm \(\varvec{{\mathcal {A}}}_m\) yielding a discrete approximation \(\varvec{H}_m^n = \varvec{{\mathcal {A}}}_m(\varvec{H}^n)\). This matrix can then be mapped back to the continuous world using the pseudo-inverse: \(H_m^n=S^{n,+}(\varvec{H}_m^n)\). This is illustrated below. In this paper, we will analyze the construction complexity of \(\varvec{H}_m^n\) using this second approach.

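Ideally, we would like to provide guarantees on \(\Vert H-H_m^n\Vert _{HS}\) depending on m and n. In the first approach, this is possible by using the following inequality:

\[\Vert H-H_m^n\Vert _{HS} \le \underbrace{\Vert H-H_m\Vert _{HS}}_{\epsilon _a(m)} + \underbrace{\Vert H_m-H_m^n\Vert _{HS}}_{\epsilon _d(n)},\]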

where \(\epsilon _a(m)\) is the approximation error studied in this paper and \(\epsilon _d(n)\) is the discretization error. Under mild regularity assumptions on K, it is possible to obtain results of type \(\epsilon _d(n) = O(n^{-\gamma })\), where \(\gamma \) depends on the smoothness of K. For instance, if \(K\in H^{r}(\varOmega \times \varOmega )\), the error satisfies \(\epsilon _d(n) = O(n^{-r/2})\) for many bases including Fourier, wavelets and B-splines [10]. For \(K\in BV(\varOmega \times \varOmega )\), the space of functions with bounded variations, \(\epsilon _d(n) = O(n^{-1/4})\), see [31, Theorem 9.3]. As will be seen later, the approximation error \(\epsilon _a(m)\) behaves like \(O(m^{-s})\). Moreover, the proposed approximation technique is of interest only in the case \(m\ll n\), since otherwise it requires storing too much data. Under this assumption, the discretization error can be considered negligible compared to the approximation error. Throughout the paper, we assume without further mention that \(\epsilon _d(n)\) is negligible compared to \(\epsilon _a(m)\).

In the second approach, the error analysis is more complex since there is an additional bias due to the algorithm discretization. This bias is captured by the following inequality:

The bias

accounts for the difference between using the discrete or continuous approximation algorithm. In this paper, we do not study this bias error and assume that it is negligible compared to the approximation error \(\epsilon _a\).

3.5 Implementation and Complexity

Let \(\varvec{F}_n\in {\mathbb {C}}^{n\times n}\) denote the discrete inverse Fourier transform and \(\varvec{F}_n^*\) denote the discrete Fourier transform. Matrix-vector products \(\varvec{F}_n\varvec{u}\) or \(\varvec{F}_n^*\varvec{u}\) can be evaluated in \(O(n\log _2(n))\) operations using the fast Fourier transform (FFT). The discrete convolution-product \(\varvec{v}=\varvec{h}\star \varvec{u}\) is defined for all \(i\in {{\mathbb {Z}}}\) by \(\varvec{v}[i] = \sum _{j=1}^n \varvec{u}[i-j]\varvec{h}[j]\), with circular boundary conditions.

Discrete convolution-products can be evaluated in \(O(n\log _2(n))\) operations by using the following fundamental identity:

Hence, a convolution can be implemented using three FFTs (\(O(n\log _2(n))\) operations) and a point-wise multiplication (O(n) operations). This being said, it is straightforward to implement formula (4) with an \(O(mn\log _2(n))\) algorithm.
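A minimal NumPy sketch of this \(O(mn\log _2(n))\) evaluation is given below. It assumes real-valued vectors and circular boundary conditions; the function names are hypothetical. As a small variation on the three-FFTs-per-convolution count, the m products are summed in the Fourier domain so that a single inverse FFT is needed.

```python
import numpy as np

def circular_convolve(h, u):
    """Circular convolution v[i] = sum_j u[i - j] h[j], computed with FFTs."""
    return np.real(np.fft.ifft(np.fft.fft(h) * np.fft.fft(u)))

def product_convolution_apply(h, w, u):
    """Evaluate sum_k h_k * (w_k . u) for real h, w of shape (m, n) and u of shape (n,)."""
    windowed = w * u[None, :]                  # w_k . u for every k
    U = np.fft.fft(windowed, axis=1)           # m forward FFTs
    H = np.fft.fft(h, axis=1)                  # m forward FFTs (can be precomputed)
    # Summing in the Fourier domain leaves a single inverse FFT.
    return np.real(np.fft.ifft((H * U).sum(axis=0)))
```

When the same operator is applied to many vectors, the transforms of the \(\varvec{h}_k\) can be computed once and stored, which roughly halves the number of forward FFTs per product.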

Under the additional assumption that \(w_k\) and \(h_k\) are supported on bounded intervals, the complexity can be improved. We assume that, after discretization, \(\varvec{h}_k\) and \(\varvec{w}_k\) are compactly supported, with support length \(q_k\le n\) and \(p_k\le n\), respectively.

Lemma 4

A matrix-vector product of type (4) can be implemented with a complexity that does not exceed

\[O\left( \sum _{k=1}^m (p_k+q_k)\log _2\left( \min (p_k,q_k)\right) \right) \]

operations.

Proof

A convolution-product of type \(\varvec{h}_k\star (\varvec{w}_k\odot \varvec{u})\) can be evaluated in \(O((p_k+q_k) \log (p_k+q_k))\) operations. Indeed, the support of \(\varvec{h}_k\star (\varvec{w}_k\odot \varvec{u})\) has no more than \(p_k+q_k\) contiguous nonzero elements. Using the Stockham sectioning algorithm [39], the complexity can be further decreased to \(O((p_k+q_k) \log _2(\min (p_k,q_k)))\) operations. This idea was proposed in [27]. \(\square \)
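The first step of this proof can be illustrated with a short NumPy sketch that performs one term of the expansion on a window of length about \(p+q\) instead of n. It is a simplified illustration under stated assumptions: the filter is supported on the first q indices, the window on p consecutive indices starting at `start` (modulo n), and \(p+q-1\le n\). It does not implement the Stockham sectioning refinement, and the function name is hypothetical.

```python
import numpy as np

def local_product_convolution(h_short, w_short, start, u):
    """Compute h * (w . u) on the torus when h and w have short supports.

    Assumptions (for illustration only): h is supported on indices 0..q-1
    (h_short has length q), w on indices start..start+p-1 modulo n
    (w_short has length p), and p + q - 1 <= n.
    """
    n = u.size
    p, q = w_short.size, h_short.size
    idx = (start + np.arange(p)) % n
    segment = w_short * u[idx]                   # windowed signal, length p
    length = p + q - 1                           # length of the linear convolution
    size = 1 << (length - 1).bit_length()        # next power of two >= length
    spectrum = np.fft.rfft(segment, size) * np.fft.rfft(h_short, size)
    conv = np.fft.irfft(spectrum, size)[:length]
    v = np.zeros(n)
    v[(start + np.arange(length)) % n] = conv    # place the result back on the torus
    return v
```

The FFTs have size proportional to \(p+q\), so one term costs \(O((p+q)\log (p+q))\) operations regardless of n, apart from the allocation of the output vector.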

4 Projections on Linear Subspaces

We now turn to the problem of choosing the functions \(h_k\) and \(w_k\) in Eq. (4). The idea studied in this section is to fix a subspace \(E_m=\mathrm {span}(e_k, k\in \{1,\ldots , m\})\) of \(L^2(\varOmega )\) and to approximate \(T(x,\cdot )\) as:

\[T_m(x,\cdot ) = \sum _{k=1}^m c_k(x)\, e_k. \tag{36}\]

For instance, the coefficients \(c_k\) can be chosen so that \(T_m(x,\cdot )\) is a projection of \(T(x,\cdot )\) onto \(E_m\). We propose to analyze three different families of functions \(e_k\): Fourier atoms, wavelet atoms and B-splines. We analyze their complexity and approximation properties as well as their respective advantages.

Fig. 2 Kohn–Nirenberg symbols of the kernels given in Examples 1, 2 and 3 in \(\log _{10}\) scale. Observe how the decay speed from the center (low frequencies) to the outer parts (high frequencies) changes depending on the TVIR smoothness. Note that the lowest values of the Kohn–Nirenberg symbol have been set to \(10^{-4}\) for visualization purposes. (a) Kernel 1, (b) kernel 2 and (c) kernel 3

4.1 Fourier Decompositions

It is well known that functions in \(H^s(\varOmega )\) can be well approximated by linear combinations of low-frequency Fourier atoms. This loose statement is captured by the following lemma.

Lemma 5

([18, 19]) Let \(f\in H^s(\varOmega )\) and \(f_m\) denote its partial Fourier series:

where \(e_k(y) =\exp (-2 i \pi k y)\). Then

The so-called Kohn–Nirenberg symbol N of H is defined for all \((x,k)\in \varOmega \times {{\mathbb {Z}}}\) by

Illustrations of different Kohn–Nirenberg symbols are provided in Fig. 2.

Corollary 1

Set \(e_k(y) =\exp (-2 i \pi k y)\) and define \(T_m\) by:

Then, under Assumptions 1 and 2,

\[\Vert H_m-H\Vert _{HS} \le C\sqrt{\kappa }\, m^{-s}. \tag{41}\]

Proof

for some constant C and for all \(x \in \varOmega \). In addition, by Assumption 2, \(\Vert T_m(x,\cdot )-T(x,\cdot ) \Vert _{L^2(\varOmega )}=0\) for \(|x|>\kappa /2\). Therefore:

\(\square \)
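The chain of inequalities behind this proof can be sketched as follows. The sketch assumes that the Hilbert–Schmidt norm of an operator equals the \(L^2(\varOmega \times \varOmega )\) norm of its TVIR, and that the constant C absorbs the uniform bound on \(\Vert T(x,\cdot )\Vert _{H^s(\varOmega )}\) provided by Assumption 1.

```latex
\begin{align*}
\Vert T_m(x,\cdot)-T(x,\cdot)\Vert_{L^2(\varOmega)}
  &\le C\, m^{-s}
  && \text{(Lemma 5 and Assumption 1)},\\
\Vert H_m-H\Vert_{HS}^2
  &= \int_{\varOmega} \Vert T_m(x,\cdot)-T(x,\cdot)\Vert_{L^2(\varOmega)}^2 \, dx\\
  &= \int_{-\kappa/2}^{\kappa/2} \Vert T_m(x,\cdot)-T(x,\cdot)\Vert_{L^2(\varOmega)}^2 \, dx
  && \text{(Assumption 2)}\\
  &\le \kappa\, C^2\, m^{-2s}.
\end{align*}
```

Taking square roots gives a bound of order \(\sqrt{\kappa }\, m^{-s}\), which is where the factor \(\sqrt{\kappa }\) in the rate comes from.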

As will be seen later, the convergence rate (41) is optimal in the sense that no product-convolution expansion of order m can achieve a better rate under the sole Assumptions 1 and 2.

Corollary 2

Let \(\epsilon >0\) and set \(m = \lceil C \epsilon ^{-1/s} \kappa ^{1/2s}\rceil \). Under Assumptions 1 and 2, \(H_m\) satisfies \(\Vert H - H_m \Vert _{HS} \le \epsilon \) and products with \(H_m\) and \(H_m^*\) can be evaluated with no more than \(O(\kappa ^{1/2s} n \log n\epsilon ^{-1/s} )\) operations.

Proof

Since Fourier atoms are not localized in the time domain, the modulation functions \(\varvec{w}_k\) are supported on intervals of size \(p = n\). The complexity of computing a matrix vector product is therefore \(O( m n \log (n) )\) operations by Lemma 4. \(\square \)

Finally, let us mention that computing the discrete Kohn–Nirenberg symbol \(\varvec{N}\) costs \(O(\kappa n^2\log _2(n))\) operations (\(\kappa n\) discrete Fourier transforms of size n). The storage cost of this Fourier representation is \(O(m\kappa n)\) since one has to store \(\kappa n\) coefficients for each of the m vectors \(\varvec{h}_k\).
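The Fourier construction can be sketched in a discrete setting as follows. This is an illustration with hypothetical function names, assuming the TVIR is stored as a matrix whose rows are indexed by x and whose columns are indexed by y; the sign convention of the atoms follows NumPy's FFT rather than the definition of \(e_k\) above, which does not matter when a symmetric set of frequencies is kept.

```python
import numpy as np

def fourier_expansion(T, m):
    """Rank-m Fourier expansion of a discrete TVIR T[i, j] ~ T(x_i, y_j).

    Keeps the m lowest frequencies of each row T[i, :] (the y direction).
    Returns (h, w) such that T_m = h.T @ w, with h of shape (m, n_x)
    and w of shape (m, n_y).
    """
    n = T.shape[1]
    freqs = np.fft.fftfreq(n, d=1.0 / n).round().astype(int)   # integer frequencies
    keep = np.argsort(np.abs(freqs), kind="stable")[:m]         # m lowest |k|
    symbol = np.fft.fft(T, axis=1) / n                          # discrete symbol N[i, k]
    h = symbol[:, keep].T                                       # h_k(x) = N(x, k)
    w = np.exp(2j * np.pi * np.outer(freqs[keep], np.arange(n)) / n)
    return h, w

def approximation_error(T, m):
    """Frobenius norm of T - T_m, a discrete stand-in for the HS error."""
    h, w = fourier_expansion(T, m)
    return np.linalg.norm(T - h.T @ w)
```

For a real TVIR and odd m, the retained frequencies come in conjugate pairs and \(T_m\) is real up to rounding; for even m, one unpaired frequency makes \(T_m\) complex in general.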

In the next two sections, we show that replacing Fourier atoms by wavelet atoms or B-splines preserves the optimal rate of convergence in \(O(\sqrt{\kappa } m^{-s})\), but has the additional advantage of being localized in space, thereby reducing complexity.

4.2 Spline Decompositions

B-Splines form a Riesz basis with dual Riesz basis of form [8]:

The projection \(f_m\) of any \(f\in L^2(\varOmega )\) onto \({\mathcal {B}}_{\alpha ,m}\) can be expressed as:

Theorem 1

( [4, p. 87] or [19, p. 420]) Let \(f \in H^s(\varOmega )\) and \(\alpha \ge s\), then

The following result directly follows.

Corollary 3

Set \(\alpha \ge s\). For each \(x\in \varOmega \), let \((c_k(x))_{0\le k\le m-1}\) be defined as

Define \(T_m\) by:

If \(\alpha \ge s\), then, under Assumptions 1 and 2,

Proof

The proof is similar to that of Corollary 1. \(\square \)

Corollary 4

Let \(\epsilon >0\) and set \(m = \lceil C \epsilon ^{-1/s} \kappa ^{1/2s} \rceil \). Under Assumptions 1 and 2, \(H_m\) satisfies \(\Vert H - H_m \Vert _{HS} \le \epsilon \) and products with \(H_m\) and \(H_m^*\) can be evaluated with no more than

operations. For small \(\epsilon \) and large n, the complexity behaves like

Proof

In this approximation, m B-splines are used to cover \(\varOmega \). B-splines have a compact support of size \((\alpha +1)/m\). This property leads to windowing vectors \(\varvec{w}_k\) with support of size \(p = \lceil (\alpha +1)\frac{n}{m}\rceil \). Furthermore, the vectors \((\varvec{h}_k)\) have a support of size \(q = \kappa n\). Combining these two results with Lemma 4 and Corollary 3 yields the result for the choice \(\alpha = s\). \(\square \)

The complexity of computing the vectors \(\varvec{c}_k\) is \(O(\kappa n^2\log (n))\) (\(\kappa n\) projections with complexity \(n\log (n)\), see, e.g., [41]).

As shown in Corollary 4, B-spline approximations are preferable to Fourier decompositions whenever the support size \(\kappa \) is small.

4.3 Wavelet Decompositions

Lemma 6

([31, Theorem 9.5]) Let \(f\in H^s(\varOmega )\) and \(f_m\) denote its partial wavelet series:

where \(\psi \) is a Daubechies wavelet with \(\alpha > s\) vanishing moments and \(c_\mu =\langle \psi _{\mu },f\rangle \). Then

A direct consequence is the following corollary.

Corollary 5

Let \(\psi \) be a Daubechies wavelet with \(\alpha = s+1\) vanishing moments. Define \(T_m\) by:

where \(c_\mu (x) = \langle \psi _{\mu },T(x,\cdot ) \rangle \). Then, under Assumptions 1 and 2

Proof

The proof is identical to that of Corollary 1. \(\square \)

Proposition 1

Let \(\epsilon >0\) and set \(m = \lceil C \epsilon ^{-1/s} \kappa ^{1/2s} \rceil \). Under Assumptions 1 and 2, \(H_m\) satisfies \(\Vert H - H_m \Vert _{HS} \le \epsilon \) and products with \(H_m\) and \(H_m^*\) can be evaluated with no more than

operations. For small \(\epsilon \), the complexity behaves like

Proof

In (56), the windowing vectors \(\varvec{w}_k\) are wavelets \(\varvec{\psi }_\mu \) of support of size \(\min ( (2s+1) n 2^{-|\mu |}, n )\). Therefore, each convolution has to be performed on intervals of size \(|\varvec{\psi }_\mu | + q + 1\). Since there are \(2^{j}\) wavelets at scale j, the total number of operations is:

\(\square \)

Fig. 3 “Wavelet symbols” of the operators given in Examples 1, 2 and 3 in \(\log _{10}\) scale. The red bars indicate separations between scales. Notice that the wavelet coefficients in kernel 1 rapidly decay as scales increase. The decay is slower for kernels 2 and 3, which are less regular. The adaptivity of wavelets can be visualized in kernel 3: Some wavelet coefficients are nonzero at large scales, but they are all concentrated around discontinuities. Therefore, only a small number of pairs \((c_\mu ,\psi _\mu )\) will be necessary to encode the discontinuities. This was not the case with Fourier or B-spline atoms. (a) Kernel 1, (b) kernel 2 and (c) kernel 3 (Color figure online)

Computing the vectors \(\varvec{c}_\mu \) costs \(O(\kappa s n^2)\) operations (\(\kappa n\) discrete wavelet transforms of size n). The storage cost of this wavelet representation is \(O(m\kappa n)\) since one has to store \(\kappa n\) coefficients for each of the m functions \(\varvec{h}_k\).

As can be seen from this analysis, wavelet and B-spline approximations roughly have the same complexity over the class \({\mathcal {T}}^s\). The main advantage of wavelets compared to B-splines with fixed knots is that they are known to characterize much more general function spaces than \(H^s(\varOmega )\). For instance, if all functions \(T(x,\cdot )\) have a single discontinuity at a given \(y\in \varOmega \), only a few coefficients \(c_\mu (x)\) will remain of large amplitude. Wavelets will be able to efficiently encode the discontinuity, while B-splines with fixed knots, which are not localized in nature, will fail to approximate the TVIR well. It is therefore possible to use wavelets in an adaptive way. This effect is visible in Fig. 3c: Despite discontinuities, only wavelets localized around the discontinuities yield large coefficients. In the next section, we propose two other adaptive methods, in the sense that they are able to automatically adapt to the TVIR regularity.
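The adaptivity to discontinuities can be illustrated with a few lines of NumPy. The sketch below uses the Haar wavelet, i.e. the Daubechies wavelet with a single vanishing moment, on a periodic dyadic grid; this is a deliberate simplification of the higher-order Daubechies and boundary wavelets considered in the text, and the function name is hypothetical.

```python
import numpy as np

def haar_transform(f):
    """Orthonormal Haar transform of a vector whose length is a power of two.

    Returns the coarsest approximation coefficient and the list of detail
    (wavelet) coefficients, ordered from the coarsest to the finest scale.
    """
    c = np.asarray(f, dtype=float).copy()
    details = []
    while c.size > 1:
        even, odd = c[0::2], c[1::2]
        details.append((even - odd) / np.sqrt(2))   # wavelet coefficients at this scale
        c = (even + odd) / np.sqrt(2)               # coarser approximation
    return c, details[::-1]

# A piecewise constant signal with a single jump, standing in for T(x, .).
f = np.where(np.arange(256) < 100, 1.0, 3.0)
coarse, details = haar_transform(f)
# At each scale, at most one wavelet straddles the jump.
nonzero_per_scale = [int(np.count_nonzero(np.abs(d) > 1e-12)) for d in details]
```

With 256 samples there are 8 scales, so at most 8 of the 255 wavelet coefficients are nonzero for this signal, whereas a Fourier expansion of the same signal has slowly decaying coefficients at all frequencies.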

4.4 Interpolation vs. Approximation

In all previous results, we constructed the functions \(w_k\) and \(h_k\) in (4) by projecting \(T(x,\cdot )\) onto linear subspaces. This is only possible if the whole TVIR T is available. In very large-scale applications, this assumption is unrealistic, since the TVIR contains \(n^2\) coefficients, which cannot even be stored. Instead of assuming a full knowledge of T, some authors (e.g., [34]) assume that the impulse responses \(T(\cdot , y)\) are available only at a discrete set of points \(y_i=i/m\) for \(1\le i\le m\).

In that case, it is possible to interpolate the impulse responses instead of approximating them. Given a linear subspace \(E_m=\mathrm {span}(e_k, k\in \{1,\ldots , m\})\), where the atoms \(e_k\) are assumed to be linearly independent, the functions \(c_k(x)\) in (36) are chosen by solving the set of linear systems:

In the discrete setting, under Assumption 2, this amounts to solving \(\lceil \kappa n \rceil \) linear systems of size \(m\times m\). The analysis of such a method requires using very different tools. We refer the interested reader to our recent work [5], where we investigate the rates of convergence with respect to the number of impulse responses, their geometry and the level of noise on the data.
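The interpolation step can be sketched as follows. This is a minimal illustration under assumptions that are not taken from the paper: the atoms \(e_k\) are chosen as the first m real trigonometric functions (m odd), the TVIR is a hypothetical Gaussian family whose width varies with y, and the helper names `trig_atoms` and `tvir` are made up for the example. For every x, the coefficients \(c_k(x)\) are obtained by requiring \(\sum _k c_k(x) e_k(y_i) = T(x,y_i)\) at the m sampling points.

```python
import numpy as np

def trig_atoms(y, m):
    """First m real trigonometric atoms (m odd) evaluated at the points y."""
    y = np.atleast_1d(y).astype(float)
    cols = [np.ones_like(y)]
    for freq in range(1, (m - 1) // 2 + 1):
        cols.append(np.cos(2 * np.pi * freq * y))
        cols.append(np.sin(2 * np.pi * freq * y))
    return np.stack(cols, axis=1)                 # shape (len(y), m)

def tvir(x, y):
    """Hypothetical TVIR: periodic Gaussian in x whose width depends on y."""
    width = 0.02 + 0.01 * np.cos(2 * np.pi * y)
    dist = np.minimum(x, 1 - x)                   # distance to 0 on the torus
    return np.exp(-0.5 * (dist / width) ** 2)

n, m = 256, 7
x = np.arange(n) / n
y_nodes = np.arange(1, m + 1) / m                 # sampling points y_i = i/m

samples = tvir(x[:, None], y_nodes[None, :])      # samples[x, i] = T(x, y_i)
E = trig_atoms(y_nodes, m)                        # E[i, k] = e_k(y_i), size m x m

# One m x m system per value of x; all systems share the matrix E.
C = np.linalg.solve(E, samples.T).T               # C[x, k] = c_k(x)

# Interpolated TVIR on the full grid and its relative error.
T_interp = C @ trig_atoms(x, m).T
T_full = tvir(x[:, None], x[None, :])
rel_err = np.linalg.norm(T_interp - T_full) / np.linalg.norm(T_full)
print("relative interpolation error:", rel_err)
```

Since the matrix of the system does not depend on x, a single factorization can be reused for all right-hand sides, which is what the batched call to `np.linalg.solve` does here.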

4.5 On Meyer’s Operator Representation

Up to now, we only assumed a regularity of T in the y direction, meaning that the impulse responses vary smoothly in space. In many applications, the impulse responses themselves are smooth. In this section, we show that this additional regularity assumption can be used to further compress the operator. Finding a compact operator representation is key to treating identification or estimation problems (e.g., blind deblurring in imaging); see, e.g., [30].

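Since \((\psi _{\lambda })_{\lambda \in \varLambda }\) is a Hilbert basis of \(L^2(\varOmega )\), the set of tensor product functions \((\psi _\lambda \otimes \psi _\mu )_{\lambda \in \varLambda ,\mu \in \varLambda }\) is a Hilbert basis of \(L^2(\varOmega \times \varOmega )\). Therefore, any \(T\in L^2(\varOmega \times \varOmega )\) can be expanded as:

\[ T(x,y) = \sum _{\lambda \in \varLambda }\sum _{\mu \in \varLambda } c_{\lambda ,\mu }\, \psi _\lambda (x)\, \psi _\mu (y), \qquad c_{\lambda ,\mu } = \langle T, \psi _\lambda \otimes \psi _\mu \rangle . \]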

The main idea of the construction in this section consists of keeping only the coefficients \(c_{\lambda ,\mu }\) of large amplitude. A similar idea was proposed in the BCR paper [3], except that the kernel K was expanded instead of the TVIR T. Decomposing T was suggested by Beylkin at the end of [2] without a precise analysis.

In this section, we assume that \(T\in H^{r,s}(\varOmega \times \varOmega )\), where

This space arises naturally in applications, where the impulse response regularity r might differ from the regularity s of their variations. Notice that \(H^{2s}(\varOmega \times \varOmega )\subset H^{s,s}(\varOmega \times \varOmega ) \subset H^{s}(\varOmega \times \varOmega )\).

Theorem 2

Assume that \(T\in H^{r,s}(\varOmega \times \varOmega )\) and satisfies Assumption 2. Assume that \(\psi \) has \(\max (r,s)+1\) vanishing moments. Let \(c_{\lambda ,\mu }= \langle T, \psi _\lambda \otimes \psi _\mu \rangle \). Define

Let \(m\in {{\mathbb {N}}}\), set \(m_1 =\lceil m^{s/(r+s)}\rceil \), \(m_2=\lceil m^{r/(r+s)}\rceil \) and \(H_m=H_{m_1,m_2}\). Then

Proof

First notice that

where \(c_\mu (x) = \langle T(x,\cdot ) , \psi _\mu \rangle \). From Corollary 5, we get:

Now, notice that \(c_\mu \in H^r(\varOmega )\). Indeed, for all \(0 \le k \le r\), we get:

Therefore, we can use Lemma 6 again to show:

Finally, using the triangle inequality, we get:

By setting \(m_1=m_2^{s/r}\), the two approximation errors in the right-hand side of (79) are balanced. This motivates the choice of \(m_1\) and \(m_2\) indicated in the theorem. \(\square \)
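The balancing argument can be written out explicitly. The worked computation below is a sketch that assumes, as the choice \(m_1=m_2^{s/r}\) suggests, that the two error terms scale like \(m_1^{-r}\) and \(m_2^{-s}\) up to constants, and that ignores the rounding in the definitions of \(m_1\) and \(m_2\) so that \(m_1 m_2 = m\).

```latex
\begin{align*}
m_1 = m_2^{s/r}
  &\;\Longrightarrow\; m_1^{-r} = m_2^{-s},\\
m_1 m_2 = m
  &\;\Longrightarrow\; m_2^{(r+s)/r} = m
  \;\Longrightarrow\; m_2 = m^{r/(r+s)}, \quad m_1 = m^{s/(r+s)},\\
m_1^{-r} = m_2^{-s}
  &= m^{-rs/(r+s)},
  \qquad \text{which reduces to } m^{-s/2} \text{ when } r = s .
\end{align*}
```

Solving \(\sqrt{\kappa }\, m^{-rs/(r+s)} \lesssim \epsilon \) for m gives an exponent \(-(r+s)/rs\) on \(\epsilon /\sqrt{\kappa }\), which matches the value of m used in Proposition 2.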

The approximation result in inequality (70) is worse than the previous ones. For instance if \(r=s\), then the bound becomes \(O(\sqrt{\kappa } m^{-s/2})\) instead of \(O(\sqrt{\kappa }m^{-s})\) in all previous theorems. The great advantage of this representation is the operator storage: Until now, the whole set of vectors \((\varvec{c}_\mu )\) had to be stored (\(O(\kappa n m)\) values), while now, only m coefficients \(c_{\lambda ,\mu }\) are required. For instance, in the case \(r=s\), for an equivalent precision, the storage cost of the new representation is \(O(\kappa m^2)\) instead of \(O(\kappa nm)\). Figure 4 illustrates the compression properties of Meyer’s representations.

In addition, evaluating matrix-vector products can be achieved rapidly by using the following trick:

By letting \(\tilde{\varvec{c}}_\mu = \sum _{|\lambda |\le \log _2(m_1)} c_{\lambda ,\mu } \varvec{\psi }_{\lambda }\), we get

which can be computed in \(O(m_2 \kappa n \log _2(\kappa n))\) operations. This remark leads to the following proposition.

Proposition 2

Assume that \(T\in H^{r,s}(\varOmega \times \varOmega )\) and that it satisfies Assumption 2. Set \(m=\left\lceil \left( \frac{\epsilon }{C\sqrt{\kappa }} \right) ^{-(r+s)/rs}\right\rceil \). Then, the operator \(H_m\) defined in Theorem 2 satisfies \(\Vert H-H_m\Vert _{HS}\le \epsilon \) and the number of operations necessary to evaluate a product with \(H_m\) or \(H_m^*\) is bounded above by \(O\left( \epsilon ^{-1/s} \kappa ^{\frac{2s+1}{2s}} n \log _2(n)\right) \).

Notice that the complexity of matrix-vector products is unchanged compared to the wavelet or spline approaches with a much better compression ability. However, this method requires a preprocessing to compute \(\tilde{\varvec{c}}_\mu \) with complexity \(\epsilon ^{-1/s} \kappa ^{1/2s} n\) (Fig. 4).
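The fast product can be illustrated with a short numerical sketch. It assumes the generic product-convolution form \(H_m u = \sum _k h_k \star (w_k \odot u)\) with circular convolutions, and the convention \(K(x,y)=T(x-y,y)\) between the kernel and the TVIR (consistent with the rank 1 remark in the proof of Theorem 4, but an assumption here since the defining equation is not reproduced). In the trick above, the filters would be the vectors \(\tilde{\varvec{c}}_\mu \) and the windows the wavelets \(\varvec{\psi }_\mu \); the sketch uses random arrays instead, and the function names are hypothetical.

```python
import numpy as np

def product_convolution(h, w, u):
    """Evaluate sum_k h_k * (w_k . u) with circular convolutions computed by FFT.

    h, w: arrays of shape (m, n) holding the filters and the windows.
    u: array of shape (n,).
    """
    spectra = np.fft.fft(h, axis=1) * np.fft.fft(w * u, axis=1)
    return np.real(np.fft.ifft(spectra.sum(axis=0)))

def dense_matrix(h, w):
    """Dense matrix with entries K[i, j] = sum_k h_k[(i - j) mod n] * w_k[j]."""
    m, n = h.shape
    i, j = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    return np.einsum("kij,kj->ij", h[:, (i - j) % n], w)

rng = np.random.default_rng(0)
m, n = 4, 64
h = rng.standard_normal((m, n))      # filters (random, for illustration only)
w = rng.standard_normal((m, n))      # windows (random, for illustration only)
u = rng.standard_normal(n)

fast = product_convolution(h, w, u)  # m FFT-based convolutions
slow = dense_matrix(h, w) @ u        # reference dense product, O(n^2)
print("max deviation between fast and dense products:", np.max(np.abs(fast - slow)))
```

The FFT version costs m transforms of length n, whereas the dense product costs \(n^2\) operations. When the filters are supported on \(\kappa n\) samples, shorter transforms can be used, which is where the factor \(\kappa n \log _2(\kappa n)\) comes from.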

5 Adaptive Decompositions

In the last section, all methods shared the same principle: project \(T(x,\cdot )\) on a fixed basis for each \(x\in \varOmega \). Instead of fixing a basis, one can try to find a basis adapted to the operator at hand. This idea was proposed in [21] and [17].

5.1 Singular Value Decompositions

The authors of [21] proposed to use a singular value decomposition (SVD) of the TVIR in order to construct the functions \(h_k\) and \(w_k\). In this section, we first detail this idea and then analyze it from an approximation theoretic point of view. Let \(J:L^2(\varOmega )\rightarrow L^2(\varOmega )\) denote the linear integral operator with kernel \(T\in {\mathcal {T}}^s\). First notice that J is a Hilbert–Schmidt operator since \(\Vert J\Vert _{HS}=\Vert H\Vert _{HS}\). By Lemma 2 and since Hilbert–Schmidt operators are compact, there exist two orthonormal bases \((e_k)\) and \((f_k)\) of \(L^2(\varOmega )\) such that J can be decomposed as

leading to

The following result is standard.

Theorem 3

For a given m, a set of functions \((h_k)_{1\le k\le m }\) and \((w_k)_{1\le k\le m }\) that minimizes \(\Vert H_m - H\Vert _{HS}\) is given by:

Moreover, if \(T(x,\cdot )\) satisfies Assumptions 1 and 2, we get:

Proof

The proof of optimality (86) is standard. Since \(T_m\) is the best rank m approximation of T, it is necessarily better than bound (41), yielding (86). \(\square \)
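The optimality of the truncated SVD in the Hilbert–Schmidt (Frobenius) norm can be observed on a discretized example. The sketch below assumes a hypothetical TVIR stored as an array `T[x, y]` (Gaussian impulse responses whose width varies with y). With this layout, the scaled left singular vectors play the role of the filters and the right singular vectors the role of the windows, so that \(T_m(x,y)=\sum _k h_k(x) w_k(y)\).

```python
import numpy as np

n, m = 128, 5
grid = np.arange(n) / n

# Hypothetical smooth TVIR: periodic Gaussians in x, width varying with y.
dist = np.minimum(grid, 1 - grid)[:, None]
width = (0.03 + 0.02 * np.sin(2 * np.pi * grid))[None, :]
T = np.exp(-0.5 * (dist / width) ** 2)

U, sing, Vt = np.linalg.svd(T, full_matrices=False)
h = (U[:, :m] * sing[:m]).T          # filters, shape (m, n)
w = Vt[:m]                           # windows, shape (m, n)
T_m = h.T @ w                        # rank-m approximation of the TVIR

err = np.linalg.norm(T - T_m, "fro")
tail = np.sqrt(np.sum(sing[m:] ** 2))
print("approximation error:", err)
print("norm of the discarded singular values:", tail)
```

The two printed numbers coincide: the error of the best rank m approximation is the \(\ell ^2\) norm of the discarded singular values (Eckart–Young theorem).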

Theorem 4

For all \(\epsilon > 0\) and \(m<n\), there exists an operator H with TVIR satisfying Assumptions 1 and 2 such that:

Proof

In order to prove (87), we construct a “worst case” TVIR T. We begin by constructing a TVIR T with \(\kappa =1\) to exhibit a simple pathological case. Define T by:

where \(f_k(x) = \exp (2i\pi k x)\) is the k-th Fourier atom, \(\sigma _0=0\) and \(\sigma _k = \sigma _{-k} = \frac{1}{|k|^{s+1/2+\epsilon /2}}\) for \(|k|\ge 1\). With this choice,

is real for all (x, y). We now prove that \(T\in {\mathcal {T}}^s\). The k-th Fourier coefficient of \(T(x,\cdot )\) is given by \(\sigma _k f_k(x)\) which is bounded by \(\sigma _k\) for all x. By Lemma 1, \(T(x,\cdot )\) therefore belongs to \(H^{s}(\varOmega )\) for all \(x\in \varOmega \). By construction, the spectrum of T is \((|\sigma _k|)_{k\in {{\mathbb {N}}}}\), therefore for any rank \(2m+1\) approximation of T, we get:

proving the result for \(\kappa =1\). Notice that the kernel K of the operator with TVIR T only depends on x:

Therefore, the worst-case TVIR exhibited here is that of a rank 1 operator H. Obviously, it cannot be well approximated by product-convolution expansions.

Let us now construct a TVIR satisfying Assumption 2. For this, we first construct an orthonormal basis \((\tilde{f}_k)_{k\in {{\mathbb {Z}}}}\) of \(L^2([-\kappa /2,\kappa /2])\) defined by:

The worst-case operator considered now is defined by:

Its spectrum is \((|{\tilde{\sigma }}_k|)_{k\in {{\mathbb {Z}}}}\), and we get

By Lemma 5, if \({\tilde{\sigma }}_k = \frac{\kappa }{(1+|k|^2)^s|k|^{1+\epsilon }}\), then \(\Vert T(x,\cdot )\Vert _{H^s(\varOmega )}\) is uniformly bounded by a constant independent of \(\kappa \). Moreover, by reproducing the reasoning in (90), we get:

\(\square \)
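The first construction of the proof (case \(\kappa =1\)) can be reproduced numerically. The sketch below assumes that the TVIR has the form \(T(x,y)=\sum _k \sigma _k f_k(x) f_k(y)\), which is what the description of its Fourier coefficients and of its kernel suggests, and that the kernel is recovered through \(K(x,y)=T(x-y,y)\). The Fourier series is truncated at a hypothetical order `kmax` and sampled on a grid of n points; these choices are for illustration only.

```python
import numpy as np

n, s, eps = 64, 1.0, 0.1
kmax = 10                                    # truncation order (assumption of the demo)
grid = np.arange(n) / n

freqs = np.array([k for k in range(-kmax, kmax + 1) if k != 0])
sigma = 1.0 / np.abs(freqs) ** (s + 0.5 + eps / 2)

# T(x, y) = sum_k sigma_k f_k(x) f_k(y) with f_k(x) = exp(2 i pi k x).
F = np.exp(2j * np.pi * np.outer(grid, freqs))       # F[i, k] = f_k(x_i)
T = ((F * sigma) @ F.T).real                          # imaginary parts cancel (sigma_k = sigma_{-k})

# Singular values of the discretized operator (1/n is the quadrature weight).
sing = np.linalg.svd(T / n, compute_uv=False)
expected = np.sort(sigma)[::-1]
print("largest singular values:", sing[:4])
print("largest sigma_k:        ", expected[:4])

# Kernel of the operator, assuming K(x, y) = T(x - y, y) on the torus.
i, j = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
K = T[(i - j) % n, j]
print("all columns of K identical:", np.allclose(K, K[:, :1]))
```

The singular values of the TVIR reproduce the slowly decaying sequence \((\sigma _k)\), while all columns of K coincide, so the operator itself has rank 1 although its TVIR is poorly approximated by low-rank expansions.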

Even if the SVD provides an optimal decomposition, there is no guarantee that functions \(e_k\) are supported on an interval of small size. As an example, it suffices to consider the “worst case” TVIR given in Eq. (88). Therefore, vectors \(\varvec{w}_k\) are generically supported on intervals of size \(p = n\). This yields the following corollary.

Corollary 6

Let \(\epsilon >0\) and set \(m = \lceil C \epsilon ^{-1/s} \kappa ^{1/2s}\rceil \). Then, \(H_m\) satisfies \(\Vert H - H_m \Vert _{HS} \le \epsilon \) and a product with \(H_m\) and \(H_m^*\) can be evaluated with no more than \(O( \kappa ^{1/2s} n \log n\epsilon ^{-1/s})\) operations.

Computing the first m singular vectors in (84) can be achieved in roughly \(O(\kappa n^2\log (m))\) operations thanks to recent advances in randomized algorithms [25]. The storage cost for this approach is O(mn) since the vectors \(\varvec{e}_k\) have no reason to be compactly supported.

5.2 The Optimization Approach in [17]

In [17], the authors propose to construct the windowing functions \(w_k\) and the filters \(h_k\) using constrained optimization procedures. For a fixed m, they propose solving:

under an additional constraint that \(\mathrm {supp}(w_k)\subset \omega _k\) with \(\omega _k\) chosen so that \(\cup _{k=1}^m \omega _k =\varOmega \). A decomposition of type (99) is known as structured low-rank approximation [9]. This problem is nonconvex, and to the best of our knowledge, there currently exists no algorithm running in a reasonable time to find its global minimizer. It can however be solved approximately using algorithms of alternating minimization type.
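One possible alternating scheme is sketched below. It is not the algorithm of [17]: it is a plain alternating least-squares loop written for illustration, assuming that the objective is the Frobenius distance between a discretized TVIR `T[x, y]` and \(\sum _k h_k(x) w_k(y)\), with each window constrained to vanish outside its support. The TVIR, the supports and all names are hypothetical.

```python
import numpy as np

def alternating_minimization(T, masks, n_iter=20, seed=0):
    """Approximately minimize ||T - H.T @ W||_F with W zero outside `masks`.

    T: (n, n) array indexed as T[x, y].
    masks: boolean (m, n) array, masks[k, j] is True iff y_j lies in omega_k.
    Returns filters H (m, n), windows W (m, n) and the error after each sweep.
    """
    m, n = masks.shape
    rng = np.random.default_rng(seed)
    W = rng.standard_normal((m, n)) * masks
    errors = []
    for _ in range(n_iter):
        # Filter step: least squares in H for fixed windows (W.T @ H ~ T.T).
        H = np.linalg.lstsq(W.T, T.T, rcond=None)[0]
        # Window step: each column y_j only involves the windows active at y_j.
        for j in range(n):
            active = np.flatnonzero(masks[:, j])
            coef = np.linalg.lstsq(H[active].T, T[:, j], rcond=None)[0]
            W[:, j] = 0.0
            W[active, j] = coef
        errors.append(np.linalg.norm(T - H.T @ W, "fro"))
    return H, W, errors

n, m = 64, 8
grid = np.arange(n) / n
dist = np.minimum(grid, 1 - grid)[:, None]
width = (0.05 + 0.03 * np.sin(2 * np.pi * grid))[None, :]
T = np.exp(-0.5 * (dist / width) ** 2)          # hypothetical TVIR

# Overlapping periodic supports omega_k, each covering 2/m of the torus.
starts = (np.arange(m) * n) // m
masks = ((np.arange(n)[None, :] - starts[:, None]) % n) < (2 * n) // m

H, W, errors = alternating_minimization(T, masks)
svd_tail = np.sqrt(np.sum(np.linalg.svd(T, compute_uv=False)[m:] ** 2))
print("error after first and last sweep:", errors[0], errors[-1])
print("unconstrained rank-m optimum (SVD):", svd_tail)
```

Each half-step solves its least-squares subproblem exactly, so the error cannot increase from one sweep to the next. The final error remains above the unconstrained SVD optimum, and nothing guarantees that the loop reaches the global minimizer of the constrained problem.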

Depending on the choice of the supports \(\omega _k\), different convergence rates can be expected. However, by using the results for B-splines in Sect. 4.2, we obtain the following proposition.

Proposition 3

Set \(\omega _k=[(k-1)/m,k/m+s/m]\) and let \((h_k,w_k)_{1\le k \le m}\) denote the global minimizer of (99). Define \(T_m\) by \(T_m(x,y) = \sum _{k=1}^m h_k(x) w_k(y)\). Then:

Set \(m=\lceil \kappa ^{1/2s} C\epsilon ^{-1/s}\rceil \), then \(\Vert H_m-H\Vert _{HS}\le \epsilon \) and the evaluation of a product with \(H_m\) or \(H_m^*\) is of order

Proof

First notice that cardinal B-splines are also supported on \([(k-1)/m,k/m+s/m]\). Since the method in [17] provides the best choices for \((h_k,w_k)\), the distance \(\Vert H_m-H\Vert _{HS}\) is necessarily lower than that obtained using B-splines in Corollary 3. \(\square \)

Finally, let us mention that—owing to Corollary 5—it might be interesting to use the optimization approach (99) with windows of varying sizes.

6 Summary and Extensions

6.1 A Summary of All Results

Table 1 summarizes the results derived so far under Assumptions 1 and 2. In the particular case of Meyer’s methods, we assume that \(T\in H^{r,s}(\varOmega \times \varOmega )\) instead of Assumption 1. As can be seen in this table, different methods should be used depending on the application. The best methods are:

- Wavelets: They are adaptive and have a relatively low construction complexity, and matrix-vector products also have the best complexity.

- Meyer: This method has a big advantage in terms of storage. The operator can be represented very compactly with this approach. It has a good potential for problems where the operator should be inferred (e.g., blind deblurring). It however requires stronger regularity assumptions.

- The SVD and the method proposed in [17] both share an optimal adaptivity. The representation however depends on the operator and it is more costly to evaluate it.

6.2 Extensions to Higher Dimensions

Most of the results provided in this paper are based on standard approximation results in 1D, such as Lemmas 1, 5 and 6. All these lemmas can be extended to higher dimensions, and we refer the interested reader to [18, 19, 31, 36] for more details.

We now assume that \(\varOmega =[0,1]^d\) and that the diameter of the impulse responses is bounded by \(\kappa \in [0,1]\). Using the mentioned results, it is straightforward to show that the approximation rate of all methods now becomes

The space \(\varOmega \) can be discretized on a finite dimensional space of size \(n^d\). Similarly, all complexity results given in Table 1 are still valid by replacing n by \(n^d\), \(\epsilon ^{-1/s}\) by \(\epsilon ^{-d/s}\) and \(\kappa \) by \(\kappa ^d\).

6.3 Extensions to Less Regular Spaces

Until now, we assumed that the TVIR T belongs to Hilbert spaces (see, e.g., Assumption 1). This assumption was deliberately kept simple to clarify the presentation. The results can most likely be extended to much more general spaces using nonlinear approximation theory results [18].

For instance, assume that \(T\in BV(\varOmega \times \varOmega )\), the space of functions with bounded variations. Then, it is well known (see, e.g., [11]) that T can be expressed compactly on an orthonormal basis of tensor product wavelets. Therefore, the product-convolution expansion (4) could be applied using the trick proposed in (82).

Similarly, most of the kernels found in partial differential equations (e.g., Calderón–Zygmund operators) are singular at the origin. Once again, it is well known [32] that wavelets are able to capture the singularities and the proposed methods can most likely be applied to this setting too.

A precise setting useful for applications requires more work and we leave this issue open for future work.

6.4 Controls in Other Norms

Throughout the paper, we only controlled the Hilbert–Schmidt norm \(\Vert \cdot \Vert _{HS}\). This choice simplifies the analysis and also yields bounds for the spectral norm

\[ \Vert H\Vert _{2\rightarrow 2} = \sup _{\Vert u\Vert _{L^2(\varOmega )}\le 1} \Vert Hu\Vert _{L^2(\varOmega )}, \]

since \(\Vert H\Vert _{2\rightarrow 2} \le \Vert H\Vert _{HS}\). In applications, it often makes sense to consider other operator norms defined by

\[ \Vert H\Vert _{X\rightarrow Y} = \sup _{\Vert u\Vert _{X}\le 1} \Vert Hu\Vert _{Y}, \]

where \(\Vert \cdot \Vert _{X}\) and \(\Vert \cdot \Vert _{Y}\) are norms characterizing some function spaces. We showed in [20] that this idea could greatly improve practical approximation results.

Unfortunately, it is not clear yet how to extend the proposed results and algorithms to such a setting and we leave this question open for the future. Let us mention that our previous experience shows that this idea can substantially change the method’s efficiency.
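The relation between the two norms controlled in this paper is easy to check on a matrix. The sketch below uses a random matrix as a stand-in for a discretized operator (an assumption made only for the illustration): the spectral norm is the largest singular value, while the Hilbert–Schmidt norm is the \(\ell ^2\) norm of all singular values.

```python
import numpy as np

rng = np.random.default_rng(0)
H = rng.standard_normal((50, 50))        # stand-in for a discretized operator

sing = np.linalg.svd(H, compute_uv=False)
spectral = np.linalg.norm(H, 2)          # largest singular value
hilbert_schmidt = np.linalg.norm(H, "fro")

print("spectral norm:       ", spectral)
print("Hilbert-Schmidt norm:", hilbert_schmidt)
```

A bound on the Hilbert–Schmidt error therefore always bounds the spectral error, but it can be pessimistic when many singular values of the error are of comparable size.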

7 Conclusion

In this paper, we analyzed the approximation rates and numerical complexity of product-convolution expansions. This approach was shown to be efficient whenever the time- or space-varying impulse response of the operator is well approximated by a low-rank tensor. We showed that this situation occurs under mild regularity assumptions, making the approach relevant for a large class of applications. We also proposed a few original implementations of this method based on orthogonal wavelet decompositions and analyzed their respective advantages precisely. Finally, we suggested a few ideas to further improve the practical efficiency of the method.

References

1. Arigovindan, M., Shaevitz, J., McGowan, J., Sedat, J.W., Agard, D.A.: A parallel product-convolution approach for representing the depth varying point spread functions in 3D widefield microscopy based on principal component analysis. Opt. Express 18(7), 6461–6476 (2010)

2. Beylkin, G.: On the representation of operators in bases of compactly supported wavelets. SIAM J. Numer. Anal. 29(6), 1716–1740 (1992)

3. Beylkin, G., Coifman, R., Rokhlin, V.: Fast wavelet transforms and numerical algorithms I. Commun. Pure Appl. Math. 44(2), 141–183 (1991)

4. Bezhaev, A.Y., Vasilenko, V.A.: Variational Theory of Splines. Springer, Berlin (2001)

5. Bigot, J., Escande, P., Weiss, P.: Estimation of linear operators from scattered impulse responses. arXiv preprint arXiv:1610.04056 (2016)

6. Börm, S., Grasedyck, L., Hackbusch, W.: Introduction to hierarchical matrices with applications. Eng. Anal. Bound. Elem. 27(5), 405–422 (2003)

7. Busby, R., Smith, H.: Product-convolution operators and mixed-norm spaces. Trans. Am. Math. Soc. 263(2), 309–341 (1981)

8. Christensen, O.: An Introduction to Frames and Riesz Bases. Springer, New York (2013)

9. Chu, M., Funderlic, R., Plemmons, R.: Structured low rank approximation. Linear Algebra Appl. 366, 157–172 (2003)

10. Ciarlet, P.G.: The Finite Element Method for Elliptic Problems, vol. 40. SIAM, Philadelphia (2002)

11. Cohen, A., Dahmen, W., Daubechies, I., DeVore, R.: Harmonic analysis of the space BV. Rev. Mat. Iberoam. 19(1), 235–263 (2003)

12. Cohen, A., Daubechies, I., Vial, P.: Wavelets on the interval and fast wavelet transforms. Appl. Comput. Harmon. Anal. 1(1), 54–81 (1993)

13. Dahmen, W., Prößdorf, S., Schneider, R.: Wavelet approximation methods for pseudodifferential equations II: matrix compression and fast solution. Adv. Comput. Math. 1(3), 259–335 (1993)

14. Dahmen, W., Prößdorf, S., Schneider, R.: Wavelet approximation methods for pseudodifferential equations: I stability and convergence. Math. Z. 215(1), 583–620 (1994)

15. Daubechies, I.: Orthonormal bases of compactly supported wavelets. Commun. Pure Appl. Math. 41(7), 909–996 (1988)

16. Daubechies, I.: Ten Lectures on Wavelets, vol. 61. SIAM, Philadelphia (1992)

17. Denis, L., Thiébaut, E., Soulez, F., Becker, J.-M., Mourya, R.: Fast approximations of shift-variant blur. Int. J. Comput. Vis. 115(3), 253–278 (2015)

18. DeVore, R.A.: Nonlinear approximation. Acta Numer. 7, 51–150 (1998)

19. DeVore, R.A., Lorentz, G.G.: Constructive Approximation, vol. 303. Springer, Berlin (1993)

20. Escande, P., Weiss, P.: Sparse wavelet representations of spatially varying blurring operators. SIAM J. Imaging Sci. 8(4), 2976–3014 (2015)

21. Flicker, R.C., Rigaut, F.J.: Anisoplanatic deconvolution of adaptive optics images. J. Opt. Soc. Am. A 22(3), 504–513 (2005)

22. Gilad, E., Von Hardenberg, J.: A fast algorithm for convolution integrals with space and time variant kernels. J. Comput. Phys. 216(1), 326–336 (2006)

23. Griffiths, L.J., Smolka, F.R., Trembly, L.D.: Adaptive deconvolution: a new technique for processing time-varying seismic data. Geophysics 42(4), 742–759 (1977)

24. Hackbusch, W., Nowak, Z.P.: On the fast matrix multiplication in the boundary element method by panel clustering. Numer. Math. 54(4), 463–491 (1989)

25. Halko, N., Martinsson, P.-G., Tropp, J.A.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53(2), 217–288 (2011)

26. Helemskii, A.Y.: Lectures and Exercises on Functional Analysis, vol. 233. American Mathematical Society, Providence (2006)

27. Hirsch, M., Sra, S., Schölkopf, B., Harmeling, S.: Efficient filter flow for space-variant multiframe blind deconvolution. In: Proc. CVPR IEEE (2010)

28. Hrycak, T., Das, S., Matz, G., Feichtinger, H.G.: Low complexity equalization for doubly selective channels modeled by a basis expansion. IEEE Trans. Signal Process. 58(11), 5706–5719 (2010)

29. Katznelson, Y.: An Introduction to Harmonic Analysis. Cambridge University Press, Cambridge (2004)

30. Kozek, W., Pfander, G.E.: Identification of operators with bandlimited symbols. SIAM J. Math. Anal. 37(3), 867–888 (2005)

31. Mallat, S.: A Wavelet Tour of Signal Processing. Academic Press, New York (1999)

32. Meyer, Y.: Wavelets and Operators, vol. 1. Cambridge University Press, Cambridge (1995)

33. Miraut, D., Portilla, J.: Efficient shift-variant image restoration using deformable filtering (Part I). EURASIP J. Adv. Signal Process. 2012, 100 (2012)

34. Nagy, J.G., O’Leary, D.P.: Restoring images degraded by spatially variant blur. SIAM J. Sci. Comput. 19(4), 1063–1082 (1998)

35. Oseledets, I., Tyrtyshnikov, E.: TT-cross approximation for multidimensional arrays. Linear Algebra Appl. 432(1), 70–88 (2010)

36. Pinkus, A.: N-Widths in Approximation Theory, vol. 7. Springer, Berlin (2012)

37. Roh, J.C., Rao, B.D.: An efficient feedback method for MIMO systems with slowly time-varying channels. In: IEEE WCNC, vol. 2, pp. 760–764. IEEE (2004)

38. Sawchuk, A.A.: Space-variant image motion degradation and restoration. Proc. IEEE 60(7), 854–861 (1972)

39. Stockham, T.G.: High-speed convolution and correlation. In: Proceedings of the April 26–28, 1966, Spring joint computer conference, pp. 229–233. ACM (1966)

40. Trussell, J., Fogel, S.: Identification and restoration of spatially variant motion blurs in sequential images. IEEE Trans. Image Process. 1(1), 123–126 (1992)

41. Unser, M., Aldroubi, A., Eden, M.: B-spline signal processing. Part I: theory. IEEE Trans. Signal Process. 41(2), 821–833 (1993)

Acknowledgements

We began investigating a much narrower version of the problem in a preliminary version of [20]. An anonymous reviewer however suggested that it would be more interesting to make a general analysis and we therefore discarded the aspects related to product-convolution expansions from [20]. We thank the reviewer for motivating us to initiate this research. We also thank Sandrine Anthoine, Jérémie Bigot, Caroline Chaux, Jérôme Fehrenbach, Hans Feichtinger, Clothilde Mélot, Anh-Tuan Nguyen and Bruno Torrésani for fruitful discussions on related matters. The authors are particularly grateful to Hans Feichtinger for his extensive feedback on a preliminary version of this paper and for suggesting the name product-convolution.

Cite this article

Escande, P., Weiss, P. Approximation of Integral Operators Using Product-Convolution Expansions. J Math Imaging Vis 58, 333–348 (2017). https://doi.org/10.1007/s10851-017-0714-8


DOI: https://doi.org/10.1007/s10851-017-0714-8

Keywords

- Integral operators

- Wavelet

- Spline

- Structured low-rank decomposition

- Numerical complexity

- Approximation rate

- Fast Fourier transform