Abstract

In recent years, \(\ell _1\)-regularized least squares have become a popular approach to image deblurring due to the edge-preserving property of the \(\ell _1\)-norm. In this paper, we consider the nonnegatively constrained quadratic program reformulation of the \(\ell _1\)-regularized least squares problem and we propose to solve it by an efficient modified Newton projection method only requiring matrix–vector operations. This approach favors nonnegative solutions without explicitly imposing any constraints in the \(\ell _1\)-regularized least squares problem. Experimental results on image deblurring test problems indicate that the developed approach performs well in comparison with state-of-the-art methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Image deblurring is an important inverse problem arising in many image processing applications in which an unknown image \(\mathbf {x}\in \mathbb {R}^{n}\) has to be estimated from noisy observations \(\mathbf {b}\in \mathbb {R}^{m}\) defined by

where \(\varvec{\eta }\) is the unknown white Gaussian noise vector and \(\mathbf {A}\in \mathbb {R}^{m\times n}\) is the discretized linear blur operator. As usual, images are assumed to be represented as vectors, by storing the pixel values in some (e.g. lexicographical) order.

Least squares optimization with \(\ell _1\)-regularization is now the state-of-the-art approach to image restoration. In fact, in imaging problems, the presence of edges often causes the prior distribution of the unknown image \(\mathbf {x}\) to be not Gaussian and leads to outliers in the regularization term. In this context, the \(\ell _1\)-norm, which is less sensitive to outliers, is advantageous compared to the \(\ell _2\)-norm employed in Tikhonov-like regularization, and can be effectively used to promote sparse solutions [11, 43, 49, 52]. For these reason, the \(\ell _1\)-regularized least squares problem

has recently attracted considerable attention both in the context of imaging inverse problems [1, 26, 42, 58] and of compressed sensing [14–19, 21, 50]. (For simplicity of notation, hereafter \(\Vert \cdot \Vert \) will denote the \(\ell _2\)-norm \(\Vert \cdot \Vert _2\)).

In this work, we focus on \(\ell _1\)-regularized least squares problems (2) arising in image deblurring applications where the observation operator \(\mathbf {A}\) describes spatially invariant blur [6]. Under periodic boundary conditions, \(\mathbf {A}\) is a block circulant with circulant blocks (BCCB) matrix and matrix–vector products can be efficiently performed via the FFT [33]. We suppose that \(m \ge n\) and that \(\mathbf {A}^H\mathbf {A}\) has full rank. This assumption is satisfied in a variety of practical applications and is frequently used in image deblurring and in the literature of imaging inverse problems (see, for example, [4, 23, 27–29, 33, 34, 58]).

A variety of algorithms have been proposed in the literature for the solution of (2), especially in compressed sensing. The state-of-the-art methods for (2) are probably gradient descent-type methods since their computational cost is mainly due to matrix–vector products with \(\mathbf {A}\) and \(\mathbf {A}^H\). This class of methods includes the following popular methods: IST [20], TwIST [10], SparSA [56], FISTA [4], NESTA [5] and AdaptiveSPARSA [30]. SPGL1 [53] and GPSS [42] are gradient projection-type methods for the equivalent Lasso formulation of (2).

Fixed-point continuation methods [31, 32], as well as methods based on Bregman iterations [25, 57] and variable splitting, as SALSA [1] and C-SALSA [2], have also been recently proposed.

The dual formulation of (2) has been considered in the literature; in [27] and [34], a Newton projection method and an active-set-type method are respectively proposed to solve the dual problem.

A different approach to handle the nondifferentiability of the objective function of (2) consists in reformulating the nondifferentiable unconstrained problem (2) as a constrained differentiable one. The disadvantage of this strategy is that, usually, the number of variable is doubled. The interior point method of [35] solves a quadratic programming formulations of (2) obtained by introducing an auxiliary variable; it has been shown to be efficient for large scale problems since a preconditioned gradient method is used to compute the search direction.

An equivalent formulation of (2) as a quadratic programming problem with nonnegativity constraints can be done by splitting \(\mathbf {x}\) into two nonnegative variables representing its positive and negative parts. Several methods have been proposed for the solution of the resulting bound constrained quadratic program as gradient projection [22], interior point [23] and Newton projection [45–47] methods.

The Newton projection methods (also referred to as Two-Metric projection methods) can be interpreted as scaled gradient projection methods where the scaling matrix is the inverse of a modified Hessian [8, 9, 24]. In particular, only the variables in the working set are scaled by the inverse of the corresponding submatrix of the Hessian, where the working set is defined as the complementary of the set of the null variables with positive gradient. Under proper hypothesis on the scaling matrix, the global convergence and local superlinear convergence of Newton projection methods con be proved [8, 24].

In the literature, Newton-like projection methods have been recently developed which are tailor-made for image restoration problems formulated as \(\ell _2\)-regularized optimization problems under nonnegativity constraints, both in the case of Gaussian and Poisson noise [38–40]. In these methods, the Hessian matrix is suitably approximated so that its inversion can be performed in the Fourier space at a very low computational cost. Then, the variables in the working set are scaled by the corresponding submatrix of the inverse approximated Hessian. It is worth mentioning that, using this scaling, the superlinear convergence rate of Newton-based methods is lost. However, this Newton-like projection methods have been shown to perform well compared to accelerated gradient projection algorithms for \(\ell _2\)-regularized least squares problems [12, 37].

This work aims at developing a new efficient and effective \(\ell _1\)-based method for the restoration of images corrupted by blur and Gaussian noise. In particular, we consider the analysis formulation of the \(\ell _1\)-regularized least squares problem for the restoration of images which are quite sparse in the pixel representation as, for example, medical images and astronomical images. This approach has been introduced in [23, 36] and recently in [58]. Observe that in astronomical imaging, the \(\ell _1\)-norm penalty is used as a flux conservation constraint. Therefore, the contribution of this paper is twofold. First, we show, by numerical evidence, that the \(\ell _1\)-regularized least squares problem can be effectively used as a mathematical model of the problem of restoring images degraded by blur and Gaussian noise. Second, we develop an efficient Newton-like projection method for its solution. In the proposed approach, problem (2) is firstly formulated as a nonnegatively constrained quadratic program by splitting the variable \(\mathbf {x}\) into the positive and negative parts. Then, the quadratic program is solved by a special purpose Newton-like projection method where a fair regularized approximation to the Hessian matrix is proposed so that products of its inverse and vectors can be computed at low computational cost. As a result, the only operations required for the search direction computation are matrix–vector products involving \(\mathbf {A}\) and \(\mathbf {A}^H\). Since the developed method uses a fair modification to the Hessian matrix, in the sequel, it will be referred to as Modified Newton Projection (MNP) method. Moreover, the regularization strategy, used to improve the conditioning of the Hessian matrix, penalizes negative entries in \(\mathbf {x}\) so that nonnegative solutions are favored. The convergence of the MNP method is proved even if MNP slows the convergence rate of Newton-type methods. Even if the size of the problem is doubled, the low computational cost per iteration and less iterative steps make MNP quite efficient. It is worth mentioning that a similar approach is described in [46]; however, the projection L1 method of [46] uses, as the classical Two-Metric projection methods, a suitable sub-matrix of the Hessian for scaling the gradient.

The performance of MNP is evaluated on some image restoration problems and is compared with that of the state-of-the-art methods. The results of the comparative study show that MNP is competitive and in some cases is also able to outperform the state-of-the-art methods in terms of computational complexity and achieved accuracy.

The paper is organized as follows. In Sect. 2, the MNP method is presented and its convergence is analyzed. In this section, the computation of the search direction is also discussed. In Sect. 3, the numerical results are presented. Conclusions are given in Sect. 4.

2 The Modified Newton Projection Method

Before introducing our MNP method, we need to reformulate the \(\ell _1\)-regularized least squares problem (2) as a nonnegatively constrained quadratic program (NCQP). This strategy for dealing with the nondifferentiability of the \(\ell _1\)-norm is quite classic in the literature and has been previously adopted by several authors [13, 22, 23, 45, 46].

2.1 Nonnegatively Constrained Quadratic Program Formulation

If we split \(\mathbf {x}\) into its positive and negative parts, that is

where

then, we obtain the following nonnegatively constrained quadratic program formulation of the original \(\ell _1\)-regularized least squares problem (2):

where \(\mathbf {1}\) denotes the \(n\)-dimensional column vector of ones. In [13, 22, 44] it is shown that problems (2) and (3) share the same solutions and that at the solution of (3) either \(u_i\) or \(v_i\) or both are equal to zero.

The gradient of \(\mathcal {F}(\mathbf {u},\mathbf {v})\) is defined by

Henceforth, we will denote by \(\mathbf {y}\) and \(\mathbf {g}\) the \(2n\)-dimensional vectors

where \(\mathbf {g}_\mathbf {u}\) and \(\mathbf {g}_\mathbf {v}\) are respectively the partial derivatives of \(\mathcal {F}\) with respect to \(\mathbf {u}\) and \(\mathbf {v}\).

Observe that, even if by reformulating (2) as a NCQP we have doubled the problem size, the computation of the objective function and its gradient values indeed requires only one multiplication by \(\mathbf {A}\) and one by \(\mathbf {A}^H\).

2.2 Hessian Approximation

The MNP method is basically a Newton-based method where the search direction computation requires the inversion of the Hessian matrix. Unfortunately, the Hessian \(\mathbf {H}\) of \(\mathcal {F}(\mathbf {u},\mathbf {v})\)

is a positive semidefinite matrix. The idea underlying the proposed approach is to substitute the Hessian with a nonsingular approximation. In MNP, we modify \(\mathbf {H}\) by adding a small perturbation to its negative part. More precisely, we use the following Hessian approximation:

where \(\tau \) is a positive parameter and \(\mathbf {I}\) and \(\mathbf {0}\) are respectively the identity and zero matrix of size \(n\). There are several reasons for this choice of the Hessian modification. Firstly, an explicit formula for the inverse of \(\mathbf {H}_\tau \) can be derived, secondly the search direction is computable at moderate cost and finally, negative part of images are penalized. Naturally, other nonsingular Hessian modifications are possible. Adding the positive constant \(\tau \) to all elements of the diagonal \(\mathbf {H}\) results in an Hessian modification such that the multiplication of its inverse by a vector requires more computational cost. Moreover, our numerical experiments indicate that this Hessian approximation tends to be more sensitive to the choice of the parameter \(\tau \). On the other hand, adding a small perturbation to the positive part of \(\mathbf {H}\) would result in a penalization of the positive part of images and in less accurate reconstructions.

Proposition 2.1

Assume that \(\mathbf {A}^H\mathbf {A}\) is nonsingular. Then, \(\mathbf {H}_\tau \) is nonsingular and its inverse is

Proof

We prove that \(\mathbf {H}_\tau \mathbf {M}_\tau =\mathbf {M}_\tau \mathbf {H}_\tau =\mathbf {I}_{2n}\) where \(\mathbf {I}_{2n}\) is the identity matrix of size \(2n\).

We have

Similarly, we have \(\mathbf {M}_\tau \mathbf {H}_\tau =\mathbf {I}_{2n}\) and this concludes the proof.

Proposition 2.2

The inverse Hessian approximation \(\mathbf {M}_\tau \) is a symmetric positive definite matrix.

Proof

Let \(\mathbf {z},\mathbf {w}\in \mathbb {R}^{n}\). We have

Equality holds in (8) if and only if \(\mathbf {z}=0\) and \(\mathbf {z}+\mathbf {w}=0\), i.e. if and only if \(\mathbf {z}=0\) and \(\mathbf {w}=0\). This concludes the proof.

Remark 2.1

The developed strategy for approximating the Hessian matrix \(\mathbf {H}\) is closely related to the method of Lavrentiev regularization [6, 41, 48, 51]. In fact, in Lavretiev regularization, a regularized solution of a linear ill-posed problem with positive semidefinite linear operator \(\mathbf {K}\) is obtained as the solution of a slightly modified equation whose linear operator is \(\mathbf {K}+\tau \mathbf {I}\). Therefore, the Hessian approximation \(\mathbf {H}_\tau \) is indeed a Lavretiev-type regularized approximation to \(\mathbf {H}\) where only the last \(n\) variables are penalized. In this way, large values of the last \(n\) components of the search direction are penalized.

2.3 Algorithm

Firstly, let us introduce the basic notation that enables us to formalize the description of the MNP method. In the sequel, \(\mathcal {A}(\mathbf {y})\) will indicate the set of indices [8, 9, 55]:

where \(\overline{\varepsilon }\) is a small positive parameter and \([\cdot ]^+\) denotes the projection on the positive orthant.

Finally, let \(\mathbf {E}\) and \(\mathbf {F}\) denote the diagonal matrices [55] such that

Given a feasible initial iterate \(\mathbf {y}^{(0)}\in \mathbb {R}^{2n}\), the MNP method is defined by the general iteration

where

and the step-length \(\alpha ^{(k)}\) is determined by the Armijo rule along the projection arc [8, 9]. That is, \(\alpha ^{(k)}\) is the first number of the sequence \(\{2^{-m}\}_{m\in \mathbb {N}}\) such that

where \(\mathbf {y}^{(k)}(2^{-m}) = [\mathbf {y}^{(k)}-2^{-m}\mathbf {p}^{(k)}]^+\), \(\beta \in (0,\frac{1}{2})\). For easier notation, in the following, \(\mathbf {E}^{(k)}\), \(\mathbf {F}^{(k)}\) and \(\mathcal {A}^{(k)}\) will denote respectively the diagonal matrices \(\mathbf {E}(\mathbf {y}^{(k)})\) and \(\mathbf {F}(\mathbf {y}^{(k)})\) and the index set \(\mathcal {A}(\mathbf {y}^{(k)})\).

Remark 2.2

In the Two-Metric projection method originally proposed by Bertsekas [8, 9, 24], the scaling matrix is

Using this scaling, the standard Newton-like projection methods attain the superlinear convergence rate of Newton-like methods. However, the inversion of a submatrix of \(\mathbf {H}\), required in (12), is often impracticable in image deblurring applications. Therefor, in practice, a conjugate gradient version of the Newton-like projection method has to be used where the CG iterations are terminated when the relative residual becomes smaller than a given tolerance. The resulting approximate Newton-CG projection method may slow the convergence rate.

On the other hand, the scaling matrix (10) involves the inverse of the whole approximated Hessian matrix \(\mathbf {H}_\tau \) for which an explicit formula is given in Proposition 2.1. As a result, the search direction of MNP can be computed very quickly.

However, the scaling (10) may significantly slow the convergence rate and MNP may have worse convergence properties.

2.4 Convergence Analysis

As proved in [8, 24], the convergence of Newton-like projection methods only requires the scaling matrices \(\mathbf {S}^{(k)}\) to be positive definite matrices with uniformly bounded eigenvalues. In particular, the global convergence property of these methods can be proved under the general following assumptions [8].

- A1:

-

The gradient \(\nabla _{(\mathbf {u},\mathbf {v})} \mathcal {F}\) is Lipschitz continuous on each bounded set of \(\mathbb {R}^{2n}\).

- A2:

-

There exist positive scalars \(c_1\) and \(c_2\) such that

$$\begin{aligned}&c_1\Vert \mathbf {y}\Vert ^2 \le \mathbf {y}^H \mathbf {S}^{(k)} \mathbf {y} \le c_2 \Vert \mathbf {y}\Vert ^2, \quad \forall \mathbf {y}\in \mathbb {R}^{2n}, \quad k=0,1,\ldots \end{aligned}$$

The key convergence result is provided in Proposition 2 of [7] which is restated here for the shake of completeness.

Proposition 2.3

[7, Proposition 2] Let \(\{\mathbf {y}^{(k)}\}\) be a sequence generated by iteration (9) where \(\mathbf {S}^{(k)}\) is a positive definite symmetric matrix which is diagonal with respect to \(\mathcal {A}^{(k)}\) and \(\alpha ^k\) is computed by the Armijo rule along the projection arc. Under assumptions A1 and A2 above, every limit point of a sequence \(\{\mathbf {y}^{(k)}\}\) is a critical point with respect to problem (3).

Since the objective \(\mathcal {F}\) of (3) is twice continuously differentiable, it satisfies assumption A1.

The inverse Hessian approximation \(\mathbf {M}_\tau \) is a symmetric positive definite matrix (Proposition 2.2) and hence, the scaling matrix \(\mathbf {S}^{(k)}\) defined by (10) is a positive definite symmetric matrix which is diagonal with respect to \(\mathcal {A}^{(k)}\). Therefore, the global convergence of the MNP method is guaranteed if \(\mathbf {S}^{(k)}\) verifies assumption A2.

Proposition 2.4

Given a positive parameter \(\tau >0\), there exist two positive scalars \(c_1^\tau \) and \(c_2^\tau \) such that

Proof

Since \(\mathbf {M}_\tau \) is positive definite, then

where \(\sigma _1^\tau \) and \(\sigma _{2n}^\tau \) are the largest and smallest eigenvalue of \(\mathbf {M}_\tau \), respectively. We have

From (13) it follows that

Moreover we have

Hence, we obtain

and

The thesis immediately follows by setting

Propositions 2.3 and 2.4 ensure the global convergence of the MNP method.

2.5 Implementation of the Search Direction Computation

The computation of the search direction \(\mathbf {p}^{(k)}\) requires the multiplication of a vector by \(\mathbf {M}_\tau \). Let \(\begin{bmatrix}\mathbf {z} \\ \mathbf {w}\end{bmatrix}\in \mathbb {R}^{2n}\) be a given vector, then it immediately follows that

In many image restoration applications, the blurring matrix \(\mathbf {A}\) is severely ill-conditioned and computing \((\mathbf {A}^H\mathbf {A})^{-1}\mathbf {z}\) requires a regularization strategy. Therefore, in our implementation, a Tikhonov-like technique is employed in the search direction computation by approximating the matrix–vector product (14) with

where \(\gamma \) is a positive parameter. The inversion of \(\mathbf {A}^H\mathbf {A}+\gamma \mathbf {I}\) can be efficiently performed in the Fourier space with computational complexity of two Fast Fourier Transforms as follows. In fact, assuming periodic boundary conditions, \(\mathbf {A}\) can be factorized as

where \(\mathbf {U}\) is the two dimensional unitary Discrete Fourier Transform (DFT) matrix and \(\mathbf {D}\) is the diagonal matrix containing the eigenvalues of \(\mathbf {A}\). Thus

The products involving \(\mathbf {U}\) and \(\mathbf {U}^*\) can be performed by using the FFT algorithm at the cost of \(O(n\log _2n)\) operations while the inversion of the diagonal matrix \((|\mathbf {D}|^2+\gamma \mathbf {I})\) has the cost \(O(n)\).

3 Numerical Results

In this section, we present the numerical results of several image restoration test problems. The numerical experiments aim at illustrating the performance of MNP compared with several state-of-the-art methods as SALSA [1], SPARSA [56], FISTA [4], NESTA [5], CGIST [26] and the Split Bregman method [25, 57]. In our comparative study, we also consider first and second-order methods solving, as MNP, the quadratic program (3) such as the nonmonotonic version of GPSR using the Barzilai Borwein technique for the step-length selection [22], the original Two-Metric Projection (TMP) method of Gafni and Bertsekas [8, 24, 45] employing the scaling (12) and a modified Newton projection method obtained by regularizing the full diagonal of the Hessian matrix (5). This last method, which will be indicated as MNP2, requires the inversion of the Hessian approximation:

It can be prove (the proof is similar to that of Proposition 2.1) that the inverse of \(\mathbf {H}_\tau \) is defined as

Formula (16) indicates that, in MNP2, the computation of the search direction requires more computational effort than in MNP.

Finally, MNP has also been compared with the OWLQN method of Andrew and Gao [3] which is probably one of the most effective method proposed for general large-scale \(\ell _1\)-regularized learning.

3.1 Overall Assessment of the Considered Methods

The Matlab source code of the considered methods, when made publicly available by the authors, has been used in the numerical experiments. The OWLQN method is coded, by the authors, in C; a Matlab version, following the instruction provided in [3], has been implemented.

In TMP, the linear system for the search direction computation has been solved by the Conjugate Gradient method. The relative residual tolerance has been fixed at 0.01 and a maximum number of 100 iterations has been allowed. We remark that lower values for the CG relative tolerance produce restorations of worse quality since the Hessian matrix is ill-conditioned.

The numerical experiments have been executed on a Sun Fire V40z server consisting of four 2.4 GHz AMD Opteron 850 processors with 16 GB RAM using Matlab 7.10 (Release R2010a).

For all the considered methods, the initial iterate \(\mathbf {x}^{(0)}\) has been chosen to be the zero image whose pixel values are all equal to zero. The regularization parameter \(\lambda \) of (2) has been heuristically chosen.

MNP depends on the \(\tau \) and \(\gamma \) parameters. After a wide experimentation, we heuristically found that good values for these parameters are

These values have been used in all the presented numerical experiments. It is worth remarking that the values (17) for \(\tau \) and \(\gamma \) are not optimal for each experiments and that the numerical results could be further improved. However, with this parameter setting, the MNP algorithm only depends on the regularization parameter \(\lambda \). We also observe that the SALSA method depends on a penalty parameter \(\mu \) [1] and that the Split Bregman method depends on a “splitting” regularization coefficient \(\nu \) [25, 57]. In all the presented experiments, the values of these parameters have been handtuned for the best mean squared error reduction. The default parameters of the other methods, suggested by the authors have been chosen.

The termination criterion of all the considered methods is based on the relative change in the objective function at the last step. In particular, the methods iteration is terminated when

where \(tol_\phi \) is a small positive parameter. The selection of a fair stopping criterium in image deblurring application is a critical issue. In our numerical experiments, this criterium, also used in [5, 56] appears to provide sufficiently accurate solutions while avoiding excessive computation costs. In general, criterion (18) may not always reliably indicate progress towards optimality, although we did not observe this in our experiments. In such cases, a more appropriate stopping criterion would be based on the norm of the projected gradient or on the duality gap.

A maximum number of 500 iterations has been allowed for each method.

The quality of the restorations provided by the compared methods has been measured by using the Peak Signal-to-Noise Ratio (PSNR) values.

All pixels of the original images described in the following experiments have been first scaled into the range between 0 and 1.

3.2 Experiment 1: The Satellite Image

In the first experiment, the famous \(256\times 256\) satellite image has been considered. The satellite image is a good test image for \(\ell _1\)-based image restoration because it has many pixels with value equal to zero. The observed image of Fig. 1 has been generated by convolving the original image, also shown in Fig. 1, with a Gaussian PSF with variance equal to 2, obtained with the code psfGauss from [33], and then by adding Gaussian noise with noise level equal to \(5\cdot 10^{-3}\). (The noise level \(\text {NL}\) is defined as \(\text {NL}:={\Vert \varvec{\eta }\Vert }\big /{\Vert \mathbf {A}\mathbf {x}_\text {original}\Vert }\) where \(\mathbf {x}_\text {original}\) is the original image.) In Fig. 1 and following, the satellite image intensities are displayed in “negative gray-scale”.

For this test problem, the \(\mu \) and \(\nu \) parameters of the SALSA and Split Bregman methods have been set to \(\mu =5\lambda \) and \(\nu =0.025\lambda \), respectively.

Table 1 reports the PSNR values, the objective values, the CPU times in seconds, the number of performed iterations and the percentage of negative pixel values in the restored images. All the results are averaged over ten runs of each method. The numerical results given in Table 1 have been obtained by using the stopping tolerance values \(tol_\phi =10^{-3}\) and \(tol_\phi =10^{-5}\). Smaller values of \(tol_\phi \) do not improve the visual quality of the restored images even if they produce more accurate solutions to the optimization problem (2). The information in Table 1 is summarized in Fig. 2 where the PSNR values versus time are plotted for all the considered methods.

The results in Table 1 and Fig. 2 show that MNP is able to provide good quality restorations at low computational effort. They also indicate that the inversion of the modified Hessian adopted in MNP2 requires more computational effort.

In Fig. 3, the performance of MNP is compared to the performance of the first-order and second order methods (NESTA has been omitted from these comparisons because of its high computational time, see Table 1). Since PSNR is inversely proportionally to the Mean Squared Error (MSE), Fig. 3 illustrates, in a semi-logarithmic scale, the MSE behavior and the decreasing of the objective function versus time. For the sake of readability, all the methods are not represented in the same picture. The convergence rates of the considered second-order methods (MNP, MNP2, TMP and OWLQN) have also been compared. For these methods, Fig. 4 shows, in a semi-logarithmic scale, the behavior of the gradient norm values versus time; from the graphs it is evident that MNP, MNP2 and TMP decrease the gradient norm slower than OWLQN. As expected, the scaling of the gradient direction employed by MNP and MNP2 reduces the local convergence rate; moreover, in TMP, the use of an iterative solver for the search direction computation leads to slower convergence rate.

Figure 5 compares the image obtained by MNP at \(tol_\phi =10^{-3}\) with those provided by SALSA, Split Bregman, CGIST, MNP2 and OWLQN at \(tol_\phi =10^{-5}\). (For these methods, the images obtained at \(tol_\phi =10^{-3}\) are not shown since their visual quality is clearly poor.) In the Bregman image, for a better visualization, the pixels with negative values image has been set to zero. The images restored by SPARSA and FISTA are not shown because they are practically indistinguishable from those obtained by SALSA and MNP. The visual quality of the GPSR, NESTA and TMP images is inferior and therefore these images are not displayed (see also their PSNR values in Table 1). Observe that the MNP image is visually comparable to the SALSA image while the CGIST image has an inferior visual quality even if its PSNR value is comparable to that of SALSA. Moreover, the MNP2 and OWLQN images are not comparable, in terms of visual quality, to the MNP image; in particular, the MNP2 image seems to be too smooth.

The restored images obtained by SALSA, NESTA and the Split Bregman methods have pixels with negative entries. They are displayed in Fig. 6 where the black pixels correspond to nonnegative pixels values of the reconstructions.

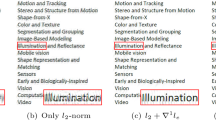

Finally, in order to assess the performance of the image restoration criterium (2), we have compared the \(\ell _1\)-norm regularizer with the \(\ell _2\)-norm and the Total Variation (TV) regularizers, which are widely-used in image restoration. The Lagged Diffusivity Fixed Point method [54] has been considered for the solution of the TV-regularized least squares problem. The \(\ell _2\)-regularized least squares problem has been solved by inverting, in the Fourier space, the linear system of its first order conditions. The MNP method is used for the solution of (2). Table 2 reports the PSNR values and the percentage of negative pixels; Fig. 7 displays the restored images. The superiority of the \(\ell _1\)-norm regularized image is evident both in terms of PSNR values and visual quality improvement. In fact, edges are preserved by the restoration model (2) without any staircasing or blurring effects which are respectively evident in the TV-regularized and \(\ell _2\)-norm regularized images.

3.3 Experiment 2: The Flintstones Image

In the second experiment, the \(512\times 512\) Flintstones image (Fig. 8) has been used. This image is a good test image since has a nice mixture of detail and flat regions. The original Flintstones image has been degraded by two blurring operators, shown in Fig. 8, and by adding varying amounts of Gaussian noise.

In this experiment, MNP has been compared to SALSA, Split Bregman, FISTA, MNP2 and OWLQN. The reasons why we limit our comparative study to these methods are the following. From experiment 1, it is evident that SALSA and FISTA are able to achieve good quality results in little time. Moreover, Split Bregman and OWLQN have been considered because they are well-known methods for general \(\ell _1\) minimization not only proposed for image restoration applications. Finally, MNP2 has been considered because closely-related to MNP.

We have fixed the values \(\mu =2.5\lambda \) and \(\nu =\lambda \) for the SALSA and Split Bregman parameter. The numerical results are summarized in Table 3 where the level of the added noise (first column), the PSNR values, the objective values and the CPU times in seconds are reported for each blurring operator. The reported numerical values are the average over 10 run of each experiment and have been obtained using the tolerance values \(tol_\phi =10^{-3}\) since smaller values of \(tol_\phi \) do not improve the visual quality of the restored images. Figure 9 depicts the MNP restorations from the degraded images with highest noise level (NL=0.01). They asses the ability of the image restoration model (2) to remove blur and noise from the degraded images.

3.4 Experiment 3

This last experiment is based on four famous test images with different features: the \(256\times 256\) Text image, the \(358\times 358\) Moon image, the \(364\times 364\) Concord image and the \(490\times 490\) Spine image (see Fig. 10). These images are example images from the Matlab distribution. They have been corrupted by the blurring operators depicted in Fig. 8 and then Gaussian noise has been added with the same noise level values used in experiment 2. For each noise level, 10 Gaussian noise vectors have been obtained with different noise realizations. This amounts to 100 experiments for each image and to 400 experiments in total.

Also in this experiment, MNP has been compared to SALSA, Split Bregman, FISTA, MNP2 and OWLQN. The numerical results have been obtained by using the tolerance value \(tol_\phi =10^{-3}\). The \(\mu \) and \(\nu \) parameters of the SALSA and Split Bregman methods have been set to the same values of the previous experiment.

Let \(\text {PSNR}_\textsc {method}\) denote the PSNR value given by a method among those considered (MNP, SALSA, Split Bregman, FISTA, MNP2 and OWLQN) and let \({\textsc {Time}}_\textsc {method}\) denote the corresponding CPU time, in seconds. The performance of the methods has been compared in terms of percentage of experiments producing a reconstructed image such that

and

where, for each experiment, \(\text {PSNR}_\textsc {max}\) is the maximum PSNR value and \({\textsc {Time}}_\textsc {min}\) is the minimum time. For each image and for the total 400 experiments, the corresponding percentage values are reported in Tables 4 and 5. Figure 10 depicts the MNP restorations from the images degraded by the \(h1\) blurring operator and Gaussian noise with NL=0.005.

4 Conclusions

This paper describes a new approach to the solution of \(\ell _1\)-regularized least squares problems whose matrix is supposed to be overdetermined and full-rank. This approach solves the nonnegatively constrained quadratic programming reformulation of the original least squares problem by a modified Newton projection method where the Hessian matrix is approximated so that it can be efficiently inverted in the Fourier space. The developed MNP method favors nonnegative solutions of the \(\ell _1\)-regularized least squares problem without explicitly imposing any constraints in the optimization problem. Thus, MNP can also be applied to image restoration problems whose solution may have negative components as in compressed sensing when \(\mathbf {x}\) represents the coefficient vector of the image under some basis. A comparative study with some state-of-the-art methods has been performed in order to evaluate the potential of the described approach. The numerical results show that MNP is competitive with the considered methods in terms of PSNR values while SALSA is often the fastest method.

References

Afonso, M.V., Bioucas-Dias, J.M., Figueiredo, M.A.T.: Fast image recovery using variable splitting and constrained optimization. IEEE Trans. Image Process. 19, 2345–2356 (2010)

Afonso, M.V., Bioucas-Dias, J.M., Figueiredo, M.A.T.: An augmented Lagrangian approach to the constrained optimization formulation of imaging inverse problems. IEEE Trans. Image Process. 20(3), 681–695 (2011)

Andrew, G., Gao, J.F.: Scalable training of l1-regularized log-linear models. In: Proceedings of the 24th International Conference on Machine Learning (2007)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Becker, S., Bobin, J., Candès, E.J.: NESTA: a fast and accurate first-order method for sparse recovery. SIAM J. Imaging Sci. 4, 1–39 (2011)

Bertero, M., Boccacci, P.: Introduction to Inverse Problems in Imaging. IOP Publishing, Bristol (1998)

Bertsekas, D.: Constrained Optimization and Lagrange Multiplier Methods. Academic Press, New York (1982)

Bertsekas, D.: Projected Newton methods for optimization problem with simple constraints. SIAM J. Control Optim. 20(2), 221–245 (1982)

Bertsekas, D.: Nonlinear Programming, 2nd edn. Athena Scientific, Belmont (1999)

Bioucas-Dias, J., Figueiredo, M.: A new twist: two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans. Image Process. 16(12), 29923004 (2007)

Bloomfield, P., Steiger, W.: Least Absolute Deviations: Theory, Applications, and Algorithms. Progress in Probability and Statistics. Birkhäuser, Boston (1983)

Bonettini, S., Landi, G., Loli Piccolomini, E., Zanni, L.: Scaling techniques for gradient projection-type methods in astronomical image deblurring. Int. J. Comput. Math. 90(1), 9–29 (2013)

Cai, Y., Sun, Y., Cheng, Y., Li, J., Goodison, S.: Fast implementation of l1 regularized learning algorithms using gradient descent methods. In: Proceedings of the 10th SIAM International Conference on Data Mining (SDM), pp. 862–871 (2010)

Candès, E., Romberg, J.: Quantitative robust uncertainty principles and optimally sparse decompositions. Found. Comput. Math. 6, 227–254 (2006)

Candès, E., Romberg, J.: Sparsity and incoherence in compressive sampling. Invest. Probl. 23, 969–985 (2007)

Candès, E., Tao, T.: Near-optimal signal recovery from random projections: universal encoding strategies? IEEE Trans. Inf. Theory 52(12), 5406–5425 (2006)

Candès, E., Romberg, J., Tao, T.: Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 59(8), 12071223 (2005)

Candès, E., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52(2), 489509 (2006)

Chen, S., Donoho, D., Saunders, M.: Atomic decomposition by basis pursuit. SIAM J. Sci. Comput. 20, 33–61 (1998)

Daubechies, I., De Friese, M., De Mol, C.: An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 57, 14131457 (2004)

Donoho, D.: Compressed sensing. IEEE Trans. Inf. Theory 52(4), 12891306 (2006)

Figueiredo, M.A.T., Nowak, R.D., Wright, S.J.: Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 1, 586–597 (2007)

Fu, H., Ng, M.K., Nikolova, M., Barlow, J.L.: Efficient minimization methods of mixed l2-l1 and l1-l1 norms for image restoration. SIAM J. Sci. Comput. 27(6), 1881–1902 (2006)

Gafni, E.M., Bertsekas, D.P.: Two-metric projection methods for constrained optimization. SIAM J. Control. Optim. 22, 936–964 (1984)

Goldstein, T., Osher, S.: The split Bregman method for l1 regularized problems. SIAM J. Imag. Sci. 2(2), 323–343 (2009)

Goldstein, T., Setzer, S.: High-order methods for basis pursuit. Technical Report CAM report 10–41, UCLA (2010)

Gong, P., Zhang, C.: A fast dual projected Newton method for l1-regularized least squares. In: Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence, IJCAI’11, vol. 2, pp. 1275–1280 (2011)

Guerquin-Kern, M., Baritaux, J.-C., Unser, M.: Efficient image reconstruction under sparsity constraints with application to MRI and bioluminescence tomography. In ICASSP, IEEE, pp. 5760–5763 (2011)

Guo, X., Li, F., Ng, M.K.: A fast l1-TV algorithm for image restoration. SIAM J. Sci. Comput. 31(3), 2322–2341 (2009)

Hager, W.W., Phan, D.T., Zhang, H.: Gradient-based methods for sparse recovery. SIAM J. Imaging Sci. 4(1), 146–165 (2011)

Hale, E.T., Yin, W., Zhang, Y.: A fixed-point continuation method for l1-regularized minimization with applications to compressed sensing. Technical Report CAAM TR07-07, Rice University, Houston, TX (2007)

Hale, E.T., Yin, W., Zhang, Y.: Fixed-point continuation for l1-minimization: methodology and convergence. SIAM J. Optim. 19, 1107–1130 (2008)

Hansen, P.C., Nagy, J., O’Leary, D.P.: Deblurring Images. Matrices, Spectra and Filtering. SIAM, Philadelphia (2006)

Kim, J., Park, H.: Fast active-set-type algorithms for l1-regularized linear regression. In: Proceedings of the AISTAT, pp. 397–404 (2010)

Kim, S.J., Koh, K., Lustig, M., Boyd, S., Gorinevsky, D.: An interior-point method for large-scale l1-regularized least squares. IEEE J. Sel. Top. Signal Process. 1, 606–617 (2007)

Kuo, S.-S., Mammone, R.J.: Image restoration by convex projections using adaptive constraints and the l1 norm. Trans. Sig. Proc. 40(1), 159 (1992)

Landi, G., Loli Piccolomini, E.: Quasi-Newton projection methods and the discrepancy principle in image restoration. Appl. Math. Comput. 218(5), 2091–2107 (2011)

Landi, G., Loli Piccolomini, E.: An efficient method for nonnegatively constrained total variation-based denoising of medical images corrupted by poisson noise. Comput. Med. Imaging Graph. 36(1), 38–46 (2012)

Landi, G., Loli Piccolomini, E.: An improved Newton projection method for nonnegative deblurring of Poisson-corrupted images with Tikhonov regularization. Numer. Algorithms 60(1), 169–188 (2012)

Landi, G., Loli Piccolomini, E.: NPTool: a Matlab software for nonnegative image restoration with Newton projection methods. Numer. Algorithms 62(3), 487–504 (2013)

Lavrentiev, M.M.: Some Improperly Posed Problems of Mathematical Physics. Springer, New York (1967)

Loris, I., Bertero, M., De Mol, C., Zanella, R., Zanni, L.: Accelerating gradient projection methods for l1-constrained signal recovery by steplength selection rules. Appl. Comput. Harmon. Anal. 27(2), 247–254 (2009)

Nikolova, M.: Minimizers of cost-functions involving nonsmooth data-fidelity terms. Application to the processing of outliers. SIAM J. Numer. Anal. 40, 965994 (2002)

Schmidt, M.: Graphical model structure learning with L1-regularization. Ph.D. thesis, University of British Columbia (2010)

Schmidt, M., Fung, G., Rosalesm, R.: Fast optimization methods for l1 regularization: a comparative study and two new approaches. In: Proceedings of the 18th European Conference on Machine Learning, ECML ’07, Springer, pp. 286–297 (2007)

Schmidt, M., Fung, G., Rosales, R.: Optimization methods for l1-regularization. Technical Report TR-2009-19, UBC (2009)

Schmidt, M., Kim, D., Sra, S.: Optimization for Machine Learning. Projected Newton-type Methods in Machine Learning. MIT Press, Cambridge (2011)

Tautenhahn, U.: On the method of Lavrentiev regularization for nonlinear illposed problems. Invest. Probl. 18, 191–207 (2002)

Tibshirani, R.: Regression shrinkage and selection via the Lasso. J. R. Stat. Soc. Ser. B 58(1), 267288 (1996)

Tropp, J.: Just relax: convex programming methods for identifying sparse signals in noise. IEEE Trans. Inf. Theory 52(3), 10301051 (2006)

Tikhonov, A.N., Arsenin, V.Y.: Solutions of Ill-Posed Problems. Wiley, New York (1977)

Tibshirani, R., Hastie, T., Friedman, J.: The Elements of Statistical Learning. Springer Series in Statistics. Springer, New York (2001)

van den Berg, E., Friedlander, M.P.: Probing the Pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 31, 890–912 (2008)

Vogel, C.R., Oman, M.E.: Iterative methods for total variation denoising. SIAM J. Sci. Comput. 17, 227–238 (1996)

Vogel, C.R.: Computational Methods for Inverse Problems. SIAM, Philadelphia (2002)

Wright, S., Nowak, R., Figueiredo, M.: Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57(7), 24792493 (2009)

Yin, W., Osher, S., Goldfarb, D., Darbon, J.: Bregman iterative algorithms for l1-minimization with applications to compressed sensing. SIAM J. Imaging Sci. 1, 143–168 (2008)

Zhang, J.J., Morini, B.: Solving regularized linear least-squares problems by alternating direction methods with applications to image restoration. ETNA 40, 356–372 (2013)

Acknowledgments

The author is grateful to the two anonymous referees for many helpful and perceptive suggestions.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Landi, G. A Modified Newton Projection Method for \(\ell _1\)-Regularized Least Squares Image Deblurring. J Math Imaging Vis 51, 195–208 (2015). https://doi.org/10.1007/s10851-014-0514-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10851-014-0514-3