Abstract

This mixed methods study explores EFL students’ experiences and perceptions as they learn to write a composition with ChatGPT’s support in a classroom instructional context. Students’ perceptions are explored in terms of their motivation to learn about ChatGPT, cognitive load and satisfaction with the learning process. In a workshop format, twenty-one Hong Kong secondary school students were introduced to ChatGPT, learned prompt engineering skills, and attempted a writing task of up to 500 words in English with ChatGPT’s support. Data collected included a pre-workshop motivation questionnaire, think-aloud protocols during the writing task, and a post-workshop questionnaire on motivation, cognitive load, and satisfaction. Results revealed no significant difference in students’ motivation before and after the workshop, although mean motivation scores increased slightly. Students reported high cognitive load during the writing task, especially during prompt engineering. However, students expressed high satisfaction with the workshop overall. The findings indicate ChatGPT’s potential to engage EFL students in the writing classroom, but also that its use can impose heavy cognitive demands. To ensure that ChatGPT use supports EFL writing without overwhelming students, educators should consider an iterative design process for activities and instructional materials and carefully scaffold instruction, especially for prompt engineering.

1 Introduction

Generative artificial intelligence (AI) language models (LMs) such as OpenAI’s GPT-2, GPT-3 and GPT-4 have captivated educators’ interest because they can generate large chunks of coherent text that readers struggle to distinguish from human writing (Brown et al., 2020) and can proficiently perform a variety of natural language processing tasks when instructed or prompted (Ouyang et al., 2022). Furthermore, ChatGPT has popularized interaction with LMs through a chatbot interface, that is, a conversational user interface that enables people to engage in meaningful verbal or text-based exchanges with an LM (Kim et al., 2022). Because ChatGPT has captured the popular imagination, its name is often used as a catchall term for chatbots built on transformer-based LMs (Vaswani et al., 2017).

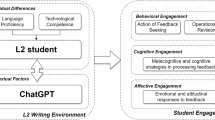

ChatGPT enables students to write with a machine-in-the-loop, that is, a collaborative process in which a student and a chatbot complete a writing task together. As defined by Clark et al. (2018) and illustrated in Fig. 1, the process is iterative. First, the student prompts ChatGPT, that is, delivers a set of instructions to guide it, such as a question, an imperative statement or an excerpt from a text. Based on its interpretation of the prompt, ChatGPT generates output. The student then evaluates the output, accepting, rejecting or modifying it for integration into the written composition. The cycle repeats until the writing task is complete, with the student retaining full control over the composition. When machine-in-the-loop writing was previously applied to creative writing, researchers found that writers appreciated the machine’s fresh ideas, which helped them overcome writer’s block while maintaining ownership of their work. However, the quality of the written compositions did not necessarily improve with the machine’s suggestions (Calderwood et al., 2020; Clark et al., 2018).
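The iterative cycle described above can be summarized as a short loop. The Python sketch below is illustrative only and is not software used in this study: `write_with_machine_in_the_loop` and the student and chatbot callbacks are hypothetical stand-ins, and the 500-word stopping rule is borrowed from the workshop task described in the Methods. On each pass the student prompts the chatbot, decides whether to accept, modify or reject the output, and only the text the student keeps enters the composition.

```python
from typing import Callable, List, Optional

# Hypothetical interfaces (assumptions, not the study's tooling):
#   next_prompt(draft) -> the student's next prompt, or None when done
#   chatbot(prompt)    -> the chatbot's output text
#   evaluate(output)   -> text to integrate (accepted or modified), or None to reject
NextPrompt = Callable[[List[str]], Optional[str]]
Chatbot = Callable[[str], str]
Evaluate = Callable[[str], Optional[str]]


def write_with_machine_in_the_loop(
    next_prompt: NextPrompt,
    chatbot: Chatbot,
    evaluate: Evaluate,
    word_limit: int = 500,
    max_turns: int = 50,
) -> List[str]:
    """Run the prompt -> output -> evaluate cycle until the student stops,
    the draft reaches the word limit, or max_turns is used up."""
    draft: List[str] = []
    for _ in range(max_turns):                # safeguard against an endless loop
        prompt = next_prompt(draft)           # 1. student prompts, given the draft so far
        if prompt is None:                    #    student judges the task complete
            break
        output = chatbot(prompt)              # 2. chatbot generates output
        kept = evaluate(output)               # 3. student accepts, modifies, or rejects
        if kept is not None:
            draft.append(kept)                # 4. only kept text enters the composition
        if sum(len(part.split()) for part in draft) >= word_limit:
            break                             #    word limit reached
    return draft


if __name__ == "__main__":
    queue = ["school uniforms", "reject this idea", "homework"]

    def student_prompt(draft: List[str]) -> Optional[str]:
        return queue.pop(0) if queue else None

    def toy_chatbot(prompt: str) -> str:
        return "Suggestion: " + prompt

    def student_review(output: str) -> Optional[str]:
        if "reject" in output:
            return None                                # student rejects this output
        return output.replace("Suggestion: ", "")      # student modifies it

    print(write_with_machine_in_the_loop(student_prompt, toy_chatbot, student_review))
```

Passing the current draft to `next_prompt` reflects the point that each new prompt depends on what the student has already written; the `max_turns` cap is only a guard for the sketch.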

Notwithstanding ChatGPT’s potential benefits, the integration of ChatGPT into the English as a foreign language (EFL) writing classroom remains largely unexplored in terms of students' experiences and perceptions. This study aims to fill this research gap in the context of Hong Kong secondary school students learning to write an EFL composition with ChatGPT’s support. The objective is to explore how students perceive their experience of learning this innovative writing approach in terms of their motivation to learn about ChatGPT, cognitive load, and satisfaction with the learning process. These aspects are critical because they can directly influence students' learning behaviors, engagement, and ultimately, their writing outcomes. Understanding them can also provide valuable insights for educators, informing instructional approaches for integrating ChatGPT into the EFL writing classroom. The overarching question guiding this research is: How do EFL students perceive learning to write with ChatGPT in a classroom context?

1.1 Potential of ChatGPT in the EFL writing classroom

In the EFL writing classroom, students can have difficulty retrieving intended English words and take time to translate ideas from their first language into English (Gayed et al., 2022). Students may also struggle to generate ideas independently (Woo et al., 2023) and may not engage sufficiently or effectively with peer feedback during the writing process (Zhang & Hyland, 2023), even though collaborative writing is an effective pedagogical practice (Li & Zhang, 2023) and collaboration can improve the quality of students’ writing (Hsu, 2023).

Implementing ChatGPT in an EFL writing classroom may create learning opportunities for students, because a chatbot can act as an ideal collaborative partner for EFL students (Guo et al., 2022), and ChatGPT is highly capable at natural language tasks such as brainstorming ideas, generating texts, answering questions, rewriting texts and summarizing texts (Ouyang et al., 2022). Conceptual studies have explored the use of ChatGPT in EFL writing classrooms by proposing hypothetical use cases. For instance, Hwang and Chen (2023) suggested that students in EFL courses could use ChatGPT as a proofreader for academic writing, and Su et al. (2023) explored the potential of ChatGPT to assist students with preparing outlines, revising content, proofreading, and reflecting.

However, some EFL teachers fear that students may become dependent on ChatGPT and its dubious suggestions (Ulla et al., 2023). ChatGPT could also reinforce biased ideas (Mohamed, 2023). Additionally, students could use ChatGPT to complete writing assignments with little effort or input of their own, undermining their acquisition of English and writing skills (Gayed et al., 2022) as well as their critical and creative thinking (Barrot, 2023). Empirical studies featuring actual use cases of ChatGPT support in the EFL writing classroom show mixed results. For instance, Cao and Zhong (2023) compared ChatGPT feedback, EFL teacher feedback and student feedback for improving 45 university students’ written translation performance and found that ChatGPT feedback was less effective than the other feedback types. On the other hand, Athanassopoulos et al. (2023) examined ChatGPT’s effectiveness as a vocabulary and grammar feedback tool for the writing of eight 15-year-old migrants and refugees. After completing a writing task and receiving improved versions of their writing generated by ChatGPT, the students increased the total number of words, the number of unique words and the number of words per sentence when writing a similar task.

1.2 Genre writing and prompt engineering as genre in the EFL classroom

How teachers should approach the instruction of writing with ChatGPT in an EFL classroom is a complex issue. From an EFL teaching and learning perspective, an explicit instructional approach to EFL writing appears necessary if the intentional implementation of ChatGPT is to benefit rather than hinder students’ acquisition of knowledge and skills. In this regard, although process writing has been a popular, inductive writing strategy, Hyland (2007) has argued that it has limited value for EFL learners who lack access to the cultural knowledge that facilitates effective, independent writing. Instead, this study approaches EFL students’ acquisition of writing through genre, which emphasizes communicating effectively through different types of texts and their specific conventions, language features, and structures (Hyland, 2019). As illustrated in Fig. 2, a genre approach to writing instruction is explicit, including stages such as the teacher modeling a genre, joint construction of a text in the genre, and the student’s independent construction of a text in the genre. Like a conventional EFL teacher, ChatGPT could support students at each stage by, for example, generating model texts of a genre, identifying the genre’s linguistic features, collaboratively writing sections of a text with a student, and suggesting vocabulary, grammar and outlines and providing feedback during a student’s independent construction of a text.

When students write with a machine-in-the-loop, the effect of ChatGPT on their knowledge and skill development in genre writing depends on how well they give instructions, or prompts, to ChatGPT. Proficient prompt crafting, or prompt engineering, can significantly enhance the quality of ChatGPT’s generated output and the overall effectiveness of the interaction (Reynolds & McDonell, 2021). Because constructing appropriate prompts is not straightforward for non-technical users (Zamfirescu-Pereira et al., 2023) and ChatGPT prompts are an emergent genre, scholars have proposed example prompts for hypothetical ChatGPT use cases (Hwang & Chen, 2023; Kohnke et al., 2023; Su et al., 2023).

The implication for the EFL writing classroom is that ChatGPT’s capability to support students at different stages of genre writing depends on teachers developing not only students’ knowledge and skills of the target text type but also their prompt engineering knowledge and skills. Furthermore, the authors anticipate that prompt engineering instruction could constitute a significant part of students’ learning to write with ChatGPT. For instance, teachers could orient students towards what AI is, what a chatbot is, ChatGPT’s capabilities, exemplary prompts that unlock those capabilities, and the vocabulary and grammar students need to construct such prompts independently. Given the iterative nature of the machine-in-the-loop writing process, students may spend considerable time crafting prompts. Thus, exploring EFL students' perceptions during the prompt engineering phase of genre writing could inform more effective instruction to develop students’ prompt engineering knowledge and skills.

In summary, previous research has suggested that ChatGPT has the potential to support EFL students’ writing, yet realizing that potential in the classroom may require not only effective writing instruction but also effective prompt engineering instruction. Furthermore, ChatGPT may benefit EFL students’ genre writing if it does not replace the teacher but is instead used alongside teacher instruction to help students ethically and effectively develop writing skills (Shaikh et al., 2023). Empirical research on EFL student perceptions is one means of evaluating students’ experiences of learning to write with ChatGPT in a classroom context.

1.3 Student perceptions about learning to write with ChatGPT

While ChatGPT shows potential to support students’ writing, it is crucial to understand students’ perceptions about learning to write with ChatGPT in their classroom context. Student perceptions encompass students' subjective assessment of their learning environment (e.g., curriculum; instructional methods and materials; and other services and contextual factors) (Biggs, 1999) and can significantly influence their learning behaviors, engagement, and ultimately academic achievement. This is because positive perceptions may foster a deep approach to learning, whereas negative perceptions may encourage a surface approach. The following literature review examines three aspects of student perceptions that are often used to evaluate learning environments.

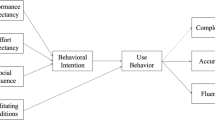

1.3.1 Motivation to learn

Motivation to learn refers to students' desire and willingness to engage with learning materials and activities (Keller, 1987). It is an important factor influencing how students approach and persist with learning tasks, and it is especially crucial in EFL writing, a challenging task that demands cognitive effort and continual practice. When students write with ChatGPT, their motivation can be influenced by the perceived usefulness and ease of use of the technology (Davis, 1989). Motivation to learn is often evaluated through questionnaire items. Hwang and Chang (2011) found that their formative assessment-based mobile learning environment improved students’ motivation to learn the target content and suggested that appropriate challenges had motivated students during the learning process. Shim et al. (2023) found that their experiential chatbot workshop was instrumental in motivating students to learn chatbot competencies. Kim and Lee (2023) found that socio-economically disadvantaged Korean middle school students were far more motivated to learn about AI than their peers who were not socio-economically disadvantaged. On the other hand, Hwang et al. (2013) found that a concept map-embedded game did not have a significant impact on students’ learning motivation compared with a digital game without a concept mapping strategy. Alternatively, Jeon (2022) adopted qualitative methods to explore how chatbots affected EFL primary students’ motivation to learn English, identifying chatbot affordances that increased, and limitations that decreased, students’ motivation to learn English through chatbots. Similarly, Chan and Hu (2023) asked Hong Kong university students open-ended questions about their willingness to use ChatGPT and found that most participants were motivated to use it, identifying several reasons.

1.3.2 Cognitive load

Cognitive load theory (Sweller, 1988) posits that people’s capacity to process information during learning is limited. Accordingly, a heavy cognitive load impedes learning, whereas a manageable level of cognitive load facilitates it. Furthermore, cognitive load is a multidimensional concept comprising two components (Paas, 1992). Mental load refers to the load imposed by task demands, and mental effort refers to the amount of cognitive capacity allocated to address those demands. Sweller et al. (1998) elaborated a cognitive architecture and proposed that when designing instruction, information should be organized and presented so as to reduce the load on working memory and increase the knowledge stored in long-term memory. In evaluating innovative educational technology approaches, researchers have measured students’ cognitive load through surveys and found, for example, that a formative assessment-based mobile learning environment could improve learning achievement with an appropriate cognitive load (Hwang & Chang, 2011) and that a concept map-embedded game improved students’ learning achievement and decreased their cognitive load (Hwang et al., 2013).

1.3.3 Satisfaction with learning

Satisfaction is a basic measure of how participants react to a program or learning process, and it can be either positive or negative. Importantly, although satisfaction with the learning process does not ensure learning, dissatisfaction may impede it (Kirkpatrick & Kirkpatrick, 2006). When designing instruction, high satisfaction can validate standards of performance for future programs. Satisfaction is often evaluated quantitatively through questionnaires. For instance, Fisher et al. (2010) found that teachers who participated in a virtual professional development program were as satisfied as teachers who participated in an in-person program, and that students were satisfied with the instruction from both groups of teachers. Shim et al. (2023) found that 91% of their students were satisfied with an experiential chatbot workshop and that no students indicated dissatisfaction. With regard to ChatGPT, Amaro et al. (2023) found that their cohort of Italian university students exhibited a high level of satisfaction during a guided interaction with ChatGPT. However, they also observed that satisfaction decreased as students became aware of ChatGPT’s ability to generate false information, particularly when this awareness arose early in the interaction. Escalante et al. (2023) conducted a study in which 43 university EFL students received writing feedback from both human tutors and ChatGPT over a six-week period; the students reported similar levels of satisfaction with the feedback from both sources. Alternatively, in a mixed-methods study by Belda-Medina and Calvo-Ferrer (2022), 176 Spanish and Polish undergraduates interacted with three AI chatbots over a four-week period. Through analysis of survey data, the researchers found gender-related differences in levels of satisfaction, and through analysis of students’ written responses to open-ended questions, they identified key factors in students’ satisfaction.

Based on the literature review, the overarching research question is operationalized into three questions, each addressing a particular aspect of student perception:

- RQ1: How does the use of ChatGPT in writing impact EFL students' motivation to learn about ChatGPT?
- RQ2: What is the cognitive load experienced by EFL students when writing with ChatGPT?
- RQ3: How satisfied are EFL students with the experience of writing with ChatGPT?

2 Methods

2.1 Context and sample

This research used a convenience sample. Twenty-one students voluntarily participated in the study. Before participating, they were informed of the study's objectives and tasks, their rights as participants, and their option to withdraw at any point during the study. This information was provided verbally and in writing, in both English and Chinese, and students were invited to raise any questions or concerns about their participation with the researchers. No students declined participation.

The participants were students from an all-girls secondary school in Hong Kong where the first author worked as an English as a Foreign Language (EFL) teacher. Compared with peers in the school’s geographic district, the school’s students have academic achievement ranging from the 44th to the 55th percentile, based on their results in the secondary school entrance exams (Lee & Chiu, 2017). The demographic information of the sample is shown in Table 1. The average age was 13.6 years. Seven students lived in public housing, indicating a lower socio-economic status background in Hong Kong. Students’ EFL writing proficiency was measured by their most recent EFL writing exam mark. As the school’s passing mark for a writing exam is 50 out of 100, the majority of students (n = 11) were mid-range writers, scoring between 40 and 60, near the pass mark.

Twelve students (60%) reported having used ChatGPT before the workshop, and eight (40%) reported not having used it. However, only five students (25%) reported having used ChatGPT to complete English language homework, suggesting that most students had no experience with ChatGPT use cases in the EFL writing classroom.

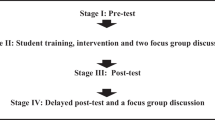

2.2 Materials and procedure

The study took place in the school’s STEM classroom on July 5, 2023 and was repeated on July 6. Six students attended on July 5 and 16 on July 6. The learning environment for writing with ChatGPT took the form of a human-AI creative writing workshop, with each session lasting 1 hour 45 minutes. Because writing with ChatGPT in the EFL classroom is novel, the authors developed the workshop activities and materials through design-based research (DBR) (Wang & Hannafin, 2005), a flexible and systematic methodology that can improve educational practice iteratively through design, development, implementation and analysis. The authors adopted an outcome-based learning design, that is, a framework for describing learning environments and learning activities (Conole & Wills, 2013). First, the authors defined the workshop’s purpose and intended learning outcomes (ILOs), that is, what students should achieve by the end of the workshop. They then designed the learning activities, that is, basic units of interaction with or among learners. Table 2 summarizes the workshop design, which comprises its (1) title, (2) purpose, (3) ILOs, (4) learning activities, and (5) materials and resources. Evaluating student perceptions allows the learning design to be improved for subsequent implementations.

In the workshop, the authors introduced students to the genre of effective written communication with chatbots before introducing the writing task that students would attempt with ChatGPT. (1) The concept of chatbots was introduced inductively by showing students a chatbot screenshot and asking, “What are you looking at?” (2) Students interacted with a chatbot and were then asked what this type of generative AI is and how to interact with it. (3) The features of chatbots, including turn-taking and memory, were introduced. (4) Principles for chatbot prompting, such as the garbage-in-garbage-out principle, were introduced by showing students a chatbot screenshot and asking, “What is a problem with this conversation?” (5) To help students take advantage of ChatGPT’s novel capabilities and obtain the desired output, the concepts of prompts and prompt engineering were defined. The authors introduced different ChatGPT use cases for writing, with example prompts based on classmates’ actual prompts and on theoretical prompts identified in a literature review. The use cases included asking ChatGPT to act as a particular role, to act as a search engine, to analyze a text input, to answer a question, to auto-complete a text input, to explain the reasoning behind its output, to paraphrase a text input, to provide additional information about its output, to summarize a text input, and to translate a text input. The authors did not introduce the use case of prompting ChatGPT to generate a complete composition that replaces human effort in writing. The instructional materials, such as the slide deck (see Supplemental Material), were delivered in English by the first author, while the first author’s colleague provided simultaneous spoken translation into Cantonese.

After the introduction to prompt engineering for ChatGPT, students began writing with ChatGPT and other state-of-the-art chatbots on school-supplied iPads loaded with the Platform for Open Exploration (POE) app. At the time of the study, the app granted free access to ChatGPT and five other chatbots (i.e., Sage, GPT-4, Claude+, Claude-instant, and Google-PaLM) that rely on commercial LMs with hundreds of billions of parameters. Figure 3 shows the POE app interface on iPad, from which students could select any of the six chatbots.

The students were given 45 minutes to attempt a writing task using ChatGPT and other POE chatbots. The task was designed both to let students demonstrate the range of writing skills and genres assessed in their EFL school curriculum and to require them to engage with ChatGPT. (1) Students were instructed to write either a feature article or a letter to the editor. Figure 4 shows the task prompts selected by the authors from the writing paper of the 2023 Hong Kong Diploma of Secondary Education (HKDSE) English Language examination, the university entrance examination that Hong Kong secondary school students take in their final year. (2) Students were instructed to write no more than 500 words in Google Docs, using their own words and words generated by POE chatbots. Students could prompt any POE chatbot in any way, as many times as necessary, and use any chatbot output. (3) Students were instructed to distinguish their own words from AI-generated words by highlighting the words from each chatbot in a specific color. Figures 5 and 6 show a completed feature article and a completed letter to the editor, respectively, following this color-coding scheme.

The research team monitored student progress as students attempted the writing task during the workshop. Students were not required to complete the task during the workshop because the students and research team had agreed on a completion deadline after the workshop.

2.3 Data collection

This mixed-methods study followed an embedded design (Creswell & Clark, 2007) in which two sets of quantitative data and one set of qualitative data were collected during the workshop (see Table 3). In sum, the quantitative data from the pre-workshop questionnaire and the qualitative data from the think-aloud protocols were collected to supplement the primary quantitative data from the post-workshop questionnaire.

2.3.1 Pre-workshop questionnaire

To collect data on students’ motivation to learn about ChatGPT before the writing task, a pre-workshop questionnaire, which also collected student background information, was developed. The learning motivation part comprised seven items rated on a six-point scale (see Appendix), adapted from a measurement tool developed by Hwang and Chang (2011) to assess fifth-grade primary school students’ motivation towards a local culture course. The original scale has been thoroughly reviewed, adopted, and adapted by researchers (e.g., Cai et al., 2014; Huang et al., 2023) in diverse contexts to evaluate students' motivation. Following their procedures, the authors modified the scale by replacing the course name with terms relevant to learning ChatGPT to ensure content validity. Furthermore, a pilot study involving 46 participants was conducted to establish the construct validity of the questionnaire. A confirmatory factor analysis (CFA) yielded favorable indices: χ²/df = 1.02, P(CMIN) = 0.422, CMIN/DF = 1.018, root mean square error of approximation (RMSEA) = 0.022, and comparative fit index (CFI) = 0.989, supporting construct validity. The reliability of the learning motivation questionnaire in this study was 0.95, indicating a high level of internal consistency. As students are taught Chinese and English literacy in school, the questionnaire was presented in both English and traditional Chinese text. Students completed the questionnaire at the workshop, before the instructional materials were delivered. The questionnaire was introduced to students verbally in English and in Cantonese, most students’ mother tongue. The research team monitored students while they completed the questionnaire and was available to answer any questions.
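For readers less familiar with these fit indices: CMIN is the label some structural equation modeling software uses for the model χ² statistic, so χ²/df and CMIN/DF express the same ratio. The Python sketch below computes this ratio, the usual point estimate of RMSEA, and CFI from a model's χ² and degrees of freedom. It is a minimal illustration of the standard formulas, not the authors' analysis code; the baseline (independence) model χ² needed for CFI is not reported in this paper, so the example values are invented. Packages can differ in small details (for example, N versus N − 1 in the RMSEA denominator).

```python
import math


def chi2_df_ratio(chi2: float, df: int) -> float:
    """Relative chi-square (reported as chi2/df or CMIN/DF)."""
    return chi2 / df


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of RMSEA: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model: float, df_model: int, chi2_base: float, df_base: int) -> float:
    """Comparative fit index relative to a baseline (independence) model:
    1 - max(chi2_m - df_m, 0) / max(chi2_b - df_b, chi2_m - df_m, 0)."""
    misfit_model = max(chi2_model - df_model, 0.0)
    misfit_base = max(chi2_base - df_base, misfit_model)
    if misfit_base == 0.0:
        return 1.0                      # neither model shows any misfit
    return 1.0 - misfit_model / misfit_base


if __name__ == "__main__":
    # Invented illustrative values; only N = 46 matches the pilot sample size.
    chi2, df, n = 15.3, 14, 46
    print(round(chi2_df_ratio(chi2, df), 3), round(rmsea(chi2, df, n), 3))
    print(round(cfi(chi2, df, chi2_base=400.0, df_base=21), 3))
```

Because RMSEA can be rewritten as sqrt(max(χ²/df − 1, 0) / (N − 1)), a χ²/df ratio close to 1 implies an RMSEA close to 0 for a given sample size.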

2.3.2 Think aloud protocols

To collect data on students’ cognitive load during the prompt engineering phase of the writing task, the authors used thinking aloud (TA), a research method in which a student verbalizes their thoughts and feelings during an activity (Ericsson & Simon, 1993). Scholars (Charters, 2003; Yoshida, 2008) have argued that think-aloud protocols provide insight into the cognitive load imposed by demanding language tasks, because such load affects working memory and, in turn, verbalization. By this reasoning, students who can speak effortlessly and fluently while thinking aloud are unlikely to be experiencing heavy cognitive load.

The authors randomly sampled nine students for the think-aloud method. Not least because of the small sample, these data were treated as supplementary to the retrospective questionnaire data collected from the larger sample. Furthermore, the authors took a pragmatic view (Cotton & Gresty, 2006) of the think-aloud protocols, actively moderating them. At the workshop, before students attempted the task, the selected students were briefed on think-aloud protocols in English and Cantonese, and the authors demonstrated a protocol. The fourth author administered the think-aloud protocols, spending six minutes with each student, video-recording the student’s iPad screen and asking the following questions whenever the student reached a specific point of interaction with a POE chatbot: (1) “What do you think about this prompt?” (visual cue: the student has the cursor in, or is typing in, the chatbot input box); (2) “What do you think about this output?” (visual cue: the chatbot has completed its output and the student is not typing); and (3) “How do you feel?” (visual cue: the student appears to have finished answering question 2). Students could answer in English, Cantonese, or both.

Of the nine think-aloud protocols video-recorded on July 5 and 6, only the five collected on July 5 had sound. These five protocols were transcribed, recording each thought, its position in the sequence of thoughts, its timestamp in the video recording, and the chatbot and prompt in use at the time.

2.3.3 Post-workshop questionnaire

A post-workshop questionnaire was the primary method for collecting data on (1) learning motivation, (2) satisfaction, and (3) cognitive load. The seven learning motivation items were the same as those in the pre-workshop questionnaire, except that in the post-workshop items the term “ChatGPT” was replaced with the phrase “ChatGPT and other POE chatbots.” For instance, item 1 in the post-workshop questionnaire was, “I think learning ChatGPT and other POE chatbots is interesting and valuable [我認為學習ChatGPT和其他POE聊天機器人很有趣且有價值].” The terms were replaced because, by the end of the task, students had been introduced to chatbots besides ChatGPT. The 14 satisfaction items were adapted from Fisher et al. (2010) (see Appendix). To ensure content validity, two experts with knowledge and expertise related to the construct of “satisfaction” were invited to evaluate the items for relevance, clarity, and comprehensiveness. Both experts confirmed the acceptability of the questionnaire, supporting its content validity. Additionally, the CFA in the pilot study showed χ²/df = 1.14, P(CMIN) = 0.21, CMIN/DF = 1.14, RMSEA = 0.07, and CFI = 0.97, supporting construct validity. The Cronbach’s alpha value for the satisfaction questionnaire was 0.98, indicating a high level of internal consistency. The eight cognitive load items, rated on a six-point Likert scale, were developed based on the measures of Paas (1992) and Sweller et al. (1998) (see Appendix).

The cognitive load questionnaire consisted of five items related to mental load and three items related to mental effort. The original questionnaire, presented in Hwang et al. (2013), was developed to assess the mental load and mental effort of sixth-grade primary school students engaged in a game-based learning activity. Since then, the scale has been extensively examined, adopted, and adapted by researchers in various learning contexts to explore students' cognitive load (e.g., Dong et al., 2020; Hsu, 2017). Following their methodological guidelines, the authors made minor adjustments, replacing the term “learning activity” with “workshop” to measure students’ cognitive load, thereby ensuring content validity. Furthermore, the CFA conducted in the pilot study yielded χ²/df = 1.08, P(CMIN) = 0.37, CMIN/DF = 1.08, RMSEA = 0.058, and CFI = 0.99, supporting construct validity. The mental load and mental effort dimensions exhibited high internal consistency, with Cronbach’s alpha values of 0.97 and 0.95, respectively. The questionnaire was presented in English and traditional Chinese text. Students completed the post-workshop questionnaire on Google Forms at the end of the workshop. Like the pre-workshop questionnaire, the post-workshop questionnaire was introduced to students verbally in English and in Cantonese, and the research team monitored students while they completed it.
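Cronbach’s alpha, reported above for each dimension, compares the sum of the item variances with the variance of respondents’ total scores: for k items, α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The Python sketch below is a minimal illustration of that formula, not the authors’ analysis code. The `SUBSCALES` mapping is a hypothetical assumption: the paper states that there are five mental load items and three mental effort items, but not their order in the questionnaire.

```python
from statistics import variance
from typing import Dict, List, Sequence

# responses[r][i]: respondent r's rating on item i (at least two respondents needed)
Responses = Sequence[Sequence[float]]


def cronbach_alpha(responses: Responses) -> float:
    """alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical column layout (0-based): items 1-5 mental load, items 6-8 mental effort.
SUBSCALES: Dict[str, List[int]] = {
    "mental_load": [0, 1, 2, 3, 4],
    "mental_effort": [5, 6, 7],
}


def subscale_alphas(
    responses: Responses, subscales: Dict[str, List[int]] = SUBSCALES
) -> Dict[str, float]:
    """Compute alpha separately on each subscale's item columns."""
    return {
        name: cronbach_alpha([[row[i] for i in cols] for row in responses])
        for name, cols in subscales.items()
    }
```

Computing alpha per subscale, rather than across all eight items, matches the way the reliabilities are reported here (one value for mental load, one for mental effort).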

2.4 Data analysis

To investigate EFL students' learning motivation, cognitive load, and satisfaction after their active participation in the study, the authors analyzed post-workshop questionnaire data, employing basic descriptive statistics, including mean, standard deviation, minimum, and maximum values.
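As a minimal sketch of these descriptive statistics, the Python example below sums each respondent’s item ratings into a scale score and then reports the mean, standard deviation, minimum, and maximum. Summing items is an assumption, though it is consistent with the motivation scores reported in the Results (a range of 21 to 42 for seven items rated 1 to 6); using the sample standard deviation (n − 1 denominator) is likewise an assumption. The ratings in the usage example are invented.

```python
from statistics import mean, stdev
from typing import Dict, Sequence


def scale_score(item_ratings: Sequence[int], n_points: int = 6) -> int:
    """Sum one respondent's ratings; each rating must lie in 1..n_points."""
    if any(not 1 <= r <= n_points for r in item_ratings):
        raise ValueError("rating outside the rating scale")
    return sum(item_ratings)


def describe(scores: Sequence[float]) -> Dict[str, float]:
    """Mean, sample standard deviation (n - 1 denominator), minimum, maximum."""
    return {
        "mean": mean(scores),
        "sd": stdev(scores),          # requires at least two scores
        "min": min(scores),
        "max": max(scores),
    }


if __name__ == "__main__":
    # Invented ratings for three respondents on a seven-item, six-point scale.
    ratings = [[5, 5, 6, 4, 5, 5, 5], [6, 6, 6, 6, 6, 6, 6], [3, 3, 4, 3, 3, 3, 2]]
    scores = [scale_score(r) for r in ratings]
    print(scores, describe(scores))
```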

To further investigate EFL students’ learning motivation, the same descriptive statistics were applied to the pre-workshop questionnaire data. In addition, because the data were not normally distributed, the Wilcoxon signed-rank test was used to assess changes in students’ motivation from pre-workshop to post-workshop.
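The Wilcoxon signed-rank test ranks the absolute pre-post differences and compares the rank sum of the positive differences (W+) with its expected value under no change. The Python sketch below drops zero differences, averages ranks within ties, and uses the large-sample normal approximation with the usual tie correction and no continuity correction. Statistical packages differ in how they treat zeros, whether they apply a continuity correction, which rank sum and sign convention they report, and whether they use exact p-values for small samples, so this sketch is not guaranteed to reproduce the Z reported in the Results. The function name and example scores are hypothetical.

```python
import math


def wilcoxon_signed_rank(pre, post):
    """Two-sided Wilcoxon signed-rank test via the normal approximation.

    Returns (W+, Z, p). Zero differences are dropped, tied absolute
    differences share their average rank, and the variance includes the
    standard tie correction. No continuity correction is applied.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must be paired")
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all differences are zero")

    # Rank absolute differences (1-based), averaging ranks within ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for m in range(i, j + 1):
            ranks[order[m]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t  # contributes zero for untied values
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w_plus, z, p
```

With the hypothetical scores `pre = [1, 2, 3, 4, 5]` and `post = [2, 4, 6, 8, 10]`, all five differences are positive, so W+ = 15, Z ≈ 2.02, and p ≈ 0.043.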

To further investigate EFL students’ cognitive load, the authors analyzed students’ think-aloud protocols using descriptive statistics, namely the mean number of turns per student and the mean number of spoken words per turn, together with standard deviations and minimum and maximum values. This quantitative analysis was supplemented with representative quotes from students’ think-aloud protocols. Together, these analyses provide a fine-grained perspective on students’ cognitive load during the prompt engineering phase of writing with ChatGPT.
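The turn-level statistics described above can be sketched in Python as follows. The sketch assumes each student’s transcript is stored as a list of turns already in (or translated into) English, counts words by splitting on whitespace, and summarizes per-student averages across students. The authors’ exact counting rules are not reported, so the data layout, function names, and example transcripts are hypothetical.

```python
from statistics import mean, stdev


def turn_stats(transcripts):
    """Per-student turn counts and mean words per turn.

    transcripts maps a student ID to that student's list of turns.
    Whitespace splitting is a simplifying assumption for word counts.
    """
    per_student = {}
    for student, turns in transcripts.items():
        word_counts = [len(turn.split()) for turn in turns]
        per_student[student] = {
            "turns": len(turns),
            "words_per_turn": mean(word_counts),
        }
    return per_student


def summarize(values):
    """Mean, sample SD, minimum, and maximum across students."""
    return {"mean": mean(values), "sd": stdev(values),
            "min": min(values), "max": max(values)}


# Hypothetical transcripts for two students.
stats = turn_stats({
    "S1": ["I think this prompt is too vague", "The output is long"],
    "S2": ["Okay", "I feel confused now", "Let me try again"],
})
turn_summary = summarize([s["turns"] for s in stats.values()])
```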

3 Results

3.1 Students’ learning motivation

Twenty-one students completed the post-workshop questionnaire; their median learning motivation score was 35.00, with a range of 21 to 42. Twenty students completed the pre-workshop questionnaire; their median score was 33.50, with a range of 26 to 42. Table 4 presents the results of the Wilcoxon signed-rank test comparing the pre- and post-workshop learning motivation of the 20 EFL students. The test result (Z = 1.085, p = 0.278) indicates no significant difference in learning motivation between the pre- and post-workshop phases. Nonetheless, students’ mean motivation to engage with ChatGPT and other POE chatbots was slightly higher after the workshop (M = 34.750, SD = 6.604) than before it (M = 33.850, SD = 5.631).

To gain deeper insight into changes in students’ learning motivation, the authors visualized students’ ratings on individual items (Fig. 7). Learning motivation increased on five of the seven items. For instance, the mean scores for items 1 and 2 rose from 4.70 and 4.85, respectively, to 5.00, reflecting a stronger perception that learning about ChatGPT and other POE chatbots is interesting and valuable, along with a greater desire for further learning. Although the mean score for item 3 decreased, it remained high at 5.00. In sum, the item-level analysis partially supports the view that interacting with ChatGPT and other POE chatbots in EFL writing can increase students’ motivation to become more proficient in using chatbots.

3.2 Students’ cognitive load

Table 5 and Fig. 8 describe the post-workshop questionnaire data on EFL students’ retrospective, self-reported cognitive load during the workshop. Students reported a relatively high level of cognitive load, with six of the eight items receiving an average rating of four out of six points. For instance, students’ responses to items 2, 3, and 7 indicate that they found the workshop’s questions and tasks challenging and effortful. This analysis suggests that students found it challenging to learn to write with ChatGPT and other POE chatbots while attempting a writing task.

Analysis of five students’ think-aloud protocols during the prompt engineering phase of writing with ChatGPT provides some corroborating evidence of high cognitive load. In general, students did not speak effortlessly or freely. Figure 9 illustrates the number of spoken turns each student took during a six-minute timespan. Students took 13 turns on average, ranging from five turns (n = 1) to 17 turns (n = 2). Figure 9 also illustrates the average number of words each student spoke per turn. While Students 1A, 2A and 3A wrote ChatGPT prompts and delivered think-aloud protocols exclusively in English, Students 3A and 3B wrote ChatGPT prompts exclusively in Chinese and delivered think-aloud protocols almost exclusively in Chinese. The authors therefore translated these students’ words into English before computing descriptive statistics. Students spoke 11 words per turn on average, ranging from five words per turn (n = 1) to 25 words per turn (n = 1).

Table 6 shows representative turns for each student. Each student’s turns represent one complete instance of the prompt engineering phase, showing the student’s answers to three questions: (1) “What do you think about this prompt?” (2) “What do you think about this output?” and (3) “How do you feel?” Each student’s turns were also selected as the most representative of the average number of words that student spoke per turn.

3.3 Students’ satisfaction

Table 7 and Fig. 10 present students’ satisfaction with the workshop in which they learned to write with ChatGPT. Overall, students expressed high satisfaction with the workshop: every item received a mean rating above 5.40 on a seven-point scale. As Fig. 10 shows, students’ responses to items 8, 10, and 12 indicate a strong sense of engagement and enjoyment. Students also reported notable focus, enthusiasm, and confidence in their ability to absorb, retain, and apply the workshop content, as reflected in their responses to items 1, 2, 3, 4, 6, 7, and 11.

4 Discussion

This study has explored EFL students’ perceptions of learning to write with ChatGPT in terms of their motivation to learn, cognitive load, and satisfaction. The specific sample and context were Hong Kong EFL secondary students in a workshop where they were introduced to ChatGPT and prompt engineering and attempted a 500-word writing task with ChatGPT’s support. The results from the pre- and post-workshop questionnaires and the think-aloud protocols provide insights into EFL students’ motivation to learn about ChatGPT, their cognitive load when writing with ChatGPT, and their satisfaction with the experience of writing with ChatGPT. The major findings are as follows.

4.1 Major findings

The Wilcoxon signed-rank test revealed no significant difference in students’ motivation to learn about ChatGPT from pre- to post-workshop. However, the slight increase in mean post-workshop motivation scores suggests that students may have held a more favorable attitude towards learning about ChatGPT after engaging in the workshop activities. The widespread appeal of ChatGPT likely sparked students’ interest, motivating their voluntary participation in the workshop and helping to explain their initially high motivation; this is in line with Chan and Hu (2023), who reported that most students were willing to use ChatGPT. This highlights the potential of ChatGPT’s novelty and interactive nature to stimulate motivation for EFL writing. Integrating ChatGPT into EFL writing bridges the gap between cutting-edge technology and students’ academic learning, establishing relevance and potentially contributing to increased motivation. This interpretation is also consistent with prior research suggesting that incorporating innovative technologies can enhance students’ motivation to engage with learning materials (Kim & Lee, 2023; Shim et al., 2023). Furthermore, ChatGPT’s features, such as generating helpful content tailored to users’ needs and supporting interactive conversations, may have contributed to the high satisfaction students reported in the satisfaction questionnaire. This satisfaction may further explain their slightly increased motivation to learn. However, a “novelty effect,” that is, heightened motivation or perceived usability of a technology due to its newness (Koch et al., 2018), may also have contributed to the slight increase in motivation. A longitudinal study would be needed to test this possibility by examining how students’ learning motivation evolves as they become accustomed to ChatGPT.

Notably, this study found that students experienced heavy cognitive load when writing with ChatGPT. Specifically, the think-aloud evidence suggests that students experienced heavy cognitive load during the prompt engineering phase of writing with ChatGPT. The think-aloud evidence further suggests that this load did not stem from the demands of writing in English, because students could write ChatGPT prompts in Chinese; from the medium of instruction, because verbal and written instructions were delivered in both English and Chinese; or from the think-aloud procedure itself, because students could perform the protocols in either English or Chinese. Instead, the heavy cognitive load may stem from the basic cognitive processes associated with writing (Flower & Hayes, 1981), such as planning, drafting, and reviewing. Another possibility is that the workshop’s time constraint contributed to students’ heavy cognitive load, since Shim et al. (2023) suggested that novices unfamiliar with chatbots need more time to follow instructions and keep pace in a workshop format. Alternatively, the method itself may be a limitation: think-aloud protocols have been criticized as providing an artificial and incomplete view of cognitive activity during writing, although they can provide insights into writing and writing response practices (Hyland, 2019).

Importantly, this finding highlights the potentially high cognitive demands of integrating ChatGPT into the EFL writing classroom. Cognitive load theory posits that, for effective learning to occur, instructional design should manage cognitive load so as not to overload the learner’s working memory (Sweller et al., 1998). This study therefore supports existing recommendations to optimize instruction for AI in the classroom so that students engage with level-appropriate materials and tasks yet are challenged to push their cognitive boundaries (Walter, 2024). When students write with ChatGPT in the EFL classroom, teachers can move them from comprehension-based tasks, to controlled production, to more communicative tasks (Nunan, 1989). Teachers can also provide materials on how to craft prompts, as well as schemata by which students can evaluate output, because EFL writing skills must be supported by extensive reading (Hyland, 2019). In addition, teachers may give students more time to engage with materials and tasks. By intentionally reducing EFL students’ cognitive load, teachers better position their students to benefit from ChatGPT in the writing classroom.

Students expressed high satisfaction with the workshop overall. The satisfaction analysis partially supports the view that engaging with ChatGPT and other POE chatbots in an EFL writing classroom can foster a gratifying and enriching educational experience for students. This finding corroborates prior ChatGPT research in which students reported high satisfaction with guided ChatGPT interactions (Amaro et al., 2023) and with writing feedback from ChatGPT (Escalante et al., 2023). As mentioned above, ChatGPT’s distinctive characteristics may contribute to this high level of satisfaction. First, ChatGPT is trained using reinforcement learning from human feedback (RLHF; Stiennon et al., 2020). This training approach modifies ChatGPT’s language modeling objective, shifting its focus from predicting the next token on a webpage to providing helpful and safe responses based on user instructions (Ouyang et al., 2022, p. 2). Consequently, ChatGPT tends to generate responses aligned with human preferences and priorities. In this study on EFL writing, ChatGPT likely produced responses that matched students’ preferences and facilitated their writing process, which may explain their high satisfaction. Second, ChatGPT is conversational. Previous studies have shown that extended interactions with conversational agents can enhance users’ overall experience (Jacq et al., 2016). Similarly, students’ frequent interaction with the human-like ChatGPT in this study may have contributed to their high satisfaction. However, although high satisfaction is an important predictor of future engagement and positive learning outcomes, it does not by itself guarantee learning.
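To make the RLHF step above more concrete: in the approach described by Stiennon et al. (2020) and Ouyang et al. (2022), a reward model is first trained on human comparisons of two responses to the same prompt, with a pairwise loss of the form −log σ(r_chosen − r_rejected) that is small when the preferred response receives the higher reward. The Python sketch below computes that loss for a single comparison, with plain numbers standing in for a neural reward model’s outputs (a simplifying assumption). The function name is illustrative; the sketch omits the subsequent reinforcement learning stage and is not ChatGPT’s actual training code.

```python
import math


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)) for one human comparison.

    Equivalent to log(1 + exp(-margin)); the two branches compute the
    same value while avoiding overflow in exp() for large margins.
    """
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

Equal rewards give a loss of log 2 (about 0.693), and the loss shrinks as the preferred response’s reward margin grows, which is how the reward model is pushed towards ranking responses the way human labelers do.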

4.2 Implications, limitations and future research

This study helps educators better understand the integration of ChatGPT into the English as a foreign language (EFL) writing classroom in terms of students’ experiences and perceptions. Specifically, it points to ChatGPT’s potential to enhance the EFL writing classroom by motivating and engaging students, and it suggests that a workshop format can be a suitable way to integrate ChatGPT into that classroom. However, educators should carefully scaffold instruction, especially when teaching prompt engineering skills, to manage students’ cognitive load. Educators can adopt an iterative design process for activities and instructional materials; careful design helps ensure that ChatGPT use supports writing without overwhelming students. Educators may begin by identifying the target text type for students to write, adopting an explicit approach to writing that text type, mapping ChatGPT’s capabilities to that writing approach, and developing genre writing and prompt engineering instructional materials, such as worksheets, and activities, such as joint construction of prompts by educators and students. Implementing extensively scaffolded instruction to better integrate ChatGPT into the writing classroom will require more contact time.

Although this exploratory study provides a meaningful window into EFL students’ perceptions of learning to write with ChatGPT, its limitations should be considered when interpreting the results. The sample was small in terms of the number of students and schools and the amount of instructional time, which may limit the generalizability of the findings. In addition, all participants were female. Further research could involve larger and more diverse samples, including male students, to validate the findings. Research could also explore how students’ perceptions evolve with prolonged engagement; for instance, longitudinal studies could examine whether students’ ability to manage cognitive load improves with prolonged exposure to ChatGPT. Likewise, further research could compare students’ perceptions across two types of workshops, for instance, this study’s workshop and a workshop with instruction adjusted to reduce cognitive load, such as improved prompt engineering instruction. Another limitation is the reliance on self-report measures, which may not fully capture students’ perceptions. Future research could incorporate additional measures of student perceptions, such as observational data beyond screen recordings, to further validate self-report measures.

4.3 Conclusion

This mixed-methods study explored Hong Kong secondary school EFL students’ experiences and perceptions of learning to write a composition with ChatGPT’s support in a workshop format. Although the change was not statistically significant, students’ mean scores for motivation to learn about ChatGPT increased slightly from pre- to post-workshop, suggesting ChatGPT’s potential to engage students. However, students reported high cognitive load in the workshop, notably when writing with a machine-in-the-loop. This highlights the need for educators to carefully scaffold instruction and activities to reduce students’ cognitive load in the classroom. Nonetheless, students expressed high overall satisfaction with the workshop experience.

This study adds to the empirical research on EFL students’ experiences and perceptions of writing with ChatGPT. The findings provide insights for educators on the motivational benefits and cognitive demands of integrating ChatGPT into the writing classroom. Furthermore, the study proposes developing EFL students’ prompt engineering skills alongside genre writing skills to optimize ChatGPT’s support at different stages of learning to write a text type. Overall, the study advances understanding of how integrating ChatGPT can enhance EFL classroom writing. Future studies could involve larger, more diverse samples, explore longitudinal effects, and compare instructional designs for integrating ChatGPT into EFL writing.

Data availability

The data that support the findings of this study are available from the corresponding author, David James Woo, upon reasonable request.

References

Amaro, I., Barra, P., Della Greca, A., Francese, R., & Tucci, C. (2023). Believe in artificial intelligence? A user study on the ChatGPT’s fake information impact. IEEE Transactions on Computational Social Systems, 1–10. https://doi.org/10.1109/TCSS.2023.3291539

Athanassopoulos, S., Manoli, P., Gouvi, M., Lavidas, K., & Komis, V. (2023). The use of ChatGPT as a learning tool to improve foreign language writing in a multilingual and multicultural classroom. Advances in Mobile Learning Educational Research, 3(2), Article 2. https://doi.org/10.25082/AMLER.2023.02.009

Barrot, J. S. (2023). Using ChatGPT for second language writing: Pitfalls and potentials. Assessing Writing, 57, 100745. https://doi.org/10.1016/j.asw.2023.100745

Belda-Medina, J., & Calvo-Ferrer, J. R. (2022). Using chatbots as AI conversational partners in language learning. Applied Sciences, 12(17), 8427.

Biggs, J. (1999). What the student does: Teaching for enhanced learning. Higher Education Research & Development, 18(1), 57–75.

Brown, T. B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D. M., Wu, J., Winter, C., …, & Amodei, D. (2020). Language models are few-shot learners (arXiv:2005.14165). arXiv. https://doi.org/10.48550/arXiv.2005.14165

Cai, S., Wang, X., & Chiang, F. K. (2014). A case study of Augmented Reality simulation system application in a chemistry course. Computers in Human Behavior, 37, 31–40. https://doi.org/10.1016/j.chb.2014.04.018

Calderwood, A., Qiu, V., Gero, K. I., & Chilton, L. B. (2020). How novelists use generative language models: An exploratory user study. IUI ’20: Proceedings of the 25th International Conference on Intelligent User Interfaces. ACM IUI 2020, Cagliari, Italy.

Cao, S., & Zhong, L. (2023). Exploring the effectiveness of ChatGPT-based feedback compared with teacher feedback and self-feedback: Evidence from Chinese to English translation (arXiv:2309.01645). arXiv. https://doi.org/10.48550/arXiv.2309.01645

Chan, C. K. Y., & Hu, W. (2023). Students' voices on generative AI: Perceptions, benefits, and challenges in higher education. International Journal of Educational Technology in Higher Education, 20(43). https://doi.org/10.1186/s41239-023-00411-8

Charters, E. (2003). The use of think-aloud methods in qualitative research: An introduction to think-aloud methods. Brock Education: A Journal of Educational Research and Practice, 12, 68–82.

Clark, E., Ross, A. S., Tan, C., Ji, Y., & Smith, N. A. (2018). Creative writing with a machine in the loop: Case studies on slogans and stories. 23rd International Conference on Intelligent User Interfaces, 329–340. https://doi.org/10.1145/3172944.3172983

Conole, G., & Wills, S. (2013). Representing learning designs – making design explicit and shareable. Educational Media International, 50(1), 24–38. https://doi.org/10.1080/09523987.2013.777184

Cotton, D., & Gresty, K. (2006). Reflecting on the think-aloud method for evaluating e-learning. British Journal of Educational Technology, 37(1), 45–54. https://doi.org/10.1111/j.1467-8535.2005.00521.x

Creswell, J. W., & Clark, V. L. P. (2007). Designing and conducting mixed methods research. SAGE.

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 319–340. https://doi.org/10.2307/249008

Dong, A., Jong, M. S. Y., & King, R. B. (2020). How does prior knowledge influence learning engagement? The mediating roles of cognitive load and help-seeking. Frontiers in Psychology, 11, 591203. https://doi.org/10.3389/fpsyg.2020.591203

Ericsson, K. A., & Simon, H. A. (1993). Protocol analysis: Verbal reports as data. The MIT Press. https://doi.org/10.7551/mitpress/5657.001.0001

Escalante, J., Pack, A., & Barrett, A. (2023). AI-generated feedback on writing: Insights into efficacy and ENL student preference. International Journal of Educational Technology in Higher Education, 20(1), 57. https://doi.org/10.1186/s41239-023-00425-2

Fisher, J. B., Schumaker, J. B., Culbertson, J., & Deshler, D. D. (2010). Effects of a computerized professional development program on teacher and student outcomes. Journal of Teacher Education, 61(4), 302–312. https://doi.org/10.1177/0022487110369556

Flower, L., & Hayes, J. R. (1981). A cognitive process theory of writing. College Composition and Communication, 32(4), 365–387. https://doi.org/10.2307/356600

Gayed, J. M., Carlon, M. K. J., Oriola, A. M., & Cross, J. S. (2022). Exploring an AI-based writing assistant’s impact on English language learners. Computers and Education: Artificial Intelligence, 3, 100055. https://doi.org/10.1016/j.caeai.2022.100055

Guo, K., Wang, J., & Chu, S. K. W. (2022). Using chatbots to scaffold EFL students’ argumentative writing. Assessing Writing, 54, 100666. https://doi.org/10.1016/j.asw.2022.100666

Hsu, H.-C. (2023). The effect of collaborative prewriting on L2 collaborative writing production and individual L2 writing development. International Review of Applied Linguistics in Language Teaching. https://doi.org/10.1515/iral-2023-0043

Hsu, T. C. (2017). Learning English with augmented reality: Do learning styles matter? Computers & Education, 106, 137–149. https://doi.org/10.1016/j.compedu.2016.12.007

Huang, A. Y., Lu, O. H., & Yang, S. J. (2023). Effects of artificial intelligence-enabled personalized recommendations on learners’ learning engagement, motivation, and outcomes in a flipped classroom. Computers & Education, 194, 104684. https://doi.org/10.1016/j.compedu.2022.104684

Hwang, G.-J., & Chen, N.-S. (2023). Editorial position paper: Exploring the potential of generative artificial intelligence in education: Applications, challenges, and future research directions. Educational Technology & Society, 26(2). https://www.jstor.org/stable/48720991

Hwang, G. J., & Chang, H. F. (2011). A formative assessment-based mobile learning approach to improving the learning attitudes and achievements of students. Computers & Education, 56(4), 1023–1031. https://doi.org/10.1016/j.compedu.2010.12.002

Hwang, G. J., Yang, L. H., & Wang, S. Y. (2013). A concept map-embedded educational computer game for improving students’ learning performance in natural science courses. Computers & Education, 69, 121–130.

Hyland, K. (2007). Genre pedagogy: Language, literacy and L2 writing instruction. Journal of Second Language Writing, 16(3), 148–164. https://doi.org/10.1016/j.jslw.2007.07.005

Hyland, K. (2019). Second language writing. Cambridge University Press. https://doi.org/10.1017/9781108635547

Jacq, A., Lemaignan, S., Garcia, F., Dillenbourg, P., & Paiva, A. (2016). Building successful long child-robot interactions in a learning context. In 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (pp. 239–246). IEEE.

Jeon, J. (2022). Exploring AI chatbot affordances in the EFL classroom: Young learners’ experiences and perspectives. Computer Assisted Language Learning, 0(0), 1–26. https://doi.org/10.1080/09588221.2021.2021241

Keller, J. M. (1987). Strategies for stimulating the motivation to learn. Performance and Instruction, 26(8), 1–7. https://doi.org/10.1002/pfi.4160260802

Kim, H., Yang, H., Shin, D., & Lee, J. H. (2022). Design principles and architecture of a second language learning chatbot. http://hdl.handle.net/10125/73463

Kim, S.-W., & Lee, Y. (2023). Investigation into the influence of socio-cultural factors on attitudes toward artificial intelligence. Education and Information Technologies. https://doi.org/10.1007/s10639-023-12172-y

Kirkpatrick, D., & Kirkpatrick, J. (2006). Evaluating training programs: The four levels. Berrett-Koehler Publishers. https://doi.org/10.1016/S1098-2140(99)80206-9

Koch, M., von Luck, K., Schwarzer, J., & Draheim, S. (2018). The novelty effect in large display deployments–Experiences and lessons-learned for evaluating prototypes. In Proceedings of 16th European conference on computer-supported cooperative work-exploratory papers. European Society for Socially Embedded Technologies (EUSSET).

Kohnke, L., Moorhouse, B. L., & Zou, D. (2023). ChatGPT for language teaching and learning. RELC Journal, 00336882231162868. https://doi.org/10.1177/00336882231162868

Lee, D., & Chiu, C. (2017). “School banding”: Principals’ perspectives of teacher professional development in the school-based management context. Journal of Educational Administration, 55, 686–701. https://doi.org/10.1108/JEA-02-2017-0018

Li, M., & Zhang, M. (2023). Collaborative writing in L2 classrooms: A research agenda. Language Teaching, 56(1), 94–112. https://doi.org/10.1017/S0261444821000318

Mohamed, A. M. (2023). Exploring the potential of an AI-based Chatbot (ChatGPT) in enhancing English as a Foreign Language (EFL) teaching: Perceptions of EFL Faculty Members. Education and Information Technologies. https://doi.org/10.1007/s10639-023-11917-z

Nunan, D. (1989). Designing tasks for the communicative classroom. Cambridge University Press.

Ouyang, L., Wu, J., Jiang, X., Almeida, D., Wainwright, C. L., Mishkin, P., Zhang, C., Agarwal, S., Slama, K., Ray, A., Schulman, J., Hilton, J., Kelton, F., Miller, L., Simens, M., Askell, A., Welinder, P., Christiano, P., Leike, J., & Lowe, R. (2022). Training language models to follow instructions with human feedback (arXiv:2203.02155). arXiv. https://doi.org/10.48550/arXiv.2203.02155

Paas, F. (1992). Training strategies for attaining transfer of problem-solving skill in statistics: A cognitive-load approach. Journal of Educational Psychology, 84, 429–434. https://doi.org/10.1037/0022-0663.84.4.429

Reynolds, L., & McDonell, K. (2021). Prompt programming for large language models: Beyond the few-shot paradigm (arXiv:2102.07350). arXiv. https://doi.org/10.48550/arXiv.2102.07350

Shaikh, S., Yayilgan, S. Y., Klimova, B., & Pikhart, M. (2023). Assessing the usability of ChatGPT for formal English language learning. European Journal of Investigation in Health, Psychology and Education, 13(9), 1937–1960. https://doi.org/10.3390/ejihpe13090140

Shim, K. J., Menkhoff, T., Teo, L. Y. Q., & Ong, C. S. Q. (2023). Assessing the effectiveness of a chatbot workshop as experiential teaching and learning tool to engage undergraduate students. Education and Information Technologies, 28(12), 16065–16088. https://doi.org/10.1007/s10639-023-11795-5

Stiennon, N., Ouyang, L., Wu, J., Ziegler, D. M., Lowe, R., Voss, C., Radford, A., Amodei, D., & Christiano, P. F. (2020). Learning to summarize with human feedback. In H. Larochelle, M. Ranzato, R. Hadsell, M. Balcan, & H. Lin (Eds.), Advances in neural information processing systems 33: Annual conference on neural information processing systems 2020, NeurIPS 2020, December 6–12, 2020, virtual (pp. 3008–3021).

Su, Y., Lin, Y., & Lai, C. (2023). Collaborating with ChatGPT in argumentative writing classrooms. Assessing Writing, 57, 100752. https://doi.org/10.1016/j.asw.2023.100752

Sweller, J. (1988). Cognitive load during problem solving: Effects on learning. Cognitive Science, 12(2), 257–285. https://doi.org/10.1016/0364-0213(88)90023-7

Sweller, J., van Merrienboer, J. J. G., & Paas, F. G. W. C. (1998). Cognitive architecture and instructional design. Educational Psychology Review, 10(3), 251–296. https://doi.org/10.1023/A:1022193728205

Ulla, M. B., Perales, W. F., & Busbus, S. O. (2023). ‘To generate or stop generating response’: Exploring EFL teachers’ perspectives on ChatGPT in English language teaching in Thailand. Learning: Research and Practice, 0(0), 1–15. https://doi.org/10.1080/23735082.2023.2257252

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., & Polosukhin, I. (2017). Attention is all you need. Advances in neural information processing systems, 30. https://proceedings.neurips.cc/paper/2017/hash/3f5ee243547dee91fbd053c1c4a845aa-Abstract.html

Walter, Y. (2024). Embracing the future of Artificial Intelligence in the classroom: The relevance of AI literacy, prompt engineering, and critical thinking in modern education. International Journal of Educational Technology in Higher Education, 21(1), 15. https://doi.org/10.1186/s41239-024-00448-3

Wang, F., & Hannafin, M. J. (2005). Design-based research and technology-enhanced learning environments. Educational Technology Research & Development, 53(4), 5–23. https://doi.org/10.1007/BF02504682

Woo, D. J., Wang, Y., Susanto, H., & Guo, K. (2023). Understanding English as a foreign language students’ idea generation strategies for creative writing with natural language generation tools. Journal of Educational Computing Research, 61(7), 1464–1482. https://doi.org/10.1177/07356331231175999

Yoshida, M. (2008). Think-aloud protocols and type of reading task: The issue of reactivity in L2 reading research. Selected Proceedings of the 2007 Second Language Research Forum, 199–209. https://www.semanticscholar.org/paper/Think-Aloud-Protocols-and-Type-ofReading-Task%3A-The-Yoshida/d115ddfddd5a9aa044b0c92ed80c3ae69331e913

Zamfirescu-Pereira, J. D., Wong, R. Y., Hartmann, B., & Yang, Q. (2023). Why Johnny can’t prompt: How non-AI experts try (and fail) to design LLM prompts. Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, 1–21. https://doi.org/10.1145/3544548.3581388

Zhang, Z. (Victor), & Hyland, K. (2023). Student engagement with peer feedback in L2 writing: Insights from reflective journaling and revising practices. Assessing Writing, 58, 100784. https://doi.org/10.1016/j.asw.2023.100784

Funding

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Ethics declarations

Conflict of interests

The authors report there are no competing interests to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix. Questionnaires

Motivation to learn | |

1 | I think learning ChatGPT is interesting and valuable [我認為學習 ChatGPT 很有趣且有價值] |

2 | I would like to learn more and observe more in the workshop of using ChatGPT [我想在使用 ChatGPT的研討會中了解更多信息並觀察更多] |

3 | It is worth learning how to use ChatGPT [值得學習如何使用ChatGPT] |

4 | It is important for me to learn ChatGPT well [對我來說, 很好地學習ChatGPT很重要] |

5 | It is important to know the knowledge related to ChatGPT [重了解與ChatGPT有關的知識很重要] |

6 | I will actively search for more information and learn about ChatGPT [我將積極搜索更多信息, 並了解 ChatGPT] |

7 | It is important for everyone to take the workshop on how to use ChatGPT [參加如何使用ChatGPT的研討會對於每個人來說都很重要] |

Cognitive load | |

Mental load | |

1 | The learning content in this workshop was difficult for me [這次工作坊中的學習內容對我來說很難] |

2 | I had to put a lot of effort into answering the questions in this workshop [我不得不付出很多努力來回答這個工作坊中的問題] |

3 | It was troublesome for me to answer the questions in this workshop [在這次工作坊中回答問題對我來說很麻煩] |

4 | I felt frustrated answering the questions in this workshop [在這次工作坊中回答問題時, 我感到很沮喪] |

5 | I did not have enough time to answer the questions in this workshop [我沒有足夠的時間回答本次工作坊中的問題] |

Mental effort

6 | During the workshop, the way of instruction or learning content presentation causes me a lot of mental effort [本次工作坊的教學方式或學習內容的呈現方式讓我花費了很多精力] |

7 | I need to put lots of effort into completing the learning tasks or achieving the learning objectives in this workshop [我需要付出很多努力來完成學習任務或實現這個工作坊中的學習目標] |

8 | The instructional way in the workshop was difficult to follow and understand [我很難跟上和理解本次工作坊中的教學方式] |

Satisfaction with learning

1 | I believe that I will remember everything taught today [我相信我會記住今天教的一切] |

2 | The workshop kept me focused on the content throughout [這個工作坊使我全程專注於內容] |

3 | I am confident that I will use the content learned today [我相信我會使用今天學到的內容] |

4 | This workshop made me very enthusiastic about the content taught [這個工作坊讓我對所教授的內容充滿熱情] |

5 | It will be easy to summarize for others what the training is all about [很容易對其他人總結此次工作坊的全部內容] |

6 | It was easy to concentrate on the content of this session [我很容易集中精力關注此次工作坊的內容] |

7 | I plan to apply the content learned today [我計劃使用今天學到的內容] |

8 | I had a lot of fun during this workshop [在這次工作坊中我很開心] |

9 | I clearly understand everything that was taught today [我清楚地理解今天所教的一切] |

10 | The workshop was engaging throughout [今天的工作坊從頭到尾都很吸引人] |

11 | I am looking forward to incorporating the content into my learning [我期待將今天學到的內容融入我的學習中] |

12 | This workshop was very enjoyable for me [這次工作坊對我來說非常愉快] |

13 | This workshop was superior to the others I have attended [這次工作坊比我參加過的其他工作坊要好] |

14 | Overall, I was highly satisfied with this workshop [總的來說, 我對這次工作坊非常滿意] |

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Woo, D.J., Wang, D., Guo, K. et al. Teaching EFL students to write with ChatGPT: Students' motivation to learn, cognitive load, and satisfaction with the learning process. Educ Inf Technol (2024). https://doi.org/10.1007/s10639-024-12819-4
