Abstract

This paper presents an introduction to and a formal connection between synthesis problems for discrete event systems that have been considered, largely separately, in the two research communities of supervisory control in control engineering and reactive synthesis in computer science. By making this connection mathematically precise in a paper that attempts to be as self-contained as possible, we wish to introduce these two research areas to non-expert readers and at the same time to highlight how they can be bridged in the context of classical synthesis problems. After presenting general introductions to supervisory control theory and reactive synthesis, we provide a novel reduction of the basic supervisory control problem, non-blocking case, to a problem of reactive synthesis with plants and with a maximal permissiveness requirement. The reduction is for fully-observed systems that are controlled by a single supervisor/controller. It complements prior work that has explored problems at the interface of supervisory control and reactive synthesis. The formal bridge constructed in this paper should be a source of inspiration for new lines of investigation that will leverage the power of the synthesis techniques that have been developed in these two areas.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The goal of this paper is to introduce and present a formal connection between synthesis problems that have been considered, largely separately, in the two research communities of control engineering and formal methods. By making this connection mathematically precise in a paper that attempts to be as self-contained as possible, we hope to introduce these two research areas to non-expert readers and at the same time inspire future research that will further bridge the gap between them and leverage their complementarity. Given the pedagogical nature of this paper, we focus on connecting the most basic synthesis problem in supervisory control, for safety and non-blockingness in the case of full observation, with a suitably-defined analogous problem in the field of reactive synthesis. We start our discussion with a general introduction to the two research areas under consideration.

1.1 Supervisory control of discrete event systems

Feedback control of dynamic systems is an essential element of our technological society. Control theory was originally developed for systems with continuous variables that evolve in time according to dynamics described by differential or difference equations. Since the 1980s, the field of Discrete Event Systems (DES) in control engineering has been concerned with the application of the feedback paradigm of control theory to the class of dynamical systems with discrete state space and event-driven dynamics.

The DES community has been investigating feedback control of DES using models from computer science, such as automata and Petri nets. The body of control theory developed in DES has been for specifications that are expressible as regular languages, in the case of DES modeled by automata, or in terms of constraints on the state (marking vector), in the case of DES modeled by Petri nets. Control-theoretic frameworks have been developed for both of these modeling formalisms. In this paper, we focus on the supervisory control theory for systems modeled by finite-state automata and subject to regular language specifications. Both the plant and the specification are represented as finite-state automata over a common event set. The foundations for this framework were developed in the seminal work of Ramadge and Wonham (1987), Wonham and Ramadge (1987). Since then, a whole body of theory has been developed that covers a wide variety of control architectures and information structures, with vertical and horizontal modularity. The reader is referred to Cassandras and Lafortune (2008), Wonham (2015) for textbook expositions of this theory; other relevant references are the monograph (Kumar and Garg 1995), the survey papers (Ramadge and Wonham 1989; Thistle 1996), and the recent edited book (Seatzu et al. 2013). The focus of this theory is on the synthesis of provably safe and non-blocking controllers for a given uncontrolled system, or plant in control engineering terminology, despite limited actuation and limited sensing capabilities. In automated manufacturing applications for instance, the plant would be the joint operation of a set of robots, conveyors, Automated Guided Vehicles (AGVs), and numerically controlled machines, and the controller would be implemented using one or more Programmable Logic Controllers (PLCs). Safety properties are expressed in terms of bad states where robots and/or AGVs collide for instance, or bad sequences of events that correspond to incorrect assembly for instance. Non-blockingness captures the fact that product assembly should be completed in its entirety, followed by a return of all components to their initial states.

1.2 Reactive synthesis

It is widely acknowledged that many design defects originate in the failure of the implementation to accurately capture the designer’s intent. Underlying the reactive synthesis approach is the realization that many requirements can be expressed as formal temporal assertions, capturing intended system functionality in a declarative fashion. Assertions can express both safety properties, such as “a Grant is always followed by Busy”, and liveness properties, such as “a Request is eventually followed by a Grant”. Thus, the functional specification of a system can be expressed as a set of temporal assertions.

The assertion-based approach to system specification underlays early work on program verification (Francez 1992), whose focus was on input/output properties. This was later extended to temporal properties of ongoing computations (Pnueli 1977), which enabled the application of formal verification techniques to reactive systems–systems that have ongoing interactions with their environments (Harel and Pnueli 1985). One of the most successful applications of the assertion-based approach has been model checking, an algorithmic formal-verification technique (Clarke and Emerson 1981; Clarke et al. 1986; Lichtenstein and Pnueli 1985; Queille and Sifakis 1982; Vardi and Wolper 1986); see (Clarke et al. 2000) for an in depth coverage.

The design of reactive systems, systems that engage in an ongoing interaction with their environment, is one of the most challenging problems in computer science (Harel and Marelly 2003; Harel and Pnueli 1985). The assertion-based approach constitutes a significant progress towards addressing this challenge. While there has been impressive recent progress on applying formal methods in verification (Jackson 2009), in current design methodology, design and verification are distinct phases, typically executed by separate teams. Substantial resources are spent on verifying that an implementation conforms to its specifications, and on integrating different components of the system. Not only do errors discovered during this phase trigger a costly reiteration of design and programming, but more importantly, verification offers only quality control, not quality improvement, and hence, current design methodology does not produce systems that are safe, secure, and reliable.

Currently, when formal assertions are being used, it is in testing and verification, after a significant effort has already gone into the development effort. When errors are found, significant effort has to be expended on design change. An old dream in computer science is that of design automation, in which the process of converting formal specification to implementation is, to a major extent, automated. The implication is that a major portion of the manual design effort should go into the development of high-level specifications, since much of the implementation effort can then be automated. The technique of compiling high-level formal requirements to low-level system code is referred to as synthesis, and was proposed already in Church (1957), Green (1969). Follow up work in Büchi and Landweber (1969), Rabin (1972) addressed the problem mathematically, but it seemed quite far from being applicable to real-life problems.

In the late 1980s, several researchers realized that the classical approach to system synthesis (Green 1969), where a system is extracted from a proof that the specification is satisfiable, is well suited to closed systems (systems with no inputs), but not to reactive systems. In reactive systems, the system interacts with the environment, and a correct system should then satisfy the specification no matter how the environment behaves, i.e., no matter which inputs the environment provides to the system. If one applies the techniques of Emerson and Clarke (1982), Manna and Wolper (1984) to reactive systems, one obtains systems that are correct only with respect to some environment behaviors. Pnueli and Rosner (1989a), Abadi et al. (1989), and Dill (1989) argued that the right way to approach synthesis of reactive systems is to use the model of a, possibly infinite, game between the environment and the system. A correct system can be then viewed as a winning strategy in this game. It turns out that satisfiability of the specification is not sufficient to guarantee the existence of such a strategy. Abadi et al. called specifications for which a winning strategy exists realizable. Since then, the subject of reactive synthesis has been an active area of research, attracting a considerable attention, for example (Kupferman and Vardi 2000; Pnueli and Rosner 1989b; Vardi 1995; Wong-Toi and Dill 1991).

1.3 Contributions and organization of this paper

We start in Section 2 by presenting technical introductions to supervisory control (Section 2.1) and reactive synthesis (Section 2.2). We have tried to make Section 2 as self-contained as possible, so that readers who are not experts in either domain could follow the ensuing results connecting these two areas. However, these introductions are not meant to be thorough and readers interested in learning these areas in more depth should consult the references mentioned above.

The main results connecting supervisory control and reactive synthesis are contained in Section 3. That section is organized into several parts. First, we present in Section 3.1 a simplification of the basic supervisory control problem, non-blocking version, to one where the safety specification has been absorbed into the plant model. We then show that the resulting Simple Supervisory Control Problem (SSCP) has a state-based solution. The results on SSCP will facilitate bridging the gap in the remainder of Section 3. Second, for bridging reactive synthesis with supervisory control, we need two technical steps: the first step is to consider reactive synthesis with plants; the second step is to bring in the issue of maximal permissiveness into this reactive synthesis setting. These two steps are covered in Section 3.2. With the above technical results established, we establish the formal reduction from SSCP to a reactive synthesis problem with plants and maximal permissiveness in Section 3.3. This formal reduction is the main technical contribution of this paper. Section 3.4 discusses algorithmic issues and Section 3.5 discusses links between the reactive synthesis problem with plants and the more standard reactive synthesis problem without plants.

Finally, some concluding comments and directions for future work are given in Section 4. A preliminary and partial version of this paper, without proofs, appears in Ehlers et al. (2014).

1.4 Related works

This paper is not the first to explore connections between supervisory control and reactive synthesis. On the supervisory control side, several authors have considered control of discrete event systems subject to temporal logic specifications; see, e.g.,Thistle and Wonham 1986, Lin (1993), Jiang and Kumar (2006). Supervisory control of discrete event systems with infinite behavior, i.e., modeled by languages over E ω instead of E ∗ for a given event set E, has also been considered by many researchers; see, e.g., Ramadge (1989), Kumar et al. (1992), Thistle and Wonham (1994a), Thistle and Wonham (1994b), Thistle (1995), Thistle and Malhamé (1998), Lamouchi and Thistle (2000). On the other hand, several researchers in the formal methods community have investigated supervisory control of fully- and partially-observed discrete event systems, in untimed, timed, and hybrid system settings; see, e.g., Hoffmann and Wong Toi (1992), Maler et al. (1995), Asarin et al. (1995), Henzinger and Kopke (1997), Kupferman et al. (2000), Madhusudan (2001), Cassez et al. (2002), Arnold et al. (2003), Riedweg and Pinchinat (2003). Researchers from both the supervisory control and formal methods communities have also studied problems of distributed or decentralized controller synthesis, where more than one controllers are (simultaneously) synthesized, e.g., see Pnueli and Rosner (1990), Rudie and Wonham (1992), Barrett and Lafortune (1998), Lamouchi and Thistle (2000), Overkamp and van Schuppen (2000), Ricker and Rudie (2000), Tripakis (2004), Lustig and Vardi (2009), Komenda et al. (2012), Seatzu et al. (2013).

In the present paper, we restrict attention to the basic problem of centralized supervisory control for fully-observed systems modeled by languages of finite strings. Our goal is to establish a precise connection of this classical work with problems of reactive synthesis, by showing how specific problem instances reduce to each other. While more advanced connections have been discussed in the above-cited references, our goal is pedagogical in nature and aims at establishing a bridge between these two areas at the most elementary level. Still, to the best of our knowledge, the formal reduction presented in Section 3 has not been published elsewhere. Our results therefore complement existing works from a technical standpoint.

2 Classical frameworks

In this section, we present technical introductions to the fields of supervisory control and reactive synthesis. These introductions are not meant to be comprehensive, but rather their goal is to allow readers who are not experts in either or both of these fields to follow the later results in Section 3 on establishing a precise connection between basic synthesis problems in these two fields.

2.1 Supervisory control

In supervisory control of Discrete Event Systems (DES), the system to be controlled, i.e., the plant, is typically modeled as a set of interacting finite-state automata coupled by common events or as a Petri net. In order to obtain a monolithic model that will be used for analysis and control synthesis purposes, the parallel composition of the set of interacting automata is performed or the reachability graph of the Petri net is constructed. We restrict attention to plants with finite state spaces. Also, we assume full event observability.

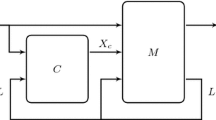

Let the plant be denoted by G. (Formal definitions will follow.) G captures the entire set of possible behaviors of the plant and it is called the “uncontrolled system.” In general, some of this behavior is not acceptable in the sense that it is not safe with respect to a given specification or that it results in deadlock or livelock. Consequently, we wish to restrict the behavior of G by means of a feedback controller, or supervisor in DES terminology. The standard feedback loop of supervisory control theory is shown in Fig. 1. The “input” to the supervisor S is the string of events generated so far by G. All the events of G are observed by S. The “output” of S is a control action that tells G which event(s) it is allowed to do at that moment. The supervisor may allow more than one event, in which case the system will decide which allowed event to execute next. The mechanism by which G chooses which allowed event to execute next is not modeled. One may think of G as a semi-autonomous system for instance. In general, the supervisor may not have full actuation capabilities, i.e., there may be events of G that it cannot disable. These events are called uncontrollable. How to deal with uncontrollable events is one of the contributions of supervisory control theory.

Example 1 (Coffee Machine)

For the sake of illustration, assume that our plant G is a coffee machine that can grind coffee beans, brew coffee, and deliver a cup of coffee. Its interface with the user is a “coffee button” that generates an event, denoted by c, when it is pressed by the user. The automaton representation of that machine is shown in Fig. 2. We give the formal definition of an automaton below; for now, we explain the transition structure. The initial state is 1 (this is denoted by the fact that state 1 has an incoming arrow). State 1 is also an accepting or marked state (denoted by the fact that the state is drawn as a double circle). Upon occurrence of event c, the coffee machine moves to a new state in which it can execute an arbitrary number of “grind” events, which are denoted by g, as well as an arbitrary number of “brew” events, which are denoted by b; these events self-loop at state 2. Finally, when grinding and brewing are completed, the coffee is delivered (poured in cup) and the machine returns to its initial state; this is represented by event r. For simplicity, we assume that the machine ignores further pressing of the coffee button while it is grinding and brewing, i.e., until event r occurs. This is modeled by the self-loop for event c at state 2.

G represents the physical capabilities of the machine, without an appropriate control protocol, i.e., without a specification. As given, this behavior is unsafe: first, brewing should always be preceded by grinding; second, no grinding should occur after brewing has started.

Moreover, one may wish to follow special coffee recipes, parameterized by the number of g events (more events means finer ground coffee) and the number of b events (more events for stronger coffee). One such recipe could be that coffee is prepared by one grinding step followed by two brewing steps. Another recipe for stronger coffee could call for two grinding steps followed by three brewing steps.

We wish to synthesize a supervisor S that will restrict the behavior of G in order to satisfy the above safety constraints and allow the two possible recipes. In this example, event c is uncontrollable, as the supervisor cannot tell the plant to ignore a request for coffee when it is in state 1. (Note that such requests are ignored while the plant is in state 2, as captured in the model in Fig. 2.) The other events are assumed to be controllable, as the coffee machine has actuators for grinding, brewing, and delivering a cup of coffee; hence, the control protocol can decide when to activate or de-active these actuators. For instance, after the occurrence of event c, S needs to tell G that it should execute a g event, not a b event, as grinding must precede brewing. Hence, immediately after event c occurs, S should disable event b. Similarly, after the first b event, S should disable event g until the next cup is prepared. As for event r, it should remain disabled until a recipe is completed. Here, in the spirit of supervisory control, we assume that the supervisor will not force one of the allowed recipes. Namely, after one occurrence of g, S can enable both g and b and let the plant “randomly” choose to do either another g event or to start brewing, i.e., randomly choose which recipe to implement (perhaps on the basis of some other features not included in this simple model). If the plant chooses to execute b, then further occurrences of g will be disabled by S. On the other hand, after two consecutive occurrences of event g, S needs to tell G that it should not execute any more g events for the current cup under preparation, as no recipe calls for three grinding steps; i.e., S must disable the third consecutive occurrence of event g. When the plant has completed either recipe, S disables both g and b and enables r in order to allow the plant to deliver the cup of coffee.

In supervisory control, the objective is to automatically synthesize a supervisor S that provably satisfies all given specifications. The inputs to the synthesis process are: (i) the model G; (ii) the sets of controllable and uncontrollable events; and (iii) an automaton model of the specifications imposed on G. In the rest of this section, we present the main concepts needed before we can proceed to bridging the gap with reactive synthesis.

2.1.1 Plant model

In supervisory control theory, plants are typically modeled as deterministic finite-state automata. A deterministic finite-state automaton (DFA) is a 5-tuple

where

-

X is a finite set of states, x 0∈X is the initial state, and X m ⊆X is the set of marked (i.e., accepting) states;

-

E is a finite set of events. E is (implicitly) partitioned into two disjoint subsets:

$$E = E_{c} \cup E_{uc} $$where E c models the set of controllable events and E u c the set of uncontrollable events.

-

δ:X×E→X is the transition function, which in general will be partial.

The reason for the transition function to be partial is the fact that G models the physically possible behavior of a DES, as a generator of events. Selection of the states to “mark,” i.e., to be included in X m , is a modeling consideration to capture strings that represent that the system has completed some task. In many systems with cyclic behavior, such as our coffee machine, the initial state is marked, as returning to it means that a task has been completed and a new one can start.

A state x∈X is called a deadlock state if for all e∈E, δ(x,e) is undefined.

It is useful to “lift” the transition function δ to a function

defined as follows:

where ε denotes the empty string and ⋅ denotes string concatenation. (We usually omit writing ⋅ unless needed for clarity of notation.) Note that since δ is partial, δ ∗ is also partial. Since we always extend δ to δ ∗, we shall drop the “*” superscript hereafter and simply refer to the extended function as δ.

The DES G defines the following languages:

Given K⊆E ∗, let \(\overline {K}\) denote the prefix-closure of K:

In the definition above σ ′ can be the empty string, so \(K\subseteq \overline {K}\) for all K.

Moreover, for any DES G:

Note that \(\overline {\mathcal {L}_{m}(G)} \neq \mathcal {L}(G)\) in general. This is because \(\mathcal {L}(G)\) may contain strings that cannot be extended to yield strings in \(\mathcal {L}_{m}(G)\). In other words, G may contain reachable states that cannot reach any marked state.

2.1.2 Supervisors

A supervisor for G is a function S:E ∗→2E. It reads a string σ representing the history of what has happened so far and returns the set of controllable events that are allowed to occur. To ensure that S never disables an uncontrollable event, we require that E u c ⊆S(σ) for all σ∈E ∗ (alternatively, we could also define S to be a function \(S: E^{*} \rightarrow 2^{E_{c}}\)). Note that S(σ)∩E c may be empty.

Sometimes S is required to satisfy the following property:

which states that S allows a controllable event e only if e is feasible in G. This is not an essential requirement on S: we can simply ignore controllable events that S allows but are not feasible in G. The same comment applies to enabled but infeasible uncontrollable events.

When S is a supervisor specifically designed for G, the history σ can only be generated by G, therefore S can also be defined as a function

However, in general, it is more convenient to define S to be a function over E ∗, since this allows us to use the same supervisor for different plants, as long as E c and E u c remain the same.

Remark 1 (Full observability)

In the current framework, a plant is fully observable by a supervisor, for two reasons. First, the supervisor, defined as a function with domain E ∗, observes the entire sequence of events generated by the plant (in partial observability frameworks, only a subset of events are observed). Second, the plant itself is a deterministic automaton. Therefore, given the sequence of observed events, the supervisor can uniquely determine the current state of the plant.

2.1.3 Closed-loop system

Given a plant G=(X,x 0,X m ,E,δ) and a supervisor S:E ∗→2E for G, the closed-loop system S/G, according to the feedback loop in Fig. 1, is a DES that is formally defined as follows:

where

-

\(X^{\prime } = X \times \mathcal {L}(G)\)

-

\(x_{0}^{\prime } = (x_{0},\varepsilon )\)

-

\(X_{m}^{\prime } = X_{m} \times \mathcal {L}(G)\)

-

\( \delta ^{\prime }\left ( (x,\sigma ), e \right ) = \left \{\begin {array}{ll} (\delta (x,e), \sigma e) & \text { if }\delta (x,e)\text { is defined and } e\in S(\sigma )\\ \text {undefined} & \text { otherwise.} \end {array}\right .\)

A state in S/G is a pair (x,σ) where x∈X is a state of the plant G and \(\sigma \in \mathcal {L}(G)\) is the history observed so far. Thus, S/G is an infinite-state automaton, except for the special case that G is loop-free. This need not worry us for now. At this point, we are mainly interested in defining the synthesis problem, and not the algorithm to solve it. The initial state of S/G is (x 0,ε), since x 0 is the initial state of G and the history is initially empty. \(X_{m}^{\prime }\) is defined as \(X_{m} \times \mathcal {L}(G)\), meaning that a behavior of the closed-loop system is marked iff it is marked by G. In other words, we only consider supervisors that do not affect the marking of states in the plant. The definition of the transition function δ ′ is explained as follows. Given current state (x,σ) and event e:

-

when δ(x,e) is undefined (i.e., the plant does not have a transition from x for e), then δ ′((x,σ),e) is also undefined

-

otherwise, assuming δ(x,e) = x ′,

-

if e is uncontrollable, i.e., e∈E u c , then e is allowed by the supervisor and the next state is (x ′,σ e), i.e., the plant moves to x ′ and the supervisor observes e,

-

if e is controllable and allowed by the supervisor S, i.e., e∈E c ∩S(σ), then the next state is again (x ′,σ e),

-

otherwise (i.e., if e∈E c ∖S(σ), meaning that e is controllable but not allowed by S), δ ′((x,σ),e) is undefined. This is the only case when an event e which is allowed in G is forbidden in the closed-loop system.

-

Note that when δ(x,e) is defined, \(\sigma \in \mathcal {L}(G)\) implies \(\sigma e\in \mathcal {L}(G)\). This ensures that if (x,σ) is a valid state of S/G, i.e., (x,σ)∈X ′, then δ ′((x,σ),e) is also a valid state of S/G, so that the state-space of S/G is well defined.

S/G is an automaton (albeit an infinite-state one), therefore, languages \(\mathcal {L}(S/G)\) and \(\mathcal {L}_{m}(S/G)\) are defined as stated above. Note that, by definition, S/G is a restriction of G, therefore,

Moreover, it is easy to verify that

since a marking in S/G is completely determined by a marking in G. When S is applied to G as described above, the definition of S(σ) for \(\sigma \in E^{*} \setminus \mathcal {L}(S/G)\) is irrelevant, since the controlled behavior will never exceed \(\mathcal {L}(S/G)\).

As an example, consider the plant G 1 shown in Fig. 3. Let E c ={c 1,c 2} and E u c ={u} (we generally use the convention that events c,c ′,c 1,c 2,... are controllable, while events u,u ′,u 1,u 2,... are uncontrollable). Consider two supervisors S 1 and S 2 for G 1, defined as follows:

The closed-loop systems S 1/G 1 and S 2/G 1 are shown in Fig. 3. For simplicity, states in the closed-loop systems are labeled as in the original plant G 1, instead of being labeled as pairs (x 0,ε), (x 1,c 1), (x 3,u), and so on.

Remark 2 (Supervisors vs. controllers)

Supervisory control theory typically uses the term “supervisor” instead of “controller”. The term “supervisor” is well-chosen because in this framework supervisors are like “parents”: they can disable options, but they cannot “make things happen”. For instance, a supervisor cannot force the plant to take a certain transition, even when this transition is controllable. The supervisor can only allow a controllable transition. If this is the only outgoing transition from the current state, then presumably this will happen (although the state may be marked, with the interpretation that the plant “stops” there). But if there are multiple (controllable or uncontrollable) transitions from that state, the plant could choose any of them, without the supervisor having any control over this choice.

2.1.4 A trivial synthesis problem

A supervisor is needed because without it the plant may generate illegal behaviors. The supervisor aims at restricting the plant’s behaviors, so that they are all contained in a set of “good”, or “legal” behaviors.

One way to formalize this idea is to assume that we are given a language of “good” behaviors, L a m , called the admissible marked language. Then we could define a synthesis problem where we ask for a supervisor S (if it exists) such that \(\mathcal {L}_{m}(S/G) \subseteq L_{am}\). This, however, is not a very interesting problem, for a number of reasons.

First, in terms of synthesis, the problem is trivial. Indeed, instead of searching for an arbitrary supervisor S, it suffices to simply check whether the most-restrictive (or least-permissive) supervisor works. The most-restrictive supervisor S m r is the supervisor that disables everything that it can disable, i.e., S m r (σ) = E u c , for any σ. If S m r satisfies \(\mathcal {L}_{m}(S_{mr}/G) \subseteq L_{am}\), then we have found a solution to the above synthesis problem. Otherwise, it is easy to see that no solution exists. Indeed, any other supervisor S is bound to allow more behaviors than S m r , that is, \(\mathcal {L}_{m} (S_{mr}/G) \subseteq \mathcal {L}_{m}(S/G)\). Therefore, if \(\mathcal {L}_{m}(S_{mr}/G)\) is not a subset of L a m , neither can \(\mathcal {L}_{m}(S/G)\) be.

The fact that this problem is trivial (indeed, it is not really a synthesis problem, but a verification problem) should not necessarily deter us. On the contrary, the easier the problem, the better. However, the second and most important reason why the above problem is not the right one is the fact that the most-restrictive supervisor is rarely what we want. Indeed, the most-restrictive supervisor may be far too restrictive. It may, for example, introduce deadlocks. For the plant G 1 in Fig. 3, S m r will result in a new deadlock at state 3 of G 1, since the only events out of that state are controllable events that are disabled by S m r . We therefore need a richer way to specify desirable supervisors. Toward this goal, we introduce next the notion of non-blockingness.

2.1.5 Non-blockingness

Let G be a plant and S a supervisor for G. S is said to be non-blocking for G iff

Note that, as mentioned above, \(\overline {\mathcal {L}_{m}(S/G)} \subseteq \mathcal {L}(S/G)\) always holds. Therefore, non-blockingness is equivalent to \(\mathcal {L}(S/G) \subseteq \overline {\mathcal {L}_{m}(S/G)}\). Non-blockingness says that the closed-loop system should not contain behaviors that cannot be extended to marked behaviors. More precisely, there should be no deadlock states that are not marked, and there should be no absorbing strongly connected components that do not contain a marked state; the latter situation corresponds to a livelock.

As an example, consider again plant G 1 and supervisors S 1,S 2 of Fig. 3. It can be seen that S 1 is blocking since (\(u c_{1}\in \mathcal {L}(S_{1}/G)\) but \(\overline {\mathcal {L}_{m}(S_{1}/G)}= \{\varepsilon ,c_{1}\}\)); on the other hand, S 2 is non-blocking.

The following is a useful characterization of non-blockingness. Its proof is straightforward from the definition of non-blockingness.

Lemma 1

S is a non-blocking supervisor for G iff from every reachable state of S/G there is a path to a marked state of S/G.

2.1.6 Safety properties and admissible marked languages

Before we can give a formal statement of the basic supervisory control problem defined below (in Section 2.1.8), we need to formalize the notions of admissible (marked) language and of maximal permissiveness. This is done in this and the next subsections.

An admissible marked language in supervisory control, denoted L a m , captures the set of “legal”, or “good”, behaviors. Typically, the designer first comes up with a prefix-closed regular language L a (i.e., a regular language such that \(\overline {L_{a}}=L_{a}\)). L a captures the set of safe behaviors. For safety properties, such sets are prefix-closed, since for every unsafe behavior σ, every extension σ⋅σ ′ of σ is also unsafe. Conversely, if σ is safe, every prefix of σ is also safe. Once L a is defined, the designer typically sets L a m to be the intersection \(L_{am} := L_{a} \cap \mathcal {L}_{m}(G)\), so that L a m captures the set of all safe behaviors that can be generated and are marked by the plant. L a m has two useful properties:

-

1.

\(L_{am} \subseteq \mathcal {L}_{m}(G)\) (by definition, since \(L_{am} = L_{a} \cap \mathcal {L}_{m}(G)\)).

-

2.

L a m is “\(\mathcal {L}_{m}(G)\)-closed”. Given languages K and L with K⊆L⊆E ∗, we say that K is L-closed iff

$$K = \overline{K} \cap L . $$Notice that since K⊆L and \(K\subseteq \overline {K}\), \(K\subseteq \overline {K} \cap L\) always holds. Therefore requiring L-closure is requiring that \(\overline {K} \cap L \subseteq K\). Then, it is easy to see that if \(L_{am} = L_{a} \cap \mathcal {L}_{m}(G)\) then \(\overline {L_{am}} \cap \mathcal {L}_{m}(G) \subseteq L_{am}\).

In the sequel we will assume that the given admissible marked language L a m satisfies conditions 1 and 2 above, i.e., \(L_{am} \subseteq \mathcal {L}_{m}(G)\) and L a m is \(\mathcal {L}_{m}(G)\)-closed. Moreover, we will assume that L a m is regular and we will be using a finite-state automaton to represent it.

Given DES G and \(L_{am}\subseteq \mathcal {L}_{m}(G)\), a supervisor S is said to be safe for G with respect to L a m if \(\mathcal {L}_{m}(S/G) \subseteq {L_{am}}\), i.e., the only marked strings allowed under control are safe marked strings. We often drop the reference to L a m and simply say that a supervisor is “safe” if it is clear from the context which safety specification is being referred to.

As an example, consider plant G 1 of Fig. 3. Let L 2={u c 2}, which can be interpreted as capturing the safety property “ c 1 should never occur”. (As explained above, L 2 is obtained by taking the intersection of \(\mathcal {L}_{m}(G_{1})\) with the prefix-closed language containing all strings not including c 1, i.e., ε, u, c 2, u u, c 2 c 2, and so on.) L 2 is \(\mathcal {L}_{m}(G_{1})\)-closed. L 2 is also a strict subset of \(\mathcal {L}_{m}(G_{1})\). Therefore, L 2 is a valid admissible marked language. Consider the supervisor S 3 defined as follows:

It can be seen that S 3 is non-blocking for G 1 and satisfies \(\mathcal {L}_{m}(S_{3}/G_{1}) \subseteq L_{2}\); therefore, S 3 is safe w.r.t. L 2.

Remark 3

\(L_{am} \subseteq \mathcal {L}_{m}(G)\) is not a restrictive assumption, even for arbitrary L a m , i.e., not necessarily satisfying \(L_{am} = L_{a} \cap \mathcal {L}_{m}(G)\). If \(L_{am} \not \subseteq \mathcal {L}_{m}(G)\), we can set \(L_{am}^{\prime } := L_{am}\cap \mathcal {L}_{m}(G)\) (thus achieving the condition \(L_{am}^{\prime } \subseteq \mathcal {L}_{m}(G)\)) and ask for a supervisor such that \(\mathcal {L}_{m}(S/G) \subseteq L_{am}^{\prime }\). Any such supervisor S also satisfies \(\mathcal {L}_{m}(S/G) \subseteq L_{am}\), since \(L_{am}^{\prime } \subseteq L_{am}\). Conversely, any supervisor which satisfies \(\mathcal {L}_{m}(S/G) \subseteq L_{am}\) also satisfies \(\mathcal {L}_{m}(S/G) \subseteq L_{am}^{\prime }\), since \(\mathcal {L}_{m}(S/G) \subseteq \mathcal {L}_{m}(G)\). Therefore, asking for a supervisor such that \(\mathcal {L}_{m}(S/G) \subseteq L_{am}\) is equivalent to asking for a supervisor such that \(\mathcal {L}_{m}(S/G) \subseteq L_{am}^{\prime }\). Thus, we can assume \(L_{am} \subseteq \mathcal {L}_{m}(G)\) without loss of generality.

On the other hand, an arbitrary L a m is not necessarily \(\mathcal {L}_{m}(G)\)-closed, as the following example illustrates.

Consider the plant G 2 shown in Fig. 4, where \(\mathcal {L}_{m}(G_{2})=\{c,cc\}\). Let L 1={c c}. L 1 is not \(\mathcal {L}_{m}(G_{2})\)-closed. Indeed, \(c\in \overline {L_{1}}\cap \mathcal {L}_{m}(G_{2})\) but c∉L 1. It is easy to find a blocking supervisor that ensures \(\mathcal {L}_{m}(S/G_{2}) \subseteq L_{1}\). In fact, the most-restrictive supervisor S m r achieves \(\mathcal {L}_{m}(S_{mr}/G_{2}) = \emptyset \subseteq L_{1}\). This supervisor is blocking, because ε is in \(\mathcal {L}(S_{mr}/G_{2})\) but not in \(\overline {\mathcal {L}_{m}(S_{mr}/G_{2})} = \emptyset \). A non-blocking supervisor S that ensures \(\mathcal {L}_{m}(S/G_{2}) \subseteq L_{1}\) does not exist. Indeed, S can only disable transitions, and it cannot disable both transitions of G 2 because this would be blocking. Thus, at least one of c or c c must be in \(\mathcal {L}_{m}(S/G_{2})\). But \(c \in \mathcal {L}_{m}(S/G_{2})\) is not allowed, because this would imply \(\mathcal {L}_{m}(S/G_{2}) \not \subseteq L_{1}\). Therefore it must be that \(\mathcal {L}_{m}(S/G_{2})=\{cc\}\). But it is impossible to have S allow c c while it forbids c, since c is a marked prefix of c c. Indeed, this would require S being able to “unmark” some marked states of the plant, which it cannot do.

2.1.7 Maximal permissiveness and uniqueness

An important requirement in the basic supervisory control problem defined below (Section 2.1.8) is maximal permissiveness, namely, the fact that the supervisor must disable events only when strictly necessary to enforce the other requirements (non-blockingness or safety). This is a reasonable requirement, as it forces the supervisor to “disturb” the plant as little as possible, and only when strictly necessary. An important feature of the basic supervisory control framework is that a unique maximally-permissive supervisor always exists. As we shall see, this is not generally the case in the reactive synthesis framework. In this section, we establish this uniqueness property.

First, we define what it means for a supervisor to be more permissive than another supervisor. Consider a plant G and two supervisors S 1, S 2 for G. We say that S 1 is no more permissive than S 2 iff S 1(σ) ⊆ S 2(σ) for any σ. We say that S 2 is strictly more permissive than S 1 iff S 1 is no more permissive than S 2 and S 1≠S 2.

Now, consider an admissible marked language L a m satisfying: (1) \(L_{am} \subseteq \mathcal {L}_{m}(G)\) and (2) L a m is \(\mathcal {L}_{m}(G)\)-closed. A supervisor S which is non-blocking for G and safe w.r.t. L a m is said to be maximally-permissive with respect to G and L a m if there is no supervisor S ′ which is non-blocking for G, safe w.r.t. L a m , and strictly more permissive than S. Note that, a-priori, there could be more than one maximally-permissive supervisor, as the definition itself does not imply uniqueness. The theorem below shows that, for non-blockingness and safety, a unique maximally-permissive supervisor exists, provided that a supervisor exists at all.

Theorem 1

Consider a plant G, and an admissible marked language L am satisfying: (1) \(L_{am} \subseteq \mathcal {L}_{m}(G)\) and (2) L am is \(\mathcal {L}_{m}(G)\) -closed. If there exists a supervisor which is non-blocking for G and safe w.r.t. L am then there exists a unique maximally-permissive supervisor S mpnb which is non-blocking for G and safe w.r.t. L am .

Proof

The books (Cassandras and Lafortune 2008; Wonham 2015) and the original papers (Ramadge and Wonham 1987; Wonham and Ramadge 1987) contain proofs of Theorem 1, as well as statements of necessary and sufficient conditions for the existence of a safe and non-blocking supervisor and algorithmic procedures for computing S m p n b , given G and given an automaton representation of L a m . These proofs are normally done by defining the property of controllability of languages, showing that it is necessary and sufficient for the existence of a supervisor that exactly achieves a given language, and then proving the existence of the supremal controllable sublanguage.

For the reader interested in understanding the existence of a unique maximally permissive safe and non-blocking supervisor without reading more detailed treatments of supervisory control theory, we provide in Appendix A a direct proof based on disjunction of supervisors. This proof does not require the notions of controllable languages and of supremal controllable languages.

In the sequel, the unique maximally-permissive non-blocking and safe supervisor will be denoted by S m p n b and its associated closed-loop marked language by \(\mathcal {L}_{m}(S_{mpnb}/G) = L_{am}^{mpnb}\). Since S m p n b is non-blocking, then \(\mathcal {L}(S_{mpnb}/G) = \overline {L_{am}^{mpnb}}\). Moreover, as a consequence of the maximal permissiveness property of S m p n b , the language \(L_{am}^{mpnb}\) must contain the closed-loop marked language \(\mathcal {L}_{m}(S_{other}/G)\) of any safe and non-blocking supervisor S o t h e r for G wrt L a m .

As an example, consider again plant G 1 of Fig. 3, admissible marked language L 2={u c 2}, and supervisor S 3 defined above. S 3 is maximally-permissive w.r.t. G 1 and L 2. Indeed, any other supervisor, in order to be strictly more permissive than S 3, would have to either allow c 1 initially, which would violate safety w.r.t. L 2, or allow c 2 initially, which would violate non-blockingness, or allow c 1 after u, which again would be blocking.

Remark 4 (Non-uniqueness of supervisors achieving maximal behavior)

Note that, although S m p n b is unique, there are generally more than one supervisors that result in the same maximal closed-loop marked behavior \(L_{am}^{mpnb}\) since, by definition, S m p n b might enable infeasible controllable events. As an example, consider the plant G 3 shown in Fig. 5, where both c 1,c 2 are controllable events. Note that all states of G are accepting and as a result, \(\mathcal {L}_{m}(G)\) is prefix-closed and \(\mathcal {L}_{m}(G)=\mathcal {L}(G)=\{\varepsilon ,c_{1},c_{1} c_{2}\}\).

Let L a m :={ε,c 1}, which can be interpreted as “ c 2 should never occur”. The maximally-permissive supervisor w.r.t. G 3 and L a m defined as above is

Another, less permissive supervisor, is one that always disables c 2. Both these two supervisors, however, achieve the same maximal closed-loop behavior, which is exactly L a m .

2.1.8 BSCP-NB: basic supervisory control problem with non-blockingness

We are now ready to define the standard supervisory control problem:

Definition 1 (BSCP-NB)

Given DES G and admissible marked language \(L_{am} \subseteq \mathcal {L}_{m}(G)\), with L a m assumed to be \(\mathcal {L}_{m}(G)\)-closed, find, if it exists, or state that there does not exist, a supervisor for G which is non-blocking for G, safe w.r.t. L a m , and maximally-permissive.

Observe that from the safety property \(\mathcal {L}_{m}(S/G)\subseteq L_{am}\), we get \(\overline {\mathcal { L}_{m}(S/G)}\subseteq \overline {L_{am}}\). Also, from non-blockingness we know that \(\mathcal {L}(S/G) = \overline {\mathcal {L}_{m}(S/G)}\). These two properties imply \(\mathcal {L}(S/G) \subseteq \overline {L_{am}}\) and thus in BSCP-NB the controlled behavior always stays within the prefix-closure of the admissible marked behavior.

As an example, consider again plant G 1 of Fig. 3, admissible marked language L 2={u c 2}, and supervisor S 3 defined above. S 3 is a solution to this BSCP-NB instance, since it is non-blocking, safe, and maximally-permissive, as explained above.

It may happen that BSCP-NB has no solution. For instance, suppose that \(\mathcal {L}(G) = \overline { \{ucuc \}}\) and \(\mathcal {L}_{m}(G) = \{ uc, ucuc \}\) with E c ={c} and E u c ={u}. Take L a m ={u c}. Then no safe and non-blocking supervisor exists. Any supervisor will allow uncontrollable event u at the beginning of system operation. But enabling c after observing string u will violate safety, since string u c u will be in the closed-loop language. On the other hand, disabling c after observing string u causes deadlock. Hence, BSCP-NB has no solution in this example.

This shows that the set of uncontrollable events E u c plays a central role in BSCP-NB. Algorithmic procedures that solve BSCP-NB must account for both uncontrollability and non-blockingness, and these two requirements are interdependent.

We postpone the discussion of algorithms to solve BSCP-NB to Section 3.4.1.

Example 2 (Coffee Machine Revisited)

We revisit the coffee machine example at the beginning of Section 2.1, where the “plant” is the automaton G in Fig. 2. To obtain an instance of BSCP-NB, we formalize the specifications of safety and the two allowed recipes described earlier in the form of a language \(L_{am} \subseteq \mathcal {L}_{m}(G)\). It is not hard to see that L a m is marked by the non-blocking automaton H shown in Fig. 6. This automaton ensures that grinding precedes brewing, that no grinding occurs after brewing has started, and it allows either one of the two recipes: g b b or g g b b b. Its marked language is also a sublanguage of \(\mathcal {L}_{m}(G)\) as we have included self-loops for event c at the states where the coffee machine is not idle, consistent with the structure of G. Observe that automaton H needs to count the number of g and b events, something that is not done in G. It is also straightforward to verify from Fig. 6 that L a m satisfies the \(\mathcal {L}_{m}(G)\)-closure condition.

If E u c ={c} but all other events are controllable, then the solution of BSCP-NB achieves L a m exactly under control, i.e., the maximally permissive non-blocking supervisor S m p n b is such that \(\mathcal {L}_{m} (S_{mpnb}/G) = L_{am}\). In other words, in this simple example, the synthesis step is trivial since the specification language is exactly achievable by disabling g, b, or r at the right moment along each run of the plant. Hence, the closed-loop language \(\mathcal {L}(S_{mpnb}/G) = \overline {L_{am}}\) is also equal to \(\mathcal {L}(H)\). (This is of course not true in general.) Indeed, initially, S m p n b (ε)={c,b,g,r}: only c is feasible and it is uncontrollable, so it must be enabled; the other events are infeasible but added according to the definition of S m p n b . Then the supervisor issues the following control actions for the given observed strings of G: S m p n b (c)={c,g} (i.e., b and r must be disabled to allow grinding to start); S m p n b (c g)={c,g,b} (i.e., r must be disabled until a recipe is completed); S m p n b (c g b)={c,b} (i.e., b must continue since the first recipe is being followed); S m p n b (c g g)={c,b} (i.e., b must start since the second recipe is being followed); S m p n b (c g b b)={c,r} (i.e., r must start since the first recipe is completed); S m p n b (c g g b)={c,b} (i.e., b must continue since the second recipe is being followed); S m p n b (c g g b b)={c,b} (i.e., b must continue since the second recipe is being followed); S m p n b (c g g b b b)={c,r} (i.e., r must start since the second recipe is completed); S m p n b (c g b b r) = S m p n b (c g g b b b r)={c,b,g,r} (i.e., a new cycle can begin); and so forth.

If either event g, b, or r were uncontrollable, then BSCP-NB would have no solution. In the case where g∈E u c for instance, the strings c g n, n≥3, which are in \(\mathcal {L}(G)\), cannot be prevented by control, and they are outside \(\overline {L_{am}}\). Similarly for string c b if b is uncontrollable, and for string c r if r is uncontrollable.

2.2 Reactive synthesis

In reactive synthesis, we build correct-by-construction controllers from declarative specifications. Controllers are open dynamical systems. A controller is open in the sense that it has inputs and outputs, and its behavior (its dynamics) depends on the inputs that the controller receives. These inputs come from the controller’s environment (which may also be an open system, receiving as inputs the controller’s outputs). A specification is declarative in the sense that it states how a controller must behave, but is not concerned with its internal structure. Rather, the specification only describes the desired behavior of the controller on the interface level, i.e., using its sets of inputs and outputs.

Let us illustrate the reactive synthesis framework by re-stating the coffee maker example (Section 2.1) in this framework. Consider the interface of the controller of a coffee maker that is depicted in Fig. 7.

The controller is meant to trigger the mechanical components of the coffee maker. The interface shows that we have one input signal, c. In this example, it is supposed to represent whether the user of the coffee maker has pressed the coffee button. There are also two output signals, namely b and g. While b is supposed to represent whether the brewing unit of the coffee maker is activated, g represents whether the grinding unit is activated.

In reactive synthesis, we assume that the controller evolves in steps, i.e., the controller communicates via input and output signals, which all have some value assigned in every time step. For simplicity, we assume Boolean signals where all values are Boolean. Controllers can therefore be viewed as state machines of type Moore or Mealy. In typical targets for reactive synthesis such as on-chip controllers, this assumption is well-justified, as there is typically a global clock generator in such systems. In the scope of our coffee maker, which serves mainly as an introductory example, we just choose a reasonable step duration.

A reactive system has no designated time of going out-of-service, i.e., for every number of time steps n, we should not synthesize a controller that only works under the assumption that it runs for at most n steps, as letting it run for n+1 time steps is also conceivable. To abstract from this problem, we assume that the controller never goes out of service, and thus runs for an infinite duration. Such an execution produces a trace, which describes in which steps which inputs and outputs are set (i.e., have value t r u e). Formally, a trace is an infinite word w = w 0 w 1 w 2…, where for every \(i \in \mathbb {N}\), we have w i ⊆A P I ∪A P O , where A P I is the set of input signals, and A P O is the set of output signals. In the case of the coffee maker, A P I ={c} and A P O ={g,b}. The following example shows an example trace of a coffee maker controller:

In this trace, the coffee button is pressed in the second step, and grinding is performed in the two steps starting with the second one. Then, the brewing unit of the coffee maker is triggered for three steps.

This behavior of the controller could be one that satisfies its specification. For example, a specification for a coffee maker controller could be that once the coffee button is pressed, grinding should happen for two steps, and afterwards brewing should be done for three time steps while the grinding unit is idle.

To now perform synthesis from this specification, we need to formalize it. In reactive synthesis, this is typically done by describing the specification in a logic. The logic CTL ⋆ (Emerson and Halpern 1986) is well-suited for this purpose and extends standard Boolean logic by temporal operators and path quantifiers that intuitively allow us to connect the system’s signal valuations in one step with the actions in other, future time steps. In the context of logic, we also call the signals atomic propositions. (CTL stands for Computation Tree Logic.) The informal specification from the previous paragraph would be formalized into CTL ⋆ as follows:

The formula starts with the path quantifier A, which denotes that the expression right of the operator should hold along all executions of a system to be synthesized. It is followed by the temporal operator G, which is called the globally operator. For some formula G ϕ to hold at some point in the execution of a system, ϕ needs to hold for all steps from that point onward.

A specification is required to hold right from the start of the system. Thus, prefixing our coffee maker specification ψ with A G means that the implication c→… has to hold at every step of the system’s execution. The implication in turn describes that (g∧X g∧…) shall happen whenever we have c, i.e., the coffee button has been pressed. The consequent of the implication now is (g∧X g∧X X((b∧¬g)∧X(b∧¬g)∧X X(b∧¬g)), which is a Boolean formula in which the temporal operator X (next) is used. It describes that we need to look one step into the future in the trace of the system to test if some sub-formula holds. So X g holds in the first step in a trace of the system if g holds in the second step of the trace. Likewise, X X g holds in the first step in a trace if g holds in the third step of the trace. This example also shows that the operators in CTL ⋆ can be chained, which makes it a rich modeling formalism for specifications. Note that the consequent of the implication in ψ describes the informal statement of what shall happen upon a coffee button press from above in a formal way.

To actually synthesize a system from a specification, the specification needs to be realizable, i.e., there has to exist a system implementation for the given interface that ensures that every trace of the system satisfies the specification. Most synthesis algorithms also check realizability, i.e., they do not only synthesize an implementation for realizable specifications, but also detect unrealizability. As a consequence, there is often no distinction between the two steps in the literature.

While testing realizability appears to be trivial and unnecessary, in the practice of synthesis, it is not. For example, the coffee maker specification from above is unrealizable, and despite its short length, this fact is easily overlooked. The reason for unrealizability here is that we might press the coffee button in the first two successive steps of the system’s execution. The specification part X g then requires that grinding is performed in the third step (as the implication is triggered by the second button press), but at the same time the specification part X X((b∧¬g)∧…) requires that grinding does not happen in the third step. This is a contradiction that the system to be synthesized cannot avoid, as the input is not under its control. Therefore, this specification is unrealizable.

There are two ways to fix the specification. One is to allow the system to delay the production of the next cup until a grinding and brewing cycle has finished. This can be done using the eventually operator ( F) of CTL ⋆. Intuitively, a CTL ⋆ formula F ϕ holds at a point in a trace of the system if at some point in the future, ϕ holds. The modified specification then looks as follows:

It is now realizable, partly because there is no requirement that the number of coffees produced matches the number of button presses. Note that the eventually operator does not impose a bound on the number of steps by which a brewing cycle might be delayed. Thus, a system that satisfies this specification could react with a delay that gets longer and longer the more coffees are made. However, none of the contemporary synthesis algorithms produces such implementations, as such a behavior would require an infinite-state implementation, but they only compute finite-state ones. As it can be shown that whenever there exists an implementation for a CTL ⋆ specification, there also exists a finite-state one, this is also not necessary. So using the eventually operator in this context instead of imposing a maximal bound on the number of steps until when grinding should start is reasonable.

Another possibility to fix the specification is to add an assumption to the specification that expresses that the coffee button cannot be pressed when brewing or grinding is already happening. The new realizable specification would be:

An assumption of course always has to be reasonable in practice to make sense in synthesis. If we know that the coffee maker in which the controller is supposed to work ensures that the button cannot be pressed while the maker is running (or alternatively ignores the button press), then the assumption is justified.

After this short introduction to the aims of reactive synthesis, let us now discuss more formally how we specify the intended behavior of the system to be synthesized and how such a system is actually represented.

2.2.1 Computation trees

A reactive system has to satisfy a specification regardless of the input to the system. To get an overview about the possible behaviors of a system, for the scope of synthesis, we typically view a system implementation as a computation tree, as these describe all system behaviors of a reactive system at once. Formally, for some interface (A P I ,A P O ) of a reactive system, a computation tree is a tuple 〈T,τ〉, where \(T = (2^{\mathsf {AP}_{I}})^{*}\) and \(\tau : T \rightarrow 2^{\mathsf {AP}_{I} \cup \mathsf {AP}_{O}}\). The tree describes all the possible traces by having τ map every input sequence to the system to an output signal valuation that the system produces after having read the input sequence. Without loss of generality, we assume that every node in the computation tree is also labeled by the last input, i.e., we have \(\phantom {\dot {i}\!}\tau (t_{0} \ldots t_{n})|_{\mathsf {AP}_{I}} = t_{n}\) for every \(t_{0} \ldots t_{n} \in (2^{\mathsf {AP}_{I}})^{+}\). While labeling the nodes in the tree according to the last direction seems to be unnecessary, it allows us to define the logic CTL ⋆ below in a way that generalizes to applying the logic to Kripke structures (which we define in Section 3.2.1) as well. Note that \(\phantom {\dot {i}\!}\tau (\epsilon )|_{\mathsf {AP}_{I}}\) is not constrained in any way and can be freely set by the computation tree.

Figure 8 shows an example computation tree of a coffee maker controller.

2.2.2 The temporal logic CTL ⋆

Let A P be a set of atomic propositions. Expressions in CTL ⋆ can either be state formulas or path formulas. We define the set of path formulas in the temporal logic CTL ⋆ inductively by the following rules:

-

every CTL ⋆ state formula is also a CTL ⋆ path formula

-

For every CTL ⋆ path formula ψ, we have that ¬ψ, G ψ, F ψ, and X ψ are also CTL ⋆ path formulas;

-

For all CTL ⋆ path formulas ψ and ψ ′, we have that ψ U ψ ′, ψ R ψ ′, ψ∨ψ ′, and ψ∧ψ ′ are also CTL ⋆ path formulas.

The set of state formulas is defined as follows:

-

For every p∈A P, p is a CTL ⋆ state formula;

-

For all CTL ⋆ state formulas ϕ and ϕ ′, we have that ϕ∨ϕ ′, ϕ∧ϕ ′ and ¬ϕ ′ are also CTL ⋆ state formulas;

-

Given a CTL ⋆ path formula ψ, A ψ and E ψ are CTL ⋆ state formulas.

The semantics of CTL ⋆ is defined over computation trees. Let A P be a set of atomic propositions for A P=A P I ∪A P O , ψ be a CTL ⋆ formula over A P, and 〈T,τ〉 be a computation tree. A branch in 〈T,τ〉 starting in some node t∈T is defined to be a sequence b = b 0 b 1 b 2… such that (1) b 0 = t, (2) for every \(i \in \mathbb {N}\), we have b i ∈T, and (3) for every \(i \in \mathbb {N}\), we have b i+1 = b i x for some x⊆A P I . We denote the suffix of a string b starting at position \(j \in \mathbb {N}\) by b j, i.e., for b = b 0 b 1 b 2…, we have b j = b j b j+1 b j+2….

Given some node t = t 0…t n of 〈T,τ〉, we evaluate the validity of a CTL ⋆ state formula at point t by recursing over the structure of the CTL ⋆ state formula (where ψ is a CTL ⋆ path formula and ϕ and ϕ ′ are CTL ⋆ state formulas):

-

〈T,τ〉,t⊧p for some p∈A P I ∪A P O if p∈τ(t);

-

〈T,τ〉,t⊧¬ϕ if and only if not 〈T,τ〉,t⊧ϕ;

-

〈T,τ〉,t⊧ϕ∨ϕ ′ if and only if 〈T,τ〉,t⊧ϕ or 〈T,τ〉,t⊧ϕ ′;

-

〈T,τ〉,t⊧ϕ∧ϕ ′ if and only if 〈T,τ〉,t⊧ϕ and 〈T,τ〉,t⊧ϕ ′;

-

〈T,τ〉,t⊧A ψ if for all branches b starting from t, we have 〈T,τ〉,b⊧ψ;

-

〈T,τ〉,t⊧E ψ if for some branch b starting from t, we have 〈T,τ〉,b⊧ψ.

Likewise, given some branch b = b 0 b 1… of 〈T,τ〉, we evaluate the validity of a CTL ⋆ path formula on b by recursing over the structure of the CTL ⋆ path formula (where ψ and ψ ′ are CTL ⋆ path formulas and ϕ is a CTL ⋆ state formula):

-

〈T,τ〉,b⊧ϕ if and only if 〈T,τ〉,b 0⊧ϕ;

-

〈T,τ〉,b⊧¬ψ if and only if not 〈T,τ〉,b⊧ψ;

-

〈T,τ〉,b⊧ψ∨ψ ′ if and only if 〈T,τ〉,b⊧ψ or 〈T,τ〉,b⊧ψ ′;

-

〈T,τ〉,b⊧ψ∧ψ ′ if and only if 〈T,τ〉,b⊧ψ and 〈T,τ〉,b⊧ψ ′;

-

〈T,τ〉,b⊧X ψ if and only if 〈T,τ〉,b 1…⊧ψ;

-

〈T,τ〉,b⊧G ψ if and only if for all \(j \in \mathbb {N}\), we have 〈T,τ〉,b j⊧ψ;

-

〈T,τ〉,b⊧F ψ if and only if for some \(j \in \mathbb {N}\), we have 〈T,τ〉,b j⊧ψ;

-

〈T,τ〉,b⊧ψ U ψ ′ if and only if for some \(j \in \mathbb {N}\), we have 〈T,τ〉,b j⊧ψ ′, and for all 0≤i<j, we have 〈T,τ〉,b i⊧ψ;

-

〈T,τ〉,b⊧ψ R ψ ′ if either for all \(j \in \mathbb {N}\), we have 〈T,τ〉,b j⊧ψ ′, or there exists some \(j \in \mathbb {N}\) such that 〈T,τ〉,b j⊧ψ, and for all i≤j, we have 〈T,τ〉,b i⊧ψ ′.

We define the set of trees for which all children of the root node satisfy some CTL ⋆ state formula ϕ to be the models of ϕ.Footnote 1

Given some CTL ⋆ state formula ϕ, we say that ϕ is realizable for some interface \(\mathcal {I} = (\mathsf {AP}_{I},\mathsf {AP}_{O})\) if there exists an \(2^{\mathsf {AP}_{O} \cup \mathsf {AP}_{I}}\)-labeled \(2^{\mathsf {AP}_{I}}\)-tree that is a model of ϕ (and that copies the last input correctly to its node labels).

CTL is the subset of CTL ⋆ obtained by restricting the path formulas to be X ϕ, F ϕ, G ϕ, and ϕ U ϕ ′, where ϕ,ϕ ′ are CTL state formulas. LTL is the subset of CTL ⋆ consisting of the formulas A ϕ in which the only state subformulas in ϕ are atomic propositions.

Definition 2 (Realizability Problem)

Given some system interface \(\mathcal {I} = (\mathsf {AP}_{I},\mathsf {AP}_{O})\) and some CTL ⋆ state formula ϕ (the specification), the realizability problem is to test if there exists some computation tree 〈T,τ〉 with \(T = (2^{\mathsf {AP}_{I}})^{*}\) and \(\tau : T \rightarrow 2^{\mathsf {AP}_{I} \cup \mathsf {AP}_{O}}\) that copies the respective last input to its node labels correctly and such that 〈T,τ〉 is a model of ϕ.

2.2.3 Transducers

The definition of the realizability problem above has one slight problem: while it clearly defines what constitutes a computation tree that represents a solution to the synthesis problem, such computation trees have infinitely many nodes. Thus, the model is not directly usable for actually synthesizing systems, which have to be finite-state in order to be implementable in the field. As a remedy to this problem, we define (deterministic) transducers here, which serve as finite generators for computation trees. It can be shown that for every realizable specification, there exists a computation tree that is generated by a transducer, and thus for the scope of synthesis, it suffices to search for a transducer that generates a suitable computation tree.

Formally, a transducer over some set of input atomic propositions A P I and output atomic propositions A P O is defined as a tuple \(\mathcal {T} = (S,2^{\mathsf {AP}_{I}},2^{\mathsf {AP}_{I} \cup \mathsf {AP}_{O}},\delta ,s_{0},L)\), where S is a (finite) set of states, \(\delta : S \times 2^{\mathsf {AP}_{I}} \rightarrow S\) is the transition function, s 0∈S is the initial state of the system, and \(L : S \rightarrow 2^{\mathsf {AP}_{I} \cup \mathsf {AP}_{O}}\) assigns to each state its labeling. We require that the states always represent the last input to the transducer, i.e., we have \(\phantom {\dot {i}\!}L(s)|_{\mathsf {AP}_{I}} = x\) for every s∈S such that for some s ′∈S, we have δ(s ′,x) = s. The definition of a transducer corresponds to the definition of a Mealy machine that is common in the practice of hardware design, but with the addition that the transducer always produces the last output.

We say that some word \(w = w_{0} w_{1} \ldots \in (2^{\mathsf {AP}_{O}} \times 2^{\mathsf {AP}_{I}})^{\omega }\) is a trace of \(\mathcal {T}\) if there exists some sequence of states π = π 0 π 1…∈S ω such that π 0 = s 0, and for all \(i \in \mathbb {N}\), we have π i+1 = δ(π i ,x) for some x⊆A P I and w i = L(π i ). We call π a run of the transducer in this context. We can obtain a computation tree 〈T,τ〉 from a transducer \(\mathcal {T} = (S,2^{\mathsf {AP}_{I}},2^{\mathsf {AP}_{I} \cup \mathsf {AP}_{O}},\delta ,s_{0},L)\) by setting \(T = (2^{\mathsf {AP}_{I}})^{*}\) and τ(t 0 t 1…t n ) = L(δ(…δ(δ(s 0,t 0),t 1),…,t n )) for all t 0 t 1…t n ∈T.

To illustrate the concept of transducers, Fig. 9 shows an example transducer for a coffee maker controller that could have the same set of traces as the computation tree in Fig. 8. As the computation tree in Fig. 8 is not fully shown (after all, it is infinite), we can however not be sure about that.

2.2.4 Reactive Synthesis Problem (RSP)

Definition 3 (RSP)

Given some system interface \(\mathcal {I} = (\mathsf {AP}_{I},\mathsf {AP}_{O})\) and some CTL ⋆ state formula ϕ (the specification), the reactive synthesis problem (RSP) for \(\mathcal {I}\) and ϕ is to compute a transducer over \(\mathcal {I}\) whose computation tree satisfies ϕ whenever it exists, and to deduce that no such transducer exists whenever this is the case.

We postpone a discussion of algorithms to solve RSP to Section 3.4.2.

2.2.5 Maximal permissiveness in RSP

The reader may have noted that in the definition of the supervisory control problem, we are concerned with computing maximally permissive controllers, but in the reactive synthesis problem, we just search for any controller that satisfies the specification. There are two reasons for this difference. First of all, the reactive synthesis problem was originally defined in this form by Church (1963). The second, more important reason is however that in general, maximally-permissive controllers do not exist.

To actually discuss maximally-permissiveness in the context of the reactive synthesis problem, we first of all need to change our transducer definition, as the transducers currently dealt with are deterministic. For a transducer \(\mathcal {T} = (S,2^{\mathsf {AP}_{I}},2^{\mathsf {AP}_{O}},\delta ,s_{0},L)\), we redefine δ to map from \(S \times 2^{\mathsf {AP}_{I}}\) to a subset of S. This way, whenever the controller is in some state s and reads some input x⊆A P I , then it can transition to any of the states in δ(s,x). We require that for all s∈S and x⊆A P I , δ(s,x) is non-empty. We furthermore allow more than one initial state and modify the definition of a computation tree of account for these facts.

Computation trees for such non-deterministic transducers \(\mathcal {T} = (S,2^{\mathsf {AP}_{I}},2^{\mathsf {AP}_{I} \cup \mathsf {AP}_{O}},\delta ,s_{0},L)\) are then tuples 〈T,τ〉 with T⊆S ∗ such that for some s 0∈S 0, we have:

-

1.1.

τ(𝜖) = L(s 0)

-

1.2.

\(|\{ s \in S \mid s \in T \}| = |2^{\mathsf {AP}_{I}}|\) and \(\{L(s)|_{2^{\mathsf {AP}_{I}}} : s \in S \wedge s \in T\} = 2^{\mathsf {AP}_{I}}\)

-

1.3.

For all s∈S with s∈T, we have \(s \in \delta (s_{0},L(s)|_{2^{\mathsf {AP}_{I}}})\)

-

2.1.

For all t = t 0…t n ∈T∖{𝜖}, we have τ(t 0…t n ) = L(t n )

-

2.2.

For all t = t 0…t n ∈T∖{𝜖}, we have that \(|\{ s \in S \mid ts \in T \}| = |2^{\mathsf {AP}_{I}}|\) and \(\{L(ts)|_{2^{\mathsf {AP}_{I}}} : ts \in T\} = 2^{\mathsf {AP}_{I}}\)

-

2.3.

For all t = t 0…t n ∈T∖{𝜖} with n≥1, we have \(t_{n} \in \delta (t_{n-1}, L(t_{n})|_{2^{\mathsf {AP}_{I}}})\).

Note that we actually only have three different conditions, but for the sake of completeness need one copy of each condition for the root and one copy for the other nodes. The conditions together ensure that all the possible computation trees of a transducer are input-complete, i.e., from every node, they have one possible successor for every next input. We say that a non-deterministic transducer satisfies some CTL* state formula ϕ if every input-complete computation tree induced by the transducer satisfies ϕ (at the root).

We furthermore say that two computation trees 〈T,τ〉 and 〈T ′,τ ′〉 are isomorphic if there exists some bijective function f:T→T ′ with |f(t)|=|t| for all t∈T and τ(t) = τ ′(f ′(t)) for all t∈T. Isomorphic trees effectively represent the same behavior of a reactive system although the internal structure of the transducers from which the trees are possibly generated may be different.

We call a non-deterministic transducer maximally permissive for some CTL* state formula specification ϕ and interface \(\mathcal {I} = (\mathsf {AP}_{I},\mathsf {AP}_{O})\) if (1) the transducer branches over A P I and satisfies ϕ on all trees induced by the transducer, and (2) every input-responsive computation tree for \(\mathcal {I}\) that satisfies ϕ (at the root) has an isomorphic computation tree that is induced by the transducer.

Note that maximally permissive finite-state controllers/transducers do not exist in general for RSP. For example, let A P I ={r}, A P O ={g}, ϕ=A G F g, and \(\mathcal {T} = (S,2^{\mathsf {AP}_{I}},2^{\mathsf {AP}_{I} \cup \mathsf {AP}_{O}},\delta ,s_{0},L)\) be any transducer that satisfies ϕ. Since \(\mathcal {T}\) satisfies ϕ, there has to be some upper bound \(b \in \mathbb {N}\) on the number of steps until g is set to true by the controller for the first time, as otherwise, there exists some path in some computation tree induced by \(\mathcal {T}\) on which G F g is not satisfied. However, since a controller that sets g to true every (b+1)th cycle satisfies ϕ as well, \(\mathcal {T}\) cannot be maximally permissive. As we started with an arbitrary finite-state transducer, this proves that no controller can be maximally permissive.

Section 3.2.5 contains a more detailed discussion of maximal permissiveness in the reactive synthesis context.

3 Bridging the gap

In this section, we discuss how to bridge the gap between the synthesis problems considered in supervisory control and reactive synthesis. We do this by establishing relations (e.g., reductions) between some specific problems studied in these frameworks. The general situation is described in Fig. 10, where the basic supervisory control problem (BSCP-NB) and the reactive synthesis problem (RSP) are the cases at the cliffs of the gap. We introduce problems that conceptually lie in between BSCP-NB and RSP in order to bridge the gap. These problems always differ in one aspect from their neighbors, and we can perform reductions between these problems. As a result, we can move gently between the BSCP-NB and RSP problems, which simplifies understanding the concepts to follow.

However, our bridge does not exactly meet in the middle. The reason is that the aim of supervisory control and the aim of reactive synthesis slightly differ. In supervisory control, we always want our supervisor to be maximally permissive (being a “parent”), as it should only block undesired actions. In reactive synthesis, on the other hand, where maximal permissiveness is unachievable in general, we want our controller to actively enforce certain properties, possibly at the expense of preventing certain overall system behavior that is unproblematic. This mismatch, and the lack of study of the general reactive synthesis problem with maximal permissiveness, RSCP max (see Definition 8 that follows), prevent us from performing a sequence of reductions that map the problems completely onto each other.

Our focus in this paper is on the left-side of the bridge: we present formal reductions from BSCP-NB to a special instance of RSCP max. Specifically, we show in Section 3.1 that BSCP-NB is equivalent to a simpler supervisory control problem in which only non-blockingness is required, called SSCP, and which simplifies the reduction to the reactive synthesis setting. In Section 3.2 we define a reactive synthesis problem with an explicit notion of plants, called RSCP. This makes it easier to capture supervisory control problems where the plant is an input to the problem. RSCP does not generally admit maximally-permissive solutions, but does so for the non-blocking requirement, which is the only requirement of SSCP. In Section 3.3 we show how SSCP can be reduced to a variant of RSCP which requires maximal permissiveness.

Regarding the right side of the bridge, we discuss in Section 3.5 the links between reactive synthesis with plants and reactive synthesis without plants.

The center of the bridge is represented as an “automaton vehicle.” This vehicle captures the fact that at an algorithmic level, the problems on either side of the gap are solved by applying automata-theoretic techniques, as discussed in Section 3.4.2.

3.1 Simplifying the supervisory control problem

In view of reducing BSCP-NB to the reactive synthesis framework, we first reduce BSCP-NB to a simpler problem. In particular, we will eliminate the safety specification L a m by incorporating it into the plant. This can be done by taking as new plant the product of the original plant G and an automaton recognizing L a m . The simpler problem asks for a non-blocking supervisor for the new plant. We next formalize this idea.

3.1.1 Incorporating safety into the plant

Let G=(X,x 0,X m ,E,δ) be a DES plant. Let \(L_{am}\subseteq \mathcal {L}_{m}(G)\) and let L a m be \(\mathcal { L}_{m}(G)\)-closed. Let \(A=(X^{A},{x_{0}^{A}},{X_{m}^{A}},E,\delta ^{A})\) be a deterministic finite-state automaton such that \(\mathcal { L}_{m}(A)=L_{am}\) and \(\mathcal {L}(A) = E^{*}\). We can assume without loss of generality that A is complete in the sense that its transition function δ A is total. Therefore, every string in E ∗ has a unique run in A, although only some runs will generally end up in a marked state; in fact, A is generally a blocking automaton, since strings outside \(\overline {L_{am}}\) will never reach a marked state. (The fact that \(\mathcal {L}(A) \not \subseteq \mathcal {L}(G)\) is not a problem; this will become apparent below.)

The product of G and A, denoted by G×A, is defined to be the automaton

such that

It follows from the construction of A and the assumptions on L a m and A that

G and G×A have the same set of events E, thus also the same subsets of controllable and uncontrollable events. Therefore, any supervisor S for G is also a supervisor for G×A, and vice versa. This allows us to state the following result.

Theorem 2

Let G=(X,x 0 ,X m ,E,δ), \(L_{am}\subseteq \mathcal {L}_{m}(G)\) , and assume that L am is \(\mathcal {L}_{m}(G)\) -closed. Let A be a complete DFA such that \(\mathcal {L}_{m}(A)=L_{am}\). Let S be a supervisor for G, and therefore also for G×A. Then, the following statements are equivalent:

-

1.

S solves BSCP-NB for plant G with respect to admissible marked language L am .

-

2.

S solves BSCP-NB for plant G×A with respect to admissible marked language \(\mathcal {L}_{m}(G \times A)\).

Proof

The proof is given in Appendix B.

To the best of our knowledge, the result in Theorem 2 does not appear explicitly in the published literature, although it can be inferred rather straightforwardly from the algorithmic procedure for solving BSCP-NB originally presented in Ramadge and Wonham (1987), Wonham and Ramadge (1987). Our proof in Appendix B is direct and relies only on the concepts introduced so far in this paper.

3.1.2 SSCP: simple supervisory control problem

Theorem 2 allows to reduce BSCP-NB to a simpler problem, namely, that of finding a maximally-permissive non-blocking supervisor for a given plant, with no external admissible marked behavior. We call the resulting problem the Simple Supervisory Control Problem (SSCP) and restate it formally:

Definition 4 (SSCP)

Given DES G, find (if it exists, or state that none exists) a maximally-permissive non-blocking supervisor for G, that is, a supervisor S which is non-blocking for G, and such that there is no supervisor S ′ which is non-blocking for G and strictly more permissive than S.

Corollary 1

BSCP-NB and SSCP are equivalent problems, i.e., each one can be reduced to the other with a polynomial-time reduction.

Proof

SSCP is equivalent to the special case of BSCP-NB with \(L_{am} := \mathcal {L}_{m}(G)\). This is because \(\mathcal { L}_{m}(G)\) is trivially \(\mathcal {L}_{m}(G)\)-closed and \(\mathcal {L}_{m}(S/G)\subseteq \mathcal {L}_{m}(G)\) always holds. Obviously this special case of BSCP-NB can be reduced to BSCP-NB. Conversely, Theorem 2 demonstrates that BSCP-NB can be reduced to this special case of BSCP-NB. This reduction is polynomial-time because G×A can be computed in polynomial time from G and A. Therefore all three problems, BSCP-NB, BSCP-NB with \(L_{am} := \mathcal {L}_{m}(G)\), and SSCP are equivalent with polynomial-time reductions.

It also follows from the above results and Theorem 1 that if a solution to SSCP exists, then this solution is unique, i.e., the maximally-permissive non-blocking supervisor is unique.

3.1.3 Finite-memory, state-based supervisors

We will use SSCP to establish a precise connection between supervisory control and reactive synthesis. In this regard, we prove a useful property for the type of supervisors that need to be considered in solving SSCP.

A supervisor is a function S:E ∗→2E. The domain of this function is E ∗, which is an infinite set. This makes it a priori possible for S to require infinite memory. Fortunately, it can be shown that finite-memory, and in particular state-based supervisors, are sufficient for SSCP.

Definition 5

Let G=(X,x 0,X m ,E,δ) be a DES plant with E = E c ∪E u c and let S:E ∗→2E be a supervisor for G. S is said to be state-based if