Abstract

Features within a dataset play a significant role; however, using high-dimensional data in intrusion detection increases resource utilization, prediction time, and model weight. This paper aims to design a novel lightweight intrusion detection system in two phases using swarm intelligence-based techniques. In the first phase, essential features are selected using the particle swarm optimization algorithm while accounting for the imbalanced dataset. In the second phase, the ant colony optimization algorithm is used to extract information-rich and uncorrelated features. Additionally, a genetic algorithm is employed to fine-tune each detection model. The proposed model’s performance is evaluated on different base and ensemble classifiers, and xgboost is observed to achieve the best accuracy, with 90.38%, 92.63%, and 97.87% on the NSL-KDD, UNSW-NB15, and CSE-CIC-IDS2018 datasets, respectively. The proposed model also outperforms traditional dimensionality reduction and state-of-the-art approaches, with statistical validation. The paper also analyses the objective function of each metaheuristic algorithm used, applying convergence graphs, box plots, and swarm plots.

1 Introduction

In the modern era, the Internet plays a vital role in connecting individuals worldwide. With the vast growth of the Internet, the chance of cyber-attacks increases and harms individuals at the organization and personal levels. Cyber-attackers exhibit high proficiency in exploiting vulnerabilities and causing harm to individuals. This harm covers a wide range of consequences, which include data breaches, online harassment, financial losses, intellectual property theft, cyberbullying, and disruptions to essential services like healthcare. Additional resources are being utilized and assigned to defend against these cyber-attacks or abnormal behavior in the network [1]. For this reason, cyber-security is gaining popularity and is necessary to protect organizations and individuals from cyber-attacks. Various network security measures have been proposed to mitigate these cyber-attacks, including firewalls, antivirus software, and malware programs, which serve as an initial line of defense [2]. Still, these security measures cannot properly protect organizations and individuals, especially from the contemporary cyber-attacks on the network [1].

An intrusion detection system is a security system that monitors, analyses the network traffic, and compares it with predefined patterns. If the match (or mismatch) happens between the observed traffic and predefined patterns, an alert signal is generated (based on the matching or mismatching criteria) and sent to the network administrator to take appropriate action. Based on the detection methods, two types of intrusion detection systems have been developed: signature-based intrusion detection systems (SIDS) and anomaly-based intrusion detection systems (AIDS). SIDS compares and analyses the observed network traffic with the pre-defined signature of the malicious behavior stored in the database and triggers an alarm signal when malicious traffic is detected in the network. It depends on the signature of the attack traffic behavior and fails for a new type of attack or zero-day attacks. On the other hand, AIDS overcomes the issues of SIDS by creating a baseline model that depends on the behavior of normal network traffic. If the observed network traffic deviates from the usual baseline behavior of the model, an alarm is generated and sent to the network administrator. It is generally useful in detecting novel categories of attacks or zero-day attacks.
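
The contrast between the two detection styles can be illustrated with a minimal sketch. It is not taken from any system discussed in this paper: the signature strings, the packets-per-second feature, and the z-score threshold are all hypothetical choices made for illustration.

```python
from statistics import mean, stdev

# Hypothetical signature database: payload substrings assumed to be malicious.
KNOWN_SIGNATURES = {"DROP TABLE", "../../etc/passwd"}

def signature_alert(payload: str) -> bool:
    """SIDS-style check: alert only if a stored signature occurs in the payload."""
    return any(sig in payload for sig in KNOWN_SIGNATURES)

def fit_baseline(normal_values):
    """AIDS-style baseline: mean and standard deviation of one feature on normal traffic."""
    return mean(normal_values), stdev(normal_values)

def anomaly_alert(value, baseline, z_threshold=3.0):
    """Alert when the observation lies more than z_threshold deviations from the baseline."""
    mu, sigma = baseline
    return abs(value - mu) > z_threshold * sigma

# Toy packets-per-second readings observed during normal operation.
normal_packets_per_second = [98, 102, 100, 97, 103, 101, 99, 100]
baseline = fit_baseline(normal_packets_per_second)

print(signature_alert("id=1; DROP TABLE users"))  # matches a stored signature
print(anomaly_alert(500, baseline))               # far from the normal baseline
```

A payload with no stored signature passes the first check even if it is malicious, which is the zero-day weakness described above; the baseline check does not need a signature but depends on how well the normal traffic is modelled.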

In this paper, an intrusion detection system model is designed using an intelligent approach. Most traditional intrusion detection datasets are highly imbalanced and contain many features, which significantly degrades the attack detection performance of the system [3]. Learning models trained on this many features take a long time and produce worse classification results [4]. Moreover, the model’s weight is one of the most crucial factors influencing the accuracy and efficiency of any intelligent model [5]. It depends on the number of features in the data fed as input to the model. Efficient resource (or power) consumption is a significant concern for IoT networks, where numerous devices are interconnected [6]. Because resources are limited, the available resources must be used efficiently. To mitigate these resource constraints, there is a significant need to design a lightweight intrusion detection system that can detect anomalies with high accuracy while using resources efficiently [7]. Most traditional IDS designs do not consider the weight of the IDS model and are heavyweight in nature [8]. It is important to note that the model’s weight is minimized by extracting the most appropriate features from the network data, which enhances the attack detection accuracy and reduces the detection model’s prediction time and false alarm rate (FAR).

2 Research gap

State-of-the-art works commonly employ unsupervised techniques that select a substantial number of features in IDS design, which reduces the attack detection rate. Many studies [4, 9,10,11,12,13,14,15] also overlook the imbalanced nature of datasets when selecting relevant features, because they use accuracy and error rate in the fitness function. Furthermore, the existing models [10, 16,17,18] are evaluated with only one classifier, which may lead to biased performance. In addition, several works [4, 9,10,11, 14, 15, 19,20,21,22,23] do not address hyperparameter tuning of the detection models. As a result, the attack detection rate of these models is very low. Additionally, when existing detection models such as [5, 6, 18, 24, 25] are fine-tuned, they often rely on grid (exhaustive) search and random search techniques, which have high computational cost and lack interpretability.
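
To illustrate why accuracy can mislead on imbalanced data, the following minimal sketch compares accuracy with per-class and support-weighted F1 on toy labels. The 95/5 class split and the degenerate "never alert" detector are assumptions chosen for illustration and do not come from any of the cited datasets.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def f1_per_class(y_true, y_pred):
    """F1 = 2*TP / (2*TP + FP + FN), computed separately for each true class."""
    scores = {}
    for cls in set(y_true):
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
        fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores[cls] = 2 * tp / denom if denom else 0.0
    return scores

def weighted_f1(y_true, y_pred):
    """Average of per-class F1 scores, weighted by each class's support."""
    support = Counter(y_true)
    scores = f1_per_class(y_true, y_pred)
    return sum(support[c] * scores[c] for c in support) / len(y_true)

# Toy data: 95 normal flows, 5 attack flows, and a detector that never alerts.
y_true = ["normal"] * 95 + ["attack"] * 5
y_pred = ["normal"] * 100

print(accuracy(y_true, y_pred))
print(f1_per_class(y_true, y_pred))
print(weighted_f1(y_true, y_pred))
```

On this toy input the accuracy is 0.95 even though the attack class has an F1 of 0, and the weighted F1 falls below the accuracy. A fitness function built on accuracy alone would therefore reward a feature subset that misses every attack.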

Some state-of-the-art works use exhaustive search for feature selection, which examines all possible feature combinations and selects the best result; this is an extremely naïve method with very high time complexity. Most such feature selection problems are NP-hard, with high computational cost and processing time. Metaheuristic algorithms are used to tackle NP-hard problems and complicated optimization issues. Rather than obtaining exact solutions, these algorithms find appropriate (possibly suboptimal) solutions in a reasonable amount of time [4]. Traditional intrusion detection systems (IDS) are designed using fuzzy algorithms, genetic algorithms, swarm intelligence algorithms, data mining, machine learning, and deep learning models. Most of them overlook the significance of the weight of the IDS model, which leads to computationally intensive and resource-heavy models.
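
As a worked illustration of this cost, the number of non-empty feature subsets that an exhaustive search must evaluate grows exponentially with the number of features n. Taking n = 41, the feature count of NSL-KDD mentioned in the related works, as an example:

```latex
\[
\sum_{k=1}^{n} \binom{n}{k} = 2^{n} - 1,
\qquad
n = 41 \;\Rightarrow\; 2^{41} - 1 = 2\,199\,023\,255\,551 \approx 2.2 \times 10^{12}.
\]
```

Each of these subsets would require training and evaluating at least one model, which is why metaheuristic search over a small fraction of the space is preferred.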

These problems motivate the design of a lightweight intrusion detection system that applies swarm intelligence-based techniques and genetic algorithms with machine intelligence approaches. The primary objectives of the proposed model are as follows: (i) saving attack detection time while maintaining attack detection accuracy, (ii) compressing data features, (iii) reducing the curse of dimensionality by combining PSO and ACO for feature selection, a powerful and efficient swarm intelligence approach for handling high-dimensional data and improving the performance of various intelligent models, and (iv) employing the genetic algorithm to optimize the hyperparameters of the detection model using the weighted f1-score as the fitness function. This approach identifies the optimal hyperparameters and evaluates the model using multi-class classification.
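
A genetic search over hyperparameters, as named in objective (iv), can be sketched as follows. This is a generic illustration and not the paper's implementation: the search space, the operators (truncation selection, uniform crossover, resampling mutation, elitism), and `toy_fitness` are all assumptions. In practice, the fitness would be the weighted f1-score of a model trained with the candidate hyperparameters.

```python
import random

# Hypothetical search space for a tree-based detection model.
SEARCH_SPACE = {
    "max_depth": [3, 5, 7, 9],
    "n_estimators": [50, 100, 200],
    "learning_rate": [0.01, 0.05, 0.1, 0.3],
}

def random_individual(rng):
    """One candidate: a value drawn for every hyperparameter."""
    return {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}

def crossover(a, b, rng):
    """Uniform crossover: each gene is copied from one of the two parents."""
    return {name: rng.choice([a[name], b[name]]) for name in SEARCH_SPACE}

def mutate(ind, rng, rate=0.2):
    """Resample each gene from its allowed values with probability `rate`."""
    return {name: rng.choice(SEARCH_SPACE[name]) if rng.random() < rate else value
            for name, value in ind.items()}

def genetic_search(fitness, generations=15, pop_size=12, elite=2, seed=0):
    rng = random.Random(seed)
    population = [random_individual(rng) for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[: pop_size // 2]            # truncation selection
        children = [mutate(crossover(*rng.sample(parents, 2), rng), rng)
                    for _ in range(pop_size - elite)]
        population = ranked[:elite] + children       # elitism keeps the best so far
    return max(population, key=fitness)

def toy_fitness(params):
    """Stand-in for a validated weighted f1-score; highest at depth 7, 200 trees, rate 0.1."""
    return -(abs(params["max_depth"] - 7) / 6
             + abs(params["n_estimators"] - 200) / 150
             + abs(params["learning_rate"] - 0.1))

best = genetic_search(toy_fitness)
print(best, toy_fitness(best))
```

Because the two best individuals are carried over unchanged in every generation, the best fitness in the population never decreases, although the search is not guaranteed to reach the global optimum.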

Figure 1 outlines the proposed model’s block diagram, which is divided into four major modules: data preprocessing, Bi-Phase feature optimization, Hyperparameter tuning, and Classification.

2.1 The key contributions of the proposed approach

The main contributions of this paper are outlined as follows:

(i) Bi-phase swarm intelligence-based feature optimization: An improved Bi-Phase swarm intelligence-based feature optimization technique is proposed to reduce the number of features in the data with the objective of designing a lightweight IDS.

(ii) GA-based optimization of detection models: To fine-tune various detection models (used in this paper), a nature-influenced genetic algorithm is applied to provide each model with the optimal hyperparameter values.

(iii) Classification module: In classifying different malicious and normal traffic, two categories of detection models are applied to assess the effectiveness of the proposed lightweight IDS. Base detection models (such as DT, KNN, SVM, logistic regression, DNN, and CNN) are utilized in the category-1 classification, while ensemble detection models (such as RF, xgboost, lightgbm, catboost, majority, and mean voting) are used in the category-2 classifications.
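
The majority and mean voting ensembles named in the classification module can be sketched as below. This is a minimal illustration under the usual definitions of hard and soft voting; the function names and toy predictions are assumptions, and the paper's own ensemble configuration may differ.

```python
from collections import Counter

def majority_vote(predictions):
    """Hard voting: `predictions[m][s]` is model m's label for sample s.
    The most common label per sample wins; ties go to the label voted first."""
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions)]

def mean_vote(probabilities, classes):
    """Soft voting: average the class-probability vectors over models, then take the argmax."""
    n_models = len(probabilities)
    result = []
    for per_model in zip(*probabilities):        # probability vectors for one sample
        avg = [sum(p[i] for p in per_model) / n_models for i in range(len(classes))]
        result.append(classes[avg.index(max(avg))])
    return result

# Three hypothetical models, three samples (labels) and two samples (probabilities).
hard = [["dos", "normal", "probe"],
        ["dos", "dos", "probe"],
        ["normal", "normal", "dos"]]
soft = [[[0.9, 0.1], [0.4, 0.6]],
        [[0.6, 0.4], [0.3, 0.7]],
        [[0.2, 0.8], [0.7, 0.3]]]

print(majority_vote(hard))
print(mean_vote(soft, ["normal", "attack"]))
```

Majority voting uses only the predicted labels, whereas mean voting also uses each model's confidence, so the two can disagree on the same sample.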

2.2 Paper structure

The rest of the paper is organized as follows: Sect. 3 analyses the related works, followed by the research objective of this paper in Sect. 4. After that, Sect. 5 broadly discusses the proposed methodology, followed by Sect. 6, which outlines the experimental results and discussion. Last but not least, Sect. 7 concludes the paper by discussing the limitations and future direction of the work.

3 Related works

This section analyses the state-of-the-art works in the field of intrusion detection systems. The paper mainly focuses on designing a lightweight IDS model with fewer features and aims for accurate classification of malicious and normal network traffic. Therefore, only those state-of-the-art works that emphasize feature optimization of the data for lightweight IDS design are considered. Additionally, this paper considers hyperparameter optimization of the detection models for accurate classification performance. In the existing works, feature optimization is performed using various metaheuristic and non-metaheuristic algorithms. Related work is therefore structured into three main subsections according to the feature optimization approaches and hyperparameter-tuning techniques: non-metaheuristic feature optimization algorithm-based, metaheuristic feature optimization algorithm-based, and hyperparameter tuning of detection model-based. This section concludes by demonstrating the superiority of the proposed model compared to the existing state-of-the-art.

3.1 Non-metaheuristic feature optimization algorithm-based

Several traditional methods are used to reduce the data’s dimensionality, including filter, wrapper, embedded, and feature extraction techniques like PCA, ICA, LDA, t-SNE, and autoencoder. This subsection describes selecting information-rich features from the data in the IDS paradigm without utilizing metaheuristic algorithms.

Chebrolu et al. [26] have built a lightweight IDS that can efficiently and effectively detect intrusions in the classification process. Irrelevant and redundant features are eliminated from the data by implementing Markov blanket and decision tree models. Following this, Bayesian networks, CART algorithms, and an ensemble of the two are employed in constructing a lightweight IDS model. The Bayesian network utilized here requires O(\({n}^{2}\)) CI tests in special cases or O(\({n}^{4}\)) CI tests in general, where n denotes the number of domain variables. With reduced features, the model takes 19.70s and 10.10s average training and testing time, respectively, with only 88.84% average accuracy. Li et al. [27] have used a wrapper-based feature selection approach to build a lightweight IDS. Modified linear SVM and modified random mutation hill-climbing (RMHC) approaches are used in the proposed wrapper-based feature selection method. A decision tree algorithm is utilized for the classification, where the nodes of the decision tree contain linear SVMs. The best feature subsets are selected separately for each attack category in the KDD CUP 1999 dataset. The two methods are compared in terms of processing time: RMHC takes 1.5 h to process U2R attacks, whereas modified RMHC processes them in under 1 h. Mukherjee & Sharma [20] have proposed a vitality-based feature selection method on the NSL-KDD [28] dataset, and out of a total of 41 features, the 24 best features are selected. In this method, pre-defined accuracy, average TPR, and RMSE values are used as threshold values. Each feature in the original data is removed in turn, and the performance is checked to see whether it increases, decreases, or remains constant. A sequential search is performed, and the same steps are repeated for each feature (41 times). The importance of each feature is examined one at a time based on the pre-defined threshold value.
Thus, both the time and space complexities of the proposed method are O(n). The accuracy obtained by the proposed method is 97.78%, and the time taken to build the model is 9.42. The primary limitations are the low true positive rate (TPR) for the U2R attack class and the lack of hyperparameter tuning. Additionally, the model is evaluated on only one traditional dataset and within a very complex framework. The high complexity of the proposed framework results in high resource utilization.
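
The sequential remove-one-feature procedure described for the vitality-based method can be sketched as follows. This is a simplified illustration, not the implementation from [20]: it uses a single caller-supplied score in place of the accuracy, TPR, and RMSE thresholds, and the feature names and `toy_score` function are hypothetical.

```python
def vitality_selection(features, evaluate, tolerance=0.0):
    """Remove each feature in turn and keep it only if the score drops without it.

    `evaluate(subset)` is a caller-supplied scoring function, for example the
    validation accuracy of a classifier trained on `subset`.
    """
    baseline = evaluate(features)
    kept = []
    for f in features:                       # one pass: n evaluations for n features
        without_f = [g for g in features if g != f]
        if evaluate(without_f) < baseline - tolerance:
            kept.append(f)                   # the score fell, so the feature is vital
    return kept

# Toy scoring function: only these (hypothetical) features carry signal.
INFORMATIVE = {"duration", "src_bytes", "flag"}

def toy_score(subset):
    return sum(f in INFORMATIVE for f in subset)

all_features = ["duration", "protocol_type", "src_bytes", "land", "flag"]
print(vitality_selection(all_features, toy_score))
```

The loop performs one evaluation per feature, which matches the linear number of steps noted above. It does not capture interactions between features that are only useful together.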

Li et al. [29] have proposed a three-phase model that includes data preprocessing, feature selection, and anomaly detection. L2 regularization is employed in the preprocessing phase, and feature selection is performed using the random forest with the affinity propagation clustering algorithm for feature grouping. An auto-encoder is used in the anomaly detection phase, where average RMSE is utilized to measure the error rate of multiple auto-encoders. Although the affinity propagation technique is advantageous for classifying massive amounts of data, it is expensive, with O(\({n}^{2}\log n\)) complexity (in time as well as space), where n is the number of instances in the data. The detection time and recall rate achieved by the AE-IDS model are 2493.83s (which is very high) and 61.90%, respectively, on the brute force-web dataset. Kunhare et al. [30] have designed an IDS model in which features are selected by combining the wrapper method with the filter method. The random forest algorithm is utilized to calculate the importance of each feature in the data. It is observed that the random forest algorithm reduces the number of features from 41 to 10, and the model performs best with the PSO-based classification algorithm. Based on the experimental results, the proposed framework has a 99.26% detection rate, 99.32% efficiency, and low computing complexity. The main limitation is that the data imbalance problem is not considered and hyperparameter optimization of the model is not explored. Gu & Lu [19] have carried out naïve Bayes-based feature embedding in the initial step of the proposed model to transform the data. Subsequently, the transformed training data is used to train the SVM model, and finally, the trained models are used to detect intrusions in new test data samples.
The best accuracy achieved by the detection model is 93.75%, 98.92%, 99.35%, and 98.58% on the UNSW-NB15, CICIDS2017, NSL-KDD, and Kyoto 2006+ datasets, respectively. However, the detection performance is evaluated using binary classification with only three metrics. Moreover, the model’s hyperparameter tuning has not been explored. Rao et al. [17] have proposed a two-stage hybrid IDS model. In the first stage, an unsupervised sparse auto-encoder is employed to extract features, and in the second stage, a deep neural network is utilized to classify different attacks. Only one deep neural network model is used in the classification stage, and the paper does not deal with its hyperparameter optimization. The best performance in terms of efficiency is achieved with ten features for the KDD-CUP99 and NSL-KDD datasets and 11 features for the UNSW-NB15 dataset. The accuracy and detection rate of the proposed model are 99.03% and 99.48%, respectively, on the KDD-CUP99 dataset. However, a standard technique for hyperparameter tuning is not discussed, and detection performance is evaluated on one detection model, which leads to biased results. Kunhare et al. [31] have explored the effectiveness of port scanning methods for obtaining the IP addresses of networked hosts that are vulnerable to attack. Port scanning is the attacker’s initial step in launching a targeted cyber-attack. The snort IDS tool is also analyzed, including its architecture, installation process, file configuration, and detection approach. Furthermore, a real-time network traffic implementation of different variants of DoS attacks is demonstrated.

Li et al. [16] have introduced a Hierarchical and Dynamic Feature Extraction Framework (HDFEF) for designing network intrusion detection systems (NIDS). This approach considers multiple network flow packets to comprehensively define network activity. The best performance is achieved by combining HDFEF with an LSTM trained with focal loss instead of cross-entropy loss. The experiment is run for up to 40 epochs and achieves an accuracy of 99.75%, and the model takes 145.17s per epoch. Zhao et al. [21] have proposed a three-phase framework that includes data pre-processing, dimensionality reduction, and weighted stacking of classifiers with the aim of improving accuracy and efficiency. For dimensionality reduction, a correlation-based feature selection with differential evolution (CFS-DE) approach is utilized, and classification is performed based on weights given to the base classifiers. The twenty best features are selected by the proposed CFS-DE model, which achieves an accuracy rate of 87.34% and a time of 168.93s on the KDDTest+ dataset. The model increases the computation time cost because of the extra overhead of calculating the base models’ weights. Gupta et al. [32] have used an ensemble model for the detection of brain tumors and classification of the cancer stage, which is either pituitary, meningioma, or glioma cancer. The proposed model is divided into three main modules: data preprocessing, tumor detection, and classification. Preprocessing includes increasing the contrast of the MRI images, followed by image augmentation with the help of CycleGAN. For the detection of the brain tumor in the second module, a modified Inception ResNetV2 is employed, which gives a binary output of either yes or no. If a tumor is detected, then the tumor stage is classified in the third module.
This is achieved by combining modified Inception ResNetV2 and random forest algorithms, which yield one of three categories (pituitary, meningioma, or glioma cancer) with an accuracy of 98%. Azimjonov & Kim [5] have developed a lightweight IDS capable of detecting various cyber-attacks while addressing the challenges posed by limited computational resources and high-dimensional data within the IoT environment. The number of features is reduced by utilizing four feature selection methods: importance coefficient, backward sequential, forward sequential, and correlation coefficient with a ridge regressor model. A stochastic gradient descent-based classifier is used for the classification. The worst-case time complexity of the method is O(\({n}^{2}\)), where n is the number of features in the data, with a 92.69% accuracy rate on average. The run time of the model with a reduced feature set utilizing the backward sequential algorithm is 2.5 ms on the N-BaIoT-2021 dataset. The backward sequential algorithm takes the longest time (7.21 h) to select the appropriate features on the N-BaIoT-2021 dataset. Exactly the six best features are selected by the method. The main limitation is that grid-search-based hyperparameter optimization is performed, which increases the complexity of the model and, as a result, its resource utilization. Additionally, only traditional feature selection methods are explored. Dhanya & Chitra [33] have designed a framework for the IoMT environment to reduce resource utilization with comprehensive time. An auto-encoder is used to decode the features while the xgboost classifier detects the malware. The hyperparameters of the auto-encoder are tuned using random search, and the hyperparameters of the xgboost classifier are tuned using the genetic algorithm. The adaptive mutation used in the genetic algorithm enhances the search space, which makes it complex in terms of space.
Cohen’s kappa is employed for statistical validation of the model, which achieves an accuracy of 98.66% and a Cohen’s kappa of 96.37%.

3.2 Metaheuristic feature optimization algorithm-based

This subsection discusses several metaheuristic methods for feature selection, including GA, tabu search, MFO, and RSA, which are applied to select the crucial and information-rich features from the data in the IDS paradigm.

Khammassi & Krichen [9] have proposed a wrapper approach for feature selection that combines a genetic algorithm with logistic regression. The model is divided into three stages: preprocessing, feature selection, and classification. The optimal subset of features is obtained from the feature selection stage, where the GA-LR-based approach is applied. The complexity of the proposed genetic algorithm depends on the fitness function. The aim is to maximize the fitness function within the genetic algorithm, which combines accuracy (to which fitness is directly proportional) and the number of features in the subset (to which fitness is inversely proportional). The (number of features, accuracy) pairs in this work are (18, 99.90%) and (20, 81.42%) on the KDD99 and UNSW-NB15 datasets, respectively. Vijayanand et al. [10] have proposed a method that uses a genetic algorithm for feature selection and multiple support vector machines to detect multiple attacks, building an IDS for wireless mesh networks. Multiple SVM classifiers are arranged linearly, and each classifier is dedicated to one attack or normal class in the input dataset. The performance achieved in this paper is 95.7% accuracy, with 1.90% FPR, 0.5486s average training time, and 0.0023s average testing time on the WMN dataset. The time complexity of the proposed method is O(L*S), where L represents the length of the candidate solution, and S denotes the size of the population in the genetic algorithm. Mohammadi et al. [14] have designed an IDS model that combines filter and wrapper-based methods for feature grouping and feature selection. In the data pre-processing phase, transformation, discretization, and normalization techniques are applied. A filter method based on the linear correlation coefficient, called FGLCC, is used for feature grouping. Additionally, the authors have combined it with the cuttlefish algorithm (CFA) to improve the performance of the model.
Here, the fitness function is a combination of detection rate and false positive rate. The main aim is to increase the fitness score of the candidate solution as much as possible. A decision tree classification algorithm is employed to classify between intrusive activity and normal flow. With the ten best features, the proposed FGLCC-CFA achieves a detection rate, accuracy rate, FPR, fitness, model building time, and testing time of 95.23%, 95.03%, 1.65%, 95.46%, 83.28s, and 43.50s, respectively, on the KDD CUP99 dataset. Nguyen & Kim [22] have used the genetic algorithm along with KNN and the fuzzy c-means clustering algorithm for optimal feature subset selection and feature improvement, respectively. After the optimal feature subset is selected, the optimal model is selected by employing GA-CNN along with fivefold cross-validation. Only the training dataset is used in these two steps. After that, the model is validated using a validation set, and deep features are extracted by the CNN model. Finally, classification models such as KNN, RF, BG, and BS, along with fivefold cross-validation, are utilized to evaluate the performance of the proposed NIDS. The model achieves an accuracy of 98.2%, FPR of 0.5%, and TPR of 95.4% with the 33 best features on the KDDTest-21 dataset. The main limitations are that the data imbalance issue is not addressed, the computational time is very high, and the model is evaluated on only one dataset.
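
A wrapper fitness that rewards accuracy and penalizes subset size, in the spirit of the GA-based approach described above, can be sketched as follows. The exact formula in [9] is not given here, so the linear form and the trade-off weight `alpha` are assumptions made for illustration.

```python
def count_selected(chromosome):
    """A chromosome is a 0/1 mask over the features; 1 means the feature is kept."""
    return sum(chromosome)

def wrapper_fitness(accuracy, n_selected, n_total, alpha=0.9):
    """Higher accuracy raises the fitness; more selected features lower it.

    `alpha` is a hypothetical weight that trades accuracy against subset size.
    """
    if n_selected == 0:
        return 0.0                       # an empty subset cannot be used for classification
    return alpha * accuracy + (1 - alpha) * (1 - n_selected / n_total)

# Two hypothetical masks over 8 features that reach the same accuracy.
small_mask = [1, 0, 0, 1, 0, 0, 1, 0]
large_mask = [1, 1, 1, 1, 0, 1, 1, 0]

print(wrapper_fitness(0.95, count_selected(small_mask), len(small_mask)))
print(wrapper_fitness(0.95, count_selected(large_mask), len(large_mask)))
```

With equal accuracy, the smaller subset receives the higher fitness, which is what steers the search toward lightweight models. As discussed in the research gap, replacing the accuracy term with a class-aware metric is advisable on imbalanced data.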

Khammassi & Krichen [13] have used the combination of NSGA2 and a logistic regression classifier for feature selection in network intrusion detection. Two schemes are utilized to test the proposed feature selection approach: multinomial logistic regression, which corresponds to multiple classes, and binary logistic regression, which corresponds to each attack class separately in the dataset. Three different decision tree algorithms, namely the C4.5 decision tree, naïve Bayes tree, and random forest, are applied to test the performance of the model. The main limitation of this work is that the proposed multi-objective function includes accuracy, which is not a suitable metric for evaluating candidate feature subsets on an imbalanced dataset. The weighted mean CPU time of the proposed NSGA2-BLR is approx. 20000s, while NSGA2-MLR takes nearly 200000s on the CIC-IDS2017 dataset. The performance of the model in terms of accuracy, detection rate, FAR, and the number of features on the UNSW-NB15 dataset is 94.90%, 55.73%, 0.72, and 8 to 17, respectively, for the binary class, and 66%, 64.90%, 3.85%, and 11, respectively, for multi-class. Nazir & Khan [11] have applied the tabu search algorithm to select an optimal subset of features, and the random forest is used to evaluate the performance of the model. The fitness (cost) function of the tabu search combines multiple objectives: error rate, false positive rate, and number of features in the candidate solution. The main aim is to minimize the fitness function for each candidate solution as much as possible. Here, the feature space is decreased by more than 60% because tabu search is not hampered by the complexity of the search space, and the time complexity is decreased by up to 40% with a random forest classifier.
The proposed method achieves 83.12% accuracy and 3.70% FPR, with 16 optimal features, resulting in a 12.18% cost for the UNSW-NB15 dataset. Halim et al. [15] have designed an IDS that performs feature selection by applying the genetic algorithm. The fitness function in the genetic algorithm combines a correlation metric and accuracy. The correlation metric employs the different combinations of feature sets in the original dataset for the specific candidate feature subset. However, accuracy is not an appropriate metric for evaluating the candidate feature set in an imbalanced dataset, and features are selected in an unsupervised manner. The roulette wheel selection function is applied to select the parent solutions in the genetic algorithm. After applying the genetic algorithm, the number of features is reduced up to 10. Different machine learning classification algorithms, such as xgboost, SVM, and KNN, are used to detect intrusive and normal traffic. The time and space complexities of the proposed algorithm are O(\(g(p*{c}^{2})\)) and O(\(p*{c}^{2}\)), respectively, where g, p, and c represent the number of generations, population size, and chromosome length within the genetic algorithm. The average accuracy of the model is reported as 98.11%. Ogundokun et al. [34] have applied the PSO algorithm to select features and design an IDS model. Subsequently, decision tree and KNN algorithms are utilized to evaluate the feature subset distilled by the PSO algorithm. This paper does not consider the imbalanced nature of the dataset. Furthermore, the objective function in the proposed PSO algorithm, which is crucial for selecting candidate feature subsets, is not discussed. The model achieves an accuracy rate of 98.6%, a detection rate of 89.6%, and an FPR of 1.1%. The time and space complexities of the model have not been discussed.
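
Roulette wheel selection, mentioned above for choosing parent solutions, can be sketched generically as follows. This is the textbook fitness-proportionate scheme and is not taken from [15]; the function name and the toy population are assumptions.

```python
import random

def roulette_wheel_select(population, fitnesses, rng=random):
    """Pick one individual with probability proportional to its non-negative fitness."""
    total = sum(fitnesses)
    if total <= 0:
        return rng.choice(population)        # degenerate case: fall back to a uniform pick
    pick = rng.uniform(0, total)             # where the wheel stops
    running = 0.0
    for individual, fit in zip(population, fitnesses):
        running += fit
        if pick < running:
            return individual
    return population[-1]                    # guard against floating-point round-off

# Toy population: "C" holds 80% of the total fitness.
rng = random.Random(1)
draws = [roulette_wheel_select(["A", "B", "C"], [1, 1, 8], rng) for _ in range(10000)]
print({name: draws.count(name) for name in "ABC"})
```

Fitter individuals are chosen more often, but weaker ones still have a chance of becoming parents, which helps preserve diversity in the population.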

Aksu & Aydin [4] have used machine learning techniques to secure CAN buses. A modified genetic algorithm is utilized to select the ‘m’ optimal features according to k-fold cross-validation. Furthermore, five different classification algorithms are employed as candidate classifiers: decision tree, SVM, KNN, logistic regression, and linear discriminant analysis. The overall worst-case run time complexity of the proposed model is O(\({n}^{7}\)). Hyperparameter tuning of the detection model, which could improve accuracy and detection rate, is not addressed. The main limitation is a complex structure with high computational time. Chohra et al. [35] have used the PSO algorithm for feature optimization in the anomaly detection domain. The fitness function employs an ensemble of different machine learning and deep learning classifiers, and the weighted f1-score of the ensemble model is used as the objective function. Subsequently, the selected features are used to filter the original datasets, and a deep learning-based autoencoder model is used for the anomaly detection task. The autoencoder uses the following settings in the anomaly detection phase: a dropout rate of 0.5, L2 regularization, and categorical cross-entropy and mean squared error loss functions. The time complexity of the proposed method is O(k*n*m*log(m)), where n, m, and k represent the number of features, number of samples, and number of trees, respectively. The model is not hampered by space complexity due to the utilization of 128 GB RAM, and it reports an 89.523% accuracy and a training time of 28 min 38s on the UNSW-NB15 dataset. Only two and three hyperparameters are fine-tuned for random forest and xgboost, respectively. Additionally, features are not selected based on the correlation between feature-feature and class-feature pairs.
Alazab et al. [36] have designed a network-based IDS (called CossimMFO) by utilizing a swarm optimization algorithm combined with a machine learning algorithm for classification. A modified moth-flame optimizer (MFO) algorithm (a wrapper method) is proposed for selecting the best feature subsets, and a decision tree algorithm is applied for the classification task. Only four of the best features are selected in the NSL-KDD and UNSW-NB15 datasets, and five features are selected in the KDD-CUP99 dataset. The model achieves an accuracy rate and TPR of 97.8% and 99.6%, respectively. Dahou et al. [37] have designed an IDS for IoT security by utilizing deep learning and metaheuristic algorithms. A CNN is utilized to extract the relevant features from the IoT data, followed by an enhanced reptile search algorithm (RSA) to select the information-rich features. The fitness function of the RSA uses a combination of error rate and ratio of selected features. The error rate is computed using a KNN-based classification algorithm. The main limitation of the paper is that the convergence rate of the proposed RSA algorithm is very low. The time complexity of the model is O(n * (t * d + 1)), where n, t, and d indicate the number of candidate solutions, the maximum number of iterations, and the dimension of each candidate solution, respectively. The model achieves a 92.04% accuracy rate for multi-classification on the KDD99 dataset.

Kunhare et al. [38] have proposed a model that is separated into four major modules, including data pre_processing, feature_selection, classification, and finally, optimization. For selecting the best subset of features from the NSL-KDD data (which originally contains 41 features), here genetic algorithm is applied (which reduces the number of features to 20). These reduced features are utilized to filter the dataset with only these feature sets. The filtered dataset is used in the classification module, which employs the hybrid method combining supervised and unsupervised classifiers such as decision trees and logistic regression. In the last module, several metaheuristic algorithms, including GWO, PSO, MVO, and BAT, are applied for optimization. It is observed that the GWO algorithm gives the best accuracy (99.44%), FPR (0.60%), and detection rate (99.36%). Here, the time complexity of the proposed GWO-based algorithm is O(n*logn). Chowdhury et al. [23] have built a network intrusion detection system to identify malicious traffic using the information-rich feature subset. Various combinations of the PSO algorithm, GA algorithm, and threshold correlation (TC) have been explored. PSO and GA algorithms are used to remove the redundant features, and threshold correlation is used to remove the correlated features by setting a certain threshold value. In phase 2 of the classification model, different ensemble models have been employed that best perform at phase 1, including majority voting, mean voting, and catboost. The performance of the model is 73.23% accuracy and 187.125s run time with the SVM classifier and 98.39% accuracy and 5.862s run time with the xgboost classifier for binary and multiclass classification, respectively. Kumar et al. [12] have used the grasshopper optimization-based algorithm to extract the most essential and relevant features from the datasets. 
Deep residual convolutional neural networks are applied to design an IDS for classification, which is further optimized using the gazelle optimization-based algorithm. The aim is to minimize the fitness function for each candidate solution as much as possible. The time complexity of the model is O(Maximum_Iteration * m * (m*d)), where m represents the number of candidate solutions and d denotes the size of the problem. The model achieves 99.17% accuracy, 0.87% FAR, a 99.08% detection rate, 47s processing time, and 23.01s testing time. The fitness function relies on the error rate, which is a very common approach.

3.3 Hyperparameter tuning of detection model-based

Several studies use traditional methods for optimizing the hyperparameter values, such as grid search, random search, and Bayesian optimization, and some use metaheuristic methods like the firefly algorithm. This subsection outlines how the optimal hyperparameters of the models are selected in the IDS paradigm.

Wazirali [18] has proposed a method that is majorly separated into four phases: data pre-processing, feature selection, classification, and model validation. This paper mainly addresses the zero-day attack problem to reduce model building and model testing time. In this paper, the optimized hyperparameters are as follows: number of neighbors, distance function and weight, and data standardization. The main drawback of this paper is that the model is evaluated only on a single classifier, such as KNN. Furthermore, the hyperparameter of the model is optimized using the exhaustive search technique, which is a computationally extremely inefficient approach (takes exponential time O(\({n}^{k}\))), where n and k are no. of hyperparameter values and no. of hyperparameters respectively. The accuracy and f1-score of the presented framework are 98.49% and 98.43%, respectively. Kunang et al. [24] have separated the proposed architecture into three modules: a data preprocessing module, a deep learning module with hyperparameter optimization, and an attack detection module. Furthermore, hyperparameter optimization is used in the second module to determine the best model. The deep autoencoder is used as the model in the second module for feature extraction, which includes the encoding, decoding, and bottleneck layers. Achieved values of the accuracy rate, training, and run time of the proposed framework are 83.33%, 382.48s, and 0.968s, respectively, with multiclass classification on the NSL-KDD dataset. Batchu & Seetha [25] have used machine learning models to detect DDoS attacks. During data preparation and preprocessing, the data undergo five phases: exploratory analysis, sample balancing with techniques like SMOTE and Tomek, imputing missing/infinite/zero values with median values, feature normalization using a standard scaler, and label encoding for categorical features. 
Feature selection is performed using a combination of two traditional feature selection techniques, filter-based and embedded-based, and the model’s hyperparameters are optimized using the grid search technique. The main drawback of the paper is that grid search-based hyperparameter tuning increases the time complexity of the model exponentially (O(\({n}^{k}\))). Here, a gradient boosting algorithm is utilized, which takes O(\(n*f*{n}_{trees}\)), where f and \({n}_{trees}\) are the number of features and the number of trees, respectively. The performance of the proposed model in terms of accuracy and run time is 99.97% and 40.78s, respectively.
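To make the O(\({n}^{k}\)) growth of exhaustive grid search concrete, the following minimal sketch enumerates every configuration of a small grid. The hyperparameter names and candidate values are illustrative assumptions and are not taken from the cited works.

```python
from itertools import product

# Hypothetical search space: k = 3 hyperparameters, n = 4 candidate values each.
grid = {
    "learning_rate": [0.01, 0.05, 0.1, 0.3],
    "max_depth": [3, 5, 7, 9],
    "n_estimators": [50, 100, 200, 400],
}

def grid_combinations(space):
    """Return every configuration an exhaustive grid search would evaluate."""
    names = list(space)
    return [dict(zip(names, values)) for values in product(*space.values())]

configs = grid_combinations(grid)
print(len(configs))  # n ** k = 4 ** 3 = 64 model fits, before any cross-validation
```

Adding a fourth hyperparameter with four values would raise the count to 256, which illustrates why the cost becomes prohibitive as k grows.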

Jovanovic et al. [39] have designed a network intrusion detection system (called XGBoost-TSFA) using the improved firefly and xgboost algorithms. Six hyperparameters of the xgboost algorithm (eta, max_depth, gamma, colsample_bytree, min_child_weight, and subsample) are optimized using the improved firefly algorithm, which enhances the detection capabilities of the IDS. Evaluation of the proposed framework is performed on the UNSW-NB15 dataset. The experiments are performed using a population size of ten with fifteen iterations, and the model is evaluated on binary and multi-class classification with 97.49% and 86.96% accuracies, respectively. To enhance the detection abilities and reduce the ratio of false positives and false negatives, the authors of [40] have proposed a NIDS that uses the xgboost algorithm to identify malicious traffic. To improve the performance, the hyperparameters of the xgboost algorithm are optimized using the modified sine–cosine metaheuristic algorithm. The performance of the proposed model is evaluated on the NSL-KDD dataset and compared with xgboost optimized by another metaheuristic algorithm and with xgboost without optimization. Kalita et al. [41] have used a drift detection technique to measure the magnitude of the drift in a dynamic or non-stationary environment. Only the hyperparameters of the SVM classifier (C & \(\gamma\)) are discussed, and based on the magnitude of drift, one of three mechanisms is selected. The first mechanism is the base optimization algorithm, i.e., the moth flame optimization algorithm (MFO), with random initialization. In the second mechanism, lightweight-MFO is introduced, which uses the knowledge base for the initialization of the algorithm. In the third mechanism, the knowledge base search space is utilized to achieve the optimal value of the SVM hyperparameters. The execution times at the 10th time instance with and without the drift detection module are 17,473.99s and 28,321.6s, respectively, and the average accuracy obtained by the model is 97.5%.

Savanovi et al. [42] have developed an IDS model for the security of IoT devices for healthcare 4.0. Here, the machine learning classification algorithm is utilized along with the metaheuristic algorithms. The modified firefly algorithm is utilized to optimize the xgboost model’s hyperparameters. To select the best feature within the dataset, the KNN algorithm is applied with the value of K = 5. As a result, out of 50 features, ten best features are selected. The proposed model is compared with the other eight metaheuristic algorithms such as FA [43], GA [44], PSO [45], ABC [46], ChOA [47], COLSHADE [48], and SASS [49]. The SHAP plot is utilized to analyze the selected features, and statistical validation of the observed results is performed using the p-values at significance levels 0.1 and 0.05. The accuracy and f1-score of the proposed framework are 99.69% and 99.69%, respectively. Six hyperparameters of only xgboost based detection model are tuned. Saheed & Misra [50] have designed an intrusion detection system for IoT security, which considers the average probability of a voting classifier. The dimensionality of the dataset is reduced with the hybrid approach utilizing information gain for feature selection and PCA for feature extraction. The voting classifier uses four machine and deep learning-based base classifiers such as random forest, KNN, decision tree, and multilayer perceptron. The hyperparameters of these base classifiers are optimized using the gray wolf optimizer. The class imbalance issues present in the IoT datasets (such as UNSW-NB15 and BoT-IoT) are handled with the help of SMOTE. The performance attained by the framework in terms of accuracy, detection rate, and FAR is 99.87%, 99.89%, and 1.20%, respectively. Azimjonov & Kim [6] have presented a framework with two main contributions based on implementation and methodology. 
In the implementation, the dataset preparation portion has been discussed, such as balancing imbalanced data, removing duplicate records, transforming categorical data into numerical data, dealing with missing values, and splitting the data into train and test sets. Four feature selection techniques have been applied in the methodology: forward sequential, backward sequential, importance coefficient, and correlation coefficient with linear SVM classifier. Moreover, the hyperparameters of the ridge regressor and LSVM classifier have been tuned utilizing the grid search-based hyperparameter tuning approach. However, the grid search-based hyperparameter tuning approach is inefficient in terms of computational cost and high-dimensional data. Grid search explores all combinations of the search space, and hence, it takes exponential time (e.g. O(\({n}^{k}\))). Although the model’s accuracy is 94.64%, it takes an extremely long training time of 5394.409 ms. Table 1 summarizes the state-of-the-art work by discussing the five major components such as (i) Objective, (ii) Method, (iii) Result, (iv) Advantages, and (v) Limitations.

4 Research objective

This paper proposes a lightweight intrusion detection system to address the aforementioned challenges. The proposed model uses the swarm intelligence-based technique to select the most crucial features from the network traffic dataset. There are three main advantages of using swarm intelligence-based feature optimization techniques, which are as follows: (i) Capability to adjust to the dynamic environment, (ii) Resilience to individual failures, and (iii) Capability to effectively explore a broad solution space. Since the IDS datasets are imbalanced and contain many features, they extensively obstruct the accuracy of attack detection [3]. To account for the imbalanced nature of the dataset, the PSO-based feature selection algorithm incorporates the geometric mean of ensemble models into its fitness function. The metric “geometric mean” is said to be superior to accuracy in dealing with the imbalanced nature of the dataset [51]. Here, feature selection is performed using a supervised approach, which prioritizes the target class when determining the optimal feature subset by introducing the correlation metric and information gain metric into the fitness function in the ACO-based feature selection approach. On the other hand, this paper considers the hyperparameter tuning of the different detection models using the nature-influenced genetic algorithm-based technique, which gives optimized results even in complex and high-dimensional data scenarios. The genetic algorithm-based fine-tuning technique determines the best hyperparameter settings for each detection model after every generation. This search process continues iteratively till an optimized result can be achieved. Moreover, the potency of the proposed lightweight Intrusion Detection System is examined on twelve different detection models, each on three different datasets. A detailed description of the proposed methodology of this paper is given in the following section.

5 Proposed methodology

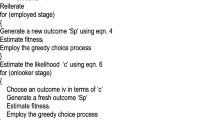

The Proposed Light-Weight IDS model is mainly developed employing four major modules, which are given as follows: (i) Dataset Description & Data Preprocessing, (ii) Bi-Phase Swarm Intelligence-based Feature Optimization (Phase1: PSO-based feature selection, and Phase2: ACO-based feature selection), (iii) Hyperparameter Tuning (genetic algorithm-based hyperparameter tuning), and (iv) Classification (by applying either Base or Ensemble detection models). Figure 2 depicts the overall flow of the proposed model. Algorithm 1 summarizes the complete step-by-step development of the Light-Weight IDS model employing all four major modules discussed above. Table 2 illustrates the abbreviations and their description used in the algorithm.

Proposed model

5.1 Dataset description & data preprocessing

The first module of the proposed framework discusses the dataset and the preprocessing steps applied in this paper.

5.1.1 Dataset description

Three of the most popular traditional intrusion detection system datasets (NSL-KDD [28], UNSW-NB15 [52], and CSE-CIC-IDS2018 [53]) are utilized in this paper. The NSL-KDD [28] dataset is an enhanced form of the KDD Cup’99 dataset [54]. A detailed description of the CSE-CIC-IDS2018 dataset is available at https://www.unb.ca/cic/datasets/ids-2018.html [55]. All these datasets are imbalanced. Here, the complete training and testing sets of NSL-KDD and UNSW-NB15 are used. Since the CSE-CIC-IDS2018 dataset contains many records, only a randomly selected subset of the Wednesday traffic is used in this paper. Table 3 shows a brief description of the datasets used in this paper.

5.1.2 Data preprocessing

Data preprocessing is required for cleaning the dataset as the first step of the proposed framework since the dataset contains null or redundant values, outliers, and categorical values. Here, the data preprocessing process is divided into 3 steps, which include (i) Filling Missing Values, (ii) Label Encoding, and (iii) Outlier Removal. The sequential flow of data preprocessing steps used in this paper is described as follows:

(i) Filling Missing Values: Some entries contain “Null” values, which provide no information for attack detection. Therefore, in this paper, the null values in a column are filled with the top value of that column.

(ii) Label Encoding: Some features in the datasets used in this paper contain categorical values, which need to be converted into numerical values. For this reason, the label encoding technique is applied, which maps each categorical value to a corresponding numerical value.

(iii) Outlier Removal: In this paper, this step is performed by normalizing (scaling) the features in the data to a specific range. Min–max scaling is applied, and its formula is given in Eq. (1) as follows:

$$x.scaled=\frac{x-x.min}{x.max-x.min}$$ (1)

where \(x\) is the specific feature’s original value, \(x.min\) is the minimum value of that specific feature, \(x.max\) is the maximum value of that specific feature, and \(x.scaled\) is the specific feature’s scaled value within the range [0,1].
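The three preprocessing steps can be sketched in plain Python as follows. This is a minimal illustration and not the authors' implementation: the helper names and toy columns are hypothetical, and the "top value" is assumed here to mean the most frequent value of the column.

```python
from collections import Counter

def fill_missing(column):
    """Replace None entries with the most frequent value (assumed meaning of 'top value')."""
    top = Counter(v for v in column if v is not None).most_common(1)[0][0]
    return [top if v is None else v for v in column]

def label_encode(column):
    """Map each distinct category to an integer index, in sorted order."""
    mapping = {cat: idx for idx, cat in enumerate(sorted(set(column)))}
    return [mapping[v] for v in column]

def min_max_scale(column):
    """Eq. (1): scale values to [0, 1]; a constant column is mapped to 0.0."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]

protocol = ["tcp", None, "udp", "tcp", "icmp"]  # toy categorical feature
duration = [0, 5, 10, 20, 5]                    # toy numeric feature

encoded = label_encode(fill_missing(protocol))
scaled = min_max_scale(duration)
print(encoded, scaled)
```

The guard for constant columns is an added assumption that avoids division by zero when \(x.max = x.min\).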

5.2 Bi-phase swarm intelligence-based feature optimization

To make the most efficient use of the data for building the model, careful selection of the features is an important aspect that is addressed in this module. In the context of machine learning or deep learning, the model’s weight is highly dependent on the number of features used in training the model. If the data has high dimensionality, the model weight becomes high. A large number of redundant and non-informative features in the training data makes the model heavyweight. Therefore, the number of features plays a crucial role in designing a lightweight IDS model.

This module is divided into two phases; hence, it is called Bi-Phase Swarm Intelligence-based Feature Optimization. The term swarm intelligence [56] is used because it is inspired by how groups of simple agents in social organisms work together to solve complicated or intricate problems. These algorithms belong to the nature-inspired metaheuristic class. Modified versions of two algorithms, particle swarm optimization and ant colony optimization, are applied in the proposed approach. The reason behind introducing two-phase feature optimization is to build a model that is as light as possible, which saves time and reduces overfitting and overall cost.

However, the field of optimization has seen the emergence of several advanced algorithms such as the reptile search algorithm (RSA) [57], red fox optimization algorithm (RFO) [58], salp swarm algorithm (SSA) [59], butterfly optimization algorithm (BOA) [60], and many others. Despite these advancements, the hybrid use of PSO and ACO remains prevalent. The no free lunch (NFL) theorem [61] asserts that no single optimization algorithm outperforms all others across all possible problems. Its problem-specific nature implies that an algorithm effective for one type of problem may not work well for another. Consequently, there is no universally superior heuristic for all optimization tasks. This underscores the importance of carefully selecting and tuning optimization algorithms based on the unique characteristics of each problem rather than a generalized approach.

Considering the “no free lunch” (NFL) theorem, combining different algorithms or utilizing hybrid approaches can offer a way forward by harnessing the unique strengths of multiple algorithms. For instance, combining PSO and ACO for feature selection tasks offers several advantages over algorithms like RSA, RFO, SSA, and BOA due to their complementary strengths. RSA may suffer from slower convergence and a lack of fine-tuning mechanisms; RFO may not possess a robust dual mechanism for balancing exploration and exploitation across diverse problems; SSA may struggle with exploitation and fine-tuning; and BOA may lack the precise search refinement offered by the combination of PSO’s global search and ACO’s local search capabilities. PSO offers fast convergence and effective global search, while ACO provides robust exploration and refines the search locally through pheromone-based learning. The combination of these two methods improves the exploration and exploitation processes, preserves diversity, and adjusts the search process dynamically. This leads to a more resilient and efficient method for feature selection tasks. The following sections, 5.2.1 and 5.2.2, describe the phases of the proposed feature selection process in detail.

5.2.1 Phase1: particle swarm optimization based feature selection

Since the dataset used in this paper is imbalanced, g_mean is considered a more acceptable metric than accuracy for the imbalanced dataset [51]. The main goal of this phase is to extract the most crucial features from the intrusion detection system data based on the imbalanced nature of the dataset. It reduces the complexity and false alarm rate, enhances the attack detection accuracy and interpretability, and yields a more efficient model. Several machine and deep learning-based intelligent detection models are used in ensemble learning techniques. The machine learning models applied in the ensemble technique are decision tree, random forest, xgboost, k-nearest neighbor, support vector machine, lightgbm, and catboost. The deep learning models of ensemble technique are dense neural networks and 1-dimensional convolutional neural networks. The geometric mean is employed in the fitness function, which preserves the imbalanced property of the dataset and gives the best attack detection performance.

Figure 3 shows the flowchart, and Algorithm 2 outlines the steps involved in the proposed phase 1 feature selection. Five main steps are involved in the construction of this algorithm: (i) Initialize Particle and Velocity Position, (ii) Evaluation of Fitness Function, (iii) Update Personal and Global Best Position, (iv) Compute Velocity and Update Particle Position, and (v) Termination Condition. Each step of the proposed phase 1 feature selection algorithm is described in detail as follows:

Phase1 for feature selection

(i) Initialize particle and velocity position

Initialization of the particle position and velocity is the first step in the particle swarm optimization algorithm. A particle position within a swarm represents one of the acceptable solutions. The size of a particle and its corresponding velocity are randomly initialized. Particle position vectors are assigned binary values denoting the inclusion or exclusion of the features within that feature subset. A 1 in the particle position denotes that the corresponding feature is included in the feature subset, and a 0 denotes the absence of the feature from the subset. The size of a particle is equivalent to the number of 1’s present in that particle position vector. The maximum size of a particle is limited to the number of features available in the dataset. Figure 4a depicts the arrangement of particles in a swarm, and Fig. 4b represents the structure of a particle position and velocity vector, where S1, S2, S3, …, Sm denote the set of samples and F1, F2, F3, …, Fn denote the set of features in the dataset.
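A minimal sketch of this initialization step is given below. The velocity range [-1, 1], the guard against an empty feature subset, and the function names are assumptions made for illustration; they are not specified in the text.

```python
import random

def init_particle(n_features, rng):
    """Random binary position (1 = feature kept) and a random real-valued velocity."""
    position = [rng.randint(0, 1) for _ in range(n_features)]
    if sum(position) == 0:  # assumed guard: never start from an empty subset
        position[rng.randrange(n_features)] = 1
    velocity = [rng.uniform(-1.0, 1.0) for _ in range(n_features)]
    return position, velocity

def init_swarm(swarm_size, n_features, seed=0):
    """Build a swarm as a list of (position, velocity) pairs."""
    rng = random.Random(seed)
    return [init_particle(n_features, rng) for _ in range(swarm_size)]

swarm = init_swarm(swarm_size=10, n_features=41)  # NSL-KDD has 41 features
particle_size = sum(swarm[0][0])                  # number of 1's in the first particle
print(particle_size)
```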

(ii) Evaluation of fitness function

Fitness function computation is a prominent step in the PSO algorithm. It produces the scores, and based on that score, the significance of a particular particle (candidate solution) is determined. This is the novelty of this paper because, here, the fitness function is computed by taking the G_mean of the ensemble model (described in Function 1). An ensemble model is employed, which combines multiple machine learning (in this case, 7) and deep learning models (in this case, 2) with a weight parameter (\(\gamma\)) given to each model (\(M\)) depending upon their importance. Common hyperparameter values given to both DNN and CNN models are as follows: ‘Adam’ as an optimizer, ‘categorical cross-entropy’ in the loss function, ‘relu’ & ‘softmax’ in the activation function, batch size = 32, and no. of epochs = 25. For the DNN model, the dropout rate is determined to be 0.2, and for the CNN model, kernel size and pool size are equal to 3 and 2, respectively. Equation (2) explains the fitness function used in the proposed phase 1 of the feature selection module.

where \({M}_{i}\in \{DT, RF, Xgboost, Lightgbm, Catboost, SVM, KNN\}\) and \({M}_{j}\in \{DNN, CNN\}\).

Several extensive experiments are performed by varying the value of γ from 0.1 to 1, and it is observed that γ = 1 offers optimal results in this paper. Thus, equal weights (γ) are given to all the models, equal to 1, determined by the trial-and-error method.

The following Eq. (3) shows the formula for computing the geometric mean between specificity and sensitivity. Equations (4) and (5) provide the formula for computing specificity and sensitivity, respectively. Definitions of True Positives, False Negatives, True Negatives, and False Positives are given in Sect. 6.2 of this paper.

where,

Compute_fitness(particlek, DC_Train)
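The fitness computation described above can be sketched as follows. The G-mean uses the standard definition (square root of sensitivity times specificity). Because Eq. (2) is not reproduced in this text, combining the per-model scores as a γ-weighted sum is an assumption, as are the function names and the toy confusion counts; a binary confusion matrix is used for brevity, whereas the paper also considers multi-class classification.

```python
import math

def g_mean(tp, fn, tn, fp):
    """Geometric mean of sensitivity and specificity for a binary confusion matrix."""
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return math.sqrt(sensitivity * specificity)

def ensemble_fitness(confusions, gamma=1.0):
    """Assumed form: gamma-weighted sum of per-model G-means (gamma = 1 in the paper)."""
    return sum(gamma * g_mean(*counts) for counts in confusions.values())

# Hypothetical (TP, FN, TN, FP) counts for two of the ensemble members,
# each trained on the feature subset encoded by one particle.
confusions = {"DT": (90, 10, 80, 20), "KNN": (50, 50, 100, 0)}
fitness = ensemble_fitness(confusions)
print(round(fitness, 4))
```

Note that the second toy model reaches 75% accuracy but a G-mean of only about 0.71, which illustrates why G-mean penalizes weak detection of one class more strongly than accuracy does.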

(iii) Update personal and global best position

After computing the fitness score of each particle in the swarm, the personal best position of each particle is updated based on the fitness function. If a particle’s current fitness score is better than the score of its personal best position, the personal best position is replaced by the current position; otherwise, the previous personal best position is retained. The global best position is the one with the highest fitness score among all the personal best positions of the particles within the swarm. At the end of the iterations, the global best position is considered the best feature subset provided by this phase of the proposed model.
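The update rule for the personal and global best positions can be sketched as below. The function name, argument layout, and toy values are hypothetical; higher fitness is treated as better, in line with the description above.

```python
def update_bests(positions, scores, pbest_pos, pbest_score):
    """Update personal bests in place and return the global best (position, score)."""
    for i, (pos, score) in enumerate(zip(positions, scores)):
        if score > pbest_score[i]:  # current position beats the stored personal best
            pbest_pos[i] = list(pos)
            pbest_score[i] = score
    best = max(range(len(pbest_score)), key=pbest_score.__getitem__)
    return list(pbest_pos[best]), pbest_score[best]

positions = [[1, 0, 1], [0, 1, 1]]   # current positions of two particles
scores = [0.70, 0.90]                # their current fitness scores
pbest_pos = [[1, 1, 0], [0, 1, 0]]   # personal bests so far
pbest_score = [0.80, 0.85]

gbest_pos, gbest_score = update_bests(positions, scores, pbest_pos, pbest_score)
print(gbest_pos, gbest_score)
```

In this toy run only the second particle improves, so its new position becomes both its personal best and the global best.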

(iv) Compute velocity and update particle position

The velocity vector of the particle (described in Function 2) is computed using the following Eq. (6), and the position of the particle is updated (shown in Function 3) using the following Eq. (7).

where \(W\) is the inertia weight; \(r_{1}\) and \(r_{2}\) are random numbers drawn from the range [0,1]; and \(C_{1}\) and \(C_{2}\) are the learning factors, termed the cognitive behavior and social behavior coefficients, respectively. \(P_{i}^{t}\) and \(V_{i}^{t}\) denote the position and velocity vectors of the ith particle, respectively, at time t. \(P_{b_{i}}^{t}\) denotes the personal best position of the ith particle at time t, and \(g_{b}^{t}\) is the global best position (optimal feature subset) within the swarm at time t. In this paper, extensive experiments are performed on all three datasets to determine the best values of each parameter in phase 1 PSO-based feature selection. The optimal values of each parameter, determined by the trial-and-error method, are provided in Table 4. Only those values that give an optimal result in terms of the fitness score at every iteration are selected. The experiments are conducted by varying the swarm size from 10 to 50, and it is observed that a swarm size of 10 provides the optimal result for all the datasets.

Compute_velocity(c1, c2, r1, r2, w, global_best, personal_bestk, particlek, velocity_particlek)

Update_position(velocity_particlek+1, particlek)
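A minimal sketch of the two functions is shown below. Since Eqs. (6) and (7) are not reproduced in this text, the velocity rule follows the canonical PSO update built from the symbols defined above, and the position rule uses a sigmoid transfer function, a common choice for binary PSO that is assumed here rather than taken from the paper. The parameter values are illustrative and are not those of Table 4.

```python
import math
import random

def compute_velocity(c1, c2, r1, r2, w, global_best, personal_best, particle, velocity):
    """Canonical PSO rule: inertia term + cognitive pull + social pull, per dimension."""
    return [
        w * v + c1 * r1 * (pb - p) + c2 * r2 * (gb - p)
        for v, p, pb, gb in zip(velocity, particle, personal_best, global_best)
    ]

def update_position(new_velocity, rng):
    """Assumed binary update: keep a feature with probability sigmoid(velocity)."""
    return [1 if rng.random() < 1.0 / (1.0 + math.exp(-v)) else 0 for v in new_velocity]

new_velocity = compute_velocity(
    c1=1.5, c2=1.5, r1=0.5, r2=0.5, w=0.7,
    global_best=[0, 1], personal_best=[1, 1],
    particle=[1, 0], velocity=[0.0, 0.2],
)
new_position = update_position(new_velocity, random.Random(0))
print(new_velocity, new_position)
```

In the example, the first feature is selected by the particle but not by the global best, so its velocity turns negative and the feature becomes more likely to be dropped.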

(v) Termination condition

There are two ways to terminate the execution of the algorithm: (a) by defining a fixed number of iterations, and (b) by observing the convergence graph of the best fitness score against the number of iterations. In the second case, the execution of the algorithm is terminated once the fitness score of the global best position (feature subset) becomes stable across iterations. In this paper, extensive experiments are conducted to select the appropriate number of iterations per dataset. Therefore, the number of iterations varies depending on the dataset. Figure 9a demonstrates the convergence graph between the best fitness score per iteration and the number of iterations in the algorithm. Table 4 indicates the number of iterations for each dataset as the convergence condition of the algorithm. After this phase, the number of features is reduced to 25, 29, and 48 for the NSL-KDD, UNSW-NB15, and CSE-CIC-IDS2018 datasets, respectively.
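The second termination criterion can be approximated programmatically, as in the sketch below. The `patience` and `tol` parameters are hypothetical and are introduced only to express "the fitness score becomes stable"; the paper itself selects the number of iterations from the convergence graph.

```python
def has_converged(best_scores, patience=5, tol=1e-4):
    """True when the global-best fitness varied by less than tol over the last `patience` iterations."""
    if len(best_scores) <= patience:
        return False
    window = best_scores[-(patience + 1):]
    return max(window) - min(window) < tol

history = [0.50, 0.60, 0.70, 0.70, 0.70, 0.70, 0.70, 0.70]
print(has_converged(history))      # the last six scores are identical
print(has_converged(history[:7]))  # the window still contains the jump from 0.60
```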

5.2.2 Phase2: ant colony optimization based feature selection

In this phase, the most informative features are selected based on the mutual information value and correlation value between feature-feature pairs and class-feature pairs. This is achieved by considering mutual information and correlation metrics in the fitness function of the ant colony optimization algorithm. Therefore, the feature subset obtained after this phase contains uncorrelated and information-rich features. Removing irrelevant features improves computational efficiency, while the lower dimensionality of the data keeps the model lightweight. Figure 5 shows the flowchart, and Algorithm 3 outlines the overall steps involved in the proposed phase 2 feature selection.

To determine the optimal values of each parameter in the ACO-based feature selection in phase 2, comprehensive experiments are performed on each dataset considered in this paper. The optimal values of each parameter, determined by the trial-and-error method, are provided in Table 5. Only those values that offer an optimal result in terms of the fitness function at each iteration, considering multi-class classification, are selected. The fitness function is observed to stop increasing after 50, 60, and 50 iterations for the NSL-KDD, UNSW-NB15, and CSE-CIC-IDS2018 datasets, respectively, as demonstrated in Fig. 9a. The number of ants is initialized with the number of features obtained from the PSO-based feature selection phase for each dataset (discussed in Sect. 5.2.1). Moreover, the number of features in the subset is determined on the basis of feature importance, which is obtained from the importance plot using Xgboost for each dataset.

Phase 2 for feature selection

The primary steps of the ant colony optimization algorithm applied in this paper are as follows: (i) Initialization of Look-up-table (LUT), (ii) Heuristic Function, (iii) Probability Function, (iv) Fitness Function, and (v) Pheromone Update Rule. Each step involved in the construction of this phase is described as follows:

(i) Initialization of look-up-table (LUT)

Initialization of the look-up table (LUT) is the first step in the ant colony optimization algorithm. The LUT is a matrix of dimension (n*n) stored in either lower or upper triangular form. Only one half of the matrix is used because the values are symmetric about the diagonal. This implies that only \((n*(n-1))/2\) entries in the LUT contain unique values, so only those entries are filled. Here, ‘n’ represents the number of features obtained after the first phase of the feature selection module in the proposed model. The LUT holds two pieces of information associated with each feature-feature pair: (a) the pheromone value and (b) the heuristic value. Figure 6 shows an example of the LUT employed in this paper, where both values are combined in a single table. Functions 4 and 5 explain the initialization of the pheromone LUT and heuristic LUT, respectively.

Initialize_Pheromone_LUT(n)

Heuristic_LUT(n, Xgboost(), DF_Train)

The pheromone LUT is first initialized with a constant value (here, 0.8) and is updated after each iteration, as indicated in Algorithm 3. Furthermore, the heuristic LUT is initialized with the heuristic value (described in Function 6) corresponding to each entry in the LUT, which is computed by applying the proposed heuristic function (shown in Eq. 8). The heuristic value depends upon the ‘true positive rate’ and the ‘cosine similarity’ corresponding to different feature-feature pairs in the LUT. Since these quantities depend only on the training data, the entries in the heuristic LUT remain constant throughout the execution of Algorithm 3. In Fig. 6, the upper section represents the heuristic value, whereas the bottom section denotes the pheromone value in each row of the LUT. For instance, the entry corresponding to feature ‘1’ and feature ‘2’ holds the values 0.55 and 0.73, which indicate the heuristic and the pheromone values, respectively.
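The triangular storage of the LUT can be sketched with a dictionary keyed by unordered feature pairs. This is an illustrative data-structure choice, not the authors' implementation; the `lookup` helper is hypothetical, while the initial value 0.8 and n = 25 (NSL-KDD after phase 1) come from the text.

```python
def initialize_pheromone_lut(n, tau0=0.8):
    """Store one pheromone value per unordered feature pair (i < j)."""
    return {(i, j): tau0 for i in range(n) for j in range(i + 1, n)}

def lookup(lut, a, b):
    """Symmetric access: (a, b) and (b, a) share a single stored entry."""
    return lut[(a, b) if a < b else (b, a)]

pheromone = initialize_pheromone_lut(25)  # 25 features remain after phase 1 on NSL-KDD
print(len(pheromone))                     # n * (n - 1) / 2 = 300 unique entries
print(lookup(pheromone, 7, 3))
```

A heuristic LUT can use the same keys, with each entry filled once by the heuristic function and then left unchanged.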

(ii) Heuristic function

A novel heuristic function is utilized to evaluate the heuristic values in the heuristic LUT. Function 6 represents the overall step-by-step procedure for evaluating the heuristic value for different feature-feature pairs in the LUT. The heuristic value corresponding to feature ‘A’ and feature ‘B’ (\({\eta }_{AB}\)) is computed using Eq. (8). It is obtained by dividing the ‘True Positive Rate’ by the ‘Cosine_Similarity’ of the feature-feature pair.
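Written out from this description, Eq. (8) takes the following form. It is assumed here that the numerator is the weighted TPR of Eq. (10) obtained for the pair; the subscript on the numerator is notation introduced for this illustration.

\[
{\eta }_{AB} = \frac{\mathrm{Weighted\_TPR}_{AB}}{\mathrm{Cosine\_Similarity}(A, B)} \tag{8}
\]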

The TPR for a specific class ‘i’ is assessed using Eq. (9), while the weighted TPR is measured using Eq. (10). For measuring the value of Weighted_TPR, several classification algorithms (such as DT, RF, Xgboost, and KNN) were analyzed, and the ‘Xgboost’ classifier was observed to offer the best performance.
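Under the standard per-class definition, and assuming \(TP_i\) and \(FN_i\) denote the true positives and false negatives of class ‘i’ and that each class is weighted by its share of the samples, Eqs. (9) and (10) can be written as:

\[
\mathrm{TPR}_i = \frac{TP_i}{TP_i + FN_i} \tag{9}
\]

\[
\mathrm{Weighted\_TPR} = \sum_{i=1}^{C} \frac{{S}_{i}}{S}\, \mathrm{TPR}_i \tag{10}
\]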

where ‘C’ and ‘S’ are the total number of classes and the total number of samples, respectively, in the data, and \({S}_{i}\) represents the number of instances of a particular class ‘i’ present in the data. The definitions of True Positive and False Negative are provided in Sect. 6.2. The Cosine_Similarity between two features, ‘A’ and ‘B’, is determined using Eq. (11).
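In its standard form, with the sums assumed to run over all ‘S’ samples, Eq. (11) reads:

\[
\mathrm{Cosine\_Similarity}(A, B) = \frac{\sum_{i=1}^{S} {A}_{i} {B}_{i}}{\sqrt{\sum_{i=1}^{S} {A}_{i}^{2}}\; \sqrt{\sum_{i=1}^{S} {B}_{i}^{2}}} \tag{11}
\]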

where \({A}_{i}\) and \({B}_{i}\) are the values of the ‘ith’ sample in feature vectors ‘A’ and ‘B’, respectively.

Heuristic_value(column_i, column_j, DF_Train, Xgboost())
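The heuristic computation can be illustrated with a short sketch. It is not the paper's Function 6: the predictions `y_pred` are assumed to come from a classifier trained on the two feature columns (Xgboost in the paper) and are simply passed in here, and all function names are hypothetical.

```python
import math


def weighted_tpr(y_true, y_pred):
    """Class-proportion-weighted true positive rate."""
    total = len(y_true)
    score = 0.0
    for cls in set(y_true):
        support = sum(1 for t in y_true if t == cls)           # TP + FN
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        score += (support / total) * (tp / support)
    return score


def cosine_similarity(a, b):
    """Cosine similarity between two feature columns."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def heuristic_value(col_a, col_b, y_true, y_pred):
    """Weighted TPR divided by the cosine similarity of the feature pair."""
    return weighted_tpr(y_true, y_pred) / cosine_similarity(col_a, col_b)


# Toy data: weighted TPR is 0.75 and the cosine similarity is 0.5.
h = heuristic_value([1, 0, 1, 0], [1, 1, 0, 0], [0, 0, 1, 1], [0, 1, 1, 1])
```

A pair of dissimilar features (low cosine similarity) that still classifies well receives a large heuristic value. The sketch does not guard against orthogonal or all-zero columns, for which the division is undefined.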

(iii) Probability function

Initially, the number of ants is equal to the number of features in the subset obtained after phase 1 feature selection. Each ant is placed at a distinct feature, so that no two ants stand at the same feature. Each ant then explores its path by visiting the other features (nodes) with the aim of achieving the best feature subset. This is attained by choosing the routes with the highest probability value. Equation (12) gives the probability value of each feature in the unvisited feature list, which helps the ant select the next feature on its path. Function 7 explains the systematic approach to calculate the probability values between two given features and returns the best feature subset selected by a specific ant ‘k’.
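Assuming the standard ant colony transition rule, which matches the symbols defined next, Eq. (12) can be written as follows, with ‘j’ ranging over the unvisited features:

\[
{P}_{AB}^{k} = \frac{{\left({\tau }_{AB}\right)}^{\alpha }\, {\left({\eta }_{AB}\right)}^{\beta }}{\sum_{j=1}^{m} {\left({\tau }_{Aj}\right)}^{\alpha }\, {\left({\eta }_{Aj}\right)}^{\beta }} \tag{12}
\]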

where \({P}_{AB}^{k}\) is the probability of selecting the next feature as ‘B’ by ant ‘k’ if the ant ‘k’ is standing at feature ‘A’. \({\eta }_{AB}\) is the heuristic value between feature ‘A’ and feature ‘B’ present in the heuristic LUT. \({\tau }_{AB}\) is the pheromone value between feature ‘A’ and feature ‘B’ present in the pheromone LUT. ‘m’ is the total number of unvisited features. The parameter ‘α’ adjusts the impact of \({\tau }_{AB}\), while the parameter ‘β’ governs the effect of \({\eta }_{AB}\).

antBuildSubset(ia, n, s, alpha, beta)
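The path construction of a single ant can be sketched as below. This is an illustration under stated assumptions rather than the paper's Function 7: `tau` and `eta` are passed as full symmetric matrices for simplicity, the next feature is the one with the highest probability (as the text describes), and the function and parameter names are hypothetical.

```python
def selection_probabilities(current, unvisited, tau, eta, alpha, beta):
    """Probability of moving from `current` to each unvisited feature."""
    weights = [(tau[current][j] ** alpha) * (eta[current][j] ** beta)
               for j in unvisited]
    total = sum(weights)
    return [w / total for w in weights]


def ant_build_subset(start, n, s, tau, eta, alpha=1.0, beta=1.0):
    """Grow a subset of s features, starting from the ant's own feature."""
    subset = [start]
    unvisited = [f for f in range(n) if f != start]
    while len(subset) < s and unvisited:
        probs = selection_probabilities(subset[-1], unvisited, tau, eta,
                                        alpha, beta)
        best = max(range(len(probs)), key=probs.__getitem__)
        subset.append(unvisited.pop(best))   # move to the most probable feature
    return subset


# Toy LUTs for n = 3 features: uniform pheromone, pair-specific heuristic.
tau = [[0.8] * 3 for _ in range(3)]
eta = [[0.0, 2.0, 1.0],
       [2.0, 0.0, 3.0],
       [1.0, 3.0, 0.0]]
first_step = selection_probabilities(0, [1, 2], tau, eta, 1.0, 1.0)
path = ant_build_subset(0, 3, 3, tau, eta)
```

A common variant samples the next feature in proportion to these probabilities instead of taking the maximum, which keeps more exploration in the search.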

(iv) Fitness function

In the ant colony optimization-based feature selection algorithm, the fitness function plays a crucial role in determining the best feature subset, so it must be set appropriately. During this phase, two different fitness functions are utilized to analyze various combinations in subset selection. The aim is to identify the most crucial feature subset for designing a lightweight IDS model. Both fitness functions, along with their algorithmic details, are described in the following subsections (a) and (b):

a. Mutual information-based

This fitness function selects the most crucial features based on mutual information. It measures the mutual information between the feature subset selected by a specific ant and the corresponding target class. To do so, it calculates the entropy of the subset and the conditional entropy of the subset given the class. Functions 8.a and 8.a1 explain the systematic way to use mutual information as a fitness function in the proposed model. Equation (13) gives the mutual information \(I(F, C)\) between a selected subset of features (F) and the class label (C).

fitness_score (feature_subset_ia, DF_Train)

Mutual_Information(Xf, Y)

The entropy ‘H’ of feature subset ‘F’ is estimated using Eq. (14).

The conditional entropy of feature subset ‘F’ given class ‘C’ is measured using Eq. (15).
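Under the standard information-theoretic definitions, and assuming base-2 logarithms (the base is not stated in the text), Eqs. (13) to (15) can be written as:

\[
I(F, C) = H\left(F\right) - H(F|C) \tag{13}
\]

\[
H\left(F\right) = -\sum_{i} P\left({f}_{i}\right) \log_{2} P\left({f}_{i}\right) \tag{14}
\]

\[
H(F|C) = -\sum_{k} P\left({c}_{k}\right) \sum_{i} P\left({f}_{i}|{c}_{k}\right) \log_{2} P\left({f}_{i}|{c}_{k}\right) \tag{15}
\]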

where F and C represent the feature subset and target class, respectively. \(I(F, C)\), \(H\left(F\right)\), and \(H(F|C)\) describe the mutual information between F and C, the entropy of F, and the conditional entropy of F given C, respectively. \(P \left({f}_{i}|{c}_{k}\right)\) is the probability of a feature having the value \({f}_{i}\) given that the target class is \({c}_{k}\), while \(P\left({f}_{i}\right)\) and \(P\left({c}_{k}\right)\) represent the probability of a feature having the value \({f}_{i}\) and the probability of the target class being \({c}_{k}\), respectively.
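A minimal sketch of this fitness function is given below. It assumes discrete (or already discretized) feature values, treats each row of the selected subset as one joint value, and uses base-2 logarithms; the function names are hypothetical and do not reproduce Functions 8.a and 8.a1.

```python
import math
from collections import Counter


def entropy(values):
    """Shannon entropy (in bits) of a sequence of hashable values."""
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in Counter(values).values())


def conditional_entropy(values, labels):
    """H(F|C): entropy of the values within each class, weighted by P(c)."""
    n = len(values)
    h = 0.0
    for label, count in Counter(labels).items():
        group = [v for v, l in zip(values, labels) if l == label]
        h += (count / n) * entropy(group)
    return h


def mutual_information(rows, labels):
    """I(F, C) = H(F) - H(F|C) for the feature subset stored row-wise."""
    values = [tuple(row) for row in rows]
    return entropy(values) - conditional_entropy(values, labels)


labels = [0, 0, 1, 1]
mi_informative = mutual_information([(0,), (0,), (1,), (1,)], labels)
mi_uninformative = mutual_information([(0,), (1,), (0,), (1,)], labels)
```

A subset that determines the class receives the full class entropy as its score, while a subset that is independent of the class scores zero.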

b. Correlation-based

The second fitness function is correlation-based. Equation (16) scores a particular feature subset ‘F’ selected by the ant colony optimization-based feature selection algorithm. This function takes both the feature-feature correlation and the class-feature correlation into account: for the best feature subset, the class-feature correlation should be as high as possible, and the feature-feature correlation should be as low as possible. Function 8.b, along with Functions 8.b1 and 8.b2, explains this fitness function.
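This description matches the widely used correlation-based feature selection merit. Assuming that form (the name \(\mathrm{Merit}_{F}\) is introduced here for illustration), Eq. (16) would read:

\[
\mathrm{Merit}_{F} = \frac{s\, \overline{{r }_{cf}}}{\sqrt{s + s\left(s-1\right) \overline{{r }_{ff}}}} \tag{16}
\]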

where ‘s’ is the number of features in the feature subset selected by a particular ant, and \({r}_{cf}\) indicates the correlation between the categorical class and a numerical feature. It is measured using Kendall’s rank correlation coefficient, and its average value, \(\overline{{r }_{cf}}\), is taken here; the systematic procedure for computing it is outlined in Function 8.b1. \({r}_{ff}\) is the Pearson correlation coefficient between two numerical features ‘A’ and ‘B’, computed using Eq. (17), where ‘S’ represents the total number of samples while \({A}_{i}\) and \({B}_{i}\) indicate the values of the ‘ith’ sample of features ‘A’ and ‘B’, respectively. Its average value, \(\overline{{r }_{ff}}\), is taken, and the step-by-step process for computing it is provided in Function 8.b2.
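For reference, the Pearson coefficient of Eq. (17) in its standard form is shown below, where \(\overline{A}\) and \(\overline{B}\) denote the sample means of the two features (notation assumed here):

\[
{r}_{ff} = \frac{\sum_{i=1}^{S} \left({A}_{i} - \overline{A}\right)\left({B}_{i} - \overline{B}\right)}{\sqrt{\sum_{i=1}^{S} {\left({A}_{i} - \overline{A}\right)}^{2}}\; \sqrt{\sum_{i=1}^{S} {\left({B}_{i} - \overline{B}\right)}^{2}}} \tag{17}
\]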

fitness_score (feature_subset_ia, DF_Train)

compute_rcf(X,Y)

compute_rff(X)
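The feature-feature part of this fitness function can be sketched as follows. Several points are assumptions made for illustration: the merit is taken to have the correlation-based feature selection form, absolute Pearson values are averaged over all feature pairs, and the average class-feature correlation is passed in as a number instead of being computed with Kendall's coefficient. The names are hypothetical and do not reproduce Functions 8.b to 8.b2.

```python
import math
from itertools import combinations


def pearson(a, b):
    """Pearson correlation coefficient between two numerical columns."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def mean_feature_feature_corr(columns):
    """Average absolute Pearson correlation over all feature pairs."""
    pairs = list(combinations(columns, 2))
    if not pairs:                       # a single feature has no pairs
        return 0.0
    return sum(abs(pearson(a, b)) for a, b in pairs) / len(pairs)


def merit(s, mean_rcf, mean_rff):
    """Assumed merit: high class-feature, low feature-feature correlation."""
    return (s * mean_rcf) / math.sqrt(s + s * (s - 1) * mean_rff)


columns = [[1, 2, 3, 4], [2, 4, 6, 8], [4, 3, 2, 1]]
redundancy = mean_feature_feature_corr(columns)
score_low_redundancy = merit(3, 0.6, 0.1)
score_high_redundancy = merit(3, 0.6, 0.9)
```

With the class-feature correlation held fixed, the subset whose features are less correlated with each other receives the higher score. Constant columns have zero variance and would need a guard before calling `pearson`.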

(v) Pheromone update rule

The pheromone update (summarized in Function 9) is the last step in the ant colony optimization-based feature selection algorithm, and the updated values are stored in the pheromone LUT. In nature, ants secrete a chemical (called pheromone) along the path where they walk. In searching for the best feature subset, this pheromone helps other ants follow a suitable route, since they are attracted by the amount of pheromone on it: the higher the pheromone concentration on a route, the higher the probability that an ant selects that route. Hence, the pheromone LUT is updated for the corresponding feature-feature pairs.

Update_Pheromone(n, \(\rho\), Q, feature_subset_list, F1_Score, Pheromone_LUT)

The pheromone value is first decremented (evaporated) for all feature–feature pairs. Equations (18) and (19) describe the formulas for updating the values in the pheromone LUT.

where \({\tau }_{ij}^{\left(t+1\right)}\) represents the amount of pheromone on the pair of feature (i) and feature (j) at the (t + 1)th iteration, ρ is the evaporation rate (set to 0.1 by trial and error), and Q is a constant (here, 1). To find the F1-Score value, various machine learning models, such as KNN, DT, RF, and xgboost, were applied, and xgboost was observed to perform better than the others.
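A hedged sketch of such an update is shown below. The evaporation step follows the text (all pairs are decremented by the rate ρ). The deposit rule is an assumption: each pair inside an ant's subset is taken to receive Q multiplied by that subset's F1-Score, which may differ from the exact form of Eq. (19). The function name and the triangular list-of-lists storage are also hypothetical.

```python
from itertools import combinations


def update_pheromone(pheromone, subsets, f1_scores, rho=0.1, q=1.0):
    """Evaporate every pair, then reinforce pairs used by the ants.

    `pheromone` stores values in the strict upper triangle (i < j).
    """
    n = len(pheromone)
    for i in range(n):
        for j in range(i + 1, n):
            pheromone[i][j] *= (1.0 - rho)          # evaporation for all pairs
    for subset, f1 in zip(subsets, f1_scores):
        for a, b in combinations(sorted(subset), 2):
            pheromone[a][b] += q * f1               # assumed deposit rule
    return pheromone


# Three features, initial pheromone 0.8, one ant that chose features 0 and 1.
n = 3
lut = [[0.8 if j > i else None for j in range(n)] for i in range(n)]
update_pheromone(lut, subsets=[[1, 0]], f1_scores=[0.9])
```

After one iteration, the pair used by the ant holds more pheromone than the untouched pairs, which only evaporate.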

5.3 GA-based hyperparameter tuning

The best set of hyperparameters improves the detection models’ performance. For this reason, this paper introduces a genetic algorithm-based hyperparameter tuning module for retrieving the best set of hyperparameters for each detection model. Genetic algorithms (GAs) offer a powerful and flexible approach to optimizing hyperparameters in machine learning models. Their strengths are global search capability, adaptability, parallelism, robustness to noise, and a balanced exploration–exploitation trade-off. These attributes make GAs effective for navigating complex and high-dimensional hyperparameter spaces, often surpassing other optimization methods such as grid search, random search, and Bayesian optimization. Compared to grid search, GAs explore the search space without an exhaustive search. Compared to random search, they leverage information from earlier generations to guide the search. In contrast to Bayesian optimization, GAs are less computationally intensive per iteration and are better equipped to handle larger search spaces. GAs also handle mixed types of hyperparameters and maintain diversity in the population, thereby reducing the risk of premature convergence. Algorithm 4 outlines the proposed GA-based hyperparameter tuning module. As shown in Algorithm 4, this module is executed using the training and testing datasets.

Table 6 shows the parameter settings for executing the genetic algorithm in the search for the best hyperparameter values. To determine the value of each parameter of the GA-based hyperparameter optimization in the third module, several experiments were performed on each dataset by varying the population size, selection rate, and mutation rate. The values given in Table 6 were obtained by this trial-and-error method: focusing on multi-class classification, only those values that offered the best result in terms of the fitness function at each iteration were selected. As depicted in Fig. 9b, the convergence graph between the fitness score and the number of iterations (or generations), the fitness function reaches its maximum at 128, 64, and 64 generations for the NSL-KDD, UNSW-NB15, and CSE-CIC-IDS2018 datasets, respectively.

Hyperparameter Tuning.

(i) Initialization of population

Initialization of the population is the first step in implementing the GA-based hyperparameter tuning module. Each detection model is tuned separately in search of its best hyperparameters, so this module is executed once for each detection model provided as input. The hyperparameters and their approximate ranges are pre-specified in this module, and the population is initialized based on these ranges. Figure 7 describes the structure of a population, chromosome, and gene for the xgboost classifier in the proposed genetic algorithm. For instance, when this module is executed for the xgboost classifier, the genes in the chromosome are ‘max_depth’, ‘booster’, ‘n_estimators’, ‘min_child_weight’, ‘gamma’, and ‘subsample’.

(ii) Fitness score computation

Each candidate chromosome is evaluated using the fitness function. Since multi-class (multiple attacks and normal class) classification is being performed here, the weighted F1-score of the detection model is utilized for the fitness score computation of the chromosomes. The formulas for computing the F1-score, precision, and recall for a particular class ‘i’ are specified in Eqs. (20–22), respectively. The formula for computing the weighted F1-score is provided in Eq. (23).
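Under the standard definitions, and assuming \(TP_i\), \(FP_i\), and \(FN_i\) denote the true positives, false positives, and false negatives of class ‘i’, Eqs. (20) to (23) can be written as:

\[
\mathrm{F1}_i = \frac{2 \cdot \mathrm{Precision}_i \cdot \mathrm{Recall}_i}{\mathrm{Precision}_i + \mathrm{Recall}_i} \tag{20}
\]

\[
\mathrm{Precision}_i = \frac{TP_i}{TP_i + FP_i} \tag{21}
\]

\[
\mathrm{Recall}_i = \frac{TP_i}{TP_i + FN_i} \tag{22}
\]

\[
\mathrm{Weighted\_F1} = \sum_{i=1}^{|c|} \frac{|{S}_{i}|}{N}\, \mathrm{F1}_i \tag{23}
\]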

where N and \(|c|\) represent the total number of instances and the total number of classes, respectively, while \(|{S}_{i}|\) denotes the total number of instances of the ‘ith’ class in the dataset.

(iii) Parent selection

Parent chromosomes are selected using the roulette wheel selection function [62]. The parent selection rate is set to 0.5 by trial and error. This means that 50% of the chromosomes from the old population are carried over into the new population, and the remaining 50% are new child chromosomes built through the reproduction process described below.
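Roulette wheel selection can be sketched as follows. This is a generic illustration of fitness-proportionate selection, not the exact routine of [62]: it samples with replacement, and the function name, the `rng` parameter, and the example population are assumptions.

```python
import random


def roulette_wheel_select(population, fitness, k, rng=random):
    """Pick k chromosomes with probability proportional to their fitness."""
    total = sum(fitness)
    selected = []
    for _ in range(k):
        pick = rng.uniform(0, total)        # position of the wheel pointer
        running = 0.0
        for chromosome, fit in zip(population, fitness):
            running += fit
            if fit > 0 and running >= pick:
                selected.append(chromosome)
                break
    return selected


# Selection rate 0.5: half of a population of four is carried over.
rng = random.Random(0)
population = ["c1", "c2", "c3", "c4"]
fitness = [0.91, 0.88, 0.75, 0.60]          # e.g. weighted F1-scores
parents = roulette_wheel_select(population, fitness,
                                k=int(0.5 * len(population)), rng=rng)
```

Chromosomes with a higher weighted F1-score occupy a larger slice of the wheel and are therefore more likely to survive, while weaker ones still have a nonzero chance.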

(iv) Reproduction operation

The reproduction operation generates new child chromosomes to restore the number of chromosomes in the population. This phase contains two operations: crossover and mutation. Figure 8 describes the overall reproduction operation used in this paper for the xgboost classifier.

a. Crossover: In this phase, the single-point crossover [63] operation is applied, as shown in Fig. 8. From the two parent chromosomes, two new child chromosomes are created. The crossover operation proceeds as follows. First, two chromosomes are selected from the population as the parent chromosomes. Second, the first half of the first parent chromosome and the second half of the second parent chromosome are merged to make the first child chromosome. Third, the first half of the second parent chromosome and the second half of the first parent chromosome are merged to make the second child chromosome.

b. Mutation: In this phase, a random resetting mutation operation is applied to introduce variation in the population. This operation is performed on each chromosome in the population. Here, the mutation rate is set to 0.5 by trial and error. This means that 50% of the genes in each chromosome are reset to new random values drawn from their pre-defined ranges. Figure 8 depicts the mutation operation where, out of the 6 genes in the chromosome, 3 randomly chosen genes (shown in yellow after the mutation operation) are changed to new values from the appropriate pre-defined ranges.
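The two reproduction operators can be sketched together as below. The gene order follows the xgboost chromosome listed earlier, but the value ranges in `GENE_SAMPLERS` are hypothetical placeholders and not the ranges used in the paper; the function names are likewise assumptions.

```python
import random

# Hypothetical per-gene samplers, in chromosome order.
GENE_SAMPLERS = [
    lambda r: r.randint(3, 10),                 # max_depth
    lambda r: r.choice(["gbtree", "dart"]),     # booster
    lambda r: r.randint(50, 500),               # n_estimators
    lambda r: r.randint(1, 10),                 # min_child_weight
    lambda r: r.uniform(0.0, 1.0),              # gamma
    lambda r: r.uniform(0.5, 1.0),              # subsample
]


def single_point_crossover(parent_a, parent_b):
    """Swap the second halves of two parents to produce two children."""
    point = len(parent_a) // 2
    child_1 = parent_a[:point] + parent_b[point:]
    child_2 = parent_b[:point] + parent_a[point:]
    return child_1, child_2


def random_reset_mutation(chromosome, samplers, rate=0.5, rng=random):
    """Reset a fraction `rate` of the genes to fresh values from their ranges."""
    n_mutate = int(round(rate * len(chromosome)))
    mutated = list(chromosome)
    for pos in rng.sample(range(len(chromosome)), n_mutate):
        mutated[pos] = samplers[pos](rng)
    return mutated


rng = random.Random(42)
parent_a = [6, "gbtree", 100, 1, 0.0, 1.0]
parent_b = [4, "dart", 300, 5, 0.2, 0.8]
child_1, child_2 = single_point_crossover(parent_a, parent_b)
mutant = random_reset_mutation(child_1, GENE_SAMPLERS, rate=0.5, rng=rng)
```

With six genes and a mutation rate of 0.5, three positions are redrawn. A redrawn gene may by chance receive its previous value, so at most three genes actually differ after mutation.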

(v) Termination condition