Abstract

We consider interpolatory quadrature formulae relative to the Legendre weight function \(w(t)=1\) on the interval \([-1,1]\). On certain spaces of analytic functions, the error term of these formulae is a continuous linear functional. We obtain new estimates for the norm of the error functional when the latter does not keep a constant sign at the monomials. The derived estimates are then applied to the Clenshaw–Curtis formula, the Basu formula and the Fejér formula of the first kind.


1 Introduction

We consider the interpolatory quadrature formula relative to the Legendre weight function \(w(t)=1\) on the interval \([-1,1]\)

\[\int_{-1}^{1}f(t)\,dt=\sum_{\nu =1}^{N}w_{\nu }f(\tau _{\nu })+R_{N}(f), \tag{1.1}\]

where the nodes \(\tau _{\nu }=\tau _{\nu }^{(N)}\), ordered decreasingly, are all in the interval \([-1,1]\), and the weights \(w_{\nu }=w_{\nu }^{(N)}\) are real numbers. Formula (1.1) has degree of exactness \(d\ge N-1\), i.e., \(R_{N}^{}(f)=0\) for all \(f\in \mathbb {P}_{N-1}\).

For functions \(f\in C^{d+1}[-1,1]\), the error term of formula (1.1) can be estimated by

\[|R_{N}(f)|\le \max _{-1\le t\le 1}|f^{(d+1)}(t)|\int _{-1}^{1}|K_{d}(t)|\,dt, \tag{1.2}\]

where \(K_{d}\) is the dth Peano kernel (cf. [3, Section 4.3]). Estimate (1.2), although frequently quoted, is of limited use. For one thing, higher-order derivatives of a function are not readily available, and, even when they are, the resulting error bound cannot be applied to functions of lower-order continuity. Furthermore, estimates like (1.2) do not lend themselves to comparing quadrature formulae with different degrees of exactness.

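A more practical estimate can be obtained by means of a Hilbert space method proposed by Hämmerlin in [5]. If f is a single-valued holomorphic function in the disc \(C_{r}=\{z\in \mathbb {C}:|z|<r\}\), \(r>1\), then it can be written as

\[f(z)=\sum _{k=0}^{\infty }a_{k}z^{k},\quad z\in C_{r}.\]

Define

\[|f|_{r}=\sup \{|a_{k}|r^{k}:\ k\in \mathbb {N}_{0}\ \text{and}\ R_{N}(t^{k})\ne 0\}, \tag{1.3}\]

which is a seminorm in the space

\[X_{r}=\{f:\ f\ \text{holomorphic in}\ C_{r}\ \text{and}\ |f|_{r}<\infty \}. \tag{1.4}\]

Then it can be shown that the error term \(R_{N}^{}\) of formula (1.1) is a continuous linear functional in \((X_{r},|\cdot |_{r})\), and its norm is given by

\[\Vert R_{N}\Vert =\sum _{k=0}^{\infty }\frac{|R_{N}(t^{k})|}{r^{k}}. \tag{1.5}\]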
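A direct way to evaluate (1.5) numerically is to truncate the series. The sketch below assumes only that the nodes lie in \([-1,1]\), so that \(|R_N(t^k)|\le 2+\sum_\nu|w_\nu|\) and the discarded tail is geometrically small; the function names are hypothetical, and Simpson's rule (nodes \(1,0,-1\), weights \(1/3,4/3,1/3\)) serves only as a test case.

```python
import math


def legendre_moment(k):
    """Integral of t**k over [-1, 1] (Legendre weight w(t) = 1)."""
    return 2.0 / (k + 1) if k % 2 == 0 else 0.0


def error_at_monomial(nodes, weights, k):
    """R_N(t**k): exact moment minus the quadrature sum."""
    return legendre_moment(k) - sum(w * x**k for x, w in zip(nodes, weights))


def error_norm(nodes, weights, r, tol=1e-14):
    """Truncated series (1.5): sum over k of |R_N(t**k)| / r**k, for r > 1.

    With |tau| <= 1 we have |R_N(t**k)| <= C := 2 + sum|w|, so stopping at kmax
    with C * r**(-kmax) / (1 - 1/r) <= tol leaves a tail smaller than tol.
    """
    if r <= 1.0:
        raise ValueError("r must be greater than 1")
    c = 2.0 + sum(abs(w) for w in weights)
    kmax = math.ceil(math.log(c / (tol * (1.0 - 1.0 / r))) / math.log(r))
    return sum(abs(error_at_monomial(nodes, weights, k)) / r**k
               for k in range(kmax + 1))


# Simpson's rule on [-1, 1], used here only as a small test case.
simpson_nodes = [1.0, 0.0, -1.0]
simpson_weights = [1.0 / 3.0, 4.0 / 3.0, 1.0 / 3.0]
norm_at_2 = error_norm(simpson_nodes, simpson_weights, r=2.0)
```

The adaptive cutoff keeps `r**k` far from overflow even for r close to 1, at the price of more terms as r approaches 1.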

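If, in addition, for an \(\varepsilon \in \{-1,1\}\),

\[\varepsilon R_{N}(t^{k})\ge 0,\quad k=0,1,2,\ldots , \tag{$1.6_i$}\]

or

\[\varepsilon (-1)^{k}R_{N}(t^{k})\ge 0,\quad k=0,1,2,\ldots , \tag{$1.6_{ii}$}\]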

then, letting

we can derive the representations

or

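respectively (cf. [11, Section 2]). The error norm can lead to estimates for the error functional itself. If \(f\in X_{R}\), then

\[|R_{N}(f)|\le \Vert R_{N}\Vert \,|f|_{r},\quad 1<r\le R,\]

which, optimized as a function of r, gives

\[|R_{N}(f)|\le \inf _{1<r\le R}\bigl(\Vert R_{N}\Vert \,|f|_{r}\bigr). \tag{1.8}\]

Furthermore, if \(|f|_{r}\) is estimated by \(\max _{|z|=r}|f(z)|\), which exists at least for \(r<R\) (cf. [11, Equation (2.9)]), we get

\[|R_{N}(f)|\le \inf _{1<r<R}\Bigl(\Vert R_{N}\Vert \max _{|z|=r}|f(z)|\Bigr). \tag{1.9}\]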

The latter can also be derived by a contour integration technique on circular contours (cf. [4]).
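The maximum-modulus bound can be evaluated by minimizing over a grid of radii; since every grid value is itself a valid bound, the grid minimum still bounds \(|R_N(f)|\), only less sharply than the true infimum. The sketch assumes \(f(z)=e^{\omega z}\) with real \(\omega\), so that \(\max_{|z|=r}|f(z)|=e^{|\omega|r}\), and again uses Simpson's rule as a test case; all names are hypothetical.

```python
import math


def error_norm(nodes, weights, r, tol=1e-14):
    """Truncated series (1.5) for ||R_N||; assumes nodes in [-1, 1] and r > 1."""
    c = 2.0 + sum(abs(w) for w in weights)          # bound on |R_N(t**k)|
    kmax = math.ceil(math.log(c / (tol * (1.0 - 1.0 / r))) / math.log(r))
    total = 0.0
    for k in range(kmax + 1):
        moment = 2.0 / (k + 1) if k % 2 == 0 else 0.0
        total += abs(moment - sum(w * x**k for x, w in zip(nodes, weights))) / r**k
    return total


def max_modulus_bound(nodes, weights, omega, radii):
    """Smallest grid value of ||R_N|| * exp(|omega| r), with the radius attaining it."""
    return min((error_norm(nodes, weights, r) * math.exp(abs(omega) * r), r)
               for r in radii)


nodes = [1.0, 0.0, -1.0]                          # Simpson's rule, test case only
weights = [1.0 / 3.0, 4.0 / 3.0, 1.0 / 3.0]
omega = 1.0
radii = [1.05 + 0.05 * i for i in range(400)]     # grid from r = 1.05 to about r = 21
bound, r_opt = max_modulus_bound(nodes, weights, omega, radii)

exact = 2.0 * math.sinh(omega) / omega            # integral of exp(omega t) over [-1, 1]
quad = sum(w * math.exp(omega * x) for x, w in zip(nodes, weights))
actual_error = abs(exact - quad)
```

A finer grid, or a one-dimensional minimizer started near `r_opt`, would tighten the bound further.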

Representations (\(1.7_i\)) and (\(1.7_{ii}\)) have been applied successfully to compute the norm of the error functional for many well-known quadrature formulae, among them the Gauss, Gauss–Lobatto, Gauss–Radau and Gauss–Kronrod quadrature formulae for various weight functions, as well as the Fejér formula of the second kind, also known as the Filippi rule.
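Under (\(1.6_i\)) the absolute values in (1.5) can be dropped, and because \(\sum_{k\ge 0}t^k/r^k=r/(r-t)\) for \(|t|\le 1<r\), the series collapses to a single application of the error functional, \(\Vert R_N\Vert=\varepsilon R_N\bigl(r/(r-t)\bigr)\), where \(\int_{-1}^{1}r/(r-t)\,dt=r\ln\frac{r+1}{r-1}\). This is our own illustration of where a closed form such as (\(1.7_i\)) comes from, not a transcription of it. The sketch checks it against the truncated series for Simpson's rule, whose error satisfies (\(1.6_i\)) with \(\varepsilon=-1\) (for even k, \(R(t^k)=2/(k+1)-2/3\le 0\); for odd k it vanishes by symmetry); names are hypothetical.

```python
import math


def series_norm(nodes, weights, r, kmax=400):
    """Truncated series (1.5); kmax = 400 is ample for the radii used below."""
    total = 0.0
    for k in range(kmax + 1):
        moment = 2.0 / (k + 1) if k % 2 == 0 else 0.0
        total += abs(moment - sum(w * x**k for x, w in zip(nodes, weights))) / r**k
    return total


def closed_form_norm(nodes, weights, r, eps):
    """eps * R_N(r/(r - t)); equals ||R_N|| only if eps * R_N(t**k) >= 0 for all k."""
    integral = r * math.log((r + 1.0) / (r - 1.0))    # integral of r/(r - t) over [-1, 1]
    quad = sum(w * r / (r - x) for x, w in zip(nodes, weights))
    return eps * (integral - quad)


nodes = [1.0, 0.0, -1.0]                    # Simpson's rule: (1.6_i) holds with eps = -1
weights = [1.0 / 3.0, 4.0 / 3.0, 1.0 / 3.0]
comparison = {r: (series_norm(nodes, weights, r), closed_form_norm(nodes, weights, r, -1.0))
              for r in (1.5, 2.0, 5.0)}
```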

However, how do we proceed if the error term of formula (1.1) satisfies neither of conditions (\(1.6_i\)) and (\(1.6_{ii}\)), in particular, if \(R_{N}^{}(t^{k})\) does not keep a constant sign for all \(k\ge 0\)? This is the case with a number of well-known formulae, such as the Clenshaw–Curtis formula, the Basu formula and the Fejér formula of the first kind, also known as the Pólya rule. In the present paper, we show that if \(R_{N}^{}(t^{k})\) changes sign only once, at some specific \(k=k_{N}^{}\) (cf. (3.2) and (3.16) below), then \(\Vert R_{N}^{}\Vert \) can still be estimated effectively by means of (1.5); we do this in Sect. 3. Our approach is new and general, and in that sense it could likely be applied to interpolatory quadrature formulae whose error term changes sign more than once at the monomials, although that case would require more delicate handling. In Sect. 4, we apply the estimates derived in Sect. 3 to the Clenshaw–Curtis formula, the Basu formula and the Fejér formula of the first kind. A detailed numerical example illustrating the quality of our bounds is given in Sect. 5. Our exposition begins in Sect. 2 with some integral formulas for the Chebyshev polynomials of the first and second kind that are useful in our development.

2 Integral formulas for Chebyshev polynomials

The formulas presented in this section, besides being important in their own right, are also useful in deriving the error norm of the Clenshaw–Curtis and Basu formulae.

Throughout this and all subsequent sections, by \([\cdot ]\) we denote the integer part of a real number, while the notation \(\mathop {{\sum }'}\) means that the last term in the sum must be halved when n is odd.

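Let \(T_{n}\) and \(U_{n}\) be the nth degree Chebyshev polynomials of the first and second kind, respectively, expressed by

\[T_{n}(t)=\cos n\theta ,\quad t=\cos \theta , \tag{2.1}\]

\[U_{n}(t)=\frac{\sin (n+1)\theta }{\sin \theta },\quad t=\cos \theta . \tag{2.2}\]

Both satisfy the three-term recurrence relation (with \(y_{n}=T_{n}\) or \(y_{n}=U_{n}\))

\[y_{n+1}(t)=2t\,y_{n}(t)-y_{n-1}(t),\quad n=1,2,\ldots , \tag{2.3}\]

where

\[T_{0}(t)=1,\ T_{1}(t)=t,\qquad U_{0}(t)=1,\ U_{1}(t)=2t. \tag{2.4}\]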
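The recurrence (2.3) with the starting values (2.4) is also the natural way to evaluate both families numerically. The sketch below (function name hypothetical) runs the recurrence and checks it against the trigonometric forms \(T_n(\cos\theta)=\cos n\theta\) and \(U_n(\cos\theta)=\sin(n+1)\theta/\sin\theta\), and against the standard zeros \(\cos(\nu\pi/(n+1))\) of \(U_n\) and \(\cos((2\nu-1)\pi/(2n))\) of \(T_n\), which are the interior nodes used in Sect. 4.

```python
import math


def chebyshev_T_U(n, t):
    """Return (T_n(t), U_n(t)) from y_{k+1} = 2t*y_k - y_{k-1}, with
    T_0 = 1, T_1 = t and U_0 = 1, U_1 = 2t."""
    if n == 0:
        return 1.0, 1.0
    t_prev, t_cur = 1.0, t
    u_prev, u_cur = 1.0, 2.0 * t
    for _ in range(n - 1):
        t_prev, t_cur = t_cur, 2.0 * t * t_cur - t_prev
        u_prev, u_cur = u_cur, 2.0 * t * u_cur - u_prev
    return t_cur, u_cur


# Compare with the trigonometric definitions at a few interior angles.
max_diff = 0.0
for n in range(12):
    for theta in (0.3, 1.1, 2.0, 2.9):
        T, U = chebyshev_T_U(n, math.cos(theta))
        max_diff = max(max_diff,
                       abs(T - math.cos(n * theta)),
                       abs(U - math.sin((n + 1) * theta) / math.sin(theta)))
```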

Proposition 2.1

Let \(r\in \mathbb {R}\) with \(|r|>1\).

(i) We have

Moreover,

(ii) We have

Moreover,

Proof

(i) Writing

splitting the integral on the right-hand side in two, and using

Now,

(cf. [10, Proposition 2.2, Equation (2.8)]), and

(cf. [8, Equation (2.43)]), which, inserted into (2.9), give (2.5).

To prove (2.6), all we have to do is substitute \(-t\) for t in the integral on the left-hand side and take into account that \(T_{n}(-t)=(-1)^{n}T_{n}(t)\) (cf. (2.1)).

(ii) The proof of (2.7) and (2.8) is similar to that of (2.5) and (2.6), respectively, except that here we use

(cf. [10, Proposition 2.2, Equation (2.9)]),

(cf. [8, Equation (2.46)]), and \(U_{n}(-t)=(-1)^{n}U_{n}(t)\) (cf. (2.2)). \(\square \)
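The splitting used in the proof can be checked numerically. The sketch assumes the split \(T_n(t)/(r-t)=T_n(r)/(r-t)-q(t)\) with the polynomial \(q(t)=(T_n(t)-T_n(r))/(t-r)\), which is our choice for illustration; the exact form of (2.9) is not reproduced here. The polynomial q comes from synthetic division and is integrated exactly, while \(\int_{-1}^{1}dt/(r-t)=\ln\frac{r+1}{r-1}\) for \(|r|>1\). The result is compared with composite Simpson quadrature and with the parity relation behind (2.6); all function names are hypothetical.

```python
import math


def cheb_T_coeffs(n):
    """Monomial coefficients of T_n (lowest degree first) from (2.3)-(2.4)."""
    if n == 0:
        return [1.0]
    prev, cur = [1.0], [0.0, 1.0]
    for _ in range(n - 1):
        nxt = [0.0] + [2.0 * c for c in cur]     # 2t * T_k
        for i, c in enumerate(prev):
            nxt[i] -= c                           # minus T_{k-1}
        prev, cur = cur, nxt
    return cur


def horner(coeffs, x):
    acc = 0.0
    for c in reversed(coeffs):
        acc = acc * x + c
    return acc


def quotient(coeffs, r):
    """Coefficients of q with p(t) - p(r) = (t - r) q(t) (synthetic division)."""
    q = [0.0] * (len(coeffs) - 1)
    acc = 0.0
    for k in range(len(coeffs) - 1, 0, -1):
        acc = coeffs[k] + r * acc
        q[k - 1] = acc
    return q


def integral_T_over_r_minus_t(n, r):
    """Integral over [-1, 1] of T_n(t)/(r - t), |r| > 1, via
    T_n(t)/(r - t) = T_n(r)/(r - t) - q(t)."""
    c = cheb_T_coeffs(n)
    q = quotient(c, r)
    poly_part = sum(qk * 2.0 / (k + 1) for k, qk in enumerate(q) if k % 2 == 0)
    return horner(c, r) * math.log((r + 1.0) / (r - 1.0)) - poly_part


def simpson(f, a, b, m=4000):
    """Composite Simpson rule with m (even) subintervals, used only as a cross-check."""
    h = (b - a) / m
    s = f(a) + f(b)
    for i in range(1, m):
        s += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return s * h / 3.0
```

The log term and the polynomial term are individually of size about \(|T_n(r)|\) and largely cancel, so for large n or large \(|r|\) this direct evaluation loses relative accuracy.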

3 Estimates for the error norm of interpolatory quadrature formulae

In the present section, we assume that the error term of formula (1.1) satisfies neither of conditions (\(1.6_i\)) and (\(1.6_{ii}\)), but that instead \(R_{N}^{}(t^{k})\) changes sign once, at some specific \(k=k_{N}^{}\). Then, based on (1.5), we obtain estimates for the error norm that are both efficient and easy to apply.

Theorem 3.1

Consider the quadrature formula (1.1) satisfying

and

where \(M>0\) and \(k_{N}^{}=k_{N}^{(N)}\) are constants. Then

Proof

From (1.5), we have, in view of (3.2),

The first part on the right-hand side of the last line in (3.4), using the continuity of \(R_{N}^{}\) on \((C[-1,1],\Vert \cdot \Vert _{\infty })\), and proceeding as in the proof of Theorem 2.1(a) in [11], computes to

The second part, on the other hand, again by the continuity of \(R_{N}^{}\) on \((C[-1,1],\Vert \cdot \Vert _{\infty })\), can be written as

and, setting \(f(t)=t^{k_{N}^{}+1}/(r-t)\) in formula (1.1), (3.6) takes the form

Given that

the integral on the right-hand side of (3.7) computes to

Moreover, by means of \(|\tau _{\nu }|\le 1,\ \nu =1,2,\ldots ,N\), and (3.1),

which, inserted together with (3.8) into (3.7), gives

Now, (3.4), together with (3.5) and (3.10), yields (3.3).\(\square \)
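The passage from (1.5) to (3.4) rests on an elementary identity. If \(R_N(t^k)\ge 0\) for \(k\le k_N\) and \(R_N(t^k)\le 0\) for \(k>k_N\) (the sign pattern we read into (3.2), since it is the one the Clenshaw–Curtis formula exhibits in Lemma 4.1), then \(\Vert R_N\Vert=2\sum_{k\le k_N}R_N(t^k)/r^k-R_N\bigl(r/(r-t)\bigr)\), because the full signed series sums to \(R_N(r/(r-t))\). The sketch checks this for the Clenshaw–Curtis rule with n=2 (nodes \(1,\tfrac12,-\tfrac12,-1\), weights \(\tfrac19,\tfrac89,\tfrac89,\tfrac19\), obtained by solving the moment equations by hand), for which the sign change occurs at \(k_N=6\). It illustrates the splitting only and does not reproduce the bound (3.3); names are hypothetical.

```python
import math

NODES = [1.0, 0.5, -0.5, -1.0]                  # Clenshaw-Curtis rule, n = 2
WEIGHTS = [1.0 / 9.0, 8.0 / 9.0, 8.0 / 9.0, 1.0 / 9.0]
K_N = 6                                         # last k with R(t**k) >= 0


def R_monomial(k):
    """R(t**k) for the rule above."""
    moment = 2.0 / (k + 1) if k % 2 == 0 else 0.0
    return moment - sum(w * x**k for x, w in zip(NODES, WEIGHTS))


def norm_by_series(r, kmax=400):
    """Truncated series (1.5)."""
    return sum(abs(R_monomial(k)) / r**k for k in range(kmax + 1))


def norm_by_split(r):
    """2 * sum_{k <= K_N} R(t**k)/r**k - R(r/(r - t))."""
    head = sum(R_monomial(k) / r**k for k in range(K_N + 1))
    integral = r * math.log((r + 1.0) / (r - 1.0))
    full = integral - sum(w * r / (r - x) for x, w in zip(NODES, WEIGHTS))
    return 2.0 * head - full
```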

An immediate consequence is the following

Corollary 3.2

(a) Consider the quadrature formula (1.1) having all weights nonnegative and satisfying condition (3.2). Then

(b) Consider the quadrature formula (1.1), assumed symmetric, with all weights nonnegative and satisfying condition (3.2). Then

Proof

(a) If all weights of formula (1.1) are nonnegative, then

hence, \(M=2\) (cf. (3.1)), which, in view of (3.3), implies (3.11).

(b) Formula (1.1) is symmetric if

and as, for N odd, \(\tau _{(N+1)/2}^{}=0\), we have

Furthermore, by symmetry,

consequently, \(k_{N}^{}\) in (3.2) is even, and (3.14), by virtue of \(|\tau _{\nu }|\le 1,\ \nu =1,2,\ldots ,N\), takes the form

Now, following the proof of Theorem 3.1, and replacing (3.9) by (3.15), we obtain (3.12). \(\square \)

A case similar to that of Theorem 3.1 is presented in the following

Theorem 3.3

Consider the quadrature formula (1.1) satisfying condition (3.1) and

where \(k_{N}^{}=k_{N}^{(N)}\) is a constant. Then

Proof

From (1.5), we have, in view of (3.16),

Then, proceeding as in the proof of Theorem 3.1, we obtain (3.17). \(\square \)

Also, in the same manner as in Corollary 3.2, we derive the following consequence of Theorem 3.3.

Corollary 3.4

(a) Consider the quadrature formula (1.1) having all weights nonnegative and satisfying condition (3.16). Then

(b) Consider the quadrature formula (1.1), assumed symmetric, with all weights nonnegative and satisfying condition (3.16). Then

4 Estimates for the error norm of the Clenshaw–Curtis formula, Basu formula and Fejér formula of the first kind

We treat each case separately.

4.1 The Clenshaw–Curtis formula

This is the quadrature formula

with

the zeros of the nth degree Chebyshev polynomial of the second kind \(U_{n}\) (cf. (2.2)), and

The weights \(w_{\nu }^{*(2)},\ \nu =1,2,\ldots ,n\), are all positive, and formula (4.1) has precise degree of exactness \(d=n+1\) if n is even and \(d=n+2\) if n is odd, i.e., \(d=2[(n+1)/2]+1\) (cf. [9]).

In order to obtain an estimate for \(\Vert R_{n}^{*(2)}\Vert \), we need to get an assessment for the sign of \(R_{n}^{*(2)}(t^{k})\), \(k\ge 0\). Our findings are summarized in the following

Lemma 4.1

The error term of the Clenshaw–Curtis quadrature formula (4.1), when \(n\ge 2\), satisfies

where \(k_{n}^{*(2)}>2[(n+1)/2]+2\) is a constant.

For \(n=1\), there holds

Proof

First of all,

Moreover, formula (4.1) is symmetric (cf. (3.13)), hence,

Thus, we have to look at the sign of \(R_{n}^{*(2)}(t^{2l})\). Let \(n\ge 2\) be even. Then, by (4.6) and (4.1),

from which, in view of

(cf. (2.3) and (2.4) or [8, Equation (2.42)]), we get, by means of (2.10),

Similarly, we obtain, for odd \(n\ge 3\),

Hence, all together

Furthermore, setting \(f(t)=t^{2l}\) in (4.1), we have

and, as \(\frac{2}{2l+1}\) decreases to 0 as \(l\rightarrow \infty \), while \(w_{0}^{*(2)}=w_{n+1}^{*(2)}>0\) are independent of l,

for some constant \(k_{n}^{*(2)}>2[(n+1)/2]+2\). Combining (4.6)–(4.8) and (4.10), we obtain (4.4).

For \(n=1\), the Clenshaw–Curtis formula (4.1) is Simpson’s rule on the interval \([-1,1]\),

with

From (4.11) it follows that

which, combined with (4.6) and (4.7), yields (4.5). \(\square \)

From (4.9), in view of (4.2) and (4.3), we can compute the precise values of \(k_{n}^{*(2)}\). It suffices to find the highest value of \(l=l^{*(2)}\) such that \(R_{n}^{*(2)}(t^{2l^{*(2)}})>0\); then \(k_{n}^{*(2)}=2l^{*(2)}\). The values of \(k_{n}^{*(2)},\ 2\le n\le 40\), are given in Table 1.
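The search described above is easy to automate. The sketch computes the Clenshaw–Curtis weights from the standard closed form \(w_k=\frac{c_k}{N}\bigl(1-\sum_{j=1}^{[N/2]}\frac{b_j}{4j^2-1}\cos\frac{2jk\pi}{N}\bigr)\), \(N=n+1\), with \(c_0=c_N=1\), \(c_k=2\) otherwise, \(b_{N/2}=1\) and \(b_j=2\) otherwise; we assume this agrees with (4.3), which is not copied here. It then returns the largest even k beyond the degree of exactness with \(R_n^{*(2)}(t^k)>0\). Function names are hypothetical, and only the values for n=2 and n=3 below were checked by hand.

```python
import math


def clenshaw_curtis(n):
    """Nodes cos(k*pi/(n+1)), k = 0, ..., n+1 (i.e. 1, the zeros of U_n, -1), and
    weights from the standard closed form (assumed to agree with (4.3))."""
    N = n + 1
    nodes, weights = [], []
    for k in range(N + 1):
        theta = k * math.pi / N
        s = 0.0
        for j in range(1, N // 2 + 1):
            b = 1.0 if 2 * j == N else 2.0
            s += b * math.cos(2 * j * theta) / (4 * j * j - 1)
        c = 1.0 if k in (0, N) else 2.0
        nodes.append(math.cos(theta))
        weights.append(c / N * (1.0 - s))
    return nodes, weights


def k_star_cc(n):
    """Largest even k > d with R(t**k) > 0, d = 2[(n+1)/2] + 1; None if there is none."""
    nodes, weights = clenshaw_curtis(n)
    first = (n + 1) // 2 + 1                  # 2*first = d + 1
    # For l > 1/w_0: 2/(2l+1) < 2*w_0 and the interior weights are positive,
    # so R(t**(2l)) < 0 from there on; scanning to this l is enough.
    lmax = int(1.0 / weights[0]) + 10
    last_positive = None
    for l in range(first, lmax + 1):
        err = 2.0 / (2 * l + 1) - sum(w * x**(2 * l) for x, w in zip(nodes, weights))
        if err > 0:
            last_positive = 2 * l
    return last_positive
```

For n=1 (Simpson's rule) the function returns `None`, in line with the separate statement of Lemma 4.1 for that case.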

Based on the previous lemma, we can derive estimates for \(\Vert R_{n}^{*(2)}\Vert \).

Theorem 4.2

Consider the Clenshaw–Curtis quadrature formula (4.1). For \(n\ge 2\), we have

On the other hand, for \(n=1\), we have

Proof

The Clenshaw–Curtis formula (4.1) is the case of the quadrature formula (1.1) with \(N=n+2,\ \tau _{1}=1,\ \tau _{\nu +1}=\tau _{\nu }^{(2)},\ \nu =1,2,\ldots ,n\), and \(\tau _{n+2}=-1\).

For \(n\ge 2\), in view of (4.4), we get, from Eq. (3.12) in Corollary 3.2(b),

and, inserting (2.7), we obtain (4.12).

On the other hand, for \(n=1\), in view of (4.5) (cf. (\(1.6_i\)) with \(\varepsilon =-1\)), we have, from (\(1.7_i\)),

and, again by (2.7), we get (4.13). \(\square \)

Remark 4.1

The value of \(U_{m}(r)\) in (4.12) can be computed either by the three-term recurrence relation (2.3) and (2.4) or directly as

\[U_{m}(r)=\frac{\tau ^{-(m+1)}-\tau ^{m+1}}{\tau ^{-1}-\tau },\]

where \(\tau =r-\sqrt{r^{2}-1}\) (cf. [8, Equation (1.52)]).

4.2 The Basu formula

This is the quadrature formula

with

the zeros of the nth degree Chebyshev polynomial of the first kind \(T_{n}\) (cf. (2.1)), and

The weights \(w_{\nu }^{*(1)},\ \nu =1,2,\ldots ,n\), are all positive, and formula (4.14) has precise degree of exactness \(d=n+1\) if n is even and \(d=n+2\) if n is odd, i.e., \(d=2[(n+1)/2]+1\) (cf. [9]).

As in the case of the Clenshaw–Curtis formula, we need an assessment for the sign of \(R_{n}^{*(1)}(t^{k})\), \(k\ge 0\).

Lemma 4.3

The error term of the Basu quadrature formula (4.14), when \(n\ge 4\), satisfies

where \(k_{n}^{*(1)}>2[(n+1)/2]+2\) is a constant.

For \(n=1,2\) or 3, there holds

Proof

First of all,

and, as formula (4.14) is symmetric (cf. (3.13)),

Moreover, proceeding exactly as in the case of the Clenshaw–Curtis formula, we find, for even \(n\ge 4\),

and, for odd \(n\ge 5\),

i.e.,

Furthermore, from (4.14),

and, as \(w_{0}^{*(1)}=w_{n+1}^{*(1)}<0\) while \(|\tau _{\nu }^{(1)}|<1,\ \nu =1,2,\ldots ,n\), and therefore \(\sum _{\nu =1}^{n}w_{\nu }^{*(1)}(\tau _{\nu }^{(1)})^{2l}\) is decreasing with l,

for some constant \(k_{n}^{*(1)}>2[(n+1)/2]+2\). Now, combining (4.21)–(4.23) and (4.25), we obtain (4.17).

For \(n=1\), the Basu formula (4.14) is Simpson’s rule; hence, (4.18) follows as in Lemma 4.1.

For \(n=2\), formula (4.14) has the form

and, after a simple computation,

which, combined with (4.21) and (4.22), yields (4.19).

Similarly, for \(n=3\), formula (4.14) is

hence,

which, as in the previous case, gives (4.20). \(\square \)

From (4.24), in view of (4.15) and (4.16), we can compute the constants \(k_{n}^{*(1)}\) in the same manner as for the Clenshaw–Curtis formula; their values, for \(4\le n\le 40\), are given in Table 2.
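For the Basu formula we do not reproduce the weights (4.16); the sketch below instead obtains them by solving the moment equations \(\sum_\nu w_\nu\tau_\nu^k=\int_{-1}^{1}t^k\,dt\), \(k=0,\ldots,n+1\), a generic approach that is adequate only for small n because the monomial (Vandermonde) system becomes ill-conditioned. Since here the sign pattern is reversed (negative first, then positive), we take \(k_n^{*(1)}\) to be the largest even k beyond the degree of exactness with \(R_n^{*(1)}(t^k)<0\), by analogy with the Clenshaw–Curtis case; this reading, like the function names, is our assumption.

```python
import math


def solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda i: abs(m[i][col]))
        m[col], m[piv] = m[piv], m[col]
        for i in range(col + 1, n):
            f = m[i][col] / m[col][col]
            for j in range(col, n + 1):
                m[i][j] -= f * m[col][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def moment(k):
    return 2.0 / (k + 1) if k % 2 == 0 else 0.0


def basu(n):
    """Nodes 1, cos((2v-1)pi/(2n)) (zeros of T_n), -1 and interpolatory weights."""
    nodes = ([1.0] + [math.cos((2 * v - 1) * math.pi / (2 * n)) for v in range(1, n + 1)]
             + [-1.0])
    a = [[x**k for x in nodes] for k in range(len(nodes))]
    return nodes, solve(a, [moment(k) for k in range(len(nodes))])


def k_star_basu(n, lmax=200):
    """Largest even k > d with R(t**k) < 0, d = 2[(n+1)/2] + 1; None if there is none."""
    nodes, weights = basu(n)
    first = (n + 1) // 2 + 1                  # 2*first = d + 1
    last_negative = None
    for l in range(first, lmax + 1):
        err = moment(2 * l) - sum(w * x**(2 * l) for x, w in zip(nodes, weights))
        if err < 0:
            last_negative = 2 * l
    return last_negative
```

For n=2 and n=3 the scan finds no negative value, which matches the separate treatment of these cases in Lemma 4.3.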

We are now in a position to derive estimates for \(\Vert R_{n}^{*(1)}\Vert \).

Theorem 4.4

Consider the Basu quadrature formula (4.14). For \(n\ge 4\), we have

On the other hand, we have, for \(n=1\),

for \(n=2\),

and, for \(n=3\),

Proof

The Basu formula (4.14) is the case of the quadrature formula (1.1) with \(N=n+2,\ \tau _{1}=1,\ \tau _{\nu +1}=\tau _{\nu }^{(1)},\ \nu =1,2,\ldots ,n\), and \(\tau _{n+2}=-1\).

For \(n\ge 4\), in view of (4.17), and in spite of \(w_{0}^{*(1)}\) and \(w_{n+1}^{*(1)}\) being negative, a minor modification of Corollary 3.4(b), together with (2.5), yields (4.26).

The case \(n=1\) was treated in Theorem 4.2, while, for \(n=2\) or 3, in view of (4.19) and (4.20) (cf. (\(1.6_i\)) with \(\varepsilon =1\)), we have, from (\(1.7_i\)),

which, by (2.5), gives (4.28) and (4.29). \(\square \)

Remark 4.2

As for \(U_{m}(r)\) (cf. Remark 4.1), the value of \(T_{m}(r)\) in (4.26) can be computed either by the three-term recurrence relation (2.3) and (2.4) or directly as

\[T_{m}(r)=\frac{\tau ^{m}+\tau ^{-m}}{2},\quad \tau =r-\sqrt{r^{2}-1}\]

(cf. [8, Equation (1.49)]).
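The two ways of evaluating \(T_m(r)\) and \(U_m(r)\) mentioned in Remarks 4.1 and 4.2 can be compared directly. The sketch below (function names hypothetical) uses the recurrence (2.3)–(2.4) and the closed forms in \(\tau=r-\sqrt{r^2-1}\); since \(\tau\tau^{-1}=1\) and \(\tau+\tau^{-1}=2r\), the closed forms hold for any real r with \(|r|>1\), and one power of \(\tau\) dominates, so both evaluations are numerically benign there.

```python
import math


def cheb_by_recurrence(m, r):
    """(T_m(r), U_m(r)) from y_{k+1} = 2r*y_k - y_{k-1}."""
    if m == 0:
        return 1.0, 1.0
    t0, t1, u0, u1 = 1.0, r, 1.0, 2.0 * r
    for _ in range(m - 1):
        t0, t1 = t1, 2.0 * r * t1 - t0
        u0, u1 = u1, 2.0 * r * u1 - u0
    return t1, u1


def cheb_closed_form(m, r):
    """T_m(r) = (tau**m + tau**-m)/2 and
    U_m(r) = (tau**-(m+1) - tau**(m+1)) / (1/tau - tau), tau = r - sqrt(r*r - 1), |r| > 1."""
    tau = r - math.sqrt(r * r - 1.0)
    T = 0.5 * (tau**m + tau**(-m))
    U = (tau**(-(m + 1)) - tau**(m + 1)) / (1.0 / tau - tau)
    return T, U
```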

4.3 The Fejér formula of the first kind

This is the quadrature formula

with the \(\tau _{\nu }^{(1)}\) given by (4.15), and

The weights \(w_{\nu }^{(1)},\ \nu =1,2,\ldots ,n\), are all positive, and formula (4.30) has precise degree of exactness \(d=n-1\) if n is even and \(d=n\) if n is odd, i.e., \(d=2[(n+1)/2]-1\) (cf. [9]).

As in the previous two cases, we begin our analysis by examining the sign of \(R_{n}^{(1)}(t^{k}),\ k\ge 0\).

Lemma 4.5

The error term of the Fejér quadrature formula of the first kind (4.30), when \(n\ge 2\), satisfies

where \(k_{n}^{(1)}>2[(n+1)/2]\) is a constant.

For \(n=1\), there holds

Proof

First of all,

and, as formula (4.30) is symmetric (cf. (3.13)),

Also, proceeding as in the case of the Clenshaw–Curtis formula, we find, for even \(n\ge 2\),

and, for odd \(n\ge 3\),

i.e.,

Furthermore, from (4.30),

and, since \(|\tau _{\nu }^{(1)}|<1,\ \nu =1,2,\ldots ,n\), we have

from which we get

for some constant \(k_{n}^{(1)}>2[(n+1)/2]\). Now, combining (4.34)–(4.36) and (4.38), we obtain (4.32).

For \(n=1\), the Fejér formula of the first kind (4.30) is the 1-point Gauss formula for the Legendre weight function \(w(t)=1\) on \([-1,1]\),

with

consequently,

which, combined with (4.34) and (4.35), yields (4.33).\(\square \)

The constants \(k_{n}^{(1)}\) are computed from (4.37), in view of (4.15) and (4.31); their values, for \(2\le n\le 40\), are given in Table 3.
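Here too the constants can be found by a direct scan. The sketch uses the standard closed form of the Fejér first-kind weights, \(w_\nu=\frac{2}{n}\bigl(1-2\sum_{j=1}^{[n/2]}\frac{\cos 2j\theta_\nu}{4j^2-1}\bigr)\), \(\theta_\nu=(2\nu-1)\pi/(2n)\), which we assume to coincide with (4.31) (that display is not reproduced here). As in the Basu case, it takes \(k_n^{(1)}\) to be the largest even k beyond the degree of exactness at which \(R_n^{(1)}(t^k)<0\). Names are hypothetical, and the default scan length is an assumption that may need enlarging for large n.

```python
import math


def fejer1(n):
    """Nodes cos(theta_v), theta_v = (2v-1)pi/(2n), and Fejer first-kind weights."""
    nodes, weights = [], []
    for v in range(1, n + 1):
        theta = (2 * v - 1) * math.pi / (2 * n)
        s = sum(math.cos(2 * j * theta) / (4 * j * j - 1) for j in range(1, n // 2 + 1))
        nodes.append(math.cos(theta))
        weights.append(2.0 / n * (1.0 - 2.0 * s))
    return nodes, weights


def k_fejer1(n, lmax=3000):
    """Largest even k > d with R(t**k) < 0, d = 2[(n+1)/2] - 1; None if there is none."""
    nodes, weights = fejer1(n)
    first = (n + 1) // 2                      # 2*first = d + 1
    last_negative = None
    for l in range(first, lmax + 1):
        err = 2.0 / (2 * l + 1) - sum(w * x**(2 * l) for x, w in zip(nodes, weights))
        if err < 0:
            last_negative = 2 * l
    return last_negative
```

For n=1 (the midpoint rule) the function returns `None`, consistent with (4.33).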

We can now derive the estimates for \(\Vert R_{n}^{(1)}\Vert \).

Theorem 4.6

Consider the Fejér quadrature formula of the first kind (4.30). For \(n\ge 2\), we have

On the other hand, for \(n=1\), we have

Proof

The Fejér formula of the first kind (4.30) is the case of the quadrature formula (1.1) with \(N=n\) and \(\tau _{\nu }=\tau _{\nu }^{(1)},\ \nu =1,2,\ldots ,n\).

For \(n\ge 2\), in view of (4.32), Corollary 3.4(b), together with [10, Proposition 2.2, Equation (2.8)], yields (4.39).

On the other hand, for \(n=1\), in view of (4.33) (cf. (\(1.6_i\)) with \(\varepsilon =1\)), we have, from (\(1.7_i\)),

which, again by [10, Proposition 2.2, Equation (2.8)], gives (4.40). \(\square \)

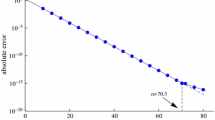

5 A numerical example

We choose the same example as in [10, Section 4], so that the reader can make comparisons.

We want to approximate the integral

\[\int _{-1}^{1}e^{\omega t}\,dt=\frac{2\sinh \omega }{\omega } \tag{5.1}\]

by using either the Clenshaw–Curtis formula (4.1) or the Fejér formula of the first kind (4.30).

The function \(f(z)=e^{\omega z}=\sum _{k=0}^{\infty }\frac{\omega ^{k}z^{k}}{k!}\) is entire and, in view of (1.3) and (4.7), we have

The above formula holds as it stands if \(\sqrt{(2[(n+1)/2]+3)(2[(n+1)/2]+4)}>\omega \); otherwise, the formula for \(|f|_{r}^{*(2)}\) starts at the branch of (5.2) for which \(\sqrt{(2[(n+1)/2]+2k+3)(2[(n+1)/2]+2k+4)}>\omega \). Similarly,

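under restrictions similar to those in (5.2). Hence, in both cases, \(f\in X_{\infty }\) (cf. (1.4)). Moreover,

\[\max _{|z|=r}|f(z)|=e^{|\omega |r}.\]

Therefore, by (1.8) and (1.9),

\[|R_{n}^{*(2)}(f)|\le \inf _{r>1}\bigl(\Vert R_{n}^{*(2)}\Vert \,|f|_{r}^{*(2)}\bigr), \tag{5.3}\]

\[|R_{n}^{(1)}(f)|\le \inf _{r>1}\bigl(\Vert R_{n}^{(1)}\Vert \,|f|_{r}^{(1)}\bigr), \tag{5.4}\]

and

\[|R_{n}^{*(2)}(f)|\le \inf _{r>1}\bigl(\Vert R_{n}^{*(2)}\Vert \,e^{|\omega |r}\bigr), \tag{5.5}\]

\[|R_{n}^{(1)}(f)|\le \inf _{r>1}\bigl(\Vert R_{n}^{(1)}\Vert \,e^{|\omega |r}\bigr), \tag{5.6}\]

with \(\Vert R_{n}^{*(2)}\Vert \) and \(\Vert R_{n}^{(1)}\Vert \) estimated or given by (4.12) and (4.13), and by (4.39) and (4.40), respectively.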

It is now worthwhile to see how (5.3) and (5.5) compare with already existing error bounds.

First of all, estimates for \(\Vert R_{n}^{*(2)}\Vert \) using Hämmerlin’s method (cf. Sect. 1) were obtained by Akrivis in his Ph.D. thesis, by means of either approximation theory techniques or Chebyshev polynomial expansions (cf. [1, Sections 1.4–1.5 and 1.7, Equations (1.7.9), (1.4.35) and (1.5.58)–(1.5.59)]). Of the three estimates he derived, we choose the one that gives the best results in our case; setting \(m=[(n+1)/2]+2\) in Equation (1.7.9) in [1] and using Equation (1.5.58), we have

where

\(\tau =r-\sqrt{r^{2}-1}\) and \(R_{m}^{L}\) is the error term of the m-point Gauss-Lobatto quadrature formula for the Legendre weight function \(w(t)=1\) on the interval \([-1,1]\) (with the end nodes \(\pm 1\) included in the m points). Then, we apply (5.3) and (5.5) with \(\Vert R_{n}^{*(2)}\Vert \) estimated by (5.7) and (5.8).

Also, a few years before Akrivis, a number of error bounds had been derived for functions analytic on circular or elliptic contours.

If f is analytic on the disc \(C_{r}\) (cf. Sect. 1), Jayarajan obtained bounds for the Chebyshev-Fourier coefficients of f, leading, in our case, to the estimate

(cf. [6, Equation (26)]).

If, on the other hand, f is analytic on the ellipse \(\mathcal{E}_{\rho }=\{z\in \mathbb {C}:z=\frac{1}{2}(u+u^{-1}),\ u=\rho e^{i\theta },\ 0\le \theta \le 2\pi \}\) with foci at \(z=\pm 1\) and sum of semiaxes \(\rho ,\ \rho >1\), error bounds for \(R_{n}^{*(2)}\) have been given by Chawla (cf. [2]), Kambo (cf. [7]) and Riess and Johnson (cf. [12]). We choose the latter, which is based on Chebyshev polynomial expansions, gives, together with Kambo’s estimate, the best results, and is also very easy to apply. It has the form

and, since in our case

\[\max _{z\in \mathcal{E}_{\rho }}|f(z)|=e^{|\omega |(\rho +\rho ^{-1})/2}, \tag{5.11}\]

we get

(cf. [12, Equation (11)]).

Our results are summarized in Tables 4, 5 and 6 for formula (4.1) and in Table 7 for formula (4.30). In particular, Table 4 presents the results based on estimates (5.3) and (5.5) with \(\Vert R_{n}^{*(2)}\Vert \) estimated by (4.12); Table 5 gives the corresponding estimates with \(\Vert R_{n}^{*(2)}\Vert \) estimated by (5.7) and (5.8); and Table 6 presents the results based on estimates (5.9) and (5.12). (Numbers in parentheses indicate decimal exponents.) All computations were performed on a SUN Ultra 5 computer in quad precision (machine precision \(1.93\cdot 10^{-34}\)). The values of r and \(\rho \) at which the infimum in each of bounds (5.3)–(5.6), (5.9) and (5.12) was attained are given in the columns headed \(r_{opt}\) and \(\rho _{opt}\), respectively, each placed immediately before the column of the corresponding bound. As n and r increase, the computed values of \(\Vert R_{n}^{*(2)}\Vert \) and \(\Vert R_{n}^{(1)}\Vert \) decrease and, close to machine precision, can even become negative. This happens for \(\Vert R_{n}^{*(2)}\Vert \) when \(\omega =0.5\) and \(n\ge 13\), \(\omega =1.0\) and \(n\ge 15\), and \(\omega =2.0\) and \(n\ge 19\); and for \(\Vert R_{n}^{(1)}\Vert \) when \(\omega =0.5\) and \(n\ge 15\), and \(\omega =1.0\) and \(n\ge 17\). The reason is that, in all these cases, the infima in bounds (5.3)–(5.6) are attained at rather large values of r (\(r>15\)).

Bounds (5.3) and (5.4) provide an excellent estimate of the actual error, and they are always better than bounds (5.5) and (5.6), respectively. This is to be expected, as (5.5) and (5.6) can be derived from (5.3) and (5.4) if \(|f|_{r}^{*(2)}\) and \(|f|_{r}^{(1)}\) are estimated by \(\max _{|z|=r}|f(z)|\) (cf. Sect. 1).
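The comparison can be reproduced on a small scale. By Cauchy's estimate \(|a_k|r^k\le\max_{|z|=r}|f(z)|\), so \(|f|_r\le\max_{|z|=r}|f(z)|\) for every r, and the infimum built on \(|f|_r\) can never exceed the one built on the maximum modulus. The sketch takes \(f(z)=e^{\omega z}\) with \(\omega=1\) and the Clenshaw–Curtis rule with n=2 (nodes \(1,\tfrac12,-\tfrac12,-1\), weights \(\tfrac19,\tfrac89,\tfrac89,\tfrac19\)), for which \(R(t^k)\ne 0\) exactly for even \(k\ge 4\). It computes \(\Vert R\Vert\) from the truncated series (1.5) rather than from (4.12), minimizes both bounds over a grid of radii, and compares them with the actual error. This is our own small illustration, not a reproduction of Tables 4–7; names are hypothetical.

```python
import math

NODES = [1.0, 0.5, -0.5, -1.0]                  # Clenshaw-Curtis rule, n = 2
WEIGHTS = [1.0 / 9.0, 8.0 / 9.0, 8.0 / 9.0, 1.0 / 9.0]
OMEGA = 1.0


def error_norm(r, tol=1e-15):
    """Truncated series (1.5) for ||R|| (tail below tol since |R(t**k)| <= 4)."""
    kmax = math.ceil(math.log(4.0 / (tol * (1.0 - 1.0 / r))) / math.log(r))
    total = 0.0
    for k in range(kmax + 1):
        moment = 2.0 / (k + 1) if k % 2 == 0 else 0.0
        total += abs(moment - sum(w * x**k for x, w in zip(NODES, WEIGHTS))) / r**k
    return total


def seminorm(r):
    """|f|_r = sup over even k >= 4 of (|omega| r)**k / k!, for f = exp(omega z)."""
    x = abs(OMEGA) * r
    kmax = max(6, 2 * math.ceil(x) + 6)       # the terms x**k/k! decrease once k > x
    return max(math.exp(k * math.log(x) - math.lgamma(k + 1))
               for k in range(4, kmax + 1, 2))


radii = [1.1 + 0.1 * i for i in range(190)]     # grid from r = 1.1 to about r = 20
bound_seminorm = min(error_norm(r) * seminorm(r) for r in radii)
bound_max = min(error_norm(r) * math.exp(abs(OMEGA) * r) for r in radii)

exact = 2.0 * math.sinh(OMEGA) / OMEGA
quad = sum(w * math.exp(OMEGA * x) for x, w in zip(NODES, WEIGHTS))
actual_error = abs(exact - quad)
```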

Furthermore, bounds (5.3) and (5.5) with \(\Vert R_{n}^{*(2)}\Vert \) estimated by (4.12) are better than the corresponding bounds with \(\Vert R_{n}^{*(2)}\Vert \) estimated by (5.7) and (5.8), particularly as \(\omega \) and n increase. Estimate (5.7) was derived by approximating \(\Vert R_{n}^{*(2)}\Vert \) by \(\Vert R_{m}^{L}\Vert \) and then adding a correction factor, while (4.12) was obtained by a tailor-made process, which apparently explains the difference between the two estimates.

Similarly, bounds (5.3) and (5.5) are better than (5.9) and (5.12), particularly as \(\omega \) gets large, and this despite the fact that (5.12) is based on elliptical contours, which have the advantage of shrinking around the interval \([-1,1]\) as \(\rho \rightarrow 1\). However, (5.10) is reasonably good for large \(\rho \) (when the ellipse looks more and more like a circle), and this cannot be exploited when \(\omega \) is large, since then \(\max _{z\in \mathcal{E}_{\rho }}|f(z)|\) becomes exceedingly large (cf. (5.11)); this is apparently the reason why (5.3) and (5.5) outperform (5.12).

References

[1] Akrivis, G.: Fehlerabschätzungen bei der numerischen Integration in einer und mehreren Dimensionen. Doctoral Dissertation, Ludwig-Maximilians-Universität München (1982)

[2] Chawla, M.M.: Error estimates for the Clenshaw–Curtis quadrature. Math. Comp. 22, 651–656 (1968)

[3] Davis, P.J., Rabinowitz, P.: Methods of Numerical Integration, 2nd edn. Academic Press, San Diego (1984)

[4] Gautschi, W.: Remainder estimates for analytic functions. In: Espelid, T.O., Genz, A. (eds.) Numerical Integration: Recent Developments, Software and Applications, pp. 133–145. Kluwer Academic Publishers, Dordrecht (1992)

[5] Hämmerlin, G.: Fehlerabschätzung bei numerischer Integration nach Gauss. In: Brosowski, B., Martensen, E. (eds.) Methoden und Verfahren der mathematischen Physik, vol. 6, pp. 153–163. Bibliographisches Institut, Mannheim (1972)

[6] Jayarajan, N.: Error estimates for Gauss–Chebyshev and Clenshaw–Curtis quadrature formulas. Calcolo 11, 289–296 (1974)

[7] Kambo, N.S.: Error bounds for the Clenshaw–Curtis quadrature formulas. BIT 11, 299–309 (1971)

[8] Mason, J.C., Handscomb, D.C.: Chebyshev Polynomials. Chapman & Hall/CRC, Boca Raton, FL (2002)

[9] Notaris, S.E.: Interpolatory quadrature formulae with Chebyshev abscissae. J. Comput. Appl. Math. 133, 507–517 (2001)

[10] Notaris, S.E.: Integral formulas for Chebyshev polynomials and the error term of interpolatory quadrature formulae for analytic functions. Math. Comp. 75, 1217–1231 (2006)

[11] Notaris, S.E.: The error norm of quadrature formulae. Numer. Algorithms 60, 555–578 (2012)

[12] Riess, R.D., Johnson, L.W.: Error estimates for Clenshaw–Curtis quadrature. Numer. Math. 18, 345–353 (1972)


Additional information

Communicated by Lothar Reichel.


About this article

Cite this article

Notaris, S.E. The error norm of Clenshaw–Curtis and related quadrature formulae. Bit Numer Math 56, 705–728 (2016). https://doi.org/10.1007/s10543-015-0569-6


DOI: https://doi.org/10.1007/s10543-015-0569-6

Keywords

- Error norm

- Interpolatory quadrature formulae

- Clenshaw–Curtis quadrature formula

- Basu quadrature formula

- Fejér quadrature formula of the first kind