Abstract

Near-conservation over long times of the actions, of the energy, of the mass and of the momentum along the numerical solution of the cubic Schrödinger equation with small initial data is shown. Spectral discretization in space and one-stage exponential integrators in time are used. The proofs use modulated Fourier expansions.

1 Introduction

We consider the cubic Schrödinger equation with a potential of convolution type

where u=u(x,t) with \(x\in \mathbb {T}^{d}=\mathbb {R}^{d}/2\pi \mathbb {Z}^{d}\) and t≥0, in dimension d≥1 with periodic boundary conditions. We will consider small initial data: in appropriate Sobolev norms, the initial value u(⋅,0) is bounded by a small parameter ε. The potential \(V=V(x)\in L^{2}(\mathbb {T}^{d})\) is assumed to be periodic with real Fourier coefficients. It acts by convolution on the function u. Such equations have been studied for example in [1, 2, 12].

It is known that this Hamiltonian partial differential equation possesses the following invariants, which follow from invariances of the equation under certain transformations; see for example the monograph [25, Sect. I.2.3]. For \(H^{1}\)-solutions, one has conservation of the total energy or Hamiltonian

where |⋅| denotes the Euclidean norm. The \(L^{2}\)-norm, density, or mass

is also a conserved quantity. Finally, the momentum

is exactly conserved along the solution of our partial differential equation (1.1). Moreover, this equation has another geometric property that will turn out to be useful for our numerical analysis: it is reversible with respect to the complex conjugation ρ of the Fourier coefficients,

for a solution u=u(x,t) of (1.1), if \(\rho(u) = \sum_{j\in \mathbb {Z}^{d}} \overline{u_{j}} \mathrm {e}^{\mathrm {i}(j\cdot x)}\) for \(u=\sum_{j\in \mathbb {Z}^{d}} u_{j} \mathrm {e}^{\mathrm {i}(j\cdot x)}\). The Fourier coefficients of a function u=u(x) are denoted throughout the paper by \(u_{j}\), \(j\in \mathbb {Z}^{d}\).
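As an illustration, the three invariants can be evaluated directly from the Fourier coefficients of a trigonometric polynomial. The following is a minimal sketch in dimension d=1, assuming the normalizations m(u)=∑|u_j|², K(u)=∑ j|u_j|² and H(u)=∑(|j|²+V_j)|u_j|² plus the mean value of |u|⁴/2 over one period. These normalizations, the positive sign of the cubic term, and the names `invariants` and `V_hat` are assumptions made for the example.

```python
import numpy as np

def invariants(xi, V_hat=None):
    """Mass, momentum and energy of u(x) = sum_j xi[j] e^{i j x} for d = 1.

    xi is ordered like np.fft.fftfreq (j = 0, 1, ..., -2, -1).
    V_hat holds assumed real Fourier coefficients of the potential (default: zero).
    """
    n = xi.size
    j = np.fft.fftfreq(n, d=1.0 / n)            # integer wave numbers in FFT order
    V_hat = np.zeros(n) if V_hat is None else V_hat
    u = np.fft.ifft(xi) * n                     # values of u at x_k = 2*pi*k/n
    mass = np.sum(np.abs(xi) ** 2)
    momentum = np.sum(j * np.abs(xi) ** 2)
    quadratic = np.sum((j ** 2 + V_hat) * np.abs(xi) ** 2)
    quartic = 0.5 * np.mean(np.abs(u) ** 4)     # grid average of |u|^4 / 2
    return mass, momentum, quadratic + quartic

# Single plane wave 0.1 * e^{3ix} with zero potential.
xi = np.zeros(16, dtype=complex)
xi[3] = 0.1
mass, momentum, energy = invariants(xi)
```

For a single plane wave the grid average of |u|⁴ is exact, so the three values can be checked by hand.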

For the numerical solution of (1.1) we first discretize in space (method of lines) and then in time. In practice, in the periodic case, the use of a discrete Fourier transform is a favorable choice. We then discretize the resulting system of ordinary differential equations with an exponential integrator (Sect. 2). Exponential integrators are widely used and studied nowadays as witnessed by the recent review [22]. Here we use the exponential integrators for nonlinear Schrödinger equations introduced in [7]. An error analysis for exponential integrators of collocation type applied to Schrödinger equations was given in [10].

In this paper, we study the long-time behavior of the conserved quantities energy (1.2), mass (1.3) and momentum (1.4) along such a numerical solution of (1.1) by an exponential integrator. In recent years, there has been growing interest and an ongoing effort in explaining the long-time behavior of numerical schemes for Hamiltonian partial differential equations, see [3, 6, 8, 9, 11, 13–17, 19]. In the present article, we show for a class of one-stage exponential integrators that energy, mass and momentum of (1.1) are approximately conserved along the numerical solutions over long times, see Sect. 3 for a precise statement of the results and numerical experiments. A property closely related to the reversibility of the numerical scheme turns out to be crucial to prove this result. We present numerical experiments that suggest that this property of the one-stage exponential integrator is not only sufficient but also necessary for good long-time behavior. This property requires a one-stage exponential integrator to be implicit.

Similar results have been shown in [16, 19] for splitting integrators, which are widely applied in the numerical integration of Schrödinger equations. This paper is neither aimed at comparing the (implicit) exponential integrators studied here with these splitting integrators nor at promoting their use at the expense of splitting integrators. The present paper contributes to the numerical analysis of exponential integrators, and, in a broader sense, tries to identify mechanisms that lead to a good long-time behavior of numerical integrators for partial differential equations (reversibility, for instance). Nonetheless we mention that, although implicit exponential integrators are slightly less efficient in comparison with splitting integrators, cf. [7, Introduction and Sect. 5.1], they are indeed used for the numerical integration of Schrödinger equations, see [4, 7, 10].

Similarly as in the aforementioned paper [19] on splitting integrators we start in Sect. 4 by showing, using a modulated Fourier expansion of the numerical solution, that the actions

of the linear Schrödinger equation \(\mathrm {i}\frac{\partial}{\partial t} u=-\Delta u+V*u\) are approximately conserved along the numerical solution of (1.1) over long times. Note that the actions (1.5) are also nearly conserved along the exact solution of the nonlinear equation (1.1) over long times, see [1, 18]. The long-time near-conservation of actions implies the regularity of the numerical solution over long times and is the key for the proof of the above mentioned conservation properties in Sect. 4.

2 Discretization of the nonlinear Schrödinger equation

In this section, we discretize (1.1) in space with a spectral collocation scheme and in time with a one-stage exponential integrator.

2.1 Spectral collocation method for the discretization in space

We start by denoting, for \(j\in \mathbb {Z}^{d}\), the frequencies in the nonlinear Schrödinger equation (1.1) by

with the j-th Fourier coefficient V j ∈ℝ of the potential V.

A spectral collocation discretization in space with collocation points

yields an approximation

Requiring this ansatz to fulfill (1.1) at our collocation points, one obtains, using  with the d-dimensional discrete Fourier transform \(F_{2M}\), the following system of ordinary differential equations

where  is a diagonal matrix with frequencies \(\omega_{k}\),  . Or in terms of the approximation u M(x,t) one gets

with

Here  denotes the trigonometric interpolation

and this is defined in such a way that  for all  . The initial value is then given by

We note that the above semi-discretized system is a finite dimensional complex Hamiltonian system with Hamiltonian

We now discretize (2.2) with the above initial value in time.
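The role of trigonometric interpolation in the collocation scheme can be illustrated with a fast Fourier transform. The sketch below assumes d=1 and an equidistant grid of n=2M points x_k=2πk/n; the grid indexing and the helper name `interpolation_coefficients` are assumptions made for the example. It shows that a wave with index j+2M is indistinguishable on the grid from the wave with index j, which is why indices are reduced modulo 2M.

```python
import numpy as np

def interpolation_coefficients(values):
    """Fourier coefficients of the trigonometric interpolant through `values`,
    given at the grid points x_k = 2*pi*k/n, k = 0, ..., n-1."""
    return np.fft.fft(values) / values.size

n = 16                                   # n = 2M grid points (assumed size)
x = 2 * np.pi * np.arange(n) / n
j = np.fft.fftfreq(n, d=1.0 / n)         # retained wave numbers -M, ..., M-1 in FFT order

low = interpolation_coefficients(np.exp(1j * 3 * x))
high = interpolation_coefficients(np.exp(1j * (3 + n) * x))   # aliased onto j = 3
```

Both coefficient vectors have a single entry equal to one at the position of j=3.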

2.2 Exponential integrators for the discretization in time

Exponential integrators, as their name suggests, use the exponential function of the Jacobian (or an approximation to it) inside the numerical scheme. They are particularly efficient for problems of the form, see (2.2)–(2.3),

where u=u(t) and L is typically a linear unbounded differential operator. Alternatively, one can think of L as a matrix arising from a space discretization of such an operator, which is thus bounded for a fixed spatial resolution but has a large norm. The map f is nonlinear, but we assume that the size of f(u) is small compared to L. For a survey of these methods see for instance [21, 24] and, more recently, the review [22] and references therein.

The schemes we consider here can all be cast in the form

We use upper indices for denoting time steps with step-size h. The involved functions \(a_{rj}(z)\) and \(b_{r}(z)\) are complex functions that are used to define and to compute \(a_{rj}(hL)\) and \(b_{r}(hL)\) in terms of the spectral decomposition of the matrix describing L, i.e., \(a_{rj}(hL) = F_{2M} a_{rj}(-ih\varOmega) F_{2M}^{-1}\) and accordingly for \(b_{r}\). They are often real entire or at least real analytic in a domain of the complex plane which includes the spectrum of hL for all h of interest. Such schemes have been applied to nonlinear Schrödinger equations in [7] and [5] for example. In applying them to this equation, it is important to choose functions \(a_{rj}(z)\) and \(b_{r}(z)\) which are bounded on the imaginary axis, a property which is rather common among popular exponential integrators.

In our numerical analysis, we will only consider one-stage exponential integrators (s=1) for (2.2)–(2.3)

We will focus on two important geometric properties: symmetry and reversibility which we recall now.

Definition 2.1

(Symmetry, [20, Chap. V])

A numerical one-step method y 1=Φ h (y 0) is called symmetric if it satisfies

Definition 2.2

(Reversibility, [20, Chap. V])

Let ρ be an invertible linear transformation in the phase space of \(\frac{d}{dt}y=g(y)\). This differential equation is called ρ-reversible if ρ∘g=−g∘ρ, implying \(\rho\circ \varphi_{t}=\varphi_{t}^{-1}\circ\rho\) for the exact flow φ t . A numerical one-step method y 1=Φ h (y 0) is called ρ-reversible if

Our nonlinear Schrödinger equation (1.1) and also its semi-discretization in space (2.2) are ρ-reversible for the complex conjugation of Fourier coefficients, \(\rho (u)=\sum_{j}\overline{u_{j}}\mathrm {e}^{\mathrm {i}jx}\) for \(u=\sum_{j}u_{j}\mathrm {e}^{\mathrm {i}jx}\) (note that (1.1) is in general not reversible for the complex conjugation of a function itself because of the convolution with V). In the following we will always study reversibility with respect to this complex conjugation.

For the one-stage numerical schemes (2.5) considered here, we obtain the following results.

Lemma 2.1

(Symmetry of exponential integrators, [7])

A consistent one-stage exponential integrator (2.5) is symmetric if and only if

This shows in particular that symmetric exponential integrators as considered here are implicit.

Lemma 2.2

(Reversibility of exponential integrators)

A consistent one-stage exponential integrator (2.5) is reversible if and only if

Proof

Let us first compute \(v=\rho\circ\varPhi_{h}(u^{0})\). From the definition of the exponential integrator, we have

with the adjoint matrix \(b(hL)^{*}\). For the second term \(w = \varPhi_{h}^{-1}\circ\rho(u^{0})\) in the definition of reversibility, we get \(\rho(u^{0})=\mathrm {e}^{hL}w+hb(hL)f(U)\) with \(U=\mathrm {e}^{chL}w+ha(hL)f(U)\). We thus obtain

Comparing the two equations for v and w, the result follows. □

We also note that the conditions of symmetry (2.6) and reversibility (2.7) are equivalent if \(a(\overline{z})=\overline{a(z)}\) for all z∈iℝ. Let us now illustrate these properties with some examples.

Example 2.1

(Symmetric Lawson method, [7, 23])

The symmetric one-stage Lawson method is an exponential integrator (2.5) with coefficients

It is symmetric and reversible.
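Both properties can be checked numerically for a concrete scheme. The sketch below assumes that the symmetric Lawson method is the Lawson transformation of the implicit midpoint rule, that is, c=1/2, a(z)=1/2 and b(z)=e^{z/2} in a scheme of the form U=e^{chL}u⁰+ha(hL)f(U), u¹=e^{hL}u⁰+hb(hL)f(U). These coefficients, the cubic nonlinearity f=−iF(|u|²u) with positive sign, the frequencies ω_j=j² (zero potential) and the random initial data are all assumptions made for the example.

```python
import numpy as np

def f(xi):
    """Cubic nonlinearity in Fourier coordinates: -i * F(|u|^2 u) (assumed sign)."""
    n = xi.size
    u = np.fft.ifft(xi) * n                 # grid values of the trigonometric polynomial
    return -1j * np.fft.fft(np.abs(u) ** 2 * u) / n

def lawson_step(xi, omega, h, iters=50):
    """One step with c = 1/2, a(z) = 1/2, b(z) = exp(z/2) and L = -i*diag(omega)."""
    half = np.exp(-0.5j * h * omega)        # e^{hL/2}
    U = half * xi                           # starting guess for the internal stage
    for _ in range(iters):                  # fixed-point iteration for U
        U = half * xi + 0.5 * h * f(U)
    return half ** 2 * xi + h * half * f(U)

rng = np.random.default_rng(0)
n, h = 16, 0.1
omega = np.fft.fftfreq(n, d=1.0 / n) ** 2   # assumed frequencies j^2
xi0 = 0.1 * (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / n

xi1 = lawson_step(xi0, omega, h)
# Symmetry: a step with -h undoes a step with h.
symmetry_defect = np.max(np.abs(lawson_step(xi1, omega, -h) - xi0))
# Reversibility: stepping from the conjugated result returns the conjugated start.
reversibility_defect = np.max(np.abs(lawson_step(np.conj(xi1), omega, h) - np.conj(xi0)))
```

Both defects should be at the level of rounding errors, since the fixed-point iteration converges rapidly for small data.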

Example 2.2

The method with coefficients

is also symmetric and reversible.

Example 2.3

The exponential method (2.5) with coefficients

is neither symmetric nor reversible.

However, if \(a(\overline{z})\ne\overline{a(z)}\) for some z∈iℝ, then reversible methods are not symmetric and symmetric methods are not reversible.

Example 2.4

The method with coefficients

is reversible but not symmetric.

Example 2.5

The method with coefficients

is symmetric but not reversible.

3 Main result and numerical experiments

We now formulate our main result on the long-time behavior of exponential integrators.

3.1 Assumptions on the exponential integrator (2.5)

We start this section by collecting the assumptions on the exponential integrator (2.5) we need to prove long-time near-conservation properties of the numerical solution. Our main assumption on the exponential integrator is that its coefficient functions a(z) and b(z) are linked in the following way:

If in addition \(c=\operatorname {Re}(a(0))=\frac{1}{2}\), this is equivalent to the condition of reversibility (2.7). In particular, all reversible methods (2.7) satisfy this condition. Also many symmetric methods (2.6) satisfy (3.1a), namely those that are reversible, i.e., those with \(a(\overline{z})=\overline{a(z)}\) for all z∈iℝ. But there are methods that satisfy (3.1a) which are neither symmetric nor reversible.

Example 3.1

The exponential method (2.5) with coefficients

satisfies (3.1a) but is neither symmetric nor reversible.

Besides condition (3.1a) we need, as mentioned in Sect. 2, that the function a is bounded on the imaginary axis,

Moreover, we assume that

We do not hesitate to impose this assumption because it is typically less restrictive than the non-resonance condition on the frequencies ω j that we will introduce in the following section. For the methods of Examples 2.1 and 3.1 the condition (3.1c) is trivially satisfied, and for the methods of Examples 2.4 and 2.5 it is not difficult to verify this condition for positive frequencies ω j , the eigenvalues of L. For the methods of Examples 2.2 and 2.3 condition (3.1c) amounts to a restriction on the time step-size h: one has to avoid time step-sizes that are integer multiples of 2π/ω j for some frequency ω j . For comparison, the non-resonance condition that we will impose requires that the time step-size h is not close to an integer multiple of 2π divided by many linear combinations of frequencies. We mention, however, that the method of Example 2.2 behaves in numerical experiments very well also for resonant time step-sizes that are not small and for time step-sizes that do not satisfy (3.1c). This may be due to the fact that the functions a and b decay for large frequencies and therefore act as filter functions.

The invertibility condition (3.1c) can be used to rewrite the exponential integrator (2.5). We solve the first equation of (2.5) for f(U) and then plug it in the second one to obtain

This yields

In the following we will work with one-stage exponential integrators in this compact form.
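The elimination of f(U) can be sketched as follows, assuming that (2.5) has the form used in the proof of Lemma 2.2, namely \(U=\mathrm{e}^{chL}u^{n}+ha(hL)f(U)\) and \(u^{n+1}=\mathrm{e}^{hL}u^{n}+hb(hL)f(U)\), and that (3.1c) guarantees the invertibility of a(hL). Since a(hL), b(hL) and the exponentials are all functions of L, they commute.

```latex
\begin{align*}
h f(U) &= a(hL)^{-1}\bigl(U - \mathrm{e}^{chL}u^{n}\bigr),\\
u^{n+1} &= \mathrm{e}^{hL}u^{n} + b(hL)\,a(hL)^{-1}\bigl(U - \mathrm{e}^{chL}u^{n}\bigr).
\end{align*}
```

In this form the nonlinearity enters only through the implicit equation for the internal stage U.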

3.2 Long-time near-conservation of actions, energy, mass and momentum

Let N≥1 be an arbitrary fixed integer. Our main result states near-conservation properties over long times \(0\le t\le \varepsilon ^{-N}\) of the numerical solutions of the cubic Schrödinger equation (1.1) with small initial data:

with the Sobolev norm

where we recall that u j denotes the j-th Fourier coefficient of a function u on \(\mathbb {T}^{d}\) and that ω j denotes the j-th frequency (2.1). In this definition, a zero frequency is replaced by 1. This is also tacitly assumed in the following whenever the absolute value of a frequency appears.
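A weighted norm of this kind is straightforward to evaluate from the Fourier coefficients. The sketch below assumes the weight \(|\omega_{j}|^{s}\), that is, the norm \((\sum_{j}|\omega_{j}|^{s}|u_{j}|^{2})^{1/2}\); this exponent convention and the function name `sobolev_norm` are assumptions made for the example, while the replacement of a zero frequency by 1 follows the text.

```python
import numpy as np

def sobolev_norm(xi, omega, s):
    """(sum_j |omega_j|^s |xi_j|^2)^(1/2), with zero frequencies replaced by 1."""
    weight = np.abs(np.asarray(omega, dtype=float))
    weight = np.where(weight == 0.0, 1.0, weight)   # zero frequency counts as 1
    return np.sqrt(np.sum(weight ** s * np.abs(xi) ** 2))

n = 8
omega = np.fft.fftfreq(n, d=1.0 / n) ** 2           # assumed frequencies j^2
xi = np.zeros(n, dtype=complex)
xi[0], xi[2] = 3.0, 1.0                             # modes j = 0 and j = 2
norm = sobolev_norm(xi, omega, s=2)                 # sqrt(1*9 + 4^2*1)
```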

Moreover, we need a non-resonance condition on the frequencies that we introduce now. Recall that  denotes the set of indices whose corresponding Fourier coefficients are used for the discretization in space, see Sect. 2.1. We denote by  a finite sequence of integers, by  the finite sequence of frequencies and by mod 2M the entry-wise reduction modulo 2M with representative chosen in  . The non-resonance condition controls near-resonances among the frequencies, where the difference of a linear combination of frequencies

and the frequency

is close to an integer multiple of 2π/h. We recall that h is the step-size of our numerical integrator. More precisely, we require for near-resonant indices (j,k) in the set

where \(\langle j\rangle =(\delta_{jl})_{l\in \mathbb {Z}^{d}}\) with Kronecker’s delta, that

with a constant C 0 independent of ε. Here,

This non-resonance condition is very similar to the one used for splitting integrators [19, Sect. 4]. As discussed in [19, Appendix] it reduces in the limit h→0 to a condition that is satisfied for almost all choices of the potential V and a time step-size restriction allows to exclude numerical resonances. Moreover, it is fulfilled for all step-sizes in a dense set under a restriction on the parameter M of the discretization in space in terms of ε, see [19, Appendix].

We are now able to state the main result of this paper, whose proof will be given in Sect. 4.

Theorem 3.1

For given N≥1 and s≥d+1 there exists ε 0>0 such that the following holds: Under the conditions (3.1a)–(3.1c) on the exponential integrator, the condition of small initial data (3.3) with ε≤ε 0 and the non-resonance condition (3.5), the estimates

hold for the numerical solution u n described in Sect. 2 with time step-size h≤1 over long times

with a constant C which depends on C 0 from the non-resonance condition (3.5), C 1 from assumption (3.1b), the dimension d, N, s and the norm of the potential V but is independent of n, the size of the initial value ε and the discretization parameters M and h.

3.3 Numerical experiments

We conclude this section with some numerical experiments in order to illustrate Theorem 3.1. We use data as in the experiments of [19]. The initial value u(⋅,0) is chosen as (d=1)

and the potential V is chosen such that

where r j =0.5 for j≥0 and r j =0.8 for j<0. We use \(2M=2^{8}\) collocation points for the discretization in space, and we use a time step h=0.1 with different exponential integrators. For the solution of the nonlinear equations defining U in (2.5) we apply the standard fixed point iteration to the nonlinear equation as described in [7, Sect. 5.1]. To be on the safe side, we use 15 iterations although the convergence is much faster, in particular due to the small nonlinearity (cf. the analysis of the nonlinear equation in Sect. 4.7). For the role of rounding errors in a long-time integration and a possible way to reduce them we refer to [20, VIII.5].
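The structure of such an experiment can be sketched in a few lines. The code below is not the setup of the experiments reported here: the grid size, the frequencies, the initial data and the number of steps are hypothetical, and the coefficient functions a(z)=1/2, b(z)=e^{z/2}, c=1/2 are an assumed instance of a one-stage scheme of the form U=e^{chL}u⁰+ha(hL)f(U), u¹=e^{hL}u⁰+hb(hL)f(U). The stage equation is solved with 15 fixed-point iterations, and the drift of the actions |u_j|² and of the mass is recorded.

```python
import numpy as np

def f(xi):
    """Cubic nonlinearity in Fourier coordinates: -i * F(|u|^2 u) (assumed sign)."""
    n = xi.size
    u = np.fft.ifft(xi) * n
    return -1j * np.fft.fft(np.abs(u) ** 2 * u) / n

def one_stage_step(xi, omega, h, a, b, c, iters=15):
    """One step of a one-stage exponential integrator with L = -i*diag(omega)."""
    z = -1j * h * omega                       # eigenvalues of hL
    U = np.exp(c * z) * xi                    # starting guess for the stage
    for _ in range(iters):                    # fixed-point iteration for U
        U = np.exp(c * z) * xi + h * a(z) * f(U)
    return np.exp(z) * xi + h * b(z) * f(U)

n, h, steps = 2 ** 6, 0.1, 1000               # hypothetical discretization parameters
j = np.fft.fftfreq(n, d=1.0 / n)
omega = j ** 2 + 0.5                          # hypothetical frequencies
xi = np.zeros(n, dtype=complex)
xi[[1, -1, 2]] = [0.05, 0.03, 0.01]           # hypothetical small initial data

a = lambda z: 0.5 * np.ones_like(z)           # assumed coefficient function a
b = lambda z: np.exp(0.5 * z)                 # assumed coefficient function b

actions0 = np.abs(xi) ** 2
mass0 = np.sum(actions0)
drift = 0.0
for _ in range(steps):
    xi = one_stage_step(xi, omega, h, a, b, 0.5)
    drift = max(drift, np.max(np.abs(np.abs(xi) ** 2 - actions0)))
mass_error = abs(np.sum(np.abs(xi) ** 2) - mass0)
```

Other coefficient functions can be passed as `a` and `b` to compare schemes; the time interval here is far shorter than the one used in the figures.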

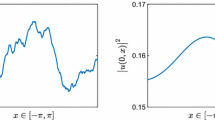

In Fig. 1 we plot some of the actions, the discrete energy H M , the mass m and the momentum K for the symmetric and reversible Lawson method from Example 2.1. This method satisfies the main assumption (3.1a) on the exponential integrator. As explained by Theorem 3.1, the plotted quantities are nearly conserved on a long time interval of length \(10^{6}\). We observe the same behavior for the other methods that satisfy the main assumption (3.1a), the symmetric and reversible method from Example 2.2, the reversible but non-symmetric method from Example 2.4 and the non-reversible and non-symmetric method from Example 3.1.

We repeat the experiment in Fig. 2 using methods that do not satisfy the main assumption (3.1a): the non-symmetric and non-reversible method from Example 2.3 and the symmetric but non-reversible method from Example 2.5. For these methods, the actions are no longer nearly conserved on long time intervals.

4 Modulated Fourier expansions and proof of the main result

In this section we prove Theorem 3.1 on the long-time near-conservation of actions (1.5), energy (1.2), mass (1.3) and momentum (1.4) along the numerical solution. Throughout this section we work under the assumptions of this theorem.

The proof relies on a careful study of a modulated Fourier expansion in time of the numerical solution (3.2),

We require this modulated Fourier expansion to describe at time t n =nh the numerical solution u n after n time steps. The modulation functions z k evolve on a slow time-scale τ=εt. It turns out that we can assume these functions to be single spatial waves,

i.e., their Fourier coefficients \(z^{\mathbf {k}}_{j}\) vanish for j≠j(k) with j(k) as introduced in (3.4). The outline of the proof is as follows.

- In Sect. 4.1 we derive a system of equations for the modulation functions.
- In Sect. 4.2 we show the existence of invariants for this system of equations.
- Then we construct an approximate solution of this system in Sect. 4.3.
- We study the size of the constructed modulation functions in Sect. 4.5 using a rescaling of these functions introduced in Sect. 4.4,
- and we study the defect for the approximate solution in the modulation system in Sect. 4.6.
- Then we control the size of the numerical solution and the difference of the numerical solution and its modulated Fourier expansion in Sects. 4.7 and 4.8.
- We study the invariants of the modulation system along the approximate solution of this system and establish their relationship with the actions in Sect. 4.9.
- Finally, we extend the previous results, which are valid only on a short time interval of length \(\varepsilon ^{-1}\), to a long time interval in Sects. 4.10 and 4.11.

This is the standard approach to study the long-time behavior of numerical solutions of Hamiltonian partial differential equations using modulated Fourier expansions [8, 17]. The proof of Theorem 3.1 presented here closely follows the one given in [19] for splitting integrators applied to (1.1). We refer the reader to that article whenever arguments are very similar or identical, but nevertheless try to present the main line of argument. A major difference compared to the corresponding proof for splitting integrators [19] is that the modulation system for the exponential integrators studied here directly provides invariants. In contrast, one has to consider an auxiliary modulation system in the case of splitting integrators. Further differences in the analysis of the modulation system arise from the implicitness of the considered exponential integrators.

4.1 The modulation system

We insert the modulated Fourier expansion (4.1a), (4.1b) in the numerical scheme (3.2) and require that the numerical solution u n defined by (3.2) is described by the modulated Fourier expansion \(\tilde{u}(x,t_{n})\) at time t n =nh. Comparing the coefficients of e−i(k⋅ω)t, this yields the following system of equations for the modulation functions \(z^{\mathbf {k}}_{j(\mathbf {k})}\):

for j=j(k) (note that j(k)=j(k 1)+j(k 2)−j(k 3) mod 2M if k=k 1+k 2−k 3). Here and in the following, we assume that \(\lVert \mathbf {k}\rVert \leq K:=2N+2\) unless stated otherwise. The functions \(w_{j}^{\mathbf {k}}\) in the nonlinearity take the form

for j=j(k). The initial condition further yields

The system of equations (4.2a)–(4.2c) for the coefficients of the modulated Fourier expansion is called the modulation system.

4.2 Invariants of the modulation system

A remarkable property of the modulation system (4.2a)–(4.2c) is the presence of many conserved quantities or invariants provided that the exponential integrator satisfies condition (3.1a). These invariants, that we derive next, form the cornerstone for the study of long time intervals.

Let

with w=(w k) k , be the extended potential. We have for real sequences μ

Using the modulation system (4.2a) we get for w=w(εt) as defined in (4.2b)

Under the main condition (3.1a) on the exponential integrator we have

for j=j(k), and (4.4) simplifies to

Choosing \(\boldsymbol {\mu }=\frac{1}{2} \operatorname {Re}(a(-\mathrm {i}\omega_{l}h)) \langle l\rangle \) for l∈ℤ, this shows that

is conserved along a solution z of the modulation system (4.2a)–(4.2c) from one time step to another. Recalling the conditions (3.1a) and (3.1b) on the coefficients of the numerical scheme, we will see in Lemma 4.1 that these quantities are well defined. These invariants are the same as for splitting integrators derived in [19, Sect. 6.1] except for the fraction \(\operatorname {Re}(a(-\mathrm {i}\omega_{l}h)) / \operatorname {Re}(a(-\mathrm {i}\omega_{j(\mathbf {k})}h))\). Note, however, that our invariants (4.5) are invariants of the modulation system itself and not of an auxiliary modulation system as in [19, Sect. 6].

4.3 Iterative solution of the modulation system

In this subsection we introduce an iterative procedure that we use to compute an approximate solution of the modulation system (4.2a)–(4.2c) in the same way as in [19, Sect. 5.3]. The modulation system here takes the form

for j=j(k), where the dot on \(z^{\mathbf {k}}_{j}\) stands for the derivative with respect to the slow time τ=εt, and where we use the differential operators

and the nonlinearity

For the iterative solution of the modulation system we distinguish modulation functions corresponding to near-resonant indices  or large indices \(\lVert \mathbf {k}\rVert >K=2N+2\), “diagonal” modulation functions with indices (j,〈j〉), and the remaining modulation functions with indices in the set

We start by setting

for 0≤εt=τ≤1. We iterate, motivated by (4.6a), by

for  and 0≤εt=τ≤1 with w defined as in (4.6b). The notation \([\cdot]^{n}\) means that the n-th iterates of the modulation functions within the brackets are taken. For k=〈j〉 the first term in (4.6a) cancels, and we define \(z_{j}^{\langle j\rangle }\) as solution of the differential equation

with initial value

by (4.2c). For near-resonant indices  or for large k with \(\lVert \mathbf {k}\rVert >K\) we set for 0≤εt=τ≤1

With this iterative construction, the iterated modulation functions \([z^{\mathbf {k}}_{j}]^{n}\) are polynomials in τ of degree bounded in terms of the number of iterations n.

4.4 Rescaling the modulation functions

In order to take into account the powers of ε that accumulate in the modulation functions, we now rescale and split these functions as in [19, Sect. 5.4]. Let

and

with diagonal entries \(a_{j}^{\mathbf {k}}\) and off-diagonal entries \(b_{j}^{\mathbf {k}}\), i.e., \(a_{j}^{\mathbf {k}}\ne0\) only for k=〈j〉 and \(b_{j}^{\mathbf {k}}\ne0\) only for k≠〈j〉. We write

and set additionally u=(u k) k with

We further define \(\mathbf {F}(\mathbf {u})_{j}^{\mathbf {k}} = \varepsilon ^{-\max([[\mathbf {k}]],2)} \mathbf {N}(\mathbf {w})\) and

In the rescaled variables the iteration from the previous Sect. 4.3 becomes

with \([u^{\mathbf {k}}_{j}]^{n}=\varepsilon ^{-[[\mathbf {k}]]}[w_{j}^{\mathbf {k}}]^{n}\) defined by (4.6b).

We also use a second rescaling of the variables,

With \(\hat{\mathbf {F}}(\hat{\mathbf {u}})_{j}^{\mathbf {k}} = \lvert \boldsymbol {\omega }^{\frac {2s-d-1}{4}\lvert \mathbf {k}\rvert }\rvert \cdot \mathbf {F}(\mathbf {u})_{j}^{\mathbf {k}}\) the iteration for \(\hat{\mathbf {b}}\) becomes

4.5 Size of the iterated modulation functions

In order to control the size of the iterated modulation functions we use the norm

Note that we do not only need to control the modulation functions themselves but also products of the modulation functions with a(−iω j h)/b(−iω j h), see the definition of \(w^{\mathbf {k}}_{j}\) in (4.6b). Fortunately, this is not needed for the diagonal modulation functions collected in a but only for their derivatives and the off-diagonal modulation functions collected in b including all their derivatives. Since a(−iω j h) is bounded by (3.1b), we therefore set

and we study Γ b and \(\boldsymbol {\varGamma }\dot{\mathbf {a}}\) instead of b and \(\dot {\mathbf {a}}\).

Lemma 4.1

We have for 0≤τ=εt≤1

for all n with a constant C depending only on C 0, C 1, d, n, s and the norm of V. The same estimates hold for \(\hat{\mathbf {a}}\) and \(\hat{\mathbf {b}}\) instead of a and b if we replace \({\lVert \hskip -1pt\lvert }\cdot{\rvert \hskip -1pt\rVert }_{s}\) by \({\lVert \hskip -1pt\lvert }\cdot{\rvert \hskip -1pt\rVert }_{\frac{d+1}{2}}\).

In particular, it follows that the modulated Fourier expansion of the numerical scheme \(\tilde{u}\) is small

and that its coefficients z are also small

Proof

Initially, we have for 0≤τ≤1

and the same estimates hold for \(\hat{\mathbf {a}}\) and \(\hat{\mathbf {b}}\) if we replace \({\lVert \hskip -1pt\lvert }\cdot{\rvert \hskip -1pt\rVert }_{s}\) by \({\lVert \hskip -1pt\lvert }\cdot{\rvert \hskip -1pt\rVert }_{\frac{d+1}{2}}\).

The bounds for the iterated modulation functions are obtained as in [19, Sect. 5.6] by analyzing the iteration using

- the non-resonance condition (3.5) to control \(\Omega^{-1}\),
- the fact that A(a) contains only derivatives of a to estimate Γ A(a) in the same way as Γ B(b) inductively,
- the factor b(−iω j h) in front of the nonlinearity to estimate Γ F(u) in \({\lVert \hskip -1pt\lvert }\cdot{\rvert \hskip -1pt\rVert }_{s}\) by \(C\varepsilon h{\lVert \hskip -1pt\lvert }\mathbf {u}{\rvert \hskip -1pt\rVert }_{s}^{3}\) using [18, Lemma 2] and the bound (3.1b) together with the condition (3.1a),
- the bound \({\lVert \hskip -1pt\lvert }\mathbf {u}{\rvert \hskip -1pt\rVert }_{s}\le {\lVert \hskip -1pt\lvert }\mathbf {a}+\mathbf {b}{\rvert \hskip -1pt\rVert }_{s} + C\max_{\ell \ge 1}{\lVert \hskip -1pt\lvert }\boldsymbol {\varGamma }\mathbf {a}^{(\ell)}{\rvert \hskip -1pt\rVert }_{s} + C\max_{\ell\ge0}{\lVert \hskip -1pt\lvert }\boldsymbol {\varGamma }\mathbf {b}^{(\ell)}{\rvert \hskip -1pt\rVert }_{s}\),
- and the fact that the modulation functions are polynomials in τ of degree bounded in terms of the number of iterations n.

The same arguments also yield estimates for \(\hat{\mathbf {a}}\) and \(\hat {\mathbf {b}}\) in the norm \({\lVert \hskip -1pt\lvert }\cdot{\rvert \hskip -1pt\rVert }_{\frac{d+1}{2}}\). □

4.6 Defect of the iterated modulation functions

The defect in the modulation system (4.6a), (4.2c) after n iterations is

In contrast to [19, Sect. 5.7], we have here no defect resulting from a truncation of a Taylor expansion. We decompose the defect as

with \([e^{\mathbf {k}}_{j}]^{n}=0\) for  , \([\dot{h}^{\mathbf {k}}_{j}]^{n}=0\) for k≠〈j〉, \([f^{\mathbf {k}}_{j}]^{n}=0\) for non-near-resonant indices  and \([g^{\mathbf {k}}_{j}]^{n}=0\) for \(\lVert \mathbf {k}\rVert \le K\). The defect can be estimated as follows.

Lemma 4.2

We have for 0≤τ≤1

for all n with a constant C depending only on C 0, C 1, d, N, n, s and the norm of the potential V. The same estimates hold for \(\hat{\mathbf {e}}\) and \(\hat{\mathbf {h}}\) instead of e and h if we replace \({\lVert \hskip -1pt\lvert }\cdot{\rvert \hskip -1pt\rVert }_{s}\) by \({\lVert \hskip -1pt\lvert }\cdot{\rvert \hskip -1pt\rVert }_{\frac{d+1}{2}}\).

Proof

The estimate of the defect f in the near-resonant indices is obtained as in [18, Sect. 3.7] and [19, Sect. 5.7] using the non-resonance condition (3.5) and in addition the bound (3.1b). Also the defect g can be estimated as there using that \(\lVert \mathbf {k}\rVert >K\) implies \([[\mathbf {k}]]\ge\frac{1}{2}(K+2) = N+2\).

The diagonal part \(\dot{\mathbf {h}}\) and the off-diagonal part e of the defect take the form

Using a Lipschitz estimate [18, Lemma 2] for the nonlinearity in the modulation system, we get as in [19, Sect. 5.7] by an analysis of the iteration

for 0≤τ≤1, and the same estimates also for \(\hat{\mathbf {a}}\) and \(\hat{\mathbf {b}}\) if we replace \({\lVert \hskip -1pt\lvert }\cdot{\rvert \hskip -1pt\rVert }_{s}\) by \({\lVert \hskip -1pt\lvert }\cdot {\rvert \hskip -1pt\rVert }_{\frac{d+1}{2}}\).

Also the defect for the initial condition \(\tilde{\mathbf {d}}\) can be estimated as in [19, Sect. 5.7]:

This concludes the proof of the lemma. □

4.7 The numerical solution on short time intervals

We study the size of the numerical solution \(u^{n}\) on a short time interval of length \(\varepsilon ^{-1}\). Its control uses fixed point arguments since the considered exponential integrators (3.2) are implicit schemes.

Lemma 4.3

We have for \(0\le t_{n}=nh\le \varepsilon ^{-1}\)

for ε sufficiently small compared to C 1, d, s and the norm of the potential V.

Proof

We show by induction on n that

and we let ε be sufficiently small compared to C such that (4.8) implies \(\lVert u^{n}\rVert _{s}\le2\varepsilon \). Here, C is a constant depending only on C 1, d, s and the norm of V such that

which exists by [18, Lemmas 1 and 4]. The nonlinearity appearing here is the one defined in (2.3).

For n=0 the estimate (4.8) is trivial. For n>0 we have by (4.9) and the definition of the integrator

with a fixed point \(U^{n-1}\) of

For \(0\le nh\le\varepsilon^{-1}\) this function g maps by (4.9) the ball \(\{ U : \lVert U\rVert _{s}\le3\varepsilon \}\) to itself since \(\lVert u^{n-1}\rVert _{s}\le2\varepsilon \) by induction. Moreover, using the fact that

and the form of our nonlinearity, see (2.3), we obtain from (4.9) that

This shows that the map g has for sufficiently small ε in the norm \(\lVert \cdot\rVert _{s}\) on the ball \(\{ U : \lVert U\rVert _{s}\le3\varepsilon \}\) a Lipschitz constant smaller than one. The Banach fixed point theorem then ensures

for the fixed point \(U^{n-1}\) of g, and finally the induction hypothesis applied to (4.10) yields (4.8). □
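The fixed point argument of this proof can be tried out numerically. The following is only a minimal sketch under stated assumptions, not the scheme (3.2) itself: it assumes one space dimension, a one-stage method of the form \(U = \mathrm{e}^{chL}u^{n-1} + h\,a(hL)N(U)\), \(u^{n} = \mathrm{e}^{hL}u^{n-1} + h\,b(hL)N(U)\), the Lawson-type choice \(c=\frac{1}{2}\), \(a(z)=\frac{1}{2}\), \(b(z)=\mathrm{e}^{z/2}\) obtained from the implicit midpoint rule, a cubic nonlinearity \(N(u)=-\mathrm{i}|u|^{2}u\), and hypothetical Fourier coefficients \(V_{j}\) of the potential. The names `lawson_midpoint_step`, `omega`, `tol` and `max_iter` are introduced here for illustration only.

```python
import numpy as np

def lawson_midpoint_step(u, h, omega, tol=1e-14, max_iter=100):
    """One step of a Lawson-type method built on the implicit midpoint rule.

    u     : grid values of the numerical solution u^{n-1}
    h     : time step-size
    omega : frequencies of the linear part (assumed j^2 + V_j, FFT ordering)
    """
    half_flow = np.exp(-0.5j * h * omega)        # symbol of exp(hL/2) with L = -i*omega
    w = np.fft.ifft(half_flow * np.fft.fft(u))   # exp(hL/2) u^{n-1}

    def nonlinearity(v):
        # assumed cubic term, evaluated pointwise on the grid
        return -1j * np.abs(v) ** 2 * v

    # fixed point iteration [U]^l = g([U]^{l-1}), started at exp(hL/2) u^{n-1}
    U = w.copy()
    for _ in range(max_iter):
        U_next = w + 0.5 * h * nonlinearity(U)
        converged = np.max(np.abs(U_next - U)) <= tol
        U = U_next
        if converged:
            break

    # update u^n = exp(hL) u^{n-1} + h exp(hL/2) N(U)
    return np.fft.ifft(half_flow * np.fft.fft(w + h * nonlinearity(U)))


M = 64                                   # number of grid points
x = 2 * np.pi * np.arange(M) / M
j = np.fft.fftfreq(M, d=1.0 / M)         # integer frequencies in FFT ordering
omega = j ** 2 + 1.0 / (1.0 + j ** 2)    # hypothetical potential coefficients V_j
eps, h = 0.1, 0.1                        # size of the initial data, step-size
u0 = eps * (np.exp(1j * x) + 0.5 * np.exp(-2j * x))

u = u0.copy()
for _ in range(200):
    u = lawson_midpoint_step(u, h, omega)

mass0 = np.mean(np.abs(u0) ** 2)         # discrete mass of the initial value
mass_end = np.mean(np.abs(u) ** 2)       # discrete mass after 200 steps
```

For small data the map inside the loop is a strong contraction, so a handful of iterations suffices. With this particular choice of coefficients the discrete mass is preserved up to the tolerance of the iteration and round-off, which gives a simple sanity check for the implementation.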

4.8 The modulated Fourier expansion and the numerical solution

In this subsection we study the error \(u^{n}-\tilde{u}(\cdot,t_{n})\) of the modulated Fourier expansion

where the iterated modulation functions \(z^{\mathbf {k}}_{j} = [z^{\mathbf {k}}_{j}]^{L}\) after L:=2N+2 iterations replace the exact solution of the modulation system that is not available. By a slight abuse of notation, we omit the index L in the following, keeping in mind that the modulation system is then satisfied only up to a small defect. We show that the modulated Fourier expansion \(\tilde{u}(\cdot,t_{n})\) describes the numerical solution \(u^{n}\) up to a very small error on a short time interval of length \(\varepsilon^{-1}\). Again, as in the previous subsection, we employ fixed point arguments in contrast to the direct arguments used in [19, Sect. 5.8].

Proposition 4.1

We have for \(0\le t_{n}=nh\le\varepsilon^{-1}\)

for ε sufficiently small compared to \(C_{0}\), \(C_{1}\), d, N, s and the norm of V with a constant C depending only on \(C_{0}\), \(C_{1}\), d, N, s and the norm of V.

Proof

Let

Then, by definition of the modulation system (4.2a)–(4.2c) and with (4.7), we obtain

with the defect

Note that for \(0\le t\le\varepsilon^{-1}\) by Lemma 4.2

with a constant C depending only on \(C_{0}\), \(C_{1}\), d, N, s and the norm of the potential V.

(a) We first examine the difference \(U^{n-1} - \tilde{U}(\cdot ,t_{n-1})\) with the solution \(U^{n-1}\) of the nonlinear equation in the numerical method for computing \(u^{n}\) (see the proof of Lemma 4.3). Note that \(\tilde{U}(\cdot,t_{n-1})\) is by (4.2a)–(4.2c) and (4.7) a fixed point of

and by Lemma 4.2

Recall from the proof of Lemma 4.3 that the fixed point iteration \([U]^{l}=g([U]^{l-1})\), \([U]^{0}=\mathrm{e}^{chL}u^{n-1}\) converges in the norm \(\lVert \cdot\rVert _{s}\) to \(U^{n-1}\) and is bounded in this norm by 3ε. We study \([U]^{l} - \tilde{U}\) with \(\tilde{U} = \tilde{U}(\cdot,t_{n-1})\). Since \(\lVert \tilde{U}\rVert _{s}\le C\varepsilon \) by Lemma 4.1, we get with (4.9) and the estimate of the defect

For l>0 we use (4.11) to obtain

with a constant C independent of l. A recursion on l and the above result for l=0 now yields

For l→∞ and \(C\varepsilon ^{2}h \le\frac{1}{2}\) one then obtains

(b) Finally, we consider \(u^{n} - \tilde{u}(\cdot,t_{n})\). For n>0 we have using (4.11) with b(hL) instead of a(hL)

Together with (4.12) we get by induction on n

This yields the desired result if ε is sufficiently small since

by Lemma 4.2 with the defect \(\tilde{\mathbf {d}}\) in the initial condition. □
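The recursion on l in step (a) of this proof follows the usual pattern for a contraction perturbed by a defect. As a schematic sketch only, write \(e_{l}\) for the norm of \([U]^{l}-\tilde{U}\), \(q\) for the contraction factor and \(\delta\) for the contribution of the defect; these three symbols are shorthand introduced here for illustration and do not appear in the estimates above. Taking \(q=C\varepsilon^{2}h\), as the condition \(C\varepsilon ^{2}h \le\frac{1}{2}\) suggests, a bound of the form \(e_{l}\le q\,e_{l-1}+\delta\) gives

```latex
\[
  e_{l} \;\le\; q^{l} e_{0} + \delta \sum_{i=0}^{l-1} q^{i}
        \;\le\; q^{l} e_{0} + \frac{\delta}{1-q},
  \qquad\text{and hence}\qquad
  \limsup_{l\to\infty} e_{l} \;\le\; \frac{\delta}{1-q} \;\le\; 2\delta
  \quad\text{for } q\le\tfrac{1}{2}.
\]
```

The contribution of the starting error \(e_{0}\) therefore disappears in the limit, and only a fixed multiple of the defect remains.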

4.9 Almost invariants close to the actions

Let \(z^{\mathbf {k}}_{j} = [z^{\mathbf {k}}_{j}]^{L}\) be the iterated modulation functions after L=2N+2 iterations as in the previous subsection. These modulation functions satisfy the modulation system (4.2a)–(4.2c) only up to a defect \(d^{\mathbf {k}}_{j} = [d^{\mathbf {k}}_{j}]^{L}\) studied in Lemma 4.2. Of course, the formal invariants of the modulation system introduced in (4.5) are then no longer exact invariants, but they turn out to be almost invariants.

Proposition 4.2

We have for \(0\le t_{n}=nh\le\varepsilon^{-1}\)

for ε sufficiently small compared to \(C_{0}\), \(C_{1}\), d, N, s and the norm of the potential V with a constant C depending only on \(C_{0}\), \(C_{1}\), d, N, s and the norm of V.

Proof

Repeating the calculation for the derivation of the invariants of the modulation system in Sect. 4.2 we get

Lemma 3 from [18] (with the adaption to the spatially discrete setting in [18, Sect. 6.2]) together with the bound (3.1b) for a(z) then tells us that

with a constant C depending only on d, N, s and the norm of V. Using the estimates from Lemma 4.1 on the size of the iterated modulation functions and from Lemma 4.2 on the defect of these functions, we get the statement of the proposition by summing up. □

We can show as in [18, Proposition 6] using in addition the bound (3.1b) for a(z) that the almost invariants are close to the actions \(I_{l}\).

Proposition 4.3

We have for \(0\le t_{n}=nh\le\varepsilon^{-1}\)

for ε sufficiently small compared to \(C_{0}\), \(C_{1}\), d, N, s and the norm of V with a constant C depending only on \(C_{0}\), \(C_{1}\), d, N, s and the norm of V.

4.10 Interface between modulated Fourier expansions

So far, we only considered a short time interval of length \(\varepsilon^{-1}\). In order to get longer time intervals as announced in Theorem 3.1 we have to patch many of these short time intervals together. In this subsection we consider a second short time interval \(\varepsilon^{-1}\le t\le2\varepsilon^{-1}\) (if \(\varepsilon^{-1}\) is not a multiple of the time step-size h we consider instead the time interval \(n_{\varepsilon}h\le t\le2n_{\varepsilon}h\), where \(n_{\varepsilon}\) denotes the largest integer with \(n_{\varepsilon}h\le\varepsilon^{-1}\)). On this second time interval we consider again a modulated Fourier expansion

of the numerical solution, starting with the numerical solution \(u^{n_{\varepsilon }}\) at the end of the first time interval (after \(n_{\varepsilon}\) time steps) as initial value. The initial condition (4.2c) of the modulation system then becomes

whereas the remaining part of the modulation system (4.2a)–(4.2c) remains unchanged. This modulation system is again solved approximately with the iterative procedure described in Sect. 4.3, and we denote, again by an abuse of notation, by \(\tilde{z}_{j}^{\mathbf {k}}\) (and also \(z_{j}^{\mathbf {k}}\)) the iterated modulation functions after L=2N+2 iterations. Since \(\lVert u^{n_{\varepsilon }}\rVert _{s}\le2\varepsilon \) by Lemma 4.1, all the results on the modulation functions z proven so far are also valid for \(\tilde {\mathbf {z}}\) with constants depending on the same parameters.

It is possible to control the difference between the almost invariants belonging to \(\mathbf {z}\) and those belonging to \(\tilde{\mathbf {z}}\) at the interface \(n_{\varepsilon}h\approx\varepsilon^{-1}\) between the modulated Fourier expansions on the first two time intervals.

Proposition 4.4

We have

for ε sufficiently small compared to \(C_{0}\), \(C_{1}\), d, N, s and the norm of the potential V with a constant C depending only on \(C_{0}\), \(C_{1}\), d, N, s and the norm of V.

Proof

We first show that

for the rescaled modulation functions defined in Sect. 4.4. Together with [18, Lemma 3] and Lemma 4.1 this yields the stated result. For the proof of (4.13) we have to study once more the iterative procedure, this time the iteration for \(\tilde{\mathbf {z}}\). This is done in the same way as in the proof of [18, Proposition 4], considering again Γ a (ℓ) for ℓ≥1 and Γ b (ℓ) for ℓ≥0 instead of a (ℓ) and b (ℓ) (but not Γ a). □

4.11 From short to long time intervals

We are now in the position to prove Theorem 3.1. We start with the long-time near-conservation of actions, which we can control so far only on a short time interval of length \(\varepsilon^{-1}\). The almost invariants allow us to patch many of these short time intervals together into a long time interval of length \(\varepsilon^{-N}\) exactly as in [19, Sect. 6.3]: We consider modulated Fourier expansions on short time intervals of length \(\approx\varepsilon^{-1}\) starting on the numerical solution as described in Sect. 4.10. The almost invariants (Propositions 4.2 and 4.4) close to the actions (Proposition 4.3) ensure that the numerical solution satisfies a smallness condition \(\lVert u^{n}\rVert _{s}\le 2\varepsilon \) over long times \(0\le nh\le\varepsilon^{-N}\) and imply the near-conservation of actions on these time intervals.

Finally, the near-conservation of energy H, discrete energy \(H_{M}\), mass m and momentum K is shown as in [19, Sect. 6.4]. The main point is that all these quantities are sums of scaled actions plus, in the case of the energies, a higher order term of size \(\varepsilon^{4}\). The long-time near-conservation of actions thus implies the long-time near-conservation of discrete and continuous energy, of mass and of momentum as stated in Theorem 3.1.
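The patching of short time intervals used in this subsection is, at its core, a telescoping sum. As a schematic sketch, let \(J^{(i)}\) denote an almost invariant of the modulated Fourier expansion on the i-th short interval \([T_{i-1},T_{i}]\) with \(T_{i}=i\,n_{\varepsilon}h\), and let \(\delta\) be a common bound for its drift on one interval (as in Proposition 4.2) and for its jump at one interface (as in Proposition 4.4). The symbols \(J^{(i)}\), \(T_{i}\) and \(\delta\) are notation assumed here for illustration; the precise bounds are those of the propositions. For t in the m-th interval one then has

```latex
\[
  J^{(m)}(t) - J^{(1)}(0)
  = \bigl[ J^{(m)}(t) - J^{(m)}(T_{m-1}) \bigr]
  + \sum_{i=1}^{m-1} \Bigl(
      \bigl[ J^{(i+1)}(T_{i}) - J^{(i)}(T_{i}) \bigr]
    + \bigl[ J^{(i)}(T_{i}) - J^{(i)}(T_{i-1}) \bigr] \Bigr),
\]
\[
  \bigl| J^{(m)}(t) - J^{(1)}(0) \bigr| \;\le\; (2m-1)\,\delta .
\]
```

Covering a time interval of length \(\varepsilon^{-N}\) requires about \(m\approx\varepsilon^{-N+1}\) short intervals of length \(\varepsilon^{-1}\), so the accumulated drift stays small as long as \(\delta\) is small compared to \(\varepsilon^{N-1}\) times the size of the quantity being tracked.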

5 Conclusion and open problems

We have shown long-time near-conservation of actions, energy, mass and momentum for the numerical solution of a cubic Schrödinger equation given by a one-stage exponential integrator. This has been done for a class of methods that contains all reversible methods. We have presented a numerical experiment with a symmetric exponential integrator that does not belong to this class and that does not show this good long-time behavior.

An extension of the results to the nonlinear Schrödinger equation (1.1) with more general nonlinearities of the form \(g(|u(x,t)|^{2})u(x,t)\) is easy if g is real analytic in a neighborhood of zero and g(0)=0. By contrast, it is an open problem to extend the theoretical results of the present paper to exponential integrators with more than one stage (s>1 in (2.4)). The technical difficulty seems to be the identification of invariants in the corresponding modulation system. In fact, in the modulation system for a method with more than one stage there are several nonlinear terms coming from an extended potential (4.3) with different arguments. This prevents the derivation of the invariants in Sect. 4.2 from working. We expect, however, that reversible exponential integrators with two or more stages have a similar long-time behavior as the one-stage methods studied here. In order to support this conjecture, we present in Fig. 3 a numerical experiment with the same parameters as in the experiments of Sect. 3.3 but with the two-stage exponential integrator

with \(c_{1}=\frac{1}{2}-\frac{\sqrt{3}}{6}\) and \(c_{2}=\frac {1}{2}+\frac {\sqrt{3}}{6}\). This is a reversible and symmetric Lawson method with the Hammer and Hollingsworth method as an underlying Runge–Kutta method, see [7, Sect. 2]. We also perform the same experiment for the pseudo steady-state approximation [24, 26]

a two-stage explicit exponential integrator (neither symmetric nor reversible) that is used in chemistry, and plot the result in Fig. 4. A loss of energy, mass, momentum and actions is observed for this method.

Fig. 3 Actions (black lines), discrete energy (middle bold grey line), mass (upper bold grey line) and momentum (lower bold grey line) for the two-stage method (5.1)

Fig. 4 Actions (black lines), discrete energy (middle bold grey line), mass (upper bold grey line) and momentum (lower bold grey line) for the pseudo steady-state approximation (5.2)
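The quantities plotted in these figures can be monitored directly from the discrete Fourier coefficients of the numerical solution. The following is a minimal sketch in one space dimension. The normalizations used here (actions \(\frac{1}{2}|u_{j}|^{2}\), mass \(\sum_{j}|u_{j}|^{2}\), momentum \(\sum_{j} j|u_{j}|^{2}\)) are assumptions made for illustration and may differ from the definitions in Sect. 1 by constant factors or by a sign; the function name `conserved_quantities` is hypothetical.

```python
import numpy as np

def conserved_quantities(u):
    """Actions, mass and momentum from grid values u_k = u(2*pi*k/M) in 1D."""
    M = u.size
    j = np.fft.fftfreq(M, d=1.0 / M)            # integer frequencies, FFT ordering
    coeff = np.fft.fft(u) / M                   # discrete Fourier coefficients u_j
    actions = 0.5 * np.abs(coeff) ** 2          # assumed normalization |u_j|^2 / 2
    mass = np.sum(np.abs(coeff) ** 2)           # sum_j |u_j|^2
    momentum = np.sum(j * np.abs(coeff) ** 2)   # sum_j j |u_j|^2 (sign convention assumed)
    return actions, mass, momentum


M = 32
x = 2 * np.pi * np.arange(M) / M
u = 0.1 * np.exp(1j * x) + 0.05 * np.exp(-2j * x)   # two Fourier modes, j = 1 and j = -2
actions, mass, momentum = conserved_quantities(u)
```

Evaluating this function after every time step and plotting the results against time produces diagnostics of the kind shown in the figures. The discrete energy is omitted here because it depends on the potential and on the sign convention of the nonlinearity.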

Our main result explains rigorously the good long-time behavior of certain exponential integrators in the situation where the initial values are small and the frequencies in the equation as well as the time-step size satisfy a non-resonance condition. For initial values that are not small we cannot expect long-time near-conservation of actions (neither along the exact nor along a numerical solution). Concerning the behavior of energy and mass we refer to [7] for many numerical experiments when initial values are not small and frequencies are resonant. A rigorous numerical analysis of this situation on long time intervals is still missing.

We finally mention that mass can be exactly conserved by exponential integrators, whereas our main result only explains its near-conservation over long times. For example, the Lawson method from Example 2.1 conserves mass exactly. For a characterization of exponential integrators that preserve mass exactly, we refer the reader to [7, Sect. 3].

References

[1] Bambusi, D., Grébert, B.: Birkhoff normal form for partial differential equations with tame modulus. Duke Math. J. 135(3), 507–567 (2006)

[2] Bourgain, J.: Quasi-periodic solutions of Hamiltonian perturbations of 2D linear Schrödinger equations. Ann. Math. (2) 148(2), 363–439 (1998)

[3] Cano, B.: Conserved quantities of some Hamiltonian wave equations after full discretization. Numer. Math. 103(2), 197–223 (2006)

[4] Cano, B., González-Pachón, A.: Exponential time integration of solitary waves of cubic Schrödinger equations. Preprint (2011)

[5] Cano, B., González-Pachón, A.: Exponential methods for the time integration of Schrödinger equation. AIP Conf. Proc. 1281(1), 1821–1823 (2010)

[6] Castella, F., Dujardin, G.: Propagation of Gevrey regularity over long times for the fully discrete Lie Trotter splitting scheme applied to the linear Schrödinger equation. M2AN Math. Model. Numer. Anal. 43(4), 651–676 (2009)

[7] Celledoni, E., Cohen, D., Owren, B.: Symmetric exponential integrators with an application to the cubic Schrödinger equation. Found. Comput. Math. 8(3), 303–317 (2008)

[8] Cohen, D., Hairer, E., Lubich, C.: Conservation of energy, momentum and actions in numerical discretizations of non-linear wave equations. Numer. Math. 110(2), 113–143 (2008)

[9] Debussche, A., Faou, E.: Modified energy for split-step methods applied to the linear Schrödinger equation. SIAM J. Numer. Anal. 47(5), 3705–3719 (2009)

[10] Dujardin, G.: Exponential Runge–Kutta methods for the Schrödinger equation. Appl. Numer. Math. 59(8), 1839–1857 (2009)

[11] Dujardin, G., Faou, E.: Normal form and long time analysis of splitting schemes for the linear Schrödinger equation with small potential. Numer. Math. 108(2), 223–262 (2007)

[12] Eliasson, L.H., Kuksin, S.B.: KAM for the nonlinear Schrödinger equation. Ann. Math. (2) 172(1), 371–435 (2010)

[13] Faou, E., Grébert, B.: Resonances in long time integration of semi linear Hamiltonian PDEs. Preprint (2009). http://www.irisa.fr/ipso/perso/faou

[14] Faou, E., Grébert, B.: Hamiltonian interpolation of splitting approximations for nonlinear PDEs. Found. Comput. Math. 11(4), 381–415 (2011)

[15] Faou, E., Grébert, B., Paturel, E.: Birkhoff normal form for splitting methods applied to semilinear Hamiltonian PDEs. I. Finite-dimensional discretization. Numer. Math. 114(3), 429–458 (2010)

[16] Faou, E., Grébert, B., Paturel, E.: Birkhoff normal form for splitting methods applied to semilinear Hamiltonian PDEs. II. Abstract splitting. Numer. Math. 114(3), 459–490 (2010)

[17] Gauckler, L.: Long-time analysis of Hamiltonian partial differential equations and their discretizations. Dissertation (doctoral thesis), Universität Tübingen (2010). http://nbn-resolving.de/urn:nbn:de:bsz:21-opus-47540

[18] Gauckler, L., Lubich, C.: Nonlinear Schrödinger equations and their spectral semi-discretizations over long times. Found. Comput. Math. 10(2), 141–169 (2010)

[19] Gauckler, L., Lubich, C.: Splitting integrators for nonlinear Schrödinger equations over long times. Found. Comput. Math. 10(3), 275–302 (2010)

[20] Hairer, E., Lubich, C., Wanner, G.: Geometric numerical integration. In: Structure-Preserving Algorithms for Ordinary Differential Equations, 2nd edn. Springer Series in Computational Mathematics, vol. 31. Springer, Berlin (2006)

[21] Hochbruck, M., Lubich, C., Selhofer, H.: Exponential integrators for large systems of differential equations. SIAM J. Sci. Comput. 19(5), 1552–1574 (1998) (electronic)

[22] Hochbruck, M., Ostermann, A.: Exponential integrators. Acta Numer. 19, 209–286 (2010)

[23] Lawson, J.D.: Generalized Runge–Kutta processes for stable systems with large Lipschitz constants. SIAM J. Numer. Anal. 4, 372–380 (1967)

[24] Minchev, B., Wright, W.M.: A review of exponential integrators for semilinear problems. Tech. rep. 2/05, Department of Mathematical Sciences, NTNU, Norway (2005). http://www.math.ntnu.no/preprint/

[25] Sulem, C., Sulem, P.L.: The Nonlinear Schrödinger Equation. Applied Mathematical Sciences, vol. 139. Springer, New York (1999)

[26] Verwer, J.G., van Loon, M.: An evaluation of explicit pseudo-steady-state approximation schemes for stiff ODE systems from chemical kinetics. J. Comput. Phys. 113(2), 347–352 (1994)

Acknowledgements

We greatly appreciate the referees’ comments on an earlier version. We would like to thank Christian Lubich for interesting discussions.

Additional information

Communicated by Mechthild Thalhammer.

Cite this article

Cohen, D., Gauckler, L. One-stage exponential integrators for nonlinear Schrödinger equations over long times. Bit Numer Math 52, 877–903 (2012). https://doi.org/10.1007/s10543-012-0385-1

DOI: https://doi.org/10.1007/s10543-012-0385-1

Keywords

- Nonlinear Schrödinger equation

- Exponential integrators

- Long-time behavior

- Near-conservation of actions, energy, mass and momentum

- Modulated Fourier expansion