Abstract

Facial expression of emotion is a key mechanism of non-verbal social communication in humans. Deficits in processing of facial emotion have been implicated in psychiatric disorders characterized by abnormal social behavior, such as autism and schizophrenia. Identification of genetically transmitted variability in the neural substrates of facial processing can elucidate the pathways mediating genetic influences on social behavior and provide useful endophenotypes for psychiatric genetic research. This study examined event-related brain potentials (ERPs) evoked by changes in facial expression in adolescent twins (age 12, 47 monozygotic and 51 dizygotic pairs). Facial images with happy, fearful, and neutral expressions were administered in a continuous mode, such that different expressions of the same face instantaneously replaced each other. This experimental design allowed us to isolate responses elicited by changes in emotional expression that were not confounded with responses elicited by image onset. Changes of emotional expression elicited an N240 wave with a right temporoparietal maximum and a P300 wave with a centroparietal midline maximum. Genetic analyses using a model fitting approach showed that a substantial proportion of the observed individual variation in these ERP responses can be attributed to genetic factors (36–64% for N240 and 42–62% for P300 components, respectively). This study provides the first evidence for heritability of neuroelectric indicators of face processing and suggests that ERP components sensitive to emotional expressions can potentially serve as endophenotypes for psychopathology characterized by abnormalities in social cognition and behavior.

Introduction

Facial expression of emotion is a key mechanism of non-verbal social communication in humans and non-human primates. Facial expressions can rapidly convey information about the motivational and emotional state of the expressor and thus can supply important information about the situation such as presence of imminent danger or intentions of the expressor (friendly or hostile). From the evolutionary perspective, the ability to rapidly extract this vital information is of clear adaptive significance and must have been under strong selection pressure. Consistent with this notion, the ability to express and interpret facial expression of basic emotion is present in both humans and non-human mammals (Tate et al. 2006), emerges very early in infancy (Grossmann and Johnson 2007), and is essentially universal across cultures (Mandal and Ambady 2004), suggesting that it is a genetically determined characteristic of the species.

Research in the past decade has made substantial progress in the understanding of the neural substrates and mechanisms underlying the processing of faces. Evidence from studies using electroencephalography (EEG), magnetoencephalography (MEG), and functional magnetic resonance imaging (fMRI) indicates that face-sensitive neural processing involves an interactive network of distributed brain regions, most notably the inferior temporal cortex including the fusiform gyrus, the superior temporal cortex, and the amygdala, and involves distinct processing stages such as structural encoding, categorization, and higher-level discrimination of identity or affect in associative areas (reviewed in Haxby et al. 2002; Liu et al. 2002; Posamentier and Abdi 2003; Vuilleumier and Pourtois 2007).

There is considerable variability in the human population with respect to the ability to recognize affective facial information (Corden et al. 2006), and different aspects of face processing are impaired in psychiatric disorders, particularly in those conditions that include abnormal social behavior as a characteristic feature. Thus, patients with schizophrenia show impaired performance on tasks involving discrimination of emotional facial expressions, but are less impaired in processing of non-emotional features of the face (Kohler et al. 2003; Schneider et al. 2006). Other studies established a robust relationship between antisocial behavior and impaired ability to recognize fearful facial expressions (reviewed in Marsh and Blair 2008). Consistent with these performance deficits, ERP studies reported abnormalities of the neural processing of facial affect in psychiatric disorders. Face-sensitive ERPs are impaired in patients with schizophrenia (Johnston et al. 2005; Bediou et al. 2007; Turetsky et al. 2007). Children with autism spectrum disorders show abnormal patterns of ERP responses to emotional facial expressions compared to typically developing children (McPartland et al. 2004; Dawson et al. 2005; Jeste and Nelson 2009; Wong et al. 2008). Abnormal ERPs evoked by facial emotion have also been reported in alcoholism (Criado and Ehlers 2007).

These studies strongly suggest that early abnormalities in the neural mechanisms of facial processing may lead to the disruption of the “downstream” social communication processes and ultimately lead to abnormal social and emotional development. The social and biological adaptive significance of facial affect and its evolutionary relevance suggest that neural substrates of facial expression processing may be under substantial genetic control. Identification of genetically transmitted variability in the neural substrates of facial processing can help to elucidate the pathways by which genetic factors influence social behavior and provide a useful endophenotype for genetic studies of psychiatric disorders characterized by abnormal social behavior. Endophenotypes, or intermediate phenotypes, are heritable characteristics indicative of the neurobiological substrates of a complex behavior (Vogel 1981; Gottesman and Gould 2003). The utility of a neurobiologically oriented approach to the genetics of complex behaviors, including psychiatric disorders, is twofold: first, it can help to elucidate the neurobiological underpinnings of complex behavioral traits and thus clarify the pathways by which specific genes (already identified or yet to be identified) can influence the behavioral phenotype; second, knowledge of relevant neurobiological substrates can inform the selection and prioritization of genes for larger scale association studies of the behavioral phenotype. Furthermore, given the complex multifactorial etiology of behavioral traits and psychiatric phenotypes, isolation of specific, relatively homogenous components of liability that could be traced to genetic variability at the level of neurotransmitter function can potentially facilitate the identification of genes contributing to specific aspects of the liability.

Among different non-invasive techniques used to study brain function in humans, the ERP method is particularly appealing for genetic studies because its relatively low cost makes it feasible to collect sufficiently large samples. Unlike hemodynamic neuroimaging methods, ERPs provide a direct, real-time measure of cortical neuronal activity that permits the isolation of specific stages of processing that occur in the subsecond range and last for tens of milliseconds. On the other hand, ERPs have very limited spatial resolution and their sources cannot be precisely localized. It is important to note, however, that the neural substrates of well-established ERP components are being increasingly clarified by studies combining ERP and fMRI techniques. For example, in a recent study, facial emotion-sensitive ERP components including the face-specific vertex positive potential and P300a predicted blood oxygenation level-dependent (BOLD) activation in the fusiform gyrus, thus providing a neuroanatomical validation for well-characterized ERP components (Johnston et al. 2005).

To the best of our knowledge, there have been no published studies that investigated genetic and environmental influences on electrocortical processing of facial emotion. We hypothesized that individual differences in ERP components elicited by facial expression and by facial identity are influenced by genetic factors. Accordingly, the goal of the present study was to estimate heritability of face-sensitive ERPs using a classical twin design.

Methods

Sample

The participants were 196 adolescent twins (98 females, M age = 12, SD = 0.3) including 47 monozygotic and 51 dizygotic pairs (22 female MZ pairs, 25 male MZ pairs, 25 female DZ pairs, 22 male DZ pairs, and 4 opposite-sex DZ pairs). All twins are participating in a longitudinal adolescent study of the genetics of brain function and behavior. The twins were identified through birth records from the general population and screened for exclusion criteria that included a history of head trauma with loss of consciousness for more than 5 min, known history of epilepsy, current use of psychoactive medication, as well as hearing, visual, and other physical and mental impairments that could prevent the subjects from understanding and following instructions in cognitive psychophysiology experiments. The study was approved by the Washington University Institutional Review Board. After a complete description of the study to the subjects and their parents, written informed child assent and parental consent were obtained. Zygosity was determined using a questionnaire administered to parents. The reliability of zygosity diagnosis by questionnaire has been demonstrated in previous studies (Kasriel and Eaves 1976). Only data from the first (baseline) assessment at age 12 are presented here.

Procedures

The stimuli were drawn from Ekman & Friesen’s Pictures of Facial Affect set (Ekman 1976). All images were cropped to fit into a standard-size oval in order to minimize possible influence of non-facial information such as hairstyle. Stimuli (13 × 17 cm) were presented on a 22″ LCD computer screen placed at a 110 cm distance from the subject’s eyes (angular size: 6.77° × 8.85°). Faces with happy, fearful, and neutral expressions were administered in a continuous mode, such that different expressions of the same face instantaneously replaced each other, without a blank screen between the faces (i.e. with a zero inter-stimulus interval). Emotional expressions were always alternated with a neutral expression of the same face (Fig. 1). Stimulus duration varied pseudorandomly from 1.2 to 1.4 s within each stimulus category in order to minimize the predictability of expression change. This mode of presentation creates an appearance that a face continuously shown on the screen changes its expression from neutral to emotional, then back to neutral, then to another emotional expression, and so on (example of the sequence: N1-F1-N1-H1-N1-F1-N1-F1-N1-H1-N1-N2-H2-N2-F2-N2-…, where N, F, and H are neutral, fearful, and happy expressions, respectively, and subscript numbers denote different actors). Face identity changed only during a neutral expression (Fig. 1). This experimental design with continuous face presentation allowed us to isolate responses elicited by the change of facial expression (e.g. from neutral to fearful) that were not confounded with responses elicited by the face onset. When facial images are presented following a blank screen, they elicit a cascade of processing stages related to stimulus encoding, categorization, etc., which may complicate the isolation of activity related to the change of expression only. A total of 440 male and female facial images were presented including 99 happy, 99 fearful, and 231 neutral facial expressions. 
In addition, 21 neutral faces with eyeglasses were inserted randomly into the sequence. Subjects were instructed to press a button on the response pad whenever they saw a face with eyeglasses on. The purpose of this secondary task was to ensure that the subjects attended to the stimuli during the experiment, and these data were not included in the ERP analysis. Importantly, this instruction also ensured that the subjects attended to the eyes region of the face that is particularly critical for the detection of emotional expressions.
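The angular stimulus size quoted above follows from the physical stimulus size and the viewing distance. The sketch below is illustrative only and is not part of the original analysis; the function names are hypothetical. It computes the visual angle both with the exact formula, 2·atan(s / 2d), and with the small-angle approximation s / d. Under these assumptions, the reported 6.77° × 8.85° appears to match the small-angle approximation, while the exact formula gives values about 0.01° to 0.02° smaller.

```python
import math

def visual_angle_exact_deg(size_cm: float, distance_cm: float) -> float:
    """Exact visual angle (degrees) subtended by a centered object."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

def visual_angle_small_deg(size_cm: float, distance_cm: float) -> float:
    """Small-angle approximation: angle in radians is roughly size / distance."""
    return math.degrees(size_cm / distance_cm)

# Stimulus size (13 x 17 cm) and viewing distance (110 cm) as given in the text.
DISTANCE_CM = 110
for label, size_cm in (("width", 13), ("height", 17)):
    exact = visual_angle_exact_deg(size_cm, DISTANCE_CM)
    approx = visual_angle_small_deg(size_cm, DISTANCE_CM)
    print(f"{label}: exact = {exact:.2f} deg, small-angle = {approx:.2f} deg")
```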

EEG recordings

EEG was recorded from 30 scalp electrodes (extended 10–20 system) with a left mastoid as reference. Electrode impedance was kept below 5 kΩ. The signal was digitized at a 500 Hz sampling rate, stored, and analyzed off-line. The signal was re-referenced off-line to the average mastoid reference for the assessment of the P300 component and to the midline central electrode (Cz) reference for the assessment of the N240 component. The Cz reference was used because, similar to the previously described face-specific N170 (Sagiv and Bentin 2001), this component peaks in lateral posterior areas of the scalp, and the use of a mastoid reference would lead to its substantial attenuation due to the proximity of the reference to the putative source of this wave in the superior temporal sulcus (Henson et al. 2003). Vertical and horizontal EOG were used for ocular artifact correction using a regression-based algorithm (Semlitsch et al. 1986). Individual epochs (−150 to 1,000 ms relative to the stimulus onset) were isolated from the continuous recording, baseline-corrected, and averaged separately for each category of the facial expression change: happy (after neutral), fearful (after neutral), neutral (after emotional), and identity (actor) change (neutral after neutral). It is important to note that the “stimulus onset” was actually a change from the previous to the subsequent image within one refresh cycle of the monitor, rather than the more traditional presentation of the stimulus on a blank screen background. ERP peak detection was performed automatically in the time windows of 200–400 and 150–350 ms for P300 and N240 peaks, respectively, and all averaged waveforms were visually inspected to confirm the quality of peak detection. P300 amplitude was scored relative to the mean baseline value, and N240 was scored relative to the preceding positive peak.
The late positive “P600” potential elicited by the change of face identity (actor) was scored as mean amplitude value in the time window of 450–850 ms due to substantial variability of individual waveforms and difficulty of unambiguous peak detection.
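The scoring rules described above can be illustrated with a minimal sketch. This is not the software used in the study; all function names are hypothetical. It assumes a single averaged waveform stored as a list of amplitudes sampled at 500 Hz over the −150 to 1,000 ms epoch (576 samples), takes the whole pre-stimulus interval as the baseline, and interprets "the preceding positive peak" as the maximum between 0 ms and the N240 trough. That last interpretation is an assumption, since the text does not specify the search window.

```python
from statistics import mean

SFREQ_HZ = 500          # sampling rate reported in the text
EPOCH_START_MS = -150   # epoch start relative to the expression change

def ms_to_index(t_ms):
    """Convert a latency in ms to a sample index within the epoch."""
    return round((t_ms - EPOCH_START_MS) * SFREQ_HZ / 1000)

def baseline_correct(wave):
    """Subtract the mean of the pre-stimulus interval (-150 to 0 ms)."""
    base = mean(wave[:ms_to_index(0)])
    return [v - base for v in wave]

def peak_in_window(wave, start_ms, end_ms, polarity):
    """Return (index, amplitude) of the extreme value in a latency window."""
    lo, hi = ms_to_index(start_ms), ms_to_index(end_ms)
    pick = max if polarity > 0 else min
    idx = pick(range(lo, hi + 1), key=lambda i: wave[i])
    return idx, wave[idx]

def score_p300(wave):
    """Most positive value in 200-400 ms, relative to the mean baseline."""
    return peak_in_window(baseline_correct(wave), 200, 400, +1)[1]

def score_n240(wave):
    """Most negative value in 150-350 ms minus the preceding positive peak.

    Assumption: the preceding positive peak is the maximum between 0 ms
    and the trough. A peak-to-peak score does not depend on the baseline.
    """
    idx, trough = peak_in_window(wave, 150, 350, -1)
    preceding_peak = max(wave[ms_to_index(0):idx + 1])
    return trough - preceding_peak

def score_p600(wave):
    """Mean baseline-corrected amplitude in the 450-850 ms window."""
    corrected = baseline_correct(wave)
    return mean(corrected[ms_to_index(450):ms_to_index(850) + 1])
```

In practice, automatic scores of this kind would still be checked against the plotted waveforms, as the text notes.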

Statistical analysis

Repeated measures general linear models (GLM) were used to evaluate the effects of different types of expression change on the ERP components, as well as to examine topographical scalp differences. This analysis included repeated-measures factors Expression with 3 levels (neutral, happy, fearful) and Laterality with 5 levels representing the parietal chain of electrodes (from the left to the right: P7, P3, Pz, P4, and P8). We tested for the main effect of each of these factors and their interaction. Post-hoc pair-wise comparisons included Sidak correction to protect from Type I error.
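For readers unfamiliar with the Sidak correction, the following sketch shows the standard formulas: the per-comparison significance threshold is 1 − (1 − α)^(1/m), and the adjusted P value is 1 − (1 − p)^m, where m is the number of comparisons. The choice of m = 3 (all pairwise contrasts among three expression levels) and α = 0.05 is an illustrative assumption, and the function names are hypothetical.

```python
def sidak_alpha(alpha: float, n_comparisons: int) -> float:
    """Per-comparison threshold that keeps the family-wise error at alpha."""
    return 1 - (1 - alpha) ** (1 / n_comparisons)

def sidak_adjust(p_value: float, n_comparisons: int) -> float:
    """Sidak-adjusted P value for one of n_comparisons tests."""
    return 1 - (1 - p_value) ** n_comparisons

# Three pairwise contrasts: neutral vs happy, neutral vs fearful, happy vs fearful.
N_COMPARISONS = 3
print(f"per-comparison alpha: {sidak_alpha(0.05, N_COMPARISONS):.5f}")
print(f"adjusted P for raw P = 0.01: {sidak_adjust(0.01, N_COMPARISONS):.5f}")
```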

To estimate the relative contribution of genetic (heritability) and environmental sources to the total phenotypic variance of ERP, we used the model-fitting approach to genetic analysis of twin data (Neale and Cardon 1992; Rijsdijk and Sham 2002). Linear structural equation models were fitted to variance–covariance matrices computed separately for MZ and DZ twins using the Mx program (Neale et al. 2002). These models assume that phenotypic variance arises from the following factors: additive genetic influences (A), non-additive genetic influences (D) or environmental influences shared by family members (C), and individually unique (unshared) environmental influences (E). Path coefficients corresponding to these factors were estimated using a maximum likelihood method, and a χ2 statistic was used to assess the goodness-of-fit of each model, where low χ2 values indicate a good fit. For individual variables, we fit an ADE or ACE model depending on which model was suggested by the relative size of the MZ and DZ correlations (ADE model if rMZ > 2rDZ and ACE model if rMZ < 2rDZ). The significance of the C or D path was tested by comparing the goodness of fit of the reduced (AE) model with that of the ACE or ADE model, respectively. If dropping a path significantly reduced the goodness of fit (the χ2 difference was significant), the path was retained in the model; otherwise, the more parsimonious model was chosen (i.e. the one that accounted for the variance equally well, but with fewer parameters). In addition, different models were compared using Akaike’s information criterion (AIC, computed as χ2 − 2df), which provides a combined measure of goodness-of-fit and parsimony of a given model. The model with the lower AIC was considered better fitting. Although our sample included both male and female twins, we did not have sufficient power to fit “sex-limitation” models due to the small number of opposite-sex pairs.
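The model-selection logic described above can be sketched in a few lines. This is an illustration only: the study estimated path coefficients by maximum likelihood in Mx, whereas the variance components below are rough correlation-based (Falconer-type) values that follow from the standard twin-model expectations (rMZ = A + C and rDZ = A/2 + C for ACE; rMZ = A + D and rDZ = A/2 + D/4 for ADE). The function names, the input correlations, and the χ2 values are hypothetical, and the nested-model test is restricted to dropping a single parameter, for which the χ2 critical value at α = 0.05 with 1 df is 3.841.

```python
CHI2_CRIT_1DF_05 = 3.841  # chi-square critical value, 1 df, alpha = 0.05

def choose_full_model(r_mz: float, r_dz: float) -> str:
    """ADE if the MZ correlation exceeds twice the DZ correlation, else ACE."""
    return "ADE" if r_mz > 2 * r_dz else "ACE"

def rough_components(r_mz: float, r_dz: float) -> dict:
    """Correlation-based variance components (not maximum-likelihood fits)."""
    if choose_full_model(r_mz, r_dz) == "ADE":
        return {"A": 4 * r_dz - r_mz, "D": 2 * r_mz - 4 * r_dz, "E": 1 - r_mz}
    return {"A": 2 * (r_mz - r_dz), "C": 2 * r_dz - r_mz, "E": 1 - r_mz}

def aic(chi2: float, df: int) -> float:
    """AIC as defined in the text: chi-square minus twice the degrees of freedom."""
    return chi2 - 2 * df

def prefer_reduced(chi2_full, df_full, chi2_reduced, df_reduced) -> bool:
    """True if dropping one path does not significantly worsen the fit."""
    if df_reduced - df_full != 1:
        raise ValueError("this sketch only handles a 1-df model comparison")
    return (chi2_reduced - chi2_full) < CHI2_CRIT_1DF_05

# Hypothetical twin correlations, not taken from the study.
print(choose_full_model(0.60, 0.25), rough_components(0.60, 0.25))
print(choose_full_model(0.60, 0.35), rough_components(0.60, 0.35))
# Hypothetical fit statistics for a full (ACE) and a reduced (AE) model.
print(prefer_reduced(4.2, 3, 5.0, 4), aic(4.2, 3), aic(5.0, 4))
```

With these made-up fit statistics, the χ2 difference (0.8) is not significant and the AE model also has the lower AIC, so both criteria would favor the more parsimonious model.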

Results

General effects of facial expression

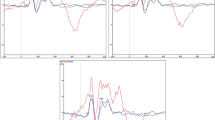

Changes of facial expression elicited electrocortical responses with two distinct components that were sensitive to the expression categories, N240 and P300 (Fig. 2). The first component is a negative-going wave with a right temporoparietal (P8) maximum peaking at about 240 ms after the expression change. This component is most pronounced with a Cz reference and resembles the classical face-specific N170 wave with maximum amplitude in temporoparietal regions, but has a longer latency. Because both ERP components were most distinct over posterior areas of the scalp, consistent with previous studies of face-related ERPs (Posamentier and Abdi 2003; Leppanen and Nelson 2009), statistical analyses were restricted to parietal regions of the scalp. RM ANOVA showed a highly significant effect of Expression (F 2,392 = 116.6, P < 0.001) on the N240 component, indicating that both happy and fearful expressions produced larger responses compared to the neutral expressions (both contrasts are highly significant, P < 0.001) and that happy expressions produced larger responses compared to fearful expressions (P < 0.001). The effect of Laterality was also significant (F 2,784 = 179.1, P < 0.001), indicating that N240 components were larger at lateral than central locations. A significant Expression by Laterality interaction effect (F 8,1568 = 74.6, P < 0.001) indicated that the differences between emotional expressions were the largest at both lateral parietal locations. Furthermore, the right parietal location (P8) showed larger N240 amplitude compared to the left parietal location (within-subject contrast: F 1,196 = 7.24, P < 0.01). The N240 amplitude at the right lateral parietal site (P8) was used in subsequent genetic analyses (Fig. 2a).

Fig. 2 Event-related brain potentials (ERPs) elicited by changes of facial expression and changes in facial identity (actor). a Grand-averaged waveforms of the N240 component at the right temporoparietal site (P8, referenced to Cz). b N240 amplitudes for facial expression and identity changes (note that the average amplitude of individual subjects’ peak values is not equal to the peak amplitude of the grand-averaged waveform due to inter-subject variation in peak latency). c Grand-averaged waveforms of the P300 component at the midparietal recording site (average mastoid reference). d Average peak amplitudes of the P300 component elicited by change in facial expression and identity at parietal midline location (Pz)

The second component that was sensitive to the expression categories is a positive-going P300 wave that is broadly distributed over the scalp, has a centroparietal midline maximum, and peaks at about 300 ms. The main effect of Expression was highly significant (F 2,392 = 380.9, P < 0.001), indicating that happy and fearful expressions elicited dramatically larger responses compared to the neutral expression (Fig. 2c). There also was a modest but significant difference between happy and fearful expressions (within-subject contrast: F 1,196 = 5.0, P < 0.05). The effect of Laterality was highly significant (F 2,784 = 37.8, P < 0.001), indicating that the amplitude of the P300 component was generally larger at midline than lateral locations and in the right hemisphere compared to the left hemisphere. A significant Expression by Laterality interaction effect (F 8,1568 = 34.7, P < 0.001) indicated that the P300 component best discriminated between emotional expressions at the midline parietal location (Pz). This location was used in the subsequent genetic analyses.

In addition, the change of face identity (posing actor) elicited a distinct waveform with a markedly lateralized late positive wave peaking at a latency of about 600 ms (P7−P8 difference: t = 6.52, df = 102, P < 0.001).

Genetic analyses

The goodness of fit for different genetic models is presented in Table 1. Comparison of AE models with ADE and ACE models showed that including additional parameters in the model (D or C, respectively) did not lead to a significant improvement of model fit. Therefore, results are presented for the most parsimonious AE model, which showed smaller AIC values relative to the ADE and ACE models.

Genetic analyses showed that a substantial portion of individual variability in ERP components (28–64%) can be attributed to genetic factors (Table 2). Both N240 and P300 components elicited by changes in facial expression showed high heritability, whereas the P600 component evoked by the change of face identity showed only modest heritability.

Discussion

This study provides the first evidence for heritability of individual differences in brain activation associated with processing of facial affect. A substantial portion of individual variability (36–64%) in the ERP components elicited by changes in facial expression was accounted for by genetic factors, suggesting that these ERP components may be indicative of genetically transmitted differences in the neural substrates of social cognition. The magnitude of heritability was comparable for different kinds of expression change (neutral to emotional versus emotional to neutral) despite the differences in mean amplitude values, suggesting that genetic factors influencing individual differences in sensitivity to facial expression may be relatively non-specific.

Changes in facial expression and changes in face identity evoked distinct electrocortical responses. Consistent with previous ERP and neuroimaging literature (Posamentier and Abdi 2003), there is a significant hemispheric asymmetry in all studied ERP face-related components, suggesting a right hemispheric advantage for processing of facial affect. This lateralization was particularly striking for the P600 component elicited by the change of facial identity.

The ERP components identified in this study are distinct from previously described face-related brain potentials. In previous ERP studies of face perception, stimuli were typically presented at certain time intervals (usually 1–2 s), with a blank screen preceding the onset of the facial image. In this mode of presentation, neural activity related to processing of facial expression may overlap with more general sensory responses to the onset of a visual stimulus, structural encoding, stimulus categorization, and other processes. The present study used a continuous stimulus presentation procedure with instantaneous changes in facial expression in order to isolate neural activity specifically associated with the processing of facial affect. The negative N240 potential identified in the present study resembles the “classic” N170 (Sagiv and Bentin 2001) with respect to its topographical distribution, but has a longer latency. N170 is considered to be face-specific and is thought to reflect structural encoding of the face, while its sensitivity to emotional expression is a matter of debate. In our experiment, we did not observe the classic N170, probably because the structural encoding processes were minimally engaged when faces were displayed in a continuous mode. Thus, the N240 may rather reflect the process of “restructuring” of the neural representation of facial features specific to different emotional expressions. However, this processing may be subserved by neural substrates largely overlapping with those of initial structural encoding. The P300 potential corresponds to the processing stage at which the knowledge of the emotion signaled by the face is believed to be formed (Adolphs 2002).

One important question is whether the ERPs described in the present study reflect changes in emotional expression or just physical changes in the facial image. In general, any change in the visual image can elicit an ERP response, regardless of the image content. However, this simplified explanation can be ruled out because responses elicited by the change from neutral to emotional (happy or fearful) expression differed dramatically (and highly significantly) from responses elicited by the change from emotional to neutral expression, despite the fact that the amount of physical change in the image was identical. Furthermore, changes in face identity that always occurred during a neutral expression evoked a waveform that was quite distinct from ERPs related to expression change with respect to both scalp topography and morphology of the waveform. These findings are consistent with the literature suggesting that face identification and emotion recognition are subserved by dissociable neural networks (reviewed in Posamentier and Abdi 2003; Vuilleumier and Pourtois 2007). It should be noted that in the present task both expression and identity discrimination were largely automatic, since no explicit instruction was given to the subjects regarding these stimulus attributes.

The results of the genetic analysis suggest substantial heritability of individual differences in neural substrates of facial affect processing in early adolescence. This finding may have important implications for the investigation of neurobiological and genetic underpinnings of a range of neuropsychiatric disorders characterized by disturbances of social communication and behavior. There is a growing body of evidence from behavioral, electrophysiological, and neuroimaging studies of psychiatric disorders suggesting that socialization deficits are associated with impaired processing of facial emotional cues. This is particularly important for autism spectrum disorders, since abnormal social cognition, including disturbed neural processing of facial information, is increasingly recognized as one of the core deficits in these disorders (Dawson et al. 2005; Webb et al. 2006; Jeste and Nelson 2009). Abnormalities in facial affect processing have also been reported in other disorders. For example, a recent meta-analysis revealed a large deficit in the perception of facial emotion in patients with schizophrenia relative to healthy participants that was associated with clinical symptoms and social functioning (Kohler et al. 2009). Adolescents with ADHD showed deficits in identifying threat-related emotional expressions (anger and fear), as well as significantly reduced face-related ERP components (Williams et al. 2008). About 9% of men in the general population exhibit a significant deficit in recognizing fearful expressions, and this deficit has been shown to predict poor performance on a “theory of mind” task, a measure of social cognition (Corden et al. 2006). Consistent with this finding, a functional imaging study has shown that adolescents with conduct disorder and callous-unemotional traits characterized by reduced empathy and emotional response showed reduced amygdala activation to fearful faces (Marsh et al. 2008).
These and other findings suggest that individuals with antisocial and psychopathic traits show a specific deficit in recognizing fearful expression (Marsh and Blair 2008). In contrast, adolescents at risk for major depression showed increased amygdala and nucleus accumbens activation to fearful faces and reduced activation to happy faces (Monk et al. 2008). Taken together, this evidence suggests that abnormal processing of facial emotion may be an important component of liability to a range of mental disorders. Importantly, the specific nature of the deficit such as underactivation versus overactivation of the same neural circuitry may contribute to different forms of psychopathology.

Given the present findings of high heritability of individual differences in brain responses to facial affect, it can be hypothesized that ERP components elicited by facial expressions can serve as endophenotypes for genetic studies of psychiatric disorders characterized by abnormal social behavior. It is important to note that validation of a new endophenotype requires additional evidence. First, behavioral correlates of these heritable ERP characteristics should be established including both normal traits such as personality and diagnostic phenotypes including specific diagnoses, symptom-complexes, and individual symptoms. Next, it needs to be investigated whether unaffected relatives of the probands show similar deviations in ERP responses. Also, test–retest reliability and developmental stability of individual differences in face-related ERP responses should be confirmed.

Several limitations of the present study have to be acknowledged. First, although the sample was quite large for a laboratory ERP study, it is very modest by the standards of biometrical genetics. Shared environmental (C) and dominant genetic (D) paths could be dropped without significant reduction of the goodness-of-fit; however, this may be in part due to the limited power to differentiate between the full (ACE or ADE) and reduced (AE) models. It is possible that shared environmental or non-additive genetic effects could make a significant contribution in a larger sample. In addition, due to the small number of opposite-sex DZ twin pairs, we did not have sufficient power to test sex-limitation models allowing for sex differences in genetic architecture. Therefore, the present study provides only a crude estimate of the strength of genetic influences, rather than a detailed characterization of the genetic and environmental architecture of the ERP traits. Second, neural substrates underlying the variation of ERP components still need to be clarified in future studies, e.g. by examining covariation between ERP components and fMRI activations obtained in the same individuals using the same task. This approach has been successfully implemented in a recent study using a combination of ERP and fMRI methods that reported correlations of >0.8 between specific ERP components in a Go-NoGo task and BOLD signal (Ford et al. 2004).

In conclusion, this study provides the first evidence for heritability of neuroelectric indicators of face processing and suggests that ERP components sensitive to emotional expressions can potentially serve as endophenotypes for psychopathology characterized by abnormalities in social cognition and behavior.

References

Adolphs R (2002) Neural systems for recognizing emotion. Curr Opin Neurobiol 12:169–177

Bediou B, Henaff MA, Bertrand O, Brunelin J, d’Amato T, Saoud M, Krolak-Salmon P (2007) Impaired fronto-temporal processing of emotion in schizophrenia. Neurophysiol Clin 37:77–87

Corden B, Critchley HD, Skuse D, Dolan RJ (2006) Fear recognition ability predicts differences in social cognitive and neural functioning in men. J Cogn Neurosci 18:889–897

Criado JR, Ehlers CL (2007) Electrophysiological responses to affective stimuli in Southwest California Indians: relationship to alcohol dependence. J Stud Alcohol Drugs 68:813–823

Dawson G, Webb SJ, McPartland J (2005) Understanding the nature of face processing impairment in autism: insights from behavioral and electrophysiological studies. Dev Neuropsychol 27:403–424

Ekman P (1976) Pictures of facial affect. Consulting Psychologists Press, Palo Alto

Ford JM, Gray M, Whitfield SL, Turken AU, Glover G, Faustman WO, Mathalon DH (2004) Acquiring and inhibiting prepotent responses in schizophrenia: event-related brain potentials and functional magnetic resonance imaging. Arch Gen Psychiatry 61:119–129

Gottesman II, Gould TD (2003) The endophenotype concept in psychiatry: etymology and strategic intentions. Am J Psychiatry 160:636–645

Grossmann T, Johnson MH (2007) The development of the social brain in human infancy. Eur J Neurosci 25:909–919

Haxby JV, Hoffman EA, Gobbini MI (2002) Human neural systems for face recognition and social communication. Biol Psychiatry 51:59–67

Henson RN, Goshen-Gottstein Y, Ganel T, Otten LJ, Quayle A, Rugg MD (2003) Electrophysiological and haemodynamic correlates of face perception, recognition and priming. Cereb Cortex 13:793–805

Jeste SS, Nelson CA 3rd (2009) Event related potentials in the understanding of autism spectrum disorders: an analytical review. J Autism Dev Disord 39:495–510

Johnston PJ, Stojanov W, Devir H, Schall U (2005) Functional MRI of facial emotion recognition deficits in schizophrenia and their electrophysiological correlates. Eur J Neurosci 22:1221–1232

Kasriel J, Eaves L (1976) The zygosity of twins: further evidence on the agreement between diagnosis by blood groups and written questionnaires. J Biosoc Sci 8:263–266

Kohler CG, Turner TH, Bilker WB, Brensinger CM, Siegel SJ, Kanes SJ, Gur RE, Gur RC (2003) Facial emotion recognition in schizophrenia: intensity effects and error pattern. Am J Psychiatry 160:1768–1774

Kohler CG, Walker JB, Martin EA, Healey KM, Moberg PJ (2009) Facial emotion perception in schizophrenia: a meta-analytic review. Schizophr Bull [Epub ahead of print]

Leppanen JM, Nelson CA (2009) Tuning the developing brain to social signals of emotions. Nat Rev Neurosci 10:37–47

Liu J, Harris A, Kanwisher N (2002) Stages of processing in face perception: an MEG study. Nat Neurosci 5:910–916

Mandal MK, Ambady N (2004) Laterality of facial expressions of emotion: universal and culture-specific influences. Behav Neurol 15:23–34

Marsh AA, Blair RJ (2008) Deficits in facial affect recognition among antisocial populations: a meta-analysis. Neurosci Biobehav Rev 32:454–465

Marsh AA, Finger EC, Mitchell DG, Reid ME, Sims C, Kosson DS, Towbin KE, Leibenluft E, Pine DS, Blair RJ (2008) Reduced amygdala response to fearful expressions in children and adolescents with callous-unemotional traits and disruptive behavior disorders. Am J Psychiatry 165:712–720

McPartland J, Dawson G, Webb SJ, Panagiotides H, Carver LJ (2004) Event-related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. J Child Psychol Psychiatry 45:1235–1245

Monk CS, Klein RG, Telzer EH, Schroth EA, Mannuzza S, Moulton JL 3rd, Guardino M, Masten CL, McClure-Tone EB, Fromm S, Blair RJ, Pine DS, Ernst M (2008) Amygdala and nucleus accumbens activation to emotional facial expressions in children and adolescents at risk for major depression. Am J Psychiatry 165:90–98

Neale MC, Cardon LR (1992) Methodology for genetic studies of twins and families, vol 67. Kluwer Academic Publishers, Dordrecht

Neale MC, Boker SM, Xie G, Maes HH (2002) Mx: statistical modeling, 6th edn. Department of Psychiatry, Richmond

Posamentier MT, Abdi H (2003) Processing faces and facial expressions. Neuropsychol Rev 13:113–143

Rijsdijk FV, Sham PC (2002) Analytic approaches to twin data using structural equation models. Brief Bioinform 3:119–133

Sagiv N, Bentin S (2001) Structural encoding of human and schematic faces: holistic and part-based processes. J Cogn Neurosci 13:937–951

Schneider F, Gur RC, Koch K, Backes V, Amunts K, Shah NJ, Bilker W, Gur RE, Habel U (2006) Impairment in the specificity of emotion processing in schizophrenia. Am J Psychiatry 163:442–447

Semlitsch HV, Anderer P, Schuster P, Presslich O (1986) A solution for reliable and valid reduction of ocular artifacts, applied to the P300 ERP. Psychophysiology 23:695–703

Tate AJ, Fischer H, Leigh AE, Kendrick KM (2006) Behavioural and neurophysiological evidence for face identity and face emotion processing in animals. Philos Trans R Soc Lond B Biol Sci 361:2155–2172

Turetsky BI, Kohler CG, Indersmitten T, Bhati MT, Charbonnier D, Gur RC (2007) Facial emotion recognition in schizophrenia: when and why does it go awry? Schizophr Res 94:253–263

Vogel F (1981) Neurobiological approaches in human behavior genetics. Behav Genet 11:87–102

Vuilleumier P, Pourtois G (2007) Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia 45:174–194

Webb SJ, Dawson G, Bernier R, Panagiotides H (2006) ERP evidence of atypical face processing in young children with autism. J Autism Dev Disord 36:881–890

Williams LM, Hermens DF, Palmer D, Kohn M, Clarke S, Keage H, Clark CR, Gordon E (2008) Misinterpreting emotional expressions in attention-deficit/hyperactivity disorder: evidence for a neural marker and stimulant effects. Biol Psychiatry 63:917–926

Wong TK, Fung PC, Chua SE, McAlonan GM (2008) Abnormal spatiotemporal processing of emotional facial expressions in childhood autism: dipole source analysis of event-related potentials. Eur J Neurosci 28:407–416

Acknowledgments

This work was supported by the grant DA018899 from the National Institute on Drug Abuse.

Additional information

Edited by Kristen Jacobson.

About this article

Cite this article

Anokhin, A.P., Golosheykin, S. & Heath, A.C. Heritability of Individual Differences in Cortical Processing of Facial Affect. Behav Genet 40, 178–185 (2010). https://doi.org/10.1007/s10519-010-9337-1

DOI: https://doi.org/10.1007/s10519-010-9337-1