Abstract

The application of Latin Hypercube Sampling (LHS) and Monte Carlo (MC) sampling techniques to ground motion selection is investigated. LHS works by first stratifying the domain of a probability distribution into multiple equally spaced, non-overlapping stripes and then drawing samples from those stripes through random permutation. To examine the efficiency of these two sampling methods, a set of conditional multivariate distributions was fit to an intensity measure vector based on a single, two, or average of more-than-two (average) conditioning intensity measures. Both methods were then used to draw samples from the conditional multivariate distributions, and LHS demonstrated superiority over MC for the same number of realization samples. Accordingly, LHS was utilized as an underlying piece of a broader ground motion selection framework to facilitate the selection of a number of ground motion suites based on different methods of conditioning. Using the selected suites, response history and subsequent damage/loss analyses were conducted on a generic 4-story non-ductile reinforced concrete building. The outcomes of these latter studies demonstrated that the ground motion suite selected based on an average-intensity-measure conditioning criterion performed better than those selected through single- and two-intensity-measure conditioning criteria.

1 Introduction

The generalized conditional intensity measure (GCIM) methodology originally developed by Bradley (2011) is arguably the most comprehensive method for ground motion selection. As such, ground motion suites selected using this method are known to meet the sufficiency and efficiency criteria with respect to capturing the true seismic behavior of structures across multiple modes of vibration. In this method, it is common to first assemble an intensity measure vector consisting of several intensity measures (\(IM_{i}^{{\prime }} s\)) in order to capture various characteristics of an earthquake during the record selection phase. Each intensity measure in that vector represents a specific content of an earthquake ground motion record. For instance, spectral acceleration at a given period (SAT) represents the amplitude and frequency content at that period, Arias Intensity (AI) and Cumulative Absolute Velocity (CAV) each represent the cumulative content, and Significant Duration (Ds575 or Ds595) represents the duration content. In GCIM, a multivariate distribution is fit to the \(IM_{i}\) vector. Subsequently, any number of target realization samples associated with each \(IM_{i}\) in the vector are drawn. Finally, a ground motion record database is searched to find records whose \(IM_{i}^{{\prime }} s\) match those of the target realization samples, which enforces hazard consistency.

One of the primary issues in the GCIM approach is the utilization of a reliable sampling technique for drawing an adequate number of samples from a conditional multivariate distribution of various intensity measures. To this end, this work is organized to (1) devise two different sampling methods, namely the Latin Hypercube Sampling (LHS) and Monte Carlo (MC) for the purpose of drawing realization samples from a target conditional multivariate distribution of various \(IM_{i}^{{\prime }} s\), (2) compare the performance of each of these methods in a real ground motion selection exercise based on single, two and average of more-than-two (average) conditioning intensity measure criteria developed by Ghotbi and Taciroglu (2020a), (3) utilize the selected ground motion suites to perform a series of damage/loss assessments on a generic 4-story moment frame non-ductile reinforced concrete building to examine the differences in estimated damage and losses due to use of different methods of conditioning.

1.1 Background

The application of Latin Hypercube and Monte Carlo sampling techniques is explored herein for drawing realization samples from a conditional multivariate distribution of various \(IM_{i}^{{\prime }} s\). Although MC is widely used in the field of ground motion selection (Bradley 2010, 2012; Tarbali and Bradley 2015, 2016; Tarbali et al. 2018), LHS applications are scarce. This section therefore reviews some of the prior works in which these two methods have been applied.

Vorechovsky and Novák (2009) combined MC and LHS in order to draw a smaller number of samples from a multivariate distribution of various variables. The samples were drawn such that their empirical distributions closely match the theoretical target distributions, while preserving the correlation structure among all variables with respect to the theoretical covariance matrix. Dolsek (2009) utilized LHS to draw realization samples from the PDF of various structural modeling parameters in order to consider the epistemic variability in those parameters, in addition to the variability in ground motions, in a set of incremental dynamic analyses. Choun and Elnashai (2010) utilized a simplified method based on modifying the quantile arithmetic methodology and compared it with MC, in order to consider the epistemic variability associated with different parameters involved in seismic loss assessment of structures. Zhongxian et al. (2014) studied the effects of variability in ground motions and some of the structural modeling parameters on the probabilistic seismic responses of bridges. They used LHS to consider the effects of uncertainty in structural modeling parameters. Decò and Frangopol (2013) used LHS to generate random earthquakes for the purpose of life-cycle risk assessment of bridges. Celarec and Dolšek (2013) studied the effects of variability in structural modeling parameters on the probabilistic seismic risk of reinforced concrete (RC) structures by using the first-order-second-moment (FOSM) reliability method combined with LHS.

Decò et al. (2013) utilized MC and LHS to consider the effects of uncertainty associated with the expected damage, restoration process, and rehabilitation costs in resilience-based seismic assessment of bridges. Kosič et al. (2014) studied the probabilistic response of RC structures using a single-degree-of-freedom (SDOF) structural system instead of modeling the entire structure. They used LHS to consider the effects of structural modeling parameters and used a suite of ground motion records in order to incorporate the effects of record-to-record variability in ground motions. Vamvatsikos and Fragiadakis (2010) performed an incremental dynamic analysis (IDA) on a 9-story moment frame steel structure considering the effects of epistemic variability in structural modeling parameters and the variability in ground motion earthquake records. They used different methods such as MC mixed with LHS as well as the FOSM method to consider the effects of the aforementioned variability.

Bucher (2009) used MC mixed with LHS for the purpose of optimization and design of seismic isolation devices to be incorporated into various structural systems. Pan et al. (2007) utilized LHS and a restricted pairing approach to consider the uncertainty in various modeling parameters of steel bridges. They also considered the simultaneous effects of variability in ground motion records and studied the corresponding impacts of both sources of uncertainty on the seismic demand fragilities for various components of the bridges. Tubaldi et al. (2012) utilized MC mixed with LHS for the purpose of uncertainty propagation into the structural models in order to perform seismic damage assessment on multi-span continuous bridges with dissipative piers and a steel–concrete composite deck. Finally, Vamvatsikos (2014a,b) adopted MC mixed with LHS to incorporate the effects of uncertainty in structural modeling parameters on the IDA, which was used to assess the seismic responses of various structures given multiple damage limit states.

As demonstrated by the review provided herein, the application of LHS in the field of ground motion selection, with the main objective of generating hazard-consistent target realizations sampled from a theoretical multivariate distribution, namely the GCIM, is quite scarce. As such, the present study utilizes LHS to select a number of ground motion suites based on different methods of conditioning. These suites are then used to perform a number of seismic damage/loss assessments on a generic 4-story moment frame non-ductile reinforced concrete building to explore the distinct effects due to different methods of conditioning. The underlying framework to develop GCIM-based target distributions using a variety of conditioning approaches is addressed in Ghotbi (2018) and Ghotbi and Taciroglu (2020a), and the details of the GCIM-based approach are omitted here for brevity.

2 Monte Carlo sampling technique

This section develops an approach in which the MC sampling technique is utilized to draw realization samples from a conditional multivariate distribution fit to an \(IM_{i}\) vector, given the methodologies developed in Ghotbi and Taciroglu (2020a). To this end and following Bradley (2012), a two-level approach is adopted to draw realization samples of each \(IM_{i}\) from the conditional multivariate distributions of various \(IM_{i}^{{\prime }} s\), which were defined in Ghotbi and Taciroglu (2020a) based on single-, two-, and \(Avg{-}IM_{j}\) (average of more-than-two \(IM_{j}^{{\prime }} s\)) conditioning criteria. First, a random rupture (\(Rup^{nsim}\)) is obtained from a disaggregation density function. Then, to draw samples from a multivariate distribution, an uncorrelated standard normal random vector (\(u^{nsim}\)) is defined, whose elements are drawn independently from a standard normal distribution. Using this vector, a correlated vector can be defined as

where L is the Cholesky decomposition of the correlation matrix, which is

Using this, the realization sample for each \(IM_{i}\) can be obtained via

where \(v_{i}^{nsim} = v^{nsim} \left( i \right)\) is the i-th element in the \(v^{nsim}\) vector, and \(Rup = Rup^{nsim}\).
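As a minimal sketch of the two-level procedure described in this section, the following Python snippet draws a rupture from a discrete disaggregation distribution, generates an uncorrelated standard normal vector, correlates it through the lower-triangular Cholesky factor of the correlation matrix (assumed here as \(v^{nsim} = L u^{nsim}\) with \(\rho = L L^{T}\)), and maps the result to IM realizations. The lognormal mapping \(\ln IM_{i}^{nsim} = \mu_{i} + \sigma_{i} v_{i}^{nsim}\), the use of a single correlation matrix for all ruptures, the function names, and all numerical values are assumptions made for illustration; they are not taken from this study.

```python
import math
import random


def cholesky(a):
    """Lower-triangular L such that L * L^T = a (a must be symmetric PD)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def mc_realizations(ruptures, corr, n_sim, rng):
    """Two-level MC: sample a rupture, then a correlated lognormal IM vector.

    ruptures: list of (weight, mu_ln, sigma_ln) tuples; the weights play the
    role of disaggregation probabilities (hypothetical data structure).
    """
    low = cholesky(corr)
    weights = [w for w, _, _ in ruptures]
    samples = []
    for _ in range(n_sim):
        # Level 1: random rupture drawn from the disaggregation distribution.
        _, mu_ln, sigma_ln = rng.choices(ruptures, weights=weights, k=1)[0]
        # Level 2: independent standard normals u, correlated as v = L u.
        u = [rng.gauss(0.0, 1.0) for _ in mu_ln]
        v = [sum(low[i][k] * u[k] for k in range(i + 1)) for i in range(len(u))]
        # Assumed lognormal marginals: ln(IM_i) = mu_i + sigma_i * v_i.
        samples.append(
            [math.exp(m + s * vi) for m, s, vi in zip(mu_ln, sigma_ln, v)]
        )
    return samples


# Hypothetical two-IM example with two rupture scenarios.
corr = [[1.0, 0.6], [0.6, 1.0]]
ruptures = [
    (0.7, [math.log(0.30), math.log(0.80)], [0.55, 0.40]),
    (0.3, [math.log(0.45), math.log(1.10)], [0.60, 0.45]),
]
realizations = mc_realizations(ruptures, corr, n_sim=30, rng=random.Random(1))
```

Each row of `realizations` is one simulated IM vector. Because the standard normals are drawn without stratification, nothing forces the 30 samples to cover the tails of each marginal distribution.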

3 Latin hypercube sampling technique

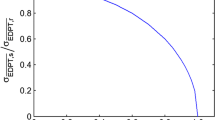

The Latin hypercube sampling (LHS) can be applied through a stratified sampling approach (see Fig. 1). In order to draw realization samples from the distribution of an \(IM_{i}\), its domain is stratified into N equally spaced and non-overlapping intervals. Next, samples are randomly drawn from each interval. By utilizing a random permutation approach, a set of random LHS samples can be obtained. The overall method to draw samples from a theoretical multivariate distribution of multiple \(IM_{i}^{{\prime }} s\) can thus be carried out through the following steps:

Schematic of the stratification of a variable distribution’s domain using LHS (adopted from Vorechovsky and Novák 2009)

1. Sample from the actual marginal distribution of each \(IM_{i}\) using LHS (Zhang and Pinder 2003).

2. Derive the correlation matrix of the sampled realizations of various \(IM_{i}^{{\prime }} s\).

3. Obtain the Cholesky decomposition (L) of the correlation coefficient matrix, which must be a Hermitian positive-definite (PD) matrix (see Eq. 2). If the correlation matrix is not positive-definite, then a method to find the nearest PD matrix can be used (see, e.g., Higham 2002).

4. Add dependency between the independent samples, drawn using LHS, by transforming their governing normal distribution into a uniform distribution (this transformation preserves the dependency between the variables).

5. Map the uniform distribution of each \(IM_{i}\) onto its corresponding probability distribution, as defined by a GMPE.

6. Obtain the correlation structure among the new realization samples and compare it with the original correlation structure of the theoretical multivariate distribution to ensure that the two agree. To enforce this, a stochastic optimization using simulated annealing (Dolsek 2009) is incorporated in order to preserve the correlation structure among the realization samples.
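The steps above can be illustrated with a short Python sketch that uses only the standard library. It draws stratified uniform samples for each variable, induces dependence between them, and maps them to lognormal marginals. Two points are assumptions rather than the procedure of this study: the marginals are taken as lognormal with hypothetical parameters, and the dependence is induced by a simple rank-reordering step (similar in spirit to restricted-pairing schemes) instead of the simulated annealing optimization of step 6. The reordering is chosen here because it leaves each column with exactly one sample per stratum.

```python
import math
import random
from statistics import NormalDist


def lhs_uniform(n, n_vars, rng):
    """Independent LHS columns on [0, 1): one point per equal-width stratum."""
    cols = []
    for _ in range(n_vars):
        col = [(k + rng.random()) / n for k in range(n)]
        rng.shuffle(col)  # random permutation of the strata
        cols.append(col)
    return cols


def cholesky(a):
    """Lower-triangular L such that L * L^T = a (a must be symmetric PD)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def correlate_by_ranks(cols, corr, rng):
    """Reorder each LHS column so its ranks follow correlated normal scores."""
    n, m = len(cols[0]), len(cols)
    low = cholesky(corr)
    scores = []  # one correlated standard normal vector (v = L u) per sample
    for _ in range(n):
        u = [rng.gauss(0.0, 1.0) for _ in range(m)]
        scores.append([sum(low[i][k] * u[k] for k in range(i + 1)) for i in range(m)])
    out = []
    for j in range(m):
        order = sorted(range(n), key=lambda row: scores[row][j])
        sorted_col = sorted(cols[j])
        new_col = [0.0] * n
        for rank, row in enumerate(order):
            new_col[row] = sorted_col[rank]  # same values, new pairing
        out.append(new_col)
    return out


def to_lognormal(cols, mu_ln, sigma_ln):
    """Map uniform samples to lognormal marginals through the inverse CDF."""
    std = NormalDist()
    eps = 1e-12  # keep the argument of inv_cdf strictly inside (0, 1)
    return [
        [math.exp(m + s * std.inv_cdf(min(max(u, eps), 1.0 - eps))) for u in col]
        for col, m, s in zip(cols, mu_ln, sigma_ln)
    ]


# Hypothetical two-IM example with 30 realizations.
rng = random.Random(7)
target_corr = [[1.0, 0.7], [0.7, 1.0]]
uniform_cols = correlate_by_ranks(lhs_uniform(30, 2, rng), target_corr, rng)
im_samples = to_lognormal(uniform_cols, [math.log(0.3), math.log(0.8)], [0.55, 0.40])
```

In this sketch the achieved correlation only approximates the target, which is why a subsequent check against the theoretical correlation structure, as in step 6, remains necessary.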

4 Applications

Applications of the methodologies described in the preceding sections are presented here. First, a vector of \(IM_{i}^{{\prime }} s\) needs to be populated. As suggested by Bradley (2012), various intensity measures—such as spectral acceleration at multiple periods (SAT), Arias Intensity (AI), Cumulative Absolute Velocity (CAV), 5–75% significant duration (Ds575) and 5–95% significant duration (Ds595)—will be picked to populate the \(IM_{i}\) vector. For SAT, 21 different periods, identical to those for which the hazard curves are available, have been adopted. The GMPEs to be used to define the moments (i.e., the median and standard deviation) of the distribution of each \(IM_{i}\) in the \(IM_{i}\) vector are adopted from Boore and Atkinson (2008) for SAT, PGA, and PGV, from Campbell and Bozorgnia (2012) for AI, from Campbell and Bozorgnia (2010) for CAV, and from Bommer et al. (2009) for Ds575, and Ds595. For generating the multivariate distributions of \(IM_{i}^{{\prime }} s\) in the \(IM_{i}\) vector, a matrix containing the cross-correlations between the \(IM_{i}^{{\prime }} s\) should be defined in addition to the median and standard deviation of each IMi (for details, see Table 1 in Bradley 2012, and Table 2 in Tarbali and Bradley 2015).

Based on Ghotbi (2018), three different algorithms are utilized to generate hazard-consistent target \(IM_{i}^{{\prime }} s\) based on a multi-conditioning (single-, two-, or \(Avg{-}IM_{j}\)) approach leading to three distinct multivariate distributions of the adopted \(IM_{i}\) vector. The MC and LHS will then be employed to draw 30 realization samples from the marginal distribution of each \(IM_{i}\). To this end, Fig. 2 displays the realization samples (blue curves) for the response spectrum (SAT) conditioned on a set of different \(IM_{j}^{{\prime }} s\). It is also useful to add that LHS was utilized to draw samples from the theoretical distribution of the \(IM_{i}\) vector whose median (see the red solid curves) and 16th/84th percentiles (see the red dashed curves) are also shown in the sub-figures.

Figure 3 shows the comparison between the SAT (Fig. 3a) obtained using MC (shown in blue) and LHS (shown in black) based on a single conditioning approach. Figure 3b–d display the empirical distributions of CAV, AI and Ds595, respectively, which were obtained using MC (shown in blue) and LHS (shown in black), again for a single conditioning (single-\(IM_{j}\)) intensity measure. As noticed from all the graphs, LHS performance is slightly better than MC given the same number of samples, which can be noticed from the extent to which the statistics (Fig. 3a) or the distributions (Fig. 3b–d) of the realization samples match those of the GCIM targets (shown in red). Of course, the MC performance can be improved by increasing the number of samples or repeating the sampling process for a number of times to get better results, but clearly at a higher computational cost.

Figure 4 repeats the comparisons of Fig. 3 for a two-\(IM_{j}\) conditioning approach, with SAT, CAV, Ds575 and Ds595 as the adopted target \(IM_{i}^{{\prime }} s\). Once again, the LHS performance is preferable to that of MC.

The same set of comparisons are made for the case of \(Avg{-}IM_{j}\) (see Fig. 5), which affirms, once again, the superiority of LHS over MC, albeit slightly less so compared to the single- and two-\(IM_{j}\) cases.

In some of the graphs showing the empirical distributions of the realization samples, it is observed that the blue curves obtained using MC (e.g., see Fig. 5c) cross the confidence bounds (dashed curves), meaning that those samples must be rejected. However, none of the cumulative distributions obtained using LHS (see the black curves) demonstrated such unacceptable behavior. The main reason why LHS performed better in the majority of the above cases can be attributed to the stratification strategy in LHS, which made it possible to draw samples from the entire distribution domain. In MC, by contrast, the samples are drawn randomly and, because the number of designated samples is quite low, there is no guarantee that they cover the entire distribution domain uniformly. Again, increasing the number of samples or repeating the MC sampling a number of times should improve the outcomes, but this will result in higher computational costs.
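The coverage argument can be illustrated numerically. The sketch below compares 30 MC samples and 30 LHS samples on the uniform (probability) scale, which is equivalent to comparing against any continuous marginal distribution through its CDF. It assumes that the confidence bounds are of the Kolmogorov–Smirnov (K–S) type at the 5% significance level and approximates the critical value by \(1.36/\sqrt{n}\); both are assumptions for illustration.

```python
import math
import random


def ks_statistic_uniform(u):
    """Max distance between the empirical CDF of u and the Uniform(0, 1) CDF."""
    xs = sorted(u)
    n = len(xs)
    return max(max((i + 1) / n - x, x - i / n) for i, x in enumerate(xs))


def mc_uniform(n, rng):
    """Plain Monte Carlo: n independent uniform draws."""
    return [rng.random() for _ in range(n)]


def lhs_uniform(n, rng):
    """LHS: one draw inside each of n equal-width strata, randomly permuted."""
    pts = [(k + rng.random()) / n for k in range(n)]
    rng.shuffle(pts)
    return pts


n = 30
rng = random.Random(0)
ks_mc = ks_statistic_uniform(mc_uniform(n, rng))
ks_lhs = ks_statistic_uniform(lhs_uniform(n, rng))
ks_critical = 1.36 / math.sqrt(n)  # approximate 5% K-S bound (assumption)
print(f"MC: {ks_mc:.3f}  LHS: {ks_lhs:.3f}  5% bound: {ks_critical:.3f}")
```

Because each LHS sample lies inside its own stratum, the K–S distance of a one-dimensional LHS sample cannot exceed 1/n, so it stays well inside the bound for n = 30. No such limit exists for MC, whose distance varies from one random draw to the next.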

5 Ground motion selection

In this section, ground motion records are selected to match the target realizations drawn using LHS through the procedure laid out in the previous section. In order to select the ground motion records, a hypothetical site in the City of Los Angeles, California (longitude −118.43°, latitude 34.053°) with Vs = 760 m/s and a depth to the 2.5 km/s shear-wave velocity horizon of \({\text{z}}_{2.5} = 1 {\text{km}}\) is chosen. Using the relationships developed in Ghotbi (2018) and Ghotbi and Taciroglu (2020a), different GM suites are selected based on different conditioning criteria, with major emphasis placed on the ground motion spectral content; for example, a 99% importance weight is assigned to SAT-based content during the selection phase (see Ghotbi and Taciroglu 2020a). For the causal parameters, the magnitude range of \({\text{M}} = \left[ {5, 8.5} \right]\), the closest source-to-site distance range of \({\text{R}}_{\text{jb}} = \left[ {0, 100} \right] {\text{km}}\), and the shear wave velocity range of \({\text{V}}_{{{\text{s}}30}} = \left[ {600, 1200} \right] {\text{m/s}}\), corresponding to site class B and upper-C, are adopted. The maximum scale factor is set to 4.5; however, an attempt was made to keep the scale factor (on average) as close to 1.0 as possible in order to leave the original earthquake records largely unaltered (see, e.g., Baker 2015; Miano et al. 2018). Hazard consistency is enforced by considering up to 2000 rupture scenarios and their relative contributions to the different types of conditioning intensity measure.

As an example, a suite of ground motions consisting of 30 bi-directional records selected based on an \(Avg{-}IM_{j}\) conditioning approach is provided here (see Fig. 6). The remaining suites and the associated details are omitted for brevity, but can be found in Ghotbi and Taciroglu (2020a). The PEER NGA-West2 database (Bozorgnia et al. 2014) is searched to select the earthquake records matching the target realization samples. Once a record matching a target realization is selected, it is no longer available in the remainder of the selection process.
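The matching step can be sketched as a greedy search in which each target realization is paired with the closest record that has not yet been used. The misfit measure below (a weighted sum of squared log residuals normalized by a logarithmic standard deviation), the record identifiers, and all numerical values are hypothetical. Amplitude scaling and the cap on the scale factor are left out to keep the example short.

```python
import math


def misfit(target, record, sigma_ln, weights):
    """Weighted sum of squared, normalized log residuals between IM vectors."""
    return sum(
        w * ((math.log(t) - math.log(r)) / s) ** 2
        for t, r, s, w in zip(target, record, sigma_ln, weights)
    )


def select_records(targets, database, sigma_ln, weights):
    """Assign one distinct record to each target realization (greedy)."""
    available = dict(database)  # shallow copy so the caller's dict is untouched
    selected = []
    for target in targets:
        best_id = min(
            available,
            key=lambda rid: misfit(target, available[rid], sigma_ln, weights),
        )
        selected.append(best_id)
        del available[best_id]  # a selected record is not reused
    return selected


# Hypothetical IM vectors ordered as [spectral acceleration, Arias intensity].
database = {
    "rec_A": [0.30, 1.20],
    "rec_B": [0.50, 0.40],
    "rec_C": [0.31, 0.50],
}
targets = [[0.32, 0.45], [0.29, 1.00]]
sigma_ln = [0.5, 0.9]
weights = [0.99, 0.01]  # heavy emphasis on spectral content

selected = select_records(targets, database, sigma_ln, weights)
print(selected)  # ['rec_C', 'rec_A']
```

With a 99% weight on the spectral term, a record can be chosen despite a poor match in the remaining intensity measures, since those residuals contribute very little to the misfit.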

Fig. 6a shows that, given a larger weight assigned to SAT during the selection process, the statistics of the selected records (shown in green) match those of the target (shown in blue). However, as seen in Fig. 6b, the cumulative distribution of a non-SAT intensity measure (e.g., AI), which is shown in black, does not match the target (shown in blue). This rather significant mismatch can be attributed to the smaller weight, which was initially assigned to non-SAT intensity measures.

6 Structural seismic responses

A generic 4-story moment frame non-ductile reinforced concrete (RC) building is modeled and used in nonlinear response history analyses. The degree of non-ductility is defined through the ratio \(V_{p} /V_{n} ,\) where Vp is the shear at probable moment strength, and Vn is the nominal shear strength per ASCE/SEI 41-06 for low ductility demand. Accordingly, \(V_{p} /V_{n}\) is set equal to 1.0. The building's fundamental period of vibration is T1 = 1.30 s. The structure is modeled as a two-dimensional moment frame in OpenSees (McKenna et al. 2000). More details regarding the structural properties can be found in Galanis (2014), and Ghotbi and Taciroglu (2020b).

Figure 7 shows the key elements and material models that are used for simulating the non-ductile behavior (see Elwood 2004); and Fig. 8 displays the schematic of the building. More specifically, Fig. 7a presents the backbone curve that defines the plastic hinge (shown in purple in Fig. 8) behavior based on the work of Ibarra et al. (2005). Bar-slip is implicitly considered through an elastic rotational spring (Fig. 7b). While the axial-flexure interaction is incorporated, the shear-flexure interaction is neglected due to the slenderness of the structural members of this particular building. More useful information regarding the incorporation of axial-shear-flexure interaction in reinforced concrete structural elements can be found in Miano et al. (2019).

Details of the element properties (including the cross-sectional properties and the definitions and assigned values of the plastic hinge parameters for simulating non-ductile behavior) can be found in Galanis (2014). It is also worth noting that, given the high computational cost associated with the nonlinear response history analyses described later, a parallel computing approach was adopted (Ghotbi 2018). As such, all of the analyses were carried out on STAMPEDE2, which presently is the primary supercomputer of the Extreme Science and Engineering Discovery Environment (XSEDE).

6.1 Effects of conditioning intensity measures \(\left( {IM_{j}^{\prime } s} \right)\)

The effects of ground motions selected based on different conditioning approaches on the structural seismic responses are presented in this section. A risk-based point-of-comparison (POC) approach (Kwong 2015) is adopted to develop reference demands, which are then used as the basis for examining the relative efficiency of different GM suites selected based on various conditioning approaches. Details of this procedure are omitted here for brevity, but can be found in the study by Ghotbi and Taciroglu (2020b). Two engineering demand parameters (EDPs), namely the inter-story drift ratio (IDR) and the peak floor acceleration (PFA), are selected for the comparison studies.

Figure 9 shows the statistics (i.e., median and percentiles) of the responses obtained from nonlinear response history analyses. On each plot, the damage thresholds associated with the immediate occupancy (IO), life safety (LS), and collapse prevention (CP) limit states (see FEMA 356) are also shown.

Here, the statistics of the EDPs obtained using the GM suites selected based on single-, two-, and \(Avg{-}IM_{j}\) criteria are compared against the risk-based POC reference demands. In order to compensate for the deficiency of the single conditioning intensity measure approach in capturing the structural responses associated with higher modes of vibration, three distinct GM suites, instead of just one, were selected for the single-\(IM_{j}\) case: one for the upper-bound (2.0T1) and one for the lower-bound (0.20T1) conditioning period (see Kohrangi et al. 2017), in addition to one for 1.0T1. The maximum responses obtained by using all of these suites are plotted (shown in cyan) in addition to the other cases.

Figure 9a,b display the IDR and PFA values. It is evident from these figures that the \(Avg{-}IM_{j}\) case (red curves) is superior, albeit a bit less so for the case of PFA, when the median responses are compared against the POC reference demands (pink curves). The single- (blue curves), and two-\(IM_{j}\) (black curves) and maximum of the set of three single-\(IM_{j}^{\prime } s\) (cyan curves) have all underestimated the drift demand when compared to the POC demand, but performed better when it came to predicting the PFA. The reason for such better performance can be traced back to the higher spectral content of selected GMs at shorter periods. It is also worth adding that the superiority of the \(Avg{-}IM_{j}\) case with respect to capturing the drift demands is likely due to the richer spectral content—across multiple modes of vibration — of earthquake records in that suite.

It is critical to note that the selected records, especially for the case of two-\(IM_{j}\) in terms of spectral matching over the period range of interest, are affected by several restrictions set forth initially with respect to the causal parameters, scaling factors and the availability of ground motion records. As such, the inherently lower likelihood of finding records for the two-\(IM_{j}\) case (whose spectra are pinched at the lower- and upper-bound conditioning periods) may have affected some of the results. This potential shortcoming is avoided with the \(Avg{-}IM_{j}\) approach, which has smoother spectra (Ghotbi and Taciroglu 2020a).

6.2 Seismic loss analyses

In order to examine the effects of various ground motion selection strategies based on different methods of conditioning, a simplified loss assessment on the 4-story moment frame non-ductile RC building is conducted. Figure 10 shows the process of obtaining the cumulative distribution function \(G(dv|dm)\) of seismic losses (e.g., repair cost and time). This task is fully automated and available through a web-based application called the Performance Assessment Calculation Tool (PACT), which is based on the methodologies described in FEMA P-58. Thus, PACT was utilized in this study. To this end, the loss assessment workflow was organized to (1) populate vectors of various engineering demand parameters (EDPs), here IDR and PFA, through seismic response history analyses on the building given different suites of earthquake records (see Sect. 6.1), (2) define various Performance Groups (PGs) and their quantities (Yang et al. 2009; Baradaran Shoraka et al. 2013), each of which is controlled by a certain EDP, (3) define fragilities based on a range of damage limit states (e.g., minor, moderate and major damage) for each performance group and determine the repair cost and time associated with each damage state, and (4) use PACT to generate the loss fragilities.

Fig. 10 Probabilistic procedure for seismic loss assessments (adopted from Yang et al. 2009)

A variety of component fragilities is available in the PACT library. Those applicable to non-ductile reinforced concrete buildings were used here for grouping the structural components into different PGs. A certain EDP governs each PG. For instance, the non-code-conforming beams, columns and joints in each floor comprise a PG that is governed by IDR, and wall partitions form another PG that is also governed by IDR. Some other components, however, are governed by PFA (e.g., chiller, air handling unit, etc.). An example of fragilities based on different damage limit states for a PG (i.e., the beams or joints) is provided in Fig. 11.
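To illustrate how a set of fragilities links an EDP to a repair cost, the sketch below evaluates lognormal fragility curves for three sequential damage states and computes the expected repair cost of a single component at a given drift. The medians, dispersions and costs are hypothetical and are not taken from the PACT library. The calculation is a simplified expectation, whereas PACT relies on a realization-based procedure.

```python
import math
from statistics import NormalDist


def damage_state_probabilities(edp, fragilities):
    """P(DS = k | EDP) from lognormal fragility curves ordered by severity.

    fragilities: list of (median, beta) pairs, one per damage state.
    Returns probabilities for [no damage, DS1, DS2, ...].
    """
    std = NormalDist()
    # Probability of reaching or exceeding each damage state.
    exceed = [std.cdf(math.log(edp / med) / beta) for med, beta in fragilities]
    probs = [1.0 - exceed[0]]
    for k in range(len(exceed)):
        nxt = exceed[k + 1] if k + 1 < len(exceed) else 0.0
        probs.append(exceed[k] - nxt)
    return probs


def expected_repair_cost(edp, fragilities, costs):
    """Expected cost: sum over damage states of probability times repair cost."""
    probs = damage_state_probabilities(edp, fragilities)
    return sum(p * c for p, c in zip(probs[1:], costs))


# Hypothetical IDR-governed component: minor, moderate and major damage.
fragilities = [(0.01, 0.4), (0.02, 0.4), (0.04, 0.4)]  # (median IDR, beta)
costs = [10.0, 40.0, 100.0]  # repair cost per damage state, arbitrary units

for idr in (0.005, 0.01, 0.03):
    print(f"IDR = {idr:.3f}: expected cost = "
          f"{expected_repair_cost(idr, fragilities, costs):.1f}")
```

A common dispersion is used for all damage states so that the fragility curves do not cross, which keeps every damage state probability non-negative.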

In order to increase the accuracy of the seismic loss assessments conducted here, and since the number of EDPs in each EDP vector is bounded by the limited number of response history analyses, the statistical approach described in Yang et al. (2009) is utilized to generate 200 realizations for each EDP vector. To keep the study brief, the collapse state, which would trigger subsequent losses associated with the building’s demolition and replacement, was excluded from the analyses.

Using the information provided above, a seismic loss assessment was conducted using PACT by utilizing three suites of ground motion records selected based on single-\(IM_{j}\), two-\(IM_{j}\), and \(Avg{-}IM_{j}\) conditioning approaches. Figure 12 presents the outcomes, where it can be seen that, for both the repair cost (see Fig. 12a) and repair time (a.k.a. downtime) (see Fig. 12b), the ground motion suite based on the \(Avg{-}IM_{j}\) conditioning approach was able to estimate the losses more accurately. The two other ground motion sets (based on single-\(IM_{j}\) and two-\(IM_{j}\)) appear to have underestimated the losses. The main reason for this can be traced back to the response history analyses (see Sect. 6.1), where it was concluded that the \(Avg{-}IM_{j}\) suite was able to more accurately predict the responses when comparisons were made with respect to a baseline risk-based approach, namely the point-of-comparison (POC). As such, the difference in the responses obtained by using different ground motion suites can be the root cause of the difference observed in the associated seismic loss curves (see Fig. 12). The median repair costs associated with the single-\(IM_{j}\), two-\(IM_{j}\), and \(Avg{-}IM_{j}\) cases are $9.40 M, $10.32 M and $14.40 M, and the median repair times are 318, 340 and 454 days, respectively. The median repair costs and times obtained using the \(Avg{-}IM_{j}\) suite are therefore roughly 34 to 53% higher than those obtained using the other two suites. It is also necessary to add that the conclusions made herein are bounded by various limitations set forth in this study (e.g., building type and modeling, ground motion characteristics, etc.), so more studies would have to be conducted to reach more comprehensive conclusions.
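The relative differences between the reported medians can be checked with a few lines of arithmetic. The snippet below uses only the median repair costs and times quoted above; the function name and the dictionary keys are hypothetical.

```python
costs = {"single": 9.40, "two": 10.32, "avg": 14.40}  # median repair cost, $M
times = {"single": 318, "two": 340, "avg": 454}  # median repair time, days


def relative_differences(values, reference="avg"):
    """For each non-reference case, return a pair of percentages:
    how much the reference exceeds the case, and how much the case
    falls short of the reference."""
    ref = values[reference]
    return {
        case: (100 * (ref / v - 1), 100 * (1 - v / ref))
        for case, v in values.items()
        if case != reference
    }


for name, values in (("cost", costs), ("time", times)):
    for case, (higher, lower) in relative_differences(values).items():
        print(f"{name} / {case}: Avg is {higher:.1f}% higher, "
              f"{case} is {lower:.1f}% lower")
```

The two percentages differ because they use different denominators: the first is expressed relative to the single- or two-\(IM_{j}\) median, the second relative to the \(Avg{-}IM_{j}\) median.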

Fig. 12 Seismic loss assessments of a generic 4-story moment frame non-ductile RC building using GM suites selected based on single-\(IM_{j}\), two-\(IM_{j}\), and \(Avg{-}IM_{j}\) conditioning approaches: (a) repair cost based on the total replacement cost of $30 M; and (b) repair time based on the total replacement time of 800 days

7 Concluding remarks

The performances of the Latin Hypercube versus Monte Carlo (MC) sampling technique were examined in a process to draw realization samples from a conditional multivariate distribution of various intensity measures based on a generalized conditional intensity measure (GCIM) approach. To facilitate this, three different methods of conditioning were employed to generate a set of conditional multivariate distributions to be fitted to an intensity measure vector consisting of several intensity measures. LHS has exhibited superiority over MC for the same number of realization samples associated with any given intensity measure in the intensity measure vector. As such, it was utilized as a baseline for sampling purposes, which was ultimately used in a subsequent ground motion selection effort. To this end, various suites of ground motion records were selected by searching a ground motion database to find matching records whose characteristics are identical to those of the realization samples drawn by LHS. The ground motion selection process was repeated for three different methods of conditioning, namely single-\(IM_{j}\), two-\(IM_{j}\), and \(Avg{-}IM_{j}\). Using the selected suites, a series of nonlinear response history analyses was conducted on a generic 4-story moment frame non-ductile reinforced concrete structure.

The analysis outcomes revealed the superiority of the ground motion suite selected based on the \(Avg{-}IM_{j}\) over those based on the single-\(IM_{j}\) and two-\(IM_{j}\), relative to a risk-based POC reference demand. The three ground motion suites were also used in a subsequent loss assessment, where various performance groups and EDP responses were designated to compute the repair cost and time for the building. Based on the reported medians, the ground motion suites selected based on single-\(IM_{j}\) and two-\(IM_{j}\) conditioning approaches appeared to have underestimated the seismic losses by roughly 25 to 35% compared to the suite selected based on \(Avg{-}IM_{j}\) (equivalently, the \(Avg{-}IM_{j}\) medians are roughly 34 to 53% higher).

References

Baker JW (2015) Efficient analytical fragility function fitting using dynamic structural analysis. Earthq Spectra 31(1):579–599

Baradaran Shoraka M, Yang TY, Elwood KJ (2013) Seismic loss estimation of non-ductile reinforced concrete buildings. Earthq Eng Struct Dyn 42:297–310

Bommer JJ, Stafford PJ, Alarcón JE (2009) Empirical equations for the prediction of the significant, bracketed, and uniform duration of earthquake ground motion. Bull Seismol Soc Am 99(6):3217–3233

Boore DM, Atkinson GM (2008) Ground-motion prediction equations for the average horizontal component of PGA, PGV, and 5%-damped PSA at spectral periods between 0.01 and 10.0 s. Earthq Spectra 24(1):99–138

Bozorgnia Y, Abrahamson NA, Al Atik L et al (2014) NGA-West2 research project. Earthq Spectra 30(3):973–987

Bradley BA (2010) A generalized conditional intensity measure approach and holistic ground-motion selection. Earthq Eng Struct Dyn 39:1321–1342

Bradley BA (2011) Empirical equations for the prediction of displacement spectrum intensity and its correlation with other intensity measures. Soil Dyn Earthq Eng 31(8):1182–1191

Bradley BA (2012) A ground motion selection algorithm based on the generalized conditional intensity measure approach. Soil Dyn Earthq Eng 40:48–61

Bucher C (2009) Probability-based optimal design of friction-based seismic isolation devices. Struct Saf 31:500–507

Campbell WK, Bozorgnia Y (2010) A ground motion prediction equation for the horizontal component of cumulative absolute velocity (CAV) based on the PEER-NGA strong motion database. Earthq Spectra 26(3):635–650

Campbell WK, Bozorgnia Y (2012) A comparison of ground motion prediction equations for arias intensity and cumulative absolute velocity developed using a consistent database and functional form. Earthq Spectra 28(3):931–941

Celarec D, Dolšek M (2013) The impact of modelling uncertainties on the seismic performance assessment of reinforced concrete frame buildings. Eng Struct 52:340–354

Chouna Y-S, Elnashai SA (2010) A simplified framework for probabilistic earthquake loss estimation. Probab Eng Mech 25:355–364

Decò A, Frangopol MD (2013) Life-cycle risk assessment of spatially distributed aging bridges under seismic and traffic hazards. Earthq Spectra 29(1):127–153

Decò A, Bocchini P, Frangopol MD (2013) A probabilistic approach for the prediction of seismic resilience of bridges. Earthq Eng Struct Dyn 42:1469–1487

Dolsek M (2009) Incremental dynamic analysis with consideration of modeling uncertainties. Earthq Eng Struct Dyn 38:805–825

Elwood KJ (2004) Modelling failures in existing reinforced concrete columns. Can J Civ Eng 32:846–859

Galanis P (2014) Probabilistic methods to identify seismically hazardous older-type concrete frame buildings. Ph.D. Dissertation, University of California Berkeley

Ghotbi A (2018) Resilience-based seismic evaluation and design of reinforced concrete structures. Ph.D. Dissertation, Unversity of California Los Angeles

Ghotbi A, Taciroglu E (2020a) Ground motion selection based on a multi-intensity-measure conditioning approach with emphasis on diverse earthquake contents. Earthq Eng Struct Dyn 1-17. https://doi.org/10.1002/eqe.3383

Ghotbi A, Taciroglu E (2020b) Effects of conditioning criteria for ground motion selection on the probabilistic seismic responses of reinforced concrete buildings. Earthq Eng Struct Dyn 1-15. https://doi.org/10.1002/eqe.3380

Higham JN (2002) Computing the nearest correlation matrix-A problem for finance. IMA J Numer Anal 22:329–343

Ibarra FL, Medina AR, Krawinkler H (2005) Hysteretic models that incorporate strength and stiffness deterioration. Earthq Eng Struct Dyn 34(12):1489–1511

Kohrangi M, Bazzurro P, Vamvatsikos D, Spillatura A (2017) Conditional spectrum-based ground motion record selection using average spectral acceleration. Earthq Eng Struct Dyn 46(10):1667–1685

Kosič M, Fajfar P, Dolšek M (2014) Approximate seismic risk assessment of building structures with explicit consideration of uncertainties. Earthq Eng Struct Dyn 43(10):1483–1502

Kwong NS (2015). Selection and scaling of ground motions for nonlinear response history analysis of buidlings in performance-based earthquake engineering. Ph.D. Dissertation, University of California Berkeley

McKenna F, Fenves LG, Scott MH (2000) OpenSees: a framework system for earthquake engineering simulation. Comput Sci Eng 13(4):58–66

Miano A, Jalayer F, Ebrahimian H, Prota A (2018) Cloud to IDA: efficient fragility assessment with limited scaling. Earthq Eng Struct Dyn 47(5):1124–1147

Miano A, Sezen H, Jalayer F, Prota A (2019) Performance-based assessment methodology for retrofit of buildings. ASCE J Struct Eng 145(12):04019144

Pan Y, Agrawal KA, Ghosn M (2007) Seismic fragility of continuous steel highway bridges in New York State. ASCE J Bridge Eng 12(6):689–699

Tarbali K, Bradley BA (2015) Ground motion selection for scenario ruptures using the generalised conditional intensity measure (GCIM) method. Earthq Eng Struct Dyn 44:1601–1621

Tarbali K, Bradley BA (2016) The effect of causal parameter bounds in PSHA-based ground motion selection. Earthq Eng Struct Dyn 45(9):1515–1535

Tarbali K, Bradley AB, Baker WJ (2018) Consideration and propagation of ground motion selection epistemic uncertainties to seismic performance metrics. Earthq Spectra 34(2):587–610

Tubaldi E, Barbato M, Dall’Asta A (2012) Influence of model parameter uncertainty on seismic transverse response and vulnerability of steel-concrete composite bridges with dual load path. ASCE J Struct Eng 138(3):363–374

Vamvatsikos D (2014a) Seismic performance uncertainty estimation via IDA with progressive accelerogram-wise latin hypercube sampling. ASCE J Struct Eng 140(8):A4014015

Vamvatsikos D (2014b) Seismic performance uncertainty estimation via IDA with progressive accelerogram-wise latin hypercube sampling. ASCE J Struct Eng. 140(8):A4014015

Vamvatsikos D, Fragiadakis M (2010) Incremental dynamic analysis for estimating seismic performance sensitivity and uncertainty. Earthq Eng Struct Dyn 39:141–163

Vorechovsky M, Novák D (2009) Correlation control in small-sample Monte Carlo type simulations I: a simulated annealing approach. Probab Eng Mech 24:452–462

Yang TY, Moehle J, Stojadinovic B, Der Kiureghian A (2009) Seismic performance evaluation of facilities: methodology and implementation. ASCE J Struct Eng 135(10):1146–1154

Zhang Y, Pinder G (2003) Latin hypercube lattice sample selection strategy for correlated random hydraulic conductivity fields. Water Resour Res 39(8):1–11

Zhongxian L, Yang L, Ning L (2014) Vector-intensity measure based seismic vulnerability analysis of bridge structures. Earthq Eng Eng Vib 13:695–705

Cite this article

Ghotbi, A.R., Taciroglu, E. Structural seismic damage and loss assessments using a multi-conditioning ground motion selection approach based on an efficient sampling technique. Bull Earthquake Eng 19, 1271–1287 (2021). https://doi.org/10.1007/s10518-020-01016-6
