Abstract

Selecting the optimum component implementation variant is sometimes difficult since it depends on the component’s usage context at runtime, e.g., on the concurrency level of the application using the component, call sequences to the component, actual parameters, the hardware available etc. A conservative selection of implementation variants leads to suboptimal performance, e.g., if a component is conservatively implemented as thread-safe while during the actual execution it is only accessed from a single thread. In general, an optimal component implementation variant cannot be determined before runtime and a single optimal variant might not even exist since the usage contexts can change significantly over the runtime. We introduce self-adaptive concurrent components that automatically and dynamically change not only their internal representation and operation implementation variants but also their synchronization mechanism based on a possibly changing usage context. The most suitable variant is selected at runtime rather than at compile time. The decision is revised if the usage context changes, e.g., if a single-threaded context changes to a highly contended concurrent context. As a consequence, programmers can focus on the semantics of their systems and, e.g., conservatively use thread-safe components to ensure consistency of their data, while deferring implementation and optimization decisions to context-aware runtime optimizations. We demonstrate the effect on performance with self-adaptive concurrent queues, sets, and ordered sets. In all three cases, experimental evaluation shows close to optimal performance regardless of actual contention.

1 Introduction

A software component exposes its functionality via an application programming interface (API) while hiding its implementation details. Selecting the right implementation variant of a component for a given task can be tedious and time consuming. The component implementation performing best in the worst case may perform worse in the actual executions. Yet programmers tend to conservatively optimize for the worst case, e.g., they use the component implementations that scale best, even though scalability is not an issue in the actual executions and these implementations are, hence, suboptimal there.

Components that can run safely in concurrent, i.e., contended, contexts have become increasingly important since the rise of symmetric multiprocessing (SMP) architectures. We refer to them as concurrent components. A common problem programmers face when implementing concurrent components is that the level of runtime contention of a component, i.e., the concurrency a component is exposed to, is unknown at development time. If multiple (increasingly deep) layers of APIs depend on one another, it becomes increasingly difficult to know which level of contention a component will be exposed to in the end. Yet again, often a conservative approach is taken. A thread-safe component is picked if there is a possibility that an API is used in a concurrent context in some possible deployment, e.g., by a multi-threaded application.

However, if the actual contention were known, alternative component implementation variants might be preferable. If contention is high, it may be beneficial to use an implementation based on lock-free or wait-free data structures rather than an implementation using mutual exclusion locks. Likewise, if contention is low or synchronization is not needed, implementations that block or disregard concurrency would be more beneficial. Contention is a property that can change during program execution, and the optimal component implementation changes with it.

Since optimizing single-thread performance in hardware has become increasingly difficult over the last decade, both hardware and operating system (OS) vendors have added more and more synchronization features, exposed at different levels of an application stack, making the choice of synchronization mechanisms more difficult than ever for programmers. The same component implementation might perform well in one deployment environment and badly in a different one. With emerging cloud platforms, that environment might not even be known until runtime.

A lot of research has focused on improving the synchronization mechanisms to cope with varying contention. Adaptive spin locks (Pizlo et al. 2011) and biased locking (Russell and Detlefs 2006) partially address this issue by adapting to and, hence, providing higher performance for contended and uncontended contexts, respectively.

However, the nature of mutually exclusive locking tends to imply scalability bottlenecks. Specialized lock-free component implementations (Michael and Scott 1996; Kogan and Petrank 2011; Herlihy et al. 2008; Fomitchev and Ruppert 2004) provide even better concurrent performance, by limiting contention to actual data conflicts, carefully hand tuning the algorithms to spatially distribute those data conflicts, as well as even allowing some data conflicts not violating the consistency of the components. These hand-crafted highly specialized concurrent component implementations tend to scale best when available. However, due to the use of atomic instructions and stricter memory models required for concurrent consistency, they are suboptimal in uncontended contexts.

Even more research has been focused on universal “silver bullet” constructions like transactional memory (TM) (Herlihy and Moss 1993) that would automatically turn sequential components into scalable concurrent components without mutual exclusion. First it was implemented in software (STM) (Herlihy et al. 2003; Saha et al. 2006; Felber et al. 2008) to provide ease of use and scalable concurrent performance. Then it was implemented in hardware (HTM) (Hammond et al. 2004; Ananian et al. 2005) to get constants down, but it lost some ease of use such as transactional composition. A hybrid variant (Damron et al. 2006) combined the transactional composition of STM with the performance of HTM.

Existing sequential components were optimized for sequential contexts and never had concurrency in mind. They did not minimize data dependencies and, e.g., deliberately rely on an updated size variable for each operation. They did not consider making disjoint memory accesses whenever possible, which is required for good concurrent performance. Therefore, after being automatically transformed using TM, non-essential data dependencies have to be removed manually for the components to scale well in concurrent contexts. However, the removal of those data dependencies, e.g., by adding re-computations of a size variable for each operation, makes them perform worse in sequential contexts. Even in the concurrent contexts they were optimized for, transactional memory does not allow certain non-essential data conflicts to happen without aborting in the way that specialized lock-free data structures do. Hence, they cannot compete with lock-free data structures in concurrent contexts.

The dream of not having to manually pick component variants based on assumed contention traces back to old components like Vector in the Java class library. All methods were “synchronized” so that programmers could always assume thread-safety. Unfortunately, the approach had bad uncontended performance, and increasingly bad concurrent performance as the number of cores grew, due to the use of mutual exclusion locks. Therefore, the dream was abandoned in later generations of the class library, and the responsibility of picking the appropriate component variant became the burden of programmers again.

This paper rejects the idea of a single “silver bullet” synchronization mechanism that performs optimally on all levels of contention. Instead, we suggest uniting the different mechanisms with an architectural solution, self-adaptive concurrent components, that allows them to coexist, complement each other, and combine their individual strengths. These components regard contention as a context attribute and automatically transform at runtime between different component implementation variants. This way they provide superior uncontended performance and superior contended performance at the same time, while exposing a single component API to programmers. Self-adaptive concurrent components relieve programmers from the burden of finding the optimal solution for each actual context; this is done automatically behind their interface. They encapsulate variants of their operation implementations (algorithms), state representations (data structures), and synchronization mechanisms behind a well-defined interface and:

1. Switch between different algorithms and representations without changing their functional behavior. Operation sequences observed at runtime determine the expectedly best-fit algorithm and data representation variants, and the component transforms to them. This idea was first introduced in (Österlund and Löwe 2013).

2. Switch between different synchronization mechanisms seamlessly without violating consistency. The default is optimistic biased locking that is essentially for free when used by a single thread. Contention sensors that do not have any noticeable performance impact automatically sense changes in concurrent contention. At signs of higher contention, the components adapt by either switching to a more fine-grained locking scheme that scales better or transforming into a completely lock-free solution for maximum scalability. This idea was first introduced in (Österlund and Löwe 2014).

The focus of the present paper is on self-adaptive concurrent components. Hence, it discusses how to switch correctly between different algorithms and representations in a concurrent context and how to switch the synchronization mechanism, which is only interesting in a concurrent context.

In our experiments, we evaluate self-adaptive concurrent components extending Java concurrency data structures. We run them on our own modified OpenJDK and HotSpot Java Virtual Machine (JVM) showing that these concurrent components perform (almost) as well as the best known component for each contention context.

The paper is organized as follows: Sect. 2 introduces self-adaptive components using context-aware composition that at runtime selects the presumably optimal algorithms and data representations for each usage context. Section 3 introduces three standard synchronization mechanisms: locks, lock-free algorithms, and TM. Section 4 introduces self-adaptive concurrent components using context-aware composition also based on contention as an additional usage context attribute. Section 5 shows how contention can be monitored efficiently, Sect. 6 discusses how to consistently invalidate an outdated component variant, and Sect. 7 shows how the actual component transformation can be implemented efficiently. Section 8 introduces the Java concurrency data structures used in the evaluation, highlights some implementation details and finally describes the evaluation and the evaluation results. Section 9 discusses the related work and Sect. 10 concludes the paper and points out directions of future work.

2 Self-adaptive components

Self-adaptive components (or dynamically transforming data structures) as suggested by Österlund and Löwe (2013) build on the previous work of Andersson et al. (2008) and Kessler and Löwe (2012), who introduced context-aware composition, and of Löwe et al. (1999), who suggested transformation components as a general design pattern for data structures with changeable representation and algorithm variants.

2.1 Transformation components

A transformation component consists of an abstract data representation and a set of abstract operations o operating on this data. The abstract data representation allows for different data representation variants, specialized for certain contexts. Each abstract operation o of a component also allows for different algorithm variants. In general, the same operation could come in different algorithm variants using the same data representation, each optimized for different contexts.

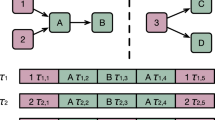

Transformation components follow a general design pattern for data structures with changeable representation and algorithm variants depicted in Fig. 1. It uses a combination of the well-known bridge and strategy design patterns (Gamma et al. 1995).

A transformation component holds a reference to the current representation variant. It could be any representation variant that is an instance of the abstract representation. All state information of a component is contained in the data representation variant.

State migration between data representation variants allows a component to transform its data representation. To this end, each representation variant implements a Representation clone(Representation) method accepting an instance of a previous (outdated, unknown) representation variant as a parameter and returning the new actual representation variant instance.

The changeTo() operation of the transformation component invalidates the previous representation variant so that accesses to it will be trapped, creates a new representation variant of a new type and populates it using the clone() method.

For each operation o the component also holds references to the current algorithm variant implementing o on the current representation variant. Algorithm variants are classes specializing an abstract operation class. The abstract class provides an execute() method implemented (differently) by all algorithm subclasses. Calls to an operation are delegated to the current algorithm variant.

In general, one representation variant might have several algorithm variants per operation o and for each operation, a setOperation...Algorithm() method sets the expectedly best algorithm for (a sequence of) calls to o. Then even a selection of the algorithms adapted to the actual call context is possible and beneficial as shown by Andersson et al. (2008). Without loss of generality, we assume here that the representation variant determines the algorithm variant for each operation and that the component variants comprise both the representation and its associated set of algorithm variants.
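
The representation side of this pattern can be illustrated with a minimal Java sketch. It is not the authors' implementation: the class names `LinkedRepresentation`, `ArrayRepresentation`, and `TransformationComponent`, the `items()` accessor, and the use of `IllegalStateException` as the trap are assumptions made for illustration, and algorithm variants are omitted under the simplification that the representation variant determines the algorithms.

```java
import java.util.ArrayList;
import java.util.LinkedList;
import java.util.List;

// Abstract data representation: all component state lives in one variant of it.
abstract class Representation {
    private boolean valid = true;

    // Raw state, exposed only for state migration between variants (assumption).
    protected abstract List<Integer> items();

    // State migration: populate this new variant from an outdated one.
    Representation clone(Representation previous) {
        items().addAll(previous.items());
        return this;
    }

    void invalidate() { valid = false; }

    void add(int value) { checkValid(); items().add(value); }
    int get(int index)  { checkValid(); return items().get(index); }
    int size()          { checkValid(); return items().size(); }

    // Traps any access to a variant that has already been replaced.
    private void checkValid() {
        if (!valid) throw new IllegalStateException("outdated representation variant");
    }
}

final class LinkedRepresentation extends Representation {
    private final List<Integer> items = new LinkedList<>();
    @Override protected List<Integer> items() { return items; }
}

final class ArrayRepresentation extends Representation {
    private final List<Integer> items = new ArrayList<>();
    @Override protected List<Integer> items() { return items; }
}

// The component holds a reference to the current representation variant.
final class TransformationComponent {
    private Representation current = new LinkedRepresentation();

    void changeTo(Representation fresh) {
        Representation previous = current;
        previous.invalidate();            // later accesses to it are trapped
        current = fresh.clone(previous);  // migrate state into the new variant
    }

    void add(int value) { current.add(value); }
    int get(int index)  { return current.get(index); }
    int size()          { return current.size(); }
    Representation representation() { return current; }
}

class TransformationDemo {
    public static void main(String[] args) {
        TransformationComponent component = new TransformationComponent();
        component.add(1);
        component.add(2);
        component.changeTo(new ArrayRepresentation());
        System.out.println(component.size() + " elements in "
                + component.representation().getClass().getSimpleName());
    }
}
```

In this sketch the transition is still triggered explicitly by the caller of `changeTo()`, as in the plain transformation component pattern.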

In the transformation component pattern, the transition from one component variant to another is triggered by an explicit call to the changeTo() or a setOperation...Algorithm() method in the application code. Context-aware composition, in contrast, automatically selects between different component variants based on the actual usage context. Finding the expectedly best (algorithm and data representation) variant requires the designer to define an optimization goal, e.g., minimize execution time, and formal context attributes, e.g., problem size or number of processors and amount of memory available, which are expected to have an impact on the goal. Formal context attributes, i.e., the actual context, must be evaluable before each call to an operation o; the property to optimize must be evaluable after each call. Context-aware composition finds the expectedly best variant automatically using profiling, analysis, and/or machine learning. Using profiling or analysis or a combination thereof, the fitness of variants can be assessed for different selected actual contexts. Using machine learning, dispatchers can be trained to select the expectedly best-fitting variants of operations and representation implementation for each actual context.

Note that best-fit-learning can be performed offline at design or deployment time, or even online using feedback from online monitoring during program execution (Abbas et al. 2010; Kirchner et al. 2015a, b).

2.2 Self-adaptive components as generalized transformation components

Implementing context-aware composition in the operations of transformation components as an explicit dispatch makes the components self-adaptive. Then a call to an operation of a self-adaptive component:

1. evaluates the actual context attributes,
2. decides, based on the actual context, whether a new component variant should be selected and, if so, adapts the component using appropriate calls to the changeTo() or setOperation...Algorithm() method,
3. calls the execute() method of the presumably best-fit algorithm on the presumably best-fit data representation,
4. before returning a result and only in the case of online profiling and learning, evaluates and captures the performance of the operation on the current component variant for future, maybe revised decisions of best-fit component variants.
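
Steps 1 to 3 can be sketched for a sequential list component as follows. This is only an illustration under stated assumptions: the class `SelfAdaptiveList`, the two counters used as context attributes, and the `THRESHOLD` value are hypothetical, the decision rule is a hand-written heuristic rather than a learned dispatcher, and step 4 (online profiling) is left out.

```java
import java.util.ArrayList;
import java.util.LinkedList;
import java.util.List;

final class SelfAdaptiveList {
    private static final int THRESHOLD = 32;   // hypothetical tuning constant

    private List<Integer> variant = new LinkedList<>();
    private int indexedReads;    // context attribute: observed indexed reads
    private int frontInserts;    // context attribute: observed inserts at the head

    int get(int index) {
        indexedReads++;              // step 1: evaluate the context attributes
        adaptIfNeeded();             // step 2: decide and, if needed, transform
        return variant.get(index);   // step 3: execute on the current variant
    }

    void addFirst(int value) {
        frontInserts++;
        adaptIfNeeded();
        variant.add(0, value);
    }

    private void adaptIfNeeded() {
        if (indexedReads - frontInserts > THRESHOLD && !(variant instanceof ArrayList)) {
            changeTo(new ArrayList<>(variant));    // indexed reads dominate
        } else if (frontInserts - indexedReads > THRESHOLD && !(variant instanceof LinkedList)) {
            changeTo(new LinkedList<>(variant));   // head inserts dominate
        }
    }

    private void changeTo(List<Integer> fresh) {
        variant = fresh;
        indexedReads = 0;            // start a new observation window
        frontInserts = 0;
    }

    String currentVariant() { return variant.getClass().getSimpleName(); }
}

class SelfAdaptiveListDemo {
    public static void main(String[] args) {
        SelfAdaptiveList list = new SelfAdaptiveList();
        for (int i = 0; i < 10; i++) list.addFirst(i);
        System.out.println("after inserts: " + list.currentVariant());
        for (int i = 0; i < 100; i++) list.get(i % 10);
        System.out.println("after reads:   " + list.currentVariant());
    }
}
```

The caller only sees `get` and `addFirst`; which variant serves a call is decided behind the interface on every operation.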

For integrating a set of existing variants implementing the same functional behavior of a component to a self-adaptive component and for replacing these variants with this self-adaptive component in an existing environment, certain potential pitfalls ought to be regarded. Below we distinguish and discuss issues in sequential and concurrent environments and how to handle them.

2.2.1 Self-adaptive components in sequential environments

If the internal data representation variant was exposed outside its component and then adapted to a new variant, there could be outdated representations referenced in the heap. Normally this does not happen as the internal representation should not be exposed. Instead all accesses should go through the component interface. Iterators, however, are an exception that needs to be explained in more detail.

Iterators have a reference to the component’s internal representation. A transformation triggered during (read) iteration interleaved with any write could lead to inconsistencies. Data structures usually (for good reasons) forbid arbitrary writes during iteration. However, the iterator itself may allow writes to the underlying internal representation. Care must then be taken to handle this case by, e.g., (1) distinguishing an external iterator object (similar to the component’s façade object itself) referencing an internal one, and abandoning the internal iterator when its internal data representation is outdated and then constructing a new internal iterator after a transformation, (2) not allowing the iterator access to the internal representation but always accessing through the façade object, or (3) deferring transformation until after iteration.
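
Option (1) can be sketched for a read-only iteration in a sequential environment. The class `AdaptiveSequence`, its `epoch` counter, and the `transformToArray()` method are assumptions for illustration; the external iterator remembers its position and rebuilds its internal iterator once it notices that the representation has been replaced.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedList;
import java.util.List;
import java.util.ListIterator;

final class AdaptiveSequence implements Iterable<Integer> {
    private List<Integer> representation = new LinkedList<>();
    private int epoch;   // incremented on every transformation (assumption)

    void add(int value) { representation.add(value); }

    // Replaces the internal representation; the old list becomes outdated.
    void transformToArray() {
        representation = new ArrayList<>(representation);
        epoch++;
    }

    @Override
    public Iterator<Integer> iterator() {
        // External iterator: wraps an internal iterator over the current variant.
        return new Iterator<Integer>() {
            private int seenEpoch = epoch;
            private ListIterator<Integer> internal = representation.listIterator();

            // Abandon the internal iterator if its representation is outdated
            // and construct a new one at the same position.
            private void refresh() {
                if (seenEpoch != epoch) {
                    internal = representation.listIterator(internal.nextIndex());
                    seenEpoch = epoch;
                }
            }

            @Override public boolean hasNext() { refresh(); return internal.hasNext(); }
            @Override public Integer next()    { refresh(); return internal.next(); }
        };
    }
}

class AdaptiveSequenceDemo {
    public static void main(String[] args) {
        AdaptiveSequence sequence = new AdaptiveSequence();
        for (int i = 1; i <= 5; i++) sequence.add(i);
        Iterator<Integer> it = sequence.iterator();
        System.out.println(it.next());
        sequence.transformToArray();          // transformation during iteration
        while (it.hasNext()) System.out.println(it.next());
    }
}
```

The sketch relies on the position being meaningful in both variants, which holds here because both are index-ordered lists; writes through the iterator are not covered.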

It may also happen that a variant has side effects either directly or by invoking callback methods in the environment outside the self-adaptive component. It must be assured that all side effects and callbacks with side effects and exactly those happen in the right order in all variants even if not needed in all of them. For instance, a constructor with side effects might never be executed since the variant is not used or, due to transformation, it might possibly be executed several times, or it is executed in a different order with other methods with side effects. Also, a callback with side effects might not occur in a variant but it does in another, or two callbacks with side effects might occur in different orders in different variants.

Especially, in the presence of callbacks, e.g., to equals or hashCode methods in Java, this cannot be guaranteed without knowing the usage environment. Then, side effect free callbacks ought to be explicitly required in preconditions; they can be enforced by conservative static analysis. It is worth noting that there should not be a problem if the implementation of those methods follows the specification for java.lang.Object (Oracle 2016) requiring that a contract must be followed such that subsequent invocations of equals or hashCode are consistent.

2.2.2 Self-adaptive components in concurrent environments

Also for concurrent components, all operations may potentially trigger a transformation between variants and perform (lightweight) bookkeeping of usage histories in order to make more informed transformation decisions in the future. This means that even a read operation could cause a mutation while the corresponding read in any of the existing variants (typically) does not. The usage context of a variant might assume that reading operations do not need to be synchronized because there is no mutation. In order for self-adaptive components to work correctly in existing concurrent usage contexts this assumption ought to be regarded.

The solution to this problem could be (1) synchronizing even reading operations, or (2) accepting that the self-adaptive component would occasionally make suboptimal transformation decisions due to inconsistent bookkeeping. The latter is fine as long as it does not happen too often and the amortized time over all operations is shorter. The consistency of the actual data structure is never violated.

Another issue arises from using a variant as a monitor object to synchronize with. The monitor object used has to be the self-adaptive component façade object encapsulating the internal variants, as this is what is exposed to the outside world. This potentially requires code transformations in both the usage environment and the variants where references to this object need to be redirected to the façade object.

3 Synchronization mechanism variants

For concurrent execution environments, synchronization mechanisms assure the atomicity of thread access to critical resources. Orthogonally to the component variants, there are different variants of these mechanisms too. We describe three different synchronization mechanisms used to implement concurrent components: (1) locks, (2) lock-free synchronization using atomic instructions like compare-and-swap (CAS), and (3) TM. We argue they all have their weaknesses and strengths, specifically, for our main concern in this paper: performance. We discuss the theoretical pros and cons of each synchronization mechanism in both (almost) sequential and (highly) concurrent execution contexts.

3.1 Locks

A lock can be acquired by one and only one (owner) thread that may continue with its execution. It blocks other threads trying to acquire the lock until the owner releases it again. Locks are arguably the most common synchronization mechanism because of their availability and ease of use. They are also flexible in the sense that the locking granularity, i.e., locking the whole component instance at once (coarse grained) or some of its objects individually (fine grained), can be varied to achieve scalability. Problems connected to locks such as deadlocks will not be discussed here as we are only interested in performance and not in programming and verification efficiency.

Sequential Context In an (almost) sequential execution context, coarse grained locks around the complete sequential algorithms implementing an API are usually very efficient. If it can be statically proven that objects never escape a thread (by manual or automated escape analysis), locks can even be elided completely and the sequential code can run at no additional overhead. Even if it is not provable statically that a lock is always held by a single thread exclusively, the lock implementation may optimistically assume so using biased locking (Russell and Detlefs 2006; Pizlo et al. 2011). Therefore, it installs the presumed owner thread the first time it is locked using CAS, cf. Algorithm 1, with a dummy thread id NO_OWNER as the expected value indicating that no other thread has claimed to own the lock before. If the owner stays the same, locking and unlocking is performed with normal loads and stores without need for atomics, and is very fast.
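
The installation of the presumed owner can be sketched in Java as below. This is a strongly simplified illustration, not the HotSpot mechanism and not the paper's Algorithm 1: the class `BiasedLockSketch` and its counters are hypothetical, `AtomicReference.get()` stands in for the plain load a real implementation would use, and bias revocation is omitted, so a second thread is simply refused.

```java
import java.util.concurrent.atomic.AtomicReference;

final class BiasedLockSketch {
    private static final Thread NO_OWNER = null;   // dummy value: nobody owns the lock yet

    private final AtomicReference<Thread> owner = new AtomicReference<>(NO_OWNER);
    private int holdCount;     // accessed by the owner thread only
    private int casInstalls;   // number of successful CAS installations

    // Returns true if the calling thread is (or becomes) the biased owner.
    boolean tryLock() {
        Thread self = Thread.currentThread();
        if (owner.get() == self) {                   // fast path: no atomic instruction
            holdCount++;
            return true;
        }
        if (owner.compareAndSet(NO_OWNER, self)) {   // first lock: install the owner
            casInstalls++;
            holdCount++;
            return true;
        }
        return false;   // biased towards another thread; revocation not shown here
    }

    void unlock() {
        if (owner.get() != Thread.currentThread() || holdCount == 0) {
            throw new IllegalMonitorStateException();
        }
        holdCount--;    // the bias stays installed for the next tryLock()
    }

    int casInstalls() { return casInstalls; }

    // Helper for demonstration: attempts the lock from a second thread.
    static boolean tryFromOtherThread(BiasedLockSketch lock) {
        boolean[] result = new boolean[1];
        Thread other = new Thread(() -> result[0] = lock.tryLock());
        other.start();
        try {
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return result[0];
    }
}

class BiasedLockDemo {
    public static void main(String[] args) {
        BiasedLockSketch lock = new BiasedLockSketch();
        for (int i = 0; i < 1000; i++) {
            lock.tryLock();
            lock.unlock();
        }
        System.out.println("CAS installations: " + lock.casInstalls());
        System.out.println("other thread admitted: " + BiasedLockSketch.tryFromOtherThread(lock));
    }
}
```

A usable biased lock additionally needs a way to revoke the bias safely when a second thread arrives, which is the hard part and is deliberately left out of this sketch.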

Concurrent Context In concurrent execution contexts, locks suffer from problems predicted by Amdahl’s law (Amdahl 1967). Since locks fundamentally only allow one thread to execute at a time, locked programs do not scale very well when the sequential parts dominate the concurrent parts.

There are ways to mitigate this bottleneck by using a different locking scheme. One solution is to use fine-grained locking where only some objects of a complex component local to a change are locked instead of locking the whole component instance, with a negative impact on programming and verification efficiency. For instance, only some nodes in a tree are locked instead of locking the whole tree. Another solution is to use readers-writer locks that allow multiple readers but only one writer at a time.
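
As a small illustration of the readers-writer scheme, the following sketch guards a sequential `HashMap` with `java.util.concurrent.locks.ReentrantReadWriteLock`. The wrapper class `ReadMostlyMap` is a hypothetical example, not a component from the evaluation.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantReadWriteLock;

final class ReadMostlyMap {
    private final Map<String, Integer> map = new HashMap<>();
    private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();

    // Readers share the read lock and may run concurrently with each other.
    Integer get(String key) {
        lock.readLock().lock();
        try {
            return map.get(key);
        } finally {
            lock.readLock().unlock();
        }
    }

    // A writer needs the write lock, which excludes readers and other writers.
    void put(String key, int value) {
        lock.writeLock().lock();
        try {
            map.put(key, value);
        } finally {
            lock.writeLock().unlock();
        }
    }
}

class ReadMostlyMapDemo {
    public static void main(String[] args) {
        ReadMostlyMap map = new ReadMostlyMap();
        map.put("answer", 42);
        System.out.println(map.get("answer"));
    }
}
```

This helps only when reads dominate; under frequent writes the write lock serializes execution just like a coarse-grained mutual exclusion lock.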

Conclusion Locking is superior for (almost) sequential execution contexts but may not be the best candidate for a (highly) concurrent one.

3.2 Lock-free synchronization

Lock-free algorithms (Michael and Scott 1996; Kogan and Petrank 2011; Herlihy et al. 2008; Fomitchev and Ruppert 2004) were introduced to deal with the scalability problems of locks. Synchronizing instructions are used only on memory locations where there are actual data conflicts. The algorithms read and remember values from the memory locations, perform calculations, and write the result back to these locations. Using operations like CAS, they detect conflicts, i.e., whether another thread has changed the value in between. If so, they try again (in a biased loop with branch prediction optimized for the success branch, not the retry branch). This allows concurrent modification of components as long as there are no actual data conflicts and, thus, somewhat mitigates the problems of Amdahl’s law by allowing more work to be done concurrently.
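
The read, compute, CAS, retry cycle can be shown with a Treiber-style lock-free stack, a classic textbook structure that is used here only as an illustration and is not one of the components evaluated in this paper. The helper `pushConcurrentlyAndCount` is a hypothetical convenience for the demonstration.

```java
import java.util.concurrent.atomic.AtomicReference;

final class TreiberStack<T> {
    private static final class Node<T> {
        final T value;
        final Node<T> next;
        Node(T value, Node<T> next) { this.value = value; this.next = next; }
    }

    private final AtomicReference<Node<T>> head = new AtomicReference<>();

    void push(T value) {
        while (true) {
            Node<T> observed = head.get();                     // read and remember
            Node<T> fresh = new Node<>(value, observed);       // compute the new state
            if (head.compareAndSet(observed, fresh)) return;   // commit, or retry on conflict
        }
    }

    // Returns null if the stack is empty.
    T pop() {
        while (true) {
            Node<T> observed = head.get();
            if (observed == null) return null;
            if (head.compareAndSet(observed, observed.next)) return observed.value;
        }
    }

    // Demonstration helper: pushes from several threads, then counts the elements.
    static int pushConcurrentlyAndCount(int threads, int perThread) {
        TreiberStack<Integer> stack = new TreiberStack<>();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) stack.push(i);
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        int count = 0;
        while (stack.pop() != null) count++;
        return count;
    }
}

class TreiberStackDemo {
    public static void main(String[] args) {
        System.out.println(TreiberStack.pushConcurrentlyAndCount(4, 10_000));
    }
}
```

A failed `compareAndSet` means another thread changed `head` in between, so the operation re-reads and retries; no thread ever blocks while holding a lock.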

Besides the inherent complexity of these algorithms, not all sequential data structures have lock-free implementations yet. Also, a truly lock-free data structure requires the underlying execution environment, including the memory manager, to be lock-free to retain its progress guarantee, which is difficult to assure. It requires, e.g., lock-free garbage collection (Sundell 2005) or hazard pointers (Michael 2004). However, this paper is not concerned with guaranteeing true lock-freedom or real-time properties. Our interest is only in the scalable performance characteristics of this class of concurrent component variants.

Sequential Context Lock-free algorithms are typically slower in (almost) sequential contexts because they are fundamentally designed to handle data conflicts, e.g., branches for loops retrying committing their changes, which is never necessary in a sequential execution. Moreover, simple component attributes such as the size of a collection are typically computed on demand in a lock-free implementation in order to reduce data conflicts. In the example of the size attribute, this leads to an O(n) instead of an O(1) operation of a corresponding sequential data structure that simply maintains a size counter because it does not need to optimize for concurrency.
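
The size example can be observed with two standard Java queues: `ConcurrentLinkedQueue.size()` is documented as not being a constant-time operation because it traverses the queue, whereas `ArrayDeque.size()` is derived from maintained indices. The harness below is a rough sketch with hypothetical helper names; its timings are not a rigorous benchmark and no particular numbers are implied.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

class SizeCostDemo {
    // Fills the given queue with n elements and returns it.
    static Queue<Integer> filled(Queue<Integer> queue, int n) {
        for (int i = 0; i < n; i++) queue.add(i);
        return queue;
    }

    // Measures the wall-clock time of repeated size() calls in nanoseconds.
    static long timeSizeCalls(Queue<Integer> queue, int calls) {
        long checksum = 0;
        long start = System.nanoTime();
        for (int i = 0; i < calls; i++) checksum += queue.size();
        long elapsed = System.nanoTime() - start;
        if (checksum < 0) throw new IllegalStateException();   // keeps the loop result in use
        return elapsed;
    }

    public static void main(String[] args) {
        int n = 100_000;
        Queue<Integer> sequential = filled(new ArrayDeque<>(), n);
        Queue<Integer> lockFree = filled(new ConcurrentLinkedQueue<>(), n);
        System.out.println("ArrayDeque size() calls:            "
                + timeSizeCalls(sequential, 1_000) + " ns");
        System.out.println("ConcurrentLinkedQueue size() calls: "
                + timeSizeCalls(lockFree, 1_000) + " ns");
    }
}
```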

Beyond this principal issue of lock-free algorithms, their performance greatly depends on the hardware and how fast its CAS instruction is compared to normal memory accesses.

Historically, x86 implemented LOCK CMPXCHG (CAS) by locking the whole memory bus globally during the execution of the instruction. Newer implementations, however, lock only the cache line where the CAS is executed. At least that is true for the officially supported aligned atomics. Misaligned atomics, although not officially supported, typically still work to support legacy software, and revert to the older behaviour whenever the memory access crosses two cache lines.

The cost also typically depends on whether the CAS is contended or not. And when it is contended, the cost typically depends on the locality of the thread causing the contention, e.g., if it has shared L2 cache or not.

A CAS requires sequentially consistent semantics and hence needs to issue a full memory fence. In certain architectures this might be a relatively expensive operation requiring all write buffers and caches to be serialized. Some newer hardware can speculate over fences and continue executing as store buffers are being flushed, but must roll back in case cache coherence detects that the speculation was unsafe and would change the observable outcome.

In the case of x86, which is considered strongly consistent, all aligned memory accesses already have sequentially consistent semantics, and the LOCK CMPXCHG instruction already implicitly issues a complete memory fence.

In other architectures such as POWER, which is weakly consistent, the cost is higher. Here, enforcing the sequentially consistent semantics requires: (1) an expensive heavyweight sync memory fence instruction, (2) a lwarx (load link) and stwcx (store conditional) in a loop until the instruction can return a valid result free from spurious failures, (3) a more lightweight isync fence to serialize the pipeline. In the JVM, the lightweight fence is conservatively replaced by another heavyweight sync to guard against reordering between a potentially latent store conditional and subsequent memory accesses, which, according to the specification, should not be possible. Nevertheless, the OpenJDK implementation of CAS on POWER uses more conservative fencing than, e.g., C++11 to accommodate hypothetical broken implementations of POWER.

On POWER, the CAS can also spuriously fail due to context switching between the load link and store conditional. This is called a weak CAS—it admits false negatives but never false positives. Normally, the stronger behaviour is desired by users of CAS that do not care about such particularities. Therefore, generic CAS implementations typically guarantee strong CAS semantics by checking for false negatives and then retrying in a loop.
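
The retry loop that turns a weak CAS into a strong one can be sketched with `AtomicInteger.weakCompareAndSetVolatile`, which is available since Java 9 and is permitted to fail spuriously. The wrapper `strongCompareAndSet` is a hypothetical helper written for illustration; it is not how the JVM implements its strong CAS internally.

```java
import java.util.concurrent.atomic.AtomicInteger;

final class StrongCasFromWeak {
    // Returns true if the cell was changed from expected to update.
    static boolean strongCompareAndSet(AtomicInteger cell, int expected, int update) {
        while (true) {
            if (cell.weakCompareAndSetVolatile(expected, update)) {
                return true;    // no false positives: the update really happened
            }
            if (cell.get() != expected) {
                return false;   // genuine failure: the value differs from expected
            }
            // The value still matches, so the failure was spurious: retry.
        }
    }
}

class StrongCasDemo {
    public static void main(String[] args) {
        AtomicInteger cell = new AtomicInteger(5);
        System.out.println(StrongCasFromWeak.strongCompareAndSet(cell, 5, 7));
        System.out.println(StrongCasFromWeak.strongCompareAndSet(cell, 5, 9));
        System.out.println(cell.get());
    }
}
```

On platforms whose CAS instruction never fails spuriously the loop body typically runs once, so the wrapper costs little there.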

Lock-free algorithms can also rely on volatile memory accesses (other than CAS) with acquire-release semantics whose performance may similarly depend on which architecture it runs on. Machines with a total store order (TSO) typically do this cheaply, whereas machines with relaxed memory ordering (RMO) need some kind of lightweight fencing like lwsync on POWER.

Concurrent context is where the merits of lock-free programming become visible. Lock-free components commit changes at linearization points, cf. (Herlihy and Wing 1990), using CAS. Optionally they lazily, i.e., asynchronously during any subsequent operation, update references that are not necessary for consistency but improve time complexity. Therefore, only true data conflicts violating the consistency of the component require operations to be restarted and delay the execution significantly. Other data conflicts not relevant for maintaining consistency can be tolerated and handled lazily, i.e., without restarting the operation. This makes lock-free algorithms very scalable in terms of performance and typically the best option, if available, in contended contexts.

Conclusion Even though CAS is implemented more or less efficiently by different hardware vendors, the performance of lock-free components is still inherently slower than the performance of their sequential counterparts in the absence of concurrency. However, when there is contention, this class of components provides the most fine-grained synchronization fine-tuned by clever implementers.

3.3 Transactional memory

Analogous to database transactions, Transactional Memory (TM) (Herlihy and Moss 1993) guarantees sequences of load and store instructions to execute in an atomic way. It is a universal construction for turning any sequential algorithm into a thread safe algorithm. Some constructions can even turn them into lock-free (Shavit and Touitou 1997) and wait-free (Moir 1997) algorithms without any deadlocks. Despite this theoretical benefit, TM has yet to become widely adopted in practice mainly due to performance reasons discussed below. Additionally and not discussed here in detail, speculative TM (performing in-place writes) cannot always guarantee that a transaction will ever end in case of contention since a speculative load could cause an infinite loop never reaching the commit operation.

Sequential context Software TM (STM) (Herlihy et al. 2003; Saha et al. 2006; Felber et al. 2008) typically performs significantly worse in a sequential environment compared to a normal sequential solution.

Performance depends on whether the algorithm uses write buffering versus undo logging, pessimistic versus optimistic concurrency, cache line based versus object based versus word based conflict detection etc. Saha et al. (2006) evaluated all these trade-offs.

Hardware TM (HTM) (Hammond et al. 2004; Ananian et al. 2005) can, for instance, accelerate write buffering in hardware and allows locks to be elided. It can reduce the cost compared to STM, but relies on hardware that may or may not be available. Biased locking already shows better performance characteristics without special hardware support in this case.

Concurrent context The idea of a universal construction that transforms sequential code into concurrent code automatically without the need to re-engineer or re-design fails because sequential code typically has sequential data dependencies everywhere (since it was not optimized for concurrent use), which, in turn, causes rollback (abort and restart the transaction) storms.

STM is considered to have higher constant cost but better scalability than coarse grained locking. In previous publications, the break even point seems to be approximately four concurrent threads (Damron et al. 2006).

HTM has the same scalability properties as STM but provides better constants as long as the hardware write buffers are large enough for the operations, lowering the break even point to two concurrent threads (Damron et al. 2006).

However, carefully implemented lock-free algorithms typically outperform TM. The problem is that TM aborts transactions for all data conflicts. A lock-free algorithm, conversely, may know that a speculatively loaded value may become invalid or a lazily updated reference may not be written, and it does not matter for the consistency of the component and the correctness of the algorithm. Therefore, the lock-free algorithm may continue whereas the TM based code has to restart the transaction.

Conclusion TM does exhibit good concurrent scalability when there is no known lock-free component variant. TM provides decent sequential performance if hardware support is available. However, when there is no hardware support or a lock-free algorithm exists, other options are better.

4 Self-adaptive concurrent components

As discussed in the previous section, research into the ultimate synchronization mechanism combining the best of them all has not yet reached a conclusion. We must admit that the best synchronization mechanism depends on the context, including the application runtime behavior and the runtime environment. The application could be sequential or concurrent, and its runtime behavior includes the operations invoked, their read to write ratio to the data representation, the number of threads, and their actual contention. The runtime environment includes properties of hardware, operating system, and language runtime environment such as the memory consistency of the architecture, the number of cores (and, hence, the need for scalability), whether the system has a single socket or multiple sockets, the number of hardware threads per core, the fairness of the scheduler, the fairness of the potential locking protocol (unfairness potentially yields higher performance since translation lookaside buffer (TLB) caches are already populated when reacquiring the lock), the availability (and stability) of HTM, the size of its write buffers, the speed of CAS operations, the size of caches, how caches are shared, what cache coherence protocol they employ, the speed of the memory bus etc. Some of the properties are known at compilation time, some at deployment time, and still others vary dynamically at runtime.

Since there is no single silver bullet that provides the best possible performance in all these contexts, our approach is to understand the synchronization mechanism as yet another component variant self-adapted to the actual context dynamically. We refer to components adapting (among others also) their synchronization mechanism to the actual context as self-adaptive concurrent components. Based on the changing contention context, we strive to select the best performing component variant including the best synchronization mechanism and the algorithm variant with the lowest time complexity.

The contention attribute is orthogonal to other context attributes, e.g., size of actual parameters and actual operation call sequences. However, contention is regarded as the most important dynamically changing context attribute. The rationale for this is that whenever contention builds up on locks, the system experiences a massive slowdown due to the concurrency bottleneck, which typically by far outweighs all other decisions, similar to how thrashing should be avoided at (almost) all costs. Getting the right fit for a given contention level gets the highest priority. Therefore, a straightforward layered approach is used for the contention (first layer) and the other context attributes (second layer).

A lock-free component variant is used whenever there is a lot of contention (first layer). In practice, there are not many lock-free variants of one and the same lock-free component. However, the number of processors used for storing the data representation instances of the component and for algorithms operating on it can still vary based on the number of processors actually available (second layer) according to Kessler and Löwe (2012).

If there is little contention, a component variant protected by locks is used (first layer). If there are different sequential or parallel component variants, then transformations can happen to the (lock-based) component variant that has a presumably superior time complexity (second layer), either instantly with the first operation invoked (Andersson et al. 2008; Kessler and Löwe 2012) or in a deferred way using biasing (Österlund and Löwe 2013).

Of course, a more complex biasing could find tricky corner cases by simultaneously employing contention and other context attributes, like size of data, application profile, time complexity of operations used, hardware, and scheduling, to find more accurate break-even points for what contention level should trigger transformation to a lock-free component variant with potentially worse time complexity. This is less straightforward and potentially costly for the common case as the cost for the logic deciding the presumably best fitting solution increases. It only improves corner cases where manual attention perhaps should be advised anyway for optimal performance. It is therefore deferred to future work.

For the implementation of the first level adaptation of the synchronization variant, we propose a single contention manager object for each self-adaptive concurrent component object. It listens to contention level changes. If the current component variant is considered inappropriate for the current level of contention, it is invalidated and a new equivalent and presumably more appropriate component variant is instantiated. The contention manager receives input from the specific active implementation variants in completely different ways. The contention manager itself is invariant of how it gets its information.

For the contention manager, we need to address three issues. We need to (i) assess the contention context and decide whether changes in the contention should trigger a change of the component variant or not. If so, we need to (ii) invalidate the current component variant and, finally, we need to (iii) transform to the new component variants safely. Obviously, the steps (i)–(iii) need to be performed online and they contribute to the runtime overhead of self-adaptive concurrent components. Hence, efficiency of context assessment and transformation is an important issue. We will address (i)–(iii) in the subsequent three sections.
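The interplay of issues (i) to (iii) can be sketched as follows. All names (`SyncVariant`, `AdaptableComponent`, `ContentionManagerSketch`, `RecordingComponent`) are hypothetical and chosen for illustration only; the recording component merely logs the calls it receives and performs no real invalidation or transformation.

```java
import java.util.ArrayList;
import java.util.List;

enum SyncVariant { LOCK_BASED, LOCK_FREE }

// Hypothetical view of a self-adaptive component as seen by its manager.
interface AdaptableComponent {
    SyncVariant current();
    void invalidateCurrent();              // issue (ii)
    void transformTo(SyncVariant target);  // issue (iii)
}

final class ContentionManagerSketch {
    private final AdaptableComponent component;

    ContentionManagerSketch(AdaptableComponent component) {
        this.component = component;
    }

    // Issue (i): decide whether the reported contention warrants a change.
    // Returns true if a transformation was triggered.
    synchronized boolean contentionChanged(boolean highContention) {
        SyncVariant target =
            highContention ? SyncVariant.LOCK_FREE : SyncVariant.LOCK_BASED;
        if (target == component.current()) return false; // variant still fits
        component.invalidateCurrent();  // (ii) no operation may complete on it
        component.transformTo(target);  // (iii) install the equivalent variant
        return true;
    }
}

// Toy component that only records the calls it receives.
final class RecordingComponent implements AdaptableComponent {
    final List<String> log = new ArrayList<>();
    private SyncVariant variant = SyncVariant.LOCK_BASED;

    public SyncVariant current() { return variant; }
    public void invalidateCurrent() { log.add("invalidate " + variant); }
    public void transformTo(SyncVariant target) {
        variant = target;
        log.add("transform " + target);
    }
}
```

The manager does not depend on how the contention signal is obtained, which mirrors the statement that it is invariant of how it gets its information.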

5 Continuous context feedback (i)

Continuous context feedback for contention, issue (i), is provided by a contention sensor. The contention sensor is implemented differently for the three different synchronization mechanisms. It provides a general interface, in essence hooks to code in the contention manager, for dealing with adaptation when contention levels change.

For each contention sensor implementation, it is beneficial to log only the bad uses of a certain synchronization mechanism. For instance, a lock would only notify the contention manager of bad uses of locks such as when blocking was necessary. Conversely, a message indicating that a biased lock was successfully acquired local to one thread would never lead to any change. Hence, such a message would only be an unnecessary overhead. The same principle applies to the contention sensors for all synchronization mechanisms described below.

Note that signs of high contention are monitored promptly so that components can eagerly transform into concurrent variants. The reason is that locks could be bottlenecks hampering global progress of the system, requiring immediate transformation into a concurrency friendly component.

Conversely, signalling low contention and transforming back into the lock based component variant is deferred until there is sufficient evidence that contention has indeed decreased to a single thread for a significant amount of time. The transformation is typically not necessary to prevent a serious performance bottleneck in the sense that scalability is prevented. It may however provide better performance if executed frequently.
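The asymmetry between eager promotion and deferred demotion can be sketched as a small policy object. The class `ContentionPolicySketch` and the tuning knob `quietChecksBeforeDemotion` are assumptions made for illustration; the paper does not prescribe this particular threshold scheme.

```java
// Illustrative sketch only: eager promotion, deferred demotion.
final class ContentionPolicySketch {
    private final int quietChecksBeforeDemotion; // hypothetical tuning knob
    private int quietChecks = 0;
    private boolean lockFree = false;

    ContentionPolicySketch(int quietChecksBeforeDemotion) {
        this.quietChecksBeforeDemotion = quietChecksBeforeDemotion;
    }

    // Called on a "bad use" of a lock, e.g. a blocking event.
    // Returns true if the component should transform to the lock-free variant now.
    synchronized boolean onBlockingEvent() {
        quietChecks = 0;
        if (lockFree) return false;
        lockFree = true; // promote immediately on the first sign of contention
        return true;
    }

    // Called periodically with evidence on whether a single thread used the component.
    // Returns true if the component should transform back to the lock-based variant.
    synchronized boolean onQuietCheck(boolean singleThreaded) {
        if (!lockFree) return false;
        if (!singleThreaded) {
            quietChecks = 0; // evidence of contention resets the count
            return false;
        }
        quietChecks++;
        if (quietChecks < quietChecksBeforeDemotion) return false;
        lockFree = false; // enough consecutive evidence: demote
        quietChecks = 0;
        return true;
    }

    synchronized boolean isLockFree() { return lockFree; }
}
```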

5.1 Locks

The contention sensor for locks is quite important as locks tend to be the biggest bottlenecks in concurrent programs. Therefore, we must take extra care of minimizing the cost of this contention monitoring.

Modern virtual machines (VMs) employ adaptive locking schemes with support for biased locking. We will assume an ideal solution for us, presented by Pizlo et al. (2011) in JikesRVM and Russell and Detlefs (2006) for HotSpot (OpenJDK), that performs better when locking is done from a single thread, and adaptively spins when there is actual contention. We hereby define three levels of contention for a lock: 1) biased locking, 2) lightweight spin lock, 3) heavyweight blocking lock. Each level has its own type of lock that needs to be installed. The ideas apply for any managed runtime, but we will focus specifically on HotSpot, where our algorithms were implemented.

Note that our contention sensor just needs to understand that different locks are installed corresponding to different contention levels. In a way, the installation of different locks based on contention is a self-adaptation of the lock component, which happens below our general architecture for self-adaptive components, at the level of the runtime environment of our components.

5.1.1 Contention level 1: biased locking

A lock is biased towards the first thread that acquired it. This owner thread can acquire and release the lock more cheaply with normal loads and stores, avoiding heavier atomic instructions (Russell and Detlefs 2006). The owner thread is established using an atomic CAS operation to install a thread id in the object header. Once the owner has been established, subsequent acquire and release operations are almost free. Biased locking also avoids allocating a lock data structure, by fitting all fields in the object header instead.

Biased locking is a speculative optimization that makes locking fast when used by a single thread. Therefore, it is optimized for very low contention levels. If it turns out that multiple threads need to use the lock, then the bias must be revoked. This is done by stopping the thread holding the bias using the same synchronization mechanism, referred to as safepoint, as used by a garbage collector (GC) to stop threads for GC pauses (all managed threads, referred to as mutators, need to be stopped during certain critical GC operations called GC pauses). Once inside of the safepoint, the lock is reacquired as a lightweight spin lock of level 2.

In detail, for revoking the biased lock of level 1 and promoting the lock to level 2 heuristics keep track of the cost of individual lock bias revocations. When the cost exceeds a certain threshold, a bulk re-bias operation is performed for all locks on objects of a certain type. This is done by maintaining bias epochs as counters for each type that must match a corresponding counter for each lock on objects of that type. A bulk re-bias operation issues a safepoint, changes the epoch counter of a type, and reclaims all locks currently held. Once the threads start running again, the epochs no longer match between the locks and their corresponding type. This way, the locks that were not held in the safepoint can be re-biased with new owners at subsequent acquire operations. This allows keeping more objects at level 1 and reduces spurious promotions to level 2.

5.1.2 Contention level 2: lightweight spin lock

Once a lock gets promoted to a lightweight spin lock, some contention can be assumed. In HotSpot these locks are implemented by having threads allocate some 8 bit aligned stack space for their lock, loading the object header, writing it to the stack, and installing the stack slot address into the object header using CAS. If the CAS succeeds, then the lock is acquired. Otherwise it failed. This type of stack allocated lock is still lightweight in that it does not need any heavyweight OS lock.

When locking fails, the thread can spin and wait for a while before trying to lock again, assuming that a small critical section is concurrently executing in another thread that will release the lock soon. The naïve approach would be to just spin in a loop until the lock is released, but that has a number of problems.

Doing spinning right is very difficult and can either speed up a program or make it slower. An optimized spinning implementation in locks goes through the whole stack: from the application layer (disregarded here), over the VM layer and the OS layer, all the way to the hardware layer:

- VM: VMs can adaptively change the length of the spinning loop before retrying by learning from history. If a VM finds that spinning takes too long, it promotes the lock to level 3.

- OS: Waiting for a lock to be released by spinning is only a good idea if it is believed that the lock will be released soon. If a thread in a critical section were to be preempted by the OS or blocked waiting for IO, then the spinning would waste many cycles in vain. Solaris allows checking cheaply from userspace whether the owner of a lock is running on the processor. If it is not running, then spinning can be abandoned immediately.

- Hardware: Modern processors typically have more than one hardware thread per core to allow sharing execution units like arithmetic logic units (ALU) within the core between different concurrently executing threads, to better exploit thread level parallelism (TLP) when instruction level parallelism (ILP) cannot be exploited. On Intel chips it is normal to expect two hardware threads per core, and on SPARC chips 8 hardware threads per core. However, the hardware has no notion of priority once threads have been scheduled to run. So if one thread is in a critical section performing actual work, and a concurrent spinning thread is waiting to acquire the lock, then the spinning thread in the same core will use the resources of the core and slow down the thread performing actual work in the critical section.

To combat this, Intel has a special pause instruction that is similar to a nop but also flushes the pipeline with its speculations, uses less power, and lets the other thread in the core (which might be running the critical section) use the core better.

SPARC goes yet another step further and addresses the issue directly. The header word can be loaded with a special load, and then a special mwait register is set to wait a certain amount of time, or until the address that was loaded is changed. Meanwhile the hardware is performing very cheap spinning, while boosting the ILP of the other threads, and possibly the threads executing the critical sections. When the lock is released, the spinning threads will wake up and start executing again.
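At the application level, bounded spinning with a hardware-friendly spin hint can be sketched in Java as follows. The class `BoundedSpinLock` and the parameter `maxSpins` are hypothetical; the sketch does not reproduce HotSpot's stack-allocated lightweight locks or its adaptive spinning heuristics. `Thread.onSpinWait()` (Java 9 and later) is only a hint, which a JVM may map to an instruction such as `pause`.

```java
import java.util.concurrent.atomic.AtomicReference;

// Illustrative sketch only: a non-reentrant spin lock with a bounded spin loop.
final class BoundedSpinLock {
    private final AtomicReference<Thread> owner = new AtomicReference<>(null);

    // Returns true if the lock was acquired within maxSpins attempts.
    // A false result tells the caller to fall back to a blocking strategy.
    boolean tryAcquire(int maxSpins) {
        Thread me = Thread.currentThread();
        for (int i = 0; i < maxSpins; i++) {
            // Read first to avoid needless CAS traffic on a held lock.
            if (owner.get() == null && owner.compareAndSet(null, me)) {
                return true;
            }
            Thread.onSpinWait(); // hint that this thread is busy-waiting
        }
        return false;
    }

    void release() {
        if (!owner.compareAndSet(Thread.currentThread(), null)) {
            throw new IllegalMonitorStateException("lock not held by this thread");
        }
    }
}
```

Bounding the loop corresponds to giving up on spinning and promoting the lock to the next contention level.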

These spinning particularities may motivate performing transformations to new component variants already at this lock contention level if the environment does not support spinning well enough throughout the technology stack, especially in environments where the JVM is not the only heavy process running on the same machine and contending for cycles. With emerging deployment models where many JVMs run on the same machine, spinning might be even more destructive than usual because of interference between JVMs, which further motivates transforming to a lock-free component earlier.

5.1.3 Contention level 3: heavyweight blocking lock

If bounded spinning on a lightweight lock was not enough, the lock is inflated to a heavyweight lock of level 3 that is able to block in the OS. A monitor object is allocated and installed into the header word (inflation) using CAS.

The heavyweight lock will also try spinning in a similar fashion as level 2, but once that fails, it will block the thread so that other threads may run instead. It is in particular this blocking event that contention sensors use to signal that locking probably is not the best fitting strategy any longer and that the application should transform to a better one.

The contention sensors need to monitor changes between different contention levels. Fortunately, since each contention level corresponds to a new type of lock being installed into the cell header, the performance cost of such contention monitoring is insignificant: it is not invoked for each lock operation (especially not on fast paths), but only on the slow paths when locks are promoted due to contention or demoted due to lack of contention. In practice, we chose to monitor only blocking events, because they occur when the contention becomes noticeable. This choice also ensures that the performance of the rest of the locking machinery remains untouched. The extra code path for monitoring has insignificant cost, as the cost of blocking far exceeds that of the monitoring code.
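The idea of monitoring only blocking events can be sketched at the library level with `java.util.concurrent.locks.ReentrantLock`. The wrapper class `MonitoredLock` is hypothetical; the paper's sensor lives inside the VM's locking machinery, whereas this sketch only imitates the principle that the fast path executes no monitoring code.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.locks.ReentrantLock;

// Illustrative sketch only: count blocking events, leave the fast path untouched.
final class MonitoredLock {
    private final ReentrantLock lock = new ReentrantLock();
    private final AtomicLong blockingEvents = new AtomicLong();

    void lock() {
        if (lock.tryLock()) return;       // fast path: no monitoring at all
        blockingEvents.incrementAndGet(); // slow path: we are about to block
        lock.lock();
    }

    void unlock() {
        lock.unlock();
    }

    // Would be read by a contention manager to decide on a transformation.
    long blockingEvents() {
        return blockingEvents.get();
    }
}
```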

5.2 Lock-free

In a lock-free component, sensing the absence of contention would be useful. In order to do this, we piggyback on a garbage collector (GC) and use an idea similar to normal lock deflation, i.e., adaptive locking that decides to demote a level 3 type lock and to install a lower level. The VM normally aggressively deflates locks that are not held during garbage collection. Similarly, even lock-free component variants could be transformed back to the locking variant optimized for a single-thread regardless of the actual contention, for a chance to eventually revise the assumption that contention is high. Obviously, this could be the wrong decision, causing an immediate (and unnecessary) transformation back to the concurrency favoured variant again. However, this only happens infrequently (once every GC cycle), and the amortized cost of such bad transformations is low.

Alternatively, the lock-free component creates a probe object that is garbage on creation (no references to it). Hence, the probe gets freed, i.e., finalized, by the garbage collector at the next GC cycle. Its finalizer, however, checks the ownership of a lock-free component. If the component indeed was exclusively owned by a single thread, the probe did not sense any contention.

Checking this exclusive ownership when the probe finalizes can be done in two ways. In the first approach, accurate probing, threads read a special volatile field of a lock-free component to determine its owner thread. In each operation, the calling thread checks whether the owner is NONE. If so, the thread attempts to install itself as owner safely using CAS. If the CAS fails, or if the owner is neither NONE nor the calling thread, then MULTIPLE is written to the owner field, symbolizing that the component is used by multiple threads. When triggered by the GC finalizer, the probe can then see that the status is NONE, a single thread, or MULTIPLE. This value stabilizes quickly and its cache line can be shared with no conflicts.
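Accurate probing of the owner field can be sketched as follows. The class `OwnerProbe` and the method names are hypothetical; the sketch assumes that repeated accesses by the thread already installed as owner leave the field unchanged, and it omits the finalizer-triggered probe object itself.

```java
import java.util.concurrent.atomic.AtomicReference;

// Illustrative sketch only: tracks whether one or several threads use a component.
final class OwnerProbe {
    static final Object NONE = new Object();
    static final Object MULTIPLE = new Object();

    // Holds NONE, the single owning Thread, or MULTIPLE.
    private final AtomicReference<Object> owner = new AtomicReference<>(NONE);

    // Called in the prologue of each operation of the component.
    void recordAccess() {
        Object seen = owner.get();
        Thread me = Thread.currentThread();
        // Stable states are only read, so the cache line can stay shared.
        if (seen == me || seen == MULTIPLE) return;
        if (seen == NONE && owner.compareAndSet(NONE, me)) return;
        // Either another thread owns the field or the CAS lost a race.
        owner.set(MULTIPLE);
    }

    // Read by the probe, e.g. once per GC cycle.
    Object status() {
        return owner.get();
    }
}
```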

In practice inaccurate probing is acceptable. Since monitoring is only providing hints for when to transform, the field can be updated using normal memory accesses for increased performance. This approximation could be a victim of data races and hence provide inaccurate hints. However, that does not affect the consistency of the component, only the hints used for the optimization.

The accurate and inaccurate probing techniques are similar to biased locking; they work well when a single instance is either contended or not. However, when contention changes rapidly, these techniques may detect the change to lower contention undesirably late, as they need to wait until the next garbage collection cycle, which could take arbitrarily long. In that case, active probing can be beneficial. This scheme calls an introspection probe in the prologue of each operation of the data structure. For a contended data structure, the cost of cache coherence traffic dominates. Therefore, the extra time spent performing local computations for probing is arguably cheap.

Our active probing mechanism maintains a ContentionStatus object in the lock-free data structure. It has a high resolution timestamp describing when it was created, and a pointer to the owner thread that created it. These two fields are set at creation, and do not change thereafter. Another field, last_check, tracks when this probe was last checked for contention. If a RESOLUTION amount of time has not yet passed since the last check, the monitoring routine exits. This is the common path of the monitoring routine. The RESOLUTION constant is picked so that the overhead of monitoring is kept minimal. We used 1024 nanoseconds in our experiments. The last field, contended, describes whether other threads have observed a ContentionStatus. When MONITORING_INTERVAL time has elapsed, the current ContentionStatus is examined. If it was not found to be contended, an exception is thrown to trigger invalidation and transformation to something more suitable. Otherwise, a new ContentionStatus is installed using CAS. In our experiments, a MONITORING_INTERVAL of 1 ms was used. The procedure is described with pseudo code in Algorithm 2.
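A possible Java rendering of the active probing routine is sketched below. It is a reading of the prose description rather than a transcription of Algorithm 2: the class names `ActiveProbe` and `LowContentionDetected`, the injectable clock, and the rule that a status counts as contended once a thread other than its owner observes it are assumptions made for illustration.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.LongSupplier;

// Hypothetical signal that asks the wrapper to transform to a lock-based variant.
final class LowContentionDetected extends RuntimeException {}

// Illustrative sketch only: probing in the prologue of each operation.
final class ActiveProbe {
    static final long RESOLUTION_NS = 1_024;              // value from the text
    static final long MONITORING_INTERVAL_NS = 1_000_000; // 1 ms, from the text

    private static final class ContentionStatus {
        final long created;        // set at creation, never changed
        final Thread owner;        // set at creation, never changed
        volatile long lastCheck;
        volatile boolean contended;

        ContentionStatus(long now, Thread owner) {
            this.created = now;
            this.owner = owner;
            this.lastCheck = now;
        }
    }

    private final LongSupplier clock; // e.g. System::nanoTime; injectable for tests
    private final AtomicReference<ContentionStatus> status;

    ActiveProbe(LongSupplier clock) {
        this.clock = clock;
        this.status = new AtomicReference<>(
            new ContentionStatus(clock.getAsLong(), Thread.currentThread()));
    }

    void check() {
        ContentionStatus s = status.get();
        long now = clock.getAsLong();
        if (now - s.lastCheck < RESOLUTION_NS) return; // common path
        s.lastCheck = now;
        Thread me = Thread.currentThread();
        if (s.owner != me) s.contended = true; // observed by another thread
        if (now - s.created < MONITORING_INTERVAL_NS) return;
        if (!s.contended) throw new LowContentionDetected();
        // Still contended: start a fresh monitoring interval.
        status.compareAndSet(s, new ContentionStatus(now, me));
    }
}
```

In production use the clock would be `System::nanoTime`; the wrapper of the component would catch `LowContentionDetected` and start invalidation.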

5.3 Transactional memory

Monitoring contention in TM is particularly trivial: simply count the number of transactions free from data conflicts, which must be tracked anyway, and feed it into the contention manager.

6 Safe component invalidation (ii)

Knowing that a particular component variant is not suitable is not enough; we also need to address issue (ii) and invalidate the current unsuitable component variant safely and efficiently. This component variant invalidation invalidates a component variant in the sense that no operation (read or write) may complete or harm the consistency of the component once invalidation has finished. We provide safe component variant invalidation mechanisms separately for each synchronization mechanism.

Note that in order to instantiate and initialize a new, more suitable component variant, it must still be possible to read the state of the invalidated component variant. If all reading methods throw exceptions, it becomes impossible to read the last valid state and, hence, installing a new component variant is not possible either. Therefore, every component variant must provide a special yet general read-only version, e.g., a read-iterator, which is only used when the component variant is rendered invalid and does not need protection. This read-iterator is used in the method Representation clone(Representation), which copies the state of an invalidated representation to a new representation variant.

6.1 Locks

A general and natural solution to invalidating a lock-based component variant is making lock invalidation a part of the locking protocol. An invalidated lock will make subsequent lock and unlock operations throw an exception taking the execution back to the abstract component wrapper.

Lock invalidation is best done on inflated locks for two reasons. First, we do not care about taking a slow path to the invalidated locks as lock invalidation is rare and happens only when transformation is due. Second, we only invalidate locks due to even higher contention when they have been inflated already.

The pseudo code shown in Algorithm 3 sketches the implementation ideas. When a lock is inflated (line 16) from a spin lock appropriate for contention level 2 to a blocking lock for contention level 3, it can be changed safely, and in particular invalidated, once the current thread has been established as the owner with CAS (lines 24–29). Once the inflated blocking lock has been acquired, and while still holding it, we call the contention manager (line 19). The contention manager of the self-adaptive concurrent component will then trigger transformation and invalidate the lock by calling the invalidation callback (lines 1–6). It changes the owner from its own thread to a sentinel value corresponding to the real owner tagged with a single extra bit that denotes that the monitor has been invalidated (line 4).

After invalidation, the faster JIT-compiled locking paths of other threads may optimistically try biased locking or spinning to get the lock, but they always fail to acquire the lock and ultimately all threads take the slow path because the owner field is never cleared. In that slow path, they will ultimately see that the lock has been invalidated because the owner field is tagged (lines 30–32). By now all threads will fail to enter the critical section, and instead compete for taking the transformation lock in the abstract component wrapper to transform to a more suitable component variant.
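The effect of a tagged owner field can be imitated at the library level. The sketch below is not Algorithm 3: the class `InvalidatableLock`, the exception `LockInvalidated`, and the use of a sentinel object instead of a tag bit are assumptions, and waiting threads spin rather than block in order to keep the example short.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical exception that returns control to the abstract component wrapper.
final class LockInvalidated extends RuntimeException {}

// Illustrative sketch only: a lock whose owner field is never cleared once invalidated.
final class InvalidatableLock {
    // Stands in for "the owner tagged with an invalidation bit".
    private static final Object INVALID = new Object();

    private final AtomicReference<Object> owner = new AtomicReference<>(null);

    void lock() {
        Thread me = Thread.currentThread();
        while (!owner.compareAndSet(null, me)) {
            // Slow path: the fast attempt failed, so check for invalidation.
            if (owner.get() == INVALID) throw new LockInvalidated();
            Thread.onSpinWait();
        }
    }

    void unlock() {
        if (owner.compareAndSet(Thread.currentThread(), null)) return;
        if (owner.get() == INVALID) throw new LockInvalidated();
        throw new IllegalMonitorStateException("lock not held by this thread");
    }

    // Must be called by the current owner, i.e. while holding the lock.
    void invalidate() {
        if (!owner.compareAndSet(Thread.currentThread(), INVALID)) {
            throw new IllegalMonitorStateException("only the owner may invalidate");
        }
    }
}
```

Because the owner field never returns to the unlocked state, every later attempt to acquire the lock fails and ends in the invalidation check.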

6.2 Lock-free

For discussing lock-free algorithms, we first need to differentiate linearizing from non-linearizing CAS operations. A linearizing CAS atomically commits the change made by an operation at a linearization point (Herlihy and Wing 1990) and either fails to commit due to contention (and hence making the wanted change not visible at all to other threads) or succeeds (and hence making the new state of the component completely visible to all other threads). There may be other, non-linearizing CAS operations that update the component lazily and do not hamper the consistency of the component.

A non-linearizing CAS operation does not need to be visible at all, as the consistency of the data structure is not harmed by its succeeding or failing according to the algorithm specification.

Operations of lock-free components are typically linearizable and we need to make sure that state changes of succeeding operations are copied to and that failing operations are repeated on the new component variant.

Linearizability is commonly defined in the following way:

Definition 1

All linearizable function calls have a linearization point at some instant between their invocation and their response. All functions appear to occur instantly at their linearization point, behaving as specified by the sequential definition.

Sequential consistency (Lamport 1979) ensures that the observable events in program order are observed in the same order on all processors. Linearizability is strictly stronger than sequential consistency. Each operation has a linearization point where the operation logically either completely succeeds or completely fails, hence, making the whole operation logically appear to happen instantaneously. Other processors will never observe partially completed operations from other threads that violate consistency. For writing operations, this linearization point is typically an atomic sequentially consistent CAS operation (cf. Algorithm 1) that atomically either writes a new value, hence, publishes the result of the whole operation, or fails, depending on whether the previous value is as expected, as required for consistency. Similarly, for reading operations, the linearization point is typically a load.

We exploit the linearization points of the lock-free component variants to invalidate them. All the linearization points go through operations on atomic references. We provide our own atomic reference class, which has special load and CAS operations used as linearization points for all operations of lock-free data structures. These special atomic references can be irreversibly invalidated. Once an atomic reference has been invalidated, any subsequent linearizing operation will throw an exception that takes control back to the abstract component wrapper to handle the fact that the variant has been invalidated, by restarting the whole operation in the new component variant once transformation has finished. We assume lock-free data structures to be composed of object nodes linked together with invalidatable atomic references. Values are captured in value objects that are likewise linked from (reference) object nodes with atomic references.

By design, all atomic references transitively reachable from the root (data structure wrapper node) to the leaves (references to the elements) are at all points in time completely encapsulated within the lock-free data structure and are never exposed outside of the data structure. That is, no method on the data structure returns or otherwise exposes any of the internal nodes used by the lock-free component variant outside of itself. This is important for encapsulating the lock-free machinery from the outside world.

Invalidating an atomic reference (cf. Algorithm 4) is itself a lock-free and linearizable operation. A CAS tries to install an INVALIDATED sentinel value. This is the linearization point of the operation—it either fails and retries or it succeeds and the reference is invalidated. It continues in a loop until the atomic reference is invalidated in a lock-free fashion.

When a reference has been invalidated, the value that was there before the invalidation is remembered and exposed through a special load_remembered() API operation (cf. Algorithm 5) that can be used in the clone(Representation) method to read the state of the reference, used only for the purpose of iterating through the elements to construct the new component variant, once the whole data structure has been invalidated.

Invalidation means that all subsequent load and linearizing CAS operations (cf. Algorithm 6) on the atomic reference will throw exceptions and fail their operations.

Note that all loads used by the data structure operations, whether linearization points or not, will throw an exception if the atomic reference has been invalidated. Consequently, an operation can never obtain the INVALIDATED sentinel value and use it as the expected value of a CAS. Therefore, CAS operations, whether linearizing (explicitly checking for invalidation) or not, will never succeed after invalidation: a CAS can only succeed if the current value is also the expected value. The INVALIDATED sentinel value is an encapsulated secret of the atomic reference class; it is never exposed to the outside world. As a result, it is impossible even for a non-linearizing CAS to ever succeed after invalidation. Therefore, invalidated atomic references are immutable.
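An invalidatable atomic reference with these properties can be sketched on top of `java.util.concurrent.atomic.AtomicReference`. The class `InvalidatableRef`, its method names, and the private wrapper object used as sentinel are assumptions made for illustration; the sketch is not a transcription of Algorithms 4 to 6. As an additional assumption, `loadRemembered()` also returns the current value when the reference has not been invalidated.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical exception that returns control to the abstract component wrapper.
final class ReferenceInvalidated extends RuntimeException {}

// Illustrative sketch only: an atomic reference that can be irreversibly invalidated.
final class InvalidatableRef<T> {
    // Private sentinel wrapper; it remembers the last valid value.
    private static final class Invalidated {
        final Object remembered;
        Invalidated(Object remembered) { this.remembered = remembered; }
    }

    private final AtomicReference<Object> cell;

    InvalidatableRef(T initial) { cell = new AtomicReference<>(initial); }

    // Lock-free: retries until the sentinel is installed.
    void invalidate() {
        while (true) {
            Object cur = cell.get();
            if (cur instanceof Invalidated) return;
            if (cell.compareAndSet(cur, new Invalidated(cur))) return;
        }
    }

    @SuppressWarnings("unchecked")
    T load() {
        Object cur = cell.get();
        if (cur instanceof Invalidated) throw new ReferenceInvalidated();
        return (T) cur;
    }

    // Intended for copying state into the new variant after invalidation.
    @SuppressWarnings("unchecked")
    T loadRemembered() {
        Object cur = cell.get();
        return (T) (cur instanceof Invalidated ? ((Invalidated) cur).remembered : cur);
    }

    // Linearizing CAS: distinguishes an ordinary failure from invalidation.
    boolean linearizingCas(T expected, T update) {
        if (cell.compareAndSet(expected, update)) return true;
        if (cell.get() instanceof Invalidated) throw new ReferenceInvalidated();
        return false;
    }

    // Non-linearizing CAS: only reports failure; it cannot succeed after invalidation.
    boolean cas(T expected, T update) {
        return cell.compareAndSet(expected, update);
    }
}
```

Since the sentinel wrapper is private to the class, no caller can pass it as an expected value, so neither kind of CAS can succeed once the reference has been invalidated. Note that `compareAndSet` compares by reference identity.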

The reason we still distinguish between linearizing and non-linearizing CAS is that, even though both fail after invalidation, reporting a failed CAS is semantically subtly different from throwing an invalidation exception. An algorithm could have a linearizing CAS in a loop that expects some value that was not loaded from the atomic reference, e.g., sentinel values known by the algorithm or NULL. Such an algorithm would get stuck in an infinite loop after invalidation unless a linearizing CAS is used to explicitly break the loop after invalidation, rather than just reporting failure to CAS. Typically, algorithms do not even check the success of CAS operations that are not used at linearization points, e.g., those that lazily update tail pointers in lock-free linked lists; it does not matter whether such a CAS succeeded or not. In that case it is more appropriate to use a non-linearizing CAS to save a few instructions.

Before transforming a lock-free component into another variant, we need to ensure that all operations performed on the lock-free component variant will be blocked by invalidated references. Therefore, all contained atomic references must be invalidated. The data structures of lock-free components consist of objects (nodes) accessed and connected via atomic references (edges). Invalidation is done by tracing through the lock-free component’s data structures and invalidating all reachable atomic references.

6.2.1 Consistency of lock-free invalidation

To guarantee consistency of the lock-free component once a variant transformation has been decided, we must guarantee that, once tracing (and, hence, invalidation) has started, operations either successfully reach a linearization point, or fail and retry in the new component variant when the transformation has finished. We must also guarantee that tracing (invalidation) will terminate, and that once it has terminated, it holds that (1) all objects reachable from the data structure root node have been invalidated, and (2) all subsequent operations on the invalidated data structure will fail and instead run the operation on the new component variant once the transformation has finished.

If the data structure were immutable or there were no concurrently executing threads (serial tracing), tracing through such a data structure object graph in a consistent way would be easy. Any depth-first search (DFS) or breadth-first search (BFS) algorithm would do.

Guaranteeing consistency of the tracing while concurrent threads are executing is trickier. Tracing through the data structure to invalidate atomic references while other threads execute an algorithm works analogously to GC tracing through all live objects concurrently with mutators. In GC, on-the-fly marking advances a wavefront of visited objects. Instead of marking found objects as live to separate them from the garbage objects in GC, they are now marked as invalidated. This guarantees a stable snapshot of the transitive closure of the data structure. Because of the strong similarity to on-the-fly marking, we reuse Dijkstra’s tricoloring scheme (Dijkstra et al. 1978) for reasoning about this. It describes a framework of reasoning in which nodes can be in one of three states (colors) at any time during tracing.

White nodes have not been visited by the tracing algorithm yet. Gray nodes have been noted by the tracing algorithm, but their references have not been processed yet, i.e., in our case, their edges have not been invalidated. Black nodes have been visited by the tracing algorithm and their references have been processed; hence, they do not need to be visited by the tracing algorithm again.

Our tracing and invalidation algorithm can then be described like this: The first node to be traced is the lock-free component variant itself. It is shaded gray and pushed to a stack. In a loop, the stack top node is popped and visited until the stack is empty. A node is visited iff it is not already black (i.e., already visited). For each node visited, all its atomic references are invalidated and, iff the referents are white, they are shaded gray and pushed to the stack (black referents do not need to be visited again and gray referents will eventually be visited as they are on the stack already). Finally, the visited node is shaded black.
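The tracing loop described above can be sketched in Java as follows. This is a serial illustration only: the types TraceColor, TraceEdge, TraceNode, and InvalidationTracer are hypothetical, an edge is modeled by a plain flag instead of an invalidatable atomic reference, and concurrent mutators are not modeled.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Tricolor states of a node during tracing. */
enum TraceColor { WHITE, GRAY, BLACK }

/** Hypothetical edge: a stand-in for an invalidatable atomic reference. */
final class TraceEdge {
    final TraceNode target;               // referent; may be null
    volatile boolean invalidated = false; // set by the tracer
    TraceEdge(TraceNode target) { this.target = target; }
}

/** Hypothetical node that owns its outgoing edges. */
final class TraceNode {
    final List<TraceEdge> edges = new ArrayList<>();
    TraceColor color = TraceColor.WHITE;
    void pointTo(TraceNode n) { edges.add(new TraceEdge(n)); }
}

final class InvalidationTracer {
    /** Invalidates all edges reachable from root; returns the number of nodes visited. */
    static int invalidateAll(TraceNode root) {
        Deque<TraceNode> stack = new ArrayDeque<>();
        root.color = TraceColor.GRAY;
        stack.push(root);
        int visited = 0;
        while (!stack.isEmpty()) {
            TraceNode node = stack.pop();
            if (node.color == TraceColor.BLACK) continue; // visited before
            for (TraceEdge edge : node.edges) {
                edge.invalidated = true;                  // invalidate the reference
                TraceNode referent = edge.target;
                if (referent != null && referent.color == TraceColor.WHITE) {
                    referent.color = TraceColor.GRAY;     // note the referent once
                    stack.push(referent);
                }
            }
            node.color = TraceColor.BLACK;                // all references processed
            visited++;
        }
        return visited;
    }
}
```

Because only white referents are shaded gray and pushed, reference cycles do not cause a node to be pushed twice, which is what makes the loop terminate on a graph that is no longer extended.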

A GC tracing algorithm is complete if, upon termination, all reachable objects have been visited (shaded black) and, hence, separated from garbage objects (shaded white) that can be collected. This is a challenge if tracing and mutations of the object graph happen concurrently. Note that our tracing for invalidating references of the lock-free data structure also happens concurrently with mutating accesses to this data structure. Pirinen (1998) found that any concurrent tracing GC algorithm that guarantees GC-completeness enforces one of two invariants on the concurrent execution to guarantee consistency of the tracing:

- The strong tricolor invariant: There is no edge from a black node to a white node.

- The weak tricolor invariant: All white nodes pointed to by a black node are also reachable from a gray node through a chain of white nodes.

Algorithms enforcing the strong tricolor invariant disallow new edges from black nodes to white nodes to guarantee GC-completeness, while the weak tricolor invariant allows new edges from black nodes to white nodes as long as those white objects are somehow reachable later in the tracing.

Our algorithm enforces the strong tricolor invariant. If a concurrent thread tries to write any edge at all from a black node with the linearizing CAS (cf. Algorithm 6), the CAS will fail to commit and an invalidation exception will be thrown. Therefore, the scenario in which an edge from a black node to a white node is concurrently written trivially cannot happen, because black nodes have become immutable.

Our tracing algorithm for invalidating all reachable nodes in a lock-free data structure is complete in the sense that the tracing, once terminated, has shaded all reachable nodes black regardless of concurrent mutation (attempts) to the data structure. This can be shown analogously to established and long-understood theoretical frameworks for concurrent tracing by a GC. In particular, Dijkstra et al. (1978) proved that their tracing algorithm could guarantee GC-completeness, provided that the strong tricolor invariant is enforced by GC and mutators. Barriers (mutator code run when writing references to the heap, referred to as actions in the paper) for mutators were described that would enforce the invariant, and it was then proven that this invariant on the concurrent execution makes tracing correct and complete. It was later mechanically verified (Hawblitzel and Petrank 2009; Gammie et al. 2015) that barriers of practical GC algorithms really do enforce these tricolor invariants, and then proved by extension that some example tracing algorithms are GC-complete and correct.

For the interested reader, there are formal proofs of invalidation termination, invalidation completeness, and data structure consistency in Appendix A. These proofs are sketched below.

The termination of invalidation is guaranteed iff the data structure instance is not concurrently extended endlessly, as reference cycles are broken by not pushing black and gray referents on the stack again. The trick in proving termination is to recognize that the atomic references are completely encapsulated within the lock-free component variant. Therefore, all subsequent operations after the root has been invalidated will fail, and only latent operations that started before invalidation has started need to be dealt with. This is formalized in Section A. I.

The proof of invalidation completeness is analogous to the proofs of GC-completeness of the GC tracing algorithms: (1) black nodes cannot change, as that would require changing outgoing atomic references, which are all invalidated; (2) gray nodes can still change while they are on the stack or being visited, but such changes compete with the attempts to invalidate their references, which are retried in a loop, and eventually all outgoing references are invalidated and cannot change; (3) a wavefront of gray and then black coloring will eventually color all nodes black, making the whole data structure immutable. This is formalized in Section A. II.

The consistency of the whole data structure instance is guaranteed by the design of the lock-free data structure type. Operations and algorithms are designed to be correct if reads and writes fail at linearization points; they just do not make progress then. Once invalidation has been initiated, they will all fail eventually. Black nodes are guaranteed to block all subsequent linearization points by concurrent threads. All operations of these threads that have failed, i.e., did not logically finish atomically, will wait for the transformation to complete and then eventually retry on the new component variant. This is formalized in Section A. III.

6.2.2 Lock-free invalidation implementation

While the principles of invalidation have been discussed before, we still need to fit them into the object-oriented framework of self-adaptive components. Lock-free components are built using our own IAtomicReference \({\textsf {<}}\) T \({\textsf {>}}\) that can be invalidated. It has special get() and load() methods (the implementation principles are described in Algorithm 6) that will throw an exception when invalidated to block linearization points as previously described.

The generic type argument T is required to be a subclass of Object. An internal atomic reference assumes the Object type, and load() casts the internal load from Object to T. A special internal class Invalidated \({\textsf {<}}\) T \({\textsf {>}}\) represents invalidated references, and load() will trigger a class cast exception (since Invalidated \({\textsf {<}}\) T \({\textsf {>}}\) is not a T) indicating that the reference has become invalid. Similarly, the linearizingCAS() operation returns immediately if the internal CAS worked; otherwise, it checks whether it failed due to invalidation by loading the current value and checking if it is an Invalidated \({\textsf {<}}\) T \({\textsf {>}}\) object.

An invalidate() method in IAtomicReference \({\textsf {<}}\) T \({\textsf {>}}\) invalidates a reference by installing an Invalidated \({\textsf {<}}\) T \({\textsf {>}}\) object containing the original value using CAS in a loop that terminates when CAS succeeds. To invalidate a complete lock-free component variant, it is traversed as described above using invalidate() calls on all existing references. When the whole representation variant has been traversed, it is certain that the component variant is logically invalidated in the sense that any linearization point for any operation will fail and throw an exception.

As for the other synchronization mechanisms, there must be a read-only variant that will not throw exceptions, so that the old invalidated representation variant can be copied to create a new one. This is done with the special T loadRemembered() method (cf. Algorithm 5) implemented in IAtomicReference \({\textsf {<}}\) T \({\textsf {>}}\), which is used to implement the clone(Representation) methods for changing the representation variants.
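A minimal sketch of such an invalidatable reference, built on java.util.concurrent.atomic.AtomicReference, could look as follows. The names InvalidatableRef and InvalidatedReferenceException are hypothetical and this is not our actual IAtomicReference code. As a deliberate deviation, the sketch detects invalidation with an explicit instanceof check instead of a class cast exception, because an unchecked cast to a type variable is erased in Java and would not throw inside load().

```java
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical exception thrown at linearization points after invalidation. */
final class InvalidatedReferenceException extends RuntimeException {}

/** Hypothetical invalidatable atomic reference (assumption, not the paper's code). */
final class InvalidatableRef<T> {
    /** Internal wrapper marking invalidation; it remembers the last value. */
    private static final class Invalidated {
        final Object remembered;
        Invalidated(Object remembered) { this.remembered = remembered; }
    }

    private final AtomicReference<Object> cell;

    InvalidatableRef(T initial) { cell = new AtomicReference<>(initial); }

    /** Normal load: throws once the reference has been invalidated. */
    @SuppressWarnings("unchecked")
    T load() {
        Object v = cell.get();
        if (v instanceof Invalidated) throw new InvalidatedReferenceException();
        return (T) v;
    }

    /** Returns immediately on success; on failure, checks for invalidation. */
    boolean linearizingCas(T expected, T update) {
        if (cell.compareAndSet(expected, update)) return true;
        if (cell.get() instanceof Invalidated) throw new InvalidatedReferenceException();
        return false;
    }

    /** Installs the wrapper with CAS in a loop that ends when the CAS succeeds. */
    void invalidate() {
        while (true) {
            Object v = cell.get();
            if (v instanceof Invalidated) return;                  // already invalidated
            if (cell.compareAndSet(v, new Invalidated(v))) return; // value remembered
        }
    }

    /** Read-only access that never throws; used when copying the old variant. */
    @SuppressWarnings("unchecked")
    T loadRemembered() {
        Object v = cell.get();
        return (T) (v instanceof Invalidated ? ((Invalidated) v).remembered : v);
    }
}
```

Storing the remembered value inside the wrapper object means that invalidation and remembering happen in one atomic step, so a concurrent writer either commits before the wrapper is installed or fails afterwards.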

6.3 Transactional memory

Implementing safe component variant invalidation for TM is done by simply inserting a data dependency at the beginning of each transaction: the transaction checks a flag indicating whether the representation variant is invalid. If it is, the transaction throws an exception; otherwise, it continues executing. When the component variant is invalidated, the flag is set, causing current transactions to fail. In a strongly consistent TM the flag is set normally, while in a weakly consistent TM the setting of the flag is itself wrapped in a transaction. If the flag checking operation’s transaction failed, it checks if the invalid flag is set. If so, it throws an exception like in the locking approach. Unlike the locking invalidation, we unfortunately do not know how to make this free of charge in the common case, as introducing a data dependency is integral to making the transaction fail.

7 Safe component transformation (iii)

For all synchronization mechanisms, we can sense inappropriate component variants due to changing contention (i) and safely invalidate them (ii). Finally, this section addresses issue (iii) and discusses how to transform components safely.

In order to complete the transformation there must be a way of handling transformation races. We simply solve that uniformly for all synchronization mechanisms by wrapping the actual transformation in a synchronized block with a transformation lock owned by the contention manager. Alternatively, we could employ the lock-free GC copying algorithm by Österlund and Löwe (2015). However, we argue that if contention is so heavy that this lock would become a bottleneck itself, then

1. Choosing to transform away from a locking component variant is probably a good decision anyway. The lock of the component is the actual bottleneck that is eventually removed by the transformation.

2. Choosing to transform away from a lock-free component variant is probably a bad decision in the first place and the contention manager should simply not trigger this transformation.

To safely transform to a new component variant, a special read-only transformation iterator is used in the Representation clone(Representation) methods to iterate over all the elements of the old component variant and add them one-by-one to the new component variant.

The read-only transformation iterator has its own implementation for each component variant, depending on what measures were taken to invalidate the state of the data structure. The read-only transformation iterator must be able to read this invalidated state.
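The interplay of the transformation lock and the read-only transformation iterator might be sketched as follows. All names (VariantRepr, ListVariant, TransformingManager) are hypothetical, and two details are assumptions made for this sketch rather than statements about our implementation: the old variant is invalidated before the lock is taken, and a transformation race is resolved by letting the losing thread simply adopt the variant installed by the winner.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Supplier;

/** Hypothetical representation variant; all names are assumptions. */
interface VariantRepr<E> {
    void add(E e);                  // normal operation; fails once invalidated
    void invalidate();              // idempotent
    Iterator<E> readOnlyIterator(); // can read the invalidated state
}

/** Toy lock-based variant backed by a list. */
final class ListVariant<E> implements VariantRepr<E> {
    private final List<E> items = new ArrayList<>();
    private boolean invalid = false;

    public synchronized void add(E e) {
        if (invalid) throw new IllegalStateException("variant invalidated");
        items.add(e);
    }

    public synchronized void invalidate() { invalid = true; }

    // Once invalidated, the list is immutable, so reading needs no lock.
    public Iterator<E> readOnlyIterator() { return items.iterator(); }
}

/** Sketch of a contention manager that serializes transformations. */
final class TransformingManager<E> {
    private final Object transformationLock = new Object();
    private volatile VariantRepr<E> current;

    TransformingManager(VariantRepr<E> initial) { current = initial; }

    VariantRepr<E> current() { return current; }

    /** Replaces old by a fresh variant unless another thread already did so. */
    VariantRepr<E> transform(VariantRepr<E> old, Supplier<VariantRepr<E>> factory) {
        old.invalidate();
        synchronized (transformationLock) {
            if (current != old) return current; // lost the transformation race
            VariantRepr<E> fresh = factory.get();
            for (Iterator<E> it = old.readOnlyIterator(); it.hasNext(); ) {
                fresh.add(it.next());           // copy elements one by one
            }
            current = fresh;
            return fresh;
        }
    }
}
```

An operation that fails on the invalidated variant would then re-read current() and retry there; that retry logic is left out of the sketch.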

7.1 Lock-based component variants

For lock-based component variants, clone may simply use a normal iterator without a lock as the read-only transformation iterator. Apart from the transformation lock, no other lock is necessary to read the state, since the data structure is immutable once invalidated.

7.2 Lock-free component variants

Once a lock-free component variant has been invalidated, any normal access to the invalidated atomic references will throw an exception. Therefore, the read-only transformation iterator walks through the data structure accessing the state using the special loadRemembered() method instead of the normal load (that would throw exceptions), in order to read the invalidated state.

7.3 Transactional memory component variants

Assuming write-buffered TM, the read-only transformation iterators and, hence, the clone methods are implemented by using plain memory read operations.

8 Evaluation

All benchmarks were run on a 2 socket Intel® Xeon® CPU E5-2665 with 2.40 GHz, 16 cores, 32 hyperthreads, 256 KiB L2 cache (per core), 20 MiB L3 cache (shared), 8 x 4 GiB DIMM 1600 MHz, running on Linux kernel version 4.4.0-31. Benchmarks were run on our own modified OpenJDK 9.

By default biased locking would not get activated until too late. Therefore, it was forced to start immediately. Escape analysis was disabled because using static analysis to elide locks in the benchmarks would be unfair. In practice, our transformation components would benefit from such optimizations. The JVM arguments were:

- -XX:+UseBiasedLocking

- -XX:BiasedLockingStartupDelay=0

- -XX:-DoEscapeAnalysis