Abstract

In a complex group decision-making (GDM) process, decision makers (DMs) usually encounter some uncertainties. The uncertainty experienced by DMs could be characterized by the non-reciprocal property of pairwise comparisons. In this paper, the concept of non-reciprocal pairwise comparison matrices (NrPCMs) is introduced to generally capture the situation with the breaking of reciprocal property. The transformation relation between NrPCMs and interval multiplicative reciprocal matrices (IMRMs) is addressed. It is identified that NrPCMs together with IMRMs are inconsistent in nature. Then a consistency index of NrPCMs is constructed according to cosine similarity measures of two row/column vectors. An optimization-based method for eliciting priorities from NrPCMs is proposed. The properties of the proposed consistency index and prioritization method are investigated. Furthermore, a model of building consensus in GDM with NrPCMs is formed, where an optimization model is constructed and solved using a particle swarm optimization (PSO) algorithm. Some comparisons with the existing methods are reported by carrying out numerical examples. The obtained results reveal that NrPCMs provide a new yet effective way to capture the uncertainty experienced by DMs.

1 Introduction

As a typical choice model, the Analytic Hierarchy Process (AHP) has been developed and applied for more than forty years [1, 2]. The basic mathematical tool of the AHP model is the pairwise comparison matrix (PCM), which is produced by comparing criteria and alternatives in pairs. As shown in the axiomatic foundation of AHP [3], a PCM is usually assumed to have the multiplicative reciprocal property on grounds of mathematical intuition. It is further noted that decision makers (DMs) always encounter some uncertainty in the process of comparing alternatives [4, 5]. An uncertainty-induced axiomatic foundation of the AHP model has been proposed [6], where the concept of reciprocal symmetry breaking was introduced to characterize the uncertainty. Moreover, the concept of interval multiplicative reciprocal matrices (IMRMs) has been proposed to capture the uncertainty experienced by DMs [7]; IMRMs can be derived from PCMs in which the reciprocal property is broken.

It is worth noting that there is an inherent relationship between the concepts of PCMs and IMRMs. IMRMs extend PCMs by considering the uncertainty of human-originated information [7]. It is further found that an IMRM can be decomposed into two matrices without multiplicative reciprocal property and can be derived from a PCM with reciprocal symmetry breaking [6]. However, the uncertainty-induced axiomatic foundation in [6] only considers the case with 0 < aij ⋅ aji ≤ 1, where aij denotes the comparison ratio between alternatives xi and xj for i,j ∈ In = {1,2,⋯ ,n}. In fact, under reciprocal symmetry breaking, the mixed situation with 0 < aij ⋅ aji ≤ 1 and 1 < akl ⋅ alk < 81 could emerge. For convenience, the mixed matrices without multiplicative reciprocal property are referred to as non-reciprocal pairwise comparison matrices (NrPCMs). It is worth mentioning that the non-reciprocal property of pairwise comparisons has been considered theoretically and experimentally [8, 9]. Here we further link the non-reciprocal property to the uncertainty of decision information. That is, when the multiplicative reciprocal property of PCMs is relaxed, the generalized NrPCMs can be used to characterize decision information under uncertainty. Moreover, producing a NrPCM requires the same workload as giving an IMRM, and NrPCMs exhibit a simpler form than IMRMs. Motivated by the above considerations, we focus on the concept of generalized NrPCMs and its application to group decision making (GDM), since GDM under uncertain environments remains a research hotspot [10,11,12,13,14,15]. In particular, the consistency index and prioritization method are studied for NrPCMs. The main contributions are as follows:

- The concept of NrPCMs is proposed in a general form. The transformation relation between IMRMs and NrPCMs is carefully addressed.
- The consistency index of NrPCMs is proposed. It is identified that NrPCMs are inconsistent in nature due to the lack of the multiplicative reciprocal property.
- The priority vector is derived from NrPCMs by constructing an optimization model.
- The proposed consistency index and prioritization method are used to build a consensus model in GDM, where individual NrPCMs are optimized using the particle swarm optimization (PSO) algorithm.

The paper is organized as follows. In Section 2, a literature review is provided to give a solid foundation for the present study. Section 3 introduces some concepts related to PCMs, IMRMs and NrPCMs, respectively. The relationship between IMRMs and NrPCMs is studied and some interesting properties are reported. In Section 4, a consistency index is proposed for NrPCMs and some comparisons are given by numerical computations. Then the prioritization method is constructed to derive the priority vector from NrPCMs. Section 5 gives a consensus model with NrPCMs and offers some discussions on the effects of the parameters and comparisons with the existing methods. Finally, the conclusions and future research direction are covered in Section 6.

2 Literature review

Our contributions are related to the line of work that addresses consistency index, prioritization method and consensus reaching model [11, 16, 17]. It is found that DMs always provide inconsistent PCMs [1]. In order to quantify the inconsistency degrees of PCMs, an index should be proposed. The earliest contribution is attributed to Saaty’s consistency index (CI) and consistency ratio (CR) [1], which are constructed from a global viewpoint to measure the deviation degree of inconsistent PCMs from a consistent one. The geometric consistency index (GCI) of PCMs was formalized in [18] using the average value of the squared difference between the logarithm of entries and the corresponding ratio of priorities. The consistency index C∗ of a PCM was determined by the average of the determinants of 3 × 3 submatrices [19]. A statistical approach was proposed to measure the consistency level of PCMs in [20]. The cosine similarity measures of two column vectors in a PCM were used to obtain the cosine consistency index (CCI) in [21]. The CCI was further extended to the double cosine similarity consistency index (DCSCI) by considering row/column vectors in a PCM [22]. In general, the consistency indexes of PCMs have been analyzed and compared using an axiomatic approach [23]. Recently, the distance-based method has been used to measure the consistency and transitivity degrees of pairwise comparisons [24]. It is seen from the above analysis that the consistency indexes of PCMs have been widely studied from various viewpoints. Moreover, consistency indexes of interval-valued comparison matrices have also attracted much attention [25]. For example, Conde and Pérez [26] have defined a consistency index of IMRMs by considering the existence of an associated PCM with consistency. Dong et al. [27] have constructed two consistency indexes of interval-valued comparison matrices, where the deviation degree of a PCM from a consistent one is considered. Liu et al. [28] have given a consistency index of IMRMs by considering the boundary matrices and the permutations of alternatives. The result in [28] shows that a consistent PCM is the limiting case of an IMRM. In addition, the cardinal and ordinal consistencies of fuzzy preference relations have also attracted a great deal of attention with some investigations of consistency index [29,30,31,32]. Here we construct the consistency index of NrPCMs and consider its extension to IMRMs.

Moreover, it is important to derive the priority vector from a preference relation. When considering a PCM, many methods have been proposed [33]; and here they are split into two classes. One is the direct computation methods using a mathematical theory. For example, based on the matrix eigenvalue theory, the eigenvector method was considered to be the only way to give the priorities and correct ranking of alternatives [34]. According to the statistical theory, the scaling method was proposed to elicit the priorities of alternatives [35]. The other is the distance-based optimization methods by constructing various objective functions. For instance, the logarithmic least squares method was proposed by Crawford and Williams to obtain the geometric mean priority vector of alternatives [36]. By considering the singular value decomposition (SVD) of a matrix, the priorities of alternatives were determined by explicitly solving an optimization problem [37]. A linear goal programming model was established to derive the priorities in [38] from a PCM. By maximizing the cosine similarity degree of two row vectors in a PCM, the priority vector was elicited in [21]. The cosine maximization method in [21] has been further extended to the double cosine similarity maximization method in [22]. The extended logarithmic least squares method was proposed to derive individual and collective priority vectors from multiplicative preference relations with self-confidence in [39]. In addition, when deriving the priority vector from IMRMs, the prioritization methods of PCMs have been extended to propose the corresponding techniques. It is seen that the sampling method has been given for deriving the interval weights from IMRMs [7]. The convex combination method has been proposed to directly compute the interval weights from an IMRM [28, 40]. Following the ideas of CI and GCI, two methods have been used to determine the interval weights of alternatives from IMRMs [41]. 
Recently, the eigenvector method has been further applied to derive the interval priority vector from IMRMs [42]. In addition, many mathematical programming models have been constructed to elicit the priority vector from IMRMs [43, 44]. In the present study, an optimization model will be established to elicit the priority vector from NrPCMs.

At the end, let us briefly review consensus reaching models in GDM. In order to achieve a widely accepted solution to a GDM problem, the consensus of experts should be considered [11, 45]. The consensus level is always measured by proposing an index or characterized by a function. For example, based on the distance between eigenvectors of individual and collective PCMs, the group consensus was computed in [10, 46]. The similarity degree between individual and collective fuzzy preference relations was used to define the consensus level of group in [47, 48]. The distance-based method was usually developed to capture the consensus degree between individual and collective judgements of DMs [49,50,51,52,53]. In the process of reaching consensus, two approaches are always adopted. One is the optimization-based method for reaching an optimal consensus level [10, 46,47,48, 54,55,56]. The other is to use the threshold of consensus index to give an acceptable consensus degree [49,50,51,52,53]. This paper focuses on the optimization-based consensus model with NrPCMs, which is solved using a PSO algorithm.

3 Preliminaries and notations

Suppose that there is a finite set of alternatives X = {x1,x2,…,xn} in a decision making problem. The DM compares the alternatives in pairs and gives her/his opinions. In the following, we recall some basic definitions and offer some novel findings.

3.1 Basic definitions

When the judgements of DMs are expressed as positive real numbers, the concept of PCMs with multiplicative reciprocal property is given as follows:

Definition 1

Saaty [1] A PCM A = (aij)n×n is multiplicative reciprocal, if aij = 1/aji and \(a_{ij}\in \mathbb {R}^{+}\) for ∀i,j ∈ In.

In addition, by considering the strict transitivity of judgements, the consistent PCM is defined:

Definition 2

Saaty [1] A PCM A = (aij)n×n is consistent if aij = aik ⋅ akj for ∀i,j,k ∈ In.

It is seen that when A = (aij)n×n is consistent, the condition aij = aik ⋅ akj yields the multiplicative reciprocal property aij ⋅ aji = 1 for ∀i,j ∈ In. In other words, the multiplicative reciprocal property is a necessary condition for the consistency of PCMs in Definition 2. When a PCM does not have the multiplicative reciprocal property, it must be inconsistent.
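The implication from consistency to reciprocity can be written out in two steps. This is a short reconstruction of the standard argument, assuming positive entries as in Definition 1:

```latex
\begin{align*}
a_{ii} &= a_{ii}\cdot a_{ii} \;\Longrightarrow\; a_{ii}=1
  && \text{(Definition 2 with } j=k=i,\ a_{ii}>0\text{)},\\
a_{ik}\cdot a_{ki} &= a_{ii} = 1
  && \text{(Definition 2 with } j=i\text{)}.
\end{align*}
```

Read in the contrapositive direction, a single pair with \(a_{ik}\cdot a_{ki}\neq 1\) is enough to rule out consistency.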

Moreover, by considering the uncertainty experienced by DMs, the concept of IMRMs is provided below:

Definition 3

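[7] An IMRM \(\bar {A}\) is represented as

$$ \bar{A}=(\bar{a}_{ij})_{n\times n}=\begin{pmatrix} [1,1] & [a_{12}^{-},a_{12}^{+}] & \cdots & [a_{1n}^{-},a_{1n}^{+}]\\ [a_{21}^{-},a_{21}^{+}] & [1,1] & \cdots & [a_{2n}^{-},a_{2n}^{+}]\\ \vdots & \vdots & \ddots & \vdots\\ [a_{n1}^{-},a_{n1}^{+}] & [a_{n2}^{-},a_{n2}^{+}] & \cdots & [1,1] \end{pmatrix}, \tag{1} $$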

where \(a_{ij}^{-}\) and \(a_{ij}^{+}\) are non-negative real numbers satisfying \(a_{ij}^{-}\leq a_{ij}^{+},\) \(a_{ij}^{-}\cdot a_{ji}^{+}=1\) and \(a_{ij}^{+}\cdot a_{ji}^{-}=1\). The term \(\bar {a}_{ij}\) indicates that the comparison ratio of alternatives xi over xj lies between \(a_{ij}^{-}\) and \(a_{ij}^{+}\).

It is seen that two boundary PCMs with multiplicative reciprocal property can be constructed from an IMRM \(\bar {A}\) [40]. In addition, since the comparison ratio is considered to be uniformly distributed in \([a_{ij}^{-}, a_{ij}^{+}],\) the following two boundary matrices can also be determined:

$$ A^{L}=(a_{ij}^{-})_{n\times n},\qquad A^{R}=(a_{ij}^{+})_{n\times n}. $$

In general, we have \(0<a_{ij}^{-}a_{ji}^{-}\leq 1\) and \(a_{ij}^{+}a_{ji}^{+}\geq 1\) since \(a_{ij}^{-}\leq a_{ij}^{+}\) for i,j ∈ In. The two matrices AL and AR are inconsistent, unless \(\bar {A}\) degenerates to a consistent PCM with \(a_{ij}^{-}=a_{ij}^{+}\) (i,j ∈ In). As compared to Definition 1, the difference in AL and AR is that the multiplicative reciprocal property has been relaxed. In the recent paper [6], the concept of reciprocal symmetry breaking has been proposed to consider the case without reciprocal property. The case of \(0<a_{ij}^{-}a_{ji}^{-}\leq 1\) has been used to establish the novel axiomatic foundation of the AHP model under uncertainty. Here we generally consider the mixed situation, where the two cases of \(0<a_{ij}^{-}a_{ji}^{-}\leq 1\) and \(a_{ij}^{+}a_{ji}^{+}\geq 1\) may exist at the same time in a matrix. The concept of NrPCMs is proposed as follows:

Definition 4

If there is a pair of entries in a PCM A = (aij)n×n such that the relation aij ⋅ aji = 1 is not satisfied, A is called a non-reciprocal pairwise comparison matrix (NrPCM).

One can find from Definition 4 that when aij ⋅ aji ≠ 1 for at least one pair of i and j, a NrPCM is given. In a practical situation, when the DM compares alternatives xi and xj to give the judgement aij, the comparison ratio of xj over xi is written as aji. A NrPCM means that it is not necessary to satisfy the relation aij ⋅ aji = 1. For example, when considering the scale [1/9,9] and giving aij = 1/4, the value of aji could deviate from 4 to a certain degree. This deviation is attributed to the uncertainty experienced by DMs [7]. As compared to the process of giving IMRMs, the method of providing NrPCMs seems simpler and more direct, since a real-valued comparison matrix is more intuitive than an interval-valued one. Hereafter, similar to the idea in [6], the term PCM refers to a matrix for which the multiplicative reciprocal property may not be satisfied. Clearly, AL and AR are two particular NrPCMs corresponding to \(0<a_{ij}^{-}a_{ji}^{-}\leq 1\) and \(a_{ij}^{+}a_{ji}^{+}\geq 1,\) respectively.
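Definitions 1, 2 and 4 translate directly into three checks on a matrix of positive numbers. The following is a minimal sketch; the function names, the tolerance and the two sample matrices are hypothetical and chosen only for illustration.

```python
from math import isclose

Matrix = list[list[float]]

def is_reciprocal(a: Matrix, tol: float = 1e-9) -> bool:
    """Definition 1: a_ij * a_ji = 1 for every pair (i, j)."""
    n = len(a)
    return all(isclose(a[i][j] * a[j][i], 1.0, rel_tol=tol)
               for i in range(n) for j in range(n))

def is_consistent(a: Matrix, tol: float = 1e-9) -> bool:
    """Definition 2: a_ij = a_ik * a_kj for every triple (i, j, k)."""
    n = len(a)
    return all(isclose(a[i][j], a[i][k] * a[k][j], rel_tol=tol)
               for i in range(n) for j in range(n) for k in range(n))

def is_nrpcm(a: Matrix, tol: float = 1e-9) -> bool:
    """Definition 4: at least one pair violates a_ij * a_ji = 1."""
    return not is_reciprocal(a, tol)

# Hypothetical data: a consistent matrix built from weights, and a matrix
# in which a_21 = 3.5 deviates from 1 / a_12 = 4.
weights = [0.5, 0.3, 0.2]
consistent = [[wi / wj for wj in weights] for wi in weights]
nonreciprocal = [[1.0, 0.25, 2.0],
                 [3.5, 1.0, 3.0],
                 [0.5, 1 / 3, 1.0]]
```

The second matrix mirrors the situation described above, where aij = 1/4 is given and aji drifts away from 4.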

3.2 The relationship between NrPCMs and IMRMs

In what follows, let us discuss the relationship between NrPCMs and IMRMs. For a generalized PCM A = (aij)n×n, it is convenient to define the uncertainty degree as

$$ h_{ij}=\frac{1}{a_{ij}\cdot a_{ji}},\quad i,j\in I_{n}. \tag{4} $$

When the scale [1/9,9] is used, it gives hij ∈ [1/81,81] for ∀i,j ∈ In. It is seen that the interval [1/81,81] can be easily transformed into [0,1] by only using a linear transformation. Here for the sake of simplicity, the interval [0,1] is not used for the uncertainty degree. Then the uncertainty matrix is determined as:

$$ H=(h_{ij})_{n\times n}. \tag{5} $$

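Since hij = hji, we can find that H is a symmetric matrix. When hij = 1 for ∀i,j ∈ In, A = (aij)n×n is a PCM with multiplicative reciprocal property. When hij ≠ 1 for at least one pair of i and j, A = (aij)n×n is a NrPCM. Furthermore, we consider the relationship between the two PCMs AL and AR. Similar to (4) and (5), we give the following terms:

$$ h_{ij}^{L}=\frac{1}{a_{ij}^{-}\cdot a_{ji}^{-}},\qquad H^{L}=(h_{ij}^{L})_{n\times n}, \tag{6} $$

and

$$ h_{ij}^{R}=\frac{1}{a_{ij}^{+}\cdot a_{ji}^{+}},\qquad H^{R}=(h_{ij}^{R})_{n\times n}. \tag{7} $$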

According to the relations \(a_{ij}^{-}\cdot a_{ji}^{+}=1\) and \(a_{ij}^{+}\cdot a_{ji}^{-}=1,\) it gives

The above observation shows that the uncertainty degree of IMRMs can be characterized using the two symmetric matrices HL and HR, respectively. Hence, we have the following property:

Theorem 1

For an IMRM \(\bar {A}=(\bar {a}_{ij})_{n\times n},\) letting \(A^{L}=(a_{ij}^{-})_{n\times n}\) and \(A^{R}=(a_{ij}^{+})_{n\times n},\) it follows

$$ A^{R}=A^{L}\circ H^{L},\qquad A^{L}=A^{R}\circ H^{R}, \tag{9} $$

where the symbol “∘” denotes the Hadamard product of two matrices.

Proof

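Since

$$ a_{ij}^{-}\cdot h_{ij}^{L}=\frac{a_{ij}^{-}}{a_{ij}^{-}\cdot a_{ji}^{-}}=\frac{1}{a_{ji}^{-}}=a_{ij}^{+} $$

and

$$ a_{ij}^{+}\cdot h_{ij}^{R}=\frac{a_{ij}^{+}}{a_{ij}^{+}\cdot a_{ji}^{+}}=\frac{1}{a_{ji}^{+}}=a_{ij}^{-} $$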

for ∀i,j ∈ In, we get AR = AL ∘ HL and AL = AR ∘ HR. □
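Theorem 1 can be checked numerically on a small IMRM. The sketch below is illustrative only: the 3 × 3 interval data and all function names are hypothetical, and the uncertainty degree is taken as one over the product of the two mirrored entries, as in Section 3.2.

```python
from math import isclose

# Hypothetical IMRM: only the upper triangle is chosen freely; the lower
# triangle follows from a_ij^- * a_ji^+ = 1 and a_ij^+ * a_ji^- = 1.
intervals = {(0, 1): (2.0, 3.0), (0, 2): (4.0, 6.0), (1, 2): (1.0, 2.0)}
n = 3
a_left = [[1.0] * n for _ in range(n)]    # A^L = (a_ij^-)
a_right = [[1.0] * n for _ in range(n)]   # A^R = (a_ij^+)
for (i, j), (low, high) in intervals.items():
    a_left[i][j], a_right[i][j] = low, high
    a_left[j][i], a_right[j][i] = 1.0 / high, 1.0 / low

def uncertainty_matrix(a):
    """h_ij = 1 / (a_ij * a_ji) for every pair (i, j)."""
    size = len(a)
    return [[1.0 / (a[i][j] * a[j][i]) for j in range(size)]
            for i in range(size)]

def hadamard(a, b):
    """Entrywise (Hadamard) product of two square matrices."""
    size = len(a)
    return [[a[i][j] * b[i][j] for j in range(size)] for i in range(size)]

def same(a, b):
    """True when two matrices agree entrywise up to rounding."""
    return all(isclose(x, y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))

h_left = uncertainty_matrix(a_left)     # H^L
h_right = uncertainty_matrix(a_right)   # H^R
```

With these definitions, `hadamard(a_left, h_left)` reproduces `a_right` and `hadamard(a_right, h_right)` reproduces `a_left`, which is the content of the theorem.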

It is noted from the above analysis that both NrPCMs and IMRMs can be used to capture the uncertainty of DMs. Moreover, the transformation relationship between NrPCMs and IMRMs can be determined as follows:

Theorem 2

For a NrPCM A = (aij)n×n, let

and

where hij has been defined in (4). Then an IMRM \(\bar {A}=([a_{ij}^{-}, a_{ij}^{+}])_{n\times n}\) is uniquely determined by (10) and (11).

Proof

If aij ⋅ aji ≥ 1, we can determine \(h_{ij}=\frac {1}{a_{ij}\cdot a_{ji}}\leq 1,\) and

According to (10), it follows:

and

Therefore, it is calculated that

and

Similarly, when aij ⋅ aji < 1, we also obtain \(a_{ij}^{-}\leq a_{ij}^{+},\) \(a_{ij}^{-}\cdot a_{ji}^{+}=1\) and \(a_{ij}^{+}\cdot a_{ji}^{-}=1\). Hence, an IMRM \(\bar {A}=([a_{ij}^{-}, a_{ij}^{+}])_{n\times n}\) is constructed and the proof is completed. □
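The displayed forms of (10) and (11) are not reproduced here, so the following sketch rests on an explicit assumption: the interval for the pair (i, j) is taken to be spanned by aij and aij ⋅ hij = 1/aji. This choice satisfies the three IMRM conditions verified in the proof, but it may differ in presentation from the authors' formulas. The function name is hypothetical; the sample entries are those quoted in the worked example of this section (a12 = 4, a21 = 1/5, a13 = 3, a31 = 1/3, a23 = 1/2, a32 = 3).

```python
from math import isclose

def nrpcm_to_imrm(a):
    """Map an NrPCM to interval bounds (lower, upper).

    ASSUMPTION: the interval for (i, j) has end points a_ij and
    a_ij * h_ij = 1 / a_ji, where h_ij = 1 / (a_ij * a_ji).
    """
    n = len(a)
    lower = [[0.0] * n for _ in range(n)]
    upper = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            h = 1.0 / (a[i][j] * a[j][i])
            ends = (a[i][j], a[i][j] * h)
            lower[i][j], upper[i][j] = min(ends), max(ends)
    return lower, upper

a1 = [[1.0, 4.0, 3.0],
      [1 / 5, 1.0, 1 / 2],
      [1 / 3, 3.0, 1.0]]
lower, upper = nrpcm_to_imrm(a1)

# Check the defining conditions of an IMRM on the result.
is_imrm = all(
    lower[i][j] <= upper[i][j]
    and isclose(lower[i][j] * upper[j][i], 1.0)
    and isclose(upper[i][j] * lower[j][i], 1.0)
    for i in range(3) for j in range(3)
)
```

Under this assumption a pair with aij ⋅ aji = 1 collapses to a degenerate interval, so a reciprocal PCM is mapped to itself.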

It is seen from Theorem 1 that an IMRM can be decomposed into two NrPCMs with the relations in (9). Conversely, a NrPCM can be transformed into an IMRM according to Theorem 2. When all the entries in a NrPCM A = (aij)n×n satisfy aij ⋅ aji ≥ 1, or all satisfy 0 < aij ⋅ aji ≤ 1, A = (aij)n×n is written as AR or AL, respectively, and an IMRM is determined using (9). When the two cases of aij ⋅ aji ≥ 1 and 0 < aij ⋅ aji ≤ 1 both occur, some computations should be made to obtain an IMRM in terms of Theorem 2. For example, we consider the following NrPCM:

$$ A_{1}=\begin{pmatrix} 1 & 4 & 3\\ \frac{1}{5} & 1 & \frac{1}{2}\\ \frac{1}{3} & 3 & 1 \end{pmatrix}. $$

It is seen that \(a_{23}\cdot a_{32}=\frac {1}{2}\cdot 3=\frac {3}{2}>1,\) then \(h_{23}=h_{32}=\frac {2}{3}\). According to (10), we have

and

meaning that

Because \(a_{12}\cdot a_{21}=4\cdot \frac {1}{5}=\frac {4}{5}<1,\) then it gives \(h_{12}=h_{21}=\frac {5}{4}\). The application of (11) yields

and

implying that

For the case of \(a_{13}\cdot a_{31}=3\cdot \frac {1}{3}=1,\) it is natural to give h13 = h31 = 1 and

According to the above computations, the IMRM \(\bar {A}_{1}\) can be determined:

4 Consistency index and prioritization method of NrPCMs

It is noted that NrPCMs are inconsistent due to the lack of multiplicative reciprocal property. It is important to propose a consistency index to quantify the inconsistency degree and a prioritization method of NrPCMs.

4.1 A consistency index based on cosine similarity measure

Following the ideas in [21, 22], the cosine similarity measure of two vectors is used to construct a consistency index of NrPCMs. For convenience, we recall the following definitions:

Definition 5

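[21] For \(\vec {v}_{i}=(v_{i1}, v_{i2}, \ldots , v_{in})\) and \(\vec {v}_{j}=(v_{j1}, v_{j2}, \ldots , v_{jn})\), the cosine similarity measure is given as:

$$ CSM(\vec{v}_{i}, \vec{v}_{j})=\frac{\vec{v}_{i}\odot \vec{v}_{j}}{\|\vec{v}_{i}\|\,\|\vec{v}_{j}\|}=\frac{{\sum}_{k=1}^{n}v_{ik}v_{jk}}{\sqrt{{\sum}_{k=1}^{n}v_{ik}^{2}}\,\sqrt{{\sum}_{k=1}^{n}v_{jk}^{2}}}, $$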

where the symbol ⊙ is the dot product of two vectors.

Then the properties of cosine similarity measure are given as follows [21]:

- \(0\leq CSM(\vec {v}_{i}, \vec {v}_{j})\leq 1;\)
- \(CSM(\vec {v}_{i}, \vec {v}_{i})=1;\)
- \(CSM(\vec {v}_{i}, \vec {v}_{j})=1\) means that \(\vec {v}_{i}\) and \(\vec {v}_{j}\) are completely similar;
- \(CSM(\vec {v}_{i}, \vec {v}_{j})=0\) implies that \(\vec {v}_{i}\) and \(\vec {v}_{j}\) are not similar at all;
- \(CSM(\vec {v}_{i}, \vec {v}_{j})<CSM(\vec {v}_{i}, \vec {v}_{k})\) indicates that \(\vec {v}_{k}\) is more like \(\vec {v}_{i}\) than \(\vec {v}_{j}\).
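The cosine similarity measure of Definition 5 can be sketched in a few lines. The function name `csm` and the sample vectors are illustrative; the lower bound of zero in the first property relies on the vectors having non-negative entries, which holds for rows and columns of a PCM.

```python
from math import sqrt

def csm(v, w):
    """Cosine similarity: dot product divided by the product of 2-norms."""
    dot = sum(x * y for x, y in zip(v, w))
    norm_v = sqrt(sum(x * x for x in v))
    norm_w = sqrt(sum(y * y for y in w))
    return dot / (norm_v * norm_w)

# Proportional vectors (as in the rows of a consistent PCM) are
# completely similar; orthogonal vectors are not similar at all.
proportional = csm([1.0, 2.0, 4.0], [0.5, 1.0, 2.0])
orthogonal = csm([1.0, 0.0], [0.0, 1.0])
generic = csm([1.0, 2.0, 3.0], [3.0, 2.0, 1.0])
```

Because only the direction of a vector matters, rescaling a row of a PCM by a positive constant leaves every similarity involving that row unchanged.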

According to the cosine similarity measure, a consistency index of PCMs has been constructed:

Definition 6

[22] Assume that A = (aij)n×n is a PCM. The row and column vectors of A = (aij)n×n are written as \(\vec {a}_{i\cdot }=(a_{i1}, a_{i2}, \ldots , a_{in})\) and \(\vec {a}_{\cdot i}=(a_{1i}, a_{2i}, \ldots , a_{ni})^{T}\) for i ∈ In, respectively. The double cosine similarity consistency index (DCSCI) is defined as:

where \(r_{ij}=CSM(\vec {a}_{i\cdot }, \vec {a}_{j\cdot })\) and \(c_{ij}=CSM(\vec {a}_{\cdot i}, \vec {a}_{\cdot j})\).

One can see from the finding in [22] that the values of rij and cij could be different for a PCM with multiplicative reciprocal property. In the present study, in order to consider the non-reciprocal property of NrPCMs, the DCSCI should be modified. Therefore, we propose a consistency index of NrPCMs as follows:

Definition 7

For a generalized PCM A = (aij)n×n, the consistency index is defined as:

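where \(r_{ij}=CSM(\vec {a}_{i\cdot }, \vec {a}_{j\cdot }),\) \(c_{ij}=CSM(\vec {a}_{\cdot i}, \vec {a}_{\cdot j}),\) \(e_{ij}=CSM(\vec {a}_{i\cdot }, \vec {a}_{\cdot j}^{-1}),\) and \(f_{ij}=CSM(\vec {a}_{\cdot i}, \vec {a}_{j\cdot }^{-1})\) with

$$ \vec{a}_{\cdot j}^{-1}=\left(\frac{1}{a_{1j}}, \frac{1}{a_{2j}}, \ldots , \frac{1}{a_{nj}}\right)^{T},\qquad \vec{a}_{j\cdot }^{-1}=\left(\frac{1}{a_{j1}}, \frac{1}{a_{j2}}, \ldots , \frac{1}{a_{jn}}\right). $$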

It is noted from Definition 7 that the proposed consistency index for a generalized PCM considers the cosine similarity measures of two row vectors, two column vectors, the row vectors and the reciprocal vectors of column vectors, the column vectors and the reciprocal vectors of row vectors. The uncertainty of a NrPCM has been incorporated into the proposed consistency index. Now, we give the properties in the following result:

Theorem 3

Suppose that A = (aij)n×n is a generalized PCM. The consistency index in (14) satisfies:

(1) 0 ≤ CSCI(A) ≤ 1;

(2) CSCI(A) = 0 if and only if A is consistent according to Definition 2;

(3) \(CSCI(A)=\displaystyle \frac {n-1}{n}DCSCI(A)\) when A has the multiplicative reciprocal property.

Proof

(1) Since we have \(0\leq CSM(\vec {v}_{i}, \vec {v}_{j})\leq 1\) for any two vectors, it is determined 0 ≤ CSCI(A) ≤ 1 according to (14).

(2) When A = (aij)n×n is perfectly consistent, one has aij = aik ⋅ akj for ∀i,j,k ∈ In. Therefore, it follows

for ∀i,j ∈ In. Thus, we have

This means that CSCI(A) = 0 by virtue of Definition 7.

Conversely, CSCI(A) = 0 leads to

Moreover, in terms of Definition 5, it follows 0 ≤ rij,eij,cij,fij ≤ 1. Hence, we must have rij = eij = cij = fij = 1 for ∀i,j ∈ In. According to the properties of cosine similarity measure and Definition 7, A = (aij)n×n is perfectly consistent.

(3) When A = (aij)n×n is with multiplicative reciprocal property, one has

Therefore, it follows:

Similarly, we can get fij = cij for ∀i,j ∈ In. Based on Definition 5, the following relations hold:

Hence, we have

□
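The four families of similarity terms in Definition 7 can be computed as follows. The normalisation used in `csci_assumed` is an assumption, not the displayed form of (14): it takes one minus the average of rij, cij, eij and fij over all n² pairs, a choice that is compatible with properties (1) and (2) of Theorem 3 but cannot be confirmed from the text alone. All names and the sample matrices are hypothetical.

```python
from math import sqrt

def csm(v, w):
    """Cosine similarity of two equal-length positive vectors."""
    dot = sum(x * y for x, y in zip(v, w))
    return dot / (sqrt(sum(x * x for x in v)) * sqrt(sum(y * y for y in w)))

def similarity_terms(a):
    """Return the matrices (r, c, e, f) of Definition 7."""
    n = len(a)
    rows = [list(a[i]) for i in range(n)]
    cols = [[a[k][j] for k in range(n)] for j in range(n)]
    inv_rows = [[1.0 / x for x in row] for row in rows]
    inv_cols = [[1.0 / x for x in col] for col in cols]
    r = [[csm(rows[i], rows[j]) for j in range(n)] for i in range(n)]
    c = [[csm(cols[i], cols[j]) for j in range(n)] for i in range(n)]
    e = [[csm(rows[i], inv_cols[j]) for j in range(n)] for i in range(n)]
    f = [[csm(cols[i], inv_rows[j]) for j in range(n)] for i in range(n)]
    return r, c, e, f

def csci_assumed(a):
    """ASSUMED form: 1 - (1 / (4 n^2)) * sum over (i, j) of r + c + e + f."""
    n = len(a)
    r, c, e, f = similarity_terms(a)
    total = sum(r[i][j] + c[i][j] + e[i][j] + f[i][j]
                for i in range(n) for j in range(n))
    return 1.0 - total / (4 * n * n)

weights = [0.5, 0.3, 0.2]
consistent = [[wi / wj for wj in weights] for wi in weights]
reciprocal = [[1.0, 2.0, 5.0], [0.5, 1.0, 3.0], [0.2, 1 / 3, 1.0]]
```

For a matrix with the multiplicative reciprocal property, the reciprocal of column j coincides with row j, so `e` equals `r` and `f` equals `c`; this is the observation used in part (3) of the proof.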

It is found from Theorem 3 that the consistency index is based on the deviation degree of a generalized PCM from a consistent one. The larger the value of CSCI(A), the higher the inconsistency degree of A. As compared to the DCSCI in [22], the only difference for a PCM A with multiplicative reciprocal property is the coefficient, which is attributable to the different normalizing coefficients used in defining the two consistency indexes. Moreover, the acceptable consistency of NrPCMs should be considered according to the proposed consistency index in (14). Here we follow the idea of Saaty [1] to randomly generate 10,000 NrPCMs and give the mean value of CSCI as the random consistency index in Table 1 for n = 2,3,⋯ ,9, respectively. As compared to the random index in [1], the main difference is the non-zero value for n = 2 here. Then, in the manner of Saaty’s consistency ratio, the consistency ratio CRNr(A) is defined as the ratio of CSCI(A) to the random consistency index of the same order n:

When CRNr(A) ≤ 0.1, matrix A is considered to be acceptable. Otherwise, matrix A is unacceptable and could be adjusted to a new matrix with acceptable consistency.
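The random consistency index can be estimated by simulation along the following lines. Two assumptions are made and should be read as such: the CSCI is computed with the averaged form 1 − (1/(4n²)) Σ (rij + cij + eij + fij), and random NrPCMs are sampled by drawing each off-diagonal entry independently from the 17-point scale {1/9, …, 1/2, 1, 2, …, 9}. The text does not specify the sampling scheme, so values produced this way need not match Table 1.

```python
import random
from math import sqrt

# Saaty-type scale: 1/9, ..., 1/2, 1, 2, ..., 9.
SCALE = [1 / k for k in range(9, 1, -1)] + [float(k) for k in range(1, 10)]

def csm(v, w):
    dot = sum(x * y for x, y in zip(v, w))
    return dot / (sqrt(sum(x * x for x in v)) * sqrt(sum(y * y for y in w)))

def csci_assumed(a):
    """ASSUMED normalisation: 1 - mean of r, c, e, f over all (i, j)."""
    n = len(a)
    rows = [list(row) for row in a]
    cols = [[a[k][j] for k in range(n)] for j in range(n)]
    inv_rows = [[1 / x for x in v] for v in rows]
    inv_cols = [[1 / x for x in v] for v in cols]
    total = 0.0
    for i in range(n):
        for j in range(n):
            total += (csm(rows[i], rows[j]) + csm(cols[i], cols[j])
                      + csm(rows[i], inv_cols[j]) + csm(cols[i], inv_rows[j]))
    return 1.0 - total / (4 * n * n)

def random_nrpcm(n, rng):
    """ASSUMED sampler: off-diagonal entries drawn independently from SCALE."""
    return [[1.0 if i == j else rng.choice(SCALE) for j in range(n)]
            for i in range(n)]

def random_index(n, trials, seed=0):
    """Mean CSCI over `trials` random NrPCMs of order n."""
    rng = random.Random(seed)
    return sum(csci_assumed(random_nrpcm(n, rng)) for _ in range(trials)) / trials

def consistency_ratio(a, ri):
    """Ratio of the index of `a` to the random index `ri`."""
    return csci_assumed(a) / ri
```

A call such as `random_index(3, 10000)` mirrors the sample size mentioned above; a smaller number of trials is enough for a quick check.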

As an illustration, we compute the value of CSCI for the following NrPCM:

According to Definition 7, it gives

and

It is found that matrices R2 and C2 are symmetric, while matrices E2 and F2 are asymmetric. By virtue of (14), we arrive at CSCI(A2) = 0.2179 and CRNr(A2) = 0.5222 > 0.1, meaning that A2 is not of acceptable consistency.

In addition, based on the relationship between NrPCMs and IMRMs, we can construct a novel consistency index of IMRMs as follows:

Definition 8

Suppose that two NrPCMs AL and AR are obtained from an IMRM \(\bar {A}=(\bar {a}_{ij})_{n\times n}\). The consistency index of \(\bar {A}\) is defined as:

As shown in Theorem 3, the properties of \(NCI(\bar {A})\) can be obtained straightforwardly and the detailed procedures have been omitted. In particular, it is observed that if and only if \(NCI(\bar {A})=0,\) IMRM \(\bar {A}\) degenerates to a consistent PCM. That is, the value of \(NCI(\bar {A})\) can be considered as the deviation degree of \(\bar {A}\) from a consistent PCM. The above observation is in agreement with the finding in [28]. For example, we consider the IMRM as follows [27, 28, 40]:

The results in [28] have shown that \(ICI(\bar {A}_{2})=0,\) \(SICI(\bar {A}_{2})=0.3385\) and \(WCI(\bar {A}_{2})=0.0483\). Here the application of (17) leads to \(NCI(\bar {A}_{2})=0.0592\) and \(ICI(\bar {A}_{2})<WCI(\bar {A}_{2})<NCI(\bar {A}_{2})<SICI(\bar {A}_{2})\).

4.2 An optimization method of eliciting priorities

When the judgements of DMs are expressed as NrPCMs, an important issue is how to derive the priority vector of alternatives. Suppose that \(\vec {\omega }=(\omega _{1}, \omega _{2}, \ldots , \omega _{n})^{T}\) with \({\sum }_{i=1}^{n}\omega _{i}=1\) and ωi ≥ 0 (i ∈ In) is the priority vector obtained from a PCM A = (aij)n×n. Moreover, let \(\vec {t}=(t_{1}, t_{2}, \ldots , t_{n})\) with ti ⋅ ωi = 1 for i ∈ In. If A = (aij)n×n is consistent, then \(a_{ij}=\frac {\omega _{i}}{\omega _{j}}\) [1]. At the same time, one has \(\vec {a}_{i\cdot }=\omega _{i}(\frac {1}{\omega _{1}}, \frac {1}{\omega _{2}}, \ldots , \frac {1}{\omega _{n}})=\omega _{i}(t_{1}, t_{2}, \ldots , t_{n})\) and \(\vec {a}_{\cdot j}=\frac {1}{\omega _{j}}(\omega _{1}, \omega _{2}, \ldots , \omega _{n})^{T}\). In the following, based on the idea of constructing CSCI in (14), we propose an optimization model to elicit the priorities of alternatives.

First, by considering the non-reciprocal property of the NrPCM A = (aij)n×n, we construct a new matrix D = (dij)n×n where

$$ d_{ij}=\frac{a_{ij}+\frac{1}{a_{ji}}}{2},\quad i,j\in I_{n}. $$

According to Definition 5, we have

where \(\vec {d}_{i\cdot }=(d_{i1}, d_{i2}, \ldots , d_{in})\) and \(\vec {d}_{\cdot j}=(d_{1j}, d_{2j}, \ldots ,\) dnj)T for i,j ∈ In. It is further obtained that

Second, similar to the finding in [22], the optimization model is established as follows:

where p ≥ 0 and q ≥ 0 are constants. If A has the multiplicative reciprocal property, the optimization model (22) degenerates to that in [22]. For convenience, the proposed model is called the cosine similarity maximization method (CSM). For the optimal solutions, we have the following result:

Theorem 4

Assume that the optimal solution to the optimization problem (22) is \(\vec {\omega }^{\ast }=(\omega _{1}^{\ast }, \omega _{2}^{\ast }, \ldots , \omega _{n}^{\ast })^{T}\) and the optimal value is F∗.

(1) When p = 1 and q = 0, the optimal solution and value of the optimization model (22) are determined as:

$$ \omega_{i}^{\ast}=\frac{\hat{\omega}_{i}^{\ast}}{{\sum}_{j=1}^{n}\hat{\omega}_{j}^{\ast}},\quad i\in I_{n},\quad F^{\ast}=\sqrt{\sum\limits_{i=1}^{n}\left( \sum\limits_{j=1}^{n}u_{ij}\right)^{2}}, \tag{23} $$

where

$$ \begin{array}{rcl} \hat{\omega}_{i}^{\ast}&=&\frac{{\sum}_{j=1}^{n}u_{ij}}{\sqrt{{\sum}_{i=1}^{n}({\sum}_{j=1}^{n}u_{ij})^{2}}},\quad u_{ij}=\frac{d_{ij}}{\sqrt{{\sum}_{i=1}^{n}d_{ij}^{2}}},\\ d_{ij}&=&\frac{a_{ij}+\frac{1}{a_{ji}}}{2}. \end{array} \tag{24} $$

(2) When p = 0 and q = 1, the optimal solution and value of the optimization model (22) are given as:

$$ \omega_{i}^{\ast}=\frac{1/\hat{t}_{i}^{\ast}}{{\sum}_{j=1}^{n}(1/\hat{t}_{j}^{\ast})},\quad i\in I_{n},\quad F^{\ast}=\sqrt{\sum\limits_{j=1}^{n}\left( \sum\limits_{i=1}^{n}v_{ij}\right)^{2}}, \tag{25} $$

where

$$ \begin{array}{rcl} \hat{t}_{j}^{\ast}&=&\frac{{\sum}_{i=1}^{n}v_{ij}}{\sqrt{{\sum}_{j=1}^{n}({\sum}_{i=1}^{n}v_{ij})^{2}}},\quad v_{ij}=\frac{d_{ij}}{\sqrt{{\sum}_{j=1}^{n}d_{ij}^{2}}},\\ d_{ij}&=&\frac{a_{ij}+\frac{1}{a_{ji}}}{2}. \end{array} \tag{26} $$

Proof

(1) When p = 1 and q = 0, letting

and

we have

Then the optimization model (22) is simplified as the following form:

The model (27) can be solved by introducing the Lagrangian function as follows:

By assuming

it gives

According to \({\sum }_{i=1}^{n}\hat {\omega }_{i}^{2}=1,\) \(\hat {\omega }_{i}\geq 0\) and uij > 0, one has λ < 0 and

Then it follows

and

We further normalize the vector \(\hat {\omega }_{i}^{\ast }\) to give

(2) When p = 0 and q = 1, the proof is similar to the case (1) and the detailed procedure has been omitted for saving space. □
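The two closed-form solutions in Theorem 4 can be evaluated directly from (23)–(26). The sketch below follows those formulas; the function names are hypothetical, and the sample matrices are a consistent PCM built from illustrative weights and the 3 × 3 NrPCM whose entries are quoted in Section 3.2.

```python
from math import sqrt

def averaged_matrix(a):
    """d_ij = (a_ij + 1 / a_ji) / 2, as in (24) and (26)."""
    n = len(a)
    return [[(a[i][j] + 1.0 / a[j][i]) / 2.0 for j in range(n)]
            for i in range(n)]

def priorities_p1_q0(a):
    """Case (1): column-normalise D, sum along rows. Returns (omega, F*)."""
    n = len(a)
    d = averaged_matrix(a)
    col_norm = [sqrt(sum(d[i][j] ** 2 for i in range(n))) for j in range(n)]
    row_sum = [sum(d[i][j] / col_norm[j] for j in range(n)) for i in range(n)]
    f_star = sqrt(sum(s * s for s in row_sum))
    omega_hat = [s / f_star for s in row_sum]
    total = sum(omega_hat)
    return [x / total for x in omega_hat], f_star

def priorities_p0_q1(a):
    """Case (2): row-normalise D, sum along columns. Returns (omega, F*)."""
    n = len(a)
    d = averaged_matrix(a)
    row_norm = [sqrt(sum(d[i][j] ** 2 for j in range(n))) for i in range(n)]
    col_sum = [sum(d[i][j] / row_norm[i] for i in range(n)) for j in range(n)]
    f_star = sqrt(sum(s * s for s in col_sum))
    t_hat = [s / f_star for s in col_sum]
    inverse = [1.0 / t for t in t_hat]
    total = sum(inverse)
    return [x / total for x in inverse], f_star

weights = [0.5, 0.3, 0.2]
consistent = [[wi / wj for wj in weights] for wi in weights]
a1 = [[1.0, 4.0, 3.0], [1 / 5, 1.0, 1 / 2], [1 / 3, 3.0, 1.0]]
omega_rows, f_rows = priorities_p1_q0(a1)
omega_cols, f_cols = priorities_p0_q1(a1)
```

For a consistent matrix with aij = ωi/ωj both cases return the generating weights and F∗ = n, which gives a quick sanity check of an implementation.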

In general, when p≠ 0 and q≠ 0, the optimization model (22) should be solved using a resolution algorithm. For example, let us assume p + q = 1 and derive the priority vector from the following NrPCM:

The obtained results are shown in Table 2 according to the proposed method. In addition, it is seen that there are many methods to derive the priority vector from PCMs [33]. For the sake of simplicity, here we extend several representative methods to A2, such as the eigenvector method (EM) [35], the additive normalization method (AN) [1], the weighted least-squares method (WLS) [57] and the logarithmic least squares (LLS) method [36]. As shown in Table 2, the ranking of alternatives is dependent on the values of p or q. When p = 0, p = 0.25 and p = 0.5, the CSM-based ranking is in accordance with that using WLS. When p = 0.75, the ranking using CSM is in agreement with those using EM, AN and LLS. Furthermore, in order to assess the performance of various prioritization methods, we also use the following two error criteria [58]:

and

with

The smaller the values of ED or MV, the better the performance of the prioritization method. The obtained results are given in Table 3. It is seen that the proposed method is effective and performs better than the others according to the criterion ED.

5 Reaching consensus in GDM with NrPCMs

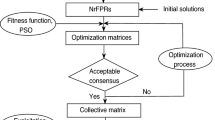

One can see that the GDM models with PCMs and PSO have been widely studied [10, 46]. Here it is of much interest to apply the proposed consistency index and prioritization method to GDM with NrPCMs. A consensus reaching process is built, where an optimization model is constructed and solved using PSO [59, 60].

5.1 Consensus reaching model

Assume that a group of experts E = {e1,e2,…,em} (m ≥ 2) are invited to express their opinions on X = {x1,x2,…,xn} (n ≥ 2). A set of NrPCMs \(A^{(k)}=(a_{ij}^{(k)})_{n\times n}\) for k ∈ Im = {1,2,⋯ ,m} are provided. In GDM, a consensus building process is necessary to improve the level of consensus within the group. To assess the level of consensus among DMs, we can examine the distance between individual and collective opinions. For obtaining the collective matrix, the weighted geometric mean method is applied to \(A^{(k)}=(a_{ij}^{(k)})_{n\times n}\) (k ∈ Im) [61, 62]. That is, we obtain \(A^{c}=(a_{ij}^{c})_{n\times n}\) with

where

For the two matrices A(k) and Ac, the similarity matrix can be computed as \(SM^{kc}=(sm^{kc}_{ij})_{n\times n},\) where

Then the similarity degree between A(k) and Ac is calculated as follows:

Hence, the consensus degree of DMs is determined as:

Obviously, the smaller the value of cd is, the better the group consensus is.
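The aggregation step can be illustrated with the standard weighted geometric mean, in which each collective entry is the product of the individual entries raised to the expert weights. This is a sketch under that standard reading; the function name, the weight values and the two sample matrices are hypothetical, and the similarity and consensus measures themselves are not reproduced here.

```python
from math import isclose, prod

def weighted_geometric_mean(matrices, weights):
    """Collective matrix: entrywise product of a_ij^(k) ** weight_k."""
    if not isclose(sum(weights), 1.0):
        raise ValueError("expert weights are assumed to sum to 1")
    n = len(matrices[0])
    return [[prod(m[i][j] ** w for m, w in zip(matrices, weights))
             for j in range(n)] for i in range(n)]

# Two hypothetical 2 x 2 judgement matrices with equal expert weights.
expert_1 = [[1.0, 2.0], [0.5, 1.0]]
expert_2 = [[1.0, 8.0], [0.125, 1.0]]
collective = weighted_geometric_mean([expert_1, expert_2], [0.5, 0.5])
```

A useful feature of this aggregation is that the product of the two mirrored collective entries equals the weighted geometric mean of the individual products, so reciprocal inputs yield a reciprocal collective matrix.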

Moreover, for reaching the optimal solution to a GDM problem accepted by the most experts, a DM could consider the opinions of the others and modify her/his initial judgments by multiple consultations. This means that DMs should have some flexibility to adjust their initial opinions [10, 54]. Following the idea in [46], it is assumed that the flexibility degree αk is given to the DM ek with the initial matrix A(k). Then the entries in A(k) can be adjusted such that

for \(\frac {1}{9}\leq a_{ij}^{(k)}<1,\) and

for \(1\leq a_{ij}^{(k)}\leq 9\) with αk ∈ [0,1] (k ∈ Im). For convenience, the set of all matrices whose entries satisfy (32) or (33) is denoted by P(A(k)).

In order to build the consensus model in GDM, two objectives are usually considered: the group consistency level and the consensus degree among DMs [10, 45, 54, 56]. The former is due to the consideration of rationality for the judgements of DMs, and the latter is attributable to the wide acceptability of the final solution. For the former, by using the proposed consistency index of NrPCMs, the objective function is written as:

For the latter, the application of (31) leads to

Hence, we construct an optimization problem as follows:

For the sake of simplicity, the multi-objective problem (36) is written in the linear form [46, 48]:

where μ and ν are non-negative real numbers. Furthermore, in order to reach a group consensus, we equip the constraint condition for the optimization model (37) as follows:

where the term δ stands for the predetermined threshold of the consensus level. When solving the optimization model (37) under the condition (38), the consistency levels of individual and collective matrices can be controlled automatically. If one further wants Ac to have acceptable consistency, the following constraint condition can be added:

5.2 Optimization of individual NrPCMs

It is noted that the PSO algorithm has been used to solve the nonlinear and complex optimization problems arising in GDM [10, 54, 55]. The PSO algorithm was proposed to simulate the behavior of birds searching for food through swarm cooperation [59]. The algorithm is initialized with a group of random particles and then searches for the optimal solution through iterations. In each iteration, a particle updates itself by tracking two extrema: the local optimal solution \(\vec {z}_{p}\) found by the particle itself and the global optimal solution \(\vec {z}_{g}\) found by the population. The position of each particle changes according to the following formula:

The terms c1 and c2 are positive constants called learning factors, and are usually set to 2 [10, 54, 55, 59]. The symbol rand() denotes a random number uniformly distributed in [0,1]. In the searching process, the balance between the local and global searching abilities plays an important role in the success of the algorithm, and this balance can be tuned by properly changing the inertia weight w. A large inertia weight favors global search and helps the particles escape local optima, whereas a small inertia weight favors local search and the convergence of the algorithm. Therefore, a large inertia weight is generally used in the initial stage of optimization, and a small inertia weight is used in the later stage. For this reason, we adopt the linear change of inertia weight w as follows:

where wmax is the initial factor usually assigned to 0.9; wmin is the factor at the maximum number of iterations usually assigned to 0.4; tmax is the maximum number of iterations, and t is the current number of iterations [10, 54, 55, 59]. The initial position and velocity of a particle are generated randomly, and updated according to (40) and (41), respectively.
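The update rule and the inertia-weight schedule described above can be sketched as follows. This is the standard PSO update with a linearly decreasing inertia weight, written under the assumption that w falls from wmax at t = 0 to wmin at t = tmax. The function names are hypothetical and the code is an illustration, not the implementation used in this study.

```python
import random

def inertia_weight(t, t_max, w_max=0.9, w_min=0.4):
    """Linearly decrease the inertia weight from w_max (t = 0) to w_min (t = t_max)."""
    return w_max - (w_max - w_min) * t / t_max

def update_particle(z, v, z_p, z_g, w, c1=2.0, c2=2.0, rng=random):
    """One PSO step: return the new (position, velocity) of a single particle."""
    new_v = []
    for zi, vi, pi, gi in zip(z, v, z_p, z_g):
        # Fresh uniform random numbers in [0, 1) for every coordinate.
        r1, r2 = rng.random(), rng.random()
        # Inertia term + pull toward the particle's own best + pull toward the global best.
        new_v.append(w * vi + c1 * r1 * (pi - zi) + c2 * r2 * (gi - zi))
    new_z = [zi + vi for zi, vi in zip(z, new_v)]
    return new_z, new_v

# Inertia weight at the start, middle and end of a 100-iteration run.
schedule = [inertia_weight(t, 100) for t in (0, 50, 100)]
```

A particle that already sits at both its own best position and the global best with zero velocity does not move, since all three terms of the velocity update vanish.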

In this study, due to the non-reciprocal property of NrPCMs, the dimension of particles is mn(n − 1) and the searching range is given in (32) and (33), respectively. Based on the proposed consistency index and prioritization method, the new GDM algorithm is elaborated as follows:

- Step 1: In a GDM problem, a group of experts E = {e1,e2,…,em} are invited to provide their opinions by pairwisely comparing alternatives in X = {x1,x2,…,xn}.

- Step 2: NrPCM A(k) is determined to represent the initial position of expert ek with flexibility degree αk for k ∈ Im.

- Step 3: The fitness function Q is constructed and the constraint conditions (32) and (33) are considered.

- Step 4: The PSO algorithm is used to solve the optimization problem (37) with the constraint conditions in (38). The matrices A(k) (k ∈ Im) are optimized and written as \(\tilde {A}^{(k)}\) (k ∈ Im).

- Step 5: Using the optimized matrices \(\tilde {A}^{(k)} (k\in I_{m})\), the collective one \(\tilde {A}^{c}\) is obtained by using (28).

- Step 6: According to \(\tilde {A}^{c}=(a_{ij}^{c})_{n\times n}\), the weight of alternatives is derived by using optimization model (22).

The above algorithm solves the GDM problem with NrPCMs by controlling the consistency and consensus levels. The resolution process of a GDM with NrPCMs is shown in Fig. 1.
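To make the particle encoding and the PSO search in the steps above more concrete, a minimal sketch is given below. A particle stores the mn(n − 1) off-diagonal entries of the m matrices, and positions are clipped to a box. Several parts are assumptions made only for illustration: the box is the whole scale [1/9, 9] rather than the ranges (32) and (33), `toy_fitness` is a hypothetical stand-in for the fitness function Q, and the names `particle_dimension`, `decode` and `pso_minimize` do not come from the paper.

```python
import random

def particle_dimension(m, n):
    """Number of free entries: m matrices, each with n(n-1) off-diagonal entries."""
    return m * n * (n - 1)

def decode(particle, m, n):
    """Map a flat particle to m matrices of size n x n with unit diagonals."""
    it = iter(particle)
    return [[[1.0 if i == j else next(it) for j in range(n)] for i in range(n)]
            for _ in range(m)]

def pso_minimize(fitness, lower, upper, n_particles=20, t_max=100,
                 c1=2.0, c2=2.0, w_max=0.9, w_min=0.4, seed=0):
    """Minimal box-constrained PSO; positions are clipped to [lower, upper]."""
    rng = random.Random(seed)
    dim = len(lower)
    pos = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]
    best_val = [fitness(p) for p in pos]
    g = min(range(n_particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g][:], best_val[g]
    for t in range(t_max):
        w = w_max - (w_max - w_min) * t / t_max  # linearly decreasing inertia
        for k in range(n_particles):
            for d in range(dim):
                vel[k][d] = (w * vel[k][d]
                             + c1 * rng.random() * (best_pos[k][d] - pos[k][d])
                             + c2 * rng.random() * (g_pos[d] - pos[k][d]))
                # Clip the new position to the admissible box.
                pos[k][d] = min(max(pos[k][d] + vel[k][d], lower[d]), upper[d])
            val = fitness(pos[k])
            if val < best_val[k]:
                best_pos[k], best_val[k] = pos[k][:], val
                if val < g_val:
                    g_pos, g_val = pos[k][:], val
    return g_pos, g_val

def toy_fitness(particle):
    """Hypothetical stand-in for Q: squared disagreement between two 3 x 3 matrices."""
    A1, A2 = decode(particle, 2, 3)
    return sum((A1[i][j] - A2[i][j]) ** 2 for i in range(3) for j in range(3))

dim = particle_dimension(2, 3)
best, value = pso_minimize(toy_fitness, [1 / 9] * dim, [9.0] * dim)
```

In an actual run, `toy_fitness` would be replaced by the fitness function Q of model (37), and the box by the ranges determined by the flexibility degrees. The consensus constraint (38) would also need to be handled, for instance through a penalty term, which is a design choice not specified here.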

5.3 Numerical results and discussion

Now we carry out a numerical example to illustrate the above algorithm and investigate the effects of the parameters αk, μ and ν on the objective functions Q, Q1 and Q2, respectively. It is considered that four DMs E = {e1,e2,e3,e4} are invited to compare and analyze five alternatives in X = {x1,x2,x3,x4,x5}. Four NrPCMs {A(1),A(2),A(3),A(4)} are provided as follows:

It is easy to compute the initial values of consistency index and consensus level as CSCI(A(1)) = 0.1280, CSCI(A(2)) = 0.2324, CSCI(A(3)) = 0.0906, CSCI(A(4)) = 0.1423 and cd = 0.2740.

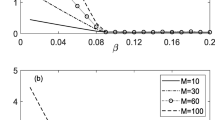

For convenience, it is assumed that each NrPCM is equipped with the same flexibility degree α and λk = 1/4 (k = 1,2,3,4). When the PSO algorithm is run, the selected values of some parameters are given in Table 4. Figure 2 is drawn to show the variations of the fitness function Q versus the generation number for (μ,ν) = (0.25,0.75) with α = 0.05,0.1,0.15 respectively. We can observe from Fig. 2 that the values of Q decrease with the increase of generation number. Then a stable value of Q is obtained for a sufficiently large generation number such as 100, meaning that the optimal value of Q is determined. In addition, with the increase of α, the value of Q decreases for a fixed generation number, indicating that the greater the flexibility degree is, the better the value of Q is. The above findings are in agreement with the existing results [10, 46, 47].

Furthermore, we investigate the effects of α on the values of Q, Q1 and Q2, respectively. The variations of Q, Q1 and Q2 versus α are shown in Fig. 3 by choosing (μ,ν) = (0.25,0.75) and performing 100 iterations. It is found from Fig. 3 that the values of Q, Q1 and Q2 generally decrease with the increase of α. Additionally, we analyze the effects of the parameters μ and ν on Q. As shown in Fig. 4, a series of pairs (μ,ν) are selected for the computation. It is noted that for a fixed value of μ (or ν), the values of Q decrease with the increase of ν (or μ). The above findings are in accordance with the phenomenon in [48]. This means that the values of μ and ν can be used to adjust the value of Q.

Finally, the optimized individual matrices and the final collective ones can be determined. For example, by selecting (μ,ν) = (0.25,0.75), when α = 0.05, we have

and

When α = 0.1, it gives the following results:

and

When α = 0.15, one arrives at the following matrices:

and

The values of consistency index and consensus level are given in Tables 5 and 6 under the selected flexibility degrees. The values of CSCIc, Q1, Q2 and Q decrease with the increasing values of α. The phenomenon is in agreement with the observation in [47]. Moreover, for the optimized individual matrices, the values of consistency indexes and consensus levels behave differently. For example, when α increases to 0.15, the values of CSCI2 and cl1 increase. The observation shows that although the individual levels of consistency and consensus may become worse, the global levels of consistency and consensus could become better. When the threshold of consensus level δ = 0.2 is selected, the group consensus is reached for α > 0.10 according to Table 6. However, if we consider the threshold of CSCI for acceptable consistency in Table 1, the value of CSCIc is not acceptable. In order to satisfy the condition (39), we only need to increase the value of flexibility degree to α = 0.2. The detailed procedure involves only straightforward computation and is omitted.

The final issue of concern is the priorities and ranking of alternatives. For comparison, the priorities and rankings of alternatives are given in Tables 7 and 8 by using the proposed method and the eigenvector method [1], where the collective matrices under α = 0.00, 0.05, 0.10 and 0.15 are considered. Here it should be pointed out that although the considered matrices could have the non-reciprocal property, the eigenvector method may still be suitable to derive the priorities of alternatives [6]. It is found from Tables 7 and 8 that for different values of α, the rankings of alternatives obtained by the two methods are the same.
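As an illustration of how the eigenvector method can be applied to a matrix without the reciprocal property, the following sketch computes the normalized principal eigenvector of a positive matrix by power iteration. The matrix B is a hypothetical non-reciprocal example and the function name is an assumption. The prioritization model (22) of this paper is not reproduced.

```python
def eigenvector_priorities(matrix, iterations=1000, tol=1e-12):
    """Approximate the principal right eigenvector of a positive matrix by
    power iteration and normalize it so that the priorities sum to 1."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        nxt = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(nxt)
        nxt = [x / total for x in nxt]
        # Stop once successive iterates agree to within the tolerance.
        if max(abs(a - b) for a, b in zip(nxt, w)) < tol:
            return nxt
        w = nxt
    return w

# Hypothetical 3 x 3 non-reciprocal matrix (for instance b12 * b21 = 1.2, not 1).
B = [[1.0, 3.0, 5.0],
     [0.4, 1.0, 2.0],
     [0.25, 0.4, 1.0]]
priorities = eigenvector_priorities(B)
# Indices of the alternatives sorted from the highest to the lowest priority.
ranking = sorted(range(len(B)), key=lambda i: -priorities[i])
```

Power iteration only requires the matrix to be positive, so the reciprocal property is not needed for the computation itself. Whether the resulting priorities are meaningful for a given NrPCM remains a modeling question.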

5.4 Comparative analysis

In this paper, we constructed a consensus reaching model in GDM based on NrPCMs, where the consistency index, the prioritization method and the consensus level have been proposed. An optimization model has been constructed by controlling the consistency degrees of individual and collective matrices under the given consensus level of group. A thorough comparative analysis with the existing consensus models in GDM is worth making to show the advantages and disadvantages of the proposed one.

First, the uncertainty experienced by DMs is characterized using the non-reciprocal property of NrPCMs. Moreover, IMRMs [7] and intuitionistic multiplicative preference relations (IMPRs) [63] have the same capability as NrPCMs to describe the uncertainty. But the expression of NrPCMs is simpler than those of IMRMs and IMPRs, which is one of the advantages of NrPCMs. In addition, the workload of providing an NrPCM is less than that of giving an IMRM or an IMPR. From the mechanism of causing uncertainty, NrPCMs clearly show that the uncertainty is caused by the inherent indeterminacy of human-originated information when exchanging the order of paired alternatives.

Second, the consistency index is constructed by considering the cosine similarity degree between two row/column vectors in an NrPCM. Although this idea comes from the existing works [21, 22], the procedure of forming the consistency index is an extension of that in [22] by considering the non-reciprocal property of NrPCMs. Compared with multiplicative reciprocal PCMs, the expression of NrPCMs and the method of computing the consistency index are more complex. Moreover, the prioritization method of NrPCMs is also an extension of the finding in [22]. The comparative analysis with EV, WLS, AN and LLS shows the advantages and disadvantages of the proposed method.
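The cosine similarity idea behind the consistency index can be illustrated with a small sketch. It only computes the cosine similarity between two row vectors and is not the CSCI formula of this paper. The row values are hypothetical. In a perfectly consistent PCM all rows are proportional, so the cosine similarity of any two rows equals 1, and deviations from 1 indicate inconsistency.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors with positive entries."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Rows 1 and 2 of a consistent matrix built from weights (4, 2, 1): proportional rows.
consistent = cosine_similarity([1.0, 2.0, 4.0], [0.5, 1.0, 2.0])

# The same first row against a perturbed second row: no longer proportional.
perturbed = cosine_similarity([1.0, 2.0, 4.0], [0.4, 1.0, 2.5])
```

Here `consistent` equals 1 up to rounding, while `perturbed` is strictly smaller than 1, which is the kind of gap a cosine-based consistency index aggregates over pairs of vectors.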

Third, the consensus reaching process is based on the optimization model by controlling the consistency degree of matrices and consensus level of group. The PSO algorithm is used to simulate the dynamic process of adjusting experts’ judgements. The developed consensus model follows the initial work of Pedrycz and Song [10]. In addition, here the collective matrix is obtained using an aggregation operator, which is different from that in [10], where the collective priority vector was used.

6 Conclusions

It is common for decision makers (DMs) to experience some uncertainty when comparing alternatives. Interval multiplicative reciprocal matrices (IMRMs) have been used to characterize the experienced uncertainty. In this paper, the concept of non-reciprocal pairwise comparison matrices (NrPCMs) has been proposed to generally consider the case with the reciprocal symmetry breaking. Then the consistency index and prioritization method of NrPCMs have been established. A consensus model in group decision making (GDM) has been proposed where the opinions of DMs are expressed as NrPCMs. The main findings are summarized as follows:

- The transformation relation between IMRMs and NrPCMs has been constructed due to the similar ability to capture the uncertainty experienced by DMs.

- It is concluded that NrPCMs and IMRMs are inconsistent in nature. The cosine similarity degree-based consistency index has been given to quantify the inconsistency degree of NrPCMs and IMRMs.

- The prioritization method has been constructed to elicit the priority vector from NrPCMs and it is effective as compared to some extended methods.

- The consensus model in GDM with NrPCMs has been reported by constructing an optimization problem, which is solved by the particle swarm optimization (PSO) algorithm.

We should point out that, besides the transformation between IMRMs and NrPCMs, NrPCMs are also related to intuitionistic multiplicative preference relations (IMPRs) [63], which could be investigated in the future. In addition, this paper only focuses on the typical consensus reaching model with NrPCMs, and some further studies could be made. For example, some GDM algorithms could be developed under uncertainty and incomplete decision information by following the idea shown in NrPCMs. Multiple-objective optimization models could be constructed and solved such that the Pareto solutions can be found. The effectiveness and performance of decision-making models could be evaluated by considering the differences of decision information. By considering the large-scale and cooperative/non-cooperative relationship of DMs [45, 50, 52], some GDM models could be proposed.

Data Availability

Data sharing not applicable to this article as no data sets were generated or analysed during the current study.

References

Saaty TL (1980) The analytic hierarchy process. McGraw-Hill, New York

Ho W, Ma X (2018) The state-of-the-art integrations and applications of the analytic hierarchy process. Eur J Oper Res 267:399–414

Saaty TL (1986) Axiomatic foundation of the analytic hierarchy process. Manag Sci 32(7):841–855

Herrera-Viedma E, Palomares I, Li CC, Cabrerizo F, Dong YC, Chiclana F, Herrera F (2021) Revisiting fuzzy and linguistic decision making: Scenarios and challenges for making wiser decisions in a better way. IEEE Trans Syst Man Cybern Syst 51(1):191–208

Xu ZS, Lei TT, Qin Y (2022) An overview of probabilistic preference decision-making based on bibliometric analysis. Appl Intell. https://doi.org/10.1007/s10489-022-03189-w

Liu F, Qiu MY, Zhang WG (2021) An uncertainty-induced axiomatic foundation of the analytic hierarchy process and its implication. Expert Syst Appl 183:115427

Saaty TL, Vargas LG (1987) Uncertainty and rank order in the analytic hierarchy process. Eur J Oper Res 32(1):107–117

Linares P, Lumbreras S, Santamaría A, Veiga A (2016) How relevant is the lack of reciprocity in pairwise comparisons? an experiment with AHP. Ann Oper Res 245(1):227–244

Nishizawa K (2018) Non-reciprocal pairwise comparisons and solution method in AHP. In: Int conf on intell dec technol. Springer, Cham, pp 158–165

Pedrycz W, Song M (2011) Analytic hierarchy process (AHP) in group decision making and its optimization with an allocation of information granularity. IEEE Trans Fuzzy Syst 19(3):527–539

Dong YC, Xu JP (2016) Consensus building in group decision making. Springer, New York

Li MQ, Xu YJ, Liu X, Chiclana F, Herrera F (2022) A trust risk dynamic management mechanism based on third-party monitoring for the conflict-eliminating process of social network group decision making. IEEE Trans Cybern. https://doi.org/10.1109/TCYB.2022.3159866

Wu J, Ma X, Chiclana F, Liu Y, Wu Y (2022) A consensus group decision-making method for hotel selection with online reviews by sentiment analysis. Appl Intell. https://doi.org/10.1007/s10489-021-02991-2

Lu KY, Liao HC (2022) A survey of group decision making methods in Healthcare Industry 4.0: bibliometrics, applications, and directions. Appl Intell. https://doi.org/10.1007/s10489-021-02909-y

Akram M, Ilyas F, Garg H (2021) ELECTRE-II method for group decision-making in pythagorean fuzzy environment. Appl Intell 51:8701–8719

Ahn BS (2017) The analytic hierarchy process with interval preference statements. Omega 67:177–185

Brunelli M (2017) Studying a set of properties of inconsistency indices for pairwise comparisons. Ann Oper Res 248:143–161

Aguarón J, Moreno-Jiménez JM (2003) The geometric consistency index: Approximated thresholds. Eur J Oper Res 147:137–145

Peláez JI, Lamata MT (2003) A new measure of consistency for positive reciprocal matrices. Comput Math Appl 46:1839–1845

Lin CS, Kou G, Ergu D (2013) An improved statistical approach for consistency test in AHP. Ann Oper Res 211:289–299

Kou G, Lin C (2014) A cosine maximization method for the priority vector derivation in AHP. Eur J Oper Res 235(1):225–232

Liu F, Zou SC, Li Q (2020) Deriving priorities from pairwise comparison matrices with a novel consistency index. Appl Math Comput 374:125059

Brunelli M, Fedrizzi M (2015) Axiomatic properties of inconsistency indices for pairwise comparisons. J Oper Res Soc 66:1–15

Brunelli M, Cavallo B (2020) Distance-based measures of incoherence for pairwise comparisons. Knowl-Based Syst 187:104808

Dong YC, Li CC, Chiclana F, Herrera-Viedma E (2016) Average-case consistency measurement and analysis of interval-valued reciprocal preference relations. Knowl-Based Syst 114:108–117

Conde E, Pérez M (2010) A linear optimization problem to derive relative weights using an interval judgement matrix. Eur J Oper Res 201:537–544

Dong YC, Chen X, Li CC, Hong WC, Xu YF (2015) Consistency issues of interval pairwise comparison matrices. Soft Comput 19:2321–2335

Liu F, Yu Q, Pedrycz W, Zhang WG (2018) A group decision making model based on an inconsistency index of interval multiplicative reciprocal matrices. Knowl-Based Syst 145(1):67–76

Xu YJ, Liu XW, Wang HM (2018) The additive consistency measure of fuzzy reciprocal preference relations. Int J Mach Learn Cybern 9(7):1141–1152

Xu YJ, Li MQ, Cabrerizo FJ, Chiclana F, Herrera-Viedma E (2021) Algorithms to detect and rectify multiplicative and ordinal inconsistencies of fuzzy preference relations. IEEE Trans Syst Man Cybern Syst 51(6):3498–3511

Xu YJ, Patnayakuni R, Wang HM (2013) The ordinal consistency of a fuzzy preference relation. Inf Sci 224:152–164

Li CC, Dong YC, Xu YJ, Chiclana F, Herrera-Viedma E, Herrera F (2019) An overview on managing additive consistency of reciprocal preference relations for consistency-driven decision making and fusion: taxonomy and future directions. Inform Fusion 52:143–156

Choo EU, Wedley WC (2004) A common framework for deriving preference values from pairwise comparison matrices. Comput Oper Res 31:893–908

Saaty TL (1990) Eigenvector and logarithmic least squares. Eur J Oper Res 48:156–160

Saaty TL (1977) A scaling method for priorities in hierarchical structures. J Math Psychol 15:234–281

Crawford G, Williams C (1985) A note on the analysis of subjective judgment matrices. J Math Psychol 29:387–405

Gass SI, Rapcsák T (2004) Singular value decomposition in AHP. Eur J Oper Res 154:573–584

Bryson N (1995) A goal programming method for generating priority vectors. J Oper Res Soc 46:641–648

Liu W, Zhang H, Chen X, Yu S (2018) Managing consensus and self-confidence in multiplicative preference relations in group decision making. Knowl-Based Syst 162:62–73

Liu F (2009) Acceptable consistency analysis of interval reciprocal comparison matrices. Fuzzy Sets Syst 160(18):2686–2700

Meng FY, Chen XH, Zhu MX, Lin J (2015) Two new methods for deriving the priority vector from interval multiplicative preference relations. Inform Fusion 26:122–135

Wang ZJ (2021) Eigenvector driven interval priority derivation and acceptability checking for interval multiplicative pairwise comparison matrices. Comput Ind Eng 156:107215

Cheng XJ, Wan SP, Dong JY (2021) A new consistency definition of interval multiplicative preference relation. Fuzzy Sets Syst 409:55–84

Wang ZJ, Lin J (2019) Consistency and optimized priority weight analytical solutions of interval multiplicative preference relations. Inf Sci 482:105–122

Rodríguez RM, Labella Á, Dutta B, Martínez L (2021) Comprehensive minimum cost models for large scale group decision making with consistent fuzzy preference relations. Knowl-Based Syst 215:106780

Liu F, Wu YH, Pedrycz W (2018) A modified consensus model in group decision making with an allocation of information granularity. IEEE Trans Fuzzy Syst 26(5):3182–3187

Cabrerizo FJ, Ureña R, Pedrycz W, Herrera-Viedma E (2014) Building consensus in group decision making with an allocation of information granularity. Fuzzy Sets Syst 255(16):115–127

Liu F, Zou SC, Wu YH (2020) A consensus model for group decision making under additive reciprocal matrices with flexibility. Fuzzy Sets Syst 398:61–77

Zhang Z, Li ZL, Gao Y (2021) Consensus reaching for group decision making with multi-granular unbalanced linguistic information: a bounded confidence and minimum adjustment-based approach. Inform Fusion 74:96–110

Wang S, Wu J, Chiclana F, Sun Q, Herrera-Viedma E (2022) Two stage feedback mechanism with different power structures for consensus in large-scale group decision-making. IEEE Trans Fuzzy Syst. https://doi.org/10.1109/TFUZZ.2022.3144536

Xing Y, Cao M, Liu Y, Zhou M, Wu J (2022) A Choquet integral based interval type-2 trapezoidal fuzzy multiple attribute group decision making for sustainable supplier selection. Comput Ind Eng 165:107935

Gao Y, Zhang Z (2021) Consensus reaching with non-cooperative behavior management for personalized individual semantics-based social network group decision making. J Oper Res Soc. https://doi.org/10.1080/01605682.2021.1997654

Dong QX, Sheng Q, Martínez L, Zhang Z (2022) An adaptive group decision making framework: individual and local world opinion based opinion dynamics. Inform Fusion 78:218–231

Cabrerizo FJ, Herrera-Viedma E, Pedrycz W (2013) A method based on PSO and granular computing of linguistic information to solve group decision making problems defined in heterogeneous contexts. Eur J Oper Res 230(3):624–633

Zhou X, Ji F, Wang L, Ma Y, Fujita H (2020) Particle swarm optimization for trust relationship based social network group decision making under a probabilistic linguistic environment. Knowl-Based Syst 200:105999

Zhang Z, Li GL (2021) Personalized individual semantics-based consistency control and consensus reaching in linguistic group decision making. IEEE Trans Syst Man Cybern Syst. https://doi.org/10.1109/TSMC.2021.3129510

Chu A, Kalaba RE, Spingarn K (1979) A comparison of two methods for determining the weights of belonging to fuzzy sets. J Opt Theory Appl 27(4):531–538

Srdjevic B (2005) Combining different prioritization methods in the analytic hierarchy process synthesis. Comput Oper Res 32:1897–1919

Kennedy J, Eberhart RC (1995) Particle swarm optimization. In: Proc IEEE int conf neural networks, vol 4. IEEE Press, Australia, pp 1942–1948

Kennedy J, Eberhart RC, Shi Y (2001) Swarm intelligence. Academic press, USA

Xu ZS, Da QL (2003) An overview of operators for aggregating information. Int J Intell Syst 18(9):953–969

Yager RR (1993) Families of OWA operators. Fuzzy Sets Syst 59:125–148

Xia MM, Xu ZS, Liao HC (2013) Preference relations based on intuitionistic multiplicative information. IEEE Trans Fuzzy Syst 21:113–133

Acknowledgements

The authors would like to thank the anonymous referees for the valuable comments and suggestions for improving the paper. The work was supported by the National Natural Science Foundation of China (No. 71871072), the Guangxi Natural Science Foundation (No. 2022GXNSFDA035075), and the Innovation Project of Guangxi Graduate Education (No. YCSW2022110).

About this article

Cite this article

Liu, F., Liu, T. & Hu, YK. Reaching consensus in group decision making with non-reciprocal pairwise comparison matrices. Appl Intell 53, 12888–12907 (2023). https://doi.org/10.1007/s10489-022-04136-5

DOI: https://doi.org/10.1007/s10489-022-04136-5