Abstract

In group decision support systems, how individual decision information is processed and managed is an important issue. In this paper, a sequential model is proposed to manage individual judgements with the additively reciprocal property over paired alternatives. The process of realizing additive complementary pairwise comparisons (ACPCs) is captured. A real-time feedback mechanism is constructed to address the irrational behavior of individuals. An optimization model is established and solved by using the particle swarm optimization (PSO) algorithm, such that the consistency of individual judgements can be improved quickly and effectively. For the aggregation of individual decision information in group decision making (GDM), the weighted averaging operator is used. It is found that when all individual judgements are acceptably additively consistent, the collective matrix also has acceptable additive consistency. Under the control of individual consistency degrees, an approach to reaching consensus in GDM is further proposed. A comparison with some existing models reveals that the sequential model of additive complementary pairwise comparisons is able to manage individual decision information rationally.


1 Introduction

In decision support models and systems, the basic decision information usually comes from the judgements of decision makers (DMs) on alternatives [23]. For a finite set of alternatives X = {x1,x2,⋯ ,xn}, it is convenient to express the DMs’ opinions as a matrix such as multiplicative reciprocal matrices (MRMs) [30], additive complementary judgement matrices (ACJMs) or fuzzy preference relations (FPRs) [25, 33], linguistic preference relations (LPRs) [6, 10], intuitionistic fuzzy preference relations (IFPRs) [16, 44] and others [34, 40]. The derived preference relations are used as the basic tools for analyzing a decision making problem and reaching a final solution. Here we mainly focus on the consensus model where ACJMs are used to express individual decision information in a GDM problem.

It is noted that an ACJM originates from applying the notion of fuzzy sets to the binary relations of alternatives [33, 54]. That is, when defining a mapping as follows:

\[\mathcal {R}: X\times X\rightarrow [0,1] \tag{1}\]

and writing \(\mathcal {R}(x_{i},x_{j})=r_{ij}\) for i,j ∈ In = {1,2,⋯ ,n}, a matrix R = (rij)n×n is determined. In general, it is supposed that the additively reciprocal property rij + rji = 1 (∀i,j ∈ In) is satisfied [33]. Therefore, the matrix R = (rij)n×n is called an ACJM to distinguish it from MRMs. Decision support models based on ACJMs have attracted a great deal of attention [8, 29, 47]. In the above-mentioned works, a completed ACJM is always used to make a decision analysis; that is, the obtained matrix is considered applicable only when all pairwise comparisons of alternatives have been completed. However, in a practical case, the preference intensities rij (i,j ∈ In) are given through a sequence of pairwise comparisons of alternatives. For example, a comparison sequence could be chosen as follows:

The preference intensity rij could be given by carefully studying the relation of xi and xj. After repeated comparisons, the DM could finally offer the values of the preference intensities. The comparison process is complex and related to the knowledge, the experience, and the psychology of DMs. When a completed ACJM is used, the process of giving additive complementary pairwise comparisons rij is neglected. To capture the complexity of a pairwise comparison process, a sequential model based on leading principal submatrices of a reciprocal preference relation has been proposed in [20, 21]. Based on the developed sequential model [20, 21], the process of paired comparisons can be simulated, and the evolutionary processes associated with the inconsistency degrees of judgements and the priorities of alternatives in pairwise comparisons can be captured. Hence, the sequential model in [20, 21] has the advantages that (i) the process of giving pairwise comparisons could be described carefully; (ii) the irrational behavior of the DM could be checked accurately; (iii) the DM could be reminded in a timely manner to reconsider or modify her/his judgements. In the present study, we follow the idea in [20, 21] to consider the process of giving rij and propose a sequential model of ACPCs. It is an attempt to manage individual decision information with rationality and efficiency in a consensus reaching process of GDM.

Moreover, two important issues are worth investigating in a GDM model with ACJMs. One is the consistency of decision information and the other is the process of building consensus. For the consistency of ACJMs, additive and multiplicative consistency definitions have been proposed in [33]. A functional consistency definition of ACJMs has been further developed in [11] by considering the relationship among entries. In addition, a consistent matrix corresponds to an ideal case and an inconsistent one is more common in a practical situation [30]. Hence, the inconsistency degree of an ACJM should be quantified and several indexes have been proposed [12, 37, 46, 50]. In particular, the threshold of acceptable additive consistency for ACJMs has been discussed in [50] by using the distance-based method. When a comparison matrix is not acceptably consistent, a method of improving consistency is required [42]. In order to improve the consistency of an inconsistent ACJM, many methods have been proposed [24, 37, 38, 41, 49]. For example, an iteration algorithm was proposed in [24] to repair the consistency degree of ACJMs by considering the distance to a consistent one. By defining a deviation measure, an algorithm was proposed in [37] to adjust an inconsistent ACJM to a new one with acceptable consistency. The PSO algorithm was applied to improve the consistency degree of ACJMs by equipping a granularity level in [1]. The ordinal consistency and multiplicative consistency of ACJMs were improved by proposing an ordinal consistency index in [51]. The above-mentioned consistency improving methods are usually based on a completed ACJM. Since a sequential model of additive complementary pairwise comparisons is utilized here, the consistency improving method should be developed correspondingly.

On the other hand, for reaching the consensus in GDM with ACJMs, a great number of models have been proposed [12, 13, 33]. For instance, a feedback-mechanism-based iteration algorithm has been reported in [3, 12], where the process of improving the consensus level in GDM has been controlled. The group decision support model in [37] was based on the improvement of the consensus level of experts. With the knowledge of Abelian linearly ordered group, the generalized GDM consensus model has been addressed in [41], where the consensus index was used. Cabrerizo et al. [2] have introduced the concept of the information granularity and the consensus in GDM was reached by using the PSO algorithm. A consensus reaching process with individual consistency control has been proposed by Li et al. [15]. Liu et al. [22] have proposed a consensus model for GDM with ACJMs based on the technique for order preference by similarity to an ideal solution (TOPSIS). In addition, the social network analysis and the prospect theory have been introduced into the process of reaching consensus [7, 35, 39, 56]. The trust measures in the recommender systems were incorporated into the process of reaching consensus in GDM [4, 36, 55]. In this study, we further develop the method of reaching consensus in GDM with ACJMs by using the sequential model of ACPCs.

For the purpose of achieving the above objectives, the remainder of this paper is organized as follows. Section 2 briefly recalls the definitions of ACJMs and the additive consistency index in [50]. A sequential model of ACPCs is proposed and compared with the existing one. In Section 3, a novel method of improving the additive consistency of ACJMs is proposed according to the developed sequential model. A novel optimization model is constructed to adjust an inconsistent ACJM to a new one with acceptable additive consistency. The PSO algorithm is adopted to effectively solve the constructed optimization model. It is found that the initial decision information can be kept as much as possible. Section 4 addresses a new method of building consensus based on individual consistency control in GDM. A feedback mechanism is established to remind DMs to avoid irrational and illogical judgements. The novel finding is that when individual ACJMs are acceptably additively consistent, the collective matrix obtained by using the weighted averaging operator is also acceptably additively consistent. Conclusions are covered in the last section.

2 Modeling additive complementary pairwise comparisons

Assume that there is a set of alternatives X = {x1,x2,⋯ ,xn}, and the binary relation is defined as (1). If the alternative xi is preferred to xj, the value of rij satisfies 0.5 < rij ≤ 1. If the alternative xj is preferred to xi, we have 0 ≤ rij < 0.5. If the alternative xi is indifferent to xj, then rij = 0.5. Moreover, when the preference intensity of xi over xj is given, it is considered that the preference intensity of xj over xi is determined simultaneously. Then the additively reciprocal property is satisfied with rij + rji = 1 [33]. In addition, the process of creating the preference intensities rij (i,j ∈ In) amounts to comparing the alternatives pairwise. By considering the property rij + rji = 1, we call the process of giving rij (i,j ∈ In) that of realizing ACPCs.

2.1 A completed model of ACPCs

When the ACPCs for all alternatives are completed, an ACJM R = (rij)n×n is formed. In the known literature, the completed ACJM is usually used as a basic tool for decision analysis and modelling [7, 12, 20, 33, 39]. For convenience, the definition of an ACJM is given as follows:

Definition 1

[33] R = (rij)n×n is called an ACJM, where rij ∈ [0,1] and rij + rji = 1 for ∀i,j ∈ In.
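The two conditions of Definition 1 can be checked mechanically. The following Python sketch is illustrative only; the function name `is_acjm`, the tolerance `tol`, and the example matrix are hypothetical and do not come from the paper.

```python
def is_acjm(R, tol=1e-9):
    """Return True if R is square, r_ij lies in [0, 1], and r_ij + r_ji = 1."""
    n = len(R)
    if any(len(row) != n for row in R):
        return False
    for i in range(n):
        for j in range(n):
            if not (0.0 <= R[i][j] <= 1.0):
                return False
            # Additively reciprocal property, up to floating-point tolerance.
            if abs(R[i][j] + R[j][i] - 1.0) > tol:
                return False
    return True


# Hypothetical 3 x 3 example (not taken from the paper).
R_example = [
    [0.5, 0.7, 0.6],
    [0.3, 0.5, 0.4],
    [0.4, 0.6, 0.5],
]
print(is_acjm(R_example))
```

Note that the reciprocity condition with i = j already forces every diagonal entry to equal 0.5.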

Furthermore, the ideal case of ACPCs is to keep the perfect consistency. That is, we have the following definition:

Definition 2

[33] An ACJM R = (rij)n×n is additively consistent if

\[r_{ij}=r_{is}-r_{js}+0.5,\quad \forall i,j,s\in I_{n}. \tag{3}\]

However, it is difficult to provide a consistent matrix R = (rij)n×n due to the complexity of decision environments. Hence, a consistency index is requisite to measure the inconsistency degree of an ACJM [12, 37, 46, 50]. Here we recall the additive consistency index (ACI) proposed in [50] as follows:

Definition 3

[50] Let R = (rij)n×n be an ACJM. The matrix \(\bar {R}=(\bar {r}_{ij})_{n\times n}\) with additive consistency is determined from R = (rij)n×n according to the following relation:

The additive consistency index (ACI) of R is defined as follows:

Meanwhile, the threshold of ACI for an ACJM with acceptable consistency is proposed as [50]

where λα is the critical value of the χ2 distribution under the significance level α. The values of \(\overline {ACI}\) for different n are shown in Table 1 when setting α = 0.1 and σ0 = 0.2. If \(ACI(R)\leq \overline {ACI},\) the matrix R is considered to be with acceptable additive consistency; otherwise, the matrix R is not acceptably additively consistent.
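To make the acceptance test concrete, the sketch below computes a distance-based consistency index and compares it with the thresholds quoted in the worked examples of this paper (0.0882, 0.1212 and 0.1359 for n = 3, 4, 5). The exact forms of (4) and (5) are not reproduced in this text, so the two formulas used here are assumptions: the consistent matrix is taken as \(\bar {r}_{ij}=0.5+\frac {1}{n}\sum _{k}(r_{ik}-r_{jk})\) and the index as the root-mean-square deviation over the upper triangle. All function names are hypothetical.

```python
import math

# Thresholds as they appear in the examples of this paper (alpha = 0.1, sigma_0 = 0.2).
ACI_THRESHOLD = {3: 0.0882, 4: 0.1212, 5: 0.1359}


def nearest_consistent(R):
    """ASSUMED form of (4): r_bar_ij = 0.5 + mean_k(r_ik - r_jk)."""
    n = len(R)
    means = [sum(row) / n for row in R]  # mean_k(r_ik - r_jk) = means[i] - means[j]
    return [[0.5 + means[i] - means[j] for j in range(n)] for i in range(n)]


def aci(R):
    """ASSUMED form of (5): RMS deviation of R from nearest_consistent(R), i < j."""
    n = len(R)
    if n < 2:
        return 0.0
    Rb = nearest_consistent(R)
    sq = sum((R[i][j] - Rb[i][j]) ** 2 for i in range(n) for j in range(i + 1, n))
    return math.sqrt(2.0 * sq / (n * (n - 1)))


def is_acceptable(R):
    """Compare the (assumed) index with the tabulated threshold for this size."""
    return aci(R) <= ACI_THRESHOLD[len(R)]


# Hypothetical matrices: R_ok is additively consistent, R_bad is not.
R_ok = [[0.5, 0.7, 0.6], [0.3, 0.5, 0.4], [0.4, 0.6, 0.5]]
R_bad = [[0.5, 0.9, 0.6], [0.1, 0.5, 0.8], [0.4, 0.2, 0.5]]
print(aci(R_ok), aci(R_bad))
```

Under these assumed formulas, an additively consistent matrix has an index of zero, so it is always accepted.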

2.2 A sequential model of ACPCs

The completed model of ACPCs offers only a final ACJM R = (rij)n×n. The other information generated while comparing alternatives is neglected, and the complex decision behavior of DMs in producing rij (i,j ∈ In) is packaged as an unknown whole. To manage individual decision information more closely, this packaged behavior is worth unpacking. A sequential model of the pairwise comparison process within the framework of AHP has been proposed in [20, 21]. Here we follow the idea in [20, 21] to offer a sequential model of ACPCs and simulate the process of comparing alternatives. That is, the process of ACPCs is based on the following steps:

- (1): The first alternative, marked as x1, is offered to DMs to give the preference intensity r11 = 0.5, and the matrix is obtained as

Hereafter the symbol C stands for the criterion of DMs.

- (2): The second alternative x2 is considered to produce r12 and r22 = 0.5. According to the additively reciprocal property r21 = 1 − r12, the matrix is determined as

- (3): The alternatives x3,x4,⋯ ,xn are used to form a series of matrices written as R3,R4,⋯ , and Rn.

When the full process of ACPCs is completed, the final matrix Rn is expressed as:

In the above process, an ACJM R = (rij)n×n is decomposed as the leading principal submatrices R1,R2,⋯ ,Rn. Moreover, the process of \(R_{1}\rightarrow R_{2}\rightarrow \cdots \rightarrow R_{n}\) contains a great deal of individual decision information. Some possible meanings according to the sequential model are given as follows:

- The values of rij (i,j ∈ In) could carry some uncertainty, such as fuzzy numbers or a possibility distribution. Other properties could also be attached to the term rij (i,j ∈ In), such as the number of times DMs modify its value.

- The preference intensities rij (i,j ∈ In) could be provided by DMs after repeated thought and modification.

- The relationship between two adjacent submatrices Ri and Ri+1 could reflect the logic of decision information for i = 1,2,⋯ ,n − 1.

- The complex decision behavior of DMs could be exhibited due to the complexity of decision environments.

In general, the sequential model of individual decision information can be used to record the full decision behavior of DMs in a decision support system. The typical model with a completed matrix could be improved to possess the ability of characterizing the complex decision behavior of DMs.
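The growth \(R_{1}\rightarrow R_{2}\rightarrow \cdots \rightarrow R_{n}\) can be recorded explicitly. The following Python sketch is a minimal illustration under the assumption that, at step s + 1, the DM supplies only the new intensities r_{i,s+1} (i = 1,...,s) and the reciprocal entries are filled in automatically. The names `start`, `add_alternative` and `history`, and the judgement values, are hypothetical.

```python
def start():
    """Step (1): the matrix R_1 holds the single entry r_11 = 0.5."""
    return [[0.5]]


def add_alternative(R, judgements):
    """Return R_{s+1} from R_s and the new intensities r_{i,s+1}, i = 1..s."""
    s = len(R)
    if len(judgements) != s:
        raise ValueError("one judgement is needed per existing alternative")
    if any(not (0.0 <= r <= 1.0) for r in judgements):
        raise ValueError("preference intensities must lie in [0, 1]")
    # Append the new column to every existing row.
    new = [row[:] + [r] for row, r in zip(R, judgements)]
    # The new row follows from r_ji = 1 - r_ij, with 0.5 on the diagonal.
    new.append([1.0 - r for r in judgements] + [0.5])
    return new


# Hypothetical judgement sequence: x2 is compared with x1, then x3 with x1 and x2.
history = [start()]
for column in ([0.7], [0.6, 0.4]):
    history.append(add_alternative(history[-1], column))

for R_s in history:
    print(R_s)
```

Keeping the whole `history` list, rather than only its last element, is what allows the intermediate matrices to be inspected later.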

3 An implication of sequential additive complementary pairwise comparisons

In the following, the sequential model of ACPCs is used to propose a novel method of improving additive consistency of inconsistent ACJMs, which can be considered as its implication to the reasonability of individual judgements.

3.1 The relationship between two adjacent submatrices

It is seen that the consistency is one of the important properties of a preference relation. By considering the additive consistency of two adjacent submatrices, we obtain the following result:

Theorem 1

Suppose that R1, R2, ⋯ , and Rn are the leading principal submatrices of an ACJM R = (rij)n×n. When Rk (k = 2,⋯ ,n) is additively consistent, Rt (1 ≤ t < k) is additively consistent.

Proof

Let the matrix Rk be additively consistent. Based on the relation (3), one has rij = ris − rjs + 0.5 (i,j,s = 1,2,⋯ ,k). In particular, the relation (3) still holds when i,j,s ≤ t < k, implying that Rt is additively consistent. The proof is completed. □
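Theorem 1 can be illustrated numerically. The Python sketch below assumes that relation (3) takes the usual form r_ij = r_is − r_js + 0.5 and checks it for a matrix and for each of its leading principal submatrices. The helper names and the example values are hypothetical.

```python
def is_additively_consistent(R, tol=1e-9):
    """Check r_ij = r_is - r_js + 0.5 for every triple (i, j, s)."""
    n = len(R)
    return all(
        abs(R[i][j] - (R[i][s] - R[j][s] + 0.5)) <= tol
        for i in range(n) for j in range(n) for s in range(n)
    )


def leading_submatrix(R, t):
    """Top-left t x t block of R, i.e. the leading principal submatrix R_t."""
    return [row[:t] for row in R[:t]]


# Hypothetical consistent ACJM built from scores u: r_ij = 0.5 + (u_i - u_j).
u = [0.30, 0.10, 0.20, 0.05]
R = [[0.5 + (ui - uj) for uj in u] for ui in u]

# Perturb only the last column/row: R_4 becomes inconsistent, R_3 is untouched.
R_pert = [row[:] for row in R]
R_pert[0][3], R_pert[3][0] = 0.95, 0.05

checks = [is_additively_consistent(leading_submatrix(R, t)) for t in range(1, 5)]
checks_pert = [is_additively_consistent(leading_submatrix(R_pert, t)) for t in range(1, 5)]
print(checks)       # every submatrix of a consistent matrix is consistent
print(checks_pert)  # consistency of R_3 does not carry over to R_4
```

The second list shows the one-directional nature of the result: consistency is inherited by smaller leading submatrices but not by larger ones.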

According to Theorem 1, the inconsistency of Rk leads to the inconsistency of Rs (s > k). Although the additive consistency of Rk means the additive consistency of Rt (t < k), the additive consistency of Rk does not imply the additive consistency of Rs (s > k). Moreover, it is noted that the additive consistency of ACJMs is only the ideal case and acceptable additive consistency is more applicable in a practical decision problem. However, acceptable additive consistency of Rk and Rk+1 does not exhibit a logical relationship analogous to the observation in Theorem 1. For example, we consider the following ACJM:

It can be computed that \(ACI(\bar {R}_{1})=0.0913<0.1212\). For the leading principal submatrix:

we obtain \(ACI(\bar {R}_{2})=0.1>0.0882\). Obviously, according to the criterion in Table 1 [50], the matrix \(\bar {R}_{1}\) is of acceptable additive consistency and \(\bar {R}_{2}\) is not of acceptable additive consistency. The above observation is in agreement with the finding in [20] for the leading principal submatrices of MRMs. Moreover, similar to the method in [20], the first implication of the sequential model is to give an additive consistency improving method for inconsistent ACJMs. There are the following two situations:

- When Rk is with acceptable additive consistency and Rk+1 is not acceptably additively consistent, only the entries ri(k+1) (i = 1,2,⋯ ,k) are adjusted to obtain a new Rk+1 with acceptable additive consistency.

- When Rk and Rk+1 are both not acceptably additively consistent, we can obtain a new Rk+1 with acceptable additive consistency by adjusting partial entries of Rk+1 such as ri(k+1) (i = 1,2,⋯ ,k) and others belonging to Rk.

It is obvious that the second situation is more generic than the first one. As an example, we consider the following matrix:

and its submatrix:

The values of additive consistency index can be computed as \(ACI(\bar {R}_{3})=0.1732>0.1212\) and \(ACI(\bar {R}_{4})=0.1333>0.0882,\) respectively. By adjusting the entries r14 = 0.60, r24 = 0.60 and r43 = 0.70, it follows

with \(ACI(\bar {R}_{5})=0.1212 \leq 0.1212\). In addition, when adjusting the entries in \(\bar {R}_{4},\) we have

with \(ACI(\bar {R}_{6})=0.1212 \leq 0.1212\). The method of improving the additive consistency of inconsistent ACJMs will be used to propose a group decision support model, where the feedback mechanism will be constructed from the additive consistency of individual decision information to the consensus reaching in GDM.

3.2 A method of improving additive consistency

In order to adjust an inconsistent ACJM to a new one with acceptable additive consistency, a feasible approach should be proposed. For the convenience and efficiency of decision support systems, it is suitable to ask DMs to give as little information as possible when improving the consistency of decision information. Following the idea in [1, 21], the DM only needs to offer a flexibility degree such that the consistency degree of ACJMs can be improved. For the purpose of achieving a fast yet effective adjustment, we construct an algorithm similar to that in [21]. There are two important aspects to be considered. One is the standard of acceptable ACJMs, and the other is to keep the initial information as much as possible. Hence, the first function is written as:

where \(\bar {R}\) stands for the adjusted ACJM. The smaller the value of Q1, the higher the additive consistency degree of the matrix \(\bar {R}\). As shown in Table 1, the standard of acceptable ACJMs can be used. The second function is defined as:

where \(\bar {r}_{ij}\) belongs to \(\bar {R}=(\bar {r}_{ij})_{n\times n}\). Obviously, the smaller the value of Q2, the more of the initial decision information is kept.

From the viewpoint of optimization, it seems that one should minimize simultaneously the values of Q1 and Q2. The simplest method is to address the following linear combination [18, 19, 21]:

where p and q are two nonnegative constants. When p = 0 or q = 0, we have Q = qQ2 or Q = pQ1, respectively, meaning that only one of the two aspects is considered. However, for a GDM problem, it is unnecessary to require individual decision information to be perfectly consistent. It is sufficient for individual decision information to be of acceptable additive consistency. Hence, by considering the threshold of acceptable additive consistency, here we construct the following optimization problem:

subject to the following condition:

On the other hand, the attitude of DMs towards the adjustment of ACJMs should be considered. Similar to those in [2, 21, 27], the flexibility degree β of DMs is equipped. The other constraint conditions are given as follows [22]:

for 0.5 ≤ rij ≤ 1, and

for 0 ≤ rij ≤ 0.5. According to the additively reciprocal property of \(\bar {R},\) it is sufficient to consider one of Cases I and II. In order to solve the optimization problem (9) subject to (10) and (11)/(12), the penalty function method [32] is applied to rewrite the optimization problem as

where M is a sufficiently large positive number. For numerical computations, Case I is chosen and the PSO algorithm [14, 28, 31] is performed to solve the nonlinear optimization problem (13). Moreover, it is noted that there are many methods to solve an optimization problem, for example, typical mathematical analysis and metaheuristic methods [5, 32]. In the present study, we are motivated by the successful applications of the PSO algorithm to various nonlinear optimization problems [27, 28, 55], so the PSO algorithm is chosen to obtain the optimal solution of the constructed problem (13). The numerical results in the next subsection reveal that the PSO algorithm is effective in solving (13). In particular, we should point out that the value of M is chosen in advance when performing the PSO algorithm. The effects of the value of M on the optimal solution are investigated in the following numerical computations.
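The penalty formulation can be sketched with a small global-best PSO written in plain Python. This is not the authors' implementation, and several ingredients are assumptions because equations (7) to (13) are not reproduced in this text: the index Q1 is taken as the root-mean-square deviation from \(\bar {r}_{ij}=0.5+\frac {1}{n}\sum _{k}(r_{ik}-r_{jk})\), Q2 as the root-mean-square change of the adjusted entries, the penalized cost as Q2 + M·max(0, Q1 − threshold), and the flexibility constraint as the box [rij − β, rij + β] clipped to [0, 1]. The PSO coefficients (0.7, 1.5, 1.5), all names, and the example matrix are hypothetical.

```python
import math
import random


def aci(R):
    """ASSUMED Q1: RMS gap between R and r_bar_ij = 0.5 + mean_k(r_ik - r_jk)."""
    n = len(R)
    m = [sum(row) / n for row in R]
    sq = sum((R[i][j] - (0.5 + m[i] - m[j])) ** 2
             for i in range(n) for j in range(i + 1, n))
    return math.sqrt(2.0 * sq / (n * (n - 1)))


def rebuild(R, idx, x):
    """Copy R and overwrite the adjustable entries, keeping r_ji = 1 - r_ij."""
    A = [row[:] for row in R]
    for (i, j), v in zip(idx, x):
        A[i][j], A[j][i] = v, 1.0 - v
    return A


def penalized(R, idx, x, threshold, M):
    """ASSUMED cost: Q2 + M * max(0, Q1 - threshold)."""
    q1 = aci(rebuild(R, idx, x))
    q2 = math.sqrt(sum((v - R[i][j]) ** 2 for (i, j), v in zip(idx, x)) / len(idx))
    return q2 + M * max(0.0, q1 - threshold)


def pso_adjust(R, idx, threshold, beta, M=10.0, swarm=30, generations=100, seed=0):
    rng = random.Random(seed)
    x0 = [R[i][j] for i, j in idx]
    lo = [max(0.0, v - beta) for v in x0]   # assumed flexibility box
    hi = [min(1.0, v + beta) for v in x0]
    cost = lambda x: penalized(R, idx, x, threshold, M)
    # One particle starts at the original judgements, so the best cost found
    # can never exceed the cost of leaving the matrix unchanged.
    pos = [x0[:]] + [[rng.uniform(a, b) for a, b in zip(lo, hi)]
                     for _ in range(swarm - 1)]
    vel = [[0.0] * len(x0) for _ in range(swarm)]
    pbest, pcost = [p[:] for p in pos], [cost(p) for p in pos]
    g = min(range(swarm), key=lambda k: pcost[k])
    gbest, gcost = pbest[g][:], pcost[g]
    for _ in range(generations):
        for k in range(swarm):
            for d in range(len(x0)):
                vel[k][d] = (0.7 * vel[k][d]
                             + 1.5 * rng.random() * (pbest[k][d] - pos[k][d])
                             + 1.5 * rng.random() * (gbest[d] - pos[k][d]))
                pos[k][d] = min(hi[d], max(lo[d], pos[k][d] + vel[k][d]))
            c = cost(pos[k])
            if c < pcost[k]:
                pbest[k], pcost[k] = pos[k][:], c
                if c < gcost:
                    gbest, gcost = pos[k][:], c
    return rebuild(R, idx, gbest), gcost


# Hypothetical inconsistent 3 x 3 ACJM; all upper-triangle entries are adjustable.
R0 = [[0.5, 0.9, 0.6], [0.1, 0.5, 0.8], [0.4, 0.2, 0.5]]
idx = [(0, 1), (0, 2), (1, 2)]
R_adj, best = pso_adjust(R0, idx, threshold=0.0882, beta=0.2)
print(best, aci(R_adj))
```

Only the upper-triangle entries are optimized; the lower triangle is always rebuilt from reciprocity, which mirrors the remark that one of Cases I and II suffices.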

In what follows, combining the sequential model of ACPCs and the optimization model (13), we elaborate on a new algorithm (Algorithm I) to improve additive consistency of inconsistent ACJMs.

- Step 1: Consider an ACJM R = (rij)n×n without acceptable additive consistency and write the submatrix Rn−1.

- Step 2: Check the acceptable additive consistency of Rn−1. When Rn−1 is acceptably additively consistent, the entries rkn (k = 1,2,⋯ ,n − 1) are chosen to be adjusted. When Rn−1 is not acceptably additively consistent, the entries in Rn−1 are chosen to be adjusted.

- Step 3: Construct the optimization problem (13) by considering (11) with the flexibility degree β.

- Step 4: Run the PSO algorithm to solve the constructed optimization problem.

- Step 5: Obtain an ACJM with acceptable additive consistency.
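Step 2 of the algorithm above is a simple branching rule, sketched below in Python. The acceptance test is passed in as a function because its exact form depends on the consistency index in use; the name `adjustable_entries`, the parameter `is_acceptable`, and the placeholder matrix are hypothetical. Indices are 0-based, so the pair (k, n − 1) stands for the entry r_{k+1,n}.

```python
def adjustable_entries(R, is_acceptable):
    """Select the upper-triangle positions to adjust, following Step 2.

    If the leading submatrix R_{n-1} passes the acceptance test, only the
    last column r_kn (k = 1..n-1) is adjusted; otherwise the entries of
    R_{n-1} itself are adjusted.
    """
    n = len(R)
    sub = [row[:n - 1] for row in R[:n - 1]]
    if is_acceptable(sub):
        return [(k, n - 1) for k in range(n - 1)]
    return [(i, j) for i in range(n - 1) for j in range(i + 1, n - 1)]


# Hypothetical 4 x 4 placeholder; only its size matters for this illustration.
R = [[0.5] * 4 for _ in range(4)]
print(adjustable_entries(R, lambda S: True))   # last column only
print(adjustable_entries(R, lambda S: False))  # entries inside R_3
```

The returned index list can be handed directly to an optimizer that treats those positions as decision variables and fills the lower triangle by reciprocity.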

The above algorithm can be used to derive an ACJM with acceptable additive consistency by keeping the initial decision information as much as possible. Apart from the given ACJM, the DM only needs to provide the value of the flexibility degree β. The adjustment process can be completed in a fast yet effective way. Based on the above algorithm, we have the following result:

Theorem 2

Under Algorithm I, there is a flexibility degree β such that a matrix with acceptable additive consistency can be obtained from an inconsistent ACJM R = (rij)n×n by keeping the initial information as much as possible.

Proof

According to (4), one can obtain a matrix with additive consistency from any ACJM R = (rij)n×n. Acceptable additive consistency is a deviation from additive consistency. Hence, there is a flexibility degree β such that a matrix with acceptable additive consistency is determined by using an inconsistent ACJM R = (rij)n×n. Moreover, the optimization model (13) and the PSO algorithm mean that the obtained matrix can keep the initial information as much as possible. □

3.3 Numerical examples and discussion

Now let us carry out some numerical computations for verifying the algorithm to improve additive consistency of ACJMs. The effects of the parameters β and M on the optimal solutions of Qc and Q1 are investigated respectively. For example, an inconsistent ACJM is given as follows [37]:

Based on the standard of acceptable additive consistency in Table 1, it is easily found that the 5 × 5 and 6 × 6 leading principal submatrices are not acceptably consistent. According to the above proposed algorithm, there are the following two approaches to the additive consistency improvement of \(\bar {R}_{7}:\)

- (a) Adjusting the entries of the 5 × 5 leading principal submatrix;

- (b) Modifying the entries in the fifth and the sixth columns and rows of \(\bar {R}_{7}\).

Moreover, we consider all the entries greater than 0.5 and the additively reciprocal property. The relation (11) is used when running the PSO algorithm to solve the optimization problem (13). The swarm size and the maximum number of generations are both set to 100. For each value of β, the optimal solution of Qc is determined for a fixed parameter M.

Figure 1 shows the variations of the optimal solution of Qc versus β for the selected values of the parameter M under the cases of (a) and (b), respectively. It is seen from Fig. 1 that as β increases, the optimal values of Qc decrease and then tend to a stable value for any parameter M. This means that for a sufficiently large value of β, the inconsistent matrix \(\bar {R}_{7}\) can be adjusted to a new one with \(Q_{1}=\overline {ACI}\). For a small value of β satisfying \(Q_{1}>\overline {ACI},\) the parameter M has a great influence on the value of Qc. In addition, the variations of Q1 versus M are shown in Fig. 2 for the selected values of β under the cases of (a) and (b), respectively. As M increases, the values of Q1 tend to a constant for a fixed β, meaning that a sufficiently large value of M can be determined to obtain the optimal value of Q1. Comparisons between the cases of (a) and (b) in Figs. 1 and 2 show that the two approaches exhibit similar effects on adjusting an inconsistent matrix to an acceptable one.

On the other hand, it is noted that many other methods have been proposed to improve the additive consistency of ACJMs [24, 37, 38, 41, 46, 47, 48, 50, 51]. One of the important issues is how to determine the effectiveness of the consistency improving method. Here, the departure of the original matrix R = (rij)n×n from the modified one \(\bar {R}=(\bar {r}_{ij})_{n\times n}\) is measured by the following criteria [42]:

The determined matrix is considered to be acceptable when δ < 0.2 and σ < 0.1. As shown in Figs. 1 and 2, it is feasible to obtain an acceptably consistent matrix by choosing β = 0.1 and M = 10 to run the PSO algorithm under the cases of (a) and (b), respectively. Figure 3 shows the variations of Qc versus the generation number under the cases of (a) and (b), respectively. One can see from Fig. 3 that as the generation number increases, the values of Qc drop to a stable value. This implies that the optimal solution of Qc is determined by choosing a sufficiently large generation number. For example, when the generation number is 100, the adjusted matrices with acceptable additive consistency can be written as

for Case (a) and

for Case (b). It is easy to compute that \(\delta (\bar {R}_{8})=0.0870,\) \(\sigma (\bar {R}_{8})=0.0457,\) \(\delta (\bar {R}_{9})=0.0761,\) and \(\sigma (\bar {R}_{9})=0.0308\). The obtained results satisfy the criteria δ < 0.2 and σ < 0.1, meaning that the consistency improving method is effective and convincing.
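The effectiveness check can be sketched as follows. The defining equations of δ and σ are not reproduced in this text, so the sketch assumes the commonly used forms: δ is the largest absolute entry change and σ is the square root of the sum of squared entry changes divided by n. The function name and the two matrices are hypothetical.

```python
import math


def deviation_measures(R, R_adj):
    """ASSUMED forms: delta = max |r_bar_ij - r_ij|,
    sigma = sqrt(sum_ij (r_bar_ij - r_ij)^2) / n."""
    n = len(R)
    diffs = [R_adj[i][j] - R[i][j] for i in range(n) for j in range(n)]
    delta = max(abs(d) for d in diffs)
    sigma = math.sqrt(sum(d * d for d in diffs)) / n
    return delta, sigma


# Hypothetical original and adjusted matrices (only r_12 and r_21 differ).
R_before = [[0.5, 0.9, 0.6], [0.1, 0.5, 0.8], [0.4, 0.2, 0.5]]
R_after = [[0.5, 0.8, 0.6], [0.2, 0.5, 0.8], [0.4, 0.2, 0.5]]

delta, sigma = deviation_measures(R_before, R_after)
print(delta < 0.2 and sigma < 0.1)  # acceptance criteria quoted in the text
```

Both measures are computed over the full matrix, so a change to r_ij is counted together with the implied change to r_ji.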

4 A novel group decision support model with a feedback mechanism

The aim of the sequential model of ACPCs is to manage individual decision information finely. A real-time feedback mechanism can be established to remind DMs to avoid irrational and illogical behavior. Then a consensus reaching process in GDM is proposed by controlling the individual consistency level of decision information.

4.1 A real-time feedback mechanism of individual decision information

Let us assume that there is a set of alternatives X = {x1,x2,⋯ ,xn} and a group of experts E = {e1,e2,⋯ ,em} in a GDM problem. The process of providing DMs’ opinions can be recorded in real time. Based on the sequential model of ACPCs, the expert ek gives a series of ACJMs as \(R^{(k)}_{s}=(r_{ij}^{(k)})_{s\times s}\) for s ∈ In and k ∈ Im = {1,2,⋯ ,m}. The decision behavior of the expert ek can be captured by the process of providing \(R^{(k)}_{s}\). Moreover, we compute the priorities of alternatives by using \(R^{(k)}_{s}=(r_{ij}^{(k)})_{s\times s}\), and the simple formula in [9] is used for xi with

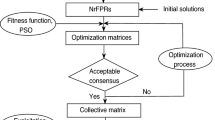

Then the corresponding priority vector is \(\omega ^{(k)}=(\omega _{1}^{(k)}, \omega _{2}^{(k)}, \cdots , \omega _{s}^{(k)})\) and it is normalized into [0,1], then \(\sum \limits _{i=1}^{s}\omega _{i}^{(k)}=1\). In the following, the real-time feedback mechanism is established by offering two reference quantities to DMs. One is the values of additive consistency index and the other is the ranking of the compared alternatives. The algorithm of the real-time feedback mechanism is shown in Fig. 4 and elaborated on as follows:

- Step 1: The expert ek is asked to input her/his opinions on a series of alternatives \(x_{1}\rightarrow x_{2}\rightarrow \cdots \rightarrow x_{n}\) in a decision support system.

- Step 2: When the matrix \(R^{(k)}_{s}\) is completed, the values of the additive consistency index of \(R^{(k)}_{t}\) (1 ≤ t ≤ s) and the rankings of alternatives are output by using a figure.

- Step 3: The values of the additive consistency index and the rankings of the compared alternatives can remind the DM to revise her/his opinions.

- Step 4: When the DM offers a flexibility degree β, the adjustment procedure of improving the consistency starts automatically.

- Step 5: When the DM does not want to adjust her/his judgements, the final matrix is originated.
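The ranking part of the feedback in Step 2 can be sketched in Python as below. Formula (16) is not reproduced in this text, so the sketch assumes normalized row sums, \(\omega _{i}=\frac {2}{s^{2}}\sum _{j}r_{ij}\), which sum to one for any ACJM; the consistency index would be reported alongside in the same loop. All names and the example matrix are hypothetical.

```python
def priorities(R):
    """ASSUMED priority formula: w_i = (2 / s^2) * sum_j r_ij."""
    s = len(R)
    return [2.0 * sum(row) / (s * s) for row in R]


def ranking(R):
    """Alternatives (1-based labels) ordered from most to least preferred."""
    w = priorities(R)
    return [i + 1 for i in sorted(range(len(R)), key=lambda i: -w[i])]


def feedback_trace(R):
    """For each leading submatrix R_s (s >= 2), report priorities and ranking."""
    out = []
    for s in range(2, len(R) + 1):
        sub = [row[:s] for row in R[:s]]
        out.append((s, priorities(sub), ranking(sub)))
    return out


# Hypothetical final matrix of one expert.
R_final = [[0.5, 0.7, 0.6], [0.3, 0.5, 0.4], [0.4, 0.6, 0.5]]
trace = feedback_trace(R_final)
for s, w, order in trace:
    print(s, [round(v, 4) for v in w], order)
```

Comparing consecutive rankings in `trace` is one way to detect a change in the order of previously compared alternatives when a new one is introduced.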

Remark 1

The feedback mechanism is based on the sequential model of ACPCs. The value of the additive consistency index and the ranking of alternatives can be offered with the process of giving the preference intensities of alternatives. The DMs can be reminded in real time such that their final opinions are rational enough.

The advantage of the feedback mechanism lies in the reminding for DMs in real time such that the complexity of decision process can be decomposed and captured. As an example, we investigate the following final matrix:

The leading principal submatrices of \(\bar {R}_{10}\) are analyzed and the priority process of alternatives is illustrated. For convenience, the normalized priority vector of alternatives is determined according to (16) in the following computations. By considering the comparison process of \(x_{1}\rightarrow x_{2}\rightarrow \cdots \rightarrow x_{n},\) the leading principal submatrices of \(\bar {R}_{10}\) are written as Ri (i = 1,2,⋯ ,5), where

It is easy to compute that ACI(R4) = 0.1429 > 0.1212 and ACI(R5) = 0.1440 > 0.1359, meaning that R4 and R5 are not of acceptable additive consistency. In addition, one can see that the ranking x2 ≻ x4 ≻ x1 ≻ x3 for R4 is changed to x2 ≻ x4 ≻ x3 ≻ x5 ≻ x1 for R5. The order of x3 and x1 has been changed due to the introduction of x5. The information related to the additive consistency index and the ranking of alternatives can be fed back to the DM. When the DM wants to modify the judgements such that the derived matrix is of acceptable additive consistency, two approaches are provided. One is to adjust the entries in the fourth and fifth columns and rows of \(\bar R_{10},\) then the modified matrix \(\bar R_{11}\) is obtained as follows:

with \(ACI(\bar R_{11})=0.1359\). The ranking of alternatives is determined as x2 ≻ x4 ≻ x3 ≻ x5 ≻ x1. The other is to modify the entries in the 4 × 4 leading principal submatrix of \(\bar R_{10}\). The new matrix \(\bar {R}_{12}\) is given as follows:

with \(ACI(\bar R_{12})=0.1359\). The ranking of alternatives is obtained as x2 ≻ x4 ≻ x3 ≻ x5 ≻ x1. Then following the idea in [20], the priority processes of alternatives for \(\bar {R}_{11}\) and \(\bar {R}_{12}\) are drawn in Fig. 5. For both \(\bar {R}_{11}\) and \(\bar {R}_{12},\) there is an intersection point of the lines for x1 and x3. This implies that the standard of acceptable additive consistency cannot eliminate the phenomenon of rank reversal. The finding is similar to the result about the acceptable consistency of pairwise comparison matrices in the AHP [20].

Furthermore, when the DM wants to avoid the occurrence of the rank reversal phenomenon, the standard of weak transitivity should be used [24]. For example, applying the method in [24], \(\bar {R}_{11}\) and \(\bar {R}_{12}\) are readjusted to \(\bar {R}_{13}\) and \(\bar {R}_{14}\) with transitivity as follows:

One can compute that \(ACI(\bar R_{13})=ACI(\bar R_{14})=0.0408<0.1359,\) meaning that the two matrices are of acceptable additive consistency. Figure 6 shows the priority processes of alternatives for \(\bar R_{13}\) and \(\bar R_{14}\). It is seen from Fig. 6 that no lines intersect, meaning that the phenomenon of rank reversal does not occur.

In the proposed feedback mechanism, the values of the additive consistency index and the priority processes are provided so that the DMs can adjust their opinions in real time.
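To make the rank reversal check concrete, the following minimal Python sketch compares the rankings obtained before and after a new alternative is introduced and lists the pairs whose relative order is reversed. The function name `reversed_pairs` is hypothetical and is not part of the proposed model; the two rankings are those reported above for R4 and R5.

```python
from itertools import combinations
from typing import List, Tuple


def reversed_pairs(before: List[str], after: List[str]) -> List[Tuple[str, str]]:
    """Return pairs (a, b) with a ahead of b in `before` but behind b in `after`."""
    pos_after = {alt: idx for idx, alt in enumerate(after)}
    # Only alternatives present in both rankings can be compared.
    common = [alt for alt in before if alt in pos_after]
    flipped = []
    for a, b in combinations(common, 2):
        # `common` keeps the order of `before`, so a is ranked ahead of b there.
        if pos_after[a] > pos_after[b]:
            flipped.append((a, b))
    return flipped


ranking_r4 = ["x2", "x4", "x1", "x3"]        # ranking reported for R4
ranking_r5 = ["x2", "x4", "x3", "x5", "x1"]  # ranking reported for R5
print(reversed_pairs(ranking_r4, ranking_r5))
```

For these two rankings the only reversed pair is (x1, x3), which corresponds to the intersection of the priority lines for x1 and x3.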

4.2 Building consensus based on individual consistency control

Based on the feedback mechanism, the final matrices can be considered as the optimal results derived from a sequence of rational comparisons of DMs. The remaining issue is how to build consensus among the experts and reach the final solution. It is assumed that the collective matrix is obtained by using the weighted averaging operator [37, 51]. That is, let \(R^{(k)}=(r_{ij}^{(k)})_{n\times n}\) be the k-th ACJM provided by the expert ek and λk ∈ [0,1] be the corresponding weight for k ∈ Im. Then the collective ACJM Rc is determined as:

\[R^{c}=(r_{ij}^{(c)})_{n\times n}, \qquad (17)\]

where

\[r_{ij}^{(c)}=\sum\limits_{k=1}^{m}\lambda _{k}r_{ij}^{(k)},\qquad \sum\limits_{k=1}^{m}\lambda _{k}=1.\]

In addition, by considering the acceptable additive consistency of R(k) (k ∈ Im), we have the following result:

Theorem 3

When individual ACJMs R(k) (k ∈ Im) are with acceptable additive consistency satisfying

the collective one Rc exhibits acceptable additive consistency.

Proof

Using (5) and (17), we have the following equality:

where

Then, it is computed that

where the relation \(2ab\leq a^{2}+b^{2}\) and the equality \({\sum }_{t=1}^{m}\lambda _{t}=1\) have been used. Therefore, one has

This means that the matrix Rc is acceptably additively consistent and the proof is completed. □

Theorem 3 shows that when the consistency levels of individual matrices are controlled to be acceptable, the collective matrix also has acceptable additive consistency. It is worth noting that a result similar to Theorem 3 was observed by Xu [43] for the aggregation of MRMs using the geometric averaging operator. Here we use the additive consistency index of ACJMs in [51] to develop the result. Moreover, aggregation operators have been widely developed to aggregate individual decision information [12, 43, 52, 53]. When the induced ordered weighted averaging operator is used to aggregate R(k) (k ∈ Im), a result similar to Theorem 3 can be obtained. The detailed procedure is omitted since it is a straightforward extension of Theorem 3.
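As an illustration of the aggregation step, the sketch below computes an entry-wise weighted average of ACJMs and checks that the additively reciprocal property is preserved. The 3 × 3 matrices are made-up toy data, the function names are hypothetical, and the additive consistency index itself is not computed here.

```python
from typing import List

Matrix = List[List[float]]


def weighted_average(matrices: List[Matrix], weights: List[float]) -> Matrix:
    """Entry-wise weighted average of equally sized square matrices."""
    if len(matrices) != len(weights):
        raise ValueError("one weight per matrix is required")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    n = len(matrices[0])
    return [
        [sum(w * m[i][j] for w, m in zip(weights, matrices)) for j in range(n)]
        for i in range(n)
    ]


def is_additively_reciprocal(matrix: Matrix, tol: float = 1e-9) -> bool:
    """Check r_ij + r_ji = 1 for all i, j (so every diagonal entry is 0.5)."""
    n = len(matrix)
    return all(
        abs(matrix[i][j] + matrix[j][i] - 1.0) <= tol
        for i in range(n)
        for j in range(n)
    )


# Toy data (hypothetical, not taken from the examples in this paper).
R1 = [[0.5, 0.7, 0.6], [0.3, 0.5, 0.4], [0.4, 0.6, 0.5]]
R2 = [[0.5, 0.4, 0.8], [0.6, 0.5, 0.9], [0.2, 0.1, 0.5]]

Rc = weighted_average([R1, R2], [0.5, 0.5])
print(is_additively_reciprocal(Rc))
```

Reciprocity carries over because the weights sum to one, so each pair of symmetric entries of the collective matrix is a weighted average of pairs that already sum to one.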

In what follows, similar to the existing works [37, 51], we define the consensus level (CL) by using the distance-based method as follows:

where \(R^{c}=({r}_{ij}^{(c)})_{n\times n}\) and \(R^{(k)}=({r}_{ij}^{(k)})_{n\times n}\) represent the collective and individual matrices, respectively. When CL(R(k)) = 0, there is the greatest consensus between R(k) and Rc. As the value of CL increases, the degree of consensus decreases. In addition, a threshold of the consensus level can be set, denoted by \(\overline {CL}\). When the consensus level CL(R(k)) is greater than \(\overline {CL},\) the adjustment formula is suggested as follows [37]:

\[\bar{R}^{(k)}=\gamma R^{(k)}+(1-\gamma )R^{c},\qquad \gamma \in [0,1]. \qquad (21)\]

When γ = 0, one has \(\bar {R}^{(k)}=R^{c};\) when γ = 1, it gives \(\bar {R}^{(k)}=R^{(k)}\). In other words, as the value of γ decreases from 1 to 0, the new matrix \(\bar {R}^{(k)}\) approaches Rc. Hence, there is a value of γ such that the consensus level of \(\bar {R}^{(k)}\) is smaller than \(\overline {CL}\). Furthermore, we have the following result:

Theorem 4

If the matrices R(k) and Rc are with acceptable additive consistency, \(\bar {R}^{(k)}\) determined by (21) is acceptably additively consistent.

Proof

The proof follows from Theorem 3 by taking m = 2 with the matrices R(k) and Rc and the weights λ1 = γ and λ2 = 1 − γ. □

Finally, the consensus reaching process is shown in Fig. 7. Apart from the method of improving the additive consistency of individual matrices, the key step is the consensus reaching process. Under the control of acceptable additive consistency, the final matrices all have acceptable additive consistency and an acceptable consensus level.
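The adjustment step can be sketched in Python as follows. This is only an illustration under stated assumptions: the consensus level is taken here to be the mean absolute deviation over off-diagonal entries, which is one possible distance-based measure and may differ from the definition used in this paper; the function names and the 3 × 3 matrices are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def consensus_level(individual: Matrix, collective: Matrix) -> float:
    """ASSUMED measure: mean absolute deviation over off-diagonal entries."""
    n = len(individual)
    total = sum(
        abs(individual[i][j] - collective[i][j])
        for i in range(n)
        for j in range(n)
        if i != j
    )
    return total / (n * (n - 1))


def adjust(individual: Matrix, collective: Matrix, gamma: float) -> Matrix:
    """Convex combination: gamma = 1 keeps the individual matrix,
    gamma = 0 returns the collective matrix."""
    return [
        [gamma * r + (1.0 - gamma) * c for r, c in zip(row_k, row_c)]
        for row_k, row_c in zip(individual, collective)
    ]


# Toy data (hypothetical, not taken from the examples in this paper).
R_k = [[0.5, 0.9, 0.8], [0.1, 0.5, 0.7], [0.2, 0.3, 0.5]]
R_c = [[0.5, 0.6, 0.6], [0.4, 0.5, 0.5], [0.4, 0.5, 0.5]]
threshold = 0.1

cl = consensus_level(R_k, R_c)
# With R_c held fixed, the assumed measure scales linearly in gamma,
# so a gamma slightly below threshold / cl is sufficient.
gamma = min(1.0, 0.99 * threshold / cl)
R_adj = adjust(R_k, R_c, gamma)
print(round(cl, 4), round(gamma, 4), round(consensus_level(R_adj, R_c), 4))
```

In a full consensus reaching process the collective matrix is recomputed after each adjustment, so the consensus levels have to be checked again and the step repeated if any of them still exceeds the threshold.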

4.3 Case studies and comparison

In this subsection, two examples are carried out to illustrate the consensus model and to compare it with other methods.

Example 1

It is supposed that there are four alternatives {x1,x2,x3,x4}. Four experts with equal weights λi = 0.25 (i = 1,2,3,4) express their opinions as the following ACJMs [3, 15, 37]:

It is easy to compute that ACI(R(1)) = 0, ACI(R(2)) = 0.1707, ACI(R(3)) = 0.2165, and ACI(R(4)) = 0.1190. Considering the threshold \(\overline {ACI}=0.1212,\) one can see that the matrices R(2) and R(3) should be revised to those with acceptable additive consistency. Based on the proposed group decision support model, the following steps should be completed.
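The screening against the threshold can be written as a short Python sketch. The ACI values are those reported above; the function name is hypothetical, and it is assumed here that a matrix is acceptable when its ACI does not exceed the threshold.

```python
from typing import Dict, List


def matrices_to_revise(aci_values: Dict[str, float], threshold: float) -> List[str]:
    """Return the names of matrices whose ACI exceeds the threshold.

    ASSUMPTION: ACI <= threshold counts as acceptable additive consistency.
    """
    return [name for name, aci in aci_values.items() if aci > threshold]


aci_example1 = {"R(1)": 0.0, "R(2)": 0.1707, "R(3)": 0.2165, "R(4)": 0.1190}
to_revise = matrices_to_revise(aci_example1, threshold=0.1212)
print(to_revise)
```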

In the first step, we improve the consistency levels of R(2) and R(3) by using the proposed method for improving additive consistency. The revised matrices of R(2) and R(3) are given as follows:

Using R(1), \({R}^{(2)}_{r},\) \({R}^{(3)}_{r}\) and R(4), the collective ACJM Rc is obtained by considering (17) and λi = 0.25 (i = 1,2,3,4) as follows:

According to (20), the consensus levels are computed as CL(R(1)) = 0.1101, \(CL(R^{(2)}_{r})=0.1691,\) \(CL(R^{(3)}_{r})=0.1348\) and CL(R(4)) = 0.1130.

In the second step, the threshold of the consensus level is set, for example, as \(\overline {CL}=0.1\). Then, the matrices R(1), \({R}^{(2)}_{r},\) \({R}^{(3)}_{r}\) and R(4) should be modified by using the formula (21). For example, by choosing γ1 = 0.9050, γ2 = 0.5900, γ3 = 0.7400 and γ4 = 0.8850, we have

It is computed that \(CL(R^{(1)}_{1})=0.0997,\) \(CL(R^{(2)}_{1})=0.0998,\) \(CL(R^{(3)}_{1})=0.0998\) and \(CL(R^{(4)}_{1})=0.1\). Then, the collective matrix \({R^{c}_{1}}\) can be determined as follows:

With respect to \({R^{c}_{1}},\) the consensus levels are recomputed as \(CL(R^{(1)}_{1})=0.0949,\) \(CL(R^{(2)}_{1})=0.1074,\) \(CL(R^{(3)}_{1})=0.0921\) and \(CL(R^{(4)}_{1})=0.0928\). One can see that the matrix \(R^{(2)}_{1}\) should be adjusted again, since its consensus level exceeds the threshold. After some computations, we obtain

Then the collective matrix \({R^{c}_{2}}\) is determined as follows:

Finally, all the consensus levels are smaller than the threshold, and the consensus reaching process is completed. Applying (16), one has ω1 = 0.2411, ω2 = 0.2923, ω3 = 0.2346, and ω4 = 0.2320. So the ranking of alternatives is x2 ≻ x1 ≻ x3 ≻ x4.

In order to make a comparison, some results are given in Table 2 by using the existing methods. It is seen from Table 2 that the optimal solution x2 is in accordance with the findings in [3, 15, 37]. Moreover, the ranking x2 ≻ x1 ≻ x4 ≻ x3 is in agreement with that in [37]. There exists a small difference for the ranking of x3 and x4 as compared to the results in [3, 15], which is attributed to the application of different consensus reaching processes.

Example 2

The proposed consensus model has been illustrated in Example 1. We now consider an application to a practical case [17, 26, 37, 45], namely how to evaluate and select suitable locations for a shopping center in Istanbul, Turkey [26, 37, 45]. It is noted that the growth in population drives spending demand in Istanbul [26]. This means that attractive shopping centers are required to meet people's needs. An investor company wants to choose an appropriate location for a shopping center by establishing strategies. Five experts are invited to provide decision information to evaluate six feasible locations xi (i = 1,2,⋯ ,6) [26]. By carefully comparing the six locations in pairs, the ACJMs are given as follows:

The values of ACI are computed as ACI(G(1)) = 0.0789, ACI(G(2)) = 0.0730, ACI(G(3)) = 0.1193, ACI(G(4)) = 0.2033 and ACI(G(5)) = 0.1193. In terms of the threshold \(\overline {ACI}=0.1530,\) it is found that G(4) is unacceptable and should be revised to \({G}^{(4)}_{r}\) with acceptable additive consistency as:

Making use of G(1), G(2), G(3), \({G}^{(4)}_{r}\) and G(5), the collective ACJM Gc is obtained by using (17) and λi = 0.2 (i = 1,2,3,4,5) as follows:

Then the consensus levels can be computed as CL(G(1)) = 0.0816, CL(G(2)) = 0.0850, CL(G(3)) = 0.0814, \(CL(G^{(4)}_{r})=0.1374\) and CL(G(5)) = 0.0697, respectively.

In the following, let us still set the threshold of the consensus level as \(\overline {CL}=0.1\). One can see that \(G^{(4)}_{r}\) should be adjusted by (21). When choosing γ = 0.72, we obtain

The consensus level is calculated as \(CL(G^{(4)}_{1})=0.0996\). Then, we determine the collective matrix \({G^{c}_{1}}\) as follows:

Furthermore, the consensus levels are recomputed as CL(G(1)) = 0.0761, CL(G(2)) = 0.0810, CL(G(3)) = 0.0840, \(CL(G^{(4)}_{1})=0.1071,\) and CL(G(5)) = 0.0712, respectively. It is noted that the matrix \(G^{(4)}_{1}\) should be adjusted again, and a new matrix is obtained as:

Hence, the collective matrix \({G^{c}_{2}}\) is determined as follows:

Now all the consensus levels are smaller than the threshold and the consensus reaching process is completed. Applying (16), one has ω1 = 0.1709, ω2 = 0.2255, ω3 = 0.2775, ω4 = 0.1345, ω5 = 0.0871, and ω6 = 0.1045. For the sake of comparison, some existing results are shown in Table 3. One can see that the rankings of alternatives are all the same, namely x3 ≻ x2 ≻ x1 ≻ x4 ≻ x6 ≻ x5.
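The ranking can be recovered from the priority vector by sorting the alternatives in decreasing order of weight. The following Python sketch uses the weights reported above for this example; the function name is hypothetical.

```python
from typing import List


def rank_alternatives(weights: List[float]) -> List[str]:
    """Order alternatives x1, x2, ... by decreasing priority weight."""
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    return [f"x{i + 1}" for i in order]


# Priority weights reported for Example 2.
weights_example2 = [0.1709, 0.2255, 0.2775, 0.1345, 0.0871, 0.1045]
ranking = rank_alternatives(weights_example2)
print(" ≻ ".join(ranking))
```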

5 Conclusions

In group decision support systems, it is important to finely manage individual decision information and reach a fast yet effective consensus. In this paper, we suppose that a group of experts compare a set of alternatives in pairs and express their opinions as preference intensities with the additively reciprocal property. The sequential model of additive complementary pairwise comparisons is proposed to finely manage individual decision information. A novel method for improving additive consistency has been offered by constructing an optimization model. A feedback mechanism is established so that DMs can be reminded of irrational and illogical decision behavior. Under the control of individual consistency degrees, a consensus model in GDM has been established. The main findings are as follows:

- The sequential model of ACPCs has been proposed to record the decision information and behavior of experts. The irrational and illogical judgements can be checked such that the DMs can be reminded in real time to adjust their opinions.

- When individual ACJMs are of acceptable additive consistency, the collective one obtained by using the weighted averaging operator is with acceptable additive consistency.

- The consensus of a group of experts can be reached under a fixed level by controlling the consistency degrees of individual judgements.

One can find that the proposed method has the advantage of reminding the DMs to give more rational judgements. Moreover, particle swarm optimization (PSO) is used to achieve a fast yet intelligent adjustment of inconsistent individual decision information. The disadvantage of the proposed method is that the consensus process may need to be run repeatedly. In the future, the proposed model could be extended to solve large-scale GDM problems and to develop decision making models with incomplete decision information.

References

Cabrerizo FJ, Pérez IJ, Pedrycz W, Herrera-Viedma E (2007) An improvement of multiplicative consistency of reciprocal preference relations: a framework of granular computing. IEEE Inter Confer Syst Man Cybern, pp 1262–1267

Cabrerizo FJ, Ureña R, Pedrycz W, Herrera-Viedma E (2014) Building consensus in group decision making with an allocation of information granularity. Fuzzy Sets Syst 255:115–127

Chiclana F, Mata F, Martinez L, Herrera-Viedma E, Alonso E (2008) Integration of a consistency control module within a consensus model. Int J Uncertainty Fuzziness Knowl-Based Syst 16:35–53

Capuano N, Chiclana F, Herrera-Viedma E, Fujita H, Loia V (2019) Fuzzy group decision making for influence-aware recommendations. Comput Hum Behav 101:371–379

Cuevas E, Zaldívar D, Pérez-Cisneros M (2018) Advances in metaheuristics algorithms: methods and applications. Springer, Berlin

Dong YC, Xu YF, Yu S (2009) Linguistic multiperson decision making based on the use of multiple preference relations. Fuzzy Sets Syst 160(5):603–623

Dong YC, Zha QB, Zhang HJ, Kou G, Chiclana F, Herrera-Viedma E (2018) Consensus reaching in social network group decision making: Research paradigms and challenges. Knowl-Based Syst 162:3–13

Fan ZP, Ma J, Jiang YP, Sun YH, Ma L (2006) A goal programming approach to group decision making based on multiplicative preference relations and fuzzy preference relations. Eur J Oper Res 174(1):311–321

Fedrizzi M, Brunelli M (2010) On the priority vector associated with a reciprocal relation and a pairwise comparison matrix. Soft Comput 14:639–645

Herrera F, Herrera-Viedma E, Verdegay J (1995) A sequential selection process in group decision making with linguistic assessment. Inf Sci 85:223–239

Herrera-Viedma E, Herrera F, Chiclana F, Luque M (2004) Some issues on consistency of fuzzy preference relations. Eur J Oper Res 154(1):98–109

Herrera-Viedma E, Alonso S, Chiclana F, Herrera F (2007) A consensus model for group decision making with incomplete fuzzy preference relations. IEEE Trans Fuzzy Syst 15(5):863–877

Kacprzyk J, Nurmi H, Fedrizzi M (1997) Consensus under fuzziness. Kluwer Academic Publishers, Massachusetts

Kennedy J, Eberhart RC (1995) Particle swarm optimization. Proc IEEE Int Conf on Neural Networks, Perth, pp 1942–1948

Li CC, Rodriguez RM, Martinez L, Dong YC, Herrera F (2019) Consensus building with individual consistency control in group decision making. IEEE Trans Fuzzy Syst 27(2):319–332

Liao HC, Xu ZS (2014) Priorities of intuitionistic fuzzy preference relation based on multiplicative consistency. IEEE Trans Fuzzy Syst 22:1669–1681

Liao HC, Si GS, Xu ZS, Fujita H (2018) Hesitant fuzzy linguistic preference utility set and its application in selection of fire rescue plans. Int J Environ Res Public Health 15:664

Liu F, Wu YH, Pedrycz W (2018) A modified consensus model in group decision making with an allocation of information granularity. IEEE Trans Fuzzy Syst 26(5):3182–3187

Liu F, Wu YH, Zhang JW, Pedrycz W (2019) A PSO-based group decision making model with multiplicative reciprocal matrices under flexibility. Soft Comput 23(21):10901–10910

Liu F, Zhang JW, Zhang WG, Pedrycz W (2020) Decision making with a sequential modeling of pairwise comparison process. Knowl-Based Syst 195:105642

Liu F, Zhang JW, Zou SC (2020) A decision making model based on the leading principal submatrices of a reciprocal preference relation. Appl Soft Comput 94:106448

Liu F, Zou SC, Wu YH (2020) A consensus model for group decision making under additive reciprocal matrices with flexibility. Fuzzy Sets Syst 398:61–77

Lu J, Zhang G, Ruan D, Wu FJ (2007) Multi-objective group decision making: methods, software and applications with fuzzy set techniques. World Scientific Publishing Co Pte Ltd, Singapore

Ma J, Fan ZP, Jiang YP, Mao JY, Ma L (2006) A method for repairing the inconsistency of fuzzy preference relations. Fuzzy Sets Syst 157:20–33

Orlovsky SA (1978) Decision-making with a fuzzy preference relation. Fuzzy Sets Syst 1(3):155–167

Önüt S, Efendiğil T, Kara SS (2010) A combined fuzzy MCDM approach for selecting shopping center site: an example from Istanbul, Turkey. Expert Syst Appl 37:1973–1980

Pedrycz W, Song MI (2011) Analytic Hierarchy Process (AHP) in group decision making and its optimization with an allocation of information granularity. IEEE Trans Fuzzy Syst 19(3):527–539

Poli R, Kennedy J, Blackwell T (2007) Particle swarm optimization. Swarm Intell 1(1):33–57

Switalski Z (2003) General transitivity conditions for fuzzy reciprocal preference matrices. Fuzzy Sets Syst 137(1):85–100

Saaty TL (1980) The analytic hierarchy process. McGraw-Hill, New York

Shi Y, Eberhart R (1999) Modified particle swarm optimizer. Proc of IEEE ICEC Confer 6:69–73

Sun W, Yuan YX (2006) Optimization theory and methods: Nonlinear programming, vol 1. Springer Science & Business Media, Berlin

Tanino T (1984) Fuzzy preference orderings in group decision-making. Fuzzy Sets Syst 12(2):117–131

Wu P, Liu S, Zhou L, Chen HY (2018) A fuzzy group decision making model with trapezoidal fuzzy preference relations based on compatibility measure and COWGA operator. Appl Intell 48:46–67

Wu J, Chiclana F, Fujita H, Herrera-Viedma E (2017) A visual interaction consensus model for social network group decision making with trust propagation. Knowl-Based Syst 122:39–50

Wu J, Sun Q, Fujita H, Chiclana F (2019) An attitudinal consensus degree to control the feedback mechanism in group decision making with different adjustment cost. Knowl-Based Syst 164:265–273

Wu ZB, Xu JP (2012) A concise consensus support model for group decision making with reciprocal preference relations based on deviation measures. Fuzzy Sets Syst 206:58–73

Wu ZB, Xu JP (2012) A consistency and consensus based decision support model for group decision making with multiplicative preference relations. Deci Support Syst 52(3):757–767

Wu J, Zhao ZW, Sun Q, Fujita H (2021) An maximum self-esteem degree based feedback mechanism for group consensus reaching with the distributed linguistic trust propagation in social network. Inf Fusion 67:80–93

Wu P, Zhou L, Chen H, Tao ZF (2020) Multi-stage optimization model for hesitant qualitative decision making with hesitant fuzzy linguistic preference relations. Appl Intell 50:222–240

Xia MM, Xu ZS, Chen J (2013) Algorithms for improving consistency or consensus of reciprocal [0, 1]-valued preference relations. Fuzzy Sets Syst 216:108–133

Xu ZS (1999) A consistency improving method in the analytic hierarchy process. Eur J Oper Res 116:443–449

Xu ZS (2000) On consistency of the weighted geometric mean complex judgement matrix in AHP. Eur J Oper Res 126:683–687

Xu ZS (2007) Intuitionistic preference relations and their application in group decision making. Inf Sci 177:2363–2379

Xu ZS, Cai XQ (2011) Group consensus algorithms based on preference relations. Inf Sci 181:150–162

Xu ZS, Da QL (2003) An approach to improving consistency of fuzzy preference matrix. Fuzzy Optim Decis Making 2:3–12

Xu YJ, Herrera F (2019) Visualizing and rectifying different inconsistencies for fuzzy reciprocal preference relations. Fuzzy Sets Syst 362:85–109

Xu YJ, Herrera F, Wang HM (2016) A distance-based framework to deal with ordinal and additive inconsistencies for fuzzy reciprocal preference relations. Inf Sci 328:189–205

Xu YJ, Li MQ, Cabrerizo FJ, Chiclana F, Herrera-Viedma E (2019) Algorithms to detect and rectify multiplicative and ordinal inconsistencies of fuzzy preference relations. IEEE Trans Syst, Man, Cybern: Syst. https://doi.org/10.1109/TSMC.2019.2931536

Xu YJ, Liu X, Wang HM (2018) The additive consistency measure of fuzzy reciprocal preference relations. Int J Mach Learn Cybern 9(7):1141–1152

Xu YJ, Wang QQ, Cabrerizo FJ, Herrera-Viedma E (2018) Methods to improve the ordinal and multiplicative consistency for reciprocal preference relations. Appl Soft Comput 67:479–493

Yager RR (1988) On ordered weighted averaging aggregation operators in multicriteria decision making. IEEE Trans Syst Man Cybern-Part B: Cybern 18(1):183–190

Yager RR, Filev DP (1999) Induced ordered weighted averaging operators. IEEE Trans Syst Man Cybern-Part B: Cybern 29(2):141–150

Zadeh LA (1965) Fuzzy sets. Inf Control 8(3):338–353

Zhou XY, Ji FP, Wang LQ, Ma YF, Fujita H (2020) Particle swarm optimization for trust relationship based social network group decision making under a probabilistic linguistic environment. Knowl-Based Syst 200:105999

Zhou XY, Wang LQ, Liao HC, Wang SY, Lev B, Fujita H (2019) A prospect theory-based group decision approach considering consensus for portfolio selection with hesitant fuzzy information. Knowl-Based Syst 168:28–38

Acknowledgements

The authors would like to thank the reviewers for the valuable suggestions such that the paper can be improved greatly. The work was supported by the National Natural Science Foundation of China (Nos. 71871072, 71571054), 2017 Guangxi high school innovation team and outstanding scholars plan, the Guangxi Natural Science Foundation for Distinguished Young Scholars (No. 2016GXNSFFA380004), and the Innovation Project of Guangxi Graduate Education (No. YCSW2021).

Cite this article

Liu, F., Zhang, JW. & Luo, ZH. Group decision support model based on sequential additive complementary pairwise comparisons. Appl Intell 51, 7122–7138 (2021). https://doi.org/10.1007/s10489-021-02248-y
