Abstract

In this work, an improved moth-flame optimization algorithm is proposed to alleviate the problems of premature convergence and convergence to local minima. From the perspective of diversity, an inertia weight with diversity feedback control is introduced into moth-flame optimization to balance the algorithm’s exploitation and global search abilities. Furthermore, a small-probability mutation is added after the position update stage to improve the optimization performance. The performance of the proposed algorithm is extensively evaluated on a suite of CEC’2014 benchmark functions and four constrained engineering optimization problems. The results are compared with those of other improved algorithms reported in the literature. The comparison shows that the proposed method improves the convergence ability of the algorithm and helps it escape local minima.

1 Introduction

In recent years, many applications have given rise to non-convex optimization problems. These problems are very complex in nature, and traditional gradient-based algorithms are unable to achieve ideal results on them [1]. Population-based random search algorithms inspired by nature have been proposed to solve these problems, and they are considered effective. Since Holland proposed the Genetic Algorithm (GA) [2], meta-heuristic random search has opened a different approach to finding solutions. At present, meta-heuristic algorithms based on natural phenomena are mainly divided into three categories. The first is a family of intelligent optimization algorithms inspired by the laws of natural evolution, including the Genetic Algorithm (GA) [2], the Differential Evolution algorithm (DE) [3], and the Cultural Algorithm (CA) [4]. The second is inspired by the group behavior of creatures, such as Particle Swarm Optimization (PSO) [5], which mimics the foraging behavior of birds, and Gray Wolf Optimization (GWO) [6], which mimics the hunting behavior of gray wolves. This category also contains the Salp Swarm Algorithm (SSA) [7], Ant Colony Optimization (ACO) [8], the Bat Algorithm (BA) [9], the Artificial Bee Colony algorithm (ABC) [10], and the Firefly Algorithm (FA) [11]. The third is inspired by natural physical phenomena, such as the Gravitational Search Algorithm (GSA) [12], which is based on the interaction force between particles, the Water Cycle Algorithm (WCA) [13], the Simulated Annealing algorithm (SA) [14], Ion Motion Optimization (IMO) [15], and Multiverse Optimization (MVO) [16]. Intelligent computing algorithms inspired by nature continue to emerge. Kaveh et al. developed the Water Strider Algorithm (WSA) [17] based on the social behavior of water striders. Hayylalam et al. proposed the Black Widow Optimization algorithm (BWO) [18], inspired by the breeding behavior of black widow spiders. Faramarzi et al. developed the Marine Predators Algorithm (MPA) [19] and the Equilibrium Optimizer (EO) [20], based on biological and physical phenomena, respectively. MPA is inspired by foraging strategies among organisms in marine ecosystems and uses three different movement patterns to simulate the interaction between predator and prey, while EO is a physics-based optimization algorithm that originates from the control volume mass balance model. Owing to their excellent convergence properties, both algorithms have already been reported in practical applications.

Metaheuristics are usually considered a promising alternative for complex optimization problems. For example, Faris et al. [21] combined GA with a Random Weight Network (RWN) and proposed a hybrid spam detection system, Auto-GA-RWN. The experimental results on three email corpora show that this method achieves higher recognition and detection rates. For the Feature Selection (FS) problem, Faris et al. [22] designed an enhanced binary SSA optimizer by selecting the best transfer function. The comparison with several advanced methods shows that the proposed method has better accuracy. Taradeh et al. [23] applied GSA to the FS problem and, for the first time, proposed the use of evolutionary crossover and mutation operators to improve the exploration and exploitation capabilities of GSA. Their experiments show that this method is significantly better than other similar methods when dealing with FS tasks.

The Moth-Flame Optimization algorithm (MFO) [24] is an intelligent optimization algorithm proposed by Mirjalili in 2015. The algorithm is inspired by a peculiar navigation mechanism of the moth, i.e., transverse orientation. Moths are able to keep flying straight at night because they use the moon as a reference and always fly at a fixed angle to it. However, this mechanism is only effective for very distant reference objects. When moths are disturbed by artificial light sources, the same positioning method misleads them into spiraling around the light source, which results in the familiar behavior of moths flying into flames. Inspired by this notion, Mirjalili simulated the night flight principle of the moth and proposed this optimization method based on a mathematical model of spiral motion.

The MFO algorithm has attracted wide attention due to its simple structure, robustness, and easy implementation [15], and it has been applied in many fields. Yildiz et al. [25] used the MFO algorithm to determine the optimal machining parameters in the manufacturing process and solved the milling optimization problem. Yousri et al. [26] used the MFO algorithm to extract the diode parameters of a polycrystalline silicon solar module. The experiment shows that MFO performs better when solving this type of problem. Lei et al. [27] proposed a moth-flame optimization based protein complex prediction algorithm and applied it to the problem of protein complex recognition in Protein-Protein Interaction (PPI) networks. The experimental results demonstrate that this method has certain advantages over other similar methods. However, MFO has insufficient global convergence ability in the optimization process and is prone to getting stuck in local minima. To address these problems, Xu et al. [28] combined MFO with a series of mutations to help the algorithm escape local optima and avoid premature convergence when dealing with high-dimensional multimodal problems. Chen et al. [29] combined the Spark distributed computing platform with MFO. Their experiments reveal that the algorithm effectively improves the classification performance of feature selection. Wang et al. [30] used the diploid structure of replication coding to update the moth population, thereby improving the global search ability of the algorithm. Emary et al. [31] proposed an improved moth-flame optimization algorithm that controls the speed of exploration and exploitation by changing the path of the moth's spiral motion. The study shows that the improved MFO establishes a better balance between exploration and exploitation than other methods. Xu et al. [32] introduced the Cultural Learning (CL) mechanism and the Gaussian Mutation (GM) operator into MFO. Tests on benchmark functions show that this remedies the insufficient search ability of the algorithm and mitigates the local optimum issue, resulting in improved solution quality and reliability. Huang et al. [33] guided moths to constantly seek better values by establishing a ring network between flames. This technique improves the exploration ability of the algorithm and provides good results in practical engineering applications. Sapre et al. [34] combined MFO with opposition-based learning, Cauchy mutation, and evolutionary boundary constraint handling. The simulation results show that these methods can significantly enhance the exploration and exploitation capabilities of MFO. Li et al. [35] argued that chaotic maps are one of the best ways to improve the performance of meta-heuristic algorithms and proposed a chaos-enhanced MFO algorithm. Its effectiveness is verified on benchmark function tests and practical engineering applications. Zhang et al. [36] proposed an improved MFO algorithm that combines the Broyden-Fletcher-Goldfarb-Shanno method (BFGS) and Orthogonal Learning (OL). The results show that this method effectively alleviates the evolutionary stagnation of MFO and improves convergence. To mitigate the stagnation of MFO in local optima, Pelusi et al. [1] divided the optimization process of MFO into three phases (exploration, hybrid exploration and exploitation, and exploitation) to ensure that MFO establishes a better balance between exploration and exploitation. Li et al. [37] proposed the use of differential evolution flame generation and dynamic flame guidance to guide the population evolution and improve the global search capability of MFO. In addition, Khalilpourazari et al. [38] introduced the water cycle algorithm into MFO and proposed a hybrid water cycle moth-flame optimization algorithm to enhance the exploitation capabilities of MFO. The results show that this method effectively helps MFO escape local minima.

The aforementioned methods tend to enhance the performance of the solution. However, sometimes the consideration of diversity is insufficient. For instance, Xu et al. [28] improved the algorithm using the notion of mutating search agents, while traditional mutation operators such as Gaussian mutation perform non-directional mutations. These mutations are unable to increase the diversity of the population. The high-frequency operation greatly increases the complexity of the algorithm. Therefore, the improvement of diversity is undoubtedly an important aspect to enhance the optimization performance of the algorithm. When the population diversity is poor and the solution has fallen into local minima, the calculation results do not exhibit any substantial improvements even if the algorithm continues. Jacques et al. [39] proposed a particle swarm optimization algorithm using diversity measures to control the population. The experiment proved that it is significantly better than other contrast algorithms in handling multimodal optimization problems. Wang et al. [40] improved the search diversity of the ant colony algorithm by using both positive and negative feedback at the same time. The experimental results show that the performance of the improved algorithm is significantly improved in dealing with combinatorial optimization problems. In order to enhance the search capability of differential evolution algorithm, Yang et al. [41] proposed an Auto-Enhanced Population Diversity (AEPD) mechanism to improve the algorithm. The simulation results demonstrate that the modified algorithm has better performance than other similar algorithms.

To improve the optimization performance of the algorithm, a diversity and mutation strategy based moth-flame optimization algorithm (DMMFO) is proposed. The main contributions of this paper are as follows:

- In the exploration phase of the moth-flame optimization, a diversity weight component is integrated to alleviate the premature convergence caused by the rapid evolution of the algorithm. This component can continuously switch the population between divergence and contraction within a limited iteration time to maintain the diversity of the population, and balance the exploration and exploitation capabilities of the algorithm.

- The dimensional mutation operator is employed to improve the algorithm’s ability to update the optimal solution. It allows the algorithm to explore unknown area in space in a reasonable way, so that the population can cover a wider range of feasible solutions, and avoid stagnation in evolution.

- The optimal control parameters of the proposed method are obtained through the sensitivity test of the control parameters.

At present, there are few studies on embedding diversity and mutation strategies in MFO algorithms. This observation forms the basis of this work. The rest of the manuscript is organized as follows. Section 2 presents the main structure of the MFO. Section 3 discusses the proposed DMMFO, which adds diversity and mutation mechanisms to MFO. Section 4 analyzes the experiments and results in detail to validate the proposed method. Section 5 investigates the effectiveness of the proposed algorithm in solving four constrained engineering optimization problems. Section 6 contains the conclusions of the paper.

2 Moth-flame optimization

This section presents the mathematical model of the MFO as a simple and efficient optimization technique.

2.1 Population initialization and storage mechanism

The MFO algorithm uses the following relation to initialize the population position in the search space.

where ub and lb represent the upper and lower bounds of the search space, respectively, n represents the population size, d represents the number of variables, i.e., the number of dimensions, and R represents a matrix of random numbers drawn from a uniform distribution on (0,1). The initial position corresponding to each moth is stored in the matrix M.

When the positions of all moths in the search space are obtained, the fitness value of each moth is calculated by using fitness function, and the calculated result is stored in fitness matrix OM.

It is notable that another variable is introduced here, i.e., flame. The moths and flames are actually candidate solutions. The flame can be seen as the flag of the moth in the search space. The moth is responsible for moving in the search space and finding the best position, and the flame represents the best position that has been found so far. The position of the flames and the corresponding fitness values are stored in the matrices F and OF, respectively.

where n represents the number of moths.
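The initialization and storage steps described in this subsection can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names `init_moths`, `evaluate`, and `sort_flames` are hypothetical, and the sketch assumes the usual uniform initialization M = lb + R (ub − lb) and a minimization problem in which the first set of flames is simply the sorted moth population.

```python
import numpy as np

def init_moths(n, d, lb, ub, rng=None):
    """Return an n-by-d matrix M of moth positions drawn uniformly in [lb, ub]."""
    rng = np.random.default_rng() if rng is None else rng
    lb = np.broadcast_to(np.asarray(lb, dtype=float), (d,))
    ub = np.broadcast_to(np.asarray(ub, dtype=float), (d,))
    R = rng.random((n, d))        # uniform random numbers in [0, 1)
    return lb + R * (ub - lb)     # assumed form: M = lb + R * (ub - lb)

def evaluate(M, fitness):
    """Return the fitness vector OM, one value per moth (row of M)."""
    return np.apply_along_axis(fitness, 1, M)

def sort_flames(M, OM):
    """Return flames F and their fitness OF, sorted from best to worst (minimization)."""
    order = np.argsort(OM)
    return M[order].copy(), OM[order].copy()
```

With a sphere function as a stand-in objective, `evaluate(M, lambda x: float(np.sum(x**2)))` produces OM, and `sort_flames(M, OM)` yields the matrices F and OF for the first iteration.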

2.2 Position update mechanism

This mechanism mimics the flame-seeking behavior of moths in real life. According to the sorted flames, the moths fly around the flames in a spiral motion. This phenomenon is described as

where Mi represents the updated position of the i-th moth, S represents a logarithmic spiral curve, Fj represents the j-th flame, b is a constant that defines the shape of the spiral, t is a random number in [a,1], where a decreases linearly from -1 to -2 as the number of iterations increases, and Di is the distance from the i-th moth to the j-th flame.
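As an illustration of the symbols just defined, the sketch below uses the logarithmic spiral of the original MFO, S(Mi, Fj) = Di · e^{bt} · cos(2πt) + Fj with Di = |Fj − Mi|. The function names and the vectorized layout are assumptions made for this example, as is the schedule a = −1 − T/Tmax, which is one simple way to realize the linear decrease from −1 to −2.

```python
import numpy as np

def a_schedule(T, T_max):
    """Lower bound of t; decreases linearly from -1 (T = 0) to -2 (T = T_max)."""
    return -1.0 - T / T_max

def spiral_update(M, F, flame_no, a, b=1.0, rng=None):
    """Move every moth along a logarithmic spiral around its assigned flame."""
    rng = np.random.default_rng() if rng is None else rng
    n, d = M.shape
    # Moth i uses flame i while i < flame_no; the remaining moths share the last flame.
    idx = np.minimum(np.arange(n), flame_no - 1)
    Fj = F[idx]
    D = np.abs(Fj - M)                          # D_i = |F_j - M_i|
    t = (a - 1.0) * rng.random((n, d)) + 1.0    # t is uniform in (a, 1]
    return D * np.exp(b * t) * np.cos(2.0 * np.pi * t) + Fj
```

A moth that already sits on its flame has D = 0 and therefore stays there, which is one reason the basic update can stagnate.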

To strengthen exploitation around the best solutions and to improve the convergence rate of the algorithm in the later stages, MFO adaptively reduces the number of flames, as described by the following relation

where, N represents the maximum number of flames, T represents the current number of iterations, and Tmax represents the maximum number of iterations.
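For a concrete feel of this schedule, the sketch below uses the flame-reduction rule of the original MFO, flame number = round(N − T (N − 1)/Tmax), which matches the symbols defined above. The function name `flame_count` is hypothetical, and Python's `round` resolves exact halves to the nearest even integer, which may differ by one from other rounding conventions.

```python
def flame_count(T, T_max, N):
    """Number of flames at iteration T; shrinks linearly from N to 1."""
    return int(round(N - T * (N - 1) / T_max))

# Example: with N = 50 flames and T_max = 100 iterations
schedule = [flame_count(T, 100, 50) for T in (0, 25, 50, 75, 100)]
```

The schedule starts at N flames and ends with a single flame, so late iterations concentrate all moths around the best solution found so far.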

3 The proposed methods

This section elaborates on the two important components integrated in the MFO, and explains the principle of action. Finally, the computational complexity of the proposed method is analyzed.

3.1 Inertia weight

The poor diversity is often considered as the main reason for premature convergence of the MFO algorithm. Thus, its richness is of great significance for the process of population optimization. When the population has a high diversity, it searches a larger range in a limited area. In other words, the high population diversity directly represents that a large area in the search space has been searched. In contrast, the rapid decrease of diversity in the evolutionary process results in a smaller search space for the algorithm.

In the MFO, the position update of moths is divided into two cases. When the index of a moth is less than or equal to the number of flames, the moth flies around its corresponding flame and updates its position. Due to the adaptive flame reduction mechanism, the moths whose index is greater than the number of flames all update their positions based on only one flame. In this work, the inertia weight of a diversity feedback control [42] is added to the first case by modifying (7). The updated relation of the moths is

Please note that a smaller value of W means that all individuals in the search space are more likely to converge to the optimal point. The relation for calculating the inertia weight is

where, N represents the population number, dim represents the dimension, and \( M^{T}_{i,j} \) represents the value of the i-th moth in the j dimension at the T-th iteration, and \( \bar {M}^{T}_{j}\) represents the mean value of the moth in the j dimension at the T-th iteration.

The symbol L is defined as the maximum diagonal distance of the search space and is obtained as

where, ubj and lbj represent the upper bound and lower bound of the j-th dimension in the search space, respectively.
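To make these quantities concrete, the sketch below computes the diagonal length L as the Euclidean length of the vector ub − lb, together with a commonly used distance-to-mean diversity measure normalized by N and L. The exact expressions for C and W used by the authors are not reproduced here, so the `diversity` function should be read as an assumption that merely agrees with the symbols defined above; both function names are hypothetical.

```python
import numpy as np

def diagonal_length(lb, ub):
    """Length of the longest diagonal of the box [lb, ub]."""
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    return float(np.sqrt(np.sum((ub - lb) ** 2)))

def diversity(M, L):
    """Mean distance of the moths to the population centroid, scaled by L.

    Assumed form: (1 / (N * L)) * sum_i sqrt(sum_j (M[i, j] - mean_j)^2).
    """
    centroid = M.mean(axis=0)                             # mean value per dimension
    dist = np.sqrt(np.sum((M - centroid) ** 2, axis=1))   # distance of each moth
    return float(dist.sum() / (M.shape[0] * L))
```

Under this assumed measure, a population that has collapsed onto a single point has diversity 0, while a widely scattered population yields a larger value that a feedback rule can use to adjust the inertia weight.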

The value of C is determined by the difference in positions of the moths in different dimensions. In (10), a smaller W indicates that the whole population continuously evolves toward the optimal value. At the beginning of the iterations, as the value of T is small, the value of C has a greater impact on (11). When the overall trend of convergence reaches a certain point, W increases rapidly, making the group approach the optimal point in a stable form. The current convergence result then reduces W in the next iteration, so that the moths conduct a more detailed search within a smaller flight radius near a better solution.

The effect of diversity on population evolution is graphically presented in Fig. 1. It is evident from Fig. 1a that in the early stage of the iterations, due to the influence of weights, the population in the search domain is relatively scattered. For some complex functions, the algorithm easily falls into the local minima trap during the evolution process. However, increasing the diversity of the population reduces this risk as presented in (Fig. 1b). Even though most solutions fall into local optima, there are still search agents that jump out of the trap and approach global optima due to the expansion of search space. In the later stages of iteration, W decreases due to the gradual approach of \( 1-\frac {T}{T_{max}} \) to zero. At this time, more moths will find the best flame mainly based on (10). Finally, the population converges when a termination criterion is met as presented in (Fig. 1c).

3.2 Position variation

In the MFO algorithm, the flames are obtained by evaluating and ranking the fitness of the moths’ positions, and each sorted moth can only be updated according to its corresponding flame. If the first moth falls into a local minimum, the algorithm has difficulty leaving it. Therefore, at the end of each position update, (1) is used with a small probability to reinitialize the positions of the three moths with the best fitness values in randomly selected dimensions. This is presented in Fig. 2.
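A minimal sketch of this mutation step is given below. It assumes a minimization problem, a mutation probability of 0.1 checked independently for each of the three best moths, and a single randomly chosen dimension per mutation; how many dimensions are redrawn at once is an assumption of this example, and the function name `mutate_best` is hypothetical.

```python
import numpy as np

def mutate_best(M, OM, lb, ub, p=0.1, k=3, rng=None):
    """With probability p, reinitialize one random dimension of each of the k best moths."""
    rng = np.random.default_rng() if rng is None else rng
    M = M.copy()                              # leave the caller's matrix untouched
    n, d = M.shape
    lb = np.broadcast_to(np.asarray(lb, dtype=float), (d,))
    ub = np.broadcast_to(np.asarray(ub, dtype=float), (d,))
    for i in np.argsort(OM)[:k]:              # indices of the k best moths
        if rng.random() < p:                  # small-probability trigger
            j = rng.integers(d)               # randomly selected dimension
            M[i, j] = lb[j] + rng.random() * (ub[j] - lb[j])
    return M
```

Because only one coordinate of a few moths changes, most of the information carried by the best solutions is preserved while the starting point of the next search step is shifted.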

This not only retains some of the original attributes of the moth, but also changes its position in a certain direction, causing the search starting point to shift. In other words, the flame corresponding to the first moth is not necessarily the brightest. There may be brighter flames around it whose glow is dimmed by distance. The random variation is similar to the wind in nature, which may blow from different directions and change the position of the moths, thereby helping them to find a better solution. Because the amplitude and frequency of the mutations are low, the moths do not significantly lose track of the original solution. The probability calculation procedure is (if rand < 0.1). The random dimension selection procedure is

3.3 Computational complexity analysis

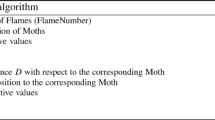

The time complexity of the DMMFO mainly depends on the maximum number of iterations (Q), initialization, sorting, position update, inertia weight, and mutation mechanism. The initialization costs O(n × d), where n is the number of moths and d is the number of dimensions. Since quicksort is adopted, sorting costs \( O(n^{2}) \) per iteration in the worst case, i.e., \( O(Q \times n^{2}) \) over all iterations. For a population of n moths and a d-dimensional optimization problem, updating the positions and computing the diversity weight requires O(Q × n × d). Since the mutation is probabilistic and only the best three moths are selected for mutation, it requires O(Q × 3 × d) in the worst case. Collecting these terms, the upper bound of the computational complexity of DMMFO is \( O(Q(n^{2} + nd)) \). The pseudocode of the proposed DMMFO is as presented below.

4 Simulation experiment

The CEC’2014 benchmark functions [43] were selected to test the performance of the proposed DMMFO method (see Table 1), which was compared with the basic MFO, PSO, and other improved algorithms [28]. CEC’2014 consists of four parts, namely 3 unimodal functions (1-3), 13 simple multimodal functions (4-16), 6 hybrid functions (17-22), and 8 composition functions (23-30).

4.1 Discussion of control parameters

The parameters of meta-heuristic algorithms often have a greater impact on the convergence performance of the algorithm. The main control parameter of MFO is b in (7). This parameter defines the shape of the logarithmic spiral curve. To explore the evolution caused by the different values of b and analyze the effect of b on convergence, the algorithm runs in six different types of functions with the following fixed parameters: population number n = 50, maximum fitness evaluation times MaxFEs = 10000 × dim. Tables 2, 3, 4 show the test results in three different dimensions under different values of b.

As presented in Table 2, b = 1 gives the best results on five of the functions. The situation changes when the dimension is 30: b = 1 achieves the best results only on the F7 and F30 functions, and, except for b = 1.5, the other values have a similar effect. For 50 dimensions, b = 1 again achieves the best optimization effect, followed by b = 0.2. Therefore, this paper sets b to 1 to obtain the best overall performance.

To ensure a fair comparison, all algorithms are executed in the same environment in this paper. The software used for executing all the algorithms is Matlab2018b, the operating system of the PC used for the simulations is Windows10, and the CPU is an Intel Core i7 at 3.0 GHz. The experimental parameters are a population size of 50 and a maximum number of fitness evaluations MaxFEs = 10000 × dim. Each algorithm runs independently 50 times, and the results in 10, 30, and 50 dimensions are collected and subjected to statistical analysis. The control parameters of all algorithms and their variants are consistent with the corresponding literature.

4.2 Evaluation standard

To evaluate the performance of the algorithm, this paper uses two common evaluation indexes, namely, the average convergence value and the standard deviation. The mean value reflects the convergence accuracy of the algorithm, while the standard deviation shows its stability. To compare the algorithms more comprehensively, the Wilcoxon signed-rank test is also used in this work [44]. This method uses a limited number of samples to evaluate the relative merits of two algorithms. First, the final convergence results of the two algorithms on the same test function are paired and subtracted. The differences are then ranked by absolute value, and the ranks of the positive and negative differences are summed and recorded as R+ and R−, respectively. It is generally believed that the former algorithm is better when R+ is greater than R−, and vice versa. The significance level is set to 0.05 in this paper. Please note that the symbols “ + ”, “ - ” and “ = ” indicate that the algorithm proposed in this work is superior to, inferior to, and equal to the algorithm to which it is being compared. gm represents the difference between the numbers of “ + ” and “ - ” symbols.
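The rank sums used by this test can be computed as in the sketch below. It assumes minimization, so a positive difference means that the first algorithm reached the lower error, drops zero differences, and assigns average ranks to ties, which is the usual convention; the function name `signed_rank_sums` is hypothetical.

```python
import numpy as np

def signed_rank_sums(errors_a, errors_b):
    """Return (R_plus, R_minus); R_plus collects the ranks where algorithm A is better."""
    diff = np.asarray(errors_b, dtype=float) - np.asarray(errors_a, dtype=float)
    diff = diff[diff != 0]                        # zero differences are discarded
    absd = np.abs(diff)
    order = np.argsort(absd, kind="stable")
    ranks = np.empty(diff.size)
    ranks[order] = np.arange(1, diff.size + 1)    # rank 1 = smallest |difference|
    for v in np.unique(absd):                     # tied values share the average rank
        tie = absd == v
        ranks[tie] = ranks[tie].mean()
    r_plus = float(ranks[diff > 0].sum())
    r_minus = float(ranks[diff < 0].sum())
    return r_plus, r_minus
```

For the p-value at the 0.05 significance level, a library routine such as `scipy.stats.wilcoxon` can be applied to the same paired samples.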

4.3 Unimodal test functions result analysis

Tables 5, 6, 7 summarize the performance indicators of several algorithms on the unimodal test functions in different dimensions. Except for F3, the proposed DMMFO is dominant in all evaluation indicators. These functions are unimodal and non-separable plate-shaped problems, so even though there is only a single minimum, it is difficult for an algorithm to converge to it. Although the DMMFO is less accurate than LGCMFO on F3 in each dimension, it is better than CMFO. The convergence graphs of the average best-so-far solutions are shown in Figs. 3, 4, 5, 6, 7 and 8. Note that, on the test function F1, the proposed DMMFO does not converge prematurely as MFO and CMFO do, and its curve shows no step-like plateaus. In addition, the proposed DMMFO converges faster than LGCMFO. This indicates that DMMFO is able to converge continuously when dealing with this type of problem. The underlying reason is that the added diversity weight enlarges the region searched by the population and enables the algorithm to find a better solution within a limited number of iterations. Moreover, it also guides the population to evolve towards the global optimum, thereby improving the convergence speed of the algorithm. This shows that the exploitation ability of the proposed method on unimodal problems has been greatly improved.

4.4 Simple multimodal test functions result analysis

As presented in Tables 8, 9, 10, the DMMFO has a clear advantage in all tested dimensions. The main reason is that the diversity weight and mutation strategy in DMMFO establish a balance between exploration and exploitation. For the F5 and F12 functions, the gaps between the algorithms are very small, but the DMMFO still has a slight lead after several runs. For function F7, the optimization performance of the DMMFO improves markedly as the number of dimensions increases. In Figs. 9, 10, 11, 12, 13 and 14, the convergence curves on the simple multimodal test functions decline in a step-like or cliff-like manner. This suggests that the algorithm has a relatively prominent exploration ability. Although the search on the functions F7, F14, and F15 passes through several local optima, it always continues to evolve. This shows that the diversity weight in this work effectively improves the diversity of the population and alleviates the crowding of the population in multimodal problems. The mutation strategy applied after the position update changes the evolution direction of the population once the algorithm falls into a local optimum. This helps the proposed algorithm to jump out of local solutions.

4.5 Hybrid test functions result analysis

The results of the proposed algorithm on the hybrid test functions are given in Tables 11, 12 and 13. Except for F20 and F23, the proposed DMMFO has the best optimization ability. For the function F20, although the final convergence result of the DMMFO is not as good as that of the PSO algorithm, its performance is clearly better than that of MFO. In the F18 test, the proposed DMMFO is significantly better than the LGCMFO, and a higher dimension yields a better optimization performance. Compared with CMFO and MFO, the solution accuracy has been greatly improved. A possible reason is that the diversity weight embedded in the exploration stage enhances the algorithm’s global search capability, and the mutation strategy increases the randomness of population movement to a certain extent. Thus, DMMFO achieves a balance between exploration and exploitation on hybrid functions of different dimensions. From the box plot presented in Fig. 18, it is observed that the results of the algorithm are stable over multiple runs. In the tests of the function F21 for different dimensions, the indicators of the DMMFO outperform those of the other algorithms. Hence, although the advantage of the proposed DMMFO is limited in low dimensions, its performance is quite competitive in medium and high dimensions (Figs. 15, 16, 17, 18, 19, and 20).

4.6 Composition test functions result analysis

Tables 14, 15 and 16 present the comparison results for the selected composition test functions. On F29 and F30, the proposed DMMFO performs better than MFO and CMFO. It still maintains obvious advantages in the remaining seven test functions. Although LGCMFO’s performance is very stable on F23 in each dimension and on F24, F25, F27, and F28 in medium and high dimensions, none of the five algorithms is able to find the global optimum. In the 50-dimensional F26 function test, the results of the two improved algorithms are worse than MFO, but the proposed DMMFO improves the accuracy of the solution. Hence, the performance of this algorithm on the composition test functions is better than that of MFO, CMFO, and PSO, and second only to LGCMFO. The aforementioned results show that the diversity weight and mutation strategy proposed in this work significantly enhance the optimization performance of the algorithm (Figs. 21, 22, 23, 24, 25, and 26).

4.7 Population diversity comparison

This section discusses the role of the proposed method in improving population diversity. Figures 27, 28, 29 compare the diversity of MFO and DMMFO on six different types of functions. The diversity charts show the average distance between all solutions at each iteration.

As presented in Fig. 27, the population of proposed DMMFO is very active in three different dimensions. For the functions F2, F7, and F8 under 10 dimensions condition, the MFO curve becomes smoother at the initial stage of iteration due to the rapid loss of algorithm’s diversity. In functional tests of 30 and 50 dimensions, it can be clearly observed that the average distance between the proposed DMMFO populations in the exploration stage is greater than that of the MFO. Moreover, the diversity curve of the algorithm quickly approaches 0 when MFO optimizes 50 dimensional functions F8, F18, and F30. From the results in Section 4.4, this indicates that the population has fallen into the trap of local minima. Due to the effect of the diversity weight, the population of proposed DMMFO is evenly distributed over the search space at the beginning of the iterations. It balances the exploration and exploitation ability of the algorithm. In addition, this allows the algorithm to perform a global search more efficiently and jump out of the local minima if trapped in one.

The convergence of the algorithm includes two stages, divergence and contraction of particles. The divergence process of the particles of the algorithm is the premise of the shrinking process, i.e., the diversity of the population is reduced only if the diversity of population is increased first. This is the purpose of introducing diversity weight in the proposed DMMFO.

5 Engineering optimization examples

In this section, four typical practical constrained engineering optimization problems are employed to further investigate the performance of DMMFO, and the results are compared with other reported methods.

5.1 Problem of tension/compression spring design

This problem was raised by Belegundu (1982) [45]. Its goal is to minimize the weight of a tension/compression spring (Fig. 30).

There are three design variables for this problem, namely, the wire diameter d (x1), the coil diameter D (x2), and the number of active coils P (x3). The optimization model of the problem is formulated as

Minimize:

Subject to:

Variable range:
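As a hedged illustration, the model can be written in code using the formulation that is commonly reported in the literature for this benchmark. The coefficients and the variable ranges (0.05 ≤ x1 ≤ 2, 0.25 ≤ x2 ≤ 1.3, 2 ≤ x3 ≤ 15) are taken from that common formulation and are an assumption here, not a transcription of this paper's equations. The function names and the static quadratic penalty are hypothetical choices; this section does not state which constraint-handling scheme DMMFO uses.

```python
# Assumed variable ranges of the common benchmark formulation.
SPRING_BOUNDS = [(0.05, 2.0), (0.25, 1.3), (2.0, 15.0)]


def spring_weight(x):
    """Objective: weight of the spring for x = (d, D, number of active coils)."""
    d, D, n = x
    return (n + 2.0) * D * d ** 2


def spring_constraints(x):
    """Inequality constraints written as g_i(x) <= 0 (assumed coefficients)."""
    d, D, n = x
    g1 = 1.0 - (D ** 3 * n) / (71785.0 * d ** 4)             # minimum deflection
    g2 = ((4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
          + 1.0 / (5108.0 * d ** 2) - 1.0)                    # shear stress
    g3 = 1.0 - 140.45 * d / (D ** 2 * n)                      # surge frequency
    g4 = (d + D) / 1.5 - 1.0                                  # outer diameter
    return [g1, g2, g3, g4]


def spring_penalized(x, weight=1e6):
    """Objective plus a static quadratic penalty on violated constraints."""
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(x))
    return spring_weight(x) + weight * violation


candidate = (0.05, 0.5, 10.0)
print(spring_weight(candidate))
print(spring_constraints(candidate))
print(spring_penalized(candidate))
```

The candidate above violates the shear stress constraint, so its penalized value is larger than its raw weight; a population-based optimizer would minimize `spring_penalized` within `SPRING_BOUNDS`.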

This problem has been solved with different heuristic algorithms, for instance, PSO [46], ES [47], GA [48], and DE [49]. In Table 17, the best results of this paper are compared with those of the other methods. It is observed that the proposed DMMFO effectively solves this problem and provides the best design solution among the compared methods.

5.2 Problem of pressure vessel design

The design problem of a pressure vessel was first proposed by Kannan et al. [50]. This problem focuses on computing the minimum total cost (including material, forming, and welding costs) of the pressure vessel. As presented in Fig. 31, the vessel has four design variables, namely, Ts (X1, cylinder thickness), Th (X2, head cover thickness), R (X3, inner radius of the cylinder), and L (X4, cylinder length). The two variables Ts and Th must be integer multiples of 0.0625 in, in accordance with the available thicknesses of the steel plate. The optimization model of this problem is formulated as below.

Minimize:

Subject to:

Variable range:
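A minimal sketch of this model is given below. The cost coefficients, the volume requirement, and the length limit follow the formulation commonly reported in the literature for this benchmark and are an assumption here rather than a transcription of this paper's equations. The helper `snap_to_plate`, which rounds a thickness to the nearest multiple of 0.0625 in, is a hypothetical way of enforcing the discreteness of Ts and Th.

```python
import math

STEP = 0.0625  # plate thickness increment in inches (stated in the text)


def snap_to_plate(thickness):
    """Round to the nearest integer multiple of STEP, using at least one step."""
    return max(1, round(thickness / STEP)) * STEP


def vessel_cost(x):
    """Total cost for x = (Ts, Th, R, L); coefficients are assumed."""
    ts, th, r, length = x
    return (0.6224 * ts * r * length + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length + 19.84 * ts ** 2 * r)


def vessel_constraints(x):
    """Inequality constraints written as g_i(x) <= 0 (assumed coefficients)."""
    ts, th, r, length = x
    g1 = -ts + 0.0193 * r                      # cylinder thickness vs. radius
    g2 = -th + 0.00954 * r                     # head thickness vs. radius
    g3 = (-math.pi * r ** 2 * length
          - (4.0 / 3.0) * math.pi * r ** 3 + 1296000.0)   # minimum volume
    g4 = length - 240.0                        # maximum cylinder length
    return [g1, g2, g3, g4]


# Continuous thicknesses proposed by an optimizer are snapped before evaluation.
raw = (0.8, 0.44, 42.0, 177.0)
design = (snap_to_plate(raw[0]), snap_to_plate(raw[1]), raw[2], raw[3])
print(design)
print(vessel_cost(design))
print(vessel_constraints(design))
```

Snapping inside the evaluation keeps the optimizer itself continuous, which is one simple design choice; other studies handle the discrete variables differently.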

The proposed DMMFO is used to solve this problem, and its results are compared with those of PSO [46], GA [51], DE [49], and ACO [52] in Table 18. Table 18 shows that the algorithm proposed in this work obtains the best design cost among the compared methods.

5.3 Problem of cantilever beam design

This is a weight minimization problem for a cantilever beam with a square cross section [53]. As presented in Fig. 32, the cantilever beam is composed of five hollow blocks, so the number of parameters is also five, and the design parameter Xi is the side length of the i-th square block. The proposed algorithm is compared with GOA [54], CS [55], MMA [56], and SOS [57] in Table 19. The optimization model of this problem is formulated as

Minimize:

Subject to:

Variable range:
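The model can be sketched as follows, assuming the formulation commonly reported in the literature for this benchmark: a weight proportional to the sum of the five side lengths, a single deflection constraint, and the range 0.01 ≤ Xi ≤ 100. These coefficients are an assumption here, not a transcription of this paper's equations, and the function names are hypothetical.

```python
# Assumed coefficients of the deflection constraint, one per block.
DEFLECTION_COEFFS = (61.0, 37.0, 19.0, 7.0, 1.0)
BEAM_BOUNDS = [(0.01, 100.0)] * 5  # assumed variable range


def beam_weight(x):
    """Objective: weight of the beam for the five side lengths in x."""
    return 0.0624 * sum(x)


def beam_constraint(x):
    """Deflection constraint written as g(x) <= 0."""
    return sum(c / xi ** 3 for c, xi in zip(DEFLECTION_COEFFS, x)) - 1.0


def is_feasible(x, tol=1e-9):
    """True if x lies inside the bounds and satisfies the constraint."""
    in_bounds = all(lo <= xi <= hi for xi, (lo, hi) in zip(x, BEAM_BOUNDS))
    return in_bounds and beam_constraint(x) <= tol


uniform = [6.0] * 5   # all blocks equally thick
thin = [3.0] * 5      # too slender, deflection limit violated
print(beam_weight(uniform), is_feasible(uniform))
print(beam_weight(thin), is_feasible(thin))
```

Because the coefficients decrease from the first block to the last, a feasible design can save weight by making the later blocks thinner than the first ones instead of using a uniform side length.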

As shown in Table 19, the proposed DMMFO performs significantly better than the other compared algorithms.

5.4 Problem of three-bar truss design

The three-bar truss problem [58] (Fig. 33) is a structural optimization problem that focuses on minimizing the weight of the truss. Two design parameters need to be determined. Owing to its difficult constrained search space, this problem is widely used as a test case. The formulation of this problem is

Minimize:

Subject to:

Variable range:
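A minimal sketch of this model follows, assuming the formulation commonly reported in the literature for this benchmark: bar length l = 100 cm, load P = 2 kN/cm², allowable stress σ = 2 kN/cm², and cross-sectional areas in the range 0 ≤ x1, x2 ≤ 1. These constants are an assumption here, not a transcription of this paper's equations, and the function names are hypothetical.

```python
import math

BAR_LENGTH = 100.0  # cm (assumed)
LOAD = 2.0          # kN/cm^2 (assumed)
STRESS_MAX = 2.0    # kN/cm^2 (assumed)
ROOT2 = math.sqrt(2.0)


def truss_weight(x):
    """Objective: weight of the truss for cross-sectional areas x = (x1, x2)."""
    a1, a2 = x
    return (2.0 * ROOT2 * a1 + a2) * BAR_LENGTH


def truss_constraints(x):
    """Stress constraints written as g_i(x) <= 0; requires x1 > 0."""
    a1, a2 = x
    denom = ROOT2 * a1 ** 2 + 2.0 * a1 * a2
    g1 = (ROOT2 * a1 + a2) / denom * LOAD - STRESS_MAX
    g2 = a2 / denom * LOAD - STRESS_MAX
    g3 = 1.0 / (a1 + ROOT2 * a2) * LOAD - STRESS_MAX
    return [g1, g2, g3]


heavy = (1.0, 1.0)   # upper bound of both areas: feasible but heavy
light = (0.1, 0.1)   # very light: stress limits violated
for design in (heavy, light):
    feasible = all(g <= 0.0 for g in truss_constraints(design))
    print(design, truss_weight(design), feasible)
```

The two sample designs illustrate why the search space is difficult: reducing the areas lowers the weight but quickly violates the stress limits, so the optimum lies on the constraint boundary.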

The proposed algorithm is used to solve this problem, and its results are compared with those of the methods presented in [53], [58], and [59]. As presented in Table 20, the proposed algorithm outperforms the other methods.

In summary, this section investigates the performance of the proposed DMMFO on four practical engineering examples. These examples are complex constrained problems with unknown search spaces. The experimental results show that DMMFO obtains better solutions than the other methods, which provides strong evidence for the applicability of DMMFO to real-life problems.

6 Conclusions and future work

This paper integrates two efficient mechanisms into MFO to improve the performance of the algorithm. The global exploration ability of the algorithm is enhanced by embedding an inertia weight with diversity feedback control in a specific position update stage. Because the optimal solution is obtained by updating and ranking the moths according to their positions, the optimization ability of some moths is reduced. To mitigate this issue, the better moths are mutated with a certain probability in the improved algorithm to further ensure the diversity of the population and improve the exploration capacity of the algorithm. The CEC’2014 benchmark functions were used to test the proposed algorithm, which was evaluated comprehensively with three indicators, namely, the average value, the standard deviation, and the Wilcoxon rank test. Furthermore, the proposed algorithm was employed to solve four engineering problems. The experimental results show that the improved algorithm proposed in this work performs better in terms of convergence accuracy and the ability to jump out of local optima.

Although DMMFO showed an acceptable performance on the tested problems, it has some limitations. It is still prone to falling into local minima when it is applied to high-dimensional optimization problems. The duty of the moths in DMMFO is to perform a global search. If all the moths are trapped in a large local minimum, the underlying reason is that the radius of the moths’ spiral motion is not large enough to find a better solution that would help the algorithm jump out of the local optimum. Therefore, the exploration ability of DMMFO still needs to be further improved.

There are many complex optimization problems in practical design tasks, for instance, the design of barrel vault structures with optimal weight and the shape optimization of marine propellers to achieve the least loss. These problems are highly constrained and computationally expensive. The results of this paper indicate that DMMFO is a simple and effective method for solving this type of constrained problem with an unknown search space. In addition, it is worth researching the application of DMMFO to optimizing the penalty factor and gamma of the support vector machine to improve its adaptability, or to fitting the undetermined parameters of a photovoltaic system to improve the conversion efficiency. Moreover, using DMMFO to optimize the site coordinates of base stations on a map to obtain higher coverage is also an interesting problem. On the other hand, the current study of MFO has focused on single-objective optimization. Techniques for applying this algorithm to multi-objective problems can be investigated in future studies.

References

[1] Pelusi D, Mascella R, Tallini LG, Nayak J, Naik B, Deng Y (2020) An improved moth-flame optimization algorithm with hybrid search phase. Knowl Based Syst 191:105277

[2] Holland JH (1973) Genetic algorithms and the optimal allocation of trials. SIAM J Comput 2(2):88–105

[3] Storn R, Price K (1997) Differential evolution - a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(4):341–359

[4] Khodabakhshian A, Hemmati R (2013) Multi-machine power system stabilizer design by using cultural algorithms. Int J Electr Power Energy Syst 44(1):571–580

[5] Kennedy J, Eberhart RC (2002) Particle swarm optimization. Proceedings of the 1995 IEEE International Conference on Neural Networks 4:1942–1948

[6] Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

[7] Mirjalili S, Gandomi AH, Mirjalili SZ, Saremi S, Faris H, Mirjalili SM (2017) Salp swarm algorithm. Adv Eng Softw 114:163–191

[8] Dorigo M, Maniezzo V, Colorni A, et al. (1996) Ant system: optimization by a colony of cooperating agents. IEEE Trans Syst Man Cybern Syst 26(1):29–41

[9] Yang X, Gandomi AH (2012) Bat algorithm: a novel approach for global engineering optimization. Eng Comput 29(5):464–483

[10] Karaboga D, Akay B (2009) A comparative study of artificial bee colony algorithm. Appl Math Comput 214(1):108–132

[11] Yang X (2010) Firefly algorithm, stochastic test functions and design optimisation. International Journal of Bio-inspired Computation 2(2):78–84

[12] Rashedi E, Nezamabadi-pour H, Saryazdi S (2009) GSA: A gravitational search algorithm. Inform Sci 179(13):2232–2248

[13] Eskandar H, Sadollah A, Bahreininejad A, Hamdi M (2012) Water cycle algorithm - a novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput Struct 110(1):151–166

[14] Kirkpatrick S, Gelatt CD, Vecchi MP (1983) Optimization by simulated annealing. Science 220(4598):671–680

[15] Wang C, Wang B, Cen Y, Xie N (2019) Ions motion optimization algorithm based on diversity optimal guidance and opposition-based learning. Control and Decision: 1–13

[16] Mirjalili S, Mirjalili SM, Hatamlou A (2016) Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput and Applic 27(2):495–513

[17] Kaveh A, Dadras Eslamlou A (2020) Water strider algorithm: A new metaheuristic and applications. Structures 25:520–541

[18] Hayyolalam V, Kazem AAP (2019) Black widow optimization algorithm: A novel meta-heuristic approach for solving engineering optimization problems. Eng Appl Artif Intell 87:103249

[19] Faramarzi A, Heidarinejad M, Mirjalili S, Gandomi AH (2020) Marine predators algorithm: A nature-inspired metaheuristic. Expert Syst Appl 152:113377

[20] Faramarzi A, Heidarinejad M, Stephens B, Mirjalili S (2020) Equilibrium optimizer: A novel optimization algorithm. Knowl Based Syst 191

[21] Faris H, Alzoubi AM, Heidari AA, Aljarah I, Mafarja M, Hassonah MA, Fujita H (2019) An intelligent system for spam detection and identification of the most relevant features based on evolutionary random weight networks. Information Fusion 48:67–83

[22] Faris H, Mafarja M, Heidari AA, Aljarah I, Alzoubi AM, Mirjalili S, Fujita H (2018) An efficient binary salp swarm algorithm with crossover scheme for feature selection problems. Knowl Based Syst 154:43–67

[23] Taradeh M, Mafarja M, Heidari AA, Faris H, Aljarah I, Mirjalili S, Fujita H (2019) An evolutionary gravitational search-based feature selection. Inform Sci 497:219–239

[24] Mirjalili S (2015) Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl Based Syst 89:228–249

[25] Yıldız BS, Yıldız AR (2017) Moth-flame optimization algorithm to determine optimal machining parameters in manufacturing processes. Materials Testing 59(5):425–429

[26] Allam D, Yousri D, Eteiba M (2016) Parameters extraction of the three diode model for the multi-crystalline solar cell/module using moth-flame optimization algorithm. Energy Convers Manag 123:535–548

[27] Lei X, Fang M, Fujita H (2019) Moth-flame optimization-based algorithm with synthetic dynamic PPI networks for discovering protein complexes. Knowl Based Syst 172:76–85

[28] Xu Y, Chen H, Luo J, Zhang Q, Jiao S, Zhang X (2019) Enhanced moth-flame optimizer with mutation strategy for global optimization. Inform Sci 492:181–203

[29] Chen H, Fu H, Cao Q, Han L, Yan L (2019) Feature selection of parallel binary moth-flame optimization algorithm based on Spark. In: 2019 IEEE 3rd Information Technology, Networking, Electronic and Automation Control Conference (ITNEC). IEEE, pp 408–412

[30] Wang P, Zhou Y, Luo Q, Fan C, Xiang Z (2019) A complex-valued encoding moth-flame optimization algorithm for global optimization. In: International Conference on Intelligent Computing. Springer, pp 719–728

[31] Emary E, Zawbaa HM (2016) Impact of chaos functions on modern swarm optimizers. PLoS One 11(7):e0158738

[32] Xu L, Li Y, Li K, Beng GH, Jiang Z, Wang C, Liu N (2018) Enhanced moth-flame optimization based on cultural learning and Gaussian mutation. J Bionic Eng 15(4):751–763

[33] Huang L, Yang B, Zhang X, Yin L, Yu T, Fang Z (2019) Optimal power tracking of doubly fed induction generator-based wind turbine using swarm moth–flame optimizer. Trans Inst Meas Control 41(6):1491–1503

[34] Sapre S, Mini S (2019) Opposition-based moth flame optimization with Cauchy mutation and evolutionary boundary constraint handling for global optimization. Soft Comput 23(15):6023–6041

[35] Li H, Liu J, Chen L, Bai J, Sun Y, Lu K (2019) Chaos-enhanced moth-flame optimization algorithm for global optimization. J Syst Eng Electron 30(6):1144–1159

[36] Zhang H, Li R, Cai ZN, Gu Z, Chen M (2020) Advanced orthogonal moth flame optimization with Broyden-Fletcher-Goldfarb-Shanno algorithm: Framework and real-world problems. Expert Syst Appl 159:113617

[37] Li C, Niu Z, Song Z, Li B, Fan J, Liu PX (2018) A double evolutionary learning moth-flame optimization for real-parameter global optimization problems. IEEE Access 6:76700–76727

[38] Khalilpourazari S, Khalilpourazary S (2019) An efficient hybrid algorithm based on water cycle and moth-flame optimization algorithms for solving numerical and constrained engineering optimization problems. Soft Comput 23(5):1699–1722

[39] Riget J, Vesterstrøm JS (2002) A diversity-guided particle swarm optimizer - the ARPSO. Dept. of Computer Science, University of Aarhus, Denmark 2 (2002)

[40] Wang Y (2013) An improved ant colony algorithm based on the search for diversity. Computer Digital Engineering 41(6):896–898

[41] Yang M, Li C, Cai Z, Guan J (2014) Differential evolution with auto-enhanced population diversity. IEEE Trans Cybern 45(2):302–315

[42] Fang W, Sun J, Xu W (2008) Diversity-controlled particle swarm optimization algorithm. Control and Decision 23(8):863–868

[43] Liang J, Qu B, Suganthan P Problem definitions and evaluation criteria for the CEC 2014 special session and competition on single objective real-parameter numerical optimization. Computational Intelligence Laboratory

[44] Mirjalili S (2016) SCA: A sine cosine algorithm for solving optimization problems. Knowl Based Syst 96:120–133

[45] Belegundu AD, Arora JS (1985) A study of mathematical programming methods for structural optimization. Part I: Theory. Int J Numer Methods Eng 21(9):1583–1599

[46] He Q, Wang L (2007) An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng Appl Artif Intell 20(1):89–99

[47] Mezura-Montes E, Coello CAC (2008) An empirical study about the usefulness of evolution strategies to solve constrained optimization problems. Int J Gen Syst 37(4):443–473

[48] Coello CAC (2000) Use of a self-adaptive penalty approach for engineering optimization problems. Comput Ind 41(2):113–127

[49] Li L, Huang Z, Liu F, Wu Q (2007) A heuristic particle swarm optimizer for optimization of pin connected structures. Comput Struct 85(7-8):340–349

[50] Kannan B, Kramer SN (1994) An augmented Lagrange multiplier based method for mixed integer discrete continuous optimization and its applications to mechanical design. J Mech Des 116(2):405–411

[51] Deb K (1997) GeneAS: A robust optimal design technique for mechanical component design. In: Evolutionary algorithms in engineering applications. Springer, pp 497–514

[52] Kaveh A, Talatahari S (2010) An improved ant colony optimization for constrained engineering design problems. Eng Comput 27(1):155–182

[53] Gandomi AH, Yang X-S, Alavi AH (2013) Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems. Eng Comput 29(1):17–35

[54] Saremi S, Mirjalili S, Lewis A (2017) Grasshopper optimisation algorithm: theory and application. Adv Eng Softw 105:30–47

[55] Kaveh A, Mahdavi VR (2014) Colliding bodies optimization: A novel meta-heuristic method. Comput Struct 139:18–27

[56] Chickermane H, Gea H (1996) Structural optimization using a new local approximation method. Int J Numer Methods Eng 39(5):829–846

[57] Cheng M-Y, Prayogo D (2014) Symbiotic organisms search: A new metaheuristic optimization algorithm. Comput Struct 139:98–112

[58] Sadollah A, Bahreininejad A, Eskandar H, Hamdi M (2013) Mine blast algorithm: A new population based algorithm for solving constrained engineering optimization problems. Appl Soft Comput 13(5):2592–2612

[59] Ray T, Saini P (2001) Engineering design optimization using a swarm with an intelligent information sharing among individuals. Eng Optim 33(6):735–748

Acknowledgments

This work was supported by the National Natural Science Foundation of China (Grant No. 11705002), the Scientific Research Foundation of Education Department of Anhui Province, China (Grant No. KJ2019A0091, Grant No. KJ2019ZD09), and the Humanities and Social Science Fund of Ministry of Education of China (Grant No. 19YJAZH098). The authors would like to thank all the anonymous referees for their valuable comments and suggestions to further improve the quality of this work.

Ethics declarations

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Cite this article

Ma, L., Wang, C., Xie, Ng. et al. Moth-flame optimization algorithm based on diversity and mutation strategy. Appl Intell 51, 5836–5872 (2021). https://doi.org/10.1007/s10489-020-02081-9
