Abstract

Constructing effective classifiers from imbalanced datasets has emerged as one of the main challenges in the data mining community, owing to the prevalence of such datasets in real-world domains. Ensemble solutions are often applied in this field because they can provide better classification ability than a single classifier. However, most existing methods adopt data sampling only to train the base classifiers on balanced datasets, not to directly enhance diversity, which can limit the performance of the final classifier. This paper proposes a new ensemble learning method that addresses the class imbalance problem and promotes diversity simultaneously. Inspired by the localized generalization error model, the method generates synthetic samples located within a local area of the training samples, and trains the base classifiers on the union of the original training samples and these synthetic neighborhoods. By controlling the number of generated samples, the base classifiers can be trained on balanced datasets. Meanwhile, because the generated samples extend different parts of the original input space and can differ considerably from the original training samples, the resulting base classifiers are expected to be both accurate and diverse. A thorough experimental study on 36 benchmark datasets shows that the proposed method delivers significantly better performance than state-of-the-art ensemble solutions for imbalanced problems.

1 Introduction

The ultimate goal of constructing a classifier is to learn a set of essential classification rules that can provide accurate classifications on previously unseen data. However, when the class imbalance problem occurs (i.e., the instances of one class significantly outnumber the others), learning essential rules can be challenging. Most standard classification algorithms are designed to work on balanced datasets, and they tend to generate classification models that maximize the overall accuracy. Consequently, the resulting classifier will strongly favor the instances of the majority class, because the rules that correctly predict those instances are positively weighted in favor of the overall accuracy, whereas the rules that predict instances of the minority class are fewer and weaker, because the minority class is both outnumbered and underrepresented [1,2,3]. This is problematic, because the instances of the minority class usually represent the concept of greater interest, and biased classification of these instances can make the classifier useless in practice.

Learning from imbalanced datasets has become a critical area in the machine learning community [4] due to its increased prevalence in various real-world domains. Many approaches have been suggested for tackling the class imbalance problem. Data sampling is one of the most commonly used techniques in this field. These methods attempt to artificially rebalance the data distribution by increasing the number of minority instances (oversampling) [5,6,7,8], decreasing the number of majority instances (undersampling) [9,10,11], or combining both [12, 13]. Data sampling techniques are classifier-independent solutions to the class imbalance problem, and their general effectiveness has been demonstrated [2, 3]. However, undersampling methods might remove useful information that could be very important for classifier training, whereas oversampling methods may increase the possibility of overfitting during classifier training [2]. Cost-sensitive learning (CSL) has also been used to address the class imbalance problem. This method assigns different misclassification costs to different classes, generally a higher cost for the minority class and a lower cost for the majority class, and then attempts to minimize the overall misclassification cost. Although the idea of CSL is intuitive, this method is much less popular than data sampling methods. It is difficult to determine a suitable misclassification cost for a given task, and different misclassification costs result in classifiers with different generalization ability, so the classification results are not stable [14]. Besides, modifying a learning algorithm to incorporate the idea of CSL presents a significant technical hurdle for researchers who are not experts in machine learning.

Compared with individual classifiers, ensemble solutions have gained popularity in this domain because of their better performance [2]. However, to address the class imbalance problem, ensemble methods need to be combined with techniques such as oversampling and undersampling [2, 3]. The primary motivation for adopting these techniques is to train the base classifiers on a balanced dataset, not to directly promote the diversity within an ensemble. Thereby, the performance of the final ensemble can be limited. Increasing diversity and decreasing the individual error are two crucial factors in the construction of an optimal ensemble. The general expectation for an ensemble is that the base classifiers are accurate and mutually complementary, so that together they perform like a single unified classifier. Diversity allows different classifiers to offer complementary information for the classification, which in turn can result in better classification ability [15]. Although a previous study [16] has revealed that diversity-increasing techniques can significantly improve the performance of ensemble methods on imbalanced problems, only a limited number of studies [16, 17] have addressed the class imbalance problem and promoted diversity simultaneously. This study proposes a new ensemble solution that brings these two issues into one unified framework. To do so, a special type of samples, called the neighborhoods of the training samples, is synthesized and imported into the ensemble learning. The generation of these samples is inspired by the localized generalization error model (L-GEM) [18].

L-GEM evaluates the generalization ability of a classifier in a restricted input space. In the view of its authors, commonly used learning algorithms, such as SVMs and neural networks, are local learning machines. The classification boundary of such a classifier is shaped by the patterns hidden in the training samples, and samples far away from the training samples will not affect the construction of the classification boundary. So, instead of evaluating a classifier’s classification ability in the entire input space, Yeung et al. [18] proposed the L-GEM to assess the classification ability of radial basis function neural networks (RBFNNs) in limited neighborhoods of the training samples. The idea has proved to be effective and efficient in many applications, such as model selection [19] and feature selection [20] for RBFNNs.

Our previous study [21] incorporated synthetic neighborhoods into an ensemble learning method to maximize the overall accuracy and achieved a significant improvement in generalization ability on balanced datasets. In this paper, we propose a sample-generation-based ensemble learning method for the class imbalance problem. When training a base classifier, the proposed method randomly selects a subset of training samples from the original training set and replaces them with their synthetic neighborhoods. The synthetic neighborhoods are beneficial extensions to the input space of the original training samples, so the base classifiers can improve their classification ability in different parts of the input space by learning these synthetic samples. Moreover, because the synthetic samples are generated randomly and can differ from the original training samples, the diversity within the final ensemble can be promoted. In addition, the synthetic samples are different from, but do not conflict with, the original training samples, so we can rebalance the class distribution by generating a certain number of minority samples without worrying about decreasing the base classifiers’ generalization ability.

Although L-GEM has highlighted the importance of the neighborhoods of the training samples, it does not generate any actual samples. It is therefore essential to generate usable synthetic neighborhoods that can be used to solve the class imbalance problem and promote diversity simultaneously. We provide our solution for synthetic neighborhood generation and assess its effectiveness on 36 benchmark datasets. The experimental results demonstrate that our proposed method significantly outperforms state-of-the-art ensemble learning methods in terms of the area under the receiver operating characteristic curve (AUC) and G-mean.

2 Related work

This paper focuses on binary-class imbalanced datasets, in which there is a positive (minority) class, with the lowest number of instances, and a negative (majority) class, with a significantly higher number of instances. Of the two classes, the minority class is usually the class of interest, but it is difficult to identify because it might be associated with exceptional and significant cases, or because the process of acquiring these examples is costly [22]. Over the years, a significant amount of work has been done to address the class imbalance problem. These methods can be broadly divided into four categories: (1) algorithm-level approaches, (2) data-level approaches, (3) cost-sensitive learning, and (4) ensemble solutions. In this section, we focus only on the ensemble solutions that have been proposed to address this issue. A general introduction and additional details about the other techniques can be found in [1,2,3].

Specifically, the best ensemble solutions to the class imbalance problem are reviewed first. Then the commonly used assessment metrics for imbalanced learning are given.

2.1 Ensemble solutions for the imbalanced learning

Ensembles of classifiers have been widely adopted, and their robustness in handling imbalanced datasets has been demonstrated [10, 16]. Ensemble techniques by themselves do not ameliorate the class imbalance problem, because they are designed to achieve maximal accuracy on the training set. However, their combination with the techniques mentioned above leads to promising results. Ensemble techniques in this field can be classified into two types: cost-sensitive boosting approaches [23] and ensemble learning algorithms with embedded data preprocessing techniques [9, 24,25,26]. The cost-sensitive boosting approaches share a similar spirit with non-ensemble cost-sensitive approaches, which assign different misclassification costs to different classes. The main difference is that the cost minimization is guided by the boosting algorithm. They therefore also share the shortcoming of the non-ensemble cost-sensitive methods: assigning appropriate costs to the different classes is not an intuitive task. On the other hand, ensemble methods combined with data sampling are more popular. According to Galar et al. [2], these methods can be further classified into three subclasses: (1) bagging-, (2) boosting-, and (3) hybrid-based approaches, depending on the ensemble learning algorithm that they use. These approaches do not change the original process of ensemble learning; they just adopt data-level approaches, such as oversampling and undersampling, to rebalance the data distribution and train the base classifiers on balanced datasets. As most related works [2, 16, 17] indicate good performance of Bagging [27] and Boosting [28] in combination with data-level approaches, some of these ensemble algorithms are recalled below.

- SMOTEBagging [24] has an operating procedure that is similar to that of Bagging, except that each base classifier is trained on a balanced dataset. At each iteration, the method generates a dataset that has two times the number of majority samples. Half of the instances are randomly sampled from the majority class with replacement whereas the second half is generated through a combination of SMOTE [5] and random oversampling on the minority class. The oversampling percentage varies from 10% in the first iteration to 100% in the last, always being a multiple of ten. The remaining minority instances are generated by SMOTE.

- UnderBagging [26]. In each iteration of UnderBagging, the number of majority class instances is randomly reduced to the number of the minority class to allow the base classifier to be trained with a balanced dataset.

- SMOTEBoost [29] is a modification of AdaBoost.M2 [30]. After each iteration, this approach uses SMOTE to balance the dataset. Each newly generated instance is assigned a weight, which is the proportion of generated instances to the overall number of instances, and the weights of the original instances are normalized accordingly. During the whole procedure, the instances’ weights are updated according to the AdaBoost.M2 algorithm.

- RUSBoost [25] has a similar procedure to SMOTEBoost. However, it adopts a random undersampling method to balance the dataset. Thus, no instances are generated, and the weights of the instances are updated according to the AdaBoost.M2 algorithm.

- EUSBoost [10] is a modification of RUSBoost. This approach aims to improve the original method by using an evolutionary undersampling approach. To be specific, it attempts to promote diversity by selecting different subsets of the majority instances for different base classifiers with an evolutionary undersampling approach.

- EasyEnsemble [9] has a similar procedure to UnderBagging. However, instead of training a single base classifier for each new bag, it adopts AdaBoost as the base classifier. EasyEnsemble samples several subsets from the majority class and trains an AdaBoost classifier on each of these subsets, so it works like an ensemble of ensembles.
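As a rough illustration of the undersampling idea shared by UnderBagging and its relatives, the following minimal sketch builds balanced bags from an imbalanced label vector. The function name `balanced_undersampled_bag` and the choice to keep every minority instance in each bag are assumptions made for this example, not details taken from [26].

```python
import random

def balanced_undersampled_bag(labels, minority_label, rng):
    """Return indices of one balanced bag: all minority indices plus an
    equally sized random draw (without replacement) from the majority.
    Keeping every minority instance is an assumption of this sketch."""
    minority = [i for i, y in enumerate(labels) if y == minority_label]
    majority = [i for i, y in enumerate(labels) if y != minority_label]
    sampled_majority = rng.sample(majority, len(minority))
    return minority + sampled_majority

# Hypothetical dataset: 90 majority (0) and 10 minority (1) instances.
labels = [0] * 90 + [1] * 10
rng = random.Random(0)
bags = [balanced_undersampled_bag(labels, 1, rng) for _ in range(5)]
```

Each bag would then be used to train one base classifier; the bags differ only in which majority instances they contain.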

Besides combining ensembles with data-level approaches, researchers in this field have been constantly seeking new frameworks for ensemble learning. For example, the study in [16] demonstrated that diversity-increasing techniques are effective for improving the performance on imbalanced problems. In addition, the study also suggested that diversity-enhancing techniques should be adopted when the overlap of the per-class bounding boxes is high. Bhowan et al. [31] adopted a genetic algorithm to evolve ensembles. In their approach, the construction of an ensemble was transformed into a multi-objective optimization problem, in which a set of accurate and diverse base classifiers was built by trading off the minority and majority classes during the learning of each base classifier. Díez-Pastor et al. [32] proposed a data-level approach that can be used to build ensembles for the class imbalance problem. In their approach, the data for training a base classifier is sampled from the training data using random class proportions, and diversity is promoted by randomly undersampling or oversampling the original training data.

3 Proposed method

3.1 Motivation

We propose a new sample-generation-based ensemble learning method for the class imbalance problem, which brings the issues of addressing the imbalance problem and promoting diversity into one unified framework. Sample generation is not a new idea in ensemble learning. Importing properly synthesized samples into ensemble learning has proven to be an effective way of improving a classifier’s generalization ability [33,34,35,36]. In previous studies, synthetic samples are generated mainly for two purposes: (1) addressing the class imbalance problem by generating new synthetic minority samples (oversampling); (2) creating diversity in the ensembles. When oversampling techniques are applied to ensemble learning, they are used to address the problem of class imbalance, not to directly enhance the diversity within an ensemble. Thereby, the diversity and generalization ability of the final classifier could be limited.

Sample generation methods [33,34,35,36] have frequently been used to increase diversity within an ensemble. These methods generate synthetic samples, and each base classifier is trained on the synthetic samples along with the original training samples. Theoretical and empirical studies [37, 38] reveal that one may obtain very promising diversity by generating very different samples with any of the existing sample generation methods. However, when addressing the imbalance problem, these methods are limited, because: (1) the diversity of the final ensemble is promoted at the cost of the base classifiers’ classification ability; (2) existing sample-generation-based ensembles are accuracy-oriented, that is, they are designed to maximize the overall accuracy. Although the generated samples can in theory be used to rebalance the class distribution, importing too many of them could be problematic, because the samples generated by any of the existing methods can be quite different from the original training samples [38]. Learning too many of these synthetic samples can misguide the training of a classifier.

The work of Yeung et al. [18] highlights the importance of unseen samples located within some neighborhood of the training samples. The effectiveness of L-GEM in various applications [19, 20, 39] also indicates that synthetic samples located within this area can be beneficial extensions to the original input space. It would therefore be desirable to design an ensemble learning method that incorporates these synthetic neighborhoods for class imbalance problems, because doing so brings the issues of increasing diversity, decreasing individual error, and addressing the class imbalance problem into one unified framework.

3.2 Synthetic neighborhoods generation

It is essential to generate usable synthetic neighborhoods that are properly located within a neighboring area of the training samples. The generated samples must lie at an appropriate distance from the training samples, so that a balance between increasing diversity and decreasing individual error can be achieved.

Central Limit Theorem

Let \(X_{i}\) (i = 1,2,…,n) be a random sample from the same underlying distribution with mean μ and variance \(\sigma ^{2}\), and let \(\overline {X} \) be the average of the \(X_{i}\). If n is “sufficiently large”, then \(\overline {X} \) approximately obeys a normal distribution with mean μ and variance \(\sigma ^{2}/n\):

\[\frac{\overline {X} -\mu }{\sigma /\sqrt{n} }\to N(0,1) \qquad (1)\]

The central limit theorem shown in (1) can be applied to any distribution with finite variance. This permits us to generate synthetic neighborhoods for any unknown data distribution.
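The scaling of the variance of the sample mean can be checked numerically. The sketch below is only an illustration under assumed settings (Uniform(0, 1) data, n = 30, 2000 repetitions, and the helper name `sample_mean_variance` are all choices made for this example): the empirical variance of the sample mean should be close to \(\sigma ^{2}/n\) rather than \(\sigma ^{2}\).

```python
import random
import statistics

def sample_mean_variance(n, trials, rng):
    """Empirical variance of the mean of n Uniform(0, 1) draws,
    estimated from `trials` independent repetitions."""
    means = [sum(rng.random() for _ in range(n)) / n for _ in range(trials)]
    return statistics.pvariance(means)

rng = random.Random(0)
observed = sample_mean_variance(n=30, trials=2000, rng=rng)
expected = (1.0 / 12.0) / 30   # sigma^2 / n, since Uniform(0, 1) has variance 1/12
print(observed, expected)
```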

The symbols that will be used in this paper are described as follows. \(D=\{(x_{i} ,y_{i} )\vert x_{i} \in \mathbb {R}^{p},y_{i} \in \mathcal {Y}\}_{i = 1}^{N} \) is the training set, where p is the number of input features, N denotes the number of training samples, and \(\mathcal {Y}=\{label_{1} ,label_{2} \}\) indicates the class labels in a binary classification problem.

In a dataset D with multiple features, each training sample is composed of several features, and each feature can be treated as a random variable that obeys an unknown distribution. When generating a synthetic neighborhood for a specific training sample, a new dataset \(D_{j} =\{(x_{i} ,y_{i} )\vert (x_{i} ,y_{i} )\in D,y_{i} =label_{j} \}\) is gathered by selecting all the samples labeled with \(label_{j}\) in D. After that, the mean value \(\mu _{i}\) and standard deviation \(\sigma _{i}\) of the i-th attribute \(a_{i}\) in \(D_{j}\) are calculated, and we use \(\mu _{i}^{\prime } \) and \(\sigma _{i}^{\prime } \) to represent the real mean and standard deviation of attribute \(a_{i}\). Let n be the number of samples in \(D_{j}\). If n is large enough, then according to the Central Limit Theorem, the following can be obtained:

\[\frac{\mu _{i} -\mu _{i}^{\prime } }{\sigma _{i}^{\prime } /\sqrt{n} }\to N(0,1) \qquad (2)\]

Since (2) holds when n is “sufficiently large”, it can be used to approximate the real mean value \(\mu _{i}^{\prime } \):

\[\mu _{i}^{\prime } =\mu _{i} -r\times \frac{\sigma _{i}^{\prime } }{\sqrt{n} } \qquad (3)\]

Here, r is a value sampled from the standard normal distribution N(0,1). By substituting a feature value for the mean value \(\mu _{i}\), for each instance j in \(D_{j}\) with i-th attribute value \(a_{i}(j)\), a synthetic value \(a_{i}^{\prime } (j)\) can be generated as follows:

\[a_{i}^{\prime } (j)=a_{i} (j)-r\times \frac{\sigma _{i}^{\prime } }{\sqrt{n} } \qquad (4)\]

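Here, \(\sigma _{i}^{\prime } \) in (4) represents the true standard deviation of the attribute \(a_{i}\) in \(D_{j}\), and it is unknown. We can approximate it with \(\sigma _{i}\), and the following can be obtained:

\[a_{i}^{\prime } (j)=a_{i} (j)-r\times \frac{\sigma _{i} }{\sqrt{n} } \qquad (5)\]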

Zhang and Li [40] use equation (5) as an oversampling technique. The samples generated by oversampling techniques are safely located within the area that surrounds all the training samples, so a method combining ensemble learning with synthetic samples generated by (5) would not differ in any essential way from current ensemble solutions for imbalanced datasets. To enhance the diversity within the final ensemble, the magnitude of the synthetic perturbation is therefore enlarged, which yields (6).

Equation (6) is the one used to generate the synthetic neighborhoods, where the parameter λ controls the trade-off between increasing diversity and decreasing individual error. A larger λ will result in a set of synthetic neighborhoods that are very different from the corresponding training samples, and a smaller λ has the opposite effect. It is also worth mentioning that equation (6) takes some global distribution information into account by including the standard deviation \(\sigma _{i}\). As a result, the proposed method can work reasonably well under some fixed settings of the parameter λ.

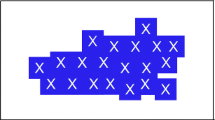

For each sample in \(D_{j} =\{(x_{i} ,y_{i} )\vert (x_{i} ,y_{i} )\in D,y_{i} =label_{j} \}\), we use (6) to generate one synthetic feature value for each of its attributes, and a feature vector is constructed by gathering all the corresponding synthetic values. \(label_{j}\) is assigned to the feature vector as its class label, and the vector is then taken as a synthetic neighborhood of the training sample. To illustrate the proposed method, we run our sample generation method on a simulated dataset. Figure 1 shows the results. Figure 1a presents the simulated dataset, where the black circles denote the majority class instances and the red plus symbols denote the minority class instances. The blue hollow squares in Fig. 1b and c denote the synthetic minority instances generated by (6) under different settings of the parameter λ.
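The per-attribute generation step can be sketched as follows. Because equation (6) is not reproduced in this text, the perturbation used here, \(a_{i}(j) - r\lambda \sigma _{i}\) with r drawn from N(0,1), is an assumed form chosen only because it grows with λ as described above; it should not be read as the paper's exact formula. The helper names and the use of the population standard deviation are likewise assumptions of this example.

```python
import math
import random

def column_std(rows):
    """Population standard deviation of each attribute (column)."""
    n = len(rows)
    p = len(rows[0])
    means = [sum(row[i] for row in rows) / n for i in range(p)]
    return [math.sqrt(sum((row[i] - means[i]) ** 2 for row in rows) / n)
            for i in range(p)]

def synthetic_neighbor(sample, stds, lam, rng):
    """One synthetic neighborhood: each attribute is shifted by
    r * lam * sigma_i with r ~ N(0, 1). ASSUMED form, not the paper's Eq. (6)."""
    return [a - rng.gauss(0.0, 1.0) * lam * s for a, s in zip(sample, stds)]

# Hypothetical minority-class samples D_j with two attributes each.
minority = [[1.0, 10.0], [1.2, 11.0], [0.8, 9.5], [1.1, 10.5]]
stds = column_std(minority)
rng = random.Random(42)
neighbors = [synthetic_neighbor(x, stds, lam=0.5, rng=rng) for x in minority]
```

Each synthetic vector inherits the class label of the sample it was generated from. Under this assumed form, setting `lam` to 0 returns the original sample unchanged, and larger values spread the neighborhoods further from it.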

3.3 Synthetic neighborhood generation based ensemble learning for imbalanced problem (SNGEIP)

We formally propose our ensemble solution for imbalanced problem (SNGEIP) in Fig. 2. The basic aim of the sample generation is to create different training sets for different base classifiers so that a promising diversity can be achieved. At the same time, the class distribution can be rebalanced by controlling the number of generated samples.

Specifically, let \(D=\{(x_{i} ,y_{i} )\}_{i = 1}^{n} \) be a training set, where n is the number of samples in D, and let IR denote the imbalance ratio of D. The proposed SNGEIP method first makes a copy of the original training set and randomly selects some training samples, which are then replaced by their synthetic neighborhoods. If a majority sample is chosen, it is replaced by one synthetic neighborhood generated by (6). If a minority sample is chosen, it is replaced by m synthetic neighborhoods. Because each selected minority sample yields more synthetic samples than a selected majority sample, the class distribution can be rebalanced. m can be calculated as follows:

Here, IR is the imbalance ratio of D, and RR is the replacement ratio, a parameter that determines the proportion of training samples to be replaced. RR can be set to any value in the range [0,1]. If we set RR = 0, no synthetic sample is generated and we obtain a set of identical base classifiers. If we set RR = 1, every training sample is replaced by its synthetic neighborhoods and the diversity within the final ensemble can be promoted. The choices of RR and λ have a significant influence on the generalization ability of the final ensemble. In this paper, the default settings for these two parameters are RR = 0.368 and λ = 0.5. We give the reason for this default setting in Section 4.6, where we discuss the influence of these two parameters.
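The construction of one base classifier's training set might be sketched as below. The formula for m is not reproduced in this text, so the sketch substitutes an assumed one: if a fraction RR of each class is replaced, the minority class grows to \(n_{min}(1-RR) + n_{min}\cdot RR\cdot m\) while the majority size is unchanged, and equating the two class sizes gives \(m = (IR - 1 + RR)/RR\). Selecting exactly round(RR·n) samples uniformly at random, and the names `neighbors_per_minority`, `build_training_set`, and `make_neighbor`, are also assumptions made for illustration.

```python
import random

def neighbors_per_minority(ir, rr):
    """ASSUMED stand-in for the paper's formula for m (not reproduced here):
    the m that makes both classes equally large when a fraction rr of each
    class is replaced. Requires rr > 0."""
    return max(1, round((ir - 1.0 + rr) / rr))

def build_training_set(data, minority_label, rr, make_neighbor, rng):
    """data is a list of (features, label) pairs; make_neighbor(features)
    is a hypothetical callable returning one synthetic neighborhood."""
    if rr <= 0:
        return list(data)                      # nothing is replaced
    n_min = sum(1 for _, y in data if y == minority_label)
    ir = (len(data) - n_min) / n_min           # imbalance ratio of D
    m = neighbors_per_minority(ir, rr)
    chosen = set(rng.sample(range(len(data)), round(rr * len(data))))
    result = []
    for idx, (x, y) in enumerate(data):
        if idx not in chosen:
            result.append((x, y))              # kept unchanged
        elif y == minority_label:              # selected minority: m neighbors
            result.extend((make_neighbor(x), y) for _ in range(m))
        else:                                  # selected majority: one neighbor
            result.append((make_neighbor(x), y))
    return result

# Hypothetical data: 90 majority (0) and 10 minority (1) one-feature samples.
rng = random.Random(1)
data = [([float(i)], 0) for i in range(90)] + [([float(i)], 1) for i in range(10)]
jitter = lambda x: [v + rng.gauss(0.0, 0.1) for v in x]   # placeholder generator
train = build_training_set(data, 1, 0.368, jitter, rng)
```

Repeating this construction with a different random state for each base classifier gives every classifier its own training set, which is where the diversity of the ensemble comes from.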

4 Experiments

4.1 Benchmark datasets

A series of experiments was conducted to test the performance of our method, the synthetic neighborhood generation based ensemble learning for imbalanced problem (SNGEIP). We selected 36 imbalanced binary datasets from the KEEL repository [41]; detailed information about these datasets is given in Table 1. The datasets are ordered according to their IRs. Before testing the performance of our method, all the datasets were pre-processed by transforming them into Libsvm format [42], a data format that contains only numeric attributes. By doing this, we can automatically transform all the non-numeric attributes into numeric ones and calculate the standard deviation of each attribute.

4.2 Benchmark algorithms and parameter settings

We compare our proposed method against seven other methods (described in Section 2). To be specific, we only compare our proposed method with Bagging and Boosting in combination with data-level approaches. After comparing 20 different ensembles, ranging from simple modifications of Bagging or Boosting to complex cost-sensitive or hybrid approaches, Galar et al. [2] found that methods combining Bagging or Boosting with simple versions of undersampling or SMOTE work better than more complex ensemble solutions. Besides the Bagging and Boosting methods, we combined the ESNG method [21] with SMOTE. ESNG [21] incorporated synthetic neighborhoods into an ensemble learning method to maximize the overall accuracy and achieved a significant improvement in generalization ability on balanced datasets. We combined it with SMOTE and tested its performance on imbalanced datasets, because comparing our method with ESNG better reveals its advantages and drawbacks. Table 2 shows the operating procedures and the abbreviations that will be used throughout the experiments. The C4.5 decision tree was chosen as the base classifier for all the ensemble learning methods.

Forty decision trees were trained for each of the ensemble methods. It is worth mentioning that the choices of the parameters RR and λ have a significant influence on the classification ability of our proposed method. In all the experiments, we set RR = 0.368 and λ = 0.5. All experiments were conducted using the KEEL software [41] and Weka [43]. The ESNG combined with SMOTE method was implemented in Weka, whereas the other six learning algorithms in Table 2 are publicly available in KEEL.

4.3 Assessment metrics used in the experiments

The way a classifier is assessed is very important for properly evaluating its classification performance and guiding its modeling. In order to take the class distribution into account, we must use specific metrics to evaluate the classifier’s performance. For binary-class problems, the confusion matrix (shown in Table 3) records the numbers of correctly and incorrectly recognized examples of each class. Specifically, TP is the number of positive examples that have been correctly predicted as positive, FN is the number of positive examples that have been falsely predicted as negative, FP is the number of negative examples that have been falsely predicted as positive, and TN is the number of negative examples that have been correctly predicted as negative. From Table 3, several metrics can be calculated and used as assessment metrics in the imbalance framework:

Clearly, the first four measures in (8–11) describe the classifier’s performance on the positive class and the negative class separately, but none of them is adequate to describe the overall performance. Both F-measure and G-mean can be used to evaluate a classifier’s performance on class imbalance problems. However, when a classifier assigns all the instances to the negative class (i.e., TP = 0 and FP = 0), the precision becomes 0/0 and the F-measure is undefined, making the experimental results incomparable.
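For concreteness, the sketch below computes per-class rates and G-mean from the four confusion matrix counts. It uses the standard textbook definitions (for example, \(TP_{rate} = TP/(TP+FN)\) and G-mean as the geometric mean of \(TP_{rate}\) and \(TN_{rate}\)), which are assumed here to match the paper's equations since those are not reproduced in this text; the function names are hypothetical.

```python
import math

def rates(tp, fn, fp, tn):
    """Per-class rates from confusion matrix counts (standard definitions)."""
    return {
        "tp_rate": tp / (tp + fn),   # sensitivity / recall on the positive class
        "tn_rate": tn / (tn + fp),   # specificity on the negative class
        "fp_rate": fp / (fp + tn),
        "fn_rate": fn / (fn + tp),
    }

def g_mean(tp, fn, fp, tn):
    """Geometric mean of the true positive rate and the true negative rate."""
    r = rates(tp, fn, fp, tn)
    return math.sqrt(r["tp_rate"] * r["tn_rate"])

# A classifier that finds 8 of 10 positives and 80 of 90 negatives.
print(g_mean(tp=8, fn=2, fp=10, tn=80))
# A classifier that predicts everything as negative: 90% accuracy, G-mean 0.
print(g_mean(tp=0, fn=10, fp=0, tn=90))
```

The second call shows why G-mean is preferred over accuracy here: the all-negative classifier is 90% accurate on this hypothetical data, yet its G-mean is zero because it never recognizes a minority instance.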

Another well-known approach to producing an overall evaluation criterion is the receiver operating characteristic (ROC) curve [44]. The ROC curve is formed by plotting \(TP_{rate}\) against \(FP_{rate}\), and any point in ROC space corresponds to the performance of a single classifier on a given distribution. The ROC curve provides a visual representation of the trade-off between the benefits (\(TP_{rate}\)) and costs (\(FP_{rate}\)) of classification. The area under the ROC curve (AUC) [45] provides a quantitative measure of a classifier’s performance for deciding which model is better. The computation of AUC depends on the classifier’s type. If the classifier is hard-type, that is, it outputs only discrete class labels, the AUC can be computed as:

If the classifier is soft-type which outputs a continuous numeric value to represent the confidence of an example belonging to the predicted class, a threshold can be used to produce a series of points in ROC space. The AUC is computed as the area under these points. Figure 3 shows the idea of ROC and AUC.
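Both ways of computing AUC can be sketched in a few lines. For the hard-type case, the commonly used single-point formula \((1 + TP_{rate} - FP_{rate})/2\) is assumed, since the paper's own equation is not reproduced in this text. For the soft-type case, the sketch sweeps the threshold over the sorted scores and sums trapezoids under the resulting ROC points; the function names are hypothetical.

```python
def auc_hard(tp_rate, fp_rate):
    """Area under the two-segment ROC curve through one (fp_rate, tp_rate) point."""
    return (1.0 + tp_rate - fp_rate) / 2.0

def auc_soft(scores, labels):
    """Trapezoidal AUC. labels use 1 for positive and 0 for negative;
    a higher score means more confidence in the positive class."""
    pos = sum(labels)
    neg = len(labels) - pos
    pairs = sorted(zip(scores, labels), key=lambda t: -t[0])
    tp = fp = 0
    prev_tpr = prev_fpr = 0.0
    area = 0.0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Move every example with this score across the threshold at once,
        # so tied scores produce a single ROC point.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        tpr, fpr = tp / pos, fp / neg
        area += (fpr - prev_fpr) * (tpr + prev_tpr) / 2.0
        prev_tpr, prev_fpr = tpr, fpr
    return area

print(auc_hard(0.8, 0.2))
print(auc_soft([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]))
```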

In this paper, G-mean and AUC are adopted to evaluate the classifier’s performance. Considering the fact that all the classifiers used in our experiment are soft-type classifiers, we used a threshold to produce a series of points in ROC space, and the AUC is computed as the area under these points.

4.4 Experimental procedure and statistical tests

We follow a procedure that is similar to that outlined in [10]. Specifically, an experiment framework using 3 × 5 cross-validation is adopted to compare the performance of different algorithms. For each dataset, 5-fold stratified cross validation is used to divide the dataset into training parts and testing parts. The training parts are used to train the classifiers, while the testing parts are used to calculate the AUC and G-mean metrics. Here, 5-fold cross validation was conducted three times with different random seeds, and the average value of all the obtained AUCs or G-means can be used as a single measure, which provides a reliable estimation on the classifier’s ability of dealing with imbalanced datasets.
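The 3 × 5 protocol can be sketched as follows. This is a minimal stdlib-only illustration, not the KEEL or Weka implementation used in the experiments: `stratified_folds` and `repeated_cv_mean` are hypothetical helpers, and `evaluate` stands for any user-supplied callable that trains on the training indices and returns a score (such as AUC or G-mean) on the test indices.

```python
import random
from collections import defaultdict

def stratified_folds(labels, k, rng):
    """Split indices into k folds with near-equal class proportions."""
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)     # deal each class out round-robin
    return folds

def repeated_cv_mean(labels, evaluate, k=5, repeats=3, seed=0):
    """Average evaluate(train_idx, test_idx) over repeats x k folds."""
    scores = []
    for rep in range(repeats):
        rng = random.Random(seed + rep)    # a different seed per repetition
        for test_idx in stratified_folds(labels, k, rng):
            held_out = set(test_idx)
            train_idx = [i for i in range(len(labels)) if i not in held_out]
            scores.append(evaluate(train_idx, test_idx))
    return sum(scores) / len(scores), len(scores)

# Placeholder evaluation: the minority fraction of each test fold.
labels = [0] * 90 + [1] * 10
minority_fraction = lambda train, test: sum(labels[i] for i in test) / len(test)
mean_score, n_scores = repeated_cv_mean(labels, minority_fraction)
```

Stratification matters for imbalanced data: with only 10 minority instances, an unstratified split could easily leave a test fold with no positive examples, in which case \(TP_{rate}\) would be undefined.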

Statistical analysis needs to be conducted in order to determine whether the classification abilities of different algorithms are significantly different. As suggested by a previous study [46], we consider two different non-parametric statistical tests to perform two kinds of comparisons:

- Multiple comparisons. As suggested by Demšar [46], we first use the Friedman test [47] to detect statistical differences among all the methods. Then, if significant differences exist, we use the Holm post-hoc test [48] to check whether the control method (the one with the lowest average ranking) is significantly better than the others.

- Pairwise comparisons. Pairwise comparison is used as a complementary test here, because the Friedman test occasionally reports a significant difference that the post-hoc test fails to detect. The Wilcoxon paired signed-rank test is conducted to further confirm whether the classification abilities of two methods are significantly different.

Moreover, instead of simply giving an overall summary, we show the p-value associated with each comparison, which indicates the lowest level of significance of a hypothesis that results in a rejection. In such a manner, we can know whether two algorithms are significantly different and how different they are. The average rankings of each method have also been used as a complementary visualization tool. These rankings are obtained from the Friedman aligned-rank test, and a lower ranking indicates better classification ability.
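Two ingredients of this procedure are easy to illustrate: the average rank of each method across datasets, and the Holm step-down adjustment of the post-hoc p-values. The sketch below uses plain (not aligned) Friedman-style ranks and hypothetical scores and p-values; it does not compute the Friedman statistic itself, and the function names are assumptions of this example.

```python
def average_ranks(score_table):
    """score_table[d][m] is the score of method m on dataset d (higher is
    better). Returns each method's mean rank; rank 1 is best and tied
    methods share the average of the positions they occupy."""
    n_methods = len(score_table[0])
    totals = [0.0] * n_methods
    for row in score_table:
        order = sorted(range(n_methods), key=lambda m: -row[m])
        i = 0
        while i < n_methods:
            j = i
            while j + 1 < n_methods and row[order[j + 1]] == row[order[i]]:
                j += 1
            shared = (i + j) / 2.0 + 1.0       # mean of 1-based positions i..j
            for pos in range(i, j + 1):
                totals[order[pos]] += shared
            i = j + 1
    return [t / len(score_table) for t in totals]

def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the input order."""
    k = len(p_values)
    order = sorted(range(k), key=lambda i: p_values[i])
    adjusted = [0.0] * k
    running_max = 0.0
    for step, idx in enumerate(order):
        candidate = min(1.0, (k - step) * p_values[idx])
        running_max = max(running_max, candidate)   # keep adjusted values monotone
        adjusted[idx] = running_max
    return adjusted

# Hypothetical AUCs of three methods on two datasets, and three raw p-values.
print(average_ranks([[0.9, 0.8, 0.7], [0.9, 0.9, 0.5]]))
print(holm_adjust([0.01, 0.04, 0.03]))
```

A comparison is declared significant when its adjusted p-value falls below the chosen significance level, which is why reporting the adjusted values conveys more than a yes/no summary.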

4.5 Performance of SNGEIP and comparison with conventional methods

4.5.1 AUC as a measure

Table 4 shows the average AUC over 3 × 5 cross-validation in the form of ‘average ± standard deviation’. For each dataset, the best AUC among the eight methods is shown in bold-face type. A quick glance at this table shows that the proposed method performs best, because it achieves the highest number of best AUCs (on 20 of the 36 datasets) and the highest average AUC (0.9587) among the eight methods. In order to check whether the higher average AUC indicates genuinely better classification ability, non-parametric statistical tests have been conducted on the obtained AUCs. The average rankings from the Friedman test are shown in Fig. 4.

From Fig. 4, we can observe that our proposed method ranks first, followed by the SMOTE-based methods (SBO, SBAG, and SENSG). The performance of the undersampling-based methods (RUS, UBAG, and EUS) is mediocre, and the worst performer is the hybrid-based method (EASY). The Friedman aligned-rank test yields p = 0.000, which is low enough to reject the hypothesis of equivalence. Therefore, we continue with the Holm post-hoc test and present the adjusted p-values in Table 5.

The proposed SNGEIP method was chosen as the control method, as it achieves the lowest average ranking in the Friedman test. The Holm test brings out the good performance of SNGEIP: it statistically outperforms all the methods except SBAG and SBO. As pointed out in [46], the Friedman test sometimes reports a significant difference that the post-hoc test fails to detect, due to the lower power of the latter. For this reason, we use the Wilcoxon paired signed-rank test to compare SNGEIP with SBAG and SBO. The test results are reported in Table 6. The test rejects the hypothesis of equivalence with p = 0.017 and p = 0.037, and since the average ranking favors SNGEIP, its superiority over SBAG and SBO can be confirmed. Following all the results of the non-parametric statistical tests, we can state that SNGEIP outperforms the previous methods in the framework of imbalanced datasets. In addition to these promising results, it is also worth pointing out that the method proposed in this paper provides significantly better performance than SENSG. Both SNGEIP and SENSG adopt synthetic neighborhoods in their framework, but the synthetic samples in SENSG are composed of two parts: synthetic neighborhoods and synthetic samples generated by SMOTE. The superiority of our method over SENSG confirms that synthetic neighborhoods can bring more benefits than rebalancing the class distribution.
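For readers unfamiliar with the Wilcoxon paired signed-rank test, the following sketch computes its two rank sums for a pair of methods evaluated on the same datasets. It is a simplified illustration: zero differences are dropped (one common convention, which differs from the variant in [46] that splits their ranks evenly), no p-value is computed, and the scores are made-up integers rather than AUCs from Table 4.

```python
def wilcoxon_signed_rank(scores_a, scores_b):
    """Return (W_plus, W_minus): rank sums of positive and negative differences."""
    # Paired differences; zero differences are dropped in this simplified variant.
    diffs = [a - b for a, b in zip(scores_a, scores_b) if a != b]
    ordered = sorted(diffs, key=abs)
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        # Find the run of tied absolute differences and give each the average rank.
        while j + 1 < len(ordered) and abs(ordered[j + 1]) == abs(ordered[i]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    w_plus = sum(r for d, r in zip(ordered, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(ordered, ranks) if d < 0)
    return w_plus, w_minus


# Hypothetical per-dataset scores of two methods (integers keep ties exact).
method_a = [5, 7, 3, 9, 6]
method_b = [4, 9, 3, 5, 7]
w_plus, w_minus = wilcoxon_signed_rank(method_a, method_b)  # (5.5, 4.5)
```

The smaller of the two rank sums is the test statistic; it is compared against a critical value (or a normal approximation for larger numbers of datasets) to obtain p-values such as those in Table 6.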

Finally, to visually show the advantage of SNGEIP with respect to the others, a scatter plot is provided in Fig. 5, where each point compares SNGEIP with one of the other algorithms on a dataset. The x-coordinate of a point represents the AUC obtained by SNGEIP, whereas the y-coordinate represents the AUC obtained by the other method. Therefore, points that appear below the y = x line correspond to the datasets where SNGEIP outperforms the other methods. From Fig. 5, we can observe that most of the points lie under the y = x line. In addition, we find that when SNGEIP performs better, it usually provides much better AUCs than the benchmark algorithms (the corresponding points lie farther from the y = x line). In contrast, when it performs worse, its loss is small.

4.5.2 G-mean as a measure

Table 7 reports the average G-means obtained from the cross-validation. The results are presented in the form of ‘average ± standard deviation’, and bold-face indicates the best G-mean on each dataset. The results in Table 7 also confirm the superiority of our proposed method. Among the eight ensemble solutions for the class imbalance problem, our method achieves the highest number of best G-means (on 17 of the 36 datasets) and the highest average G-mean (0.9010). We conducted the Friedman aligned-rank test on the obtained G-means and present the average rankings in Fig. 6. From Fig. 6, we can see that the average rankings computed from the G-means differ from the ones computed from the AUCs, but our proposed method still achieves the lowest average ranking. The Friedman test yields p = 0.000, which rejects the hypothesis of equivalence. As a significant difference exists, we continue with the Holm post-hoc test (Table 8), again with our method as the control method. Table 8 shows the average rankings of the algorithms and the adjusted p-values computed from the Holm test. As we can see, SNGEIP statistically outperforms all the other methods.
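As a reminder of what the G-mean rewards, the sketch below computes it for a binary problem using the standard definition, the geometric mean of the true positive rate and the true negative rate. The function name and the toy labels are assumptions for illustration; the example shows that a classifier ignoring the minority class gets a G-mean of zero even though its accuracy is high.

```python
import math


def g_mean(y_true, y_pred, positive=1):
    """Geometric mean of sensitivity (TPR) and specificity (TNR)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return math.sqrt(sensitivity * specificity)


# Toy example (hypothetical): 2 minority (1) and 8 majority (0) samples.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
always_majority = [0] * 10                      # 80% accuracy, but G-mean 0
balanced_guess = [1, 0, 0, 0, 0, 0, 0, 0, 1, 1]  # TPR 0.5, TNR 0.75

score_majority = g_mean(y_true, always_majority)
score_balanced = g_mean(y_true, balanced_guess)  # sqrt(0.375), roughly 0.612
```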

4.5.3 Efficiency

The average runtimes of all the ensemble solutions, except the SENSG method, on all the considered datasets are presented in Table 9. We do not report the efficiency of SENSG because it was tested on Weka, a platform different from that of the other ensemble solutions. All the results were obtained on the same PC with an i5 CPU (2.7 GHz) and 8 GB RAM. According to the average runtimes, the algorithms can be classified into three groups. The first group consists of EASY, RUS and UBAG. These three algorithms combine ensemble learning with undersampling techniques, and their base classifiers are trained on a reduced subset of the original training data, so they are the most efficient. The second group consists of SBO, SBAG and the proposed SNGEIP method. All these methods combine ensemble learning with oversampling techniques. Their base classifiers are trained on the union of the original training set and the synthetic data, and generating the synthetic samples takes extra time, so these three methods are less efficient. It is worth mentioning that our proposed method is the least efficient in the second group. This is because the other two algorithms generate the minority samples only once, whereas our proposed method needs to generate samples for each base classifier iteratively. The third group contains only EUS, which takes significantly longer than the other methods, because it adopts a genetic algorithm to prepare the training set for each base classifier.

4.6 Parameters

We analyze whether the factors that account for the diversity help to improve the generalization ability of the final ensembles. In our proposed method, two parameters, the replacement ratio (RR) and λ, determine the diversity of the final ensemble. We explore how varying these parameters affects the diversity and the AUC.

We follow a procedure similar to that outlined in [36]. Four datasets were randomly selected for analysis, based on variations in the number of samples, attributes, and imbalance ratio. We use the pairwise plain disagreement measure [49] to evaluate the diversity of our proposed method. The plain disagreement diversity for a base classifier pair $C_i$ and $C_j$ can be computed according to:

$$\mathrm{div}(C_i, C_j) = \frac{1}{N} \sum_{k=1}^{N} \mathrm{Diff}\big(C_i(x_k), C_j(x_k)\big)$$

where $N$ is the number of training samples, and $C_i(x_k)$ is the class assigned by the classifier $C_i$ to sample $x_k$. Here $\mathrm{Diff}(a, b) = 0$ if $a = b$, and $\mathrm{Diff}(a, b) = 1$ otherwise. The average value of diversity between all pairs of classifiers is used as the diversity measure for the ensemble.
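The diversity measure just described, the fraction of training samples on which two classifiers disagree, averaged over all classifier pairs, can be sketched as follows. The function names and the toy prediction vectors are assumptions for this example.

```python
from itertools import combinations


def plain_disagreement(pred_i, pred_j):
    """Fraction of samples on which two classifiers assign different classes."""
    n = len(pred_i)
    # Diff(a, b) is 1 when the two predictions differ and 0 otherwise.
    return sum(1 for a, b in zip(pred_i, pred_j) if a != b) / n


def ensemble_diversity(predictions):
    """Average plain disagreement over all pairs of base classifiers."""
    pairs = list(combinations(predictions, 2))
    return sum(plain_disagreement(a, b) for a, b in pairs) / len(pairs)


# Hypothetical predictions of three base classifiers on four training samples.
predictions = [
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [1, 1, 0, 0],
]
diversity = ensemble_diversity(predictions)  # (0.25 + 0.5 + 0.25) / 3
```

A value of 0 means all base classifiers make identical predictions, and larger values mean the classifiers disagree on more samples.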

A 5-fold CV was adopted to divide the dataset into training parts and testing parts. The training parts were used to learn the classifiers and calculate the diversity. The testing parts were used to calculate the AUCs. The diversity measures and AUCs obtained from the whole procedure were then averaged to produce a single measure. Figure 7 shows the obtained diversities and AUCs under different settings of RR and λ. In order to study the influence of each parameter separately, one parameter was fixed while the other was varied. Specifically, when studying the influence of RR, we set λ = 0.5 and varied the value of RR from 0.1 to 0.9. The first and second rows of Fig. 7 show the effect of RR on the classification ability and diversity. When studying the effect of λ, we set RR = 0.3 and varied the value of λ from 0.1 to 0.9. The third and fourth rows of Fig. 7 show the effect of λ on the classification ability and diversity.

Considering all the experimental results in Fig. 7, the following conclusions and analyses can be provided.

(1) Greater diversity can be achieved by assigning a larger value to parameter RR or λ. Larger values of RR and λ mean that the generated samples can differ more from the original training samples, so the base classifiers will be more diverse. The second and fourth rows of Fig. 7 show the influence of the parameters on the diversity. In most cases, the diversity increases monotonically as RR and λ increase. This indicates that a desirable level of diversity can be achieved by setting proper values for RR and λ.

(2) Increasing the diversity is not always beneficial. A fundamental issue in designing an optimal ensemble is how to address the conflict between increasing diversity and decreasing individual error. Sample-generation-based methods try to address this conflict by generating very different samples and training the base classifiers with the generated samples along with the original ones. Although greater diversity can be achieved by these methods, Akhand et al. [38] have pointed out that it does not necessarily lead to better classification ability. The first and third rows of Fig. 7 confirm this: the AUC does not improve along with the increase in diversity, and once RR or λ reaches a certain level, the AUC stops improving. This seems to contradict the motivation for promoting diversity. However, the experimental results in the previous section confirm that significantly better performance can be achieved by setting proper parameters, for example RR = 0.368 and λ = 0.5.

The setting of parameters RR and λ is a trade-off between increasing diversity and decreasing individual error, so both parameters need to be set appropriately. For reference, we give the parameter setting used in our experiments. All the results in Tables 4 and 7 were achieved with RR = 0.368 and λ = 0.5. The proposed method is a variant of the Bagging method [27]. In Bagging [27], the training set for each base classifier is randomly sampled from the original training set with replacement, and a particular training sample has a probability of (1 − 1/n)^n ≈ 0.368 of not being picked, where n is the size of the training set. In Bagging, each base classifier is therefore trained with about 63.2% of the original training data. We set RR = 0.368 so that each base classifier in our proposed method is trained with 63.2% of the original training data and 36.8% of augmented data. With such a setting, we can expect a significant improvement in performance compared to Bagging. As for the parameter λ, it is a trade-off between increasing diversity and decreasing the individual error of the base classifiers. At present, there is no theoretical way of computing the value of λ. We can select the optimal λ by grid search, or set it by experience. In this paper, we set λ = 0.5, which was determined by trial and error.
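The value 0.368 used for RR comes from the bootstrap: the probability that a given sample is never drawn in n draws with replacement from n samples approaches 1/e as n grows. The short check below illustrates this numerically; the function name is an assumption for this example.

```python
import math


def prob_not_picked(n):
    """Probability that a given sample is absent from a bootstrap sample of size n."""
    # Each of the n draws misses the sample with probability 1 - 1/n.
    return (1 - 1 / n) ** n


# The probability converges to 1/e (about 0.368) as the training set grows.
for n in (10, 100, 1000, 10000):
    print(n, round(prob_not_picked(n), 4))
print("limit 1/e:", round(math.exp(-1), 4))
```

The complement, about 63.2%, is the expected share of distinct original samples seen by each base classifier in Bagging.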

However, based on our observations, better generalization ability can be achieved by conducting a parameter optimization. An optimal RR can be chosen from the range [0.1, 0.9], and an optimal λ can be chosen from the range [0.3, 1]. These two ranges are much narrower than those of other classifiers, such as SVMs. The parameters can be set to the values suggested in our experiments, or be selected by a standard grid search procedure, with the AUC obtained from k-fold cross-validation as the estimate of generalization ability. It is also worth mentioning that the parameter RR deserves more attention during a grid search. Table 10 presents the variations of AUC and diversity during a standard grid search procedure. The results in Table 10 suggest that changing RR leads to a more dramatic change in the diversity within the final ensemble.
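A grid search over the two ranges mentioned above could be organized as in the sketch below. The `evaluate` callable stands for "train the ensemble with the given RR and λ and return the mean cross-validated AUC"; here it is replaced by a placeholder function, `toy_auc`, which is purely hypothetical and does not reflect any result from this paper. The 0.1 step size is also an assumption.

```python
from itertools import product


def grid_search(evaluate, rr_values, lam_values):
    """Return (best_score, best_rr, best_lam) over the Cartesian grid."""
    best = None
    for rr, lam in product(rr_values, lam_values):
        score = evaluate(rr, lam)
        # Keep the first parameter pair that reaches the highest score.
        if best is None or score > best[0]:
            best = (score, rr, lam)
    return best


# Candidate values inside the ranges [0.1, 0.9] for RR and [0.3, 1] for lambda.
rr_grid = [round(0.1 * i, 1) for i in range(1, 10)]
lam_grid = [round(0.1 * i, 1) for i in range(3, 11)]


def toy_auc(rr, lam):
    # Placeholder objective, NOT from the paper: a smooth bump peaking at
    # rr = 0.4, lam = 0.5. Replace with a real k-fold cross-validated AUC.
    return 1.0 - (rr - 0.4) ** 2 - (lam - 0.5) ** 2


best_score, best_rr, best_lam = grid_search(toy_auc, rr_grid, lam_grid)
```

With a real `evaluate` function, each call involves a full k-fold cross-validation, so the 9 × 8 grid above would require 72 such runs.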

5 Discussion and conclusion

Recent developments in science and technology have enabled the growth and availability of raw data to occur at an explosive rate. While this data provides the opportunity to extract knowledge that was previously impossible to obtain, the increased prevalence of class imbalance problems in various real-world domains also makes it challenging to extract knowledge for future prediction. Ensemble learning embedded with various data sampling techniques has proven to be a powerful way of addressing this challenge. However, in order to construct an optimal ensemble for class imbalance problems, the conflict between increasing diversity and decreasing individual error needs to be properly addressed.

In this paper, we introduce a new ensemble learning method that addresses the class imbalance problem and maintains proper diversity simultaneously. Inspired by the L-GEM [18], a special type of samples, called the neighborhoods of the training samples, is synthesized and imported into the ensemble learning. Experimental results on 36 public imbalanced datasets show that our proposed method can produce promising results in this unfavorable scenario and significantly outperform previous state-of-the-art ensemble learning methods.

Although the strength of our method has been demonstrated, the current research also has some limitations. First, we only test the idea of synthetic neighborhood generation on ensembles of decision trees. Ensembles of other base classifiers, such as SVMs and neural networks, should also be tested. Second, we do not use any sophisticated ensemble pruning technique to remove the useless base classifiers in the final ensemble. Third, the classification ability of our method largely relies on the quality of the synthetic neighborhoods, so other methods should be studied to generate higher-quality synthetic neighborhoods.

References

López V, Fernández A, García S, Palade V, Herrera F (2013) An insight into classification with imbalanced data: empirical results and current trends on using data intrinsic characteristics. Inf Sci 250:113–141

Galar M, Fernandez A, Barrenechea E, Bustince H, Herrera F (2012) A review on ensembles for the class imbalance problem: bagging-, boosting-, and hybrid-based approaches. IEEE Trans Syst Man Cybern Part C Appl Rev 42(4):463–484

He H, Garcia EA (2009) Learning from imbalanced data. IEEE Trans Knowl Data Eng 21(9):1263–1284

Yang Q, Wu X (2006) 10 Challenging problems in data mining research. Int J Inf Technol Decis Mak 5(4):597–604

Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP (2002) SMOTE: synthetic minority over-sampling technique. J Artif Int Res 16(1):321–357

Han H, Wang W-Y, Mao B-H (2005) Borderline-SMOTE: a new over-sampling method in imbalanced data sets learning. In: Advances in intelligent computing. Springer, pp 878–887

Barua S, Islam MM, Yao X, Murase K (2014) MWMOTE–majority weighted minority oversampling technique for imbalanced data set learning. IEEE Trans Knowl Data Eng 26(2):405–425

Bunkhumpornpat C, Sinapiromsaran K, Lursinsap C (2011) DBSMOTE: density-based synthetic minority over-sampling technique. Appl Intell 36(3):664–684

Liu X-Y, Wu J, Zhou Z-H (2009) Exploratory undersampling for class-imbalance learning. IEEE Trans Syst Man Cybern Part B Cybern 39(2):539–550

Galar M, Fernández A, Barrenechea E, Herrera F (2013) EUSBoost: enhancing ensembles for highly imbalanced data-sets by evolutionary undersampling. Pattern Recognit 46(12):3460–3471

Yu H, Ni J, Zhao J (2013) ACOSampling: an ant colony optimization-based undersampling method for classifying imbalanced DNA microarray data. Neurocomputing 101:309–318

Qian Y, Liang Y, Li M, Feng G, Shi X (2014) A resampling ensemble algorithm for classification of imbalance problems. Neurocomputing 143:57–67

Stefanowski J, Wilk S (2008) Selective pre-processing of imbalanced data for improving classification performance. In: Song I-Y, Eder J, Nguyen TM (eds) Data warehousing and knowledge discovery: 10th international conference, DaWaK 2008 Turin, Italy, September 2–5, 2008 Proceedings. Springer, Berlin, pp 283–292

Sun Z, Song Q, Zhu X, Sun H, Xu B, Zhou Y (2015) A novel ensemble method for classifying imbalanced data. Pattern Recognit 48(5):1623–1637

Kittler J, Hatef M, Duin RPW, Matas J (1998) On combining classifiers. IEEE Trans Pattern Anal Mach Intell 20(3):226–239

Díez-Pastor JF, Rodríguez JJ, García-Osorio CI, Kuncheva LI (2015) Diversity techniques improve the performance of the best imbalance learning ensembles. Inf Sci 325:98–117

Visentini I, Snidaro L, Foresti GL (2016) Diversity-aware classifier ensemble selection via f-score. Inf Fusion 28:24–43

Yeung DS, Ng WW, Wang D, Tsang EC, Wang X-Z (2007) Localized generalization error model and its application to architecture selection for radial basis function neural network. IEEE Trans Neural Netw 18(5):1294–1305

Ng WWY, Dorado A, Yeung DS, Pedrycz W, Izquierdo E (2007) Image classification with the use of radial basis function neural networks and the minimization of the localized generalization error. Pattern Recognit 40(1):19–32

Ng WWY, Yeung DS, Firth M, Tsang ECC, Wang X-Z (2008) Feature selection using localized generalization error for supervised classification problems using RBFNN. Pattern Recognit 41(12):3706–3719

Chen Z, Lin T, Chen R, Xie Y, Xu H (2017) Creating diversity in ensembles using synthetic neighborhoods of training samples. Appl Intell 47(2):570–583

Weiss GM, Tian Y (2008) Maximizing classifier utility when there are data acquisition and modeling costs. Data Min Knowl Disc 17(2):253–282

Sun Y, Kamel MS, Wong AKC, Wang Y (2007) Cost-sensitive boosting for classification of imbalanced data. Pattern Recognit 40(12):3358–3378

Wang S, Yao X (2009) Diversity analysis on imbalanced data sets by using ensemble models. In: 2009 IEEE symposium on computational intelligence and data mining. IEEE, New York, pp 324–331

Seiffert C, Khoshgoftaar TM, Van Hulse J, Napolitano A (2010) RUSBoost: a hybrid approach to alleviating class imbalance. IEEE Trans Syst Man Cybern Part A Syst Hum 40(1):185–197

Barandela R, Sanchez JS, Valdovinos RM (2003) New applications of ensembles of classifiers. Pattern Anal Appl 6(3):245–256

Breiman L (1996) Bagging predictors. Mach Learn 24(2):123–140

Freund Y, Schapire RE (1997) A decision-theoretic generalization of on-line learning and an application to boosting. J Comput Syst Sci 55(1):119–139

Chawla NV, Lazarevic A, Hall LO, Bowyer KW et al (2003) SMOTEBoost: improving prediction of the minority class in boosting. In: Lavrač N (ed) Knowledge discovery in databases: PKDD 2003: 7th European conference on principles and practice of knowledge discovery in databases, Cavtat-Dubrovnik, Croatia, September 22–26, 2003. Proceedings. Springer, Berlin, pp 107–119

Freund Y (1996) Experiments with a new boosting algorithm. In: Thirteenth international conference on machine learning

Bhowan U, Johnston M, Zhang M, Yao X (2014) Reusing genetic programming for ensemble selection in classification of unbalanced data. IEEE Trans Evol Comput 18(6):893–908

Díez-Pastor JF, Rodríguez JJ, García-Osorio C, Kuncheva LI (2015) Random balance: ensembles of variable priors classifiers for imbalanced data. Knowl-Based Syst 85:96–111

Melville P, Mooney RJ (2005) Creating diversity in ensembles using artificial data. Inf Fusion 6(1):99–111

Martínez-Muñoz G, Suárez A (2005) Switching class labels to generate classification ensembles. Pattern Recognit 38(10):1483–1494

Ho TK (1998) The random subspace method for constructing decision forests. IEEE Trans Pattern Anal Mach Intell 20(8):832–844

Akhand MA, Murase K (2012) Ensembles of neural networks based on the alteration of input feature values. Int J Neural Syst 22(1):77–87

Brown G, Wyatt J, Harris R, Yao X (2005) Diversity creation methods: a survey and categorisation. Inf Fusion 6(1):5–20

Akhand MAH, Islam MM, Murase K (2009) A comparative study of data sampling techniques for constructing neural network ensembles. Int J Neural Syst 19(2):67–89

Sun B, Ng WWY, Yeung DS, Chan PPK (2013) Hyper-parameter selection for sparse LS-SVM via minimization of its localized generalization error. Int J Wavelets Multiresolution Inf Process 11(3):1350030

Zhang H, Li M (2014) RWO-sampling: a random walk over-sampling approach to imbalanced data classification. Inf Fusion 20:99–116

Alcalá-Fdez J, Sánchez L, García S, del Jesus MJ, Ventura S, Garrell JM, Otero J, Romero C, Bacardit J, Rivas VM, Fernández JC, Herrera F (2009) KEEL: a software tool to assess evolutionary algorithms for data mining problems. Soft Comput 13(3):307–318

Chang CC, Lin CJ (2007) LIBSVM: a library for support vector machines. ACM Trans Intell Syst Technol 2(3, article 27):389–396

Hall M, Frank E, Holmes G, Pfahringer B, Reutemann P, Witten IH (2009) The WEKA data mining software: an update. SIGKDD Explor Newsl 11(1):10–18

Bradley AP (1997) The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit 30(7):1145–1159

Huang J, Ling CX (2005) Using AUC and accuracy in evaluating learning algorithms. IEEE Trans Knowl Data Eng 17(3):299–310

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7(1):1–30

Hodges JL, Lehmann EL (1962) Rank methods for combination of independent experiments in analysis of variance. Ann Math Stat 33(2):482–497

Holm S (1979) A simple sequentially rejective multiple test procedure. Scand J Stat 6(2):65–70

Tsymbal A, Pechenizkiy M, Cunningham P (2005) Diversity in search strategies for ensemble feature selection. Inf Fusion 6(1):83–98

Acknowledgements

The research is partly supported by Science and Technology Supporting Program, Sichuan Province, China (2013GZX0138 and 2014GZ0154).

About this article

Cite this article

Chen, Z., Lin, T., Xia, X. et al. A synthetic neighborhood generation based ensemble learning for the imbalanced data classification. Appl Intell 48, 2441–2457 (2018). https://doi.org/10.1007/s10489-017-1088-8

DOI: https://doi.org/10.1007/s10489-017-1088-8