Abstract

Existing work on Automated Negotiations commonly assumes the negotiators’ utility functions have explicit closed-form expressions, and can be calculated quickly. In many real-world applications however, the calculation of utility can be a complex, time-consuming problem and utility functions cannot always be expressed in terms of simple formulas. The game of Diplomacy forms an ideal test bed for research on Automated Negotiations in such domains where utility is hard to calculate. Unfortunately, developing a full Diplomacy player is a hard task, which requires more than just the implementation of a negotiation algorithm. The performance of such a player may highly depend on the underlying strategy rather than just its negotiation skills. Therefore, we introduce a new Diplomacy playing agent, called D-Brane, which has won the first international Computer Diplomacy Challenge. It is built up in a modular fashion, disconnecting its negotiation algorithm from its game-playing strategy, to allow future researchers to build their own negotiation algorithms on top of its strategic module. This will allow them to easily compare the performance of different negotiation algorithms. We show that D-Brane strongly outplays a number of previously developed Diplomacy players, even when it does not apply negotiations. Furthermore, we explain the negotiation algorithm applied by D-Brane, and present a number of additional tools, bundled together in the new BANDANA framework, that will make development of Diplomacy-playing agents easier.


1 Introduction

In any multiagent system (MAS) the outcome of the actions taken by one agent may also depend on the actions taken by other agents. These agents may have conflicting goals and, since the other agents may be unknown and may not be benevolent, an agent generally cannot assume that other agents are willing to help without receiving any benefits in return. If each agent simply chooses those actions that are individually best, the outcome can be sub-optimal for each of them, as illustrated by the well-known Prisoner’s Dilemma [28]. Therefore, agents in a MAS need to negotiate on what actions each will take, even if those agents are entirely selfish and are not interested in reaching any socially optimal solution. Generally, we can say that if a Nash equilibrium [26] is not Pareto optimal then negotiations allow the agents to reach a more efficient solution by making a binding agreement in which each agent promises not to deviate from the efficient solution.

Automated Negotiations have been studied extensively, but most work focuses purely on the strategy to determine which deals to propose given the utility values of the possible deals. A point that has received little attention however, is the fact that in many real-world negotiation settings, for any given proposal a negotiator would first need to spend time determining its utility value before he or she could decide whether to propose, accept, or reject it. In most existing work this process of evaluating the proposal is simply abstracted away and it is assumed that this does not require any domain knowledge or reasoning. In such studies the negotiators are usually assumed to know the utility value of any deal instantaneously, or after solving a simple linear equation (see for example [3]). The utility functions of the agent’s opponents on the other hand, are often assumed to be completely unknown.

We argue, however, that in real negotiations it is important to have knowledge of the domain and to be able to reason about it. One cannot, for example, expect to make profitable deals in the antiques business without extensive knowledge of antiques, no matter how good one is at bargaining. Moreover, a good negotiator should also be able to reason about the desires of its opponents. A good car salesman, for example, would try to find out what type of car best suits his client’s needs, in order to increase the chances of coming to a successful deal, and therefore increase the salesman’s own expected utility.

Another point that is rarely taken into account, is that an agent’s utility may not always solely depend on the agreements it makes, but may also depend on decisions taken outside the negotiation thread, either by the agent itself or by its opponents. Imagine for example buying a small car which is easy to park and consumes little fuel. This may initially be a great deal. However, if one year later you decide to extend your family and have children, that small car suddenly is not so practical anymore. We see that the value of the car deal has changed as a result of decisions made long after the negotiations had finished. As another example, imagine renting a property to open a restaurant in a street with no other restaurants. This might be a good deal until later several other restaurants also open in that same street, presenting you with so much competition that you can no longer afford the rent. Again, what was initially a good deal, later became a bad deal, only this time as a result of decisions taken by competitors.

For these reasons, we think the game of Diplomacy [9] provides a much more realistic, and therefore more interesting, test bed for Automated Negotiations. Diplomacy is important for Automated Negotiations (and for AI in general) because it includes many of the difficulties one would also have to deal with in real-life negotiations. It involves constraint satisfaction, coalition formation, game theory, trust, and even psychology. Being a good Diplomacy player does not only require strategic insight, but also requires social skills, making it a particularly hard game for computers. It is not surprising therefore, that computer Diplomacy is only in its infancy and automatic players are not nearly as well developed as, for example, Chess or Go programs. Now that modern Chess computers are already far superior to any human player, we expect that Diplomacy will draw more and more attention in the future, as a more interesting challenge for computer scientists. In line with this expectation, the first edition of the Computer Diplomacy Challenge (footnote 1) was held in July 2015 as part of the ICGA Computer Olympiad.

In this paper we wish to highlight the fact that Diplomacy satisfies the following three important properties:

1. For any potential deal the calculation of its utility value (for any agent) is a hard, time-consuming problem.

2. An agent’s utility does not only depend on the agreements it makes, but also on decisions it makes outside the negotiation thread.

3. Moreover, an agent’s utility also depends on decisions made by its opponents outside the negotiation thread.

Another important point is that the number of possible deals in Diplomacy is extremely large, so it would be impossible to exhaustively determine the utility values of all possible deals.

The game of Diplomacy has attracted the attention of the Automated Negotiations community for a long time. Nevertheless, to date very few really successful negotiating Diplomacy players have been developed. The problem with Diplomacy is that before one can test a negotiation algorithm one first needs to have an agent that can play the strategic part of the game, and implementing such a player is already a daunting task. Therefore, existing work has focused either on building only non-negotiating players, or on building negotiation algorithms on top of existing (often poorly playing) agents. In this paper however, we introduce a new Diplomacy playing agent, called D-Brane, that is a good strategic player, but that is also capable of negotiation. Moreover, we have decoupled its strategic algorithm from its negotiation algorithm so that they can be studied and reused independently. This will allow new negotiation algorithms in the future to be implemented on top of D-Brane’s strategic component. We think that this will be an important step forward in the research of computer Diplomacy and Automated Negotiations, as it will make it much easier for Automated Negotiations researchers to test algorithms for highly complex domains.

We have performed a number of experiments in which we compare our player against several existing Diplomacy playing agents. The interesting outcome of those experiments is that even if our agent does not apply negotiations it still outplays the existing players, which do apply negotiations. From this we draw the important conclusion that the success of a negotiating agent may sometimes depend more on the accuracy and efficiency with which it calculates utility values than on the bargaining strategy it applies.

In short, this paper makes the following contributions to the field of Automated Negotiations:

- We define a new, more realistic, formal model of negotiations which we call a Negotiation Game.

- We present a strategic Diplomacy player that allows researchers to build negotiation algorithms on top of it.

- We present the negotiation algorithm used by our player.

- We show that in complex domains it can be more important to have an efficient and accurate algorithm to determine utility values of potential deals, than to have a good bargaining strategy.

- We present a new framework, called BANDANA, which consists of a number of tools to make development of Diplomacy players easier.

The rest of this paper is organized as follows: in Section 2 we give an overview of existing work on Automated Negotiations and Diplomacy. In Section 3 we give an informal description of the game of Diplomacy. In Section 4 we define the notion of a Negotiation Game, which puts negotiations into a larger context so that the agents’ utility values do not only depend on the agreements made, but also on decisions made after the negotiations. In Section 5 we present the Diplomacy playing agent that we have implemented and in Section 6 we present the results of our experiments with this agent and compare them with the results of a number of other existing Diplomacy agents. In Section 7 we draw our conclusions and discuss future work. In Appendix A we present the BANDANA framework which comprises a number of tools to facilitate the development of Diplomacy playing agents. Finally, in Appendix B we give a formal description of Diplomacy.

2 Related work

Much work has been done on Automated Negotiations, which can roughly be divided into two categories: the Game Theoretical Approach and the Heuristic Approach.

The Game Theoretical Approach focuses on the theoretical properties of negotiation, such as the existence of equilibrium strategies. A seminal paper in this area is a paper by Nash [25] in which he shows that under the assumption of certain axioms the outcome of a bilateral negotiation is the solution that maximizes the product of the players’ utilities. This is known as the Nash Bargaining Solution. Many papers have been written afterwards that generalize or adapt some of these axioms. Multilateral versions of the bargaining problem have been studied for example in [2, 21], while a non-linear generalization has been made in [12]. These studies give hard guarantees about the success of their approach, but the downside is that they need to make very strong assumptions about their respective domains, which makes them hard to apply in real-world settings. An example of such an assumption is the existence of a discount factor that reduces the utility of any deal by some known factor depending on the time it takes to come to an agreement. Other examples are the assumption that the negotiation has a fixed number of rounds and the negotiators take turns, or even that the negotiators have perfect knowledge about each others’ utility functions. A general overview of such game theoretical studies is made in [31].

In this paper, on the other hand, we apply the Heuristic Approach. The Heuristic Approach focuses on the implementation of algorithms that can negotiate under circumstances where no equilibrium results are known, or where the equilibria cannot be determined in a reasonable amount of time. It is usually not possible to give hard guarantees about the success of such algorithms, but they are more suitable to real-world negotiation scenarios.

However, even in this branch of Automated Negotiations one often still makes many simplifying assumptions. One often assumes that negotiations are only bilateral, that there is only a small set of possible agreements to make, and that the utility functions are given as linear additive functions or can be calculated without much computational cost. Also, most of these studies assume an alternating offers protocol, which is fine for automated agents, but not desirable for negotiations with humans, because with humans there is no guarantee that they will indeed follow the protocol. All these assumptions were made for example in the first four editions of the annual Automated Negotiating Agent Competition (ANAC 2010–2013) [3]. Important examples in this area are [10, 11]. They propose a strategy that amounts to determining for each time t which utility value should be demanded from the opponent (the aspiration level). However, they do not take into account that one first needs to find a deal that indeed yields that aspired utility level. They simply assume that such a deal always exists, and that the negotiator can find it without any effort.

Recently, more focus has been given to more realistic negotiation settings. Negotiations with non-linear utility functions, for example, have been studied in [22]. The negotiations are, however, still bilateral, the agreement space is continuous and it is assumed the agreements at least have a known closed-form expression. Also in [17] the utility functions are, strictly speaking, non-linear over the issues, but they are still linearly additive over pairs of issues. Moreover, the approach of [17] requires a trusted mediator, or a trusted ‘fair die’.

Domains in which the number of possible deals is very large so that one needs to apply search algorithms to find good deals have been studied for example in [16, 23, 24]. Although their utility functions are non-linear over the vector space that represents the space of possible deals, the value of any given deal can still be calculated quickly by solving a linear equation. It is true, as the authors claim, that in theory any non-linear function can be modeled in such a way, but the problem is that in real-world settings utility functions are not always given in this way (e.g. there is no known closed-form expression for the utility function over the set of all possible configurations of a chess game). In order to apply their method one would first need to transform the given expression of a utility function into the expression required by their model, which may easily turn out to be an unfeasible task. Therefore, we have taken the idea of non-linear utility functions a step further in [4], where for any proposal the evaluation of its utility value required solving a Traveling Salesman Problem. However, we still assumed that utility values were assigned directly to deals. The utility values did not depend on any decisions made outside the negotiation thread.

Most research on Automated Negotiations is restricted to bilateral negotiations. Work on multilateral negotiations often focuses on developing protocols (e.g. [7, 15]) or on non-selfish negotiations [18]. An example of a negotiation algorithm for selfish, multilateral negotiations is given in [27]. In this study however, a strict separation is made between buyers and sellers, so a buyer can only come to an agreement with a seller. In real-life negotiations one cannot always make such a distinction. A retailer, for example, sells its products to consumers, but buys them from a wholesaler, so acts both as buyer and seller. Moreover, [27] considers that only one buyer is present, therefore excluding competition between buyers. Furthermore, although multiple sellers are present, they still assume that all agreements are strictly bilateral. Also [1] describes multilateral negotiations in which one buyer negotiates with n sellers, in parallel bilateral negotiation threads, but negotiations are only about the price of a single item. In this paper on the other hand, we do assume multilateral negotiations in which there is no distinction between buyers and sellers, and in which a single deal may involve more than two agents.

An alternative way to subdivide the field of Automated Negotiations is to distinguish between Argumentation Based Negotiations (ABN), and non-Argumentation Based Negotiations. In ABN one assumes that agents are capable of exchanging arguments with one another in order to influence the opponents’ actions. One agent may for example argue why a certain outcome is unacceptable, or why the opponent should change its mind about a certain proposal. For example, [33] describes a model of how the beliefs and behavior of negotiators can be changed via persuasive argumentation. In [32] the authors present “a framework for negotiation in which agents exchange proposals backed by arguments which summarize the reasons why the proposals should be accepted”. A general overview of ABN is provided in [6]. Since argumentation plays an essential role in Diplomacy, we think that this game would also be an excellent test case for ABN. However, in this paper we will not apply ABN. Instead, we will leave this as future work.

Pioneering work on negotiations in Diplomacy was presented in [19, 20]. These papers, however, focus mainly on the modular structure of their agent rather than on the algorithms it applies. It remains unclear how their agent searches through the large space of possible deals and determines what to propose. Moreover, they have only been able to play a very small number of games, as they had to play them with humans, which takes a long time. An informal online community called DAIDE exists which is dedicated to the development of Diplomacy playing agents (footnote 2). Many agents have been developed by this community but only very few are capable of negotiation. In [9] a new platform called DipGame was introduced to make the development of Diplomacy agents easier for scientific research. Several negotiating agents have been developed on this platform such as in [8, 13]. Later on in this paper we compare our agent with the agents presented in these studies.

3 Diplomacy, an informal description

Diplomacy is a popular board game invented in the 1950s which is nowadays also widely played over the Internet (footnote 3). It is a game for seven players, played over multiple rounds, with complete information and no chance moves. Negotiation is an essential skill for playing well. Although for each player the ultimate goal of the game is to defeat all other players, players often form coalitions and agree to end the game in a draw between the members of one coalition once all players outside that coalition have been defeated.

The full set of rules of Diplomacy is rather complex, so we only give a simplified description. The differences with the full set of rules are not relevant to this paper (footnote 4). Furthermore, in this section we will keep the discussion informal. For a formal definition of the rules we refer to Appendix B.

Diplomacy is a game over multiple rounds. The seven players (also referred to as the seven Great Powers) are called England, Russia, Germany, France, Turkey, Austria and Italy, which are usually abbreviated to ENG, RUS, GER, FRA, TUR, AUS, and ITA. Each player begins with 3 or 4 units (also called armies or fleets) that are placed on a map of Europe in the early 20th century. The map is divided into 75 provinces, which can each hold 0 or 1 units. Some of the provinces (34 in total) are marked as Supply Centers. A player becomes the owner of a Supply Center if he moves one of his units into that Supply Center. If that player later moves his unit out of that Supply Center he remains the owner, until another player moves one of his units into it.

If the owner of a Supply Center changes the new owner will receive an extra unit in the next round, and the previous owner will lose one unit. A player is eliminated when he loses all his units. The game ends either when one player becomes the winner by owning 18 or more Supply Centers (a Solo Victory), or when all players that have not yet been eliminated agree to end the game in a draw.

In each round each player needs to decide what to do with each of his units. In Diplomacy-terminology we say that each player must submit an order for each of his units. He can either submit a move-to order, meaning that he tries to move the unit from its current location to an adjacent province, a hold order, meaning that the unit intends to stay in its current location, or a support order, meaning that the unit will not move, but instead will give extra strength to a moving or holding unit. A unit u can only support a unit \(u^{\prime }\) that moves to (or holds in) a province p if u is located in a province adjacent to p.

The players all submit their orders simultaneously, which means that each player must decide his orders without knowing which orders the other players are submitting in that round (footnote 5).

If two units of two different players are both ordered to move to the same province (or one of them holds in that province), then only the unit that receives the most supports will indeed move, while the other one will stay in its current province (or will be expelled from it if it was trying to hold). In case two units have an equal amount of support then both units will stay in their current province. When a player moves one of his units into a Supply Center he does not own, he will become the new owner of that Supply Center, and therefore we say the player conquers that Supply Center. An interesting aspect of this game is the fact that a unit u of one player may give support to a unit \(u^{\prime }\) that belongs to another player. Therefore, players can help each other to conquer Supply Centers.

The main difference between Diplomacy and purely strategic games like Chess and Checkers is that in Diplomacy players are allowed to negotiate with each other and form coalitions. Each round consists of two stages: a negotiation stage followed by an action stage. During the action stage the players submit their orders, while during the preceding negotiation stage the players negotiate about which orders they will (or will not) submit during the action stage. Typically, players agree not to attack each other, or they agree that one player will use some of its units to support a unit of the other player.

When a player \(\alpha_{i}\) tries to move into a Supply Center p, or supports a coalition partner to move into p, we say he is participating in a battle for p. In order to win the battle (i.e. to successfully move into p and thus become its new owner) \(\alpha_{i}\) must submit a move-to order for some unit u to move into p (or a hold order to stay in p) and \(\alpha_{i}\) or any of his coalition partners may submit any number of support orders to support the unit u. We refer to these orders as the battle plan of \(\alpha_{i}\) for province p. Typically, more than one player will try to conquer the same province at the same time, so only the player with the strongest battle plan will succeed. Furthermore, the units of a player are often spread around the map of Europe, so during any round a player may be involved in several battles at the same time.

Example

Let us focus on the three players ENG, FRA and GER and suppose that ENG and FRA together form a coalition. These players submit the following orders:

1. ENG moves his unit in the North Sea to Holland.

2. FRA’s unit in Belgium supports ENG’s unit in the North Sea.

3. GER moves his unit in Kiel to Holland.

4. FRA moves his unit in Burgundy to Munich.

5. GER holds with his unit in Munich.

6. GER’s unit in Silesia supports GER’s own unit in Munich.

We see that there are two battles going on: a battle for Holland and a battle for Munich. The first two orders together form a battle plan of the coalition {ENG, FRA} to conquer Holland and the third order is the battle plan of GER to conquer Holland. The fourth order is a battle plan of FRA to conquer Munich, and the fifth and sixth orders form GER’s battle plan to defend Munich.

Although ENG and GER both try to move to Holland, only ENG will succeed, because ENG’s unit has support from FRA. Furthermore, FRA is trying to expel GER’s unit from Munich, but fails, because FRA’s unit does not have any support, while GER’s unit in Munich does have support (in fact, even without support GER’s unit would not be expelled from Munich, because FRA and GER would have equal strength).
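The outcome of this example can be reproduced with a minimal sketch of the simplified resolution rule described in this section. The tuple encoding of orders and the function name `resolve` are assumptions made here for illustration; they are not part of D-Brane or BANDANA, and the sketch ignores every rule of the full game that the informal description leaves out.

```python
from collections import defaultdict

# Hypothetical encoding: (power, unit location, kind, target).
# For "support" orders the target is the location of the supported unit.
orders = [
    ("ENG", "North Sea", "move", "Holland"),
    ("FRA", "Belgium", "support", "North Sea"),
    ("GER", "Kiel", "move", "Holland"),
    ("FRA", "Burgundy", "move", "Munich"),
    ("GER", "Munich", "hold", "Munich"),
    ("GER", "Silesia", "support", "Munich"),
]

def resolve(orders):
    """Return, per contested province, the power whose unit ends up there (or None)."""
    # Count how many supports each unit (identified by its location) receives.
    supports = defaultdict(int)
    for _, _, kind, target in orders:
        if kind == "support":
            supports[target] += 1

    # Group moving and holding units by the province they contest.
    contenders = defaultdict(list)
    for power, location, kind, target in orders:
        if kind in ("move", "hold"):
            contenders[target].append((1 + supports[location], power, kind))

    result = {}
    for province, units in contenders.items():
        best = max(strength for strength, _, _ in units)
        strongest = [power for strength, power, _ in units if strength == best]
        if len(strongest) == 1:
            result[province] = strongest[0]
        else:
            # Tie: no unit moves, so a holding unit (if any) stays where it is.
            holders = [power for _, power, kind in units if kind == "hold"]
            result[province] = holders[0] if holders else None
    return result

print(resolve(orders))  # {'Holland': 'ENG', 'Munich': 'GER'}
```

Dropping the sixth order leaves FRA and GER with equal strength in Munich, and the sketch then still reports GER as the occupant, in line with the remark in parentheses above.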

In the rest of this paper we will consider each round of Diplomacy as a separate negotiation scenario that satisfies the three properties we highlighted in the introduction. Indeed, determining the influence of an agreement on the number of conquered Supply Centers is a complex combinatorial problem. Furthermore, the utility of a player \(\alpha_{i}\) is not directly determined by the agreements it makes, but rather by the orders submitted by \(\alpha_{i}\), as well as the orders submitted by its opponents. In the following sections we will formalize these properties, by defining the notion of a Constraint Optimization Game, which captures the first property, and the notion of a Negotiation Game, which captures the second and third properties.

4 Negotiation Games

As explained, in this paper we assume that the agents’ utility values depend not only on the agreements they make, but also on the decisions they take outside the negotiation thread. In order to model this formally we define the concept of a Negotiation Game.

The idea of a negotiation game is that the players are playing some game G, but before doing so they have the opportunity to negotiate binding agreements about which actions each player will take. The players’ utilities are purely determined by their actions in the game G, but since their choice of actions is partially restricted by the agreements they make, the agreements between the players indirectly influence the players’ utility values. The negotiation thread followed by the actual playing of the game G are together referred to as the Negotiation Game over G, denoted N(G).

Definition 1

A one-shot game G consists of a set of players \(Pl^{G} = \{\alpha_{1}, \alpha_{2}, \dots, \alpha_{n}\}\), for each player \(\alpha_{i} \in Pl^{G}\) a set of actions \(\mathcal{M}_{i}^{G}\), and for each player \(\alpha_{i} \in Pl^{G}\) a utility function \(f^{G}_{i}\), which is a function from \(\mathcal{M}^{G} = \mathcal{M}_{1}^{G} \times \mathcal{M}_{2}^{G} \times \dots \times \mathcal{M}_{n}^{G}\) to \(\mathbb{R}\). An element \(\mu\) of the set \(\mathcal{M}^{G}\) is called an action profile and its utility vector \(f^{G}(\mu)\) is the vector \(f^{G}(\mu) = (f^{G}_{1}(\mu), f^{G}_{2}(\mu), \dots, f^{G}_{n}(\mu)) \in \mathbb{R}^{n}\).

We now want to allow the players to negotiate over the actions they take. For this reason, we need to define the concept of a deal.

Definition 2

A deal x over a one-shot game G is a Cartesian product of nonempty subsets \(S_{i} \subseteq \mathcal{M}_{i}^{G}\), one for each player: \(x = S_{1} \times S_{2} \times \dots \times S_{n}\). The set of all possible deals over a game G is called the agreement space \(Agr^{G}\) of G.

A deal should be interpreted as an agreement between the players that each of them will only choose its action from the subset \(S_{i}\).

Example

Let G be the Prisoner’s Dilemma. There are two players, \(Pl^{G} = \{\alpha_{1}, \alpha_{2}\}\), and their actions are \(\mathcal{M}_{1}^{G} = \mathcal{M}_{2}^{G} = \) {confess, deny}. The agreement space \(Agr^{G}\) consists of all products \(S_{1} \times S_{2}\) where \(S_{1}\) and \(S_{2}\) can each be {confess}, {deny} or {confess, deny}. So \(Agr^{G}\) contains 9 possible deals. The deal {confess} × {confess, deny}, for example, represents the agreement that \(\alpha_{1}\) will play ‘confess’, while \(\alpha_{2}\) can play either ‘confess’ or ‘deny’.
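The count of 9 deals can be verified by enumerating the agreement space directly. The following is a minimal sketch; representing a deal as a tuple of frozensets (one allowed-action set per player) and the helper names `nonempty_subsets` and `agreement_space` are assumptions made for illustration only.

```python
from itertools import combinations, product

def nonempty_subsets(actions):
    """All nonempty subsets of a player's action set."""
    items = sorted(actions)
    return [frozenset(c) for r in range(1, len(items) + 1)
            for c in combinations(items, r)]

def agreement_space(action_sets):
    """All deals over the game: one nonempty subset of actions per player."""
    return list(product(*(nonempty_subsets(a) for a in action_sets)))

# Prisoner's Dilemma: both players choose from {confess, deny}.
pd_actions = [{"confess", "deny"}, {"confess", "deny"}]
deals = agreement_space(pd_actions)
print(len(deals))  # 9
```

With k actions per player and n players the agreement space has \((2^{k}-1)^{n}\) elements, which already hints at why exhaustive enumeration is hopeless in a game the size of Diplomacy.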

Regarding this example, we should note that in the Prisoner’s Dilemma the players are of course not allowed to negotiate. However, this does not mean that we cannot define its agreement space. After all, according to Definition 2 a deal is a well-defined concept for any one-shot game G even if players are not allowed to negotiate. This is important because we first need to define the agreement space of a non-negotiation game G before we can define the negotiation version N(G) of that game. Therefore, \(Agr^{G}\) should be interpreted as the space of deals that the players could make if they were allowed to negotiate.

In the following we will use the notation \(\mathcal{M}_{i}^{G}[x]\) instead of \(S_{i}\) to explicitly indicate that it is part of a deal x. Note that if \(\mathcal{M}_{i}^{G}[x] = \mathcal{M}_{i}^{G}\), player \(\alpha_{i}\) is not affected by the deal: the set of actions he can choose from is not restricted by it. We therefore say that an agent \(\alpha_{i}\) is participating in a deal x if \(\mathcal{M}_{i}^{G}[x]\) is a strict subset of \(\mathcal{M}_{i}^{G}\).
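The notion of participation just described can be sketched in a few lines. The function name `participating_agents` and the use of 0-based player indices (index 0 standing for \(\alpha_{1}\)) are assumptions of this illustration.

```python
def participating_agents(deal, action_sets):
    """Indices of players whose allowed set in the deal is a strict subset
    of their full action set."""
    return {i for i, (allowed, full) in enumerate(zip(deal, action_sets))
            if set(allowed) < set(full)}

actions = [{"confess", "deny"}, {"confess", "deny"}]
deal = ({"confess"}, {"confess", "deny"})  # only the first player is restricted
print(participating_agents(deal, actions))  # {0}
```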

Definition 3

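The set of participating agents \(pa(x)\) of a deal x is defined as: \(pa(x) = \{\alpha_{i} \in Pl^{G} \mid \mathcal{M}_{i}^{G}[x] \subsetneq \mathcal{M}_{i}^{G}\}\).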

Definition 4

Given a one-shot game G and a deal \(x \in Agr^{G}\), the restricted game G[x] is the same game as G except that each player \(\alpha_{i}\) is only allowed to choose its action from the subset \(\mathcal{M}_{i}^{G}[x]\) rather than its full set of actions \(\mathcal{M}_{i}^{G}\). Similarly, for a set of deals X the game G[X] is the game G with the restriction that each player \(\alpha_{i}\) can only choose its action from the intersection \(\bigcap_{x \in X} \mathcal{M}_{i}^{G}[x]\).

Definition 5

A set of deals X is called consistent iff its intersection is nonempty: \(\bigcap _{x \in X} x \neq \emptyset \).

Note that if X is not consistent, it means that there is no action profile that satisfies all agreements in X, so it is impossible to obey them all.
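Definitions 4 and 5 can be illustrated together. Because every deal is a Cartesian product, the intersection of a set of deals is the product of the per-player intersections, so it is nonempty exactly when every player keeps at least one allowed action. The sketch below relies on that observation; the function names `restrict` and `is_consistent` are hypothetical.

```python
def restrict(action_sets, deals):
    """Per-player action sets of the restricted game G[X]."""
    restricted = [set(a) for a in action_sets]
    for deal in deals:
        for i, allowed in enumerate(deal):
            restricted[i] &= set(allowed)
    return restricted

def is_consistent(action_sets, deals):
    """A set of deals is consistent iff every player keeps at least one action."""
    return all(restrict(action_sets, deals))

actions = [{"confess", "deny"}, {"confess", "deny"}]
both = {"confess", "deny"}
x1 = ({"confess"}, both)   # first player promises to confess
x2 = ({"deny"}, both)      # first player promises to deny
x3 = (both, {"deny"})      # second player promises to deny

print(is_consistent(actions, [x1, x3]))  # True
print(is_consistent(actions, [x1, x2]))  # False
```

Here `restrict(actions, [x1, x3])` leaves exactly one action profile, (confess, deny), while x1 and x2 cannot both be obeyed.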

Given a one-shot game G and a positive integer d, the negotiation game over G, denoted \(N_{d}(G)\), is a game over d+1 rounds labeled \(t_{0}, t_{1}, t_{2}, \dots, t_{d}\). The first d rounds are referred to as the negotiation stage, and the last round is called the action stage. The idea is that only in the last round the players take an action from the game G, while during the first d rounds the players negotiate which actions they will take in the last round.

We will now define the negotiation protocol that is applied during the negotiation stage. In each round of the negotiation stage each player takes an action which is either ‘accept(x)’ or ‘none’, where x can be any deal from the agreement space \(Agr^{G}\) associated with the game G. The players take their actions simultaneously, and the accepted deal x can be different for each of the players.

Definition 6

We say a deal x is confirmed in round \(t_{j}\) if j is the smallest number for which both of the following predicates are true:

- For each \(\alpha_{i} \in pa(x)\) there is some round \(t_{k_{i}}\) with \(k_{i} \leq j\) in which \(\alpha_{i}\) has taken the action ‘accept(x)’.

- The set \(X_{j} \cup \{x\}\), where \(X_{j}\) is the set of all deals that have been confirmed in any round \(t_{k}\) with \(k < j\), is consistent.

We say a deal x is confirmed if it was confirmed in any round \(t_{j}\) with \(j < d\).

This means that a deal x is considered confirmed if at some point all its participating agents have played ‘accept(x)’, and x is consistent with the deals that have already been confirmed earlier.
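The confirmation rule can be traced on a small example. The sketch below is one possible reading of Definition 6, not the implementation used by D-Brane: deals are tuples of frozensets, `history[j][i]` is the deal accepted by player i in round \(t_{j}\) (or `None`), and consistency is checked only against deals confirmed in strictly earlier rounds. All names are hypothetical.

```python
def confirmed_deals(action_sets, history):
    """Return the deals confirmed during the negotiation stage, in order."""
    def pa(deal):
        # Players whose allowed set is a strict subset of their full action set.
        return {i for i, s in enumerate(deal) if s < action_sets[i]}

    def consistent(deals):
        # Every player must keep at least one action across all deals.
        return all(
            frozenset(action_sets[i]).intersection(*(d[i] for d in deals))
            for i in range(len(action_sets))
        )

    accepted = {}   # deal -> set of players that have played accept(deal) so far
    confirmed = []  # deals confirmed so far
    for round_actions in history:
        earlier = list(confirmed)  # X_j: deals confirmed before this round
        for i, deal in enumerate(round_actions):
            if deal is not None:
                accepted.setdefault(deal, set()).add(i)
        for deal, who in accepted.items():
            if (deal not in confirmed and pa(deal) <= who
                    and consistent(earlier + [deal])):
                confirmed.append(deal)
    return confirmed

C, D = frozenset({"confess"}), frozenset({"deny"})
A = C | D
actions = [A, A]
both_deny = (D, D)        # both players promise to deny
first_confesses = (C, A)  # only the first player is restricted

history = [
    (both_deny, None),        # t_0: player 0 proposes
    (None, both_deny),        # t_1: player 1 accepts, so the deal is confirmed
    (first_confesses, None),  # t_2: inconsistent with the confirmed deal
]
print(confirmed_deals(actions, history) == [both_deny])  # True
```

The third round shows the role of the second predicate: the deal is accepted by its only participant, but it contradicts an earlier confirmed deal and is therefore never confirmed.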

This negotiation protocol is called the Unstructured Negotiation Protocol, and was introduced in [4]. It allows each player to make any proposal whenever he or she wants, unlike the more common Alternating Offers Protocol [29] in which players take turns. The definition of a negotiation game could be easily changed to reflect the Alternating Offers Protocol instead, but we think that this protocol is too restrictive to model real negotiations. In fact, the rules of Diplomacy do not specify any protocol at all. Players are allowed to negotiate however they want.

In most literature on negotiation protocols one agent proposes a deal, and then another agent may or may not accept the deal. To keep our formalization simple however we do not make this distinction, so both the proposal and the acceptance are represented by the ‘accept’ action. Furthermore, we should note that under this protocol the negotiators are not obliged to respond to a proposal. Instead of rejecting it or making a counter proposal they may simply remain silent, by playing the ‘none’ action. Furthermore, we do not explicitly model the option of withdrawing from the negotiations. However, a negotiator can still withdraw simply by remaining silent for the rest of the negotiation stage.

If X d denotes the set of all deals that were confirmed during the negotiation stage, then during the action stage of N d (G) the players play the game G[X d ]. This means they can only pick actions that are consistent with the agreements they made during the negotiation stage.

Definition 7

Let G be a one-shot game and d a positive integer. The Negotiation Game over G, denoted as N d (G), is a game over d+1 rounds, with the same players as G. In the first d rounds (the negotiation stage) in each round each player can play either the action ‘none’ or an action accept(x) for any x∈A g r G , and in the last round (the action stage) the players play the game G[X d ], where X d is the set of deals confirmed during the negotiation stage. Each player α i receives the utility \({f_{i}^{G}}(\mu )\) where \(\mu \in \bigcap _{x\in X_{d}}x\) is the action profile chosen by the players during the action stage.

For simplicity we have here defined the negotiations to take place over a sequence of d discrete rounds. However, one can easily use this model to approximate negotiations that take place in continuous time, simply by taking d to be a very large number and setting the duration of each round in the negotiation stage to a very small number. This may mean that players do not have enough time to decide which action to take in each round, but that is not a problem if we assume that not taking an action is interpreted as taking the action ‘none’. In the following, we will often write N(G) instead of N d (G) since the actual value of d is mostly irrelevant for our purposes.

Finally, we would like to stress that a player’s set of allowed actions \(\mathcal {M}_{i}^{G}[X_{d}]\) in the action stage can only be smaller than its full set of actions \(\mathcal {M}_{i}^{G}\) if that player α i himself has agreed with those restrictions by accepting the deals in X d . Of course, restricting your set of actions is never beneficial by itself, but when the other players return the favor by also restricting their sets of actions, this can be highly beneficial.

Example

Again, let G be the Prisoner’s Dilemma, then N 1(G) is the negotiation game over the Prisoner’s Dilemma with d=1. In round t 0 both players have the opportunity to suggest a deal; for example, they could play the action accept({deny}×{deny}). If they both suggest this deal then this deal is confirmed, meaning that in round t 1 each player α i can only play the action ‘deny’.

Proposition 1

If G is the Prisoner’s Dilemma then the negotiation game N 1 (G) has a Subgame Perfect Equilibrium that consists of both players playing accept({deny}×{deny}) in t 0 and both playing ‘deny’ in t 1.

Note that the Nash Equilibrium of N 1(G) dominates the Nash Equilibrium of the pure Prisoner’s Dilemma G without negotiations, in which both players play ‘confess’. Therefore, this demonstrates how the introduction of negotiations improves the individual outcomes of the players.
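The effect of the confirmed deal on the action stage can be checked with a small sketch. The payoff numbers below are assumed for illustration (negated prison years, a common textbook choice) and do not come from this paper; the function `pure_nash` is likewise a hypothetical helper that only looks at the action stage, not at the full negotiation game.

```python
from itertools import product

# Assumed payoffs (player 1, player 2); higher is better.
PAYOFF = {
    ("deny", "deny"): (-1, -1),
    ("deny", "confess"): (-5, 0),
    ("confess", "deny"): (0, -5),
    ("confess", "confess"): (-3, -3),
}


def pure_nash(actions_1, actions_2):
    """List the pure-strategy Nash equilibria for the given action sets."""
    equilibria = []
    for a1, a2 in product(actions_1, actions_2):
        u1, u2 = PAYOFF[(a1, a2)]
        # Neither player may gain by deviating unilaterally.
        best_1 = all(PAYOFF[(b, a2)][0] <= u1 for b in actions_1)
        best_2 = all(PAYOFF[(a1, b)][1] <= u2 for b in actions_2)
        if best_1 and best_2:
            equilibria.append((a1, a2))
    return equilibria


unrestricted = pure_nash(["deny", "confess"], ["deny", "confess"])
# After the deal {deny} x {deny} is confirmed, only 'deny' remains for each player.
restricted = pure_nash(["deny"], ["deny"])
```

With these assumed payoffs, the unrestricted game has the single equilibrium in which both confess, while the restricted game G[X d ] leaves only the outcome in which both deny, which is better for both players.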

In the introduction we mentioned that we want utility functions to satisfy three properties. We see that for any Negotiation Game the second and third property are indeed satisfied: the utility values obtained by the players are determined by the actions they take in the action stage, rather than the agreements made in the negotiation stage. The agreements only influence the players’ utilities indirectly, because they restrict the actions that can be taken during the action stage. We think this model reflects how negotiations work in real life. After all, signing a contract is not an action that is valuable by itself. Instead, it merely binds all signing parties to undertake certain actions (or refrain from undertaking certain actions), and it is these actions that determine the utilities obtained by those parties.

5 D-Brane

We have implemented a Diplomacy-playing agent called D-Brane (Diplomacy BRAnch & bound NEgotiator). As a heuristic, in each round it tries to maximize the number of Supply Centers conquered during that round. In this way we can regard the action stage of a single round of Diplomacy as a game in itself, which we denote as \(Dip_{\epsilon }\). The parameter 𝜖 represents the configuration of the units on the map, which is of course different in each round. Our agent’s utility function f for the game \(Dip_{\epsilon }\) is then the number of Supply Centers conquered, and a full round of Diplomacy can then be seen as an instance of the negotiation game \(N(Dip_{\epsilon })\). A formal definition of \(Dip_{\epsilon }\) can be found in Appendix B.

Of course, the fact that our player only tries to maximize its number of Supply Centers for the current round is a very greedy heuristic. We know from personal experience that real players often think ahead more than one round. Nevertheless, as we will see in the experimental section, we did manage to implement a successful player using this heuristic.

D-Brane consists of two independent components: the strategic component (see Section 5.1) and the negotiating component (Section 5.3). The negotiating component searches for deals during the negotiation stage to propose to the coalition partners and determines whether or not to accept proposals received from the coalition partners. The strategic component determines, given any deal x, which are the best actions to take if x is confirmed. The strategic component is used in two ways: during the negotiation stage the negotiating component applies the strategic component to determine whether any deal is valuable enough to be proposed or accepted, and during the action stage it is used to determine which orders to submit, under the restriction of the agreements that were made during the negotiation stage. This modular decomposition of D-Brane is important because it will allow future researchers to replace D-Brane’s negotiation algorithm with their own algorithms, allowing them to compare these negotiation algorithms independently of the underlying strategy.

It is important to understand that D-Brane is a selfish negotiator: it does help its allies in the game, but only because it expects help from them in return in later rounds. Another important aspect of D-Brane is that it always obeys all agreements it makes and always assumes that the other agents will do the same. This is a simplification, because in a real Diplomacy game players may not always obey their agreements, and therefore the notion of trust is an important factor. However, the subject of trust is beyond the scope of our work so we only focus on strategy and negotiations. Furthermore, D-Brane does not try to form the best possible coalition. Instead, it assumes that a certain coalition structure is given from the start. Again, this is because the problem of coalition formation is beyond the scope of our work.

D-Brane was implemented in Java, using the DipGame framework [9]. However, we did not use the negotiation language and negotiation server provided by DipGame, because it turned out easier to implement our experiments with a custom made negotiation server and language, which we have bundled into the new BANDANA framework (see Appendix A).

In the following, we will always assume that the algorithms we describe are running on the agent with name α 1. The other agents, \(\alpha _{2} {\dots } \alpha _{n}\), may also be copies of D-Brane, but they may just as well be any other Diplomacy playing agent, or they may even be human players.

5.1 The strategic component

Given a game state 𝜖 (i.e. a configuration of units on the Diplomacy map), a deal x and any player α i , the strategic component returns a set of orders for α i for the game \(Dip_{\epsilon }[x]\), plus the number of Supply Centers that α i is guaranteed to conquer if it submits those orders. Note that, although this component is part of agent α 1, it can also be used to predict the orders and the number of conquered Supply Centers of any other player α i . This is important, because it allows α 1 to assess how valuable the deal x is to the other participants in the deal. After all, it does not make sense to propose deals that are unprofitable for the other participants.

In theory one could try to determine the set of all possible actions for each player and then calculate the Nash Equilibrium, but this is computationally too expensive as the number of possible action profiles can easily be of the order \(10^{10}\). Our algorithm, however, manages to quickly make very good approximations by decomposing the game into a number of smaller games which each correspond to the battle for a single Supply Center. Then, after determining the best battle plans for each battle, it tries to find the strongest combination of such battle plans.

We say that a battle plan for a player α i to conquer a province p is invincible if no opponent can choose any battle plan that would prevent α i from conquering p (see Appendix B for a formal definition). Once we have determined all battle plans for a given province p, for all players, it is easy to determine which of those battle plans are invincible. Similarly, we can determine the invincible pairs of battle plans. An invincible pair is a pair of battle plans (β p ,β q ) for two different Supply Centers p and q respectively, such that if α 1 plays both of these battle plans, then at least one of them is guaranteed to succeed (again, see Appendix B for a formal definition). The idea behind the definition of an invincible pair is the following. Suppose that our battle plan β p can be defeated by an opponent’s battle plan \(\beta _{p}^{\prime }\) and that our battle plan β q can be defeated by an opponent’s battle plan \(\beta _{q}^{\prime }\). One might be inclined to jump to the conclusion that playing β p and β q cannot guarantee us to conquer any Supply Center. However, it might be the case that \(\beta _{p}^{\prime }\) and \(\beta _{q}^{\prime }\) are the only plans that can defeat β p and β q , and that \(\beta _{p}^{\prime }\) and \(\beta _{q}^{\prime }\) are incompatible with each other, so the opponent cannot play them both. In that case, if we play both β p and β q we are still guaranteed that at least one of the two will succeed.
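The intuition behind invincible plans and invincible pairs can be made concrete with a simplified sketch. It is not the formal definition from Appendix B: it assumes a single opponent, represents each opponent battle plan as a mapping from units to orders, and takes the relation "opponent plan defeats our plan" as given input. All names (`compatible`, `is_invincible`, `is_invincible_pair`, the plan labels) are hypothetical.

```python
def compatible(plan_a, plan_b):
    """Two plans are compatible if no unit receives two different orders."""
    return all(plan_b.get(unit, order) == order for unit, order in plan_a.items())


def is_invincible(our_plan, opponent_plans, defeats):
    """Our plan is invincible if no opponent plan defeats it."""
    return not any((name, our_plan) in defeats for name in opponent_plans)


def is_invincible_pair(plan_p, plan_q, opponent_plans, defeats):
    """The pair fails only if the opponent can counter both plans at once."""
    beats_p = [n for n in opponent_plans if (n, plan_p) in defeats]
    beats_q = [n for n in opponent_plans if (n, plan_q) in defeats]
    return not any(
        compatible(opponent_plans[a], opponent_plans[b])
        for a in beats_p
        for b in beats_q
    )


# The opponent needs the same army for both counter plans, so it cannot play both.
opponent_plans = {
    "counter_p": {"army_A": "move to p"},
    "counter_q": {"army_A": "move to q"},
}
defeats = {("counter_p", "beta_p"), ("counter_q", "beta_q")}
single_ok = is_invincible("beta_p", opponent_plans, defeats)
pair_ok = is_invincible_pair("beta_p", "beta_q", opponent_plans, defeats)
```

Here neither plan is invincible on its own, yet the pair is, because the two counter plans give conflicting orders to the same opposing unit.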

5.1.1 The basic algorithm

If 𝜖 denotes some state of the game, the set of all battle plans for player α i to conquer or defend a Supply Center p is denoted \(B_{i,p}^{\epsilon }\). The set of all Supply Centers is denoted SC.

Given a game state 𝜖, an agreement x, and a player α i , the strategic component works as follows:

1. For each p∈SC determine all invincible plans from the set \(B_{i,p}^{\epsilon }\).
2. For all pairs of Supply Centers (p,q)∈SC×SC (with p≠q) determine all invincible pairs from the set \(B_{i,p}^{\epsilon } \times B_{i,q}^{\epsilon }\).
3. Remove all invincible plans and invincible pairs that are not consistent with x.
4. Find the largest consistent combination of invincible plans and pairs, using And/Or tree search with Branch & Bound (Section 5.1.2).
5. For each province for which we have not been able to select an invincible plan or pair, select the strongest non-invincible battle plan that is consistent with x and the plans and pairs chosen in the previous step.
6. Return the full set of battle plans we have selected.

The number of invincible plans and pairs returned equals the number of Supply Centers α i is guaranteed to conquer. The plans chosen in step 5 merely form a best effort to try to conquer some more Supply Centers even though the opponents might be able to defeat those attempts. Of course, the opponents may not be perfect so it is at least worth trying.

The battle plans in \(B_{i,p}^{\epsilon }\) may contain support orders for units of coalition partners of α i . However, α i cannot be sure that these coalition partners will indeed submit those orders, unless the deal x ensures that. Therefore, any plan that contains orders for units that do not belong to α i and that are not ensured by the agreement x is discarded.

In theory, the algorithm would be even stronger if it did not only determine invincible plans and pairs, but also invincible triples, invincible quadruples, etcetera. However, the number of such n-tuples grows exponentially with n, so checking which ones are invincible would slow down the algorithm considerably.

5.1.2 And/Or tree search

And/Or tree search [5] is a variant of regular tree search that can be used to solve Constraint Optimization Problems. The power of this technique lies in the fact that the depth of the search tree is drastically decreased with respect to a naive tree search, by exploiting the knowledge that certain variables are independent.

Note that step 4 of the algorithm above is indeed a Constraint Optimization Problem: for each Supply Center we want to pick an invincible plan or an invincible pair, but not all combinations of such plans and pairs are consistent. Since every invincible plan or invincible pair guarantees a conquered Supply Center, we aim to pick as many of such plans as possible. However, because of the constraints we may not be able to pick an invincible plan or pair for every Supply Center; we sometimes need to pick the ‘empty plan’ (i.e. none of our units will try to conquer the Supply Center).

The variables of this constraint optimization problem are the Supply Centers p∈SC. For each such variable, its domain consists of the set of invincible plans, the set of invincible pairs, and the empty plan. The constraints between the variables are given by the fact that a battle plan for Supply Center p and a battle plan for Supply Center q may be incompatible if they contain two different orders for the same unit. The value to optimize is the number of Supply Centers for which we have not chosen the empty plan.

In this setting it is very easy to see which variables depend on each other, and which are independent. Two Supply Centers p and q are independent if there is no unit that is involved in the battle plan for p as well as in a battle plan for q. This means that it is an ideal case for And/Or Tree Search.
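The benefit of independence can be sketched as follows. This is not an And/Or tree search with Branch & Bound: it substitutes a plain exhaustive search inside each independent group of Supply Centers, and it ignores invincible pairs for brevity. The point is only that groups sharing no unit can be solved separately and their scores added. The data layout (a plan as a mapping from unit to order) and all function names are assumptions.

```python
from itertools import product


def compatible(plan_a, plan_b):
    """Plans conflict if they give the same unit two different orders."""
    return all(plan_b.get(u, o) == o for u, o in plan_a.items())


def components(domains):
    """Group Supply Centers whose candidate plans share at least one unit."""
    units = {p: {u for plan in plans for u in plan} for p, plans in domains.items()}
    remaining, groups = set(domains), []
    while remaining:
        group, frontier = set(), [remaining.pop()]
        while frontier:
            p = frontier.pop()
            group.add(p)
            linked = {q for q in remaining if units[p] & units[q]}
            remaining -= linked
            frontier.extend(linked)
        groups.append(sorted(group))
    return groups


def best_assignment(centers, domains):
    """Exhaustively pick one plan, or None (the empty plan), per center."""
    best, best_score = {}, -1
    for choice in product(*[[None] + domains[p] for p in centers]):
        chosen = [c for c in choice if c is not None]
        if all(compatible(a, b) for i, a in enumerate(chosen) for b in chosen[i + 1:]):
            if len(chosen) > best_score:
                best, best_score = dict(zip(centers, choice)), len(chosen)
    return best, best_score


def solve(domains):
    """Solve each independent group separately and add up the scores."""
    result, total = {}, 0
    for group in components(domains):
        assignment, score = best_assignment(group, domains)
        result.update(assignment)
        total += score
    return result, total


domains = {
    "p": [{"A": "move p"}],
    "q": [{"A": "move q"}, {"B": "move q"}],
    "r": [{"C": "move r"}],
}
assignment, conquered = solve(domains)
```

In this hypothetical instance p and q share unit A and must be solved together, whereas r is independent, so the search space is the sum rather than the product of the two groups' sizes.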

5.2 Constraint optimization games

Above we have shown how our player solves the complex game D i p 𝜖 by decomposing it into smaller games (the battles), determining the best moves in every such battle, and then using Constraint Optimization techniques to find the best combination of these best moves.

However, the way we presented this was completely ad-hoc for the game of Diplomacy. In this section we will therefore generalize these ideas, by only looking at the abstract properties of the game that allowed us to follow this ‘divide-and-conquer’ approach. We will define a new class of games that we call Constraint Optimization Games (COG). A COG is a game that can be decomposed as a number of smaller games that are played simultaneously but that are not independent. We then show how the above described algorithm for Diplomacy can be generalized to any other Constraint Optimization Game.

Let MG be a set of m one-shot games: MG={G 1, G 2, ..., G m }, each with the same n players. We call these games the micro-games. The set of actions for player α i in micro-game G j is denoted as \(\mathcal {M}_{i}^{G_{j}}\).

Definition 8 (A Constraint Optimization Game)

\(G_{MG}\) is a game with n players, such that the set of actions \(\mathcal {M}_{i}^{G_{MG}}\) for player α i in \(G_{MG}\) is a subset of the Cartesian product of the action sets of the micro-games: \(\mathcal {M}_{i}^{G_{MG}} \subseteq \mathcal {M}_{i}^{G_{1}} \times \mathcal {M}_{i}^{G_{2}} \times \dots \times \mathcal {M}_{i}^{G_{m}} \). The utility function for a player α i is defined as the sum of its utility functions in each of the micro-games:

\[f_{i}^{G_{MG}}(\mu ) = \sum _{j=1}^{m} f_{i}^{G_{j}}(\mu _{j})\]

Note that an action profile μ of \(G_{MG}\) can then be viewed as a matrix in which each row μ j represents an action profile from the micro-game G j and each entry μ j,i represents the action taken by player α i in micro-game G j .

We see that the game \(G_{MG}\) consists of a number of smaller one-shot games that cannot be played independently from each other, because the set of actions \(\mathcal {M}_{i}^{G_{MG}}\) of α i is a subset of the product of the sets \(\mathcal {M}_{i}^{G_{j}}\). Each player α i can pick for any micro-game G j any action from \(\mathcal {M}_{i}^{G_{j}}\), but not all combinations of such actions are allowed. In other words: there are constraints between the actions of the several micro-games that need to be satisfied. Therefore, the best strategy for the game \(G_{MG}\) is not simply the combination of the best strategies of each individual micro-game. For example, player α i may have an optimal action \(a \in \mathcal {M}_{i}^{G_{1}}\) in micro-game G 1 and an optimal action \(b\in \mathcal {M}_{i}^{G_{2}}\) in micro-game G 2, but the combination of a and b may be illegal, so α i is forced to choose a suboptimal action in at least one of these two micro-games.

Example

In the game \(Dip_{\epsilon }\) the battle for a single Supply Center p j can be seen as a micro-game G j . The utility for a player α i in micro-game G j is 1 if α i succeeds in conquering p j , and 0 otherwise. Its utility for the entire COG is then the total number of Supply Centers it conquers. For each Supply Center p a player α i must choose which orders to submit for its units located around p. However, some of those units may also be adjacent to another Supply Center q. Therefore, if α i decides to order a unit u to move to p, it can no longer use that unit to move to q. So we see that indeed there are constraints between the micro-games.
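The two defining ingredients of a COG, a constrained subset of the Cartesian product and an additive utility, can be written down in a few lines. The sketch below uses a hypothetical single unit u adjacent to both p and q; the function names and the string encoding of actions are assumptions.

```python
from itertools import product


def cog_actions(micro_actions, allowed):
    """Joint actions: tuples from the Cartesian product that satisfy `allowed`."""
    return [combo for combo in product(*micro_actions) if allowed(combo)]


def cog_utility(micro_utilities, profile_rows):
    """Sum of micro-game utilities; profile_rows[j] is the profile of micro-game j."""
    return sum(f(row) for f, row in zip(micro_utilities, profile_rows))


# One unit u that can move to p or to q, but not to both.
micro_actions = [["u->p", "none"], ["u->q", "none"]]
one_order_per_unit = lambda combo: combo != ("u->p", "u->q")
joint = cog_actions(micro_actions, one_order_per_unit)

# Utility 1 per conquered Supply Center, here assuming an undefended map.
micro_utilities = [
    lambda row: 1 if row[0] == "u->p" else 0,
    lambda row: 1 if row[0] == "u->q" else 0,
]
total = cog_utility(micro_utilities, [("u->p",), ("none",)])
```

Of the four combinations in the Cartesian product only three are legal, which is exactly the kind of constraint between micro-games described above.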

The concept of a COG combines aspects from Constraint Optimization Problems (COP) with aspects from Game Theory. Just like in a COP an agent α i needs to pick m values for m different variables, such that the combination is consistent and maximizes its utility [30]. However, unlike normal COPs, the utility of an agent does not only depend on the values it chooses, but also on those chosen by its opponents, which have different utility functions to maximize, just as in Game Theory. Another way to look at it, is to see it as a variation of a Distributed Constraint Optimization Problem in which there is not one single utility function to maximize, but rather each agent aims to maximize its own individual utility function.

Let us now present a rough sketch of how one can generalize the algorithm described in Section 5.1 to other COGs. The essence of our algorithm can be described in three steps:

1. Assign a value to every action of α i in every micro-game.
2. Do the same for every pair of actions of α i for every pair of micro-games.
3. Use And/Or search to find the combination of actions that maximizes the sum of its action-values, under the restriction that the chosen set of actions must be consistent.

The idea is that assigning a single value to each action in each micro-game in fact reduces the COG to a standard COP, which can be solved with an And/Or search. One straightforward way to assign a value to an action μ j,i is to find the set of opponent actions μ j,−i that minimize the utility function f i (μ j,i ,μ j,−i ). In other words: the value obtained in the worst-case scenario that the opponents pick those actions that minimize α i ’s utility. If we apply this principle to D i p 𝜖 , then this means that every invincible plan or pair receives a value of 1 and all other plans receive a value of 0, which essentially means that all non-invincible plans are discarded, which is indeed what we did.
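The worst-case valuation described above amounts to a minimum over the opponents' actions. The following sketch applies it to a toy battle for one Supply Center; the strength numbers and the rule "the attacker conquers if strictly stronger" are simplifying assumptions for illustration, not the full Diplomacy adjudication rules.

```python
def worst_case_value(own_action, opponent_actions, utility):
    """Value of an action when the opponents respond as harmfully as possible."""
    return min(utility(own_action, opp) for opp in opponent_actions)


# Assumed strengths: a supported attack counts 2, an unsupported unit counts 1.
strength = {"attack_alone": 1, "attack_with_support": 2, "hold": 1, "leave": 0}
conquers = lambda own, opp: 1 if strength[own] > strength[opp] else 0

values = {
    action: worst_case_value(action, ["hold", "leave"], conquers)
    for action in ["attack_alone", "attack_with_support"]
}
```

The supported attack receives value 1 because no opponent response stops it, which corresponds to an invincible plan, while the unsupported attack receives value 0 and would be discarded.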

Finally, we would like to remark that finding the best actions to take in a COG is a hard combinatorial problem, so if G is a COG, then N(G) satisfies all three properties that we mentioned in the introduction.

5.3 The negotiating component

During the negotiation stage of N ( D i p 𝜖 ), given a coalition \(C \subset Pl\) that includes α 1, the negotiating component explores the agreement space by means of a best-first Branch & Bound tree search in order to find good deals to propose to the coalition partners. At regular time intervals it determines whether it should make a new proposal to its coalition partners and, if yes, which one. Furthermore, whenever the agent receives a proposal from any of the coalition partners it determines whether to accept that proposal or not.

The negotiating tree search algorithm is a previously developed algorithm for general negotiation settings, called NB3, applied to Diplomacy. We only give a brief description here. For more information we refer to [4].

5.3.1 Tree search

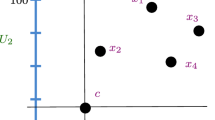

We will now use the notation \(p_{1}, p_{2}, {\dots } p_{34}\) to denote the 34 Supply Centers of Diplomacy. Let C denote the set of coalition partners of α 1 (including α 1 itself), let \(B_{C, p}^{\epsilon }\) denote the set of battle plans with target p∈SC involving only players in C, and let \(B_{C}^{\epsilon }\) denote the set of battle plans for all coalition partners, for any Supply Center:

\[B_{C}^{\epsilon } = \bigcup _{p \in SC} B_{C, p}^{\epsilon }\]

The NB3 algorithm expands a search tree in which each node ν (except the root node) is labeled with a battle plan \(\beta _{\nu } \in B_{C, p}^{\epsilon }\) for some Supply Center p. The search starts with a single tree node (the root). Then, if \(B_{C, p_{1}}^{\epsilon }\) has size k 1, the algorithm will add k 1 child nodes to the root, with each child labeled with a different battle plan \(\beta \in B_{C, p_{1}}^{\epsilon }\). Next, it uses a heuristic function to determine which of these new nodes is the “best” and continues expanding that node ν. If \(B_{C, p_{2}}^{\epsilon }\) has size k 2 then the algorithm creates k 2 children for node ν, each labeled with a different battle plan \(\beta \in B_{C, p_{2}}^{\epsilon }\). Again, the heuristic function chooses the next node to split, and this is repeated until either the deadline for negotiations has finished, or until the tree has been explored exhaustively. Any node for which the battle plan is incompatible with the battle plans of its ancestors is pruned immediately.
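The expansion scheme just described can be sketched as a generic best-first search. This is not the NB3 implementation from [4]: the heuristic is a caller-supplied placeholder, the deadline is replaced by a cap on the number of expansions, and battle plans are again assumed to be mappings from units to orders.

```python
import heapq
from itertools import count


def compatible(plan_a, plan_b):
    """Plans conflict if they give the same unit two different orders."""
    return all(plan_b.get(u, o) == o for u, o in plan_a.items())


def best_first_deals(plans_per_center, heuristic, max_expansions):
    """Return plan(v) for every generated node v, in order of generation.

    plans_per_center[k] lists the battle plans targeting the (k+1)-th
    Supply Center. Nodes with a higher heuristic value are expanded first.
    """
    tie = count()  # tie-breaker so the heap never compares plan lists
    frontier = [(0.0, next(tie), 0, [])]  # (priority, tie, depth, plan(v))
    generated = []
    for _ in range(max_expansions):
        if not frontier:
            break  # tree explored exhaustively
        _, _, depth, path = heapq.heappop(frontier)
        if depth == len(plans_per_center):
            continue  # leaf node: nothing left to split on
        for plan in plans_per_center[depth]:
            # Prune children that are incompatible with their ancestors.
            if all(compatible(plan, earlier) for earlier in path):
                child = path + [plan]
                generated.append(child)
                heapq.heappush(
                    frontier, (-heuristic(child), next(tie), depth + 1, child)
                )
    return generated


plans_per_center = [
    [{"A": "move p1"}, {"B": "move p1"}],
    [{"A": "move p2"}],
]
nodes = best_first_deals(plans_per_center, heuristic=len, max_expansions=10)
```

In this toy run the child that would order unit A to both p1 and p2 is never generated, so only three nodes are produced.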

Each node in this tree represents a deal that may be proposed to the coalition partners. Specifically, if path(ν) denotes the set of nodes that make up the path from the root node to ν, and plan(ν) the set of battle plans corresponding to the labels of the nodes in path(ν):

\[plan(\nu ) = \{\beta _{\nu ^{\prime }} \mid \nu ^{\prime } \in path(\nu )\}\]

then with each such plan(ν) we can associate a deal \(x_{\nu } \in Agr_{Dip_{\epsilon }}\) in which each participating player is committed to submit his or her orders that appear in plan(ν), which means that any action profile μ is allowed, as long as it contains all orders that the players have committed themselves to.

Note that x ν as defined here is indeed a deal in the sense of Definition 2. The battle plans in plan(ν) consist of a number of orders, and if for some player α i there are a number of orders in plan(ν) then its restricted set of actions \(\mathcal {M}_{i}^{\epsilon }[x_{\nu }]\) consists of those actions in \(\mathcal {M}_{i}^{\epsilon }\) that contain all of α i ’s orders in plan(ν).

Note that the set of deals that are considered in this way is much smaller than the full agreement space \(Agr_{Dip_{\epsilon }}\), because we are only looking at deals in which players commit themselves to battle plans, rather than any random set of orders. In this way we have filtered out for example actions containing invalid supports, and action profiles in which coalition partners take contradictory actions (e.g. two coalition partners both trying to attack the same province).

In order to determine which of the deals represented in the tree are good enough to propose to the coalition partners our algorithm calculates for each node ν and each coalition partner α i ∈C a utility value u i (x ν ). This utility value is not the same as the utility function f as defined for D i p 𝜖 , but rather it is a value that indicates how profitable the deal x ν is to player α i . In Section 5.3.2 we will explain how u i (x ν ) is defined.

Furthermore, for each node ν and for each coalition partner α i ∈C the algorithm stores an upper bound ub i (ν) and a lower bound lb i (ν), which are used for pruning and to calculate the search heuristic. The upper bound ub i (ν) is the highest utility \(u_{i}(x_{\nu ^{\prime }})\) agent α i could possibly receive from any plan \(x_{\nu ^{\prime }}\) where node \(\nu ^{\prime }\) is any descendant of ν. This means that if ub i (ν) is lower than α i ’s reservation value, any plan that could appear in the subtree under ν would be unprofitable for α i , so the node ν can be pruned. Similarly, the lower bound lb i (ν) is the lowest possible value of \(u_{i}(x_{\nu ^{\prime }})\) that one could find for any node \(\nu ^{\prime }\) that is a descendant of ν.

If for a certain node ν the utility value u i (x ν ) is higher than the reservation value rv i for every participating agent α i ∈pa(x ν ), it means the deal is in principle profitable for every participating agent, and therefore D-Brane may consider proposing it to the others. In that case, the deal will be stored in a list of potential proposals, which will be used by the negotiation strategy as we will explain in Section 5.3.3.
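The two per-node decisions, pruning on the upper bound and storing a deal as a potential proposal, can be summarized in a short sketch. The function name and the dictionary-based interface are assumptions; how NB3 actually computes the bounds is described in [4] and is not reproduced here.

```python
def classify_node(utility, upper_bound, reservation):
    """Return (prune, store) for one node.

    All three arguments map a participating agent's name to a number.
    """
    # Prune if some agent can never reach its reservation value below this node.
    prune = any(upper_bound[a] < reservation[a] for a in reservation)
    # Store the deal if it is already profitable for every participating agent.
    store = not prune and all(utility[a] > reservation[a] for a in reservation)
    return prune, store


reservation = {"a1": 0.0, "a2": 0.0}
profitable = classify_node({"a1": 0.6, "a2": 0.4}, {"a1": 1.6, "a2": 0.4}, reservation)
hopeless = classify_node({"a1": 0.6, "a2": -0.4}, {"a1": 1.6, "a2": -0.1}, reservation)
```

A node that is neither pruned nor stored simply remains in the tree, since one of its descendants may still turn out to be profitable for everyone.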

5.3.2 Credits

The algorithm tries to find deals that are profitable for each player participating in the deal. For example, α 1 may support α 2 to attack some Supply Center p while in return α 2 will support α 1 to attack some other Supply Center q. This is mutually beneficial if neither of these players is able to conquer its targeted Supply Center without support from the other player. Unfortunately, it turns out that such situations in which two or more players have the opportunity to help each other do not occur very often.

To increase the number of opportunities to make beneficial deals D-Brane applies a ‘credit’ system, which means that when one player α i gives support to another player α j , the supported player α j is considered indebted to α i . This means that α i can expect α j to return the favor and give support to α i at some later phase of the game. Thanks to this system a player may be willing to support another player even if the favor cannot be returned immediately, which strongly increases the number of potential deals.

In order to assess the value of a deal, a player should therefore not only take into account the number of Supply Centers that are conquered thanks to the deal, but also assign some extra value to the deal to represent future supports that may be received as a consequence of the current deal.

Specifically, D-Brane stores a number d i,j which is the credit balance between players α i and α j : the number of supports α i has so far given to α j , minus the number of supports α j has given to α i . If d i,j >0 it means that α j still owes a number of supports to α i .

D-Brane then calculates the value u i (x) of a deal x for player α i as:

where \( E(f_{i}^{Dip_{\epsilon }[x]}) \) is the expected number of conquered Supply Centers in the current turn given the deal x, where \(E(f_{i}^{Dip_{\epsilon }})\) is the expected number of conquered Supply Centers in the current turn if no deal is made, and C r(d i,j ) is given by:

One should understand that while \(f_{i}^{Dip_{\epsilon }[x]}\) is the short-term utility (the number of Supply Centers conquered directly in the current round), the utility u i (x) is a sort of long-term expected utility value, which takes into account the number of supports α i still owes to other players and the number of supports other players still owe to α i .

We will now show that the values 0.4 and 0.6 that appear in (3) are chosen such that D-Brane exhibits behavior that one would indeed expect from a selfish negotiator.

Proposition 2

A rational player α i that evaluates deals according to (2) would only be willing to give support to another player α j if that does not cause α i to lose a Supply Center.

Proof

Losing a Supply Center causes \(E(f_{i}^{Dip_{\epsilon }[x]})\) to decrease by 1, while giving support only increases C r(d i,j ) by either 0.4 or 0.6, so in total u would decrease. □

Proposition 3

A rational player α i that evaluates deals according to (2) would only ask support from another player α j if α i expects that this will yield an extra Supply Center for α i .

Proof

If no extra Supply Center is gained the received support decreases u by either 0.4 or 0.6. □

Proposition 4

If a rational player α i that evaluates deals according to (2) has a positive credit balance w.r.t α j , then α i would prefer to receive support from α j and gain a Supply Center, rather than give more support to α j .

Proof

If α i has a positive credit balance, then the combination of a gained Supply Center and a received support increases u i by 1−0.4=0.6, while giving more support would increase u i by only 0.4. □

Proposition 5

If a rational player α i that evaluates deals according to (2) has a negative credit balance w.r.t α j , then α i would prefer to give support to α j rather than to ask for more support from α j and gain a Supply Center.

Proof

With a negative balance, the extra support would yield 1−0.6=0.4, while giving support would increase u i by 0.6. □

Without Proposition 2 D-Brane would not be selfish because it would be inclined to constantly give support to others, while losing its own Supply Centers. Proposition 3 guarantees that D-Brane does not request any unnecessary supports. Proposition 4 makes sure that D-Brane only gives support because it expects that others will return the favor. And without Proposition 5 D-Brane would never be willing to return any favors, and hence nobody would like to negotiate with D-Brane.
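The four propositions can be checked numerically under an assumed form of the credit function. The sketch below assumes that Cr is piecewise linear, with slope 0.4 for positive balances and 0.6 for negative balances, and that a deal changes u i by the number of gained Supply Centers plus the change in Cr. This form is chosen only because it agrees with the increments quoted in the proofs; it should not be read as the exact definition used by D-Brane.

```python
def credit(balance):
    """ASSUMED form of Cr: slope 0.4 for positive balances, 0.6 for negative."""
    return 0.4 * balance if balance >= 0 else 0.6 * balance


def utility_change(gained_centers, balance, supports_given, supports_received):
    """ASSUMED effect of a deal on u_i with respect to a single partner j."""
    new_balance = balance + supports_given - supports_received
    return gained_centers + credit(new_balance) - credit(balance)


# Proposition 2: giving support while losing a Supply Center is a net loss.
give_and_lose = utility_change(-1, 0, 1, 0)
# Proposition 3: receiving support without gaining a Supply Center is a net loss.
receive_for_nothing = utility_change(0, 2, 0, 1)
# Proposition 4: with a positive balance, receiving and gaining beats giving.
positive_receive = utility_change(1, 2, 0, 1)
positive_give = utility_change(0, 2, 1, 0)
# Proposition 5: with a negative balance, giving beats receiving and gaining.
negative_receive = utility_change(1, -2, 0, 1)
negative_give = utility_change(0, -2, 1, 0)
```

Under these assumptions the six quantities are approximately -0.6, -0.4, 0.6, 0.4, 0.4 and 0.6, which reproduces the comparisons made in the four proofs.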

5.3.3 Negotiation strategy

Every time a new node ν is generated the algorithm determines whether the corresponding deal x ν is rational to all participating agents p a(x ν ). That is, it checks whether for each participating agent the utility u i (x ν ) is larger than its reservation value r v i . If this is the case, then it is stored in a repository of potential deals.

Then, at set intervals during the negotiation stage, the NB3 negotiation algorithm determines whether any of the deals in this repository can be proposed. It does this by applying a time-based strategy: the closer to the deadline, the more it is willing to propose or accept deals with less utility for α 1. Furthermore, the closer to the deadline, the more it will be inclined to propose deals that yield high utility for the other participating agents.
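A time-based strategy of this kind can be illustrated with a linear concession rule. The linear schedule, the function names, and the tuple layout of the repository are all assumptions made for this sketch; the actual NB3 strategy is specified in [4] and may differ.

```python
def aspiration(t, deadline, best_utility, reservation):
    """Lower our own utility demand linearly from best_utility to reservation."""
    progress = min(max(t / deadline, 0.0), 1.0)
    return best_utility - progress * (best_utility - reservation)


def pick_proposal(repository, t, deadline, reservation):
    """repository: list of (own_utility, partner_utility, deal) tuples."""
    if not repository:
        return None
    best = max(own for own, _, _ in repository)
    threshold = aspiration(t, deadline, best, reservation)
    acceptable = [entry for entry in repository if entry[0] >= threshold]
    # Among the deals we can live with, offer the one that is best for the partner.
    return max(acceptable, key=lambda entry: entry[1])[2]


repository = [(2.0, 0.2, "x"), (1.0, 1.5, "y")]
early = pick_proposal(repository, t=0, deadline=10, reservation=0.0)
late = pick_proposal(repository, t=8, deadline=10, reservation=0.0)
```

Early in the negotiation stage only the deal that is best for the proposer passes the threshold, whereas close to the deadline the threshold has dropped far enough that the deal preferred by the partner is offered instead.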

5.3.4 Implicit vs. explicit agreements

When two or more players in Diplomacy are in a coalition, this usually implies two things: it means they will not attack each other and it means that they may support each other when attacking an opponent. We call the first kind of agreement an implicit agreement because it is implied by the fact that the players are allies, so no negotiation is needed to establish such agreements. The second kind we call an explicit agreement and can only be made by negotiating. The deals investigated and proposed by the Negotiating Component are therefore exclusively of the explicit type. The ability of D-Brane to negotiate (i.e. to make explicit agreements) and its ability to obey implicit agreements can both be switched on and off, so it can play in 4 different modes: with negotiations on or off and with implicit agreements on or off.

It is important to make this distinction for the experimental evaluation of D-Brane. On the one hand it is unrealistic to play without implicit agreements, because any rational and trustworthy player would always apply them. On the other hand however, we want to investigate the importance of negotiations. Therefore, if D-Brane defeats its opponents, we want to know whether this is caused by its negotiation algorithm, or by the fact that it has a strong strategic module, or simply because it plays in a coalition and the mere fact that the coalition partners do not attack one another gives them an advantage over the opponents. Testing the agent in all 4 different modes allows us to identify to what extent each of these three elements is responsible for playing well.

Let \(X_{d}\) denote the set of explicit agreements that were confirmed during the negotiation stage of \(N(Dip_{\epsilon })\), and let \(X_{C}\) denote the set of implicit agreements implied by the coalition \(C\) that \(\alpha_{1}\) is in. Then during the action stage of \(N(Dip_{\epsilon })\) the strategic component tries to determine the best possible action of the game:

- \(Dip_{\epsilon }[X_{d} \cup X_{C}]\), if both implicit and explicit agreements are obeyed.

- \(Dip_{\epsilon }[X_{d}]\), if only explicit agreements are obeyed.

- \(Dip_{\epsilon }[X_{C}]\), if only implicit agreements are obeyed.

- \(Dip_{\epsilon }\), if no agreements are obeyed.
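The four modes listed above amount to choosing which agreement sets restrict the game before the strategic component searches for an action. A minimal sketch, assuming agreements are represented as hashable values (plain strings here) and using hypothetical flag names:

```python
from typing import FrozenSet


def obeyed_agreements(explicit: FrozenSet[str],
                      implicit: FrozenSet[str],
                      obey_explicit: bool,
                      obey_implicit: bool) -> FrozenSet[str]:
    """Return the set of agreements that restricts the game in the chosen mode.

    Both flags on: union of both sets. One flag on: only that set.
    Both flags off: the empty set, i.e. the unrestricted game.
    """
    result: FrozenSet[str] = frozenset()
    if obey_explicit:
        result = result | explicit
    if obey_implicit:
        result = result | implicit
    return result
```

An empty result corresponds to the last case of the list, in which the agent plays entirely individually.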

In order to correctly interpret the experimental results we feel it is important to stress that if both implicit and explicit agreements are switched off this essentially means that D-Brane plays entirely individually, and does not consider itself part of any coalition.

6 Experiments

In this section we compare D-Brane with two other Diplomacy playing agents (also known as ‘bots’) recently presented in [8, 13]. In both papers a negotiating agent was tested by letting it play against a standard non-negotiating bot called the DumbBot. We have done similar experiments with our D-Brane agent and compared the results with theirs. Moreover, we have submitted D-Brane to participate in the Computer Diplomacy Challenge. Our agent turned out to be the winner of this competition, which confirms the results of our experiments.

We did experiments with the number of D-Branes varying from 2 to 5, with all other players always being DumbBots. Every such experiment was repeated 4 times, once for each mode of D-Brane. In each of the experiments we performed with negotiations off (i.e. not making any explicit agreements) we played 500 games, which took up to four-and-a-half hours. For experiments with negotiations we set the deadline for negotiations to 5 s per round. Since such experiments took much more time we only played 200 games in each such experiment, which still took up to 17 h. In order to prevent games from continuing forever because they get stuck in a stalemate, we have programmed the agents to automatically declare a draw after 40 rounds (footnote 8). For our experiments we used the Parlance game server (footnote 9). In each new game this server randomly determines which player will play which Great Power.

In all experiments with implicit agreements turned on, the D-Branes were instructed to form a coalition against the DumbBots. D-Brane never breaks any promises and never leaves the coalition, and assumes its coalition partners will not do so either. As explained, this is because trust and coalition formation are beyond the scope of our work. All experiments were performed on a single HP Z1 G2 desktop computer with Intel Xeon E3 4x3.3GHz CPU and 8 GB RAM.

6.1 D-Brane vs. DumbBot

As explained above, we did a number of experiments, with the number of D-Branes in each experiment varying between 2 and 5, and for each of these numbers we performed an experiment with each of the 4 modes of D-Brane, resulting in a total of 16 experiments. For each of these experiments we measured the performance of D-Brane by counting the number of Supply Centers conquered. In total there are 34 Supply Centers and 7 players, so if all players are equally strong we can expect each player to conquer \(\frac {34}{7}\) Supply Centers. Hence, if there are n D-Branes, we can conclude that D-Brane is better than DumbBot if the D-Branes together obtain more than \(n\cdot \frac {34}{7}\) Supply Centers. We see in Table 1 that this was clearly the case in every experiment. We can therefore conclude that D-Brane plays significantly better than DumbBot. For example, in the case of 4 D-Branes and 3 DumbBots, without negotiations and without implicit agreements, on average the D-Branes together conquer almost 30 Supply Centers, leaving only 4 Supply Centers for the DumbBots.
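For reference, the baseline \(n\cdot \frac {34}{7}\) can be evaluated for the numbers of D-Branes used in our experiments. The small sketch below uses exact fractions; the function name is our own choice.

```python
from fractions import Fraction

TOTAL_SUPPLY_CENTERS = 34
NUM_PLAYERS = 7


def baseline(n: int) -> Fraction:
    """Supply Centers that n players would jointly hold if all 7 players were equally strong."""
    return Fraction(n * TOTAL_SUPPLY_CENTERS, NUM_PLAYERS)


for n in range(2, 6):
    print(n, baseline(n), round(float(baseline(n)), 2))
# 2 68/7 9.71
# 3 102/7 14.57
# 4 136/7 19.43
# 5 170/7 24.29
```

For 4 D-Branes the baseline is therefore about 19.43 Supply Centers, well below the almost 30 Supply Centers reported in the example above.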

As expected, we see that playing with implicit agreements improves the outcome for the D-Branes. However, we also see that playing with negotiations only improves the result if it is in combination with implicit agreements. Surprisingly, it seems that if the implicit agreements are turned off, the negotiation algorithm even has a detrimental effect, although this effect is too small compared to the standard errors to call it significant.

It is at this point unknown to us why exactly negotiations seem to be ineffective without implicit agreements. One hypothesis is that this effect might be caused by the fact that without implicit agreements but with negotiations the D-Branes will mainly spend their efforts on closing deals to protect themselves from one another, and can therefore spend less effort on eliminating DumbBots. Without implicit agreements and without negotiations, it would be easier for one D-Brane to quickly eat up another D-Brane, which would lead to one very strong D-Brane, which could then focus on eliminating the DumbBots. Of course, this is bad for the eliminated D-Branes, but for the group of D-Branes as a whole this could lead to a higher total number of conquered Supply Centers. With implicit agreements and with negotiations, the D-Branes do not attack each other anyway, so all their efforts can be focused entirely on eliminating the DumbBots. More research is required to determine whether this hypothesis holds or not.

6.2 D-Brane compared with DipBlue

In [13] a negotiating Diplomacy agent called DipBlue was introduced. In their experiments they let 2 instances of DipBlue play against 5 DumbBots. As a measure of success they used the average rank of their two agents over 75 games. That is: after each game the best player gets rank 1, the second best player gets rank 2, etcetera, and the worst player gets rank 7. A player is considered better than another player if it finishes with more Supply Centers, or if it is eliminated later. If all players in a game are equally strong then you may expect all players to receive an average rank of 4.0. With 2 instances of the player to test, the best possible average rank is 1.5 and the worst is 6.5. The best average rank that DipBlue achieves in their experiments (footnote 10) is 3.57.
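The reference values 4.0, 1.5 and 6.5 follow directly from averaging ranks. The short sketch below, with function names of our own choosing, reproduces them for 7 players and 2 instances of the tested agent.

```python
def expected_rank(players: int = 7) -> float:
    """Average of the ranks 1..players: the expected rank among equally strong players."""
    return sum(range(1, players + 1)) / players


def best_average_rank(k: int) -> float:
    """Average rank if the k tested agents take ranks 1..k."""
    return sum(range(1, k + 1)) / k


def worst_average_rank(k: int, players: int = 7) -> float:
    """Average rank if the k tested agents take the last k ranks."""
    return sum(range(players - k + 1, players + 1)) / k


print(expected_rank(), best_average_rank(2), worst_average_rank(2))
# 4.0 1.5 6.5
```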

To compare the performance of D-Brane with DipBlue we have also measured the average rank of D-Brane in the experiments with 2 instances of D-Brane against 5 instances of DumbBot. The results are displayed in Table 2. It displays the average rank of the D-Branes in the four different modes, with their respective standard errors. We note that in all cases the average rank is around 2.5, even when negotiations were switched off. This means not only that our agent is better than the DumbBot, but also that even without negotiating D-Brane plays significantly better than DipBlue with negotiations.

We should remark here, that comparing the negotiation skills of D-Brane with the negotiation skills of DipBlue is not entirely fair, because D-Brane always obeys all confirmed agreements, and assumes its coalition partners obey all confirmed agreements, whereas DipBlue takes into account that the other players may not obey their commitments. Thus, when negotiating, D-Brane has an unfair advantage with respect to DipBlue. However, this advantage is not present when both implicit and explicit agreements are turned off, since in that case D-Brane essentially plays with no coalition partners at all. So at least for that case it is fair to say that D-Brane outplays DipBlue. The same also holds for our statement that D-Brane outplays the DumbBot.

6.3 D-Brane compared with Fabregues’ agent

In [8] a nameless agent was presented by Fabregues, which was also compared with the DumbBot. Fabregues did 8 experiments, in which the number of instances of her bot varied between 0 and 7, and the remaining players were DumbBots. In each experiment she played 100 games. As a measure of success she counted the number of victories of her agent. To compare our results with hers, we have also counted the number of victories of D-Brane in our experiments and displayed them in Table 3.

It is important to note here that Fabregues’ experiments were performed on a supercomputer, with deadlines of 5 minutes per round, while we did our experiments on a desktop computer, with deadlines of only 5 s per round. Furthermore, the results displayed in the table are for the cases in which the D-Branes did not negotiate at all and played without implicit agreements.

Here, we count a ‘victory’ if a D-Brane ends with the highest number of Supply Centers. If 2 agents both end with the highest number of Supply Centers we count ‘half a victory’ for each of them. We should note that we have rounded off the percentages displayed for Fabregues’ agent to multiples of 5. This is because in her thesis Fabregues does not provide the exact percentages, but only displays a graph from which it is hard to read off the exact values.
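The victory count described above can be sketched as follows. The text only specifies the case of 2 tied agents, so splitting a victory equally among 3 or more tied agents is an assumption of this sketch, as are the function name and the player labels.

```python
from typing import Dict


def victory_shares(final_centers: Dict[str, int]) -> Dict[str, float]:
    """Give each player its share of the victory for one finished game.

    A sole leader gets 1.0 and two tied leaders get 0.5 each. For more than
    two tied leaders the victory is split equally (an assumption).
    """
    top = max(final_centers.values())
    leaders = [player for player, centers in final_centers.items() if centers == top]
    share = 1.0 / len(leaders)
    return {player: (share if player in leaders else 0.0) for player in final_centers}


print(victory_shares({"D-Brane-1": 12, "D-Brane-2": 12, "DumbBot-1": 10}))
# {'D-Brane-1': 0.5, 'D-Brane-2': 0.5, 'DumbBot-1': 0.0}
```

Summing the shares of all D-Brane instances over all games of an experiment then gives the number of victories.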

We see that in all cases D-Brane scored significantly better than Fabregues’ negotiating agent, even though D-Brane was not negotiating, our experiments ran on a single desktop computer rather than on a supercomputer, and our deadlines were much shorter.

6.4 Varying negotiation deadlines

In order to see how the length of the deadlines affects the performance of our negotiation algorithm we have done some more experiments, with varying deadlines. In these cases we had 3 D-Branes playing against 4 DumbBots, with negotiations and with implicit agreements. The results are displayed in Table 4. Each of the results is an average over 50 games, except in the case of 5 s, which was averaged over 200 games. We conclude from this experiment that increasing the negotiation time does not improve the results any further. If D-Brane is capable of finding a potential deal, it will find it within the first 5 s.

6.5 Computer Diplomacy Challenge

Finally, we have also submitted D-Brane to the first Computer Diplomacy Challenge which was part of the 2015 ICGA Computer Olympiad. For this competition we only submitted the non-negotiating version (no implicit agreements and no explicit agreements) of D-Brane, because our negotiation language is not compatible with the DipGame negotiation framework, which was used for the competition.

The competition had three participants: D-Brane, DipBlue, and another non-negotiating player called SuperBot. The agents played a number of games in two different competition set-ups. The first set-up consisted of one instance of each of these participating bots and four instances of a RandomBot (a player that only takes entirely random actions). The second set-up consisted of two instances of each participating bot and one RandomBot.

The results were measured according to two criteria: the number of victories and the number of Supply Centers conquered. The results are displayed in Table 5. We see that D-Brane won the competition by a large difference, both measured in terms of victories and in terms of conquered Supply Centers. This again confirms the previous results that even without negotiations D-Brane is able to outplay negotiating players.

7 Conclusions and future work

Our experiments make clear that D-Brane plays better than DumbBot, DipBlue and Fabregues’ agent. We also see however that our negotiation algorithm only has a relatively small positive effect, and only when applied in combination with implicit agreements. Of course, one could argue that the NB3 negotiation algorithm itself is not good enough, but we know from experiments presented in [4] that in at least one other domain NB3 does produce good results.

Apparently, only negotiating joint battle plans for the current round is not enough to really benefit strongly from negotiations. Indeed, we know from personal experience playing Diplomacy that a good player not only negotiates battle plans for the current round, but also looks farther ahead and negotiates future actions. This again confirms our claim that the field of Automated Negotiations should give more attention to the modeling of complex domains, rather than only the development of bargaining strategies.

In [8, 13] negotiations had a stronger positive impact on the respective agents. We think this is because both their agents were implemented by extending the DumbBot with negotiation capabilities, while the DumbBot is not a very strong player. It is likely that negotiations have a much bigger impact on bad players, because good players have less room for improvement.

Furthermore, it is currently still unknown to us why our negotiation algorithm only works well in combination with implicit agreements. We will need to investigate this further in the future.

The most striking result of our experiments, however, is that even when D-Brane does not negotiate it still achieves much better results than DipBlue and Fabregues’ agent, which do negotiate. From this we draw the very important conclusion that in some cases successful negotiation may depend more on the way the underlying domain is tackled than on the applied bargaining strategy. We therefore argue that future research in the field of Automated Negotiations should put more emphasis on domains where calculating the utility values of potential deals is a complex task. In realistic negotiation settings one simply cannot assume that an explicit representation of the utility functions is given and easy to calculate. Instead, negotiation algorithms should apply more sophisticated forms of reasoning to determine which deals are profitable.