Abstract

Naïve Bayes learners are widely used, efficient, and effective supervised learning methods for labeled datasets in noisy environments. It has been shown that naïve Bayes learners produce reasonable performance compared with other machine learning algorithms. However, the conditional independence assumption of naïve Bayes learning imposes restrictions on the handling of real-world data. To relax the independence assumption, we propose a smooth kernel to augment weights for the likelihood estimation. We then select an attribute weighting method that uses the mutual information metric to cooperate with the proposed framework. A series of experiments are conducted on 17 UCI benchmark datasets to compare the accuracy of the proposed learner against that of other methods that employ a relaxed conditional independence assumption. The results demonstrate the effectiveness and efficiency of our proposed learning algorithm. The overall results also indicate the superiority of attribute-weighting methods over those that attempt to determine the structure of the network.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Naïve Bayes classification is a supervised learning method based on Bayes rule of probability theory. The classification uses labeled training examples, and is driven by the strong assumption that all attributes in the training examples are independent of one another, given the class labels. This is known as the naïve Bayes assumption or naïve Bayes conditional independence assumption. Naïve Bayes classifiers exhibit high performance and rapid classification speed, and their effectiveness has been demonstrated using huge training instances with multiple attributes. This strong performance is mainly because of the independence assumption [11].

In practice, classification performance is affected by the attribute independence assumption, which is often violated in real-world data. However, the advantages of efficiency and simplicity, both stemming from the attribute independence assumption, have led many researchers to propose effective methods to further improve the performance of naïve Bayes classifiers by weakening the attribute independence without neglecting its advantages. We categorize and briefly review some typical methods of relaxing the naïve Bayes assumption in Section 3. However, attribute weighting methods have received relatively little attention among techniques to improve naïve Bayes classification, particularly when attribute weighting is combined with a kernel method in a reasonable manner.

Although [4] proposed an attribute weighting method with the kernel, their weighting scheme generates a series of parameters from least-squares cross-validation, which is less meaningful in terms of interpretation than our proposed method. In contrast, we propose an attribute weighting framework with a kernel method, which enables the weights embedded in the kernel to have relatively interpretable meaning. Thus, we can flexibly choose different metrics and methods to measure the weights based on our attribute weighting framework.

The contributions of this paper are threefold:

-

We have briefly conducted a survey on ways to improve naïve Bayes classification, focusing on naïve Bayes weighting methods.

-

We propose a novel attribute weighting framework called Attribute Weighting with Smooth Kernel Density Estimation (AW-SKDE). The AW-SKDE framework employs a smooth kernel whereby the weights dominate the probabilistic estimation of likelihood. This enables kernel methods to be combined with weighting methods. After setting up the kernel, we generate a set of weights directly via various methods that cooperate with the kernel.

-

Under the AW-SKDE framework, we propose a learner called AW-SKDE MI. This uses the mutual information criterion to measure the dependency between an attribute and its class label.

Our experimental results show that the mutual information criterion based on the AW-SKDE framework exhibits superior performance over standard naïve Bayes classifiers, and is comparable to other approaches that have included the relaxation of the conditional independence assumption.

The remainder of this paper is organized as follows: we first conduct a brief survey on methods to improve naïve Bayes classification in Section 2. In Section 3, we introduce the background to our study. Section 4 describes the proposed attribute weighting framework based on kernel density estimation. We then propose a method that uses the mutual information criterion for attribute weighting based on our proposed framework. In Section 5, we describe a series of experiments and discuss the results in detail. Finally, we draw conclusions from our study and describe avenues for future research in Section 6.

2 Related work

In recent years, a number of methods that weaken the attribute independence assumption of naïve Bayes learning have been proposed. [7] conducted a survey on improved naïve Bayes methods. Such methods can be divided into five main categories: data expansion, structure extension, attribute weighting, feature selection, and local learning. We now briefly review these categories.

For data expansion, [9] presented an algorithm called the propositionalized attribute taxonomy learner (PAT-learner). The PAT-learner first disassembles the training dataset into small pieces with attribute values, then rebuilds a new dataset called the PAT-Table using the divergence between the distribution of class labels associated with the corresponding attributes and the disassembled dataset. [8] also proposed a Bayes learner based on the PAT-learner, called propositionalized attribute taxonomy guided naïve Bayes learner (PAT-NBL). They used the propositionalized dataset and PAT-Table generated by the PAT-learner to build naïve Bayes classifiers.

[16] focused on the discretization of attributes to improve naïve Bayes classification. Wong proposed a hybrid method for continuous attributes, and mentioned that the discretization of continuous attributes in a dataset using different methods can improve the performance of naïve Bayes learning. Additionally, [16] provided a nonparametric measure to evaluate the level of dependence between a continuous attribute and the class.

In terms of structure extension, [15] proposed a system of aggregating one-dependence estimators (AODE). Under AODE, the conditional probability of the test instances given the class is tuned by one attribute value that occurs in the test instances. After the training stage, AODE outputs an average one-dependence estimator. AODE is considered a lazy method of extending the structure of a Bayesian network. [7] proposed a hidden naïve Bayes (HNB) learner, which is also a type of structure extension method.

Some approaches have attempted to discern the network structure. [5] proposed a Bayesian scoring metric and a heuristic search algorithm named K2. K2 learns the network structure using a greedy search by maximizing the tradeoff metric between network complexity and accuracy over the training data. [6] developed Tree Augmented Naïve Bayes (TAN) learning, in which each attribute has a single class variable and at most one other attribute as parents. With this approach, TAN augments the maximum weight spanning tree of the naïve Bayes learner.

There are two main approaches for determining the attribute weights. The first method constructs a function with the attribute weight parameters, and allows this function to fit itself to the training data by estimating the weights. [18] proposed a weighted naïve Bayes algorithm, called weighting to alleviate the naïve Bayes independence assumption (WANBIA). Based on the WANBIA framework, the authors described two methods to obtain the attribute weights: WANBIA CLL, which maximizes the conditional log-likelihood function, and WANBIA MSE, which minimizes the mean squared error function.

[4] also reported an algorithm to minimize the mean squared error function in order to obtain the attribute weights. In another paper, [3] developed a method called subspace weighting naïve Bayes (SWNB), which can deal with high-dimensional data. Using the local feature-weighting technique, SWNB has the ability to describe different contributions of attributes in the training dataset, and outputs an optimal set of attribute weights fitting a Logit normal a priori distribution.

There are many other techniques for attribute weighting. For example, weights can be directly obtained by measuring the relationship among the attributes or the relationship between the attributes and class labels by some given metric, or measured by the Gain Ratio method [14]. [10] calculated the attribute weights via the Kullback–Leibler divergence between the attributes and class labels. [17] proposed the decision tree-based attribute weighted AODE (DTWAODE) method. DTWAODE generates a set of attribute weights directly, and the weights decrease according to the attribute depth in the decision tree. [13] developed the confidence weight for naïve Bayes method, whereby the confidence weight is derived from the probabilities of the majority class in the training dataset.

3 Background

In this section, we explain the concepts behind the machine learning methodologies used in this paper, including the naïve Bayes classifier, naïve Bayes attribute weighting, and kernel density estimation for naïve Bayes categorical attributes. The symbols used in this paper are summarized in Table 1.

3.1 Naïve Bayes classifier

In a supervised learning scenario, consider a training dataset \(\mathcal {D}=\left \{ \mathrm {x}^{(1)},\dots ,\mathrm {x}^{(n)} \right \}\) composed of n instances, where each instance \(\mathrm {x}=\left <x_{1},\dots ,x_{m}\right >\in \mathcal {D}\) (m-dimensional vector) is labeled with some class label c ∈ C. For the posterior probability of c given x, we have

However, in practice, the likelihood p(x|c) cannot be directly estimated from \(\mathcal {D}\) because of insufficient data. Naïve Bayes learning uses the attribute independence assumption to alleviate this problem. From this assumption, p(x|c) is given as follows:

In the training phase, only p(x i |c) and p(c) need to be estimated for each class c ∈ C and each attribute value x i ∈ A i . The estimation method uses the frequency of x i given c and the frequency of c for p(x i |c) and p(c) respectively.

In the classification phase, if there is a test instance t = <t 1,…,t m >, where t m is an attribute value of the attribute m in the test instance, the naïve Bayes classifier will output a class label prediction of t based on the frequency estimations of p(x i |c) and p(c) generated in the training phase. The naïve Bayes classifier is then characterized as follows:

As mentioned above, the naïve Bayes assumption conflicts with most real-world applications (note that it is rare that attributes in the same dataset have no relationship between one another). Therefore, many researchers have attempted to effectively relax the naïve Bayes assumption, as reviewed in Section 2.

In this paper, we focus on attribute weighting methods combined with the kernel density estimation technique applied to naïve Bayes learners in order to relax the conditional independence assumption.

3.2 Naïve Bayes attribute weighting

Generally, the naïve Bayes attribute weighting scheme can be formulated in several ways. First, the weight of each attribute is defined as follows:

If the weight depends on the attribute and class, the corresponding formula is as follows:

The following formula is used when the weight depends on the attribute value:

When ∀w i = w, (4) becomes:

It is worth mentioning that (7) is considered to be a special case of the naïve Bayes classifier in which each attribute A i has the same weight, w i = w = 1∀i. In other words, this naïve Bayes classifier ignores the importance of the attributes. From an information-theoretic perspective, naïve Bayes classifiers abandon the possibility of obtaining more information from \(\mathcal {D}\) to reduce the entropy of each class. This is one of the reasons why attribute weighting methods provide more accurate classification results than naïve Bayes classifiers.

In our approach, we use (4), which assigns w i according to the attribute A i . However, instead of using w i as an exponential parameter, we incorporate w i into \(\hat {p}(x_{i}|c)\) so that it works in a more generalized form. In our method, the weights are applied in the kernel, as shown in (13). This is described in Section 4.1.

From an information-theoretic perspective, attribute weighting attempts to determine which attributes provide more information for classification than other attributes. If an attribute A i in dataset \(\mathcal {D}\) provides more information to reduce the entropy of class label C than other attributes, then A i will be assigned a higher weight.

3.3 Kernel density estimation for naïve Bayes categorical attributes

In the naïve Bayes learner discussed in Section 3.1, the likelihood \(p(a_{i}^{(j)}|c)\) is often estimated as \(\bar {f}_{c}(a_{i}^{(j)})\), the frequency of \(a_{i}^{(j)}\) given c. Note that \(a_{i}^{(j)}\) is the value of attribute i in the j th instance of dataset \(\mathcal {D}\). From a statistical perspective, a non-smooth estimator has the least sample bias, but has a large estimation variance [4, 12]. [1] proposed a kernel function, and [4] presented a variant of a smooth kernel function with an alternating frequency. The kernel function defined in [4] is as follows:

Given a test instance t = <t 1,…,t m >, where t m is the attribute value of attribute m in the test instance:

Note that \(\kappa \left (t_{i},a_{i}^{(j)},\lambda _{ci}\right )\) is a kernel function for A i given c, which may become an indicator if λ c i = 0. λ c i ( = w c i ⋅λ c ) is the bandwidth such that \(\lambda _{c} = \frac {1}{\sqrt {n_{c}}}\), λ c i ∈ [0, 1], and n c is the number of instances in \(\mathcal {D}\) given c.

In [4], (8) was used to estimate p(t i |c) as follows:

where p(t i |c, λ c i ) is used instead of p(t i |c). (Note that p(c) is still estimated from the frequency.) They minimized a cost function to estimate a series w c i for each A i in class c. The cost function is defined as follows:

Hence, the classifier can be formulated as follows:

4 AW-SKDE framework and AW-SKDE MI learner

In this section, we describe the proposed attribute weighting framework for categorical attributes, which we call Attribute Weighting with Smooth Kernel Density Estimations. Based on the AW-SKDE framework, we propose a learner named AW-SKDE MI, in which mutual information is used to determine the attribute weights.

4.1 AW-SKDE framework

In (8), we made the assumption that, if a certain attribute A i has more importance for classification given the class label (in other words, A i provides more information to reduce the indeterminacy of class c), then the value of \(p(a_{i}^{(j)}|c)\) should be closer to \(\bar {f}_{c}(a_{i}^{(j)})\); otherwise, if A i is less meaningful for classification, then \(p(a_{i}^{(j)}|c)\) should be closer to \(\frac {1}{|A_{i}|}\). We let the bandwidth λ c i = (1−w i )2×λ c , where \(w_{i}\in [0, 1], \lambda _{c}=\frac {1}{\sqrt {n_{c}}} \), and n c is the number of instances labeled C = c. In the proposed method, (8) is modified as follows:

The estimate p(t i |c, w i ) of probability p(t i |c) is described as follows:

Hence, the AW-SKDE framework can be defined as:

The AW-SKDE framework incorporates a smooth kernel to allow the probabilistic estimation of likelihood to be dominated by the weights. This enables the natural combination of kernel methods and weighting methods. After setting up the kernel, we can generate a set of weights that are estimated by various methods and cooperate with the kernel.

4.2 AW-SKDE MI learner

The AW-SKDE MI learner generates a set of attribute weights w i ∈ [0, 1] by calculating the mutual information between A i and C. If one attribute shares more mutual information with the class label, that attribute will provide more classification ability than other attributes, and should therefore be assigned a higher weight.

The average weight \(w_{i\_avg}\) of each attribute A i is defined as follows:

where:

We also incorporate the split information used in C4.5 [14] into our weighting scheme with \(w_{i\_split}\) to avoid choosing attributes with lots of values. The split information for each A i is defined as follows:

where \(a_{i}^{(j)}\) is the value of attribute A i in instance j th (as described in Table 1). Now, the weight of A i is defined as follows:

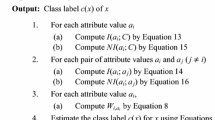

We supply AW-SKDE MI with a training dataset \(\mathcal {D}\). In the training stage, we generate \(w_{i\_avg}\), \(A_{i\_split}\), and w i for each A i . In the classification phase, given a test instance t, the AW-SKDE MI classifier predicts the class label. The learning algorithm of AW-SKDE MI is illustrated in Fig. 1.

During the training phase, AW-SKDE MI only needs to construct conditional probability tables , which contain the joint probabilities of attributes and a class label. In terms of time complexity, the calculation of I(A i ;C), \(w_{i\_avg}\), \(A_{i\_split}\), and w i takes O(m n k), O(m 2), O(m v), and O(m 2) time, respectively. Therefore, the total time complexity of the training phase is O(m n k + m 2 + m v). In the classification phase, the algorithm’s time complexity is O(k m). The time complexity of AW-SKDE MI and naïve Bayes classification is summarized in Table 2.

We now describe a framework named Attribute Weighting with Light Smooth Kernel Density Estimation (AW-LSKDE), which does not consider the bandwidth. AW-LSKDE can be regarded as a simplified version of AW-SKDE. According to (8), we directly set λ c i = 1−w i , where w i ∈ [0, 1]. Hence, the kernel \(\kappa \left (t_{i},a_{i}^{(j)},\lambda _{ci}\right )\) becomes \(\kappa \left (t_{i},a_{i}^{(j)},w_{i}\right )\), which is defined as follows:

The estimate p(t i |c, w i ) is then:

We can also construct an attribute weighting naïve Bayes learner with the mutual information metric based on this AW-LSKDE framework. This is referred to as AW-LSKDE MI. The weights of attributes A i are obtained in the same manner as for the AW-SKDE MI learner. Unfortunately, the AW-LSKDE framework does not produce encouraging results. The experimental results for the AW-LSKDE MI learner are presented in Table 4.

5 Experimental results

To compare AW-SKDE MI, AW-LSKDE MI, and naïve Bayes learning in terms of classification accuracy, we conducted experiments on UCI Machine Learning Repository Benchmark Datasets [2]. The UCI benchmark datasets used in the experiments are listed in Table 3. Note that we have discretized the numerical attribute values for each dataset.

In the implementation of the proposed algorithm, all probabilities (including \(\hat {p}(C=c)\), \(\hat {p}(A_{i}=a_{i},C=c)\)) were estimated via the following Laplacian smoothing:

where n is the number of training examples for which the class value is known, and n i is the number of training examples for which both the attribute i and the class are known. The function c o u n t(∙) is the count value of ∙. Dividing \(\hat {p}(A_{i}=a_{i},C=c)\) by \(\hat {p}(C=c)\) gives the conditional probability \(\hat {p}(A_{i}=a_{i}|C=c)\).

To compare the performance of the algorithms, we used an adapted t-test with 10-fold cross-validation. Using the same training datasets and test datasets, we conducted experiments on the proposed algorithms, standard naïve Bayes, PAT-NBL [8], AODE [15], HNB [7], K2 [5], and TAN [6]. The algorithms performance was evaluated in terms of the classification accuracy.

Table 4 compares the accuracy of standard naïve Bayes, AW-SKDE MI, AW-LSKDE MI, and the previously developed algorithms that use a relaxed conditional independence assumption. Mutual information (MI) was used for AW-SKDE and AW-LSKDE. The conditional minimum description length (CMDL), conditional Akaike information criterion (CAIC), and conditional log-likelihood (CLL) were used in PAT-NBL, and the Bayesian information criteria (BIC) was used in K2 and TAN. Some results are missing for the HNB algorithm, because HNB cannot handle datasets with missing values. Instead of using traditional methods to fill the missing values or treating each missing value as a new attribute value, we have simply omitted HNB from the experiments using datasets with missing values for fair comparison.

It can be seen that the AW-SKDE MI learner produced four better results, six comparative results, and seven worse results than the naïve Bayes classifier. However, the AW-LSKDE MI learner only outperformed the naïve Bayes learner on one dataset. Note that the accuracies were estimated using 10-fold cross-validation with a 95% confidence interval. The win/tie/lose results are summarized in Table 5. Note that the overall win/tie/lose record between naïve Bayes and our AW-SKDE MI was 8/5/4. Although the naïve Bayes classifier achieved more wins than AW-SKDE MI, the overall average accuracy of AW-SKDE MI (84.81 ±3.22) was higher than that of the naïve Bayes method (84.78 ±3.23).

These experimental results prove that our new attribute weighting model AW-SKDE MI achieves comparable and sometimes better performance than the classical naïve Bayes method. AW-SKDE MI exhibited better performance than PAT-NBL, with a win/tie/lose record of 11/0/6.

Unfortunately, AW-SKDE MI does not outperform AODE and HNB. We have examined the results, and found that the training error of AW-SKDE MI is usually higher than that of AODE and HNB. This indicates that AW-SKDE MI suffers from over-fitting. Overcoming this problem will be considered in future research.

Compared with K2 and TAN, AW-SKDE MI exhibits comparable and sometimes better performance, with win/tie/lose records of 6/2/9 and 8/0/9, respectively.

The AW-LSKDE MI learner performed poorly because of its ignorance of bandwidth parameters in the kernel methods, which results in a relatively large bias.

In the experiments we performed, it is interesting to note that attribute weighting methods (AW-SKDE, AODE, and HNB) were generally superior to network structure elicitation methods (K2 and TAN). This indicates that stressing significant attributes may be more important than eliciting the dependence relationship between attributes.

6 Conclusions and future work

In this paper, a novel attribute weighting framework called Attribute Weighting with Smooth Kernel Density Estimations has been proposed. The AW-SKDE framework enables the estimation of likelihood to be dominated by attribute weights. Based on the AW-SKDE framework, mutual information was exploited to give the AW-SKDE MI classifier. We conducted experiments on seventeen UCI benchmark datasets, and compared the accuracy of the standard naïve Bayes learner, AW-SKDE MI, AW-LSKDE MI, PAT-NBL, AODE, HNB, K2, and TAN. The experimental results demonstrated that our new learner, AW-SKDE MI, is as efficient and effective as naïve Bayes, and has comparable performance to K2 and TAN. However, the relatively large bias in the AW-LSKDE MI algorithm resulted in poor performance.

Even though AW-SKDE MI produced comparable results, as shown in Table 4, it did not completely outperform naïve Bayes. In future work, we plan to improve the AW-SKDE framework and investigate more effective attribute weighting methods to reduce over-fitting. We will also investigate attribute weighting methods other than the weight measurement method with mutual information between attributes and class labels.

References

Aitchison J, Aitken CGG (1976) Multivariate binary discrimination by the kernel method. Biometrika 63(3):413–420

Bache K, Lichman M (2013) UCI Machine Learning Repository. http://archive.ics.uci.edu/ml

Chen L, Wang S (2012a) Automated feature weighting in naïve Bayes for high-dimensional data classification. In: Proceedings of the 21st acm international conference on information and knowledge management. Cikm ’12. ISBN 978-1-4503-1156-4. ACM, New York, NY, USA, pp 1243–1252

Chen L, Wang S (2012b) Semi-naïve Bayesian classification by weighted kernel density estimation. In: Proceedings of the 8th international conference on advanced data mining and applications (adma 2012). Springer, Nanjing, China, pp 260–270

Cooper G, Herskovits E (1992) A bayesian method for the induction of probabilistic networks from data. Mach Learn 9(4):309–347

Friedman N, Geiger D, Goldszmidt M (1997) Bayesian network classifiers. Mach Learn 29(2-3):131–163

Jiang L, Zhang H, Cai Z (2009) A novel Bayes model: Hidden naive Bayes. IEEE Trans Knowl Data Eng 21(10):1361– 1371

Kang D-K, Kim M-J (2011) Propositionalized attribute taxonomies from data for data-driven construction of concise classifiers. Expert Systems with Applications 38(10):12739– 12746

Kang D-K, Sohn K (2009) Learning decision trees with taxonomy of propositionalized attributes. Pattern Recogn 42(1):84–92

Lee C-H, Gutierrez F, Dou D (2011) Calculating feature weights in naïve Bayes with Kullback-Leibler measure. In: Proceedings of the 2011 ieee 11th international conference on data mining. Icdm ’11. ISBN 978-0-7695-4408-3. IEEE Computer Society, Washington, pp 1146–1151

Lewis D D (1998) Naïve (Bayes) at forty: The independence assumption in information retrieval. In: Proceedings of the 10th european conference on machine learning. Ecml ’98. ISBN 3-540-64417-2. Springer, London, pp 4–15

Li Q, Racine JS (2006) Nonparametric econometrics: Theory and practice. Princeton University Press

Omura K, Kudo M, Endo T, Murai T (2012) Weighted naïve Bayes classifier on categorical features. In: Abraham A, Zomaya AY, Ventura S, Yager RR, Snásel V, Muda AK, Samuel P (eds) Proceedings of the 12th international conference on intelligent systems design and applications (isda 2012). ISBN 978-1-4673-5117-1. IEEE, India, pp 865–870

Quinlan JR (1993) C4.5: programs for machine learning. Morgan Kaufmann Publishers Inc, San Francisco

Webb GI, Boughton JR, Wang Z (2005) Not so naïve Bayes: Aggregating one-dependence estimators. Mach Learn 58(1):5– 24

Wong T-T (2012) A hybrid discretization method for naïve Bayesian classifiers. Pattern Recogn 45 (6):2321–2325

Wu J, Cai Z (2011) Learning averaged one-dependence estimators by attribute weighting. Journal of Information & Computational Science 8(7):1063–1073

Zaidi NA, Cerquides J, Carman MJ, Webb GI (2013) Alleviating naïve Bayes attribute independence assumption by attribute weighting. J Mach Learn Res 14:1947–1988

Acknowledgments

This work was supported by Dongseo University, “Dongseo Frontier Project” Research Fund of 2012.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Xiang, ZL., Yu, XR. & Kang, DK. Experimental analysis of naïve Bayes classifier based on an attribute weighting framework with smooth kernel density estimations. Appl Intell 44, 611–620 (2016). https://doi.org/10.1007/s10489-015-0719-1

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-015-0719-1