Abstract

The ability of an Evolutionary Algorithm (EA) to find a global optimal solution depends on its capacity to find a good balance between the exploitation of found-so-far elements and the exploration of the search space. Inspired by natural phenomena, researchers have developed many successful evolutionary algorithms which, in their original versions, define operators that mimic the way nature solves complex problems, with no explicit consideration of the exploration–exploitation balance. In this paper, a novel nature-inspired algorithm called the States of Matter Search (SMS) is introduced. The SMS algorithm is based on the simulation of the states of matter phenomenon. In SMS, individuals emulate molecules which interact with each other through evolutionary operations based on the physical principles of the thermal-energy motion mechanism. The algorithm is devised by associating each state of matter with a different exploration–exploitation ratio. The evolutionary process is divided into three phases which emulate the three states of matter: gas, liquid and solid. In each state, molecules (individuals) exhibit different movement capacities. Beginning from the gas state (pure exploration), the algorithm modifies the intensities of exploration and exploitation until the solid state (pure exploitation) is reached. As a result, the approach can substantially improve the exploration–exploitation balance while preserving the good search capabilities of an evolutionary approach. To illustrate the proficiency and robustness of the proposed algorithm, it is compared to other well-known evolutionary methods, including novel variants that incorporate diversity preservation schemes. The comparison examines several standard benchmark functions which are commonly considered within the EA field. Experimental results show that the proposed method performs well in comparison with its counterparts as a consequence of its better exploration–exploitation balance.


1 Introduction

Global optimization [1] has found applications in many areas of science, engineering, economics and other fields where mathematical modeling is used [2]. In general, the goal is to find a global optimum of an objective function defined over a given search space. Global optimization algorithms are usually divided into deterministic and stochastic methods [3]. Since deterministic methods only provide a theoretical guarantee of locating a local minimum of the objective function, they often face great difficulties in solving global optimization problems [4]. On the other hand, evolutionary algorithms are usually faster at locating a global optimum [5]. Moreover, stochastic methods adapt easily to black-box formulations and extremely ill-behaved functions, whereas deterministic methods usually rest on at least some theoretical assumptions about the problem formulation and its analytical properties (such as Lipschitz continuity) [6].

Evolutionary algorithms, which belong to the stochastic group, combine rules and randomness to mimic several natural phenomena. Such phenomena include evolutionary processes, as in the Evolutionary Algorithm (EA) proposed by Fogel et al. [7], De Jong [8] and Koza [9], the Genetic Algorithm (GA) proposed by Holland [10] and Goldberg [11], the Artificial Immune System proposed by De Castro et al. [12] and the Differential Evolution (DE) algorithm proposed by Price and Storn [13]. Other methods are based on physical processes, such as Simulated Annealing proposed by Kirkpatrick et al. [14], the Electromagnetism-like Algorithm proposed by Birbil and Fang [15] and the Gravitational Search Algorithm (GSA) proposed by Rashedi et al. [16]. There are also methods based on animal behavior, such as the Particle Swarm Optimization (PSO) algorithm proposed by Kennedy and Eberhart [17] and the Ant Colony Optimization (ACO) algorithm proposed by Dorigo et al. [18].

Every EA needs to address the exploration–exploitation issue. Exploration is the process of visiting entirely new points of a search space, whilst exploitation is the process of refining points within the neighborhood of previously visited locations in order to improve their solution quality. Pure exploration degrades the precision of the evolutionary process but increases its capacity to find new potential solutions. On the other hand, pure exploitation refines existing solutions but tends to drive the process toward local optima. Therefore, the ability of an EA to find a global optimal solution depends on its capacity to find a good balance between the exploitation of found-so-far elements and the exploration of the search space [19]. So far, the exploration–exploitation dilemma remains an unsolved issue within the EA framework.

Although PSO, DE and GSA are considered the most popular algorithms for many optimization applications, they fail to find a balance between exploration and exploitation [20]: on multimodal functions, they do not explore the whole region effectively and often suffer from premature convergence or loss of diversity. To deal with this problem, several proposals have been suggested in the literature [21–46]. In most of these approaches, exploration and exploitation are modified through proper settings of control parameters that influence the algorithm’s search capabilities [47]. One common strategy is that EAs should start with exploration and then gradually change into exploitation [48]. Such a policy can be easily described with deterministic approaches in which the operator that controls individual diversity decreases as the evolution proceeds. This is generally correct, but such a policy tends to face difficulties when solving multimodal functions that hold many optima, since exploitation takes over exploration prematurely. Some approaches that use this strategy can be found in [21–29]. Other works [30–34] use the population size as a reference to change the balance between exploration and exploitation: a larger population implies a wider exploration, while a smaller population implies a shorter search. Although this technique offers an easy way to keep diversity, it often represents an unsatisfactory solution, since an improperly handled large population may still converge to a single point while requiring more function evaluations. Recently, new operators have been added to several traditional evolutionary algorithms in order to improve their original exploration–exploitation capability. Such operators diversify particles whenever they concentrate on a local optimum. Some methods that employ this technique are discussed in [35–46].

Each of these approaches is necessary but not sufficient to tackle the exploration–exploitation balance. Modifying the control parameters during the evolution process without incorporating new operators to improve population diversity leaves the algorithm defenseless against premature convergence and may result in poor exploratory characteristics [48]. On the other hand, incorporating new operators without modifying the control parameters increases the computational cost and weakens the exploitation of candidate regions [39]. Therefore, it seems reasonable to combine both approaches in a single algorithm.

In this paper, a novel nature-inspired algorithm, known as the States of Matter Search (SMS), is proposed for solving global optimization problems. The SMS algorithm is based on the simulation of the states of matter phenomenon. In SMS, individuals emulate molecules which interact with each other through evolutionary operations based on the physical principles of the thermal-energy motion mechanism. Such operations increase the population diversity and prevent particles from concentrating within a local minimum. The proposed approach combines the defined operators with a control strategy that modifies the parameter setting of each operation during the evolution process. In contrast to other approaches that enhance traditional EAs by incorporating procedures for balancing the exploration–exploitation rate, the proposed algorithm naturally delivers this property as a result of mimicking the states of matter phenomenon. The algorithm is devised by associating each state of matter with a different exploration–exploitation ratio. Thus, the evolutionary process is divided into three stages which emulate the three states of matter: gas, liquid and solid. In each state, molecules (individuals) exhibit different behaviors. Beginning from the gas state (pure exploration), the algorithm modifies the intensities of exploration and exploitation until the solid state (pure exploitation) is reached. As a result, the approach can substantially improve the exploration–exploitation balance while preserving the good search capabilities of an evolutionary approach. To illustrate the proficiency and robustness of the proposed algorithm, it has been compared to other well-known evolutionary methods, including recent variants that incorporate diversity preservation schemes. The comparison examines several standard benchmark functions which are commonly employed within the EA field. Experimental results show that the proposed method performs well against its counterparts as a consequence of its better exploration–exploitation capability.

This paper is organized as follows. Section 2 introduces the basic characteristics of the three states of matter. Section 3 describes the novel SMS algorithm and its characteristics. Section 4 presents experimental results and a comparative study. Finally, Sect. 5 discusses some conclusions.

2 States of matter

Matter can exist in different phases, commonly known as states. Traditionally, three states of matter are known: solid, liquid and gas. The differences among these states are based on the forces exerted among the particles composing a material [49].

In the gas phase, molecules have enough kinetic energy that the effect of intermolecular forces is small (or zero for an ideal gas), while the typical distance between neighboring molecules is greater than the molecular size. A gas has no definite shape or volume, but occupies the entire container in which it is confined. Figure 1a shows the movements exerted by particles in a gas state. The movement experienced by the molecules represents the maximum permissible displacement \(\rho_1\) among particles [50]. In a liquid state, intermolecular forces are more restrictive than those in the gas state. The molecules have enough energy to move relative to each other while still keeping a mobile structure. Therefore, the shape of a liquid is not definite but is determined by its container. Figure 1b presents a particle movement \(\rho_2\) within a liquid state. Such movement is smaller than in the gas state but larger than in the solid state [51]. In the solid state, particles (or molecules) are packed closely together, with forces among them strong enough that the particles cannot move freely but only vibrate. As a result, a solid has a stable, definite shape and a definite volume. Solids can only change their shape by force, as when they are broken or cut. Figure 1c shows a molecule configuration in a solid state. Under such conditions, particles are able to vibrate (being perturbed) within a minimal distance \(\rho_3\) [50].

In this paper, a novel nature-inspired algorithm known as the States of Matter Search (SMS) is proposed for solving global optimization problems. The SMS algorithm simulates the states of matter phenomenon, treating individuals as molecules which interact with each other through evolutionary operations based on the physical principles of the thermal-energy motion mechanism. The algorithm is devised by associating each state of matter with a different exploration–exploitation ratio. Thus, the evolutionary process is divided into three stages which emulate the three states of matter: gas, liquid and solid. In each state, individuals exhibit different behaviors.

3 States of matter search (SMS)

3.1 Definition of operators

In the approach, individuals are considered as molecules whose positions in a multidimensional space are modified as the algorithm evolves. The movement of such molecules is motivated by an analogy with thermal-energy motion.

The velocity and direction of each molecule’s movement are determined by the collisions, the attraction forces and the random phenomena experienced by the set of molecules [52]. In our approach, such behaviors are implemented by defining several operators, namely the direction vector, the collision and the random positions operators, all of which emulate actual physical laws.

The direction vector operator assigns a direction to each molecule in order to lead the particle movement as the evolution process takes place. On the other hand, the collision operator mimics the collisions experienced by molecules as they interact with each other. A collision is considered when the distance between two molecules is shorter than a determined proximity distance. The collision operator is thus implemented by interchanging the directions of the involved molecules. In order to simulate the random behavior of molecules, the proposed algorithm generates random positions following a probabilistic criterion that considers random locations within a feasible search space.

The following subsections present all operators used in the algorithm. Although these operators are the same for all the states of matter, they are employed with a different parameter configuration depending on the particular state under consideration.

3.1.1 Direction vector

The direction vector operator mimics the way in which molecules change their positions as the evolution process develops. Each n-dimensional molecule \(\mathbf{p}_i\) of the population P is assigned an n-dimensional direction vector \(\mathbf{d}_i\), which controls the particle movement. Initially, all the direction vectors \((\mathbf{D} = \{\mathbf{d}_{1},\mathbf{d}_{2}, \ldots,\mathbf{d}_{N_{p}}\})\) are randomly chosen within the range [−1,1].

As the system evolves, molecules experience several attraction forces. In order to simulate such forces, the proposed algorithm implements the attraction phenomenon by moving each molecule towards the best-so-far particle. Therefore, the new direction vector of each molecule is iteratively computed using the following model:

where \(\mathbf{a}_i = (\mathbf{p}_{best} - \mathbf{p}_i)/\lVert \mathbf{p}_{best} - \mathbf{p}_i \rVert\) is the unit attraction vector, \(\mathbf{p}_{best}\) is the best individual seen so far and \(\mathbf{p}_i\) is molecule i of population P. Further, k is the iteration number, whereas gen is the total number of iterations that constitute the complete evolution process.

Under this operation, each particle moves in a new direction that combines its past direction with the attraction vector towards the best individual seen so far. It is important to point out that the relative importance of the past direction decreases as the evolution process advances. This particular type of interaction avoids the quick concentration of information among particles and encourages each particle to search around a local candidate region in its neighborhood, rather than interacting with a particle lying in a distant region of the domain. The use of this scheme has two advantages: first, it prevents the particles from moving toward the global best position in the early stages of the algorithm, making the algorithm less susceptible to premature convergence; second, it encourages particles to explore their own neighborhood thoroughly before they converge towards the global best position. Therefore, it provides the algorithm with a local search ability that enhances its exploitative behavior.
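The direction update described above can be sketched in Python. Because the update equation is not shown in this text, the form used here, \(\mathbf{d}_i^{k+1} = \mathbf{d}_i^{k}\cdot(1 - k/gen)\cdot 0.5 + \mathbf{a}_i\), is an assumption chosen to match the description (the past direction loses weight as k approaches gen). The function names and the zero-vector fallback when a molecule coincides with \(\mathbf{p}_{best}\) are hypothetical.

```python
import math
from typing import List, Sequence

def attraction_vector(p_best: Sequence[float], p_i: Sequence[float]) -> List[float]:
    """Unit vector a_i = (p_best - p_i) / ||p_best - p_i||.

    Assumption: if p_i coincides with p_best, the zero vector is returned
    to avoid a division by zero (the text does not cover this case).
    """
    diff = [b - x for b, x in zip(p_best, p_i)]
    norm = math.sqrt(sum(c * c for c in diff))
    if norm == 0.0:
        return [0.0] * len(diff)
    return [c / norm for c in diff]

def update_direction(d_i: Sequence[float], p_i: Sequence[float],
                     p_best: Sequence[float], k: int, gen: int) -> List[float]:
    """Assumed update: d_i <- d_i * (1 - k/gen) * 0.5 + a_i."""
    a_i = attraction_vector(p_best, p_i)
    decay = (1.0 - k / gen) * 0.5  # weight of the past direction shrinks with k
    return [d * decay + a for d, a in zip(d_i, a_i)]
```

Since the decay factor falls linearly from 0.5 to 0, the attraction term dominates late in the run, which matches the exploitation-heavy final stage.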

To calculate the new position of each molecule, it is first necessary to compute its velocity \(\mathbf{v}_i\) by using:

where \(v_{init}\) is the initial velocity magnitude, calculated as follows:

where \(b_{j}^{low}\) and \(b_{j}^{high}\) are the lower and upper bounds of the j-th parameter, respectively, and β∈[0,1].

Then, the new position for each molecule is updated by:

where 0.5≤ρ≤1.
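A minimal sketch of the velocity and position updates follows. The three equations are not reproduced in this text, so the forms used here are assumptions: \(\mathbf{v}_i = \mathbf{d}_i \cdot v_{init}\), \(v_{init} = \beta \cdot \frac{1}{n}\sum_j (b_j^{high} - b_j^{low})\), and \(p_i^j \leftarrow p_i^j + v_i^j \cdot \mathrm{rand}(0,1)\cdot \rho \cdot (b_j^{high} - b_j^{low})\). Drawing one uniform number per coordinate is also an assumption, and the helper names are hypothetical.

```python
import random
from typing import List, Sequence

def initial_velocity(beta: float, low: Sequence[float], high: Sequence[float]) -> float:
    """Assumed form: v_init = beta * (1/n) * sum_j (b_j^high - b_j^low)."""
    n = len(low)
    return beta * sum(h - l for l, h in zip(low, high)) / n

def move_molecule(p_i: Sequence[float], d_i: Sequence[float], v_init: float, rho: float,
                  low: Sequence[float], high: Sequence[float],
                  rng: random.Random) -> List[float]:
    """Assumed update: p_ij <- p_ij + v_ij * rand(0,1) * rho * (b_j^high - b_j^low)."""
    v_i = [d * v_init for d in d_i]  # velocity v_i = d_i * v_init
    return [x + v * rng.random() * rho * (h - l)  # one uniform draw per coordinate
            for x, v, l, h in zip(p_i, v_i, low, high)]
```

With ρ close to zero the displacement vanishes, which is how the solid-state configuration restricts molecules to small vibrations.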

3.1.2 Collision

The collision operator mimics the collisions experienced by molecules while they interact with each other. Collisions are calculated if the distance between two molecules is shorter than a determined proximity value. Therefore, if \(\lVert \mathbf{p}_i - \mathbf{p}_q \rVert < r\), a collision between molecules i and q is assumed; otherwise, there is no collision, considering \(i,q \in \{1,\ldots,N_p\}\) such that \(i \neq q\). If a collision occurs, the direction vectors of the two particles are modified by interchanging them as follows:

\[ (\mathbf{d}_i, \mathbf{d}_q) \leftarrow (\mathbf{d}_q, \mathbf{d}_i) \]

The collision radius is calculated by:

where α∈[0,1].

Under this operator, a spatial region enclosed within the radius r is assigned to each particle. When the regions of two particles collide, the collision operator acts upon them by forcing them out of the region. The radius r and the collision operator provide the ability to control diversity throughout the search process. In other words, the rate of increase or decrease of diversity is predetermined for each stage. Unlike other diversity-guided algorithms, SMS does not need to inject diversity into the population when particles gather around a local optimum, because diversity is preserved during the overall search process. The incorporation of collisions therefore enhances the exploratory behavior of the proposed approach.
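The collision operator can be sketched as follows. The radius equation is not reproduced here, so \(r = \alpha \cdot \frac{1}{n}\sum_j (b_j^{high} - b_j^{low})\) is an assumption; it has the property that α=0 yields r=0, which disables collisions. Processing pairs in index order and applying swaps sequentially (relevant when one molecule is close to several others) is also an assumption, and the function names are hypothetical.

```python
import math
from typing import List, Sequence

def collision_radius(alpha: float, low: Sequence[float], high: Sequence[float]) -> float:
    """Assumed form: r = alpha * (1/n) * sum_j (b_j^high - b_j^low)."""
    return alpha * sum(h - l for l, h in zip(low, high)) / len(low)

def apply_collisions(P: Sequence[Sequence[float]], D: Sequence[Sequence[float]],
                     r: float) -> List[List[float]]:
    """Swap the direction vectors of every pair of molecules closer than r."""
    D = [list(d) for d in D]  # work on a copy so the caller's directions are untouched
    n_p = len(P)
    for i in range(n_p):
        for q in range(i + 1, n_p):
            if math.dist(P[i], P[q]) < r:  # Euclidean distance ||p_i - p_q||
                D[i], D[q] = D[q], D[i]    # interchange directions
    return D
```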

3.1.3 Random positions

In order to simulate the random behavior of molecules, the proposed algorithm generates random positions following a probabilistic criterion within a feasible search space.

For this operation, a uniform random number \(r_m\) is generated within the range [0,1]. If \(r_m\) is smaller than a threshold H, a new random position is generated; otherwise, the element remains unchanged. Therefore, such an operation can be modeled as follows:

where i∈{1,…,N p } and j∈{1,…,n}.
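A sketch of the random positions operator is given below. Following the index ranges \(i\in\{1,\ldots,N_p\}\) and \(j\in\{1,\ldots,n\}\), it assumes that \(r_m\) is drawn independently for every element and that a replaced element is sampled uniformly within \([b_j^{low}, b_j^{high}]\); the exact equation is not shown in this text, and the function name is hypothetical.

```python
import random
from typing import List, Sequence

def random_positions(P: Sequence[Sequence[float]], H: float, low: Sequence[float],
                     high: Sequence[float], rng: random.Random) -> List[List[float]]:
    """With probability H, replace element p_i^j by a uniform value in [b_j^low, b_j^high]."""
    new_P = []
    for p_i in P:
        new_p = []
        for j, x in enumerate(p_i):
            r_m = rng.random()  # uniform number in [0, 1)
            if r_m < H:
                new_p.append(low[j] + rng.random() * (high[j] - low[j]))
            else:
                new_p.append(x)  # element remains unchanged
        new_P.append(new_p)
    return new_P
```

Setting H=0 turns the operator into the identity, which is how the solid state disables it.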

3.1.4 Best element updating

Although this updating operator does not belong to the states of matter metaphor, it is used simply to store the best-so-far solution. In order to update the best molecule \(\mathbf{p}_{best}\) seen so far, the best individual found in the current population k, \(\mathbf{p}_{best,k}\), is compared to the best individual \(\mathbf{p}_{best,k-1}\) of the last generation. If \(\mathbf{p}_{best,k}\) is better than \(\mathbf{p}_{best,k-1}\) according to its fitness value, \(\mathbf{p}_{best}\) is updated with \(\mathbf{p}_{best,k}\); otherwise, \(\mathbf{p}_{best}\) remains unchanged. Therefore, \(\mathbf{p}_{best}\) stores the best historical individual found so far.
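The best-element update amounts to keeping a running minimum. The sketch below assumes a minimization problem (lower fitness is better), as in the benchmark experiments; the function name is hypothetical.

```python
from typing import Callable, List, Optional, Sequence, Tuple

def update_best(P: Sequence[Sequence[float]], f: Callable[[Sequence[float]], float],
                p_best: Optional[List[float]], f_best: float) -> Tuple[List[float], float]:
    """Keep the historical best; minimization is assumed (lower fitness is better)."""
    best_k = min(P, key=f)        # best individual of the current population
    f_k = f(best_k)
    if p_best is None or f_k < f_best:
        return list(best_k), f_k  # replace p_best with p_best,k
    return p_best, f_best         # p_best remains unchanged
```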

3.2 SMS algorithm

The overall SMS algorithm is composed of three stages corresponding to the three States of Matter: the gas, the liquid and the solid state. Each stage has its own behavior. In the first stage (gas state), exploration is intensified whereas in the second one (liquid state) a mild transition between exploration and exploitation is executed. Finally, in the third phase (solid state), solutions are refined by emphasizing the exploitation process.

3.2.1 General procedure

At each stage, the same operations are implemented; however, depending on the state, they are applied with a different parameter configuration. The general procedure in each state is shown as pseudo-code in Algorithm 1. This procedure is composed of five steps and maps the current population \(\mathbf{P}^k\) to a new population \(\mathbf{P}^{k+1}\). The algorithm receives as input the current population \(\mathbf{P}^k\) and the configuration parameters ρ, β, α and H, and it yields the new population \(\mathbf{P}^{k+1}\).

3.2.2 The complete algorithm

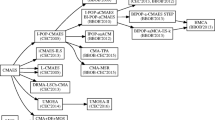

The complete algorithm is divided into four different parts. The first corresponds to the initialization stage, whereas the last three represent the states of matter. The whole optimization process, which consists of gen iterations, is organized into three asymmetric phases, employing 50 % of all iterations for the gas state (exploration), 40 % for the liquid state (exploration–exploitation) and 10 % for the solid state (exploitation). The overall process is graphically described by Fig. 2. In each state, the same general procedure (see Algorithm 1) is iteratively applied using the particular configuration predefined for that state of matter. Figure 3 shows the data flow of the complete SMS algorithm.
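The 50 %/40 %/10 % split can be expressed as a small schedule function that maps an iteration number to its state, using the boundaries given in Steps 3, 6 and 9 below (gas for \(1 \le k \le 0.5\cdot gen\), liquid for \(0.5\cdot gen < k \le 0.9\cdot gen\), solid otherwise). The function name is hypothetical.

```python
def state_of(k: int, gen: int) -> str:
    """Return the state of matter for iteration k (1-based) out of gen iterations."""
    if not 1 <= k <= gen:
        raise ValueError("k must satisfy 1 <= k <= gen")
    # Integer comparisons avoid floating-point error at the 50 % and 90 % boundaries.
    if 10 * k <= 5 * gen:
        return "gas"      # first 50 %: exploration
    if 10 * k <= 9 * gen:
        return "liquid"   # next 40 %: exploration-exploitation
    return "solid"        # last 10 %: exploitation
```

For gen=1000 this assigns 500 iterations to the gas state, 400 to the liquid state and 100 to the solid state.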

Initialization

The algorithm begins by initializing a set P of \(N_p\) molecules (\(\mathbf{P} = \{\mathbf{p}_{1},\mathbf{p}_{2},\ldots,\mathbf{p}_{N_{p}}\}\)). Each molecule position \(\mathbf{p}_i\) is an n-dimensional vector containing the parameter values to be optimized. Such values are randomly and uniformly distributed between the pre-specified lower initial parameter bound \(b_{j}^{low}\) and the upper initial parameter bound \(b_{j}^{high}\), as described by the following expression:

\[ p_{i}^{j,0} = b_{j}^{low} + \mathrm{rand}(0,1)\cdot\left(b_{j}^{high} - b_{j}^{low}\right), \qquad j = 1,\ldots,n;\; i = 1,\ldots,N_p \]

where j and i are the parameter and molecule indices, respectively, and zero indicates the initial population. Hence, \(p_{i}^{j}\) is the j-th parameter of the i-th molecule.
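Initialization can be sketched as follows: positions are drawn uniformly within the parameter bounds, and, as described for the direction vector operator, each initial direction component is drawn from [−1,1]. The function name is hypothetical.

```python
import random
from typing import List, Sequence, Tuple

def initialize(n_p: int, low: Sequence[float], high: Sequence[float],
               rng: random.Random) -> Tuple[List[List[float]], List[List[float]]]:
    """Uniform initial positions within the bounds and initial directions in [-1, 1]."""
    P = [[l + rng.random() * (h - l) for l, h in zip(low, high)] for _ in range(n_p)]
    D = [[rng.uniform(-1.0, 1.0) for _ in low] for _ in range(n_p)]
    return P, D
```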

Gas state

In the gas state, molecules experience severe displacements and collisions. This state is characterized by random movements produced by non-modeled molecular phenomena [52]. Therefore, the ρ value of the direction vector operator is set close to one so that the molecules can travel longer distances. Similarly, the H value of the random positions operator is also set to a value around one, so that random positions are generated for many molecules. The gas state is the first phase and lasts for 50 % of all the iterations that compose the complete optimization process. The computational procedure for the gas state can be summarized as follows:

Step 1: Set the parameters ρ∈[0.8,1], β=0.8, α=0.8 and H=0.9, consistent with the gas state.

Step 2: Apply the general procedure illustrated in Algorithm 1.

Step 3: If 50 % of the total number of iterations has been completed (the gas state covers 1≤k≤0.5⋅gen), the process continues with the liquid state procedure; otherwise, go back to Step 2.

Liquid state

Although molecules in the liquid state exhibit restricted motion in comparison to the gas state, they still show higher flexibility than in the solid state. Furthermore, the generation of random positions produced by non-modeled molecular phenomena is scarce [53]. For this reason, the ρ value of the direction vector operator is bounded between 0.3 and 0.6. Similarly, the random position parameter H is set to a value close to zero so that fewer random molecule positions are generated. In the liquid state, collisions are also less common than in the gas state, so the collision radius, which is controlled by α, is set to a smaller value than in the gas state. The liquid state is the second phase and lasts for 40 % of all the iterations that compose the complete optimization process. The computational procedure for the liquid state can be summarized as follows:

Step 4: Set the parameters ρ∈[0.3,0.6], β=0.4, α=0.2 and H=0.2, consistent with the liquid state.

Step 5: Apply the general procedure defined in Algorithm 1.

Step 6: If 90 % (50 % from the gas state and 40 % from the liquid state) of the total number of iterations has been completed (the liquid state covers 0.5⋅gen<k≤0.9⋅gen), the process continues with the solid state procedure; otherwise, go back to Step 5.

Solid state

In the solid state, forces among particles are stronger, so that particles cannot move freely but only vibrate. As a result, effects such as collisions and the generation of random positions are not considered [52]. Therefore, the ρ value of the direction vector operator is set close to zero, indicating that the molecules can only vibrate around their original positions. The solid state is the third phase and lasts for 10 % of all the iterations that compose the complete optimization process. The computational procedure for the solid state can be summarized as follows:

Step 7: Set the parameters ρ∈[0.0,0.1], β=0.1, α=0 and H=0, consistent with the solid state.

Step 8: Apply the general procedure defined in Algorithm 1.

Step 9: If 100 % of the total number of iterations has been completed (the solid state covers 0.9⋅gen<k≤gen), the process is finished; otherwise, go back to Step 8.

Note that this particular configuration (α=0 and H=0) disables the collision and random positions operators of the general procedure: with α=0 the collision radius controlled by α becomes zero, so no pair of molecules satisfies the collision condition, and with H=0 the condition \(r_m < H\) never holds.
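Putting the pieces together, the following is a compact, self-contained sketch of the complete loop using the parameter settings of Steps 1, 4 and 7. Several details are assumptions rather than the authors' specification: the exact update equations (not reproduced in this text), redrawing ρ uniformly from its state range at every iteration, the order of operators inside one iteration (Algorithm 1 appears only as an image), clipping positions to the bounds, and minimization. The names `sms`, `STATES` and `state_of` are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

# Per-state settings from Steps 1, 4 and 7 (rho is given as a range).
STATES = {
    "gas":    {"rho": (0.8, 1.0), "beta": 0.8, "alpha": 0.8, "H": 0.9},
    "liquid": {"rho": (0.3, 0.6), "beta": 0.4, "alpha": 0.2, "H": 0.2},
    "solid":  {"rho": (0.0, 0.1), "beta": 0.1, "alpha": 0.0, "H": 0.0},
}

def state_of(k: int, gen: int) -> str:
    if 10 * k <= 5 * gen:
        return "gas"
    if 10 * k <= 9 * gen:
        return "liquid"
    return "solid"

def sms(f: Callable[[Sequence[float]], float], low: Sequence[float], high: Sequence[float],
        n_p: int = 50, gen: int = 1000, seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    n = len(low)
    span = [h - l for l, h in zip(low, high)]
    mean_span = sum(span) / n
    # Initialization: uniform positions, directions in [-1, 1]
    P = [[l + rng.random() * s for l, s in zip(low, span)] for _ in range(n_p)]
    D = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n_p)]
    p_best = list(min(P, key=f))
    f_best = f(p_best)
    for k in range(1, gen + 1):
        cfg = STATES[state_of(k, gen)]
        rho = rng.uniform(*cfg["rho"])    # assumption: rho redrawn each iteration
        v_init = cfg["beta"] * mean_span  # assumed initial velocity magnitude
        r = cfg["alpha"] * mean_span      # assumed collision radius
        decay = (1.0 - k / gen) * 0.5     # weight of the past direction
        for i in range(n_p):
            # Direction vector: decayed past direction plus unit attraction to p_best
            diff = [b - x for b, x in zip(p_best, P[i])]
            norm = math.sqrt(sum(c * c for c in diff))
            a = [c / norm for c in diff] if norm > 0 else [0.0] * n
            D[i] = [d * decay + aj for d, aj in zip(D[i], a)]
            # Position update, clipped to the bounds (clipping is an assumption)
            P[i] = [min(max(x + d * v_init * rng.random() * rho * s, l), h)
                    for x, d, s, l, h in zip(P[i], D[i], span, low, high)]
        # Collision: swap directions of molecules closer than r (r = 0 disables it)
        for i in range(n_p):
            for q in range(i + 1, n_p):
                if math.dist(P[i], P[q]) < r:
                    D[i], D[q] = D[q], D[i]
        # Random positions: each element is resampled with probability H
        for i in range(n_p):
            P[i] = [l + rng.random() * s if rng.random() < cfg["H"] else x
                    for x, l, s in zip(P[i], low, span)]
        # Best element updating (minimization)
        cand = min(P, key=f)
        f_cand = f(cand)
        if f_cand < f_best:
            p_best, f_best = list(cand), f_cand
    return p_best, f_best
```

For example, `sms(lambda x: sum(c * c for c in x), [-5.0, -5.0], [5.0, 5.0], n_p=30, gen=300)` minimizes a two-dimensional sphere function; the returned pair is the best position found and its fitness.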

4 Experimental results

A comprehensive set of 24 functions, collected from Refs. [54–61], has been used to test the performance of the proposed approach. Tables 8–11 in Appendix A present the benchmark functions used in our experimental study. These functions are classified into four categories: unimodal test functions (Table 8), multimodal test functions (Table 9), multimodal test functions with fixed dimensions (Table 10) and functions proposed for the GECCO contest (Table 11). In these tables, n indicates the dimension of the function, \(f_{opt}\) is the optimum value of the function and S is a subset of \(\mathbb{R}^n\). The optimum position \(\mathbf{x}_{opt}\) is at \([0]^n\) for \(f_1\), \(f_2\), \(f_4\), \(f_6\), \(f_7\), \(f_{10}\), \(f_{11}\) and \(f_{14}\); at \([1]^n\) for \(f_3\), \(f_8\) and \(f_9\); at \([420.96]^n\) for \(f_5\); at \([0]^n\) for \(f_{18}\); at \([0.0003075]^n\) for \(f_{12}\); and at \([-3.32]^n\) for \(f_{13}\). For the functions in Table 11, the \(\mathbf{x}_{opt}\) and \(f_{opt}\) values have been set to the default values obtained from the Matlab© implementation for the GECCO competitions provided in [59]. A detailed description of the optimum locations is given in Appendix A.

4.1 Performance comparison to other meta-heuristic algorithms

We have applied the SMS algorithm to the 24 functions and compared its results to those produced by the Gravitational Search Algorithm (GSA) [16], the Particle Swarm Optimization (PSO) method [17] and the Differential Evolution (DE) algorithm [13]. These are considered the most popular algorithms in many optimization applications. In order to enhance the performance analysis, the PSO algorithm with a territorial diversity-preserving scheme (TPSO) [39] has also been added to the comparisons. TPSO is a recent PSO variant that incorporates a diversity preservation scheme in order to improve the balance between exploration and exploitation. In all comparisons, the population size has been set to 50. The maximum number of iterations has been set to 1000 for the functions in Tables 8, 9 and 11, and to 500 for the functions in Table 10. This stop criterion has been selected to maintain compatibility with similar works reported in the literature [4, 16].

The parameter setting for each algorithm in the comparison is described as follows:

1. GSA [16]: The parameters are set to \(G_0=100\) and α=20; the total number of iterations is set to 1000 for functions \(f_1\) to \(f_{11}\) and 500 for functions \(f_{12}\) to \(f_{14}\). The total number of individuals is set to 50. According to [16], these values are the best parameter set for this algorithm.

2. PSO [17]: The parameters are set to \(c_1=2\) and \(c_2=2\); in addition, the inertia weight decreases linearly from 0.9 to 0.2.

3. DE [13]: The DE/Rand/1 scheme is employed. The crossover probability is set to CR=0.9 and the weight factor is set to F=0.8.

4. TPSO [39]: The parameter α has been set to 0.5, which is the best configuration according to [39]. The remaining parameters have been set to the values originally proposed in [39].

The experimental comparison between SMS and the other metaheuristic algorithms is organized according to the following function-type classification:

1. Unimodal test functions (Table 8).
2. Multimodal test functions (Table 9).
3. Multimodal test functions with fixed dimension (Table 10).
4. Test functions from the GECCO contest (Table 11).

4.1.1 Unimodal test functions

This experiment is performed over the functions presented in Table 8. The test compares SMS to GSA, PSO, DE and TPSO. The results for 30 runs are reported in Table 1 considering the following performance indexes: the Average Best-so-far (AB) solution, the Median Best-so-far (MB) and the Standard Deviation (SD) of the best-so-far solution. The best outcome for each function is boldfaced. According to this table, SMS delivers better results than GSA, PSO, DE and TPSO for all functions. In particular, this test shows the largest difference in performance, which is directly related to a better trade-off between exploration and exploitation. As illustrated by Fig. 4, SMS, DE and GSA converge to the optimal minimum at similar rates, and faster than PSO and TPSO.
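The three performance indexes can be computed from the final best-so-far values of the independent runs with Python's standard `statistics` module. Whether SD refers to the sample or population standard deviation is not stated, so the sample version used here is an assumption; the function name is hypothetical.

```python
import statistics
from typing import Dict, Sequence

def performance_indexes(best_so_far: Sequence[float]) -> Dict[str, float]:
    """AB, MB and SD of the final best-so-far values collected over independent runs."""
    return {
        "AB": statistics.mean(best_so_far),    # Average Best-so-far
        "MB": statistics.median(best_so_far),  # Median Best-so-far
        "SD": statistics.stdev(best_so_far),   # sample standard deviation (assumption)
    }
```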

A non-parametric statistical significance test known as Wilcoxon’s rank sum test for independent samples [62, 63] has been conducted over the “average best-so-far” (AB) data of Table 1, with a 5 % significance level. Table 2 reports the p-values produced by Wilcoxon’s test for the pair-wise comparison of the “average best-so-far” of four groups: SMS vs. GSA, SMS vs. PSO, SMS vs. DE and SMS vs. TPSO. The null hypothesis assumes that there is no significant difference between the results of the two algorithms, whereas the alternative hypothesis considers a significant difference between the “average best-so-far” values of both approaches. All p-values reported in Table 2 are less than 0.05 (5 % significance level), which is strong evidence against the null hypothesis. Therefore, this evidence indicates that the SMS results are statistically significant and have not occurred by coincidence (i.e., due to common noise contained in the process).
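For readers who want to reproduce this kind of comparison, here is a minimal stdlib sketch of the two-sided Wilcoxon rank-sum test using the normal approximation. Tied values receive average ranks, but no tie correction is applied to the variance; `scipy.stats.ranksums` provides a comparable normal-approximation test. The function name and the example data in the usage note are hypothetical and do not come from Table 2.

```python
import math
from typing import Sequence, Tuple

def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon rank-sum test (normal approximation); returns (z, p)."""
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y], key=lambda t: t[0])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for m in range(i, j + 1):
            ranks[m] = avg
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)  # rank sum of sample x
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p
```

With 30 runs per algorithm, `x` and `y` would hold the final best-so-far values of SMS and of one competitor; a p-value below 0.05 rejects the null hypothesis at the 5 % level.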

4.1.2 Multimodal test functions

Multimodal functions represent a good optimization challenge as they possess many local minima (Table 9). For multimodal functions, final results are very important since they reflect the algorithm’s ability to escape from poor local optima and locate a near-global optimum. Experiments using \(f_5\) to \(f_{11}\) are quite relevant, as the number of local minima of these functions increases exponentially with their dimension. The dimension of these functions is set to 30. The results are averaged over 30 runs, and Table 3 reports the following performance indexes for each function: the Average Best-so-far (AB) solution, the Median Best-so-far (MB) and the Standard Deviation (SD) of the best-so-far solution (the best result for each function is highlighted). Likewise, p-values of the Wilcoxon signed-rank test over 30 independent runs are listed in Table 4.

For functions \(f_8\), \(f_9\), \(f_{10}\) and \(f_{11}\), SMS yields much better solutions than the other methods. However, for functions \(f_5\), \(f_6\) and \(f_7\), SMS produces results similar to those of GSA and TPSO. The Wilcoxon test results presented in Table 4 show that SMS performed better than GSA, PSO, DE and TPSO on the four functions \(f_8\)–\(f_{11}\), whereas, from a statistical viewpoint, there is no difference between the results of SMS, GSA and TPSO for \(f_5\), \(f_6\) and \(f_7\). The progress of the “average best-so-far” solution over 30 runs for functions \(f_5\) and \(f_{11}\) is shown in Fig. 5.

Multimodal test functions with fixed dimensions

In the following experiments, the SMS algorithm is compared to GSA, PSO, DE and TPSO over a set of multimodal functions with fixed dimensions, which are widely used in the meta-heuristic literature. The functions used for the experiments are f 12, f 13 and f 14, which are presented in Table 10. The results in Table 5 show that SMS, GSA, PSO, DE and TPSO perform similarly on these functions. This agrees with the observation that meta-heuristic algorithms tend to maintain a similar average performance when they face low-dimensional functions [54]. Figure 6 presents the convergence rate of the GSA, PSO, DE, SMS and TPSO algorithms for functions f 12 and f 13.

Test functions from the GECCO contest

The experimental set in Table 11 includes several representative functions used in the GECCO contest. Using these functions, the SMS algorithm is compared to GSA, PSO, DE and TPSO. The results have been averaged over 30 runs, and the performance indexes for each algorithm are reported in Table 6. Likewise, the p-values of the Wilcoxon signed-rank test over 30 independent executions are listed in Table 7. According to the results of Table 6, SMS yields much better solutions than the other methods. The Wilcoxon test results in Table 7 statistically confirm that SMS performed better than PSO, GSA, DE and TPSO. Figure 7 presents the convergence rate of the GSA, PSO, DE, SMS and TPSO algorithms for functions f 17 to f 24.

5 Conclusions

In this paper, a novel nature-inspired algorithm called the States of Matter Search (SMS) has been introduced. The SMS algorithm is based on the simulation of the states of matter phenomenon. In SMS, individuals emulate molecules that interact with each other by using evolutionary operations based on the physical principles of the thermal-energy motion mechanism. The algorithm is devised by associating each state of matter with a different exploration–exploitation ratio. The evolutionary process is divided into three phases that emulate the three states of matter: gas, liquid and solid. In each state, molecules (individuals) exhibit different movement capacities. Beginning from the gas state (pure exploration), the algorithm modifies the intensities of exploration and exploitation until the solid state (pure exploitation) is reached. As a result, the approach can substantially improve the balance between exploration and exploitation while preserving the good search capabilities of an EA.
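The three-phase idea summarised above (gas, then liquid, then solid, with movement capacity shrinking at each transition) can be illustrated with a small iteration schedule. This is only a sketch under stated assumptions: the names `StateSettings`, `SCHEDULE`, `state_at` and `random_move` are hypothetical, the 50/40/10 split of the iteration budget and the step scales are illustrative placeholders rather than the values used by SMS, and `random_move` is a generic uniform perturbation, not one of the SMS operators defined earlier in the paper.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class StateSettings:
    name: str
    step_scale: float  # hypothetical: relative size of random molecule moves


# Hypothetical schedule: fraction of the iteration budget spent in each state and
# a movement scale that shrinks from gas (exploration) to solid (exploitation).
# Illustrative placeholders only, not the parameter values used by SMS.
SCHEDULE = [
    (0.5, StateSettings("gas", 0.8)),
    (0.4, StateSettings("liquid", 0.3)),
    (0.1, StateSettings("solid", 0.05)),
]


def state_at(iteration, max_iterations):
    """Return the settings of the state active at a 0-based iteration."""
    progress = iteration / max_iterations
    boundary = 0.0
    for fraction, settings in SCHEDULE:
        boundary += fraction
        if progress < boundary:
            return settings
    return SCHEDULE[-1][1]  # guard against rounding at the very end of the run


def random_move(position, lower, upper, settings, rng):
    """Hypothetical move: uniform perturbation scaled by the state's step_scale,
    clipped to [lower, upper]. Illustration only, not an SMS operator."""
    span = upper - lower
    return [
        min(max(x + settings.step_scale * span * rng.uniform(-1.0, 1.0), lower), upper)
        for x in position
    ]


if __name__ == "__main__":
    rng = random.Random(42)
    for it in (0, 600, 950):
        s = state_at(it, 1000)
        print(it, s.name, random_move([0.0, 0.0], -5.0, 5.0, s, rng))
```

Large moves early in the run spread molecules over the search space, while the small moves of the final phase only refine positions near the best solutions found, which mirrors the gradual shift from exploration to exploitation described in the text.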

SMS has been experimentally tested on a suite of 24 benchmark functions. Its performance has also been compared to the following evolutionary algorithms: Particle Swarm Optimization (PSO) [17], the Gravitational Search Algorithm (GSA) [16], the Differential Evolution (DE) algorithm [13] and the PSO algorithm with a territorial diversity-preserving scheme (TPSO) [39]. The results confirm the high performance of the proposed method in terms of solution quality on most of the benchmark functions.

The remarkable performance of SMS can be attributed to two factors: (i) the defined operators allow a better distribution of particles in the search space, increasing the algorithm’s ability to find the global optimum; and (ii) the division of the evolution process into different stages provides different rates between exploration and exploitation during the evolution process. At the beginning, pure exploration is favored in the gas state; then a mild transition between exploration and exploitation takes place during the liquid state. Finally, pure exploitation is performed during the solid state.

References

Han M-F, Liao S-H, Chang J-Y, Lin C-T (2012) Dynamic group-based differential evolution using a self-adaptive strategy for global optimization problems. Appl Intell. doi:10.1007/s10489-012-0393-5

Pardalos PM, Romeijn HE, Tuy H (2000) Recent developments and trends in global optimization. J Comput Appl Math 124:209–228

Floudas C, Akrotirianakis I, Caratzoulas S, Meyer C, Kallrath J (2005) Global optimization in the 21st century: advances and challenges. Comput Chem Eng 29(6):1185–1202

Ying J, Ke-Cun Z, Shao-Jian Q (2007) A deterministic global optimization algorithm. Appl Math Comput 185(1):382–387

Georgieva A, Jordanov I (2009) Global optimization based on novel heuristics, low-discrepancy sequences and genetic algorithms. Eur J Oper Res 196:413–422

Lera D, Sergeyev Ya (2010) Lipschitz and Hölder global optimization using space-filling curves. Appl Numer Math 60(1–2):115–129

Fogel LJ, Owens AJ, Walsh MJ (1966) Artificial intelligence through simulated evolution. Wiley, Chichester

De Jong K (1975) Analysis of the behavior of a class of genetic adaptive systems. Ph.D. Thesis, University of Michigan, Ann Arbor, MI

Koza JR (1990) Genetic programming: a paradigm for genetically breeding populations of computer programs to solve problems. Rep. No. STAN-CS-90-1314, Stanford University, CA

Holland JH (1975) Adaptation in natural and artificial systems. University of Michigan Press, Ann Arbor

Goldberg DE (1989) Genetic algorithms in search, optimization and machine learning. Addison Wesley, Boston

de Castro LN, Von Zuben FJ (1999) Artificial immune systems: Part I—basic theory and applications. Technical report TR-DCA 01/99

Storn R, Price K (1995) Differential evolution—a simple and efficient adaptive scheme for global optimisation over continuous spaces. Tech. Rep. TR-95–012, ICSI, Berkeley, CA

Kirkpatrick S, Gelatt C, Vecchi M (1983) Optimization by simulated annealing. Science 220(4598):671–680

Birbil Şİ, Fang S-C (2003) An electromagnetism-like mechanism for global optimization. J Glob Optim 25:263–282

Rashedi E, Nezamabadi-pour H, Saryazdi S (2011) Filter modeling using gravitational search algorithm. Eng Appl Artif Intell 24(1):117–122

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of the 1995 IEEE international conference on neural networks, December 1995, vol 4, pp 1942–1948

Dorigo M, Maniezzo V, Colorni A (1991) Positive feedback as a search strategy. Technical Report No. 91-016, Politecnico di Milano

Tan KC, Chiam SC, Mamun AA, Goh CK (2009) Balancing exploration and exploitation with adaptive variation for evolutionary multi-objective optimization. Eur J Oper Res 197:701–713

Chen G, Low CP, Yang Z (2009) Preserving and exploiting genetic diversity in evolutionary programming algorithms. IEEE Trans Evol Comput 13(3):661–673

Liu S-H, Mernik M, Bryant B (2009) To explore or to exploit: an entropy-driven approach for evolutionary algorithms. Int J Knowl-Based Intell Eng Syst 13(3):185–206

Alba E, Dorronsoro B (2005) The exploration/exploitation tradeoff in dynamic cellular genetic algorithms. IEEE Trans Evol Comput 9(3):126–142

Fister I, Mernik M, Filipič B (2010) A hybrid self-adaptive evolutionary algorithm for marker optimization in the clothing industry. Appl Soft Comput 10(2):409–422

Gong W, Cai Z, Jiang L (2008) Enhancing the performance of differential evolution using orthogonal design method. Appl Math Comput 206(1):56–69

Joan-Arinyo R, Luzon MV, Yeguas E (2011) Parameter tuning of PBIL and CHC evolutionary algorithms applied to solve the root identification problem. Appl Soft Comput 11(1):754–767

Mallipeddi R, Suganthan PN, Pan QK, Tasgetiren MF (2011) Differential evolution algorithm with ensemble of parameters and mutation strategies. Appl Soft Comput 11(2):1679–1696

Sadegh M, Reza M, Palhang M (2012) LADPSO: using fuzzy logic to conduct PSO algorithm. Appl Intell 37(2):290–304

Yadav P, Kumar R, Panda SK, Chang CS (2012) An intelligent tuned harmony search algorithm for optimization. Inf Sci 196(1):47–72

Khajehzadeh M, Raihan Taha M, El-Shafie A, Eslami M (2012) A modified gravitational search algorithm for slope stability analysis. Eng Appl Artif Intell 25(8):1589–1597

Koumousis V, Katsaras CP (2006) A saw-tooth genetic algorithm combining the effects of variable population size and reinitialization to enhance performance. IEEE Trans Evol Comput 10(1):19–28

Han M-F, Liao S-H, Chang J-Y, Lin C-T (2012) Dynamic group-based differential evolution using a self-adaptive strategy for global optimization problems. Appl Intell. doi:10.1007/s10489-012-0393-5

Brest J, Maučec MS (2008) Population size reduction for the differential evolution algorithm. Appl Intell 29(3):228–247

Li Y, Zeng X (2010) Multi-population co-genetic algorithm with double chain-like agents structure for parallel global numerical optimization. Appl Intell 32(3):292–310

Paenke I, Jin Y, Branke J (2009) Balancing population- and individual-level adaptation in changing environments. Adapt Behav 17(2):153–174

Araujo L, Merelo JJ (2011) Diversity through multiculturality: assessing migrant choice policies in an island model. IEEE Trans Evol Comput 15(4):456–468

Gao H, Xu W (2011) Particle swarm algorithm with hybrid mutation strategy. Appl Soft Comput 11(8):5129–5142

Jia D, Zheng G, Khan MK (2011) An effective memetic differential evolution algorithm based on chaotic local search. Inf Sci 181(15):3175–3187

Lozano M, Herrera F, Cano JR (2008) Replacement strategies to preserve useful diversity in steady-state genetic algorithms. Inf Sci 178(23):4421–4433

Ostadmohammadi B, Mirzabeygi P, Panahi M (2013) An improved PSO algorithm with a territorial diversity-preserving scheme and enhanced exploration–exploitation balance. Swarm Evol Comput 11:1–15

Yang G-P, Liu S-Y, Zhang J-K, Feng Q-X (2012) Control and synchronization of chaotic systems by an improved biogeography-based optimization algorithm. Appl Intell. doi:10.1007/s10489-012-0398-0

Hasanzadeh M, Meybodi MR, Ebadzadeh MM (2012) Adaptive cooperative particle swarm optimizer. Appl Intell. doi:10.1007/s10489-012-0420-6

Aribarg T, Supratid S, Lursinsap C (2012) Optimizing the modified fuzzy ant-miner for efficient medical diagnosis. Appl Intell 37(3):357–376

Fernandes CM, Laredo JLJ, Rosa AC, Merelo JJ (2012) The sandpile mutation Genetic Algorithm: an investigation on the working mechanisms of a diversity-oriented and self-organized mutation operator for non-stationary functions. Appl Intell. doi:10.1007/s10489-012-0413-5

Gwak J, Sim KM (2013) A novel method for coevolving PS-optimizing negotiation strategies using improved diversity controlling EDAs. Appl Intell 38(3):384–417

Cheshmehgaz HR, Ishak Desa M, Wibowo A (2013) Effective local evolutionary searches distributed on an island model solving bi-objective optimization problems. Appl Intell 38(3):331–356

Cuevas E, González M (2012) Multi-circle detection on images inspired by collective animal behaviour. Appl Intell. doi:10.1007/s10489-012-0396-2

Adra SF, Fleming PJ (2011) Diversity management in evolutionary many-objective optimization. IEEE Trans Evol Comput 15(2):183–195

Črepineš M, Liu SH, Mernik M (2011) Exploration and exploitation in evolutionary algorithms: a survey. ACM Comput Surv 1(1):1–33

Ceruti MG, Rubin SH (2007) Infodynamics: Analogical analysis of states of matter and information. Inf Sci 177:969–987

Chowdhury D, Stauffer D (2000) Principles of equilibrium statistical mechanics. Wiley-VCH, New York

Betts DS, Turner RE (1992) Introductory statistical mechanics, 1st edn. Addison Wesley, Reading

Cengel YA, Boles MA (2005) Thermodynamics: an engineering approach, 5th edn. McGraw-Hill, New York

Bueche F, Hecht E (2011) Schaum’s outline of college physics, 11th edn. McGraw-Hill, New York

Piotrowski AP, Napiorkowski JJ, Kiczko A (2012) Differential evolution algorithm with separated groups for multi-dimensional optimization problems. Eur J Oper Res 216(1):33–46

Cocco Mariani V, Justi Luvizotto LG, Alessandro Guerra F, dos Santos Coelho L (2011) A hybrid shuffled complex evolution approach based on differential evolution for unconstrained optimization. Appl Math Comput 217(12):5822–5829

Yao X, Liu Y, Lin G (1999) Evolutionary programming made faster. IEEE Trans Evol Comput 3(2):82–102

Moré JJ, Garbow BS, Hillstrom KE (1981) Testing unconstrained optimization software. ACM Trans Math Softw 7(1):17–41

Tsoulos IG (2008) Modifications of real code genetic algorithm for global optimization. Appl Math Comput 203(2):598–607

Black-Box Optimization Benchmarking (BBOB) 2010, 2nd GECCO Workshop for Real-Parameter Optimization. http://coco.gforge.inria.fr/doku.php?id=bbob-2010

Hedar A-R, Ali AF (2012) Tabu search with multi-level neighborhood structures for high dimensional problems. Appl Intell 37(2):189–206

Vafashoar R, Meybodi MR, Momeni Azandaryani AH (2012) CLA-DE: a hybrid model based on cellular learning automata for numerical optimization. Appl Intell 36(3):735–748

Garcia S, Molina D, Lozano M, Herrera F (2008) A study on the use of non-parametric tests for analyzing the evolutionary algorithms’ behaviour: a case study on the CEC’2005 special session on real parameter optimization. J Heuristics. doi:10.1007/s10732-008-9080-4

Shilane D, Martikainen J, Dudoit S, Ovaska S (2008) A general framework for statistical performance comparison of evolutionary computation algorithms. Inf Sci 178:2870–2879

Acknowledgements

The proposed algorithm is part of the optimization system used by a biped robot supported under the grant CONACYT CB 181053.

Appendix A: List of benchmark functions

Cite this article

Cuevas, E., Echavarría, A. & Ramírez-Ortegón, M.A. An optimization algorithm inspired by the States of Matter that improves the balance between exploration and exploitation. Appl Intell 40, 256–272 (2014). https://doi.org/10.1007/s10489-013-0458-0

DOI: https://doi.org/10.1007/s10489-013-0458-0